Metaverse4Deaf: Assistive Technology for Inclusion of People with Hearing Impairment in Distance Education Through a Metaverse-Based Environment

Adson Damasceno¹ᵃ, Lidiane Silva², Eudenia Barros² and Francisco Oliveira²

¹ State University of Ceara (UECE), Mombaça Campus, Mombaça CE, Brazil
² UECE, State University of Ceara, Fortaleza, Brazil

Keywords: Human-Computer Interaction, Assistive Technology, People with Disabilities, Distance Education, Metaverse.

Abstract: The COVID-19 pandemic underscored the importance of digital transformation in education. In this context, this work in progress (position paper) addresses the imperative of inclusion and accessibility, particularly for individuals with disabilities. While the Dell Accessible Learning (DAL) platform has benefited more than 60,000 users in remote learning, incorporating disruptive technologies such as the Metaverse could improve hands-on learning for people with disabilities. The augmented and virtual reality capabilities of the Metaverse offer unique opportunities, but challenges remain in ensuring accessibility features. Focused on enhancing the DAL for deaf people, the research involves prototyping Metaverse solutions and considering their impact on users with and without disabilities. Challenges include optimizing the user experience and representing behaviors such as gestures and facial expressions. As an innovation proposal, this ongoing research involved collecting user data to elicit requirements, prototyping, and developing the minimum viable product of the metaverse environment, in addition to usability and acceptance tests with groups of users with disabilities. Central to the Metaverse is the representation of human behaviors, which requires understanding and translating the gestures of individuals with disabilities. The investigation culminates in a proposal validation experiment, a fundamental step towards achieving truly inclusive and accessible education through the Metaverse.

1 INTRODUCTION

The Metaverse can play a vital role in education. Scholars have identified several potential applications of the Metaverse in education, such as medical, nursing, and healthcare education, science education, military training, and manufacturing training, as well as language learning (Jovanović and Milosavljević, 2022). The concept of the Metaverse first appeared in 1992 and drew wide attention with the novel Ready Player One (Cline, 2011) and its film adaptation. Metaverses are immersive three-dimensional virtual worlds (VWs) in which people interact as avatars with each other and with software agents, using the metaphor of the real world but without its physical limitations. This broad concept of a metaverse builds on and generalizes existing definitions of VW metaverses, providing virtual team members with new ways of managing and overcoming geographic and other barriers to collaboration. These environments have the potential for rich and engaging collaboration, but their capabilities have yet to be examined in depth (Davis et al., 2009). Metaverses offer immersive virtual realities with potential applications in distance education, and integrating the Metaverse with assistive technology enables educational advancements and inclusive participation (Damasceno et al., 2023). The original goal of using the Metaverse in education is to encourage more people to participate. As a result, it is necessary to create an inclusive virtual environment that considers as many different participant requirements as possible. For example, affordability is inevitably an issue for low-income groups, even though they often see education as a life-changing opportunity. Respecting the needs of special learners, such as people with disabilities or learners with religious requirements, is often more important than providing a high-quality education (Tlili et al., 2022).

ᵃ https://orcid.org/0000-0001-6570-4103

There is a need to survey the state of the art of Assistive Technology (AT) for Distance Education (DE) in metaverse-based environments, in order to explore the interplay between the Metaverse, AT, and DE. We searched previous literature to gather, organize, and analyze evidence. Although we found a limited number of studies explicitly addressing accessibility in the Metaverse, these articles emphasized the need for solutions that ensure People with Disabilities (PwD) can fully access and participate in these virtual environments. Challenges associated with implementing accessibility in the Metaverse include adapting interfaces for different disabilities, the availability of compatible AT, and raising developers' awareness of the specific needs of PwD. These findings encouraged us to research and develop inclusive and accessible educational solutions in the Metaverse, promoting equal opportunities and rights for all.

Damasceno, A., Silva, L., Barros, E. and Oliveira, F.
Metaverse4Deaf: Assistive Technology for Inclusion of People with Hearing Impairment in Distance Education Through a Metaverse-Based Environment.
DOI: 10.5220/0012700200003693
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 1, pages 510-517
ISBN: 978-989-758-697-2; ISSN: 2184-5026
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Our problem statement is that students with disabilities do not feel comfortable in collaborative environments because of their disability. Therefore, a metaverse-based platform in which disability-related limitations are not revealed can provide a more welcoming and inclusive environment for PwD by facilitating collaboration, given that collaboration is essential both for learning and for integrating into communities. This position paper proposes an integrative framework for including people with hearing impairment in DE through a metaverse-based environment. The findings of the present study contribute to a better understanding of how to advance research and develop innovative Metaverse solutions that enable grounding constraints for PwD, in this study especially people who are deaf or hard of hearing.

The remainder of this paper is organized as follows. Section 2 gives an overview of the Metaverse in education and of assistive technologies. Section 3 discusses our methodology. Section 4 describes the proposed framework, discusses the Human-Computer Interaction (HCI) theories underlying our approach, and presents a real case in practice. Section 5 presents the conclusions we can draw from our work.

2 BACKGROUND

Metaverse in Education. Lin et al. (2022) explore the integration of traditional education with Web 2.0 through MOOCs, highlighting limitations such as the lack of engaging content and low student participation. With the imminent arrival of Generation Z, who are familiar with online education, preparing for a new revolution in educational models becomes crucial. Leveraging the Metaverse can be particularly beneficial, since it offers interaction, authenticity, and immersive experiences. According to Fernandes and Werner (2022), the Metaverse is a new paradigm under construction in which social, immersive virtual reality platforms will be compatible with several kinds of applications.

In this context, metaverse applications must serve as relevant tools for PwD. Ball (2022) emphasized the importance of ensuring that the metaverse is accessible to individuals with disabilities, recognizing them as valuable contributors. However, he did not specify any particular disability or explain how accessibility and inclusion should be achieved in practice. In this direction, Vianna and Pinto (2017) conceptualize AT as an association between products and services that address both the need for information accessibility and the need for physical accessibility.

Assistive Technology. Assistive technologies play a vital role in the daily practice of Virtual Learning Environments (VLE), driving significant educational transformations. The integration of information technology in education has been crucial to enhancing students' access and participation, regardless of their abilities or limitations. AT is a term used to identify the arsenal of resources and services that contribute to providing or expanding the functional abilities of PwD and, consequently, to promoting their independent living and inclusion (Bersch, 2008).

3 RESEARCH METHOD

Literature Survey. We carried out a bibliographic survey to gather, organize, and analyze the evidence and research trends available in the literature on the metaverse, AT, and DE (Damasceno et al., 2023). To achieve this objective, we conducted a Rapid Review (RR) (Cartaxo et al., 2018), because RRs have essential characteristics that: 1) reduce the costs of more elaborate review methods, 2) provide targeted evidence, and 3) operate in close collaboration with practitioners and report results in a convincing way. These aspects are relevant to our context, as some of the authors are professionals at a company that specializes in creating innovative and accessible solutions for different groups, including in DE and AT, and we sought initial insights into integrating the metaverse with these two concepts.

Answering the Following Questions. The RR addressed four research questions:
RQ1) How has the publication of primary studies evolved over the years?
RQ2) What domains are being explored in studies addressing accessibility in DE within the metaverse context, and how are they approaching it?
RQ3) What challenges are associated with implementing accessibility in educational metaverse platforms?
RQ4) What research and empirical strategies and methodological approaches are being used?


Our RR findings indicate that most proposals were published in journals (57%) between 2020 and 2023. The chemistry laboratory was the most frequent context, addressed in three studies (42.8%). Most of the returned studies employed evaluation research (57.1%) as their strategy. Empirical experiments were conducted in 42.8% of the studies, and a mixed qualitative-quantitative method was applied in 71.4%. Regarding the challenges of implementing accessibility in the metaverse, we highlighted instrumentation, technical problems, complex systems, student performance, sense of presence, and accessibility assessment. Finally, we generated an Evidence Briefing¹ based on our findings to make the RR more appealing to practitioners.
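The percentages reported in this section are consistent with a pool of seven included studies (for instance, 4/7 ≈ 57.1% and 5/7 ≈ 71.4%), although the total is not stated explicitly. As a quick arithmetic check, the Python sketch below recomputes the shares under that assumption. The per-category counts are inferred from the percentages rather than taken from the review data, and shares are truncated to one decimal place, which appears to be how 42.8% was obtained for three studies (rounding would give 42.9%).

```python
import math

# Assumption: the RR included seven primary studies. This total is inferred
# from the reported percentages; it is not stated in the text.
TOTAL_STUDIES = 7

# Counts inferred from the reported shares (hypothetical reconstruction).
inferred_counts = {
    "published in journals": 4,
    "chemistry lab context": 3,
    "evaluation research strategy": 4,
    "empirical experiments": 3,
    "qualitative-quantitative method": 5,
}


def share(count: int, total: int = TOTAL_STUDIES) -> float:
    """Percentage of studies, truncated (not rounded) to one decimal place."""
    return math.floor(count / total * 1000) / 10


for label, count in inferred_counts.items():
    print(f"{label}: {count}/{TOTAL_STUDIES} = {share(count)}%")
```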

Related Works. Previous studies have explored the metaverse and education (Alfaisal et al., 2022; Onggirawan et al., 2023) and the metaverse and accessibility (Fernandes and Werner, 2022). Unlike these studies, our review considered the three pillars together to understand how accessibility is being addressed in metaverse-based distance learning environments. In addition, we identify open challenges that reflect practitioners' perceptions in real-world settings and support researchers by pointing out research opportunities to assist PwD. This study proposes an innovative approach to promote inclusive and accessible education through an integrative framework for including people with hearing impairments in distance education through a metaverse-based environment (Figure 1).

Figure 1: Infographic for framework vision with processes.

4 FRAMEWORK M4DEAFVERSE

4.1 Metaverse and Grounding Constraints

According to Kendon (2004), whether they intend to or not, humans in co-presence continuously inform one another about their intentions, interests, feelings, and ideas through visible bodily action. For example, information about the direction and nature of a person's attention is conveyed through the orientation of the body and, mainly, through the orientation of the eyes. Since people are present in the metaverse as avatars, access to an avatar's embodied behavior can give a deaf student more opportunities for understanding, and this behavior is not restricted to signing in sign language.

¹ https://zenodo.org/records/8286438

Regarding grounding constraints, metaverse technology can enable Clark's common ground constraints; that is, it can provide a common basis of understanding and reference among participants in an interaction. The metaverse is a virtual representation of the real world, or a shared virtual space, where people can interact, collaborate, and participate in different activities. Clark's “common ground” theory refers to the knowledge shared between interlocutors in a conversation. This shared basis allows for effective communication, as both sides have a mutual understanding of what is being discussed. In the context of the metaverse, “grounding constraints” can be the design elements, conventions, norms, and characteristics that ensure participants have a shared understanding of the virtual environment and thus guarantee the creation of this “common ground.” This includes representing objects, actions, and interactions in a consistent and understandable way, as well as the consistent representation of gestures, facial expressions, and other forms of nonverbal communication in the virtual environment.

Metaverse technology can thus enable the common ground constraints proposed by Herbert Clark, which refer to the information, perspectives, and understandings shared between people in a communicative interaction (Clark, 1992). The Metaverse is a vast framework that encompasses many digital features of the future and offers numerous benefits, such as interaction, authenticity, and portability. As a result, the educational system has to be reconsidered to retain its accessibility and prolong its existence (Lin et al., 2022).

The metaverse enables grounding constraints. According to Lin et al. (2022), the metaverse can bring several changes to education. Among them, this study highlights connection: the metaverse allows people to connect remotely and quickly, offering communication and interaction with others at any time and from anywhere. One positive consequence is that it becomes possible to “see what I see”: each avatar can see the other avatars cohabiting the same virtual world. “Grounding constraints” refer to the synchronization of perceptions between participants, such as what they see, hear, or feel. In the metaverse, this synchronization is enhanced because users simultaneously share the same virtual space, which can significantly improve communication for people with hearing impairments. Knowing “what I see,” “what you see,” whether “we are seeing the same thing,” whether “we are hearing the same thing,” or even whether “we are feeling the same temperature” facilitates communication. This is why the metaverse is better able to support these grounding constraints: each participant sees what the others see at the same time. In short, the sense of presence should approximate what we call immediate physical co-presence, in which people share the same place, see and feel the same things, and see each other's bodies and embodied behavior, which greatly facilitates communication.
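The idea of “seeing what I see” can be made concrete with a small model. In the Python sketch below, which is an illustrative assumption rather than part of DALverse, each participant's momentary perception is a set of entity identifiers, and the part of the common ground that comes from shared perception is the intersection of those sets. All participant and entity names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """A participant and the entities their avatar perceives at a given moment."""
    name: str
    perceived: set[str] = field(default_factory=set)


def common_ground(participants: list[Participant]) -> set[str]:
    """Entities perceived by every participant at once ("what we all see")."""
    if not participants:
        return set()
    shared = set(participants[0].perceived)
    for participant in participants[1:]:
        shared &= participant.perceived
    return shared


def seeing_same_thing(a: Participant, b: Participant, entity: str) -> bool:
    """Answers "are we seeing the same thing?" for a single entity."""
    return entity in a.perceived and entity in b.perceived


# Hypothetical snapshot of three students in the same virtual room.
ana = Participant("Ana", {"slide_3", "whiteboard", "bruno_pointing"})
bruno = Participant("Bruno", {"slide_3", "whiteboard", "ana_nodding"})
carla = Participant("Carla", {"slide_3", "bruno_pointing"})

print(sorted(common_ground([ana, bruno, carla])))
print(seeing_same_thing(ana, carla, "bruno_pointing"))
```

In a real system the perceived sets would be derived from each avatar's field of view; here they are hard-coded for clarity.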

That said, imagine a scenario in which the teacher gives a short class and divides the students into groups to carry out an activity. Metaverse technology can play a significant role in improving grounding constraints in this setting. Among the possibilities, we highlight the following:

a) Virtual Presence. In the metaverse, participants can create avatars representing their virtual presence. This allows for a richer form of communication, as gestures, facial expressions, and even movements can be represented, making it easier to understand colleagues' intentions and emotions and reinforcing common ground.

b) Content Sharing. Metaverse platforms often offer tools for real-time content sharing. During the discussion, students can share presentations, documents, and other relevant information. This instant and synchronized sharing helps keep everyone in the same informational context, promoting common ground.

c) Collaborative Environment. Metaverse environments can provide collaborative virtual spaces where groups can discuss the assigned activity. These environments can simulate classrooms or meeting rooms, creating a sense of co-presence similar to sitting at the same physical table. This helps build a shared common ground.

d) Multimodal Interaction. With metaverse technology, communication is not limited to text. Audio, video, and other multimodal interaction modes are available. This variety of communication modes allows for a richer expression of ideas, facilitating mutual understanding and thus contributing to a robust common ground.

e) Immediate Feedback. Metaverse tools often allow for immediate feedback. During the group discussion, participants can provide instant feedback, clarify doubts, and correct misunderstandings. This helps continually adapt the common ground as the conversation progresses.

f) Accessibility and Inclusion. The metaverse can be a more inclusive solution, allowing the participation of students who, due to geographic or physical limitations, cannot be physically present. This contributes to a more diverse common ground.
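Several of these possibilities, notably content sharing, multimodal interaction, and immediate feedback, rest on every participant receiving the same events in the same order. The Python sketch below shows one way to obtain that property: a single ordered event log per room, delivered to all members, with late joiners replaying the history. The `Room` and `Event` types and the modality names are hypothetical and do not describe DALverse's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Literal

# Hypothetical modality labels for events exchanged in a virtual room.
Modality = Literal["gesture", "facial_expression", "text", "audio", "shared_content"]


@dataclass(frozen=True)
class Event:
    seq: int          # position in the room's single, global order
    sender: str
    modality: Modality
    payload: str


@dataclass
class Room:
    """A shared room: one ordered event log, delivered identically to every member."""
    members: list[str] = field(default_factory=list)
    log: list[Event] = field(default_factory=list)
    inboxes: dict[str, list[Event]] = field(default_factory=dict)

    def join(self, name: str) -> None:
        self.members.append(name)
        # Late joiners replay the history, so they start from the same context.
        self.inboxes[name] = list(self.log)

    def publish(self, sender: str, modality: Modality, payload: str) -> Event:
        event = Event(len(self.log), sender, modality, payload)
        self.log.append(event)
        for name in self.members:  # the sender also receives its own event
            self.inboxes[name].append(event)
        return event


room = Room()
room.join("teacher")
room.join("deaf_student")
room.publish("teacher", "shared_content", "activity_slides")
room.publish("deaf_student", "gesture", "raise_hand")
room.publish("teacher", "facial_expression", "nod")
room.join("late_peer")

for name in room.members:
    print(name, [e.payload for e in room.inboxes[name]])
```

A single global sequence is the simplest way to keep everyone's view consistent; a distributed deployment would need a server or consensus mechanism to assign that order.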

4.2 Strategies for Inclusion

In HCI, it is important to recognize that many of the methods, models, and techniques used in the field are based on different psychological theories, mainly cognitive, ethnographic, and semiotic. Among these theoretical approaches in HCI, Card et al. (1983) proposed a psychology applied to information processing. According to them, HCI involves the user and the computer engaging in a communicative dialogue to perform tasks. The mechanisms of this dialogue constitute the interface: the physical devices, such as keyboards and screens, and the computer programs that control the interaction. Their objective was to create a psychology based on task analysis, calculation, and approximation, so that the system designer could balance quantitative parameters of human performance against engineering variables.

According to Card et al. (1983), task structure analysis provides the predictive content of this psychology. Once we know people's goals and consider the limitations of their perception and information processing, we should be able to answer questions such as: how long does it take a person to perform the predefined physical tasks that allow them to achieve their goals?
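One way Card et al. answer such questions is the Keystroke-Level Model (KLM), which predicts the error-free execution time of a skilled user by summing standard operator times. The Python sketch below uses the commonly cited operator estimates (K ≈ 0.20 s for an average skilled typist, P ≈ 1.10 s, H ≈ 0.40 s, M ≈ 1.35 s). The task sequence, requesting the whiteboard from the virtual tablet, is a hypothetical example rather than a measured DALverse task, and the mental operators are placed by hand instead of with the model's placement rules.

```python
# Operator times (seconds) commonly cited for the Keystroke-Level Model.
KLM_OPERATORS = {
    "K": 0.20,  # press a key or mouse button (average skilled typist)
    "P": 1.10,  # point at a target on the screen with the mouse
    "H": 0.40,  # home the hand on the keyboard or mouse
    "M": 1.35,  # mentally prepare for the next action
}


def klm_estimate(sequence: str) -> float:
    """Predicted execution time for an operator sequence such as "M H P K"."""
    ops = [op for op in sequence if not op.isspace()]
    unknown = set(ops) - set(KLM_OPERATORS)
    if unknown:
        raise ValueError(f"unknown KLM operators: {sorted(unknown)}")
    return sum(KLM_OPERATORS[op] for op in ops)


# Hypothetical task: decide to edit, reach for the mouse, point at the tablet's
# "request edit" button and click, then decide where to write, point at the board, click.
request_board = "M H P K M P K"
print(f"Estimated time: {klm_estimate(request_board):.2f} s")
```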

4.2.1 Human Information Processing: Human Perception

Based on information processing psychology, Card et al. (1983) proposed the Model Human Processor (MHP). According to them, models that view the human being as an information processor provide a common framework for integrating models of memory, problem solving, perception, and behavior. By considering the human mind as an information processing system, it is possible to make approximate predictions of part of human behavior.
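As an illustration of such approximate predictions, the MHP assigns a characteristic cycle time to each of its perceptual, cognitive, and motor processors. The Python sketch below uses the values commonly cited from Card et al. (1983): about 100 ms for the perceptual processor and about 70 ms each for the cognitive and motor processors, with the bounds of the reported ranges as the “Fastman” and “Slowman” variants. Estimating a simple reaction time as one cycle of each processor is a textbook simplification, not a measurement of the DALverse environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessorTimes:
    """Cycle times (ms) of the MHP's perceptual, cognitive, and motor processors."""
    perceptual: float
    cognitive: float
    motor: float


# Values commonly cited from Card et al. (1983): typical ("Middleman") values
# and the lower/upper bounds of the reported ranges ("Fastman"/"Slowman").
MIDDLEMAN = ProcessorTimes(perceptual=100, cognitive=70, motor=70)
FASTMAN = ProcessorTimes(perceptual=50, cognitive=25, motor=30)
SLOWMAN = ProcessorTimes(perceptual=200, cognitive=170, motor=100)


def simple_reaction_time(t: ProcessorTimes) -> float:
    """One pass through the three subsystems: perceive the stimulus, decide, act."""
    return t.perceptual + t.cognitive + t.motor


for label, times in [("Fastman", FASTMAN), ("Middleman", MIDDLEMAN), ("Slowman", SLOWMAN)]:
    print(f"{label}: {simple_reaction_time(times):.0f} ms")
```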

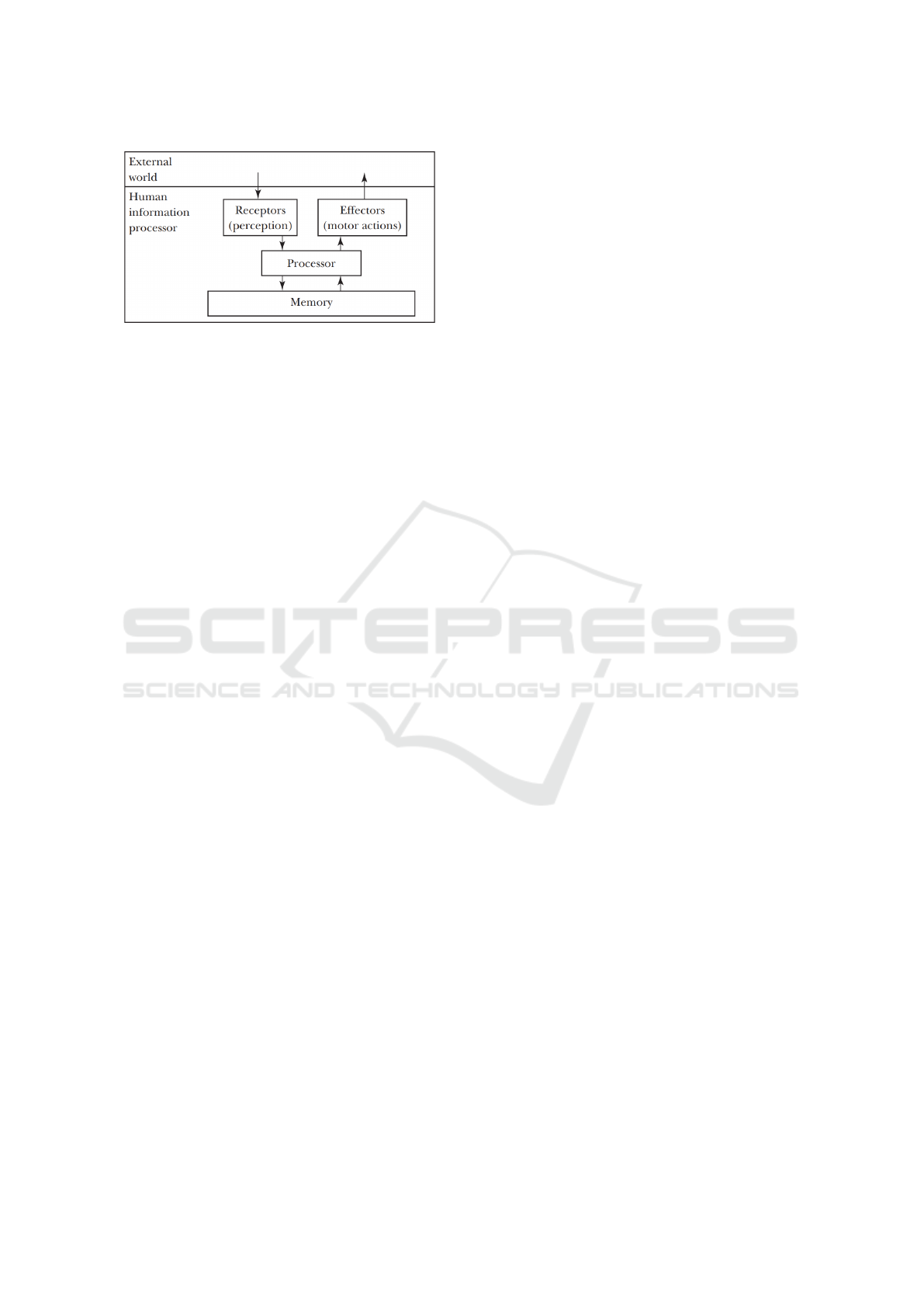

According to Barbosa et al. (2021), the MHP comprises three subsystems, each with its own memories and processors, along with some operating principles: the perceptual, the motor, and the cognitive subsystems (Figure 2).

Figure 2: A generic human information processing system (Carroll, 2003).

Figure 2 presents a generic representation of an information processing system. At its center is a processing executive that operates in a recognize-act cycle. In each cycle, the information made available by the receptors and retrieved from internal memory is compared with a set of patterns, generally expressed as a set of if-then rules called productions. This matching triggers actions (or operators) that can change the state of internal memory and modify the external world through effectors. The cycle then repeats. For simplicity, some information processing models do not detail this complete structure, but it is implicit in their assumptions.
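The recognize-act cycle described above can be sketched as a tiny production system in Python. This is a didactic sketch under simplifying assumptions of our own: working memory is a set of symbols, each rule fires at most once (a simple form of refractoriness), and conflicts are resolved by taking the first matching rule. The rule names and symbols, loosely inspired by a peer's embodied behavior in a virtual room, are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

Memory = set[str]


@dataclass(frozen=True)
class Production:
    """An if-then rule: when all conditions are in working memory, run the action."""
    name: str
    conditions: frozenset[str]
    action: Callable[[Memory], None]


def recognize_act(memory: Memory, rules: list[Production], max_cycles: int = 10) -> list[str]:
    """Repeat the recognize-act cycle until no rule matches; return the firing trace."""
    fired: list[str] = []
    for _ in range(max_cycles):
        # Recognize: compare working memory with every rule's conditions.
        matching = [r for r in rules if r.conditions <= memory and r.name not in fired]
        if not matching:
            break
        rule = matching[0]   # conflict resolution: first matching rule wins
        rule.action(memory)  # Act: update memory ("effector:" symbols stand for motor output)
        fired.append(rule.name)
    return fired


rules = [
    Production("attend", frozenset({"peer_looks_at_board"}),
               lambda m: m.add("attend_board")),
    Production("follow_pointing", frozenset({"attend_board", "peer_points_at_item"}),
               lambda m: m.add("focus_item")),
    Production("acknowledge", frozenset({"focus_item"}),
               lambda m: m.add("effector:nod")),
]

working_memory: Memory = {"peer_looks_at_board", "peer_points_at_item"}
print(recognize_act(working_memory, rules))
print(sorted(working_memory))
```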

Treating the human as an information processor, albeit as a simple stimulus-response controller, allowed the application of information theory and manual control theory to problems of display design, visual scanning, workload, aircraft instrument location, flight controls, air-traffic control, and industrial inspection, among others. Fitts' Law, which predicts the time needed to move the hand to a target, is an example of an information processing theory of this era (Carroll, 2003).
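For reference, the Python sketch below implements the Shannon formulation of Fitts' Law, MT = a + b·log2(D/W + 1), a widely used variant of Fitts' original log2(2D/W) index of difficulty. The intercept a and slope b must be fitted empirically for a given device and user group; the values used here, and the tablet-button scenario, are hypothetical.

```python
import math


def fitts_movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Predicted movement time MT = a + b * log2(D / W + 1) (Shannon formulation).

    distance: distance to the target centre; width: target width (same units).
    a (seconds) and b (seconds per bit) are empirically fitted constants.
    """
    if distance < 0 or width <= 0:
        raise ValueError("distance must be non-negative and width positive")
    index_of_difficulty = math.log2(distance / width + 1)  # in bits
    return a + b * index_of_difficulty


# Hypothetical constants and targets: a small vs. a large button on the
# virtual tablet, both 300 px away from the cursor.
A, B = 0.1, 0.15
for width in (20, 100):
    print(f"width {width:>3} px: {fitts_movement_time(300, width, A, B):.2f} s")
```

Larger or closer targets lower the index of difficulty and therefore the predicted movement time.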

According to Carroll (2003), the natural form of information processing theories is a computer program, in which a set of mechanisms is described locally and larger-scale behavior emerges from their interaction. The claim is not that all human behavior can be modeled in this manner, but that, for tasks within the reach of such models, it becomes meaningful, as Newell et al. (1972) note, to try to represent in some detail a particular man at work on a particular task. Such a representation is no metaphor, but a precise symbolic model on the basis of which pertinent specific aspects of a man's problem-solving behavior can be calculated.

The perceptual system transmits sensations from the physical world, detected by the body's sensory systems (vision, hearing, touch, smell, and taste), to internal mental representations. Central vision, peripheral vision, eye movements, and head movements operate as an integrated system, providing a continuous representation of the visual scene of interest. These sensations are temporarily stored in sensory memory areas (mainly visual and auditory memories), still physically encoded and with a rapid forgetting time that depends on the intensity of the stimulus. Then, some of these sensations are symbolically encoded and stored in working memory (Barbosa et al., 2021).
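To give a sense of the time scales involved, Card et al. (1983) are commonly cited as estimating a decay half-life of about 200 ms for the visual image store and about 1,500 ms for the auditory image store, each with a wide range. The Python sketch below turns those typical figures into a decay curve; the exponential form is a simplifying assumption for illustration, and it ignores the dependence on stimulus intensity mentioned above.

```python
# Decay half-lives (ms) commonly cited from Card et al. (1983) for the MHP's
# visual and auditory image stores; typical values only, with wide ranges.
HALF_LIFE_MS = {"visual": 200.0, "auditory": 1500.0}


def retention(store: str, elapsed_ms: float) -> float:
    """Fraction of a sensory trace still available, assuming exponential decay."""
    if elapsed_ms < 0:
        raise ValueError("elapsed_ms must be non-negative")
    return 0.5 ** (elapsed_ms / HALF_LIFE_MS[store])


for store in HALF_LIFE_MS:
    print(store, [round(retention(store, t), 3) for t in (0, 200, 600, 1500)])
```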

The metaverse, a three-dimensional virtual space, offers revolutionary opportunities for inclusive education, especially for people with hearing impairments. Integrating HCI theory, with a focus on “embodied behavior,” becomes crucial to understanding how avatars' gestural interactions can enhance inclusion and autonomy in this context. The notion of “embodied behavior” in HCI theory recognizes the importance of the interaction between the user and the interface, considering gestures, movements, and expressions. In the educational metaverse, this translates into avatars capable of reproducing the user's gestures naturally and intuitively, enriching communication.

Given this scenario, it is feasible to design strategies aimed at inclusion, with particular emphasis on expanding the autonomy of PwD, notably those with hearing impairment. For example, the following approaches stand out:

Strategies to Expand Inclusion. (I) Virtual Sign Language: avatars can be programmed to interpret sign language, allowing users with hearing impairments to communicate through virtual gestures and emulations of sign language. (II) Accessible Virtual Spaces: virtual environments can be adapted to accommodate different learning styles, incorporating visual and interactive resources that facilitate the understanding of concepts.

Strategies to Increase Autonomy. (I) Independence from Interpreters: the avatar's ability to interpret and reproduce gestures minimizes dependence on interpreters in educational contexts, granting greater autonomy to students with hearing impairments. (II) Experience Personalization: avatars can be customized to fit individual preferences, providing a more personalized and independent learning experience.

These strategies represent an innovative approach, increasing inclusion and autonomy through the gestural interactions of avatars. These technologies have the potential to substantially reshape the educational experience, creating a more accessible, personalized, and independent environment for people with hearing impairments.

This approach allows for a variety of interactions, for example: (I) Virtual Classes: avatars can reproduce the teacher's gestures, making classes more engaging and understandable for students with hearing impairments; (II) Collaboration on Projects: collaborative work environments enable avatars to communicate ideas through gestures, encouraging active participation in educational projects; and (III) Practical Simulations: virtual environments can simulate real-world situations in which avatars interact through gestures to solve problems, providing practical and autonomous learning. This study focuses on the second example, “project collaboration.”

4.2.2 Discussion

Affordances, represented by drawings, play a crucial role in conveying possibilities for interaction. Superimposing the real-world interaction history of objects onto metaverse elements, such as tables and boards, enhances user engagement. While these objects may possess “magical” capabilities, preserving their inherent functions ensures a seamless transition for users. This approach aligns with Embodied Interaction theory, which aims to incorporate real-world behaviors into metaverse avatars, providing an expressive outlet, especially for users with disabilities such as deafness.

Figure 3 illustrates a tablet in which the user's perspective shifts as they move, emphasizing the importance of leveraging affordances for interaction. For instance, the interaction control on the tablet dynamically responds to the object the user is focusing on, presenting the relevant commands. The proposed research explores integrating such affordances into the metaverse to enhance interaction for users with hearing impairments. In this metaverse environment, objects carry a history of real-world interactions, offering users a familiar and intuitive experience.
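The context-sensitive tablet control described above can be sketched in two steps: find the object closest to the user's line of sight, then look up the commands for that kind of object. The Python sketch below is a hypothetical illustration; the scene layout, the 30° field of view, and the command lists are our assumptions, not DALverse's actual behavior.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SceneObject:
    name: str
    kind: str
    x: float
    y: float


# Hypothetical commands offered by the virtual tablet for each kind of object.
COMMANDS_BY_KIND = {
    "whiteboard": ["request edit", "share screen"],
    "document": ["open", "share with group"],
    "avatar": ["wave", "send message"],
}
ALWAYS_AVAILABLE = ["change avatar"]


def focused_object(x: float, y: float, heading_deg: float,
                   objects: list[SceneObject], fov_deg: float = 30.0) -> Optional[SceneObject]:
    """Object with the smallest angular offset from the user's heading, within the field of view."""
    best: Optional[SceneObject] = None
    best_offset = fov_deg / 2
    for obj in objects:
        bearing = math.degrees(math.atan2(obj.y - y, obj.x - x))
        offset = abs((bearing - heading_deg + 180) % 360 - 180)  # wrapped to [0, 180]
        if offset <= best_offset:
            best, best_offset = obj, offset
    return best


def tablet_commands(focus: Optional[SceneObject]) -> list[str]:
    """Commands to show on the tablet for the currently focused object."""
    specific = COMMANDS_BY_KIND.get(focus.kind, []) if focus else []
    return specific + ALWAYS_AVAILABLE


scene = [
    SceneObject("board", "whiteboard", 5.0, 0.5),
    SceneObject("report", "document", 0.0, 4.0),
]
for heading in (0.0, 90.0):  # the user turns from facing the board to facing the document
    focus = focused_object(0.0, 0.0, heading, scene)
    print(heading, focus.name if focus else None, tablet_commands(focus))
```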

Figure 3: Embodied Behavior.

The proposed solution involves collecting embodied behaviors and embedding them into avatars, serving as an alternative to sign language. This approach mitigates the limitations associated with sign language, making interaction more inclusive and cost-effective. The metaverse environment can be configured to resemble real-world scenarios, facilitating the transfer of experiences and behaviors. By reducing these gaps and aligning with HCI theories, this approach allows natural behaviors to transition more smoothly to the virtual realm. The study considers the challenge of mapping natural gestures to a virtual environment, emphasizing the need to understand and adapt behaviors for users, particularly those with hearing impairments. The solution involves understanding and configuring virtual environments, such as classrooms or collaborative spaces, to accommodate these behaviors.

Communicational affordances govern how people collaborate; that is, the medium in question determines how people express themselves, and different media enable different forms of expression. The importance of obtaining grounding constraints stands out here, where “grounding” refers to the ground: fundamental and immutable truths that serve as the basis for behaviors and interactions. Sensory aspects such as vision, hearing, and smell are grounding constraints that provide a solid basis for understanding the environment. This principle is intrinsic to human nature and is shared with animals, as evidenced by the collaboration between humans and dogs in joint activities. Collaboration between species is considered more primitive, based on the essential elements of life and survival.

The theory of grounding constraints highlights the need for a solid and reliable basis for human interactions and the importance of understanding embodied behaviors. When transferring these principles to the metaverse, it is crucial to consider how translating natural behaviors to virtual environments can affect the strength of these grounding constraints. Body language is worth highlighting as a primitive form of communication, closer to the truth and less subject to linguistic manipulation. The transition to virtual environments introduces a layer of cognitive processing, which can influence the strength of natural grounding constraints. Furthermore, the particularities of deaf communication must be considered, as deaf people use three-dimensional (3D) space during conversations. Spatiotemporal language plays a significant role: specific gestures and hand configurations have distinct meanings depending on their spatial location and orientation, which reveals the complexity of gestural communication in virtual environments.
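The dependence of meaning on spatial parameters can be illustrated with a small data model. In the Python sketch below, a manual sign is described by handshape, location, orientation, and movement, the kind of parameters referred to above; the parameter values and the glosses SIGN_A to SIGN_C are placeholders, not real signs of any sign language. The sketch shows how an avatar mapping that preserves only the handshape would make distinct signs indistinguishable.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class ManualSign:
    """A manual sign described by its spatial parameters (placeholder values)."""
    handshape: str
    location: str
    orientation: str
    movement: str


# Hypothetical lexicon: same handshape, different location or orientation, different meaning.
LEXICON = {
    ManualSign("flat_hand", "chin", "palm_in", "forward"): "SIGN_A",
    ManualSign("flat_hand", "chest", "palm_in", "forward"): "SIGN_B",
    ManualSign("flat_hand", "chest", "palm_out", "forward"): "SIGN_C",
}


def recognize(sign: ManualSign) -> Optional[str]:
    """Look up the gloss for an exact combination of parameters."""
    return LEXICON.get(sign)


def handshape_only(sign: ManualSign) -> ManualSign:
    """A lossy avatar mapping that keeps the handshape but flattens location and orientation."""
    return replace(sign, location="neutral", orientation="neutral")


print(sorted(recognize(s) for s in LEXICON))
print({handshape_only(s) for s in LEXICON})
```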

4.3 Case Study (CSCL)

4.3.1 DALverse

DALverse is one of several products developed in a development and innovation laboratory (Nascimento et al., 2017), alongside others such as STUART (Damasceno et al., 2020) and JLOAD (Silva et al., 2014). It integrates with DAL, a distance learning platform offering several professional courses and tools that facilitate student learning and make teaching accessible for people with physical, hearing, and low vision disabilities, among others (de MB Oliveira et al., 2016). Upon joining DAL, students enrolled in a course are granted access to the “Metaverse” room. There, users can personalize their representation in the environment by choosing among three types of predefined avatars: a male profile, a female profile, or a profile with no defined gender, the latter represented by a robot avatar.

Within this environment, the user can move around. Locomotion is controlled using the keyboard arrow keys and the mouse (Figure 4).

Figure 4: DAL’s Metaverse Environment with Avatars.

Each avatar except the robot has five movements: forward, backward, right, left, and resting. The robot avatar, in turn, moves by floating in these same directions. The system interprets and reproduces each of these movements, providing the sensation of movement within the virtual environment.
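The movement model described above is small enough to express as a lookup. The Python sketch below maps arrow keys to the five movements and selects a walking or floating animation depending on the avatar type. The key names follow the browser `KeyboardEvent.key` convention; the arrow-to-direction assignment and the animation clip names are assumptions, and mouse input is omitted.

```python
from enum import Enum
from typing import Optional


class AvatarType(Enum):
    MALE = "male"
    FEMALE = "female"
    ROBOT = "robot"  # the profile with no defined gender


class Movement(Enum):
    FORWARD = "forward"
    BACKWARD = "backward"
    RIGHT = "right"
    LEFT = "left"
    RESTING = "resting"


# Assumed mapping from arrow keys (browser KeyboardEvent.key names) to movements.
KEY_TO_MOVEMENT = {
    "ArrowUp": Movement.FORWARD,
    "ArrowDown": Movement.BACKWARD,
    "ArrowRight": Movement.RIGHT,
    "ArrowLeft": Movement.LEFT,
}


def movement_for(key: Optional[str]) -> Movement:
    """No key, or any non-arrow key, leaves the avatar resting."""
    if key is None:
        return Movement.RESTING
    return KEY_TO_MOVEMENT.get(key, Movement.RESTING)


def animation_for(avatar: AvatarType, key: Optional[str]) -> str:
    """Hypothetical animation clip name: the robot floats, the other avatars walk."""
    movement = movement_for(key)
    if movement is Movement.RESTING:
        return f"{avatar.value}_idle"
    style = "float" if avatar is AvatarType.ROBOT else "walk"
    return f"{avatar.value}_{style}_{movement.value}"


for avatar in AvatarType:
    print(avatar.value, [animation_for(avatar, k) for k in ("ArrowUp", "ArrowLeft", None)])
```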

4.3.2 Promoting Inclusion in Education

Promoting inclusive education offers significant benefits, such as developing social skills, encouraging empathy, building more enriching educational environments, contributing to the development of collaborative skills, and promoting understanding; Quek and Oliveira (2013) present these advantages of inclusive education. To simulate a classroom environment that promotes collaboration, a whiteboard was developed with eraser, pencil, and text-insertion tools, with variations in color and size. Only one user at a time can use the tool; others can request to edit the board using their virtual “tablet”. In this way, we ensure greater control of resources and security in the learning environment (Figure 5).

Figure 5: Collaborative Activity on the Virtual Whiteboard.
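The one-editor-at-a-time rule for the whiteboard behaves like a lock with a queue of pending edit requests. The Python sketch below is a hypothetical model: the class `WhiteboardLock`, its methods, and the first-come, first-served handoff when the owner releases the board are assumptions, since the text does not say how requests are approved or ordered.

```python
from collections import deque


class WhiteboardLock:
    """Hypothetical single-editor lock for the shared whiteboard.

    Only the owner may use the pencil, eraser, or text tools. Other users
    send edit requests from their virtual tablet, which wait in a queue.
    """

    def __init__(self):
        self.owner = None        # user currently allowed to edit, if any
        self.requests = deque()  # pending edit requests, oldest first

    def request_edit(self, user):
        """Grant the board if it is free, otherwise queue the request once.

        Returns True if `user` owns the board after the call.
        """
        if self.owner is None:
            self.owner = user
        elif user != self.owner and user not in self.requests:
            self.requests.append(user)
        return self.owner == user

    def can_edit(self, user):
        """Check whether `user` may currently draw on the board."""
        return self.owner == user

    def release(self, user):
        """Owner gives up the board; the oldest pending request takes over.

        Returns the new owner, or None if nobody was waiting.
        """
        if user != self.owner:
            raise PermissionError(f"{user} does not own the whiteboard")
        self.owner = self.requests.popleft() if self.requests else None
        return self.owner


board = WhiteboardLock()
board.request_edit("tutor")     # board was free: tutor becomes owner
board.request_edit("student")   # queued behind the tutor
print(board.release("tutor"))   # prints student
```

A queue keeps tablet requests in arrival order; a real system might instead let the current owner choose the next editor explicitly.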

Once a user becomes the owner of the whiteboard, they can share a browser tab, an application window, or the entire device screen on the digital whiteboard, with or without audio. This allows tutors and students to present their work, questions, and other applications running on their computers.
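The sharing options available to the whiteboard owner can be captured as a small request type guarded by an ownership check. The names `ShareSource`, `ShareRequest`, and `start_share` are hypothetical. In a browser client, the capture itself would typically go through the standard `navigator.mediaDevices.getDisplayMedia()` API, where the user picks a tab, window, or screen; the text does not say whether DAL's Metaverse works this way, so this sketch models only the permission and option logic.

```python
from dataclasses import dataclass
from enum import Enum


class ShareSource(Enum):
    """Capture sources the text lists for the digital whiteboard."""
    BROWSER_TAB = "browser_tab"
    APP_WINDOW = "app_window"
    ENTIRE_SCREEN = "entire_screen"


@dataclass(frozen=True)
class ShareRequest:
    """A hypothetical request to show a screen source on the whiteboard."""
    user: str
    source: ShareSource
    include_audio: bool = False


def start_share(request, board_owner):
    """Accept a share only from the current whiteboard owner.

    Returns a short description of the accepted stream; raises
    PermissionError for any other user.
    """
    if request.user != board_owner:
        raise PermissionError(f"{request.user} must own the whiteboard to share")
    audio = "with audio" if request.include_audio else "without audio"
    return f"{request.user} sharing {request.source.value} {audio}"


req = ShareRequest("tutor", ShareSource.BROWSER_TAB, include_audio=True)
print(start_share(req, board_owner="tutor"))  # prints tutor sharing browser_tab with audio
```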

4.3.3 The Scenario

The scenario will be a university debate room with a round table that promotes interaction among participants. Objects such as laptops, documents, pens, and support materials will be placed on the table to simulate a typical teamwork environment. In addition, a multimedia presentation developed by the working group will be given using visual and auditory resources.

4.4 Exploratory Experiment

In this context, it is relevant to explore how communication can be facilitated in the Metaverse, especially in educational scenarios where collaboration is essential. One question that arises is how a deaf person could interact naturally in the Metaverse and take part in collaborative activities without depending on an interpreter. A key challenge is identifying and cataloguing the main embodied behaviors that can support communication and collaboration.

To address this challenge, we propose an observational experiment in which deaf and hearing people, after attending a virtual class, collaborate on an activity in the same physical space. The experiment would observe how these groups interact, with the goal of gaining insight into how to implement these dynamics in the Metaverse.

The exploratory experiment would include an in-depth analysis of the observed behaviors, aiming to identify those essential to establishing grounding constraints and facilitating collaboration in the Metaverse. Understanding these behaviors would be crucial for effectively transitioning interactions from natural to virtual environments.

We intend to rely on linguistics experts, reflecting the interdisciplinary nature of this work and the need for specialized knowledge to translate behaviors and interactions into the Metaverse effectively. Filming fundamental interactions between deaf and hearing people aims to build a practical database for understanding the nature of these behaviors and for transferring these interactions to the virtual environment, with particular attention to the grounding constraints that matter for deaf people in the Metaverse.

CSEDU 2024 - 16th International Conference on Computer Supported Education


5 CONCLUSIONS

Metaverse4Deaf proposes an integrative framework for including individuals with hearing impairment in distance education through a metaverse-based environment. The main objective is to understand the behavior of deaf people through an exploratory study. This approach aims to identify behaviors that emerge during interaction and to outline elements that can be integrated into the metaverse environment. Implementing these behaviors would yield an improved version of the environment whose requirements are elicited through an ethnographic study.

Future work includes incorporating into DALverse the results obtained from the continued application of the framework under development. This implementation seeks to evaluate both Metaverse4Deaf and the interaction of deaf individuals in a collaborative, metaverse-based distance learning environment. The framework is under continuous improvement and remains a work in progress.

REFERENCES

Alfaisal, R., Hashim, H., and Azizan, U. H. (2022). Metaverse system adoption in education: a systematic literature review. Journal of Computers in Education, pages 1–45.

Ball, M. (2022). The metaverse: what it is, where to find it, and who will build it. 2020.

Barbosa, S. D. J., Silva, B. S. d., Silveira, M. S., Gasparini, I., Darin, T., and Barbosa, G. D. J. (2021). Interação Humano-Computador e Experiência do Usuário. Autopublicação.

Bersch, R. (2008). Introdução à tecnologia assistiva. Porto Alegre: CEDI, 21.

Card, S. K. (2018). The psychology of human-computer interaction. CRC Press.

Carroll, J. M. (2003). HCI models, theories, and frameworks: Toward a multidisciplinary science. Elsevier.

Cartaxo, B., Pinto, G., and Soares, S. (2018). The role of rapid reviews in supporting decision-making in software engineering practice. In Proceedings of the 22nd International Conference on Evaluation and Assessment in Software Engineering 2018, pages 24–34.

Clark, H. H. (1992). Arenas of language use. University of Chicago Press.

Cline, E. (2011). Ready player one. Ballantine Books.

Damasceno, A., Soares, P., Santos, I., Souza, J., and Oliveira, F. (2023). Assistive technology for distance education in metaverse-based environment: A rapid review. Anais do XXXIV Simpósio Brasileiro de Informática na Educação, pages 693–706.

Damasceno, A. R., Martins, A. R., Chagas, M. L., Barros, E. M., Maia, P. H. M., and Oliveira, F. C. (2020). STUART: an intelligent tutoring system for increasing scalability of distance education courses. In Proceedings of the 19th Brazilian Symposium on Human Factors in Computing Systems, pages 1–10.

Davis, A., Murphy, J., Owens, D., Khazanchi, D., and Zigurs, I. (2009). Avatars, people, and virtual worlds: Foundations for research in metaverses. Journal of the Association for Information Systems, 10(2):1.

de MB Oliveira, F. C., de Freitas, A. T., de Araujo, T. A., Silva, L. C., Queiroz, B. d. S., and Soares, É. F. (2016). IT education strategies for the deaf - assuring employability. In International Conference on Enterprise Information Systems, volume 3, pages 473–482. SCITEPRESS.

Fernandes, F. and Werner, C. (2022). Accessibility in the metaverse: Are we prepared? In Anais do XIII Workshop sobre Aspectos da Interação Humano-Computador para a Web Social, pages 9–15. SBC.

Jovanović, A. and Milosavljević, A. (2022). Vortex metaverse platform for gamified collaborative learning. Electronics, 11(3):317.

Kendon, A. (2004). Gesture: Visible action as utterance. Cambridge University Press.

Lin, H., Wan, S., Gan, W., Chen, J., and Chao, H.-C. (2022). Metaverse in education: Vision, opportunities, and challenges. arXiv preprint arXiv:2211.14951.

Nascimento, M. D., Queiroz, B., Guimaraes, M., Silva, L. C., Soares, E., Oliveira, F., Ribeiro, D., and Ferreira, C. (2017). Aprendizado acessível. In Anais dos Workshops do Congresso Brasileiro de Informática na Educação, volume 6, page 110.

Newell, A., Simon, H. A., et al. (1972). Human problem solving, volume 104. Prentice-Hall, Englewood Cliffs, NJ.

Onggirawan, C. A., Kho, J. M., Kartiwa, A. P., Gunawan, A. A., et al. (2023). Systematic literature review: The adaptation of distance learning process during the COVID-19 pandemic using virtual educational spaces in metaverse. Procedia Computer Science, 216:274–283.

Quek, F. and Oliveira, F. (2013). Enabling the blind to see gestures. ACM Transactions on Computer-Human Interaction (TOCHI), 20(1):1–32.

Silva, L. C., de MB Oliveira, F. C., De Oliveira, A. C., and De Freitas, A. T. (2014). Introducing the JLOAD: A Java learning object to assist the deaf. In 2014 IEEE 14th International Conference on Advanced Learning Technologies, pages 579–583. IEEE.

Tlili, A., Huang, R., Shehata, B., Liu, D., Zhao, J., Metwally, A. H. S., Wang, H., Denden, M., Bozkurt, A., Lee, L.-H., et al. (2022). Is metaverse in education a blessing or a curse: a combined content and bibliometric analysis. Smart Learning Environments, 9(1):1–31.

Vianna, W. B. and Pinto, A. L. (2017). Deficiência, acessibilidade e tecnologia assistiva em bibliotecas: aspectos bibliométricos relevantes. Perspectivas em Ciência da Informação, 22:125–151.
