The Power of Gyroscope Data: Advancing Human Movement Analysis for Walking and Running Activities

Patrick B. N. Alvim (1, a), Jonathan C. F. da Silva (1, b), Vicente J. P. Amorim (1, c), Pedro S. O. Lazaroni (2, d), Mateus Coelho Silva (1, e) and Ricardo A. R. Oliveira (1, f)

1 Departamento de Computação - DECOM, Universidade Federal de Ouro Preto - UFOP, Ouro Preto, Brazil

2 Núcleo de Ortopedia e Traumatologia (NOT), Belo Horizonte, Brazil

Keywords:

Sensors, Wearable, App, Mobile, AI.

Abstract:

The ability to faithfully reproduce the real world in a virtual environment is crucial for providing immersive and accurate experiences, and it opens doors to significant innovations in areas such as simulation, training, and data analysis. Actions that would be difficult to perform in the real world because of danger, complexity, or feasibility can be carried out in the virtual environment, allowing them to be studied without those constraints. Conversely, real-world data can be captured and analyzed in the virtual environment, faithfully reproducing real actions in the virtual realm to study their implications. However, the volatility of real-world data and the difficulty of capturing and interpreting it accurately pose significant challenges in this field. We therefore present a system for real data capture that aims to virtually reproduce and classify walking and running activities. By using gyroscope data to capture the rotation of the axes of the lower limbs during movement, it becomes possible to precisely replicate the motion of these body parts in the virtual environment, enabling detailed analyses of the biomechanics of such activities. In our observations, unlike quaternion data, which may have different scales and applications depending on the technology used to create the virtual environment, gyroscope data has universal values that can be employed in various contexts. Our results demonstrate that, by using dedicated devices such as body-worn sensors instead of generic devices like smartwatches, we can capture more accurate and localized data. This allows for a granular and precise analysis of movement in each limb, in addition to its reproduction. This system can serve as a starting point for the development of more precise and optimized devices for different types of human data capture and analysis. Furthermore, it proposes a communication interface between the real and virtual worlds, aiming to accurately reproduce one environment in the other. This provides data for in-depth studies on the biomechanics of movement in areas such as sports and orthopedics.

1 INTRODUCTION

When we observe the field of orthopedics, we realize that the study of human body movement is a topic of great importance. Understanding the anatomical factors behind the mechanics of movement through its actors, such as muscles, bones, and joints (Lee et al.(2019)), is of great importance and use in the medical and sports fields. With knowledge of these actors, it is possible to understand how movement is affected by several factors, including the interaction between ligaments, joints, and bones, muscle behavior, and fatigue.

a https://orcid.org/0000-0001-8509-7398
b https://orcid.org/0000-0003-2214-397X
c https://orcid.org/0000-0003-3795-9218
d https://orcid.org/0000-0002-2058-6163
e https://orcid.org/0000-0003-3717-1906
f https://orcid.org/0000-0001-5167-1523

In addition to analyzing injuries to these components and how they affect movement, it is also possible to act preventively against such injuries and correctively to aid healing and rehabilitation (Lu and Chang(2012)). Deepening knowledge of human body movement is also of great importance for sports and physical education. Such applied studies can be used to optimize and improve the training of athletes seeking better technique and movement efficiency.

The gyroscope data captured by sensors can be a significant source of information not only for the precise reproduction of movement in the digital twin but also for detecting anomalies in gait assessment. Such assessments are primarily carried out through visual observation by a medical professional, which can be imprecise and rely on highly subjective rating scales; this underscores the value of digital technologies as tools for capturing objective data and information for accurate diagnosis (Celik et al.(2021)).

Alvim, P., F. da Silva, J., Amorim, V., Lazaroni, P., Silva, M. and Oliveira, R.
The Power of Gyroscope Data: Advancing Human Movement Analysis for Walking and Running Activities.
DOI: 10.5220/0012702600003690
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 26th International Conference on Enterprise Information Systems (ICEIS 2024) - Volume 1, pages 510-519
ISBN: 978-989-758-692-7; ISSN: 2184-4992
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Connecting the sensor processing units (SPUs) directly to the mobile device (smartphone), instead of consolidating their data in a WPU as proposed in (Alvim et al.(2023)), can reduce the time it takes for information to reach the device for representation in the virtual twin. An intermediary device such as the WPU may increase this delay, and it is another critical element of the system, susceptible to errors and communication issues.

This paper presents a mobile application to capture and recognize human movement activities. Data is collected by a wearable device composed of sensors and transmitted to the application, where an AI model interprets it and classifies it into a type of movement. At the same time, the data is reproduced interactively in a virtual twin that replicates the user's activity.

Figure 1: Application usage representation.

The main contribution of this work is:

• A proposal for a mobile platform composed of an integrated hardware and software solution that reproduces real movements from data sent by a new wearable device and uses AI to classify human walking and running activities.

1.1 Why not Smartwatches?

Smartwatches are smart devices commonly used in healthcare and sports activities (Borowski-Beszta and Polasik(2020)). These devices can provide information about a person's physical condition and performance in a sports activity (Zhuang and Xue(2019)). In this context, smartwatches use the sensors embedded in their physical structure to estimate this information (Schiewe et al.(2020); Taghavi et al.(2019)). However, although these devices present useful information to the user while a particular activity is carried out, this information is inevitably imprecise because it comes from a single set of sensors at a single body location.

These sensors, such as a gyroscope and an accelerometer located at one specific position on the user's body, rely on the movement pattern of one of the user's arms to identify an activity, for example, swimming (Cosoli et al.(2022)). In that study, the authors used two smartwatches to identify swimming activity and minimize the inaccuracy of the information used in data classification. This illustrates the disadvantage of the smartwatch: to increase accuracy, more than one device is needed.

In contrast, our work seeks to identify walking and running activities by integrating four sensors on the user's legs with a mobile application. In this way, the application presents real information about the activity, and we can identify it more accurately than with a single smartwatch.

1.2 Paper Organization

This work is organized as follows: Section 2 presents a theoretical review of recent related work in the literature on AI and mobile applications for human activity recognition. Section 3 presents the requirements used to create the application and explains how the system collects data. Section 4 analyzes the developed app. Finally, Section 5 presents conclusions and future work.

2 THEORETICAL REFERENCES AND RELATED WORK

In this section, we present the results of a literature review, with an overview of human walking and of tools and mobile apps for activity recognition with intelligent devices.

2.1 Human Gait

The ability to walk is crucial for human life, representing one of the primary means of moving from one place to another. This movement is meticulously coordinated among the different segments of the body, involving a complex interaction between internal and external factors. Controlled by the neuromuscular and skeletal systems, walking is traditionally defined based on patterns of foot contact with the ground and biomechanical properties (Mirelman et al.(2018)).

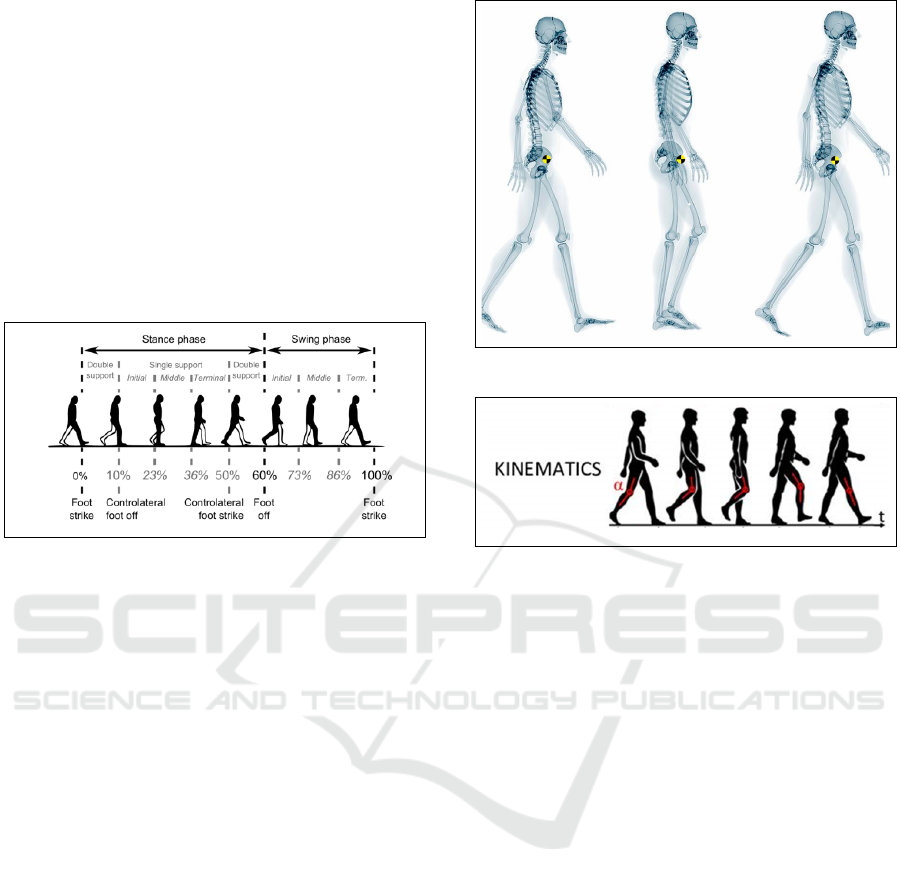

The gait cycle spans the interval from the moment one foot makes contact with the ground until that same foot touches the ground again, and it comprises two phases. Each limb undergoes a support phase, in which the foot is in contact with the ground, and a swing phase, in which it is not. The support phase, representing 60% of the cycle, can be subdivided into five subphases, and the swing phase, representing 40% of the cycle, into three subphases (Bonnefoy-Mazure and Armand(2015)).

Figure 2: Gait cycle phases and subdivisions (Bonnefoy-Mazure and Armand(2015)).
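As a minimal sketch of how the 60%/40% split could be applied to timestamped data, the following Python code derives gait cycles from successive initial-contact times of one foot and labels instants as stance (support) or swing. The class and function names and the contact times are hypothetical, and a fixed 60/40 split is only an approximation: in practice the proportions vary with speed and between individuals, and toe-off would normally be detected from the signal itself.

```python
from dataclasses import dataclass

# Proportions of the gait cycle cited in the text (Bonnefoy-Mazure and Armand, 2015).
STANCE_FRACTION = 0.60  # support phase: foot in contact with the ground
SWING_FRACTION = 0.40   # swing phase: foot off the ground


@dataclass
class GaitCycle:
    start: float  # time (s) of an initial contact of one foot
    end: float    # time (s) of the next initial contact of the SAME foot

    @property
    def duration(self) -> float:
        return self.end - self.start

    def phase_durations(self) -> tuple[float, float]:
        """Approximate (stance, swing) durations in seconds."""
        return self.duration * STANCE_FRACTION, self.duration * SWING_FRACTION

    def phase_at(self, t: float) -> str:
        """Label an instant inside this cycle as 'stance' or 'swing'."""
        if not self.start <= t < self.end:
            raise ValueError("t lies outside this gait cycle")
        progress = (t - self.start) / self.duration  # 0.0 <= progress < 1.0
        return "stance" if progress < STANCE_FRACTION else "swing"


def cycles_from_contacts(contact_times: list[float]) -> list[GaitCycle]:
    """Consecutive initial contacts of one foot delimit consecutive cycles."""
    return [GaitCycle(a, b) for a, b in zip(contact_times, contact_times[1:])]


cycles = cycles_from_contacts([0.0, 1.1, 2.2])  # hypothetical contact times (s)
first = cycles[0]
print(first.phase_durations())
print(first.phase_at(0.5), first.phase_at(0.9))
```

Keeping the split in named constants makes it easy to replace with per-subject values once toe-off events are available.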

The center of gravity of the human body is the point at which the body's entire mass can be considered to be concentrated; it is a simplified representation of the mean position of the total body weight (Yiou et al.(2017)). During gait, it is crucial to keep the projection of the center of gravity (CG) within the base of support to ensure stability and balance. When the CG moves outside this base, imbalance occurs, increasing the risk of falls. Therefore, controlling and maintaining the stability of the CG are essential for safe and efficient gait. This involves coordinating the movement of body segments to minimize any displacement of the CG during walking (Moon et al.(2022)). Both the energy cost of walking and movement stability are related to the kinematics of the CG. Individuals are constrained to specific movements during walking, and decreased dynamic stability of the CG directly affects energy expenditure and may make movement less stable (Tucker et al.(1998)).
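The definition of the CG as a mass-weighted mean, together with the requirement that it stay over the base of support, can be sketched in Python as below. The segment masses, positions, and foot polygon are hypothetical illustrative values, and checking the CG's ground projection with a point-in-polygon (ray-casting) test is a common static simplification; dynamic balance measures also consider CG velocity, which this sketch ignores.

```python
from typing import Sequence

Point2 = tuple[float, float]         # (x, y) on the ground plane, metres
Point3 = tuple[float, float, float]  # (x, y, z) with z vertical, metres


def center_of_gravity(masses: Sequence[float], positions: Sequence[Point3]) -> Point3:
    """Mass-weighted mean of the segments' own centers of mass."""
    total = sum(masses)
    return tuple(
        sum(m * p[axis] for m, p in zip(masses, positions)) / total for axis in range(3)
    )


def inside_base_of_support(cg: Point3, polygon: Sequence[Point2]) -> bool:
    """Ray-casting test: is the CG's vertical projection inside the support polygon?"""
    x, y = cg[0], cg[1]
    inside = False
    for i in range(len(polygon)):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % len(polygon)]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray through (x, y)
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Two hypothetical body "segments": mass (kg) and center-of-mass position (m).
masses = [30.0, 40.0]
positions = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.2)]
cg = center_of_gravity(masses, positions)

# Hypothetical rectangular base of support under both feet (x forward, y lateral).
feet = [(-0.1, -0.15), (0.2, -0.15), (0.2, 0.15), (-0.1, 0.15)]
print(cg, inside_base_of_support(cg, feet))
print(inside_base_of_support((0.3, 0.0, 1.0), feet))  # CG projected ahead of the toes
```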

The study of kinematics describes the movements of body segments. Given the complexity of the human body and its motions, modeling is essential for simplifying these mechanisms. Quantifying joint kinematics in three dimensions is paramount for understanding and characterizing human body movements (Pacher et al.(2020)).

Figure 3: Representation of human body center of gravity.

Figure 4: Representation of knee kinematics in the gait cycle.
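A common planar simplification of the knee kinematics illustrated in Figure 4 treats the thigh and shank as rigid segments in the sagittal plane and defines knee flexion as the difference between their orientations. The Python sketch below assumes hip, knee, and ankle positions are already known in metres (x forward, y up); the coordinates are hypothetical, and this is an illustrative 2D model, not the three-dimensional quantification discussed above.

```python
import math

Point = tuple[float, float]  # (x forward, y up) in the sagittal plane, metres


def segment_angle(proximal: Point, distal: Point) -> float:
    """Segment orientation in degrees, measured from the downward vertical
    (positive when the distal end lies forward of the proximal end)."""
    dx = distal[0] - proximal[0]
    dy = distal[1] - proximal[1]
    return math.degrees(math.atan2(dx, -dy))


def knee_flexion(hip: Point, knee: Point, ankle: Point) -> float:
    """Relative knee angle: thigh angle minus shank angle (0 deg = fully extended)."""
    return segment_angle(hip, knee) - segment_angle(knee, ankle)


# Hypothetical joint positions.
straight = knee_flexion((0.0, 1.0), (0.0, 0.5), (0.0, 0.0))
flexed = knee_flexion((0.0, 1.0), (0.2, 0.55), (0.0, 0.1))  # knee pushed forward
print(round(straight, 2), round(flexed, 2))
```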

2.2 AI Tools Applications in Human Recognition

Advances in gait analysis with machine learning are changing how we understand biomechanical systems. After the most suitable technique is selected, the model is trained and checked on the training set to see how well it works. Its performance is then tested on the test set. If it is accurate enough, the process ends; otherwise, the model is adjusted and training continues until the desired accuracy is reached. To keep the system less complex, careful feature selection is key (Khera and Kumar(2020)).
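The train, evaluate, adjust, and test loop described above can be sketched with a toy example in plain Python. Everything here is hypothetical: a synthetic one-dimensional feature stands in for real gait features, a k-nearest-neighbours classifier stands in for whatever model is chosen, and "adjusting the model" simply means trying another k. Unlike the loop as summarized in the text, this sketch selects the model on a separate validation split and uses the test split only once, a common practice for keeping the test estimate unbiased.

```python
import random

CANDIDATE_K = (1, 3, 5, 7, 9, 15)  # odd values avoid voting ties between two classes
TARGET_ACCURACY = 0.95             # hypothetical "accurate enough" threshold


def make_toy_data(n: int, seed: int) -> list[tuple[float, int]]:
    """Synthetic feature: class 0 ('stand') near 0.2, class 1 ('walk') near 1.0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 0.2 if label == 0 else 1.0
        data.append((rng.gauss(center, 0.15), label))
    return data


def knn_predict(train: list[tuple[float, int]], x: float, k: int) -> int:
    nearest = sorted(train, key=lambda sample: abs(sample[0] - x))[:k]
    votes_for_1 = sum(label for _, label in nearest)
    return 1 if 2 * votes_for_1 > k else 0


def accuracy(train, split, k: int) -> float:
    return sum(knn_predict(train, x, k) == y for x, y in split) / len(split)


data = make_toy_data(300, seed=7)
train, val, test = data[:180], data[180:240], data[240:]

chosen_k = None
for k in CANDIDATE_K:                       # "adjust the model" = try the next k
    val_acc = accuracy(train, val, k)
    print(f"k={k}: validation accuracy {val_acc:.2f}")
    if val_acc >= TARGET_ACCURACY:
        chosen_k = k
        break
if chosen_k is None:                        # target never reached: keep the best k seen
    chosen_k = max(CANDIDATE_K, key=lambda k: accuracy(train, val, k))

test_acc = accuracy(train, test, chosen_k)  # evaluated once, after model selection
print(f"chosen k={chosen_k}, test accuracy {test_acc:.2f}")
```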

Artificial intelligence (AI) algorithms are fundamental for building new tools for recognizing human activities, such as deep-learning-based human movement recognition (Wang et al.(2018)). Combined with information received from other devices and with user-friendly visual interfaces, they form promising solutions for building new systems (Demrozi et al.(2020); Ann and Theng(2014)).

Some authors use embedded devices running convolutional neural networks (Xu and Qiu(2021)). However, applying these techniques can demand considerable computational power, which restricts their use on some devices. Thus, the proposed app presents the information sent by the wearable sensors to an Android device, decentralizing tasks to optimize the resources used throughout the system.

2.3 Mobile Applications and Wearables

Wearable technology, using accelerometers, gyroscopes, and magnetometers, provides a means to measure a combination of gait variables. These devices have gained popularity due to their ease of use and affordability. Wearable devices can measure various aspects of gait during running in different environments, which can contribute to our understanding of running performance, fatigue, and injury mechanisms (Mason et al.(2023)).

Most studies involving sensors use inertial measurement units (IMUs). These units are generally equipped with accelerometers and gyroscopes, and some models also include magnetometers. Gyroscope data makes reproduction easier because no translation is required: the angular velocity is the same at any point of a rigid body segment. Gyroscopes also suffer from less noise than accelerometers (Prasanth et al.(2021)).
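The claim that angular velocity is the same everywhere on a rigid segment, while linear quantities are not, can be checked numerically. The Python sketch below uses the rigid-body relations v = ω × r and, assuming a constant rotation rate and ignoring gravity, a = ω × (ω × r); the angular rate and the two sensor offsets along a shank are hypothetical values chosen for illustration.

```python
Vec = tuple[float, float, float]


def cross(a: Vec, b: Vec) -> Vec:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def linear_velocity(omega: Vec, r: Vec) -> Vec:
    """Velocity of a point at offset r from the rotation axis: v = omega x r."""
    return cross(omega, r)


def centripetal_acceleration(omega: Vec, r: Vec) -> Vec:
    """a = omega x (omega x r): what an accelerometer adds at that point
    (constant rotation rate, gravity ignored)."""
    return cross(omega, cross(omega, r))


omega = (2.0, 0.0, 0.0)        # rad/s about X; a gyroscope anywhere on the segment reads this
near_knee = (0.0, -0.10, 0.0)  # m below the pivot (hypothetical mounting point)
near_ankle = (0.0, -0.40, 0.0)  # m below the pivot (hypothetical mounting point)

for name, r in (("near knee", near_knee), ("near ankle", near_ankle)):
    print(name, linear_velocity(omega, r), centripetal_acceleration(omega, r))
```

Both points share the same ω, but the velocity and acceleration at the lower point are four times larger, so an accelerometer-based reconstruction would depend on exactly where the sensor sits.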

In the literature, we find examples of mobile applications that perform similar tasks, for instance, applications that perform this recognition in real time (Lara and Labrador(2012)). These applications are commonly used in healthcare (Zaki et al.(2020a)). Apps developed in this context also frequently run on smartphones and use the device's own sensors, such as the gyroscope and accelerometer (Zaki et al.(2020b); Győrbíró et al.(2009)). This single point of view can bias the recognition of the activity. Thus, this work proposes applying AI classification on a mobile device with data collected by externally distributed sensors, which offer greater precision than a single sensor unit such as a smartphone.

3 PROPOSED SYSTEM

In this section, we present the development of the proposed work. We discuss the requirements for building the mobile application and present the interface design and the AI module.

3.1 System Requirements

Before proposing the application, we must identify the requirements for its operation. We performed this evaluation by inspecting the system features necessary to perform all the proposed tasks. The specific requirements for developing the proposed application are:

• User-friendly computer interface design.
• Definition of the minimum hardware requirements for the application to work.
• Construction of the virtual twin replicating the user's movements and of an interface indicating the type of movement.
• Development of the history functionality, which will present the path traveled on the map and the replication of the movement.
• A statistics screen containing quantitative data on each activity performed.

3.2 Overview of the Proposed System

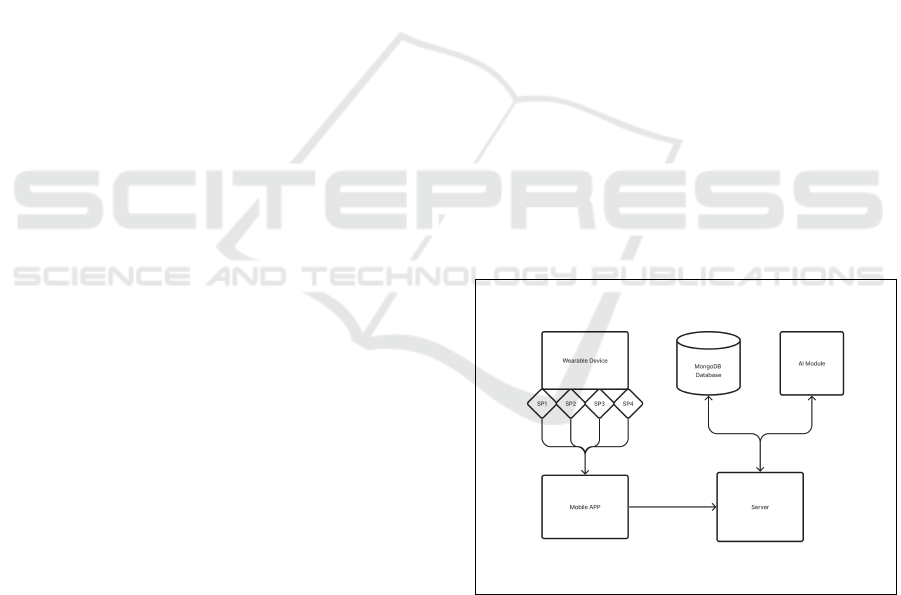

The proposed system consists of three modules: the wearable device containing the sensors, the mobile application, and the application server. The wearable device comprises four sensors positioned on the user's legs, which collect data on the user's actions; this data serves as the baseline for the prediction algorithm. The collected data is then sent to the mobile application, which forwards it to the application server and replicates the movements in the digital twin. The server stores the data in the database and classifies the movement using artificial intelligence. Figure 5 displays the dataflow diagram of the proposed system.

Figure 5: System diagram.
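To make the dataflow of Figure 5 concrete, the Python sketch below models one gyroscope sample and the two roles the mobile application plays: updating the twin's pose and forwarding the sample to the server, which stores it for classification. All class and field names are hypothetical; the field set (sensor identifier, timestamp, X, Y, Z) mirrors the dataset description in Section 3.3.1, while the in-memory list standing in for the database and the omitted classifier are placeholders, not the authors' implementation.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class GyroSample:
    sensor_id: int     # which of the four SPUs produced the reading (hypothetical IDs)
    timestamp_ms: int  # capture time
    x: float
    y: float
    z: float


@dataclass
class Server:
    stored: list[GyroSample] = field(default_factory=list)  # stand-in for the database

    def ingest(self, sample: GyroSample) -> None:
        self.stored.append(sample)  # a real server would also run the classifier


@dataclass
class MobileApp:
    server: Server
    twin_pose: dict[int, tuple[float, float, float]] = field(default_factory=dict)

    def on_sample(self, sample: GyroSample) -> None:
        # 1) drive the corresponding leg of the virtual twin with the newest values
        self.twin_pose[sample.sensor_id] = (sample.x, sample.y, sample.z)
        # 2) forward the raw sample for storage and classification
        self.server.ingest(sample)


server = Server()
app = MobileApp(server)
for sensor_id in range(1, 5):  # one reading from each of four SPUs
    app.on_sample(GyroSample(sensor_id, 0, 0.0, 0.1 * sensor_id, 0.0))
print(len(server.stored), sorted(app.twin_pose))
```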

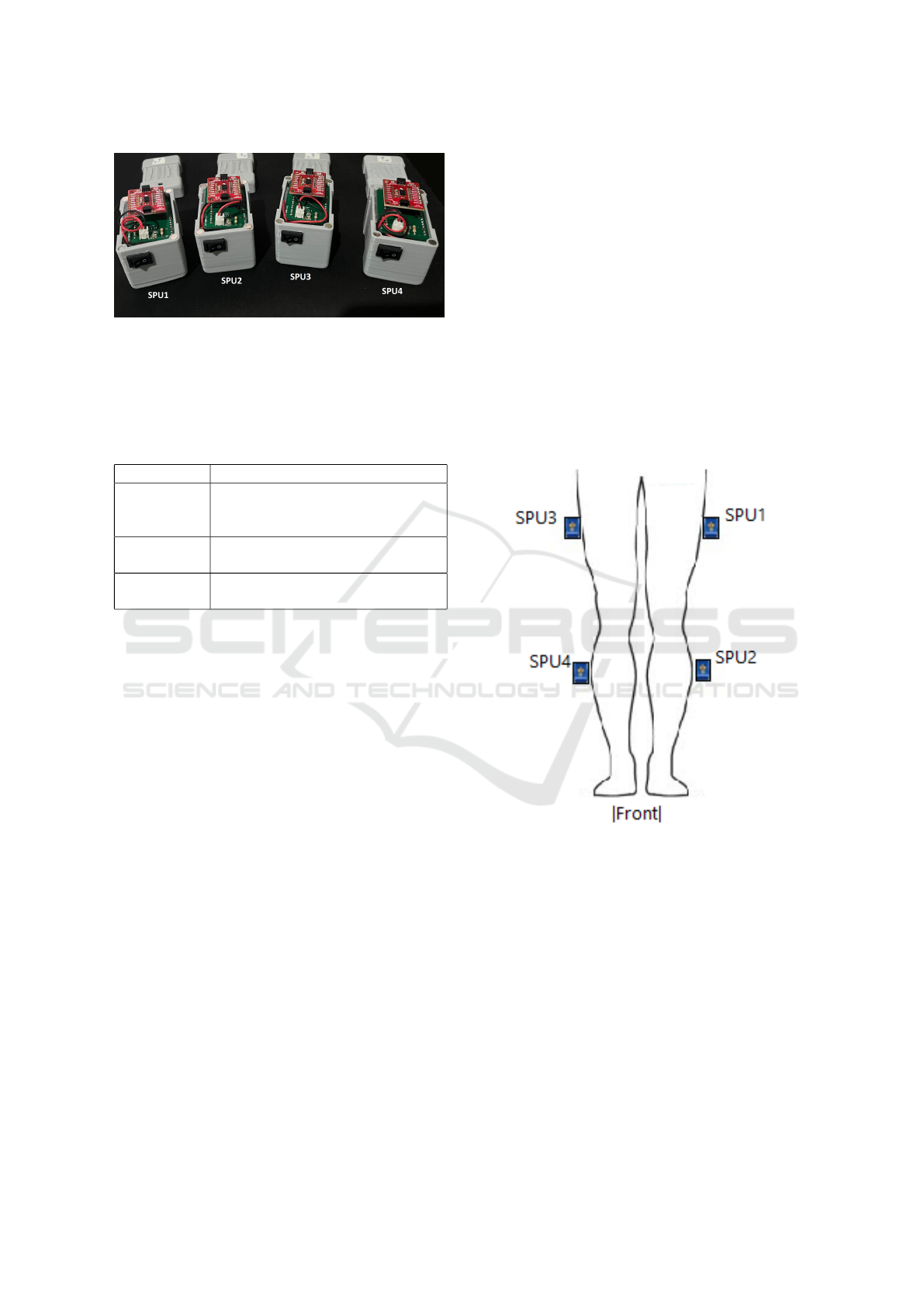

3.2.1 Wearable Device and Sensors

Figure 6 shows the sensors (SPUs) used to capture the user action data. The SPUs contain state-of-the-art IMUs (Inertial Measurement Units) to capture the physical movement of the user's legs. Figure 7 shows where the sensors are positioned on the body to collect data.


Figure 6: Wearable device used to collect the individual's movement data.

Sensor Processing Unit – SPU

Each SPU consists of the hardware listed in Table 1:

Table 1: SPU hardware description.

| Component | Description |
|---|---|
| BNO080 IMU | 9-degree-of-freedom inertial sensor providing accelerometer, gyroscope, and magnetometer readings. |
| Li-ion battery | Power source for the device. |
| NodeMCU ESP-32 | Hardware platform based on the Espressif ESP-32 solution. |

Requirements such as weight and size are essential when building these devices (Niu et al.(2018)). Because the sensors we developed are made of lightweight components, they are comfortable for users and allow free movement while carrying out activities.

The sensors can capture accelerometer, gyroscope, and magnetometer data, in addition to quaternions. To represent motion in the virtual twin, we use gyroscope data comprising values for the X, Y, and Z axes. When the sensor rotates about any of these axes, it returns a positive or negative value related to that rotation. Each SPU transmits gyroscope data approximately every 50 ms, i.e., 20 samples per second, which allows us to accurately replicate user movements in the virtual twin. A short update interval is important because longer intervals can desynchronize the virtual twin's movements from the user's. The same problem can occur if data transmission fails due to interference or if an SPU drops out: the user's movement may change while no data is being sent, causing the virtual twin to become desynchronized.
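Because a dropped or delayed transmission is what desynchronizes the twin, the receiver can check each SPU's timestamps against the nominal 50 ms interval. The Python sketch below flags gaps and estimates how many samples were lost; the tolerance value and the example stream are hypothetical, and how the app should react (for example, snapping the twin to the newest sample rather than interpolating across the gap) is left as a design decision.

```python
EXPECTED_PERIOD_MS = 50  # nominal SPU send interval stated in the text
TOLERANCE_MS = 25        # hypothetical allowance for radio/scheduling jitter


def find_gaps(timestamps_ms: list[int],
              period_ms: int = EXPECTED_PERIOD_MS,
              tolerance_ms: int = TOLERANCE_MS) -> list[tuple[int, int, int]]:
    """Return (previous_ts, next_ts, estimated_missing_samples) for each gap
    in the time-ordered stream of ONE sensor."""
    gaps = []
    for prev, nxt in zip(timestamps_ms, timestamps_ms[1:]):
        delta = nxt - prev
        if delta > period_ms + tolerance_ms:
            gaps.append((prev, nxt, round(delta / period_ms) - 1))
    return gaps


stream = [0, 50, 100, 250, 300, 351]  # hypothetical stream: two samples lost after 100 ms
print(find_gaps(stream))  # [(100, 250, 2)]
```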

Each sensor was configured to send data every 50 milliseconds over a Bluetooth connection, i.e., 20 samples per second. Combining the data from the 4 sensors results in a total of 80 samples per second. With 4-byte floating-point values for each of the three axes, each sensor reading takes 12 bytes. Multiplying this by the 80 readings per second across the 4 sensors gives a rate of 960 bytes per second, well below the total capacity of a Bluetooth connection and leaving a sufficient margin for complete data transmission.
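The throughput estimate above can be reproduced with Python's standard `struct` module, which confirms that three little-endian 32-bit floats occupy 12 bytes. The second figure adds a hypothetical frame with a one-byte sensor identifier and a 4-byte timestamp, since the text does not specify the actual packet layout; even then the rate stays small, and Bluetooth protocol overhead is still not included.

```python
import struct

SAMPLE_PERIOD_S = 0.050  # each SPU sends every 50 ms
NUM_SENSORS = 4

readings_per_second = round(1 / SAMPLE_PERIOD_S) * NUM_SENSORS  # 20 * 4 = 80

# Payload described in the text: X, Y, Z as 4-byte floats.
gyro_format = "<3f"
bytes_per_reading = struct.calcsize(gyro_format)        # 12
payload_rate = bytes_per_reading * readings_per_second  # 960 B/s

# Hypothetical framing: uint8 sensor id + uint32 timestamp + X, Y, Z ("<" = no padding).
framed_format = "<BI3f"
framed_rate = struct.calcsize(framed_format) * readings_per_second  # 17 * 80 = 1360 B/s

packet = struct.pack(framed_format, 2, 123450, 0.1, -0.2, 0.3)
print(readings_per_second, bytes_per_reading, payload_rate, framed_rate, len(packet))
```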

Another crucial aspect is aligning the rotation-axis values with those used in the virtual twin. The sensor board is marked with the orientation of its X, Y, and Z axes; if the board is mounted in a different orientation, the values must be remapped so that the data corresponds correctly.
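One way to express this adjustment is a signed axis permutation that maps each twin axis to a sensor axis. In the Python sketch below, the mounting (board Y along the twin's X axis, board X along the twin's negative Y axis) and the function names are hypothetical. The determinant check is included because a mapping with determinant -1 mirrors the data: that is what a conversion between right- and left-handed frames requires (Unity, for instance, uses a left-handed coordinate system), but it is an error when both frames share the same handedness.

```python
# Each entry: (index of the sensor axis, sign) feeding twin X, Y, Z respectively.
# Hypothetical mounting: board Y -> twin X, board X -> twin -Y, board Z -> twin Z.
AXIS_MAP = ((1, 1.0), (0, -1.0), (2, 1.0))


def remap(sample: tuple[float, float, float], axis_map=AXIS_MAP) -> tuple[float, ...]:
    """Express a sensor-frame (x, y, z) reading in the twin's axes."""
    return tuple(sign * sample[index] for index, sign in axis_map)


def mapping_determinant(axis_map) -> float:
    """+1 for a proper rotation, -1 if the mapping mirrors (flips handedness)."""
    m = [[0.0] * 3 for _ in range(3)]
    for row, (index, sign) in enumerate(axis_map):
        m[row][index] = sign
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


print(remap((1.0, 2.0, 3.0)), mapping_determinant(AXIS_MAP))
```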

At present, both the hardware and its embedded software are fully developed, making it possible to capture all the data types mentioned earlier and transmit them via Bluetooth.

Figure 7: Wearable device positions.

The System - During development, the interface and the virtual twin proved to be highly responsive. By feeding gyroscope values into the avatar's control variables, we were able to precisely control the rotation axes of its legs. We first tested the feasibility of using quaternions to replicate user movement in the virtual twin. However, although Unity accepts these values for object modification, we observed that the avatar did not respond correctly to user movements when they were applied to it directly. In contrast, gyroscope data allowed an exact reproduction of the device's movement in the virtual object, as shown in Figure 8.


Figure 8: Sensor at (a) 0°, (b) 45°, (c) 90°, and (d) -45° rotation.
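The text does not state whether the values fed to the avatar are angular rates or angles. If an SPU reports angular rate, as raw gyroscope output does, the rotation applied to the twin's leg can be accumulated sample by sample at the 50 ms interval, as in the Python sketch below; the function names and the constant 90 °/s swing are hypothetical. Plain integration of this kind drifts over time, which is one reason sensor-fusion outputs are often preferred for long recordings.

```python
import math

DT_S = 0.050  # SPU update interval from the text


def integrate_rates(rates_deg_s: list[float], start_deg: float = 0.0,
                    dt: float = DT_S) -> list[float]:
    """Rectangle-rule integration of angular-rate samples (deg/s) into angles (deg)."""
    angle = start_deg
    angles = []
    for rate in rates_deg_s:
        angle += rate * dt
        angles.append(angle)
    return angles


def leg_direction(angle_deg: float) -> tuple[float, float, float]:
    """Unit vector of a leg segment that hangs along -Y, after rotating it about X."""
    a = math.radians(angle_deg)
    return (0.0, -math.cos(a), -math.sin(a))


angles = integrate_rates([90.0] * 10)  # hypothetical: 0.5 s of a constant 90 deg/s swing
print(round(angles[-1], 6), leg_direction(angles[-1]))
```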

3.2.2 System Interfaces and Functionalities

We used the Unity framework to develop the mobile application. Unity is a game engine that allows virtual characters to be created and controlled easily and intuitively, in addition to providing several tools for interface design. We developed a 3D character and a test scenario for the presented prototype. Figure 9 displays the application's main screen.

The digital twin behaves according to the user's movement, representing an abstraction of the measurements provided by the model. The data will be passed from the sensors to the server, which will save it in the database together with the time and the user's route captured by GPS. The same data will be passed to the application, which will map the movement onto the virtual twin's body to replicate it, while the AI module classifies the movement and presents the result in the "Movement Type" field, whose possible values are standing, walking, or running.

Another critical part of the application will be the user history, containing a chronological representation of the captured data. On this screen, it will be possible to see the path taken by the user, represented on a map using the GPS data retrieved by the application. It will also be possible to visualize the movement performed by the user along the way, represented by the virtual twin, together with the classification of the movement.

Figure 9: Virtual twin and UI prototype.

Finally, the application will also provide statistics with quantitative data such as the time spent performing each activity (standing, walking, or running), the distance covered by the user for each type of movement, and the total time spent on the activity. These statistics are presented through values and graphs.
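A minimal sketch of how these statistics could be computed from a classified, GPS-tagged track is shown below in Python. It assumes each track point carries a timestamp, latitude, longitude, and the predicted movement type, and it credits every interval between consecutive points to the label at the interval's start; the great-circle (haversine) distance with a mean Earth radius is a standard approximation, and the coordinates are hypothetical.

```python
import math
from collections import defaultdict

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    h = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def activity_stats(track):
    """track: time-ordered (t_seconds, lat, lon, label) tuples.
    Returns (seconds per label, metres per label)."""
    seconds = defaultdict(float)
    metres = defaultdict(float)
    for (t0, lat0, lon0, label), (t1, lat1, lon1, _) in zip(track, track[1:]):
        seconds[label] += t1 - t0
        metres[label] += haversine_m(lat0, lon0, lat1, lon1)
    return dict(seconds), dict(metres)


track = [  # hypothetical classified GPS track
    (0, -20.3850, -43.5030, "standing"),
    (10, -20.3850, -43.5030, "walking"),
    (20, -20.3849, -43.5030, "walking"),
    (30, -20.3848, -43.5030, "running"),
    (35, -20.3846, -43.5030, "running"),  # last point only closes the previous interval
]
time_s, dist_m = activity_stats(track)
print(time_s)
print({label: round(d, 1) for label, d in dist_m.items()})
```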

Another feature is the configuration of specific application parameters. For instance, the user can choose the number of days for which historical data is stored before it is cleaned, set the accuracy of movement classification, and check the status of the sensors.

3.3 AI Module

The AI algorithm for classifying the wearable device data uses long short-term memory (LSTM) recurrent neural networks (RNNs) (Hochreiter and Schmidhuber(1997)). These deep learning networks are commonly used to learn from events through time-series analysis, as in human activity recognition (HAR) (Mekruksavanich and Jitpattanakul(2021)).

Because a human activity such as walking depends on information over time, this method is appropriate in this context. The data can thus be classified and sent to the mobile app to present the digital twin of the predicted activity.
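For readers unfamiliar with LSTMs, the Python sketch below implements the standard cell equations (input, forget, and output gates plus a candidate state) in plain Python and runs them over one window of samples. It is illustrative only: the weights are random and untrained, and the input size of 12 (X, Y, Z for each of four sensors), the hidden size, and the 20-step window (1 s at 20 Hz) are assumptions, since the model's architecture and hyperparameters are not reported here. In practice a framework implementation would be trained, and the final hidden state would typically feed a dense softmax layer over the activity classes.

```python
import math
import random


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def matvec(matrix: list[list[float]], vector: list[float]) -> list[float]:
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class LSTMCell:
    """Single LSTM cell, forward pass only, with random (untrained) weights."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = random.Random(seed)

        def rand_matrix(rows: int, cols: int) -> list[list[float]]:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.n_hidden = n_hidden
        # One (W, U, b) triple per gate: i = input, f = forget, o = output, g = candidate.
        self.params = {
            gate: (rand_matrix(n_hidden, n_in), rand_matrix(n_hidden, n_hidden), [0.0] * n_hidden)
            for gate in "ifog"
        }

    def step(self, x, h, c):
        pre = {
            gate: [wx + uh + bias for wx, uh, bias in zip(matvec(W, x), matvec(U, h), b)]
            for gate, (W, U, b) in self.params.items()
        }
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        c_next = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]  # forget + write
        h_next = [ov * math.tanh(cv) for ov, cv in zip(o, c_next)]           # gated output
        return h_next, c_next

    def run(self, sequence: list[list[float]]) -> list[float]:
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in sequence:
            h, c = self.step(x, h, c)
        return h  # final hidden state summarizing the whole window


# Assumed sizes: 12 features = 4 sensors x 3 axes; 20 steps = 1 s at 20 Hz.
cell = LSTMCell(n_in=12, n_hidden=8)
window = [[0.01 * t] * 12 for t in range(20)]  # dummy window, not real sensor data
h_final = cell.run(window)
print([round(v, 4) for v in h_final])
```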

To evaluate the algorithm, we used the standard evaluation metrics Precision, Recall, and F1-Score (Hossin and Sulaiman(2015)). Precision (Equation 1) is the fraction of samples assigned to a class that truly belong to it; Recall (Equation 2) measures how many of the positive samples in the set the system finds; and the F1-Score (Equation 3) is the harmonic mean of precision and recall.

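$$\text{Precision} = \frac{TP}{TP + FP} \qquad (1)$$

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (2)$$

$$\text{F1-Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (3)$$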

True positives (TP) are positive samples correctly classified by the model, and true negatives (TN) are negative samples correctly classified as negative. False positives (FP) are negative samples incorrectly classified as positive, and false negatives (FN) are positive samples incorrectly classified as negative. Finally, a confusion matrix is also used to visualize the distribution of correct and incorrect classifications for each class.
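Equations (1) to (3), the confusion matrix, and the macro and weighted averages reported in Table 2 can all be computed directly from true and predicted labels. The Python sketch below uses a tiny made-up set of labels rather than the paper's data; the averaging follows the usual convention (macro = unweighted mean over classes, weighted = mean weighted by each class's support), as in scikit-learn's `classification_report`.

```python
from collections import Counter

METRICS = ("precision", "recall", "f1")


def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def per_class_report(y_true, y_pred, labels):
    pairs = list(zip(y_true, y_pred))
    report = {}
    for c in labels:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0   # Equation (1)
        recall = tp / (tp + fn) if tp + fn else 0.0      # Equation (2)
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)            # Equation (3)
        report[c] = {"precision": precision, "recall": recall, "f1": f1, "support": tp + fn}
    return report


def averages(report):
    total = sum(r["support"] for r in report.values())
    macro = {m: sum(r[m] for r in report.values()) / len(report) for m in METRICS}
    weighted = {m: sum(r[m] * r["support"] for r in report.values()) / total for m in METRICS}
    return macro, weighted


labels = ["stand", "walk"]
y_true = ["stand"] * 5 + ["walk"] * 3                # made-up labels
y_pred = ["stand"] * 5 + ["walk", "walk", "stand"]   # one walk sample misclassified
report = per_class_report(y_true, y_pred, labels)
macro, weighted = averages(report)
print(confusion_matrix(y_true, y_pred, labels))  # [[5, 0], [1, 2]]
print(report["walk"], macro, weighted)
```

The support-weighted recall equals the overall accuracy (7/8 in this toy case), which is why the weighted-average recall and the global accuracy usually coincide in reports like Table 2.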

3.3.1 The Dataset

The data used (dat(2024)) to create the digital twins in the mobile application and to train the artificial intelligence model were captured and processed by a wearable solution attached to the lower part of the user's body. The dataset was obtained from gyroscopes located on the user's legs, which record movements along the X, Y, and Z axes; for each sensor, it contains the values along these three axes. Each record also includes the sensor identifier and the timestamp at which it was captured. The data were collected with the aim of recognizing standing and walking activities.
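Before records like these can be fed to a sequence model, readings from the four sensors have to be aligned in time and cut into fixed-length windows. The Python sketch below shows one way to do that; the record layout (sensor identifier, timestamp in milliseconds, X, Y, Z), the sensor IDs 1 to 4, bucketing timestamps into 50 ms slots, and the window length and step are all assumptions for illustration rather than the published dataset's exact schema or the authors' preprocessing.

```python
from collections import defaultdict

SENSOR_IDS = (1, 2, 3, 4)  # hypothetical identifiers of the four SPUs
SLOT_MS = 50               # one slot per nominal SPU sample period


def to_frames(records, sensor_ids=SENSOR_IDS, slot_ms=SLOT_MS):
    """records: iterable of (sensor_id, timestamp_ms, x, y, z).
    Returns one 12-value frame (X, Y, Z per sensor) per time slot in which every
    sensor reported; if a sensor reports twice in a slot, the later record wins."""
    slots = defaultdict(dict)
    for sensor_id, timestamp_ms, x, y, z in records:
        slots[timestamp_ms // slot_ms][sensor_id] = (x, y, z)
    frames = []
    for slot in sorted(slots):
        readings = slots[slot]
        if all(s in readings for s in sensor_ids):  # skip slots with a missing sensor
            frames.append([value for s in sensor_ids for value in readings[s]])
    return frames


def sliding_windows(frames, length, step):
    """Overlapping windows of `length` consecutive frames, advancing by `step`."""
    return [frames[i:i + length] for i in range(0, len(frames) - length + 1, step)]


# Synthetic records: 10 slots, every sensor reporting a few ms after the slot start.
records = [(s, slot * SLOT_MS + s, 0.0, 0.1 * s, 0.0) for slot in range(10) for s in SENSOR_IDS]
frames = to_frames(records)
windows = sliding_windows(frames, length=4, step=2)
print(len(frames), len(frames[0]), len(windows))
```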

For preliminary testing of the AI model, only standing and walking events were used, in order to validate training, prediction, and classification; data augmentation was also applied to the data generated by the sensors. The next step is to collect more data on these events and to capture data on the running event, which is somewhat more complex because of the user's speed and the sensor stability required to capture the data accurately.
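The text mentions data augmentation without specifying the method. Two common time-series augmentations for inertial data are adding small Gaussian noise (jitter) and scaling each channel by a random factor close to 1; the Python sketch below applies both to windows shaped like LSTM input. The noise levels and function names are hypothetical, and augmented copies should be generated from training windows only so that they do not leak into validation or test sets.

```python
import random


def jitter(window, sigma, rng):
    """Add independent Gaussian noise to every value."""
    return [[v + rng.gauss(0.0, sigma) for v in frame] for frame in window]


def scale(window, sigma, rng):
    """Multiply each channel by one random factor drawn around 1.0."""
    factors = [rng.gauss(1.0, sigma) for _ in window[0]]
    return [[v * f for v, f in zip(frame, factors)] for frame in window]


def augment(windows, labels, copies=2, jitter_sigma=0.01, scale_sigma=0.05, seed=0):
    """Return the originals plus `copies` augmented versions of each, labels preserved.
    The sigma defaults are hypothetical, not values from the paper."""
    rng = random.Random(seed)
    out_windows, out_labels = list(windows), list(labels)
    for window, label in zip(windows, labels):
        for _ in range(copies):
            out_windows.append(scale(jitter(window, jitter_sigma, rng), scale_sigma, rng))
            out_labels.append(label)
    return out_windows, out_labels


train_windows = [[[0.1] * 12 for _ in range(5)] for _ in range(3)]  # 3 windows, 5 steps, 12 channels
train_labels = ["stand", "walk", "walk"]
aug_windows, aug_labels = augment(train_windows, train_labels)
print(len(aug_windows), aug_labels)
```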

4 RESULTS AND OPPORTUNITIES

This paper presents the initial findings of the system, showing outcomes derived from the data acquired through the sensors and processed by the AI model. Additionally, we outline various challenges encountered in application development and share insights gained during the development process as learning experiences in this area.

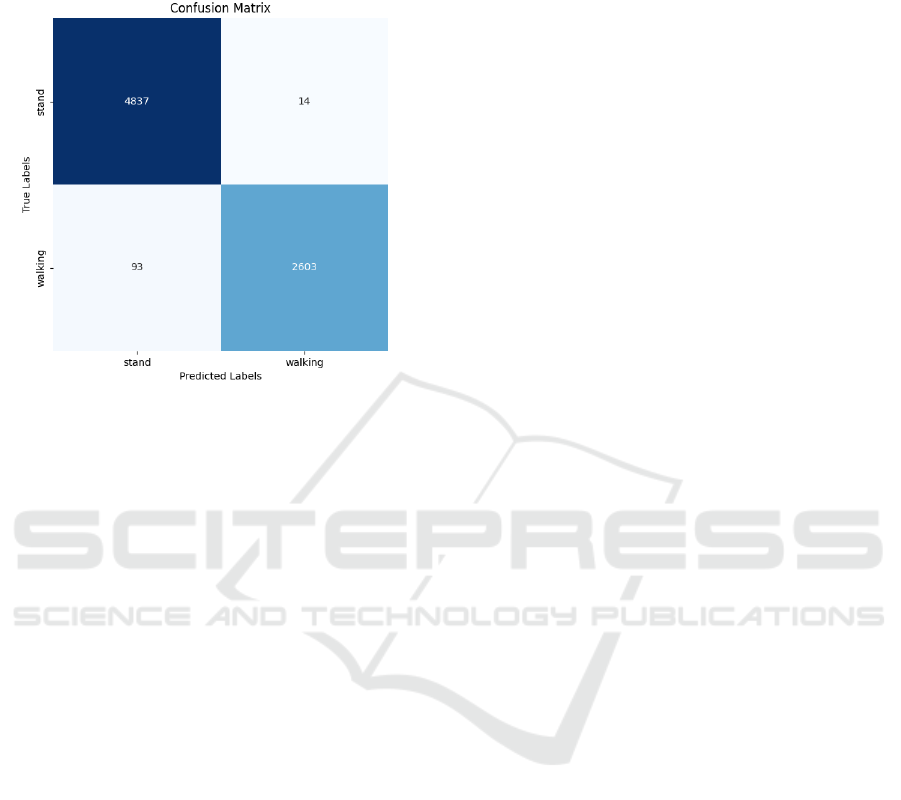

The AI Model - We trained the AI model offline with the collected data. The model learned to classify two main activities: standing and walking. We assessed its performance using standard measures, namely precision, recall, and F1-score for each activity, as well as overall averages. The training process using an LSTM model proved effective.

Figure 10: Evaluation of the accuracy and loss values for the training and validation sets.

The results of training the LSTM model are depicted in Figure 10. The curves show a consistent trend of convergence across epochs, indicating minimal deviation from the desired outcome. This suggests that the model's performance was stable during training, with no evidence of overfitting. Overall, the convergence pattern reflects satisfactory progress in optimizing the AI model for the intended task. Table 2 displays the metrics for the LSTM.

Table 2: Metrics for the LSTM model.

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| stand | 0.98 | 1.00 | 0.99 | 4851 |
| walk | 0.99 | 0.97 | 0.98 | 2696 |
| Macro average | 0.99 | 0.98 | 0.99 | 7547 |
| Weighted average | 0.99 | 0.99 | 0.99 | 7547 |

Global accuracy: 99%

Figure 11 displays the test results for the AI model. In this test, we observe that the model accurately classified the data into the two classes in the dataset. The results indicate that the LSTM model can efficiently classify the sensor data.

Figure 11: Confusion Matrix.

Opportunities for Integrating the App with the System - One of the main benefits of this type of application is the possibility of improving the user's decision-making through the information provided by the app. For example, the system can present the activity performed by the individual on the interface of a mobile device. This presentation adds value for this purpose, since the graphical representation reinforces the classification made by the AI model.

5 CONCLUSION AND FUTURE WORK

In this work, we introduced an innovative system for real data capture that aims to virtually reproduce and classify walking and running activities. We used gyroscope data to capture the rotation of the axes of the lower limbs during movement, enabling a precise replication of the motion of these body parts in the virtual environment. Our results indicate that, by employing dedicated devices such as body-worn sensors instead of generic devices like smartwatches, more accurate and localized data can be captured. This allows for a granular and precise analysis of movement in each limb, in addition to its faithful reproduction.

The main contribution of this work is a mobile platform consisting of an integrated hardware and software solution for reproducing real movements. We demonstrated that gyroscope data, with its universal values, provides a more consistent approach than quaternion data. Furthermore, we discussed the significance of applying this technology in orthopedics and sports, emphasizing the relevance of studying human movement to understand the anatomical factors that influence movement mechanics. The proposed system serves as a starting point for developing more precise and optimized devices for various types of human data capture and analysis.

As future work, we highlight several promising directions for this research. First, we aim to further enhance the system by exploring different machine learning techniques and AI algorithms for activity classification. Additionally, we plan to broaden the system's scope to include a wider variety of physical activities, allowing for more comprehensive application in different contexts.

Integrating more sensors and optimizing the hardware are crucial to improving the system's accuracy and effectiveness. We also intend to implement a more robust communication interface between the real and virtual worlds, enabling an even more precise reproduction of the environment in which activities are performed.

Moreover, collecting data in real-world scenarios with a more diverse sample can enhance the generalization of the AI model, making it more robust across different contexts and for various users. Finally, we contemplate expanding the system to practical applications, such as health monitoring and personalized training, to maximize its impact on human well-being.

These future directions aim to refine the application and effectiveness of the proposed system, enabling significant advances in biomechanics, health, and physical training within the realm of computer science.

ACKNOWLEDGMENT

The authors would like to thank CAPES, CNPq, and Universidade Federal de Ouro Preto for supporting this work. This study was financed in part by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior - Brasil (CAPES) - Finance Code 001.

REFERENCES

2024. Gyroscope Human Stand and Walking Data.

https://www.kaggle.com/datasets/patricknunes/

gyroscope-human-stand-and-walking-data.

Patrick BN Alvim, Jonathan CF da Silva, Vicente JP

The Power of Gyroscope Data: Advancing Human Movement Analysis for Walking and Running Activities

517

Amorim, Pedro SO Lazaroni, Mateus Coelho Silva,

and Ricardo AR Oliveira. 2023. Towards a mobile

system with a new wearable device and an AI appli-

cation for walking and running activities. In Anais do

L Semin

´

ario Integrado de Software e Hardware. SBC,

155–166.

Ong Chin Ann and Lau Bee Theng. 2014. Human activ-

ity recognition: a review. In 2014 IEEE international

conference on control system, computing and engi-

neering (ICCSCE 2014). IEEE, 389–393.

Alice Bonnefoy-Mazure and St

´

ephane Armand. 2015. Nor-

mal gait. Orthopedic management of children with

cerebral palsy 40, 3 (2015), 567.

Mikołaj Borowski-Beszta and Michał Polasik. 2020. Wear-

able devices: new quality in sports and finance. Jour-

nal of Physical Education and Sport 20 (2020), 1077–

1084.

Y. Celik, S. Stuart, W.L. Woo, and A. Godfrey. 2021. Gait analysis in neurological populations: Progression in the use of wearables. Medical Engineering & Physics 87 (2021), 9–29. https://doi.org/10.1016/j.medengphy.2020.11.005

Gloria Cosoli, Luca Antognoli, Valentina Veroli, and Lorenzo Scalise. 2022. Accuracy and Precision of Wearable Devices for Real-Time Monitoring of Swimming Athletes. Sensors 22, 13 (2022), 4726.

Florenc Demrozi, Graziano Pravadelli, Azra Bihorac, and Parisa Rashidi. 2020. Human activity recognition using inertial, physiological and environmental sensors: A comprehensive survey. IEEE Access 8 (2020), 210816–210836.

Norbert Győrbíró, Ákos Fábián, and Gergely Hományi. 2009. An activity recognition system for mobile phones. Mobile Networks and Applications 14, 1 (2009), 82–91.

Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural computation 9, 8 (1997), 1735–1780.

Mohammad Hossin and Md Nasir Sulaiman. 2015. A review on evaluation metrics for data classification evaluations. International journal of data mining & knowledge management process 5, 2 (2015), 1.

Preeti Khera and Neelesh Kumar. 2020. Role of machine learning in gait analysis: a review. Journal of Medical Engineering & Technology 44, 8 (2020), 441–467.

Óscar D. Lara and Miguel A. Labrador. 2012. A mobile platform for real-time human activity recognition. (2012), 667–671. https://doi.org/10.1109/CCNC.2012.6181018

Seunghwan Lee, Moonseok Park, Kyoungmin Lee, and Jehee Lee. 2019. Scalable muscle-actuated human simulation and control. ACM Transactions On Graphics (TOG) 38, 4 (2019), 1–13.

Tung-Wu Lu and Chu-Fen Chang. 2012. Biomechanics of human movement and its clinical applications. The Kaohsiung journal of medical sciences 28, 2 (2012), S13–S25.

Rachel Mason, Liam T Pearson, Gillian Barry, Fraser Young, Oisin Lennon, Alan Godfrey, and Samuel Stuart. 2023. Wearables for running gait analysis: A systematic review. Sports Medicine 53, 1 (2023), 241–268.

Sakorn Mekruksavanich and Anuchit Jitpattanakul. 2021. Biometric User Identification Based on Human Activity Recognition Using Wearable Sensors: An Experiment Using Deep Learning Models. Electronics 10, 3 (2021). https://doi.org/10.3390/electronics10030308

Anat Mirelman, Shirley Shema, Inbal Maidan, and Jeffery M. Hausdorff. 2018. Chapter 7 - Gait. In Balance, Gait, and Falls, Brian L. Day and Stephen R. Lord (Eds.). Handbook of Clinical Neurology, Vol. 159. Elsevier, 119–134. https://doi.org/10.1016/B978-0-444-63916-5.00007-0

Jose Moon, Dongjun Lee, Hyunwoo Jung, Ahnryul Choi, and Joung Hwan Mun. 2022. Machine learning strategies for low-cost insole-based prediction of center of gravity during gait in healthy males. Sensors 22, 9 (2022), 3499.

Linwei Niu, Qiushi Han, Tianyi Wang, and Gang Quan. 2018. Reliability-aware energy management for embedded real-time systems with (m, k)-hard timing constraint. Journal of Signal Processing Systems 90 (2018), 515–536.

Léonie Pacher, Christian Chatellier, Rodolphe Vauzelle, and Laetitia Fradet. 2020. Sensor-to-segment calibration methodologies for lower-body kinematic analysis with inertial sensors: A systematic review. Sensors 20, 11 (2020), 3322.

Hari Prasanth, Miroslav Caban, Urs Keller, Grégoire Courtine, Auke Ijspeert, Heike Vallery, and Joachim Von Zitzewitz. 2021. Wearable sensor-based real-time gait detection: A systematic review. Sensors 21, 8 (2021), 2727.

Alexander Schiewe, Andrey Krekhov, Frederic Kerber, Florian Daiber, and Jens Krüger. 2020. A Study on Real-Time Visualizations During Sports Activities on Smartwatches. In 19th International Conference on Mobile and Ubiquitous Multimedia. 18–31.

Sara Taghavi, Fardjad Davari, Hadi Tabatabaee Malazi, and Ahmad Ali Abin. 2019. Tennis stroke detection using inertial data of a smartwatch. In 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE). IEEE, 466–474.

Carole A Tucker, Jose Ramirez, David E Krebs, and Patrick O Riley. 1998. Center of gravity dynamic stability in normal and vestibulopathic gait. Gait & posture 8, 2 (1998), 117–123.

Peng Wang, Hongyi Liu, Lihui Wang, and Robert X. Gao. 2018. Deep learning-based human motion recognition for predictive context-aware human-robot collaboration. CIRP Annals 67, 1 (2018), 17–20. https://doi.org/10.1016/j.cirp.2018.04.066

Yang Xu and Ting Ting Qiu. 2021. Human activity recognition and embedded application based on convolutional neural network. Journal of Artificial Intelligence and Technology 1, 1 (2021), 51–60.

Eric Yiou, Teddy Caderby, Arnaud Delafontaine, Paul Fourcade, and Jean-Louis Honeine. 2017. Balance control during gait initiation: State-of-the-art and research perspectives. World journal of orthopedics 8, 11 (2017), 815.

ICEIS 2024 - 26th International Conference on Enterprise Information Systems


Tirra Hanin Mohd Zaki, Musab Sahrim, Juliza Jamaludin, Sharma Rao Balakrishnan, Lily Hanefarezan Asbulah, and Filzah Syairah Hussin. 2020a. The Study of Drunken Abnormal Human Gait Recognition using Accelerometer and Gyroscope Sensors in Mobile Application. (2020), 151–156. https://doi.org/10.1109/CSPA48992.2020.9068676

Tirra Hanin Mohd Zaki, Musab Sahrim, Juliza Jamaludin, Sharma Rao Balakrishnan, Lily Hanefarezan Asbulah, and Filzah Syairah Hussin. 2020b. The study of drunken abnormal human gait recognition using accelerometer and gyroscope sensors in mobile application. In 2020 16th IEEE International Colloquium on Signal Processing & Its Applications (CSPA). IEEE, 151–156.

Zhendong Zhuang and Yang Xue. 2019. Sport-related human activity detection and recognition using a smartwatch. Sensors 19, 22 (2019), 5001.
