Dispositional Learning Analytics to Investigate Students Use of Learning Strategies

Dirk Tempelaar¹ᵃ, Anikó Bátori² and Bas Giesbers²ᵇ

¹ School of Business and Economics, Department of Quantitative Economics, Maastricht University, Maastricht, The Netherlands

² School of Business and Economics, Department of Educational Research and Development, Maastricht University, Maastricht, The Netherlands

Keywords: Dispositional Learning Analytics, Learning Strategies, Self-Regulated Learning, Problem-Based Learning, Higher Education.

Abstract: What can we learn from dispositional learning analytics about how first-year business and economics students approach their introductory math and stats course? This study aims to understand how students engage with learning tasks, tools, and materials in their academic pursuits. It uses trace data, initial assessments of students' learning attitudes, and survey responses from the Study of Learning Questionnaire (SLQ) to analyse their preferred learning strategies. An innovative aspect of this research is its focus on clarifying how learning attitudes influence and potentially predict the adoption of specific learning strategies. The data are examined to detect clusters that represent typical patterns of preferred strategies, and these profiles are related to students' learning dispositions. Information is collected from two cohorts of students, totalling 2400 first-year students. A pivotal conclusion drawn from our research underscores the importance of adaptability: the capacity to modify preferred learning strategies based on the learning context. While it is crucial to educate our students in deep learning strategies and to foster adaptive learning mindsets and autonomous regulation of learning, it is equally important to acknowledge scenarios in which surface strategies and controlled regulation may be more effective.

1 INTRODUCTION

In today's technology-driven and ever-changing landscape, the acquisition of skills conducive to adaptability is imperative. Self-regulated learning (SRL), highlighted as essential by various sources (Ciarli et al., 2021), is vital for continuous learning and development in the dynamic digital age. SRL encompasses a set of skills that facilitate learning processes and lead to positive academic outcomes, such as improved performance and continuous progress (Haron et al., 2015; Panadero and Alonso-Tapia, 2014). Specifically, SRL involves (meta-)cognitive and motivational learning strategies that shape a dynamic and cyclical process enabling students to guide their own learning (Zimmerman, 1986). Although Panadero (2017) distinguishes six different models of SRL, most of them share a cyclical process comprising three phases: preparatory, performance, and appraisal. Despite the abundance of scientific literature on SRL and its promotion in educational settings, teachers and students often encounter challenges in fostering these skills, even within pedagogical approaches such as Problem-Based Learning (PBL), where self-regulation is more integrated (Loyens et al., 2013).

Educational technology presents an opportunity to promote students' SRL. However, while some educators successfully foster SRL using technology, others struggle (Timotheou et al., 2023). This discrepancy may stem from variations in technology features and in student engagement with technology, which influence its impact on learning and performance (Lawrence and Tar, 2018; Zamborova and Klimova, 2023). Recent advancements in educational research, particularly in learning analytics and notably in dispositional learning analytics, offer valuable insights into SRL in technology-enhanced learning (Pardo et al., 2016, 2017; Tempelaar et al., 2015, 2017).

ᵃ https://orcid.org/0000-0001-8156-4614

ᵇ https://orcid.org/0000-0002-8077-9039

Tempelaar, D., Bátori, A. and Giesbers, B.

Dispositional Learning Analytics to Investigate Students Use of Learning Strategies.

DOI: 10.5220/0012711200003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 2, pages 427-438

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Recent years have witnessed a significant expansion in learning analytics research, providing novel insights into how students engage with online educational tools and content. Dispositional learning analytics, a methodological approach focused on understanding learners' inherent characteristics and tendencies, has emerged as a significant development (Buckingham Shum and Deakin Crick, 2012). By combining dispositional data with objective trace data, researchers can potentially derive predictive and actionable insights that aid in understanding student behavior and learning strategies and enable a more tailored educational experience (Han et al., 2020; Tempelaar et al., 2015, 2017).

The current study investigates the learning strategies of first-year business and economics students enrolled in an introductory mathematics and statistics course. This context is of interest because of the challenges and opportunities presented by the subject matter, which requires both conceptual understanding and practical application and is often perceived as difficult by students. Our focus on this group aims to illuminate how students engage with complex quantitative content, thereby contributing to their academic success. Our primary objective is to explore and understand the range of learning strategies preferred by these students. To achieve this, we employ a dual approach: analyzing trace data, which provide digital footprints of students' interactions with learning tools and materials, in combination with dispositional data such as learning-related mindsets and learning strategies. This analysis aims not only to identify prevalent learning strategies but also to relate them to students' engagement with digital learning tools. This understanding is pivotal for informing more effective pedagogical approaches and targeted interventions to enhance student learning outcomes (Han et al., 2020).

This study introduces several innovative aspects to the field of dispositional learning analytics. Firstly, it emphasizes the importance of linking initial learning dispositions, measured at the beginning of the course, with subsequent learning strategies. By identifying profiles in these data, we aim to uncover how initial dispositions may predict the adoption of specific learning strategies. This approach represents a significant departure from traditional methods, which often focus solely on outcomes, toward a more nuanced understanding that encompasses the origins and evolution of learning behaviors. Moreover, we move away from the traditional variable-centred method of analysis in favour of a person-centred analysis.

The insights derived from this study offer potential benefits to various stakeholders in education. For educational scientists and designers, our findings provide critical data to inform the development of more effective curriculum designs and learning tools. Teachers can leverage this information to better understand their students' learning processes, potentially identifying those employing less beneficial strategies. This understanding is crucial for developing targeted interventions that can significantly enhance student learning outcomes and promote more effective self-regulation in the learning process.

2 BACKGROUND

Self-regulated learning (SRL) stands as a key educational strategy essential for navigating today's dynamic academic environment. Defined by a repertoire of skills enabling learners to effectively manage and oversee their own learning processes, SRL has been extensively studied for its pivotal role in achieving favourable academic outcomes, such as improved performance and ongoing advancement (Haron et al., 2015; Panadero and Alonso-Tapia, 2014). At the core of SRL lies a cyclical and dynamic process encompassing cognitive, metacognitive, and motivational strategies (Zimmerman, 1986). Despite the extensive literature on SRL, its practical implementation, particularly in cultivating these skills within diverse pedagogical contexts, remains a significant challenge for educators and learners alike.

This challenge extends to student-centred pedagogical approaches like Problem-Based Learning (PBL), which inherently complements and supports the principles of SRL (Hmelo-Silver, 2004; Schmidt et al., 2007). PBL, centred on real-world problem-solving, encourages learners to actively participate in their learning journey, fostering critical thinking and self-regulated learning skills. In a program based on PBL principles, learners are continually required to self-regulate as they collaboratively and individually navigate through problems, apply knowledge, and adjust strategies based on feedback and reflection. However, research shows mixed results regarding student learning approaches. A comprehensive literature review on the adoption of deep versus surface learning approaches in PBL revealed a small positive effect size concerning the adoption of deep learning approaches (Dolmans et al., 2016), yet some studies report a tendency to adopt surface learning approaches across the studied population (Loyens et al., 2013).

The affordances of educational technology have been recognized as a potent means to promote SRL (Persico and Steffens, 2017). However, despite the unparalleled developments in digitization in (higher) education, technology alone is not a cure-all for delivering high-quality technology-enhanced education, especially in light of the emergency remote learning implementations (Mou, 2023). Educational research indicates that while some teachers succeed in fostering SRL in their students through technology-enhanced learning, others do not (Timotheou et al., 2023). Several factors could underlie this finding, including teacher attitudes and behaviours regarding technology, the features of the technology, and student engagement with it (e.g., Lawrence and Tar, 2018; Zamborova and Klimova, 2023). In technology-enhanced learning environments that promote self-regulated learning through scaffolding, dispositional factors have been found to influence student engagement (Tempelaar et al., 2017, 2020). Advances in the development of dispositional learning analytics show promise in aiding the understanding of SRL in technology-enhanced learning contexts (e.g., Pardo et al., 2016, 2017; Tempelaar et al., 2017, 2020).

2.1 Dispositional Learning Analytics

In the ever-evolving realm of educational research, Learning Analytics (LA) emerges as a crucial tool, providing a thorough examination of educational data to extract actionable insights for learners, educators, and policymakers (Hwang et al., 2018). Initially, LA research primarily centred on constructing predictive models using data from institutional and digital learning platforms. However, these early efforts mainly demonstrated the descriptive capabilities of LA, confined to aggregating and analysing learner data within existing educational infrastructures (Viberg et al., 2018; Siemens and Gašević, 2012). Acknowledging the limitations imposed by the static nature of such data, Buckingham Shum and Deakin Crick (2012) introduced the concept of Dispositional Learning Analytics (DLA), proposing an innovative framework that intertwines traditional learning metrics with deeper insights into learners' dispositions, attitudes, and values.

By incorporating learner dispositions into the analytic process, DLA aims to enhance the precision and applicability of feedback provided to educational stakeholders, thereby refining the effectiveness of educational interventions (Gašević et al., 2015; Tempelaar et al., 2017). The concept of 'actionable feedback,' as conceptualized by Gašević et al. (2015), emphasizes the transformative potential of DLA in fostering a more nuanced approach to educational support, moving beyond generic advisories to deliver tailored, context-sensitive guidance.

Despite the recognized value of LA in identifying at-risk students, the challenge of translating analytic insights into effective pedagogical action remains significant, as evidenced by studies such as Herodotou et al. (2020). DLA seeks to address this gap by incorporating a multidimensional analysis of learning dispositions, thereby offering a richer, more holistic understanding of learners' engagement and potential barriers to their success.

For instance, the simple directive to 'catch up' may prove insufficient for students consistently falling behind in their learning process. A deeper exploration of their learning dispositions through DLA might reveal specific barriers to their academic engagement, such as a lack of motivation or suboptimal self-regulation strategies, enabling more precise interventions (Tempelaar et al., 2021).

A notable utility of DLA lies in the nuanced integration of motivational elements and learning regulation tactics within the broader LA framework. Our previous research indicates that, although a high degree of self-regulation is often praised, striking a balance between self-directed and externally guided regulation is essential (Tempelaar et al., 2021a, b). Identifying students predisposed to either excessive self-reliance or significant disengagement allows for the design of tailored interventions that resonate with their unique learning styles. For individuals inclined towards overemphasis on self-regulation, feedback may highlight the benefits of external inputs and adherence to the prescribed curriculum framework. Conversely, for those displaying disengagement, strategies may focus on igniting intrinsic motivation and fostering active participation in the learning process.

2.2 Research Objective and Questions

In the current investigation, we examine more closely how DLA can enhance both the predictive and the intervention capabilities of LA. Building on prior research by scholars such as Han et al. (2020), Pardo et al. (2016, 2017), and Tempelaar et al. (2021a, b), we focus in this study on learning strategies. The research questions arising from this objective examine whether and how dispositional learning analytics can help us better understand how learning attitudes influence and potentially predict the adoption of specific learning strategies within a student-centred teaching approach.

3 METHOD

3.1 Context and Setting

This research was conducted within a mandatory introductory mathematics and statistics module for first-year undergraduate students. This module is an essential component of a business and economics program at a medium-sized university in The Netherlands, with data collection spanning academic years 2022/2023 and 2023/2024. The module extends over an eight-week period and requires a weekly commitment of 20 hours. Many students, especially those with limited mathematical skills, perceive this module as a significant hurdle.

The instructional approach is a flipped classroom design that primarily emphasizes face-to-face Problem-Based Learning (PBL) sessions. These sessions, conducted in tutorial groups of up to 15 students, are led by content-expert tutors. Each week, students attend two such tutorial sessions, each lasting two hours. Fundamental concepts are introduced through weekly lectures. Additionally, students are expected to allocate 14 hours per week to self-study, using textbooks and engaging with two interactive online tutoring systems: Sowiso (https://sowiso.nl/) and MyStatLab (Nguyen et al., 2016; Rienties et al., 2019; Tempelaar et al., 2015, 2017, 2020).

A primary goal of the PBL approach is to cultivate Self-Regulated Learning (SRL) skills among students, emphasizing their responsibility for making informed learning choices (Schmidt et al., 2007). Collaborative learning through shared cognitions is another objective. To achieve these aims, feedback from the tutoring systems is shared with both students and tutors. Tutors use this feedback to guide students when necessary, initiating discussions on what the feedback implies and suggesting strategies for improvement. These interactions take place within the tutorial sessions and are not observed in this study.

The student learning process unfolds in three phases. The first phase involves preparation for the weekly tutorials, during which students engage in self-study to tackle "advanced" mathematical and statistical problems. While not formally assessed, this phase is crucial for active participation in the tutorials. The second phase centres on quiz sessions held at the end of each week (excluding the first). These quizzes, designed to be formative, provide feedback on students' mastery of the subject. Because 12.5% of the total score is based on quiz performance, students are motivated to make extensive use of the available resources, particularly those with limited prior knowledge. The third and final phase is dedicated to exam preparation during the last week of the module, involving graded assessments.

3.2 Participants

In total, data from 2406 first-year students enrolled in academic years 2022/2023 and 2023/2024 were used in this study. All of these students had engaged with at least one online learning platform. Among these students, 37% identified as female, while 63% identified as male. Regarding educational background, 16% held a Dutch high school diploma, while the majority, comprising 84%, were international students. The international student cohort predominantly came from European countries, with a notable representation of German (33%) and Belgian (18%) nationalities. Additionally, 7% of students originated from outside Europe.

The approach to teaching mathematics and statistics varies considerably across high school systems, with the Dutch system placing greater emphasis on statistics than many other countries. However, across all countries, math education is typically categorized into different levels based on its application in sciences, social sciences, or humanities. In our business program, a prerequisite for admission is prior mathematics education tailored towards social sciences. Within our study cohort, 37% of students pursued the highest track in high school, contributing to a diverse range of prior knowledge. Therefore, it was essential for the module to accommodate these students by offering flexibility and room for individual learning paths, alongside regular interactive feedback on their learning strategies and tasks.

In addition to a final written exam, student assessment included a project in which students statistically analysed their own learning disposition data. To facilitate this, students completed various individual disposition questionnaires measuring affective, behavioural, and cognitive aspects of aptitudes, including a learning strategies questionnaire at the outset of the module. Subsequently, they received personalized datasets for their project work.

3.3 e-Tutorial Trace Data

Trace data were collected from both online tutoring systems and from the Canvas LMS, which provided general course information and links to Sowiso and MyStatLab. Both Sowiso and MyStatLab employ mastery learning as their instructional method (Tempelaar et al., 2017). However, they differ significantly in their capabilities for collecting trace data. MyStatLab offers students and instructors several dashboards summarizing student progress in mastering individual exercises and chapters but lacks time-stamped usage data. Conversely, Sowiso provides time stamps for every individual event initiated by the student, along with mastery data, enabling the full integration of temporality in the design of learning models. Previous studies (Tempelaar et al., 2021a, 2023) focused solely on the rich combination of process and product trace data from Sowiso. In this study, we incorporate product-type trace data from both e-tutorials, as well as process-type trace data from Sowiso only. The product-type data consist of the mastery students achieved in each week as preparation for their quiz sessions. Mastery data represent the proportion of assignments students are able to solve without using any digital help, in every week of the course.
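To make the mastery measure just defined concrete, the following is a minimal sketch that computes, for each student and week, the share of that week's assignments the student solved at least once without digital help. The record layout `(student, week, assignment_id, solved_without_help)` and the per-week assignment counts are hypothetical stand-ins; the paper does not describe the export format of Sowiso or MyStatLab.

```python
from collections import defaultdict


def weekly_mastery(records, assignments_per_week):
    """Share of each week's assignments a student solved without digital help.

    records: iterable of (student, week, assignment_id, solved_without_help)
        tuples; this layout is a hypothetical stand-in for e-tutorial exports.
    assignments_per_week: dict mapping week -> number of assignments offered.
    Returns a dict mapping (student, week) -> mastery proportion in [0, 1].
    """
    solved = defaultdict(set)
    for student, week, assignment, unaided in records:
        ids = solved[(student, week)]  # registers students who solve nothing unaided
        if unaided:
            ids.add(assignment)  # a set, so repeated successes count only once
    return {
        (student, week): len(ids) / assignments_per_week[week]
        for (student, week), ids in solved.items()
    }


records = [
    ("s1", 1, "a1", True),
    ("s1", 1, "a2", False),  # solved only with hints or worked examples
    ("s1", 1, "a1", True),   # repeated success on the same assignment
    ("s2", 1, "a1", False),
]
print(weekly_mastery(records, {1: 4}))  # {('s1', 1): 0.25, ('s2', 1): 0.0}
```

Using a set per student-week means that practising the same assignment repeatedly raises attempt counts (process data) without inflating mastery (product data), which mirrors the distinction drawn above between the two data types.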

The main type of process data, available for the mathematical e-tutorial Sowiso, is the number of attempts students undertake to solve the weekly assignments. Following previous research (Tempelaar et al., 2023), we delineated three distinct learning phases based on the timing of learning activities. In phase 1, students prepared for the tutorial session of the week. During these face-to-face tutorial sessions, students discussed solving 'advanced' mathematical and statistical problems, which required prior self-study to allow active participation in the discussions. Phase 2 learning involved preparing for the quiz session at the end of each module week. Phase 3 encompassed preparation for the final exam, scheduled for the eighth week of the module. Students thus decided how much of their preparation to allocate to each of the three phases.
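The phase split can be sketched as a timestamp rule. This is a minimal sketch, assuming that an attempt on a given week's topic counts as phase 1 when made before that week's (first) tutorial session, as phase 2 when made after it but before the week's quiz session, and as phase 3 otherwise; the exact cut-offs used by Tempelaar et al. (2023) may differ, and the function name and all dates below are hypothetical.

```python
from collections import Counter
from datetime import datetime


def learning_phase(attempt_time, tutorial_start, quiz_start):
    """Assign an attempt on one week's topic to a learning phase (1, 2 or 3).

    Assumption: the boundaries are the start of that week's first tutorial
    session and the start of that week's quiz session; anything later is
    treated as preparation for the final exam.
    """
    if attempt_time < tutorial_start:
        return 1  # preparing for the tutorial session
    if attempt_time < quiz_start:
        return 2  # preparing for the weekly quiz
    return 3      # preparing for the final exam


# Hypothetical schedule for one topic week and a few attempt timestamps.
tutorial = datetime(2023, 9, 5, 9, 0)
quiz = datetime(2023, 9, 8, 13, 0)
attempts = [
    datetime(2023, 9, 4, 20, 15),
    datetime(2023, 9, 6, 10, 0),
    datetime(2023, 9, 7, 22, 30),
    datetime(2023, 10, 20, 14, 0),
]
phase_counts = Counter(learning_phase(t, tutorial, quiz) for t in attempts)
print(sorted(phase_counts.items()))  # [(1, 1), (2, 2), (3, 1)]
```

Counting attempts per phase and per weekly topic in this way yields the kind of attempt-by-phase series reported later for the profiles.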

3.4 Instruments

3.4.1 Study of Learning Questionnaire

The learning strategies questionnaire (see Table 1) was adapted from the questionnaire employed by Rovers et al. (2018), which in turn was adapted from the questionnaire developed by Hartwig and Dunlosky (2012) to suit a Problem-Based Learning (PBL) environment. To customize it for this course, supplementary items regarding the use of online learning platforms were added. All items were assessed on a Likert scale ranging from 1 (never) to 7 (often). The survey was administered midway through the course to ensure participants' familiarity with the included learning strategies. The first items predominantly address passive learning strategies, which are generally deemed less effective than the more active strategies, such as self-testing, addressed by the later items (Dunlosky et al., 2013; Hartwig and Dunlosky, 2012).

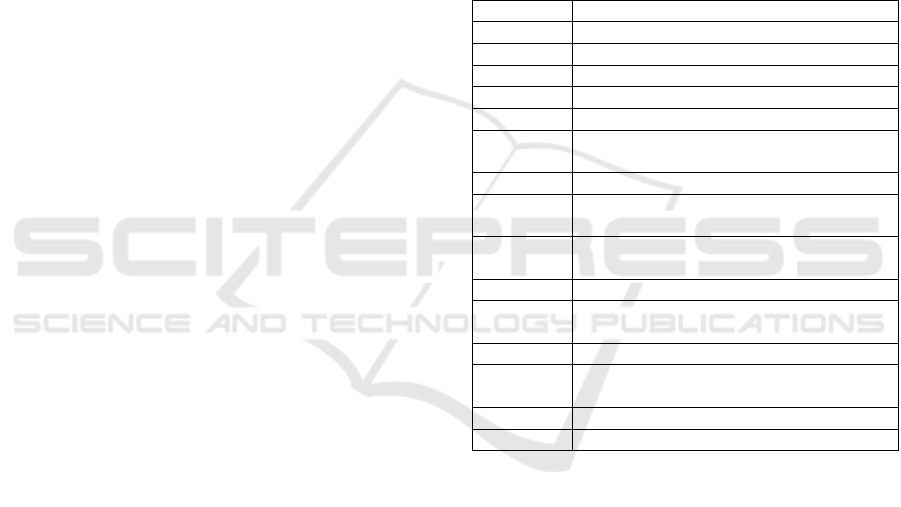

Table 1: Learning Strategy Questionnaire Items.

| Item | Learning strategy engagement |
|---|---|
| LrnApp01 | Rereading textbook and reader |
| LrnApp02 | Making summaries |
| LrnApp03 | Underlining/marking text |
| LrnApp04 | Explaining to myself what I am reading |
| LrnApp05 | Remembering keywords |
| LrnApp06 | Trying to form a mental image (an image in my head) of what I am reading |
| LrnApp07 | Testing myself by doing Sowiso exercises |
| LrnApp08 | Testing myself by doing MyStatLab exercises |
| LrnApp09 | Testing myself with self-made test questions |
| LrnApp10 | Studying worked-out examples in Sowiso |
| LrnApp11 | Studying worked-out examples in MyStatLab |
| LrnApp12 | Asking someone else to test me |
| LrnApp13 | Asking questions to other students (outside of the tutorial group) |
| LrnApp14 | Studying with friends/other students |
| LrnApp15 | Visiting lectures |

3.4.2 Mindset Measures: Self-Theories of Intelligence, Effort-Beliefs and Goals

Self-theories of intelligence measures encompass both entity and incremental types and originate from Dweck's Theories of Intelligence Scale – Self Form for Adults (2006). This scale comprises eight items: four statements reflecting Entity Theory and four reflecting Incremental Theory. Effort-belief measures were sourced from two references: Dweck (2006) and Blackwell (2002). Dweck offers example statements that present effort either as negative (EffortNegative: exerting effort implies low ability) or as positive (EffortPositive: exerting effort is seen as enhancing one's ability). These statements by Dweck serve as the introductory item of each of the two subscales (see Dweck, 2006). Furthermore, Blackwell's comprehensive sets of effort beliefs (Blackwell et al., 2007) were used, consisting of five positively formulated and five negatively formulated items.

To identify goal-setting behaviour, we applied the Grant and Dweck (2003) instrument, which distinguishes two learning goals, Challenge-Mastery and Learning, as well as four types of performance goals: two of an appearance nature (Outcome and Ability Goals) and two of a normative nature (Normative Outcome and Normative Ability Goals).

3.4.3 Learning Processes and Regulation Strategies

We employed Vermunt's (1996) Inventory of Learning Styles (ILS) to assess learning processing and regulation strategies, which are fundamental aspects of Self-Regulated Learning (SRL). Our investigation specifically targeted cognitive processing strategies and metacognitive regulation strategies.

The cognitive processing strategies align with the students' approaches to learning (SAL) research framework (see Han et al., 2020) and are organized along a continuum from deep to surface approaches to learning. In the deep approach, students strive for comprehension, while in the surface approach, they focus on reproducing material for assessments without necessarily understanding the underlying concepts:

- Deep processing: forming independent opinions during learning, seeking connections and creating diagrams.
- Stepwise (surface) processing: investigating step by step, learning by rote.
- Concrete processing: focus on making new knowledge tangible and applicable.

The metacognitive regulation strategies shed light on how students oversee their learning processes and allow students to be placed on a spectrum that spans from self-regulation as the predominant mechanism to external regulation. These scales encompass:

- Self-regulation: self-regulation of learning processes and learning content.
- External regulation: external regulation of learning processes and learning outcomes.
- Lack of regulation: absence of regulation.

The instrument was administered at the start of the students' academic studies, so the typical learning patterns it captures are those the students developed during high school education.

3.4.4 Academic Motivations

The Academic Motivation Scale (AMS; Vallerand et al., 1992) is rooted in self-determination theory, which distinguishes between autonomous and controlled motivation. Consisting of 28 items, the AMS asks respondents to answer the question "Why are you attending college?" The scale encompasses seven subscales. Four of these form the Autonomous motivation scale, representing the inclination to learn stemming from intrinsic satisfaction and enjoyment of the learning process itself. Two subscales form the Controlled motivation scale, indicating learning pursued as a means to an external outcome rather than for its inherent value. The last scale, A-motivation, denotes the absence of regulation.
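The aggregation of the seven AMS subscales into the three scores described above can be sketched as follows. This sketch assumes the usual self-determination grouping (the three intrinsic motivation subscales plus identified regulation as autonomous; introjected and external regulation as controlled), four items per subscale, and unweighted means at both levels; the subscale identifiers are hypothetical labels, not the instrument's official item codes, and the paper does not state its exact scoring rule.

```python
from statistics import mean

# Hypothetical subscale identifiers; the grouping follows the usual
# self-determination reading of the AMS (an assumption, see the lead-in).
AUTONOMOUS = (
    "IM_to_know",
    "IM_toward_accomplishment",
    "IM_to_experience_stimulation",
    "EM_identified",
)
CONTROLLED = ("EM_introjected", "EM_external_regulation")


def ams_composites(item_scores):
    """item_scores: dict subscale -> list of four 1-7 Likert responses.

    Assumption: subscale scores and composites are unweighted means.
    """
    sub = {name: mean(scores) for name, scores in item_scores.items()}
    return {
        "autonomous": mean(sub[s] for s in AUTONOMOUS),
        "controlled": mean(sub[s] for s in CONTROLLED),
        "amotivation": sub["amotivation"],
    }


# One hypothetical respondent.
responses = {
    "IM_to_know": [6, 6, 7, 5],
    "IM_toward_accomplishment": [5, 5, 5, 5],
    "IM_to_experience_stimulation": [4, 4, 4, 4],
    "EM_identified": [7, 7, 7, 7],
    "EM_introjected": [3, 3, 3, 3],
    "EM_external_regulation": [5, 5, 5, 5],
    "amotivation": [1, 1, 2, 2],
}
print(ams_composites(responses))
```

For this respondent the autonomous score is the mean of 6, 5, 4 and 7, that is 5.5, while the controlled score averages 3 and 5.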

3.5 Statistical Analyses

Drawing on the framework of person-centred modelling approaches (Malcom-Piqueux, 2015), which use cluster analysis to identify unique and shared learner profiles, this study applied k-means cluster analysis (Pastor, 2010) to learning strategy data. The input data consisted of the fifteen item responses to the Study of Learning Questionnaire (SLQ). Although trace data and other disposition data could have been included in the cluster analysis, we chose to derive profiles from SLQ data only. Clustering students solely on self-reported learning strategies makes it possible to distinguish, and explore the relationships between, these self-reported aptitudes, the aptitudes manifested in learning activities, and other aptitude measures (Han et al., 2020). An alternative approach, used in previous studies by the authors (Tempelaar et al., 2020), would have combined behavioural and dispositional measures for clustering, resulting in profiles that mix actual learning activities and self-perceived learning dispositions. Another potential approach would have clustered on trace data exclusively and then examined differences in learning behaviours among clusters, as demonstrated by Tempelaar et al. (2023). However, because process-type trace data are unavailable for the MyStatLab e-tutorial, this approach was not considered viable for this study.
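The clustering step can be illustrated with a minimal, dependency-free sketch of k-means (Lloyd's algorithm with random restarts and Euclidean distance) applied to vectors of fifteen 1 to 7 Likert responses, one vector per student. The helper `choose_k` encodes only the size floor this study applies when picking the number of clusters (no cluster below 5% of students) and returns the largest admissible k; the interpretability judgement that also guided the authors cannot be automated. This is an illustration under these assumptions, not the authors' code, and all function names are hypothetical.

```python
import random


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, n_init=10, max_iter=100, seed=0):
    """Lloyd's k-means with random restarts; returns labels of the best run."""
    rng = random.Random(seed)
    best_labels, best_inertia = None, float("inf")
    for _run in range(n_init):
        centroids = [list(p) for p in rng.sample(points, k)]
        labels = None
        for _step in range(max_iter):
            # Assignment step: each student joins the nearest centroid.
            new_labels = [
                min(range(k), key=lambda c: squared_distance(p, centroids[c]))
                for p in points
            ]
            if new_labels == labels:
                break  # converged: assignments no longer change
            labels = new_labels
            # Update step: move each centroid to the mean of its members.
            for c in range(k):
                members = [p for p, lab in zip(points, labels) if lab == c]
                if members:  # an empty cluster keeps its previous centroid
                    centroids[c] = [sum(col) / len(members) for col in zip(*members)]
        # Keep the restart with the smallest within-cluster sum of squares.
        inertia = sum(squared_distance(p, centroids[lab]) for p, lab in zip(points, labels))
        if inertia < best_inertia:
            best_labels, best_inertia = labels, inertia
    return best_labels


def choose_k(points, k_values, min_share=0.05):
    """Largest k whose smallest cluster holds at least min_share of students."""
    chosen = None
    for k in sorted(k_values):
        labels = kmeans(points, k)
        shares = [labels.count(c) / len(points) for c in range(k)]
        if min(shares) >= min_share:
            chosen = k
    return chosen


if __name__ == "__main__":
    # Toy data: two groups of students with identical 15-item response vectors.
    low = [[2.0] * 15 for _ in range(10)]
    high = [[6.0] * 15 for _ in range(10)]
    print(kmeans(low + high, 2))
```

Random restarts are one common guard against poor local optima in k-means; statistical packages typically offer the same idea through a number-of-starts option.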

The number of clusters was chosen to maximize profile variability while ensuring that no cluster was overly small (comprising less than 5% of students). Ultimately, a five-cluster solution was selected, revealing five clearly distinct profiles. Solutions with more clusters did not substantially alter cluster characteristics and were harder to interpret. Subsequently, differences between profiles in e-tutorial use, mindsets, learning patterns, academic motivations and course performance were explored through variable-centred analysis using ANOVA. Because of the large sample size, nearly all profile differences are significant beyond the .0005 level; reporting therefore focuses on effect sizes.
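Because nearly every profile difference is significant, the informative quantity is the effect size. The sketch below shows how a one-way ANOVA across profiles yields both the F statistic and eta squared, the between-profile sum of squares divided by the total sum of squares. Eta squared is an assumption about which effect size is meant, since the paper does not name it here, and the toy scores are made up.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F statistic, eta squared) for a list of groups of scores."""
    scores = [x for g in groups for x in g]
    grand_mean = mean(scores)
    group_means = [mean(g) for g in groups]
    # Variation of profile means around the grand mean, weighted by size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Variation of individual scores around their own profile mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared


f_stat, eta_sq = one_way_anova([[1, 2, 3], [4, 5, 6]])
print(f_stat, round(eta_sq, 3))  # 13.5 0.771
```

Unlike F and its p-value, eta squared does not grow with the number of students, which is why it remains interpretable in a sample of this size.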

4 RESULTS

4.1 Student Learning Strategy Profiles

The optimal characterization of students' learning strategy profiles emerged from a five-cluster solution. This selection was predominantly driven by a preference for solutions that offer a straightforward and intuitive interpretation of the profiles, prioritizing parsimony. The five-cluster solution proved to be the best fit, delineating distinct profiles of learning approaches.

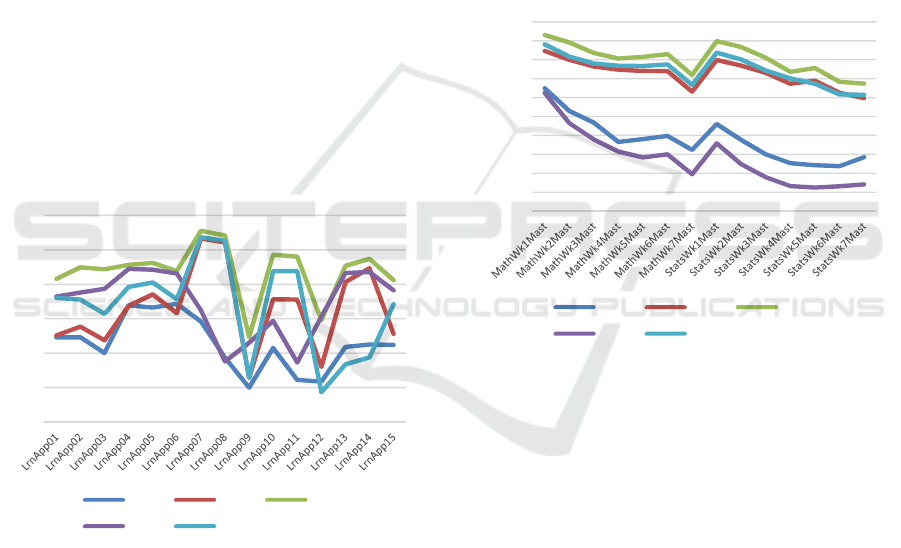

The clusters are presented in Figure 1.

Figure 1: Clusters Based On Learning Strategy Data.

The largest profile, with 693 students, is Cluster 3, encompassing students who reported “all high” scores across all learning strategy items, resulting in a relatively uniform and less diverse learning strategy pattern than in the other clusters. Conversely, Cluster 1 (297 students) serves as its counterpart in many respects, with “all low” scores for most learning strategies. Cluster 4 (340 students) is the profile of "non-tool users". These students apply passive learning strategies such as rereading, marking, and attending lectures, and rely on collaboration with peers. The two remaining profiles are characterized by a strong focus on self-testing. Cluster 5 students (505) are the intensive “tool-users”: they use the e-tutorials not only for self-testing but also for accessing worked-out examples. Cluster 2 students (571) combine the focus on using tools to self-test with a tendency to collaborate with peers in learning.

4.2 Profile Differences in e-Tutorial Use

Figure 2 illustrates the average e-tutorial mastery scores for the weekly topics. On the left side are the seven mathematical topics covered over seven weeks, while on the right side are the mastery scores for the seven weekly statistical topics.

Figure 2: Profile Differences in Weekly Mastery Scores.

The five profiles fall into two groups with notable differences between them. On one side are the profile with consistently low scores ("all low") and the "non-tool users" profile, both of which attain low mastery scores. On the opposite end of the spectrum are the "all high" profile and the two profiles directed at self-testing. Across all profiles, mastery scores decline over time, with the largest decrease in the profiles that start from relatively low mastery levels.

Process-type trace data, namely the number of attempts for each weekly mathematics topic in each of the three learning phases (preparing for tutorial sessions, quizzes, and exams), show a similar decline over the weeks. They also indicate that students focus predominantly on the second learning phase, quiz preparation, which explains the saw-tooth pattern in Figure 3. At the


lower end of the saw tooth, the "all low" and "non-tool users" profiles reappear. Notably, however, the gap between these two profiles and the other three is much narrower than in the mastery data. Evidently, in these two profiles, relatively extensive use of the e-tutorials coincides with less efficient use: their numbers of attempts come much closer to those of the other profiles than their mastery scores do.

Figure 3: Profile Differences in Weekly Attempts, by Learning Phase.

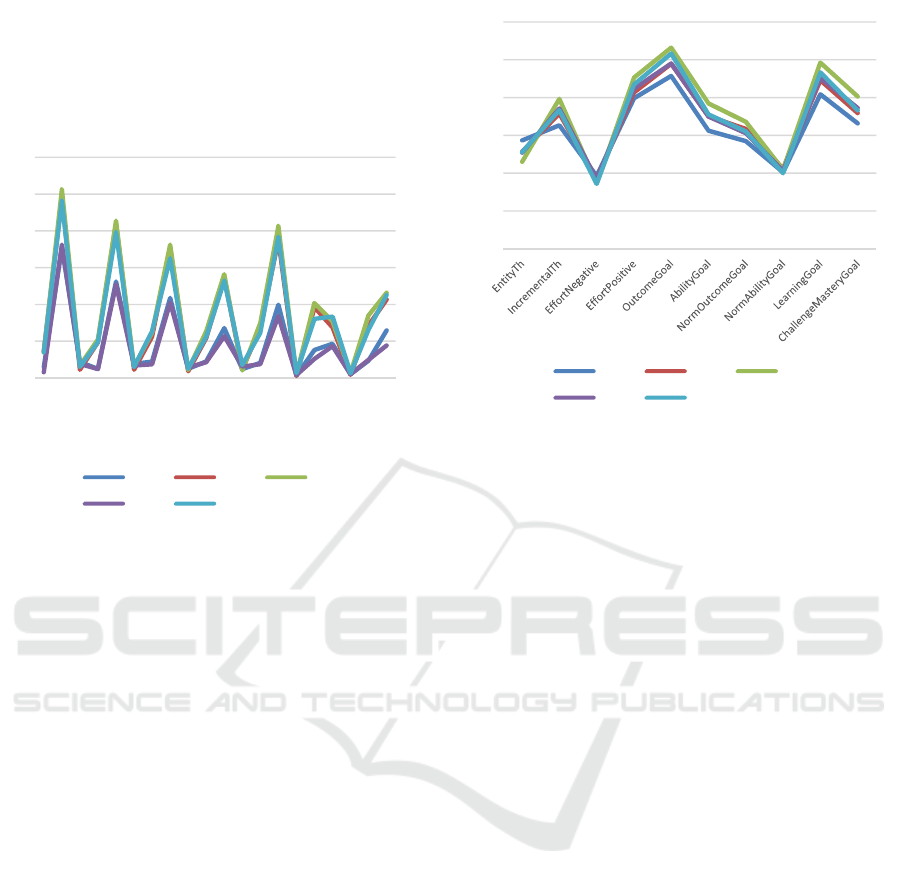
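The efficiency argument in this subsection rests on comparing relative gaps. A small worked calculation, using invented numbers rather than values read from Figures 2 and 3, shows why a profile that trails by more in mastery than in attempts must obtain less mastery per attempt. The helper names are hypothetical, and treating mastery per attempt as an efficiency proxy is an assumption for illustration, not a measure defined in the study.

```python
# Worked example with invented numbers (not study data): compare the
# relative gap in mastery with the relative gap in attempts.


def relative_gap(low: float, high: float) -> float:
    """Shortfall of the lower-scoring group as a fraction of the higher one."""
    return (high - low) / high


def mastery_per_attempt(mastery: float, attempts: float) -> float:
    """Simple efficiency proxy (assumption): mastery obtained per attempt."""
    return mastery / attempts


# Hypothetical weekly averages for a low-mastery and a high-mastery profile.
low_mastery, high_mastery = 20.0, 40.0
low_attempts, high_attempts = 40.0, 50.0

mastery_gap = relative_gap(low_mastery, high_mastery)  # 0.5
attempt_gap = relative_gap(low_attempts, high_attempts)  # 0.2

# The efficiency ratio equals (1 - mastery_gap) / (1 - attempt_gap), so it
# falls below 1 exactly when the mastery gap exceeds the attempt gap.
ratio = mastery_per_attempt(low_mastery, low_attempts) / mastery_per_attempt(
    high_mastery, high_attempts
)
print(f"mastery gap {mastery_gap:.0%}, attempt gap {attempt_gap:.0%}, "
      f"relative efficiency {ratio:.3f}")
```

Applied to the profile means behind Figures 2 and 3, the same comparison would quantify how much less mastery the "all low" and "non-tool users" profiles obtain per attempt.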

4.3 Profile Differences in Mindsets, Effort Beliefs and Goals

Mindsets, whether entity theory (the belief that intelligence is fixed) or incremental theory (the belief that intelligence can be developed), show only minimal differences across profiles. The largest differences are found for incremental theory, with an eta squared effect size of 3.4%. Profile differences in positive effort beliefs are twice as large (eta squared 6.8%), whereas differences in negative effort beliefs are negligible.

Students' achievement goals differ across the five profiles, particularly in outcome goals (eta squared effect size 8.5%), learning goals (9.2%), and challenge-mastery goals (4.8%). In these more pronounced profile differences, students in the "all high" Cluster 3, who score consistently high, tend towards adaptive behaviours, whereas students in the "all low" Cluster 1, who score consistently low, tend towards maladaptive behaviours. No consistent pattern is observed in the remaining three clusters; see Figure 4.

Figure 4: Profile Differences in Mindsets, Effort Beliefs and Achievement Goals.

4.4 Profile Differences in Learning Patterns

The most prominent and consistent differences across all learning dispositions are observed in the instrument that assesses cognitive learning processing and metacognitive learning regulation. Consistency here means that a profile scores either high or low on all processing and regulation strategies, regardless of their type (except for the maladaptive lack-of-regulation strategy). Cluster 3 students, identified as "all high" in their use of learning strategies, show this characteristic consistently in both processing and regulation. Their propensity for deep learning, as well as for surface (stepwise) and concrete (strategic) learning, exceeds that of every other cluster, as does their use of self-regulation and external regulation of learning. Conversely, Cluster 1 students, labelled "all low" in terms of learning strategies, score uniformly low on all learning processing strategies and on both adaptive learning regulation strategies. For Cluster 2 students, the emphasis on self-testing and collaborative learning translates into a relatively modest intensity in applying processing or regulation strategies. No clear pattern emerges in the profile differences between the remaining two clusters, the "non-tool users" of Cluster 4 and the "tool users" of Cluster 5. Eta squared effect sizes range from 7% for external regulation to 13.2% for stepwise processing, as depicted in Figure 5.


CSEDU 2024 - 16th International Conference on Computer Supported Education

434

Figure 5: Profile Differences in Learning Processing and Learning Regulation.

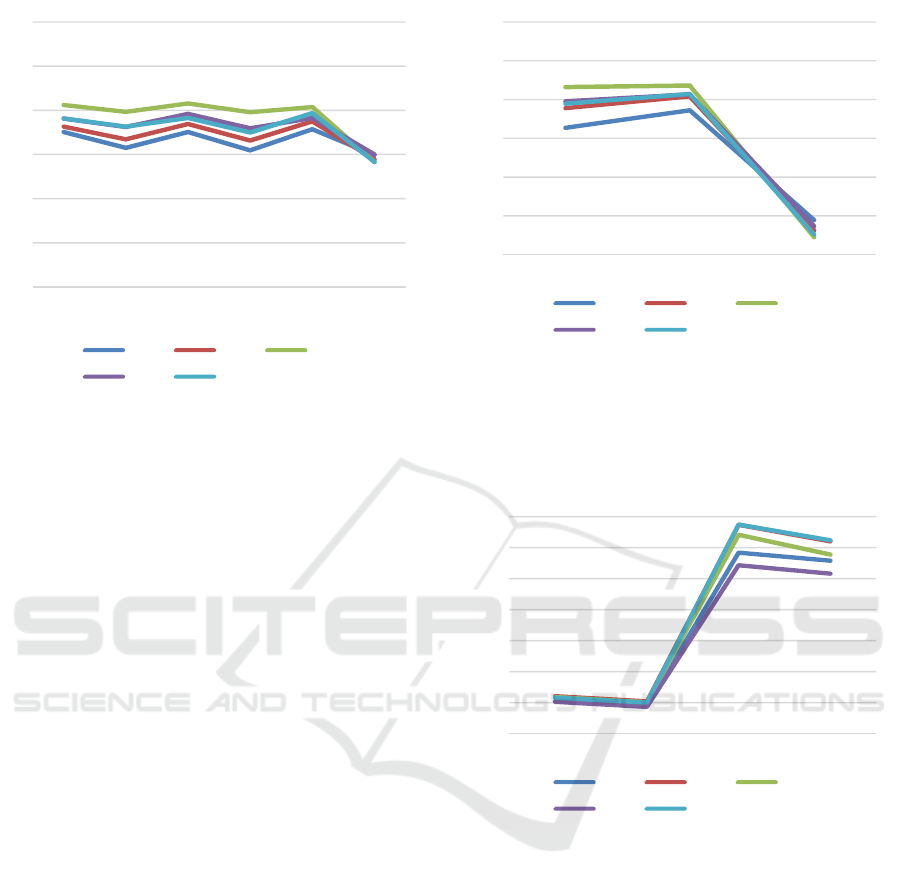

4.5 Profile Differences in Academic Motivations

In line with the outcomes discussed in the previous section, distinct and consistent differences among clusters emerge in Autonomous and Controlled Motivation. However, the only substantial effect size is associated with Autonomous Motivation, at 13.8% eta squared, as shown in Figure 6.

Cluster 3 students, characterized as "all high," show the highest levels of both autonomous and controlled motivation, while Cluster 1 students, identified as "all low," show the lowest levels on both dimensions. This observation challenges the assumption of self-determination theory that one dimension of motivation prevails over the other. Profile differences among Clusters 2, 4, and 5 are minimal and lack a similarly consistent pattern.

4.6 Profile Differences in Course Performance

The ultimate gauge of achievement is performance, specifically how students perform in the course. The performance measures ExamMath, ExamStats, QuizMath, and QuizStats all vary across profiles, with eta squared effect sizes ranging from 3.9% to 7.3%; the largest, 7.3%, is found for both quiz scores.

Figure 6: Profile Differences in Academic Motivations.

Somewhat smaller, albeit more clearly visible in Figure 7, are the profile differences in the performance scores themselves: quiz scores (range 0 to 4) and exam scores (range 0 to 20).

Figure 7: Profile Differences in Course Performance.

Two clusters stand out as top performers: the two clusters characterized by extensive "tool usage," namely Clusters 2 and 5. Despite being the most adaptable learners, students in Cluster 3, the "all high" cluster, achieve intermediate levels of performance. Cluster 1, consisting of "all low" students, and Cluster 4, comprising "non-tool users," show lower performance levels.

5 DISCUSSION & CONCLUSIONS

In our research, we examined students' preferences

for learning strategies through self-report surveys. In

a PBL curriculum, where students have access to


various learning strategies both within and outside of

technology-enhanced learning environments, this is

the only way to identify how learning takes place.

However, if all learning occurs within digital

confines, the identification of learning strategies is

not limited to self-report methods but can also be

achieved behaviourally, through the analysis of traces

of learning activities. An illustrative example of such

behavioural identification of learning strategies is

provided by Fan et al. (2022), who investigated

learning within a MOOC environment. Fan and

colleagues identified two successful learning

strategies, called intensive and balanced, which were

positively associated with course performance.

Interestingly, these characteristics closely align with

our "all high" profile of preferred learning strategies

identified through self-reports.

This brings us back to the most salient finding of Rovers et al.'s (2018) investigation of learning strategies in a PBL-based program: again, it is the students who employ a diverse range of learning approaches who tend to excel. This diversity includes strategies traditionally viewed as suboptimal, such as surface-level learning methods. The key factor for effective learning seems to be adaptability. Rovers et al. (2018) conclude that students who reported using various strategies, including some traditionally considered "ineffective" (such as highlighting and rereading), but in ways that suited their learning context, appear to be the most adaptive students.

Our study extends this line of research to a setting with even more diverse instructional methods. Even within a standard PBL curriculum, students have access to abundant learning resources. By integrating blended learning into our investigation, we further diversify the available resources, requiring students to choose from an even broader array of learning strategies. Despite these substantial differences in learning environment, Rovers et al.'s (2018) primary finding largely holds: one of the effective learning approaches, as indicated by course performance, integrates all available learning strategies. This includes employing deep learning whenever possible but switching to surface-level approaches when necessary. Students are encouraged to use autonomous regulation when suitable but should not hesitate to employ controlled regulation in challenging circumstances.

Two additional profiles associated with effective learning, as measured by course performance, were observed among students who prioritize self-testing. While the importance of self-assessment in self-regulated learning is widely recognized (Panadero et al., 2019), caution is needed in generalizing this finding beyond our specific context, which involves two e-tutorials grounded in mastery learning within a test-oriented learning environment. Within a learning environment offering ample opportunities for self-assessment, students inclined towards self-assessment clearly tend to excel, even achieving the highest course performance. The question remains whether this pattern persists in contexts where self-assessment is less robustly supported than in our particular learning environment.

When assessing performance as a measure of

learning effectiveness, we identify two learning

strategy profiles that exhibit below-average

performance relative to others, albeit with modest

performance differences. Cluster 1 students primarily

rely on non-digital resources and employ surface-

level learning strategies such as highlighting,

underlining, and rereading. Cluster 4 students also

depend on non-digital resources, focus on

memorizing keywords, utilize self-explanation, and

heavily rely on peer collaboration for learning. The

rigorous nature of our course (mathematics and

statistics, which may not align with the preferences of

many business and economics students) could

contribute to the limitations associated with these two

learning strategy profiles. Conversely, students who

incorporate testing as a significant component of their

learning strategies demonstrate above-average

performance, underscoring its importance.

Importantly, previous mathematics education

does not account for variations in learning strategy

preferences, whereas gender does. Female students

are overrepresented among those who adopt effective

learning strategies compared to their male

counterparts.

Addressing challenges related to fostering SRL in

higher education, particularly in student-centred

learning approaches like PBL, is complex. The

current study offers additional insight into how SRL

can be understood through DLA, aligning with

findings from previous research indicating that

employing DLA aids in understanding learners'

motivations, attitudes, and learning strategies,

thereby facilitating the development of more

personalized and effective educational interventions

(Pardo et al., 2016, 2017; Persico & Steffens, 2017;

Tempelaar et al., 2017, 2020). For practical

implementation, DLA could be integrated into the

development of learning analytics dashboards to

inform both students and instructors about learning

progress (e.g., Matcha et al., 2019). However, it is

crucial to consider that interpreting results would


require some level of instruction, both to enhance the understanding and implementation of SRL strategies within various learning and teaching contexts and to explain how to interpret DLA data accordingly.

REFERENCES

Blackwell, L. S., Trzesniewski, K. H., and Dweck, C. S. (2007). Implicit theories of intelligence predict achievement across an adolescent transition: A longitudinal study and an intervention. Child Development, 78(1), 246–263.

Buckingham Shum, S. and Deakin Crick, R. (2012). Learning Dispositions and Transferable Competencies: Pedagogy, Modelling and Learning Analytics. In S. Buckingham Shum, D. Gasevic, and R. Ferguson (Eds.), Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, pp. 92–101. ACM, New York, NY, USA.

Ciarli, T., Kenney, M., Massini, S., and Piscitello, L. (2021). Digital technologies, innovation, and skills: Emerging trajectories and challenges. Research Policy, 50(7). doi: 10.1016/j.respol.2021.104289

Dolmans, D. H. J. M., Loyens, S. M. M., Marcq, H., and Gijbels, D. (2016). Deep and surface learning in problem-based learning: a review of the literature. Advances in Health Sciences Education, 21, 1087–1112. doi: 10.1007/s10459-015-9645-6

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., and Willingham, D. T. (2013). Improving Students' Learning With Effective Learning Techniques: Promising Directions From Cognitive and Educational Psychology. Psychological Science in the Public Interest, 14(1), 4–58. doi: 10.1177/1529100612453266

Dweck, C. S. (2006). Mindset: The New Psychology of Success. Random House, New York, NY.

Fan, Y., Jovanović, J., Saint, J., Jiang, Y., Wang, Q., and Gašević, D. (2022). Revealing the regulation of learning strategies of MOOC retakers: A learning analytic study. Computers & Education, 178, 104404. doi: 10.1016/j.compedu.2021.104404

Gašević, D., Dawson, S., and Siemens, G. (2015). Let's not forget: Learning analytics are about learning. TechTrends, 59(1), 64–71. doi: 10.1007/s11528-014-0822-x

Grant, H. and Dweck, C. S. (2003). Clarifying achievement goals and their impact. Journal of Personality and Social Psychology, 85, 541–553.

Han, F., Pardo, A., and Ellis, R. A. (2020). Students' self-report and observed learning orientations in blended university course design: How are they related to each other and to academic performance? Journal of Computer Assisted Learning, 36(6), 969–980. doi: 10.1111/jcal.12453

Haron, H. N., Harun, H., Ali, R., Salim, K. R., and Hussain, N. H. (2014). Self-regulated learning strategies between the performing and non-performing students in statics. 2014 International Conference on Interactive Collaborative Learning (ICL), Dubai, United Arab Emirates, pp. 802–805. doi: 10.1109/ICL.2014.7017875

Hartwig, M. K. and Dunlosky, J. (2012). Study strategies of college students: Are self-testing and scheduling related to achievement? Psychonomic Bulletin & Review, 19(1), 126–134. doi: 10.3758/s13423-011-0181-y

Herodotou, C., Rienties, B., Hlosta, M., Boroowa, A., Mangafa, C., and Zdrahal, Z. (2020). The scalable implementation of predictive learning analytics at a distance learning university: Insights from a longitudinal case study. The Internet and Higher Education, 45, 100725. doi: 10.1016/j.iheduc.2020.100725

Hmelo-Silver, C. E. (2004). Problem-Based Learning: What and How Do Students Learn? Educational Psychology Review, 16(3), 235–266. doi: 10.1023/B:EDPR.0000034022.16470.f3

Hwang, G.-J., Spikol, D., and Li, K.-C. (2018). Guest editorial: Trends and research issues of learning analytics and educational big data. Educational Technology & Society, 21(2), 134–136.

Lawrence, J. E. and Tar, U. A. (2018). Factors that influence teachers' adoption and integration of ICT in teaching/learning process. Educational Media International, 55(1), 79–105. doi: 10.1080/09523987.2018.1439712

Loyens, S. M. M., Gijbels, D., Coertjens, L., and Coté, D. J. (2013). Students' Approaches to Learning in Problem-Based Learning: Taking into account Professional Behavior in the Tutorial Groups, Self-Study Time, and Different Assessment Aspects. Studies in Educational Evaluation, 39(1), 23–32. doi: 10.1016/j.stueduc.2012.10.004

Malcom-Piqueux, L. (2015). Application of Person-Centered Approaches to Critical Quantitative Research: Exploring Inequities in College Financing Strategies. New Directions for Institutional Research, 163, 59–73. doi: 10.1002/ir.20086

Matcha, W., Uzir, N. A., Gašević, D., and Pardo, A. (2019). A Systematic Review of Empirical Studies on Learning Analytics Dashboards: A Self-Regulated Learning Perspective. IEEE Transactions on Learning Technologies, 13(2), 226–245. doi: 10.1109/TLT.2019.2916802

Mou, T.-Y. (2023). Online learning in the time of the COVID-19 crisis: Implications for the self-regulated learning of university design students. Active Learning in Higher Education, 24(2), 185–205. doi: 10.1177/14697874211051226

Nguyen, Q., Tempelaar, D. T., Rienties, B., and Giesbers, B. (2016). What learning analytics based prediction models tell us about feedback preferences of students. Quarterly Review of Distance Education, 17(3), 13–33.

Panadero, E. (2017). A review of self-regulated learning: Six models and four directions for research. Frontiers in Psychology, 8, 422. doi: 10.3389/fpsyg.2017.00422

Panadero, E. and Alonso-Tapia, J. (2014). How do students self-regulate? Review of Zimmerman's cyclical model of self-regulated learning. Anales de Psicología, 30(2), 450–462.

Panadero, E., Lipnevich, A., and Broadbent, J. (2019). Turning Self-Assessment into Self-Feedback. In: Henderson, M., Ajjawi, R., Boud, D., and Molloy, E. (Eds.), The Impact of Feedback in Higher Education. Palgrave Macmillan, Cham. doi: 10.1007/978-3-030-25112-3_9

Pardo, A., Han, F., and Ellis, R. (2016). Exploring the Relation Between Self-regulation, Online Activities, and Academic Performance: A Case Study. In: Proceedings of the 6th International Learning Analytics and Knowledge Conference, pp. 422–429. Edinburgh, UK. doi: 10.1145/2883851.2883883

Pardo, A., Han, F., and Ellis, R. (2017). Combining university student self-regulated learning indicators and engagement with online learning events to predict academic performance. IEEE Transactions on Learning Technologies, 10(1), 82–92. doi: 10.1109/TLT.2016.2639508

Pastor, D. A. (2010). Cluster analysis. In G. R. Hancock and R. O. Mueller (Eds.), The reviewer's guide to quantitative methods in the social sciences, pp. 41–54. New York, NY: Routledge.

Persico, D. and Steffens, K. (2017). Self-Regulated Learning in Technology Enhanced Learning Environments. In: Duval, E., Sharples, M., and Sutherland, R. (Eds.), Technology Enhanced Learning, pp. 115–126. Cham, Switzerland: Springer. doi: 10.1007/978-3-319-02600-8_11

Rienties, B., Tempelaar, D., Nguyen, Q., and Littlejohn, A. (2019). Unpacking the intertemporal impact of self-regulation in a blended mathematics environment. Computers in Human Behavior, 100, 345–357. doi: 10.1016/j.chb.2019.07.007

Rovers, S. F. E., Stalmeijer, R. E., van Merriënboer, J. J. G., Savelberg, H. H. C. M., and de Bruin, A. B. H. (2018). How and Why Do Students Use Learning Strategies? A Mixed Methods Study on Learning Strategies and Desirable Difficulties With Effective Strategy Users. Frontiers in Psychology, 9, 2501. doi: 10.3389/fpsyg.2018.02501

Schmidt, H. G., Loyens, S. M. M., van Gog, T., and Paas, F. (2007). Problem-Based Learning is Compatible with Human Cognitive Architecture: Commentary on Kirschner, Sweller, and Clark (2006). Educational Psychologist, 42(2), 91–97. doi: 10.1080/00461520701263350

Siemens, G. and Gašević, D. (2012). Guest editorial: Learning and knowledge analytics. Educational Technology and Society, 15(3), 1–2.

Tempelaar, D. T., Rienties, B., and Giesbers, B. (2015). In search for the most informative data for feedback generation: Learning Analytics in a data-rich context. Computers in Human Behavior, 47, 157–167. doi: 10.1016/j.chb.2014.05.038

Tempelaar, D. T., Rienties, B., and Nguyen, Q. (2017). Achieving actionable learning analytics using dispositions. IEEE Transactions on Learning Technologies, 10(1), 6–16. doi: 10.1109/TLT.2017.2662679

Tempelaar, D., Nguyen, Q., and Rienties, B. (2020). Learning Analytics and the Measurement of Learning Engagement. In: D. Ifenthaler and D. Gibson (Eds.), Adoption of Data Analytics in Higher Education Learning and Teaching, pp. 159–176. Series: Advances in Analytics for Learning and Teaching. Springer, Cham. doi: 10.1007/978-3-030-47392-1_9

Tempelaar, D., Rienties, B., and Nguyen, Q. (2021a). The Contribution of Dispositional Learning Analytics to Precision Education. Educational Technology & Society, 24(1), 109–122.

Tempelaar, D., Rienties, B., and Nguyen, Q. (2021b). Dispositional Learning Analytics for Supporting Individualized Learning Feedback. Frontiers in Education, 6, 703773. doi: 10.3389/feduc.2021.703773

Tempelaar, D., Rienties, B., Giesbers, B., and Nguyen, Q. (2023). Modelling Temporality in Person- and Variable-Centred Approaches. Journal of Learning Analytics, 10(2), 1–17. doi: 10.18608/jla.2023.7841

Timotheou, S., Miliou, O., Dimitriadis, Y., Sobrino, S. V., Giannoutsou, N., Cachia, R., Monés, A. M., and Ioannou, A. (2023). Impacts of Digital Technologies on Education and Factors Influencing Schools' Digital Capacity and Transformation: A Literature Review. Education and Information Technologies, 28(6), 6695–6726. doi: 10.1007/s10639-022-11431-8

Vallerand, R. J., Pelletier, L. G., Blais, M. R., Brière, N. M., Senécal, C., and Vallières, E. F. (1992). The Academic Motivation Scale: A Measure of Intrinsic, Extrinsic, and Amotivation in Education. Educational and Psychological Measurement, 52, 1003–1017. doi: 10.1177/0013164492052004025

Vermunt, J. D. (1996). Metacognitive, cognitive and affective aspects of learning styles and strategies: A phenomenographic analysis. Higher Education, 31, 25–50. doi: 10.1007/BF00129106

Viberg, O., Hatakka, M., Bälter, O., and Mavroudi, A. (2018). The current landscape of learning analytics in higher education. Computers in Human Behavior, 89, 98–110. doi: 10.1016/j.chb.2018.07.027

Zamborova, K. and Klimova, B. (2023). The utilization of a reading app in business English classes in higher education. Contemporary Educational Technology, 15(3). doi: 10.30935/cedtech/13364

Zimmerman, B. J. (1986). Becoming a self-regulated learner: Which are the key subprocesses? Contemporary Educational Psychology, 11(4), 307–313. doi: 10.1016/0361-476X(86)90027-5
