Development of an Instrument for Evaluating Learning Experiences in a Hybrid Learning Environment

Paola Costa Cornejo¹ᵃ, Laëtitia Pierrot²ᵇ and Melina Solari Landa³ᶜ

¹ Centre Capsule, Sorbonne University, 4 Place Jussieu, Paris, France

² Cren, Avenue Olivier Messiaen, Le Mans University, Le Mans, France

³ Techné, University of Poitiers, 1 rue Raymond Cantel, Poitiers, France

Keywords: Learning Experience, Hybrid Learning Environment, Higher Education.

Abstract: Interest in hybrid learning environments (HLEs) has grown, particularly since 2020. A previous study

on HLEs during the Covid-19 period identified several evaluation challenges. These challenges stem from the

composite nature of the environments (combining human, technical, and pedagogical elements) and their

hybridity (varying degrees of support, openness, and presence-distance interaction). The literature also

highlights methodological shortcomings. The learning experience in a blended context is poorly defined, and

data collection often prevents comprehensive analysis. This paper specifically addresses the following

research question: how can we measure the learning experience in a blended learning context? The state-of-

the-art review identifies key dimensions for consideration, emphasizing the need for multidimensional

approaches to gain a deeper understanding of the learning experience. We aim to apply the designed

instrument in research in three different contexts. Ultimately, this paper seeks to enrich our understanding of

the complexities surrounding the learning experience in blended learning and to provide recommendations

that support teachers' pedagogical practices.

1 INTRODUCTION

The recent interest in hybrid learning environments

(HLEs) is partially fuelled by the COVID-19

pandemic. Our previous research, which studied and

assessed HLEs during the forced distance learning of

the lockdown (Costa et al., 2022), revealed two key

challenges in evaluating these learning environments.

(1) Existing evaluations often focus on either the

technical aspects (tool usability or utility for instance)

or participant (learners, teachers or academic staff)

feelings, neglecting the environment's holistic nature

that encompasses both technology and social

interactions. (2) Teachers in our study reported

implementing innovative, student-centred practices,

while students felt disoriented by the lack of a

familiar lecture structure. This aligns with Carreras

and Couturier (2023), who observed universities

focusing on content provision and highlighting the need for teacher development on instructional design to optimize the learning experience. Additionally, Peltier (2023) echoes our findings regarding differing perceptions of presence and distance between learners and teachers.

ᵃ https://orcid.org/0000-0002-7696-0417

ᵇ https://orcid.org/0000-0003-1701-3783

ᶜ https://orcid.org/0000-0001-6604-4274

A review by Raes et al. (2020) highlights the

potential of hybrid learning environments. Such

HLEs offer both organizational benefits (e.g. efficient

teaching practices) and pedagogical advantages (e.g.

improved learning quality). However, the technical

foundation of these environments requires

adjustments for both teachers and learners. For

example, both groups often report diminished social

presence due to reduced or absent visual and auditory

cues compared to traditional classrooms. Addressing

these challenges, Raes et al. (2020) propose key

research recommendations for HLEs:

- Expand and diversify data collection;
- Prioritize empirical and longitudinal studies;
- Employ multimodal analysis to capture the complex nature of engagement, social presence, and belonging;
- Evaluate the effectiveness of specific teaching scenarios within HLEs;
- Consider the unique possibilities and limitations of each learning environment within its institutional context.

Costa Cornejo, P., Pierrot, L. and Solari Landa, M.

Development of an Instrument for Evaluating Learning Experiences in a Hybrid Learning Environment.

DOI: 10.5220/0012726800003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 2, pages 605-612

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Our paper tackles this research question: what

aspects should we consider when evaluating the

learning experience in a hybrid learning environment

to support continuous improvement? Identifying

these crucial dimensions helps pinpoint where data

collection is necessary to assess the effectiveness of

the hybrid approach. Ultimately, these insights can

inform targeted training and support for teachers in

instructional design within hybrid settings.

This proposal focuses on evaluating HLEs within

higher education, encompassing diverse academic

fields yet sharing a common need for assessment. We

consider three specific cases:

1. The START European project supports teachers

who accompany students' transition from high school to

university. It utilizes online resources in various

formats, complementing face-to-face courses and

introducing elements of hybridization. For instance, it

facilitates the integration of students' personal

experiences into the academic sphere, prompting

teachers to acknowledge the transitional process. To

enhance the effectiveness of these resources,

analyzing the combined impact of disciplinary and

personal aspects is crucial.

2. The projects supported by CAPSULE include

some initiatives led by the faculty of Science and

Engineering at Sorbonne University that aim to

transform existing bachelor's degree courses from

purely face-to-face delivery to HLE formats. This

initiative addresses the question: how should we

evaluate these ongoing transformations, which

respond to the needs expressed by both teachers and

instructional designers?

3. The Learners Portal project arose from

concerns about low student engagement with the

resources provided for hybrid learning courses. An

alumni survey's preliminary results confirmed this,

also revealing a lack of dedicated learning

communities for these cohorts, potentially hindering

the development of crucial autonomous and self-

regulated learning competencies.

The next section reviews the current literature on learning experience evaluation and then introduces the foundation of our proposed evaluation tool.

2 STATE OF THE ART

2.1 Challenges in Measuring the

Learning Experience of Hybrid

Learning Environments

The study of learner experience within HLEs reveals

a diversity of approaches. This variety stems from

differing theoretical frameworks and the absence of a

single, universally accepted definition for "blended

learning" (BL). As Eggers et al. (2021, p. 175) rightly

stated in their systematic review, "the definition of

BL has long been confusing." Notably, French-speaking

researchers and practitioners (Eggers et al.,

2021; Peltier & Séguin, 2021) have widely adopted

the term "dispositif hybride de formation" (hybrid

learning environment) since its introduction by the

Hy-Sup collective (Deschryver & Charlier, 2012).

The evaluation of HLEs raises the issue of the

evaluation concept's polysemy, highlighting the need

to clarify which elements are being measured.

Lachaux (2023, p. 6) defines its multifaceted nature

as focusing on continuous improvement, acting as "an

intermediate diagnosis (...) on the lookout for

anything that is not working, or working well enough,

with a view to improvement". However, two

systematic reviews (Buhl-Wiggers et al., 2023; Raes

et al., 2020) reveal the limitations in existing

research. Most studies rely on qualitative methods

and case studies, limiting generalizability (Raes et al.,

2020). Additionally, longitudinal studies and

assessments of long-term effects are scarce (Buhl-

Wiggers et al., 2023).

Further, Lajoie et al. (2021) highlight a critical

issue in distance learning research: inconsistent

descriptions of the learning environments themselves

hinder accurate assessment of their impact on student

learning. They also pinpoint the lack of attention to

pedagogical design, proposing a model incorporating

students' socio-demographic characteristics and

course pedagogy to predict potential dropouts.

Defining the learning experience in HLEs also

remains a subject of discussion. Many studies

equate it with satisfaction or performance, often

linked to learner engagement. For instance, Wu et al.

(2010), cited by Bouilheres et al. (2020), emphasize

how perceived success and learning environment

impact overall satisfaction. Similarly, Xiao et al.

(2020) directly link satisfaction and learning

experience to students' ability to explore resources

and engage cognitively. However, alternative

perspectives shift the focus from satisfaction to

student perception and agency. Molinari and Schneider

(2020) define it as "the way learners perceive and give

meaning to the learning situation and the emotions

they feel" (p. 3). Authors favoring a learner-centered

approach (Boud & Prosser, 2002; Charlier et al.,

2021; Molinari & Schneider, 2020; Peraya &

Charlier, 2022) stress the importance of designing

HLEs around the students’ perspective. In this

perspective, teaching and learning processes are

inseparable, and learning occurs within a specific

context, shaped by diverse student perceptions.

Therefore, "students perceive the same learning

context in different ways, and this variation

fundamentally impacts their approach to learning and

the quality of their outcomes" (Boud & Prosser, 2002,

p. 238).

2.2 Key Dimensions for Assessing the

HLEs Learning Experience

A comprehensive review by Schneider & Preckel

(2017) analysed 38 meta-analyses

encompassing over 2 million students in

face-to-face courses to explore factors contributing to

academic success. Their findings identified 105

relevant variables, highlighting the strong correlation

between "social interaction," "course design," and

"performance." The study emphasizes that students’

performance aligns with stimulating learning

environments characterized by clear information

presentation, active student interaction, and

cognitively engaging activities. Additionally,

students with high performance often display positive

self-efficacy, strong prior academic achievement, and

a strategic use of learning strategies. Building upon

these findings, authors like Bonfils & Peraya (2011),

Charlier et al. (2021), De Clercq (2020), and Tricot

(2021) underscore the importance of considering

students' characteristics to design learning scenarios

that effectively meet their diverse needs.

The variables involved in the learning experience

are diverse in nature. While designing a good course

is crucial, effective learning experiences go beyond

mere planning. As highlighted by several researchers

(Amadieu & Tricot, 2014; Deschryver, 2008;

Entwistle & McCune, 2013; Viau et al., 2005), the

real challenge lies in encouraging student

engagement with activities that require active

participation. Students' reluctance to engage often stems from a lack

of developed skills, including digital competencies,

self-regulation, autonomy, and collaboration

(Kaldmäe et al., 2022). This underlines the inherent

complexity of the issue, requiring a systemic

understanding of the diverse and dynamic variables

involved.

Schneider & Preckel (2017) emphasize the crucial

role of effective implementation in maximizing the

impact of different teaching methods on student

performance. Their analysis of numerous studies

revealed moderating effects for almost all teaching

methods, implying that their effectiveness hinges on

how they are delivered. Notably, teachers of high-

performing students invested heavily in designing

well-structured courses with clear learning objectives

and frequent, targeted feedback. This aligns with

other research highlighting the importance of

continual professional development for higher

education instructors (Romainville & Michaut,

2012). Such training equips teachers with the skills

and knowledge needed to effectively implement

diverse teaching methods, ultimately fostering

student success.

Building on the concept of learner-centred

environments, Boud & Prosser (2002) identify four

key areas to enhance the student experience in

technology-rich settings. Two of these areas directly

address learner engagement and consideration of

individual learning contexts.

2.2.1 Involving Students

Building upon the foundation laid by the Hy-Sup

collective (Deschryver & Charlier, 2012), recent

research emphasizes a learner-centred perspective to

understand how HLEs impact students (Charlier et

al., 2021; Peraya & Charlier, 2022). This necessitates

understanding students' expectations, prior

knowledge, and sense of self-efficacy (Bandura,

2003; Follenfant & Meyer, 2003; Jackson, 2002;

Lécluse-Cousyn & Jézégou, 2023). Furthermore,

Viau et al. (2005) advocate for designing meaningful

learning activities that students perceive as valuable.

This means highlighting their usefulness, interest,

perceived cost, and importance, ultimately fostering

student motivation (De Clercq, 2020; Entwistle &

McCune, 2013; Viau et al., 2005).

Beyond learner characteristics, several design

aspects of HLEs influence student engagement. One

key factor is the degree of course openness, which

Jézégou (2021, 2022) links to students' perception of

organizational and relational proximity (Brassard &

Teutsch, 2014; Moore, 2003). To assess this,

Jézégou's GEODE evaluation system (2021)

proposes three dimensions:

- Spatio-temporal openness: flexibility in accessing learning materials and engaging in activities (time, place, pace).
- Pedagogical openness: freedom in learning objectives, sequence, methods, formats, content, and evaluation.
- Openness in mediated communication: choice of media, communication tools, and resource interaction.

Research on factors influencing student success in

HLEs has explored various elements. Studies have

examined how engaged and motivated students are in

the learning process (Ames, 1992; Elliot, 1999;

Houart et al., 2019). Others examine the different

ways students approach learning and how these affect

their success (Biggs, 1987; Entwistle & McCune,

2013). Pirot & De Ketele (2000) highlight

the importance of pre-existing knowledge and skills

developed in earlier education, including time

management, organization, and cognitive skills. This

aligns with research emphasizing the connection

between learning strategies, self-regulation, and

academic success (Cosnefroy, 2010; Cosnefroy et al.,

2018; Eggers et al., 2021; Zimmerman, 2000).

2.2.2 Recognising and Integrating Students'

Learning Context

Hybrid learning environments (HLEs) exist within a

complex ecosystem. Recognizing this, evaluation

must consider not only the pedagogical

implementation but also the material and human

contexts of the students involved. For example, does

the activity align with students' current

circumstances, considering task demands and

resource availability? Do assessment activities

accurately reflect learning outcomes and allow for the

demonstration of high-level achievement? As Peraya

& Charlier inquire, how does the "current diversity of

learning spaces transform the student experience"?

(Peraya & Charlier, 2022, p. 38). While research

highlights these crucial perspectives and challenges,

methodological guidance on data collection remains

scarce.

Building on the previous points, Boud & Prosser

(2002) advocate for two additional design features:

challenging activities and opportunities for active

practice. These elements aim to enhance student

learning by promoting deeper cognitive engagement

(Chi & Wylie, 2014; De Clercq, 2020; Vellut, 2019).

This approach encompasses tasks that allow students

to demonstrate their learning, receive feedback,

reflect on their progress, and gain confidence through

hands-on practice.

2.3 Instruments Used to Assess the

Learning Experience in an HLE

Two main approaches exist for collecting data on

students' learning experiences in HLEs.

1. Observed data: This mainly involves analysing

traces left by students in Learning Management

Systems to assess online participation in

learning activities (Bennacer, 2022).

Understanding the meaning behind these traces

is crucial, but interpreting them requires

careful consideration of ethical and interpretive

dimensions (Pierrot, 2019). Additionally, the

Hy-Sup collective (2012) proposes a typology

based on observing both learners and teachers,

using 14 items to categorize 6 types of HLEs.

This helps guide improvement in course design,

but different perceptions can arise from its sole

use (Pierrot et al., 2023).

2. Self-reported data: This involves collecting

student data through questionnaires or

qualitative methods like interviews (individual

or group). For example, evaluating learning

strategies often relies on questionnaires with

statements and Likert scales to express

agreement or disagreement (Cosnefroy, 2010;

Cosnefroy et al., 2018; Eggers et al., 2021;

Zimmerman, 2000). However, limitations

include the declarative nature of the data and

potential respondent drop-off due to lengthy

questionnaires, which can compromise

generalizability.
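As a minimal sketch of how questionnaire responses of this kind are commonly aggregated, the Python snippet below averages Likert items into a subscale score and reports how much of the subscale a respondent completed, which is one simple way to monitor drop-off. The item names, the 5-point scale, and the reverse-coded item are illustrative assumptions; they are not taken from any instrument cited here.

```python
from statistics import mean

# Assumption: 5-point Likert scale (1 = strongly disagree, 5 = strongly agree).
SCALE_MAX = 5
# Assumption: hypothetical negatively worded item that must be reverse-coded.
REVERSED_ITEMS = {"sr_3"}


def subscale_score(responses, items):
    """Return the mean of the answered items, or None if none were answered."""
    values = []
    for item in items:
        answer = responses.get(item)
        if answer is None:
            continue  # unanswered item, e.g. the respondent dropped off
        if item in REVERSED_ITEMS:
            answer = SCALE_MAX + 1 - answer
        values.append(answer)
    return mean(values) if values else None


def completion_rate(responses, items):
    """Return the share of items in the subscale that were answered."""
    answered = sum(1 for item in items if responses.get(item) is not None)
    return answered / len(items)


# Hypothetical self-regulation subscale and one respondent's answers.
self_regulation_items = ["sr_1", "sr_2", "sr_3", "sr_4"]
student = {"sr_1": 4, "sr_2": 5, "sr_3": 2, "sr_4": None}

score = subscale_score(student, self_regulation_items)
rate = completion_rate(student, self_regulation_items)
print(score, rate)
```

Reporting the completion rate next to each score makes it visible when a subscale mean rests on only a few answered items, which is where the declarative and drop-off limitations noted above are most likely to distort results.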

Our review of the current research allows us to

identify major dimensions for evaluating the learning

experience in HLEs, along with potential pitfalls to

avoid. We define the learning experience as students'

perception, understanding, and interaction within an

HLE, leading to varying levels of engagement with

their learning.

For characterizing the instructional design, we

adopt the Hy-Sup collective's (Deschryver & Charlier,

2012) theoretical framework. The 14-question self-

assessment tool previously mentioned distinguishes

between 3 teaching-centred and 3 learning-centred

types, also highlighting nuanced differences in five

dimensions (presence-distance articulation,

mediation, mediatization, support, and openness).

We propose enriching this framework by

incorporating the learners' perspective, specifically

focusing on variables that enhance their engagement

within a given HLE context. Additionally, we suggest

complementing perceived data with observed data to

strengthen the evaluation process, as defined by

Lachaux (2023).

3 METHODOLOGY AND

FUTURE WORK

Designing our HLE learning experience evaluation

tool involved leveraging the iterative strengths of

design-based research (Amiel & Reeves, 2008). This

means merging research insights with practitioner

input through a cyclical process. As suggested by

vom Brocke et al. (2020), the process is cyclical as it

adapts through use.

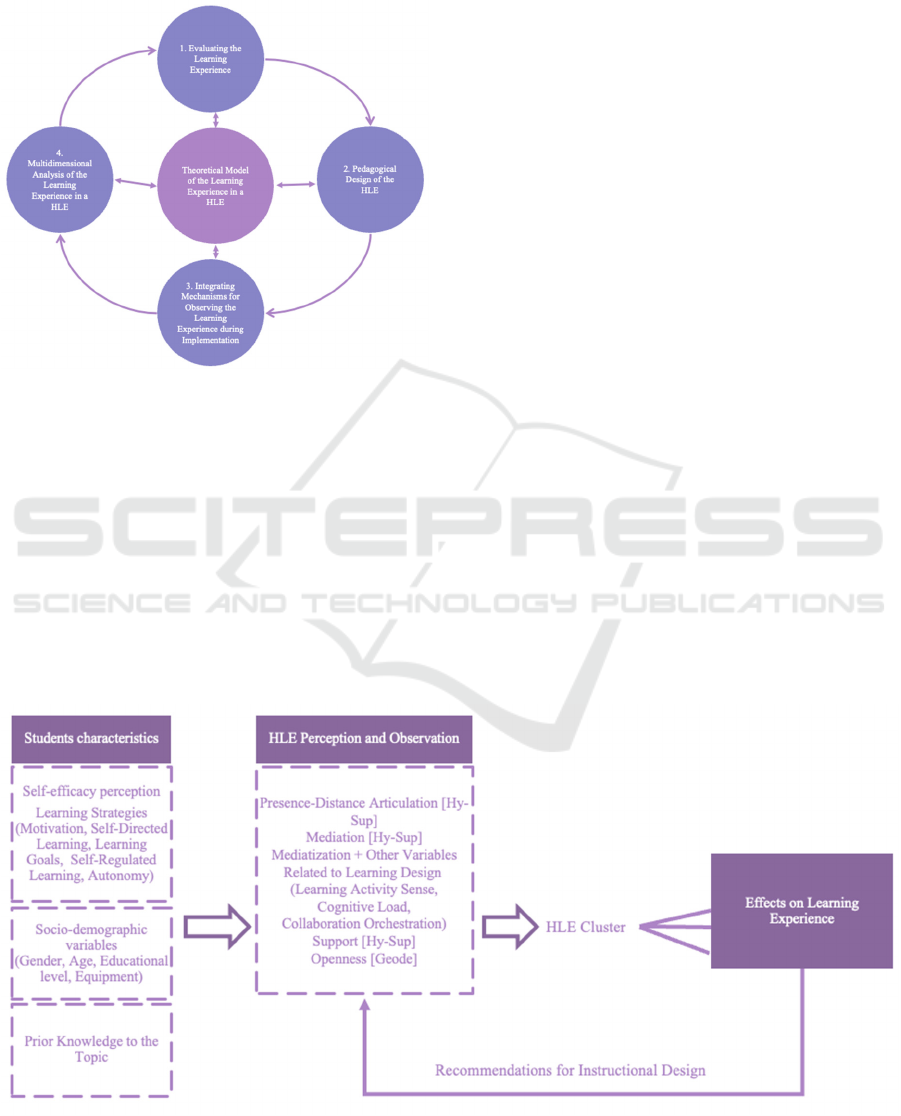

Figure 1: DBR method used in the study (adapted from

Sanchez, 2022).

A core element of this approach is our theoretical

model built around the key dimensions of the HLE

learning experience. This model served four key

purposes: (1) identifying main considerations for

evaluating the learning experience, (2) guiding the

pedagogical design of the HLE itself, (3) integrating

mechanisms for observing the learning experience

during implementation, (4) enabling a

multidimensional analysis of the collected data (see

Figure 1).

We first took up the dimensions identified by the

Hy-Sup collective (Charlier et al., 2021; Deschryver

& Charlier, 2012; Peraya & Charlier, 2022) to

develop our theoretical framework. To enhance our

understanding of students' perspectives in HLEs,

several new dimensions beyond those already

explored seem crucial, aligning with Boud &

Prosser's (2002) recommendations. These include

individual characteristics, skills and feelings of self-efficacy

concerning the proposed activities (skills

relating to the nature of the task and technical skills for

the tools that support them), learners'

expectations in terms of learning, and their

learning context.

Figure 2 shows an extract from our evaluation

model designed to capture different aspects of the

learner experience in HLEs. This extract

focuses on measuring learner involvement and the

dimensions of the meaning of the activity, the feeling

of self-efficacy, the openness of the course and in-

depth learning. The model thus combines dimensions

relating to learners and others relating to the technical

environment on which the HLE is based. In addition,

the sub-dimensions identified are based on

observable elements (e.g. explicitness of target skills)

and other perceived elements (usefulness of the

activity).

Building on the research reviewed earlier, our

approach emphasizes using a mixed-method

approach with data triangulation. This combines

multiple data collection methods, allowing us to

compare analyses of the same element from different

perspectives. This supports the objectivity and

richness of our findings while capturing the

complexity of the HLE learning experience

(Bobillier Chaumon, 2016).
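To illustrate what comparing the same element from different perspectives could look like in practice, the following Python sketch sets a self-reported engagement score against an observed participation rate for each student and labels whether the two sources converge. It is a hypothetical example, not the instrument under development: the record fields, the 1 to 5 Likert scale, and the 0.3 divergence threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class StudentRecord:
    student_id: str
    perceived_engagement: float  # self-reported Likert mean (assumed 1-5 scale)
    activities_completed: int    # observed, e.g. counted from LMS traces
    activities_offered: int


def normalise_likert(score, low=1, high=5):
    """Rescale a Likert mean to the 0-1 range."""
    return (score - low) / (high - low)


def triangulate(record, threshold=0.3):
    """Compare perceived and observed engagement for one student.

    The threshold is an arbitrary illustrative value, not an empirical cut-off.
    """
    if record.activities_offered <= 0:
        raise ValueError("activities_offered must be positive")
    perceived = normalise_likert(record.perceived_engagement)
    observed = record.activities_completed / record.activities_offered
    gap = perceived - observed
    if abs(gap) <= threshold:
        label = "convergent"
    elif gap > 0:
        label = "perceived higher than observed"
    else:
        label = "observed higher than perceived"
    return {
        "student_id": record.student_id,
        "perceived": perceived,
        "observed": observed,
        "label": label,
    }


# Hypothetical records for three students.
records = [
    StudentRecord("s01", 4.0, 9, 12),
    StudentRecord("s02", 4.5, 3, 12),
    StudentRecord("s03", 2.0, 11, 12),
]
results = [triangulate(r) for r in records]
for result in results:
    print(result["student_id"], result["label"])
```

Divergent cases are not errors to be corrected. In a mixed-method design they are candidates for qualitative follow-up, for example interviews, to understand why perception and observed activity differ.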

Figure 2: Extract from the theoretical model of the learning experience in an HLE.

The rest of the work will consist of defining the

specific data collection methods for each dimension

of the proposed model. Finally, we will implement

the designed tool in our own educational contexts to

test its effectiveness in real-world settings.

This paper delves into the existing literature to

identify the main dimensions for a multidimensional

evaluation of the HLE learning experience. This

exploration produced a framework encompassing

various dimensions and sub-dimensions that

influence and inform how students learn and engage

in HLE settings. In answer to our research question

(namely what aspects to consider when evaluating the

learning experience in a hybrid learning environment

for continuous improvement?), our research suggests

focusing on several dimensions: (1) consider

individual learner characteristics, (2) investigate

learner involvement and engagement, (3) assess

course potentialities in terms of in-depth learning, (4)

evaluate technical environment features and (5)

combine subjective with objective data for a richer

understanding of the learning experience. Based on

these guidelines, our framework serves as the

foundation for a tool we are developing to measure

the learning experience. This tool aims to surpass the

methodological limitations of commonly used data

collection methods in the field. In the next stages, we

will apply, refine, test and validate this tool.

Ultimately, our work seeks to deepen our

understanding of the multifaceted HLE learning

experience. This will allow us to provide valuable

recommendations for pedagogical engineering and

empower teachers with self-reflection tools to

enhance their teaching practices.

REFERENCES

Amadieu, F., & Tricot, A. (2014). Apprendre avec le

numérique : Mythes et réalités. Retz.

Ames, C. (1992). Classrooms: Goals, structures, and

student motivation. Journal of Educational Psychology,

84(3), 261‑271. https://doi.org/10.1037/0022-0663.84.3.261

Amiel, T., & Reeves, T. (2008). Design-Based Research

and Educational Technology: Rethinking Technology

and the Research Agenda. Educational Technology &

Society, 11(4), 29‑40.

Bandura, A. (2003). Auto-efficacité : Le sentiment

d’efficacité personnelle. De Boeck.

Bennacer, I. (2022). Teaching analytics: Support for the

evaluation and assistance in the design of teaching

through artificial intelligence [Thèse de doctorat, Le

Mans Université]. https://theses.hal.science/tel-03935709

Biggs, J. (1987). Student approaches to learning and

studying. Australian Council for Educational Research.

Bobillier Chaumon, M.-E. (2016). L’acceptation située des

technologies dans et par l’activité : Premiers étayages

pour une clinique de l’usage. Psychologie du Travail et

des Organisations, 22(1), 4‑21.

https://doi.org/10.1016/j.pto.2016.01.001

Bonfils, P., & Peraya, D. (2011). Environnements de travail

personnels ou institutionnels ? Les choix d’étudiants en

ingénierie multimédia à Toulon. In L. Vieira, C. Lishou,

& N. Akam, Le numérique au coeur des partenariats :

Enjeux et usages des technologies de l’information et

de la communication (p. 13‑28). Presses Universitaires

de Dakar. http://www.philippe-bonfils.com/9.html

Boud, D., & Prosser, M. (2002). Appraising New

Technologies for Learning: A Framework for

Development. Educational Media International,

39(3‑4), 237‑245.

https://doi.org/10.1080/09523980210166026

Bouilheres, F., Le, L. T. V. H., McDonald, S., Nkhoma, C.,

& Jandug-Montera, L. (2020). Defining student

learning experience through blended learning.

Education and Information Technologies, 25(4),

3049‑3069. https://doi.org/10.1007/s10639-020-10100-y

Brassard, C., & Teutsch, P. (2014). Proposition de critères

de proximité pour l’analyse des dispositifs de formation

médiatisée. Distances et médiations des savoirs, 2(5).

https://doi.org/10.4000/dms.646

Buhl-Wiggers, J., Kjærgaard, A., & Munk, K. (2023). A

scoping review of experimental evidence on face-to-

face components of blended learning in higher

education. Studies in Higher Education, 48(1),

151‑173.

https://doi.org/10.1080/03075079.2022.2123911

Carreras, O., & Couturier, C. (2023). Les pratiques

d’enseignement dans le supérieur pendant les

confinements et leur persistance. Distances et

médiations des savoirs, 42.

https://doi.org/10.4000/dms.9025

Charlier, B., Peltier, C., & Ruberto, M. (2021). Décrire et

comprendre l’apprentissage dans les dispositifs

hybrides de formation. Distances et médiations des

savoirs, 35. https://doi.org/10.4000/dms.6638

Chi, M. T. H., & Wylie, R. (2014). The ICAP Framework:

Linking Cognitive Engagement to Active Learning

Outcomes. Educational Psychologist, 49(4), 219‑243.

https://doi.org/10.1080/00461520.2014.965823

Cosnefroy, L. (2010). Se mettre au travail et y rester : Les

tourments de l’autorégulation. Revue française de

pédagogie, 170, 5‑15. https://doi.org/10.4000/rfp.1388

Cosnefroy, L., Fenouillet, F., Mazé, C., & Bonnefoy, B.

(2018). On the relationship between the forethought

phase of self-regulated learning and self-regulation

failure. Issues in Educational Research, 28(2),

329‑348.

Costa, P., Solari, M., Pierrot, L., Salazar, R., Peraya, D., &

Cerisier, J. F. (2022). Situación actual y desafíos de la

formación híbrida y a distancia en tiempos de crisis.

Estudio de casos en universidades de Chile y Francia.

Cuadernos de investigación. Aseguramiento de la

Calidad en Educación Superior. Comisión Nacional de

Acreditación CNA-Chile, 24.

https://www.cnachile.cl/Paginas/cuadernos.aspx

De Clercq, M. (Éd.). (2020). Oser la pédagogie active.

Quatre clefs pour accompagner les étudiants.es dans

leur activation pédagogique. Presses universitaires de

Louvain. http://pul.uclouvain.be

Deschryver, N., & Charlier, B. (2012). Dispositifs hybrides,

nouvelle perspective pour une pédagogie renouvelée de

l’enseignement supérieur. http://prac-hysup.univ-lyon1.fr/spiral-files/download?mode=inline&data=1757974

Eggers, J. H., Oostdam, R., & Voogt, J. (2021). Self-

regulation strategies in blended learning environments

in higher education: A systematic review. Australasian

Journal of Educational Technology, 175‑192.

https://doi.org/10.14742/ajet.6453

Elliot, A. J. (1999). Approach and avoidance motivation

and achievement goals. Educational Psychologist,

34(3), 169‑189.

https://doi.org/10.1207/s15326985ep3403_3

Entwistle, N., & McCune, V. (2013). The disposition to

understand for oneself at university: Integrating

learning processes with motivation and metacognition.

British Journal of Educational Psychology, 83(2),

267‑279. https://doi.org/10.1111/bjep.12010

Follenfant, A., & Meyer, T. (2003). Pratiques déclarées,

sentiment d’avoir appris et auto-efficacité au travail.

Résultats de l’enquête quantitative par questionnaires.

In P. Carre & O. Charbonnier, Les apprentissages

professionnels informels. L’Harmattan.

Houart, M., Bachy, S., Dony, S., Hauzeur, D., Lambert, I.,

Poncin, C., & Slosse, P. (2019). La volition, entre

motivation et cognition : Quelle place dans la pratique

des étudiants, quels liens avec la motivation et la

cognition ? Revue internationale de pédagogie de

l’enseignement supérieur, 35(1).

https://doi.org/10.4000/ripes.2061

Jackson, J. W. (2002). Enhancing Self-Efficacy and

Learning Performance. The Journal of Experimental

Education, 70(3), 243‑254.

https://doi.org/10.1080/00220970209599508

Jézégou, A. (2021). GEODE pour évaluer l’ouverture d’un

environnement éducatif [application web]. La Fabrique

des Formations, Fondation i-Site, Université de Lille.

https://fabrique-formations.univ-lille.fr/geode

Jézégou, A. (2022). La présence à distance en e-Formation.

Enjeux et repères pour la recherche et l’ingénierie.

Presses Universitaires du Septentrion.

Kaldmäe, K., Polak, M., Saralar-Aras, İ., Ostrowska, B.,

Solari Landa, M., Verswijvel, B., & Zaród, M. (2022).

Pedagogy and the Learning Space [Novigado Project

Final Report]. The Novigado Project Consortium.

https://fcl.eun.org/documents/10180/7012295/Novigado-final-report_EN.pdf/8fd9c408-12ed-6158-e87e-a19f314ff01e?t=1658147139279

Lachaux, J.-P. (2023). Préface. In L’évaluation des

dispositifs pédagogiques innovantes (Association

Nationale Recherche Technologie, p. 6‑8).

Lécluse-Cousyn, I., & Jézégou, A. (2023). Stratégies

volitionnelles, sentiment d’autoefficacité et

accompagnement étudiant : Quelles relations dans un

dispositif hybride ? Revue internationale des

technologies en pédagogie universitaire, 20(3), 37‑54.

https://doi.org/10.18162/ritpu-2023-v20n3-03

Molinari, G., & Schneider, E. (2020). Soutenir les stratégies

volitionnelles et améliorer l’expérience des étudiants en

formation à distance. Quels potentiels pour le design

tangible ? Distances et médiations des savoirs, 32.

https://doi.org/10.4000/dms.5731

Moore, M. (2003). Theory of transactional distance: The

evolution of theory of distance education. In D. Keegan

(Éd.), Theoretical Principles of Distance Education (p.

22‑38). Routledge.

Peltier, C. (2023). Présence, distance et absence. Diversité

des représentations liées à la baisse de fréquentation des

cours présentiels et des usages des cours enregistrés.

Distances et médiations des savoirs, 43.

https://doi.org/10.4000/dms.9535

Peltier, C., & Séguin, C. (2021). Hybridation et dispositifs

hybrides de formation dans l’enseignement supérieur :

Revue de la littérature 2012-2020. Distances et

médiations des savoirs, 35.

https://doi.org/10.4000/dms.6414

Peraya, D., & Charlier, B. (2022). Cadres d’analyse pour

comprendre l’hybridation aujourd’hui. Hybridation des

formations : de la continuité à l’innovation

pédagogique ? TICEMED 13, University Panteion,

Athènes.

Pierrot, L. (2019). Les LA : Des réponses et des promesses.

Distances et médiations des savoirs, 26.

https://doi.org/10.4000/dms.3764

Pierrot, L., Costa-Cornejo, P., Solari-Landa, M., Peraya,

D., & Cerisier, J. F. (2023). Ingénierie pédagogique

d’urgence à l’université : Quels enseignements pour

l’avenir ? Ticemed13 (2022), 78‑94.

Pirot, L., & De Ketele, J.-M. (2000). L’engagement

académique de l’étudiant comme facteur de réussite à

l’université Étude exploratoire menée dans deux

facultés contrastées. Revue des sciences de l’éducation,

26(2), 367‑394. https://doi.org/10.7202/000127ar

Raes, A., Detienne, L., Windey, I., & Depaepe, F. (2020).

A systematic literature review on synchronous hybrid

learning: Gaps identified. Learning Environments

Research, 23(3), 269‑290.

https://doi.org/10.1007/s10984-019-09303-z

Romainville, M., & Michaut, C. (2012). Réussite, échec et

abandon dans l’enseignement supérieur. De Boeck

Supérieur.

Sanchez, E. (2022). Les modèles au cœur de la recherche

orientée par la conception. Méthodes de conduite de la

recherche sur les EIAH. https://methodo-eiah.unige.ch/?p=495

Schneider, M., & Preckel, F. (2017). Variables associated

with achievement in higher education: A systematic

review of meta-analyses. Psychological Bulletin,

143(6), 565‑600. https://doi.org/10.1037/bul0000098

Tricot, A. (2021). Articuler connaissances en psychologie

cognitive et ingénierie pédagogique. Raisons

éducatives, 25(1), 141‑162.

https://doi.org/10.3917/raised.025.0141

Vellut, D. (2019, juin 18). Lier apprentissage actif et

engagement cognitif : Le modèle ICAP (M. Chi).

Louvain Learning Lab.

https://www.louvainlearninglab.blog/apprentissage-actif-engagement-cognitif-icap-michelene-chi/

Viau, R., Joly, J., & Bédard, D. (2005). La motivation des

étudiants en formation des maîtres à l’égard d’activités

pédagogiques innovatrices. Revue des sciences de

l’éducation, 30(1), 163‑176.

https://doi.org/10.7202/011775ar

vom Brocke, J., Hevner, A., & Maedche, A. (2020).

Introduction to Design Science Research. In J.

vom Brocke, A. Hevner, & A. Maedche (Éds.), Design

Science Research. Cases (p. 1‑13). Springer

International Publishing. https://doi.org/10.1007/978-3-030-46781-4_1

Wu, J.-H., Tennyson, R. D., & Hsia, T.-L. (2010). A study

of student satisfaction in a blended e-learning system

environment. Computers & Education, 55(1), 155‑164.

https://doi.org/10.1016/j.compedu.2009.12.012

Xiao, J., Sun‐Lin, H., Lin, T., Li, M., Pan, Z., & Cheng, H.

(2020). What makes learners a good fit for hybrid

learning? Learning competences as predictors of

experience and satisfaction in hybrid learning space.

British Journal of Educational Technology, 51(4),

1203‑1219. https://doi.org/10.1111/bjet.12949

Zimmerman, B. J. (2000). Attaining Self-Regulation. In

Handbook of Self-Regulation (p. 13‑39). Elsevier.

https://doi.org/10.1016/B978-012109890-2/50031-7
