What Will Virtual Reality Bring to the Qualification of Visual Walkability in Cities?

Chongan Wang, Vincent Tourre, Thomas Leduc and Myriam Servières

Nantes Université, ENSA Nantes, École Centrale Nantes, CNRS, AAU-CRENAU, UMR 1563, F-44000 Nantes, France

Keywords:

Walkability, Virtual Reality, Urban Design.

Abstract:

Walking as a daily activity has become increasingly popular in recent years. It is not only good for people’s health, but it also helps reduce the air pollution and other nuisances associated with vehicle use. As a result, urban design has shifted towards pedestrian-friendly cities. Walkability is a broad concept with many different aspects, and our work focuses on only one of them, namely “visual walkability”. While standard research on visual walkability tends to rely on field experiments to collect data, we present a novel method to qualify visual walkability: assessment in virtual reality with omnidirectional videos. We analyze the methodological questions such an experiment raises and propose an experimental design that answers each of them.

1 INTRODUCTION

People should walk every day, whether from home to work or just for leisure, as it is the easiest way to exercise, with minimal side effects, for both physical and mental health (Morris and Hardman, 1997; White et al., 2019). It is recommended that healthy adults take about 7,500 steps per day, or at least 15,000 steps of intense physical activity per week, to stay healthy (Tudor-Locke et al., 2011).

Therefore, modern urban planning strategies tend to encourage people to walk more every day (Boarnet et al., 2008; Giles-Corti et al., 2013; Johansson et al., 2016). This led to the concept of a walkable environment, or walkability. According to Dovey (Dovey and Pafka, 2020), walkability is a multi-disciplinary field connected with public health, climate change, economic productivity and social equity. Talen (Talen and Koschinsky, 2013) defined a “walkable neighborhood” as a safe and well-serviced neighborhood equipped with qualities that make walking a positive experience. This idea also underlies Walk Score® (Duncan et al., 2011), which assigns a walkability score based on the distance to a variety of nearby, frequently visited commercial and public amenities.
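To illustrate what a distance-based amenity score involves, the sketch below weights the closest amenity of each category by a linear distance decay. It is not the Walk Score® algorithm: the categories, the weights and the 400 m / 1,600 m decay thresholds are hypothetical values chosen only for the example.

```python
from dataclasses import dataclass


@dataclass
class Amenity:
    category: str
    distance_m: float  # walking distance from the evaluated location, in metres


# Hypothetical category weights (not taken from any published index).
WEIGHTS = {"grocery": 3.0, "restaurant": 2.0, "school": 1.0, "park": 1.0}


def decay(distance_m: float, full_until: float = 400.0, zero_at: float = 1600.0) -> float:
    """Linear distance decay: 1 up to `full_until`, falling to 0 at `zero_at`."""
    if distance_m <= full_until:
        return 1.0
    if distance_m >= zero_at:
        return 0.0
    return (zero_at - distance_m) / (zero_at - full_until)


def amenity_score(amenities: list[Amenity]) -> float:
    """Score in [0, 100]; only the closest amenity of each category counts."""
    best: dict[str, float] = {}
    for a in amenities:
        if a.category in WEIGHTS:
            best[a.category] = max(best.get(a.category, 0.0), decay(a.distance_m))
    return 100.0 * sum(WEIGHTS[c] * v for c, v in best.items()) / sum(WEIGHTS.values())


print(amenity_score([Amenity("grocery", 200), Amenity("park", 1000)]))  # 50.0
```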

Conventional walkability research, such as that of Pak (Pak and Agukrikul, 2017) or Raswol (Raswol, 2020), has used field experiments to collect data. These are time-consuming and difficult to reproduce, and they usually involve many experts who give professional scores, as in Ewing (Ewing and Handy, 2009) or Kim (Kim and Lee, 2022); such experts cannot easily be recruited on a large scale. Thus, the results may not reflect the perception of ordinary, “non-expert” pedestrians.

Street view imagery (SVI) is becoming easily accessible via different providers (Google Street View, Tencent, Baidu, etc.), and datasets such as Place Pulse 2.0 (Dubey et al., 2016) have shown how qualitative perceptions can be evaluated by training Convolutional Neural Networks (CNNs) on street view images. Moreover, vision is our predominant sense (Stokes and Biggs, 2014; Hutmacher, 2019), which makes visual information an important part of walkability; this research therefore focuses on “Visual Walkability”. As a first approach, and before developing the concept in the next section on the basis of a definition taken from the state of the art, we define “Visual Walkability” as perceived walkability that depends on criteria which pedestrians can recognize by sight in the urban space. First impressions influence whether we decide to take a path, as well as the duration and pleasantness of a walk. Some qualitative aspects of walkability, such as cleanliness, density, or even safety, can be measured quantitatively through the visual perception of pedestrians.

Wang, C., Tourre, V., Leduc, T. and Servières, M.

What Will Virtual Reality Bring to the Qualification of Visual Walkability in Cities?.

DOI: 10.5220/0012731200003696

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 10th International Conference on Geographical Information Systems Theory, Applications and Management (GISTAM 2024), pages 203-210

ISBN: 978-989-758-694-1; ISSN: 2184-500X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The objective of this work is to find out which visual elements influence pedestrians’ perception of walkability in the urban environment, with the help of virtual reality (VR). This leads to our research questions:

RQ1: Can visual walkability in the real world be preserved in virtual reality?

RQ2: Which parameters influence visual walkability in virtual urban space during daytime?

The emphasis in these questions is on the words “Virtual Reality”, “visual”, “urban” and “daytime”. We will only examine the visual impact on pedestrians in an urban environment during the daytime, without considering in this work the changes in perception that night-time conditions would induce. By examining visual walkability in virtual reality, we expect to eventually better understand how pedestrians perceive their environment and to simplify the walkability evaluation procedure with VR.

2 STATE OF THE ART

Few studies currently deal with visual walkability. Zhou (Zhou et al., 2019) defined it as a subjective concept that describes the environmental qualities that influence an individual’s perception of the environment as a place to walk. Li (Li et al., 2020) divided the walkability of streets into physical and perceived walkability, and further investigated it in terms of visual walkability (Li et al., 2022). Given how little work exists on visual walkability, we pursue further study of this topic.

2.1 Visual Walkability

Walkability is a very general term that covers many different subjects. For example, Ewing (Ewing and Handy, 2009) mentioned 51 perceptual qualities associated with walkability, nine of which are commonly used given the importance assigned to them in the literature: imageability, enclosure, human scale, transparency, complexity, legibility, linkage, coherence, and tidiness. Walkability is thus often defined in terms of multi-sensory perception.

Since we want to go further with visual walkabil-

ity, the elements that visually influence our perception

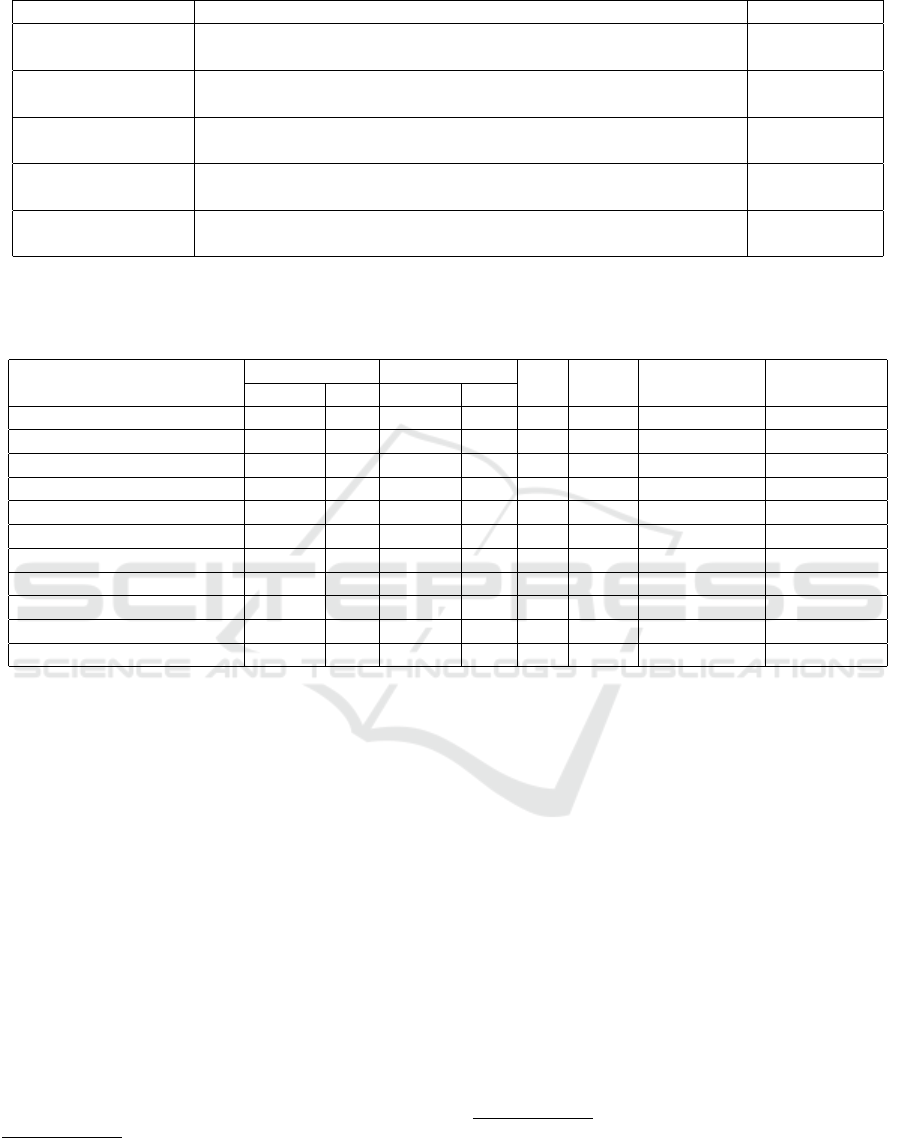

of walkability are important to us. In the Table 1, we

summarize the indicators and the methods that some

of the previous research has used to calculate visual

walkability.

Thanks to the development of SVI, most research has used pictures to represent the environment, while little has used videos as an instrument. Kim (Kim and Lee, 2022) and Nakamura (Nakamura, 2021) used panoramic videos, but they did not justify their choice of video duration. Several studies in Table 2 applied VR, but few of them added virtual locomotion, which is an important factor for the immersive experience, especially when evaluating walkability.

Furthermore, as listed in Table 1, much of the research on visual walkability has simply counted pixels in images (Ma et al., 2021; Zhou et al., 2019; Xu et al., 2022), so the validity of the analysis of relationships between visual elements remains doubtful. Although Li (Li et al., 2022) employed a machine learning algorithm to uncover hidden information in these elements, not all of them have been validated.
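As a reference point for the pixel-counting approach, the pixel ratio defined under Table 1 can be computed from a semantic segmentation mask. The sketch assumes a model has already assigned one integer class id per pixel; the class names and ids are hypothetical. In its simplest form, a Green View Index is this same ratio computed for vegetation pixels. Note that on equirectangular 360° frames, pixels near the top and bottom cover less solid angle than pixels near the horizon, so raw ratios there are not area-weighted.

```python
import numpy as np


def pixel_ratios(label_mask, class_names):
    """Pixel ratio of each class: its pixel count divided by the image's pixel count.

    `label_mask` holds one non-negative integer class id per pixel, as produced
    by a semantic segmentation model; ids index into `class_names`.
    """
    mask = np.asarray(label_mask, dtype=np.int64)
    counts = np.bincount(mask.ravel(), minlength=len(class_names))
    return {name: float(counts[i]) / mask.size for i, name in enumerate(class_names)}


# Toy 2 x 3 mask: 0 = road, 1 = vegetation, 2 = sky (hypothetical class ids).
mask = [[0, 0, 1],
        [2, 2, 2]]
print(pixel_ratios(mask, ["road", "vegetation", "sky"]))
```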

2.2 Virtual Experiences

With the development of technology, the use of virtual audits instead of on-site visits has recently become more popular in the study of urban design quality. In Table 2 we summarize the most recent studies on the use of VR technology in the field of walkability. As far as we could find, the oldest article on this topic dates only from 2019.

2.2.1 Virtual Reality

There are two main types of VR used in the field of walkability: computer-generated VR (CGVR) and Cinematic VR (CVR) (Kim and Lee, 2022). CGVR uses 3D models in game engines such as Unity or Unreal Engine to generate a virtual environment (VE) in which people can move freely and without restrictions. This is ideal for studying walkability. However, depending on the quality of the model, CGVR can be less realistic, and detailed models are time-consuming to build.

CVR, on the other hand, uses a camera to record an omnidirectional (360°) video, which is then presented in a Head-Mounted Display (HMD). Although viewers cannot choose their route and can only follow a predefined one, the realism of the scene and the short time required to produce the content are advantages.

Both CGVR and CVR are better than a field experiment in terms of reproducibility, control of variables and efficiency. We can present the same material to each participant to ensure an identical experience regardless of weather, traffic, etc., without having to wait for consistent field conditions.


Table 1: Chosen indicators in state-of-the-art articles.

| References | Items | Calculation |
|---|---|---|
| (Zhou et al., 2019) | Building, road, sideways, vehicle, pole, tree, street greenery, sideway crowdedness, enclosure, promotion of road and pavement | Pixel ratios |
| (Ma et al., 2021) | Bicyclist, building, car, fence, pavement, pedestrian, pole, road, road mark, sign symbol, sky, tree | Pixel ratios |
| (Li et al., 2022) | Vegetation, sidewalk, terrain, road, people, bike, truck, sky, pole, fence | Pairwise, MLR |
| (Xu et al., 2022) | Sky, tree, building, car, road, wall, plant, grass, fence, earth, person, sidewalk, signboard, truck, bicycle | Pixel ratios |
| (Zhang et al., 2022) | Sky view factor, green look ratio, building ratio, motorway proportion, walkway width | Not explained |

Pixel ratio: the number of pixels of an object divided by the total number of pixels in the image.

MLR: Multiple Linear Regression model.
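The MLR entry in Table 1 regresses a perception score on several indicators at once. A minimal ordinary-least-squares sketch with NumPy, assuming one row of indicators (for example pixel ratios) and one rating per image; it does not reproduce the exact model of Li et al. (2022).

```python
import numpy as np


def fit_mlr(X, y):
    """Fit y ≈ b0 + X @ b by ordinary least squares and return (b0, b).

    X: (n_samples, n_features) matrix of indicators, e.g. pixel ratios.
    y: (n_samples,) vector of walkability ratings.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])  # intercept column first
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[0], coef[1:]
```

If all the pixel ratios of an image are entered together with the intercept, they sum to one and the design matrix becomes rank-deficient, so at least one class has to be left out for the coefficients to be identifiable.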

Table 2: Methods applied in articles.

Article

Image Video

VE HMD Eye-tracking Locomotion

Classic 360 Classic 360

(Zhou et al., 2019) *

(Nagata et al., 2020) *

(Birenboim et al., 2021) * * * *

(Nakamura, 2021) * * *

(Zhang and Zhang, 2021) * * *

(Hollander et al., 2022) * *

(Kim and Lee, 2022) * *

(Li et al., 2022) * *

(Liao et al., 2022) * * -

(Silvennoinen et al., 2022) * *

(Jeon and Woo, 2023) *

* : Applied - : Not mentioned

VE : Virtual Environment HMD : Head-Mounted Display Locomotion: Movement in VR

2.2.2 Eye Tracking

Eye tracking, as the name suggests, tracks pupil movements and then calculates the point of gaze of both eyes. It has been applied in psychology, computer science and many other fields (Carter and Luke, 2020). There are well-known manufacturers such as Tobii¹ and SR Research², as well as some open-source webcam-based eye tracking solutions (Papoutsaki et al., 2017; Dalmaijer et al., 2014). These solutions can be divided into real-life eye tracking, on-screen eye tracking and VR eye tracking.

However, few studies apply eye tracking to walkability. Birenboim (Birenboim et al., 2021) used eye tracking to calculate participants’ gaze duration on a parked car in a VE, and Zhang (Zhang and Zhang, 2021) used it to study architectural cityscape elements.

¹ Tobii© 2023, https://www.tobii.com (accessed in February 2024).

² SR Research Ltd.© 2024, https://www.sr-research.com (accessed in February 2024).
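Gaze-duration measures such as the one used by Birenboim et al. (2021) can be computed from time-stamped gaze samples once each sample is labelled with the object it falls on (for instance by looking up a segmentation mask at the gaze point). A minimal sketch, assuming such labels are already available; the `(timestamp_s, label)` sample format is hypothetical.

```python
from collections import defaultdict


def dwell_times(samples):
    """Total gaze time per label from time-ordered (timestamp_s, label) samples.

    Each sample's label is credited with the interval until the next sample;
    the last sample has no successor and therefore adds no time.
    """
    totals = defaultdict(float)
    for (t0, label), (t1, _) in zip(samples, samples[1:]):
        totals[label] += t1 - t0
    return dict(totals)


gaze = [(0.0, "car"), (0.5, "car"), (1.0, "tree"), (1.25, "car"), (2.0, None)]
print(dwell_times(gaze))  # {'car': 1.75, 'tree': 0.25}
```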

2.2.3 Virtual Locomotion

Locomotion in a virtual environment influences users’ immersive experience. According to Martinez (Martinez et al., 2022), there are five categories of locomotion techniques in VR:

• Walking-based: we use real walking (on the ground or on a treadmill), redirected walking³ (Razzaque et al., 2001), or walking-related behaviors such as arm swinging or walk-in-place⁴ (Boletsis, 2017) to simulate locomotion in the VE.

• Steering-based: we use a body part (head, hand, etc.) or a joystick / mouse to direct the locomotion.

• Selection-based: we choose our desired location in the VE, then go there by teleportation or virtual movement.

• Manipulation-based: we drag the camera or the world (or a miniature version of it) directly by hand in the VE.

• Automated: the VE controls our locomotion and movement.

³ A remapping between the real world and the virtual environment that changes people’s real walking direction without them noticing it.

⁴ The participant performs step-like movements while remaining stationary.

Among these methods, walk-in-place is moderately explored and offers relatively high immersion compared to other techniques (Boletsis and Cedergren, 2019).
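To use walk-in-place, the system has to detect steps while the participant stays in place. One common approach, sketched here as an assumption rather than as the method of any cited work, is to look for peaks in the vertical head position reported by the HMD; the 1 cm threshold and 0.3 s minimum step interval are hypothetical values to be tuned.

```python
import numpy as np


def detect_steps(head_height_m, fs, min_height_m=0.01, min_interval_s=0.3):
    """Indices of samples where a walk-in-place step is detected.

    A step is a local maximum of the head's vertical position (after removing
    the mean standing height) that rises at least `min_height_m` above the mean
    and comes at least `min_interval_s` after the previously detected step.
    """
    y = np.asarray(head_height_m, dtype=float)
    y = y - y.mean()  # remove the participant's standing height
    steps = []
    last = None
    for i in range(1, len(y) - 1):
        is_peak = y[i] > y[i - 1] and y[i] >= y[i + 1] and y[i] >= min_height_m
        if is_peak and (last is None or (i - last) / fs >= min_interval_s):
            steps.append(i)
            last = i
    return steps


# Synthetic 4 s head trace at 90 Hz: 3 cm bob at 2 steps per second.
t = np.arange(360) / 90
print(len(detect_steps(1.6 + 0.03 * np.sin(2 * np.pi * 2 * t), fs=90)))  # 8
```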

3 EXPERIMENTAL DESIGN QUESTIONS

The aim of this section is not to explain in detail the practical conditions for conducting the experiment (e.g. how to incentivize the participants), but rather to list all the methodological questions that arise in the preparation phase. Here are the twelve methodological questions (MQ) we have identified:

MQ.1 Should the experiment be carried out in static or dynamic form, i.e., will we use a static or a dynamic medium for this experiment? Static images are easier to capture with cameras or from street view image providers, whereas dynamic media such as videos can create a more realistic environment and show the influence of moving objects.

MQ.2 What motivation do we give the participants? Different travel motivations can lead to different walkability perceptions and route choices. We therefore need to specify the situation for the participants: whether they are traveling for recreation or commuting.

MQ.3 How much freedom do we give the participants during the experiment? This question refers to whether participants can move freely in VR. A greater degree of freedom can increase the level of immersion and reduce cybersickness, but leads to a less controlled experimental environment.

MQ.4 What kind of road do we present to the participants? Should it be a straight road or a winding one? The sinuosity of the road can also influence walkability (Salazar Miranda et al., 2021).

MQ.5 How should we control for uniformity in the experiment? The question is what degree of similarity we consider acceptable in order to compare two walking experiences.

MQ.6 What kind of VR should we use? As described in Section 2.2.1, both CGVR and CVR have advantages and disadvantages. This choice also depends on the answers to the other MQs and on implementation constraints.

MQ.7 How long should we expose participants to VR? We need to strike a balance between allowing enough time to assess visual walkability and avoiding excessive strain; previous studies, such as those by Nakamura (Nakamura, 2021) or Liao (Liao et al., 2022), did not justify their choice of duration.

MQ.8 Which variables should we study in our experiment? One advantage of VR is that we can control the environment and therefore the variables we want to analyze. However, we cannot evaluate all elements at the same time; we need to select some of the most important ones and their relationships in the environment, such as the commonly mentioned Green View Index (GVI) or Sky View Factor (SVF).

MQ.9 How will participants interact with VR? To enhance the sense of presence in VR, we need appropriate interactions, such as locomotion or interaction with the environment.

MQ.10 What should we measure during the experiment, and how? Since we want to study the influence on human perception, we need both objective and subjective data from our experiments. Participants’ subconscious reactions can therefore be helpful when analyzing the results.

MQ.11 Which questionnaires should we use, both for the evaluation of the VR experience and for walkability?

MQ.12 How do we deal with other sensory perceptions, such as the acoustic or tactile perception of the surroundings?

4 METHODOLOGY

4.1 Experimental Protocols

Since the word “Walkability” contains the term “Walk”, which is a dynamic action, we think that a dynamic medium is more suitable for the evaluation of walkability (answer to MQ.1). After reviewing the literature and the questions listed above, we decided to implement our experiment with omnidirectional videos (MQ.6) to create a more realistic and immersive virtual environment, and to ask participants to rate its walkability. This assessment will be framed by a scenario: participants will not be asked to imagine themselves as a passerby in a drifting situation (a leisurely walk with no time constraint or specific goal), but rather as a daily commuter, for example on the journey between home and work (MQ.2).

Since the quality of the 3D model has a great impact on the sense of presence in VR, which would require detailed modeling, an omnidirectional video seems to be a better choice (MQ.6). Under these circumstances, we will not give participants the freedom to move around in VR, but make them follow the route we filmed (MQ.3), which consists of several straight streets in different urban environments (MQ.4). We will keep the ambient sound in the videos, solely to create an immersive environment, but we will not take acoustic influence into account, since our study concentrates on visual elements (MQ.12). Given this focus on visual elements and the sense of sight, we will ensure that the sense of hearing is not prominently engaged (i.e., we will make sure that no sound signal in our videos stands out from the background noise of the city, contrary to what one might expect in such a location).

First, we define our experimental field. We have a considerable number of variables to control, which makes it difficult to find suitable locations. Seasonal and climatic variables can be controlled by filming at the same location at different times of the year, and traffic-related variables by filming at different times of day (MQ.5). The goal is to have as little variation as possible between two videos. Since it may be impossible to have only one changing element between videos, we may also need to consider combinations of analyzable factors.
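One way to make the uniformity criterion of MQ.5 operational is to compare the per-element pixel ratios of two candidate videos and list the elements that differ by more than a tolerance. A minimal sketch; the 0.05 tolerance and the element names are hypothetical.

```python
def differing_elements(ratios_a, ratios_b, tolerance=0.05):
    """Elements whose pixel ratio differs by more than `tolerance` between two videos.

    Each argument maps an element name to its (e.g. time-averaged) pixel ratio;
    an element missing from one video is treated as having a ratio of 0.
    """
    elements = sorted(set(ratios_a) | set(ratios_b))
    return [e for e in elements
            if abs(ratios_a.get(e, 0.0) - ratios_b.get(e, 0.0)) > tolerance]


video_a = {"vegetation": 0.30, "sky": 0.20, "building": 0.40}
video_b = {"vegetation": 0.10, "sky": 0.22, "building": 0.41}
print(differing_elements(video_a, video_b))  # ['vegetation']
```

Under this criterion, a pair of videos isolates a single variable when exactly one element is returned.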

Then, we will define a measurement method for each potential element and extract these elements from our videos. We can begin by calculating the pixel ratio of each element and creating a two-level evaluation table following the Stated Preference method (Huang et al., 2022), as in Table 3 (MQ.8).

Table 3: Element Evaluation Table example.

| Element | Video 1 | Video 2 | Video 3 | ... |
|---|---|---|---|---|
| Vegetation | Low | Low | High | |
| Pedestrian | High | High | Low | |
| Building | High | Low | High | |
| Sky | Low | High | Low | |
| ... | | | | |
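The two-level coding of Table 3 needs a cut-off between “Low” and “High”. One simple choice, assumed here rather than taken from Huang et al. (2022), is the median pixel ratio of each element across all videos; the input format is hypothetical.

```python
from statistics import median


def two_level_table(ratios_by_video):
    """Code each element as 'Low' or 'High' relative to its median across videos.

    `ratios_by_video` maps a video name to {element: pixel ratio}; a value equal
    to the median is coded 'Low'.
    """
    elements = sorted({e for r in ratios_by_video.values() for e in r})
    table = {}
    for e in elements:
        cut = median(r.get(e, 0.0) for r in ratios_by_video.values())
        table[e] = {video: ("High" if r.get(e, 0.0) > cut else "Low")
                    for video, r in ratios_by_video.items()}
    return table
```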

Next, we will design questionnaires for the participants. To meet ethical standards for experiments on human subjects and to compare the results with both objective and subjective data, the questionnaire will cover participants’ feelings about and satisfaction with the VR experience, as well as walkability ratings, e.g. the elements that increase or decrease walkability (MQ.11).

We will invite non-expert volunteers, balanced by gender and age, as they best match our purpose of evaluating the walkability of a common everyday path. We will first give a short demonstration of the experiment and explain how the system works and what they will see, without telling them what we are actually measuring. We will then ask them to rate walkability in real time with a joystick and, afterwards, to fill in our questionnaire. The number of participants depends on the number of variables we will measure; we expect 20 to 30 participants per variable. Because our literature review did not provide enough evidence to define the video duration (MQ.7) and the interaction method (MQ.9), we need to perform preliminary experiments to answer these questions, as described in Section 4.4.

During the experiment, we will collect visual attention (eye-tracking) data and participants’ real-time evaluations, as well as their blink rate and walking speed / step frequency (MQ.10).

After each experiment, we will analyze the results using all the data collected. First, we will output a video overlaid with each participant’s visual attention data. We will then generate a heatmap of visual attention, with the real-time evaluation slider displayed alongside. Using deep learning image segmentation, we can automatically obtain the objective data (Chen and Biljecki, 2023).
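A visual attention heatmap for one video frame (or one time window) can be sketched by summing a Gaussian around each gaze point. This assumes gaze points are already expressed as pixel coordinates in the equirectangular frame; `sigma_px` is a hypothetical smoothing width, and the Gaussian is isotropic in pixel space, which ignores the projection's stretching away from the horizon. The only 360°-specific step here is that horizontal distances wrap around the seam where the left and right edges of the frame meet.

```python
import numpy as np


def gaze_heatmap(points_uv, width, height, sigma_px=20.0):
    """Accumulate gaze points (u, v in pixels) into a heatmap normalized to [0, 1]."""
    ys, xs = np.mgrid[0:height, 0:width]
    heat = np.zeros((height, width))
    for u, v in points_uv:
        dx = np.abs(xs - u)
        dx = np.minimum(dx, width - dx)  # wrap around the 360° seam
        dy = ys - v
        heat += np.exp(-(dx ** 2 + dy ** 2) / (2.0 * sigma_px ** 2))
    peak = heat.max()
    return heat / peak if peak > 0 else heat
```

For a video, one such map per time window (for example per second) could be shown side by side with the real-time evaluation trace.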

Once the experiment is completed, we will be able to draw conclusions on the elements that influence visual walkability in VR and compare them with the results of the previous studies presented in Section 2 and Table 1.

4.2 Data Collection

4.2.1 Questionnaire Survey

First, because this is a VR experiment, we need standard VR questionnaires to measure the sense of presence (Makransky et al., 2017) and the cybersickness (Kim et al., 2018) induced by the procedure. Then, as mentioned in the experimental protocol, we need to collect data through walkability questionnaires. We will also conduct a commented city walk (Thibaud, 2013) in VR to collect the participants’ real-time reactions (MQ.11).
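Cybersickness can be scored with the VRSQ. The sketch follows our reading of Kim et al. (2018): nine symptoms rated from 0 to 3, an oculomotor sub-score (four items, sum divided by 12) and a disorientation sub-score (five items, sum divided by 15), both scaled to 0–100, and a total equal to their mean. The item names should be checked against the original questionnaire before use.

```python
OCULOMOTOR = ["general discomfort", "fatigue", "eyestrain", "difficulty focusing"]
DISORIENTATION = ["headache", "fullness of head", "blurred vision",
                  "dizzy (eyes closed)", "vertigo"]


def vrsq_scores(ratings):
    """Return (oculomotor, disorientation, total) scores on a 0-100 scale.

    `ratings` maps each item name to a rating from 0 (none) to 3 (severe).
    """
    for item in OCULOMOTOR + DISORIENTATION:
        if not 0 <= ratings[item] <= 3:
            raise ValueError(f"rating out of range for {item!r}")
    oculomotor = sum(ratings[i] for i in OCULOMOTOR) / 12 * 100
    disorientation = sum(ratings[i] for i in DISORIENTATION) / 15 * 100
    return oculomotor, disorientation, (oculomotor + disorientation) / 2
```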


4.2.2 Gaze Point

In addition to the questionnaires, the most important result of our experiment will be the data on the participants’ visual attention, which we would ultimately like to analyze through a heatmap. However, since we are using videos, the format of this heatmap may differ from usual heatmaps on photos and may require a new form of presentation.
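One reason heatmaps on 360° videos differ from those on photos is that the eye tracker reports a gaze direction rather than an image position. A minimal sketch of the mapping to equirectangular pixel coordinates, assuming +z points to the centre of the video frame, +x to the right and +y up (the axes of a Unity camera); how a given player maps the video onto the sphere, and therefore the exact orientation, must be checked.

```python
import math


def direction_to_equirect(x, y, z, width, height):
    """Map a gaze direction vector to equirectangular pixel coordinates (u, v).

    Assumed convention: +z is the video's forward direction (frame centre),
    +x is right and +y is up. Returns u in [0, width) and v in [0, height].
    """
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0:
        raise ValueError("zero-length gaze direction")
    yaw = math.atan2(x, z)                             # -pi..pi, 0 = straight ahead
    pitch = math.asin(max(-1.0, min(1.0, y / norm)))   # -pi/2..pi/2, 0 = horizon
    u = (yaw / (2 * math.pi) + 0.5) * width
    v = (0.5 - pitch / math.pi) * height
    return u % width, v


print(direction_to_equirect(0, 0, 1, 3840, 1920))  # (1920.0, 960.0)
```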

4.2.3 Blink Rate

As mentioned by Batistatou (Batistatou et al., 2022), people’s blink rate can reflect their level of mental stress, as a longer gaze fixation can indicate an interesting, meaningful or threatening element. They assume that the blink rate will be lower in a mineral urban environment (made of concrete) than in a green environment (full of vegetation). This subconscious behavior can therefore also inform our analysis of walkability.
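Blink rate could be derived from a per-sample eye-openness signal, if the eye tracker exposes one (an assumption here). The sketch counts runs of samples below a closure threshold and converts the count to blinks per minute; the 0.2 threshold and 50 ms minimum duration are hypothetical values.

```python
def blink_rate(openness, fs, closed_below=0.2, min_closed_s=0.05):
    """Blinks per minute from an eye-openness signal in [0, 1] sampled at fs Hz.

    A blink is a run of samples below `closed_below` lasting at least
    `min_closed_s` seconds; shorter dips are treated as tracking noise.
    """
    samples = list(openness)
    if not samples:
        raise ValueError("empty signal")
    blinks = 0
    run = 0
    for value in samples + [1.0]:  # trailing "open" sentinel closes a final run
        if value < closed_below:
            run += 1
        else:
            if run and run / fs >= min_closed_s:
                blinks += 1
            run = 0
    minutes = len(samples) / fs / 60.0
    return blinks / minutes
```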

4.2.4 Walking Speed

According to Franěk (Franěk, 2013), people’s walking speed is linked to environmental factors, and Silvennoinen (Silvennoinen et al., 2022) suggests that people are willing to walk at a slower pace in more walkable areas. We will therefore measure participants’ mean walking speed during the experience as a factor for analyzing walkability.
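In a walk-in-place setup the participant does not actually move, so walking speed has to be derived from step frequency. A minimal sketch, assuming step timestamps are available (for instance from a step detector) and a constant, hypothetical step length of 0.7 m:

```python
import numpy as np


def gait_metrics(step_times_s, step_length_m=0.7):
    """Cadence (steps/min) and mean walking speed (m/s) from step timestamps."""
    t = np.asarray(step_times_s, dtype=float)
    if len(t) < 2:
        raise ValueError("need at least two steps")
    duration = t[-1] - t[0]
    if duration <= 0:
        raise ValueError("step timestamps must increase")
    intervals = len(t) - 1
    cadence = intervals / duration * 60.0
    speed = intervals * step_length_m / duration
    return cadence, speed


print(gait_metrics([0.0, 0.5, 1.0, 1.5, 2.0]))  # cadence 120 steps/min, about 1.4 m/s
```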

4.2.5 Real-Time Evaluation

During the video, a slider controlled by a joystick will allow participants to evaluate walkability in real time. We hypothesize that this method can reflect the influence of moving objects, such as the speed of vehicles and the movement of crowds. To our knowledge, this method has not been used in walkability research before, which makes it one of our contributions. However, its accuracy has yet to be tested, as holding a controller in one’s hand can affect the feeling of immersion. We will therefore test this method in our experiment, both for its validity and for its influence on immersion.
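There are several ways to map a joystick to a slider. The sketch below assumes rate control: pushing the stick moves the rating at up to `rate` units per second, and releasing it leaves the rating where it is, so participants do not have to hold the stick deflected. All parameter values are hypothetical.

```python
def integrate_slider(axis_samples, dt, rate=0.5, start=0.5, dead_zone=0.1):
    """Turn joystick deflections in [-1, 1] into a slider trace in [0, 1].

    The slider moves at up to `rate` units per second in the direction the
    stick is pushed; deflections inside `dead_zone` are ignored.
    Returns a list of (time_s, value) pairs aligned with the input samples.
    """
    value = start
    trace = []
    for i, axis in enumerate(axis_samples):
        if abs(axis) > dead_zone:
            value = min(1.0, max(0.0, value + axis * rate * dt))
        trace.append((i * dt, value))
    return trace
```

In the actual setup, the timestamps would come from the video player rather than from `i * dt`, so that each rating can be aligned with the frame being watched.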

4.3 Equipment Setups

For video filming, we chose the Insta360⁵ Pro 2. It can record HDR (High Dynamic Range) videos at up to 8K (7680 × 7680) resolution at 30 fps (frames per second), 6K videos at 60 fps, or 4K videos at 120 fps, and all formats support stereoscopic output, which means that it can generate different content for each eye. This feature provides depth information, creating a more realistic omnidirectional video. It also has four embedded microphones to record sound from every direction. In short, this camera can film 3D 360° videos with high-quality sound.

⁵ Insta360© 2024, https://www.insta360.com (accessed in February 2024).
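Stereoscopic 360° videos are commonly stored with both eye views packed into one frame. Assuming a top-bottom layout with the left eye on top (an assumption to check against the camera's export settings), an 8K 7680 × 7680 frame splits into two 7680 × 3840 (width × height) equirectangular views with the usual 2:1 aspect ratio:

```python
import numpy as np


def split_top_bottom(frame):
    """Split a top-bottom stereoscopic frame (height, width, ...) into two eye views.

    Assumes the upper half holds the left-eye image; some pipelines use the
    opposite order.
    """
    frame = np.asarray(frame)
    h = frame.shape[0]
    if h % 2:
        raise ValueError("frame height must be even for a top-bottom layout")
    return frame[: h // 2], frame[h // 2:]
```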

As for the HMD, we had few options, because we need an HMD with an eye tracker. We tested the Vive⁶ Focus 3 with its eye-tracker attachment. This HMD has a resolution of 2448 × 2448 per eye, a 90 Hz refresh rate, and a 120° FOV (Field of View). The eye tracker has a 120 Hz sampling frequency and an accuracy of 0.5° to 1.1°. However, with the eye-tracker attachment the FOV is visibly reduced, and its Fresnel lenses make it heavy and thick. We also tested the Meta⁷ Quest Pro, which has a resolution of 1800 × 1920 per eye, a 90 Hz refresh rate, and a 106° FOV. Even though the latter has a lower resolution, it also has a narrower FOV, so the two HMDs have a similar PPD (Pixels Per Degree). The Meta Quest Pro, however, uses “pancake” lenses, which make it lighter, thinner, and also clearer. We therefore decided to use the Meta Quest Pro for our experiment.
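The PPD comparison can be checked with a rough calculation that divides the per-eye horizontal resolution by the quoted FOV. This is only an average: it ignores lens distortion and assumes both FOV figures are horizontal and measured the same way.

```python
def pixels_per_degree(pixels: int, fov_deg: float) -> float:
    """Average pixels per degree across the field of view (ignores lens distortion)."""
    return pixels / fov_deg


# Per-eye horizontal resolution and FOV as quoted in the text above.
vive_focus_3 = pixels_per_degree(2448, 120)
quest_pro = pixels_per_degree(1800, 106)
print(f"Vive Focus 3: {vive_focus_3:.1f} PPD")  # Vive Focus 3: 20.4 PPD
print(f"Quest Pro:    {quest_pro:.1f} PPD")     # Quest Pro:    17.0 PPD
```

Both values fall in the same range of roughly 17 to 20 pixels per degree.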

To retrieve eye-tracking data and implement interactions with our videos, we use Unity as the graphics engine to create our virtual environment.

4.4 Preliminary Experiments

We began with preliminary tests to define the details of the experiment. We now have eight recordings that can be played in Unity with a basic user interface and interactions. To determine the video duration for the formal experiments, we showed these videos to seven participants, experts and students in the field of urban design, and asked them to stop each video when they felt they had seen enough to assess its walkability. We took the average duration, 60 seconds, as the standard duration for the experiments (MQ.7).

Six of the seven participants did not report any obvious feeling of cybersickness while standing during the tests. Only one participant felt uncomfortable in the HMD while standing, and this sensation decreased when she sat down. We therefore decided to conduct the experiments standing, using the walk-in-place method (MQ.9).

⁶ HTC Corporation© 2011-2024, https://www.vive.com (accessed in February 2024).

⁷ Meta© 2024, https://www.meta.com (accessed in February 2024).


5 CONCLUSION

In this article, we present a novel method for investigating a rarely studied aspect of walkability, visual walkability, using virtual reality. Based on our two research questions, we raised twelve methodological questions that we might encounter while conducting the experiment. For ten of these twelve questions, we found answers in the state of the art, while for the remaining two (video duration, MQ.7, and interaction method, MQ.9), we had to carry out preliminary experiments to guide our experimental design. We hope that, once this work is completed, we will obtain a new and efficient workflow for evaluating visual walkability and thus facilitate future urban design towards more walkable cities.

ACKNOWLEDGEMENTS

This work was supported by the China Scholarship Council under Grant number 202208070087. We would also like to thank the French National Research Agency (ANR) for providing video filming equipment through the “PERCILUM” project (ANR-19-CE38-0010).

REFERENCES

Batistatou, A., Vandeville, F., and Delevoye-Turrell, Y. N. (2022). Virtual Reality to Evaluate the Impact of Colorful Interventions and Nature Elements on Spontaneous Walking, Gaze, and Emotion. Frontiers in Virtual Reality, 3.

Birenboim, A., Ben-Nun Bloom, P., Levit, H., and Omer, I. (2021). The Study of Walking, Walkability and Wellbeing in Immersive Virtual Environments. International Journal of Environmental Research and Public Health, 18(2):364.

Boarnet, M. G., Greenwald, M., and McMillan, T. E. (2008). Walking, Urban Design, and Health: Toward a Cost-Benefit Analysis Framework. Journal of Planning Education and Research, 27(3):341–358.

Boletsis, C. (2017). The New Era of Virtual Reality Locomotion: A Systematic Literature Review of Techniques and a Proposed Typology. Multimodal Technologies and Interaction, 1(4):24.

Boletsis, C. and Cedergren, J. E. (2019). VR Locomotion in the New Era of Virtual Reality: An Empirical Comparison of Prevalent Techniques. Advances in Human-Computer Interaction, 2019:e7420781.

Carter, B. T. and Luke, S. G. (2020). Best practices in

eye tracking research. International Journal of Psy-

chophysiology, 155:49–62.

Chen, S. and Biljecki, F. (2023). Automatic assessment of

public open spaces using street view imagery. Cities,

137:104329.

Dalmaijer, E. S., Math

ˆ

ot, S., and Van der Stigchel, S.

(2014). PyGaze: An open-source, cross-platform tool-

box for minimal-effort programming of eyetracking

experiments. Behavior Research Methods, 46(4):913–

921.

Dovey, K. and Pafka, E. (2020). What is walkability? The

urban DMA. Urban Studies, 57(1):93–108.

Dubey, A., Naik, N., Parikh, D., Raskar, R., and Hidalgo,

C. A. (2016). Deep Learning the City: Quantifying

Urban Perception at a Global Scale. In Leibe, B.,

Matas, J., Sebe, N., and Welling, M., editors, Com-

puter Vision – ECCV 2016, Lecture Notes in Com-

puter Science, pages 196–212, Cham. Springer Inter-

national Publishing.

Duncan, D. T., Aldstadt, J., Whalen, J., Melly, S. J., and

Gortmaker, S. L. (2011). Validation of Walk Score®

for estimating neighborhood walkability: An analysis

of four US metropolitan areas. International Journal

of Environmental Research and Public Health, 8.

Ewing, R. and Handy, S. (2009). Measuring the unmea-

surable: Urban design qualities related to walkability.

Journal of Urban Design, 14.

Fran

ˇ

ek, M. (2013). Environmental Factors Influencing

Pedestrian Walking Speed. Perceptual and Motor

Skills, 116(3):992–1019.

Giles-Corti, B., Bull, F., Knuiman, M., McCormack, G.,

Van Niel, K., Timperio, A., Christian, H., Foster, S.,

Divitini, M., Middleton, N., and Boruff, B. (2013).

The influence of urban design on neighbourhood

walking following residential relocation: Longitudi-

nal results from the RESIDE study. Social Science &

Medicine, 77:20–30.

Hollander, J. B., Sussman, A., Lowitt, P., Angus, N., Situ,

M., and Magnuson, A. (2022). Insights into wayfind-

ing: urban design exploration through the use of al-

gorithmic eye-tracking software. Journal of Urban

Design, 0(0):1–22. Publisher: Routledge eprint:

https://doi.org/10.1080/13574809.2022.2118697.

Huang, J., Liang, J., Yang, M., and Li, Y. (2022). Visual

Preference Analysis and Planning Responses Based

on Street View Images: A Case Study of Gulangyu

Island, China. Land, 12(1):129. Publisher: MDPI.

Hutmacher, F. (2019). Why Is There So Much More Re-

search on Vision Than on Any Other Sensory Modal-

ity? Frontiers in Psychology, 10:2246.

Jeon, J. and Woo, A. (2023). Deep learning analysis of

street panorama images to evaluate the streetscape

walkability of neighborhoods for subsidized families

in Seoul, Korea. Landscape and Urban Planning, 230.

Publisher: Elsevier B.V.

Johansson, M., Sternudd, C., and K

¨

arrholm, M. (2016).

Perceived urban design qualities and affective ex-

periences of walking. Journal of Urban De-

sign, 21(2):256–275. Publisher: Routledge eprint:

https://doi.org/10.1080/13574809.2015.1133225.

Kim, H. K., Park, J., Choi, Y., and Choe, M. (2018). Virtual reality sickness questionnaire (VRSQ): Motion sickness measurement index in a virtual reality environment. Applied Ergonomics, 69:66–73.

Kim, S. N. and Lee, H. (2022). Capturing reality: Validation of omnidirectional video-based immersive virtual reality as a streetscape quality auditing method. Landscape and Urban Planning, 218.

Li, Y., Yabuki, N., and Fukuda, T. (2022). Measuring visual walkability perception using panoramic street view images, virtual reality, and deep learning. Sustainable Cities and Society, 86.

Li, Y., Yabuki, N., Fukuda, T., and Zhang, J. (2020). A big data evaluation of urban street walkability using deep learning and environmental sensors a case study around Osaka University Suita campus.

Liao, B., van den Berg, P. E., van Wesemael, P., and Arentze, T. A. (2022). Individuals’ perception of walkability: Results of a conjoint experiment using videos of virtual environments. Cities, 125:103650.

Ma, X., Ma, C., Wu, C., Xi, Y., Yang, R., Peng, N., Zhang, C., and Ren, F. (2021). Measuring human perceptions of streetscapes to better inform urban renewal: A perspective of scene semantic parsing. Cities, 110.

Makransky, G., Lilleholt, L., and Aaby, A. (2017). Development and validation of the Multimodal Presence Scale for virtual reality environments: A confirmatory factor analysis and item response theory approach. Computers in Human Behavior, 72:276–285.

Martinez, E. S., Wu, A. S., and McMahan, R. P. (2022). Research Trends in Virtual Reality Locomotion Techniques. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pages 270–280.

Morris, J. N. and Hardman, A. E. (1997). Walking to Health. Sports Medicine, 23(5):306–332.

Nagata, S., Nakaya, T., Hanibuchi, T., Amagasa, S., Kikuchi, H., and Inoue, S. (2020). Objective scoring of streetscape walkability related to leisure walking: Statistical modeling approach with semantic segmentation of Google Street View images. Health and Place, 66.

Nakamura, K. (2021). Experimental analysis of walkability evaluation using virtual reality application. Environment and Planning B: Urban Analytics and City Science, 48(8):2481–2496.

Pak, B. and Ag-ukrikul, C. (2017). Participatory Evaluation of the Walkability of two Neighborhoods in Brussels - Human Sensors versus Space Syntax. In eCAADe 2017: ShoCK! – Sharing of Computable Knowledge!, pages 553–560, Rome, Italy.

Papoutsaki, A., Laskey, J., and Huang, J. (2017). SearchGazer: Webcam Eye Tracking for Remote Studies of Web Search. In Proceedings of the ACM SIGIR Conference on Human Information Interaction & Retrieval (CHIIR). ACM.

Raswol, L. M. (2020). Qualitative Assessment for Walkability: Duhok University Campus as a Case Study. IOP Conference Series: Materials Science and Engineering, 978(1):012001.

Razzaque, S., Kohn, Z., and Whitton, M. C. (2001). Redirected Walking. Eurographics Association.

Salazar Miranda, A., Fan, Z., Duarte, F., and Ratti, C. (2021). Desirable streets: Using deviations in pedestrian trajectories to measure the value of the built environment. Computers, Environment and Urban Systems, 86:101563.

Silvennoinen, H., Kuliga, S., Herthogs, P., Recchia, D. R., and Tunçer, B. (2022). Effects of Gehl’s urban design guidelines on walkability: A virtual reality experiment in Singaporean public housing estates. Environment and Planning B: Urban Analytics and City Science, 49(9):2409–2428.

Stokes, D. and Biggs, S. (2014). The Dominance of the Visual. In Stokes, D., Matthen, M., and Biggs, S., editors, Perception and Its Modalities. Oxford University Press.

Talen, E. and Koschinsky, J. (2013). The Walkable Neighborhood: A Literature Review. International Journal of Sustainable Land Use and Urban Planning, 1.

Thibaud, J.-P. (2013). Commented City Walks. Wi: Journal of Mobile Culture, 7(1):1–32.

Tudor-Locke, C., Craig, C. L., Brown, W. J., Clemes, S. A., De Cocker, K., Giles-Corti, B., Hatano, Y., Inoue, S., Matsudo, S. M., Mutrie, N., Oppert, J.-M., Rowe, D. A., Schmidt, M. D., Schofield, G. M., Spence, J. C., Teixeira, P. J., Tully, M. A., and Blair, S. N. (2011). How many steps/day are enough? For adults. International Journal of Behavioral Nutrition and Physical Activity, 8(1):79.

White, M. P., Alcock, I., Grellier, J., Wheeler, B. W., Hartig, T., Warber, S. L., Bone, A., Depledge, M. H., and Fleming, L. E. (2019). Spending at least 120 minutes a week in nature is associated with good health and wellbeing. Scientific Reports, 9(1):7730.

Xu, X., Qiu, W., Li, W., Liu, X., Zhang, Z., Li, X., and Luo, D. (2022). Associations Between Street-View Perceptions and Housing Prices: Subjective vs. Objective Measures Using Computer Vision and Machine Learning Techniques. Remote Sensing, 14(4).

Zhang, R. X. and Zhang, L. M. (2021). Panoramic visual perception and identification of architectural cityscape elements in a virtual-reality environment. Future Generation Computer Systems, 118:107–117.

Zhang, Y., Zou, Y., Zhu, Z., Guo, X., and Feng, X. (2022). Evaluating Pedestrian Environment Using DeepLab Models Based on Street Walkability in Small and Medium-Sized Cities: Case Study in Gaoping, China. Sustainability (Switzerland), 14(22).

Zhou, H., He, S., Cai, Y., Wang, M., and Su, S. (2019). Social inequalities in neighborhood visual walkability: Using street view imagery and deep learning technologies to facilitate healthy city planning. Sustainable Cities and Society, 50.

GISTAM 2024 - 10th International Conference on Geographical Information Systems Theory, Applications and Management
