Customizing Trust Systems: Personalized Communication to Address AI Adoption in Smart Cities

Jessica Ohnesorg, Nazek Fakhoury, Noura Eltahawi and Mouzhi Ge

Deggendorf Institute of Technology, Max-Breiherr-Street 32, Pfarrkirchen, Germany

Keywords:

Trust, Individual Preferences, Communication, Transparency, Artificial Intelligence, Human Machine Interactions, Smart Cities.

Abstract:

Tailoring trust management systems to accommodate diverse individual preferences is challenging, particularly given the evolving adoption of artificial intelligence (AI) in smart cities. Through a comprehensive review of the internal and external factors that influence trust levels, including personal values, personality traits, and cultural background, this paper highlights the crucial role of communication in human-machine interactions, with an emphasis on AI technologies. Based on this review, the paper proposes a Framework for AI Trust enHancement (FAITH), which integrates personalized communication strategies with individual preferences to enhance trust in smart city systems. To validate the proposed framework, FAITH is applied to a use case scenario that demonstrates its potential effectiveness in fostering trust, collaboration, and innovation. The results contribute not only to the understanding of trust management systems in smart cities but also offer practical insights for addressing the diverse preferences of individuals in smart cities.

1 INTRODUCTION

In today's urban landscape, smart cities integrate advanced technologies to redefine urban living. As they undergo digital transformation, these cities evolve into complex ecosystems that demand a sophisticated approach to data governance and seamless technology integration (Ge and Buhnova, 2022).

One significant challenge in this context is the diversity of individual preferences (Persia et al., 2020). Because each user has unique perspectives on life and a distinct personality, it is difficult to create a universally applicable trust management system (Bangui et al., 2023). The interpretation of trust also varies among individuals, which further complicates the development of a system that meets everyone's needs. This underscores the need for an adaptive approach to trust management that considers the diverse human factors influencing perceptions of trust. Developing a system that resonates with the individual preferences of a diverse population is therefore a notable obstacle to effective trust management in smart cities (Ohnesorg et al., 2024).

The aim of this paper is to answer the research question: How can trust management systems be tailored to accommodate diverse individual preferences in the evolving landscape of artificial intelligence adoption? To this end, we propose a framework that captures individual preferences and highlights the essential role of communication, thereby offering a practical basis for trustworthy applications in smart cities.

The rest of the paper is organized as follows. Section 2 describes the methodological approach. Section 3 provides an overview of previous research on the internal and external factors that give insight into individual preferences in AI adoption, and examines the change in communication, including its understanding and significance in contemporary contexts, particularly in human-machine interaction. Section 4 presents the proposed framework, which is evaluated and discussed in Section 5 by drawing on multiple previous investigations and highlighting the correlations among certain elements. Finally, Section 6 summarizes the research findings and outlines future directions.

2 METHODOLOGY

The methodology of this study involved a thorough investigation of research papers using databases such as Google Scholar, IEEE Xplore, and our university library. The search began with the formulation of keywords covering key themes such as trust, individual preferences, communication, human-machine interaction, smart cities, and artificial intelligence. Under strict inclusion criteria, we retained only sources that were available in English or German and offered open access to the full text.

Ohnesorg, J., Fakhoury, N., Eltahawi, N. and Ge, M.

Customizing Trust Systems: Personalized Communication to Address AI Adoption in Smart Cities.

DOI: 10.5220/0012731400003714

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 13th International Conference on Smart Cities and Green ICT Systems (SMARTGREENS 2024), pages 73-79

ISBN: 978-989-758-702-3; ISSN: 2184-4968

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

To refine the search results, advanced techniques such as Boolean operators were applied systematically, and titles, abstracts, and full articles were examined in detail. The selection covered a broad range of publication years while focusing on recent investigations, in order to offer a comprehensive perspective on the subject and a more detailed analysis of the landscape of customized trust systems in smart cities.

To enhance the reliability and validity of the proposed framework, including the proposed scenario, additional resources have been incorporated and correlated with specific elements of the framework to highlight different connections between our framework and previous investigations.

3 RELATED WORK

Since individual preferences are difficult to capture precisely, establishing a reliable environment is essential for maintaining trust among individuals in smart cities. Two key factors underpin this challenge. First, while intelligent systems demand trust in specific situations, the absence of a human element raises concerns in real-world scenarios where individuals prefer human interaction over smart systems. Second, some users may be reluctant to adopt the proposed trust mechanism. This emphasizes that a universal trust mechanism may not align with the diverse preferences and perspectives of individuals in smart cities (Ohnesorg et al., 2024).

Davis et al. (1989) captured this concept in the Technology Acceptance Model (TAM), which assesses the factors influencing the acceptance of a technology based on two primary aspects: perceived usefulness and perceived ease of use. Non-adoption can occur when individuals perceive a technology as not meeting their needs or as difficult to use.
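To make the two TAM constructs concrete, the following Python sketch averages hypothetical 7-point Likert responses for perceived usefulness and perceived ease of use and flags a non-adoption risk when either mean falls below the scale midpoint. The item format, the 7-point scale, and the midpoint threshold are assumptions for illustration only; TAM itself relates these constructs to attitudes and behavioral intention rather than prescribing a cut-off.

```python
from statistics import mean

MIDPOINT = 4.0  # neutral point of a hypothetical 1-7 Likert scale


def tam_scores(usefulness_items, ease_of_use_items):
    """Mean perceived usefulness (PU) and perceived ease of use (PEOU)."""
    return mean(usefulness_items), mean(ease_of_use_items)


def non_adoption_risk(usefulness_items, ease_of_use_items, midpoint=MIDPOINT):
    """List the TAM-style reasons a user may not adopt a system.

    The midpoint rule is an illustrative heuristic, not part of TAM itself.
    """
    pu, peou = tam_scores(usefulness_items, ease_of_use_items)
    reasons = []
    if pu < midpoint:
        reasons.append("does not meet needs")
    if peou < midpoint:
        reasons.append("difficult to use")
    return reasons


print(non_adoption_risk([6, 5, 6], [3, 2, 4]))  # -> ['difficult to use']
```

An empty list means neither construct falls below the assumed threshold.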

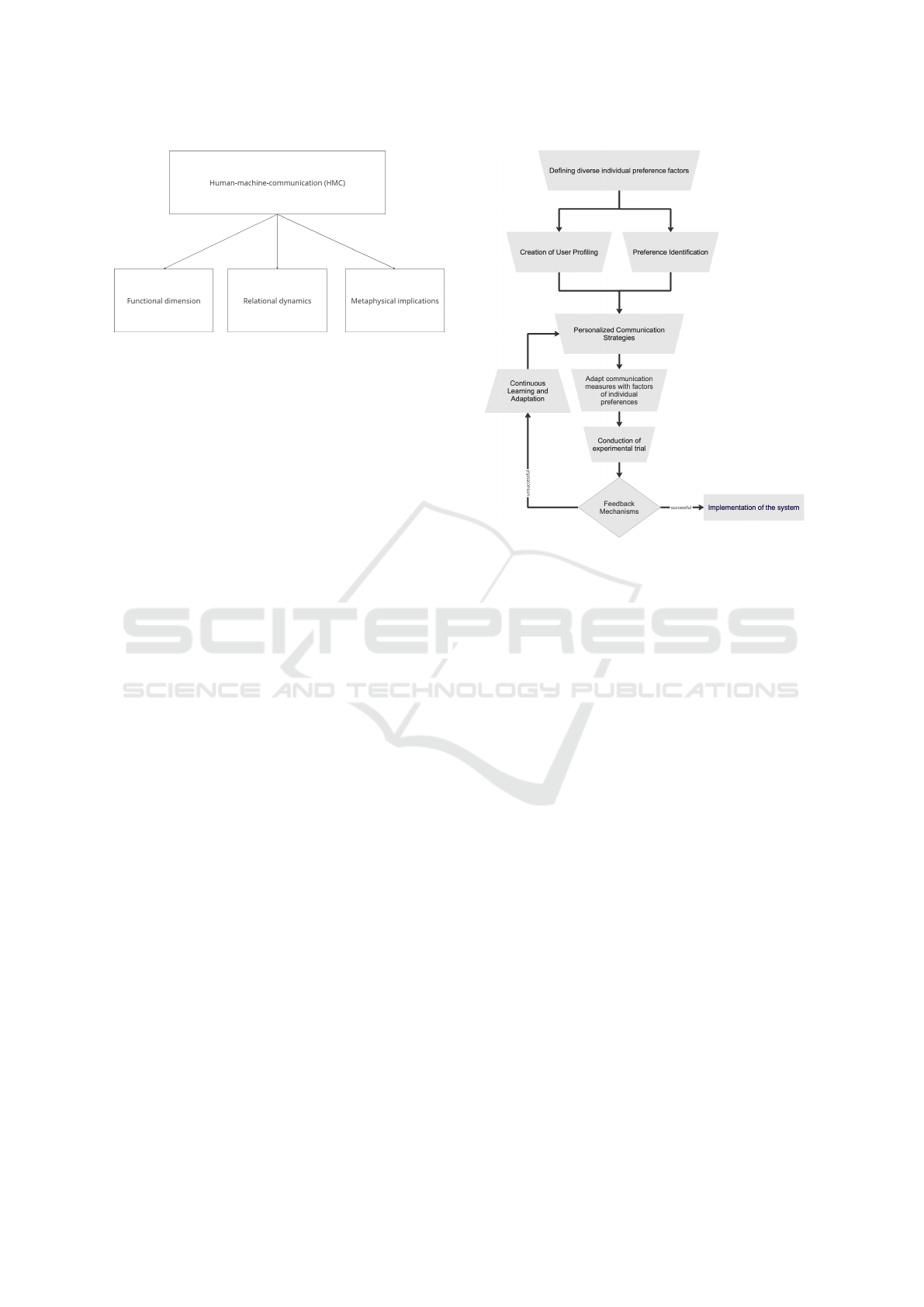

As shown in Figure 1, trust levels in AI adoption

are influenced by internal and external factors, which

also impact the future of AI adoption in smart cities.

In this section, we explore how certain factors impact

trust. Personal values are recognized as influential in

system/service adoption and trust; users are more in-

Figure 1: A set of individual preference factors.

clined to adopt systems that align with their personal

values. For example, environmental concerns and

time consciousness have been shown to influence the

adoption of e-governance services, where users who

prioritize saving paper and value the convenience of

service access without physical visits are more likely

to utilize such services (Belanche et al., 2012).

Personality is another factor that can influence trust. Recent studies suggest that individual differences in personality traits lead to variations in trust levels. The Big Five personality model is commonly used to classify primary personality factors into five categories: Extraversion, Agreeableness, Conscientiousness, Neuroticism, and Openness. Some literature indicates that agreeableness and neuroticism have the most significant impact on trust. For example, Zhou et al. (2020) showed that individuals with high levels of agreeableness or conscientiousness exhibit greater trust in automation, and Böckle et al. (2021) concluded that high levels of extraversion and agreeableness lead to higher levels of trust, whereas openness is negatively related to trust.
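Because the findings above are directional rather than quantitative, the following sketch records only the sign of each reported association and its source, and then lists which of a user's high-scoring traits the cited studies associate with higher or lower trust. The normalized [0, 1] trait scores, the 0.6 cut-off for a "high" trait, and the example profile are hypothetical, and the output is a reading aid rather than a trust prediction.

```python
# Sign of each association with trust reported in the text (+1 higher, -1 lower).
# Neuroticism is omitted: the text reports its importance but not its direction.
REPORTED_EFFECTS = {
    "agreeableness": [(+1, "Zhou et al., 2020"), (+1, "Böckle et al., 2021")],
    "conscientiousness": [(+1, "Zhou et al., 2020")],
    "extraversion": [(+1, "Böckle et al., 2021")],
    "openness": [(-1, "Böckle et al., 2021")],
}

HIGH = 0.6  # hypothetical cut-off for a "high" normalized trait score


def trait_signals(profile, high=HIGH):
    """Split a user's high traits into those reported to raise or lower trust."""
    raising, lowering = [], []
    for trait, score in sorted(profile.items()):
        if score < high or trait not in REPORTED_EFFECTS:
            continue
        signs = {sign for sign, _source in REPORTED_EFFECTS[trait]}
        if signs == {+1}:
            raising.append(trait)
        elif signs == {-1}:
            lowering.append(trait)
        # Traits with conflicting reported directions are skipped.
    return raising, lowering


user = {"openness": 0.8, "conscientiousness": 0.4, "extraversion": 0.3,
        "agreeableness": 0.7, "neuroticism": 0.9}
print(trait_signals(user))  # -> (['agreeableness'], ['openness'])
```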

Furthermore, personal experience significantly influences the formation of trust: each accomplishment by the trustee raises trust, whereas each setback reduces it. However, according to causal attribution theory, failure does not always result in a trust crisis; how the cause of the failure is interpreted plays a crucial role. The same applies to success, where the way success is perceived can significantly enhance trust levels (Falcone and Castelfranchi, 2004).
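The attribution argument can be illustrated with a simple update rule. In the sketch below, a success moves trust towards 1 and a failure towards 0, but the size of each step is scaled by how strongly the trustor attributes the outcome to the trustee (1.0) rather than to external circumstances (0.0). The [0, 1] trust scale, the learning rate, and the attribution weight are illustrative assumptions; this is not the formal model of Falcone and Castelfranchi (2004).

```python
def update_trust(trust, success, attribution, rate=0.2):
    """Return updated trust in [0, 1] after one observed outcome.

    attribution is the share of the outcome ascribed to the trustee itself
    (1.0) rather than to external causes (0.0), so a failure blamed on the
    environment barely lowers trust and a lucky success barely raises it.
    """
    if not 0.0 <= attribution <= 1.0:
        raise ValueError("attribution must be in [0, 1]")
    target = 1.0 if success else 0.0
    step = rate * attribution * (target - trust)
    # Clamp in case a caller passes a rate above 1.
    return min(1.0, max(0.0, trust + step))


print(update_trust(0.5, success=False, attribution=1.0))  # about 0.4
print(update_trust(0.5, success=False, attribution=0.1))  # about 0.49
```

The same failure costs ten times less trust when it is attributed mostly to external causes, which mirrors the point that interpretation, not the outcome alone, drives the change.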

SMARTGREENS 2024 - 13th International Conference on Smart Cities and Green ICT Systems


Upbringing and family environment have also been suggested to affect trust: individuals from less urbanized areas might have a protective mechanism that allows trust to increase rapidly following positive social interactions (Lemmers-Jansen et al., 2020). Other studies suggest that people who grow up in families where older generations dominate younger ones show lower levels of trust, whereas horizontally extended households are more favorable for trust (Kravtsova et al., 2018).

Physiological factors are another vital influence on trust. Some evidence suggests that a natural tendency to trust may have genetic origins, to the extent that shared genes have a greater influence than growing up in the same environment. From this perspective, trust takes two forms: person-based trust, which considers the trustee as an individual, and depersonalized trust, which considers the trustee as a member of a group. Studies show that depersonalized trust has a strong effect on trust levels: a trustor is more likely to trust a person perceived as socially familiar, that is, a member of the trustor's own social group, because depersonalized trust reflects an agreement among in-group members (Evans and Krueger, 2009). A further internal factor is education and knowledge. Evidence suggests that, in countries with strong governments, individuals with higher levels of education are more trusting, whereas in less efficacious states the relationship between education level and trust is negative (Güemes and Herreros, 2019).

In terms of external factors, cultural background significantly influences trust. Studies based on Hofstede's six cultural dimensions, namely power distance (PDI), individualism (IDV), masculinity (MAS), uncertainty avoidance (UAI), long-term orientation (LTO), and indulgence (IND), report that participants from cultures high in IDV and LTO are more likely to trust others, while those from cultures high in PDI and UAI are less likely to trust (Thanetsunthorn and Wuthisatian, 2019). Demographics represent another external factor: research shows that females trust automation less than males do; moreover, the greater the workload required of the user, the lower the tendency to trust the autonomous agent, and users tend to place more trust in more reliable agents (Hillesheim et al., 2017).
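The cultural finding can be encoded in the same directional way. The sketch below tags a dimension profile with the direction in which each Hofstede dimension is reported to push trust, namely high IDV and LTO towards more trust and high PDI and UAI towards less (Thanetsunthorn and Wuthisatian, 2019), and sums the signals. Treating index values above 50 as "high", summing the signs, and the example scores are all assumptions; the example does not reproduce any country's published values.

```python
# Reported direction for "high" scores on each Hofstede dimension.
# MAS and IND are left out because the text reports no direction for them.
DIRECTION = {"IDV": +1, "LTO": +1, "PDI": -1, "UAI": -1}


def cultural_trust_signal(scores, high=50):
    """Return (net signal, dimensions that contributed) for a dimension profile.

    scores maps dimension codes to index values (roughly 0-100); the cut-off
    of 50 for a "high" score is an illustrative assumption.
    """
    contributing = sorted(d for d, s in scores.items() if d in DIRECTION and s > high)
    net = sum(DIRECTION[d] for d in contributing)
    return net, contributing


# Hypothetical profile, not the published indices of any country.
example = {"PDI": 70, "IDV": 30, "MAS": 55, "UAI": 80, "LTO": 60, "IND": 40}
print(cultural_trust_signal(example))  # -> (-1, ['LTO', 'PDI', 'UAI'])
```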

Media influence is an additional external factor; research indicates that information on both social and traditional media has a significant effect on trust (Lee et al., 2021). Geographical location is another factor: studies show that higher absolute latitude is associated with lower exposure to disease, lower linguistic and ethnic diversity, and lower income inequality, which together lead to greater trust (Le, 2013).

One critical external factor is economic. Related research shows that the influence of economic wealth on institutional trust exists only among rural, less educated, and economically disadvantaged individuals, a group that tends to have more trust in institutions. By contrast, among people in environments marked by significant diversity, advanced education, and wealth, personal wealth does not automatically lead to increased trust in government and institutional systems (Sechi et al., 2023).

Another important aspect, and a crucial challenge for interaction between humans and machines, is the trust management indicator "communication". AI technologies have evolved from mediators through which people communicate into interactive communicators that engage in simultaneous conversations, which presents communication researchers with both theoretical challenges and opportunities. Previous research has highlighted that the primary challenge lies in how communicative AI diverges, both in function and in human interpretation, from the roles assigned to technology in communication theory, which have been grounded in anthropocentric definitions (Guzman and Lewis, 2020).

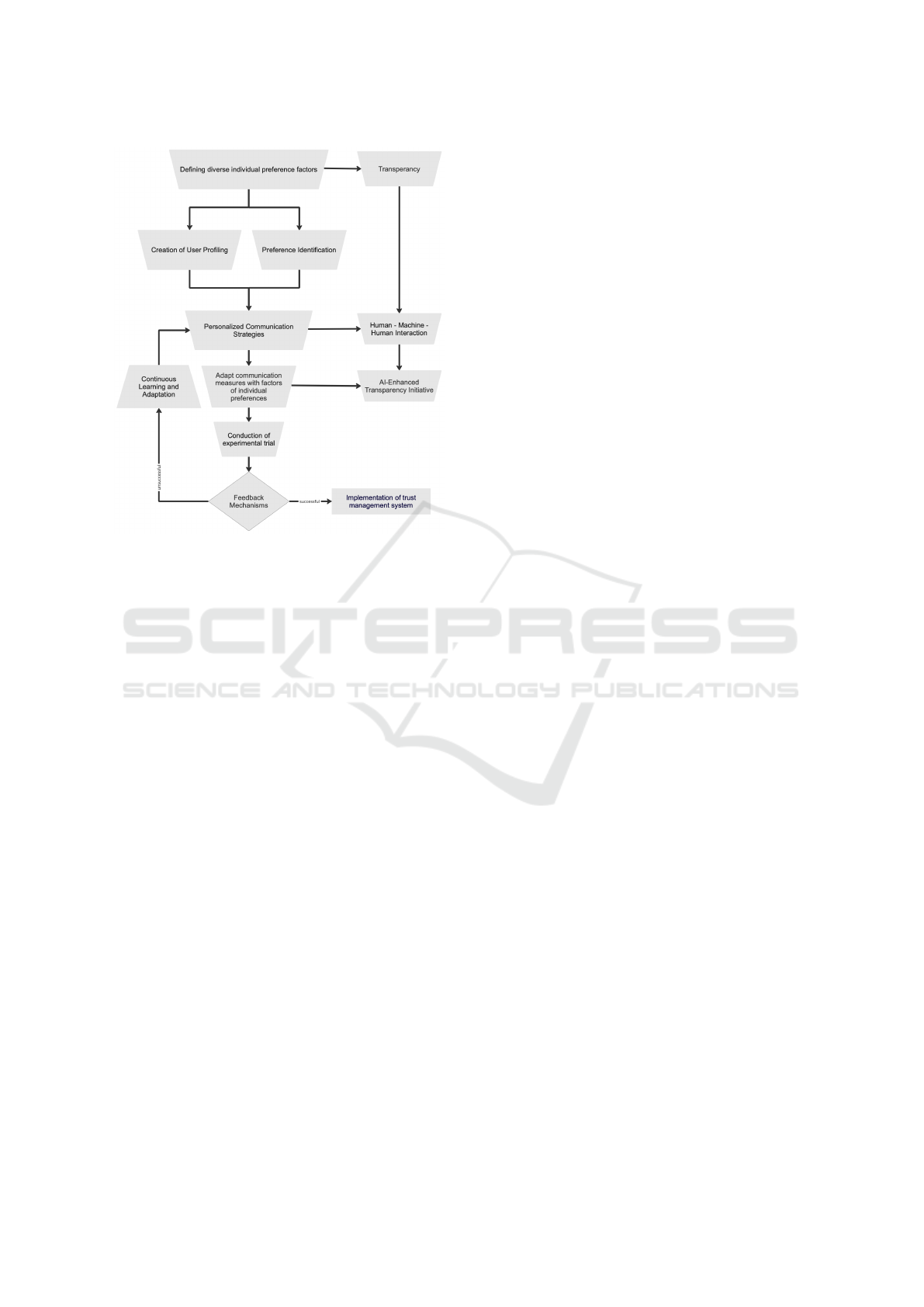

Scholars in the field of human, machine, and com-

munication are actively addressing this challenge by

exploring how technology can be re-conceptualized

as a genuine communicator. This novel perspective

opens up avenues to pose innovative questions about

three crucial aspects of communicative AI technolo-

gies: (1) understanding the functional dimensions

that shape individuals’ perceptions of these devices

and applications as communicators, (2) examining the

relational dynamics governing people’s connections

with these technologies and, subsequently, their rela-

tionships with themselves and others, and (3) delving

into the metaphysical implications arising from the

blurred ontological boundaries that challenge tradi-

tional definitions of what constitutes human, machine,

and communication, which is presented in figure 2

(Guzman and Lewis, 2020).

According to previous research, society 5.0 is an

evolving trend towards an information society. The

transition to digital formats for producing, distribut-

ing, and consuming goods and services enhances

value within the digital economy. Knowledge pro-

cessing, relying on data aggregation from diverse

sources, becomes essential for service delivery. Thus,

it is crucial to have access to metadata, encompass-

ing data quality, source identification, licensing, and

usage rights, concerning private information, for ef-

Customizing Trust Systems: Personalized Communication to Address AI Adoption in Smart Cities

75

Figure 2: Constitution of human machine communication.

fective operations. Therefore, establishing Society

5.0 demands a heightened dedication to transparency

and the seamless integration of information resources.

This commitment may extend beyond standardization

groups to encompass active participation from indi-

viduals and businesses involved in creating and im-

plementing solutions (Frost and Bauer, 2018).

4 FAITH FRAMEWORK

Having examined both the external and internal individual preference factors, we now turn to leveraging them effectively. The framework outlined in this section integrates communication strategies with individual preferences, resulting in clear communication measures. These measures enable individuals to comprehend how systems function, thereby bolstering their trust in those systems.

As illustrated in Figure 3, FAITH operates as a cyclical process driven by AI. It begins by identifying personal traits and proceeds through several steps: selecting a suitable communication strategy based on individual factors, implementing a feedback and evaluation mechanism, and ensuring continuous learning and evolution.
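The cycle described above can be sketched as a loop whose steps are passed in as functions. All function names and signatures here are hypothetical; the sketch only fixes the order of the steps in the text (profile, strategy selection, trial, evaluation, then implementation or feedback) and makes explicit that learning happens after every trial, successful or not.

```python
from typing import Callable, Dict, List


def faith_cycle(
    users: List[dict],
    build_profile: Callable[[dict], dict],
    select_strategy: Callable[[dict], str],
    run_trial: Callable[[dict, str], dict],
    meets_standards: Callable[[dict], bool],
    learn: Callable[[dict, str, dict, bool], None],
) -> Dict[str, str]:
    """Run one pass of a FAITH-style cycle and return the adopted strategies."""
    adopted: Dict[str, str] = {}
    for user in users:
        profile = build_profile(user)               # identify traits and preferences
        strategy = select_strategy(profile)         # pick a communication strategy
        outcome = run_trial(profile, strategy)      # experimental trial
        success = meets_standards(outcome)          # evaluate against standards
        if success:
            adopted[user["id"]] = strategy          # implement the strategy
        learn(profile, strategy, outcome, success)  # feedback and learning in both cases
    return adopted


# Toy usage with made-up users, strategies, and scores.
log = []
result = faith_cycle(
    users=[{"id": "u1", "lang": "en"}, {"id": "u2", "lang": "de"}],
    build_profile=lambda u: {"id": u["id"], "lang": u["lang"]},
    select_strategy=lambda p: "visual" if p["lang"] == "de" else "text",
    run_trial=lambda p, s: {"comprehension": 0.9 if s == "text" else 0.5},
    meets_standards=lambda o: o["comprehension"] >= 0.7,
    learn=lambda p, s, o, ok: log.append((p["id"], s, ok)),
)
print(result)  # -> {'u1': 'text'}
```

Passing the steps in as functions keeps the cycle itself independent of how profiling, selection, or evaluation are eventually implemented.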

First, the framework defines diverse individual preference factors, recognizing the importance of understanding users' varied backgrounds and personal traits. This involves creating a comprehensive profile for each individual and identifying their preferences. These components are then organized within a machine learning system, which analyzes the profiles to select a suitable communication strategy for each individual. The goal is to ensure that information is accurately conveyed and that systems are well understood, which ultimately enhances trust in the system.
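The text leaves the machine learning method open. As one plausible choice, not necessarily the authors' method, the sketch below represents each profile as a small numeric feature vector and selects a strategy by majority vote among the k most similar past users for whom a strategy worked (k-nearest neighbours). The feature names, strategy labels, and history records are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Profile:
    # Hypothetical features, each normalized to [0, 1].
    openness: float
    uncertainty_avoidance: float
    tech_experience: float

    def vector(self) -> Tuple[float, float, float]:
        return (self.openness, self.uncertainty_avoidance, self.tech_experience)


# Made-up past users and the communication strategy that worked for them.
HISTORY: List[Tuple[Profile, str]] = [
    (Profile(0.8, 0.2, 0.9), "concise summary"),
    (Profile(0.7, 0.3, 0.8), "concise summary"),
    (Profile(0.3, 0.9, 0.2), "step-by-step explanation"),
    (Profile(0.4, 0.8, 0.3), "step-by-step explanation"),
    (Profile(0.5, 0.6, 0.4), "visual walkthrough"),
]


def select_strategy(profile: Profile, k: int = 3) -> str:
    """Majority vote among the k nearest past profiles (Euclidean distance)."""
    ranked = sorted(HISTORY, key=lambda rec: math.dist(profile.vector(), rec[0].vector()))
    votes = Counter(strategy for _, strategy in ranked[:k])
    return votes.most_common(1)[0][0]


print(select_strategy(Profile(0.35, 0.85, 0.25)))  # -> step-by-step explanation
```

k-nearest neighbours needs no separate training step, and each choice can be explained by pointing to similar past users, which suits the framework's emphasis on transparency.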

The communication strategies are then personalized based on the individual profile. This step ensures that the communication approach aligns with each user's unique characteristics and preferences, which enhances the effectiveness of the communication process. By tailoring the communication strategies, the framework aims to optimize the reception and comprehension of information, thereby bolstering trust in the system.

Figure 3: Framework for AI Trust enHancement (FAITH).

Next, the framework conducts experimental trials. Standards are established for these experiments, and the results are fed into an AI-driven machine learning system, which analyzes the outcomes to determine whether the trials succeeded. If the results are deemed successful, the system is implemented accordingly. If they are not, the system redirects its approach and uses the feedback mechanism to learn from the outcomes.
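The trial step can be illustrated with explicit success standards. In the sketch below, a plain threshold check stands in for the AI-driven analysis described in the text: a strategy is implemented only if every metric meets its standard, and otherwise the gaps are returned as feedback for the learning step. The metric names and threshold values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict

# Hypothetical minimum values agreed before the trial.
STANDARDS: Dict[str, float] = {
    "comprehension": 0.7,
    "reported_trust": 0.6,
    "completion_rate": 0.8,
}


@dataclass
class TrialResult:
    strategy: str
    metrics: Dict[str, float]


@dataclass
class Decision:
    implement: bool
    shortfalls: Dict[str, float] = field(default_factory=dict)  # metric -> gap to standard


def evaluate_trial(result: TrialResult, standards: Dict[str, float] = STANDARDS) -> Decision:
    """Implement only if every standard is met; otherwise report the gaps as feedback.

    A metric missing from the trial counts as 0.0 and therefore as a shortfall.
    """
    gaps = {
        name: round(minimum - result.metrics.get(name, 0.0), 3)
        for name, minimum in standards.items()
        if result.metrics.get(name, 0.0) < minimum
    }
    return Decision(implement=not gaps, shortfalls=gaps)


trial = TrialResult("visual walkthrough",
                    {"comprehension": 0.82, "reported_trust": 0.55, "completion_rate": 0.9})
print(evaluate_trial(trial))
# -> Decision(implement=False, shortfalls={'reported_trust': 0.05})
```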

Continuous learning and adaptation are integral components of the framework. Regardless of the trial outcome, the system continually evolves and adapts based on the feedback received. This iterative process allows for ongoing refinement and improvement and ensures that the communication strategies remain effective and responsive to users' needs.
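Continuous learning can be realized in many ways. One simple, swapped-in technique is an epsilon-greedy multi-armed bandit, which keeps a running mean of feedback for each strategy within a profile segment, usually exploits the best-scoring strategy, and occasionally explores others so that the estimates keep adapting. Segment names, strategy labels, the feedback scale, and the epsilon value are assumptions.

```python
import random
from collections import defaultdict


class StrategyLearner:
    """Epsilon-greedy running estimates of feedback per (segment, strategy)."""

    def __init__(self, strategies, epsilon=0.1, seed=None):
        self.strategies = list(strategies)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)    # (segment, strategy) -> number of trials
        self.values = defaultdict(float)  # (segment, strategy) -> mean feedback

    def choose(self, segment):
        """Explore with probability epsilon, otherwise exploit the best estimate."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)
        return max(self.strategies, key=lambda s: self.values[(segment, s)])

    def update(self, segment, strategy, feedback):
        """Fold one feedback score into the running mean for this pair."""
        key = (segment, strategy)
        self.counts[key] += 1
        self.values[key] += (feedback - self.values[key]) / self.counts[key]


learner = StrategyLearner(["concise summary", "step-by-step explanation"], epsilon=0.0)
learner.update("high-UAI", "concise summary", 0.4)
learner.update("high-UAI", "step-by-step explanation", 0.9)
print(learner.choose("high-UAI"))  # -> step-by-step explanation
```

Setting epsilon to 0 in the usage example makes the choice deterministic; a small positive value would keep some exploration so that the system can still discover better strategies as preferences change.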

The framework operates on four principles: understanding individual preferences, tailoring communication strategies accordingly, conducting experimental trials, and learning and adapting iteratively based on feedback. By prioritizing personalized communication and continuous improvement, the framework can enhance trust management systems in smart cities.

5 EVALUATION AND DISCUSSION

After investigating the determinants influencing trust levels in AI adoption, we found that certain factors are interrelated. Among these interconnected determinants, education and knowledge may affect trust (Labonne et al., 2007). Furthermore, economic factors and geographical location were found to be interrelated: locations at higher latitudes tend to have lower income inequality, which in turn leads to more trust (Le, 2013).

In addition, personality traits and psychological factors were found to be interlinked, in that personality traits can be considered part of the psychology of the brain, as evidence suggests that personality traits have significant genetic components (Evans and Krueger, 2009). Another connection is the relation between demographics and education and knowledge: some literature treats education level as part of demographics and indicates that college graduates have more trust in automated agents than less educated individuals (Hillesheim et al., 2017).

To further validate the FAITH framework, we simulated a case scenario whose components are aligned with the elements of the framework. The scenario illustrates an international workplace setting and highlights a particular individual preference connected with a communication strategy deemed potentially successful.

Consider a leading tech company on the verge of introducing an innovative AI-driven system designed to streamline workflow processes. Committed to inclusiveness and recognizing the diverse needs of its workforce, the company understands the significance of catering to individual preferences and communication styles.

The company acknowledges that its workforce spans diverse cultural backgrounds, each with its own values, beliefs, and communication preferences. Recognizing this diversity is crucial for effective communication and collaboration within the company. The company is aware that a one-size-fits-all approach to communication might not yield the desired results and could lead to misunderstandings or disengagement among employees. It is therefore dedicated to defining and adapting communication measures that resonate with the diverse preferences of its workforce.

At the core of the company's strategy lies the Human-Machine-Human (HMH) interaction concept, which emphasizes collaborative engagement between humans and machines. One of the primary components of this strategy is the use of machines to enhance transparency. By providing clear explanations of processes and outcomes, the company aims to strengthen trust and understanding among its workforce. This approach not only improves communication but also fosters a culture of openness and accountability within the organization (Lansing et al., 2023; Küçüktabak et al., 2021).

As the company develops and implements its AI-driven system, it remains committed to evaluating the system's effectiveness through experimental trials. Through thorough assessments, the company can gauge the impact of its communication measures on employee satisfaction, productivity, and overall organizational performance. By understanding diverse individual preferences, implementing effective communication measures, and leveraging HMH interaction, the company aims to cultivate a workplace culture characterized by trust, collaboration, and innovation.

In alignment with this vision, the proposed framework illustrates how these principles can be applied within the company's operations. It outlines the steps for defining diverse individual preference factors, creating user profiles, identifying preferences, developing personalized communication strategies, and adapting measures based on individual preferences. It also includes experimental trials with feedback mechanisms and embraces continuous learning and adaptation.

To evaluate our framework, we examined research on how reliably AI and machine learning can be used to determine a suitable communication strategy. Pagliaro and Sangiorgi (2023) suggest that AI has become essential in activities such as real-time monitoring and data analysis. In addition, machine learning and AI have revolutionized data analysis, enabling the identification of hidden trends and new phenomena. These findings indicate that the initial steps of the FAITH framework are applicable.

Further findings indicate that AI enables computers and machines to perform functions such as problem-solving, decision-making, and comprehension of human communication profiles (Sarker, 2022). This supports the validity of the FAITH framework in deciding which communication strategy best fits each individual.


Figure 4: Validation of the FAITH framework.

Another previous study introduced an AI-driven tool designed to offer real-time guidance during data collection in experiments, with the aim of making experiments more efficient and accelerating research in materials science (Sundermier, 2023). This approach aligns with our idea and suggests potential integration with the proposed framework. By incorporating such a tool, the experimental process could yield more reliable results and increase the likelihood of a more effective implementation of the system.

As advanced technologies become integrated into smart cities, they reshape the way people live, work, and interact socially (Walletzký et al., 2022). This digital transformation of urban landscapes requires complex administrative structures and seamless technology integration (Blanco et al., 2023). Amid this transformation, however, one significant challenge is the diversity of individual preferences.

Each user brings unique perspectives, values, and personality traits, which makes it challenging to develop a universally applicable trust management system. Individuals' varied interpretations of trust further complicate this task and highlight the need for an adaptive approach to trust management that takes different human factors into account.

Addressing this challenge requires a detailed understanding of the internal and external factors shaping individual preferences. Personal values, personality traits, personal experiences, and cultural background all play crucial roles in shaping trust levels. Moreover, external factors such as demographics and economic status further influence trust dynamics within smart city environments.

To address this challenge, the proposed Framework for AI Trust enHancement (FAITH) offers a practical solution. FAITH integrates personalized communication strategies with individual preferences to enhance trust in smart city systems. By aligning communication measures with diverse preferences, FAITH seeks to foster trust, collaboration, and innovation within smart city environments.

6 CONCLUSIONS

In this paper, we have proposed the FAITH framework, which provides a pathway towards building trust, collaboration, and innovation within the evolving landscape of artificial intelligence adoption in smart cities. By integrating personalized communication strategies with individual preferences, the framework addresses the question of how trust management systems can be tailored to accommodate diverse individual preferences in the evolving landscape of artificial intelligence adoption. The framework offers practical insights for dealing with varied user preferences in the digital age and can help create more inclusive and resilient smart city environments.

To validate the framework, we applied FAITH to a use case scenario that shows how personalized communication measures can effectively address the diverse preferences of employees. In the scenario, the company's commitment to individual preferences and to effective communication strategies highlights the importance of adapting trust management approaches to suit diverse populations.

Further research is planned to comprehensively resolve the challenges associated with trust management in smart cities and to explore innovative strategies and solutions that ensure the trustworthy use of artificial intelligence technologies in urban environments. Specifically, research could focus on developing and implementing standardized trustworthy profiles to enhance trust assessment in both emerging and established business relationships.

REFERENCES

Bangui, H., Buhnova, B., and Ge, M. (2023). Social internet of things: Ethical AI principles in trust management. In The 14th International Conference on Ambient Systems, Networks and Technologies (ANT 2023), March 15-17, 2023, Leuven, Belgium, volume 220 of Procedia Computer Science, pages 553–560. Elsevier.

Belanche, D., Casaló, L. V., and Flavián, C. (2012). Integrating trust and personal values into the technology acceptance model: The case of e-government services adoption. Cuadernos de Economía y Dirección de la Empresa, 15(4):192–204.

Blanco, J. M., Ge, M., del Alamo, J. M., Dueñas, J. C., and Cuadrado, F. (2023). A formal model for reliable digital transformation of water distribution networks. In Knowledge-Based and Intelligent Information & Engineering Systems: Proceedings of the 27th International Conference KES-2023, Athens, Greece, 6-8 September 2023, volume 225 of Procedia Computer Science, pages 2076–2085. Elsevier.

Böckle, M., Yeboah-Antwi, K., and Kouris, I. (2021). Can you trust the black box? The effect of personality traits on trust in AI-enabled user interfaces. In International Conference on Human-Computer Interaction, pages 3–20. Springer.

Davis, F. D. et al. (1989). Technology acceptance model: TAM. Al-Suqri, MN, Al-Aufi, AS: Information Seeking Behavior and Technology Adoption, pages 205–219.

Evans, A. M. and Krueger, J. I. (2009). The psychology (and economics) of trust. Social and Personality Psychology Compass, 3(6):1003–1017.

Falcone, R. and Castelfranchi, C. (2004). Trust dynamics: How trust is influenced by direct experiences and by trust itself. In Proceedings of the Third International Joint Conference on Autonomous Agents and Multiagent Systems, 2004, pages 740–747. IEEE.

Frost, L. and Bauer, M. (2018). European trends in standardization for smart cities and society 5.0. NEC Technical Journal, 13(1):58–63.

Ge, M. and Buhnova, B. (2022). DISDA: digital service design architecture for smart city ecosystems. In Proceedings of the 12th International Conference on Cloud Computing and Services Science, CLOSER 2022, Online Streaming, April 27-29, 2022, pages 207–214. SCITEPRESS.

Guzman, A. L. and Lewis, S. C. (2020). Artificial intelligence and communication: A human–machine communication research agenda. New Media & Society, 22(1):70–86.

Güemes, C. and Herreros, F. (2019). Education and trust: A tale of three continents. International Political Science Review, 40(5):676–693.

Hillesheim, A. J., Rusnock, C. F., Bindewald, J. M., and Miller, M. E. (2017). Relationships between user demographics and user trust in an autonomous agent. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, volume 61, pages 314–318. SAGE Publications Sage CA: Los Angeles, CA.

Kravtsova, M., Oshchepkov, A. Y., and Welzel, C. (2018). The shadow of the family: Historical roots of social capital in Europe. (WP BRP 82/SOC/2018).

Küçüktabak, E. B., Kim, S. J., Wen, Y., Lynch, K., and Pons, J. L. (2021). Human-machine-human interaction in motor control and rehabilitation: a review. Journal of NeuroEngineering and Rehabilitation, 18:1–18.

Labonne, J., Biller, D., and Chase, R. (2007). Inequality and Relative Wealth: Do They Matter for Trust? Evidence from Poor Communities in the Philippines. Social Development Department, Community Driven Development.

Lansing, A. E., Romero, N. J., Siantz, E., Silva, V., Center, K., Casteel, D., and Gilmer, T. (2023). Building trust: Leadership reflections on community empowerment and engagement in a large urban initiative. BMC Public Health, 23(1):1252.

Le, S. H. (2013). Societal trust and geography. Cross-Cultural Research, 47(4):388–414.

Lee, J., Baig, F., and Li, X. (2021). Media influence, trust, and the public adoption of automated vehicles. IEEE Intelligent Transportation Systems Magazine, 14(6):174–187.

Lemmers-Jansen, I. L., Fett, A.-K. J., van Os, J., Veltman, D. J., and Krabbendam, L. (2020). Trust and the city: Linking urban upbringing to neural mechanisms of trust in psychosis. Australian & New Zealand Journal of Psychiatry, 54(2):138–149.

Ohnesorg, J., Fakhoury, N., Eltahawi, N., and Ge, M. (2024). A review of AI-based trust management in smart cities. In ITM Web of Conferences, volume 62, page 01003. EDP Sciences.

Pagliaro, A. and Sangiorgi, P. (2023). AI in experiments: Present status and future prospects. Applied Sciences, 13(18):10415.

Persia, F., Pilato, G., Ge, M., Bolzoni, P., D'Auria, D., and Helmer, S. (2020). Improving orienteering-based tourist trip planning with social sensing. Future Generation Computer Systems, 110:931–945.

Sarker, I. (2022). AI-based modeling: Techniques, applications and research issues towards automation, intelligent and smart systems. SN Computer Science, 3.

Sechi, G., Borri, D., De Lucia, C., and Skilters, J. (2023). How are personal wealth and trust correlated? A social capital–based cross-sectional study from Latvia. International Social Science Journal.

Sundermier, A. (2023). New AI-driven tool streamlines experiments. SLAC.

Thanetsunthorn, N. and Wuthisatian, R. (2019). Understanding trust across cultures: an empirical investigation. Review of International Business and Strategy, 29(4):286–314.

Walletzký, L., Bayarsaikhan, O., Ge, M., and Schwarzová, Z. (2022). Evaluation of smart city models: A conceptual and structural view. In Proceedings of the 11th International Conference on Smart Cities and Green ICT Systems, SMARTGREENS 2022, Online Streaming, April 27-29, 2022, pages 56–65. SCITEPRESS.

Zhou, J., Luo, S., and Chen, F. (2020). Effects of personality traits on user trust in human–machine collaborations. Journal on Multimodal User Interfaces, 14:387–400.