Towards Scenario Retrieval of Real Driving Data with Large

Vision-Language Models

Tin Stribor Sohn

1∗

, Maximilian Dillitzer

1∗

, Lukas Ewecker

1

, Tim Br

¨

uhl

1

, Robin Schwager

1

,

Lena Dalke

1

, Philip Elspas

1

, Frank Oechsle

1

and Eric Sax

2

1

Dr. Ing. h.c. F. Porsche AG, Weissach, Germany

2

Karlsruhe Institut f

¨

ur Technologie (KIT), Karlsruhe, Germany

tin stribor.sohn, maximilian.dillitzer1, lukas.ewecker, tim.bruehl, robin.schwager1, lena.dalke, philip.elspas,

Keywords:

Large Vision-Language Models, Scenario Retrieval, Real Driving Data.

Abstract:

With the adoption of autonomous driving systems and scenario-based testing, there is a growing need for

efficient methods to understand and retrieve driving scenarios from vast amounts of real-world driving data. As

manual scenario selection is labor-intensive and limited in scalability, this study explores the use of three Large

Vision-Language Models, CLIP, BLIP-2, and BakLLaVA, for scenario retrieval. The ability of the models to

retrieve relevant scenarios based on natural language queries is evaluated using a diverse benchmark dataset

of real-world driving scenarios and a precision metric. Factors such as scene complexity, weather conditions,

and different traffic situations are incorporated into the method through the 6-Layer Model to measure the

effectiveness of the models across different driving contexts. This study contributes to the understanding of

the capabilities and limitations of Large Vision-Language Models in the context of driving scenario retrieval

and provides implications for future research directions.

1 INTRODUCTION

The automotive industry is undergoing a transforma-

tion driven by technological advances, particularly in

the area of autonomous driving systems. As the com-

plexity of vehicle functions rises, the need for man-

ifold sensors and robust validation and testing meth-

ods becomes paramount. Traditional miles-driven ap-

proaches struggle to keep pace with the rapid evolu-

tion of autonomous driving technology and the com-

plexity of real-world scenarios with rising automation

levels. Scenario-based testing (SBT) has emerged as

a promising solution to address the challenges associ-

ated with the validation of autonomous driving sys-

tems. By defining a comprehensive set of scenar-

ios that encompass different driving conditions, en-

vironments, and edge cases, SBT provides a system-

atic approach to evaluate the performance and safety

of autonomous vehicles. However, manually gener-

ating and selecting relevant scenarios can be time-

consuming, resource-intensive, and limited in scala-

bility. In recent years, the emergence of Large Vision-

Language Models (LVLMs) has revolutionised the

∗

Equal contribution.

field of artificial intelligence (AI), enabling machines

to understand and generate content across different

modalities, including text and images. LVLMs, have

the ability to understand complex scenes, objects, and

contexts from both textual descriptions and visual in-

put. Harnessing the power of LVLMs for scenario

retrieval (SR) in the automotive industry has the po-

tential to accelerate the validation process and in-

crease test efficiency. By using LVLMs, automotive

engineers and researchers can significantly reduce the

time and effort required for scenario selection and val-

idation. Focusing on the six layers of the 6-Layer

Model (6LM), three popular publicly available pre-

trained LVLMs, Contrastive Language-Image Pre-

training (CLIP), Bootstrapping Language-Image Pre-

training 2 (BLIP-2), and BakLLaVA, are analysed.

Quantitative and qualitative evaluations show the ef-

fectiveness and practicality of LVLMs in facilitating

efficient and comprehensive SBT through SR.

496

Sohn, T., Dillitzer, M., Ewecker, L., Brühl, T., Schwager, R., Dalke, L., Elspas, P., Oechsle, F. and Sax, E.

Towards Scenario Retrieval of Real Driving Data with Large Vision-Language Models.

DOI: 10.5220/0012738500003702

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 10th International Conference on Vehicle Technology and Intelligent Transport Systems (VEHITS 2024), pages 496-505

ISBN: 978-989-758-703-0; ISSN: 2184-495X

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

2 RELATED WORK

In the field of information retrieval in the automo-

tive domain, research has mainly focused on objects,

anomalies and scenarios.

Langner et al. (2019) propose a method for deriva-

tion of logical scenarios through clustering of dy-

namic length segments of driving data represented as

time series. This provides the ability to derive distri-

butions from clusters of concrete scenarios. A map-

ping between a functional description of a driving sce-

nario and real driving data has not been elaborated.

Montanari et al. (2020) cluster recurring patterns

of scenarios based on timeseries data. Clusters of sim-

ilar scenarios and corner cases can be identified. This

does not include the ability to query for these scenar-

ios based on their functional descriptions.

Elspas et al. (2020) introduce a pattern matching

mechanism based on regular expressions in order to

extract driving scenarios from timeseries data. Rules

for each scenario need to be derived in a knowledge

driven process and adequate patterns need to be de-

fined before the data is processed. As this rule-based

method detects cut-ins and lane change maneuvers,

it is possible to interpret extracted patterns with func-

tional descriptions and encode them in retrievable rep-

resentations.

In another contribution Elspas et al. (2021) have

used fully Convolutional Neural Networks (CNNs)

for time series in order to extract scenarios. As their

approach requires labeled datasets with ground truth

annotations for supervised learning, the applicability

may be questioned and the domain may be limited re-

garding the complexity of annotating all relevant as-

pects in a representative training dataset.

Ries et al. (2021) propose a trajectory-based

clustering method based on Dynamic Time Warping

(DTW) for the identification of similar driving sce-

narios. This provides the ability to query similar tra-

jectories of dynamic objects, but does not take into

account all aspects of driving scenarios and abstrac-

tion layers.

To date, most of the methods for information re-

trieval include object retrieval methods, such as the

works of Girshick et al. (2014); Girshick (2015);

Kang et al. (2017); Hu et al. (2016). In the context

of object retrieval, the work of Rigoll et al. (2023)

needs to be specifically addressed, as it proposes a

method using CLIP for object retrieval from automo-

tive image datasets, combining the object labels into

prompts. While it addresses object retrieval in the

automotive domain for the purpose of querying ob-

jects for machine learning datasets, it does not ad-

dress driving SR. To address more safety-critical driv-

ing scenarios, object retrieval can be extended by

anomaly detection methods such as those provided by

Unar et al. (2023); Rai et al. (2023).

The first to focus on the retrieval of overall driving

scenarios is Wei et al. (2024). In this work, the authors

propose a multi-modal birds-eye-view (BEV) retrieval

method using BEV-CLIP, which provides a global

feature perspective for holistic driving SR based on

the overall context and layout. However, the method

does not apply to a general 6LM-oriented framework,

but to the occurrence and location of objects from

BEV in complex scenes.

So far, no work has specifically addressed SR

through functional scenario descriptions based on the

structure of the 6LM, hence the ability to query and

ground all aspects of driving scenarios with natural

language.

3 THEORETICAL FRAMEWORK

3.1 Real Driving Data

Modern vehicles are equipped with manifold types of

sensors in order to accurately perceive the environ-

ment. Additionally, software services provide value

to the driver. Vehicle data can thus be recorded from

different sources and in different modalities.

• Raw sensor data such as RADAR and LiDAR pro-

vide distance and velocity information through

point clouds. Furthermore, camera sensors pro-

vide images and depth information from different

perspectives of the ego-vehicle.

• Bus data includes all data transmitted over the ve-

hicle’s bus systems. It contains data from the en-

tire functional chain, such as raw sensor data, as

well as fused objects and high-level information.

• System log data is recorded directly from the dif-

ferent subsystems and digital in-vehicle services.

In scenario databases, retrieved data can be en-

riched with multiple external data sources such as

map data or knowledge (Petersen et al., 2022). As

LVLMs are mostly provided as pre-trained models for

images and texts, the concept described in this work

leverages camera data to extract the features for SR.

3.2 Scenario-Based Testing

The rising complexity of automotive systems makes it

necessary to break down the real world into a subset

of representative scenarios. SBT reduces the amount

Towards Scenario Retrieval of Real Driving Data with Large Vision-Language Models

497

of validation and verification (V&V) while maintain-

ing sufficient test coverage to achieve regulatory com-

pliance. Scenarios are seen as the ”[...] temporal de-

velopment between several scenes in a sequence of

scenes”, where a scene is a snapshot of the environ-

ment, including scenery, dynamic elements, and all

self-representations of actors and observers as well as

their relationships to each other (Ulbrich et al., 2015).

3.2.1 6-Layer Model

The work of Scholtes et al. (2021) provides context

to these scenarios in the 6LM. The framework pro-

vides a structured description of driving scenarios, di-

viding them into six layers. The first layer describes

the road network and its regulations, including road

markings and traffic signs. To further detail its at-

tributes for analysis purposes, the attribute-layers 1

1

,

1

2

, and 1

3

are created. Layer 1

1

contains only the

road itself, while 1

2

contains the road markings and

1

3

the existing road signs. Layer 2 includes roadside

structures, while layer 3 covers temporary modifica-

tions to layers 1 and 2, such as construction signs.

Dynamic objects such as vehicles and pedestrians are

introduced in layer 4 with a time-dependent descrip-

tion. Layer 5 is divided into attribute layers 5

1

and

5

2

, which contain daytime and weather. Environmen-

tal conditions and digital information for communi-

cation are included in layer 6. In addition, to evaluate

distinct queries, all six layers are further detailed in

three levels, which can be seen in Table 1.

3.3 Scenario Descriptions

Menzel et al. (2018) introduce a terminology that out-

lines abstraction layers for driving scenarios, showing

that scenarios take on different levels of abstraction

at different stages of automotive system development

along the V-model (Dr

¨

oschel and Wiemers, 1999), as

seen in Figure 1.

3.3.1 Functional Scenario

Functional scenarios are described in natural lan-

guage during the concept and design phase of the de-

velopment process, to be definable and understand-

able by human experts. They can contain different

levels of detail and structures.

3.3.2 Abstract Scenario

Abstract scenarios provide a machine-interpretable

format for execution using virtual validation tech-

niques such as X-in-the-Loop (XiL). They are de-

scribed using modeling languages or Scenario De-

scription Languages (SDLs) (Bock and Lorenz,

2022).

3.3.3 Logical Scenario

Logical scenarios are described by parameter ranges

and distributions rather than physical events. Virtual

testing methods aim to sample from these distribu-

tions to generate concrete scenarios and evaluate them

in test cases.

3.3.4 Concrete Scenario

Real driving data, consisting of sensor-, bus-, and sys-

tem log data, represents concrete scenarios as it pro-

vides concrete physical values at specific points in

time.

3.3.5 Test Case

Scenarios being mapped to metrics and acceptance

criteria are called test cases. They can be functional,

logical, or concrete scenarios. Hereby, validation

metrics can be related to safety, comfort, and usabil-

ity.

3.3.6 Relationship of Different Scenario

Abstractions

In order to obtain logical scenarios, it is necessary to

cluster concrete scenarios based on their specific at-

tributes. The mapping of concrete and logical scenar-

ios to functional scenarios makes them interpretable

for human experts. This also applies to abstract sce-

narios in terms of machine interpretability. Test cases

make them measurable. Drawing relationships be-

tween different abstraction layers is therefore a re-

quirement for an effective SR method. (Figure 1).

functional

scenarios

operationsdesign V&V

implementation

logical scenarios

parameter space

x [-1.5 ; 3]

t [72 ; 189]

y [0.25 ; 12.6]

concrete scenarios

real-world drive

SR system

(LVLMs)

semantic

information

query

(e.g. highway at daytime)

output

described in natural language

Figure 1: Concept of SR to map concrete scenarios to func-

tional scenarios.

VEHITS 2024 - 10th International Conference on Vehicle Technology and Intelligent Transport Systems

498

Table 1: Attribute Layers of the 6LM with: Road (1

1

), Road Markings (1

2

), Road Signs (1

3

), Roadside Structures (2),

Temporal Modifications (3), Objects (4), Daytime (5

1

), Weather (5

2

) and Communication (6), with up to three levels of detail.

Level of Detail

1 2 3

Layers (6LM)

6 Source (e.g. Traffic Light) Information (e.g. Color Red) -

5

2

Weather Condition (e.g. Rain) Intensity (e.g. Strong) -

5

1

Illumination (e.g. Night) Intensity (e.g. Dusk) -

4 Type (e.g. Pedestrian) Behaviour (e.g. Moving) Maneuvers (e.g. Cut-in)

3 Type (e.g Construction) Location (e.g. On-Road) -

2 Environment (e.g. Urban) Scenery (e.g. Bridge) Specification (e.g. Residential)

1

3

Type (e.g. Street Sign) Specification (e.g. Velocity) Sign Value (e.g. 100km/h)

1

2

Type (e.g. Lane) Specification (e.g. Dashed) Lane Count (e.g. 3)

1

1

Category (e.g. Highway) Road Character (e.g. Curvy) Road Size (e.g. Large)

3.4 Scenario Retrieval

The urgency of safety assessment based on realistic

driving scenarios, coupled with the open-world prob-

lem of automated driving, requires the collection of

large amounts of driving data due to the multitude of

use cases that need to be covered within operational

design domains (ODDs) for higher levels of automa-

tion. P

¨

utz et al. (2017) outlined a concept and moti-

vation for a scenario database containing real-world

driving scenarios for V&V. However, while this data

is highly representative, it often lacks structure and re-

quires additional annotation to be effectively queried

for scenarios. These queries may involve defining pa-

rameter ranges, identifying patterns and trajectories,

or applying similarity metrics. The challenge is to

draw relationships between different scenario abstrac-

tion layers such as functional scenarios and real-world

driving data. Traditional SR methods are inadequate

to capture the complexity and variability of real-world

scenarios. Using LVLMs for SR addresses these chal-

lenges by structuring and interpreting driving data to

provide interpretable results for engineers.

3.5 Large Vision-Language Models for

Information Retrieval

The ability to embed data in foundation models that

have been pre-trained on large amounts of data with

different modalities, such as speech, images, time se-

ries, or graphs, has gained significant interest in re-

search, industry, and society. For application to spe-

cific tasks and data, there are two predominant ap-

proaches: fine-tuning and in-context learning. Fine-

tuning involves updating the model weights to a spe-

cific target dataset and metric, which requires re-

training the model. Due to the size of the parame-

ters of such models, this process can require signif-

icant computational resources and time. In-context

learning, on the other hand, does not require updating

the weights. Instead, the goal is to provide the model

with context for the specific task or dataset through

targeted prompts. With these prompts, the model can

generate more domain-specific responses. This can be

done by manually exploring and designing prompts

for the specific tasks, or by training additional models

to generate prompts that achieve the best performance

on the targeted task, called soft prompting (Lester

et al., 2021). This method has shown superior perfor-

mance to fine-tuning in resource-constrained down-

stream tasks (Devlin et al., 2019). To retrieve infor-

mation, LVLMs perform an encoding process of both

images and texts into a numerical representation that

captures their semantic and visual features. After en-

coding both textual and visual inputs into feature rep-

resentations, LVLMs employ algorithms to match and

retrieve relevant information. These algorithms anal-

yse the similarity between the encoded features of the

query and the features of the database items. The re-

trieved information is then ranked based on the sim-

ilarity scores, with the most relevant items presented

to the user. Regardless of their potential, LVLMs are

exposed to several challenges such as bias in data and

fairness as well as the difficulty to distinguish right

answers by the model and wrong but well formulated

answers, also denoted as hallucination (Zhou et al.,

2023).

4 METHOD

The method presented in this comparative study aims

to provide a systematic approach to SR facilitated by

functional scenario descriptions that effectively serve

as natural language queries (Figure 1). Central to

this method is the process of projecting images into

the embedding space of a pre-existing LVLM. This

operation involves encoding the images into vector

Towards Scenario Retrieval of Real Driving Data with Large Vision-Language Models

499

representations within the model’s embedding space.

These vector representations are then stored and in-

dexed in a vector database to allow efficient retrieval

based on similarity metrics. To generate embeddings

the LVLM is prompted with natural language queries.

By leveraging the contextual understanding intrinsic

in LVLMs, these embeddings encapsulate semantic

information relevant to the queried scenarios. In addi-

tion, the retrieval process includes scoring the similar-

ity between the query embeddings and those stored in

a vector database. This similarity scoring mechanism

facilitates the retrieval of the most relevant scenarios

based on their proximity to the query in the embed-

ding space. The retrieved scenarios are then returned

as the output of the retrieval process.

4.1 Dataset

Some datasets, such as Berkeley Deep Drive Explana-

tion (BDD-X) (Kim et al., 2018), contain a mapping

of driving scenes to language descriptions. However,

they do not encode structured information in the sense

of the 6LM. Since no ground truth data is provided, a

retrieval-precision-based evaluation approach is per-

formed, which evaluates the relevance of the retrieved

image with respect to the query. Therefore, the se-

lection criterion of the dataset is that the driving

scenes visually encode as much scenario-related in-

formation as possible. For evaluation purposes, the

Berkeley Deep Drive 100K (BDD100K) dataset (Yu

et al., 2020) is used. It contains 100,000 images of

1000 driving scenes in different contexts, seasons,

daytime and weather conditions, taken from the ego-

perspective of vehicle windshields. The variety of im-

ages related to all aspects of the 6LM including scenes

on highways, rural roads, residential and urban areas,

as well as various environmental conditions such as

day, night, dusk, or dawn, makes it suitable for the

evaluation of the presented method.

5 MODELS

To analyse the method proposed in this paper, the SR

capabilities of three LVLMs, CLIP, BLIP-2, and Bak-

LLaVA, are evaluated comparatively.

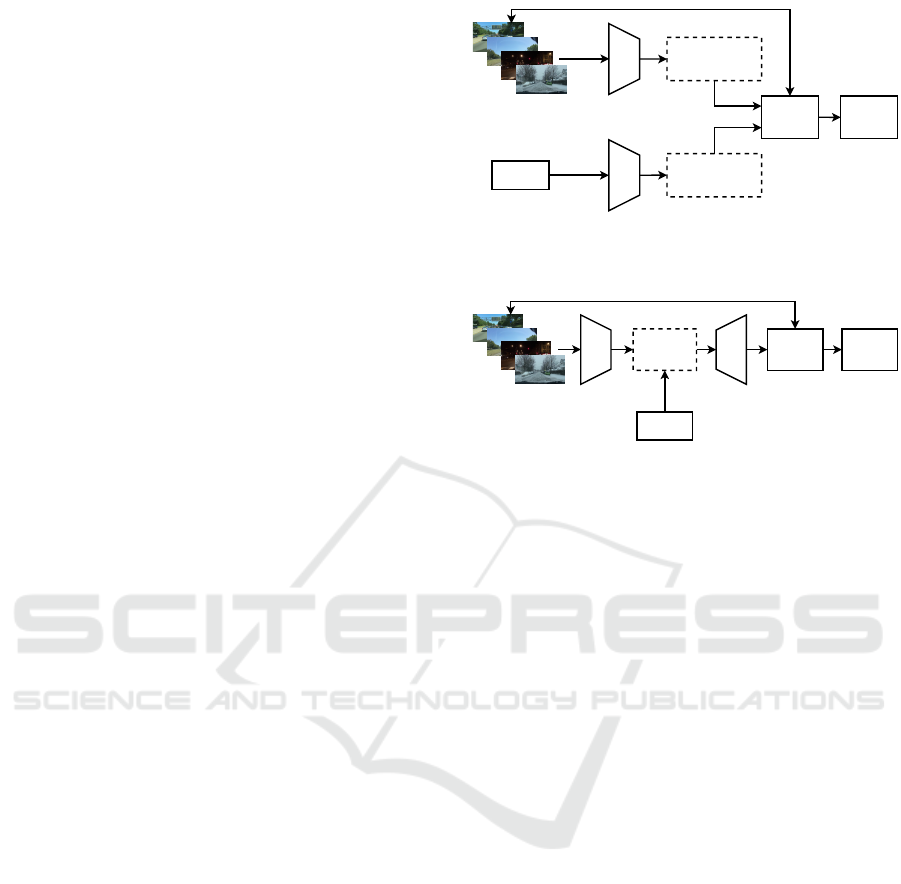

5.1 CLIP

CLIP is a multi-modal LVLM model capable of un-

derstanding images in the context of natural language

(Radford et al., 2021). To be retrievable, all images

are processed by the image encoder and projected

into an embedding vector which is stored and indexed

query

all images

text encoder

image encoder

embedding of

images

vector

database

output

top k

embedding of

texts

Figure 2: SR with CLIP.

query

all images

image

encoder

Q-Former

vector

database

output

top k

LLM

Figure 3: SR with BLIP-2.

within a vector database (Figure 2). Queries are then

projected by the text encoder into the same embed-

ding space in order to retrieve ranked results based on

the cosine similarity for cross-modal understanding.

As a result of using natural language queries, CLIP

can search a large dataset of images and identify those

that are relevant to the query, making it potentially

suitable for SR.

5.2 BLIP-2

BLIP-2 is a generic and compute-efficient method

for vision-language pre-training that leverages frozen

pre-trained image encoders and Large Language

Models (LLMs) (Li et al., 2023). Through the Query-

ing Transformer (Q-Former), BLIP-2 is able to har-

vest the capabilities of already trained powerful vi-

sion and language models without having to update

their weights when applied to downstream tasks such

as visual question answering and image-text genera-

tion. Q-Former bridges the gap between two modal-

ities and aligns their representation with improved

performance, therefore showing potential for multi-

modal tasks such as SR (Figure 3).

5.3 BakLLaVA

BakLLaVA is based on the Large Language and Vi-

sion Assistant (LLaVA) model (Liu et al., 2023).

LLaVA itself combines the Large Language Model

Meta AI (LLaMA) model of Touvron et al. (2023)

and a visual model using visual attributes. This ar-

chitecture is extended by BakLLaVA using the Mis-

VEHITS 2024 - 10th International Conference on Vehicle Technology and Intelligent Transport Systems

500

BakLLaVA

all images

text

vector

output

top k

vector

database

queryprompt*

*based on Table 1

Figure 4: SR with BakLLaVA.

tral 7B LLM (Mistral AI, 2023), which improves the

fusion of language and vision and refines the model’s

ability to understand and generate both text and im-

ages. It further enhances the capabilities of LLaVA

by incorporating techniques for representation learn-

ing, attention mechanisms, and multi-modal fusion,

resulting in a potentially suitable model for a more

detailed SR. All images are processed by BakLLaVA

with a prompt, instructing the model to caption the

images based on the information described in Table

1. The captions are stored in a vector database with

the index of the respective images to be queried for

SR (Figure 4).

6 EVALUATION

To analyse the ability of SR, the LVLMs are evaluated

considering each layer of the 6LM with its different

levels of detail, as shown in Table 1. Consequently,

the models are prompted using different queries cor-

responding to all attribute layers and their correspond-

ing levels. A total of 189 queries is assigned to each

model. Specifically, 60 queries are executed in layer

1, 24 in layer 2, 12 in layer 3, 36 in layer 4, 48 in layer

5, and 9 in layer 6. The imbalance in query distribu-

tions is due to the different attributes and granularities

of each 6LM layer. The goal is to determine the ef-

fectiveness of the models in handling these queries,

thereby revealing their capabilities of SR. Since la-

beled datasets of real driving scenarios are missing

for SR, a manual analysis of the retrieved samples

has to be performed. The precision at k (prec@k)

metric provides a simple and clear interpretation of

the results by focusing on the top recommendations

of the model. For evaluation, the number of relevant

items among the top k instances, denoted as n

k

, de-

termines the precision at a given value of k (k ≥ 1).

Since prec@k ranges from 0 to 1, it allows the model

to reach up to 100% precision, especially for low val-

ues of k.

prec@k =

n

k

k

(1)

For evaluation purposes, k is chosen to be k =

{1;5;10}. Furthermore, the average of all precision

1

1

1

2

1

3

2 3 4

5

1

5

2

6

0

0.2

0.4

0.6

0.8

1

Layer (6LM)

Average Precision

CLIP

BLIP-2

BakLLaVA

Figure 5: Average precision for CLIP, BLIP-2, and Bak-

LLaVA on all layers, including attribute layers, of the 6LM.

values over all calculated prec@ks is used for simpli-

fication. This comprehensive evaluation framework

aims to provide insight into the performance of the

LVLMs, elucidate their capabilities across different

layers and levels of detail, and provide a holistic un-

derstanding of their SR ability.

6.1 Results and Discussion

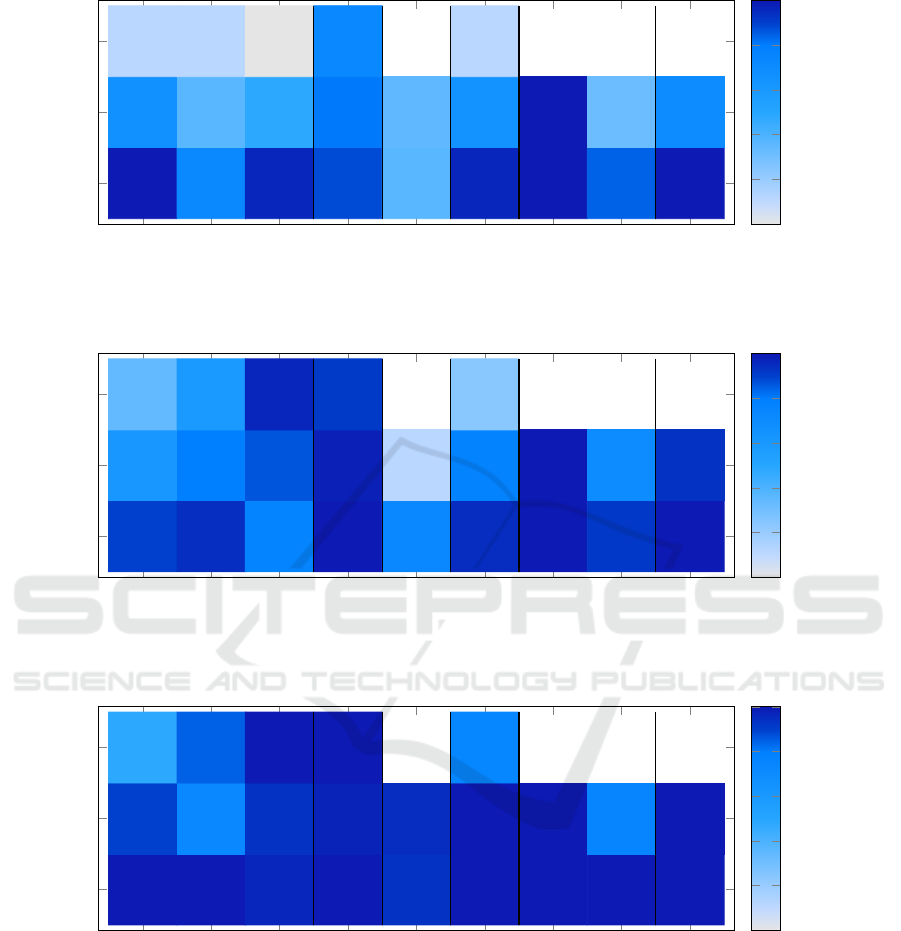

The evaluation of CLIP, BLIP-2, and BakLLaVA,

shown in Figure 5, reveals differences in average pre-

cision across different layers of a scenario. Bak-

LLaVA shows consistently higher average precision

across all layers, achieving 92.74% compared to

78.78% achieved by BLIP-2 and 62.86% achieved by

CLIP. The analysis identifies layer 5

1

as the best per-

forming layer for all models with an average precision

of 100%. Conversely, layer 3 has the lowest precision

for CLIP and BLIP-2 with 35.83% and 42.50%, re-

spectively, while layer 1

1

has the lowest precision for

BakLLaVA with 79.44%. A spread in precision can

be observed for all three models. CLIP and BLIP-2

have wider ranges of 64.17% and 57.50%, while Bak-

LLaVA has a narrower range of 20.56%. All models

show a slight trend indicating that precision tends to

increase with higher layers. However, they differ in

the increase or decrease trend of precision between

certain layer transitions, especially from layer 1

1

to

1

2

and from layer 3 to 4. For all other layer transi-

tions, the increase or decrease trend in precision is the

same for the three models. Further examination of the

attribute layers for layers 1 and 5 provides additional

insight. For CLIP, there is no noticeable trend across

the attribute layers of layer 1. In contrast, BLIP-2 and

BakLLaVA show better identification of scenarios for

the road signs in layer 1

3

compared to road markings

Towards Scenario Retrieval of Real Driving Data with Large Vision-Language Models

501

1

1

1

2

1

3

2 3 4

5

1

5

2

6

1

2

3

1

0.67

0.12

0.72

0.37

0.12

0.97

0.48

0

0.88

0.81

0.73

0.37

0.35

NaN

0.97

0.65

0.12

1

1

NaN

0.85

0.32

NaN

1

0.7

NaN

Layer (6LM)

Level of Detail

0.0

0.2

0.4

0.6

0.8

1.0

Average Precision

(a) CLIP

1

1

1

2

1

3

2 3 4

5

1

5

2

6

1

2

3

0.9

0.62

0.34

0.94

0.8

0.6

0.77

0.87

0.97

1

0.98

0.92

0.73

0.12

NaN

0.95

0.77

0.24

1

1

NaN

0.92

0.7

NaN

1

0.93

NaN

Layer (6LM)

Level of Detail

0.0

0.2

0.4

0.6

0.8

1.0

Average Precision

(b) BLIP-2

1

1

1

2

1

3

2 3 4

5

1

5

2

6

1

2

3

1

0.9

0.48

1

0.73

0.85

0.97

0.93

1

1

0.98

1

0.93

0.95

NaN

1

1

0.74

1

1

NaN

1

0.75

NaN

1

1

NaN

Layer (6LM)

Level of Detail

0.0

0.2

0.4

0.6

0.8

1.0

Average Precision

(c) BakLLaVA

Figure 6: Heatmaps showing average precision of the LVLMs with different levels of detail of the 6LM as in Table 1. NaN

values indicate intentional omissions of experiments.

(1

2

) and the road itself (1

1

). Additionally, in layer

5, more detailed scenario queries for heavy and light

weather conditions in attribute layer 5

2

lead to a de-

crease in precision.

To further evaluate the levels of detail, the

heatmaps in Figure 6 show that CLIP achieves an

average precision of 86.14% for level 1, 59.39% for

level 2, and 21.67% for level 3 across all layers. Fur-

ther, BLIP-2 achieves 91.27% for level 1, 75.43% for

level 2, and 61.33% for level 3. In contrast, Bak-

LLaVA achieves higher average precision across all

levels, with 98.89% for level 1, 91.62% for level 2,

VEHITS 2024 - 10th International Conference on Vehicle Technology and Intelligent Transport Systems

502

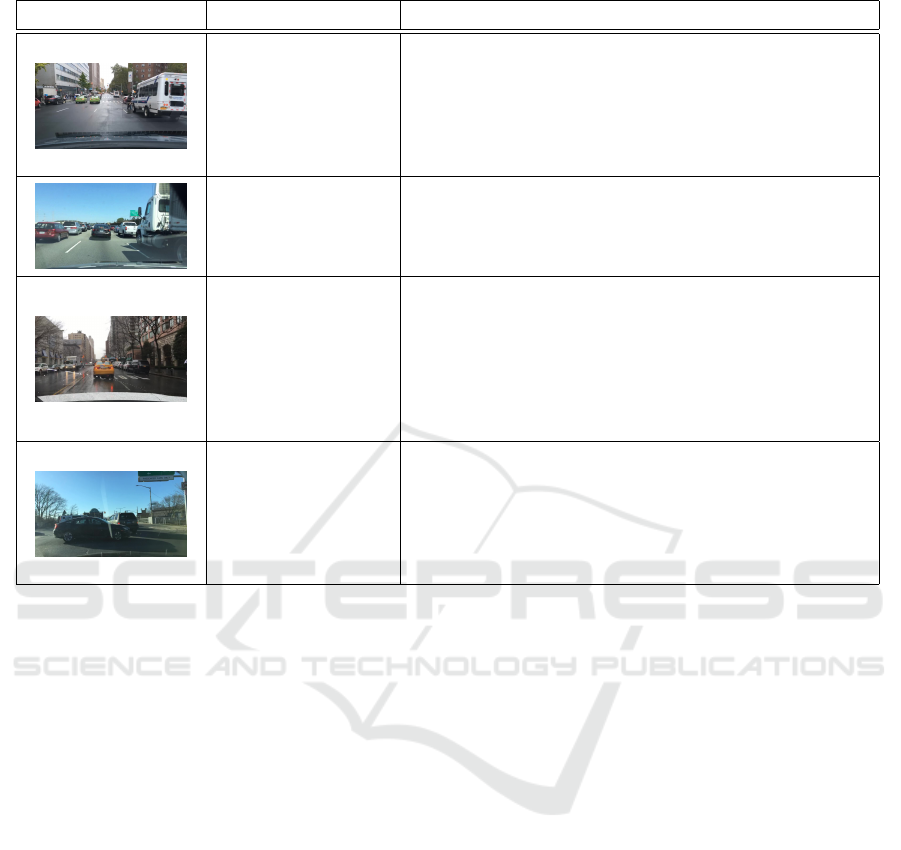

Table 2: Exemplary challenging queries and the corresponding answers of the BakLLaVA model.

Scenario Query Answer (BakLLaVA)

What types of

vehicles are visible

in the scene?

In the scene, there are several types of vehicles present:

A large white bus with blue stripes, which appears to be

a public transit bus. Two green taxi cabs. A red car. A

black SUV. A white car. A motorcycle. A truck. The

vehicles are on a city street, and there are also

pedestrians visible on the sidewalks.

Where is the dark

red car located?

The dark red car is in the left lane of the highway,

moving in the same direction as the other traffic.

In which directions

are the different cars

driving?

In the image, there are cars in the opposite direction of

the camera’s perspective. The most prominent vehicle is

a yellow taxi cab, which is driving on the right side of

the road. Behind the taxi, there are other vehicles,

including a white van and a black SUV. The road

appears to be a one-way street, and the vehicles are

moving in the same direction as the taxi.

Is a car turning to a

specific direction in

this scene?

The car in the image that appears to be turning to the

right. It’s a black car, and you can see the front end of it

as it’s making the turn. The car is positioned in the right

lane, which is typically used for right turns, and it’s

angled towards the right side of the image, indicating

that it’s in the process of turning.

and 81.50% for level 3. Present NaN values indicate

an intentional omission of an experiment for this par-

ticular level of detail, as no appropriate queries could

be formulated. Dark coloring in the heatmap corre-

sponds to high precision, with CLIP showing scat-

tered coloring and achieving dark colors primarily at

level 1. As the level of detail increases, the coloring

becomes lighter, indicating lower precision. BLIP-2

still shows a scattered coloring with slightly darker

patches compared to CLIP. Conversely, BakLLaVA’s

heatmap is dominated by dark colors at all levels, with

only a few spots showing lighter coloring. All three

models exhibit comparably low precision in layer 1

1

with detail level 3, especially when the model queried

to distinguish between large and small intersections.

In addition, layer 1

3

with detail level 3 shows a sig-

nificant disparity in precision, with CLIP failing to

detect speed signs with specified speed values, while

BLIP-2 and BakLLaVA perform effectively in this re-

gard. Layer 4 with detail level 3 poses a challenge

for the models as they are queried to retrieve maneu-

vers such as following, braking, turning, and cut-ins.

However, BakLLaVA shows a 62% and 50% higher

precision in this task compared to CLIP and BLIP-2.

To further investigate BakLLaVA’s ability to ad-

dress specific aspects and levels of detail, as it is the

best performing LVLM in this evaluation, a qualita-

tive analysis was performed using an image dialogue.

Table 2 shows four exemplary dialogues out of a set of

25 questions that were asked to further investigate the

possible level of detail of additional aspects. Red col-

ored answers represent wrong answers of the model

to the given query. The results show that BakLLaVA

is able to answer the query adequately even for fine-

grained scene descriptions such as color, number and

location of certain objects. Boundaries were espe-

cially investigated in the description of motion direc-

tions, including car directions, turning directions, and

lane directions. In these cases, model hallucination

was observed, in which the model not only provided

incorrect movement directions, but also began to de-

scribe the scene incorrectly.

The evaluation results show the general ability of

LVLMs to query camera data of real driving data for

driving scenario related information. Up until the 10

best recommendations by the model (k = 10), CLIP,

BLIP-2 and BakLLaVA are able to achieve high aver-

age precision scores. With higher degrees of detail in

the query, the performance of both, CLIP and BLIP-

2, degrades, while BakLLaVA is still able to encode

many contexts in further detail degrees, such as de-

scribing the exact speed limit value on a speed sign.

Towards Scenario Retrieval of Real Driving Data with Large Vision-Language Models

503

Further investigations through a visual dialogue show,

that BakLLaVA can provide even more detailed infor-

mation about object attributes and locations. On the

other hand, all of the evaluated models struggle with

information encoded in temporal sequences of images

such as detailed maneuvers or object movement direc-

tions embedded in layer 4. This might also be related

to the static nature of the inputs, as not videos but sin-

gle input images were fed into the models.

7 CONCLUSION

The elaborated method outlines the potential of us-

ing pre-trained LVLMs for semantic enrichment and

retrieval of real driving data with natural language

queries in the form of functional scenario descrip-

tions. Specifically, BakLLaVA, consisting of an im-

age encoder and Mistral 7B as the LLM backbone,

achieves accurate query results even for detailed spec-

ifications such as the location and color of objects en-

coded in the images.

Future work should focus on several key areas.

One key is to create a dataset tailored for SR with

LVLMs that includes multi-modal driving data such

as time series or point clouds additional to images.

Incorporating external data sources such as map- and

weather data can provide additional semantic struc-

ture to produce meaningful joint embeddings. The

ability of LVLMs to incorporate other SR tasks, such

as querying abstract scenario descriptions from con-

crete, logical, and functional scenarios, offers poten-

tial for more efficient and effective SBT. Metrics like

recall at k (recall@k) should be evaluated in addition

to prec@k to ensure the relevance of the retrieved sce-

narios. Furthermore, future research should investi-

gate prompt engineering techniques, incorporate tax-

onomies for different use cases, and explore the tem-

poral domain using video language models. The im-

pact of fine-tuning compared to in-context learning,

and the associated trade-off in computational cost for

the SR task, may have important implications for fu-

ture research directions. User studies with domain

experts querying scenarios can be conducted to ex-

plore the feasibility of the concept and the ability of

the models to cope with domain-specific language.

Analysing combined queries that jointly integrate dif-

ferent scenario layers can provide a more comprehen-

sive understanding of the SR capability. Besides re-

trieval performance, additional metrics such as com-

putational efficiency, storage requirements, and re-

trieval time should be considered. These efforts will

advance SR methods in the automotive domain for

V&V tasks.

REFERENCES

Bock, F. and Lorenz, J. (2022). Abstract natural scenario

language version 1.0.

Devlin, J., Chang, M.-W., Lee, K., and Toutanova, K.

(2019). BERT: Pre-training of deep bidirectional

transformers for language understanding. In Burstein,

J., Doran, C., and Solorio, T., editors, Proceedings

of the 2019 Conference of the North American Chap-

ter of the Association for Computational Linguistics:

Human Language Technologies, Volume 1 (Long and

Short Papers), pages 4171–4186, Minneapolis, Min-

nesota. Association for Computational Linguistics.

Dr

¨

oschel, W. and Wiemers, M. (1999). Das V-Modell 97:

der Standard f

¨

ur die Entwicklung von IT-Systemen mit

Anleitung f

¨

ur den Praxiseinsatz. Oldenbourg Wis-

senschaftsverlag, Berlin, Boston, 2014th edition.

Elspas, P., Klose, Y., Isele, S. T., Bach, J., and Sax, E.

(2021). Time series segmentation for driving scenario

detection with fully convolutional networks. In VE-

HITS, pages 56–64.

Elspas, P., Langner, J., Aydinbas, M., Bach, J., and Sax,

E. (2020). Leveraging regular expressions for flexible

scenario detection in recorded driving data. In 2020

IEEE International Symposium on Systems Engineer-

ing (ISSE), pages 1–8. IEEE.

Girshick, R. (2015). Fast r-cnn. In Proceedings of the IEEE

international conference on computer vision, pages

1440–1448.

Girshick, R., Donahue, J., Darrell, T., and Malik, J. (2014).

Rich feature hierarchies for accurate object detec-

tion and semantic segmentation. In Proceedings of

the IEEE conference on computer vision and pattern

recognition, pages 580–587.

Hu, R., Xu, H., Rohrbach, M., Feng, J., Saenko, K., and

Darrell, T. (2016). Natural language object retrieval.

In Proceedings of the IEEE conference on computer

vision and pattern recognition, pages 4555–4564.

Kang, K., Li, H., Xiao, T., Ouyang, W., Yan, J., Liu, X.,

and Wang, X. (2017). Object detection in videos with

tubelet proposal networks. In Proceedings of the IEEE

conference on computer vision and pattern recogni-

tion, pages 727–735.

Kim, J., Rohrbach, A., Darrell, T., Canny, J., and Akata,

Z. (2018). Textual explanations for self-driving vehi-

cles. In Proceedings of the European conference on

computer vision (ECCV), pages 563–578.

Langner, J., Grolig, H., Otten, S., Holz

¨

apfel, M., and Sax,

E. (2019). Logical scenario derivation by clustering

dynamic-length-segments extracted from real-world-

driving-data. In VEHITS, pages 458–467.

Lester, B., Al-Rfou, R., and Constant, N. (2021). The power

of scale for parameter-efficient prompt tuning. arXiv

preprint arXiv:2104.08691.

Li, J., Li, D., Savarese, S., and Hoi, S. (2023). Blip-

2: Bootstrapping language-image pre-training with

frozen image encoders and large language models.

Liu, H., Li, C., Wu, Q., and Lee, Y. J. (2023). Visual in-

struction tuning.

VEHITS 2024 - 10th International Conference on Vehicle Technology and Intelligent Transport Systems

504

Menzel, T., Bagschik, G., and Maurer, M. (2018). Scenarios

for development, test and validation of automated ve-

hicles. In 2018 IEEE Intelligent Vehicles Symposium

(IV), pages 1821–1827.

Mistral AI (2023). Mistral 7b: The best 7b model to date,

apache 2.0. Mistral AI News. Accessed: February 22,

2024.

Montanari, F., German, R., and Djanatliev, A. (2020). Pat-

tern recognition for driving scenario detection in real

driving data. In 2020 IEEE Intelligent Vehicles Sym-

posium (IV), pages 590–597. IEEE.

Petersen, P., Stage, H., Langner, J., Ries, L., Rigoll, P.,

Hohl, C. P., and Sax, E. (2022). Towards a data engi-

neering process in data-driven systems engineering. In

2022 IEEE International Symposium on Systems En-

gineering (ISSE), pages 1–8. IEEE.

P

¨

utz, A., Zlocki, A., Bock, J., and Eckstein, L. (2017). Sys-

tem validation of highly automated vehicles with a

database of relevant traffic scenarios. situations, 1:E5.

Radford, A., Kim, J. W., Hallacy, C., Ramesh, A., Goh, G.,

Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark,

J., Krueger, G., and Sutskever, I. (2021). Learning

transferable visual models from natural language su-

pervision.

Rai, S. N., Cermelli, F., Fontanel, D., Masone, C., and

Caputo, B. (2023). Unmasking anomalies in road-

scene segmentation. In Proceedings of the IEEE/CVF

International Conference on Computer Vision, pages

4037–4046.

Ries, L., Rigoll, P., Braun, T., Schulik, T., Daube, J., and

Sax, E. (2021). Trajectory-based clustering of real-

world urban driving sequences with multiple traffic

objects. In 2021 IEEE International Intelligent Trans-

portation Systems Conference (ITSC), pages 1251–

1258.

Rigoll, P., Langner, J., and Sax, E. (2023). Unveiling objects

with sola: An annotation-free image search on the

object level for automotive data sets. arXiv preprint

arXiv:2312.01860.

Scholtes, M., Westhofen, L., Turner, L. R., Lotto, K.,

Schuldes, M., Weber, H., Wagener, N., Neurohr, C.,

Bollmann, M. H., K

¨

ortke, F., et al. (2021). 6-layer

model for a structured description and categoriza-

tion of urban traffic and environment. IEEE Access,

9:59131–59147.

Touvron, H., Lavril, T., Izacard, G., Martinet, X., Lachaux,

M.-A., Lacroix, T., Rozi

`

ere, B., Goyal, N., Hambro,

E., Azhar, F., Rodriguez, A., Joulin, A., Grave, E.,

and Lample, G. (2023). Llama: Open and efficient

foundation language models.

Ulbrich, S., Menzel, T., Reschka, A., Schuldt, F., and Mau-

rer, M. (2015). Defining and substantiating the terms

scene, situation, and scenario for automated driving.

In 2015 IEEE 18th International Conference on Intel-

ligent Transportation Systems, pages 982–988.

Unar, S., Su, Y., Zhao, X., Liu, P., Wang, Y., and Fu, X.

(2023). Towards applying image retrieval approach

for finding semantic locations in autonomous vehicles.

Multimedia Tools and Applications, pages 1–22.

Wei, D., Gao, T., Jia, Z., Cai, C., Hou, C., Jia, P., Liu,

F., Zhan, K., Fan, J., Zhao, Y., et al. (2024). Bev-

clip: Multi-modal bev retrieval methodology for com-

plex scene in autonomous driving. arXiv preprint

arXiv:2401.01065.

Yu, F., Chen, H., Wang, X., Xian, W., Chen, Y., Liu, F.,

Madhavan, V., and Darrell, T. (2020). Bdd100k: A

diverse driving dataset for heterogeneous multitask

learning. In Proceedings of the IEEE/CVF conference

on computer vision and pattern recognition, pages

2636–2645.

Zhou, Y., Cui, C., Yoon, J., Zhang, L., Deng, Z., Finn, C.,

Bansal, M., and Yao, H. (2023). Analyzing and mit-

igating object hallucination in large vision-language

models.

Towards Scenario Retrieval of Real Driving Data with Large Vision-Language Models

505