Generation of Breaking News Contents Using Large Language Models and Search Engine Optimization

João Pereira¹, Wenderson Wanzeller¹ and António Miguel Rosado da Cruz¹,² ᵃ

¹ ADiT-LAB, Instituto Politécnico de Viana do Castelo, 4900-348 Viana do Castelo, Portugal

² ALGORITMI Research Lab, Universidade do Minho, Guimarães, Portugal

Keywords:

AI, Artificial Intelligence, News, Newspaper, LLM, Large Language Models, Journalist, Breaking News.

Abstract:

With easy access to the internet, anyone can search for the latest news. However, the news found on the internet, especially on social media, is often of dubious origin. This article explores how new technologies can help journalists in their day-to-day work. We therefore sought to create a platform for generating hot news content using Artificial Intelligence, namely Large Language Models (LLMs), combined with Search Engine Optimization (SEO). We investigate how LLMs impact content production, analyzing their ability to create compelling and accurate narratives in real time. Additionally, we examine how SEO integration can optimize the visibility and relevance of this content in search engines. This work highlights the importance of strategically combining these technologies to improve efficiency in disseminating news, adapting to the dynamism of online information and the demands of a constantly evolving audience.

1 INTRODUCTION

In the digital realm, the rapid evolution of technologies and the swift dissemination of information have made it increasingly challenging to verify the authenticity of the news that reaches us. News should be totally impartial, not opinionated, and should inform the reader effectively. However, any individual can access a social network or build a website and create fake news or news that is not totally impartial. Fake news is gaining momentum on social media, as it is increasingly easy to create links, content or “illustrative” images of false events and to disseminate them on WhatsApp, X and Facebook, seeking to influence the reader’s opinion in a biased way. A study by the Columbia Journalism Review (Nelson, 2017) states that 30 per cent of fake news is linked to Facebook, which creates a chain of shares that leads the user to believe that it is real news (see Figure 1).

Figure 1: Connecting fake news to social networks (taken from Nelson, 2017).

The main aim of this paper is to support the quick, impartial and rigorous creation of credible news content. When a news topic is hot or trending, a journalist should be able to quickly create a news story with all the details, without blockers, obtaining a news text with the help of AI-based tools from the text of the same news published by different sources previously catalogued as credible. The proposed news platform will generate news content on daily trending topics, with the help of Artificial Intelligence and Search Engine Optimisation (SEO) technology (Google, 2024), so that the content is optimised for search engines. This will give journalists a tool for using news items generated according to the trending words of the moment, which they can edit to meet their own criteria and then publish wherever they wish. The journalist has full control over the news item and can freely edit it to suit the required context. The generated content will cite the credible sources on which it is based.

To give an example: with the resignation of the Portuguese Prime Minister, news trend monitoring platforms, such as Google Trends, presented trending words like “António Costa”, “Resignation António Costa”, “Prime Minister”, etc. When journalists log on to the proposed platform, they will see all the news created by the platform based on the trending words of the moment. This way, they will not waste time searching for trending topics, and will be able to use the platform to launch breaking news that would take much longer to build if they had to do more research, check sources, etc.

ᵃ https://orcid.org/0000-0003-3883-1160

Pereira, J., Wanzeller, W. and Rosado da Cruz, A.
Generation of Breaking News Contents Using Large Language Models and Search Engine Optimization.
DOI: 10.5220/0012739200003690
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 26th International Conference on Enterprise Information Systems (ICEIS 2024) - Volume 1, pages 888-893
ISBN: 978-989-758-692-7; ISSN: 2184-4992
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.
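As an illustration of the idea described above, the following minimal Python sketch groups already-collected articles under the trending terms they mention, keeping only articles from sources catalogued as credible. It is a sketch under stated assumptions and not the platform's implementation: the `Article` type, the `CREDIBLE_DOMAINS` whitelist, the example domains and the simple substring matching are all hypothetical, and the paper does not specify how trends or articles are obtained.

```python
from __future__ import annotations

from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical whitelist of sources previously catalogued as credible.
CREDIBLE_DOMAINS = {"example-news.pt", "example-agency.org"}


@dataclass(frozen=True)
class Article:
    url: str
    title: str
    text: str


def is_credible(article: Article) -> bool:
    """Return True when the article's host is on the whitelist."""
    host = urlparse(article.url).netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    return host in CREDIBLE_DOMAINS


def group_by_trend(trends: list[str], articles: list[Article]) -> dict[str, list[Article]]:
    """Map each trending term to the credible articles that mention it."""
    grouped: dict[str, list[Article]] = {trend: [] for trend in trends}
    for article in articles:
        if not is_credible(article):
            continue  # ignore sources that were not catalogued as credible
        haystack = f"{article.title} {article.text}".casefold()
        for trend in trends:
            # Naive case-insensitive substring match (an assumption).
            if trend.casefold() in haystack:
                grouped[trend].append(article)
    return grouped
```

A real system would likely need more robust matching (for example, handling name variants), but the sketch shows how the list of trending words can drive the selection of source texts.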

Using a Large Language Model, such as ChatGPT (ChatGPT), the aim is to obtain a news text that summarises the news content obtained from credible sources. From the texts collected, a complete and impartial news item will be generated, with all the details needed for the reader to be properly informed. At the end of this process, the user/journalist will always be in charge of editing, finalising and approving the news, being able to enter the platform, consult the complete news items on the latest hot topics, and approve them or not.
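The summarisation step described above can be sketched as follows. This is an illustrative assumption and not the authors' design: the `Source` type, the prompt wording and the `complete` callable (any function that sends a prompt to an LLM and returns its text) are hypothetical, and no specific LLM API is assumed. The sketch only shows how numbered sources can be placed in the prompt so that the generated text can cite them, and how a draft starts out unapproved.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Source:
    name: str
    url: str
    text: str


def build_prompt(topic: str, sources: list[Source]) -> str:
    """Assemble a prompt that asks for an impartial summary citing numbered sources."""
    if not sources:
        raise ValueError("at least one credible source is required")
    lines = [
        f"Write an impartial, factual news item about: {topic}.",
        "Use only the numbered sources below and cite them as [n].",
        "Do not add opinions or facts that are absent from the sources.",
        "",
    ]
    for i, src in enumerate(sources, start=1):
        lines.append(f"[{i}] {src.name} ({src.url})")
        lines.append(src.text.strip())
        lines.append("")
    return "\n".join(lines)


def generate_draft(topic: str, sources: list[Source], complete: Callable[[str], str]) -> dict:
    """Produce a draft; `complete` is a hypothetical wrapper around an LLM call."""
    text = complete(build_prompt(topic, sources))
    # The draft is never approved automatically: a journalist must review it.
    return {
        "topic": topic,
        "text": text,
        "sources": [s.url for s in sources],
        "approved": False,
    }
```

Passing the LLM as a plain callable keeps the sketch independent of any provider and makes it easy to test with a stub.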

The rest of this paper is structured as follows. In the next section, the research method is presented. In Section 3, tools for supporting journalists in creating news texts are reviewed. Section 4 discusses the findings of that review. Section 5 presents conclusions and defines research lines for future developments of this project.

2 RESEARCH METHOD

The research method followed in this work is Design

Science Research (DSR) (Cruz and Rosado da Cruz,

2020). DSR is an iterative problem-solving process

common in areas such as information systems and

computer science. This approach involves creating

and evaluating innovative artefacts to deal with com-

plex problems. The process includes several phases:

problem identification, artefact design and develop-

ment, demonstration or validation, evaluation and it-

erative refinement.

In the problem identification phase, researchers thoroughly understand the context and scope of the problem. They then move on to the design and development of artefacts, which can range from models to software systems. This stage emphasises creativity and innovation, combining existing knowledge with innovative approaches to create effective solutions.

Once the artefact is created, it undergoes demonstration or validation to show its functionality and potential impact. This involves implementing it in real or simulated environments and establishing evaluation criteria. The evaluation phase analyses the artefact’s usefulness, usability and contribution to knowledge, focusing on both practical application and theoretical advancement.

Iterative refinement is a central aspect of DSR.

Based on the evaluation results and feedback, re-

searchers continuously improve the artefact to in-

crease its relevance and effectiveness. Finally, the re-

sults and insights are communicated through publi-

cations and other channels, contributing to collective

knowledge in the respective field.

This research work has concluded the problem identification phase. This paper presents the context and scope of the problem, together with the definition of future work for the design and development of the main artefact: a news platform targeted at journalists that will generate news content based on daily trending topics, with the help of Artificial Intelligence and Search Engine Optimisation (SEO) technology.

3 STATE OF THE ART

The state of the art research has focused on the following topics: “support tools for journalists” and “artificial intelligence in journalism”.

In the first article analysed (Franks et al., 2022), the authors examine the lack of specific digital tools adapted to support journalists’ creativity. While some general tools, such as search engines and text analysis, are adapted to help with story development, few are designed specifically for journalists, and they do not explicitly focus on idea generation during news development.

Examples of digital tools for journalism include DocumentCloud (DocumentCloud), which analyses documents for references and timelines, and prototypes such as NewsReader (NewsBlur), which uses text analysis and AI to categorise news and financial data, although they do not focus on generating new angles for news stories.

Other tools are mentioned, such as the Story Discovery Engine (Broussard, 2014), which helps analyse text and discover stories. The text also mentions startups such as Loyal.ai, which offers interactive assistants for quick searches, although there is no concrete evidence that it supports journalists’ creativity.

Another article (Dhiman, 2023) discusses the role

of artificial intelligence (AI) in journalism, emphasis-

ing that, although AI can automate aspects such as

data analysis, fact-checking and even news produc-

tion, it cannot replace human journalists.

AI is seen as a tool to complement human journalists, allowing them to concentrate on complex reporting while AI handles routine tasks. The article highlights how AI can reduce variable costs in journalism by automating data analysis, fact-checking, news production and the personalisation of content for readers. However, it notes that implementing AI can require significant technological investment.

The article also looks at how AI, such as Chat-

GPT, can help with tasks related to journalism, such

as fact-checking, writing news articles, creating head-

lines and analysing data. It emphasises the impor-

tance of using AI tools judiciously and verifying in-

formation from any source.

Overall, while AI presents opportunities to simplify journalistic processes and reduce costs, the article emphasises the need for ethical considerations and human oversight when using these technologies.

Another article (Kotenidis and Veglis, 2021) notes that algorithmic technology has advanced considerably in recent years, but faces challenges, especially in the automated production of content. One crucial limitation is the reliance on structured data. In addition, although algorithms can mimic human writing, they still fall short in areas such as analytical thinking, flexibility and creativity. This creates a disconnect between algorithms and humans, especially in automated newsrooms.

In addition to automated content production, there

are challenges in other areas, such as data mining,

where the results can be insignificant or even incor-

rect.

Despite this, algorithmic technology is promis-

ing for solving contemporary problems in journal-

ism, such as information overload and credibility. Al-

though the introduction of more sophisticated algo-

rithms may cause turbulence, it is hoped that they

will help produce news faster and on a larger scale,

expanding coverage to unprofitable events. However,

this could lead to information overload, exacerbated

by the spread of fake news.

A recent study (Lermann Henestrosa et al., 2023) investigated how readers perceive content produced automatically by algorithms, focussing specifically on science journalism articles. Although much content is already generated automatically, little is known about how artificial intelligence (AI) authorship affects audience perception, especially for more complex texts.

The researchers highlighted technological advances in automated text generation, citing large-scale language models such as OpenAI’s GPT-3 and Microsoft and NVIDIA’s Megatron-Turing as examples of advanced natural language generation (NLG) capabilities. These models illustrate the ability to simulate human writing, but there is still a lack of studies on the impact of AI authorship on complex texts.

The studies conducted analysed readers’ percep-

tions of science journalism articles written by algo-

rithms. Surprisingly, even when an AI author pre-

sented information in an evaluative way on a scientific

topic, there was no decrease in the credibility or trust

attributed to the text. The presentation of the infor-

mation was identified as the main factor influencing

readers’ perceptions, regardless of the declared au-

thorship.

An interesting point was that although the partici-

pants considered the AI author to be less ”human” in

their writing, this perception did not impact the eval-

uation of the messages. This raised questions about

the importance of the nature of the author for readers

in terms of credibility and trust in the content.

The results indicated an acceptance of AI as an

author of scientific texts, provided certain conditions

were met. In addition, the studies revealed positive

attitudes towards automation, suggesting a favourable

attitude towards the use of algorithms in content pro-

duction.

However, the studies had some limitations, includ-

ing the influence of participants’ prior beliefs and the

perceived neutrality of the information presented. Fu-

ture research should further explore how readers un-

derstand the workings of text production algorithms

and the relationship between the perceived ”humani-

sation” of AI and the credibility of the content gener-

ated.

In summary, the study has contributed to a deeper

understanding of how AI authorship affects the pub-

lic’s perception of complex content, paving the way

for reflection on the acceptance of algorithmically

generated texts in more diverse contexts.

The study (Sirén-Heikel et al., 2023) examines the influence of AI technologies on journalism by studying the logics underpinning the construction of technical solutions. It uses a theoretical framework to understand the interrelationships between institutions, individuals, and organizations in social systems. The integration of AI technologies into news organizations impacts how work is organized and reshapes journalism. The study explores companies that develop and sell NLG (natural language generation) services for journalism, revealing how technologists view their interactions with news organizations.

The participants in the study represent different

educational backgrounds, cultures, and languages, yet

share a sensemaking of their relationship with jour-

nalism. The presupposition that technologists and

journalists occupy separate fields of logic is validated

through the interviews. The companies involved in


the study differ in size, market reach, and age, allow-

ing for analysis of both established players and new

entrants to the field.

The study also focuses on the interplay that occurs when AI technologies are incorporated into organizations. It reveals that AI technologies in news organizations require interaction between the logics of journalism and those of technologists, leading to competition and assimilation of logics. The study is limited by interviewing only one representative from each company, but it sheds light on the influence exerted by AI technologies on journalism.

It identifies a shared theory of rationalization, a

frame of optimization, and a narrative of misinterpre-

tation among the companies involved in developing

AI solutions for journalism. The theory of rationaliza-

tion is distilled as solutionist, rationalizing news or-

ganizations by solving the problem of reaching audi-

ences at scale without adding human resources. This

allows newswork to refocus on creating value for au-

diences. The frame of optimization centers around

optimizing newswork, freeing journalists from hav-

ing to do ”boring news that doesn’t add any value.”

It derives from the theory of rationalization and ver-

balizes a normative conceptualization of what jour-

nalism ought to be. The narrative of misinterpretation

is expressed through the limitations of explaining AI

systems, to whom it is explained, and when it is ex-

plained.

The study also delves into the perspectives of technologists involved in developing AI solutions applied in journalism. It reveals that different companies provide different solutions for automated content, with varying generation and distribution models.

Table 1 summarizes the conclusions from the state of the art.

According to a survey conducted by the media

think-tank at the London School of Economics (LSE),

news organizations see potential for AI throughout

the entire production process, including news gath-

ering, production, and distribution. The main moti-

vations for adopting AI in journalism are increased

efficiency in the newsroom, improved business func-

tionality, and enhanced relevance for audiences.

AI technologies, particularly NLG, have been uti-

lized for various applications in journalism. These

include content recommendation, improved tagging,

automated stories, summaries, and text-to-audio con-

version. AI is also valuable for data cleansing, extrac-

tion, linking records, and identifying news angles. It

is particularly useful for handling resource-intensive

or technically challenging stories.

The article highlights that AI is viewed as a partner in the journalistic process, augmenting and optimizing news work. It can assist in sorting out meaning from vast amounts of information and contribute to the production of scoops, analysis, and brand building. The focus is on augmenting work processes that lack creativity, allowing journalists to concentrate on more important aspects of their work. While AI has the potential to address certain challenges in journalism, there is also recognition of the impact on jobs. The article emphasizes the importance of defining journalistic value in terms of investigative stories, analysis, and brand building, rather than simply relying on AI for routine news stories.

In conclusion, the study provides valuable insights into the interplay between AI technologies and journalism, shedding light on the influence exerted by AI technologies on journalism. It highlights the need for interaction between the logics of journalism and technologists, and the competition and assimilation of logics that occur when AI technologies are incorporated into organizations. The study contributes to a better understanding of the impact of AI technologies on journalism and the dynamics of the evolving relationship between technology and news organizations.

4 DISCUSSION

Based on all the articles analysed, it is clear that there

is still a long way to go on both sides. It is sometimes

difficult to introduce technological evolution in cer-

tain areas, but journalism has increasingly benefited

from artificial intelligence. So, in summary:

Support Tools and AI in Journalism: An article

highlights the lack of specific digital tools designed

to foster journalists’ creativity. While there are gen-

eral tools to help develop stories, few are dedicated to

generating ideas during news production. Examples

mentioned include DocumentCloud and NewsReader,

but there is a lack of tools focussed on generating new

angles for news stories.

Role of AI in Journalism: AI is seen as a com-

plementary tool to human journalists, capable of au-

tomating tasks such as data analysis, fact-checking

and even news production. However, it is emphasised

that AI cannot replace essential human skills for jour-

nalism, such as empathy, understanding the nuances

of language and ethical considerations.

Challenges of AI in Journalism: Automation in content production faces challenges, including limitations in interpreting unstructured data and the difficulty in replicating human analysis, flexibility and creativity.

Table 1: Summary of conclusions from the state of the art.

| Title and Reference | Main conclusions |
|---|---|
| Using computational tools to support journalists’ creativity (Franks et al., 2022) | Lack of digital tools to support journalists’ creativity. |
| How Artificial Intelligence Helped Me Investigate Textbook Shortages (Broussard, 2014) | AI offers interactive assistants, but has no concrete evidence of supporting journalists’ creativity. |
| Does Artificial Intelligence Help Journalists: A Boon or Bane? (Dhiman, 2023) | AI can automate aspects such as text analysis, fact-checking, and even news production. Algorithms or models may be biased. Ethical considerations and human oversight are needed when using these technologies. |
| Algorithmic Journalism—Current Applications and Future Perspectives (Kotenidis and Veglis, 2021) | A crucial limitation of AI is its reliance on structured data. AI lacks analytical thinking, flexibility and creativity. Some results can be insignificant or erroneous. |
| Automated journalism: The effects of AI authorship and evaluative information on the perception of a science journalism article (Lermann Henestrosa et al., 2023) | Readers tend to attribute similar credibility or trust to AI-generated news content as to human-written news. |
| At the crossroads of logics: Automating newswork with artificial intelligence—(Re)defining journalistic logics from the perspective of technologists (Sirén-Heikel et al., 2023) | Integration of AI technologies into news organizations impacts how work is organized and reshapes journalism. Participants in the study, representing different backgrounds, share a presupposition that technologists and journalists occupy separate fields of logic. AI technologies in news organizations require interaction between the logics of journalism and technologists, leading to competition and assimilation of logics. The study identifies a shared theory of rationalization, a frame of optimization, and a narrative of misinterpretation among the companies involved in developing AI solutions for journalism. |

Audience Perception of AI-Generated Texts:

Studies on the perception of AI-generated texts indi-

cate that the presentation of information is crucial for

credibility, regardless of whether it is produced by hu-

mans or algorithms. Although participants recognize

AI-generated writing as less ”human”, this does not

affect their evaluation of the message.

Impact of AI on News Organizations: The incor-

poration of AI technologies into news organizations

influences the way work is organized and transforms

journalism. The interaction between the logics of

journalism and technology generates competition and

assimilation of logics.

Potential Use of AI in Journalism: AI is seen

as a partner in the journalistic process, capable of

optimizing tasks and freeing journalists to focus on

more complex aspects of the job. Applications of

AI include content recommendation, automatic sum-

maries, data analysis and assistance with technically

challenging stories.

Overall, AI is perceived as a promising tool for

simplifying journalistic processes, but there is an em-

phasis on the need for ethical considerations and hu-

man supervision when using these technologies.

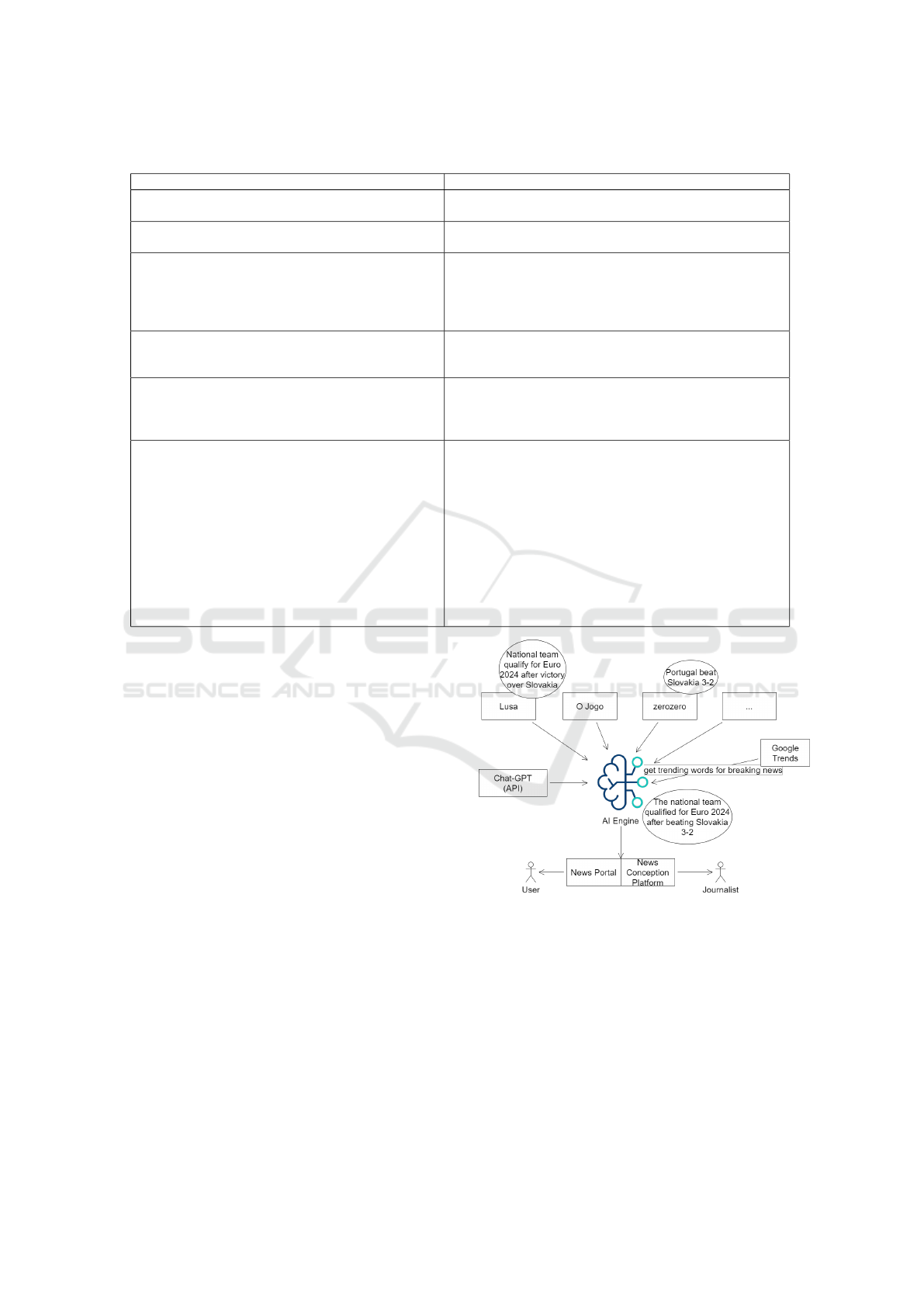

Figure 2: General architecture of the proposed project.

5 CONCLUSION AND FUTURE WORK

In this article we have reviewed the state of the art related to AI-based tools for helping journalists in their daily work. A lack of tools for supporting journalists’ creativity, especially in creating news content, has been identified. Also, a number of existing problems must be solved in order to help journalists or even an entire newsroom. The review faced some difficulties because, in general terms, artificial intelligence and large language models are a recent subject, which is why there are not many articles available on it. In other words, the field of journalism with artificial intelligence is being, and will continue to be, intensively explored.

This work has also set the basis for creating an

AI-based news generating platform, which would be-

come a tool for journalists, aiding in detecting news

trends and compiling texts from reliable sources that

would be delivered to the journalist for review and fi-

nalization before sending it to a news editorial office

or a news portal. Figure 2 shows the proposed high-

level architecture. As future work, we intend to con-

tinue this project, now moving on to the engineering

and development of the platform itself.
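The review-and-finalization step mentioned above can be illustrated with a small Python sketch in which a generated draft can only be published after a journalist approves it. This is a hypothetical sketch and not part of the proposed architecture: the `Status` values, the `NewsDraft` type and the `publish` function are assumptions introduced for illustration only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    GENERATED = "generated"  # produced by the platform, not yet reviewed
    APPROVED = "approved"    # accepted by a journalist
    REJECTED = "rejected"    # discarded by a journalist


@dataclass
class NewsDraft:
    headline: str
    body: str
    status: Status = Status.GENERATED

    def edit(self, headline: Optional[str] = None, body: Optional[str] = None) -> None:
        """Apply the journalist's changes; any edit requires a fresh approval."""
        if headline is not None:
            self.headline = headline
        if body is not None:
            self.body = body
        self.status = Status.GENERATED

    def approve(self) -> None:
        self.status = Status.APPROVED

    def reject(self) -> None:
        self.status = Status.REJECTED


def publish(draft: NewsDraft) -> str:
    """Return the text to publish, refusing drafts that were not approved."""
    if draft.status is not Status.APPROVED:
        raise PermissionError("only drafts approved by a journalist can be published")
    return f"{draft.headline}\n\n{draft.body}"
```

Resetting the status on every edit is one possible design choice; it keeps the journalist's approval tied to the exact text that is sent to the editorial office or news portal.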

ACKNOWLEDGEMENTS

This contribution has been developed in the context of Project “TEXP@CT – Pacto de Inovação para a Digitalização do Têxtil e Vestuário”, funded by PRR through measure 02/C05-i01/2022 of IAPMEI - Agency for Competitiveness and Innovation. For improving the manuscript’s text, some AI-based tools have been used, such as Google Translator and Writefull.

REFERENCES

Broussard, M. (2014). How artificial intelligence helped me investigate textbook shortages. American Journalism Review.

ChatGPT. ChatGPT. Available at https://chat.openai.com/.

Cruz, E. F. and Rosado da Cruz, A. M. (2020). Design science research for IS/IT projects: Focus on digital transformation. In 15th Iberian Conference on Information Systems and Technologies (CISTI 2020).

Dhiman, B. (March 24, 2023). Does artificial intelligence help journalists: A boon or bane? SSRN Electronic Journal. http://dx.doi.org/10.2139/ssrn.4401194.

DocumentCloud. DocumentCloud. Available at www.documentcloud.org.

Franks, S., Wells, R., Maiden, N., and Zachos, K. (2022). Using computational tools to support journalists’ creativity. Journalism, 23(9):1881–1899.

Google (2024). Search engine optimization (SEO) starter guide. Available at https://developers.google.com/search/docs/fundamentals/seo-starter-guide.

Kotenidis, E. and Veglis, A. (2021). Algorithmic journalism—current applications and future perspectives. Journalism and Media, 2:244–257.

Lermann Henestrosa, A., Greving, H., and Kimmerle, J. (2023). Automated journalism: The effects of AI authorship and evaluative information on the perception of a science journalism article. Computers in Human Behavior, 138:107445.

Nelson, J. L. (Jan. 2017). Is ‘fake news’ a fake problem? Columbia Journalism Review. Available at https://www.cjr.org/analysis/fake-news-facebook-audience-drudge-breitbart-study.php.

NewsBlur. NewsBlur. Available at https://www.newsblur.com.

Sirén-Heikel, S., Kjellman, M., and Lindén, C.-G. (2023). At the crossroads of logics: Automating newswork with artificial intelligence—(re)defining journalistic logics from the perspective of technologists. Journal of the Association for Information Science and Technology, Special Issue: Artificial Intelligence and Work, 74(3):345–366.
