Learning Analytics Support in Higher-Education: Towards a

Multi-Level Shared Learning Analytics Framework

Michael Vierhauser¹ ᵃ, Iris Groher² ᵇ, Clemens Sauerwein¹ ᶜ, Tobias Antensteiner¹ ᵈ and Sebastian Hatmanstorfer²

¹ University of Innsbruck, Department of Computer Science, Innsbruck, Austria

² Johannes Kepler University Linz, Institute of Business Informatics, Software Engineering, Linz, Austria

Keywords:

Assurance of Learning, Learning Analytics, Learning Objectives, Competency-Based Education.

Abstract:

Assurance of Learning and Competency-Based Education are increasingly important in higher education, not

only for accreditation or transfer of credit points. Learning Analytics is crucial for making educational goals

measurable and actionable, which is beneficial for program managers, course instructors, and students. While

universities typically have an established tool landscape where relevant data is managed, information is typ-

ically scattered across various systems with different responsibilities and often only limited capabilities for

sharing data. This diversity, however, significantly hampers the ability to analyze data, both on the course

and curriculum level. To address these shortcomings and to provide program managers, course instructors,

and students with valuable insights, we devised an initial concept for a Multi-Level Shared Learning Analytics

Framework to provide consistent definition and measurement of learning objectives, as well as tailored infor-

mation, visualization, and analysis for different stakeholders. In this paper, we present the results of initial

interviews with stakeholders, devising core features. In addition, we assess potential risks and concerns that

may arise from the implementation of such a framework and data analytics system. As a result, we identified

six essential features and six main risks to guide further requirements elicitation and development of our pro-

posed framework.

1 INTRODUCTION

In higher education, particularly at the university

level, assessment of students’ performance, system-

atic analysis of learning outcomes, and quality assur-

ance of teaching and learning material have become

widely adopted practices. Such activities even become mandatory when study programs apply for accreditation (e.g., AACSB¹ for business and accounting programs), which typically requires a set of well-established Learning Objectives on program-level

and a course syllabus with an Assurance of Learning

(AoL) system in place (Stewart, 2021). These objec-

tives describe the competencies in terms of skills and

knowledge (Kumar et al., 2023), learners (i.e., students) should have acquired after successfully completing the course (Vasquez et al., 2021).

ᵃ https://orcid.org/0000-0003-2672-9230

ᵇ https://orcid.org/0000-0003-0905-6791

ᶜ https://orcid.org/0009-0009-9464-5080

ᵈ https://orcid.org/0000-0001-5513-1073

¹ https://www.aacsb.edu

In addition to meeting accreditation requirements,

AoL serves as an effective quality assurance pro-

cess for assessing the effectiveness of individual stu-

dents, specific courses, and entire programs. To fa-

cilitate accurate evaluation, proper and well-defined

measurements, as well as respective guidelines for

improvements, need to be established. This requires

support for the structured capturing and definition

of metrics and evaluation data at both the program

and the individual course level. Moreover, this con-

tributes to the principle of constructive alignment,

which emphasizes the alignment of Learning Objec-

tives and teaching methods with the corresponding

assessment (Mimirinis, 2007). Following this princi-

ple, for example, Learning Objectives need to be de-

fined and subsequently linked on the program level

as well as course level. Consequently, assessments

need to be performed in a way so that student performance, with respect to the defined Learning Objectives, can be adequately measured, typically requiring the aggregation and analysis of data from various systems. Furthermore, course enrollment data and student information may reside in a dedicated university-wide, centrally managed information system, whereas course data might be managed by different departments in dedicated Learning Management Systems (LMS), making it hard to create meaningful analysis, particularly on the program level.

Vierhauser, M., Groher, I., Sauerwein, C., Antensteiner, T. and Hatmanstorfer, S.

Learning Analytics Support in Higher-Education: Towards a Multi-Level Shared Learning Analytics Framework.

DOI: 10.5220/0012744400003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 1, pages 635-644

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Furthermore, to exacerbate the situation, Learn-

ing Objectives are often defined by different stake-

holders for different goals and purposes. Program-

level objectives are typically managed by program

managers, while course-level objectives are defined

by lecturers responsible for the individual courses.

Knowledge about if and how course-level objectives

do in fact contribute to program-level objectives is

often overlooked and thus not captured (Lakhal and Sévigny, 2015). To draw meaningful conclusions,

collected data needs to be analyzed to identify the

skills and knowledge gained in the course and poten-

tial improvement areas, for example with respect to

the didactic principles used or the course material provided (Bakharia et al., 2016). Program managers, on

the other hand, might be particularly interested in his-

torical enrollment data and cohort analysis to identify

trends and plan courses accordingly, while instructors

may seek to uncover knowledge gaps to improve their

course setup, the material provided, or time spent on

certain topics (Divjak et al., 2023). This calls for dedi-

cated and customized views and visualizations of col-

lected data, while precautionary measures need to be

taken so that only eligible stakeholders have access to

certain information (Pardo and Siemens, 2014).

As part of our ongoing work in the area of Learn-

ing Analytics (Clow, 2013; Khalil et al., 2022), we

have created tools, scripts, and applications for individual courses for basic analysis (e.g., analysis of

students’ competencies in programming classes), and

therefore gained additional information beyond sim-

ple statistics provided by most LMS. However, keep-

ing all of these up-to-date with ever-changing settings

and exercise formats has quickly turned into a cum-

bersome and time-consuming experience, reinventing

the wheel each semester for every new course analysis

we introduced. To address these issues, we have developed an initial concept of a Multi-Level Shared Learning Analytics Framework that offers extended analysis capabilities and tailored information for students, course instructors, and program managers.

Following a proper Software Engineering pro-

cess (Kotonya and Sommerville, 1998) for integrating

the framework in the existing landscape of university-

specific tools, workflows, and processes, in a first

step we conducted a series of scoping interviews to

(1) identify key stakeholders, and gain insights into

current pain points and needs of students, course in-

structors, and program managers. As part of these

interviews, we, furthermore, (2) collected high-level

requirements and features users would expect to be

provided by such a framework. Finally, we (3) col-

lected potential risks or negative side effects, and

what factors need to be considered when designing

such a framework that might threaten its acceptance

or readiness. Based on the results of the interviews,

we have identified six feature categories and six risk

groups that merit consideration in the development of

our envisioned framework. Accordingly, we further

discuss the findings and implications and lay out a

roadmap for further development and evaluation in a

university-wide process.

The remainder of this paper is structured as fol-

lows. In Section 2, we first present a motivating ex-

ample from two universities facing similar challenges

that led to the idea of creating a Multi-Level Shared

Learning Analytics Framework. We continue by de-

scribing the interview study we conducted with key

stakeholders in Section 3, followed by a discussion of

the results and insights in Section 4. Based on our

findings, we outline an initial roadmap for building

our framework in Section 5.

2 BACKGROUND AND

MOTIVATION

In this section, we first provide a motivating example

(cf. Section 2.1) and discuss related work in the area

of Learning Analytics and CBE (cf. Section 2.2).

2.1 Motivation for a Learning Analytics

Platform

In recent years, Competency-Based Education (CBE)

has gained significant traction at universities (Pluff

and Weiss, 2022; Long et al., 2020). CBE is an ed-

ucational model in which students pass courses that

are part of their selected programs by demonstrating

knowledge and skills (Vasquez et al., 2021). In these

courses, students are typically assessed based on their

ability to demonstrate specific competencies and, ide-

ally, receive a more personalized learning experience

tailored to their individual needs, preferences, and

abilities. In order to transition to CBE and effectively implement CBE across all programs, it is crucial to systematically define learning objectives for both study programs and individual courses.

EKM 2024 - 7th Special Session on Educational Knowledge Management

Learning Objectives are specific and measurable

goals that describe what students should know, or

be able to do by the end of a course or educational

program (Teixeira and Shin, 2020; Barthakur et al.,

2022). Learning Analytics, in this context, has been

established as a major factor that provides deep in-

sights and analysis (Atif et al., 2013; Klein et al.,

2019; Viberg et al., 2018). A key factor for success-

fully introducing competencies and CBE is the abil-

ity to measure learning outcomes, analyze data, and

draw conclusions to improve both students’ results

and curricula. As part of our own work in this area,

we have been directly engaged in the accreditation of

course programs in business informatics and business

administration, and have further introduced compe-

tencies and dedicated LOs in our undergraduate basic

programming courses.

However, as part of this effort, we have faced multiple challenges and pain points in defining consistent competencies across the courses of a study program and, more importantly, in creating meaningful analyses that go beyond “simple” course-level analysis. One of the first issues we encountered was the lack of interconnectivity between university systems, i.e., the inability to exchange and aggregate data across them, which complicates course analysis, planning, and budgeting. For example, in order to create a meaningful gender analysis of

course and exam results we had to manually export

student data from the university management system

and exam data from our LMS and merge everything in

an Excel spreadsheet, which we, at some point, auto-

mated using various self-written Python scripts. Sim-

ilar problems arose when trying to collect informa-

tion about students progressing over several semesters

to get insights on how to plan our upcoming courses

(particularly with regard to slots we need to offer and

course instructors we need to budget). During discus-

sions with colleagues from other departments and uni-

versities, we found that many have encountered com-

parable hurdles when attempting to implement Learn-

ing Analytics that go beyond the limited functions

provided by current tools. Even though the specific

tools (e.g., LMS and university information systems)

are different, challenges remain largely the same.
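The manual export-and-merge step described above can be illustrated with a minimal sketch. It assumes two hypothetical CSV exports, one from the university management system (student ID and gender) and one from the LMS (student ID and exam score). All column names and values are invented for illustration and do not reflect the actual systems or the scripts mentioned in the text.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical export from the university management system.
STUDENTS_CSV = """student_id,gender
s1,f
s2,m
s3,f
s4,m
"""

# Hypothetical export from the LMS (s5 has no matching student record).
EXAMS_CSV = """student_id,exam_score
s1,80
s2,70
s3,90
s5,50
"""


def mean_score_by_gender(students_csv: str, exams_csv: str) -> dict:
    """Join both exports on student_id and average exam scores per gender."""
    gender_of = {
        row["student_id"]: row["gender"]
        for row in csv.DictReader(io.StringIO(students_csv))
    }
    scores = defaultdict(list)
    for row in csv.DictReader(io.StringIO(exams_csv)):
        gender = gender_of.get(row["student_id"])
        if gender is None:
            continue  # skip exam records without a matching student record
        scores[gender].append(float(row["exam_score"]))
    return {gender: mean(values) for gender, values in scores.items()}


result = mean_score_by_gender(STUDENTS_CSV, EXAMS_CSV)
print(result)
```

Even in this small form, the sketch shows why the step is fragile: the join key, the column names, and the handling of unmatched records all have to be agreed upon for each pair of systems.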

2.2 Related Work

Learning Analytics is driven by the ever-growing

availability of data and the rise of online learning and

corresponding learning platforms that allow tracking students and collecting vast amounts of learning-related information (Ferguson, 2014). In recent work,

Tsai et al. (Tsai et al., 2020) have identified trends and

barriers in applying Learning Analytics in higher ed-

ucation. Similar to our intentions, “improving student

learning performance” and “improving teaching excellence” rank among the most important driving factors. With regard to skill descriptions as part of LA activities, Kitto et al. (Kitto et al., 2020) have explored

the use of skills taxonomies establishing mappings

between different subject descriptions using natural

language processing. This aspect is also highly rele-

vant in the context of our framework, helping to not

only standardize the curricula formats, but also auto-

mate the mapping. Focusing on curriculum analytics,

Hilliger et al. (Hilliger et al., 2020) have created an “Integrative Learning Design Framework” and accompanying tool support. Their work focuses on instructors and curriculum managers as primary stakeholders

and supports program-level decision-making. Their

insights on these two levels, e.g., the need for “au-

tomated reports of competency attainment” are also

useful input for further requirements for our frame-

work.

Gašević et al. (Gašević et al., 2016) conducted a

large-scale study on LMS usage and student perfor-

mance. One of their main findings was that too gen-

eralized models for academic success used in Learn-

ing Analytics may not improve the quality of teach-

ing, and that more course-specific models could be

beneficial. Moreover, Ifenthaler and Yau (Ifenthaler

and Yau, 2020) have conducted a systematic litera-

ture review, including 46 publications on Learning

Analytics support in higher education. Similar to

our anecdotal evidence, they concluded that standardized measures and visualizations are needed that can

be integrated into existing digital learning environ-

ments. Kumar et al. (Kumar et al., 2023) suggest a

model for computer science curricula that integrates

knowledge and competency models synergistically.

Their work focuses on both ends of the learning spec-

trum, facilitating on the one hand teaching and on the

other hand assessment. Consequently, Kumar et al.’s

model offers both “an epistemological and a teleolog-

ical perspective” on the subject of computer science,

thereby guiding ongoing specification and refinement

of our proposed Multi-Level Shared Learning Analyt-

ics Framework.


[Figure 1 content: a Shared Learning Analytics Platform that aggregates information across three levels. Student-Level (Students: track overall learning progress, track individual course progress; student competences), Course-Level (Course Instructor: validate course competences, monitor learning progress; course competences), and Program-Level (Program Manager: aggregated course results, cohort analysis; program competences). External data sources: University Information System, Course Enrollment System, Learning Management System (LMS), Examination Management System.]

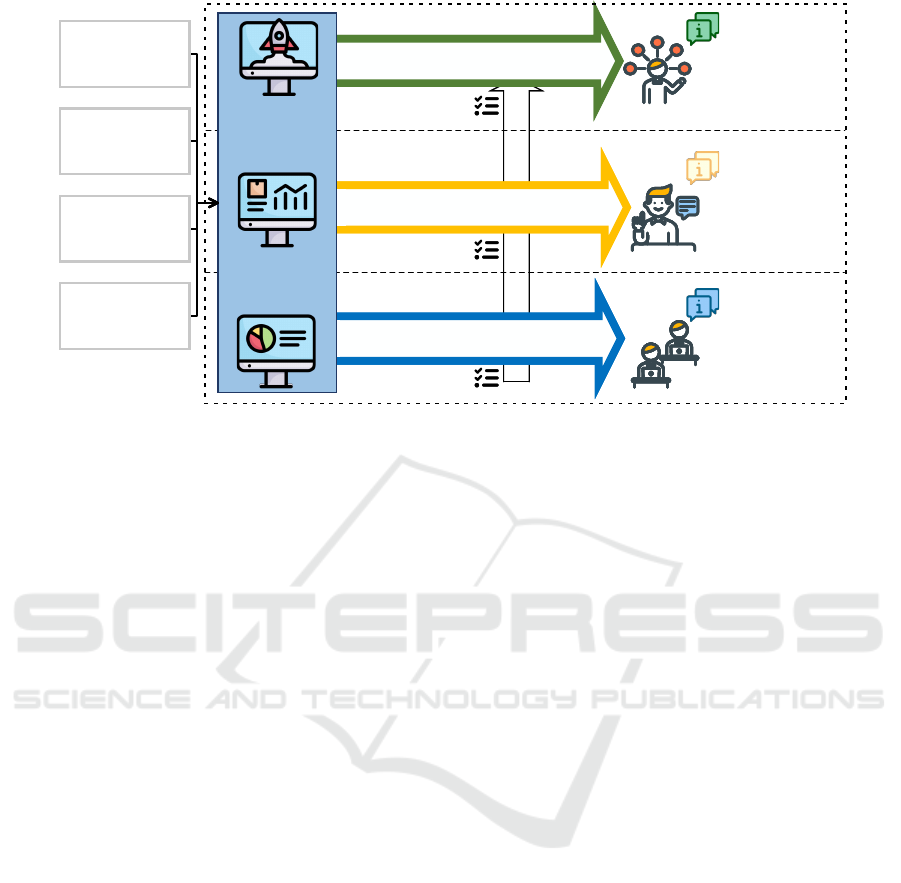

Figure 1: Our envisioned framework with 3 levels (i.e., student, course instructor, program manager) with customized visualization and analysis capabilities.

3 INTERVIEWS

As a first step towards collecting core requirements

and identifying additional key stakeholders, we con-

ducted a series of initial semi-structured interviews

with participants from two different universities in

Austria. The goal was to gain insights and a deeper

understanding of the current situation, pain points,

and high-level requirements and features of such a

framework. Moreover, we sought to identify poten-

tial risks when introducing such advanced analytics

capabilities that process potentially sensitive data.

In the following, we provide details about our

proposed framework (cf. Section 3.1), the interview

setup (cf. Section 3.2) and results (cf. Section 3.3).

3.1 Conceptual Framework

Based on the identified shortcomings and issues we

have observed, we developed an initial concept of

a Multi-Level Shared Learning Analytics Framework

that aims to assist both program managers and course

instructors. In addition, our proposed framework

should provide students with information about their

study and learning progress. Fig. 1 provides an

overview of our envisioned framework, depicting its

three main levels: At the bottom level, individual stu-

dents should be supported (Rainwater, 2016), for ex-

ample, throughout the course of a semester to keep

track of their learning progress, whereas at the course

level course instructors should be able to keep track

of their class and identify potential shortcomings or

weak points early on. At the top level, a program

manager in charge of one or more programs needs a

comprehensive, literally high-level view of multiple

courses, with aggregated metrics and LOs.

• The Student-Level. Learning Analytics not only

provides benefits to teachers and instructors, but can

also provide valuable information to students. Simi-

lar to an LMS where students have access to course

materials, students can explore the competencies of

each individual course in which they are enrolled. Moreover, they receive immediate feedback about the competencies they have already gained, the assessments they have successfully completed, assessment results, and upcoming tasks.

• The Course-Level. Course instructors use the

framework to define and manage course objectives

and link them to assignments and related teaching and

learning materials. This allows for a more detailed ex-

amination of the extent to which students are achiev-

ing each course competency. In addition, validation of

the coverage of all competencies in a given

course is provided. Furthermore, to support a top-

down analysis, instructors link their individual course

objectives to the overall program-level objectives.

• The Program-Level. At the highest level, program

managers use the framework to define and manage

program objectives which, in turn, are linked to the

individual course objectives. They are provided with

a view that shows how each course in the program

contributes to these objectives. In addition, high-level

views and analysis of course results support identifying in which order students complete the courses and

what difficulties they face.

• Integration of External Data Sources. To provide

the above-mentioned analysis capabilities, different

external data sources need to be integrated, including

LMS as well as university management, course en-

rollment, and examination management systems. In

this way, LOs can be linked to teaching and learn-

ing material, homework assignments, or assessments.

Moreover, detailed access statistics can be presented

to course instructors.
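The linking of course-level objectives to program-level objectives and to assessments, as described for the course and program levels above, could be sketched as a small data model with two validation checks. This is only an illustrative sketch under assumptions: the class name, field names, and objective identifiers are hypothetical and not part of the proposed framework.

```python
from dataclasses import dataclass, field


@dataclass
class CourseObjective:
    """A course-level Learning Objective (all identifiers are hypothetical)."""
    objective_id: str
    course: str
    # Program-level objectives this course objective contributes to.
    program_objectives: set = field(default_factory=set)
    # Assignments or exams in which this objective is assessed.
    assessments: set = field(default_factory=set)


def uncovered_program_objectives(program_objectives, course_objectives):
    """Return program-level objectives that no course objective is linked to."""
    covered = set()
    for objective in course_objectives:
        covered |= objective.program_objectives
    return set(program_objectives) - covered


def unassessed_course_objectives(course_objectives):
    """Return IDs of course objectives that are not linked to any assessment."""
    return {o.objective_id for o in course_objectives if not o.assessments}


program_los = {"PLO1", "PLO2", "PLO3"}
course_los = [
    CourseObjective("CLO-A1", "Programming 1", {"PLO1"}, {"homework-1", "exam"}),
    CourseObjective("CLO-A2", "Programming 1", {"PLO1", "PLO2"}, set()),
    CourseObjective("CLO-B1", "Databases", {"PLO2"}, {"project"}),
]

missing = uncovered_program_objectives(program_los, course_los)
unassessed = unassessed_course_objectives(course_los)
print(missing, unassessed)
```

In this example, one program-level objective has no contributing course, and one course objective is never assessed; both are the kind of gap a coverage validation at the course or program level would surface.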

3.2 Methodology

The objective of the preliminary scoping interviews

was to gather the prerequisites for our proposed

framework while obtaining explicit comprehension

of the needs and requisites of stakeholders at differ-

ent levels. Therefore, we specifically selected par-

ticipants representing stakeholders at three different

levels, i.e., students, course instructors, and program

managers (cf. Fig. 1).

One researcher created an initial set of questions,

which was then discussed among the authors, and the

final questionnaire was divided into three parts, with

a total of 14 open-ended questions. In the first part,

we asked participants about their current practices and

tasks they conduct specific to their respective roles

and if any tool support exists. After this, we briefly

introduced the concept of our Multi-Level Shared

Learning Analytics Framework (cf. Section 3.1 and

Fig. 1). We intentionally refrained from introducing

the framework before part one to avoid any bias when

discussing the current state.

The second part focused on the framework re-

quirements and features, as well as potential issues or

risks that may arise when introducing such a frame-

work. Finally, the third part was dedicated to the indi-

vidual stakeholder’s level and what – as well as how –

they would use the proposed framework in their daily

professional life.

In total, we conducted interviews with twelve par-

ticipants, i.e., four per level, with two at each of the

two universities. For the student level, we covered

a broad spectrum of both bachelor and master stu-

dents of Business Informatics as well as Computer

Science. For the course level, we interviewed three

senior lecturers with several years of teaching expe-

rience, and one Assistant Professor. Finally, for the

program level, we interviewed three professors, who

also serve (or have served) as program managers for

various programs ranging from bachelor to PhD pro-

grams, and a program/accreditation manager. Each

interview was conducted by at least one researcher in

person or via Zoom, lasting approximately 45 minutes

to one hour. After asking for consent, we recorded all

interviews and subsequently transcribed them using

OpenAI’s Whisper speech-to-text service (OpenAI,

2023). All interviews were conducted and recorded

in German and later partially translated during coding

(see below).

After the transcription of the interviews, we used

open coding to analyze them for relevant information

about (1) features, (2) stakeholders, or (3) potential

risks and challenges (Seaman, 1999). For this qualitative analysis of the interviews, we used QCAmap (Fenzl and Mayring, 2023), an open-source tool

for systematic text analysis. Relevant statements were

then translated into English, and two researchers sub-

sequently created an initial grouping of the codes. In

the second step, two additional researchers reviewed

the initial grouping, conflicts were resolved, and the

final grouping was established. All researchers are

co-authors of this paper with experience in teaching,

Learning Analytics, and interview studies.

3.3 Interview Results

After multiple iterations of discussion among the researchers, a final set of feature and risk categories emerged. In total, we identified twelve categories: six pertaining to potential features and framework requirements (cf. F01 to F06 in Table 1), and another six concerning potential risks and challenges (cf. R01 to R06 in Table 1). An overview of the final set of categories and the number of constituent codes is provided in Table 1.

Statements covering features are split fairly evenly across five categories; the remaining category, “Course Harmonization”, was mentioned only four times. For the stated risks, two categories stand

out. First, (general) “Platform Usage and Operation”

was mentioned 21 times, and second, privacy and data

protection issues were addressed a total of 16 times.

In total, we included 238 coded statements, which

are distributed across the twelve groups F01 to F06

and R01 to R06. As 22 statements were not explic-

itly assignable to one group, but fit into two groups,

we assigned them to both. In addition, for each code,

we also attached the relevant levels to identify cross-

cutting features and risks.

In the following, we discuss each of the feature

and risk categories in more detail.


4 DISCUSSION

In the following section, we provide an overview of

the results from our interviews, first discussing the re-

sulting feature categories (cf. Section 4.1) and second

the risks we have identified (cf. Section 4.2).

4.1 Feature Categories

Based on our analysis and coding, we uncovered six

main categories of features that were mentioned dur-

ing the interviews. The five features Curriculum Man-

agement Support, Quality Assurance, Study Planning,

Study Statistics, and Course Harmonization cover

specific framework functionalities, whereas System

Integration collects functionality not directly provided by our envisaged framework, yet vital for its

intended application.

• Curriculum Management Support. One major

group of requirements revolves around the curriculum

and curriculum-related activities that are necessary to

manage and maintain a study program. While such

activities fall within the responsibilities of program

managers, interviewees have mentioned several as-

pects where our proposed framework could also sup-

port course instructors as well as students as a posi-

tive side effect. For example, tracing and refining ex-

isting program-level Learning Objectives could help

them to better plan their individual courses, or a single

view on university processes can alleviate the (admin-

istrative) burden of credit transfers, for both students

and course instructors alike (e.g., Learning Objective-

related recognition rules responsibilities). This con-

firmed our assumption that dependencies across dif-

ferent levels of our framework are of particular impor-

tance, and that functionality on one level could also be

beneficial for other stakeholders not directly involved

in a particular task.

• Quality Assurance. Several requirements cover

topics related to quality assurance, such as support

for accreditation of study programs, and consolidated

information necessary for this process. Furthermore,

participants mentioned the importance of perform-

ing cross-course analysis, as well as cohort and en-

rollment number analysis. The framework should

thus provide different metrics from dropout rates in

courses, to a detailed display of already completed

introductory courses. It should also make Learning

Objectives “quantifiable”, facilitating measurability,

and identifying problematic Learning Objectives. An

identification of lower-performing students would al-

low for early intervention strategies. Moreover, in-

terviewees mentioned that “(the framework) can re-

ally make Learning Objectives measurable and oper-

ationalized, so that they are not only just defined.”

• Study Planning. Another group of requirements

is related to the organization and planning of courses

for study programs. Scheduling courses and exams

typically falls within the responsibility of program

managers, and the framework could help them opti-

mize resource utilization by suggesting the optimal

size and timing for specific classes. Participants men-

tioned that dependencies between courses and their

objectives can be visualized, which might help them

to schedule courses in a sequence that maximizes

learning outcomes while minimizing schedule con-

flicts. This can also foster exchange or coordina-

tion between teaching staff regarding the contents

to be taught, or material to be provided in courses.

The study planning feature is also relevant to students. The students interviewed indicated that creating a customized study schedule based on their enrolled classes, taking into account due dates for assignments and exams, would be very helpful. In addition, students stated that they might also like to monitor their

own study progress by visualizing course dependen-

cies or prerequisites to make more informed decisions

about which courses to take in future semesters.

• Study Statistics. Particularly, the group of course

instructors suggested that the use of statistical anal-

ysis can provide sophisticated information about stu-

dents and their learning progress. For example, key

performance indicators (KPIs) to measure homework

assignments, and aggregated course and grade statis-

tics for students might be beneficial. Moreover,

potential features of the framework highlighted by

instructor respondents include linking competencies to homework or exam questions and comparing

homework or learning objectives. Similarly, students

mentioned that measuring or checking their learning

progress, statistically analyzing their learning goals,

and gaining insight into their learning progress would

be valuable in assessing their current learning perfor-

mance.

• Course Harmonization. Program managers, in-

structors, and students unanimously identified trans-

parency as a crucial feature of the envisioned frame-

work, highlighting the potential of our framework to

enhance course evaluation and establish uniform as-

sessment criteria and standards across classes. For

example, one participant mentioned that it would be

good to have “[...] uniform and somewhat compara-

ble grading criteria for different classes”.

• System Integration. Due to the multitude of tools

and heterogeneous platforms used at the program,

course, and student level, it became evident that sys-

tem integration was the most frequently cited fea-

ture. This requires interface functionalities to other


systems (e.g., LMS, and university information sys-

tems) or the ability to (automatically) import/export

data relevant for analysis. On the other hand, cor-

responding communication functionalities have also

been demanded to enable uniform communication be-

tween lecturers and students, or vice versa.
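To make the idea of “quantifiable” Learning Objectives from the Quality Assurance category more concrete, the following sketch computes a simple attainment rate per objective and flags objectives with low attainment. The metric, the thresholds (`pass_ratio`, `min_rate`), and the sample scores are assumptions chosen for illustration; the interviews do not prescribe a specific formula.

```python
def attainment_rates(results, pass_ratio=0.6):
    """Share of students per objective whose score ratio reaches pass_ratio.

    `results` maps an objective ID to a list of (achieved, maximum) point
    pairs, one pair per student, taken from assessments linked to that
    objective. Objectives without any scores are skipped.
    """
    rates = {}
    for objective, student_scores in results.items():
        if not student_scores:
            continue
        attained = sum(
            1 for achieved, maximum in student_scores
            if achieved / maximum >= pass_ratio
        )
        rates[objective] = attained / len(student_scores)
    return rates


def problematic_objectives(rates, min_rate=0.5):
    """Objectives whose attainment rate falls below min_rate, sorted by ID."""
    return sorted(obj for obj, rate in rates.items() if rate < min_rate)


# Hypothetical per-student points for two Learning Objectives.
results = {
    "LO1": [(8, 10), (7, 10), (9, 10), (7, 10)],
    "LO2": [(3, 10), (5, 10), (9, 10), (4, 10)],
}

rates = attainment_rates(results)
flagged = problematic_objectives(rates)
print(rates, flagged)
```

A single ratio like this is deliberately simplistic; in line with the interpretation risks raised by participants, such a number would need to be read together with the course context rather than in isolation.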

Concerning the three levels of our proposed

framework (cf. Fig. 1) we were able to identify spe-

cific activities and hence requirements for each of the

anticipated user groups. For the program level, curriculum management and study planning-related activities were mentioned most frequently (cf. Curriculum Management Support and Study Planning features). While tools currently exist that support (parts of) their work in this area, spreadsheets and manual work still appear to be prevalent. For the group of

course instructors and students, we could identify a

broad level of planning and analysis activities. The

unified view on several courses was deemed helpful

by both groups and could help either group to gain

additional insights into their teaching and study activ-

ities.

4.2 Identified Risks

Besides the desired features, the second aspect of

the interviews was concerned with identifying poten-

tial risks and negative implications such a framework

could exhibit.

Table 1: Resulting Feature and Risk categories.

ID    Category Description           Count
Features
F01   Curriculum Management          51
F02   Quality Assurance              49
F03   Study Planning                 56
F04   Study Statistics               44
F05   Course Harmonization           4
F06   System Integration             56
Risks
R01   Preconditions not met          8
R02   Negative side-effects          4
R03   Interpretation of Data         4
R04   Privacy                        16
R05   Platform Development           6
R06   Platform Usage & Operation     21

As the envisioned framework potentially combines and manages electronic data about students and their study progress, data protection and privacy were consistently cited among the main risks by all three groups. Depending on the level of the respondent, different aspects of data protection were considered important. For example, access to data should be strictly role-based, even though the data is scattered across different systems and needs to be shared accordingly. This might pose a major challenge, as it would mean standardizing access controls and role management, and even establishing a decentralized security infrastructure.
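To make the role-based access requirement more tangible, the following is a minimal Python sketch and not part of the framework itself. All names (`Role`, `GradeRecord`, `visible_view`) and the minimum group size of three are assumptions chosen purely for illustration. The idea shown is that students see only their own records, while instructors and program managers receive aggregated values only.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    # The three stakeholder levels discussed in the paper.
    STUDENT = "student"
    INSTRUCTOR = "instructor"
    PROGRAM_MANAGER = "program_manager"


@dataclass(frozen=True)
class GradeRecord:
    # Hypothetical record shape; real systems will differ.
    student_id: str
    course_id: str
    grade: float


# Assumed threshold: aggregates over fewer records are withheld.
MIN_GROUP_SIZE = 3


def visible_view(role, user_id, records, taught_courses=frozenset()):
    """Return only the data the given role may see."""
    if role is Role.STUDENT:
        # Students see their own individual records only.
        return [r for r in records if r.student_id == user_id]
    if role is Role.INSTRUCTOR:
        # Instructors are limited to the courses they teach.
        records = [r for r in records if r.course_id in taught_courses]
    # Instructors and program managers get per-course averages, never raw rows.
    by_course = {}
    for r in records:
        by_course.setdefault(r.course_id, []).append(r.grade)
    return {
        course: round(sum(grades) / len(grades), 2)
        for course, grades in by_course.items()
        if len(grades) >= MIN_GROUP_SIZE
    }
```

In a setting where data remains distributed across several systems, a check of this kind would have to be enforced consistently by each system, which is the standardization challenge noted above.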

Furthermore, participants identified data misinter-

pretation as another major risk. Learning Analytics

platforms collect and analyze large amounts of data

to provide insights into student performance, commitment, and Learning Objectives. However, the interpretation of this data can be challenging. Oversimplified data visualizations and unsuitable metrics may inadvertently introduce biases, leading to unfair or inaccurate assessments of student capabilities. Also, looking at certain KPIs in isolation may

not capture the full context of a student’s learning

journey, potentially resulting in misleading conclu-

sions. Excessive dependency or focus upon KPIs was

stated as one specific risk: “result in [...] focusing too

much on KPIs, which are continuously checked, with

the purpose to have students pass a class, and push

them through - but they might lose interest in study-

ing/learning something new.” Without a profound un-

derstanding of the educational context, stakeholders

may make ill-informed decisions based on the pre-

sented data.

Another risk is related to how to use the avail-

able data, for example, the aggregated course infor-

mation or student performance. While such data can

improve quality and learning outcomes (cf. Quality

Assurance), over-reliance on statistical data and KPI-

related metrics without critical reflection could hinder

creativity and individual teaching approaches. Furthermore, lecturers in particular expressed the fear that aggregated information could serve as a tool for monitoring them; it therefore has to be clearly defined which information can be viewed by which stakeholders.

Concerning the framework’s utilization and func-

tionality, risks arise from potentially low acceptance

among stakeholders. Consequently, additional com-

mitment is warranted to overcome the complexity and

possible ambiguity of the framework. Furthermore,

it may not be perceived as having significant advan-

tages, as “it simply presents already available infor-

mation more streamlined”.

4.3 Threats to Validity

As with any study, our work is subject to a number of

threats to validity.

The number of study participants was limited,

which constitutes a threat to external validity. In total,

we interviewed twelve participants – four from each

level of the envisioned framework. However, all par-

ticipants had several years of experience within their

area of expertise, and we included participants from

two different universities.

To gain a broader understanding of detailed re-

quirements and needs in such a framework, further in-

terviews are required. Nevertheless, we are confident

that our initial interview study has captured a num-

ber of highly relevant features and requirements for

further discussion and refinement. While we have fo-

cused on the educational and learning needs of Com-

puter Science and Business Informatics, we believe

that our framework can be applied in a broader con-

text. To further confirm the generalizability of the fea-

tures, additional evaluation is needed, for example in

the form of a dedicated utility study of individual fea-

tures.

Concerning internal validity, in particular the analysis and interpretation of results, several researchers were involved in the process. The extracted interview data was extensively discussed among all researchers, and groupings, features, and respective risks were discussed until consensus was achieved.

5 ROADMAP

Our interviews have confirmed a pressing need for

additional support and tools to facilitate AoL at the

course and curriculum level. Furthermore, as we expected, privacy and (mis-)use of data were mentioned among the primary concerns, alongside the acceptance and adoption of yet another tool in practice.

One surprising takeaway from the interviews was that certain features we assumed would be beneficial for a particular group of stakeholders (e.g., course instructors) would be equally valuable for others, for example, for students, when presented (i.e., visualized) in a slightly different way. Another important aspect is the incorporation of the existing university processes into which the framework needs to be embedded.

While individual Learning Analytics solutions require

less “global” (i.e., university-wide) effort, connecting

and analyzing data across courses, or even study pro-

grams, needs to take into account existing roles and

their respective responsibilities (e.g., dean of study

who is in charge of overseeing several programs).

Based on our findings and observations from the in-

terviews, we have compiled three main tasks as part

of our next steps that will provide (1) a data protec-

tion concept and transparency guidelines, (2) an in-

formation model for relevant data and roles, and (3) a

reference architecture and implementation.

• Interfaces & Data. The ability to visualize and analyze data was deemed one of the key aspects. This in turn requires diverse interfaces and external data to be integrated. One of the first activities will be an in-depth analysis to identify key systems and the kind of information they can provide, and to derive an information model of relevant data.

• Data Protection & Privacy Concept. As interview participants have indicated clear concerns regarding data protection and the threat of data being

available to a (too) broad range of users, a key activity

– interconnected with the information model – is the

creation of a role and access concept guided by exist-

ing university processes and access rights. This will

allow us to specify what data is required and who will

be eligible to view certain information or statistical

analysis. In addition, to mitigate the fear of misuse,

transparency is a second key factor. We will work on

creating clear guidelines on what information is pro-

vided, how it is used, and how it can be used (e.g.,

only in anonymized and aggregated form).

• Learning Objective Definition & Management.

The effective management of learning objectives,

both on curriculum and on course level, is a central

part of our proposed framework. Learning Objec-

tives will be systematically defined using a standard-

ized taxonomy, such as Bloom’s Taxonomy (Thomp-

son et al., 2008; McNeil, 2011), to ensure clarity and

measurability. They will then be mapped to specific

learning activities and assessments, facilitating track-

ing and analytics.
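As an illustration of how Learning Objectives could be mapped to assessments, the following Python sketch shows one possible data structure. It is an assumption-laden example and not the information model of the framework: the class and function names are hypothetical, the six levels follow the revised Bloom's Taxonomy, and scores are assumed to be normalized to the range 0 to 1.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class BloomLevel(IntEnum):
    # Levels of the revised Bloom's Taxonomy, lowest to highest.
    REMEMBER = 1
    UNDERSTAND = 2
    APPLY = 3
    ANALYZE = 4
    EVALUATE = 5
    CREATE = 6


@dataclass
class LearningObjective:
    # Hypothetical fields; an actual information model may differ.
    objective_id: str
    description: str
    level: BloomLevel
    assessment_ids: list = field(default_factory=list)


def unassessed(objectives):
    """Return the IDs of objectives that have no mapped assessment."""
    return [o.objective_id for o in objectives if not o.assessment_ids]


def achievement(objective, scores):
    """Mean score over the mapped assessments that have a score, else None."""
    values = [scores[a] for a in objective.assessment_ids if a in scores]
    return sum(values) / len(values) if values else None
```

With such a mapping, an objective without any assessment can be flagged at design time, and an achievement value can be derived per objective once assessment scores are available.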

As part of these activities, we are planning to conduct focused workshops with stakeholders to perform a more in-depth requirements elicitation. Based on an initial proof-of-concept prototype, which we are currently building from the collected features, we will follow a participatory design approach (Trischler et al., 2018) to actively engage users from all three levels in all phases of the design. One concrete outcome of this second analysis will be a reference architecture of our framework that will serve as a blueprint for implementing interfaces and a concrete framework instance.

6 CONCLUSION

In this paper, we have presented findings from a se-

ries of preliminary interviews, with the main aim of

collecting requirements for a Shared Learning Ana-

lytics Framework. In summary, our investigation has

revealed essential insights into the characteristics and

potential hazards associated with such a framework.

We identified six fundamental features related to curriculum management, study planning, study statistics, quality assurance, course harmonization, and integration of other systems. Moreover, stakeholders highlighted six critical risks that could impede the adoption of such a framework, ranging from privacy concerns to development and maintenance challenges,

along with potential negative side effects. Building

upon these initial findings, our next steps involve con-

ducting focused stakeholder workshops to further re-

fine and extend our requirements and subsequently

derive a comprehensive design and reference archi-

tecture.

REFERENCES

Atif, A., Richards, D., Bilgin, A., and Marrone, M. (2013).

Learning analytics in higher education: a summary

of tools and approaches. In Proc. of the Ascilite-

australian society for computers in learning in ter-

tiary education annual conference, pages 68–72. Aus-

tralasian Society for Computers in Learning in Ter-

tiary Education.

Bakharia, A., Corrin, L., De Barba, P., Kennedy, G., Gašević, D., Mulder, R., Williams, D., Dawson, S., and Lockyer, L. (2016). A conceptual framework linking learning design with learning analytics. In Proc. of the 6th International Conference on Learning Analytics and Knowledge, pages 329–338.

Barthakur, A., Joksimovic, S., Kovanovic, V., Richey, M.,

and Pardo, A. (2022). Aligning objectives with as-

sessment in online courses: Integrating learning ana-

lytics and measurement theory. Computers & Educa-

tion, 190:104603.

Clow, D. (2013). An overview of learning analytics. Teach-

ing in Higher Education, 18(6):683–695.

Divjak, B., Svetec, B., and Horvat, D. (2023). Learning an-

alytics dashboards: What do students actually ask for?

In Proc. of the 13th International Learning Analytics

and Knowledge Conference, pages 44–56.

Ferguson, R. (2014). Learning analytics: drivers, develop-

ments and challenges. Italian Journal of Educational

Technology, 22(3):138–147.

Gašević, D., Dawson, S., Rogers, T., and Gasevic, D. (2016). Learning analytics should not promote one size fits all: The effects of instructional conditions in predicting academic success. The Internet and Higher Education, 28:68–84.

Hilliger, I., Aguirre, C., Miranda, C., Celis, S., and Pérez-Sanagustín, M. (2020). Design of a curriculum analytics tool to support continuous improvement processes in higher education. In Proceedings of the 10th International Conference on Learning Analytics and Knowledge, pages 181–186.

Ifenthaler, D. and Yau, J. Y.-K. (2020). Utilising learn-

ing analytics to support study success in higher edu-

cation: a systematic review. Educational Technology

Research and Development, 68:1961–1990.

Khalil, M., Prinsloo, P., and Slade, S. (2022). A comparison

of learning analytics frameworks: A systematic re-

view. In Proc. of the 12th International Learning An-

alytics and Knowledge Conference, pages 152–163.

Kitto, K., Sarathy, N., Gromov, A., Liu, M., Musial, K., and

Buckingham Shum, S. (2020). Towards skills-based

curriculum analytics: Can we automate the recogni-

tion of prior learning? In Proceedings of the 10th

International Conference on Learning Analytics and

Knowledge, pages 171–180.

Klein, C., Lester, J., Rangwala, H., and Johri, A. (2019).

Learning analytics tools in higher education: Adop-

tion at the intersection of institutional commitment

and individual action. The Review of Higher Educa-

tion, 42(2):565–593.

Kotonya, G. and Sommerville, I. (1998). Requirements en-

gineering: processes and techniques. Wiley Publish-

ing.

Kumar, A. N., Becker, B. A., Pias, M., Oudshoorn, M.,

Jalote, P., Servin, C., Aly, S. G., Blumenthal, R. L.,

Epstein, S. L., and Anderson, M. D. (2023). A com-

bined knowledge and competency (ckc) model for

computer science curricula. ACM Inroads, 14(3):22–

29.

Lakhal, S. and Sévigny, S. (2015). The aacsb assurance of learning process: An assessment of current practices within the perspective of the unified view of validity. The International Journal of Management Education, 13(1):1–10.

Long, C., Bernoteit, S., and Davidson, S. (2020).

Competency-based education: A clear, equitable path

forward for today’s learners. Change: The Magazine

of Higher Learning, 52(6):30–37.

McNeil, R. C. (2011). A program evaluation model: Us-

ing bloom’s taxonomy to identify outcome indicators

in outcomes-based program evaluations. Journal of

Adult Education, 40(2):24–29.

Mimirinis, M. (2007). Constructive alignment and learn-

ing technologies: Some implications for the quality of

teaching and learning in higher education. In Proc. of

the 7th IEEE International Conference on Advanced

Learning Technologies, pages 907–908. IEEE.

OpenAI (2023). Whisper. https://openai.com/research/whisper. [Online; Accessed 01-10-2023].

Pardo, A. and Siemens, G. (2014). Ethical and privacy prin-

ciples for learning analytics. British journal of educa-

tional technology, 45(3):438–450.

Pluff, M. C. and Weiss, V. (2022). Competency-based ed-

ucation: The future of higher education. New Models

of Higher Education: Unbundled, Rebundled, Cus-

tomized, and DIY, pages 200–218.

Rainwater, T. (2016). Teaching and learning in competency-

based education courses and programs: Faculty and

student perspectives. The Journal of Competency-

Based Education, 1(1):42–47.

Seaman, C. B. (1999). Qualitative methods in empirical

studies of software engineering. IEEE Transactions

on Software Engineering, 25(4):557–572.

Stewart, V. M. (2021). Competency-based education: Chal-

lenges and opportunities for accounting faculty. The

Journal of Competency-Based Education, 6(4):206–

210.

T. Fenzl and P. Mayring (2023). QCAmap. https://www.qcamap.org. [Online; Accessed 01-10-2023].

Teixeira, P. N. and Shin, J. C., editors (2020). Learning

Objectives, pages 1996–1996. Springer Netherlands,

Dordrecht.

Thompson, E., Luxton-Reilly, A., Whalley, J. L., Hu, M.,

and Robbins, P. (2008). Bloom’s taxonomy for cs

assessment. In Proc. of the Tenth Conference on

Australasian Computing Education-Volume 78, pages

155–161.

Trischler, J., Pervan, S. J., Kelly, S. J., and Scott, D. R.

(2018). The value of codesign: The effect of cus-

tomer involvement in service design teams. Journal

of Service Research, 21(1):75–100.

Tsai, Y.-S., Rates, D., Moreno-Marcos, P. M., Muñoz-Merino, P. J., Jivet, I., Scheffel, M., Drachsler, H., Kloos, C. D., and Gašević, D. (2020). Learning analytics in european higher education—trends and barriers. Computers & Education, 155:103933.

Vasquez, J. A., Marcotte, K., and Gruppen, L. D. (2021).

The parallel evolution of competency-based educa-

tion in medical and higher education. The Journal of

Competency-Based Education, 6(2):e1234.

Viberg, O., Hatakka, M., Bälter, O., and Mavroudi, A. (2018). The current landscape of learning analytics in higher education. Computers in human behavior, 89:98–110.
