Navigating the Landscape of Digital Competence Frameworks: A Systematic Analysis of AI Coverage and Adaptability

Barbara Wimmerᵃ, Irene Mayrᵇ and Thorsten Händlerᶜ

Ferdinand Porsche Mobile University of Applied Sciences (FERNFH), Austria

Keywords:

Competence Framework, Digital Competences, Systematic Mapping Study, Artificial Intelligence (AI),

Generative AI, Adaptability, Framework Comparison.

Abstract:

The rapidly evolving capabilities of generative artificial intelligence (AI) in understanding and generating

texts and images challenge the role of human competences in existing and redesigned processes and infras-

tructures in many domains. In addition to aspects such as automating complex tasks and decision-making

processes, the question arises as to which human competences are required to deal efficiently and confidently

with these newly emerging AI-driven opportunities, manifesting in the form of new tools, methods, processes and

infrastructures, including the design and use of hybrid human-AI ecosystems. A variety of digital competence

frameworks (DCFWs) is available to support practitioners from didactic and business contexts in specifying

and measuring such competences. In this paper, we systematically analyze established DCFWs and compare

the provided means to cope with the challenges from rapidly evolving generative AI. For this purpose, we

present the results of a systematic mapping study (SMS) based on 25 identified international DCFWs, focus-

ing on the degree of AI coverage and adaptability. The resulting structural overview and comparative analysis

provide orientation and aim to empower both individual practitioners and organizations to evaluate, select,

combine, contextualize, adapt and apply existing frameworks based on their individual application purposes.

1 INTRODUCTION

OpenAI’s launch of ChatGPT (OpenAI, 2022) at the

end of 2022 has brought attention (Johnson, 2022) to

large language models (LLM) and generative AI in

general. It also raised awareness of AI’s

impact on human competences (Shiohira, 2021). A

rapidly transforming AI landscape continuously im-

pacts and challenges society, economy and educa-

tion. As new tools and applications are emerging,

they not only represent technological advances, but

also raise critical questions about how these changes

will transform processes, infrastructures, and the role

of humans (Llaneras et al., 2023; Nature Machine

Intelligence, 2023). For instance, questions arise as to what extent practitioners and organizations are prepared for the effects of massive technological shifts on labour (Zarifhonarvar, 2023) and education (Chiu et al., 2023), and how research can support them in adapting accordingly.

ᵃ https://orcid.org/0009-0005-9547-2223

ᵇ https://orcid.org/0009-0009-0147-2185

ᶜ https://orcid.org/0000-0002-0589-204X

To address this issue, analyzing established Digital Competence

Frameworks (DCFW) could provide insights by elab-

orating their flexibility to adapt to new contexts in

general as well as their integration of AI. Therefore, this paper presents a comparative analysis of established DCFWs, aiming to provide orientation in an ever-evolving field that is trying to keep up with the pace of

technological development. In this endeavour, a sys-

tematic mapping study (SMS) was conducted to ex-

amine, compare and map a selection of 25 DCFWs in

an iterative process. Based on a structural overview of

DCFW characteristics, we further investigated criteria

for categorizing the DCFWs in terms of adaptability

for specifying competences and coverage of AI com-

petences. In particular, this paper provides the follow-

ing contributions:

1. We present a systematic overview and compari-

son of established digital competence frameworks

(DCFWs).

2. In addition, we report on the results of a compara-

tive analysis of the extent to which DCFWs (a) ad-

dress AI competences and (b) provide adaptability

for competence specification.

Wimmer, B., Mayr, I. and Händler, T.

Navigating the Landscape of Digital Competence Frameworks: A Systematic Analysis of AI Coverage and Adaptability.

DOI: 10.5220/0012752900003693

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 16th International Conference on Computer Supported Education (CSEDU 2024) - Volume 1, pages 653-667

ISBN: 978-989-758-697-2; ISSN: 2184-5026

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.


The resulting structural overview, with its emphasis on the level of adaptability for competence specification by users and on the coverage of AI competences, provides orientation and guidance for both individual practitioners and organizations. For instance,

it supports experts in didactic or business contexts

seeking to leverage such frameworks for specifying

AI-related competences, such as for defining learning

objectives, assessing actual competences, or specify-

ing requirements for project staffing.

Paper Structure. The remainder of this paper is

structured as follows: Section 2 provides background

and related work on digital competences and frame-

works. The applied approach and research methodol-

ogy are outlined in Section 3. In Section 4, we present

the findings of our systematic mapping study (SMS)

in terms of the analysis and comparison of frame-

works. Section 5 discusses limitations and challenges.

Finally, Section 6 concludes the paper.

2 BACKGROUND & RELATED WORK

In this section, we discuss definitions and relevant

concepts of digital competences (Section 2.1), outline

structural components of digital competence frame-

works (DCFWs; Section 2.2), and give an overview

of how these frameworks have been investigated in

previous research (Section 2.3).

2.1 Digital Competences

Exploring the terminology around digital competences (DC) in scientific literature as well as in the analyzed DCFWs, it becomes apparent that, on the one hand, the term ‘digital competence’ lacks a clear definition. On the other hand, multiple terms are used synonymously or with similar meaning alongside each other, such as ‘competence’, ‘literacy’ and ‘skill’; also see (Ferrari et al., 2012; Martínez et al., 2021; Mattar et al., 2022; Sánchez-Canut et al., 2023). Understanding DC as a boundary concept, (Ilomäki et al., 2016) emphasizes that, considering the pace of technological development, a definition of DC should be wide enough to accommodate these circumstances and therefore not be driven too strongly by a technology perspective. This is supported by (Martínez et al., 2021), concluding that it “consolidates the techno-social perspective for empowerment and technological appropriation, which exceeds the operational use of tools”. For this paper, we refrain from including a category for these definitions, because it would go beyond the intended scope and does not directly address our research questions. Also, previous work on comparing DCFWs has already dealt with this topic thoroughly. Confronted with 16 different concepts, we pragmatically decided to adopt the term ‘competence’, as used by the majority of the reviewed DCFWs.

2.2 Digital Competence Frameworks

In the following, we outline the purpose and struc-

tural components of digital competence frameworks

(DCFWs). In general, frameworks, taxonomies and

models play a crucial role in structuring and sys-

tematizing competences. They provide a general

orientation to help organizations remain competitive

and meet current (digital) standards. There are al-

ready well established generic models and frame-

works in the education sector, such as Bloom’s Tax-

onomy of Learning Objectives (Krathwohl, 2002)

or the Technological Pedagogical Content Knowl-

edge (TPACK) framework, which ”attempts to cap-

ture some of the essential qualities of teacher knowl-

edge required for technology integration in teaching”

(Mishra and Koehler, 2006). In addition, frameworks for specific application domains such as software refactoring are in use (Haendler and Neumann, 2019). Such structures are essential to provide

clear and consistent benchmarks for the development

of competencies. Digital Competence Frameworks

are supposed to provide a comprehensive tool to en-

sure a common understanding of what constitutes DC

by fostering standardization and consistency. They

give guidance for digital skill development as well as

assistance to make informed design choices, therefore

allowing continuous relevance of curricula and training programs regarding emergent technologies (UNESCO, 2023). Generally, a DCFW consists at least of defined competence areas or dimensions, often subdivided into further competences, and proficiency levels specific to each DCFW (UNESCO, 2023).

Current research attempts to bridge the gap between digital and AI competences. By exploring the concepts of AI literacy, (Ng et al., 2021) derives general recommendations for AI literacy in education. From an HCI perspective, (Long et al., 2022) extracts “a set of AI literacy competences and design considerations”, while (Ng et al., 2023) identifies challenges teachers may face when including AI tools in their teaching practice (e.g., ethical concerns). (Santana and Díaz-Fernández, 2023) examines competences for AI from an HRM viewpoint, presenting a “systematization and representation of the relationship between employee competences and AI”. The Canadian AI Competency Framework (Dawson College, 2021)

EKM 2024 - 7th Special Session on Educational Knowledge Management


defines three competence domains in the context of AI, encompassing competences focusing on project development (technical), on project planning and scaling (business), and on implementation and utilization (human), while integrating ethical competences into all domains. (Sattelmaier and Pawlowski, 2023) propose a framework for K-12 education, introducing ‘AI competencies’ (technical focus) and ‘emerging competencies’ (AI competences with a focus on generative AI), including ethical, social, and privacy implications on both levels.

2.3 Digital Competence Frameworks in Comparison

Most previous research on comparing and analyzing DCFWs has narrowed down its sample by focusing explicitly on teachers (Cabero-Almenara et al., 2020; Yang et al., 2021; Tomczyk and Fedeli, 2021; Benali and Mak, 2022) or education in general (Mattar et al., 2022), although with different foci and methods. (Sánchez-Canut et al., 2023) specifically analyzes “the existing definitions of professional DC, the frameworks used to develop it at the workplace, and the gender differences observed”. (Ferrari, 2012) decided on a broader scope, aiming for a “fair distribution of target groups that the frameworks are addressed to”. One approach that all the work just mentioned has in common is aligning and comparing DCFWs by competence area/dimension and proficiency levels (if given). Since these studies examine both aspects thoroughly, we refrain from an in-depth analysis and provide a quantitative overview instead. We observed that the need to differentiate between target group and target audience is certainly recognized (Rosado and Belisle, 2006; Mattar et al., 2022); however, there is little consistency in how these categories are introduced to make this distinction clear. Facing a similar challenge, we discuss our approach in Section 3.3. The category self-assessment is systematically defined by (Rosado and Belisle, 2006; Ferrari, 2012) and included by (Tomczyk and Fedeli, 2021; Yang et al., 2021). This raises further questions concerning the validity of self-assessment tools and thus opens up a research direction that is beyond the scope of this work. Another interesting focus is presented by (Cabero-Almenara et al., 2020; Mattar et al., 2022), validating the Digital Competence Framework for Citizens (DIGCOMP) and the European Framework for the Digital Competence of Educators (Benali and Mak, 2022), both of which provide the basis for a new DCFW ecosystem. Comparing DIGCOMP’s competences and competence areas with national digital competence curricula for schools was the approach chosen by (Siddiq, 2018) (Norway and Sweden) and (Hazar, 2019) (Turkey). Finally, most literature agrees that the characteristics of DCFWs depend on context and cultural factors (Ilomäki et al., 2016; Yang et al., 2021) as well as on objective and purpose, and are therefore fluid. Although there has been detailed research on the concepts aiming to define DC and on the structure and dimensions of competence areas and proficiency levels, little regard has been given to analyzing DCFWs in view of adaptability and AI. An exception is (Mattar et al., 2022), pointing out the few frameworks already updated in response to the impact of emerging technologies and concluding that “digital competence frameworks need constant updates as technologies continuously evolve”. This paper therefore aims to investigate this gap by identifying categories to structure information about DCFWs, analyzing how much thought has been given to adaptability, and exploring if and how the integration of (generative) AI has been considered.

3 SYSTEMATIC MAPPING PROCESS

This section outlines the applied research methodol-

ogy. In order to develop an overview of established

digital competence frameworks (DCFWs), our ap-

proach is following the process of a systematic map-

ping study (SMS), which represents a kind of system-

atic literature review, but with an emphasis on elab-

orating a structural overview over a certain research

domain by identifying appropriate means for quanti-

fying and classifying the field (Kitchenham and Char-

ters, 2007; Petersen et al., 2008; Ralph, P. et al.,

2021). Proceeding from usage in medicine research,

SMS nowadays represent a popular methodology in

technical fields such as software engineering or infor-

mation systems, see, e.g., (Zhao et al., 2021; Wolny

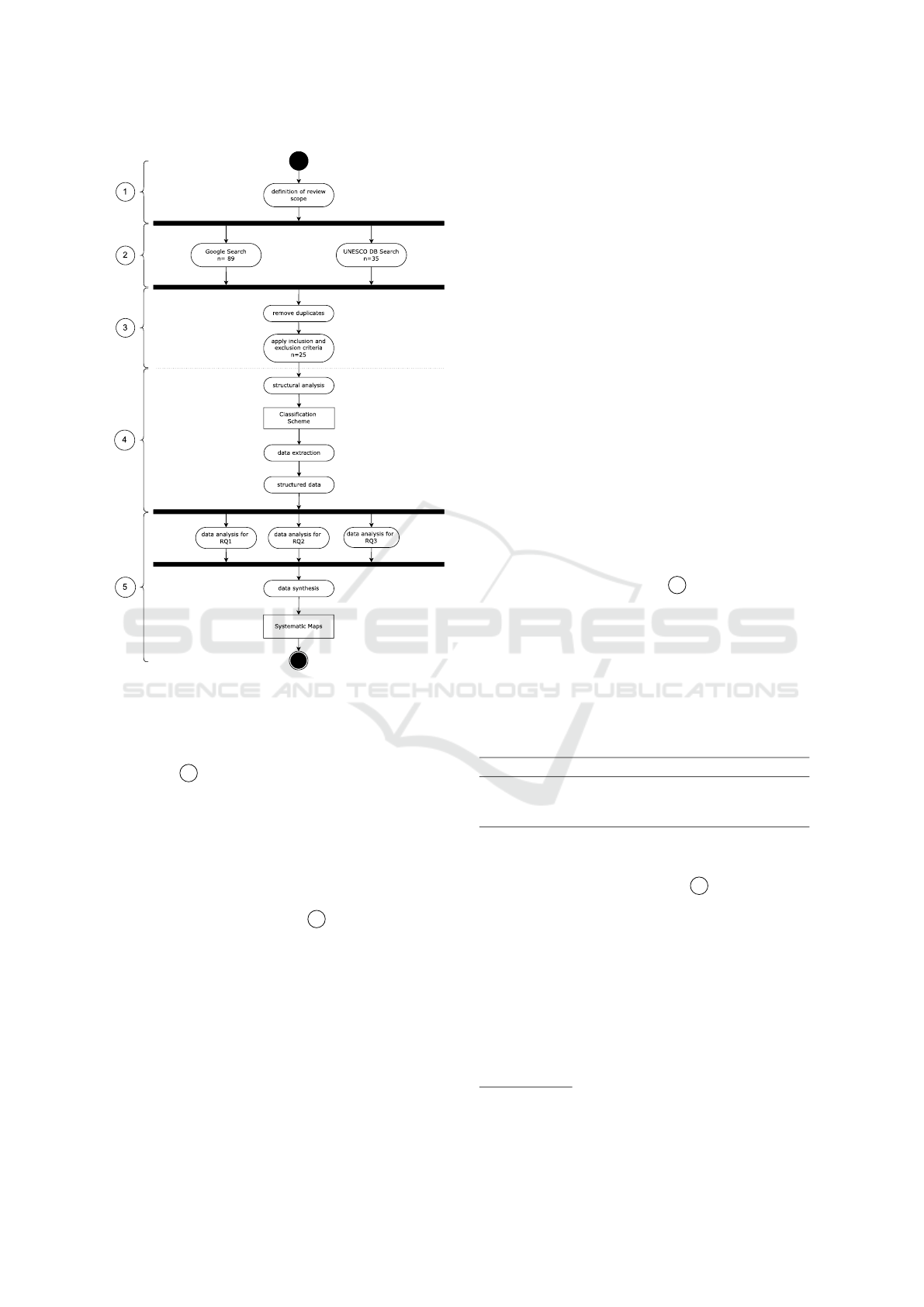

et al., 2017). Fig. 1 illustrates the applied research

process in terms of an activity diagram of the Uni-

fied Modeling Language (UML2) (Object Manage-

ment Group, 2017). Departing from defining the re-

view scope and corresponding research questions (see

1 in Fig. 1), we performed a combined structured

search for DCFWs (see 2

). We then filtered the

identified DCFWs by removing duplicates as well as

by applying inclusion and exclusion criteria (see 3

and Tab. 1). Based on a first coarse structural analy-

sis of the resulting DCFWs, we derived a classifica-

tion scheme suitable to categorize the DCFWs, and

extracted the corresponding data (see 4 ). The fol-

lowing Sections 3.1 to 3.3 provide details on these

steps.

Navigating the Landscape of Digital Competence Frameworks: A Systematic Analysis of AI Coverage and Adaptability

655

Figure 1: Applied research process to analyze established

DCFWs in terms of a systematic mapping study.

The resulting findings in terms of structural char-

acteristics of DCFWs and the derived systematic

maps (see 5 ) are reported in Section 4.

3.1 Review Scope & Research Questions

In order to address the question of the extent to which established DCFWs are suitable for specifying competences for generative AI, we defined the following research questions (RQ1–3) as a starting point for our systematic mapping study (see step 1 in Fig. 1).

RQ1. Which criteria are suitable to categorize

and compare digital competence frameworks

(DCFWs)?

RQ2. How do DCFWs provide adaptability for users

to specify competences?

RQ3. How do DCFWs address competences for ar-

tificial intelligence (AI), especially generative

AI?

3.2 Search & Selection of Frameworks

To identify established DCFWs, we conducted a structured search combining the use of a search engine and snowballing (Wohlin, 2014); for details, see below. Since most DCFWs are not published as scientific literature, we combined Google Scholar and Google as search engines to avoid overlooking results. We defined and applied the following search string:

(digital OR IT) AND (framework* OR reference* OR model* OR taxonomy*) AND (competenc* OR literac* OR skill*) AND AI

Additionally, we restricted the search to PDF files published in English after 2017 (i.e., filetype:pdf, after:2017, and lang:en; also see Tab. 1). During the literature search, a reference point we continuously came across was the UNESCO-UNEVOC database¹, which provides a current overview of established DCFWs for teachers, learners and citizens. The referenced DCFWs can be searched by criteria relevant to the design, content, and use of digital skills. In addition to the search engine (see step 2 in Fig. 1), we used this database to track the referenced DCFWs; also see backward snowballing (Wohlin, 2014). In particular, the UNESCO database has a total of 35 entries, structured into 27 DCFWs, five programs, two standards and one tool. For the purpose of our study, we only considered DCFWs in the database explicitly categorized as a framework.
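As a rough illustration of how the search string and its restrictions fit together, the following Python sketch assembles the Boolean query from its term groups and appends the filters. The helper names (`or_group`, `build_query`) are hypothetical, and appending the filters as space-separated operators is an assumption about how such a query might be entered; only the term groups and the filter strings are taken from the text above.

```python
# Illustrative sketch only: helper names are hypothetical, not from the study.

def or_group(terms):
    """Join alternative terms into a parenthesized OR group."""
    return "(" + " OR ".join(terms) + ")"


def build_query(groups, required=(), filters=()):
    """AND-combine OR groups and required terms, then append filters."""
    parts = [or_group(g) for g in groups] + list(required)
    query = " AND ".join(parts)
    return " ".join([query, *filters]) if filters else query


# Term groups as given in the search string of Section 3.2.
GROUPS = [
    ["digital", "IT"],
    ["framework*", "reference*", "model*", "taxonomy*"],
    ["competenc*", "literac*", "skill*"],
]

# Restrictions as named in the text (PDF files, after 2017, English).
FILTERS = ["filetype:pdf", "after:2017", "lang:en"]

base_query = build_query(GROUPS, required=["AI"])
full_query = build_query(GROUPS, required=["AI"], filters=FILTERS)
print(full_query)
```

Keeping the term groups as data makes it straightforward to document each variant of the query that was actually run, which supports the reproducibility of the search step.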

Table 1: Applied inclusion and exclusion criteria.

| Inclusion | Exclusion |
| --- | --- |
| categorized as framework, applicable on at least local level | not available in English, published before 2017 |

After removing duplicates, we then filtered the remaining DCFWs according to the defined inclusion and exclusion criteria (see step 3 in Fig. 1). In particular, we decided to exclude DCFWs not available in English, in order to focus on internationally accessible DCFWs, with the exception of DigComp AT 2.3 (#06). In order to reflect the state of the art of DCFWs, we excluded DCFWs published before 2017. Also, for comparability reasons, DCFWs that are not intended to be applicable on at least a regional level, such as frameworks published by universities, were excluded. The application of these inclusion and exclusion criteria results in 25 DCFWs forming the basis for the further analysis (see step 3 in Fig. 1). The selected DCFWs were then systematically organized by basic structural categories (i.e., title, description, origin, target group(s), publisher, year, geographical coverage). Information about the DCFWs was extracted and analyzed by two authors independently by examining the documents and websites provided by the DCFWs. The results were then discussed and merged in an iterative process in order to identify and synthesize suitable categories for a classification scheme, according to the guidelines for conducting systematic mapping studies in software engineering (Kitchenham and Charters, 2007). This collection of structural characteristics lays the foundation for answering RQ1 (see step 4 in Fig. 1). For RQ2 and RQ3, we performed a similar process, consisting of the following three steps: (a) screening the DCFWs for relevant terms and criteria, (b) defining hierarchical levels (extent to which AI competences are addressed and adaptability for users), and, finally, (c) assessing which levels are met by the DCFWs (see step 5 in Fig. 1).

¹ Available at https://unevoc.unesco.org/home/Digital+Competence+Frameworks.
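The filtering step described above (duplicate removal followed by the inclusion and exclusion criteria) could be sketched as follows. This is a minimal sketch under stated assumptions: the `Candidate` record, its field names, and the sample entries are hypothetical and do not reproduce the study's data; the language exception mirrors the one exception mentioned in the text.

```python
from dataclasses import dataclass

MIN_YEAR = 2017  # frameworks published before 2017 are excluded


@dataclass(frozen=True)
class Candidate:
    """Hypothetical record for one search hit (field names are assumptions)."""
    title: str
    year: int
    in_english: bool
    is_framework: bool  # explicitly categorized as a framework
    scope_ok: bool      # meets the minimum geographical scope


def deduplicate(candidates):
    """Keep the first occurrence of each title (case-insensitive)."""
    seen, unique = set(), []
    for c in candidates:
        key = c.title.strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(c)
    return unique


def is_included(c, language_exceptions=frozenset()):
    """Apply the inclusion and exclusion criteria to one candidate."""
    language_ok = c.in_english or c.title in language_exceptions
    return c.is_framework and c.scope_ok and language_ok and c.year >= MIN_YEAR


# Made-up sample records for illustration; not the study's data.
found = [
    Candidate("Framework A", 2019, True, True, True),
    Candidate("framework a", 2019, True, True, True),   # duplicate
    Candidate("Framework B", 2015, True, True, True),   # published too early
    Candidate("Programme C", 2021, True, False, True),  # not a framework
    Candidate("Framework D", 2022, False, True, True),  # language exception
]

selected = [c for c in deduplicate(found) if is_included(c, {"Framework D"})]
print([c.title for c in selected])
```

Passing exceptions explicitly, rather than hard-coding them, keeps a deviation from the criteria visible at the point where the filter is applied.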

3.3 Classification Scheme

Here, we introduce the applied classification scheme (see Tab. 2). The scheme is structured into different categories, each characterized by properties, variations or levels, and assigned to groups addressing the research questions (RQ1–3). The first set of categories (basic) was derived from the UNESCO database and validated by examining the DCFWs’ documentation (i.e., regional scope, year of publication and how each DCFW defines the target group it is supposed to serve). This data was supplemented by an identifier and a code (i.e., the DCFWs’ abbreviations as given). To collect data on how the DCFWs are similar and where they differ (see RQ1/focus in Tab. 2), we took a closer look at how and to what extent (quantity) competence areas and proficiency levels are defined by the DCFWs. There are different approaches in related comparative work to introducing the category target group, as discussed in Section 2.3. Considering that there are subtle differences in how DCFWs define whom they are aiming at, we sorted them according to the intended purpose for each target group, finding four different aspects. First, there is an audience, which can be understood as the end users, such as students, learners or, more broadly, citizens, who are not supposed to educate themselves along any chosen DCFW. Then, there is the group of educators, who are able to contextualize a DCFW by transferring it into their teaching practice as well as to use self-assessment tools as a foundation to develop their own DC. In terms of application, some DCFWs are supposed to be used as a starting point to develop policies or training programs, therefore aiming at stakeholders like labour market (social) partners or non-governmental organizations (NGOs). Another perspective concerns those whose purpose it is to adapt a DCFW by either updating or further developing it; these are fundamentally represented by public or private stakeholders, like curriculum developers or policy makers. In sum, target group is a complex category, strongly depending on purpose and context. For this reason, we introduced the category sector. Moreover, to address RQ1/supplement, we collected data about licence information, forming the basis for adaptability. Another important category related to adaptability is the extent to which support for practical application is given. These categories are extended by measuring tools and recommendations for self-assessment. In Section 4.1, we will explore the RQ groups basic, focus, and supplement in detail. To distinguish between the extent to which each DCFW provides adaptability for competence specification (RQ2), as well as if and how competences for (generative) AI are addressed (RQ3), we propose hierarchical levels (0–3), which we will present in Sections 4.2 and 4.3.
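One way to make the classification scheme concrete is to encode it as a typed record, so that every framework is described by the same fields and the two hierarchical levels are restricted to 0–3. The sketch below is only an illustration: the class and field names are hypothetical, the level labels paraphrase Tab. 2, and the example values for #09 are transcribed from Tab. 3 and Tab. 4.

```python
from dataclasses import dataclass
from enum import IntEnum


class Adaptability(IntEnum):
    """Level of adaptability (RQ2); labels paraphrase Tab. 2."""
    NONE = 0
    CONTEXT_TEMPLATES = 1
    SCENARIOS_GUIDELINES = 2
    DEDICATED_DIMENSION = 3


class AICoverage(IntEnum):
    """Level of AI coverage (RQ3); labels paraphrase Tab. 2."""
    NONE = 0
    TECHNOLOGY_EXAMPLE = 1
    ETHICS_PRIVACY_INDICATORS = 2
    APPLICATION_SCENARIOS = 3


@dataclass(frozen=True)
class FrameworkRecord:
    """Hypothetical record type; only a subset of the categories is shown."""
    fw_id: str                   # basic (RQ1)
    code: str                    # basic (RQ1)
    region: str                  # basic (RQ1)
    year: int                    # basic (RQ1)
    sector: str                  # focus (RQ1)
    adaptability: Adaptability   # RQ2
    ai_coverage: AICoverage      # RQ3


# Values for #09 as listed in Tab. 3 and Tab. 4.
example = FrameworkRecord(
    fw_id="#09", code="DCOMP", region="International", year=2022,
    sector="education",
    adaptability=Adaptability(2), ai_coverage=AICoverage(3),
)
print(example.code, example.adaptability.name, example.ai_coverage.name)
```

Using `IntEnum` keeps the levels ordered and comparable while rejecting values outside the defined range, e.g. `Adaptability(4)` raises a `ValueError`.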

4 MAPPING RESULTS

In this section, we present the results of our sys-

tematic mapping. In Section 4.1, we provide an

overview of the structural characteristics (such as re-

gional and sector-specific scopes) of DCFWs. Sec-

tion 4.2 then provides details on how the frameworks

support adaptability for users to specify competences.

Section 4.3 reports on how AI competences are ad-

dressed by DCFWs. Finally, Section 4.4 discusses the

results of mapping of adaptability and AI coverage.

4.1 Characteristics of Digital Competence Frameworks

In order to address RQ1 (which criteria are suitable

to categorize and compare DCFWs?), we collected

the structural characteristics of 25 DCFWs (according

to the category groups basic, focus, and supplement

in Tab. 2). These structural characteristics are

presented in two parts. Tab. 3 provides basics such

as the framework title, regional scope and the year of

publication. In turn, Tab. 4 provides data on the other

groups (i.e., focus, supplement, levels of adaptability

and (gen)AI coverage). As two key criteria to dis-

tinguish the DCFWs, Fig. 2 illustrates the DCFWs’

Navigating the Landscape of Digital Competence Frameworks: A Systematic Analysis of AI Coverage and Adaptability

657

Table 2: Applied classification scheme to analyze digital competence frameworks (DCFWs).

Category Characteristics RQ Category Group

id (#) assigned identifier

RQ1 basic

code assigned abbreviation (code) for clarity

framework title full publication title

region local, national, international, global

year year of publication (latest version)

targeted sector sector aimed at

RQ1 focusproficiency quantity of proficiency levels

competence areas quantity of competence areas

licence information given licence information

RQ1 supplementline application guidance [y/n] support with utilization and implementation

self-assessment [y/n] tools or recommendations for self-assessment

level of adaptability

0 - none

RQ2 adaptability

1 - some context, templates

2 - application scenarios, guidelines

3 - specifically designed dimension for adaptability

level of AI coverage

0 - none

RQ3 (generative) AI

1 - as an example for technology

2 - ethics, privacy; included into indicators

3 - application scenarios

McKinsey (1),

Profuturo (1),

SFIA Foundation (1),

UNESCO (2)

Association of International Certified Professional

Accountants (1),

European Commission (3),

European Training Foundation (1),

GSMA Global Organisation (1),

UNESCO and Broadband Commission (1),

UNICEF Regional Office for Europe and Central

Asia (1)

Australia (2),

Austria (1),

Canada (1),

England, UK (1),

Norway (1),

Singapore (1),

South Africa (1),

Spain (1),

USA (1),

Wales, UK (1)

Quebec, Canada (1)

education

48%

teacher

education/training

16%

TVET, VET, further

education

16%

IT professionals

8%

accountancy and

financial services

4%

IT, mobile tech

4%

public sector

4%

global 20%

international 32%

national 44%

local 4%

(a)

(b)

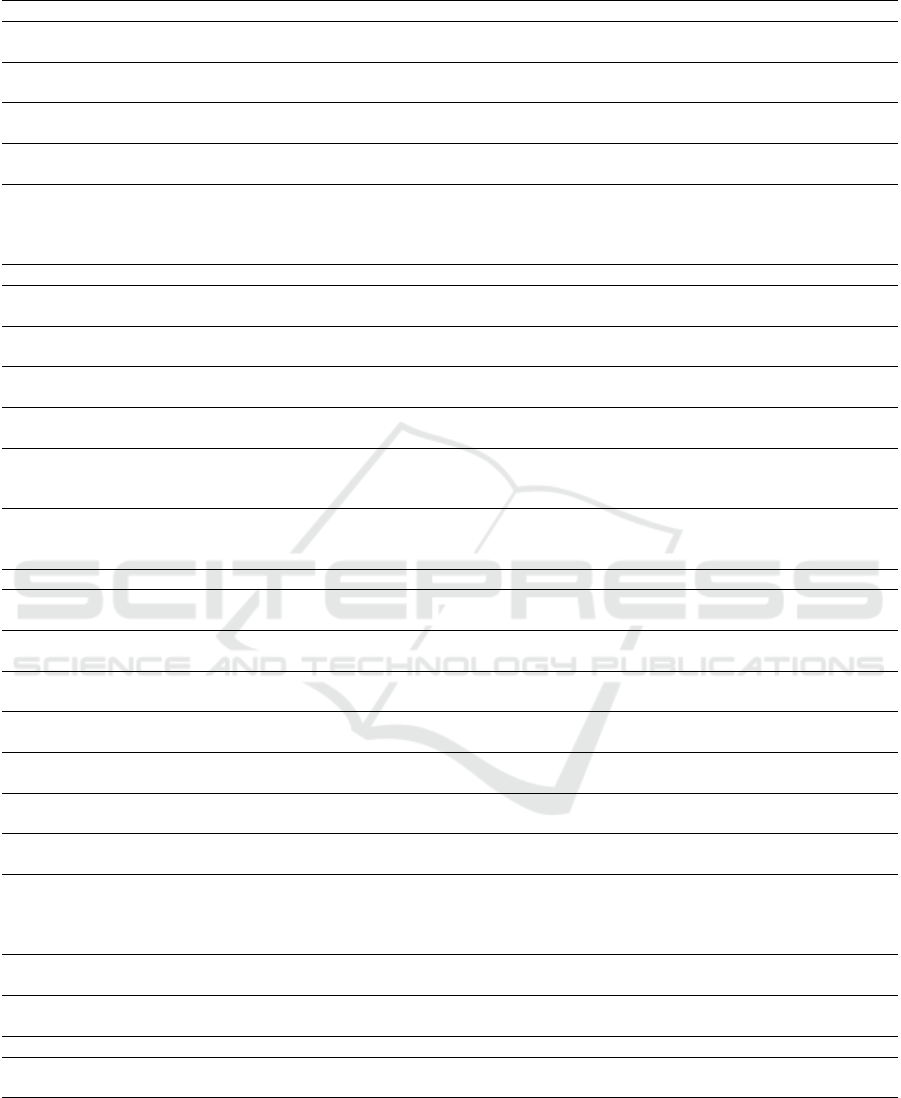

Figure 2: Regional (a) and sector-specific characteristics (b) of analyzed digital competence frameworks (DCFWs).

operational bounds (Rosado and Belisle, 2006) in

terms of the distribution of addressed regions and

sectors (Fig. 2 (a) and (b), respectively).

Regional Scope. In particular, 44% (11) of the DCFWs are intended to be applied at a national level and 32% (8) at an international level, 20% (5) aim at a global audience, and just one DCFW operates within a local scope (4%); also see Fig. 2 (a). Among the DCFWs labeled national, five are European (AT #06, UK #08 and #12, NO #20, ES #03), two are from Australia (#02, #11), two from North America (CA #25, US #24), one from Africa (ZA #19) and one from South-East Asia (SG #23). The label international includes the following organizations: European Commission (#09, #07, #04), European Training Foundation (#14), UNESCO and Broadband Commission (#01), GSMA Global Organization (#16), Association of International Certified Professional Accountants (#05), and UNICEF Regional Office for Europe and Central Asia (#13). Publishers for a global audience are UNESCO (#10, #17), Profuturo (#15), the SFIA Foundation (#22) and McKinsey (#18).
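The reported shares follow directly from the region labels in Tab. 3. As a small worked example, the Python sketch below recomputes them; the dictionary is transcribed from the Region column of Tab. 3, while the function name `shares` is a hypothetical helper introduced for illustration.

```python
from collections import Counter

# Region label per framework id, transcribed from Tab. 3.
REGION = {
    1: "International", 2: "National", 3: "National", 4: "International",
    5: "International", 6: "National", 7: "International", 8: "National",
    9: "International", 10: "Global", 11: "National", 12: "National",
    13: "International", 14: "International", 15: "Global",
    16: "International", 17: "Global", 18: "Global", 19: "National",
    20: "National", 21: "Local", 22: "Global", 23: "National",
    24: "National", 25: "National",
}


def shares(labels):
    """Return {label: (count, rounded percentage)} for an iterable of labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {k: (n, round(100 * n / total)) for k, n in counts.items()}


region_shares = shares(REGION.values())
for label, (n, pct) in sorted(region_shares.items(), key=lambda kv: -kv[1][0]):
    print(f"{label}: {n} ({pct}%)")
```

With 25 frameworks in total, each framework accounts for 4 percentage points, which is why all shares are multiples of 4.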

Targeted Sector. Moreover, as shown in detail in Fig. 2 (b), 48% of the DCFWs are aiming at the educational sector (#03, #04, #06, #07, #08, #09, #10, #13, #14, #15, #21, #25), 16% at teacher education/training (#17, #19, #20, #24), 16% at vocational education and training (#02, #11, #12), 8% at IT professionals (#22, #23), while 4% each are directed at accountancy and financial services (#05), IT - mobile technology (#16), and public sector (#01).

Table 3: Structural characteristics of analyzed digital competence frameworks (part 1).

| # | Code | Reference | Framework | Region | Year |
| --- | --- | --- | --- | --- | --- |
| #01 | AIDTC | (Balbo Di Vinadio et al., 2022) | Artificial Intelligence and Digital Transformation Competencies for Civil Servants | International | 2022 |
| #02 | AWDSF | (Gekara, V, Snell, D, 2019) | Skilling the Australian Workforce for the Digital Economy - The Australian Workforce Digital Skills Framework | National | 2019 |
| #03 | CDCFT | (INTEF, 2017) | Common Digital Competence Framework for Teachers (CDCFT) | National | 2017 |
| #04 | CFRIDiL | (Adami et al., 2019) | Common Framework of Reference for Intercultural Digital Literacies (CFRIDiL) | International | 2019 |
| #05 | CGMA | (Association of International Certified Professional Accountants, 2019) | Competency Framework. Digital Skills (CGMA) | International | 2019 |
| #06 | DCAT | (Nárosy et al., 2022) | DigComp 2.3. AT | National | 2022 |
| #07 | DCEDU | (Redecker, 2017) | European Framework for the Digital Competence of Educators (DigCompEdu) | International | 2017 |
| #08 | DCFWA | (Education Wales, UK, 2022) | Digital Competence Framework | National | 2022 |
| #09 | DCOMP | (Vuorikari et al., 2022) | The Digital Competence Framework for Citizens (DigComp 2.2) | International | 2022 |
| #10 | DLGF | (Law et al., 2018) | Digital Literacy Global Framework - A Global Framework of Reference on Digital Literacy Skills for Indicator 4.4.2 | Global | 2018 |
| #11 | DLSF | (Department of Education, Skills and Employment., 2020) | Digital Literacy Skills Framework (DLSF) | National | 2021 |
| #12 | DTPF | (Education and Training Foundation, 2019) | Digital Teaching Professional Framework | National | 2019 |
| #13 | EDCF | (Siina et al., 2022) | Educators’ Digital Competency Framework | International | 2022 |
| #14 | ETFM | (European Training Foundation, 2022) | ETF READY Model (Reference model for Educators’ Activities and Development in the 21st-centurY) | International | 2022 |
| #15 | GFEDC | (Trujillo Sáez et al., 2020) | The Global Framework for Educational Competence in the Digital Age | Global | 2020 |
| #16 | GSMA | (Jacobs et al., 2021) | Developing mobile digital skills in low- and middle-income countries | International | 2021 |
| #17 | ICTCFT | (Butcher, 2018) | UNESCO ICT Competency Framework for Teachers (ICT CFT) Version 3 | Global | 2018 |
| #18 | MKIN | (Dondi et al., 2019) | Defining the skills citizens will need in the future world of work (McKinsey) | Global | 2019 |
| #19 | PDFDL | (Department of Education. SA, 2019) | Professional Development Framework for Digital Learning | National | 2019 |
| #20 | PFDK | (Kelentrić et al., 2017) | Professional Digital Competence Framework for Teachers | National | 2017 |
| #21 | QCDCF | (Ministère de l’Éducation et de l’Enseignement supérieur, 2019) | Quebec Digital Competency Framework | Local | 2019 |
| #22 | SFIA | (SFIA Foundation, 2021) | Skills Framework for the Information Age (SFIA - 8) | Global | 2021 |
| #23 | SKILL | (Government of Singapore, 2022) | SkillsFuture - Skills Framework for Infocomm Technology | National | 2022 |
| #24 | TETC | (Foulger et al., 2017) | Teacher Educator Technology Competencies | National | 2017 |
| #25 | UUEMLF | (Mediasmarts, 2022) | USE, UNDERSTAND & ENGAGE: A Digital Media Literacy Framework for Canadian Schools | National | 2022 |

Competence Areas and Proficiency Levels. In ad-

dition to these criteria, the DCFWs differ widely re-

garding competence areas ranging from 2 to 20 and

proficiency levels from 0 to 9.


Table 4: Structural characteristics of analyzed digital competence frameworks (part 2).

| # | Code | Sector | Prof. Levels | Comp. Areas | Licence | Appl. Guide | Self-Assess | Level Adapt | Level AI |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| #01 | AIDTC | public sector | 3 | 3 | CC-BY-SA 3.0 IGO | y | y | 2 | 3 |
| #02 | AWDSF | vocational education and training (VET) system | 5 | 4 | CC BY 3.0 AU | y | y | 1 | 1 |
| #03 | CDCFT | education | 6 | 5 | CC BY SA | n | n | 1 | 1 |
| #04 | CFRIDiL | education | 3 | 3+1 | n/a | y | y | 3 | 0 |
| #05 | CGMA | accountancy and financial services | 4 | 6 | n/a | n | n | 0 | 0 |
| #06 | DCAT | education | 8 | 6 | CC-BY-NC-ND-3.0-AT | y | y | 1 | 2 |
| #07 | DCEDU | education | 6 | 6 | Reuse authorized, provided source is ack | y | y | 1 | 0 |
| #08 | DCFWA | education | 5 | 4/12 | n/a | y | n | 1 | 0 |
| #09 | DCOMP | education | 4 | 5 | CC BY 4.0 | y | y | 2 | 3 |
| #10 | DLGF | education | - | 7 | CC-BY-SA 3.0 IGO | y | n | 2 | 0 |
| #11 | DLSF | vocational education and training (VET) system | 5 | 2 | CC BY-NC-SA 3.0 | y | y | 1 | 0 |
| #12 | DTPF | technical vocational education and training (TVET) | 3 | 7 | n/a | y | n | 2 | 2 |
| #13 | EDCF | education | - | 20 | n/a but funded by the EU | y | y | 1 | 0 |
| #14 | ETFM | education | - | 6+1 | CC BY 4.0 | y | y | 3 | 0 |
| #15 | GFEDC | education | 3 | 3 | n/a | y | n | 1 | 0 |
| #16 | GSMA | IT, mobile technology | 3 | 6 | n/a | y | y | 2 | 0 |
| #17 | ICTCFT | teacher-training | 3 | 6 | CC BY-SA 3.0 IGO | y | y | 2 | 3 |
| #18 | MKIN | future world of work | - | 13 | n/a | n | n | 0 | 0 |
| #19 | PDFDL | teacher-training | - | 13 | n/a | n | y | 2 | 0 |
| #20 | PFDK | teacher education | 3 | 7 | CC BY-SA 3.0 NO | n | y | 1 | 0 |
| #21 | QCDCF | education | - | 12 | n/a | y | n | 2 | 2 |
| #22 | SFIA | IT professionals | 7 | 6 | free for personal use, commercial license | y | y | 2 | 0 |
| #23 | SKILL | IT professionals | - | 8 | n/a | y | y | 2 | 1 |
| #24 | TETC | teacher education | - | 12 | n/a | y | n | 2 | 0 |
| #25 | UUEMLF | education | - | 9 | n/a | y | y | 1 | 0 |

Supplemental Materials. 13 out of 25 DCFWs provide licence information, nine of which are licensed under a variant of CC BY that encourages adaptations. An exception is #06, which surprisingly uses CC-BY-NC-ND, where ND (NoDerivatives) means that no derivatives or adaptations of the work are permitted. While #22 offers a commercial license but is free for personal use, #14 is the only DCFW providing a policy for its adaptation and translation. At least rudimentary supporting content or material (e.g., well-worded descriptors) to put a DCFW into action is provided by 20 DCFWs. Tools for the self-assessment of DC are either provided or recommended by 17 DCFWs. Most DCFWs claim to be easily adaptable; some of them, especially those with a teacher focus, can be used as a template for contextualization but do not actually promote adaptability. 20 out of 25 DCFWs offer guidance on how to apply the framework, by providing a dedicated chapter or section in the main document (#02, #09, #10, #14, #17, #19), online platforms (#06, #08, #16, #22, #23, #24, #25), or examples for different types of content, through descriptors (#04, #13, #15), sample activities (#07, #11, #21), attitudes (#01), or a model (#12).

EKM 2024 - 7th Special Session on Educational Knowledge Management


Table 5: Applied levels of adaptability for specifying competences by DCFW users.

| Level 0 | Level 1 | Level 2 | Level 3 |
|---|---|---|---|
| not mentioned | context specific templates for teaching practice | guidelines and scenarios for practical application | specifically designed (flexi-)dimensions |

Table 6: Applied levels of AI coverage.

| Level 0 | Level 1 | Level 2 | Level 3 |
|---|---|---|---|
| not mentioned | illustrative, generic, exemplary | context specific, some related content | embedded, multidimensional |

4.2 Adaptability

This section addresses RQ2 (how do DCFWs provide adaptability for users to specify competences?). Drawing on criteria for four hierarchical levels of adaptability (Tab. 5), we discuss details concerning the DCFWs' adaptability for competence specification. In psychology, adaptability can be defined as "the capacity to make appropriate responses to changed or changing situations" (VandenBos, 2007). This definition corresponds with our research focus, which investigates to what extent DCFWs are prepared for technological (AI) shifts. To systematically categorize how a DCFW is supposed to be used, contextualized, applied or adapted for specifying competences, we mapped each DCFW to one of four hierarchical levels, which are defined in the following.

Adaptability Levels.

• Level 0 encompasses DCFWs for which neither a search of the documents for the terms adapt*, flex*, and change* nor the data collected while scanning the DCFWs delivered any results.

• DCFWs mapped to Level 1 do not explicitly provide adaptability but can be understood as tangible templates for stakeholders with the expertise to use and, foremost, contextualize them.

• At Level 2, DCFWs are characterized by providing supplemental material that supports contextualization, implementation or adaptation.

• DCFWs at Level 3 anticipate technological change by adding specific dimensions for adaptation to new contexts.

The DCFW characteristics regarding adaptability are described below.
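The keyword screening behind Level 0 can be sketched as a simple wildcard search. The following Python sketch is illustrative only: the function names and the matching rules (case-insensitive, prefix match on whole words) are assumptions and not part of the documented study procedure.

```python
import re

# Wildcard terms taken from the Level 0 definition; everything else
# (names, matching rules) is a hypothetical illustration.
ADAPT_TERMS = ["adapt*", "flex*", "change*"]


def compile_terms(terms):
    """Turn wildcard terms such as 'adapt*' into one word-prefix regex."""
    parts = []
    for term in terms:
        if term.endswith("*"):
            # A trailing '*' means: this prefix followed by any word characters.
            parts.append(re.escape(term[:-1]) + r"\w*")
        else:
            parts.append(re.escape(term))
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


def find_hits(text, terms=ADAPT_TERMS):
    """Return all matched words in the text, lower-cased, in reading order."""
    return [m.group(0).lower() for m in compile_terms(terms).finditer(text)]


sample = "The framework is flexible and can be adapted to changing contexts."
print(find_hits(sample))
```

Under this strict prefix reading, change* matches "changes" but not "changing"; a broader stem such as chang* would be needed to capture both, which is one reason a keyword search would be complemented by manually scanning the documents.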

Adaptability Mapping of DCFWs.

Level 0. Two DCFWs are mapped to this level. #05 (Association of International Certified Professional Accountants, 2019) specifically aims at accountants, which does not necessarily require adaptability. While #18 (Dondi et al., 2019) presents 13 skill groups derived from the authors' research, we understand it as a report giving recommendations.

Level 1. Aiming at vocational education and training, #02 demonstrates "how [it] can be applied in digital skills gap analysis" (Gekara, V, Snell, D, 2019). Targeting the same sector, #11 depicts a finely granulated structure with well-documented components, supported by exemplary activities (Department of Education, Skills and Employment., 2020). #06 and #03 are both adaptations of #09 to a national context. The former (an already updated version) emphasizes low-threshold use of digital devices for a broader audience (Nárosy et al., 2022). The latter aims to be a reference for evaluating and developing teachers' DCs (INTEF, 2017) and is supplemented by an online Digital Competence Portfolio for Teachers. It has also drawn from #07, itself a model to assess and develop pedagogical DC, which enables utilization through well-defined sets of competences complemented by a list of typical activities and a progression model (Redecker, 2017). Aiming to describe teachers' DCs, #13 outlines 20 competences structured along four areas of knowledge, offering a "common frame of reference" to enable "inclusive teaching and learning" (Siina et al., 2022). Some DCFWs on this level are similar in presenting a number of competence areas structured along progression levels to enable contextualization; we briefly outline the key differences. Part of a mandatory cross-curricular skills framework, #08 applies descriptors based on predefined Principles of Progression (DCF, ), supported by learning pathways (Education Wales, UK, 2022). #20 aims at professional development and "the actual practice of the profession" (Kelentrić et al., 2017) by delivering a straightforward template. While #25 does not promote adaptability, it provides meaningful descriptors and examples (Mediasmarts, 2022). #15 defines identities (i.e., teacher, citizen, connector) (Trujillo Sáez et al., 2020) and can be contextualized but gives no indication of how to do so.

Level 2. Emphasizing adaptability as a presumed attitude for civil servants, #01 is "meant to present governments with a usable set of AI and digital transformation competencies" (Balbo Di Vinadio et al., 2022). To support contextualization and adaptation to different contexts, #09 provides reports and guidelines for implementation (Vuorikari et al., 2022). #10 presents a methodical model for Sustainable Development Goal (SDG) thematic Indicator 4.4.2, supported by pathways for tailoring competence grids to specific needs (Law et al., 2018). #12 supports application by illustrating "how the framework could be used by practitioners" using the SAMR model (Education and Training Foundation, 2019) and a reference guide. In sum, three DCFWs target teacher education and support implementation with extensive supplementary resources: #19 by providing examples of teaching and learning activities (Department of Education. SA, 2019), #17 (updated to version 3) by encouraging adaptation and including implementation examples (Butcher, 2018), and #24 by delivering online courses, which makes it the only DCFW monetising its supplemental material (Foulger et al., 2017). #21 highlights the need for an adaptive concept of DC so that it does not become "invalidated by technological innovations", concluding that its implementation must therefore be an iterative process (Ministère de l'Éducation et de l'Enseignement supérieur, 2019), supported by examples from different contexts. Targeting IT professionals, #22 provides a platform with 'Help and Online resources' (accessible after registration), including, e.g., skills profiles for industries and jobs (SFIA Foundation, 2021). #23 contains detailed job role descriptions and offers an online portal with tailored training programs (Government of Singapore, 2022). #16 offers "resources that stakeholders can adapt and scale to support their training efforts" (Jacobs et al., 2021), including information on deployment and impact of already realized implementations.

Level 3. Two DCFWs explicitly provide a so-called flexible dimension, albeit with distinct approaches. Introducing context-free descriptors, #04 integrates a '+1' dimension for transversal skills "rarely taught, let alone assessed in formal education contexts" (Adami et al., 2019). While claiming that "the placeholder for the 7th context-specific domain emphasizes that READY has been designed with adaptability and flexibility in mind", #14 does not elaborate much further on how the placeholder is to be used (European Training Foundation, 2022).

4.3 Coverage of AI Competences

In order to address RQ3 (how do DCFWs address competences for artificial intelligence (AI), especially generative AI?), we introduce criteria to evaluate DCFWs by four hierarchical levels of AI coverage (Tab. 6). We then provide further details on the specifics of AI coverage for each DCFW.

AI-Coverage Levels.

• DCFWs at AI Level 0 do not mention AI at all: neither a search of the documents for terms like artific*, generat* and AI nor the data collected while scanning the documents delivered any result.

• DCFWs mapped to Level 1 use these terms to illustrate examples of emerging technologies (such as augmented or virtual reality (AR/VR)) but do not put them in context with the DCFW itself.

• At Level 2, AI is used in a certain context within the DCFW, e.g., in terms of competence descriptors for machine learning or AI ethics, even though application scenarios are not included.

• DCFWs at Level 3 dedicate a full chapter or section to AI and approach the topic in a multidimensional manner.

AI-Coverage Mapping of DCFWs.

Level 0. 64% of DCFWs (16 of 25: #04, #05, #07, #08, #10, #11, #13, #14, #15, #16, #18, #19, #20, #22, #24, #25) do not mention the term AI at all.

Level 1. #02 combines AI with augmented and virtual reality (AR/VR) within a tech indicator system but does not provide a specific context for practical application (Gekara, V, Snell, D, 2019). Raising awareness of potential AI applications in education, #03 incorporates AI in the context of "digital content creation and programming" (INTEF, 2017). #23 provides job role descriptions for the IT sector, including specific AI-related skills; it highlights practical applications in specific careers rather than integrating AI into a curriculum (Government of Singapore, 2022).

Level 2. The only DCFW using the term generative AI, #12 situates AI under the competence area "Subject and Industry Specific Teaching", focusing on professional development. While AI is mentioned in various activities, the descriptions remain general, such as "using AI (AR, VR)" (Education and Training Foundation, 2019). Strongly based on #09, #06 does not yet fully integrate the original model's latest update (which introduced AI competences) but has added a low-threshold online module on the basic impacts of AI. #21 categorizes AI under "developing and mobilizing technological skills", highlighting the importance of a general understanding of AI, also in the context of "developing critical thinking about the use of digital technologies" (Ministère de l'Éducation et de l'Enseignement supérieur, 2019).

Level 3. #01 focuses on "the major AI and digital transformation competencies needed in the public sector" (Balbo Di Vinadio et al., 2022) and is the only DCFW we could identify to do so. With version 2.2, #09 introduced a section addressing AI that focuses on citizens interacting with AI systems "rather than focusing on the knowledge about Artificial Intelligence per se" (Vuorikari et al., 2022). This demonstrates its commitment to human-centered considerations, including AI-assisted and AI-automated decision making (Eigner and Händler, 2024), as well as ethical impacts. #17 dedicates a chapter to AI and its role in assistive technologies, highlighting the impact on accessibility "made possible by advances in 'machine learning' and 'deep learning' algorithms" (Butcher, 2018) and raising awareness of how AI can be used to support students with disabilities. With statements like "AI facilitates|can assist|enables ...", it exemplifies activities for the competence area 'Application of Digital Skills'.
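The share of DCFWs per AI-coverage level follows directly from the level assignments. As a minimal sketch (the dictionary is transcribed from the "Level AI" column of Table 4; the variable and function names are illustrative assumptions), the distribution can be recomputed as follows:

```python
from collections import Counter

# AI-coverage level per framework, transcribed from the "Level AI"
# column of Table 4.
AI_LEVEL = {
    "#01": 3, "#02": 1, "#03": 1, "#04": 0, "#05": 0,
    "#06": 2, "#07": 0, "#08": 0, "#09": 3, "#10": 0,
    "#11": 0, "#12": 2, "#13": 0, "#14": 0, "#15": 0,
    "#16": 0, "#17": 3, "#18": 0, "#19": 0, "#20": 0,
    "#21": 2, "#22": 0, "#23": 1, "#24": 0, "#25": 0,
}


def level_shares(levels):
    """Map each level to (number of frameworks, percentage of all frameworks)."""
    counts = Counter(levels.values())
    total = len(levels)
    return {lvl: (counts[lvl], 100 * counts[lvl] / total) for lvl in sorted(counts)}


for level, (count, percent) in level_shares(AI_LEVEL).items():
    print(f"AI level {level}: {count} of {len(AI_LEVEL)} DCFWs ({percent:.0f}%)")
```

With the values as transcribed, 16 of the 25 frameworks fall into Level 0 and three into each of the remaining levels.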

4.4 Mapping of Adaptability and AI Coverage

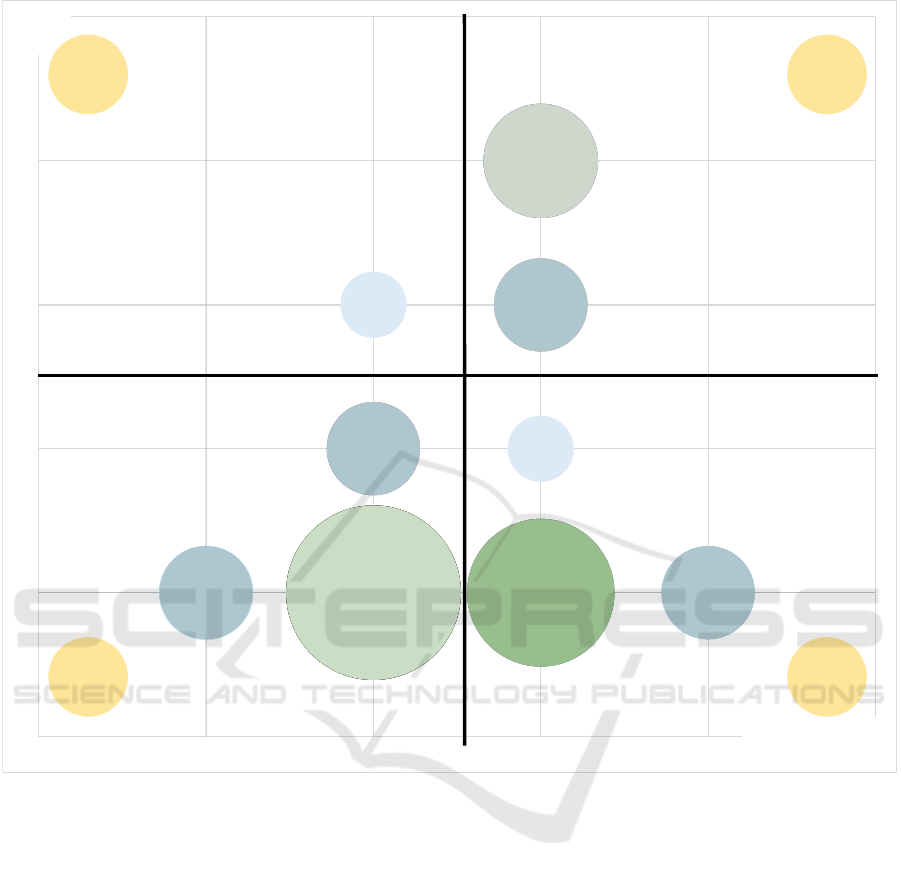

Fig. 3 summarizes the key findings from the systematic mapping study, driven by the question to what extent established DCFWs are suitable for specifying competences for generative AI. In particular, it shows a two-dimensional matrix relating the levels of AI competence coverage (y-axis) to the levels of adaptability (x-axis). The resulting grid is divided into four quadrants (Q1–4), in which the analyzed DCFWs are placed as bubbles indicating their quantified distribution.

• DCFWs clustered in quadrant Q1 provide some adaptability but address AI not at all or only marginally. Aiming mostly at teachers and trainers and focusing more on contextualization and practice transfer than on adaptability, they may equip teachers with the DC to adapt to new technology to some extent when given the necessary resources to do so.

• DCFWs located in Q2 show strong adaptability but still refer to AI only on a basic level. They address different target groups and cover every sector except accountancy and financial services.

• Only one DCFW (#06) is situated in Q3, providing the same basic adaptability as the DCFWs in Q1 but fulfilling the criteria for AI level 2.

• Q4 unites the star pupils, which show strong adaptability by either providing tangible material for practical application and adaptation to new contexts or having a specifically designed dimension to accommodate new competence fields emerging from technological shifts. Nevertheless, out of the 25 analyzed DCFWs, just a single one uses the term 'generative AI', in a few of its competence descriptors (Education and Training Foundation, 2019).
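The quadrant assignment can be reproduced from the two level columns of Table 4. The sketch below assumes that the quadrant boundary lies between levels 1 and 2 on both axes; this threshold is inferred from the quadrant descriptions above rather than stated explicitly, and all names are illustrative.

```python
from collections import Counter

# (adaptability level, AI level) per framework, transcribed from Table 4.
LEVELS = {
    "#01": (2, 3), "#02": (1, 1), "#03": (1, 1), "#04": (3, 0), "#05": (0, 0),
    "#06": (1, 2), "#07": (1, 0), "#08": (1, 0), "#09": (2, 3), "#10": (2, 0),
    "#11": (1, 0), "#12": (2, 2), "#13": (1, 0), "#14": (3, 0), "#15": (1, 0),
    "#16": (2, 0), "#17": (2, 3), "#18": (0, 0), "#19": (2, 0), "#20": (1, 0),
    "#21": (2, 2), "#22": (2, 0), "#23": (2, 1), "#24": (2, 0), "#25": (1, 0),
}


def quadrant(adapt, ai, threshold=2):
    """Assign a quadrant; levels >= threshold count as 'high' (assumed boundary)."""
    high_adapt = adapt >= threshold
    high_ai = ai >= threshold
    if not high_adapt and not high_ai:
        return "Q1"  # low adaptability, low AI coverage
    if high_adapt and not high_ai:
        return "Q2"  # high adaptability, low AI coverage
    if not high_adapt and high_ai:
        return "Q3"  # low adaptability, high AI coverage
    return "Q4"      # high adaptability, high AI coverage


assignment = {fid: quadrant(*levels) for fid, levels in LEVELS.items()}
counts = Counter(assignment.values())
for q in ("Q1", "Q2", "Q3", "Q4"):
    members = sorted(fid for fid, quad in assignment.items() if quad == q)
    print(q, counts[q], members)
```

Under this assumed boundary, Q3 contains exactly one framework, which agrees with the description of Q3 above.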

5 DISCUSSION

In this section, we reflect on limitations of the applied approach (Section 5.1), discuss observed challenges (Section 5.2), and illustrate the utility of our findings by example (Section 5.3).

5.1 Limitations

As illustrated in Section 3, our analysis follows a systematic mapping approach driven by the stated research questions. However, throughout our analysis, we have identified several aspects worthy of further elaboration. For instance, other DCFWs (cross-)referenced by the selected DCFWs could be investigated in detail, especially those derived from #09, of which a number of adaptations are already documented (Vuorikari et al., 2022). Due to the purpose of this study, DCFWs published by individual organizations (e.g., universities) were deliberately not considered, although this could be a promising research avenue. In addition, most DCFWs analyzed originate from 'developed' countries, which leaves a significant gap, especially concerning Asia and Latin America, further aggravated by limited accessibility due to language. Due to the high diversity of target groups and aims, e.g., encompassing K-12, the vocational sector, higher education as well as individual citizens, the mapping required the application of coarse and generalizing categories. A detailed investigation of domain-specific circumstances would be useful for future work.

5.2 Challenges

Driven by the question of how far the characteristics of DCFWs can be broken down to simple variables while still giving valuable information, we have observed several challenges in the course of analyzing the DCFWs. As outlined in Section 3, one challenge was to actually identify established DCFWs. Since DigComp (#09) served as an anchor while we were still planning this research, we were fortunate to find it accompanied by a multitude of literature preceding and ultimately substantiating the DCFWs, which also pointed us to the UNESCO-UNEVOC database serving as a source for backward snowballing. Based on analyses of the corresponding DCFWs as well as further literature (see Section 2), it becomes evident that there is no terminological consistency concerning the terms digital literacy and digital competence, as already observed by Mattar et al. (2022) and Ferrari et al. (2012). Related research also points out that defining DC depends on multiple aspects such as context, purpose and aim, and that DC is therefore to be understood as a fluid concept. There are further aspects that still need to be explored more thoroughly. Furthermore, during data collection we gathered more structural data than we could address in this paper, such as a comparison of terminological consistency, each DCFW's purpose in relation to its aims, or details on the provided means of (self-)assessment, which will be addressed in future research.

Figure 3: Matrix classifying digital competence frameworks (DCFWs) according to their levels (0–3) of AI coverage (vertical) and adaptability (horizontal) into four quadrants (Q1–4).

5.3 Practical Utility

This paper's purpose was to systematically analyze and categorize established DCFWs, with emphasis on AI coverage and adaptability for competence specification. The resulting overview, condensed in the matrix in Fig. 3, can be used by practitioners to select, evaluate, and apply DCFWs, as illustrated by the following exemplary application scenarios.

• First, consider teaching staff at a university aiming to choose a DCFW suitable for defining AI-related learning objectives for a course and subsequently assessing students' performance against these objectives. In this case, DCFWs #09 and #17 would be suitable candidates.

• Then, imagine an HR expert in a company aiming to determine the AI-related competences required for project staffing. Focusing on vocational education and training and assigned to AI level 2, #12, for example, could provide the necessary means for orientation, while other DCFWs with the same target sector do not include AI competences.
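Both scenarios amount to filtering the analyzed DCFWs by target sector and AI level. The following sketch illustrates this with the sector and "Level AI" values transcribed from Table 4; the function name, the keyword-based sector matching and the chosen thresholds are assumptions made for illustration only.

```python
# (id, code, sector, AI level), transcribed from Table 4.
FRAMEWORKS = [
    ("#01", "AIDTC", "public sector", 3),
    ("#02", "AWDSF", "vocational education and training (VET) system", 1),
    ("#03", "CDCFT", "education", 1),
    ("#04", "CFRIDiL", "education", 0),
    ("#05", "CGMA", "accountancy and financial services", 0),
    ("#06", "DCAT", "education", 2),
    ("#07", "DCEDU", "education", 0),
    ("#08", "DCFWA", "education", 0),
    ("#09", "DCOMP", "education", 3),
    ("#10", "DLGF", "education", 0),
    ("#11", "DLSF", "vocational education and training (VET) system", 0),
    ("#12", "DTPF", "technical vocational education and training (TVET)", 2),
    ("#13", "EDCF", "education", 0),
    ("#14", "ETFM", "education", 0),
    ("#15", "GFEDC", "education", 0),
    ("#16", "GSMA", "IT, mobile technology", 0),
    ("#17", "ICTCFT", "teacher-training", 3),
    ("#18", "MKIN", "future world of work", 0),
    ("#19", "PDFDL", "teacher-training", 0),
    ("#20", "PFDK", "teacher education", 0),
    ("#21", "QCDCF", "education", 2),
    ("#22", "SFIA", "IT professionals", 0),
    ("#23", "SKILL", "IT professionals", 1),
    ("#24", "TETC", "teacher education", 0),
    ("#25", "UUEMLF", "education", 0),
]


def select(frameworks, sector_keywords, min_ai):
    """Return ids whose sector contains any keyword and whose AI level >= min_ai."""
    return [
        fid
        for fid, _code, sector, ai in frameworks
        if ai >= min_ai and any(key in sector.lower() for key in sector_keywords)
    ]


# Scenario 1: university teaching staff looking for full AI coverage (level 3).
print(select(FRAMEWORKS, ["education", "teacher"], min_ai=3))
# Scenario 2: HR expert focusing on vocational education and training.
print(select(FRAMEWORKS, ["vocational"], min_ai=2))
```

With these assumed filters, the first query yields #09 and #17 and the second yields #12, matching the candidates named in the two scenarios.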


6 CONCLUSION

This paper presents the results of a systematic analysis of established digital competence frameworks (DCFWs), with a focus on how the DCFWs address AI competences and provide adaptability for competence specification in view of the challenges of rapid technological development. A key contribution of this paper is the development and application of criteria for classifying DCFWs into hierarchical levels regarding adaptability and coverage of AI competences. Resulting from applying these criteria, we present a matrix (Fig. 3) illustrating the DCFWs' distribution according to their mapped levels for both aspects, aiming to support stakeholders in choosing the most suitable DCFW for their specific application purposes. This matrix can be used by a broad audience, including educators, VET and labour market experts, HR and IT professionals, management personnel, or researchers. The most significant finding from analyzing the DCFWs' adaptability is that only few DCFWs can be mapped to level 2 or 3, highlighting the need for a clearer distinction between contextualization and adaptability. Concerning AI coverage, most surprising was that just one DCFW actually uses the term generative AI in its competence descriptors, while 64% (16 of 25) of the analyzed DCFWs do not mention AI at all. Furthermore, AI is often placed in the field of informatics and programming but rarely regarded as multi-dimensional. Following up on if and how these DCFWs will respond to the challenges inherent to generative AI and large language models (LLMs) and their impact on education, labour and society in general could represent an interesting research direction. Our work can be seen as a first exploration of DCFWs regarding the rapidly evolving field of generative AI. The resulting overview provides a first practical orientation and forms the basis for further research in terms of in-depth analyses, e.g., taking a closer look at how AI competences are addressed by DCFWs located in Q4, or conceptual work, e.g., developing a DCFW tailored to the challenges posed by generative AI.

ACKNOWLEDGEMENTS

The authors gratefully acknowledge the support from the "Gesellschaft für Forschungsförderung (GFF)", as this research was conducted at Ferdinand Porsche Mobile University of Applied Sciences (FERNFH) as part of the "Digital Transformation Hub" project funded by the GFF with means of the Province of Lower Austria.

REFERENCES

Adami, E., Karatza, S., Marenzi, I., Moschini, I., Petroni,

S., Rocca, M., and Grazia Sindoni, M. (2019). Com-

mon Framework of Reference for Intercultural Digital

Literacies.

Association of International Certified Professional Accoun-

tants (2019). Competency Framework. Digital Skills.

Balbo Di Vinadio, T., van Noordt, C., Vargas Alvarez del

Castillo, C., and Avila, R. (2022). Artificial Intelli-

gence and Digital Transformation Competencies for

Civil Servants.

Benali, M. and Mak, J. (2022). A comparative analysis of

international frameworks for Teachers’ Digital Com-

petences. International Journal of Education and De-

velopment using ICT, 18.

Butcher, N. (2018). UNESCO ICT Competency Framework

for Teachers; 2018.

Cabero-Almenara, J., Romero-Tena, R., and Palacios-Rodríguez, A. (2020). Evaluation of Teacher Digital Competence Frameworks Through Expert Judgement: the Use of the Expert Competence Coefficient. Journal of New Approaches in Educational Research, 9(2):275–293.

Chiu, T., Xia, Q., Zhou, X., Chai, C., and Cheng, M. (2023).

Systematic literature review on opportunities, chal-

lenges, and future research recommendations of artifi-

cial intelligence in education. Computers and Educa-

tion: Artificial Intelligence, 4.

Dawson College (2021). Artificial Intelligence Competency

Framework. A success pipeline from college to uni-

versity and beyond.

Department of Education. SA (2019). Professional Devel-

opment Framework for Digital Learning.

Department of Education, Skills and Employment. (2020).

Digital Literacy Skills Framework (DLSF).

Dondi, M., Klier, J., Panier, F., and Schubert, J. (2019).

Defining the skills citizens will need in the future

world of work.

Education and Training Foundation (2019). Digital Teach-

ing Professional Framework.

Education Wales, UK (2022). Digital Competence Frame-

work Wales.

Eigner, E. and Händler, T. (2024). Determinants of LLM-assisted decision-making. arXiv preprint arXiv:2402.17385.

European Training Foundation, E. (2022). The ’READY’

Model (Reference model for Educators’ Activities and

Development in the 21st-century).

Ferrari, A. (2012). Digital competence in practice: an anal-

ysis of frameworks. Publications Office of the Euro-

pean Union, LU.

Ferrari, A., Punie, Y., and Redecker, C. (2012). Understand-

ing digital competence in the 21st century: An analy-

sis of current frameworks. In Proc. of ECTEL, pages

79–92. Springer.

Foulger, T. S., Graziano, K., Schmidt-Crawford, D., and Slykhuis, D. (2017). Teacher Educator Technology Competencies. Journal of Technology and Teacher Education.

Gekara, V, Snell, D, Molla, A, K. . T. (2019). Skilling the

Australian Workforce for the Digital Economy - The

Australian Workforce Digital Skills Framework.

Government of Singapore, S. (2022). Skills Framework for

Infocomm Technology.

Haendler, T. and Neumann, G. (2019). A framework for the

assessment and training of software refactoring com-

petences. In KMIS, pages 307–316.

Hazar, E. (2019). A Comparison between European digi-

tal competence framework and the Turkish ICT cur-

riculum. Universal Journal of Educational Research,

7(4):954–962.

Ilomäki, L., Paavola, S., Lakkala, M., and Kantosalo, A. (2016). Digital competence – an emergent boundary concept for policy and educational research. Education and Information Technologies, 21:655–679.

INTEF (2017). Common Digital Competence Framework

for Teachers (CDCFT).

Jacobs, L., Carboni, I., Hartley, B., Lindsey, D., Sibthorpe,

C., and Tiel Groenestege, M. (2021). Developing mo-

bile digital skills in low- and middle-income coun-

tries.

Johnson, A. (2022). Here’s What To Know About OpenAI’s

ChatGPT — What It’s Disrupting And How To Use It.

Kelentrić, M., Karianne, H., and Arstorp, A.-T. (2017). Professional Digital Competence Framework for Teachers.

Kitchenham, B. and Charters, S. (2007). Guidelines for per-

forming Systematic Literature Reviews in Software

Engineering. EBSE Technical Report.

Krathwohl, D. R. (2002). A revision of bloom’s taxonomy:

An overview. Theory into practice, 41(4):212–218.

Law, N., Woo, D., de la Torre, J., and Wong, G. (2018). A global framework of reference on digital literacy skills for indicator 4.4.2.

Llaneras, K., Rizzi, A., and Álvarez, J. A. (2023). ChatGPT is just the beginning: Artificial intelligence is ready to transform the world.

Long, D., Teachey, A., and Magerko, B. (2022). What is AI

literacy? Competencies and design considerations. In

Proc. of ACM CHI, pages 1–20.

Martínez, M. C., Sádaba, C., and Serrano-Puche, J. (2021). Meta-framework of digital literacy: a comparative analysis of 21st-century skills frameworks. Revista Latina de Comunicacion Social, 79:76–110.

Mattar, J., Santos, C. C., and Cuque, L. M. (2022). Anal-

ysis and comparison of international digital compe-

tence frameworks for education. Education Sciences,

12(12).

Mediasmarts (2022). USE, UNDERSTAND & ENGAGE:

A Digital Media Literacy Framework for Canadian

Schools.

Ministère de l'Éducation et de l'Enseignement supérieur (2019). Digital Competency Framework.

Mishra, P. and Koehler, M. J. (2006). Technological peda-

gogical content knowledge: A framework for teacher

knowledge. Teachers College Record, 108:1017–

1054.

Nature Machine Intelligence (2023). What’s the next word

in large language models?

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., and Qiao, M. S.

(2021). Conceptualizing AI literacy: An exploratory

review. Computers and Education: Artificial Intelli-

gence, 2:100041.

Ng, D. T. K., Leung, J. K. L., Su, J., Ng, R. C. W., and Chu,

S. K. W. (2023). Teachers’ AI digital competencies

and twenty-first century skills in the post-pandemic

world. Educational technology research and devel-

opment, 71:137–161.

Nárosy, T., Schmölz, A., Proinger, J., and Domany-Funtan, U. (2022). Digitales Kompetenzmodell für Österreich. Medienimpulse.

Object Management Group (2017). Unified Modeling Lan-

guage – version 2.5.1. https://www.omg.org/spec/

UML/2.5.1.

OpenAI (2022). Introducing ChatGPT.

Petersen, K., Feldt, R., Mujtaba, S., and Mattsson, M.

(2008). Systematic mapping studies in software en-

gineering. In Proc. of EASE, EASE’08, page 68–77.

BCS Learning & Development Ltd.

Ralph, P. et al. (2021). Empirical Standards for Software

Engineering Research.

Redecker, C. (2017). DigCompEdu.

Rosado, E. and Belisle, C. (2006). Analysing Digital Lit-

eracy Frameworks. A European framework for digital

literacy (eLearning Programme 2005-2006).

Sánchez-Canut, S., Usart-Rodríguez, M., Grimalt-Álvaro, C., Martínez-Requejo, S., Lores-Gómez, B., et al. (2023). Professional Digital Competence: Definition, Frameworks, Measurement, and Gender Differences: A Systematic Literature Review. Human Behavior and Emerging Technologies, 2023.

Santana, M. and Díaz-Fernández, M. (2023). Competencies for the artificial intelligence age: visualisation of the state of the art and future perspectives. Review of Managerial Science, 17:1971–2004.

Sattelmaier, L. and Pawlowski, J. M. (2023). Towards a Generative Artificial Intelligence Competence Framework for Schools. In Proc. of ICOEINS, pages 291–307. Atlantis Press.

SFIA Foundation (2021). Skills Framework for the Infor-

mation Age (SFIA - 8).

Shiohira, K. (2021). Understanding the Impact of Artificial

Intelligence on Skills Development. Education 2030.

Siddiq, F. (2018). A comparison between digital compe-

tence in two Nordic countries’ national curricula and

an international framework: Inspecting their readiness

for 21st century education. Seminar.net.

Siina, C., Fuller, S., and Sakamoto, J. (2022). Educators’

Digital Competency Framework.

Tomczyk, L. and Fedeli, L. (2021). Digital Literacy

among Teachers - Mapping Theoretical Frameworks:

TPACK, DigCompEdu, UNESCO, NETS-T, DigiLit

Leicester. Proc. of IBIMA, 23-24 November 2021,

Seville, Spain, pages 244–252.


Trujillo Sáez, F., Alvarez Jiménez, D., Montes Rodríguez, R., Segura Robles, A., and García San Martín, M. (2020). The Global Framework for Educational Competence in the Digital Age.

UNESCO (2023). Digital Frameworks.

VandenBos, G. R. (2007). APA dictionary of psychology.

American Psychological Association.

Vuorikari, R., Kluzer, S., and Punie, Y. (2022). DigComp

2.2, The Digital Competence Framework for Citizens:

With new Examples of Knowledge, Skills and Atti-

tudes. Technical report, Publications Office of the Eu-

ropean Union, JRC.

Wohlin, C. (2014). Guidelines for snowballing in system-

atic literature studies and a replication in software en-

gineering. In Proc. of EASE, pages 1–10.

Wolny, S., Mazak, A., Carpella, C., Gneist, V., and Wim-

mer, M. (2017). Thirteen years of SysML: a system-

atic mapping study. Software and Systems Modeling,

19:111–169.

Yang, L., García-Holgado, A., and Martínez Abad, F. (2021). A Review and Comparative Study of Teacher's Digital Competence Frameworks: Lessons Learned, chapter 03, pages 51–71. IGI Global.

Zarifhonarvar, A. (2023). Economics of ChatGPT: A Labor

Market View on the Occupational Impact of Artificial

Intelligence. Journal of Electronic Business & Digital

Economics.

Zhao, L., Alhoshan, W., Ferrari, A., Letsholo, K. J., Ajagbe,

M. A., Chioasca, E.-V., and Batista-Navarro, R. T.

(2021). Natural Language Processing for Require-

ments Engineering: A Systematic Mapping Study.

ACM Comput. Surv., 54(3).
