Forecasting the Future Development of ABCtronics: A Comprehensive Analysis

Guoer Cao

Faculty Office of Humanities and Social Sciences, University of Nottingham Ningbo China, Ningbo, China

Keywords: Market Demand Estimation, Sales Forecasting, Analysis of Quality Control, Downtime Distribution of

Equipment, Statistical Analysis of Customer Satisfaction.

Abstract: This paper presents an in-depth case study analysis of ABCtronics, a player in the semiconductor industry,

focusing on the complexities of manufacturing processes, quality control mechanisms, and strategic decisions

necessary for maintaining competitiveness and meeting customer expectations. Key aspects of the study

include differing perspectives on downtime distribution of ion implanters, a comparative analysis of quality

control methods (specifically, batch acceptance testing and single chip testing), statistical analysis of customer

satisfaction, and market demand estimation and sales forecasting. Applying statistical methods, the paper

reveals disagreements between Mark and Stuart on equipment downtime distribution and suggests equipment

replacement to enhance efficiency. Furthermore, the paper evaluates the effectiveness of quality control

methods, highlighting the greater rigor of single chip testing in detecting defects, making it the preferred

quality control approach. Additionally, the study involves an analysis of customer perception of products,

quantifying customer satisfaction by calculating sample means, standard deviations, and confidence intervals,

and discusses the feasibility of achieving sales targets. Overall, this research provides insight

into the challenges faced by companies in the semiconductor industry and underscores the importance of

remaining competitive in a dynamic market environment.

1 INTRODUCTION

ABCtronics is a semiconductor manufacturing

company established in 1997. Initially a small-scale

operation, it has grown into a medium-scale

enterprise. The company specializes in various wafer

product lines, including mixed-signal integrated

circuits and analog and high-voltage circuit boards.

A significant portion of ABCtronics' business relies

on one major client, XYZsoft. The semiconductor

manufacturing industry, where ABCtronics operates,

is characterized by highly cyclical demand patterns.

The importance of studying ABCtronics lies in its

approach to quality control and the challenges it faces

in a competitive and dynamic market. The company

has adopted statistical methods for quality control,

implementing a Lot Acceptance Testing Method

(LATM) to check the quality of its IC chips.

ABCtronics is considering shifting to a new

technology, "defect-free manufacturing," which

could reduce the probability of producing a defective

chip. Additionally, there is a proposal to change the

current quality control policy from LATM to an

Individual Chip Testing Method (ICTM), which is

expected to enhance quality assurance and maintain a

strong relationship with its biggest customer,

XYZsoft. The case of ABCtronics presents an

opportunity to understand the complexities of

manufacturing processes, quality control

mechanisms, and the strategic decisions that

companies in the semiconductor industry must make

to remain competitive and meet customer

expectations. This understanding is crucial in

analyzing the company's potential to overcome its

challenges, such as cyclical market demands and the

need for technological advancements in quality

control.

2 SUPPORTING ANALYSIS

In the quantitative analysis of the case pertaining to

ABCtronics, a significant divergence is observed

between the assessments of Mark and Stuart

regarding the downtime distribution of the ion

implanter. To elucidate this discrepancy, a rigorous

Cao, G.

Forecasting the Future Development of ABCtronics: A Comprehensive Analysis.

DOI: 10.5220/0012850300004547

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Data Science and Engineering (ICDSE 2024), pages 577-581

ISBN: 978-989-758-690-3

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

computation of the expected value, variance, and

probability for each proposed distribution is

undertaken (Box et al. 2005). These statistical

measures are employed to distinctly delineate the

disparities between the two distribution models,

thereby facilitating a more comprehensive

understanding of the distribution characteristics.

Furthermore, the inefficiency and suboptimal

performance of the current ion implanter necessitate

the consideration of its replacement. This

recommendation is posited following an in-depth

evaluation of the implanter's operational parameters

and its impact on overall productivity. Additionally,

the manuscript critically examines the proposition put

forth by Mark regarding the implementation of a 30%

threshold to regulate the fabrication process. The beta

distribution function (BETA.DIST) is utilized to

assess the feasibility of this threshold, subsequently

substantiating the efficacy of Mark's suggestion in

enhancing process control. In the domain of quality

control methodologies, a comparative analysis is

conducted between the Lot Acceptance Testing

Method (LATM) and the Individual Chip Testing

Method (ICTM) (Montgomery 2013). While the

LATM is characterized by a higher acceptance rate, it

is posited that this may lead to excessive leniency,

increasing the risk of accepting defective chips. In

contrast, the ICTM, which entails a meticulous

examination of each chip, demonstrates a lower

acceptance probability, thereby significantly

reducing the likelihood of defective chip approval.

This analysis underscores the ICTM's enhanced

stringency and its effectiveness in quality assurance.

The study further explores customer perception

analysis, revealing through probability magnitude

examination that the majority of customers categorize

the product as "satisfactory" rather than "good."

Employing a sample size of 40, the study calculates

the sample mean, standard deviation, and the relevant

t-value to derive a confidence interval. The

determination of the minimum sample size

incorporates the use of coefficients and margin of

error, often resulting in a non-integer value. To

address this, the adoption of the nearest higher integer

as the sample size is recommended. Moreover, an

intern proposes the adoption of a multiple regression

equation as a strategy to mitigate multicollinearity. A

detailed analysis of specific data sets is conducted,

evaluating the regression model's efficacy through R-

squared values and significance testing,

complemented by Variance Inflation Factor (VIF)

calculations to detect multicollinearity. In the context

of sales projections, the case study presents average

sales figures and probabilities across various demand

scenarios. The expected sales figures are derived by

multiplying the predicted probabilities of sales

volume in each scenario with their corresponding

average sales figures. An analysis of the probabilities

in the market demand estimate table is undertaken to

estimate the probability of achieving the target sales

volume of 3 million chips.

3 DOWNTIME AND CHEMICAL

IMPURITY PROBLEM

In the present academic study, we rigorously examine

the differing perspectives of Mark and Stuart,

employees at ABCtronics, regarding the downtime

analysis of the ion implanter. Stuart, the president of

the fabrication plant, posits that the downtime and

related activities adhere to a uniform distribution,

denoted as X~U(a,b) (Ross 2014). Conversely, Mark,

the leader of the Quality and Reliability Team (QRT),

contends that the ion implanter’s downtime exhibits a

gamma distribution pattern (Ross 2014). Our

objective is to elucidate the mechanics of each

distribution model and discern the underlying reasons

for their divergent viewpoints. To initiate this

analysis, we constructed an Excel spreadsheet based

on Table 1 from the case study, titled "Data on

downtime of ion implantation." This spreadsheet

facilitates the conversion of downtime data from

hours and minutes into a uniform hourly metric,

enabling the computation of the mean downtime

(6.01944 hours) and variance (2.76823). Assuming a

uniform distribution of this data, we calculate the

distribution's parameters. Within a uniform

distribution, the expected value of a random variable

X is determined by

$$E(X) = \frac{a+b}{2} \quad (1)$$

and its variance by

$$\mathrm{Var}(X) = \frac{(b-a)^2}{12} \quad (2)$$

By inputting the calculated mean and variance

into these formulas, we resolve the values of 'a' and

'b', leading to a more detailed understanding of the

probability density function. Subsequently, we

calculate the probability of the downtime being

within the range of 0 to 5 hours, as indicated by the

integral of the probability density function over this

interval. This computation reveals a significant

probability, suggesting a substantial likelihood of the

downtime falling within the desired range under a

uniform distribution model. Alternatively, we

hypothesize that the data follows a gamma

distribution, applying the mean and variance to derive

the parameters theta and k. Utilizing Excel's

GAMMA.DIST function, we ascertain the

probability of achieving a downtime of 5 hours or less

under this model (Ross 2014). The differing

probabilities yielded by the uniform and gamma

distributions underscore the basis for the

disagreement between Mark and Stuart. Given the

prolonged downtime and its detrimental impact on

production efficiency, we advocate for the

replacement of the ion implanter. Our analysis

demonstrates a considerable probability of achieving

a reduced downtime under the uniform distribution,

thereby supporting the recommendation for

equipment change to enhance operational efficiency.

Additionally, we examine Mark's proposal regarding

the use of impurity levels in the fabrication process.

By employing the beta distribution function in Excel,

we assess the appropriateness of a 30% impurity

threshold (Evans et al. 2000). The resultant low

probability density function value at this threshold,

coupled with the observed trend of a sharp increase in

impurities beyond 30%, corroborates Mark's analysis.

Thus, setting a 30% impurity cutoff emerges as a

judicious decision to mitigate the influx of impurities

in the fabrication process.
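
The moment-matching steps described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses the mean and variance reported in the text, fits both distributions by the method of moments, and replaces Excel's GAMMA.DIST with a hand-written series for the regularized incomplete gamma function. The function names are hypothetical and are not taken from the case study.

```python
import math

# Sample moments reported in the text (hours, hours^2).
MEAN = 6.01944
VAR = 2.76823


def uniform_params(mean, var):
    """Solve E(X) = (a+b)/2 and Var(X) = (b-a)^2/12 for a and b."""
    half_width = math.sqrt(3.0 * var)  # equals (b - a) / 2
    return mean - half_width, mean + half_width


def uniform_cdf(x, a, b):
    """P(X <= x) for X ~ U(a, b)."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def gamma_params(mean, var):
    """Method of moments: mean = k * theta, var = k * theta^2."""
    theta = var / mean
    k = mean / theta
    return k, theta


def gamma_cdf(x, k, theta, max_terms=1000):
    """P(X <= x) for a gamma(k, theta) variable.

    Uses the power series of the regularized lower incomplete gamma
    function; this stands in for Excel's GAMMA.DIST(x, k, theta, TRUE).
    """
    if x <= 0:
        return 0.0
    z = x / theta
    term = 1.0 / k
    total = term
    for n in range(1, max_terms):
        term *= z / (k + n)
        total += term
        if term < 1e-15 * total:
            break
    return total * math.exp(k * math.log(z) - z - math.lgamma(k))


a, b = uniform_params(MEAN, VAR)
p_uniform = uniform_cdf(5.0, a, b) - uniform_cdf(0.0, a, b)

k, theta = gamma_params(MEAN, VAR)
p_gamma = gamma_cdf(5.0, k, theta)

print(f"Uniform fit: a = {a:.4f}, b = {b:.4f}, P(0 <= X <= 5) = {p_uniform:.4f}")
print(f"Gamma fit:   k = {k:.4f}, theta = {theta:.4f}, P(X <= 5) = {p_gamma:.4f}")
```

Under these assumptions the uniform fit gives a of roughly 3.14 hours and b of roughly 8.90 hours. Printing both probabilities side by side makes the gap between the two models, and hence the source of the disagreement between Mark and Stuart, easy to inspect.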

4 COMPARISON OF QUALITY

CONTROL METHODS

In this comprehensive academic study, we rigorously

investigate the quality control processes utilized by

ABCtronics in the production of Integrated Circuit

(IC) chips. Our focus is primarily on the assessment

and comparison of two distinct quality control testing

methods: the Lot Acceptance Testing Method

(LATM) and the Individual Chip Testing Method

(ICTM) (Pyzdek and Keller 2018). These methods

are pivotal in ensuring the reliability and performance

standards of IC chips, which are critical components

in various electronic devices. The LATM, a prevalent

method in the semiconductor industry, involves a

statistical sampling process where a random sample

of 25 IC chips is selected from a larger batch of 500

chips, without the option of replacement. The entire

lot's acceptance is contingent upon the condition that

the sample contains fewer than two defective chips. If

the sample reveals two or more defective chips, the

lot is deemed unacceptable and is subsequently

rejected. The statistical framework of LATM is

underpinned by the Hypergeometric distribution, a

discrete probability distribution that is highly

applicable in scenarios of sampling without

replacement (Montgomery 2013). Through the

application of the HYPGEOMDIST function in

Excel, we meticulously calculate the probabilities for

the presence of zero and one defective chip within the

sample, which are found to be 0.9024 and 0.09519,

respectively. Consequently, the cumulative

probability of a lot being accepted under the LATM

criteria is determined to be 0.9976. In stark contrast,

the ICTM presents a more individualized approach to

quality control, where each chip within a sample of

25, drawn sequentially from a lot of 500 chips,

undergoes rigorous testing. This method incorporates

the provision for replacement, ensuring that the lot

size remains constant during the sampling process.

The detection of a defective chip triggers immediate

corrective measures targeted specifically at the faulty

unit. The primary aim of ICTM is to significantly

enhance the precision of the quality control process

by intensively focusing on the identification and

rectification of defective IC chips. The theoretical

foundation of the ICTM is best represented through

the Binomial Distribution, which models the

probability of a fixed number of successes in a

sequence of independent experiments (Learning

2016). By employing the BINOMDIST function in

Excel, we calculate the probability of a lot passing the

ICTM criteria, which is ascertained to be 0.9047. To

perform a thorough comparative evaluation of these

two methodologies, we analyze the probabilities

associated with both LATM and ICTM. This

comparative study reveals that LATM possesses a

higher probability of acceptance than ICTM, by roughly 0.09

(0.9976 versus 0.9047). This finding suggests that the

acceptance criteria established in LATM may exhibit

excessive leniency, potentially leading to the

inadvertent acceptance of defective products within

the manufacturing process. Therefore, the ICTM is

identified as a more rigorous and effective method in

the detection of defective IC chips. As a result of this

analysis, we advocate for the adoption of ICTM over

LATM in ABCtronics' quality control processes. This

recommendation is made with the objective of

enhancing the overall quality and reliability of the IC

chips produced, thereby upholding and potentially

elevating the manufacturing standards within the

semiconductor industry.
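
The two acceptance probabilities can be reproduced with a short Python sketch that mirrors the HYPGEOMDIST and BINOMDIST calculations. Two inputs are assumptions, since the text does not state them: the lot is taken to contain 2 defective chips out of 500 (a defect rate of 0.004), and ICTM is taken to pass only when no defective chip is found. These values were chosen because they reproduce the figures reported above.

```python
from math import comb

LOT_SIZE = 500
SAMPLE_SIZE = 25
# Assumption: the number of defective chips per lot is not given in the text;
# 2 defective chips reproduces the reported probabilities.
DEFECTIVE_IN_LOT = 2


def hypergeom_pmf(k, lot, defective, sample):
    """P(exactly k defective chips in a sample drawn without replacement)."""
    return comb(defective, k) * comb(lot - defective, sample - k) / comb(lot, sample)


def binom_pmf(k, n, p):
    """P(exactly k defective chips in n independent draws with defect rate p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# LATM: accept the lot when the sample holds fewer than two defective chips.
latm_p0 = hypergeom_pmf(0, LOT_SIZE, DEFECTIVE_IN_LOT, SAMPLE_SIZE)
latm_p1 = hypergeom_pmf(1, LOT_SIZE, DEFECTIVE_IN_LOT, SAMPLE_SIZE)
latm_accept = latm_p0 + latm_p1

# ICTM: sampling with replacement; assumed to pass only with zero defects.
defect_rate = DEFECTIVE_IN_LOT / LOT_SIZE
ictm_accept = binom_pmf(0, SAMPLE_SIZE, defect_rate)

print(f"LATM: P(0) = {latm_p0:.4f}, P(1) = {latm_p1:.5f}, P(accept) = {latm_accept:.4f}")
print(f"ICTM: P(accept) = {ictm_accept:.4f}")
print(f"Difference: {latm_accept - ictm_accept:.4f}")
```

Changing DEFECTIVE_IN_LOT shows how quickly the two acceptance probabilities diverge as the underlying defect rate rises.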

5 CUSTOMER FEEDBACK

In this academic investigation, we engage in a

statistical analysis to comprehend customer attitudes

toward the products offered by the company under

study. To this end, we have established specific

statistical parameters: a sample mean (x̄) of 56.9

and a sample standard deviation (s) of 18.979. This

analysis seeks to quantify customer perceptions, a

task necessitated by initial qualitative assessments

made by Robert, who believed the products to be

well-received. We have computed the probabilities

corresponding to four distinct customer satisfaction

categories. This calculation was done to

quantitatively assess customer opinions regarding the

product's quality. The derived probabilities for each

category are as follows: P(needs improvement) = 0.2,

P(satisfactory) = 0.4, P(good) = 0.3, and P(very good)

= 0.1. These probabilities indicate that a significant

proportion of customers consider the products to be

satisfactory, rather than good. Given that the

population standard deviation (σ) is unknown for

this sample, we estimated it using the sample standard

deviation in conjunction with a t-distribution (Zach

2023; Math 2023). Using a 90% confidence

interval, with a significance level (α) of 0.1 and a

critical t-value of 1.685 (39 degrees of freedom), we calculated the confidence

interval using the established statistical formula:

$$\bar{x} - t_{\alpha/2}\,\frac{s}{\sqrt{n}} \le \mu \le \bar{x} + t_{\alpha/2}\,\frac{s}{\sqrt{n}} \quad (3)$$

The computation yielded an upper confidence

limit of 61.95599 and a lower limit of 51.84401,

thereby providing a range within which the true mean

customer score is likely to fall (Zach 2023).

Furthermore, to determine the requisite sample size

for achieving a 90% confidence level in analyzing the

mean customer score, we employed the formula:

$$n = \left(\frac{t_{\alpha/2} \cdot s}{E}\right)^2 \quad (4)$$

where the margin of error is set at 4 (E = 4). This

calculation gives approximately 63.9, which rounds up to a minimum sample size of 64

(Bevans 2023). This sample size is essential for

ensuring statistical reliability and validity in the

assessment of customer satisfaction scores.
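
As a worked check of the interval and sample-size formulas, the following Python sketch plugs in the values quoted in this section. The critical t-value is taken directly from the text rather than computed, and the function names are hypothetical.

```python
import math

# Values quoted in the text.
N = 40            # sample size
X_BAR = 56.9      # sample mean
S = 18.979        # sample standard deviation
T_CRIT = 1.685    # critical t-value for a 90% interval, as given in the text
MARGIN = 4.0      # desired margin of error E


def confidence_interval(x_bar, s, n, t_crit):
    """Two-sided interval x_bar +/- t * s / sqrt(n)."""
    half_width = t_crit * s / math.sqrt(n)
    return x_bar - half_width, x_bar + half_width


def min_sample_size(s, t_crit, margin):
    """Smallest integer n with t * s / sqrt(n) <= margin."""
    return math.ceil((t_crit * s / margin) ** 2)


lower, upper = confidence_interval(X_BAR, S, N, T_CRIT)
n_required = min_sample_size(S, T_CRIT, MARGIN)

print(f"90% CI for the mean score: [{lower:.3f}, {upper:.3f}]")
print(f"Minimum sample size for E = {MARGIN}: {n_required}")
```

The limits agree with the reported 51.84401 and 61.95599 to about three decimal places; the small residual difference presumably comes from rounding of the inputs quoted in the text.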

6 EQUATIONS AND

MATHEMATICS

In the process of addressing question (a), this

academic paper employs a multiple linear regression

model to analyze the relationship between three

independent variables, denoted as X1, X2, and X3,

and the dependent variable Y. The model is expressed as follows:

$$Y = 8.861 - 0.00524X_1 - 5.505X_2 + 1.130X_3 \quad (5)$$

The initial step in our analysis involves assessing

the model's goodness of fit, as indicated by the

coefficient of determination (R²). The observed R²

value of 0.908, which surpasses the threshold of 0.75,

suggests an adequate preliminary fit of the model

(Biometrika 2014). To further validate the model, t-

tests are conducted on the coefficients representing

demand, price, and economic factors as shown in

figure 1. These tests aim to examine the null

hypothesis that posits the coefficients of these

variables are equal to zero. The results of the t-tests,

reflected in the p-values, indicate that at the 5%

significance level, the coefficient for demand is not

statistically significant, so we fail to reject

the null hypothesis for this variable. In contrast, the

coefficients for price and economic factors

are statistically significant at the same

significance level, so the null hypothesis is rejected

for them, suggesting a meaningful impact on

sales. An additional aspect of our analysis involves

the detection of multicollinearity within the

regression model. This is achieved by calculating the

Variance Inflation Factor (VIF) for each independent

variable. The VIF, determined as the ratio of the

variance of a coefficient in a model with multiple

predictors to the variance of that coefficient in a

model with only one predictor, serves as an indicator

of multicollinearity. A VIF value exceeding 5 is

generally considered indicative of multicollinearity.

As shown in Figure 2, the VIF for every variable in this

model is less than 5. Therefore, there is no obvious

multicollinearity in this model. For question (b), we

derive probabilities and average sales volumes under

distinct market demand scenarios, utilizing data from

the provided table. The probabilities for these

scenarios are as follows: P(X>200) = 0.4, P(100≤

X≤200) = 0.4, and P(X<100) = 0.2.

Corresponding average sales figures are

Y(X>200) = 2.385, Y(100≤X≤200) = 2.14,

and Y(X<100) = 1.265. Multiplying each probability

by its average sales figure gives the contribution of each

scenario to expected sales: E(X>200) = 0.954, E(100≤X≤200) =

0.856, and E(X<100) = 0.253. Additionally,

referencing the market demand estimate table, we

ascertain the probability P(Y>3) to be 0.2,

indicating a 20% likelihood of achieving the target

sales volume of 3 million chips. This probabilistic

approach provides insights into the potential sales

performance under varying market conditions.
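
The scenario arithmetic for question (b) can be laid out as a small Python sketch. It uses only the probabilities and average sales figures quoted above; the assumption that sales are measured in millions of chips is inferred from the 3 million chip target and is not stated explicitly for these figures.

```python
# (probability, average sales) per demand scenario, as quoted in the text.
# Assumption: sales figures are in millions of chips.
scenarios = {
    "X > 200": (0.4, 2.385),
    "100 <= X <= 200": (0.4, 2.14),
    "X < 100": (0.2, 1.265),
}

# Contribution of each scenario = probability * average sales.
contributions = {name: p * sales for name, (p, sales) in scenarios.items()}

# Overall expected sales is the sum of the scenario contributions.
expected_sales = sum(contributions.values())
total_probability = sum(p for p, _ in scenarios.values())

for name, value in contributions.items():
    print(f"{name}: {value:.3f}")
print(f"Total probability: {total_probability:.1f}")
print(f"Expected sales: {expected_sales:.3f}")
```

Summing the three contributions gives an overall expected sales figure of about 2.063, which sits well below the target of 3 and is consistent with the low probability of reaching that target.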

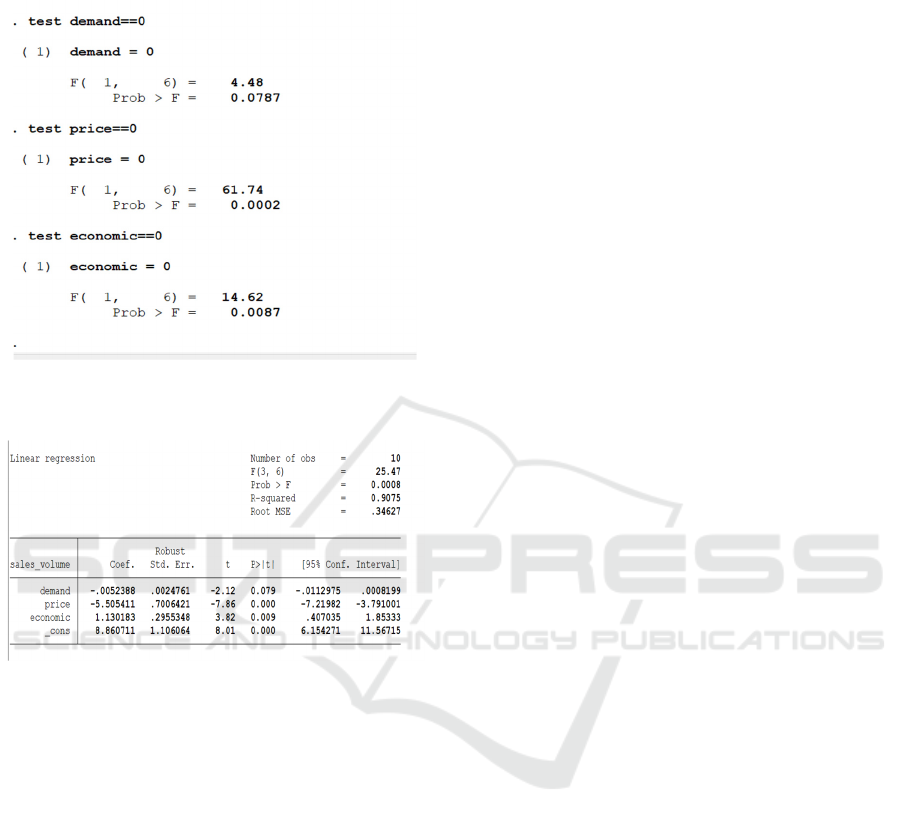

Figure 1. Data of testing individual coefficients

(Photo/Picture credit: Original)

Figure 2. Data of linear regression model (Photo/Picture

credit: Original)

7 CONCLUSION

In conclusion, this study has substantiated several key

findings pertinent to the operations and quality

control measures at ABCtronics. Firstly, our analysis

supports Mark's assumption that the downtime of the

ion implanter follows a gamma distribution pattern.

This insight provides a more accurate framework for

anticipating and managing equipment downtime.

Further investigation into the chemical impurity

levels in the fabrication process reveals that a

threshold of 30% impurity is a critical juncture;

beyond this point, there is a marked increase in

impurity levels. Therefore, implementing a 30%

cutoff for impurity levels in the fabrication process

emerges as a prudent strategy to mitigate the risks

associated with excessive impurities. A comparative

analysis between the existing Lot Acceptance Testing

Method (LATM) and the proposed Individual Chip

Testing Method (ICTM) indicates that LATM has a

relatively higher probability of acceptance. However,

this could potentially lead to the inadvertent approval

of defective products due to its moderate threshold

value. Conversely, ICTM demonstrates greater

stringency and effectiveness in detecting defective

chips, making it a preferable quality control method.

The study also delved into customer satisfaction,

utilizing data from Table 2 to compute a 90%

confidence interval for the average customer score,

which ranges from 51.84401 to 61.95599.

Additionally, considering a specified margin of error

of 4, we determined that a minimum sample size of

64 is required to analyze the mean customer score

with 90% confidence. Finally, an analysis of market

demand estimates suggests a 20% probability

(P(Y>3) = 0.2) of achieving the target sales volume

of 3 million chips. The semiconductor industry is

currently experiencing medium-level demand, with

the potential for meeting or exceeding targets if

additional orders, such as those from Customer

PQRsystems, are secured. This finding underscores

the importance of strategic market engagement and

customer acquisition in achieving sales objectives.

REFERENCES

G. E. P. Box, J. S. Hunter, W. G. Hunter, Statistics for

Experimenters: Design, Innovation, and Discovery

(2005).

D. C. Montgomery, Introduction to Statistical Quality

Control (2013).

S. M. Ross, Introduction to Probability Models (2014).

M. Evans, N. Hastings, B. Peacock, Statistical Distributions

(2000).

T. Pyzdek, P. A. Keller, The Six Sigma Handbook (2018).

M. E. Learning, The Experts at Dummies, Using the t-

Distribution to Calculate Confidence Intervals (2016).

Zach, How to Calculate Confidence Intervals: 3 Example

Problems, Statology (2023).

A. Math, Confidence Interval Using t Distribution

Calculator (2023).

R. Bevans, Understanding Confidence Intervals: Easy

Examples & Formulas (2023).

T. Biometrika, Biometrika 101(4), 927-942 (2014).
