From Low Fidelity to High Fidelity Prototypes: How to Integrate Two Eye Tracking Studies into an Iterative Design Process for a Better User Experience

Gilbert Drzyzgaᵃ and Thorleif Harderᵇ

Institute of Interactive Systems, Technische Hochschule Lübeck, Germany

Keywords: Learner Dashboard, Motivation, Self-Regulation, Cognitive Demand, Usability, User Experience, User Centered Design, Eye-Tracking.

Abstract: This study investigates the effectiveness of an iterative evaluation and design process that uses low-fidelity prototypes (LFPs) and high-fidelity prototypes (HFPs) of a learner dashboard (LD) to improve user experience (UX), evaluated in eye-tracking studies with thinking aloud. The LD is designed to support online students in their learning process and self-regulation. Two studies were conducted: Study 1 focused on an LFP and Study 2 on the HFP version of the prototype. The participants (n=22) from different semesters provided different perspectives, which emphasizes the importance of considering heterogeneous user groups in evaluations. Key findings included fewer adjustments required for the HFP, highlighting the value of early evaluation and iterative design processes in optimizing UX. This iterative approach allowed for continuous improvement based on real-time feedback, resulting in an optimized final prototype that better met functional and cognitive requirements. A comparison of key concepts across both studies revealed positive effects of methodological improvements, demonstrating the effectiveness of combining early evaluations with refined approaches for improved UX design in learning environments.

1 INTRODUCTION

In recent years, there has been growing interest in understanding how users interact with digital products and services, which are becoming increasingly common in everyday life. This is partly driven by their ubiquity in different domains and the emergence of new technologies and applications (Goodwin, 2009; Mohammed & Karagozlu, 2021). To gain a deeper understanding of how users behave and interact with a digital tool such as a Learner Dashboard (LD), methods such as eye-tracking combined with thinking-aloud techniques can provide valuable insights that can then be used to refine the design (Drzyzga et al., 2023; Toreini et al., 2022). The LD is being developed as a plug-in to a Learning Management System (LMS) for a university network in higher education and is intended to help online students with self-regulation and to reduce dropout (Drzyzga et al., 2023). It has been developed through an iterative user-centered design (UCD) process in collaboration with the students who will use it (Drzyzga & Harder, 2023). As part of this iterative process, a second thinking-aloud eye-tracking study was conducted as a follow-up study using a modified design prototype version of the LD. Both studies are designed to understand users' cognitive effort in digital learning environments.

ᵃ https://orcid.org/0000-0003-4983-9862

ᵇ https://orcid.org/0000-0002-9099-2351

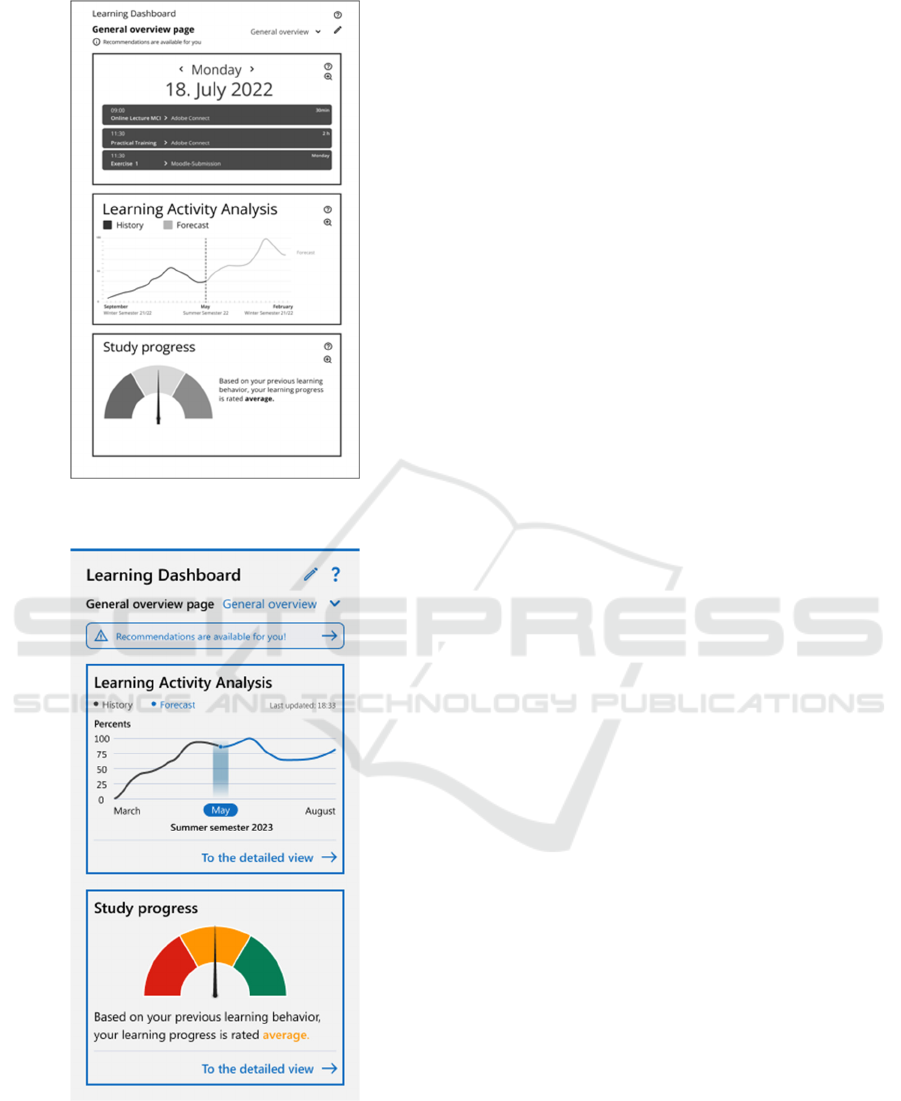

Figure 1 shows the research object used in study

one to investigate the development of a design

prototype based on a low-fidelity prototype (LFP)

(Drzyzga & Harder, 2022). After the development of

the LFP, the prototype was reviewed on several

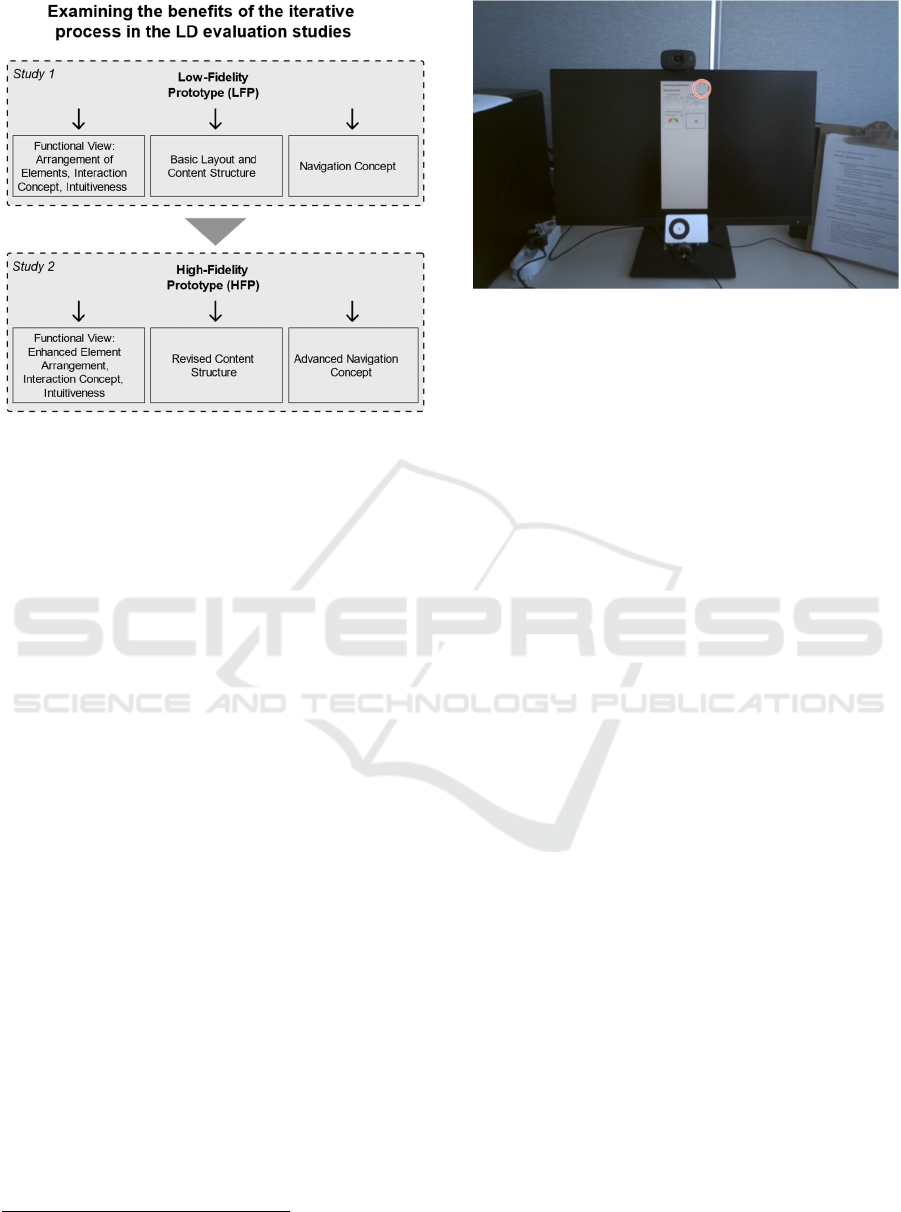

levels. Based on the results of the LFP version, a

high-fidelity prototype (HFP) was designed as shown

in Figure 2. This formed the basis for the evaluation

of Study 2. Such iterative approaches based on

different fidelities (Figure 1) provide an opportunity

to see if previous design decisions are going in the

right direction and if adjustments need to be made

(Bergstrom et al., 2011).

Drzyzga, G. and Harder, T.

From Low Fidelity to High Fidelity Prototypes: How to Integrate Two Eye Tracking Studies into an Iterative Design Process for a Better User Experience.

DOI: 10.5220/0012854900003753

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 19th International Conference on Software Technologies (ICSOFT 2024), pages 485-491

ISBN: 978-989-758-706-1; ISSN: 2184-2833

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.


Figure 1: The LFP LD version (named here “Learning Dashboard”) (Drzyzga & Harder, 2022).

Figure 2: The HFP version (named here “Learning Dashboard”).

Wireframes, such as the one shown in Figure 1, do not need to be to scale initially (Hartson & Pyla, 2018). The different widths of the two prototypes, for example, result from focusing on functionality in the first version and adapting to the later page layout in the HFP version.

An important interest of this study was the degree of validity of the approach despite the different stages of development of the prototype. In this context, the following research questions (RQs) and goals emerged:

1. How does an iterative design process that includes both an LFP and an HFP improve the identification and resolution of usability problems compared to using only one type of prototype?

2. How do the insights gained from interactions with the LFP differ from those gained from HFP evaluations? Do these differences affect the effectiveness and efficiency of solving usability problems at different stages of development?

3. How does iterative evaluation with an LFP and an HFP maintain consistency across participant groups, such as students from different semesters or backgrounds?

The first aim is to investigate whether the eye-tracking studies conducted on the LFP and HFP lead to fewer significant usability problems being identified in subsequent evaluations. The second is to identify how the concerns raised when interacting with LFPs, which relate to general layout organisation and interaction design, differ from those raised with HFPs, which focus more on screen clarity, learning progress information and overall user experience (UX). The third is to examine whether iterative evaluation with LFPs and HFPs provides consistent usability findings across participant groups, minimising the impact of group differences on perceptions, opinions and feedback.

2 METHOD

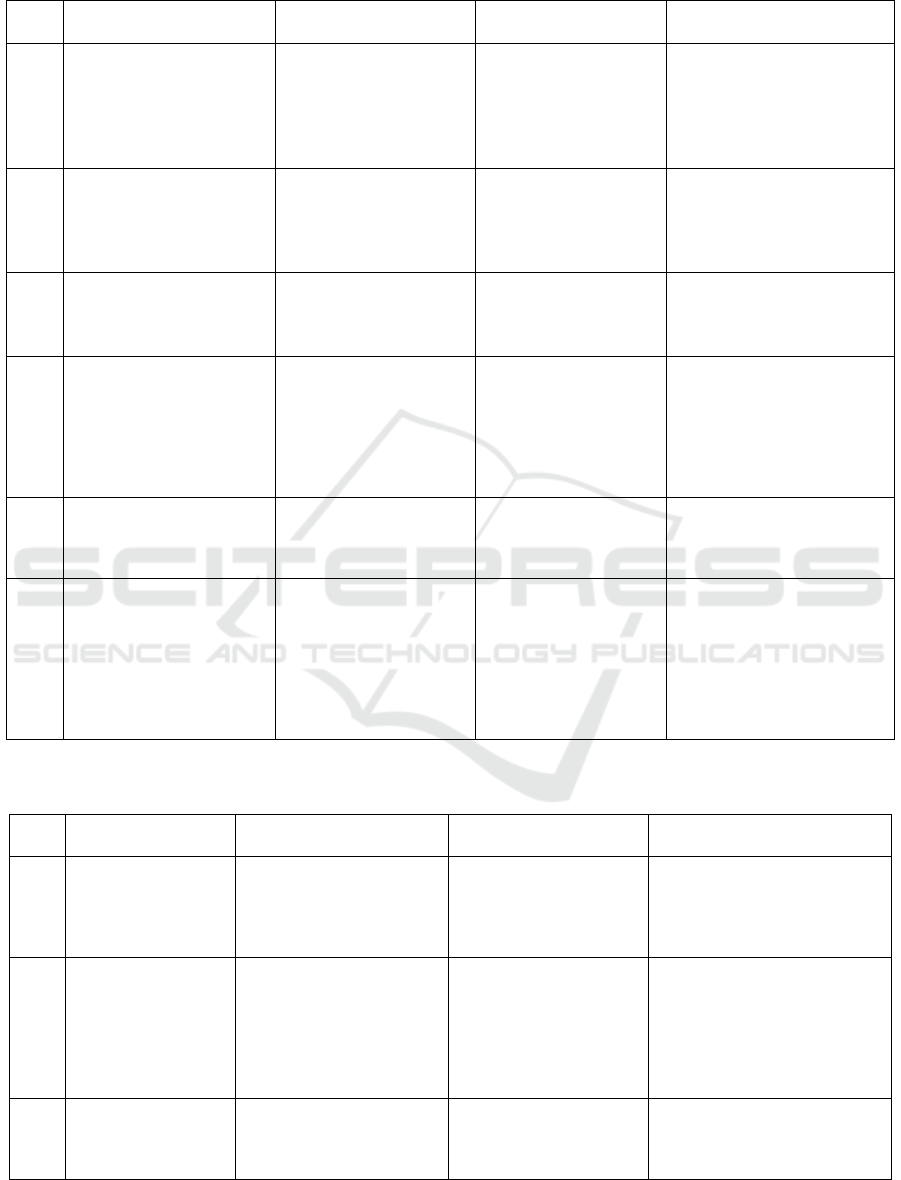

To ensure methodological rigor in this study, the usability and UX of an LFP and an HFP were compared and contrasted using a mixed-methods approach that combined eye-tracking and thinking-aloud techniques (Figure 3). This allowed the research questions related to prototyping learning dashboards to be addressed while leveraging the strengths of both quantitative and qualitative data collection methods.

The two studies recruited students from a variety of classes and backgrounds, for a total of 22 participants. This was done to ensure that the results could be generalized across different user groups and to minimize the impact of group differences.


Figure 3: Evaluation process for the two studies.

3 CONDUCTING AND SETTING UP THE TWO STUDIES

The two studies were preceded by the definition of clear objectives, the formulation of research questions to guide the studies, and a thorough literature review to contextualize the research within existing knowledge of digital learning processes. The review covered analysis, prototype design, eye-tracking methods and thinking-aloud techniques. This formed the basis for the design of two sequential studies evaluating the LFP and the HFP. Upon completion of both studies, the data sets were analyzed to provide quantitative and qualitative insights into the user behavior and cognitive processes associated with each prototype.

The participants wore “Tobii Pro Glasses 3” eye-tracking glasses¹, with which they were able to use their own visual acuity. They were seated approximately 70 cm from the monitor to obtain optimal results. Calibration and validation of the correct position and gaze direction, and consideration of external conditions (e.g., reflections, sunlight), were performed before the start of the experiments (Figure 4). To avoid any disruption to the study, the research assistants drew up a protocol for taking notes (Drzyzga et al., 2023).

¹ https://www.tobii.com/products/eye-trackers/wearables/tobii-pro-glasses-3

Figure 4: Eye-tracking test with HFP open and card editing view (red circle indicates user's viewpoint).
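One common way to turn recorded gaze data into a quantitative measure is to sum the time the gaze spends inside rectangular areas of interest (AOIs), such as the card editing view. The following is a minimal sketch of that idea, not the analysis pipeline used in the studies. It assumes gaze samples have already been mapped to screen pixel coordinates and that AOIs do not overlap; the names `GazeSample`, `AreaOfInterest`, `dwell_time_per_aoi`, and all AOI names, coordinates and timestamps are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GazeSample:
    t_ms: float  # timestamp in milliseconds
    x: float     # gaze position on the screen, in pixels (assumed already mapped)
    y: float


@dataclass(frozen=True)
class AreaOfInterest:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, sample: GazeSample) -> bool:
        # Half-open rectangle: left/top edges inclusive, right/bottom exclusive.
        return (self.left <= sample.x < self.right
                and self.top <= sample.y < self.bottom)


def dwell_time_per_aoi(samples, aois):
    """Return the summed dwell time in milliseconds for each AOI.

    The interval between two consecutive samples is credited to the AOI
    that contains the earlier sample. The last sample has no following
    interval and therefore contributes nothing.
    """
    totals = {aoi.name: 0.0 for aoi in aois}
    for current, following in zip(samples, samples[1:]):
        interval = following.t_ms - current.t_ms
        for aoi in aois:
            if aoi.contains(current):
                totals[aoi.name] += interval
                break  # AOIs are assumed not to overlap
    return totals


# Hypothetical AOIs and gaze samples, for illustration only.
aois = [
    AreaOfInterest("card_editing", 0, 0, 100, 100),
    AreaOfInterest("help_link", 250, 250, 400, 400),
]
samples = [
    GazeSample(0, 10, 10),
    GazeSample(20, 12, 11),
    GazeSample(40, 300, 300),
    GazeSample(60, 310, 305),
    GazeSample(80, 900, 900),  # outside every AOI
    GazeSample(100, 10, 10),
]
dwell = dwell_time_per_aoi(samples, aois)
```

Crediting each interval to the earlier sample keeps the sketch short; a fuller analysis would typically detect fixations first and handle gaps in the recording.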

A total of ten participants (6 male, 4 female) took part in Study 1 and twelve participants (9 male, 3 female) in Study 2. In both in-person studies, the participants were students of a usability course in the 4th semester of a bachelor's programme (“Information Technology and Design”) that teaches, for example, conceptual thinking and digital media production. They were asked to perform different tasks on the design prototype, which was provided during the session as a clickable graphical prototype in an internet browser. Prior to the studies, pre-tests were carried out with three participants each, for a total of two hours, to determine whether the task design and interview questions were suitable and understandable (Drzyzga et al., 2023).

The LFP consisted of 14 different views (wireframes) with contextual modalities, and the HFP contained a total of 9 different wireframes with several contextual modalities to simulate a realistic usage context of the LD prototype. Study 1 was a one-day test and Study 2 took a total of three days to complete, excluding preparation and follow-up activities. The studies followed ethical guidelines.

At the beginning of each study, participants were informed of its aims and objectives. This included the scope and purpose of the study, as well as information about the project and the aim of developing a self-regulation LD as an open-source plugin for the LMS. The briefing took five minutes. All participants gave their consent for their data to be processed for this study. The subsequent evaluation took approximately 20 minutes per participant. At the end of the evaluation, participants filled in the short version of the User Experience Questionnaire (UEQ-S) (Schrepp et al., 2017).
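For readers unfamiliar with the UEQ-S, the sketch below illustrates how one participant's answers could be scored. It is not taken from the studies; it assumes the scoring described for the UEQ-S by Schrepp et al. (2017): eight items answered on a 7-point scale, rescaled to the range -3 to +3, with the first four items forming the pragmatic quality scale and the last four the hedonic quality scale. The function name `score_ueq_s` and the example answers are hypothetical.

```python
from statistics import fmean

# Assumed item layout of the UEQ-S: items 1-4 pragmatic, items 5-8 hedonic.
PRAGMATIC_ITEMS = range(0, 4)
HEDONIC_ITEMS = range(4, 8)


def score_ueq_s(responses):
    """Score one participant's UEQ-S answers.

    `responses` holds eight integers from 1 (negative term) to 7 (positive
    term). Each answer is shifted to the range -3..+3 before averaging.
    """
    if len(responses) != 8:
        raise ValueError("UEQ-S expects exactly 8 item responses")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("each response must be between 1 and 7")

    shifted = [r - 4 for r in responses]
    return {
        "pragmatic": fmean(shifted[i] for i in PRAGMATIC_ITEMS),
        "hedonic": fmean(shifted[i] for i in HEDONIC_ITEMS),
        "overall": fmean(shifted),
    }


# Hypothetical answers from a single participant, for illustration only.
example_scores = score_ueq_s([7, 6, 5, 6, 4, 5, 3, 4])
```

Scale values for a whole study would then be obtained by averaging these per-participant scores across all participants.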


Table 1: Comparison of some of the key concepts of the two studies with examples of improvements for the evaluation of the different prototypes (LFP & HFP).

| Goal | Description | Study 1 Findings/Concepts (LFP) | Study 2 Findings/Concepts (HFP) | Improvements/Findings |
| --- | --- | --- | --- | --- |
| 1 | Obtaining information about arrangement of elements, layout design, overall UX | Layout organization (e.g. icon placement); Interaction design | Intuitive navigation and labelling | Findings about improved UX due to better arrangement of elements, interaction design changes, clearer labels and easier to understand navigation in the HFP. |
| 2 | Evaluating the clarity of different views and learning progress information | Clarity of higher-level views (semester, study); Informed about learning status | Improved clarity; Trustworthiness of recommendations | Findings show improved clarity in the HFP, trustworthy recommendations, and reduced missing information due to design improvements. |
| 3 | Assessment of the explanatory nature of adding/removing functionality for card editing | Clarity of general workflow | Revised functionality; Clarity of used visual aspects (e.g. colors, icons) | Improved usability in HFP through more intuitive functionality. Colored prototype easier to evaluate. |
| 4 | Evaluating paths to content elements, return to start, labelling of interaction elements, navigation elements, orientation within the LD, and use of drop-down menus | Paths; Return to Start; Labelling; Navigation elements; Orientation; Use of drop-down menus | Easier paths and return to Start; Sufficient labelling; Constant orientation within the LD | Improvements based on findings from both studies, highlighting the improved UX due to better pathfinding, return options, labelling and overall navigation in the HFP. |
| 5 | Evaluating help page content, clarity, scope of information provided, and completeness | Clarity of content; Visibility of information | Clarity of content; Visibility of information | Findings on improved help page design in the HFP. |
| 6 | Gathering general feedback | Ease of paths; Ease of return to start; Sufficiency of labelling; Understanding of navigation; Orientation within the dashboard; Use of drop-down menus | Ease of paths; Ease of return to start; Sufficiency of labelling; Understanding of navigation; Orientation within the dashboard; Use of drop-down menus | Additional findings about aspects of improvements made to the HFP. |

Table 2: Comparison of some of the key concepts of the two studies with examples of improvements to the methodology used.

| Goal | Description | Study 1 Findings/Concepts (LFP) | Study 2 Findings/Concepts (HFP) | Improvements/Findings |
| --- | --- | --- | --- | --- |
| 1 | Obtaining information to optimize the methodology | Gaining insight into how to conduct a wireframe-based evaluation and focus on functional and cognitive requirements | Gaining insight into how to conduct a more complex prototype evaluation | Less moderation of interviews |
| 2 | Obtaining information about the iterative evaluation | Functional as a basis | Repetition based on an advanced prototype | The findings revealed that fewer (greater) adjustments had to be made later. Adjustments (e.g. interactions or functions) are easier to make in an LFP. In the HFP both would have to be tested. |
| 3 | Obtaining additional perspectives and opinions | Smaller group of participants | Smaller group of participants | Findings show that different groups of users/students (different semesters) resulted in different views and opinions. |


4 RESULTS & ANALYSIS

The two studies used the LFP and the HFP to investigate the effectiveness of iterative development within a student-centered design approach for evaluating usability and overall UX in an LD, and to explore the implications of this combined approach. Several key findings emerged from Study 1, which focused on the LFP:

Adjustments were easier to make in the LFP than they would have been in the HFP (Table 2), suggesting that interactions or features can be easily changed early in development without affecting later testing. This underscores the value of incorporating usability and UX evaluation early in the design process and emphasizes iterative procedures for potential UX improvements.

Students from different years provided different perspectives on the LD, demonstrating that involving users with different backgrounds or experiences is critical to gaining a full understanding of their needs and preferences. This finding reinforces the importance of considering heterogeneous user groups in UX evaluations.

The iterative design process allowed for continuous improvement based on real-time feedback from participants, resulting in an improved prototype that better met functional and cognitive requirements. This also underscores the effectiveness of integrating such evaluation approaches early in the LD development lifecycle.

Study 2 builds on Study 1 and incorporates minor methodological refinements, including reduced interaction during interviews to create a more natural UX with less investigator influence on participant responses. The key findings from this combined look at the conduct of both studies were:

Consistent with Study 1, fewer major adjustments were required for the HFP than for the LFP (Table 2), further emphasizing that interactions or features can be easily modified in early stages of development without affecting later stages of testing. This finding reinforces the value of early evaluation and iterative design processes in optimizing the UX of the LD.

In this study, too, participants from different years provided different perspectives on the LD, as observed in Study 1, again highlighting the importance of considering different user groups in UX evaluations. The consistency of this finding across both studies underscores its importance.

As in Study 1, the iterative design process allowed for continuous improvement based on real-time feedback from participants, resulting in an optimized, functionally enhanced prototype at both the UI and cognitive levels.

A comparison of key concepts between the two studies (Table 1) revealed positive effects resulting from methodological improvements, such as less intervention during interviews, and fewer major adjustments required for the HFP compared to the LFP (Table 2). These improvements allowed for continuous refinement based on real user feedback, resulting in a final prototype that effectively addressed the functional and cognitive requirements identified by participants with different backgrounds or experiences. The combination of early evaluations using LFPs with methodological refinements led to an optimized product, demonstrating the value of iterative approaches in UX design for LDs.
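A comparison of this kind can be made more traceable by tallying the coded observations from the thinking-aloud sessions per study and category. The sketch below shows one possible way to do so and is not the procedure used by the authors. The function names `count_issues` and `change_per_category` are hypothetical, the category labels are loosely based on Table 1, and the observations are illustrative placeholder data, not results from the studies.

```python
from collections import Counter


def count_issues(observations):
    """Count coded usability issues per (study, category) pair."""
    return Counter((study, category) for study, category in observations)


def change_per_category(counts, first="Study 1", second="Study 2"):
    """Return, per category, the issue count in `second` minus that in `first`.

    Negative values mean fewer issues were coded in the later study.
    """
    categories = sorted({category for _, category in counts})
    return {c: counts[(second, c)] - counts[(first, c)] for c in categories}


# Illustrative placeholder data only; NOT results from the two studies.
observations = [
    ("Study 1", "navigation"),
    ("Study 1", "navigation"),
    ("Study 1", "labelling"),
    ("Study 1", "help page"),
    ("Study 2", "navigation"),
    ("Study 2", "help page"),
]
counts = count_issues(observations)
change = change_per_category(counts)
```

A `Counter` returns zero for pairs that never occurred, so categories that appear in only one study need no special handling.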

The RQs identified in this study can be answered as follows:

For RQ1, the use of the iterative design process substantially improved the detection and resolution of usability issues compared to using only one type of prototype, resulting in fewer major issues in the subsequent evaluations.

For RQ2, interacting with the LFP primarily identified general layout organisation and interaction design concerns, while the HFP provided more accurate insights into specific visual elements, clarity of views, learning progress information and overall UX.

For RQ3, it could be concluded that the iterative evaluation approach minimised the impact of group differences on perceptions, opinions and feedback by maintaining consistency across different participant groups, such as students from different years or backgrounds.

These first findings demonstrate the benefits of an integrated approach to prototyping in design processes. By combining LFPs and HFPs, usability problems can be identified and resolved more effectively, while minimizing development effort and ensuring a consistent understanding of user needs across different user groups. Finally, this work provides valuable insights for optimizing design workflows by adopting an iterative approach that maximizes efficiency in solving usability challenges at different stages of the development process.

5 DISCUSSION & OUTLOOK

The approach presented provided valuable insights into the evolving usability/UX of the LD and helped to identify potential issues early in the development process.

When comparing the LFP and HFP, improvements were observed in various aspects of the LD, including layout organization, clarity of information presentation, functionality, navigation and help page design. Although conducting separate studies, as was done here, may take more time and effort to plan, administer, transcribe and analyze, it has advantages such as the early identification of functional and cognitive problems through the LFP, including the findings and improvements listed. This iterative approach, with increasing levels of detail as development progresses, was also seen as an advantage by Hartson & Pyla (2018), for example in deciding on initial ideas. Although there are some advantages to starting with such an LFP based on wireframes, users suggested that a colored prototype might be easier to evaluate. In the second study using the HFP, it was possible to focus development on application details and reduce the workload by spreading participants over several days. Bergstrom et al. (2011) stated that further iterations could also create new problems, which in this case could not be immediately identified in the quantitative/qualitative iteration. This could be an advantage of combining these methods in this way. Through an understanding of cognitive effort and user behavior, more effective and efficient interfaces can be developed that are designed to support the learning experience of students and to enable self-regulation.

5.1 Limitations

The findings obtained with the two user groups may not generalize well without further testing in different educational contexts and stages of development. Qualitative methods such as interview techniques may also introduce subjectivity. Additional appropriate metrics would need to be considered to accurately measure UX improvements. The approach itself should consider or incorporate aesthetics and emotional responses in addition to usability. Despite these limitations, an iterative approach is still valuable for improving the usability of educational technology through continuous research and collaboration between stakeholders.

5.2 Potential Areas for Future Research

Future studies could further explore the impact of iterative design processes on usability and UX by including additional methods or comparing different levels of fidelity in more detail. Testing different methods, as was done here, can provide additional insight into user behavior and preferences, leading to more effective design solutions. More research may be needed to investigate the optimal number of iterations or stages in such a development cycle, and the ideal balance between user involvement and time efficiency. This could help to determine whether there are more efficient strategies that still provide valuable insights for improving usability/UX design.

Questions remain as to whether a single-study approach with more participants could have produced similar results, or whether the addition of another iteration step might have provided additional benefits. These open questions provide opportunities to explore alternative development strategies and further refine the process in future studies.

ACKNOWLEDGEMENTS

This work was funded by the German Federal Ministry of Education, grant No. 01PX21001B.

REFERENCES

Bergstrom, J. C. R., Olmsted-Hawala, E. L., Chen, J. M., & Murphy, E. D. (2011). Conducting Iterative Usability Testing on a Web Site: Challenges and Benefits. 7(1).

Drzyzga, G., & Harder, T. (2022). Student-centered Development of an Online Software Tool to Provide Learning Support Feedback: A Design-study Approach. CHIRA, 244–248.

Drzyzga, G., & Harder, T. (2023). A Three Level Design Study Approach to Develop a Student-Centered Learner Dashboard. International Conference on Computer-Human Interaction Research and Applications, 262–281.

Drzyzga, G., Harder, T., & Janneck, M. (2023). Cognitive Effort in Interaction with Software Systems for Self-regulation—An Eye-Tracking Study. In D. Harris & W.-C. Li (Eds.), Engineering Psychology and Cognitive Ergonomics (Vol. 14017, pp. 37–52). Springer Nature Switzerland. https://doi.org/10.1007/978-3-031-35392-5_3

Goodwin, K. (2009). Designing for the digital age: How to create human-centered products and services. Wiley, Wiley Publishing.

Hartson, R., & Pyla, P. S. (2018). The UX book: Agile UX design for a quality user experience. Morgan Kaufmann.

Mohammed, Y. B., & Karagozlu, D. (2021). A review of human-computer interaction design approaches towards information systems development. BRAIN. Broad Research in Artificial Intelligence and Neuroscience, 12(1), 229–250.

Schrepp, M., Hinderks, A., & Thomaschewski, J. (2017). Design and Evaluation of a Short Version of the User Experience Questionnaire (UEQ-S). International Journal of Interactive Multimedia and Artificial Intelligence, 4(6), 103. https://doi.org/10.9781/ijimai.2017.09.001

Toreini, P., Langner, M., Maedche, A., Morana, S., & Vogel, T. (2022). Designing attentive information dashboards. Journal of the Association for Information Systems, 23(2), 521–552.
