Deep-CNN with Bacterial Foraging Optimization Based Cascaded Hybrid Structure for Diabetic Foot Ulcer Screening

Naif Al Mudawi¹ᵃ, Wahidur Rahman²ᵇ and Md. Tusher Ahmad Bappy²

¹ Department of Computer Science, College of Computer Science and Information System, Najran University, Najran, Saudi Arabia

² Department of Computer Science and Engineering, Uttara University, Dhaka, Bangladesh

Keywords:

Diabetic Foot Ulcer, Cascaded Network, Machine Learning, Deep Learning, Bacterial Foraging Optimization.

Abstract:

Diabetic foot ulcers (DFUs) are difficult to heal because of inadequate blood circulation and susceptibility to infection. Untreated DFUs can lead to serious complications, including lower-limb amputation, which substantially reduces quality of life. Although several systems have been developed to detect or classify DFUs using different technologies, only a few integrate Machine Learning (ML), Deep Learning (DL), and optimization techniques. This study presents a method that detects DFUs in photographs. It is organized into distinct phases: building a dataset, extracting features from DFU photos with pre-trained Convolutional Neural Networks (CNNs), selecting the most effective features with an optimization technique, and classifying the images with standard ML algorithms. The dataset is divided into DFU-positive and DFU-negative images. After feature extraction with the CNNs, the Bacterial Foraging Optimization (BFO) approach selects the most relevant features, and seven ML classifiers then classify the photos. The approach was evaluated experimentally. By combining EfficientNetB0, BFO, and a Logistic Regression classifier, the proposed method achieved 100% accuracy in classifying DFU images. The study also compares the method with prior approaches, indicating its potential for practical DFU identification in real-world settings.

1 INTRODUCTION

High blood glucose levels are the defining feature of diabetes mellitus (DM), which is caused by insufficient insulin or inadequate glucose utilization. DM leads to complications including diabetic foot ulcers (DFU) and amputations, as well as heart, kidney, eye, and foot diseases (Association, 2009), (Cho et al., 2015), (Khandakar et al., 2022b), (Munadi et al., 2022). The global number of adults with diabetes rose from 151 million in 2000 to over 537 million in 2021, when about 10.5% of adults worldwide were living with diabetes. An estimated 630 million people are expected to have diabetes by 2035, 80% of them in developing countries (IDF), (Thotad et al., 2023). DFU is a severe complication of diabetes that frequently results in amputation and substantial morbidity. Approximately 34% of people with diabetes will develop DFU at some point in their lives (Association, 2009), (Saeedi et al., 2019), (Armstrong et al., 2017). DFU results from skin and tissue injury caused by poor blood flow and infection, and presents with symptoms such as redness, calluses, and blisters. If left untreated, DFU can lead to amputation and even death (Ghanassia et al., 2008), (Kendrick et al., 2022), (Ahsan et al., 2023). Research indicates that 10-25% of people with diabetes will develop DFU, and a significant number of these cases result in hospitalization and amputation (Jeffcoate and Harding, 2003), (Cavanagh et al., 2005), (Abdissa et al., 2020), (Almobarak et al., 2017). Diabetes-related foot problems are a major cause of hospitalization and amputation even in highly developed countries such as the United States and Qatar (Singh and Chawla, 2006), (Ponirakis et al., 2020), (Ananian et al., 2018).

ᵃ https://orcid.org/0000-0001-8361-6561

ᵇ https://orcid.org/0000-0001-6115-2364

Anxiety and depression are also significant mental health consequences of DFU.

Al Mudawi, N., Rahman, W. and Bappy, M. T. A.

Deep-CNN with Bacterial Foraging Optimization Based Cascaded Hybrid Structure for Diabetic Foot Ulcer Screening.

DOI: 10.5220/0012931300003822

In Proceedings of the 21st International Conference on Informatics in Control, Automation and Robotics (ICINCO 2024) - Volume 1, pages 285-292

ISBN: 978-989-758-717-7; ISSN: 2184-2809

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


Treatment follows a multidisciplinary approach that emphasizes control of diabetes and blood flow to the feet, and includes imaging and blood tests (Ahmad et al., 2018). Human error and a shortage of specialists can impede diagnosis, whereas earlier and more accurate DFU detection could prevent serious complications (Khandakar et al., 2022a). This underscores the need for automatic DFU identification and classification systems. Artificial Intelligence (AI) is essential for early disease detection, particularly in primary care and patient health monitoring, and numerous automated systems have been developed to identify and monitor DFUs using Deep Learning (DL) and Machine Learning (ML). A study (Khandakar et al., 2021) used a traditional ML method to identify diabetic feet in thermogram images and compared Convolutional Neural Networks (CNNs) with ML techniques: MobileNetV2 achieved an F1-score of 95% on two-foot thermogram images, while an AdaBoost classifier using 10 features achieved an F1-score of 97%. A separate study (Thotad et al., 2023) proposed an EfficientNet model for the early detection of DFUs, achieving an accuracy of 98.97%, an F1-score of 98%, a recall of 98%, and a precision of 99%. Another paper (Kendrick et al., 2022) introduced a new training method for the FCN32 VGG network to improve DFU wound segmentation, reaching a Dice score of 0.7446. A further study (Filipe et al., 2022) evaluated two models for classifying foot thermograms. The first model classified images into four categories, while the second first classified a foot as healthy or DFU and then assigned DFUs to one of three severity levels. Model 2 achieved the higher accuracy, 93.2%, in classifying healthy and first-class diabetic feet.

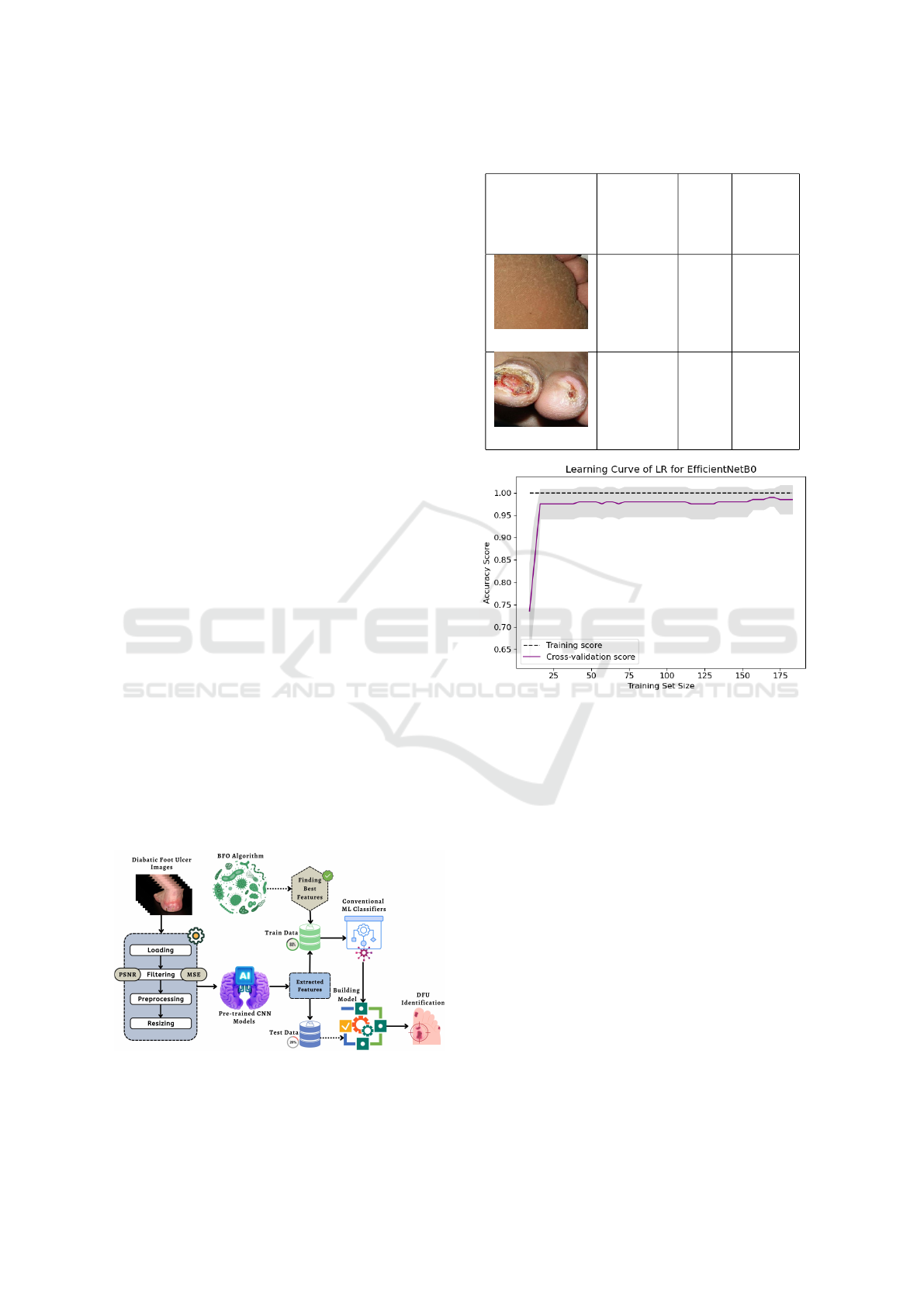

Figure 1: Architecture of the proposed system.

Table 1: Sample foot ulcer images for this research.

Class Name | Class No. | No. of Sample Images
Normal Feet | 0 | 493
Abnormal Feet | 1 | 493

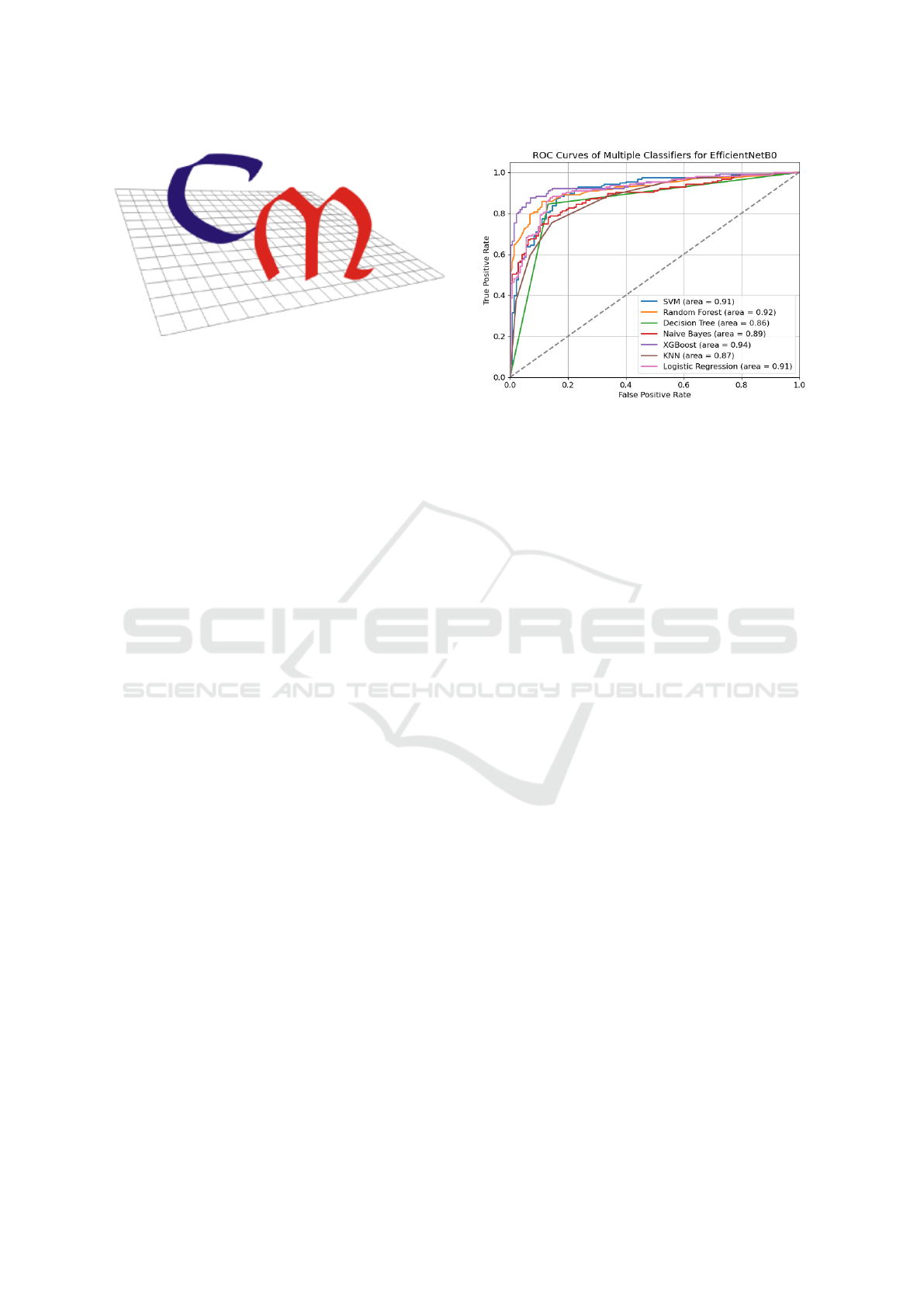

Figure 2: Learning curve of ResNet50-BFO-LR approach for different classifiers.

In addition, an article (Yap et al., 2021) examined current automated DFU detection methods, public datasets, object detection systems, and deep learning methods. Another paper (Scebba et al., 2022) proposed a Detect-and-Segment (DS) method that uses deep neural networks to identify DFUs, separate them from the surrounding tissue, and generate a wound segmentation map; this method improved the Matthews correlation coefficient (MCC) from 0.29 to 0.85. A study (Das et al., 2022) aimed to develop a CNN-based classification framework that outperformed existing results, and achieved 97.4% accuracy by optimizing the model architecture and parameters. Another system for DFU classification (Munadi et al., 2022), based on thermal imaging and deep neural networks, separated DFU thermal images into positive and negative categories with 100% accuracy. Research by (Ahsan et al., 2023) proposed CNN-based frameworks, including AlexNet, VGG16/19, GoogLeNet, ResNet50/101, MobileNet, SqueezeNet, and DenseNet, for classifying ischemia and infection; ResNet50 achieved the highest accuracy, with 99.49% for ischemia and 84.76% for infection. Another study (Kendrick et al., 2022) applied the unsupervised k-means clustering algorithm to a labeled diabetic thermogram dataset to cluster the severity risk of DFUs. The VGG19 CNN model performed best in severity classification, with an accuracy of 95.08%, precision of 95.08%, sensitivity of 95.09%, F1-score of 95.08%, and specificity of 97.2%.

Figure 3: Confusion matrix of the EfficientNetB0-BFO-LR network.

However, current methods have some shortcomings, such as misrepresenting classes and making it hard to locate risky areas when both feet are affected. Some models only label feet as diabetic or healthy without assessing how severe the complications are (Filipe et al., 2022). This study aims to overcome these problems by using pre-trained CNN frameworks for feature extraction, Bacterial Foraging Optimization (BFO) for feature selection, and ML models for DFU classification.

The rest of the article is organized as follows: Section 2 presents the materials and methods, Section 3 reports the results obtained with the proposed methodology, Section 4 compares the proposed framework with existing work, and Section 5 concludes the paper.

2 MATERIALS AND METHOD

This section describes the dataset distribution and the methods used to select, train, and test the models of the proposed cascaded network.

2.1 Dataset

We obtained ulcer-infected photos from both a pub-

licly available dataset (Laith, 2021; Alzubaidi et al.,

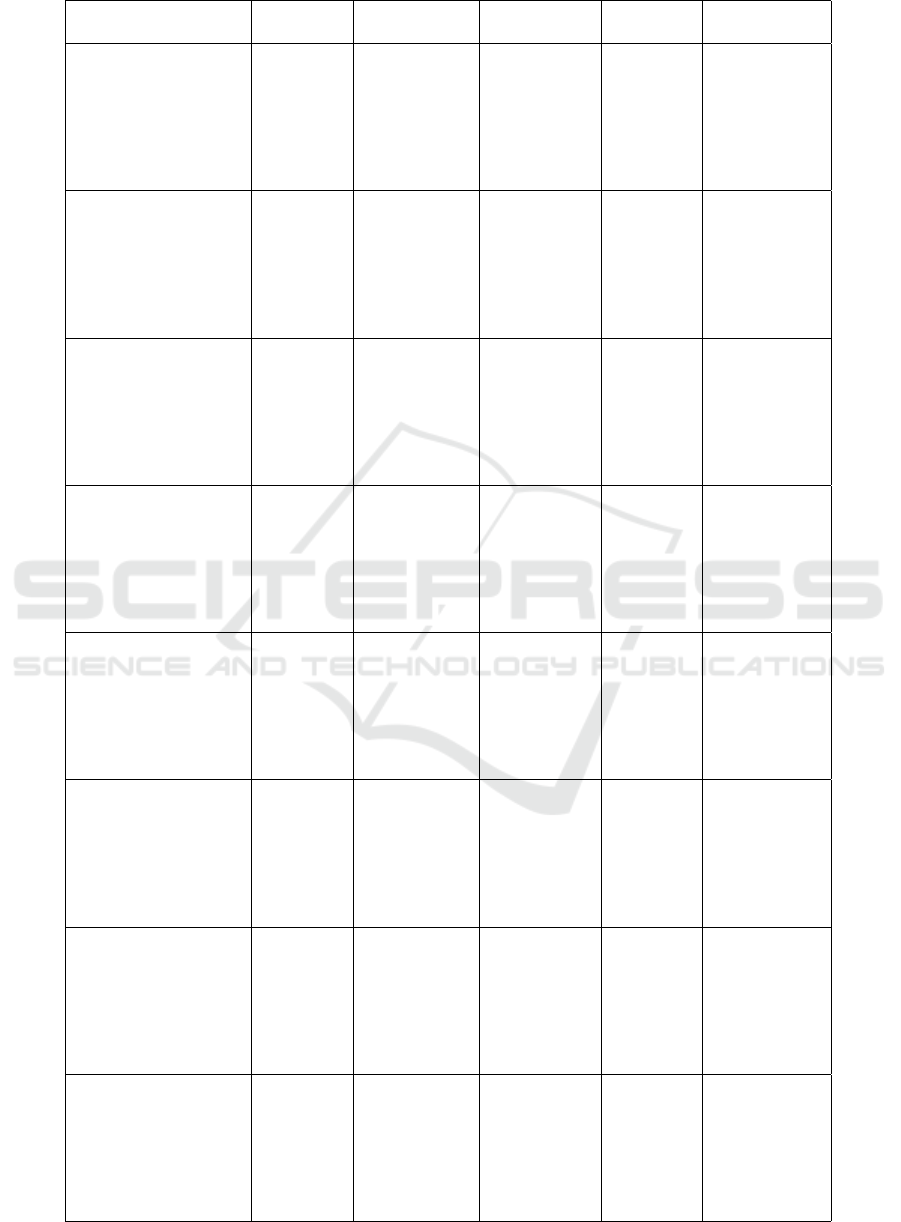

Figure 4: ROC curves of EfficientNetB0-BFO approach for

different classifiers.

2020) and a local general hospital. Subsequently,

a proficient dermatologist scrutinized these pho-

tographs to ascertain the existence of anomalous foot

in them. The dermatologist evaluated the images us-

ing a scale ranging from 0 to 1, with 1 representing

a significant level of abnormality in the foot. For our

investigation, we exclusively included photos that re-

ceived a score of either 1 or 0. In order to validate the

appropriateness of these photos for training machine

learning models, we assessed their quality using the

reference image quality evaluation approach devised

by Yagi et al., which takes into account factors such

as sharpness and noise. This dataset has 1050 photos

depicting deformed feet and 1026 images depicting

normal feet. Tab. 1 displays a selection of photos

taken from the dataset.

2.2 Proposed Architecture and Working Principles

This section presents the image pre-processing techniques used. Because the dataset images contain diverse forms of noise, they must be pre-processed before diabetic foot ulcers are classified in order to obtain dependable results. Image filtering techniques are employed to remove undesired artifacts, while augmentation is used to address the limited size of the dataset. Figure 1 illustrates the structure of the proposed system, and Algo. 1 explains each step of the proposed method in detail. The method begins by pre-processing the input image, a vital step for the smooth operation of the system. The pre-processing stage includes evaluating image quality, for which we developed a tool called the Image Quality Assessment Tool (IQAT).


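Table 2: Performance of Various CNN-Based Feature Extractors with Different Classifiers.

CNN-Based Feature Extractor | Classifier | Accuracy (A) | Precision (P) | Recall (R) | F1-Score (F1)
DenseNet121 + BFO | SVC | 0.9706 | 0.9706 | 0.9706 | 0.9706
DenseNet121 + BFO | RF | 0.9853 | 0.9860 | 0.9850 | 0.9853
DenseNet121 + BFO | DT | 0.9559 | 0.9586 | 0.9552 | 0.9558
DenseNet121 + BFO | NB | 0.9363 | 0.9368 | 0.9360 | 0.9362
DenseNet121 + BFO | XGB | 0.9755 | 0.9762 | 0.9752 | 0.9755
DenseNet121 + BFO | KNN | 0.9657 | 0.9685 | 0.9650 | 0.9656
DenseNet121 + BFO | LR | 0.9853 | 0.9860 | 0.9850 | 0.9853
EfficientNetB0 + BFO | SVC | 1.0000 | 1.0000 | 1.0000 | 1.0000
EfficientNetB0 + BFO | RF | 0.9951 | 0.9950 | 0.9952 | 0.9951
EfficientNetB0 + BFO | DT | 0.9853 | 0.9854 | 0.9856 | 0.9853
EfficientNetB0 + BFO | NB | 0.9510 | 0.9513 | 0.9513 | 0.9510
EfficientNetB0 + BFO | XGB | 1.0000 | 1.0000 | 1.0000 | 1.0000
EfficientNetB0 + BFO | KNN | 0.9951 | 0.9952 | 0.9950 | 0.9951
EfficientNetB0 + BFO | LR | 1.0000 | 1.0000 | 1.0000 | 1.0000
InceptionV3 + BFO | SVC | 0.9559 | 0.9586 | 0.9552 | 0.9558
InceptionV3 + BFO | RF | 0.9608 | 0.9643 | 0.9600 | 0.9606
InceptionV3 + BFO | DT | 0.9265 | 0.9289 | 0.9258 | 0.9263
InceptionV3 + BFO | NB | 0.7745 | 0.7940 | 0.7719 | 0.7695
InceptionV3 + BFO | XGB | 0.9804 | 0.9815 | 0.9800 | 0.9804
InceptionV3 + BFO | KNN | 0.9118 | 0.9164 | 0.9108 | 0.9113
InceptionV3 + BFO | LR | 0.9559 | 0.9602 | 0.9550 | 0.9557
MobileNetV2 + BFO | SVC | 0.9706 | 0.9706 | 0.9706 | 0.9706
MobileNetV2 + BFO | RF | 0.9853 | 0.9852 | 0.9854 | 0.9853
MobileNetV2 + BFO | DT | 0.9167 | 0.9172 | 0.9163 | 0.9166
MobileNetV2 + BFO | NB | 0.9265 | 0.9271 | 0.9269 | 0.9265
MobileNetV2 + BFO | XGB | 0.9755 | 0.9756 | 0.9754 | 0.9755
MobileNetV2 + BFO | KNN | 0.9363 | 0.9368 | 0.9360 | 0.9362
MobileNetV2 + BFO | LR | 0.9755 | 0.9762 | 0.9752 | 0.9755
ResNet50 + BFO | SVC | 1.0000 | 1.0000 | 1.0000 | 1.0000
ResNet50 + BFO | RF | 1.0000 | 1.0000 | 1.0000 | 1.0000
ResNet50 + BFO | DT | 0.9902 | 0.9906 | 0.9900 | 0.9902
ResNet50 + BFO | NB | 0.9804 | 0.9804 | 0.9806 | 0.9804
ResNet50 + BFO | XGB | 0.9902 | 0.9906 | 0.9900 | 0.9902
ResNet50 + BFO | KNN | 0.9951 | 0.9952 | 0.9950 | 0.9951
ResNet50 + BFO | LR | 1.0000 | 1.0000 | 1.0000 | 1.0000
VGG16 + BFO | SVC | 1.0000 | 1.0000 | 1.0000 | 1.0000
VGG16 + BFO | RF | 0.9902 | 0.9902 | 0.9902 | 0.9902
VGG16 + BFO | DT | 0.9755 | 0.9754 | 0.9756 | 0.9755
VGG16 + BFO | NB | 0.9853 | 0.9852 | 0.9854 | 0.9853
VGG16 + BFO | XGB | 0.9853 | 0.9854 | 0.9852 | 0.9853
VGG16 + BFO | KNN | 0.9657 | 0.9685 | 0.9650 | 0.9656
VGG16 + BFO | LR | 1.0000 | 1.0000 | 1.0000 | 1.0000
VGG19 + BFO | SVC | 0.9902 | 0.9902 | 0.9902 | 0.9902
VGG19 + BFO | RF | 0.9902 | 0.9902 | 0.9902 | 0.9902
VGG19 + BFO | DT | 0.9804 | 0.9804 | 0.9804 | 0.9804
VGG19 + BFO | NB | 0.9804 | 0.9804 | 0.9804 | 0.9804
VGG19 + BFO | XGB | 0.9902 | 0.9902 | 0.9902 | 0.9902
VGG19 + BFO | KNN | 0.9804 | 0.9804 | 0.9804 | 0.9804
VGG19 + BFO | LR | 0.9902 | 0.9902 | 0.9902 | 0.9902
Xception + BFO | SVC | 0.9363 | 0.9403 | 0.9354 | 0.9360
Xception + BFO | RF | 0.9804 | 0.9813 | 0.9800 | 0.9804
Xception + BFO | DT | 0.9314 | 0.9333 | 0.9308 | 0.9312
Xception + BFO | NB | 0.7745 | 0.7875 | 0.7765 | 0.7727
Xception + BFO | XGB | 0.9657 | 0.9672 | 0.9652 | 0.9656
Xception + BFO | KNN | 0.9461 | 0.9487 | 0.9454 | 0.9459
Xception + BFO | LR | 0.9510 | 0.9544 | 0.9502 | 0.9508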


Table 3: Performance Metrics and Complexities for Various Techniques (A: accuracy; P: precision; R: recall; PM: peak memory; INC: memory increment).

Techniques | A | P | R | F1 | Time Complexity | Space Complexity
DenseNet121 + BFO + RF | 0.9853 | 0.9860 | 0.9850 | 0.9853 | 2.15 s ± 87.7 ms | PM: 486.81 MiB, INC: 56.38.45 MiB
EfficientNetB0 + BFO + LR | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 39.3 ms ± 3.16 ms | PM: 691.94 MiB, INC: 62.14 MiB
InceptionV3 + BFO + XGB | 0.9804 | 0.9815 | 0.9800 | 0.9804 | 1.54 s ± 35.4 ms | PM: 700.20 MiB, INC: 62.44 MiB
MobileNetV2 + BFO + RF | 0.9853 | 0.9852 | 0.9854 | 0.9853 | 629 ms ± 6.46 ms | PM: 711.17 MiB, INC: 62.88 MiB
ResNet50 + BFO + SVC | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 126 ms ± 1.73 ms | PM: 1466.89 MiB, INC: 876.52 MiB
VGG16 + BFO + LR | 1.0000 | 1.0000 | 1.0000 | 1.0000 | 277 ms ± 19.6 ms | PM: 851.52 MiB, INC: 61.44 MiB
VGG19 + BFO + LR | 0.9902 | 0.9902 | 0.9902 | 0.9902 | 283 ms ± 10.3 ms | PM: 945.81 MiB, INC: 62.97 MiB
Xception + BFO + RF | 0.9804 | 0.9813 | 0.9800 | 0.9804 | 681 ms ± 21.5 ms | PM: 738.36 MiB, INC: 63.45 MiB

The IQAT evaluates an image's quality based on its sharpness and noise levels. In our research, we considered six conventional image filtering techniques: the Average, Gaussian, Median, Bilateral, Min, and Max filters. In Algo. 1, a linear regression model is used to determine image quality: the quality index of image $i$ is $Q_i = \alpha + \beta S_i + \gamma N_i$, where $S_i$ and $N_i$ are its estimated sharpness and noise, and the coefficients $\alpha$, $\beta$, and $\gamma$ of these quality predictors were precomputed by regression analysis using the mean squared error. A higher $Q_i$ indicates lower image quality, so an image was used for further analysis only if $Q_i$ was below the threshold $Q_{th}$, which was set to 10 in our study. After the IQAT approved an image's quality, the image was resized to the input size of the AI model and converted to the sRGB color space to balance its color values. The practical use of the proposed system depends on these pre-processing procedures, particularly the quality check (Hossain et al., 2018). After pre-processing, a pre-trained CNN (ResNet50 in this pipeline, chosen for its applicability and performance) extracts features from the image. The BFO approach then selects the most relevant features for classification. Using the SVC model as the classifier, the final prediction assigns the image to one of two categories: Normal Foot or Abnormal Foot. The following section describes in detail how the feature extractor and classifier models were chosen.
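A minimal Python sketch of the quality gate and colour conversion described above follows. It rests on stated assumptions: the paper does not say how sharpness and noise are estimated, so the variance of a discrete Laplacian (sharpness) and the standard deviation of the residual after a 3 × 3 median filter (noise) are stand-ins; `ALPHA`, `BETA`, and `GAMMA` are hypothetical placeholders rather than the fitted coefficients; and the helper names (`quality_index`, `iqat`, and so on) are illustrative. Only the threshold of 10 comes from the text. The sRGB step uses the standard IEC 61966-2-1 transfer function.

```python
import numpy as np

# Hypothetical placeholder coefficients: the study fitted alpha, beta, gamma by
# regression but does not report them. beta < 0 makes sharper images score lower
# (better); gamma > 0 makes noisier images score higher (worse).
ALPHA, BETA, GAMMA = 5.0, -1e-4, 0.5
Q_THRESHOLD = 10.0  # Q_th from the text: an image passes if Q_i < 10


def laplacian(gray):
    """4-neighbour discrete Laplacian evaluated on interior pixels."""
    return (gray[:-2, 1:-1] + gray[2:, 1:-1] + gray[1:-1, :-2] + gray[1:-1, 2:]
            - 4.0 * gray[1:-1, 1:-1])


def estimate_sharpness(gray):
    """Assumed sharpness proxy: variance of the Laplacian."""
    return float(laplacian(gray).var())


def estimate_noise(gray):
    """Assumed noise proxy: std of the residual after a 3x3 median filter."""
    padded = np.pad(gray, 1, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, (3, 3))
    return float((gray - np.median(windows, axis=(-2, -1))).std())


def quality_index(gray):
    """Q_i = alpha + beta * S_i + gamma * N_i (a higher value means lower quality)."""
    return ALPHA + BETA * estimate_sharpness(gray) + GAMMA * estimate_noise(gray)


def linear_to_srgb(c):
    """Standard sRGB encoding (IEC 61966-2-1) of linear values clipped to [0, 1]."""
    c = np.clip(np.asarray(c, dtype=float), 0.0, 1.0)
    return np.where(c <= 0.0031308, 12.92 * c, 1.055 * np.power(c, 1 / 2.4) - 0.055)


def iqat(linear_rgb, threshold=Q_THRESHOLD):
    """Return the sRGB-encoded image if it passes the quality gate, else None."""
    linear_rgb = np.asarray(linear_rgb, dtype=float)
    gray = 255.0 * linear_rgb.mean(axis=-1)  # assumption: scores use a 0-255 gray image
    if quality_index(gray) < threshold:
        return linear_to_srgb(linear_rgb)
    return None
```

Because the coefficients are placeholders, the absolute $Q_i$ values this sketch produces are not comparable with the study's; only the structure (linear score, fixed threshold, then sRGB encoding) mirrors the text.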

2.3 Model Selection and Pipeline Building

In this study, we extracted image features using eight pre-trained CNN models: DenseNet121, EfficientNetB0, InceptionV3, MobileNetV2, ResNet50, VGG16, VGG19, and Xception. Each image from the dataset is passed through a pre-trained CNN to extract its features. We then employed Bacterial Foraging Optimization (BFO) to select the optimal features and classified the images with seven standard machine learning methods. Next, the proposed system determines the relative importance of each class in the overall assessment.

We investigated seven machine learning models for classification: Support Vector Classifier (SVC), Decision Tree (DT), Random Forest (RF), Naive Bayes (NB), XGBoost (XGB), k-Nearest Neighbors (KNN), and Logistic Regression (LR). In the proposed cascaded network, the CNN model serves as the feature extractor and a conventional machine learning model as the classifier. Combining the eight feature extractor models with the seven classifier models thus yields 56 distinct networks.
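BFO is named above without its fitness function or hyper-parameters, so the following is only a minimal sketch of a generic binary BFO for feature selection, not the authors' implementation. Assumptions: each bacterium is a real-valued vector whose positive coordinates mark the selected features; the cost is the validation error of a nearest-centroid classifier (a simple stand-in for the seven classifiers) plus a small penalty on the fraction of features kept; all hyper-parameter values are illustrative; and the cell-to-cell swarming term of Passino's original algorithm is omitted for brevity. The three nested loops are BFO's chemotaxis (tumble and swim), reproduction, and elimination-dispersal steps; `bfo_select` and `nearest_centroid_error` are hypothetical names.

```python
import numpy as np


def nearest_centroid_error(X_tr, y_tr, X_va, y_va, mask):
    """Validation error rate of a nearest-centroid classifier on the masked columns."""
    if not mask.any():
        return 1.0  # an empty feature subset is treated as the worst possible one
    cols = np.flatnonzero(mask)
    classes = np.unique(y_tr)
    centroids = np.stack([X_tr[y_tr == c][:, cols].mean(axis=0) for c in classes])
    dists = ((X_va[:, cols][:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    predicted = classes[dists.argmin(axis=1)]
    return float((predicted != y_va).mean())


def bfo_select(X_tr, y_tr, X_va, y_va, n_bacteria=10, n_chem=10, n_swim=3,
               n_repro=3, n_elim=2, p_elim=0.25, step=0.5, penalty=0.01, seed=0):
    """Binary BFO feature selection; returns (boolean mask, best cost found)."""
    rng = np.random.default_rng(seed)
    d = X_tr.shape[1]
    best = {"pos": None, "cost": np.inf}

    def cost(p):
        mask = p > 0  # a positive coordinate means "feature selected"
        c = nearest_centroid_error(X_tr, y_tr, X_va, y_va, mask) + penalty * mask.mean()
        if c < best["cost"]:  # remember the best position ever evaluated
            best["pos"], best["cost"] = p.copy(), c
        return c

    pos = rng.uniform(-1.0, 1.0, size=(n_bacteria, d))
    for _ in range(n_elim):                      # elimination-dispersal events
        for _ in range(n_repro):                 # reproduction steps
            health = np.zeros(n_bacteria)        # accumulated cost per bacterium
            for _ in range(n_chem):              # chemotactic steps
                for i in range(n_bacteria):
                    j_last = cost(pos[i])
                    direction = rng.uniform(-1.0, 1.0, size=d)
                    direction /= np.linalg.norm(direction)  # tumble: random unit vector
                    pos[i] = pos[i] + step * direction
                    j = cost(pos[i])
                    for _ in range(n_swim):      # keep swimming while the cost falls
                        if j >= j_last:
                            break
                        j_last = j
                        pos[i] = pos[i] + step * direction
                        j = cost(pos[i])
                    health[i] += j
            # Reproduction: the healthier half (lowest accumulated cost) splits in two.
            survivors = np.argsort(health)[: (n_bacteria + 1) // 2]
            pos = np.concatenate([pos[survivors], pos[survivors]])[:n_bacteria]
        # Elimination-dispersal: each bacterium is relocated with probability p_elim.
        for i in range(n_bacteria):
            if rng.random() < p_elim:
                pos[i] = rng.uniform(-1.0, 1.0, size=d)
    return best["pos"] > 0, best["cost"]
```

In the cascaded network, `X_tr` and `X_va` would be CNN feature vectors, and the returned mask would be applied to both the training and test features before a classifier is fitted.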

3 EXPERIMENTAL RESULTS ANALYSIS

This section presents the findings of the study, which combined the seven ML-based approaches with pre-trained CNN frameworks. All code for the proposed work was executed on Google Colab with 53 GB of random-access memory (RAM) and a dedicated Graphics Processing Unit (GPU), using the "Pro" tier of the subscription service. The pre-trained models extracted the important features from each image, and the ML-based models were then trained and evaluated on these features. To train and assess the proposed model, 80 percent of the dataset was set aside for training and 20 percent for testing.
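The paper gives the 80/20 ratio but not whether the split was stratified or which random seed was used. Assuming a stratified split (so both subsets keep the Normal/Abnormal ratio) and an arbitrary seed, it can be sketched with NumPy as follows; `stratified_split` is a hypothetical helper.

```python
import numpy as np


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_idx, test_idx), holding out test_fraction of every class."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)  # the seed value is an arbitrary assumption
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))  # shuffle within the class
        n_test = int(round(test_fraction * len(idx)))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return np.sort(np.array(train_idx)), np.sort(np.array(test_idx))
```

For example, with two classes of 493 images each (the counts in Tab. 1), it places 99 images of each class in the test set.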


Data: InputImage_RGB
Result: Result
Initialize: Params ← {P_1, P_2, ..., P_n};
while InputImage_RGB ≠ NIL do
    ProcessedImage_sRGB ← IQAT(InputImage_RGB);
    ResizedImage ← Resize ProcessedImage_sRGB to 224 × 224;
    FeatureExtractor ← Load ResNet50 with Params;
    ExtractedFeatures ← Extract features from ResizedImage;
    BFOProcessor ← Load BFO with Params;
    SelectedFeatures ← Select features from ExtractedFeatures;
    ClassifierModel ← Load SVC with ClassParams;
    Prediction ← Predict using SelectedFeatures;
    if Prediction > 0.5 then
        Result ← Abnormal Foot;
    else
        Result ← Normal Foot;
    end
end
Output: Result;

Procedure IQAT(InputImage_RGB):
    Initialize: α, β, γ, Threshold;
    Load parameters α, β, γ;
    Estimate Sharpness and Noise;
    QualityIndex ← α + β × Sharpness + γ × Noise;
    if QualityIndex < Threshold then
        if LinearColor ≤ 0.0031308 then
            ProcessedImage_sRGB ← 12.92 × LinearColor;
        else
            ProcessedImage_sRGB ← 1.055 × LinearColor^(1/2.4) − 0.055;
        end
    end
    Return ProcessedImage_sRGB;

Procedure BFO(ExtractedFeatures):
    Initialize: filterVar ← 2F − 1, where F = 1, 2, ..., filterVar;
    InputImage ← Input image;
    FeatureVector ← Feature vector;
    Start:
    BestFilter ← Apply filter to InputImage;
    FilteredQuality ← Filtered image;
    ArrayConversion ← Convert image to array;
    for each ArrayConversion do
        Extract feature vector ResultFeature{V_0, V_1, ..., V_14};
        FeatureVector ← ResultFeature;
    end
    Return FeatureVector;

Algorithm 1: Proposed algorithm to build the pipeline.
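The main loop of Algo. 1 is a four-stage cascade: quality check, CNN feature extraction, BFO feature selection, and classification with a 0.5 decision threshold. The Python sketch below captures only that control flow. The stages are passed in as plain callables, `screen` and its parameter names are hypothetical, and the stub stages in the usage lines stand in for IQAT, the pre-trained CNN, BFO, and the classifier, none of which are implemented here. Returning "Rejected" for images that fail the quality check is an assumption, since Algo. 1 does not say what happens to them.

```python
from typing import Callable, Optional

import numpy as np


def screen(image: np.ndarray,
           quality_gate: Callable[[np.ndarray], Optional[np.ndarray]],
           extract: Callable[[np.ndarray], np.ndarray],
           select: Callable[[np.ndarray], np.ndarray],
           predict_proba: Callable[[np.ndarray], float],
           threshold: float = 0.5) -> str:
    """Pass one image through the cascade of Algo. 1 and return a label."""
    processed = quality_gate(image)       # IQAT stage: None means the image failed the check
    if processed is None:
        return "Rejected"                 # assumption: Algo. 1 leaves this case unspecified
    features = extract(processed)         # pre-trained CNN (resizing to 224 x 224 assumed inside)
    selected = select(features)           # keep only the BFO-selected features
    p_abnormal = predict_proba(selected)  # classifier score for the Abnormal Foot class
    return "Abnormal Foot" if p_abnormal > threshold else "Normal Foot"


# Usage with stub stages (all hypothetical): a fixed mask and a logistic score.
mask = np.array([True, False, True])
label = screen(np.ones((224, 224, 3)),
               quality_gate=lambda img: img,
               extract=lambda img: np.array([img.mean(), 0.0, 2.0]),
               select=lambda f: f[mask],
               predict_proba=lambda f: float(1 / (1 + np.exp(-f.sum()))))
print(label)  # Abnormal Foot
```

Keeping the stages as callables makes it easy to swap in any of the eight extractors or seven classifiers without changing the control flow.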

Tab. 2 shows the experimental results obtained with the pre-trained CNN models and conventional machine learning (ML) classifiers. In our experiment, we used eight pre-trained CNN models to extract feature vectors and evaluated each combination with performance metrics such as accuracy, precision, recall, and F1-score. We first evaluated the models with the extracted features, then applied the BFO algorithm to find the best features, and finally applied the seven ML classifiers to assess the results. As Tab. 2 shows, the highest accuracy of 100% was obtained with the pre-trained EfficientNetB0, ResNet50, and VGG16 models. The table also shows that some models performed best with the Support Vector Classifier (SVC), while others reached their highest accuracy with the Logistic Regression (LR) classifier.
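Tab. 2 reports precision and recall values that differ slightly from the accuracy, which is consistent with averaging per-class scores over the two classes (macro-averaging), although the paper does not name its averaging scheme. Under that assumption, the four metrics can be computed from the true-positive, false-positive, and false-negative counts of each class, that is, from the cells of a confusion matrix like the one in Fig. 3. `macro_metrics` below is a hypothetical plain-Python helper.

```python
def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 over all classes."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for c in classes:
        # Confusion-matrix counts for class c treated as the positive class.
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        prec = tp / (tp + fp) if tp + fp else 0.0
        rec = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    k = len(classes)
    return accuracy, sum(precisions) / k, sum(recalls) / k, sum(f1s) / k
```

For instance, `macro_metrics([0, 0, 0, 1, 1], [0, 0, 1, 1, 1])` gives an accuracy of 0.8, macro precision and recall of 5/6 each, and a macro F1 of 0.8.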

To assess the efficiency of these models beyond the performance metrics, we also measured the time complexity (execution time) and space complexity, in terms of Peak Memory (PM) and memory Increment (INC), of the highest-performing network for each feature extractor, as shown in Tab. 3. The table shows that EfficientNetB0 with the BFO algorithm and the LR classifier performed best: the EfficientNetB0-BFO-LR network required 39.3 ms ± 3.16 ms, with a peak memory of 691.94 MiB and an increment of 62.14 MiB, so both its execution time and its peak memory are lower than those of the ResNet50, VGG16, and VGG19 networks. Fig. 2 shows the learning curve of this network, which is promising for ulcer identification. Fig. 3 shows the confusion matrix of the EfficientNetB0-BFO-LR network, and Fig. 4 illustrates the ROC curves of the seven classifiers with the EfficientNetB0-BFO network.
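The format of the time and memory figures in Tab. 3 (mean ± standard deviation; peak memory and increment in MiB) resembles the output of IPython's `%timeit` and of the `%memit` magic from the `memory_profiler` package, but the paper does not name its tools, so that is only an inference. `%memit` measures whole-process memory, which the standard library does not expose directly; the sketch below therefore swaps in `timeit` for timing and `tracemalloc` for the peak memory allocated by Python during one call, so its numbers are not directly comparable with Tab. 3. `profile_call` is a hypothetical helper.

```python
import statistics
import timeit
import tracemalloc


def profile_call(fn, *args, repeat=7, number=10):
    """Return (mean seconds, std seconds, peak MiB) for one call of fn(*args).

    Timing follows the %timeit style "mean ± std. dev. of `repeat` runs";
    memory is the peak traced Python allocation, not process memory.
    """
    runs = timeit.repeat(lambda: fn(*args), repeat=repeat, number=number)
    per_call = [t / number for t in runs]  # seconds per single call, per run
    tracemalloc.start()
    try:
        fn(*args)
        _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
    finally:
        tracemalloc.stop()
    return statistics.mean(per_call), statistics.stdev(per_call), peak / 2**20
```

Here `fn` would be, for instance, a function that runs one feature-extraction, selection, and classification pass; `repeat` must be at least 2 for the standard deviation to be defined.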

4 SUMMARY

This section compares existing work with the proposed framework, as seen in Tab. 1 and Tab. 2. Prior studies (Thotad et al., 2023), (Ahsan et al., 2023), (Das et al., 2022), (Alzubaidi et al., 2022), (Filipe et al., 2022), (Haque et al., 2022), (Khandakar et al., 2022b) employed only deep learning approaches or only machine learning models to classify diabetic foot ulcers (DFU). Other studies employed both machine learning (ML) and deep learning (DL) approaches (Khandakar et al., 2022a), (Munadi et al., 2022), (Khandakar et al., 2022b). Among CNN and ML models, previous studies (Yap et al., 2021), (Das et al., 2022), (da C. Oliveira et al., 2021) relied primarily on various pre-trained models or ML methodologies, and only a limited number of studies, specifically (Haque et al., 2022) and (Xie et al., 2022), employed feature optimization methods. The study (Filipe et al., 2022) used plantar thermogram and thermogram image databases and achieved accuracy rates of 95.08%, 100%, and 93.2% in diagnosing DFU-related problems. The research (Das et al., 2022) used a dataset of 292 foot ulcer images and achieved a classification accuracy of 97.4% in identifying DFU. In the study by (Ahsan et al., 2023), DFU was classified by ischemia and infection using the DFU dataset from 2020, with accuracy rates of 99.49% for ischemia and 84.76% for infection. Study [36] achieved an accuracy of 01.01% in early DFU identification using only a public dataset. In contrast, the study (Alzubaidi et al., 2022) used both public and private DFU datasets, achieving accuracies of 94.8% and 97.3%, respectively. The authors of (Haque et al., 2022) used electromyography and ground reaction force data to achieve accuracy rates of 96.18% and 98.68%. The proposed method, on the other hand, employed eight pre-trained Convolutional Neural Network (CNN) models and seven traditional Machine Learning (ML) techniques, together with a Bacterial Foraging Optimization (BFO) model, to classify photos of Diabetic Foot Ulcers (DFU). The proposed strategy relies heavily on filtering and enhancement techniques for the photographs. The BFO technique was applied for feature optimization to extract the most important features, which were then passed to the ML classifiers for classification. The proposed framework, using the EfficientNetB0+BFO+LR network, achieved an accuracy of 100%. This result could advance the current state of the art in DFU classification.

5 CONCLUSIONS

One of today's key challenges is preventing and managing serious, potentially fatal conditions such as diabetic foot ulcers (DFU). This study explores the use of machine learning (ML), deep learning (DL), and feature optimization to classify DFU images. We first extracted features with pre-trained CNN models and then used the BFO method to select among these features. Several combinations of standard ML algorithms and pre-trained CNN models achieved 100% accuracy, including the combination of EfficientNetB0, logistic regression (LR), and BFO.

However, the model has some limitations. It has not been tested in real time for DFU classification, and the BFO method was used only for basic analysis. Future research will focus on applying this method to deeper CNN layers and making it suitable for smart devices and IoT. Further studies will also include data from more databases to improve the real-world applicability of the DFU classification method.

REFERENCES

IDF Diabetes Atlas. Accessed: 2023-05-25.

Abdissa, D., Adugna, T., Gerema, U., and Dereje, D. (2020). Prevalence of diabetic foot ulcer and associated factors among adult diabetic patients on follow-up clinic at Jimma Medical Center, Southwest Ethiopia, 2019: an institutional-based cross-sectional study. Journal of Diabetes Research.

Ahmad, A., Abujbara, M., Jaddou, H., Younes, N. A., and Ajlouni, K. (2018). Anxiety and depression among adult patients with diabetic foot: prevalence and associated factors. Journal of Clinical Medicine Research, 10(5):411.

Ahsan, M., Naz, S., Ahmad, R., Ehsan, H., and Sikandar, A. (2023). A deep learning approach for diabetic foot ulcer classification and recognition. Information, 14(1):36.

Almobarak, A. O., Awadalla, H., Osman, M., and Ahmed, M. H. (2017). Prevalence of diabetic foot ulceration and associated risk factors: an old and still major public health problem in Khartoum, Sudan? Annals of Translational Medicine, 5(17).

Alzubaidi, L., Fadhel, M. A., Al-Shamma, O., Zhang, J., Santamaría, J., and Duan, Y. (2022). Robust application of new deep learning tools: An experimental study in medical imaging. Multimedia Tools and Applications, pages 1–29.

Alzubaidi, L., Fadhel, M. A., Oleiwi, S. R., Al-Shamma, O., and Zhang, J. (2020). DFU-QUTNet: diabetic foot ulcer classification using novel deep convolutional neural network. Multimedia Tools and Applications, 79(21-22):15655–15677.

Ananian, C. E., Dhillon, Y. S., Gils, C. C. V., Lindsey, D. C., Otto, R. J., Dove, C. R., Pierce, J. T., and Saunders, M. C. (2018). A multicenter, randomized, single-blind trial comparing the efficacy of viable cryopreserved placental membrane to human fibroblast-derived dermal substitute for the treatment of chronic diabetic foot ulcers. Wound Repair and Regeneration, 26(3):274–283.

Armstrong, D. G., Boulton, A. J. M., and Bus, S. A. (2017). Diabetic foot ulcers and their recurrence. New England Journal of Medicine, 376(24):2367–2375.

Association, A. D. (2009). Diagnosis and classification of diabetes mellitus. Diabetes Care, 32(Suppl 1):S62.

Cavanagh, P. R., Lipsky, B. A., Bradbury, A. W., and Botek, G. (2005). Treatment for diabetic foot ulcers. The Lancet, 366(9498):1725–1735.

Cho, N. H., Kirigia, J., Mbanya, J. C., Ogurstova, K., Guariguata, L., and Rathmann, W. (2015). IDF Diabetes Atlas-8th. International Diabetes Federation.

da C. Oliveira, Leandro, A., d. Carvalho, A. B., and Dantas, D. O. (2021). Faster R-CNN approach for diabetic foot ulcer detection. In VISIGRAPP (4: VISAPP), pages 677–684.

Das, S. K., Roy, P., and Mishra, A. K. (2022). DFU-SPNet: A stacked parallel convolution layers based CNN to improve diabetic foot ulcer classification. ICT Express, 8(2):271–275.

Filipe, V., Teixeira, P., and Teixeira, A. (2022). Automatic classification of foot thermograms using machine learning techniques. Algorithms, 15(7):236.

Ghanassia, E., Villon, L., d. Dieudonné, J.-F. T., Boegner, C., Avignon, A., and Sultan, A. (2008). Long-term outcome and disability of diabetic patients hospitalized for diabetic foot ulcers: a 6.5-year follow-up study. Diabetes Care, 31(7):1288–1292.

Haque, F., Reaz, M. B. I., Chowdhury, M. E. H., Ezeddin, M., Kiranyaz, S., Alhatou, M., Ali, S. H. M., Bakar, A. A. A., and Srivastava, G. (2022). Machine learning-based diabetic neuropathy and previous foot ulceration patients detection using electromyography and ground reaction forces during gait. Sensors, 22(9):3507.

Hossain, M. S., Nakamura, T., Kimura, F., Yagi, Y., and Yamaguchi, M. (2018). Practical image quality evaluation for whole slide imaging scanner. In Biomedical Imaging and Sensing Conference, volume 10711, pages 203–206. SPIE.

Jeffcoate, W. J. and Harding, K. G. (2003). Diabetic foot ulcers. The Lancet, 361(9368):1545–1551.

Kendrick, C., Cassidy, B., Pappachan, J. M., O’Shea, C., Fernandez, C. J., Chacko, E., Jacob, K., Reeves, N. D., and Yap, M. H. (2022). Translating clinical delineation of diabetic foot ulcers into machine interpretable segmentation. arXiv preprint arXiv:2204.11618.

Khandakar, A., Chowdhury, M. E. H., Reaz, M. B. I., Ali, S. H. M., Abbas, T. O., Alam, T., Ayari, M. A., Mahbub, Z. B., Habib, R., and Rahman, T. (2022a). Thermal change index-based diabetic foot thermogram image classification using machine learning techniques. Sensors, 22(5):1793.

Khandakar, A., Chowdhury, M. E. H., Reaz, M. B. I., Ali, S. H. M., Hasan, M. A., Kiranyaz, S., Rahman, T., Alfkey, R., Bakar, A. A. A., and Malik, R. A. (2021). A machine learning model for early detection of diabetic foot using thermogram images. Computers in Biology and Medicine, 137:104838.

Khandakar, A., Chowdhury, M. E. H., Reaz, M. B. I., Ali, S. H. M., Kiranyaz, S., Rahman, T., Chowdhury, M. H., Ayari, M. A., Alfkey, R., and Bakar, A. A. A. (2022b). A novel machine learning approach for severity classification of diabetic foot complications using thermogram images. Sensors, 22(11):4249.

Laith (2021). Diabetic foot ulcer (DFU).

Munadi, K., Saddami, K., Oktiana, M., Roslidar, R., Muchtar, K., Melinda, M., Muharar, R., Syukri, M., Abidin, T. F., and Arnia, F. (2022). A deep learning method for early detection of diabetic foot using decision fusion and thermal images. Applied Sciences, 12(15):7524.

Ponirakis, G., Elhadd, T., Chinnaiyan, S., Dabbous, Z., Siddiqui, M., Al-muhannadi, H., Petropoulos, I. N., Khan, A., Ashawesh, K. A. E., and Dukhan, K. M. O. (2020). Prevalence and management of diabetic neuropathy in secondary care in Qatar. Diabetes/Metabolism Research and Reviews, 36(4):e3286.

Saeedi, P., Petersohn, I., Salpea, P., Malanda, B., Karuranga, S., Unwin, N., Colagiuri, S., Guariguata, L., Motala, A. A., and Ogurtsova, K. (2019). Global and regional diabetes prevalence estimates for 2019 and projections for 2030 and 2045: Results from the International Diabetes Federation Diabetes Atlas. Diabetes Research and Clinical Practice, 157:107843.

Scebba, G., Zhang, J., Catanzaro, S., Mihai, C., Distler, O., Berli, M., and Karlen, W. (2022). Detect-and-segment: a deep learning approach to automate wound image segmentation. Informatics in Medicine Unlocked, 29:100884.

Singh, G. and Chawla, S. (2006). Amputation in diabetic patients. Medical Journal Armed Forces India, 62(1):36–39.

Thotad, P. N., Bharamagoudar, G. R., and Anami, B. S. (2023). Diabetic foot ulcer detection using deep learning approaches. Sensors International, 4:100210.

Xie, P., Li, Y., Deng, B., Du, C., Rui, S., Deng, W., Wang, M., Boey, J., Armstrong, D. G., and Ma, Y. (2022). An explainable machine learning model for predicting in-hospital amputation rate of patients with diabetic foot ulcer. International Wound Journal, 19(4):910–918.

Yap, M. H., Hachiuma, R., Alavi, A., Brüngel, R., Cassidy, B., Goyal, M., Zhu, H., Rückert, J., Olshansky, M., and Huang, X. (2021). Deep learning in diabetic foot ulcers detection: a comprehensive evaluation. Computers in Biology and Medicine, 135:104596.