Systematic Investigation on Deep Learning Network in Skin Cancer Diagnosis

Sihan Bian

a

Department of Information Security, Shanghai Jiao Tong University, Shanghai, China

Keywords: Machine Learning, Deep Learning, Skin Cancer.

Abstract: Skin cancer is a growing global healthcare concern. Researchers are investigating the application of deep learning networks to skin cancer diagnosis, which has great potential to save labour and time. This paper summarizes the framework of machine learning algorithms for skin cancer detection and reviews several recent studies on deep learning for skin cancer diagnosis. The approaches in these studies fall into three primary categories: classification, segmentation, and the generation of supplementary data. For skin lesion classification, techniques such as Grad-CAM are integrated with Explainable Artificial Intelligence (XAI), providing insight by highlighting the image regions that drive a decision. The paper also discusses the limitations and challenges of using deep learning to diagnose skin cancer, including common problems such as a lack of data diversity and concerns over privacy protection. It highlights the influence of parameters on model performance and the fact that current interpretable models are limited to explanations of individual samples. Furthermore, it points out that deep learning models have not been sufficiently tested in clinical settings. In conclusion, the paper summarizes the methods evaluated and emphasizes that deep learning frameworks require further exploration and improvement before they can be reliably used in clinical settings without direct oversight from medical professionals.

1 INTRODUCTION

Skin cancer is a significant health issue because of its growing incidence. According to cancer statistics for the United States, about 6 cases per 100,000 inhabitants per year were diagnosed at the beginning of the 1970s, compared with 18 cases per 100,000 inhabitants per year at the beginning of the 2000s (Garbe & Leiter, 2009). According to the World Health Organization's classification of tumors, there are up to 60 types of malignant skin tumors, among which malignant melanoma (MM) is the most lethal and basal cell carcinoma (BCC) is the most common (Garbe & Leiter, 2009). The main causes of skin cancer are ultraviolet radiation and genetic factors. As human life expectancy rises, the average age at which melanoma occurs is also increasing. The economic burden of skin cancer treatment on healthcare systems is growing as well.

Prompt diagnosis of skin cancer is crucial for potential patients because the mortality of malignant skin cancer rises sharply as tumors grow and spread and as treatment becomes more difficult. Traditional diagnosis of skin cancer in clinical settings includes dermoscopy, blood tests, biopsy, and histopathological examination. However, manual examination by dermatologists is time-consuming and can lead to misdiagnosis. For this reason, researchers have turned to deep learning as an auxiliary diagnostic tool because of its excellent predictive performance.

a https://orcid.org/0009-0001-5726-3309

Convolutional Neural Network (CNN) is a deep learning technique widely used for visual feature recognition and image classification, and it has demonstrated excellent performance in many tasks, including medical image analysis, autonomous driving, and face recognition (Coşkun et al., 2017; Li et al., 2019; Qiu et al., 2022). In recent years, many studies have applied convolutional neural networks to skin cancer diagnosis, and most of these models perform as well as specialists in classifying signs of skin cancer (Haggenmüller et al., 2021).

Researchers are improving these classification models to prepare them for clinical use. For example, FixCaps V2 is an improved method built on FixCaps, a capsule network, and it shows good generalization and stability in skin cancer diagnosis (Lan et al., 2022). PRU-Net, on the other hand, is a new skin cancer segmentation algorithm that strengthens the propagation and reuse of image information (Li et al., 2023). Moreover, the explainable artificial intelligence (XAI) model of Mridha et al. can interpret its diagnoses and includes an interface through which experts can participate, enabling further refinement of the model (Mridha et al., 2023). The aim of this study is to present an overall review of recent studies on the application of CNNs in skin cancer diagnosis.

Bian, S.

Systematic Investigation on Deep Learning Network in Skin Cancer Diagnosis.

DOI: 10.5220/0012953200004508

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Engineering Management, Information Technology and Intelligence (EMITI 2024), pages 469-473

ISBN: 978-989-758-713-9

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

The paper is structured as follows. Section 1 introduces the use of deep learning for skin cancer classification. Section 2 reviews the methods of several recent studies. Section 3 discusses the limitations, challenges, and future prospects of these methods. Finally, Section 4 presents the conclusions of this review of convolutional neural networks in skin cancer diagnosis.

2 METHOD

2.1 The Framework of Machine Learning-Based Algorithms in Skin Cancer Detection

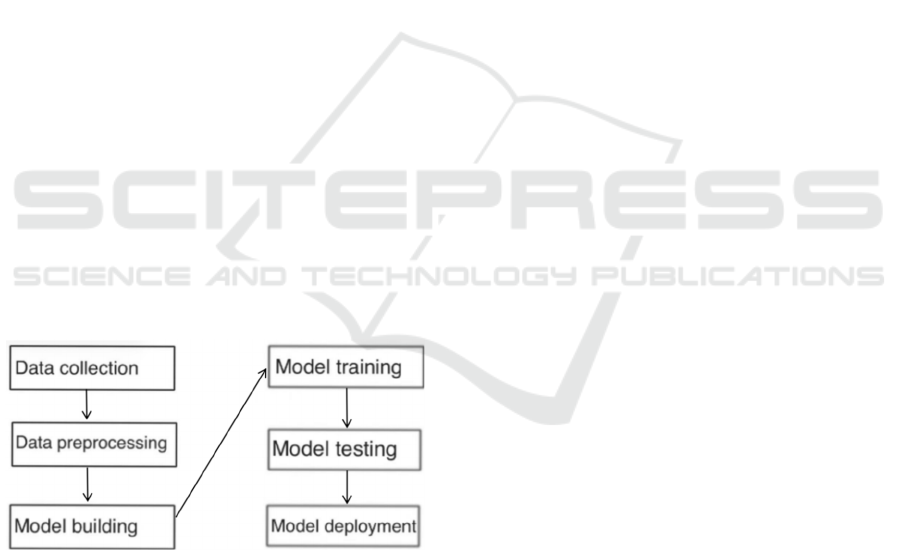

Figure 1 presents the framework of machine learning-based algorithms in skin cancer detection.

Figure 1: Framework of machine learning-based algorithms in skin cancer detection (Picture credit: Original).

Data Collection: Sufficient data is crucial for deep learning models to make accurate predictions and diagnoses in medical image analysis applications. Skin cancer image datasets have been built from the following sources:

Kaggle, a well-known data science community website, hosts a dataset called HAM10000, which provides 10,015 dermoscopic images sampled from 7,728 patients. All images are resized to 600 × 450 pixels.

The existing datasets from the International Skin Imaging Collaboration (ISIC) contain 2,594 images and 12,970 high-quality labeled images. These data were collected in clinical settings and labeled and annotated by specialists.

Dermnet is a community website containing over 20,000 dermatology images, all of which have been reviewed by consultant dermatologists.

Data Preprocessing: Preprocessing is introduced into the deep learning pipeline. It includes normalization and augmentation, which improve the quality of the data and enhance the generalization ability of the model.
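As a minimal sketch of these two steps, the code below assumes an image stored as nested Python lists of RGB values in the range 0 to 255 (real pipelines would use an image library and tensors). Normalization rescales values to [0, 1] and standardizes each channel with a given mean and standard deviation; augmentation is illustrated only with random horizontal and vertical flips, one common choice among many. The function names and the placeholder mean/std values are hypothetical, not taken from the reviewed studies.

```python
import random
from typing import List

Pixel = List[float]        # one RGB triple
Image = List[List[Pixel]]  # rows x columns x 3 channels


def normalize(img: Image, mean: List[float], std: List[float]) -> Image:
    """Scale 0-255 values to [0, 1], then standardize each channel."""
    return [[[(p[c] / 255.0 - mean[c]) / std[c] for c in range(3)] for p in row]
            for row in img]


def random_flip(img: Image, rng: random.Random) -> Image:
    """Augmentation: flip horizontally and/or vertically, each with probability 0.5."""
    out = [list(row) for row in img]
    if rng.random() < 0.5:
        out = [row[::-1] for row in out]  # horizontal flip: mirror the columns
    if rng.random() < 0.5:
        out = out[::-1]                   # vertical flip: reverse the row order
    return out


# Usage on a 2x2 toy image; mean/std of 0.5 are placeholders, not dataset statistics.
toy = [[[255, 0, 0], [0, 255, 0]],
       [[0, 0, 255], [255, 255, 255]]]
norm = normalize(toy, mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])
augmented = random_flip(norm, random.Random(42))
```

Flips change only where lesion pixels appear, not their values, which is why they are a cheap way to enlarge a dermoscopy dataset.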

Model Building: An appropriate learning model, such as a Convolutional Neural Network (CNN), a Support Vector Machine (SVM), or a Decision Tree, is selected according to the image size and the available computational resources. This step also includes adjusting and improving existing models to avoid defects such as insufficient feature utilization caused by ignoring inter-layer feature interaction, or overfitting in convolutional neural networks caused by imbalanced class distributions in the dataset.
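The class-imbalance problem mentioned above is often mitigated by weighting the training loss by inverse class frequency. The sketch below uses the heuristic n_samples / (n_classes × count_c), the same formula scikit-learn applies for `class_weight="balanced"`; the reviewed studies do not state that they used this scheme, and the function name and labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def balanced_class_weights(labels: List[str]) -> Dict[str, float]:
    """Weight each class by n_samples / (n_classes * count_of_class).

    Rare classes get weights > 1 and frequent classes weights < 1, so each
    class contributes roughly equally to a weighted loss.
    """
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


# Hypothetical imbalanced label list: 8 nevi, 2 melanomas.
weights = balanced_class_weights(["nevus"] * 8 + ["melanoma"] * 2)
# nevus: 10 / (2 * 8) = 0.625, melanoma: 10 / (2 * 2) = 2.5
```

With these weights the 8 nevi and the 2 melanomas each contribute a total weight of 5 to the loss.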

Model Training: Optimization algorithms such as Stochastic Gradient Descent (SGD) are used to train the model, and researchers tune its hyperparameters to improve performance.
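To make the training step concrete, the following is a minimal sketch of mini-batch stochastic gradient descent for a logistic-regression classifier on 2-D toy features. It is a simplified stand-in for CNN training rather than the setup of any reviewed study; the learning rate `lr`, `batch_size`, and `epochs` are examples of the hyperparameters researchers tune.

```python
import math
import random
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (feature vector, label in {0, 1})


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_sgd(data: List[Sample], lr: float = 0.1, batch_size: int = 4,
              epochs: int = 50, seed: int = 0) -> Tuple[List[float], float]:
    """Fit logistic-regression weights w and bias b with mini-batch SGD."""
    rng = random.Random(seed)
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    data = list(data)                              # copy so shuffling is local
    for _ in range(epochs):
        rng.shuffle(data)                          # new sample order every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grad_w, grad_b = [0.0] * dim, 0.0
            for x, y in batch:
                err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
                grad_w = [g + err * xi for g, xi in zip(grad_w, x)]  # d(log-loss)/dw
                grad_b += err                                        # d(log-loss)/db
            # Step against the batch-averaged gradient.
            w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
            b -= lr * grad_b / len(batch)
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)


# Toy, linearly separable 2-D features (label 1 when both values are large).
toy = [([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.0, 0.3], 0), ([0.3, 0.0], 0),
       ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([1.0, 0.7], 1), ([0.7, 1.0], 1)]
w, b = train_sgd(toy, lr=0.5, epochs=200)
```

Because the gradient of the log-loss with respect to the logit is simply the prediction error, each update moves the weights against the batch-averaged error multiplied by the input.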

Model Testing: The predictions of the trained model are compared with the ground-truth diagnoses provided by specialists. Model performance is evaluated with the following metrics: accuracy, precision, recall, and F1-score.
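The four metrics can be computed from the counts of true and false positives and negatives. The sketch below assumes a binary task (1 = malignant, 0 = benign) and uses hypothetical labels; multi-class studies usually compute these metrics per class and then average them.

```python
from typing import Dict, List


def binary_metrics(y_true: List[int], y_pred: List[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 for a binary task (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Specialist labels vs. model predictions for 8 hypothetical lesions.
specialist = [1, 1, 1, 0, 0, 0, 0, 1]
model = [1, 1, 0, 0, 0, 1, 0, 1]
scores = binary_metrics(specialist, model)
```

Here the model finds 3 of 4 malignant lesions (recall 0.75) and 3 of its 4 positive calls are correct (precision 0.75).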

Model Deployment: For real-time clinical use, the trained model should be converted into a deployable format, for example by saving the model parameters to files.
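As an illustration of saving parameters to files, the sketch below writes a dictionary of hypothetical parameters to JSON and reads it back. JSON is chosen only for simplicity; deep learning frameworks provide their own serialization (for example, PyTorch's `torch.save` applied to a model's `state_dict`, or export to the ONNX format), which a real deployment would more likely use.

```python
import json
import os
import tempfile
from typing import Dict, List

Params = Dict[str, List[float]]


def save_params(params: Params, path: str) -> None:
    """Write model parameters to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(params, f)


def load_params(path: str) -> Params:
    """Read parameters back, e.g. inside an inference application."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


# Hypothetical parameters of a trained model (e.g. logistic-regression weights).
trained = {"w": [1.25, -0.5], "b": [0.125]}
path = os.path.join(tempfile.mkdtemp(), "model_params.json")
save_params(trained, path)
restored = load_params(path)
```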

2.2 Classification

2.2.1 Convolutional Neural Network

Convolutional neural network (CNN) is a deep learning technique widely used for visual feature recognition and image classification. A CNN has a flexible structure composed of convolutional, pooling, normalization, and fully connected layers. CNN models extract features through hierarchical abstraction: lower layers extract basic texture and color information (points, lines, blocks) that is useful for many object recognition tasks, while higher layers combine these features into more abstract representations. Pooling layers reduce the spatial size of the feature maps. A CNN extracts features from images of skin lesions, and the resulting features are classified into groups corresponding to different diseases (Dorj et al., 2018; Wang, 2018).
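The following minimal sketch shows the two core operations described above on a toy grayscale patch: a convolution with a hand-set vertical-edge kernel (a CNN would learn its kernels during training) detects a low-level feature, and 2×2 max pooling halves the size of the resulting feature map. Like most deep learning libraries, it implements convolution as cross-correlation; all names are illustrative.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(img: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2-D convolution (cross-correlation, as in CNN layers), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(img[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(m: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in m]


def max_pool(m: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: keeps the strongest response in each window."""
    return [[max(m[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(m[0]) - size + 1, size)]
            for i in range(0, len(m) - size + 1, size)]


# A 5x5 toy grayscale patch with a vertical edge between columns 1 and 2.
patch = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1],
                 [-1, 1]]                       # hand-set; a CNN learns its kernels
features = relu(conv2d(patch, vertical_edge))   # 4x4 feature map
pooled = max_pool(features)                     # 2x2 after pooling
```

The feature map responds only in the column where the intensity changes, and pooling keeps that response while discarding its exact position within each 2×2 window.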

2.2.2 XAI-Based Skin Lesion Classification System

Mridha et al. proposed an Explainable Artificial Intelligence (XAI)-based skin lesion classification system incorporating Grad-CAM and Grad-CAM++. The model can serve as an auxiliary tool for early-stage skin cancer diagnosis and provides explanations for its decisions.

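Grad-CAM is implemented as follows. First, compute the gradients of the target class score with respect to the feature maps of a convolutional layer (typically the last one). Second, compute the weight (alpha) of each feature map by averaging these gradients over the spatial dimensions. Third, compute the final Grad-CAM heatmap as the weighted sum of the feature maps, passed through a ReLU so that only regions with a positive influence on the class are kept.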
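A minimal sketch of steps two and three is given below, assuming the feature maps A^k of the chosen convolutional layer and the gradients of the class score y^c with respect to them have already been obtained from a framework's automatic differentiation (step one). It computes each weight α_k^c as the spatial average of the gradients and the heatmap as ReLU(Σ_k α_k^c A^k), normalized to [0, 1] for display; the toy values are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(feature_maps: List[Matrix], grads: List[Matrix]) -> Matrix:
    """Grad-CAM heatmap from K feature maps A^k and gradients dy_c/dA^k.

    Step 1 (computing the gradients) is assumed done by the framework.
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # Step 2: alpha_k = global average of the gradient over spatial positions.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in grads]
    # Step 3: weighted sum of feature maps; ReLU keeps positive evidence only.
    cam = [[max(0.0, sum(a * fm[i][j] for a, fm in zip(alphas, feature_maps)))
            for j in range(w)] for i in range(h)]
    peak = max(max(row) for row in cam)
    # Normalize to [0, 1] for display (leave an all-zero map unchanged).
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


# Two 2x2 toy feature maps and their gradients w.r.t. a "melanoma" score.
A = [[[1.0, 0.0], [0.0, 0.0]],          # map 0 fires top-left
     [[0.0, 0.0], [0.0, 1.0]]]          # map 1 fires bottom-right
dY_dA = [[[0.5, 0.5], [0.5, 0.5]],      # alpha_0 = 0.5: map 0 supports the class
         [[-0.2, -0.2], [-0.2, -0.2]]]  # alpha_1 = -0.2: map 1 argues against it
heatmap = grad_cam(A, dY_dA)
```

The bottom-right region is suppressed by the ReLU because its feature map lowers the class score, so only the top-left region is highlighted.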

Grad-CAM explains its classification of skin lesions by highlighting the parts of the input image that contribute most to the outcome. Grad-CAM++ improves on this by weighting the positive gradients at each spatial location instead of averaging all gradients uniformly, and, combined with guided backpropagation, it produces more detailed heatmaps.

2.2.3 FixCaps V2

FixCaps V2, proposed by Lan et al., is a skin cancer diagnosis algorithm based on capsule networks (CapsNets). It inherits the CapsNets property that "outputs are the clustering of inputs" and retains the capsule architecture. FixCaps V2 handles high-resolution images through feature-aware networks instead of stacking large numbers of convolutional or capsule layers (Lan et al., 2022; Cai, 2023).
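The clustering behaviour of capsule outputs comes from routing-by-agreement. The sketch below implements the squash non-linearity and dynamic routing as in the original CapsNet design; it is an assumption that FixCaps V2 routes in the same way, since its exact routing details are not given here. `u_hat[i][j]` holds input capsule i's prediction for output capsule j, and the toy predictions are hypothetical.

```python
import math
from typing import List

Vec = List[float]


def squash(s: Vec) -> Vec:
    """Capsule non-linearity: keeps the direction, maps the length into [0, 1)."""
    norm_sq = sum(x * x for x in s)
    if norm_sq == 0.0:
        return [0.0] * len(s)
    scale = norm_sq / (1.0 + norm_sq) / math.sqrt(norm_sq)
    return [scale * x for x in s]


def softmax(z: Vec) -> Vec:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]


def dynamic_routing(u_hat: List[List[Vec]], iterations: int = 3) -> List[Vec]:
    """Route predictions u_hat[i][j] (input capsule i -> output capsule j).

    Each input capsule splits its vote over the output capsules; votes that
    agree with an output's current vector get larger coupling next round.
    """
    n_in, n_out, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    logits = [[0.0] * n_out for _ in range(n_in)]
    v: List[Vec] = []
    for _ in range(iterations):
        coupling = [softmax(row) for row in logits]   # c_ij sums to 1 over j
        v = [squash([sum(coupling[i][j] * u_hat[i][j][d] for i in range(n_in))
                     for d in range(dim)])
             for j in range(n_out)]
        for i in range(n_in):                         # agreement: dot product
            for j in range(n_out):
                logits[i][j] += sum(a * c for a, c in zip(u_hat[i][j], v[j]))
    return v


# Three inputs agree on output 0 but disagree about output 1.
u_hat = [[[1.0, 0.0], [1.0, 0.0]],
         [[1.0, 0.0], [-1.0, 0.0]],
         [[1.0, 0.0], [0.0, 1.0]]]
outputs = dynamic_routing(u_hat)
```

In the example, all three inputs agree on output capsule 0, so its vector ends up longer (a higher activation) than that of capsule 1, whose votes conflict.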

In addition, FixCaps improves on CapsNets in several ways, such as a larger receptive field. This is achieved by using large-kernel convolutions exceeding 9×9: with a larger kernel, each unit of the network receives information from a larger area of the image. FixCaps V2 also applies the convolutional block attention module (CBAM) to concentrate on the object and to reduce the loss of spatial information caused by convolution and pooling.
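CBAM applies channel attention followed by spatial attention. The sketch below covers only the channel-attention half: average-pooled and max-pooled channel descriptors pass through a shared two-layer MLP, are summed, and a sigmoid yields one weight per channel. The MLP weights and reduction ratio are hypothetical, and how FixCaps V2 places CBAM in its network is not shown.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def shared_mlp(x: List[float], w1: Matrix, w2: Matrix) -> List[float]:
    """Two-layer MLP (C -> C/r -> C) with ReLU, shared by both pooled descriptors."""
    hidden = [max(0.0, sum(wij * xj for wij, xj in zip(row, x))) for row in w1]
    return [sum(wij * hj for wij, hj in zip(row, hidden)) for row in w2]


def channel_attention(fmap: List[Matrix], w1: Matrix, w2: Matrix) -> List[Matrix]:
    """CBAM-style channel attention: reweight each channel of a C x H x W map."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in fmap]  # average pool
    mx = [max(map(max, ch)) for ch in fmap]                            # max pool
    scores = [sigmoid(a + m) for a, m in
              zip(shared_mlp(avg, w1, w2), shared_mlp(mx, w1, w2))]
    return [[[s * v for v in row] for row in ch] for s, ch in zip(scores, fmap)]


fmap = [[[1.0, 1.0], [1.0, 1.0]],   # channel 0: strong, uniform response
        [[0.1, 0.0], [0.0, 0.0]]]   # channel 1: weak response
w1 = [[1.0, 1.0]]                   # C=2 -> C/r=1 (reduction ratio r=2), hypothetical
w2 = [[1.0], [-1.0]]                # C/r=1 -> C=2, hypothetical
refined = channel_attention(fmap, w1, w2)
```

The informative channel is kept almost intact while the weak one is scaled down further; the spatial-attention half of CBAM would then reweight positions within each channel in a similar way.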

2.3 Segmentation

2.3.1 PRU-Net

Li et al. proposed a new skin cancer segmentation model called PRU-Net. It combines densely connected modules and pyramid dilated (atrous) convolution modules with residual modules (Li et al., 2023).

First, PRU-Net adopts U-Net together with the Densely Connected Convolutional Network (DenseNet) as the segmentation model to enhance the propagation and reuse of global information. Second, a channel attention mechanism is added to improve segmentation accuracy along image edges. Third, the residual modules from ResNet and a dilated pyramid pooling module are introduced to further enhance the segmentation performance of the model.
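Dilated (atrous) convolution spaces the kernel taps `rate` samples apart, so a kernel of size k covers k + (k - 1)(rate - 1) inputs without any extra weights. The 1-D sketch below applies one kernel at several rates in parallel, as a pyramid module does; the rates and kernel are hypothetical, and PRU-Net's actual 2-D configuration may differ. In 2-D, a 3×3 kernel with rate 2 covers a 5×5 window.

```python
from typing import List


def dilated_conv1d(x: List[float], kernel: List[float], rate: int) -> List[float]:
    """'Valid' 1-D dilated convolution: kernel taps are `rate` samples apart."""
    span = (len(kernel) - 1) * rate + 1   # effective size k + (k - 1)(rate - 1)
    return [sum(k * x[i + t * rate] for t, k in enumerate(kernel))
            for i in range(len(x) - span + 1)]


def dilated_pyramid(x: List[float], kernel: List[float],
                    rates: List[int]) -> List[List[float]]:
    """Apply the same kernel at several dilation rates (parallel branches).

    Larger rates see wider context with no extra weights; a real module would
    pad the branches to equal length and fuse them (e.g. by concatenation).
    """
    return [dilated_conv1d(x, kernel, r) for r in rates]


signal = [float(v) for v in range(10)]   # 0.0, 1.0, ..., 9.0
branches = dilated_pyramid(signal, [1.0, 1.0, 1.0], rates=[1, 2, 4])
```

The same three weights aggregate neighbouring samples at rate 1 but samples eight positions apart at rate 4, which is how a pyramid of rates captures lesions of different sizes.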

U-Net combines shallow feature information with deep semantic information through skip connections, which yields accurate segmentation of images. PRU-Net shares these advantages of U-Net and also addresses its weaknesses, such as blurred segmentation caused by low contrast between the lesion (feature) regions and the background.
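The sketch below illustrates the skip connection that lets U-Net combine shallow and deep information: a deep, low-resolution feature map is upsampled back to the resolution of a shallow map and concatenated with it along the channel axis. Max pooling stands in for the encoder and nearest-neighbour upsampling for the decoder; actual U-Net variants use learned convolutions (often transposed convolutions for upsampling), so this is a structural illustration only, with hypothetical function names.

```python
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]   # channels x H x W


def downsample(m: Matrix) -> Matrix:
    """2x2 max pooling (encoder path): halves the resolution."""
    return [[max(m[i][j], m[i][j + 1], m[i + 1][j], m[i + 1][j + 1])
             for j in range(0, len(m[0]) - 1, 2)]
            for i in range(0, len(m) - 1, 2)]


def upsample(m: Matrix) -> Matrix:
    """Nearest-neighbour 2x upsampling (decoder path)."""
    return [[v for v in row for _ in range(2)] for row in m for _ in range(2)]


def skip_concat(shallow: FeatureMap, deep: FeatureMap) -> FeatureMap:
    """U-Net skip connection: upsample the deep maps, stack them with the shallow maps."""
    return shallow + [upsample(ch) for ch in deep]


shallow = [[[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]]
deep = [downsample(ch) for ch in shallow]   # stand-in for the deeper path
fused = skip_concat(shallow, deep)          # 2 channels, 4x4 each
```

The fused map keeps the full-resolution detail of the shallow channel next to the coarser context of the deep channel, which the decoder then uses to draw sharp lesion boundaries.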

2.4 Supplementary Data Generation

2.4.1 Self-Attention StyleGAN

Generative adversarial networks (GANs) are used to augment image datasets for deep learning. Zhao et al. (2022) designed a framework that combines a self-attention StyleGAN (SA-StyleGAN) with SE-ResNeXt-50. The "style" in StyleGAN is borrowed from style transfer and enables highly controllable image generation in an unsupervised manner. For further improvement, SA-StyleGAN abandons StyleGAN's mixing regularization (style mixing) and uses a single latent code, which effectively eliminates image distortion and blur and provides high-quality sample images for the classifier. The number of noise modules is also reduced to remove unnecessary noise from the generated data.
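The self-attention mechanism is not described in detail above; the sketch below assumes the common Self-Attention GAN (SAGAN) formulation, in which each position attends to every other position and the result is added back through a learnable scale γ, giving y = γ·o + x. Where SA-StyleGAN inserts this block is not specified here, and the projection matrices are hypothetical; for simplicity the value projection keeps the input width.

```python
import math
from typing import List

Matrix = List[List[float]]


def matvec(w: Matrix, x: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, x)) for row in w]


def softmax(z: List[float]) -> List[float]:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def self_attention(x: List[List[float]], wf: Matrix, wg: Matrix, wh: Matrix,
                   gamma: float) -> List[List[float]]:
    """SAGAN-style self-attention over N spatial positions (each a C-vector).

    Every output position is a weighted mix of all positions, so distant
    image regions can influence each other; gamma scales the attention branch.
    """
    f = [matvec(wf, xi) for xi in x]   # keys
    g = [matvec(wg, xi) for xi in x]   # queries
    h = [matvec(wh, xi) for xi in x]   # values
    out = []
    for j, xj in enumerate(x):
        beta = softmax([sum(a * b for a, b in zip(f[i], g[j])) for i in range(len(x))])
        o_j = [sum(beta[i] * h[i][c] for i in range(len(x))) for c in range(len(h[0]))]
        out.append([gamma * o + v for o, v in zip(o_j, xj)])   # y = gamma * o + x
    return out


x = [[1.0, 0.0], [0.0, 1.0]]           # two positions, two channels
eye = [[1.0, 0.0], [0.0, 1.0]]         # identity projections (hypothetical)
y = self_attention(x, eye, eye, eye, gamma=1.0)
```

SAGAN initializes γ to 0, so the block starts as an identity mapping and the network learns how much non-local context to mix in.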

3 DISCUSSION

Although significant progress has been achieved, there are still limitations and challenges in the use of deep learning for skin cancer diagnosis.

First, the datasets used in the reviewed studies are similar and lack sample variety. Four of the eight studies train their models on HAM10000, so these models may share similar biases toward its dermoscopic images. For example, 67% of HAM10000 consists of dermoscopic images of melanocytic nevi, so models trained on it are likely to be less accurate in diagnosing other skin lesions. To address this problem, researchers could work with medical experts to integrate large amounts of legally available public dermatological data online, or use deep learning algorithms to mine and analyze legally obtained information (Xia et al., 2017). This would aid the creation of a more accurate and comprehensive system for skin lesion image recognition and diagnosis.
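The effect of such imbalance on evaluation can be shown with a minimal sketch: on a hypothetical 100-image set that mirrors the 67% nevus share of HAM10000, a "classifier" that always predicts melanocytic nevus reaches 67% accuracy while never detecting any other lesion, so its mean per-class recall (balanced accuracy) is only 50%. The function name and labels are illustrative.

```python
from collections import Counter
from typing import List, Tuple


def majority_baseline(labels: List[str]) -> Tuple[str, float, float]:
    """Evaluate a 'classifier' that always predicts the most frequent class.

    Returns (predicted class, accuracy, mean per-class recall).
    """
    counts = Counter(labels)
    majority, majority_count = counts.most_common(1)[0]
    accuracy = majority_count / len(labels)
    # Recall is 1 for the majority class and 0 for every other class.
    mean_recall = 1.0 / len(counts)
    return majority, accuracy, mean_recall


# Hypothetical 100-image set mirroring the 67% nevus share.
labels = ["melanocytic nevus"] * 67 + ["other lesion"] * 33
result = majority_baseline(labels)
```

This is why per-class recall or balanced accuracy is more informative than plain accuracy when a model is trained and tested on a nevus-dominated dataset.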

Second, model parameters pose another issue. When the target dataset differs markedly from the dataset on which a model was pre-trained, the initial parameters of the network do not represent the main features of the new dataset well (Wang, 2018). This limits how flexibly a single model can be applied to diagnosing skin cancers that differ considerably. Furthermore, the choice of random seed can strongly affect a model's training results, and different architectures are sensitive to pseudo-random numbers to different degrees. The cause of this phenomenon remains to be studied (Cai, 2023).
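The seed sensitivity noted above can be illustrated with a minimal sketch: the same tiny model, data, and hyperparameters are trained with five seeds, which change only the weight initialization and the sample order. The sketch does not explain the architecture-dependent effect reported by Cai (2023); it only shows why results are commonly reported as a mean and spread over several seeds, and why fixing the seed makes a single run reproducible. The data and function are hypothetical.

```python
import math
import random
import statistics
from typing import List, Tuple


def train_once(data: List[Tuple[float, int]], seed: int,
               epochs: int = 3, lr: float = 0.5) -> float:
    """Train a one-feature logistic regression with plain SGD; return mean log-loss.

    The seed controls both the weight initialization and the sample order.
    """
    rng = random.Random(seed)
    w, b = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)   # seed-dependent initialization
    order = list(data)
    for _ in range(epochs):
        rng.shuffle(order)                              # seed-dependent sample order
        for x, y in order:
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            w -= lr * (p - y) * x
            b -= lr * (p - y)
    total = 0.0
    for x, y in data:
        p = 1.0 / (1.0 + math.exp(-(w * x + b)))
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


# Toy, slightly noisy 1-D data; same data and hyperparameters, five seeds.
toy = [(-2.0, 0), (-1.0, 0), (-0.5, 1), (0.5, 0), (1.0, 1), (2.0, 1)]
losses = [train_once(toy, seed) for seed in range(5)]
summary = (statistics.mean(losses), statistics.stdev(losses))  # report mean and spread
```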

Third, regarding the interpretability of deep learning models, Mridha et al. (2023) point out that their current model only succeeds in giving single-sample explanations. Applying their explanation approach to multiple samples and combining the results still awaits research, yet this is crucial for analyzing complicated lesions in clinical use. In addition, as Mridha et al. note, current evidence is not sufficient to relate the observed relevance of feature dimensions to the actual score. Therefore, multiple measures for evaluating explanations should be explored.

The fourth challenge is privacy. Medical information is confidential, so any research involving personal health data may raise data privacy concerns. Although datasets from the International Skin Imaging Collaboration and HAM10000 have had all personal identity information removed and are fully anonymized, risks to data security and privacy protection remain. Datasets from medical institutions may not be freely accessible, but they are also exposed to privacy theft because of fierce competition in the medical industry. Moreover, deep learning networks are able to memorize their training data, so if a network is subjected to a malicious attack, private user data may be leaked (Tian, 2020).

The fifth main challenge is the practicality of deep learning methods. Because these models are trained and tested under artificial conditions, their performance in real clinical settings is rarely measured. Therefore, diagnoses made by deep learning networks must be supervised by human specialists. In addition, other advanced deep learning approaches could be considered to further improve performance (Li et al., 2024; Sun et al., 2020; Wu et al., 2024).

4 CONCLUSIONS

This paper has reviewed 8 recent studies on deep learning for skin cancer diagnosis. Deep learning can save time and labour in analyzing images of skin lesions, provided that models are trained with appropriate algorithms on balanced datasets of lesion images captured under various conditions.

Most recent studies on this topic concentrate on image classification, using convolutional neural networks or improved capsule networks such as FixCaps V2. Others explore auxiliary methods for diagnosis, such as image segmentation with PRU-Net or supplementary data generation with Self-Attention StyleGAN. In addition, the XAI-based classification system provides explanations for the decisions of the deep learning model.

To meet the needs of the medical industry, further studies may explore integrating these methods to address data insufficiency and provide well-segmented data. Deep learning models may also be ported to mobile devices to help people become aware of their skin lesions early.

REFERENCES

Cai, S. B. 2023. Research on skin cancer assisted diagnosis method based on FixCaps [Doctoral dissertation, Chongqing Jiaotong University].

Coşkun, M., Uçar, A., Yildirim, Ö., & Demir, Y. 2017. Face recognition based on convolutional neural network. In 2017 International Conference on Modern Electrical and Energy Systems (MEES) (pp. 376-379). IEEE.

Dorj, U. O., et al. 2018. The skin cancer classification using deep convolutional neural network. Multimedia Tools and Applications, 77(8), 9909-9924.

Garbe, C., & Leiter, U. 2009. Melanoma epidemiology and trends. Clinics in Dermatology, 27(1), 3-9.

Haggenmüller, S., et al. 2021. Skin cancer classification via convolutional neural networks: systematic review of studies involving human experts. European Journal of Cancer, 156, 202-216.

Lan, Z., et al. 2022. FixCaps: An Improved Capsules Network for Diagnosis of Skin Cancer. IEEE Access, 10, 76261-76267.

Li, M., He, J., Jiang, G., & Wang, H. 2024. DDN-SLAM: Real-time Dense Dynamic Neural Implicit SLAM with Joint Semantic Encoding. arXiv preprint arXiv:2401.01545.


Li, P., Chen, X., & Shen, S. 2019. Stereo R-CNN based 3D object detection for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 7644-7652).

Li, Y. L., Tian, J., Chen, D. X., Deng, Y., Zeng, W. X., & Zeng, Y. Q. 2023. Research on skin cancer segmentation method based on PRU-Net. Computer Knowledge and Technology, 19(24), 9-13.

Mridha, K., et al. 2023. An interpretable skin cancer classification using optimized convolutional neural network for a smart healthcare system. IEEE Access, 11, 1.

Qiu, Y., Wang, J., Jin, Z., Chen, H., Zhang, M., & Guo, L. 2022. Pose-guided matching based on deep learning for assessing quality of action on rehabilitation training. Biomedical Signal Processing and Control, 72, 103323.

Sun, G., Zhan, T., Owusu, B. G., Daniel, A. M., Liu, G., & Jiang, W. 2020. Revised reinforcement learning based on anchor graph hashing for autonomous cell activation in cloud-RANs. Future Generation Computer Systems, 104, 60-73.

Tian, X. T. 2020. Research on privacy protection technology of training data in deep learning [Doctoral dissertation, Harbin Engineering University].

Wang, L. 2018. Machine learning based skin disease image classification [Doctoral dissertation, Zhejiang University].

Wu, Y., Jin, Z., Shi, C., Liang, P., & Zhan, T. 2024. Research on the Application of Deep Learning-based BERT Model in Sentiment Analysis. arXiv preprint arXiv:2403.08217.

Xia, Y., Zhang, L., Meng, L., et al. 2017. Exploring web images to enhance skin disease analysis under a computer vision framework. IEEE Transactions on Cybernetics, 48(11), 3080-3091.

Zhao, C., Shuai, R. J., Ma, L., Liu, W. J., & Wu, M. L. 2022. Generation and classification of skin cancer images based on self-attention StyleGAN. Computer Engineering and Applications, 58(18), 111-121.
