Optimizing Decision Making in Aviation: A New Communication Paradigm for Rerouting

Turkan Hentati¹ᵃ, Théodore Letouze²ᵇ, Charles Alban Dormoy¹ᶜ, Jaime Diaz-Pineda³ᵈ, Ricardo Jose Nunes dos Reis⁴ᵉ, Anaisa de Paula Guedes Villani⁴ᶠ and Jean-Marc Andre²ᵍ

¹ CATIE, Bordeaux, France
² Bordeaux INP-ENSC, IMS UMR 5218, Université de Bordeaux, CNRS Talence, Bordeaux, France
³ Thales AVS, Bordeaux, France
⁴ Embraer Research and Technology Europe - Airholding S.A., Alverca do Ribatejo, Portugal

Keywords: HAT, Bidirectional Communication, Decision Making, Intelligent Assistant, Human-Cooperative Techniques.

Abstract: Commercial aviation is increasingly constrained by airspace congestion and by the need to balance profitability with environmental concerns. Despite this growing complexity, pilots' cognitive resources remain the same. This article examines a new communication paradigm based on 'intentions' in HAT (Human-Autonomy Teaming) for commercial aviation. The use case involves a cockpit IA (Intelligent Assistant) designed to assist the flight crew in rerouting or diverting an airliner to a new destination in the event of weather hazards, taking into account various operational performance indicators. To communicate and negotiate with the IA, the pilot expresses their high-level goal, also known as operator intention, such as preserving cognitive capacities, passenger comfort, or airline profitability, in order to find the optimal solution. This work compares three types of assistance: decision support, cooperative assistance, and collaborative assistance. The study aims to identify the key features of each type and to determine the most suitable level of assistance for supporting decision-making during rerouting. To address these objectives, six pilots were asked to evaluate the three types of assistance using the 'cognitive walkthrough' method and questionnaires on trust and usability. The results identify key features of each type of assistance that can improve decision-making performance when work is distributed between the pilot and the IA.

ᵃ https://orcid.org/0000-0003-0865-4618
ᵇ https://orcid.org/0000-0002-8670-0280
ᶜ https://orcid.org/0009-0003-5737-6789
ᵈ https://orcid.org/0009-0007-0591-7706
ᵉ https://orcid.org/0000-0002-5201-5314
ᶠ https://orcid.org/0000-0002-4523-8720
ᵍ https://orcid.org/0000-0001-9844-4694

Hentati, T., Letouze, T., Dormoy, C., Diaz-Pineda, J., Reis, R., Villani, A. and Andre, J.
Optimizing Decision Making in Aviation: A New Communication Paradigm for Rerouting.
DOI: 10.5220/0012960600004562
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 2nd International Conference on Cognitive Aircraft Systems (ICCAS 2024), pages 51-57
ISBN: 978-989-758-724-5
Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

1 INTRODUCTION

Safety has always been a paramount consideration in the civil aviation industry (Li et al., 2023). With the rapid worldwide growth of airline operations, the importance of aviation safety and risk management is becoming more prominent. Over the past decades, the use of intelligent systems in aircraft has increased exponentially, promising to revolutionize the safety, efficiency, and comfort of air travel. Artificial intelligence technologies are expected to play an increasingly crucial role in the future of the aviation sector. Investments in artificial intelligence, which amounted to approximately $340 million in 2019, are projected to reach $3.7 billion by 2027, with a compound annual growth rate of 45.3%. This trend is expected to intensify further, as aircraft are anticipated to be equipped with sophisticated artificial intelligence (AI) systems that play a crucial role in decision-making and pilot assistance (Ceken, 2024). Such systems are likely to transform human-machine interaction into Human-Autonomy Teaming (HAT), in which team-oriented intentions, shared mental models, and some decision authority to determine actions allow systems to coordinate effectively with humans in complex tasks (Lyons et al., 2021).

This exponential growth stems primarily from the industry's constant pursuit of enhanced aviation safety. Various types of aviation accidents, such as loss of control in flight (LOC-I), unexplained or undetermined occurrences (UNK), controlled flight into terrain (CFIT), and system or component failures, have prompted a thorough re-evaluation of existing safety protocols (Li et al., 2023). These accidents, often attributed to human error or unforeseen environmental factors, have highlighted the need for technological assistance to help pilots manage complex situations and systems, particularly during critical flight events.

1.1 Human Autonomy Teaming

Recent research into intelligent systems has centred on exploring the viability of HAT, which would serve the dual purpose of managing new onboard assistance and supporting the pilot during periods of high workload. HAT is an innovative technique for user control and review of decision making (Saephan, 2023). The challenges in HAT lie in creating (1) opportunities for teams to build shared awareness and collective motivation, (2) an understanding of the tasks and interactions that can benefit from social cueing, and (3) methods to use these cues effectively to improve HAT performance (Lyons et al., 2021). Such an assistant can reduce the pilot's cognitive burden and enhance operational efficiency. Ultimately, the goal of HAT is to regain and manage control of an aircraft, mostly in the event of pilot incapacitation, either directly or by enabling intervention from a ground operator. Despite its apparent intuitiveness, the related concept of mental workload remains surprisingly elusive to define conclusively, with no universal consensus reached so far (Puca & Guglieri, 2023).

The fundamental reasoning behind employing HAT is its potential to enhance performance compared with either humans working alone or machines operating independently, especially in situations characterized by significant uncertainty (Cummings, 2014). The European Union Aviation Safety Agency (EASA), the primary European aviation regulator, has outlined a vision of AI and its potential impacts on aviation operations and practices. EASA's recent guidance on human-AI teaming consists of six categories, including 1B, cognitive assistant (equivalent to advanced automation support); 2A, cooperative agent, capable of completing tasks as requested by the operator; and 2B, collaborative agent, an autonomous agent that works with human colleagues but can take the initiative and execute tasks, as well as negotiate with its human counterparts (Kirwan, 2024).

Communication, coordination and trust are important in HAT (Johnson et al., 2014). For good communication between humans and the IA, three key attributes allow users to move toward treating automation as a teammate: a pilot-directed interface, transparency, and bi-directional communication (Shively et al., n.d.). These principles are integrated into all three levels of assistance studied here, which are based on the EASA categories mentioned above. With a focus on empowering pilots, ensuring transparency, and facilitating effective communication, these assistance concepts aim to deliver high-quality technical assistance while meeting rigorous aviation standards. Historically, Fitts (1951) made an early effort to classify activities within air traffic control systems into human tasks and machine tasks, using the "Men-Are-Better-At / Machines-Are-Better-At" (MABA-MABA) principle. However, the rigidity of this principle poses limitations, as technological advancements can render such categorizations outdated over time. Another widely adopted framework is the Level of Automation (LOA), which categorizes tasks based on cognitive functions. For instance, LOA frameworks often include categories such as information acquisition, information analysis, decision making, and action implementation.

Recent work on Single Pilot Operations (SPO) has developed a Proof of Concept (PoC) of HAT, with cognitive computing (the machine) acting as a teammate for the pilot (the human). The intelligent teammate was implemented in legacy cockpits using augmented reality (AR) and vocal communication, and offered two levels of assistance for testing: on-request and proactive ("automatic") CCT (Dormoy et al., 2021; Minaskan et al., 2022).

Various organizations, such as SESAR SJU, an institutionalised European partnership (Save et al., 2012), and EASA (EASA, 2023), have developed LOA taxonomies to guide the understanding and implementation of automation levels in aviation. In air traffic control, a proof of concept of a controller working position (CWP) was also developed and presented at Airspace World 2023 in Geneva. It was evaluated in terms of feasibility, utility and usability for Single Controller Operations (SCO) in a human-in-the-loop simulation campaign involving human air traffic controllers (ATCOs) (Jameel et al., 2023). This PoC was well received by participants at the trade show.

Nevertheless, the use of more autonomous systems often faces issues of societal and organisational acceptance. Rice et al. (2019) developed a predictive model indicating that willingness to fly on an autonomous aircraft is positively correlated with familiarity with the technology and negatively correlated with caution toward new technologies. The main objective of the Haiku project, within which the work presented here was developed, is to improve the understanding of Human-AI Teaming through prototypes designed to establish safe, secure, trustworthy, and effective partnerships with humans in aviation systems. Specifically, in Use Case 2 (UC 2), we explore HAT for mission replanning in the cockpit of commercial aviation.

1.2 The Objective of the HAT in Commercial Aviation

It is crucial to emphasize that the objective of the proposed intelligent assistant (IA) is to assist commercial pilots in the complex task of flying. The purpose of this system is to improve pilots' situational awareness and to reduce the workload and stress associated with monitoring and mitigating unexpected events that may affect the planned route, while supporting the selection of a course of action that not only assures a safe flight termination but also safeguards passenger comfort and the other operational objectives of the airline. Indeed, the symbiosis between human and machine lies at the heart of this technological evolution, which aims to ensure safer and more efficient air navigation in the years ahead.

The way in which this intelligent system communicates with pilots, using operational intentions to express high-level goals, is central to these objectives. Still, different types of interaction may be proposed to promote the shared awareness, trust and coordination needed to ensure overall task performance. The aim of this study is to present the methodology employed and the outcomes achieved during the initial validation phase of UC 2 in the Haiku project, in which different IA concepts were evaluated.

2 MATERIAL AND METHODS

2.1 Methodology

We employed the "Cognitive Walkthrough" method. Rooted in human factors engineering, this method systematically evaluates interface usability by simulating user interactions and decision-making processes, iteratively and within a user-centered design approach.

The initial research question was as follows:

- What are the key features of each type of assistance (decision support - 1B, cooperative - 2A, collaborative - 2B) that ensure teamwork requirements are met and support team effectiveness?

Our hypotheses (H) are:

- H1: HAT cooperative teaming (2A) improves the decision-making process during in-flight rerouting compared with decision support assistance (1B).

- H2: HAT collaborative teaming (2B) improves the decision-making process during in-flight rerouting compared with HAT cooperative teaming (2A).

This endeavour serves to explore the potential of AI

in addressing operational challenges faced by pilots,

particularly in an increasingly complex aviation

environment.

In our case, we evaluate three different levels of intelligent assistance:

• 1B – Human assistance
• 2A – Human-AI cooperation
• 2B – Human-AI collaboration

2.2 Material

The intelligent assistant concepts investigated in this paper integrate the principles of the AI-based “COMBI” (Hourlier et al., 2022) into the cockpit IA, with the goal of helping the flight crew reroute an aircraft to a new destination airport due to deteriorating weather, considering a number of factors (e.g., remaining fuel and distance to the airport, en-route turbulence, possible connections for passengers given their final destinations). The flight crew remains in charge but always coordinates with the IA to derive the optimal solution.

Through the COMBI interface, the pilots use operational intentions to communicate with the IA. “Intentions involve mental activities such as planning and forethought, they can be declared and clearly defined, while in other instances can be undeclared or masked, making them sometimes complex to identify” (Bratman, 1987).

These intentions are always oriented toward achieving a particular goal in a specific way.

COMBI allows the pilot to direct the generation of options according to the prioritized intentions and also to assess the proposed results in terms of these intentions. In COMBI's user interface, the pilot can select and prioritize among three intentions:

- Passenger comfort

- Pilot cognitive comfort

- Airline profitability

The graphical interface in Figure 1 was proposed to let pilots easily select and visualize the prioritization of intentions.

Figure 1: An interactive mock-up illustrating the

communication of the pilot's intentions to the COMBI.

For example, in Figure 1, the pilot selects Passenger comfort, meaning that they want a solution that prioritizes passenger comfort first, then profitability (on the same side of the triangle), and finally pilot cognitive comfort.
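The prioritization illustrated in Figure 1 can be made concrete with a small sketch. It assumes, purely for illustration, that the point the pilot selects inside the intention triangle is represented by barycentric weights (one non-negative weight per vertex, summing to 1) and that the priority order is simply the order of decreasing weight. The names `Intention` and `priority_order` are hypothetical and are not part of COMBI.

```python
from enum import Enum


class Intention(Enum):
    """The three operational intentions offered by the interface."""
    PASSENGER_COMFORT = "Passenger comfort"
    AIRLINE_PROFITABILITY = "Airline profitability"
    PILOT_COGNITIVE_COMFORT = "Pilot cognitive comfort"


def priority_order(weights: dict[Intention, float]) -> list[Intention]:
    """Map a point in the intention triangle to a priority order.

    Assumption (hypothetical representation): the point is given as
    barycentric weights, one per vertex, non-negative and summing to 1.
    Intentions are ranked by decreasing weight; the stable sort keeps the
    Enum declaration order when two weights are equal.
    """
    if set(weights) != set(Intention):
        raise ValueError("one weight is required for each of the three intentions")
    if any(w < 0 for w in weights.values()) or abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    return sorted(Intention, key=lambda intention: -weights[intention])


if __name__ == "__main__":
    # A point on the Passenger comfort / Profitability side, closer to the
    # Passenger comfort vertex (hypothetical values).
    order = priority_order({
        Intention.PASSENGER_COMFORT: 0.7,
        Intention.AIRLINE_PROFITABILITY: 0.3,
        Intention.PILOT_COGNITIVE_COMFORT: 0.0,
    })
    print([intention.value for intention in order])
    # ['Passenger comfort', 'Airline profitability', 'Pilot cognitive comfort']
```

Under this reading, a point lying on a side of the triangle gives zero weight to the opposite vertex, which reproduces the example above: the intention on the same side comes second and the remaining one comes last.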

Description of the low-fidelity prototype:

- An interface showing a number of proposed routes graphically, accompanied by critical information such as weather conditions and estimated time and fuel at destination, with scores representing how much each route contributes to the three intentions.

- On-demand complementary information to support operational XAI (explainable AI), with further elements indicating how the selected route and destination contribute to the intentions.
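To illustrate how the intention scores on the route display could drive the ordering of options, the sketch below ranks candidate routes lexicographically by the pilot's priority order: best on the first intention, ties broken by the second, then the third. This ranking rule, the 0 to 100 score scale, the field names and the example values are all assumptions made for illustration; the paper does not specify how COMBI computes or combines its scores.

```python
from dataclasses import dataclass


@dataclass
class RouteOption:
    """One candidate route as it might appear on the display (hypothetical fields)."""
    name: str
    flight_time_min: int       # estimated time to destination, minutes
    fuel_at_dest_kg: float     # estimated fuel on arrival, kg
    scores: dict[str, float]   # intention -> contribution score, assumed 0..100


def rank_by_priority(options: list[RouteOption], priority: list[str]) -> list[RouteOption]:
    """Sort routes best-first (assumed rule: lexicographic comparison of the
    scores, taken intention by intention in the pilot's priority order)."""
    return sorted(options, key=lambda r: tuple(-r.scores[i] for i in priority))


if __name__ == "__main__":
    routes = [
        RouteOption("A", 95, 3100.0, {"passenger": 80, "profit": 60, "cognitive": 70}),
        RouteOption("B", 80, 3400.0, {"passenger": 80, "profit": 75, "cognitive": 50}),
        RouteOption("C", 70, 3600.0, {"passenger": 65, "profit": 90, "cognitive": 85}),
    ]
    ranked = rank_by_priority(routes, ["passenger", "profit", "cognitive"])
    print([r.name for r in ranked])  # ['B', 'A', 'C']
```

A lexicographic rule never lets a lower-priority intention outweigh a higher one; a weighted sum would be the obvious alternative if small losses on the first intention should be traded for large gains elsewhere.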

2.3 Use Case Scenarios

The flight scenario was designed with the assistance of two pilots, based on Eurocontrol reports (Eurocontrol, 2023) that identify the airports most affected by significant weather conditions at different times of the year. The scenario was presented to the pilots as a brief operational flight plan describing a regional flight from Marseille to Munich. Four different weather conditions were simulated to represent the four seasons, covering a variety of representative weather situations.

Pilots were asked to evaluate three different types of AI assistant, along with their respective interfaces, using the "Cognitive Walkthrough" method.

During the Cognitive Walkthrough sessions, pilots were presented with simulated flight scenarios and guided through tasks involving the AI assistants and interfaces. As they progressed through each interface, pilots were encouraged to articulate their thoughts, interactions, and decision-making processes out loud. Researchers closely observed and documented pilot behaviours, identifying potential usability issues, cognitive workload, and complexities within the interfaces. At the end of each level, a few questions about trust in AI and usability were asked.

By drawing on the expertise and insights of seasoned pilots, the Cognitive Walkthrough methodology yields valuable feedback for refining AI assistants and interfaces in aviation settings. This structured approach enables usability challenges to be identified and remedied, ultimately improving the usability and user experience of AI technologies in aviation operations.

2.4 Modalities: Levels of Assistance

The three modalities tested with all pilots are:

• Decision support (1B): three routes linked to the pilot's intentions are proposed, and the associated flight plan (FP) can be implemented in the FMS on request. The three routes maximize the first intention.

• Cooperative assistant (2A): for low-impact threats, one route linked to the pilot's intentions is proposed and implemented on request; in other cases, the assistant works in the same way as 1B. The route maximizes the first intention.

• Collaborative assistant (2B): two routes are proposed, one called “Least negative impact” and one called “Best compromise”. The respective FP can be implemented in the FMS on request.

  - “Least negative impact”: the other two intentions may lose value, but the loss is shared as evenly as possible between them.

  - “Best compromise”: the loss of value for the other two intentions is assessed against the gain on the first intention; low losses are accepted for low gains.
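The selection rules of the three modalities can be sketched as follows. Several interpretations here are assumptions, not the paper's specification: scores share a 0 to 100 scale; gains and losses are measured against a reference score per intention (for example, the currently planned route); “Least negative impact” is read as minimising the larger of the two losses (a minimax rule that keeps either intention from bearing most of the loss), with the total loss as a tie-breaker; and “Best compromise” is read as maximising the gain on the first intention minus the summed losses on the other two. All function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Route:
    name: str
    scores: dict[str, float]  # intention -> score, assumed on a 0..100 scale


def _priority_key(route: Route, priority: list[str]) -> tuple:
    # Best first intention, ties broken by the following intentions.
    return tuple(-route.scores[i] for i in priority)


def decision_support_1b(routes: list[Route], priority: list[str], k: int = 3) -> list[Route]:
    """1B: propose the k routes that best serve the first intention."""
    return sorted(routes, key=lambda r: _priority_key(r, priority))[:k]


def cooperative_2a(routes: list[Route], priority: list[str], low_impact_threat: bool) -> list[Route]:
    """2A: a single route for low-impact threats (hypothetical flag), otherwise as 1B."""
    return decision_support_1b(routes, priority, k=1 if low_impact_threat else 3)


def collaborative_2b(routes: list[Route], priority: list[str],
                     reference: dict[str, float]) -> tuple[Route, Route]:
    """2B: return the ("Least negative impact", "Best compromise") pair."""
    first, others = priority[0], priority[1:]

    def losses(r: Route) -> list[float]:
        # Assumed definition: value lost on each secondary intention vs. the reference.
        return [max(0.0, reference[i] - r.scores[i]) for i in others]

    # Only routes that improve the first intention are considered (fallback: all).
    candidates = [r for r in routes if r.scores[first] > reference[first]] or routes

    # Minimax reading of "shared as evenly as possible", ties broken by total loss.
    least_negative = min(candidates, key=lambda r: (max(losses(r)), sum(losses(r))))
    # Net-benefit reading of "loss assessed against the gain".
    best_compromise = max(candidates,
                          key=lambda r: (r.scores[first] - reference[first]) - sum(losses(r)))
    return least_negative, best_compromise


if __name__ == "__main__":
    priority = ["passenger", "profit", "cognitive"]
    reference = {"passenger": 50, "profit": 50, "cognitive": 50}  # e.g. the original plan
    routes = [
        Route("R1", {"passenger": 90, "profit": 20, "cognitive": 45}),
        Route("R2", {"passenger": 70, "profit": 42, "cognitive": 42}),
        Route("R3", {"passenger": 60, "profit": 48, "cognitive": 50}),
        Route("R4", {"passenger": 80, "profit": 40, "cognitive": 48}),
    ]
    print([r.name for r in decision_support_1b(routes, priority)])         # ['R1', 'R4', 'R2']
    print([r.name for r in cooperative_2a(routes, priority, True)])         # ['R1']
    print([r.name for r in collaborative_2b(routes, priority, reference)])  # ['R3', 'R4']
```

With these invented scores, the two 2B rules pick different routes: R3 loses almost nothing but gains little, while R4 trades moderate losses for a larger gain on passenger comfort, which is the kind of contrast the two labels are meant to expose.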

In all the solutions proposed by each assistant, safety is always the priority and is guaranteed.

For the reroute case, the new route is always safe, ensuring that the aircraft arrives at its destination with a functional aircraft, a safe crew and at least the minimum legal fuel. For the choice of an alternate airport, safe landing performance, a safe aircraft and crew, minimum legal fuel, etc. are also always ensured. This information is displayed on the HMI as a grey bar, showing that safety is fixed at 100% and cannot be changed.
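The safety guarantee described above behaves like a hard constraint rather than a fourth weighted intention. A minimal sketch, assuming hypothetical field names and assuming that the minimum legal fuel for each destination is supplied by an external computation (it is not derived here), filters out any candidate that violates a safety condition before any intention-based ranking, and reports safety as a fixed 100% value for the grey bar.

```python
from dataclasses import dataclass

SAFETY_BAR_PERCENT = 100  # shown as a fixed grey bar; never traded against intentions


@dataclass
class Candidate:
    """A candidate route or alternate airport (hypothetical fields)."""
    name: str
    fuel_on_arrival_kg: float    # predicted fuel at destination
    min_legal_fuel_kg: float     # regulatory minimum, assumed computed elsewhere
    aircraft_serviceable: bool   # "functional machine"
    crew_fit: bool               # "safe crew"
    landing_performance_ok: bool


def is_safe(c: Candidate) -> bool:
    """Every condition must hold; there is no partial credit for safety."""
    return (
        c.fuel_on_arrival_kg >= c.min_legal_fuel_kg
        and c.aircraft_serviceable
        and c.crew_fit
        and c.landing_performance_ok
    )


def safe_candidates(candidates: list[Candidate]) -> list[Candidate]:
    """Keep only candidates that satisfy all safety constraints."""
    return [c for c in candidates if is_safe(c)]


if __name__ == "__main__":
    options = [
        Candidate("ALT1", 2900.0, 2500.0, True, True, True),
        Candidate("ALT2", 2300.0, 2500.0, True, True, True),   # below minimum fuel
        Candidate("ALT3", 3100.0, 2500.0, True, True, False),  # landing performance not met
    ]
    print([c.name for c in safe_candidates(options)], f"safety: {SAFETY_BAR_PERCENT}%")
    # ['ALT1'] safety: 100%
```

Filtering first means the intention scores only ever compare options that are already safe, which is consistent with presenting safety as a bar the pilot cannot adjust.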


3 RESULTS

The panel comprised six commercial airline pilots of different nationalities, selected from diverse aviation backgrounds, all of whom had flown in Europe (short and long haul). The pilots' mean age was 48.33 years (SD = 6.62). On average, the pilots had accumulated 9,045 flight hours over their careers (SD = 3,099), with an average of 535 flight hours in the last 12 months (SD = 215), reflecting variability in flight experience and recent activity among the participants. Given the small sample size of our study (six pilots), we restricted our analyses to descriptive statistics in order to identify trends only. All analyses were carried out using R version 4.3.0 in RStudio.

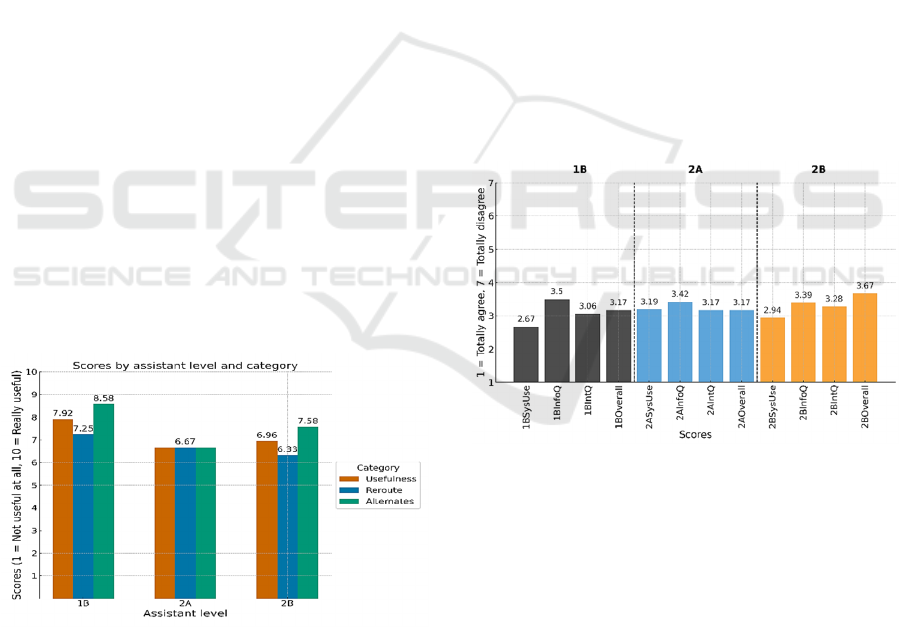

3.1 Usefulness

Question 1 asked: “On a scale of 1 to 10, how useful is this assistant against being alone in the cockpit?”, with 1 meaning “not useful at all” and 10 “really useful”. Responses were analysed separately for the three situations and the three types of assistant (1B - decision support, 2A - cooperation, 2B - collaboration).

Participants' responses provide valuable feedback on the perceived utility of the assistant in realistic aviation scenarios. Higher ratings indicate that the assistant is seen as more beneficial than operating alone in the cockpit; conversely, lower ratings may indicate areas where the assistant falls short of pilots' expectations and requirements for in-flight assistance.

Figure 2: Overall usefulness, usefulness for reroute and

usefulness for alternates (diversion), for the 3 types of

assistant.

These results suggest that, overall, participants tend to prefer the 1B assistant (decision support), followed by the 2B assistant (collaboration), while the 2A assistant (cooperation) is rated less favourably. However, preferences may vary depending on the specific operational situation.

Nevertheless, all three assistants were rated as useful (scores above 5) in every situation (overall, reroute, alternates).
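Because the analysis was restricted to descriptive statistics, a summary of the kind shown in Figure 2 can be sketched as a mean and standard deviation per assistant and situation, with a flag for means above the threshold of 5 used in the text. This assumes the comparison is made on the mean rating. The study's analyses were run in R; this Python version and the function name `summarize_usefulness` are only illustrative, and the ratings used below are invented, not the study's data.

```python
from statistics import mean, stdev


def summarize_usefulness(
    ratings: dict[tuple[str, str], list[int]],
    threshold: float = 5.0,
) -> dict[tuple[str, str], tuple[float, float, bool]]:
    """Summarize 1-10 usefulness ratings per (assistant, situation).

    Returns (mean, sample standard deviation, mean > threshold) for each key.
    The SD is reported as 0.0 when only one rating is available.
    """
    summary = {}
    for key, values in ratings.items():
        if not values or any(not 1 <= v <= 10 for v in values):
            raise ValueError(f"ratings for {key} must be non-empty and on the 1-10 scale")
        m = mean(values)
        sd = stdev(values) if len(values) > 1 else 0.0
        summary[key] = (m, sd, m > threshold)
    return summary


if __name__ == "__main__":
    # Invented ratings for illustration only (six raters per cell).
    example = {
        ("1B", "overall"): [7, 8, 6, 9, 7, 8],
        ("2A", "reroute"): [5, 4, 6, 5, 5, 5],
    }
    for (assistant, situation), (m, sd, useful) in summarize_usefulness(example).items():
        print(f"{assistant} {situation}: mean={m:.2f} sd={sd:.2f} above threshold={useful}")
```

With six raters per cell, the standard deviation is as informative as the mean, which is one reason the text limits itself to trends.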

3.2 Usability

According to ISO 9241, usability is the effectiveness, efficiency, and satisfaction with which specified users achieve specified goals in particular environments.

To measure usability, we used the Computer System Usability Questionnaire (CSUQ), which evaluates three dimensions: system usability (SYSUSE), information quality (INFOQUAL) and interaction quality (INTERQUAL) (Lewis, 1995).

The CSUQ measures users' perception of their experience. The pilots who participated in this study were asked to respond to the 16 CSUQ questions, rating each on a scale from 1 “totally agree” to 7 “totally disagree”; with this orientation, lower scores indicate better perceived usability.
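Subscale scores on the CSUQ are obtained by averaging item ratings. The sketch below assumes the item grouping published for the 16-item version of the questionnaire (SYSUSE items 1 to 6, INFOQUAL items 7 to 12, INTERQUAL items 13 to 15, overall score on all 16 items) and skips unanswered items, which are marked with `None`. The function name `csuq_scores` and the example responses are hypothetical.

```python
from statistics import mean
from typing import Optional

# Assumed item-to-subscale grouping for the 16-item CSUQ (1-based item numbers).
SUBSCALES = {
    "SYSUSE": range(1, 7),       # items 1-6
    "INFOQUAL": range(7, 13),    # items 7-12
    "INTERQUAL": range(13, 16),  # items 13-15
    "OVERALL": range(1, 17),     # items 1-16
}


def csuq_scores(responses: list[Optional[int]]) -> dict[str, float]:
    """Average one participant's 16 ratings per subscale.

    Ratings run from 1 ("totally agree") to 7 ("totally disagree"), so lower
    means better perceived usability. None marks an unanswered item and is
    left out of the average; a subscale with no answers yields NaN.
    """
    if len(responses) != 16:
        raise ValueError("expected exactly 16 CSUQ responses")
    if any(r is not None and not 1 <= r <= 7 for r in responses):
        raise ValueError("ratings must be on the 1-7 scale")
    scores = {}
    for name, items in SUBSCALES.items():
        answered = [responses[i - 1] for i in items if responses[i - 1] is not None]
        scores[name] = float(mean(answered)) if answered else float("nan")
    return scores


if __name__ == "__main__":
    # Hypothetical responses from one participant; item 2 left unanswered.
    example = [2, None, 2, 3, 2, 2, 3, 3, 4, 3, 3, 3, 1, 2, 1, 2]
    print(csuq_scores(example))
```

Group-level figures such as those in Figure 3 would then be means of these per-participant scores for each assistant.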

Figure 3: Results of the CSUQ questionnaire for 1B, 2A and

2B intelligent assistants, and by dimensions, SYSUSE

(System Usability), INFOQUAL (Information Quality),

INTERQUAL (Interaction Quality).

In summary, the cooperative (2A) and decision support (1B) assistants seem to offer a better overall user experience than the collaborative assistant (2B). System usability seems to be best for 1B, and information quality seems slightly better for 1B.

3.3 Trust in AI

This questionnaire assesses individuals' tendency to trust technology in various contexts (Schneider & Preckel, 2017). It can be used to understand participants' attitudes and perceptions regarding the reliability, usability, and effectiveness of technology in supporting their tasks and decision-making processes.

Based on the descriptive analysis, the participants' overall trust in AI did not appear to change across conditions (before and after exposure to the AI). However, participants seemed to have a moderate level of trust in the IA (rating of 2.75 out of 5).

3.4 Interviews

In the interviews, pilots were asked what they appreciated in the intelligent assistant concepts and what could be improved. Overall, the pilots appreciated using the bidirectional communication proposed by the COMBI assistant, with a preference for the functionality of assistance level 1B, which offered multiple options. The operational intentions of passenger comfort and airline profitability were well understood, unlike the pilot cognitive comfort intention, which seemed more complex to assess. Despite this, pilots recognized the importance and usefulness of intentions and gave a positive evaluation of managing the mission based on them.

The pilots also provided recommendations to improve the interface, such as adding natural voice interaction, using colors to indicate the situation at each airport, and offering multiple solutions, as in 1B.

When asked about the best means of providing additional information to support explainability and proper oversight, pilots indicated that multiple types of interfaces and interactions should be tested in prototypes before they could form an opinion.

4 DISCUSSION

The difficulties encountered in understanding the pilot cognitive comfort intention highlight the need for a training phase to thoroughly understand the model behind each intention. Such training should also help increase trust in the system, even if trust is already at a reasonable level.

We observed differences in how decisions were made with the 1B, 2A and 2B assistants. When using the 2A assistant, pilots made decisions quickly, often because fewer alternative options were available. By contrast, pilots took longer to make decisions with the 2B and 1B assistants.

In conditions where decision-making was swift, such as 2A, pilots may have been compelled to rely on rapid judgments due to the constraints of the situation. With fewer alternative options available, pilots may have accepted the proposed option simply because it was acceptable, even if it was not the best solution for them.

Conversely, with 2B and 1B, where decision times were longer because of the greater number of options, the multiple choices and the time available enabled the pilots to analyse, compare and evaluate the different options in depth. However, had time been limited, the limited cognitive resources available under stress could have forced the pilots to adopt more cautious and deliberate decision-making processes.

Overall, these observations highlight the impact of human cognitive limitations, particularly in high-pressure flight scenarios, where time constraints may compromise the depth of analysis and lead to varied decision-making times. This variation in decision-making time can be explained by the limited cognitive resources of humans, as described in Rasmussen's SRK (Skill, Rule, Knowledge) model (Rasmussen, 1983). When relying more on analytical, knowledge-based reasoning, the decision-making process becomes slower and more mentally demanding. A related phenomenon is the paradox of choice, where trying to avoid missing out on the best option can prolong decision-making and cause frustration over unselected options. Additionally, having multiple choices creates a need for cognitive closure, that is, the desire for a clear answer that avoids uncertainty and regret, resulting in cognitive strain and frustration. Time stress also plays a crucial role: in our scenario, the pilots had ample time to make decisions, and lower time stress allows for more analytical decision-making, leading to longer decision times.

5 CONCLUSION AND FUTURE WORK

In this paper, we showed that the different concepts of assistance each have positive and negative characteristics. As future work, we will take pilot feedback into account and continue interviewing pilots to determine the most concise and comprehensible way of presenting additional information in the interface, and we will integrate the strengths of each level into a unified assistant with personalized features. With these steps, we aim to enhance the utility and user experience of future aviation assistance projects.

By incorporating pilots' feedback, we can refine the assistant's features to better suit their needs and preferences. This could involve streamlining decision-making processes, optimizing the interface to ensure user acceptance and usability, and enhancing support for handling varying levels of complexity and stress in flight scenarios.

Furthermore, merging the advantages of each level into a single assistant with a personalized touch could provide pilots with a more tailored and intuitive user experience. This approach would help pilots leverage the assistant's capabilities more effectively, thereby improving the efficiency and effectiveness of decision-making. A new version of COMBI will be tested in a simulator with more pilots.

ACKNOWLEDGEMENTS

This study was conducted within the Haiku project. This project has received funding from the European Union’s Horizon Europe research and innovation programme HORIZON-CL5-2021-D6-01-13 under Grant Agreement no 101075332.

We would like to thank all the participants who

took part in our study. We thank all the reviewers for

their useful suggestions.

REFERENCES

Bratman, M. (1987). Intention, plans, and practical reason.

Ceken, S. (2024). Cleared for Takeoff: Exploring Digital Assistants in Aviation. In Harnessing Digital Innovation for Air Transportation (pp. 1-24). IGI Global.

Cummings, M. M. (2014). Man versus machine or man + machine? IEEE Intelligent Systems, 29(5), 62-69.

Dormoy, C., André, J.-M., & Pagani, A. (2021). A human factors’ approach for multimodal collaboration with Cognitive Computing to create a Human Intelligent Machine Team: A Review. IOP Conference Series: Materials Science and Engineering, 1024(1), 012105. https://doi.org/10.1088/1757-899x/1024/1/012105

Eurocontrol. (2023). Network Operations Report 2023. https://www.eurocontrol.int/sites/default/files/2024-03/eurocontrol-annual-nor-2023-annex-2-airports.pdf

European Union Aviation Safety Agency. (2023). EASA Concept Paper: First usable guidance for Level 1 & 2 machine learning applications - Issue 02. European Union Aviation Safety Agency. https://www.easa.europa.eu/en/document-library/general-publications/easa-artificial-intelligence-concept-paper-proposed-issue-2#group-easa-downloads

Fitts, P. M. (1951). Human engineering for an effective air-navigation and traffic-control system.

Hourlier, S., Diaz-Pineda, J., Gatti, M., Thiriet, A., & Hauret, D. (2022). Enhanced dialog for Human-Autonomy Teaming - A breakthrough approach. 1-5.

Jameel, M., Tyburzy, L., Gerdes, I., Pick, A., Hunger, R., & Christoffels, L. (2023). Enabling Digital Air Traffic Controller Assistant through Human-Autonomy Teaming Design. 1-9.

Johnson, M., Bradshaw, J. M., Feltovich, P. J., Jonker, C. M., Van Riemsdijk, M. B., & Sierhuis, M. (2014). Coactive Design: Designing Support for Interdependence in Joint Activity. Journal of Human-Robot Interaction, 3(1), 43. https://doi.org/10.5898/JHRI.3.1.Johnson

Kirwan, B. (2024). The Impact of Artificial Intelligence on Future Aviation Safety Culture. Future Transportation, 4(2), 349-379.

Lewis, J. R. (1995). Measuring perceived usability: The CSUQ, SUS, and UMUX. International Journal of Human–Computer Interaction, 34(12), 1148-1156.

Li, X., Romli, F. I., Azrad, S., & Zhahir, M. A. M. (2023). An Overview of Civil Aviation Accidents and Risk Analysis. Proceedings of Aerospace Society Malaysia, 1(1), 53-62.

Lyons, J. B., Sycara, K., Lewis, M., & Capiola, A. (2021). Human–Autonomy Teaming: Definitions, Debates, and Directions. Frontiers in Psychology, 12.

Minaskan, N., Alban-Dormoy, C., Pagani, A., Andre, J.-M., & Stricker, D. (2022). Human intelligent machine teaming in single pilot operation: A case study. 348-360.

Puca, N., & Guglieri, G. (2023). Enabling Single-Pilot Operations technological and operative scenarios: A state-of-the-art review with possible cues.

Rasmussen, J. (1983). Skills, rules, and knowledge; signals, signs, and symbols, and other distinctions in human performance models. IEEE Transactions on Systems, Man, and Cybernetics, 3, 257-266.

Saephan, M. (2023). Human-Autonomy Teaming. California High School Aero Astro Club Meeting.

Save, L., Feuerberg, B., & Avia, E. (2012). Designing human-automation interaction: A new level of automation taxonomy. Proc. Human Factors of Systems and Technology, 2012.

Schneider, M., & Preckel, F. (2017). Variables associated with achievement in higher education: A systematic review of meta-analyses. Psychological Bulletin, 143(6), 565.

Shively, R. J., Coppin, G., & Lachter, J. (n.d.). Bi-directional Communication in Human-Autonomy Teaming.
