Optimizing Privacy and Processing: Navigating Federated Learning

in the Era of Edge Computing

Haocheng Liu

a

Computer Science, Boston University, Boston, U.S.A.

Keywords: Federated Learning, Edge Computing, Privacy Preservation, Distributed Machine Learning.

Abstract: Edge Computing (EC)is an emerging architecture that brings Cloud Computing (CC) services nearer to data

sources. When integrated with Deep Learning (DL), EC becomes a highly promising technology and finds

extensive application across various fields. This paper investigates the dynamic intersection of Federated

Learning (FL) and Edge Computing, two forefront technological paradigms set to redefine data handling and

machine learning at the network's edge. With the exponential rise in data from edge devices, FL presents a

paradigm shift prioritizing user privacy, where data remains localized while contributing to a collective

learning model. This work delves into the inherent challenges—data heterogeneity, varying computational

capacities, and intermittent connectivity. It evaluates current methodologies, highlights advancements in

algorithmic strategies to ensure robust and efficient distributed learning, and discusses potential applications.

Future directions are examined, suggesting novel approaches for adaptive, privacy-preserving, and scalable

machine learning solutions, thus catering to the nuanced demands of real-time, decentralized data processing.

1 INTRODUCTION

In recent years, the proliferation of edge devices such

as smartphones, sensors, and the Internet of Things

(IoT) gadgets has catalyzed an exponential increase

in data generation at the network's edge. This surge in

data, marked by its sensitive and private nature, calls

for innovative processing and learning paradigms that

prioritize user privacy (Konečný et al., 2016). The

push for real-time, low-latency decision-making

reveals the shortcomings of traditional cloud-centric

machine learning models, which necessitate central

server data transmission and processing. Addressing

these challenges, edge computing and federated

learning have emerged as transformative approaches,

poised to revolutionize the handling and learning of

data at scale.

Edge computing offers a distributed paradigm that

situates computation and data storage closer to data

sources. This proximity reduces long-distance

communications, minimizes latency, and optimizes

bandwidth usage, while bolstering privacy and

security as data transmission to central servers is not

required (Mach et al., 2017). Despite its benefits in

enhancing privacy and reducing bandwidth and

a

https://orcid.org/0009-0002-4413-6463

latency, edge computing alone does not inherently

facilitate collaborative learning from decentralized

data.

In terms of federated learning, a machine learning

approach empowering multiple edge devices to

collaboratively train a shared model while

maintaining localized data. Edge devices refine

models on their own data and periodically send

updates, rather than raw data, to a central server for

aggregation. This methodology not only safeguards

privacy by circumventing the need for raw data

transfer but also capitalizes on the distributed nature

of edge devices to expand machine learning to

numerous participants (Li et al., 2020).

The melding of Federated Learning (FL) with

Edge Computing (EC) marks a significant departure

from centralized to distributed machine learning.

Conceptualized to address privacy concerns, FL

harnesses the computational capabilities at the

network's edge, facilitating local data processing and

thereby mitigating traditional cloud-based

bottlenecks (Ye, 2020; Brecko et al., 2022).

Challenges arise with the heterogeneity of edge

devices, often laden with non-IID data, which

disrupts the homogeneity needed for machine

704

Liu, H.

Optimizing Privacy and Processing: Navigating Federated Learning in the Era of Edge Computing.

DOI: 10.5220/0012969100004508

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Engineering Management, Information Technology and Intelligence (EMITI 2024), pages 704-707

ISBN: 978-989-758-713-9

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

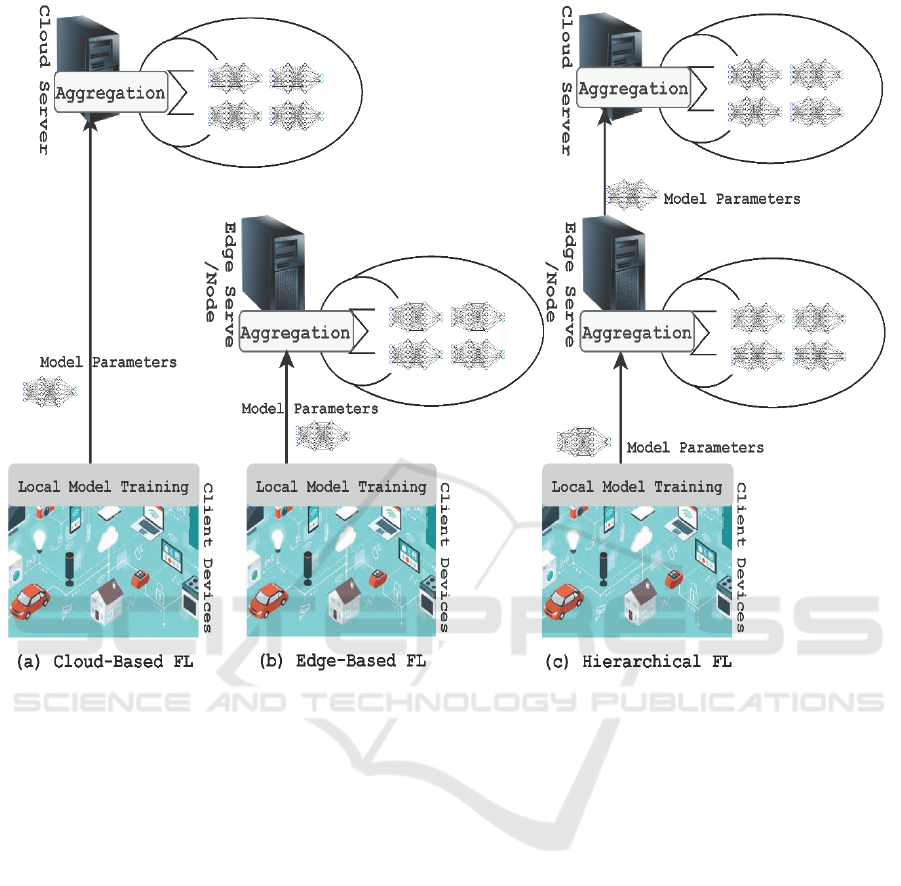

Figure 1: The structure of edge computing and federated learning (Abreha, 2022).

learning models. Innovations in FL seek to resolve

this by incorporating techniques such as weighted

aggregation to align global models with distributed

datasets (Wang et al., 2021).

Advancements in FL address the limitations of

edge device resources, ensuring a resilient

infrastructure capable of supporting real-time

analytics and decision-making (Zhou et al., 2020). As

the landscape of EC is fraught with sporadic

connectivity, FL must incorporate algorithms capable

of maintaining model convergence despite

intermittent communications (Wang et al., 2021).

Responding to these issues, the field has seen the

rise of advanced FL models that emphasize

robustness against disconnections and network

variability, ensuring that the learning process remains

intact even under suboptimal conditions. Research

has also explored client weighting strategies,

optimizing the contribution of each device based on

data quality and relevance (Brecko et al., 2022).

This paper aims to explore the intersection of

federated learning and edge computing, delving into

the opportunities and challenges that this confluence

presents. More specifically, this paper will discuss the

key concepts underlying federated learning and edge

computing, review the state-of-the-art approaches for

integrating the two, and highlight the potential

applications and future directions of this emerging

field.

2 METHOD

2.1 Theoretical Framework

The integration of Federated Learning (FL) with Edge

Computing (EC) ushers in a comprehensive

analytical approach that marries decentralized data

processing with the powerful computational

capabilities of edge devices as shown in Figure 1.

This amalgamation aims to overcome the limitations

of traditional centralized data processing models,

providing a pathway to enhanced data security and

user privacy. FL's adaptability in handling diverse

data types across distributed networks is fundamental

Optimizing Privacy and Processing: Navigating Federated Learning in the Era of Edge Computing

705

to this research, with the preservation of data privacy

being a principal concern (Kairouz et al., 2019).

Within this framework, Edge Devices are

identified as data generation and processing hubs,

acting independently to train models locally. This

process sidesteps the need for raw data centralization,

thereby reducing the risks associated with data

breaches and unauthorized access. Global Models

serve as the overarching structure in FL, synthesized

from local models and designed to adaptively learn

from the collective intelligence of all participating

devices (McMahan et al., 2017). Such models are

pivotal in accommodating the Non-IID Data that is

endemic to real-world application scenarios, which

presents varying statistical properties across different

devices and is central to the authenticity of the

modeling approach.

The Mathematical Model crucial to FL is detailed

as follows:

F(θ)=

∑

𝐹

(𝜃)

(1)

This formula is central to the method,

encapsulating the essence of FL by optimizing the

local and global loss functions across the network

(McMahan et al., 2017). Here, 𝜃 denotes the model

parameters, which are updated through Federated

Averaging (FedAvg), a robust algorithm designed for

resilient model updates in response to data

distribution variability.

2.2 Data Collection and Pre-Processing

Data collection for FL in EC environments entails a

multifaceted approach. An exhaustive dataset

reflective of the intrinsic diversity of edge devices

will be compiled. Pre-processing includes a rigorous

phase of normalization, ensuring that the dataset's

features are on a comparable scale, and feature

extraction, where key attributes are identified and

isolated for subsequent modeling. This process is

executed with an emphasis on maintaining data

integrity and privacy, in accordance with the latest

industry standards and privacy laws (Li et al., 2020).

2.3 Model Implementation

Post-preparation, FL models are iteratively developed

and fine-tuned. The initial phase involves simulations

to assess theoretical viability, followed by real-world

deployments to gauge practical applicability. This

multi-phase strategy is crucial for ensuring that FL

models are not only efficient and scalable but also

responsive to the dynamic requirements of EC

applications, such as smart cities and healthcare

systems, which demand both computational

efficiency and real-time data processing capabilities

(Bonawitz et al., 2019).

2.4 Model Evaluation

A rigorous model evaluation strategy is employed,

taking into account a comprehensive array of

performance metrics. These metrics span from

predictive accuracy to computational latency and

include the resilience of the model in unstable

network environments — an aspect particularly

pertinent to the EC context where connectivity may

fluctuate (Konečný et al., 2016).

2.5 Ethical Considerations and Privacy

Ensuring the ethical use of data is of utmost

importance. The methodology is underpinned by

stringent data protection measures, including

differential privacy, to ensure the confidentiality and

integrity of individual data contributions throughout

the FL process. Such measures ensure compliance

with ethical standards and relevant privacy

legislation, safeguarding against potential misuse of

data (Dwork, 2011).

3 DISCUSSIONS

In discussing the integration of Federated Learning

(FL) in Edge Computing (EC), several facets need to

be addressed, including the strengths of FL in

enhancing privacy and reducing latency, the

challenges presented by data heterogeneity, the

varying computational capabilities across edge

devices, and the unreliable nature of network

connections.

FL is highly beneficial for its distributed nature,

which allows for data to be processed at its source,

thereby maintaining privacy and reducing latency—a

significant advantage for real-time data processing

applications. The collaborative approach of FL also

enables a multitude of devices to contribute to the

development of a more robust global model,

reflecting a wide spectrum of data insights (McMahan

et al., 2017).

However, the implementation of FL in EC is not

without its challenges. Data heterogeneity represents

a substantial hurdle, given that edge devices generate

a wide variety of data, which can lead to skewed

learning outcomes if not properly managed.

Additionally, the varying computational capabilities

of these devices necessitate models that can operate

within these constraints, ensuring consistency and

EMITI 2024 - International Conference on Engineering Management, Information Technology and Intelligence

706

reliability in the learning process (Brecko et al.,

2022).

Network reliability is another critical issue. Edge

devices often operate in environments with

intermittent connectivity, which can disrupt the FL

process. Solutions are being explored to enhance

communication protocols, ensuring secure and stable

connections throughout the FL training process

(Shaheen et al., 2022).

Addressing these challenges, researchers are

exploring state-of-the-art solutions such as advanced

algorithms that account for data distribution

disparities and network interruptions. These solutions

aim to improve communication efficiency and model

aggregation, even in the face of the inherent

unpredictability of edge networks. Moreover,

ensuring the security of the federated learning process

remains a significant area of active research, with a

focus on developing encryption methods and privacy-

preserving techniques to protect against cyber threats

(Shaheen et al., 2022).

For the future direction of FL in EC, there is a

clear need for novel frameworks and approaches that

are adaptive to the dynamic conditions of edge

environments. Industries such as healthcare, smart

cities, and transportation are particularly primed for

the adoption of FL, given their reliance on real-time

data processing and decision-making. The potential to

develop FL models that can operate efficiently in

these sectors is vast, with ongoing research directed

towards overcoming current limitations and

harnessing the full potential of FL in EC (Shaheen et

al., 2022).

4 CONCLUSION

As this research concludes, Federated Learning and

Edge Computing together mark a paradigm shift

towards a more autonomous and privacy-aware

digital infrastructure. The promise they hold extends

beyond current achievements, gesturing towards a

future where data sovereignty and localized

intelligence become the norm. Challenges persist,

notably in harmonizing the diverse data ecosystem

and ensuring seamless connectivity, but they also act

as catalysts for further ingenuity. By continually

pushing the boundaries of what's possible in FL and

EC, there's potential to revolutionize how data is

processed and utilized, making intelligent edge

devices not just a convenience but a cornerstone of

modern computation. As this field evolves, it's

anticipated that the solutions developed will not only

be technologically sound but also ethically

responsible, steering towards a future where

technology works seamlessly, safely, and to the

benefit of all.

REFERENCES

Abreha, H. G., Hayajneh, M., & Serhani, M. A. 2022.

Federated learning in edge computing: a systematic

survey. Sensors, 22(2), 450.

Bonawitz, K., et al. 2019. Towards Federated Learning at

Scale: System Design. arXiv preprint

arXiv:1902.01046.

Brecko, A., Kajati, E., Koziorek, J., & Zolotova, I. 2022.

Federated Learning for Edge Computing: A Survey.

Applied Sciences, 12(18), 9124. MDPI.

Dwork, C. 2011. Differential Privacy. Encyclopedia of

Cryptography and Security. Access the document

Konečný, J., McMahan, H. B., Ramage, D., & Richtárik, P.

2016. Federated Optimization: Distributed Machine

Learning for On-Device Intelligence. arXiv preprint

arXiv:1610.02527.

Li, T., Sahu, A. K., Talwalkar, A., & Smith, V. 2020.

Federated Learning: Challenges, Methods, and Future

Directions. IEEE Signal Processing Magazine, 37(3),

50-60.

Mach, P., & Becvar, Z. 2017. Mobile Edge Computing: A

Survey on Architecture and Computation Offloading.

IEEE Communications Surveys & Tutorials, 19(3),

third quarter 2017.

McMahan, H. B., et al. 2017. Communication-Efficient

Learning of Deep Networks from Decentralized Data.

Proceedings of AISTATS.

Shaheen, M., Farooq, M. S., & Umer, T. 2024. AI-

empowered mobile edge computing: inducing balanced

federated learning strategy over edge for balanced data

and optimized computation cost. Journal of Cloud

Computing, 13(52).

Wang, S., Tuor, T., Salonidis, T., Leung, K. K., Makaya,

C., He, T., & Chan, K. 2021. Adaptive Federated

Learning in Resource Constrained Edge Computing

Systems. IEEE Journal on Selected Areas in

Communications, 37(6), 1205-1221. IEEE.

Ye, Y., Li, S., Liu, F., Tang, Y., & Hu, W. 2020. EdgeFed:

Optimized federated learning based on edge

computing. IEEE Access, 8, 209191-209198.

Zhou, Z., Chen, X., Li, E., Zeng, L., Luo, K., & Zhang, J.

2020. Edge Intelligence: Paving the Last Mile of

Artificial Intelligence with Edge Computing.

Proceedings of the IEEE, 107(8), 1738-1762. IEEE.

Optimizing Privacy and Processing: Navigating Federated Learning in the Era of Edge Computing

707