Stock Price Prediction Based on CNN, LSTM and CNN-LSTM Model

Yufei Wang

Stony Brook Institute, Anhui University, Longhe Campus, Hefei, 230000, China

Keywords: Stock Prediction, CNN Model, LSTM Model, CNN-LSTM Hybrid Model.

Abstract: Stock investments have long been recognized for their high potential returns and commensurate risk. CNN and LSTM models each perform well in data prediction, but each has inherent limitations. To overcome these limitations, this research proposes a composite CNN-LSTM model. The paper first selects two different stocks for evaluation and uses the individual CNN and LSTM models to predict their prices. It then constructs the hybrid model. CNN layers extract spatial features, and these features are transformed into a one-dimensional vector. The vector is fed into the LSTM layer to exploit its ability to handle sequence data, and the LSTM produces the model's prediction. The final phase of the study compares the predictive performance of the models. The results show that the CNN-LSTM model inherits the advantages of the two individual models and is both highly stable and highly efficient when predicting large stock datasets. This improvement is particularly notable when compared with the single CNN and LSTM models, underscoring the efficacy of integrating these two distinct computational approaches into a unified predictive framework.

1 INTRODUCTION

With high risk and high returns, stocks have always been popular and have become a favorite form of investment. The stock market is a significant and volatile component of the financial market, and it is a highly complex and uncertain field. The high volatility of stocks on the exchange poses a challenge to traditional quantitative trading strategies (Kong et al., 2024). When forecasting stocks, investors should not only build models to forecast stock data. They should also consider macroeconomic factors, company fundamentals, market sentiment and other multi-dimensional information to analyze the dynamics of the stock market comprehensively. With the advent of big data analytics, deep learning has shown great potential to surpass traditional machine learning in data prediction (Hu et al., 2019). Researchers have used different approaches to handle enormous amounts of stock data. Neural network models, random forest models, deep learning models and time series models are now widely used. These approaches have produced stock trend forecasting models with higher accuracy than single-model forecasts (Zhang, 2023). However, researchers have found that such models often fail to predict stock prices accurately. It is therefore particularly important to construct a model that is scientifically sound, accurate and stable. This paper introduces two widely used models, the CNN model and the LSTM model. It then fuses them into a CNN-LSTM model and compares the performance of all three.

Wang, Y.

Stock Price Prediction Based on CNN, LSTM and CNN-LSTM Model.

DOI: 10.5220/0012982600004601

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Innovations in Applied Mathematics, Physics and Astronomy (IAMPA 2024), pages 22-30

ISBN: 978-989-758-722-1

Proceedings Copyright © 2024 by SCITEPRESS – Science and Technology Publications, Lda.

Convolutional Neural Networks (CNNs) have a great advantage in feature extraction because their convolution layers extract features very effectively. A CNN shares each convolution kernel across all positions of its input, so it can also handle high-dimensional data well. In addition, the CNN model runs efficiently, which is a great advantage when dealing with large datasets. However, the disadvantages of CNNs are also obvious. For example, the pooling layer may ignore the correlation between local and global features, which can cause important information to be lost. In short, CNN models have several advantages for stock prediction: strong feature extraction, the ability to handle time series data, and higher prediction accuracy in some cases. However, they demand a large and diverse dataset and require model complexity to be balanced. They may also suffer from overfitting and other shortcomings.
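The kernel-sharing argument above can be made concrete by counting parameters. The sketch below assumes a hypothetical input of a 60-day window with 5 price/volume features, mapped to 32 channels. It compares a 1-D convolution with kernel size 3 against a fully connected layer that produces the same number of outputs. The function names are illustrative only.

```python
def conv1d_params(kernel_size: int, in_channels: int, out_channels: int) -> int:
    """Weights + biases of a 1-D convolution; independent of the sequence length."""
    return kernel_size * in_channels * out_channels + out_channels


def dense_params(in_features: int, out_features: int) -> int:
    """Weights + biases of a fully connected layer (one weight per input-output pair)."""
    return in_features * out_features + out_features


# Hypothetical setting: 60-day window, 5 features per day, 32 output channels.
window, features, channels = 60, 5, 32

# The convolution reuses one small kernel at every position of the window.
shared = conv1d_params(kernel_size=3, in_channels=features, out_channels=channels)

# A dense layer producing the same 58 positions x 32 channels needs its own
# weight for every (input value, output value) pair.
unshared = dense_params(window * features, (window - 3 + 1) * channels)

print(shared)    # 512
print(unshared)  # 558656
```

The convolution's parameter count does not grow with the window length. This is why kernel sharing keeps CNNs tractable on long or high-dimensional inputs.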

The Long Short-Term Memory (LSTM) model is a variant of the RNN. Its forget gate and memory gate allow it to remember and use past information. In a traditional RNN, by contrast, earlier information fades away over time. The LSTM is therefore well suited to long sequential data and has high practical value for predicting stock data. Although many scholars regard the LSTM highly, it still has some defects. First, the LSTM model is complex and takes a long time to run, because its four main parts (memory cells, input gates, forget gates and output gates) involve complex calculations. Second, the LSTM can overfit, because it over-memorizes and over-uses past information. In short, the LSTM model handles long-term dependencies well, is flexible and predicts stocks with high accuracy. However, it also suffers from long computation time and high model complexity.
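The cost of the gates can be seen from a parameter count under the standard LSTM formulation. In that formulation, the forget gate, input gate, output gate and candidate cell state each have their own weight matrix over [h_{t-1}, x_t] and their own bias vector. The sizes used below (5 input features, 50 hidden units) are hypothetical.

```python
def simple_rnn_params(input_dim: int, hidden: int) -> int:
    """One weight matrix over [h_{t-1}, x_t] plus one bias vector."""
    return hidden * (hidden + input_dim) + hidden


def lstm_params(input_dim: int, hidden: int) -> int:
    """Forget gate, input gate, output gate and candidate state: four such blocks."""
    return 4 * simple_rnn_params(input_dim, hidden)


print(simple_rnn_params(5, 50))  # 2800
print(lstm_params(5, 50))        # 11200
```

With the same hidden size, an LSTM layer has four times as many parameters as a plain RNN layer. It also performs roughly four times the matrix work per time step.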

This paper holds that the CNN and LSTM models each have their own advantages and disadvantages in stock prediction. CNNs are usually more computationally efficient, which gives them an advantage on large-scale datasets. However, a CNN's convolution kernel usually has a fixed size, which limits how far back in the sequence each layer can look. As a result, CNNs may be less effective than LSTMs at capturing long-term dependencies. LSTMs capture and model long-term dependencies through their gating mechanism, so they perform well on sequential data with long-term dependencies. However, an LSTM must maintain an internal state at every time step and process the steps one after another. This makes it computationally more complex and time-consuming than a CNN.
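The fixed-kernel limitation can be quantified with the standard receptive-field recursion for stacked 1-D layers. Each layer widens the visible window by (kernel - 1) times the product of the strides of the layers below it. The layer stack in the example is hypothetical and is not taken from the paper.

```python
from typing import List, Tuple


def receptive_field(layers: List[Tuple[int, int]]) -> int:
    """Number of consecutive input steps one output value can 'see'.

    layers: (kernel_size, stride) for each conv/pool layer, input side first.
    """
    field, jump = 1, 1
    for kernel, stride in layers:
        field += (kernel - 1) * jump  # widen by the kernel span at the current resolution
        jump *= stride                # later layers move in larger input steps
    return field


print(receptive_field([(3, 1)]))                  # 3
print(receptive_field([(3, 1), (2, 2), (3, 1)]))  # 8
```

Even with a 60-day input window, a conv3 -> pool2 -> conv3 stack lets each output feature depend on only 8 consecutive days. An LSTM's recurrent state, by contrast, can in principle carry information across the whole window.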

This paper explores and compares the capabilities of CNNs and LSTMs in stock prediction, in order to address their limitations and the differences in their performance. Both models have shortcomings in stock prediction, which calls for a deeper comparative analysis of their nuanced performance characteristics. Beyond comparing the individual CNN and LSTM models, this paper also examines the CNN-LSTM fusion model to better understand the performance differences among these methods. Through this comparative analysis, the paper seeks to provide insights into how hybrid approaches can improve the accuracy and efficiency of stock prediction models.

In short, both CNN and LSTM models have shortcomings in stock prediction, and their performance differences need further comparative analysis. This paper therefore adds the CNN-LSTM fusion model to the comparison.

2 METHOD

First, this paper constructs the CNN, LSTM and CNN-LSTM models, uses them to predict two stock datasets of different sizes, and visualizes the prediction results. Second, it introduces several evaluation metrics to assess each model. Finally, it compares the models, analyzes why their results differ, and offers appropriate advice to investors.

2.1 Model Construction

CNN Model

CNN is a well-developed technology in text classification, natural language processing and image recognition. It is recognized for strong feature extraction in both the spatial and temporal dimensions of data (LeCun et al., 1989). A CNN usually consists of an input layer, a convolution layer, ReLU, a pooling layer, a flatten (rasterization) layer, a fully connected layer, ReLU and an output layer (Figure 1). The convolution layer, ReLU and pooling layer can be stacked and reused, and together they form the core structure of the CNN. The input layer receives multi-dimensional data. A convolution layer made up of several convolution kernels then performs convolution operations on this input to extract features. After convolution, the pooling layer reduces the feature dimension. Because of its local invariance, the pooling layer also improves network stability and reduces overfitting (Qi, 2024). It further helps the model capture key features in the stock data and ignore unimportant details. The fully connected layer at the end of the CNN integrates the features extracted by the previous layers. It flattens the feature maps output by the convolution and pooling layers and applies a linear transformation using a weight matrix and bias terms. Finally, the activation function produces the final forecast (Auntie and Chen, 2023).
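The layer sequence described above can be traced on a toy univariate price series. Under simplifying assumptions, the sketch applies convolution, ReLU, max pooling, flattening and a single linear output. The kernels and output weights are hand-picked, hypothetical values rather than trained ones. The sketch also collapses the paper's "fully connected layer, ReLU, output layer" tail into a single linear output unit, which is common for regression.

```python
from typing import List

Series = List[float]


def conv1d(x: Series, kernels: List[Series], biases: Series) -> List[Series]:
    """'Valid' 1-D convolution (cross-correlation, as in deep-learning libraries).
    Returns one output channel per kernel."""
    out = []
    for kernel, b in zip(kernels, biases):
        k = len(kernel)
        out.append([sum(w * x[t + j] for j, w in enumerate(kernel)) + b
                    for t in range(len(x) - k + 1)])
    return out


def relu(channels: List[Series]) -> List[Series]:
    return [[max(0.0, v) for v in ch] for ch in channels]


def max_pool(channels: List[Series], size: int = 2) -> List[Series]:
    """Non-overlapping max pooling; a trailing incomplete block is dropped."""
    return [[max(ch[i:i + size]) for i in range(0, len(ch) - size + 1, size)]
            for ch in channels]


def flatten(channels: List[Series]) -> Series:
    return [v for ch in channels for v in ch]


def dense(x: Series, weights: Series, bias: float) -> float:
    """Linear output unit: weights . x + bias."""
    return sum(w * v for w, v in zip(weights, x)) + bias


prices = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
kernels = [[-1.0, 1.0], [0.5, 0.5]]  # day-over-day change, two-day average
features = flatten(max_pool(relu(conv1d(prices, kernels, [0.0, 0.0]))))
print(features)                                   # [1.0, 1.0, 2.5, 4.5]
print(dense(features, [0.25, 0.25, 0.25, 0.25], 0.0))  # 2.25
```

In a real model the kernels and output weights are learned by backpropagation, and several conv/ReLU/pool blocks are usually stacked before flattening.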


Figure 1: CNN model structure (Picture credit: Original).

LSTM

Traditional recurrent neural networks suffer from exploding and vanishing gradients, and the LSTM was created to solve this problem (Luo et al., 2024). The LSTM (Long Short-Term Memory) network is a special kind of RNN (Recurrent Neural Network). Compared with traditional RNNs, it handles long sequence problems effectively by introducing memory cells, input gates, output gates and forget gates. As a result, the LSTM is better suited to processing and forecasting significant events that are separated by long intervals in a time series (Liu et al., 2024).

Forget gates and memory gates (input gates) are unique to the LSTM and are also its strengths. Some "information" about the LSTM's previous state may become "outdated" with the passage of time. The forget gate selectively forgets specific elements of the previous cell state, so that excessive memory does not impair the network's processing of the current input. The forget gate is computed by the following equation. Here f_t is the output vector of a sigmoid layer, h_{t-1} is the previous hidden state, x_t is the current input, and W_f and b_f are the gate's weight matrix and bias:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{1}$$

Whenever a new input value arrives, the LSTM first decides which memories to forget, based on the new input and the output of the previous time step. The memory gate then decides whether to incorporate the current data into the cell state. To do this, the memory gate performs two crucial functions. The first is to extract the useful information from the current input as a candidate cell state:

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \tag{2}$$

The second is to filter and weight the extracted information, which is computed as:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \tag{3}$$
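Equations (1) to (3) can be combined into a single LSTM time step. The sketch below is written with plain Python lists. The cell-state update C_t = f_t * C_{t-1} + i_t * C̃_t and the output gate come from the standard LSTM formulation and are not written out in the text above. The parameter layout (a dict of (W, b) pairs keyed "f", "c", "i", "o") is a hypothetical choice for illustration.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, v: Vector, b: Vector) -> Vector:
    """W . v + b, with one row of W per hidden unit."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x_t: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, Tuple[Matrix, Vector]]) -> Tuple[Vector, Vector]:
    v = h_prev + x_t                                       # concatenation [h_{t-1}, x_t]
    W_f, b_f = params["f"]
    W_c, b_c = params["c"]
    W_i, b_i = params["i"]
    W_o, b_o = params["o"]
    f_t = [sigmoid(z) for z in affine(W_f, v, b_f)]        # Eq. (1): forget gate
    c_tilde = [math.tanh(z) for z in affine(W_c, v, b_c)]  # Eq. (2): candidate state
    i_t = [sigmoid(z) for z in affine(W_i, v, b_i)]        # Eq. (3): input ("memory") gate
    # Standard cell update: keep part of the old state, add part of the candidate.
    c_t = [f * c + i * ct for f, c, i, ct in zip(f_t, c_prev, i_t, c_tilde)]
    # Standard output gate and hidden state.
    o_t = [sigmoid(z) for z in affine(W_o, v, b_o)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


# Toy case: 1 input feature, 1 hidden unit, every weight and bias zero,
# so each gate outputs sigmoid(0) = 0.5 and the candidate is tanh(0) = 0.
zero: Tuple[Matrix, Vector] = ([[0.0, 0.0]], [0.0])
params = {name: zero for name in ("f", "c", "i", "o")}
h, c = lstm_step([0.3], [0.0], [2.0], params)
print(c)  # [1.0]  (the forget gate kept half of the old cell state 2.0)
```

Raising the forget-gate bias b_f pushes f_t toward 1. This is how a trained LSTM keeps information over many steps.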

In practice, the LSTM model has some drawbacks. First, its computational complexity is high. The LSTM adds a gating mechanism and a long-term memory mechanism to the traditional RNN, which greatly increases its computation, so it takes a long time to run on large datasets. Second, when the LSTM is used for time series prediction, its predicted curve tends to lag behind the true curve.
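The lag can be measured rather than judged by eye. One simple approach, sketched here as an assumption and not as the paper's procedure, is to find the shift k that best aligns the predictions with earlier true values, i.e. pred[t] ≈ true[t - k]. A "tomorrow equals today" persistence forecast serves as an illustrative worst case, and the prices are made up.

```python
from typing import Sequence


def best_lag(true: Sequence[float], pred: Sequence[float], max_lag: int = 5) -> int:
    """Shift k (in time steps) minimising mean |true[t - k] - pred[t]|.

    k = 0 means no lag; k = 1 means the predictions trail the truth by one step.
    Requires max_lag < len(pred).
    """
    def mean_abs_diff(k: int) -> float:
        pairs = [(true[t - k], pred[t]) for t in range(k, len(pred))]
        return sum(abs(a - b) for a, b in pairs) / len(pairs)

    return min(range(max_lag + 1), key=mean_abs_diff)


true = [10.0, 11.0, 13.0, 12.0, 14.0, 15.0, 13.0, 16.0]  # hypothetical prices
naive = [true[0]] + true[:-1]                           # persistence forecast
print(best_lag(true, naive))  # 1
```

A model whose predictions align best at k = 1 is largely repeating the previous day's price. That pattern matches the lag visible in the LSTM curves.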

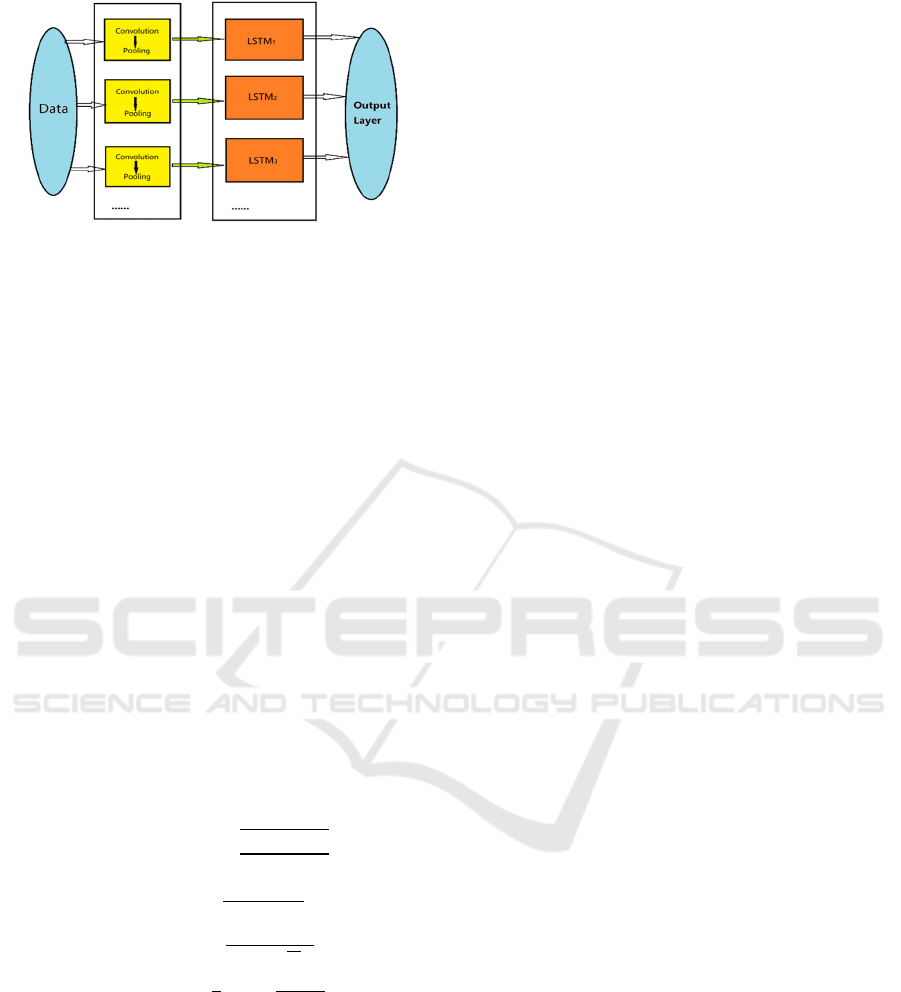

CNN-LSTM

The CNN has excellent feature extraction capability, so in this model it serves as the feature extractor. The extraction procedure follows Hua's research (Hua, 2021). The data are first fed in through the input layer. Multiple convolutional layers then extract the spatial features of the input data. Pooling layers (e.g., max-pooling) between the convolutional layers reduce the spatial dimensions of the data while retaining the important features. An activation function (ReLU) after each convolutional layer increases the nonlinearity of the model. The output of the CNN layers is then reshaped into a form suitable for LSTM input and passed to the LSTM layer for processing (Zhou, 2022). The LSTM, in turn, uses its gating units to preserve and forget time series features, which improves prediction accuracy (Figure 2). Finally, a fully connected layer after the LSTM layer serves as the output layer and produces the prediction results. The CNN-LSTM model combines the advantages of CNN and LSTM networks. It extracts time-domain features while accounting for the effects of multiple influencing factors, so that its predictions are accurate as well as fast and efficient (Liu et al., 2024; Hua, 2021).
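The reshaping step is easiest to follow by tracking tensor shapes. One common choice, assumed here, keeps the convolution output's time axis. Each pooled position becomes one LSTM time step, and the filter activations at that position become its features. The kernel size 3, pooling size 2 and 64 filters are hypothetical and are not the paper's settings.

```python
from typing import Tuple


def conv_out_len(length: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output length of a 1-D convolution."""
    return (length + 2 * padding - kernel) // stride + 1


def pool_out_len(length: int, size: int) -> int:
    """Output length of non-overlapping pooling (stride == size, remainder dropped)."""
    return length // size


def cnn_lstm_input_shape(look_back: int, filters: int, kernel: int, pool: int) -> Tuple[int, int]:
    """(time_steps, features_per_step) of the tensor passed from the CNN to the LSTM."""
    steps = pool_out_len(conv_out_len(look_back, kernel), pool)
    return steps, filters


print(cnn_lstm_input_shape(60, filters=64, kernel=3, pool=2))  # (29, 64)
print(cnn_lstm_input_shape(10, filters=64, kernel=3, pool=2))  # (4, 64)
```

Under these assumed settings, a 60-day window leaves the LSTM 29 time steps, but a 10-day window leaves only 4. This shows how a short input window can starve the LSTM stage of sequence information.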

Table 1: Stock data.

| Date | Open | High | Low | Close | Adj Close | Volume |
|---|---|---|---|---|---|---|
| 2010/2/1 | 6.870357 | 7 | 6.832143 | 6.954643 | 5.887797 | 7.5E+08 |
| 2010/2/2 | 6.996786 | 7.01 | 6.906429 | 6.995 | 5.921964 | 6.98E+08 |
| 2010/2/3 | 6.970357 | 7.15 | 6.943571 | 7.115357 | 6.023856 | 6.15E+08 |
| 2010/2/4 | 7.026071 | 7.084643 | 6.841786 | 6.858929 | 5.806765 | 7.58E+08 |


Figure 2: CNN-LSTM model structure (Picture credit: Original).

3 RESULTS

3.1 Data Selection

Accurate data is essential before research begins. This paper uses two datasets. The first is Apple's stock price from January 8, 2010 to April 5, 2024, and the second is Nike's stock price from January 8, 2022 to April 5, 2024. Both were obtained from Yahoo Finance. In addition to the closing price, they contain the adjusted closing price, the opening price, the trading volume, and the highest and lowest prices of the day. Table 1 shows part of Apple's data.
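Data in the layout of Table 1 can be loaded with Python's standard `csv` module. The sketch below assumes the data are stored as CSV with exactly the column names of Table 1. The sample rows are copied from Table 1, so Volume keeps its rounded scientific notation. The date pattern `%Y/%m/%d` matches Table 1 and would need adjusting for files that use other date formats. `load_prices` is a hypothetical helper name.

```python
import csv
import io
from datetime import datetime

# First rows of Table 1, written as CSV (values copied from the table).
SAMPLE = """Date,Open,High,Low,Close,Adj Close,Volume
2010/2/1,6.870357,7,6.832143,6.954643,5.887797,7.5E+08
2010/2/2,6.996786,7.01,6.906429,6.995,5.921964,6.98E+08
2010/2/3,6.970357,7.15,6.943571,7.115357,6.023856,6.15E+08
2010/2/4,7.026071,7.084643,6.841786,6.858929,5.806765,7.58E+08
"""

NUMERIC = ("Open", "High", "Low", "Close", "Adj Close", "Volume")


def load_prices(text: str) -> list:
    """Parse CSV text into date-sorted dicts with float-valued numeric columns."""
    rows = []
    for rec in csv.DictReader(io.StringIO(text)):
        row = {"date": datetime.strptime(rec["Date"], "%Y/%m/%d").date()}
        row.update({name: float(rec[name]) for name in NUMERIC})
        rows.append(row)
    rows.sort(key=lambda r: r["date"])  # oldest first, as required for windowing
    return rows


rows = load_prices(SAMPLE)
closes = [r["Close"] for r in rows]
print(len(rows), closes[-1])  # 4 6.858929
```

For a real file, `io.StringIO(text)` would be replaced by `open(path, newline="")`.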

3.2 Evaluation Indicators

This section uses four indicators to evaluate the prediction accuracy of the stock price prediction models: Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), R-squared (R²) and Mean Absolute Percentage Error (MAPE). Table 2 reports MAPE in its symmetric form, SMAPE. Their formulas are as follows:

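$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \tag{4}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left\lvert y_i - \hat{y}_i\right\rvert \tag{5}$$

$$R^2 = 1 - \frac{\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^2} \tag{6}$$

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{i=1}^{n}\left\lvert \frac{y_i - \hat{y}_i}{y_i} \right\rvert \tag{7}$$

where y_i is the true closing price, ŷ_i is the predicted price, ȳ is the mean of the true values and n is the number of predictions.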

Both RMSE and MAE measure the deviation between the predicted and true values, so smaller values indicate a better model. R² generally ranges from 0 to 1, although it becomes negative when a model fits worse than simply predicting the mean. The better the model explains the variation in the true values y, the closer R² is to 1. Conversely, the closer R² is to 0, the worse the model fits the data. MAPE is expressed as a percentage and indicates the magnitude of the model's error, so the closer MAPE is to 0, the more accurate the model (Zhang, 2021).
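Equations (4) to (7) translate directly into code. The sketch below uses the standard library only. It adds one common SMAPE definition, with denominator (|y_i| + |ŷ_i|) / 2, because Table 2 reports SMAPE. The paper does not state which SMAPE variant it used, so that function is an assumption. The four-point price series is made up for illustration.

```python
import math
from typing import Sequence


def rmse(y: Sequence[float], p: Sequence[float]) -> float:  # Eq. (4)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, p)) / len(y))


def mae(y: Sequence[float], p: Sequence[float]) -> float:   # Eq. (5)
    return sum(abs(a - b) for a, b in zip(y, p)) / len(y)


def r2(y: Sequence[float], p: Sequence[float]) -> float:    # Eq. (6)
    mean = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, p))
    ss_tot = sum((a - mean) ** 2 for a in y)
    return 1 - ss_res / ss_tot


def mape(y: Sequence[float], p: Sequence[float]) -> float:  # Eq. (7), in percent
    return 100 * sum(abs((a - b) / a) for a, b in zip(y, p)) / len(y)


def smape(y: Sequence[float], p: Sequence[float]) -> float:
    """Assumed SMAPE variant: |y - p| / ((|y| + |p|) / 2), in percent."""
    return 100 * sum(abs(a - b) / ((abs(a) + abs(b)) / 2) for a, b in zip(y, p)) / len(y)


y_true = [100.0, 102.0, 101.0, 105.0]  # hypothetical closing prices
y_pred = [101.0, 101.0, 103.0, 104.0]
print(round(rmse(y_true, y_pred), 4))  # 1.3229
print(mae(y_true, y_pred))             # 1.25
print(r2(y_true, y_pred))              # 0.5
print(round(mape(y_true, y_pred), 4), round(smape(y_true, y_pred), 4))
```

RMSE squares the errors, so it weights the single 2-point miss more heavily than MAE does. That is why RMSE is above MAE here.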

In addition to these four metrics, this paper records the running time of each model on both datasets, measured in seconds.
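The paper does not say exactly which steps its running times cover, for example training plus prediction or prediction only. A minimal way to record such a time in Python is to wrap the step in `time.perf_counter()`, a monotonic, high-resolution clock. The `timed` helper and the placeholder workload below are hypothetical.

```python
import time
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")


def timed(fn: Callable[[], T]) -> Tuple[T, float]:
    """Run fn() once and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn()
    return result, time.perf_counter() - start


# Placeholder workload standing in for "fit the model and predict".
result, seconds = timed(lambda: sum(i * i for i in range(100_000)))
print(f"{seconds:.2f}s")
```

Fixing random seeds and averaging over several runs would make times such as those in Table 2 more comparable across models.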

3.3 Apple’s Stock Data

Apple's stock data contains 3584 records, which makes it the larger dataset. When building the models, a parameter look_back = n must be set, meaning that the next day's closing price is predicted from the data of the previous n days. For Apple's data, all three models use look_back = 60. (While tuning the models, several values of look_back were tried, and the models were found to fit the data well with look_back = 60.)
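The look_back setting determines how the series is cut into training samples. A minimal sketch of that windowing, assuming a single univariate closing-price series, follows. Each input holds the n values before day t, and the target is the value on day t. `make_windows` is a hypothetical helper name.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], look_back: int) -> Tuple[List[List[float]], List[float]]:
    """X[i] holds the look_back values immediately preceding the target y[i]."""
    X, y = [], []
    for t in range(look_back, len(series)):
        X.append(list(series[t - look_back:t]))
        y.append(series[t])
    return X, y


X, y = make_windows([1.0, 2.0, 3.0, 4.0, 5.0], look_back=3)
print(X)  # [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0]]
print(y)  # [4.0, 5.0]
```

A series of N points yields N - look_back samples before any train/test split. That gives 3584 - 60 = 3524 windows for Apple with look_back = 60, and 562 - 10 = 552 for Nike with look_back = 10.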

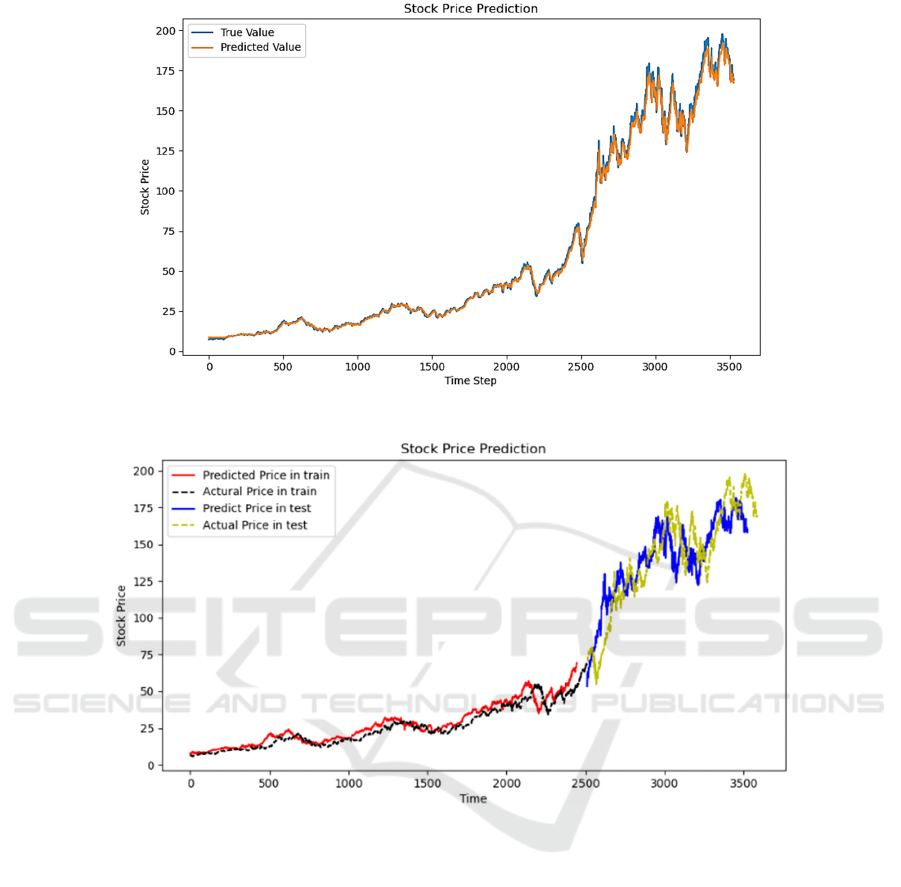

3.3.1 CNN

The CNN model demonstrates its strength in handling long time series (Figure 3). The prediction curve shows that the true and predicted values are very close to each other, with only small errors at local extreme points.

3.3.2 LSTM

The prediction curve in Figure 4 shows that the LSTM model also handles long time series well. However, the LSTM predictions still lag, i.e., the predicted values lag behind the true values.


Figure 3: CNN prediction results for Apple's stock (Picture credit: Original).

Figure 4: LSTM prediction results for Apple's stock (Picture credit: Original).

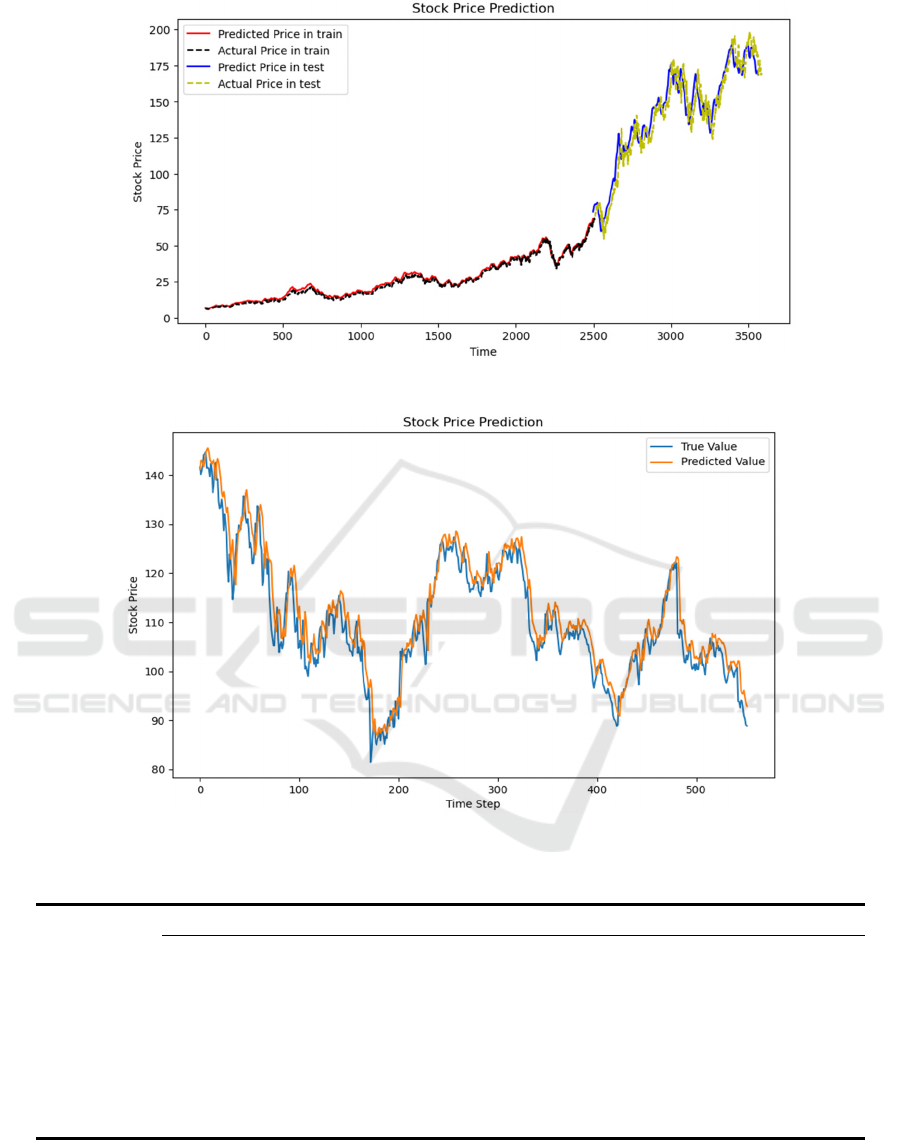

3.3.3 CNN-LSTM

Figure 5 shows the prediction results of the CNN-LSTM model. According to the prediction curve, the composite CNN-LSTM model performs better than either the CNN model or the LSTM model alone on the larger time series. This paper argues that the CNN layers effectively reduce noise when extracting features. The LSTM model alone processes all of the raw input data. The CNN-LSTM model instead uses the CNN to extract features first, which greatly reduces the error of the results and removes the lag in the predictions.

3.4 Nike’s Stock Data

Compared with Apple's stock data, Nike's stock data is a smaller dataset with only 562 records. For Nike's data, all three models use look_back = 10. (While tuning the models, several values of look_back were tried, and the models were found to fit the data well with look_back = 10.)

3.4.1 CNN

As Figure 6 shows, the CNN model also performs well on the small dataset. The fit is not as good as on Apple's dataset, but the true and predicted values remain very close to each other.


Figure 5: CNN-LSTM prediction results for Apple's stock (Picture credit: Original).

Figure 6: CNN prediction results for Nike's stock (Picture credit: Original).

Table 2: Summary of evaluation indicators.

| Dataset | Method | RMSE | MAE | R² | SMAPE | Time (s) |
|---|---|---|---|---|---|---|
| Apple | CNN | 6.6710 | 1.7869 | 0.9979 | 4.3039% | 7.97 |
| Apple | LSTM | 43.4755 | 34.3995 | 0.7315 | 11.1565% | 92.87 |
| Apple | CNN-LSTM | 45.4162 | 35.7618 | 0.7174 | 11.5060% | 7.91 |
| Nike | CNN | 11.6657 | 2.5883 | 0.9244 | 2.3619% | 2.44 |
| Nike | LSTM | 9.5071 | 7.3367 | 0.2957 | 5.0222% | 6.35 |
| Nike | CNN-LSTM | 9.0999 | 7.0301 | 0.6696 | 2.8232% | 1.72 |


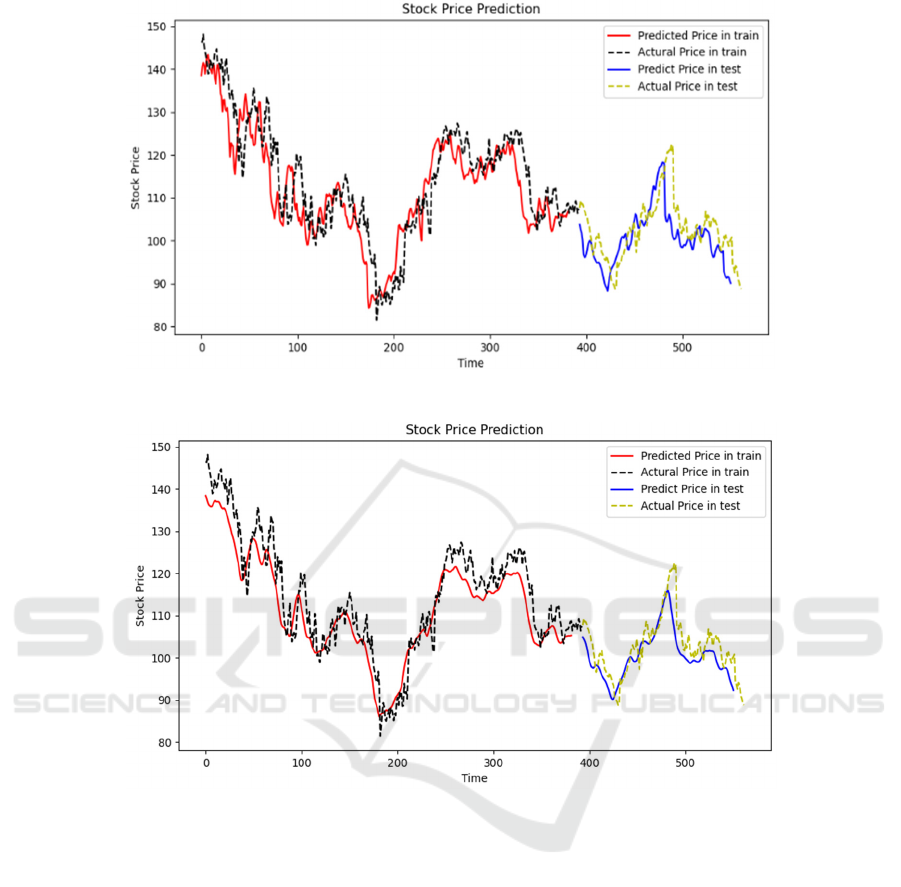

Figure 7: LSTM prediction results for Nike's stock (Picture credit: Original).

Figure 8: CNN-LSTM prediction results for Nike's stock (Picture credit: Original).

3.4.2 LSTM

Figure 7 shows the prediction results of the LSTM model. The prediction curves show that the LSTM model remains stable on the small dataset, but its lag is still obvious.

3.4.3 CNN-LSTM

The prediction results (Figure 8) show that the CNN-LSTM model is not very effective on the small dataset. The CNN extracts features and passes them to the LSTM layer, which removes the lag of the LSTM model's predictions. At the same time, however, the CNN extracts too few features, so the LSTM is insufficiently trained, and obvious errors appear in the final prediction results.

3.5 Summary of Evaluation Indicators

By comparing the evaluation metrics of the three models (Table 2), this paper draws the following conclusions:

(1) Overall, the CNN model outperforms the LSTM model in stock prediction. First, the CNN model fits better on both large and small stock datasets, and its predictions do not lag as the LSTM model's do. Second, the CNN model takes far less time to predict stocks. It is especially efficient and accurate on large stock datasets. In the experiments above, the CNN model took only 7.97 seconds to predict Apple's stock data, whereas the LSTM model took 92.87 seconds.

(2) The CNN-LSTM model fits large stock datasets very well. It removes the lag in the LSTM model's predictions and is also highly efficient. As the running times in Table 2 show, it took only 7.91 seconds to process Apple's stock data. However, the CNN-LSTM model does not perform well on small stock datasets. Its prediction curve fits poorly and shows significant errors. On small stock data it still runs quickly and shows no lag, but its prediction error is relatively large. This paper's analysis suggests why. The CNN-LSTM model feeds the features extracted by the CNN's convolutional layers into the LSTM layer. The CNN's output may therefore lose information in the original data that is important to the LSTM, which can degrade the fusion model's performance.

(3) The results show that the CNN model offers good accuracy, stability and short running time when predicting stock prices. The CNN-LSTM model performs well on large stock datasets, being both efficient and accurate. On small stock datasets, however, it extracts too few features, which leads to poor fitting, so this paper considers the CNN-LSTM model less stable. The LSTM model's predicted curve deviates from the true curve, and its long running time is a further drawback. Nevertheless, this paper does not consider the LSTM and CNN-LSTM models less suitable than the CNN model for predicting stock prices. In machine learning and financial forecasting, performing well on a training set is not enough to show that a model works equally well in real-world applications. Both the LSTM and CNN-LSTM models account for the effect of the feature values on the target value (the closing price) and have long-term memory. Their predictions therefore have greater real-world value, whereas CNN models lack these properties. For this reason, this paper considers the predictions of the LSTM and CNN-LSTM models more valuable as a reference for investors.
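The running-time comparison in conclusions (1) and (2) can be expressed as speed-up factors relative to the LSTM. The sketch below only rearranges the times reported in Table 2. It uses no new measurements, and `speedup_over_lstm` is a hypothetical helper name.

```python
# Running times in seconds, copied from Table 2.
TIMES = {
    "Apple": {"CNN": 7.97, "LSTM": 92.87, "CNN-LSTM": 7.91},
    "Nike": {"CNN": 2.44, "LSTM": 6.35, "CNN-LSTM": 1.72},
}


def speedup_over_lstm(dataset: str, model: str) -> float:
    """How many times faster `model` ran than the LSTM on `dataset`."""
    return TIMES[dataset]["LSTM"] / TIMES[dataset][model]


for dataset in TIMES:
    for model in ("CNN", "CNN-LSTM"):
        print(f"{dataset}: {model} is {speedup_over_lstm(dataset, model):.1f}x faster than LSTM")
```

On Apple's larger dataset, both the CNN and the CNN-LSTM models ran about 11.7 times faster than the LSTM. On Nike's smaller dataset, the factors were about 2.6 for the CNN and 3.7 for the CNN-LSTM, so the LSTM's relative cost grows with dataset size in these experiments.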

4 CONCLUSION

Stock price prediction is a multifaceted and intricate endeavor influenced by a myriad of factors. Both CNN and LSTM models have demonstrated efficacy in this domain, but each has inherent limitations. To address this challenge, this study introduces a stock price prediction method based on a hybrid CNN-LSTM model. It combines the CNN's feature extraction strength with the LSTM's ability to process sequence data, and the resulting model achieves higher accuracy and greater stability. The experiments first selected two stock datasets of significantly different sizes, together with four evaluation metrics. Standalone CNN and LSTM models were then trained and evaluated on each dataset, and their predictions and evaluation metrics were computed. Next, the hybrid CNN-LSTM model was built, trained on the same datasets and evaluated with the same metrics. Finally, the prediction results of the three models were compared across the four evaluation metrics.

The analysis shows that the CNN model is fast, stable and accurate on both large and small stock datasets. The LSTM model, on the other hand, suffers from long running times, and its predicted curves deviate from the true curves. The CNN-LSTM model solves these two problems of the LSTM model. However, the features extracted by the CNN lose some important information about the original data, so the data processed by the LSTM layer are incomplete, which can degrade the fusion model's performance. Judging by the results, neither the LSTM model nor the CNN-LSTM model is as suitable as the CNN model for stock price prediction. Their predictions nevertheless have higher practical value, because these models use the feature values in training and have memory and forget gates.

Finally, this paper argues that the CNN-LSTM fusion model is suitable for predicting large stock datasets, because it is efficient, accurate and realistic in its applications. For investors, this paper holds that both the CNN and LSTM models are good models for predicting stocks. The CNN-LSTM fusion model, however, is the better choice for predicting stock data over long time series.

REFERENCES

Kong, Y., et al. Design of stock quantitative trading algorithms under deep reinforcement learning. Journal of Nanchang University (Science Edition), 2024, 48(01): 24-29+35.

Hu, Y., Luo, D., Hua, K., et al. A review and discussion on deep learning. Journal of Intelligent Systems, 2019, 14(1): 1-19.

Zhang, H. Research on short-term trend prediction method of stock based on deep learning. MA thesis, Beijing University of Posts and Telecommunications, 2023.

LeCun, Y., Boser, B., Denker, J. S., et al. Backpropagation applied to handwritten zip code recognition. Neural Computation, 1989, 1(4): 541-551.

Qi, L. Prediction of lithium-ion battery charge state based on CNN-LSTM. Electronic Quality, 2024, (01): 1-5.

Auntie X. Chen. A CNN-LSTM stock price prediction model based on Bayesian optimization. MA thesis, Lanzhou University, 2023. doi:10.27204/d.cnki.glzhu.2023.002202.

Luo, G., Wang, X., Dai, J. Randomized feature graph neural network model for IoT intrusion detection. Computer Engineering and Applications, 1-12.

Liu, Z., et al. A study on the rapid detection of total flavonoid content in Dendrobium officinale based on Raman spectroscopy combined with CNN-LSTM deep learning method. Spectroscopy and Spectral Analysis, 2024, 44(04): 1018-1024.

Hua, S. A CNN-LSTM PM2.5 concentration prediction model based on attention mechanism. MA thesis, Zhejiang University of Technology, 2021.

Zhou, Q. Application of CNN and LSTM in short-term stock price rise and fall prediction of cyclical stocks. MA thesis, Zhejiang University, 2022. doi:10.27461/d.cnki.gzjdx.2022.000387.

Zhang, Y. Design of stock price prediction and quantitative investment based on CNN-LSTM hybrid model. MA thesis, Huazhong University of Science and Technology, 2021.
