LiDAR-Based Object Recognition for Robotic Inspection of Power Lines

José Mário Nishihara de Albuquerque and Ronnier Frates Rohrich

Graduate School of Electrical Engineering and Computer Science,

Universidade Tecnológica Federal do Paraná (UTFPR), Curitiba, Brazil

Keywords:

LiDAR, Inspection, Autonomous Robot, Power Lines.

Abstract:

This article presents a novel technique using Light Detection and Ranging (LiDAR) sensors implemented in

an autonomous robot for the multimodal predictive inspection of high-voltage transmission lines (LaRa). The

method enhances the robot’s capabilities by providing vertical perception and classifying transmission-line

components using artificial-intelligence techniques. The LiDAR-based system focuses on analyzing

two-dimensional (2D) slices of objects, reducing the data volume, and increasing the computational efficiency.

Object classification was achieved by calculating the absolute differences within a 2D slice to create unique

signatures. When evaluated experimentally with a k-nearest neighbors (kNN) classifier on a Raspberry Pi on a

real robot, the system accurately detected objects such as dampers, wire markers, and insulators during linear

movement experiments. The results indicated that this approach significantly improves LaRa’s ability to

recognize power-line components, achieving high classification accuracy and exhibiting potential for advanced

autonomous inspection applications.

1 INTRODUCTION

The reliability and efficiency of power-line

infrastructure are critical to modern society,

necessitating regular inspection and maintenance

to prevent outages and ensure safety. Traditional

methods for inspecting power lines, which involve

manual inspections or the use of manned helicopters,

are labor-intensive, expensive, and often dangerous.

The advent of autonomous robotic systems offers

a promising alternative for performing detailed

inspections while reducing human risks and

operational costs.

Robotic systems offer unparalleled consistency

and precision, perform repetitive tasks without

fatigue, and operate in environments that are

hazardous or inaccessible to humans. By automating

the inspection process, these robots can conduct

frequent and thorough assessments and identify

potential issues before they escalate to critical failure

(Yang et al., 2020). This proactive approach not

only enhances the reliability of the power supply

but also significantly reduces maintenance costs and

minimizes downtime. Furthermore, the use of robots

can alleviate safety risks associated with manual

inspections, protect the well-being of maintenance

personnel, and ensure compliance with stringent

safety regulations.

Power-line inspections typically depend on

manual processes that are time-consuming,

labor-intensive, and dangerous. These methods usually

involve visual inspections conducted by personnel on

foot or using specialized vehicles (Chen et al., 2021).

Although they are effective, they are limited in their

ability to access hard-to-reach areas—particularly in

difficult terrain or adverse weather conditions. The

effectiveness of traditional visual inspection methods

relies heavily on the experience of the inspector,

which limits their reliability for comprehensive

integrity verification.

The emergence of autonomous robotic systems

has provided a transformative solution for these

challenges. Equipped with advanced sensing and

navigation capabilities, these robots can perform

detailed inspections of power lines, significantly

reducing the need for human intervention and

associated risks. Among the various available

sensing technologies, Light Detection and Ranging

(LiDAR) is the most promising. LiDAR systems use

laser pulses to measure distances with high precision

and create detailed three-dimensional (3D) maps of

the environment. When integrated with advanced

object-recognition algorithms, LiDAR-equipped

robots can assess the conditions of power lines,

insulators, and other critical elements with high

reliability (Zhang et al., 2022; Qin et al., 2018).

Nishihara de Albuquerque, J. M. and Rohrich, R. F.

LiDAR-Based Object Recognition for Robotic Inspection of Power Lines.

DOI: 10.5220/0012985800003822

In Proceedings of the 21st International Conference on Informatics in Control, Automation and Robotics (ICINCO 2024) - Volume 2, pages 197-204

ISBN: 978-989-758-717-7; ISSN: 2184-2809

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


The complexity of a power-line environment

presents unique challenges for object recognition.

Factors such as varying weather conditions,

dense vegetation, and the presence of multiple

overlapping objects necessitate robust and adaptable

recognition systems. Incorporating artificial

intelligence (AI) into LiDAR-based robotic systems

enhances their capability to recognize and classify

objects in complex power-line environments. AI

algorithms—particularly those based on machine

learning—can be trained on vast datasets to identify

various components and anomalies accurately. These

algorithms learn to discern subtle patterns and

features in LiDAR data, improving their accuracy

and reliability over time. By continuously updating

the models with new data, the system can adapt to

changing conditions and maintain high performance.

The combination of AI and LiDAR technology allows

the development of intelligent inspection systems

that not only detect issues but also predict potential

failures, facilitate timely interventions, and reduce

the likelihood of power outages.

This article presents an advanced technique for

inspecting power lines wherein LiDAR sensors

are used to accurately detect transmission-line

components. The method was implemented in

an autonomous robot for the multimodal predictive

inspection of high-voltage transmission lines (LaRa)

to enhance the capabilities of a multimodal inspection

sensor. The integration of LiDAR technology

provides vertical perception of the elements on

adjacent transmission lines. Object classification was

performed using various AI techniques with the aim

of identifying the most precise method for evaluating

actual transmission-line elements. The proposed

approach concentrates on examining a single 2D

cross-section of the object, which greatly minimizes

the amount of data and enhances computational

performance.

The remainder of this paper is organized as

follows. Section 2 discusses related work to clarify

the contributions of the present study. Section

3 describes the concept of the LaRa inspection

robot. Section 4 presents the proposed approach for

LiDAR-based object recognition and the experiments.

Finally, Section 5 presents conclusions.

2 RELATED WORK

The integration of LiDAR technology into robotic

inspection systems has attracted considerable

attention in recent years, with studies demonstrating

its potential to revolutionize power-line maintenance

(Alhassan et al., 2020). Korki et al. (2019)

discussed the challenges of using unmanned aerial

vehicles (UAVs) in power-line inspection and fault

detection, along with solutions. They presented three

conceptual designs that incorporate AI and efficient

sensors for high-precision fault detection. These

designs use thermal sensors and secure cloud-based

communication for data transfer.

LiDAR sensors are widely used in UAV

inspections of power lines to create detailed maps.

Chen et al. (2022) proposed a diffusion-coupled

convolutional neural network for real-time detection

of power transmission lines using UAV-borne LiDAR

data. Jenssen et al. (2018) addressed the limitations

of the current manual and helicopter-assisted

methods for power-line inspection, highlighting

concerns regarding cost, speed, and safety. Their

review covers existing research on automating

this process using UAVs, robots, and AI-driven

vision systems, emphasizing the requirement for

high accuracy. The approach they discuss focuses on

employing UAVs for inspection, utilizing optical

images as primary data, and leveraging deep learning

for analysis to advance autonomous vision-based

inspections in the power sector.

Paneque et al. (2022) discussed power-line

inspection using a reactive-quadrotor-based online

system. In contrast to traditional methods involving

two-stage processes (data collection and offline

analysis), this system constructs a real-time 3D map,

evaluates data quality on the fly, and adjusts flight

to enhance the resolution as needed. The use of

LiDAR sensors for UAV inspection of transmission

lines primarily focuses on creating maps for

subsequent segmentation and classification, utilizing

the sensor’s capability for 3D depth perception in

visual processing.

These studies highlight the transformative

potential of LiDAR technology for power-line

inspection and maintenance. They address critical

aspects, including fault detection, UAV integration,

advanced object recognition, multisensor fusion, and

real-time monitoring, laying a solid foundation for

further advancement. LiDAR sensors are typically

used for surface mapping. This study introduces

a novel approach involving LiDAR-based object

recognition for power-line inspection, which can be

integrated into a multimodal inspection approach as a

complementary component.

ICINCO 2024 - 21st International Conference on Informatics in Control, Automation and Robotics


3 LaRa: AUTONOMOUS ROBOT FOR MULTI-MODAL PREDICTIVE INSPECTION OF HIGH-VOLTAGE TRANSMISSION LINES

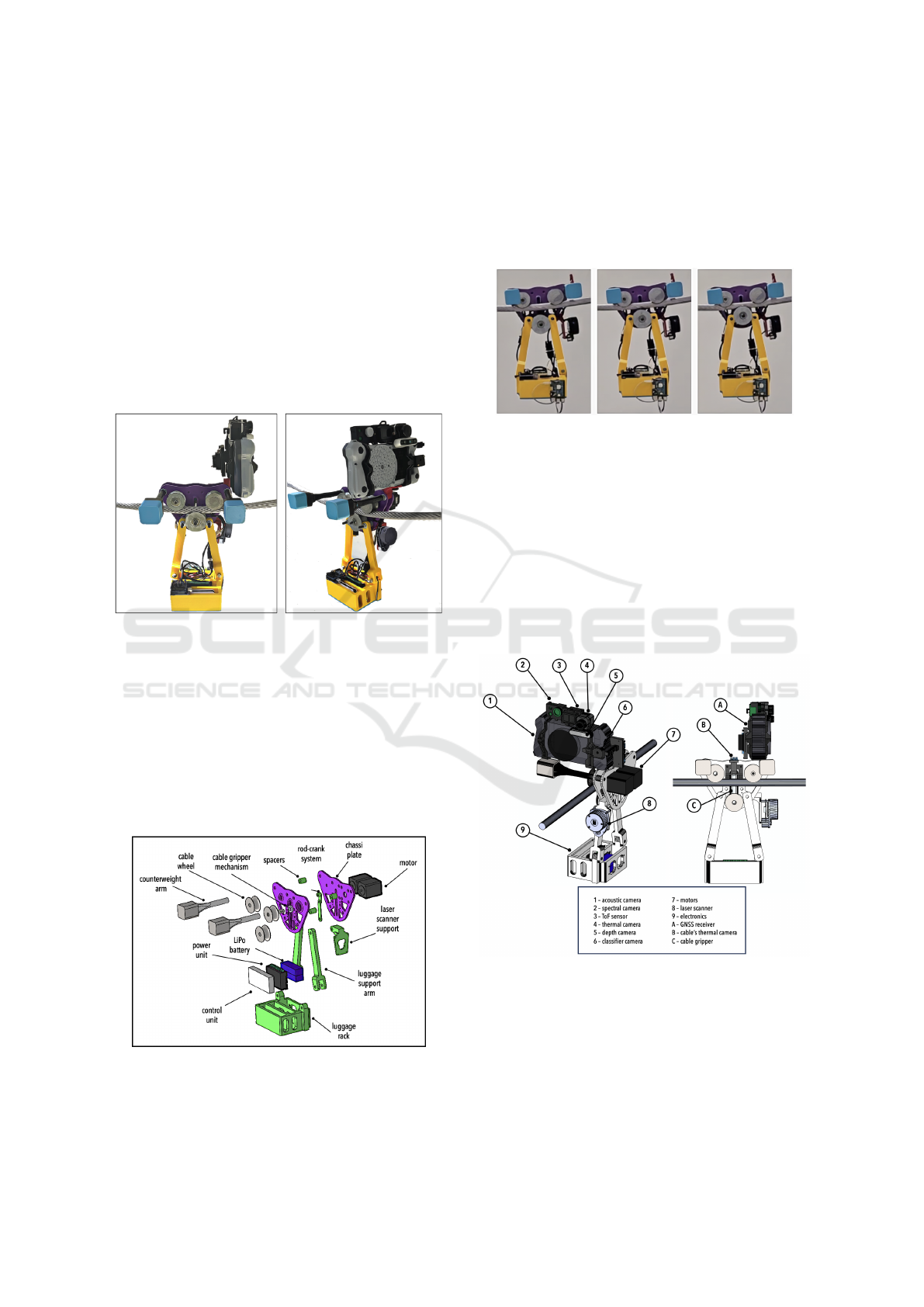

The inspection is performed autonomously using

a mobile robot that moves over electrical cables.

The autonomous robot for the multimodal predictive

inspection of high-voltage transmission lines (LaRa)

is designed to attach to the cable and move with

precision, carrying the multimodal inspection system,

as shown in Figure 1.

Figure 1: The Autonomous Robot for Multi-Modal Predictive Inspection of High-Voltage Transmission Lines.

Two wheels are used to ensure support on the

electrical cable: one wheel is free, and the other is

driven by a servomotor, as shown in Figure 2. The

third wheel is part of a connecting rod–crank system

that moves the non-actuated wheel toward the cable,

maintaining a clamping pressure similar to that of a

robotic claw. This wheel can also move linearly away

from the cable, allowing the robot to be removed and

perform obstacle suppression maneuvers.

Figure 2: Exploded view of LaRa robot.

The cable-gripper system is mounted on a

structure consisting of two parallel plates separated

by fixed spacers. Between these plates, a connecting

rod–crank system moves the fixing wheel at the

bottom of the cable. The motors are fixed to the front

part of the claw, which interferes with the stabilization

of the system on the cable, leading to rotation around

the cable and potential falls.

Figure 3: Cable-gripper system in action.

The LaRa robot features a lower luggage rack

fixed with two articulated arms to ensure that the

weight is always directed toward the gravitational

force at the center of the cable gripper. The luggage

rack houses the electronic control system, motor

power, control system, and battery of the robot.

The center of mass of the system is aligned with

the cable center, which is achieved by introducing two

counterweight arms. One of these arms also serves

as a support for the attachment of the multimodal

inspection sensor.

Figure 4: Modules of LaRa robot.

High-voltage transmission lines are inspected

using a multimodal sensor specially designed for

predictive inspection. The sensor consists of several

subsensors (Figure 4), including an acoustic camera,

a spectral camera, a ToF sensor, a thermal camera, a

depth camera, and a classifier camera. All the sensors

are integrated into a stacked inspection map. This


approach is detailed in a previous work (hidden for

blind review).

The LaRa robot also features a specially placed

LiDAR sensor (Figure 4, item 8) to track objects in

a plane below the robot. This information is crucial

for analyzing the distance between transmission-line

elements and vegetation. It is also correlated with the

acoustic faults detected in the robot’s lower plane.

4 THE LiDAR-BASED OBJECT RECOGNITION

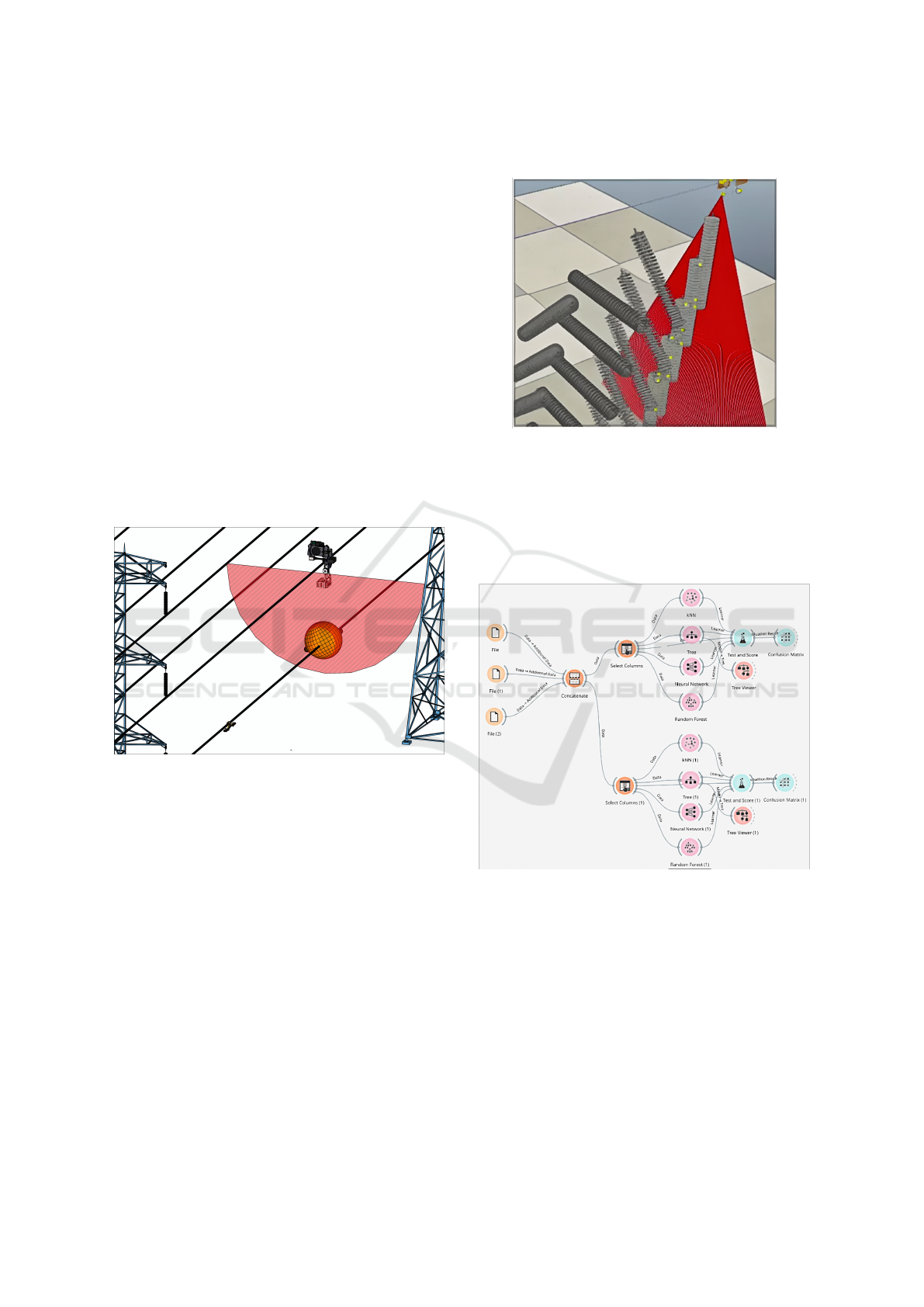

We investigated the development of a classification

system for LiDAR sensors in autonomous inspection

robots. For power lines, LiDAR-based recognition

was employed to introduce perception into the

lower plane of the robot, identify elements in the

lower cables, and measure the distance between the

elements and vegetation, as shown in Figure 5.

Figure 5: Proposed approach for LiDAR-Based Object Recognition.

Four machine-learning models were tested

to develop a more reliable method for object

recognition. The analysis was conducted using

Orange (Demšar et al., 2013; Demšar and

Zupan, 2012)—an open-source platform for data

visualization and machine learning—and the

virtual experiment platform CoppeliaSim (Coppelia

Robotics) (Rohmer et al., 2013) for simulation of

robotics systems. A Hokuyo LiDAR sensor was

configured to perform 158 readings within a 50° field

of view. The sensor was attached to a simple model

of the inspection robot, and both the sensor and

robot were controlled and configured using the Robot

Operating System (ROS). Three distinct scenes were

created, each containing one of the analyzed objects,

with variations in distance and angle, as shown in

Figure 6. To collect the data, a Python script recorded

the sensor readings as the robot moved through each

scene.
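As a rough illustration of the scan geometry described above, the following sketch lays out 158 beam angles across a 50° field of view and converts one scan of range readings into the points of a 2D slice. The function names, the even spacing and symmetric centering of the beams around the sensor axis, and the flat-surface test scan are assumptions made for illustration; they are not taken from the authors' recording script.

```python
import numpy as np

N_READINGS = 158      # readings per scan, as configured in the simulation
FOV_DEG = 50.0        # field of view covered by one scan


def beam_angles(n=N_READINGS, fov_deg=FOV_DEG):
    """Beam angles in radians, assumed evenly spaced and centered on the sensor axis."""
    half = np.deg2rad(fov_deg) / 2.0
    return np.linspace(-half, half, n)


def scan_to_slice(ranges, angles=None):
    """Convert one scan of range readings into (lateral, depth) points of a 2D slice."""
    ranges = np.asarray(ranges, dtype=float)
    if angles is None:
        angles = beam_angles(len(ranges))
    lateral = ranges * np.sin(angles)   # offset across the scan plane
    depth = ranges * np.cos(angles)     # distance along the sensor axis
    return np.column_stack((lateral, depth))


# Hypothetical scan: a flat surface 1.0 m away along the sensor axis.
angles = beam_angles()
flat_scan = 1.0 / np.cos(angles)
points = scan_to_slice(flat_scan, angles)
```

Each row of `points` is one beam; for the flat test surface, every point has the same depth, which is a quick sanity check of the geometry.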

Figure 6: Creation of the dataset in a virtual environment.

A composite technique was employed to compare the results of the four models, namely k-nearest neighbors (kNN), decision tree, random forest, and neural network, as shown in Figure 7.

Figure 7: Analysis of Machine Learning methods.

A confusion matrix—a fundamental tool in

machine learning and data analysis—was used to

evaluate the performance of the classification model

by comparing the predictions made by the model

with real data. The comparison between the

machine-learning models was based on the confusion

matrix, as shown in Table 1 for the random forest

model, Table 2 for kNN, Table 3 for the decision tree,

and Table 4 for the neural network.

In Tables 1 to 4, rows give the actual class and columns give the predicted class.

Table 1: Confusion Matrix of Random Forest.

| | Damper | Insulator | Wire marker | Σ |
|---|---|---|---|---|
| Damper | 334 | 5 | 9 | 348 |
| Insulator | 14 | 868 | 28 | 910 |
| Wire marker | 11 | 93 | 170 | 274 |
| Σ | 359 | 966 | 207 | 1532 |

Table 2: Confusion Matrix of kNN.

| | Damper | Insulator | Wire marker | Σ |
|---|---|---|---|---|
| Damper | 314 | 25 | 9 | 348 |
| Insulator | 8 | 875 | 27 | 910 |
| Wire marker | 6 | 163 | 105 | 274 |
| Σ | 328 | 1063 | 141 | 1532 |

Table 3: Confusion Matrix of Decision Tree.

| | Damper | Insulator | Wire marker | Σ |
|---|---|---|---|---|
| Damper | 317 | 17 | 14 | 348 |
| Insulator | 26 | 793 | 91 | 910 |
| Wire marker | 10 | 100 | 164 | 274 |
| Σ | 353 | 910 | 269 | 1532 |

Table 4: Confusion Matrix of Neural Network.

| | Damper | Insulator | Wire marker | Σ |
|---|---|---|---|---|
| Damper | 329 | 10 | 9 | 348 |
| Insulator | 12 | 862 | 36 | 910 |
| Wire marker | 9 | 118 | 147 | 274 |
| Σ | 350 | 990 | 192 | 1532 |

The random forest model had the highest scores across all metrics: an area under the ROC curve (AUC) of 0.963, a classification accuracy (CA) of 89.6%, an F1 score of 0.891, a precision of 0.892, a recall of 0.896, and a Matthews correlation coefficient (MCC) of 0.812. This suggests that it is effective at distinguishing between classes, making accurate predictions, and maintaining a strong correlation between the observed and predicted classifications. The neural network model also performed well, with an AUC of 0.906, CA of 87.3%, F1 score of 0.866, precision of 0.868, recall of 0.873, and MCC of 0.771, which were close to those of the random forest model. Both the kNN and decision tree models exhibited good performance but lagged behind the top two models in every metric except AUC. The kNN model had an AUC of 0.907, CA of 84.5%, F1 score of 0.828, precision of 0.840, recall of 0.845, and MCC of 0.718. The decision tree model achieved similar metrics, with an AUC of 0.907, CA of 83.2%, F1 score of 0.831, precision of 0.831, recall of 0.832, and MCC of 0.701. The evaluation results for these methods are presented in Table 5.

Table 5: Comparison of machine learning models.

| Model | AUC | CA | F1 | Precision | Recall | MCC |
|---|---|---|---|---|---|---|
| kNN | 0.907 | 0.845 | 0.828 | 0.840 | 0.845 | 0.718 |
| Tree | 0.907 | 0.832 | 0.831 | 0.831 | 0.832 | 0.701 |
| Neural Network | 0.906 | 0.873 | 0.866 | 0.868 | 0.873 | 0.771 |
| Random Forest | 0.963 | 0.896 | 0.891 | 0.892 | 0.896 | 0.812 |
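To make the link between the confusion matrices and the summary metrics concrete, the sketch below recomputes accuracy, precision, recall, and F1 from the random forest counts in Table 1. It assumes that rows are actual classes and columns are predicted classes, and that the per-class scores are averaged with weights proportional to class support; both are assumptions of this example rather than statements from the original text, and the function name `weighted_metrics` is hypothetical.

```python
import numpy as np

# Random forest confusion matrix from Table 1
# (assumed layout: rows = actual class, columns = predicted class).
LABELS = ["damper", "insulator", "wire marker"]
CM = np.array([
    [334,   5,   9],
    [ 14, 868,  28],
    [ 11,  93, 170],
])


def weighted_metrics(cm):
    """Return accuracy and support-weighted precision, recall, and F1."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                  # correctly classified samples per class
    support = cm.sum(axis=1)          # actual samples per class
    predicted = cm.sum(axis=0)        # predicted samples per class
    precision = tp / predicted
    recall = tp / support
    f1 = 2 * precision * recall / (precision + recall)
    weights = support / support.sum()
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "precision": float(weights @ precision),
        "recall": float(weights @ recall),
        "f1": float(weights @ f1),
    }


metrics = weighted_metrics(CM)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Under these assumptions the computed values agree with the random forest row of Table 5 to three decimals, which suggests that the reported scores are support-weighted averages over the three classes.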

kNN is a simple and intuitive lazy-learning

algorithm, meaning that it does not require an

explicit training phase, which can be beneficial for

real-time or dynamic datasets where the model must

adapt quickly without extensive retraining. This model

exhibited strong performance, indicating that it is a

reliable and accurate choice for classification tasks.

Furthermore, it has relatively few parameters to tune,

making it simpler to optimize than more complex

models such as neural networks or random forests.

For small to moderately sized datasets, kNN can be

computationally efficient and quick to implement and

is adequate for embedding in hardware; therefore, the

kNN method was selected for object recognition.

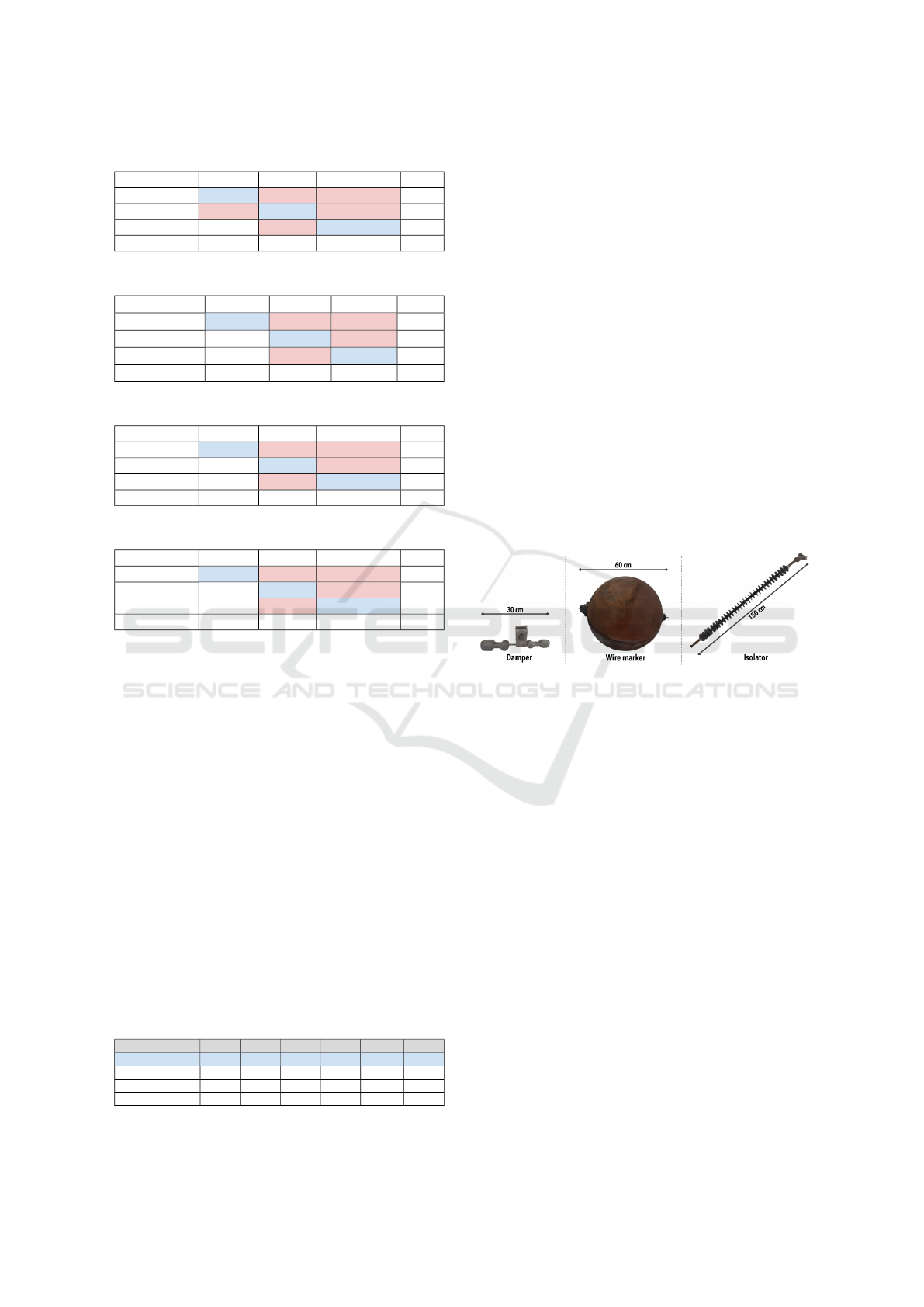

The kNN approach was then applied to the real

LaRa robot. A new dataset was created

using the RPLiDAR A1 LiDAR sensor from Slamtec,

which was configured similarly to the simulation and

pointed perpendicular to the cable, 1.1 m from the

ground. Three types of real objects were analyzed:

insulators, wire markers, and dampers, as shown in

Figure 8.

Figure 8: Objects of power lines: damper, wire marker and insulator.

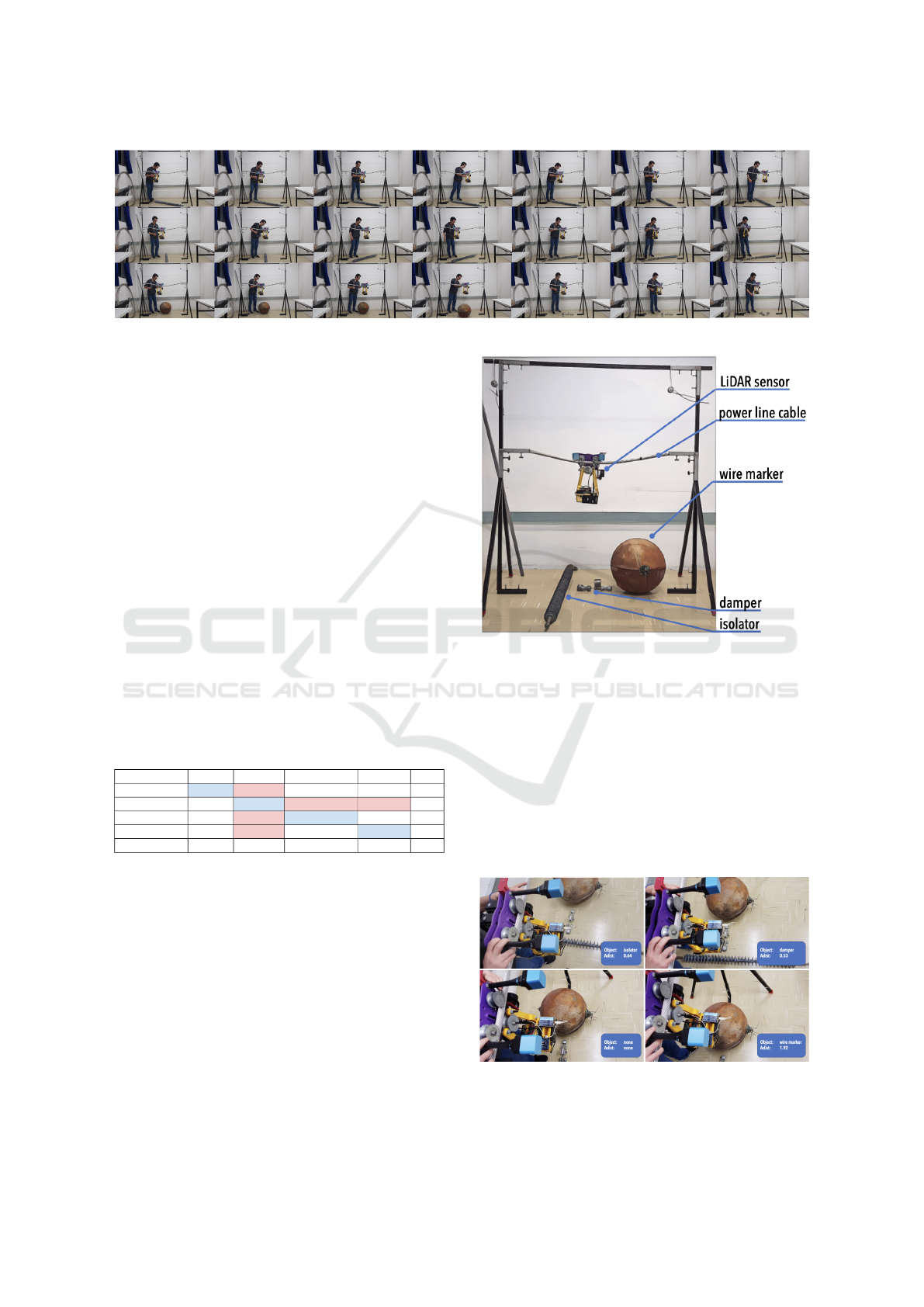

The LaRa robot was coupled to a real power-line

cable (i.e., a Grosbeak cable for 380 kV) fixed in a

laboratory structure that provided the same distance

to objects as a real transmission line. Four datasets

were created—one for each class analyzed (nothing,

wire marker, damper, and insulator)—and merged

in Orange. The data captured by the sensor were

processed to reduce noise; readings beyond 1.1

m were considered noise, as illustrated in Figure 9.

The kNN model was trained using the scikit-learn

library in Python with six neighbors, as determined

by the simulations. The trained model was saved in a

file and converted to .csv for use in C/C++ code. The

Euclidean distance was used as the distance metric

in the kNN model. Each new reading from the LiDAR

sensor was transformed into a vector of 159 elements

and compared with the stored training vectors, and

the most frequent class among the six nearest

neighbors was returned.
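The classification step described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' C/C++ implementation: the helper names, the choice to clamp out-of-range readings to 1.1 m (the source only says such readings were considered noise), and the synthetic training data are all assumptions.

```python
from collections import Counter

import numpy as np

K = 6             # number of neighbors reported in the text
MAX_RANGE = 1.1   # metres; readings beyond this are treated as noise


def clamp_noise(scan, max_range=MAX_RANGE):
    """Clamp readings beyond max_range (assumed noise handling, not confirmed by the source)."""
    return np.minimum(np.asarray(scan, dtype=float), max_range)


def knn_classify(sample, train_x, train_y, k=K):
    """Return the majority label among the k training vectors closest to sample."""
    distances = np.linalg.norm(train_x - sample, axis=1)   # Euclidean distances
    nearest = np.argsort(distances)[:k]                    # indices of the k smallest
    votes = Counter(train_y[i] for i in nearest)
    return votes.most_common(1)[0][0], distances[nearest]


# Synthetic stand-in for the training table exported to .csv (hypothetical data).
rng = np.random.default_rng(0)
n_features = 159
empty = np.full((20, n_features), 1.1)      # nothing in view
damper = empty.copy()
damper[:, 70:90] = 0.6                      # object occupying 20 beams
noise = rng.normal(0.0, 0.01, (40, n_features))
train_x = clamp_noise(np.vstack([empty, damper]) + noise)
train_y = np.array(["none"] * 20 + ["damper"] * 20)

query = np.full(n_features, 1.3)            # raw scan with out-of-range background
query[70:90] = 0.61
label, dists = knn_classify(clamp_noise(query), train_x, train_y)
```

The function also returns the six neighbor distances, since they can later serve as a rough indicator of how closely a reading matches the training data.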

The code was implemented on a Raspberry Pi 3 running Raspbian with ROS Noetic, which was equipped with a 3.2-inch LCD to show the classifications. The LiDAR sensor and the code communicated using ROS, which facilitated integration of the system components.

Figure 9: Dataset acquisition.

Orange was used to evaluate the accuracy of the

model by employing data collected from an actual

sensor. For this evaluation, the F1 score was selected

because of the imbalance between the classes in

the dataset. The F1 score is a performance metric

that combines precision and recall as the

harmonic mean of these two metrics. It is particularly

useful when there is an imbalance in classes because

it offers a more balanced view of the model’s

performance.
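For reference, the standard per-class definitions behind this metric are given below. The symbols $TP$, $FP$, and $FN$ (true positives, false positives, and false negatives for a given class) and $P$, $R$ (precision and recall) are notation introduced here for illustration and do not appear in the original text.

```latex
\[
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2\,P\,R}{P + R}
\]
```

Because the harmonic mean is dominated by the smaller of the two values, a class with high precision but low recall (or the reverse) still receives a low F1 score.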

For our dataset, when the kNN model was

used with six neighbors, the F1 score was 0.938,

with an accuracy of 94%. Table 6 presents the

confusion matrix, indicating the percentage of correct

classifications for each class. The matrix revealed

that the most significant errors occurred in the

classification of the damper. This is because the

damper was significantly smaller than the other

objects.

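Table 6: Confusion Matrix of kNN in real model (percentages are normalized per column; Σ gives sample counts).

| | None | Insulator | Wire marker | Damper | Σ |
|---|---|---|---|---|---|
| None | 98.5% | 1.7% | 0.0% | 2.0% | 201 |
| Insulator | 0.5% | 91.7% | 1.5% | 15.1% | 239 |
| Wire marker | 1.0% | 3.9% | 98.5% | 0.7% | 269 |
| Damper | 0.0% | 2.6% | 0.0% | 82.2% | 131 |
| Σ | 197 | 230 | 261 | 152 | 840 |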

4.1 Evaluation

The proposed approach was evaluated within an

experimental framework in which the kNN model

embedded in the Raspberry Pi controlling the LaRa

robot was used to detect objects in the lower plane.

A linear movement experiment was performed on

the robot cable in a round-trip path passing through

elements such as dampers, wire markers, and insulators.

The recognition system obtained 50 classification

samples for each object at different distances as the

robot moved along the cable, as shown in Figure 10.

Figure 10: Objects of power lines: damper, wire marker and insulator.

The proposed method performed classification

and returned the average of the six closest distances

to each reading. When the sensor-captured data

were consistent with the training data, the average

distance of the readings was below 1, indicating high

classification certainty. This metric was adopted to

analyze whether the model was capable of correctly

identifying objects and maintaining the expected

proximity between the sensor readings and training

data. The evaluation results are shown in Figure 11,

and the output data are presented in Table 7.
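The distance-based certainty check described above can be sketched as follows. The threshold of 1 and the use of six neighbors come from the text; the function names, the strict comparison, and the synthetic vectors are assumptions made for illustration.

```python
import numpy as np

K = 6
CERTAINTY_THRESHOLD = 1.0   # average distance below which a reading is treated as confident


def mean_knn_distance(sample, train_x, k=K):
    """Average Euclidean distance from sample to its k nearest training vectors."""
    distances = np.linalg.norm(train_x - sample, axis=1)
    return float(np.sort(distances)[:k].mean())


def is_confident(sample, train_x, k=K, threshold=CERTAINTY_THRESHOLD):
    """True when the reading lies close to the training data."""
    return mean_knn_distance(sample, train_x, k) < threshold


# Hypothetical training vectors clustered around 0.8 m.
rng = np.random.default_rng(1)
train_x = 0.8 + rng.normal(0.0, 0.02, size=(30, 159))

familiar = np.full(159, 0.8)     # resembles the training data
unfamiliar = np.full(159, 0.3)   # far from anything seen in training
```

A reading such as `familiar` yields a small average distance, whereas `unfamiliar` lies several units away from every training vector and would be flagged as uncertain.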

Figure 11: Experimentation of LiDAR-Based Object Recognition.


Table 7: Overall evaluation.

| Object | Recognitions | Efficiency | Mean average distance | Deviance |
|---|---|---|---|---|
| Damper | 46 | 92.00% | 0.44 | 0.07 |
| Insulator | 48 | 96.00% | 0.61 | 0.14 |
| Wire marker | 50 | 100.00% | 1.41 | 0.34 |
| Mean | 48 | 96.00% | 0.82 | 0.18 |

The experimental analysis was summarized and

graphically presented using boxplots. These graphs

provide a visual summary that can help identify the

central tendency, variability, and symmetry of the

data, along with potential outliers, as shown in Figure

12.

Figure 12: Boxplot of average distances.

5 CONCLUSIONS

This paper presents a novel approach for detecting

and classifying elements along power lines using a

LiDAR sensor. In contrast to traditional methods that

process entire 3D point clouds, this method focuses

on analyzing a single two-dimensional (2D) slice of

an object, significantly reducing the data volume and

increasing the computational efficiency.

Object classification was achieved by calculating

the absolute differences between consecutive values

within a 2D slice of the LiDAR point cloud.

These differences were aggregated to create a

unique signature for each object, allowing effective

categorization. The results indicated that the kNN

classification system can introduce the capability of

power-line object recognition to a LaRa autonomous

inspection robot equipped with a LiDAR sensor,

achieving accurate identification of different classes

of objects.
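A minimal sketch of the signature computation summarized above is given below. The text does not specify how the differences were aggregated, so the scalar sum used here is only one possible reading; the function names and the example slices are hypothetical.

```python
import numpy as np


def slice_signature(ranges):
    """Absolute differences between consecutive readings of one 2D slice."""
    ranges = np.asarray(ranges, dtype=float)
    return np.abs(np.diff(ranges))


def aggregate_signature(ranges):
    """Sum of the absolute differences: one possible aggregation (assumed)."""
    return float(slice_signature(ranges).sum())


# Hypothetical slices: flat background at 1.1 m, and the same background
# with an object that is 0.5 m closer to the sensor over three beams.
background = np.full(10, 1.1)
with_object = background.copy()
with_object[4:7] = 0.6
```

For the flat background every difference is zero, while the slice containing the object shows two jumps of 0.5 m at its edges, so the two slices produce clearly different signatures.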

ACKNOWLEDGEMENTS

The project is supported by the National Council for

Scientific and Technological Development (CNPq)

(process CNPq 407984/2022-4); the Fund for

Scientific and Technological Development (FNDCT);

the Ministry of Science, Technology and Innovations

(MCTI) of Brazil; the Araucaria Foundation; and the

General Superintendence of Science, Technology and

Higher Education (SETI).

REFERENCES

Alhassan, A. B., Zhang, X., Shen, H., and Xu, H.

(2020). Power transmission line inspection robots:

A review, trends and challenges for future research.

International Journal of Electrical Power & Energy

Systems, 118:105862.

Chen, C., Jin, A., Yang, B., Ma, R., Sun, S., Wang,

Z., Zong, Z., and Zhang, F. (2022). Dcpld-net:

A diffusion coupled convolution neural network for

real-time power transmission lines detection from

uav-borne lidar data. International Journal of Applied

Earth Observation and Geoinformation, 112:102960.

Chen, M., Tian, Y., Xing, S., Li, Z., Li, E., Liang, Z., and

Guo, R. (2021). Environment perception technologies

for power transmission line inspection robots. Journal

of Sensors, 2021(1):5559231.

Demšar, J., Curk, T., Erjavec, A., Gorup, Č., Hočevar, T.,

Milutinovič, M., Možina, M., Polajnar, M., Toplak,

M., Starič, A., et al. (2013). Orange: Data mining

toolbox in Python. Journal of Machine Learning

Research, 14(1):2349–2353.

Demšar, J. and Zupan, B. (2012). Orange: Data mining

fruitful and fun. Inf. Družba IS, 6:1–486.

Jenssen, R., Roverso, D., et al. (2018). Automatic

autonomous vision-based power line inspection: A

review of current status and the potential role of deep

learning. International Journal of Electrical Power &

Energy Systems, 99:107–120.

Korki, M., Shankar, N. D., Shah, R. N., Waseem, S. M.,

and Hodges, S. (2019). Automatic fault detection

of power lines using unmanned aerial vehicle (uav).

In 2019 1st International Conference on Unmanned

Vehicle Systems-Oman (UVS), pages 1–6. IEEE.

Paneque, J., Valseca, V., Martínez-de Dios, J., and Ollero,

A. (2022). Autonomous reactive lidar-based mapping

for powerline inspection. In 2022 International

Conference on Unmanned Aircraft Systems (ICUAS),

pages 962–971. IEEE.

Qin, X., Wu, G., Lei, J., Fan, F., Ye, X., and Mei, Q.

(2018). A novel method of autonomous inspection for

transmission line based on cable inspection robot lidar

data. Sensors, 18(2):596.

Rohmer, E., Singh, S. P., and Freese, M. (2013). V-rep:

A versatile and scalable robot simulation framework.

In 2013 IEEE/RSJ international conference on

intelligent robots and systems, pages 1321–1326.

IEEE.

Yang, L., Fan, J., Liu, Y., Li, E., Peng, J., and

Liang, Z. (2020). A review on state-of-the-art

power line inspection techniques. IEEE


Transactions on Instrumentation and Measurement,

69(12):9350–9365.

Zhang, Y., Dong, L., Luo, J., Lu, L., Jiang, T., Yuan, X.,

Kang, T., and Jiang, L. (2022). Intelligent inspection

method of transmission line multi rotor uav based on

lidar technology. In 2022 8th Annual International

Conference on Network and Information Systems for

Computers (ICNISC), pages 232–236. IEEE.
