autoWT: A Semi-Automated ML-Based Movement Tracking System for Performance Tracking and Analysis in Olympic Weightlifting

Gatis Jurkāns, Anikó Ekártᵃ and Ulysses Bernardetᵇ

Aston University, Aston Triangle, Birmingham, U.K.

Keywords:

Olympic Weightlifting, Human Action Recognition, AI Sport Coaching, Video Sports Analysis, Long Term Performance Tracking.

Abstract:

As part of the AI Coaching Assistant project's research into sports performance tracking systems, we present autoWT, a novel semi-automated computer vision tracking system designed and developed for repeated long-term performance tracking of Olympic Weightlifting (OW) training. The system integrates multiple cameras and a heart rate sensor to capture, detect, and analyse OW movements, providing coaches and athletes with objective performance metrics. Key features include automated lift detection, clip extraction, and visualisation of performance metrics derived from markerless pose estimation data. The system architecture, a distributed system with multiple workers and a controller, enables efficient processing of high-bandwidth data streams. The paper presents the overall system architecture and operating principles and gives a detailed breakdown of the action onset recognition and performance metric extraction modules. We evaluate the system's lift detection accuracy and the repeatability of the extracted performance metrics using data from Olympic lifts. Results demonstrate high lift detection accuracy and consistent, repeatable metric extraction, indicating autoWT's potential as a valuable tool for long-term Olympic weightlifting performance analysis studies and as a coaching aid. Beyond weightlifting, the autoWT design can serve as a model for tracking systems in other sports.

1 INTRODUCTION

Significant progression in many sports disciplines, such as golf, shot put, or Olympic weightlifting, depends on gradual improvements in technical proficiency in a few complex dynamic movements.

Tracking and analysing these changes is traditionally the task of an experienced coach, who has spent years participating in, observing, and correcting these movements.

The AI Coaching Assistant (ACA) project aims to provide access to accurate tracking of long-term performance changes based on quantifiable and objective metrics using state-of-the-art computer vision and machine learning techniques.

Here, we present the auto Weightlifting Tracker (autoWT), a semi-automated long-term performance tracking system developed to support Olympic weightlifting training.

We selected Olympic weightlifting (snatch and clean & jerk) movements because they are essential to strength and conditioning training in numerous sports disciplines, such as judo, rugby, track and field, and CrossFit.

a https://orcid.org/0000-0001-6967-5397

b https://orcid.org/0000-0003-4659-3035

Olympic lifts are considered highly efficient for power development. However, these movements are often difficult to introduce into strength and conditioning programs because movement efficiency is acquired slowly (a flat learning curve). One challenge is that the core metric, the weight on the barbell, cannot directly reveal changes in movement technique.

OW is well suited to long-term, high-resolution performance tracking, as training is spatially confined to weightlifting platforms, so multiple cameras can be permanently installed to capture it. Our contribution is a novel system architecture and features that enable automated Olympic lift detection and annotated clip extraction for repeated data collection in long-term performance tracking studies.

Jurkāns, G., Ekárt, A. and Bernardet, U.
autoWT: A Semi-Automated ML-Based Movement Tracking System for Performance Tracking and Analysis in Olympic Weightlifting.
DOI: 10.5220/0012997400003828
In Proceedings of the 12th International Conference on Sport Sciences Research and Technology Support (icSPORTS 2024), pages 60-71
ISBN: 978-989-758-719-1; ISSN: 2184-3201
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

To achieve the aforementioned functionality, the system needs to include the following features:

• To develop performance forecasting models that integrate a combination of sensors, the system requires continuous and synchronised recording from multiple sensors such as cameras, heart rate monitors, accelerometers, and positional transducers.

• A key action detection system, to improve computational efficiency by reducing markerless pose model use and to minimise coaches' need to curate individual Olympic lift clips.

• Ability to log supplementary data (exercise type, set/repetition count, weight used, additional commentary): this data allows easy annotation of the extracted clips.

• System and metric data visualisation: provides easy access to the system status and the ability to give feedback using metrics extracted from Olympic lifts.

• System checks to ensure rigorous and repeatable

data capture.

We begin by reviewing related work on performance analysis technologies for barbell sports. Next, we describe the system requirements, features, and architecture of autoWT. We conclude with a numerical evaluation of the system's accuracy and repeatability.

2 RELATED WORK

2.1 Olympic Weightlifting

OW consists of two movements: the snatch and the clean & jerk. In both, a loaded barbell is lifted to the overhead position, in one step (snatch) or two steps (clean & jerk), and the competition is won by the athlete with the highest combined weight. This study refers to the snatch and clean & jerk as Olympic lifts (see Figure 1) and to any strength or bodybuilding movements trained with free weights or a barbell (barbell squats, bench press, clean pull, etc.) as assistance lifts.

2.2 Bar-Path Tracking in Olympic Weightlifting

The use of cameras for OW movement analysis goes back to the 1960s and 1970s, when OW was a highly contested Olympic discipline during the Cold War era (Garhammer and Newton, 2013). This period established bar-path tracking as the preferred tool for researchers comparing the performance of Olympic lifts (AN, 1978). More recently, improved bar tracking algorithms have been developed (Hsu et al., 2018; Hsu et al., 2019), and consumer-level tools such as BarSense, WL-Analysis, Dartfish and others now provide bar tracking. One of the first systems to aid OW coaching beyond bar-path tracking was implemented by (cha, ), extracting the 3D bar path and performance metrics (barbell tilt and knee flexion angle) from the depth data of Kinect cameras. While bar tracking is helpful, the rapid advancement of computer vision and related computer science fields has made many more tools and approaches available for extracting useful information from video of human movement.

2.3 Action Recognition of Olympic Lifts

To perform long-term tracking of Olympic lifts using camera sensors, the lifts must first be identified within the video stream data; this aim falls within the Human Action Recognition (HAR) sub-field of computer science. Host and Ivašić-Kos (2022) define categories for HAR in sports, according to which Olympic lifts are Individual Complex Actions: a combination of simple actions and interactions with an object. A popular method for detecting periodic activities, including exercise, is RepNet (Destro, 2024). It could be used for the repetition segmentation of assistance lifts such as the squat and overhead press, but it is not applicable to detecting Olympic lifts, which are usually performed in sets of 1 to 3 repetitions, with each repetition typically separated by a pause. An Olympic lift onset detection method was developed by Yoshikawa et al. (2010) using Cubic Higher-Order Local Auto-Correlation, a feature extraction method that captures complex spatial relationships by computing third-order auto-correlation values of pixel values within a local neighbourhood. A strong feature of this method is its ability to detect the onset regardless of the capture angle. There are also convolutional neural network (CNN) based approaches using deep key-frame detection, which detect and extract key positions of Olympic lifts from lift recordings (Jian et al., 2019; Pan, 2022; He et al., 2023). These methods have not been applied to action recognition or lift onset detection but could be suited to these applications. The limiting factors for purely CNN-based systems are their high computational requirements and the probabilistic nature of key-frame detection, which at high frame rates delivers several frames associated with the same key position.


Figure 1: Examples of Olympic lifts. A - Snatch lift. B - Clean & Jerk lift.

2.4 Modern Computer Vision Use for Barbell Sports Performance Analysis

Olympic lifts are challenging to analyse due to their fast pace and highly technical nature. To our knowledge, only two publications propose technique adjustment and analysis methods for Olympic lifts using computer vision (Rethinam et al., 2023; Bolarinwa et al., 2023). Markerless pose detection (MPD) is a core technology in both papers. MPD extracts the locations of body pose landmarks directly from image data. Several models are available; we use Google's BlazePose (full-heavy) model because, having been designed for mobile devices, it has a reduced computational demand while still delivering high pose estimation accuracy (Bazarevsky et al., 2020). MPD has been shown to retrieve body landmark data reliably, with the caveats of a potential landmark offset compared to marker-based solutions and errors due to occlusion of body parts (Needham et al., 2021; Mroz et al., 2021). Rethinam et al. (2023) use MPD data in an algorithm that calculates the athlete's centre of gravity from their body proportions, and suggest that the algorithm can determine the stability of the base (foot placement) of an athlete executing the clean & jerk. Bolarinwa et al. (2023) developed a system that improves the refereeing process by reducing human bias in judging successful and failed attempts in OW. First, a neural network recognises the recovery phases of snatch and clean & jerk movements and classifies lifts as complete or incomplete. Then, MPD is used to analyse the lift for common technique breakdowns, such as infringements of the press-out rule.

Several studies propose using MPD to correct the technique of assistance lifts outside OW, as these lifts are technically much simpler and periodic. A good example is Lin and Jian (2022), who developed an algorithm to assist deadlift form correction.

Arandjelović (2017) did not use MPD but developed an entire "monitoring-assessment-adjustment" loop for the powerlifting exercises squat and bench press, which allows analysis and performance forecasting based on simulation and personalised athlete profiles.

Olympic weightlifting has a long history of camera-based data analysis for performance tracking, which historically was accessible only to sports scientists exploring the biomechanics of Olympic lifts. Rapid developments in computer vision and machine learning over the last two decades have produced algorithms that can now estimate pose data directly from video footage, making this kind of analysis far more accessible. Some work has used these tools for real-time adjustment of simple periodic assistance lifts and for judging technical aspects of individually executed Olympic lifts. Based on our literature review, we identified a research gap: an automated system for repeated long-term performance tracking in OW that integrates lift detection, performance metric extraction and feedback.

3 ARCHITECTURE

In this section, we highlight the key features and introduce the selected system architecture. We then describe in depth the two core modules: the Lift Detector and the Metric Extractor.

3.1 autoWT Features

The features selected and implemented as part of autoWT serve the goals of the AI Coaching Assistant project: to improve the coaching experience through long-term tracking of athletes using computer vision and machine learning. autoWT enables long-term data collection studies to develop performance forecasting models using markerless pose estimation data. Such studies require athletes to record their training sessions with the system repeatedly throughout whole training blocks. To make this practical, we ease the integration of autoWT into existing training practices by selecting features that both enable data collection and benefit the training process, encouraging the system's use.

icSPORTS 2024 - 12th International Conference on Sport Sciences Research and Technology Support


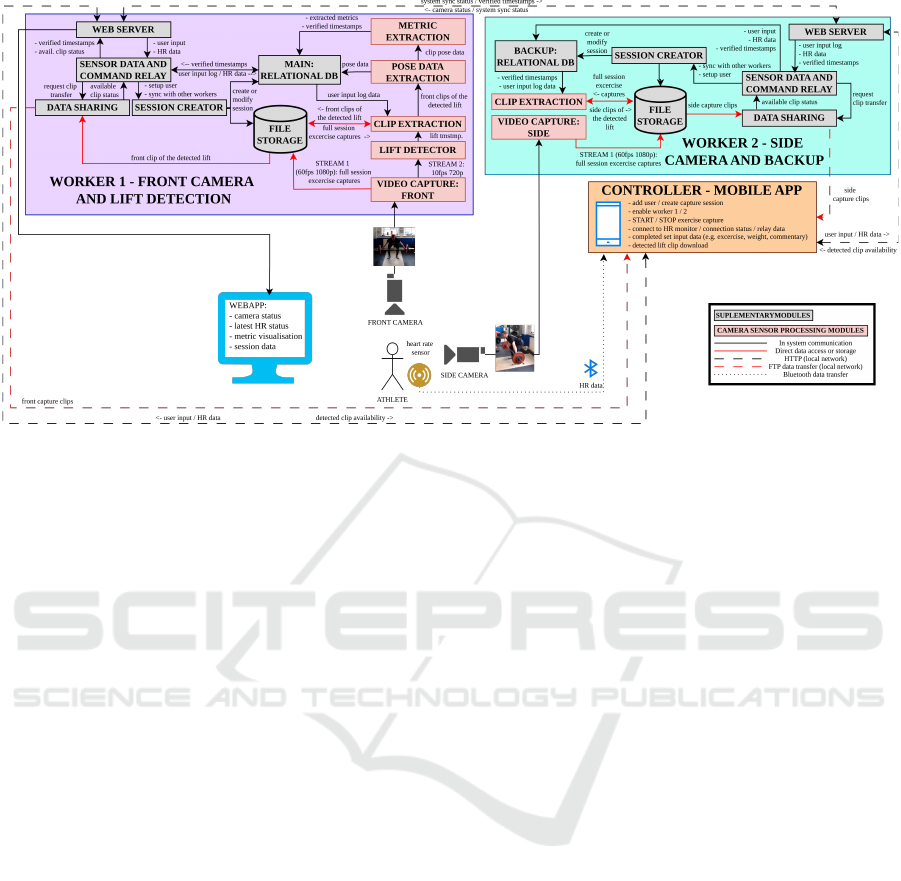

Figure 2: Auto weightlifting tracker architecture.

3.1.1 Multi Camera Capture with Detected Olympic Lift Extraction

Camera use for performance analysis and review is a common practice in OW. Yet it is often skipped because producing and annotating individual clips is time-consuming and labour-intensive. autoWT automates lift detection and clip extraction and gives the user easy access to the clips. By providing this sought-after feature, we enable coaches and athletes to focus more on training. The extracted clips are also annotated with a unique identifier number and the weight on the barbell, taken from the user-logged data.

3.1.2 User Data Logging and Visualisation

Besides the lift data accessible through the controller application, the autoWT worker systems serve a WebApp that is displayed on a large screen while the system is in use. The WebApp gives easy access to system information: camera capture status, performance metric visualisations for detected lifts, the latest heart rate sensor readings, and tabulated user-logged data. Being able to see the progression of the current training session at a glance in a single overview encourages users to log their training session data.

3.1.3 Multi Sensor Data Capture

Currently, autoWT supports camera and heart rate sensor data capture. The system allows heart rate warning thresholds to be set. When the user's heart rate exceeds the threshold, visual cues flag this change by increasing the font size and changing the colour of the displayed heart rate reading. This offers a simple method for tracking athletes' exertion levels and informing the choice of rest times. The collected data also supports future research on forecasting athlete fatigue levels from combined heart rate tracking and performance metric data.
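The threshold warning can be sketched as a pure function that maps a reading to a display style. This is a minimal illustration assuming a single user-set threshold in beats per minute; the names `style_reading` and `HeartRateDisplay`, the font sizes and the colours are hypothetical, since the paper does not specify them.

```python
from dataclasses import dataclass

# Hypothetical display styles (font size in px, colour); not specified in the paper.
NORMAL_STYLE = (32, "white")
WARNING_STYLE = (48, "red")


@dataclass
class HeartRateDisplay:
    """How one heart rate reading is rendered on the WebApp (hypothetical)."""
    bpm: int
    font_size_px: int
    colour: str


def style_reading(bpm: int, warning_threshold_bpm: int) -> HeartRateDisplay:
    """Enlarge and recolour readings strictly above the user-set threshold."""
    font_size, colour = WARNING_STYLE if bpm > warning_threshold_bpm else NORMAL_STYLE
    return HeartRateDisplay(bpm=bpm, font_size_px=font_size, colour=colour)


if __name__ == "__main__":
    for reading in (128, 176):
        print(style_reading(reading, warning_threshold_bpm=170))
```

Here a reading exactly equal to the threshold is not flagged; whether autoWT uses a strict or inclusive comparison is not stated, so this is an assumption.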

3.2 autoWT System Procedure

Before the system's first use, the controller system and the workers are set up on the same local network, the cameras are mounted in permanent static locations, and markers are placed on the weightlifting platform to indicate the starting position for lifts.

To use the system, the worker systems are powered on first, which launches a series of automated scripts that start the autoWT software. When the controller app is then opened, it waits to receive synchronisation checks from the worker systems. Once the workers are available, the controller app allows a user to be initialised through the Session Creator module, which creates new storage locations and database entries for a new training session on all worker systems.

After initialisation, athletes can start using the cameras and the heart rate sensor; these sensors can be used simultaneously or independently. As soon as the user enables the heart rate sensor in the controller app, the sensor data starts streaming to the workers and is displayed on the WebApp.

The software is designed to automate the exercise

capture and analysis, so when capturing an exercise,


the athlete only needs to start and stop capturing when changing to a new exercise. To start camera capture, the user selects the intended exercise from a list in the controller app. Different autoWT features are enabled depending on the selected exercise: if Olympic lifts are selected, the worker modules associated with lift detection and metric extraction are enabled. After the user selects the exercise and presses the "start capture" button, the worker systems are notified to start recording with the front and side cameras simultaneously.

When Olympic lifts are recorded, the detected and extracted clips populate the available clips table in the controller app, from which they can be selected and downloaded to the device. Additionally, if the snatch is being recorded, the metric extraction system generates the Snatch Pull Height (SPH) metric, which is visualised on the WebApp.

During capture, the athlete can log information about completed sets (set/repetition count and additional commentary on execution). Each time a new set is added, the information appears on the WebApp in tabulated form. Every 5 seconds, the WebApp refreshes to show newly added sets and any metrics calculated for previously executed sets. Once the athlete has completed the exercise, they press the "stop capture" button to end exercise capture; the lift processing modules complete any outstanding tasks and then stop until a new exercise recording begins, and the process repeats.
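The exercise-dependent module selection described above can be sketched as a lookup. This assumes that video capture runs for every exercise and that only the snatch currently produces a WebApp metric (SPH), as stated in the text; the `Exercise` enum, the assistance-lift entry and the module identifiers are hypothetical labels derived from the module names in this paper, not autoWT's actual code.

```python
from enum import Enum
from typing import List


class Exercise(Enum):
    SNATCH = "snatch"
    CLEAN_AND_JERK = "clean_and_jerk"
    BACK_SQUAT = "back_squat"  # example assistance lift (hypothetical entry)


OLYMPIC_LIFTS = {Exercise.SNATCH, Exercise.CLEAN_AND_JERK}


def enabled_modules(exercise: Exercise) -> List[str]:
    """Worker modules switched on when capture starts for the selected exercise."""
    modules = ["video_capture"]  # assumed: front and side cameras record for every exercise
    if exercise in OLYMPIC_LIFTS:
        # Lift detection and metric extraction chain, enabled only for Olympic lifts.
        modules += ["lift_detector", "clip_extraction", "pose_data_extraction", "metric_extraction"]
    return modules


def webapp_metrics(exercise: Exercise) -> List[str]:
    """Metrics visualised on the WebApp; currently only SPH for the snatch."""
    return ["snatch_pull_height"] if exercise is Exercise.SNATCH else []


if __name__ == "__main__":
    for ex in Exercise:
        print(ex.value, enabled_modules(ex), webapp_metrics(ex))
```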

3.3 System Design Considerations and Architecture Selection

To implement the functionality described, the system must:

• Concurrently process multiple sensor data streams, such as capturing and encoding multiple high-bandwidth video streams and timestamped physiological data, including heart rate sensor data.

• Run on single-board computers rather than GPU-enabled, high-powered PC systems.

• Be accessible via low-specification devices.

• Function effectively with unreliable external network access.

Given these requirements, a distributed architecture was selected because it can handle high-bandwidth data through parallel processing, support low-specification control devices by offloading intensive tasks to distributed workers, and operate effectively without consistent network access. autoWT comprises two worker sub-systems that process camera sensor data and a single controller system (MobileApp) that coordinates activities and relays heart rate monitor data. Each worker sub-system comprises modules covering different system functions, divided into core and sensor processing modules. The overall system architecture and the interactions between sub-systems and their modules are shown in Figure 2.

3.3.1 Worker Sub-System - Core Modules

Core modules integrate the worker sub-systems into the autoWT system. The current autoWT core modules include the Web Server, which enables communication between systems over the local network using the HTTP protocol and can host a WebApp to display system status information. Another module is the Sensor/Command Relay, which processes command packets received from the Web Server and forwards them to the relevant sub-system modules. The Data Sharing module controls an FTP server that transmits extracted Olympic lift video clips to the MobileApp (controller). Finally, the Session Creator module manages the creation and administration of the ongoing session, including file storage and database setup.
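The Sensor/Command Relay's role, forwarding command packets received by the Web Server to the relevant module, can be illustrated with a small dispatcher. This sketch assumes JSON packets with hypothetical `target` and `payload` fields and in-process handler callbacks; the paper does not describe the packet format or how modules are addressed, and `CommandRelay` is an illustrative name.

```python
import json
from typing import Callable, Dict

Handler = Callable[[dict], None]


class CommandRelay:
    """Forwards decoded command packets to registered modules (hypothetical sketch)."""

    def __init__(self) -> None:
        self._routes: Dict[str, Handler] = {}

    def register(self, module_name: str, handler: Handler) -> None:
        """Associate a module name with the callback that handles its commands."""
        self._routes[module_name] = handler

    def relay(self, raw_packet: str) -> bool:
        """Decode a JSON packet and forward its payload; return False if no module matches."""
        packet = json.loads(raw_packet)
        handler = self._routes.get(packet.get("target"))
        if handler is None:
            return False
        handler(packet.get("payload", {}))
        return True


if __name__ == "__main__":
    relay = CommandRelay()
    relay.register("video_capture", lambda payload: print("video_capture got", payload))
    relay.relay('{"target": "video_capture", "payload": {"command": "start_capture"}}')
```

In a design like this, the Web Server only needs to pass request bodies to `relay`, and new modules are added by registering another handler.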

3.3.2 Worker Sub-System - Sensor Processing Modules

The sensor processing modules capture, process, and analyse sensor data, specifically camera sensor data. The Video Capture module manages the capture and storage of multiple video streams. The Lift Detector module (Worker 1) uses a low-framerate video stream to detect the start and end onset timestamps of Olympic lifts; it is described in detail in the Lift Detector section. Next, the Clip Extraction module (Worker 1) generates individual annotated clips from the stored high-framerate stream data using the detected onsets and user-logged data. The Pose Data Extraction module (Worker 1) then applies the markerless pose model to the extracted clips and stores the pose data in the relational database. Finally, the Metric Extraction module (Worker 1) extracts performance metrics for visualisation by the WebApp; see the Metric Extractor section for details.
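The hand-off from the Lift Detector to the Clip Extraction module amounts to mapping onset timestamps onto frame indices in the stored high-framerate stream and attaching the user-logged annotations (a unique identifier and the barbell weight, as described in Section 3.1.1). The sketch below assumes onsets in seconds from the start of the recording, a 60 fps stored stream and a hypothetical 1 s padding on each side of the lift; `Onset`, `ClipSpec` and `clip_spec` are illustrative names.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Onset:
    """Detected lift onsets, in seconds from the start of the recording."""
    start_s: float
    end_s: float


@dataclass(frozen=True)
class ClipSpec:
    """Frame range and annotations for one extracted clip (hypothetical structure)."""
    clip_id: int
    weight_kg: float
    first_frame: int
    last_frame: int  # inclusive


def clip_spec(clip_id: int, onset: Onset, weight_kg: float, fps: float = 60.0,
              pad_s: float = 1.0, n_frames: Optional[int] = None) -> ClipSpec:
    """Map onset timestamps onto frame indices of the stored high-framerate stream."""
    first = max(0, math.floor((onset.start_s - pad_s) * fps))  # never before frame 0
    last = math.ceil((onset.end_s + pad_s) * fps)
    if n_frames is not None:
        last = min(last, n_frames - 1)  # do not run past the end of the recording
    return ClipSpec(clip_id, weight_kg, first, last)


if __name__ == "__main__":
    print(clip_spec(1, Onset(10.0, 14.0), weight_kg=80.0))
```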

3.4 Lift Detector Module

The Lift Detector is the module that detects the start

and end of Olympic lift movements from a low-

framerate video stream. It can currently detect snatch

icSPORTS 2024 - 12th International Conference on Sport Sciences Research and Technology Support

64

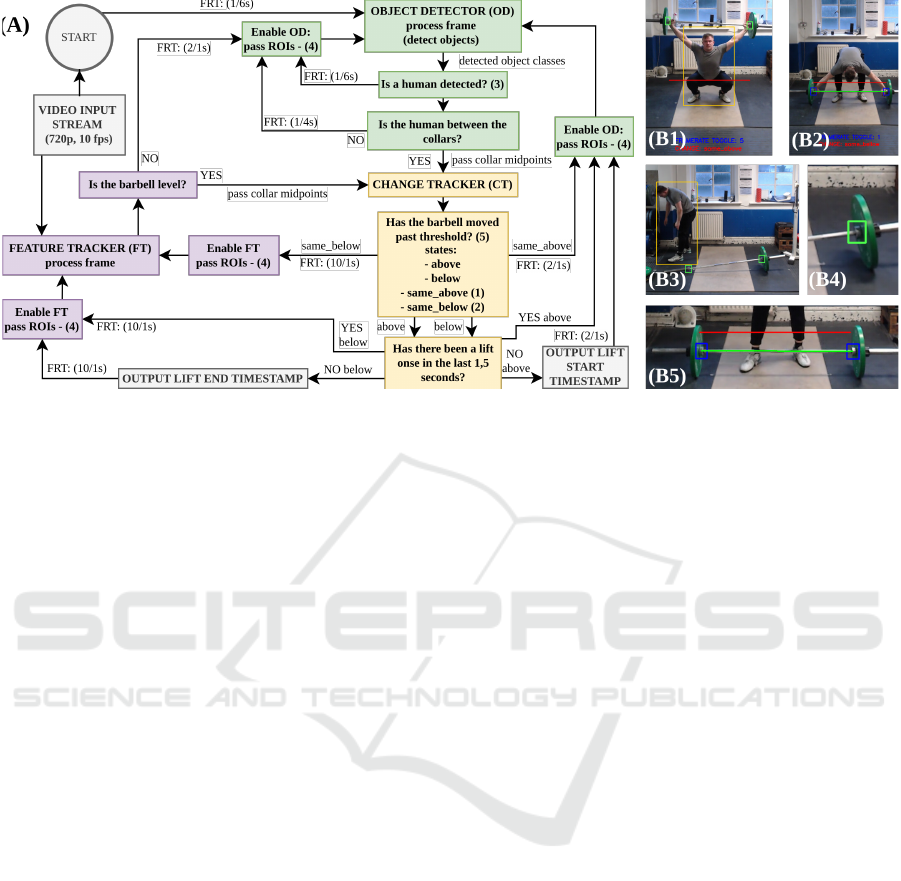

Figure 3: Lift Detector module state diagram. (A) The state diagram shows the interaction between three parts: the Feature

Tracker (FT), Object Detector (OD), and Change Tracker (CT). FRT - Framerate toggle indicates an adjustment to the input

framerate for the OD and the FT, e.g. (1/6s) (1 frame per 6 seconds). (B) A series of images detailing parts of the state

diagram. (B1) Lift detector output during the "same_above" state. (B2) Output during the "same_below" state; (B3) is an

example of output when a human is detected in the frame but not between the barbell collars; (B4) Example of a barbell

collar Region of interest (ROI), identified by the object detector. (B5) ROIs tracked by the feature tracker; the red line is the

threshold line, and the green line is the barbell identified by the midpoints of the barbell collar ROIs.

and clean & jerk movement onsets with high accuracy

(see Evaluation - Lift Detector Testing). The "Change

Tracker” component of the Lift Detector tracks the

barbell’s position around a threshold line, set based

on readings of the barbell’s location while stationary

on the floor (Figure 3). Depending on whether the

barbell is detected above or below the threshold and

whether a person is detected in the frame or between

the barbell collars, the system switches between the

“Object Detector” and the "Feature Tracker”. This

design enables efficient lift onset detection with opti-

mised system resource use.

3.4.1 Change Tracker

The Change Tracker acts as the intermediary between the Object Detector and the Feature Tracker, keeping track of the state changes of the overall Lift Detector module. There are four states in the system:

• above: the barbell has just moved past the threshold line, triggering lift start onset detection;

• same_above: the barbell continues to be above the threshold line;

• below: the barbell has just moved below the threshold line, triggering lift end onset detection;

• same_below: the barbell continues to be below the threshold line.

In addition to tracking the Lift Detector state, the Change Tracker performs two checks to improve performance. First, to reduce false positive consecutive onset detections caused by the barbell being dropped and bouncing off the floor past the threshold line, the Change Tracker checks whether an onset has been detected in the last 1.5 seconds. Second, the Change Tracker adjusts the height of the threshold line above the barbell based on the last 400 readings taken while the barbell is level on the floor.
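The Change Tracker's four states and its two additional checks can be sketched as a small state machine. This is a minimal illustration, not the autoWT implementation: it assumes the barbell height is the image y-coordinate of the collar midpoints (so a smaller y means a higher bar), that the threshold sits a fixed, hypothetical `offset_px` above the mean of the last 400 level resting readings, and that the very first reading is used only for calibration.

```python
from collections import deque
from statistics import mean
from typing import Optional


class ChangeTracker:
    """Four-state barbell tracker around a threshold line (minimal sketch).

    Heights are image y-coordinates, so a smaller value means a higher barbell.
    """

    def __init__(self, offset_px: float = 40.0, history: int = 400, refractory_s: float = 1.5):
        self.offset_px = offset_px            # hypothetical gap between resting bar and line
        self.floor_y = deque(maxlen=history)  # last N level readings of the resting bar
        self.refractory_s = refractory_s      # ignore crossings this soon after an onset
        self.state = "same_below"
        self.last_onset_t: Optional[float] = None

    def threshold_y(self) -> Optional[float]:
        if not self.floor_y:
            return None
        return mean(self.floor_y) - self.offset_px

    def update(self, t: float, bar_y: float, bar_level: bool = True) -> str:
        threshold = self.threshold_y()
        if threshold is None:
            self.floor_y.append(bar_y)  # first reading only calibrates the line
            return self.state
        is_above = bar_y < threshold
        was_above = self.state in ("above", "same_above")
        if is_above == was_above:
            # No crossing: stay on the same side; refresh the floor estimate while resting level.
            self.state = "same_above" if is_above else "same_below"
            if not is_above and bar_level:
                self.floor_y.append(bar_y)
            return self.state
        if self.last_onset_t is not None and t - self.last_onset_t < self.refractory_s:
            # Crossing too soon after the previous onset (e.g. a bounce): ignore it.
            self.state = "same_above" if was_above else "same_below"
            return self.state
        # Genuine crossing: "above" marks a lift start onset, "below" a lift end onset.
        self.state = "above" if is_above else "below"
        self.last_onset_t = t
        return self.state


if __name__ == "__main__":
    tracker = ChangeTracker()
    samples = [(0.0, 500), (0.1, 500), (1.0, 450), (1.1, 300), (3.0, 500), (3.5, 440), (3.6, 500)]
    for t, y in samples:
        print(t, tracker.update(t, y))
```

In the demo sequence, the crossing at t = 3.5 s comes only 0.5 s after the end onset, so it is treated as a bounce and the tracker stays in `same_below`.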

3.4.2 Object Detector

The Object Detector is a fine-tuned Region-based Convolutional Neural Network (R-CNN) trained on a set of 1400 images of Olympic lifts. The images were manually annotated with two object classes: Collars and Humans. Collars are the inner part of the weightlifting barbell (see Figure 3(B4) for an example region of interest of the inner barbell collar). When both humans and collars are detected in the frame, additional checks are performed to ensure correct object detection, such as checking that the person is detected between the collars at the same height in the image frame. Then, if the barbell is below the change threshold line and the additional checks pass, the system switches to the Feature Tracker. Depending on the Lift Detector's overall state, the Object Detector reads input frames at a rate between 1 frame every 6 seconds and 2 frames per second. This low frame rate is due to the high computational demand of the R-CNN, which is why we use it in combination with a more traditional Feature Tracker system.
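The geometric plausibility check and the hand-over decision can be sketched as below. The paper states only that the person must be detected between the collars at the same height and that the Object Detector runs at between 1/6 and 2 frames per second; the box format, the exact form of the "same height" test (bar height within the person's box) and the mapping from detection state to frame rate are assumptions, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Box:
    """Axis-aligned detection box in image pixels (y grows downwards)."""
    x1: float
    y1: float
    x2: float
    y2: float

    @property
    def cx(self) -> float:
        return (self.x1 + self.x2) / 2

    @property
    def cy(self) -> float:
        return (self.y1 + self.y2) / 2


def person_between_collars(person: Box, left: Box, right: Box) -> bool:
    """Assumed check: person centred between the collars, bar height within the person's box."""
    lo, hi = sorted((left.cx, right.cx))
    bar_y = (left.cy + right.cy) / 2
    return lo < person.cx < hi and person.y1 <= bar_y <= person.y2


def next_component(person: Optional[Box], collars: Sequence[Box], bar_below_threshold: bool) -> str:
    """Hand over to the Feature Tracker only when both collars and a plausible lifter are found."""
    if person is None or len(collars) != 2:
        return "object_detector"
    if bar_below_threshold and person_between_collars(person, collars[0], collars[1]):
        return "feature_tracker"
    return "object_detector"


def detector_fps(person_detected: bool) -> float:
    """Assumed mapping onto the paper's range of 1/6 to 2 frames per second."""
    return 2.0 if person_detected else 1.0 / 6.0


if __name__ == "__main__":
    lifter = Box(100, 50, 200, 400)
    collars = [Box(40, 300, 60, 340), Box(240, 300, 260, 340)]
    print(next_component(lifter, collars, bar_below_threshold=True))
```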

3.4.3 Feature Tracker

Once the Object Detector passes on the barbell collar ROIs, the Feature Tracker takes over the stream, tracking each ROI at an increased frame rate of 10 frames per second. The Channel and Spatial Reliability Tracking (CSRT) feature tracker allows efficient barbell tracking but is prone to drift when only one side of the barbell moves, creating partial occlusion, or when the barbell accelerates very rapidly, creating motion blur and thereby losing the tracked features. To avoid drift, the Feature Tracker checks that the barbell is level and switches to the Object Detector when the barbell is uneven. To prevent the Feature Tracker from losing the collars due to barbell acceleration, we only use it up to the threshold line, beyond which the Object Detector takes over. This works because Olympic lifts have a slow initial acceleration from the floor; in this region the barbell never moves fast enough for the Feature Tracker to lose tracking.
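The two safeguards, a level check against drift and a hand-back at the threshold line, can be sketched as one decision function on the midpoints of the two tracked collar ROIs. The 5° tilt tolerance and the use of image coordinates with y growing downwards are assumptions, since the paper gives no numeric levelness criterion; the function names are hypothetical, and the CSRT tracker itself is not reproduced here.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in image pixels, y grows downwards


def bar_tilt_deg(left_mid: Point, right_mid: Point) -> float:
    """Angle of the line joining the two collar midpoints, relative to horizontal."""
    dx = abs(right_mid[0] - left_mid[0])
    dy = abs(right_mid[1] - left_mid[1])
    return math.degrees(math.atan2(dy, dx))


def should_hand_back(left_mid: Point, right_mid: Point, threshold_y: float,
                     max_tilt_deg: float = 5.0) -> bool:
    """Return True when the Object Detector should take over from the Feature Tracker."""
    if bar_tilt_deg(left_mid, right_mid) > max_tilt_deg:
        return True  # uneven bar: likely partial occlusion and tracker drift
    bar_y = (left_mid[1] + right_mid[1]) / 2
    return bar_y <= threshold_y  # bar has reached the threshold line


if __name__ == "__main__":
    print(should_hand_back((50, 500), (250, 500), threshold_y=460))  # level bar on the floor
    print(should_hand_back((50, 500), (250, 480), threshold_y=460))  # one side lifted
```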

Figure 4: Snatch pull height (SPH) metric. Y-axis markerless pose estimation coordinates after filtering, with the SPH metric key points added.

3.5 Metric Extraction Module

The onsets identified by the Lift Detector are used to extract high-framerate clips. The Metric Extraction module then uses markerless pose data extracted from the clips to generate the defined metrics. Following discussions with the Olympic weightlifting coaching staff supporting the AI Coaching Assistant project, several metrics were identified as potentially helpful for tracking long-term performance. One such metric is snatch pull height (SPH), the distance the barbell travels between the start of the lift and the maximum height it reaches following the last pull of the snatch (see Figure 4); when examined for maximal lifts, the metric can indicate snatch lift performance efficiency. Additionally, we propose that SPH can help analyse athletes' ability to adjust to weight increases during multiple-repetition sets. The following is an example of an analytical approach for extracting the key points of interest from the markerless pose data needed to generate the snatch pull height metric.

3.5.1 Extracting Key Points for Snatch Pull Height Metric

To calculate the metric, we primarily use the y-axis data for wrist movement. First, the pose data is filtered to remove high-frequency noise using a rolling average filter with a window size of 10, which is 1/6 of a second at a capture frame rate of 60 fps. We find two key points to calculate SPH: the start of the snatch movement and the maximum pull height. The difference between these two points gives the SPH metric (see Figure 4).
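The filtering step and the final subtraction can be sketched in plain Python. This assumes a trailing moving average whose first nine outputs average over fewer samples (the paper does not say how the filter's edges are handled) and a height signal oriented so that larger values mean higher; the SPH value is in whatever units the pose coordinates use, since no conversion to metres is described. Function names are hypothetical.

```python
from typing import List, Sequence


def rolling_mean(signal: Sequence[float], window: int = 10) -> List[float]:
    """Trailing moving average; the first window-1 outputs use the samples available so far."""
    out: List[float] = []
    acc = 0.0
    for i, value in enumerate(signal):
        acc += value
        if i >= window:
            acc -= signal[i - window]  # drop the sample that has left the window
        out.append(acc / min(i + 1, window))
    return out


def snatch_pull_height(height: Sequence[float], start_idx: int, max_pull_idx: int) -> float:
    """SPH as the height difference between the maximum pull point and the start point."""
    return height[max_pull_idx] - height[start_idx]


if __name__ == "__main__":
    fps = 60
    window = fps // 6  # 10 frames, i.e. 1/6 s at 60 fps
    smoothed = rolling_mean([0.2] * 30 + [0.2 + 0.02 * k for k in range(30)], window)
    print(snatch_pull_height(smoothed, start_idx=29, max_pull_idx=len(smoothed) - 1))
```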

3.5.2 Finding Maximum Pull Height Point

To determine the point at which the wrist reaches its peak height during the pull, we locate the first local maximum in the upward movement of the wrist along the y-axis that is greater than the average wrist height with the barbell on the floor plus an additional 20%.
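A direct reading of this rule is sketched below. It assumes a wrist height signal in which larger values mean higher and zero lies at the bottom of the frame (for example, one minus the normalised image y), so that a 20% margin on the floor height is meaningful; how autoWT chooses the frames used for the floor average is not stated, so `floor_mean` is passed in. The function name is hypothetical.

```python
from typing import Optional, Sequence


def first_peak_above_floor(height: Sequence[float], floor_mean: float,
                           margin: float = 0.20) -> Optional[int]:
    """Index of the first local maximum exceeding the floor height by more than `margin`."""
    limit = floor_mean * (1.0 + margin)
    for i in range(1, len(height) - 1):
        # Strict rise before the sample, no rise after it: a local maximum (plateaus allowed).
        if height[i] > limit and height[i - 1] < height[i] >= height[i + 1]:
            return i
    return None


if __name__ == "__main__":
    wrist = [0.2, 0.2, 0.21, 0.2, 0.3, 0.5, 0.7, 0.65, 0.6, 0.8]
    print(first_peak_above_floor(wrist, floor_mean=0.2))
```

The small bump at index 2 is ignored because it does not clear the 20% margin, so the first qualifying peak is the one at index 6.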

3.5.3 Finding Start Acceleration Point

The onsets provided by the Lift Detector give approximate start and end positions for the lift movements. However, our key points must be exact, as the start acceleration point is essential for calculating many potential performance metrics. This task is not trivial. We cannot simply use a single signal, e.g. the y-axis points for wrist movement, because the athlete's body is not fully rigid: different body landmarks start moving upwards at slightly different times before the barbell leaves the ground. At the same time, just before the barbell starts accelerating from the floor, the athlete remains steady for a fraction of a second as force transfers into the barbell, before the combined system starts moving upwards. By comparing the y-axis movement across multiple landmarks, we can find this steady period and define the start acceleration point as the start of this period using Algorithm 1. The algorithm first filters the pose data to include only the specified y-axis signals, from the beginning of the lift recording to the maximum pull index. Then, for each signal, standard deviations over a rolling window are calculated, and the mean of these deviations is used to calculate a threshold value. Finally, to determine the start acceleration point, all signals within the defined window are compared to the threshold; if the difference between the start and end values of the window is below the threshold for every signal, a steady period is identified. If no steady period is found, the window size is reduced and the process is repeated. As the steady period lasts only a fraction of a second, we selected a window size of 15 or below for our system. Given that our system's pose data is extracted from recordings at 60 fps, the window spans at most 15 frames, i.e. a quarter of a second or less.
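The steps of Algorithm 1 translate into the following runnable sketch. Two details are assumptions: the window endpoints are compared by absolute difference (the pseudocode writes a signed difference), and the rolling standard deviation is the population form (`statistics.pstdev`). The default signal names mirror the examples in Algorithm 1, and `find_start_acceleration` is a hypothetical name, not autoWT's code.

```python
from statistics import mean, pstdev
from typing import Dict, Optional, Sequence


def find_start_acceleration(
    pose_y: Dict[str, Sequence[float]],
    max_pull_idx: int,
    signals: Sequence[str] = ("wrist_y", "hip_y", "ankle_y"),
    window_sizes: Sequence[int] = tuple(range(15, 4, -1)),  # W = {15, 14, ..., 5}
) -> Optional[int]:
    """Return the index where the steady pre-pull period starts, or None if none is found."""
    # D'': signals of interest only, truncated at the maximum pull index (inclusive).
    data = [list(pose_y[s][: max_pull_idx + 1]) for s in signals]
    n = min(len(v) for v in data)
    for w in window_sizes:
        if n <= w:
            continue  # too few samples for this window size
        # Tolerance: mean rolling std per signal, averaged over signals, halved.
        per_signal = [mean(pstdev(v[t:t + w]) for t in range(n - w + 1)) for v in data]
        tau = mean(per_signal) / 2
        # Scan backwards from the maximum pull for a window whose endpoints barely differ
        # in every signal (absolute difference is an assumption, see the lead-in).
        for i in range(n - 1, w - 1, -1):
            if all(abs(v[i] - v[i - w]) < tau for v in data):
                return i - w
    return None


if __name__ == "__main__":
    # Synthetic heights (larger = higher): lowering into the setup, a steady hold, then the pull.
    wrist = [0.30 - 0.01 * i for i in range(10)] + [0.20] * 20 + [0.20 + 0.02 * k for k in range(1, 21)]
    pose = {"wrist_y": wrist, "hip_y": [0.5 * v + 0.3 for v in wrist], "ankle_y": [0.05] * len(wrist)}
    print(find_start_acceleration(pose, max_pull_idx=len(wrist) - 1))
```

Returning from inside the loops matches the two `break` statements in the pseudocode: the largest window size that finds a steady period determines the result.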

4 EVALUATION

A data collection study was conducted to provide data, first, to develop and test the Lift Detector module and, second, to test the repeatability of the extracted metrics across and within capture sessions using the markerless pose model integrated into the Metric Extraction module.

We captured full training sessions, including Olympic and assistance lifts, using the base autoWT system of two cameras and a heart rate monitor. After each capture session, the data was annotated manually with the start and end timestamps of each executed Olympic lift and the start and end timestamps of each executed set of assistance movements. Additionally, user data was logged, including set and repetition numbers, weight on the barbell, and additional coach commentary. Data was collected using the following hardware:

• Controller (MobileApp): Samsung Galaxy Tab 6A (Android 8.1)

• Workers: 2 × single-board computers, Jetson Xavier NX (Ubuntu 22.04 JetPack)

• Cameras: 2 × web cameras, Logitech StreamCam

• Heart Rate Monitor: Polar H10

Eight athletes (7 male, 1 female) participated in a total of 21 training sessions; each participant completed between 1 and 6 full sessions, providing 381 snatch and 190 clean & jerk recordings. The following sections explore insights gained from this data.

4.1 Lift Detector Testing

Of the twenty-one sessions, four full capture sessions, each featuring a different athlete performing Olympic lifts, were set aside for testing, separate from the data used to train the Lift Detector's Object Detector. This test set comprises 4 hours and 23 minutes of complete recordings, with 268 manually annotated timestamps marking the start and end of each lift.

Algorithm 1: Find start acceleration algorithm.

Input: pose data $D$ (all 33 $y$ points), maximum pull height index $M$

Output: index start_acc_point

Initialize parameters:

Define signals_of_interest $\{s_1, s_2, \ldots, s_m\} \subseteq \{\texttt{"wrist\_y"}, \texttt{"hip\_y"}, \texttt{"ankle\_y"}, \ldots\}$;

Filter $D$ to $D'$ with the signals of interest;

Truncate $D'$ at $M$, resulting in $D''$;

Let $n$ be the number of data points in $D''$;

Iterate over window sizes:

Define $W = \{15, 14, \ldots, 5\}$;

foreach window_size $w \in W$ do

Calculate tolerance:

foreach signal $s \in \{s_1, s_2, \ldots, s_m\}$ do

Compute $\sigma_{t,s}$ over a rolling window of size $w$ for $t \in \{1, 2, \ldots, n - w + 1\}$ in $D''$;

Calculate mean standard deviation: $\bar{\sigma}_{w,s} = \frac{1}{n - w + 1} \sum_{t=1}^{n - w + 1} \sigma_{t,s}$

end

Calculate overall mean: $\bar{\sigma}_w = \frac{1}{m} \sum_{s \in \{s_1, s_2, \ldots, s_m\}} \bar{\sigma}_{w,s}$

Define tolerance $\tau = \bar{\sigma}_w / 2$;

Check moving averages:

for $i = n$ down to $w$ do

if $\forall s \in \{s_1, s_2, \ldots, s_m\},\ D''_{i,s} - D''_{i-w,s} < \tau$ then

Set start_acc_point to $i - w$;

break;

end

end

if start_acc_point is set then

break;

end

end
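Algorithm 1 can be sketched in Python as follows. This is a minimal, hypothetical implementation, not the authors' code. It assumes that the pose data is a mapping from signal name (e.g. "wrist_y") to a per-frame list of y values, uses 0-based indices, treats the truncation at $M$ as inclusive, and uses the sample standard deviation (`statistics.stdev`) for the rolling windows. It also assumes the steady-period test compares the absolute difference $|D''_{i,s} - D''_{i-w,s}|$ with $\tau$, so that only windows whose endpoints barely differ qualify; the function name `find_start_acceleration` is illustrative.

```python
import statistics
from typing import Mapping, Optional, Sequence


def find_start_acceleration(
    pose: Mapping[str, Sequence[float]],
    max_pull_idx: int,
    signals: Sequence[str] = ("wrist_y", "hip_y", "ankle_y"),
    window_sizes: Sequence[int] = range(15, 4, -1),  # W = {15, 14, ..., 5}
) -> Optional[int]:
    """Hypothetical sketch of Algorithm 1 using 0-based frame indices."""
    # Keep only the signals of interest and truncate at M (assumed inclusive).
    data = {s: list(pose[s][: max_pull_idx + 1]) for s in signals}
    n = len(data[signals[0]])
    for w in window_sizes:
        if n <= w:
            continue  # not enough frames for this window size
        # Tolerance: mean rolling (sample) standard deviation per signal,
        # averaged over all signals and halved.
        per_signal = []
        for s in signals:
            x = data[s]
            rolling_sd = [statistics.stdev(x[t : t + w]) for t in range(n - w + 1)]
            per_signal.append(statistics.mean(rolling_sd))
        tau = statistics.mean(per_signal) / 2
        # Scan backwards from M for a window whose endpoints barely differ.
        # Assumption: absolute differences are compared with tau.
        for i in range(n - 1, w - 1, -1):
            if all(abs(data[s][i] - data[s][i - w]) < tau for s in signals):
                return i - w
    return None  # no steady period found for any window size


# Synthetic wrist trace: flat for 100 frames, then rising (y decreasing).
flat_then_rise = [500.0] * 100 + [500.0 - 10 * k for k in range(1, 21)]
print(find_start_acceleration({"wrist_y": flat_then_rise}, 119, signals=("wrist_y",)))
```

For this synthetic trace, the sketch returns 84, the start of the last flat 15-frame window before the rise; a trace that rises steadily throughout has no steady period and yields `None`.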

4.1.1 Lift Detector Test Procedure

The ground truth timestamps were annotated to in-

clude the whole lift, with the start timestamp being

in an interval of ±2 seconds of the actual start of the

lift and the end being recorded as ±2 seconds of the

autoWT: A Semi-Automated ML-Based Movement Tracking System for Performance Tracking and Analysis in Olympic Weightlifting

67

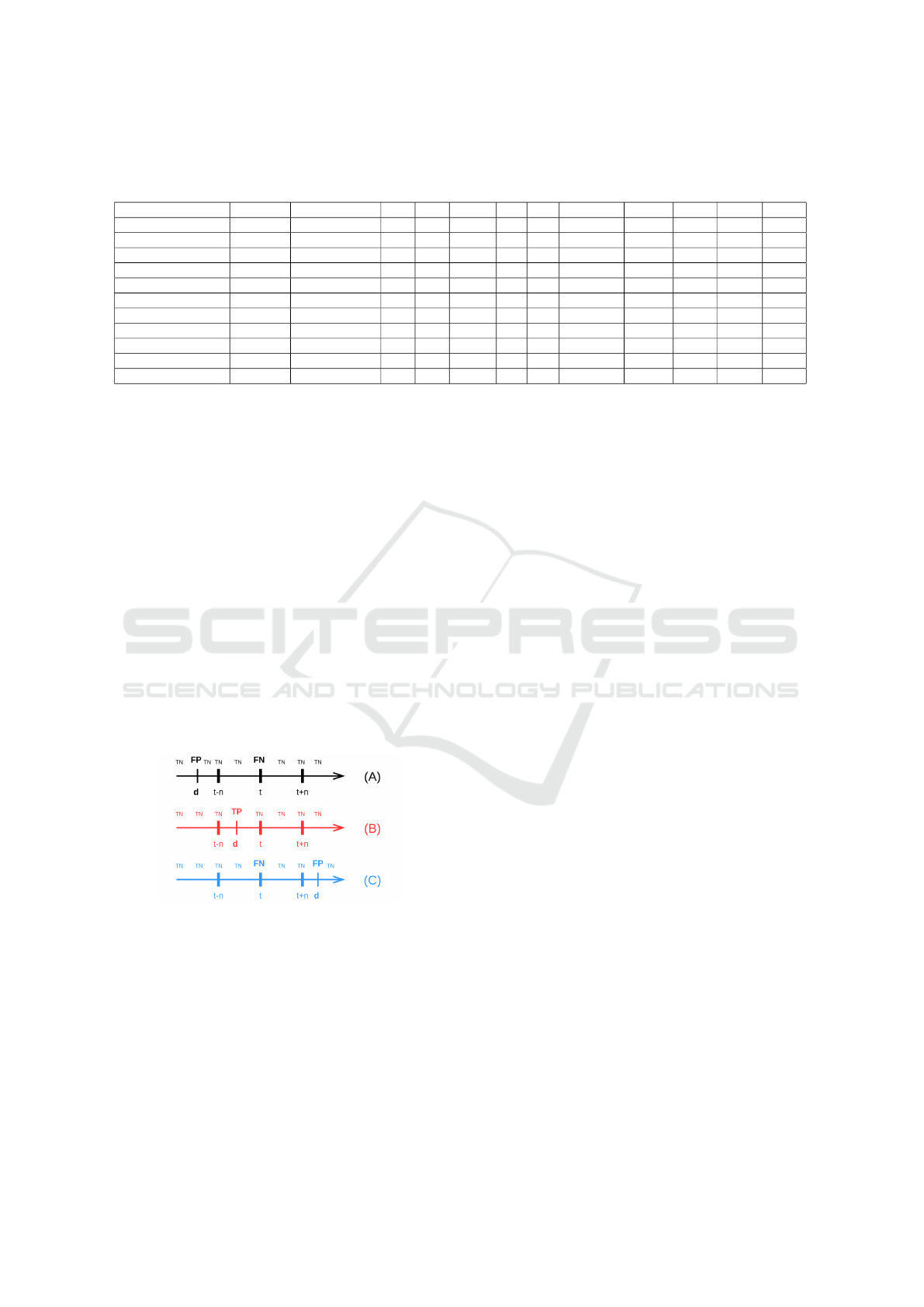

Table 1: Lift Detector Test Results. LD detections - total number of all detections by the Lift Detector. AP - Actual Pos-

itives, TP - True Positives, TN - True Negatives, FP - False Positives, FN - False Negatives, MCC - Matthew’s Correlation

Coefficient, JCC - Jaccard Coefficient.

Participant Exercise LD detections AP TP TN FP FN Precision Recall F1 MCC JCC

p1 cnj 27 28 27 1783 0 1 1.000 0.964 0.982 0.982 0.964

p1 snatch 70 68 60 1918 10 8 0.857 0.882 0.870 0.865 0.769

p14 cnj 24 22 22 2275 2 0 0.917 1.000 0.957 0.957 0.917

p14 snatch 42 40 37 2468 5 3 0.881 0.925 0.902 0.901 0.822

p6 cnj 21 26 21 1825 0 5 1.000 0.808 0.894 0.897 0.808

p6 snatch 33 40 30 2040 3 10 0.909 0.750 0.822 0.823 0.698

p9 cnj 23 20 17 1720 6 3 0.739 0.850 0.791 0.790 0.654

p9 snatch 24 24 23 1432 1 1 0.958 0.958 0.958 0.958 0.920

TOTAL_SNATCH snatch 169 172 150 7858 19 22 0.888 0.872 0.880 0.877 0.785

TOTAL_CNJ cnj 95 96 87 7603 8 9 0.916 0.906 0.911 0.910 0.837

TOTAL ALL 264 268 237 15461 27 31 0.898 0.884 0.891 0.889 0.803

barbell being dropped back on the floor. Yet the Lift

Detector detects onsets once the barbell moves past

a threshold above or below the barbell. We consider

that the detector detects an onset correctly if the cor-

rect type of onset (start or end) is detected within ±2

seconds of the annotated onset. We treat each record-

ing as a time series of length equal to the number of

seconds (assuming one frame/second). This means

that a recording of length N with an onset at moment

t and a time window around the onset from t − n to

t + n will have N data points;

• Time window within which we accept one posi-

tive data example: t − n....t + n

• Negative data examples: 0...t − n − 1 and t + n +

1...N

For each recording and the lift detector identifying an

onset at some moment d, we will have N data points

in time, as seen in Figure 5.

Figure 5: Lift detector onset detection. A - (d < t − n); B -

(t − n ≤ d ≤ t + n); C - (d > t + n).

For each recording, the counts of the different categories then simplify to:

$$
\begin{cases}
TN = N - 1,\; TP = 1,\; FP = 0,\; FN = 0, & \text{if } t - n \le d \le t + n,\\
TN = N - 2,\; TP = 0,\; FP = 1,\; FN = 1, & \text{otherwise.}
\end{cases}
$$
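The per-recording counting rule can be written as a small helper. This is a sketch: `Counts`, `count_recording` and `total_counts` are hypothetical names, and the default `n = 2` assumes the ±2-second tolerance window at one data point per second described above.

```python
from typing import Iterable, NamedTuple, Tuple


class Counts(NamedTuple):
    tp: int
    tn: int
    fp: int
    fn: int


def count_recording(N: int, t: int, d: int, n: int = 2) -> Counts:
    """Confusion counts for one annotated onset t and one detection d."""
    if t - n <= d <= t + n:
        # Detection inside the tolerance window: one hit, everything else negative.
        return Counts(tp=1, tn=N - 1, fp=0, fn=0)
    # Detection outside the window: one false alarm plus one missed onset.
    return Counts(tp=0, tn=N - 2, fp=1, fn=1)


def total_counts(cases: Iterable[Tuple[int, int, int]], n: int = 2) -> Counts:
    """Sum per-recording counts over (N, t, d) triples."""
    tp = tn = fp = fn = 0
    for N, t, d in cases:
        c = count_recording(N, t, d, n)
        tp, tn, fp, fn = tp + c.tp, tn + c.tn, fp + c.fp, fn + c.fn
    return Counts(tp, tn, fp, fn)
```

Summing these per-recording counts over all onsets gives the TP, TN, FP and FN columns used in Table 1.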

4.1.2 Lift Detector Test Findings

We used five metrics to measure performance: precision, recall, F1 score, the Matthews correlation coefficient (MCC) and the Jaccard coefficient (JCC). Because True Negatives vastly outnumber the other categories, accuracy would be misleadingly high. Precision, recall, F1 and the Jaccard coefficient ignore True Negatives altogether, while the MCC uses all four confusion-matrix counts and remains informative under this class imbalance. Table 1 breaks down the results by participant for the snatch and the clean & jerk. The Lift Detector performs well overall, with total values across both Olympic lifts of 0.898 for precision, 0.884 for recall, 0.891 for the F1 score, 0.889 for the MCC and 0.803 for the Jaccard coefficient. These values indicate that precision and recall are balanced, that predicted and actual classifications are strongly correlated, and that predicted and actual positives overlap substantially. Performance varies between participants, however: the lowest recall was for p6's snatch (0.750) and the lowest precision for p9's clean & jerk (0.739). Overall, the Lift Detector reliably identifies lift onsets while keeping the rates of false positives and false negatives low.
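To make the effect of class imbalance concrete, the sketch below computes the five metrics, plus plain accuracy for comparison, from confusion counts using the standard formulas. The function name `detection_metrics` is hypothetical; the input is the "Total / all" row of Table 1.

```python
import math


def detection_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Standard binary-classification metrics from confusion counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)  # harmonic mean of precision and recall
    jaccard = tp / (tp + fp + fn)     # TN does not appear
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )                                 # uses all four counts
    accuracy = (tp + tn) / (tp + tn + fp + fn)  # dominated by TN here
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "jaccard": jaccard,
        "mcc": mcc,
        "accuracy": accuracy,
    }


m = detection_metrics(tp=237, tn=15461, fp=27, fn=31)
print({k: round(v, 3) for k, v in m.items()})
```

Rounded to three decimals, this reproduces the table's totals (precision 0.898, recall 0.884, F1 0.891, JCC 0.803, MCC 0.889), while accuracy comes out at 0.996, inflated by the large number of True Negatives.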

4.2 Metric Extractor Testing

The autoWT system relies on markerless pose estimation as the key technology enabling performance data collection. Because the system is intended for long-term data collection through repeated capture sessions, the underlying measurement system, the markerless pose model, must deliver repeatable measurements: under unchanged conditions, it must produce the same results. Building on this repeatability assumption, the autoWT system should also be able to detect deviations in the extracted key point data when the camera setup has been altered.


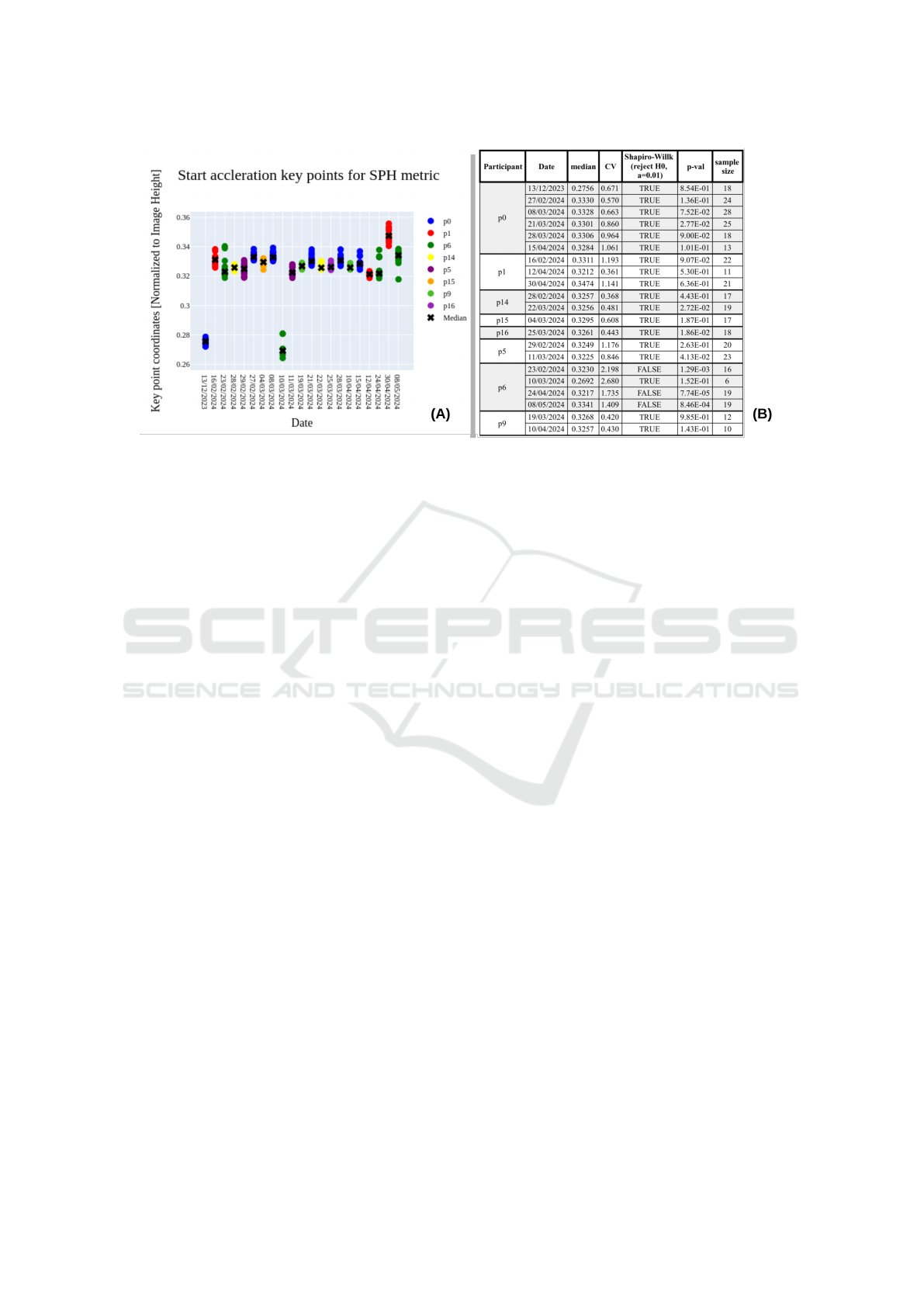

Figure 6: Start acceleration key points across all capture sessions of the data collection study. A - distributions of the key points in each capture session. B - table listing, for each capture session, the median of the key point distribution, the coefficient of variation (CV), the Shapiro-Wilk normality test result and the sample size.

4.2.1 Start Acceleration Point Distribution and Repeatability

We examine the start acceleration key points to test data repeatability. Provided that the front camera is not moved, the key point extracted for the same participant across capture sessions should yield consistent y-axis landmark values that follow a normal distribution.

We use the Shapiro-Wilk test to assess the normality of the start acceleration point distribution in each capture session. Before testing, extreme outliers (values more than three standard deviations from the mean) were removed (5 of 384 key points). The test did not reject normality for 18 of the 21 sessions, i.e., these distributions are consistent with a Gaussian. The test results are shown in Figure 6 - B.

Since most distributions are consistent with normality, we can compare them using measures of central tendency, such as the median, and of dispersion, such as the coefficient of variation (the standard deviation divided by the mean).
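The per-session preprocessing and summary statistics can be sketched with Python's standard `statistics` module. This is an illustrative sketch under stated assumptions: outliers are screened within each session using the sample standard deviation, and `remove_extreme_outliers` and `session_summary` are hypothetical names. The normality test itself is left out to keep the example dependency-free; SciPy's `scipy.stats.shapiro` is one readily available implementation of the Shapiro-Wilk test.

```python
import statistics
from typing import Dict, List, Sequence


def remove_extreme_outliers(values: Sequence[float], k: float = 3.0) -> List[float]:
    """Drop values more than k sample standard deviations from the mean."""
    mu = statistics.mean(values)
    sd = statistics.stdev(values)
    if sd == 0:
        return list(values)  # all values identical: nothing to drop
    return [v for v in values if abs(v - mu) <= k * sd]


def session_summary(values: Sequence[float]) -> Dict[str, float]:
    """Median and coefficient of variation after outlier removal."""
    kept = remove_extreme_outliers(values)
    return {
        "n": len(kept),
        "median": statistics.median(kept),
        "cv": statistics.stdev(kept) / statistics.mean(kept),  # sd / mean
    }
```

For example, a session of twenty y values alternating between 99 and 101 plus a single stray value of 200 keeps the twenty regular points, with a median of 100 and a CV of about 0.01.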

Figure 6 offers further indications of data repeatability. Because these key points are based on wrist detections from the markerless pose model, their values differ slightly between athletes. Nevertheless, for participants with multiple sessions (e.g., p0, p5, p14), the medians and spreads of the data are similar across sessions. This is also reflected in the coefficients of variation in Figure 6 - B and in the shapes of the distributions in Figure 6 - A.

4.2.2 Detecting Camera Offset Using Start Acceleration Points

We know that the front camera used for data collection was not moved during any individual capture session. We therefore expect the wrist points to follow a normal distribution within each captured training session, which we have shown for most sessions.

When setting up the autoWT system for data collection, the weightlifting gym staff and regular members were instructed not to alter the setup. However, the cameras were adjusted during the study period because of space restrictions: the front camera stood near a weight stand and was moved by accident. Because the camera mounts are fixed in place, the cameras can only be moved up, down or side to side. Such a movement should appear in the data as an offset in the session following the alteration.

By reviewing the session key point distributions and their medians in Figure 6 - A, we can see that the camera was moved three times during the study period: following the first session on 13/12/23, and following the sessions on 08/03/24 and 24/04/24. Each time, the tilted camera was subsequently readjusted, but the new height did not exactly reproduce the previous viewing angle.

Even when accounting for slight variations in the

athlete’s setup position before the lift, we found that

the captured key points are normally distributed and

can be acquired with high repeatability across re-

peated capture sessions. Lastly, we observed that de-

viations for the median of the start acceleration point

distributions can be used to determine if the camera

autoWT: A Semi-Automated ML-Based Movement Tracking System for Performance Tracking and Analysis in Olympic Weightlifting

69

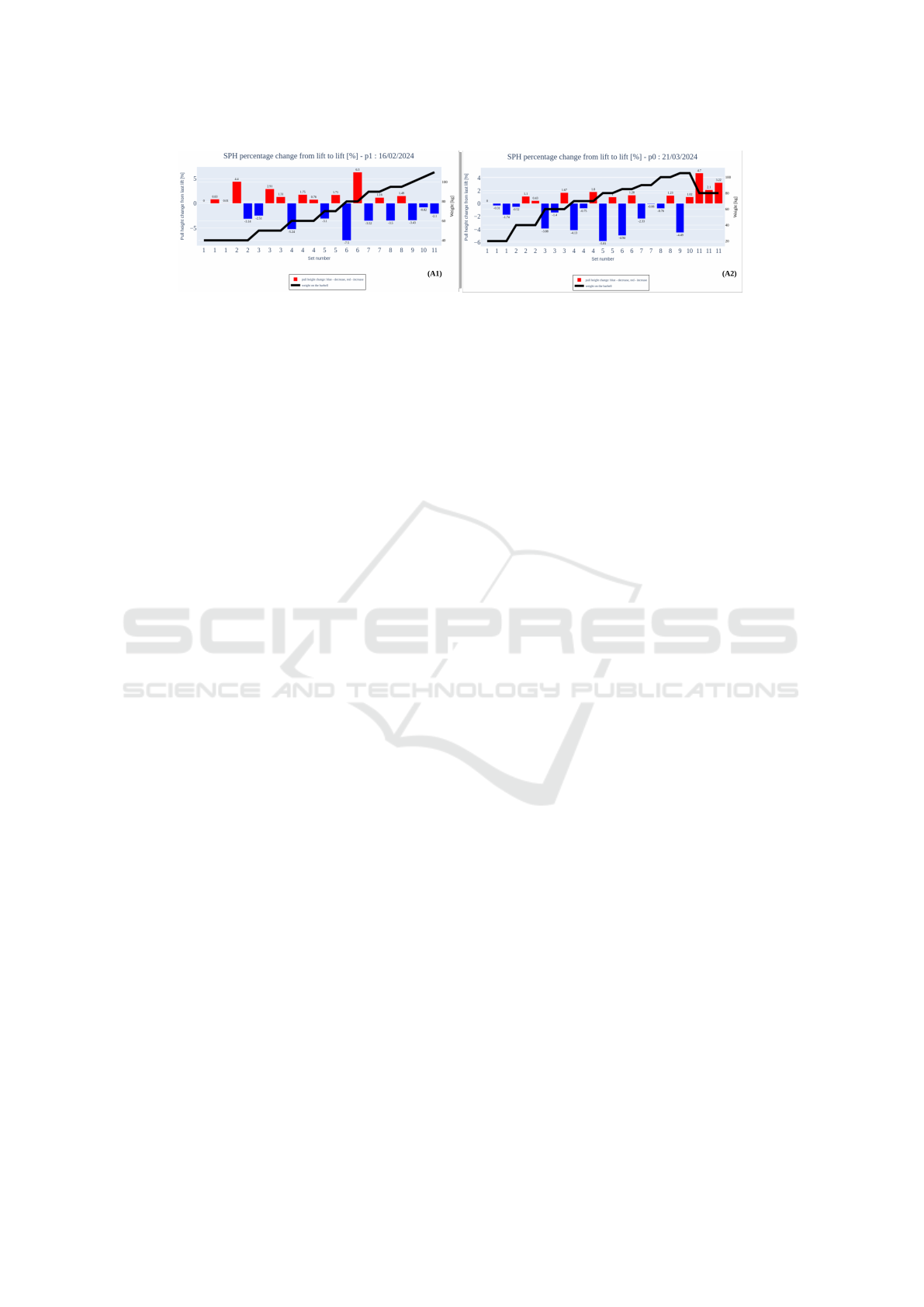

Figure 7: A1 and A2 - Examples of the Snatch Pull Height metric visualised as a percentage change from lift to lift across a

full snatch capture session for participants p0 and p1. Figures display the snatch pull height change as a bar chart with pull

height increase in blue and decrease in red; the weight on the barbell for each repetition and the set is overlaid as a black line

with the y-axis markings on the right side.

position has been altered, therefore giving a method

to flag setup changes and help improve the rigour of

the data capture process.
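The median-based check for camera movement can be sketched as a comparison of consecutive session medians. This is a hypothetical illustration, not the procedure used in the study: it assumes the sessions are in chronological order, that the values are start acceleration y coordinates in pixels, and that between-athlete differences in the median are small compared with a camera-induced offset. The threshold `max_shift` is an assumed, user-chosen parameter.

```python
import statistics
from typing import List, Sequence


def flag_camera_shifts(
    sessions: Sequence[Sequence[float]], max_shift: float
) -> List[int]:
    """Return indices of sessions whose median y value jumps by more
    than max_shift (hypothetical pixel threshold) relative to the
    preceding session."""
    medians = [statistics.median(s) for s in sessions]
    return [
        i
        for i in range(1, len(medians))
        if abs(medians[i] - medians[i - 1]) > max_shift
    ]
```

With session medians of 100, 101, 100, 120 and 121 pixels and `max_shift=5`, only the fourth session (index 3) is flagged, marking the point at which the setup should be checked.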

5 CONCLUSIONS

This paper presents autoWT, a system for enhancing research and coaching practice in Olympic weightlifting training through automated, long-term performance tracking. The distributed architecture efficiently manages multiple high-bandwidth camera data streams and supports low-specification control devices for processing and analysing Olympic weightlifting movements. Key features such as multi-camera capture, user data logging and heart rate monitoring have been carefully selected to build a versatile, powerful, yet easily deployable system.

Our Lift Detector module automates Olympic lift onset detection with high precision (0.898) and recall (0.884) across snatch and clean & jerk movements, providing reliable automated clip extraction for further analysis. The Metric Extraction module, exemplified by the snatch pull height (SPH) metric, showcases the system's ability to provide meaningful performance data.

Furthermore, our analysis of start acceleration key points across multiple sessions indicates that markerless pose estimation is reliable for long-term performance tracking, with high repeatability and, for most sessions, normally distributed data. We have also shown that deviations in the data distribution allow autoWT to flag camera position changes, enhancing data capture rigour and helping to ensure consistent measurement conditions.

Despite these advancements, the current study has limitations: not all aspects of the OW training process have been integrated into the autoWT system. Future work includes improving the Lift Detector module to automate action recognition for periodic assistance movements and to segment individual repetitions. A comprehensive validation of the full system will also require a larger, more diverse dataset with more participants.

Currently, autoWT computes a single performance metric: the snatch pull height. The range of extracted metrics will be expanded to cover the clean & jerk and assistance movements, enabling performance tracking across the entire OW training process. The planned metrics include clean pull height, jerk displacement and jerk velocity, each of which is expected to reflect changes in lift efficiency.

Currently, the user interfaces of the controller app and the WebApp are mainly geared towards operator control of the system. In the future, the UI will be developed further, not only to allow control by end users but also to provide them with instant performance feedback. Figure 7 shows a proposed version of SPH feedback for observing how performance adjusts to weight increases across multiple repetitions. This visualisation shows how SPH changes during a training session and can inform the coach about different performance patterns.
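The lift-to-lift percentage change plotted in Figure 7 can be sketched as follows. This is a hypothetical helper, not the autoWT implementation: it assumes one SPH value per lift in chronological order, computes each change relative to the previous lift, and maps increases to blue and decreases to red as in the figure (treating zero change as blue is an added assumption).

```python
from typing import List, Sequence, Tuple


def sph_percent_change(sph: Sequence[float]) -> List[Tuple[float, str]]:
    """Lift-to-lift percentage change in snatch pull height, paired with
    the bar colour convention of Figure 7 (blue = increase, red = decrease)."""
    out = []
    for prev, curr in zip(sph, sph[1:]):
        pct = (curr - prev) / prev * 100.0  # change relative to the previous lift
        out.append((pct, "blue" if pct >= 0 else "red"))  # zero treated as blue (assumption)
    return out
```

For three hypothetical SPH values of 1.00, 1.05 and 0.98, the sketch yields changes of about +5.0% (blue) and -6.7% (red).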

In Figure 7, we draw attention to the changes from set to set. Both athletes use comparatively light weights for the first two sets, which offer too little resistance for the weight to be controlled precisely. From set 3 onwards, however, two different patterns of adjusting to the increased weight become apparent. Participant 0 consistently shows a larger drop in pull height when the weight increases, followed by only a slight increase over the subsequent repetitions. Participant 1 also shows a decrease in pull height when the weight increases, but pull height then rises with every consecutive repetition. The overall range of relative change is similar for both athletes. However, Participant 1's ability to increase pull height in every following set is consistent with this athlete's higher overall strength base compared with Participant 0.

The autoWT system will be used to collect data for longitudinal studies investigating the effect of providing short-term and long-term feedback to users based on the identified metrics and data visualisations. Notably, the data from these studies will serve to develop performance forecasting models that integrate data from multiple sensors, including the combination of heart rate data and performance metrics acquired from the camera sensors. By addressing these areas, the autoWT system can further contribute to performance optimization in Olympic weightlifting and serve as an example for long-term performance research on other complex sports movements.

In conclusion, the autoWT system offers a promising approach to objective, repeatable, long-term performance tracking. The system has the potential to transform research and coaching practice, opening new avenues for future performance optimization in Olympic weightlifting.

ACKNOWLEDGEMENTS

This research was funded and supported by the EPSRC's DTP, Grant EP/T518128/1, and the industrial partner Gymshark. Additional thanks to Gian Singh Cheema and Warley Weightlifting Club.

REFERENCES

Three-dimensional monitoring of weightlifting for computer assisted training. In VRIC, Laval, France.

AN, V. (1978). Weightlifting. International Weightlifting Federation, Budapest.

Arandjelović, O. (2017). Computer-Aided Parameter Selection for Resistance Exercise Using Machine Vision-Based Capability Profile Estimation. Augmented Human Research, 2(1):4.

Bazarevsky, V., Grishchenko, I., Raveendran, K., Zhu, T., Zhang, F., and Grundmann, M. (2020). BlazePose: On-device Real-time Body Pose tracking. arXiv:2006.10204 [cs].

Bolarinwa, D., Qazi, N., and Ghazanfar, M. (2023). Shifting the Weight: Applications of AI in Olympic Weightlifting. In 2023 IEEE 28th (PRDC), pages 319–326. ISSN: 2473-3105.

Destro, M. (2024). materight/RepNet-pytorch. Original date: 2023-02-28.

Garhammer, J. and Newton, H. (2013). Applied Video Analysis for Coaches: Weightlifting Examples. International Journal of Sports Science & Coaching, 8(3):581–594.

He, Q., Li, W., Tang, W., and Xu, B. (2023). Recognition to weightlifting postures using convolutional neural networks with evaluation mechanism. Measurement and Control, page 00202940231215378.

Host, K. and Ivašić-Kos, M. (2022). An overview of Human Action Recognition in sports based on Computer Vision. Heliyon, 8(6):e09633.

Hsu, C.-T., Ho, W.-H., and Chen, J.-S. (2019). High Efficient Weightlifting Barbell Tracking Algorithm Based on Diamond Search Strategy. In Arkusz, K., Będziński, R., Klekiel, T., and Piszczatowski, S., editors, Biomechanics in Medicine and Biology, pages 252–262, Cham. Springer International Publishing.

Hsu, C.-T., Ho, W.-H., Tsai, W.-I., and Lin, Y.-C. (2018). Realtime weightlifting barbell trajectory extraction and performance analysis. Physical Education Journal, 51(1):73. ISSN: 1024-7297.

Jian, M., Zhang, S., Wu, L., Zhang, S., Wang, X., and He, Y. (2019). Deep key frame extraction for sport training. Neurocomputing, 328:147–156.

Lin, C.-Y. and Jian, K.-C. (2022). A real-time algorithm for weight training detection and correction. Soft Computing, 26(10):4727–4739.

Mroz, S., Baddour, N., McGuirk, C., Juneau, P., Tu, A., Cheung, K., and Lemaire, E. (2021). Comparing the Quality of Human Pose Estimation with BlazePose or OpenPose. In 2021 4th International Conference on Bio-Engineering for Smart Technologies (BioSMART), pages 1–4.

Needham, L., Evans, M., Cosker, D. P., Wade, L., McGuigan, P. M., Bilzon, J. L., and Colyer, S. L. (2021). The accuracy of several pose estimation methods for 3D joint centre localisation. Scientific Reports, 11(1):20673.

Pan, S. (2022). A Method of Key Posture Detection and Motion Recognition in Sports Based on Deep Learning. Mobile Information Systems, 2022:e5168898.

Rethinam, P., Manoharan, S., Kirupakaran, A. M., Srinivasan, R., Hegde, R. S., and Srinivasan, B. (2023). Olympic Weightlifters' Performance Assessment Module Using Computer Vision. In 2023 IEEE International Workshop on Sport, Technology and Research (STAR), pages 8–12.

Yoshikawa, F., Kobayashi, T., Watanabe, K., Katsuyoshi, S., and Otsu, N. (2010). Start and End Point Detection of Weightlifting Motion using CHLAC and MRA. In B-Interface 2011, pages 44–50, Valencia, Spain. SciTePress.
