Applying a Systematic Approach to Design Human-Robot Cooperation in Dynamic Environments

Sridath Tula (1,2), Marie-Pierre Pacaux-Lemoine (1), Emmanuelle Grislin-Le Strugeon (1,3), Anna Ma-Wyatt (2,4) and Jean-Philippe Diguet (2)

(1) UPHF, CNRS, UMR 8201-LAMIH, Valenciennes, France
(2) CNRS, IRL 2010 CROSSING, Adelaide, Australia
(3) INSA Hauts-de-France, Valenciennes, France
(4) The University of Adelaide, South Australia, Australia

Keywords: Human-Robot Cooperation, Human-Robot Interfaces, Autonomous Agents, Mobile Robots, Human-Robot Teaming, Control and Supervision Systems.

Abstract: This paper introduces a framework to enhance Human-Robot Cooperation in high-risk environments by leveraging a grid-based analysis. By integrating the concepts of Know-How-to-Operate and Know-How-to-Cooperate, the framework aims to balance and streamline cooperation strategies. The framework proposes grid-based configurations to identify agent competencies, manage resources, and dynamically allocate tasks. The study first details the framework, then shows how it can be applied to a team of one human and two robots in a search-and-rescue context.

1 INTRODUCTION

Effective cooperation between humans and robots is essential in dynamic and high-risk environments (Bravo-Arrabal et al., 2021) to ensure efficient responses to complex situations such as fires and search and rescue missions (Vera-Ortega et al., 2022). Human-Robot (H-R) cooperation takes advantage of the distinct strengths of both kinds of agents, combining human intelligence and flexibility with robotic accuracy and endurance. This mutual interaction not only increases responders’ safety but also improves overall outcomes in dangerous circumstances.

Current techniques often emphasize full autonomy (Wijayathunga et al., 2023), frequently overlooking the unique benefits that human operators bring to the cooperative framework. Autonomous robots do excel at navigating hazardous environments, but human judgment based on global knowledge and experience is vital for adapting to unforeseen events (Li et al., 2023). However, optimizing cooperation between human operators and autonomous robots in hazardous conditions raises multiple complex questions.

Designing effective H-R teams presents several challenges. One key difficulty lies in fusing human cognitive strengths with robotic functionalities to optimize task performance, efficiency, and interaction intuitiveness (Goodrich et al., 2008). This involves determining the right balance between autonomy and control sharing between humans and robots. Additionally, human factors such as cognitive limitations and potential biases need to be considered alongside technical limitations in real-time communication and coordination (Mostaani et al., 2022). Furthermore, unforeseen events in dynamic environments can disrupt established communication protocols, rendering robotic systems unusable or limiting their functioning, and requiring H-R teams to adapt and react seamlessly (Nourbakhsh et al., 2005). Integrating human judgment and decision-making with robotic capabilities becomes crucial, particularly in high-risk situations where quick and accurate responses are essential (Filip, 2022).

Recent human-robot cooperation architectures and frameworks, such as those based on AND/OR graphs and hierarchical models, have been developed to better integrate human flexibility with robotic precision (Murali et al., 2020). These systems aim to facilitate smoother interactions by enabling robots to predict and adapt to human actions, and by validating cooperation with up-to-date information through digital twins and other virtual systems (Darvish et al., 2020).

Tula, S., Pacaux-Lemoine, M.-P., Grislin-Le Strugeon, E., Ma-Wyatt, A. and Diguet, J.-P.
Applying a Systematic Approach to Design Human-Robot Cooperation in Dynamic Environments.
DOI: 10.5220/0013008500003822
In Proceedings of the 21st International Conference on Informatics in Control, Automation and Robotics (ICINCO 2024) - Volume 2, pages 248-255
ISBN: 978-989-758-717-7; ISSN: 2184-2809
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

While these digital twin systems provide important advantages, our study takes a different approach by presenting a comprehensive Human-Machine Cooperation (HMC) model (Pacaux-Lemoine and Vanderhaegen, 2013) adapted for human-robot cooperation, together with its implementation using a grid-based architecture. The HMC model analyzes the complexity of human-robot cooperation, focusing on its importance in a variety of situations, especially search and rescue missions. By exploring the design and functionality of this cooperation model grid, we intend to evaluate its usefulness in improving cooperation, decision-making, and communication within H-R teams in dynamic and challenging situations. Furthermore, the purpose of this study is to uncover possible failure situations in the model that may impact H-R cooperation.

In the following sections, we explain the model further, exploring the frameworks that underlie human-robot cooperation and the role of the grid in facilitating the design of seamless cooperation among agents. By leveraging structured human-robot cooperation architectures and grid analysis, we aim to contribute to the development of more effective and resilient human-robot teams in critical situations.

2 BACKGROUND

Successful cooperation between human operators and autonomous robots is critical for attaining common goals in challenging situations. Human-robot interaction (HRI) and human-robot teaming (HRT) involve communication, coordination, and interaction to enable successful cooperation in complicated contexts (Paliga, 2022). Understanding the complexities of this cooperation requires a comprehensive analysis of both HRI and HRT frameworks. Therefore, this section explores the current state-of-the-art in both areas, followed by an examination of the Human-Machine System (HMS) domain, focusing specifically on the Know-How-to-Operate (KHO) and Know-How-to-Cooperate (KHC) model.

2.1 Cooperation in the Human-Robot Interaction Domain

While traditional HRI research focused on developing interfaces and communication protocols (Mizrahi et al., 2020), there is a growing emphasis on understanding and incorporating human cognitive aspects such as situational awareness, trust, and decision-making into robot design. This shift reflects the understanding that successful human-robot teaming requires robots that can not only perform tasks but also collaborate effectively with humans in complex environments.

A prominent area of exploration within HRI is HRT, also called Human-Autonomy Teaming (O’Neill et al., 2022). HRT research focuses on developing robots that can act as teammates, understanding human intentions, anticipating needs, and adapting to changing situations (Li et al., 2023). This cooperative approach has the potential to significantly enhance efficiency and performance in various applications.

However, current research focuses predominantly on physical human-robot cooperation (Aronson et al., 2018), leaving a significant gap in addressing the cognitive elements of human-robot interaction (Jiang and Arkin, 2015). An examination of the limitations of current methodologies reveals three key challenges (Tula et al., 2024):

Lack of Swift Human Decisions: Autonomous robots often struggle with rapid decision-making in dynamic situations. Human operators, with their cognitive abilities and field knowledge, can respond quickly to unexpected events (Chella et al., 2018).

Complex Sensor Data Interpretation: Autonomous robots may face difficulties analyzing and interpreting complex sensor data. Human operators excel in understanding global information at a cognitive level, making their presence essential in navigating complex scenarios where sensor data alone is insufficient (Mizrahi et al., 2020).

Communication Weakness between Humans and Robots: Effective communication between humans and robots is essential for successful cooperation. Current approaches often exhibit weaknesses in establishing robust communication channels, hindering the seamless exchange of critical data necessary for cooperative decision-making (Grislin-Le Strugeon et al., 2022).

2.2 Cooperation in the Human-Machine System Domain

More generally, the concept of cooperation between humans and machines has evolved greatly over time, owing to technological improvements and a better knowledge of human factors. Early techniques focused on automating specific tasks, with robots acting as machines controlled by humans. As technology evolved, the emphasis shifted to developing more interactive systems in which robots could help people with real-time data and analysis (Alirezazadeh and Alexandre, 2022). This growth resulted in the creation of collaborative systems in which humans and robots operate smoothly together, using each other’s competencies. Modern HMS research focuses on the integration of cognitive and autonomous capacities in robots (Hoc, 2001), allowing for more complex interactions and cooperation.

The state of the art in Human-Machine Systems (HMS) (Pacaux-Lemoine, 2020) focuses on diverse ways to improve human-machine interaction, highlighting both technological developments and human-centered design principles. Researchers have created models to better understand the dynamics of these interactions. One such model in this field is the Know-How-to-Operate (KHO) and Know-How-to-Cooperate (KHC) model developed by Pacaux-Lemoine et al. (2023). This framework analyzes human-machine cooperation by dividing it into two key categories: i) KHO focuses on an agent’s (human or machine) ability to perform individual tasks. It involves a four-step process: Information Gathering (IG), Information Analysis (IA), Decision Selection (DS), and Action Implementation (AI); ii) KHC tackles how agents interact and coordinate actions. Similarly to KHO, it involves four steps: Information Gathering on Other Agents (IGO) to understand their capabilities, Interference Detection (ID) to identify potential conflicts, Interference Management (IM) to resolve conflicts and optimize cooperation, and finally, Function Allocation (FA) to assign tasks to the most suitable agent (human or machine).
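To make the two four-step structures concrete, they can be encoded as ordered Python enumerations. This is an illustrative representation under our own assumptions, not part of the KHO-KHC model itself; the names `KHO`, `KHC`, and `describe` are hypothetical.

```python
from enum import Enum


class KHO(Enum):
    """Know-How-to-Operate: the steps of an agent's individual task cycle."""
    IG = "Information Gathering"
    IA = "Information Analysis"
    DS = "Decision Selection"
    AI = "Action Implementation"


class KHC(Enum):
    """Know-How-to-Cooperate: the steps by which an agent coordinates with others."""
    IGO = "Information Gathering on Other Agents"
    ID = "Interference Detection"
    IM = "Interference Management"
    FA = "Function Allocation"


def describe(steps):
    """Return 'ABBREVIATION: full name' strings in the order the steps are defined."""
    return [f"{step.name}: {step.value}" for step in steps]


print(describe(KHO))
print(describe(KHC))
```

Iterating over an `Enum` yields its members in definition order, so the fixed sequence of each cycle stays explicit in the data structure.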

The KHO-KHC structured approach offers a platform for organizing agent roles, accelerating information flow, and optimizing task allocation within Human-Robot Cooperation. By defining individual and cooperative functionalities, this approach provides a framework to enhance cooperation between humans and robots.

3 GRID-BASED ANALYSIS

This section describes a systematic, grid-based approach to establishing the cooperation-related characteristics of Human-Robot (H-R) teams. The goal is to help the designer thoroughly understand each agent’s role, interactions, and capabilities within the cooperative environment. The subsections that follow detail the contents of the grid, the grid filling process, the dynamic adaptability of the organization, the implementation and evaluation of the approach, and the potential benefits of the grid support.

3.1 Grid Description

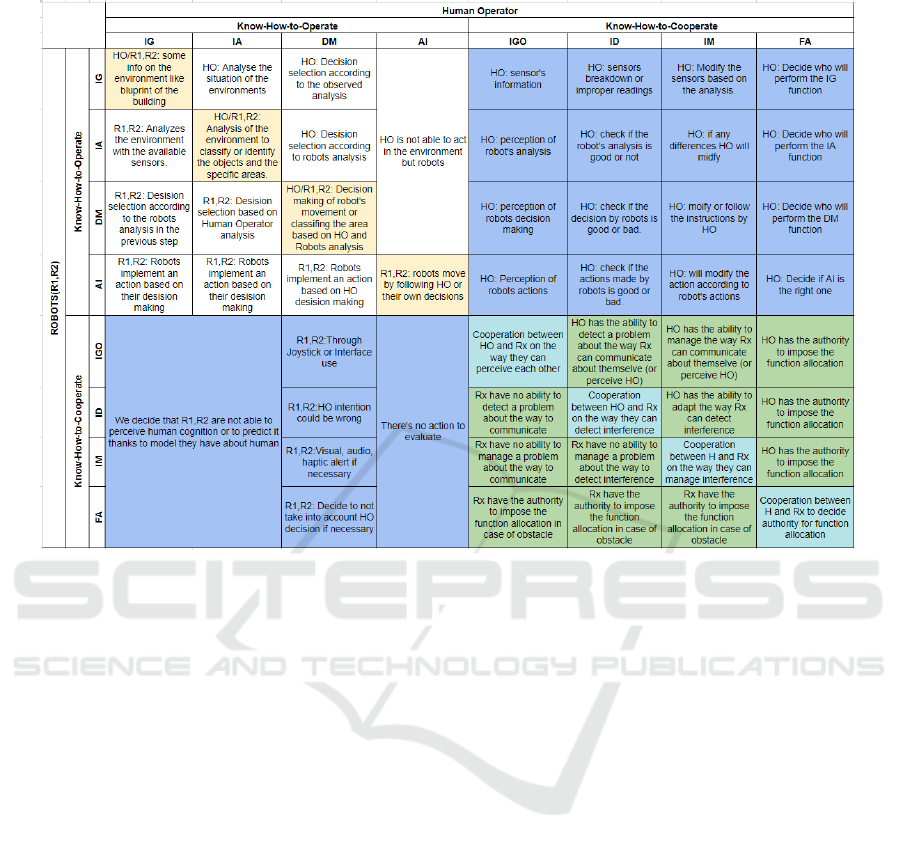

The grid framework is divided into four quadrants, each reflecting a different facet of cooperation between the human operator and the robots. The robots are treated as a group because the abilities of the two robots are generally similar, which makes it simpler to consider them together (see Table 1). The top left quadrant (KHO) covers each agent’s ability to interact with the environment or process, such as individual task accomplishment and environmental interaction. The top right quadrant, known as KHC-human, describes how the human operator accesses the robots’ behavior or condition depending on the situation, determining the human agent’s capabilities. The lower left quadrant, known as KHC-robot, describes how the robots interact with the human operator depending on the specific situation in a scenario, establishing the robot agents’ competencies. Finally, the bottom right quadrant focuses on cooperation between both agents in terms of abilities such as information sharing, task sharing, allocation, and coordination, using the Common Workspace to communicate and store the essential information or cooperation needed in a situation such as the aftermath of a fire accident. The Common Workspace acts as the hub for all interactions, ensuring that all agents have access to shared information and can coordinate their efforts efficiently.
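A minimal sketch of how a designer might hold the grid in software, assuming a plain Python structure keyed by quadrant and step. The `CooperationGrid` class, its methods, and the example cell entries are hypothetical; only the four quadrants follow the description above.

```python
from dataclasses import dataclass, field

# The four quadrants described in Section 3.1, keyed by (row, column).
QUADRANTS = {
    ("top", "left"): "KHO: each agent acting on the environment or process",
    ("top", "right"): "KHC-human: the human operator cooperating with the robots",
    ("bottom", "left"): "KHC-robot: the robots cooperating with the human operator",
    ("bottom", "right"): "Shared cooperation through the Common Workspace",
}


@dataclass
class CooperationGrid:
    # (row, column, step) -> list of free-text cell entries written by the designer
    cells: dict = field(default_factory=dict)

    def add(self, row, col, step, entry):
        """Append an entry to one cell, rejecting quadrants that do not exist."""
        if (row, col) not in QUADRANTS:
            raise KeyError(f"unknown quadrant: {(row, col)}")
        self.cells.setdefault((row, col, step), []).append(entry)

    def quadrant(self, row, col):
        """Return {step: entries} for every filled cell of one quadrant."""
        return {step: entries for (r, c, step), entries in self.cells.items()
                if (r, c) == (row, col)}


grid = CooperationGrid()
grid.add("top", "left", "IG", "Human operator: examine blueprints")
grid.add("top", "left", "IG", "Robots: detect objects with sensors")
grid.add("top", "right", "IGO", "Human operator: follow the robots' actions and intentions")
print(grid.quadrant("top", "left"))
```

Keying cells by (row, column, step) lets the same step abbreviation, such as IG, appear in several quadrants without collision.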

3.2 Steps to Fill the Grid

Filling the quadrants involves a detailed process. The grid is meant to be filled by the system designer and to support the identification of the tasks that the human operator will complete according to the situation.

To begin, the roles and competencies of the agents must be determined. The human operator is in charge of decision-making, task distribution, and interfacing with other agents. Sensors, actuators, and autonomous capabilities enable the robots to collect information, maneuver, and complete tasks.

Step 1: In the top left quadrant, which focuses on Know-How-to-Operate (KHO) aspects, tasks include information gathering (IG), where both the human operator and the robots gather relevant data from the environment; information analysis (IA), where both agents process and interpret the gathered information to make informed decisions specific to their own tasks; decision selection (DS), choosing the most suitable course of action based on the analyzed information within their own area of authority; and action implementation (AI), executing the chosen actions effectively to complete their parts of the overall task.
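Step 1 describes a fixed IG → IA → DS → AI sequence for each agent. The following sketch, which assumes each step can be modelled as a function handing its result to the next, shows that chain for one hypothetical robot; `kho_cycle`, the observation keys, and the 2 m obstacle threshold are illustrative assumptions, not values from the study.

```python
from typing import Callable


def kho_cycle(gather: Callable[[], dict],
              analyze: Callable[[dict], dict],
              decide: Callable[[dict], str],
              act: Callable[[str], str]) -> str:
    """One Know-How-to-Operate cycle for a single agent: IG -> IA -> DS -> AI."""
    observations = gather()             # IG: collect data from the environment
    assessment = analyze(observations)  # IA: interpret it for the agent's own task
    decision = decide(assessment)       # DS: choose an action within the agent's authority
    return act(decision)                # AI: execute the chosen action


# Hypothetical robot behaviour: stop and report when an obstacle is closer than 2 m.
result = kho_cycle(
    gather=lambda: {"obstacle_ahead": True, "distance_m": 1.2},
    analyze=lambda obs: {"blocked": obs["obstacle_ahead"] and obs["distance_m"] < 2.0},
    decide=lambda a: "stop_and_report" if a["blocked"] else "advance",
    act=lambda d: f"executing {d}",
)
print(result)
```

The same function can describe the human operator’s cycle by passing different step functions, which mirrors how the top left quadrant applies the four KHO steps to every agent.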

ICINCO 2024 - 21st International Conference on Informatics in Control, Automation and Robotics


Table 1: KHO & KHC grid for a team of one human operator and two robot agents.

Steps 2 & 3: The Know-How-to-Cooperate (KHC) components are addressed from both the human and the robot perspectives to ensure effective coordination. From the human perspective (Step 2), tasks involve information gathering on other agents (IGO), here the robots’ actions and intentions; interference detection (ID) to identify potential conflicts arising from the combined actions of multiple agents; interference management (IM) to resolve these conflicts for smooth and coordinated task execution; and function allocation (FA) to assign tasks and responsibilities among the agents for optimal team performance. Similarly, from the robot perspective (Step 3), tasks include gathering information on the human operator’s actions and intentions, detecting potential conflicts due to the human operator’s actions, managing these interferences to ensure seamless task execution, and allocating functions to optimize overall team performance. The key difference between these steps lies in the perspective: Step 2 emphasizes the human operator’s view of understanding and managing interactions with the robots, while Step 3 focuses on the robots’ view of understanding and managing interactions with the human operator.
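Interference detection (ID) and interference management (IM) can be illustrated with a deliberately simple conflict model, assumed here only for illustration: two plans interfere when they claim the same area at overlapping times, and the lower-priority plan is postponed until the area is free. The `Plan` type, the priority rule, and the area names are hypothetical and are not defined by the grid itself.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Plan:
    agent: str
    area: str
    start: int     # planned start time step
    end: int       # planned end time step (exclusive)
    priority: int  # assumed rule: the higher-priority plan keeps its slot


def detect_interferences(plans):
    """ID: pairs of plans that claim the same area at overlapping times."""
    return [(a, b) for a, b in combinations(plans, 2)
            if a.area == b.area and a.start < b.end and b.start < a.end]


def manage_interferences(plans):
    """IM: keep higher-priority plans fixed and postpone each lower-priority
    plan until its area is free; plan durations are preserved."""
    scheduled = []
    for p in sorted(plans, key=lambda plan: -plan.priority):
        start, duration = p.start, p.end - p.start
        moved = True
        while moved:  # start only ever increases, so this loop terminates
            moved = False
            for q in scheduled:
                if q.area == p.area and start < q.end and q.start < start + duration:
                    start, moved = q.end, True
        scheduled.append(Plan(p.agent, p.area, start, start + duration, p.priority))
    return scheduled


plans = [Plan("R1", "corridor_B", 0, 4, priority=2),
         Plan("R2", "corridor_B", 2, 6, priority=1)]
print(detect_interferences(plans))
resolved = manage_interferences(plans)
print(resolved)
```

Actual interference management would also weigh agent roles, authority, and the situation; a fixed priority is only the simplest rule that shows the hand-off from ID to IM.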

Step 4: The bottom right quadrant focuses on the control of cooperation between the human operator and the robots. Tasks include shared information gathering, where both agents gather and share information relevant to the overall task; shared decision making, a cooperative decision-making process considering inputs from both agents; and shared action implementation, executing tasks cooperatively to ensure coordinated efforts and mutual support.

3.3 Dynamic Adaptation

Dynamic adaptability is critical for maintaining effective teamwork in changing circumstances. This includes real-time monitoring of the environment and agent status, as well as continuous feedback loops to alter actions and tactics in response to new information. Adaptive task allocation enables the dynamic redistribution of tasks depending on the current situation and agent capabilities. Situation-based adjustments employ predetermined cases to guide initial task allocation and cooperation, which may be modified based on real-time data. Regular training sessions for human operators and robots will enhance teamwork abilities and enable the assessment of the cooperation model using the grid in various circumstances.
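As a sketch of situation-based allocation refined by real-time data, the code below assumes a table of predetermined cases and a per-agent availability status: when an assigned agent becomes unavailable, its task moves to another available agent with the required capability. The case table, task names, status values, and `allocate` function are hypothetical.

```python
# Predetermined cases: a default task -> agent allocation per situation (illustrative values).
PREDETERMINED = {
    "initial_assessment": {"analyze_blueprints": "HO", "scan_structure": "R1", "map_area": "R2"},
    "obstacle": {"assess_obstacle": "HO", "remove_debris": "R1", "map_area": "R2"},
}


def allocate(situation, status, capabilities):
    """Start from the predetermined case for the situation, then use real-time
    status to move tasks away from unavailable agents to a capable, available one.
    A task with no capable, available agent is left unassigned (None)."""
    allocation = dict(PREDETERMINED[situation])
    for task, agent in list(allocation.items()):
        if status.get(agent) != "available":
            candidates = [a for a, skills in capabilities.items()
                          if task in skills and status.get(a) == "available"]
            allocation[task] = candidates[0] if candidates else None
    return allocation


capabilities = {
    "HO": {"analyze_blueprints", "assess_obstacle"},
    "R1": {"scan_structure", "remove_debris", "map_area"},
    "R2": {"scan_structure", "remove_debris", "map_area"},
}
status = {"HO": "available", "R1": "unavailable", "R2": "available"}  # e.g. R1 is stuck
print(allocate("obstacle", status, capabilities))
```

This rule picks the first capable agent, so it can concentrate work on a single robot (R2 in the example); a load-aware choice would be a natural refinement.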

The grid provides a unique viewpoint on dynamic adaptation. By examining the capabilities and capacities of human and robot agents inside the KHC-human and KHC-robot quadrants (see Table 1), the grid makes it easier to identify strengths and weaknesses for appropriate work allocation. This approach promotes a better understanding of human-robot capabilities, allowing designers to strategically assign tasks based on real-time data. Furthermore, the grid promotes flexible and responsive cooperation by allowing for dynamic allocation of workload based on predetermined situations and real-time modifications.

The grid is also a useful tool for designers and developers. Designers can define approaches to cooperation inside the grid to create specific parameters for interaction between humans and robots. Furthermore, the grid may be used to develop the functions needed for agent capabilities and to integrate these abilities into cooperation.

3.4 Implementation and Evaluation

Proof-of-concept experiments in crisis scenarios, such as fires or post-earthquake settings, should be conducted to evaluate agent cooperation under various configurations. Tasks will range from simple navigation to complex pick-and-place actions, involving both human and robot agents. Evaluation metrics will include reaction time, event detection precision, navigation accuracy, and task management, alongside subjective feedback from Human-Robot Interaction surveys to assess cooperation and system usefulness. Comparative analyses between scenarios with and without the cooperative model will highlight its benefits, focusing on agent skills, responsibilities, and results.

The grid framework is central to these evaluations, structuring interactions and task assignments based on agent roles and capabilities. Metrics such as reaction time and navigation accuracy are expected to reflect the grid’s efficacy. Feedback from these evaluations will refine the grid, creating a feedback loop that enhances overall system performance.
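Two of the listed metrics reduce to simple arithmetic: mean reaction time and event detection precision, TP / (TP + FP). The sketch assumes a hypothetical per-trial record format, and the numbers in the usage example are dummy placeholders, not results from this study.

```python
from statistics import mean


def detection_precision(true_positives, false_positives):
    """Event detection precision: TP / (TP + FP); 0.0 when nothing was reported."""
    reported = true_positives + false_positives
    return true_positives / reported if reported else 0.0


def summarize(trials):
    """Aggregate per-trial records of the form {'reaction_s', 'tp', 'fp'}."""
    return {
        "mean_reaction_s": mean(t["reaction_s"] for t in trials),
        "precision": detection_precision(sum(t["tp"] for t in trials),
                                         sum(t["fp"] for t in trials)),
    }


# Dummy values for illustration only, not experimental results.
with_model = [{"reaction_s": 2.0, "tp": 4, "fp": 1}, {"reaction_s": 3.0, "tp": 3, "fp": 0}]
without_model = [{"reaction_s": 4.0, "tp": 3, "fp": 2}]
print(summarize(with_model), summarize(without_model))
```

Precision is pooled over all trials here rather than averaged per trial; which aggregation to report is a design choice for the planned experiments.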

3.5 Grid Analysis Aids Cooperation

The grid analysis enables the identification of agent competencies. Typically, the system designer creates the grid to identify, manage, and allocate the roles and actions of the agents. Each cell represents a distinct interaction between agents, simplifying the identification of their competencies. For example, in a search and rescue effort, a human operator might examine blueprints to identify areas of interest, while robots use sensors to detect objects and navigate effectively.

Resource management is another important feature of the grid design. With the help of the human operator, the grid structure promotes optimal resource utilization by allocating particular functions and authorities to each agent depending on their capabilities and the situation’s requirements. For example, in a rescue operation, the human operator assigns themselves roles such as analyzing blueprints and making educated judgments, while the robots are given tasks such as navigation and data gathering.

The grid analysis facilitates the allocation of tasks based on the situation, allowing operations to be dynamically assigned in response to changing environments or objectives. This helps ensure that resources are allocated efficiently. For example, if the environment becomes more dangerous or complicated, the human operator may assign additional tasks to the robots in order to reduce risk and increase efficiency. The grid helps to manage this assignment by clearly defining roles and competencies. Conversely, if comprehensive analysis or complicated decision-making is necessary, the human operator may take on a more active role to process information and recommend the best plan of action based on the agents’ competencies.

The grid also serves as a basis for developing and deploying agent skills, including programming and training. By mapping out how the agents in the grid interact, designers can analyze the best plan of action and the decisions required for good cooperation. For example, the grid might emphasize the need for robots to traverse barriers autonomously or convey crucial information to human operators, motivating designers to include suitable capabilities. By outlining these interactions, the grid aids in the systematic development of cooperation strategies and skills.

By linking the dynamic adaptation of the organization and the implementation and evaluation processes back to the grid framework, we underscore its importance in enhancing cooperation. This integrated approach aims to optimize the performance of H-R teams and might also improve their adaptability and effectiveness in dynamic and hazardous environments.

To summarise, the grid framework provides a systematic way to define the agents’ roles, resources, and objectives for cooperation, as well as the sharing, allocation, and execution of tasks to complete a goal. Its simplicity and versatility make it appropriate for a variety of circumstances, opening the path for wider adoption in crisis management and other cooperative contexts. Designers may use the grid framework as a tool to program and apply the cooperative model based on unique situational needs, with the aim of producing resilient and successful real-world solutions.

In the next section, we explore the application of the grid analysis to a search and rescue situation and show how the human operator and robots communicate and coordinate their actions.


4 APPLICATION

Based on the grid analysis between the human operator and robots, we apply the method explained in Section 3 to a simple Search and Rescue operation. We use examples of cells in each quadrant of the grid to illustrate the grid analysis, highlighting its benefits, difficulties, and remaining gaps. The section concludes with the need for an intermediary agent.

Consider a situation where the goal is to locate and rescue a vital object trapped within a collapsed structure. The grid arranges the roles and interactions of each agent as follows:

4.1 Human Operator (HO)

The human operator is responsible for analyzing blueprints or visualizing the surroundings to uncover prospective areas of interest, assessing information provided by the robots to make educated decisions, and using situational analysis to direct the robots’ movements and behaviors. The human operator’s resources and objectives include collecting data on the environment (Information Gathering - IG), interpreting data from the robots’ sensors to evaluate the situation (Information Analysis - IA), determining the best course of action (Decision Selection - DS), and sending orders to the robots to perform search and rescue tasks such as removing debris or accessing difficult areas (Action Implementation - AI).

4.2 Robots (R1, R2)

The robots are responsible for navigating through the environment to locate the vital object, using sensors to detect the presence of objects and structural irregularities, reporting findings to the human operator, and following the operator’s directions. The robots’ resources and objectives include acquiring data on the building’s layout, structural stability, and potential hazards (Information Gathering - IG), analyzing sensor data to identify areas with the highest likelihood of locating the vital object (Information Analysis - IA), deciding on the best course of action based on the gathered information (Decision Selection - DS), and performing tasks such as moving debris, entering restricted areas, and sending real-time updates to the human operator (Action Implementation - AI).

4.3 Dynamic Grid Use: Example

The dynamic use of the grid (see Section 3.3), which not only facilitates the identification of agent competencies but also assists in resource management, situation-based task allocation, and the system implementation needed to design the agents’ abilities, can be illustrated through the following situations:

Situation 1: Initial Assessment. In the initial assessment phase, the human operator uses IG and IA to analyze initial data and directs the robots to high-priority areas (top left quadrant - KHO). The robots gather detailed structural data but await further instructions before proceeding (top left quadrant - KHO).

Situation 2: Encountering an Obstacle. When the robots encounter debris, the human operator assesses the situation and decides to direct the robots to remove it (top right and bottom right quadrants). Both the robots and the human operator pause to reassess the organization of cooperation, possibly seeking additional data (bottom right quadrant - KHC).

Situation 3: Locating the Object. As the robots identify potential locations of the trapped object, the human operator uses IA to interpret sensor data and confirm the findings (top left and top right quadrants). The robots then proceed to the identified locations to begin the rescue operation (top left quadrant), while continuously providing real-time updates to the human operator (bottom left quadrant).

Situation 4: Structural Instability. If the robots discover any structural instability in the environment, the human operator must swiftly analyze the dangers and determine whether to proceed, change the robots’ direction, or evacuate the area (top left, top right and bottom right quadrants). The human operator may request further data or relevant information to better grasp the consequences (top right and bottom right quadrants).

4.4 Addressing Gaps

To address the gaps discussed in the background (Section 2), the cooperation model should leverage the cognitive strengths of human operators and the advanced data processing capabilities of robots. By dynamically updating the grid analysis, the system can help ensure that human operators make swift decisions in fast-changing situations, thereby compensating for the autonomous robots’ slower and sometimes poor decision-making. Human operators excel at interpreting complex sensor data, providing critical insights that robots alone may miss. Effective communication channels within the grid can facilitate seamless data exchange between humans and robots, ensuring robust cooperation and timely responses to dynamic rescue scenarios. However, gaps remain in efficiently integrating data processing and communication within the grid framework. Real-time data interpretation and synchronization are crucial for improving the cooperation model.

4.5 Benefits and Difficulties

Benefits. A key benefit of the proposed model is its capacity to support organized interactions between human and robot agents that closely resemble the cooperation designs planned by the designer. This is accomplished through a systematic analysis of the grid. This organized procedure enables seamless interaction and task execution, simplifying task completion. Real-time data analysis and communication enable informed decision-making, allowing for swift adaptation to changing situations. Additionally, the grid framework facilitates resource allocation by dynamically assigning tasks based on the capabilities of each agent, maximizing the utilization of available resources.

Difficulties. While the proposed method offers significant benefits, there are also challenges associated with its use. Real-time data exchange between human operators and robots, while crucial for collaboration, can be hindered by communication delays, which affect the pace of the scenario and the robots’ abilities. Highly dynamic situations with fast-moving robots may necessitate immediate action without waiting for human input, especially when encountering potentially dangerous unknowns. This highlights the need for a preventive "step back" action, in which the robot takes a pre-programmed, safe pause to allow for human analysis. Additionally, sensor data from robots may contain inaccuracies or disturbances, affecting decision-making. Integrating advanced data processing systems with existing human-robot interaction frameworks can be complex, requiring careful planning and implementation. Addressing these challenges will be crucial for implementing the cooperation.
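The preventive "step back" can be sketched as a timeout pattern, assuming operator decisions arrive on a Python `queue.Queue`: the robot pauses immediately, then waits a bounded time for the human’s analysis and holds its pause if nothing arrives. The function name, command strings, and timeout value are hypothetical.

```python
import queue


def on_unknown_hazard(commands, timeout_s):
    """Called when a robot meets a potentially dangerous unknown.
    The robot first takes a pre-programmed safe pause without waiting for anyone,
    then waits up to timeout_s for the human operator's decision."""
    actions = ["SAFE_PAUSE"]  # immediate preventive "step back"
    try:
        actions.append(commands.get(timeout=timeout_s))  # e.g. "proceed", "reroute"
    except queue.Empty:
        actions.append("HOLD")  # no decision in time: remain paused
    return actions


operator_commands = queue.Queue()
print(on_unknown_hazard(operator_commands, timeout_s=0.05))  # no command queued yet
operator_commands.put("reroute")
print(on_unknown_hazard(operator_commands, timeout_s=0.05))
```

Pausing before waiting means a communication delay can only extend the pause, never let the robot act on a dangerous unknown by default.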

4.6 Need for an Intermediary Agent

The grid analysis between the human operator and the robots highlights the critical need for an intermediary agent to bridge gaps in coordination, to address the lack of swift human decisions, communication weaknesses, and complex sensor data interpretation (see Section 2), and to enhance overall efficiency. In this context, the Intelligent Assistance System (IAS) is proposed as the intermediary agent. The IAS can process information from both human operators and robots, creating an integrated action plan that optimizes the use of available resources.

The IAS enables rapid interpretation of complex sensor data. By leveraging advanced algorithms and machine learning, the IAS can swiftly analyze vast amounts of data, identify patterns, and extract actionable insights. This comprehensive understanding, in contrast to a robot’s limited local view, allows the IAS to maintain a global overview of the situation, which is crucial for making swift and informed decisions in dynamic search and rescue (S&R) scenarios. The IAS can also facilitate robust communication channels by acting as a central hub for data exchange, ensuring that critical information is seamlessly shared between human operators and robots. For example, it can synchronize real-time updates from robots and relay important information to the human operator, ensuring that both agents are informed and can coordinate their actions as needed.
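The hub role of the IAS can be illustrated with a minimal sketch, under the assumption that robots push timestamped status updates and the operator reads a merged snapshot. The `IntermediaryHub` class, its staleness threshold, and the update fields are hypothetical and do not describe the IAS design, which the conclusion notes is still under development.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IntermediaryHub:
    """Hypothetical IAS hub: keeps the latest update from each robot and relays
    a synchronized snapshot, with a staleness flag, to the human operator."""
    stale_after_s: float = 5.0
    latest: dict = field(default_factory=dict)  # robot id -> (timestamp, update)

    def publish(self, robot: str, update: dict, now: Optional[float] = None) -> None:
        self.latest[robot] = (time.time() if now is None else now, update)

    def snapshot_for_operator(self, now: Optional[float] = None) -> dict:
        now = time.time() if now is None else now
        return {robot: {**update, "stale": now - ts > self.stale_after_s}
                for robot, (ts, update) in self.latest.items()}


hub = IntermediaryHub()
hub.publish("R1", {"position": (2, 3)}, now=0.0)
hub.publish("R2", {"position": (5, 1), "object_detected": True}, now=4.0)
print(hub.snapshot_for_operator(now=7.0))
```

Flagging stale entries rather than dropping them keeps the operator aware that a robot has gone quiet, which is itself useful information when communication is unreliable.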

Additionally, the IAS can dynamically adjust strategies in response to real-time changes in the environment. By continuously monitoring the situation and analyzing incoming data, it can recommend adjustments to the action plan to keep operations efficient and effective as conditions evolve. Including an IAS as an intermediary agent is intended to address these research gaps by leveraging advanced data processing and communication capabilities, thereby enhancing coordination and efficiency in rescue operations and highlighting the importance of integrating such agents for successful human-robot cooperation.

5 CONCLUSION

The integration of human operators, robots, and an intermediate agent within a grid-based framework can enhance cooperation in complex scenarios such as search and rescue operations. By defining roles and interactions, the grid framework provides a structured approach to identifying competencies, managing resources, and dynamically allocating tasks according to the situation. Human operators use their analytical skills to guide decisions, while robots autonomously navigate and collect data with advanced sensors. The intermediate agent, though still under development, is crucial for coordinating actions and processing information, thereby optimizing mission performance and safety. Further practical implementation, such as a proof-of-concept experiment, is required to demonstrate the framework's effectiveness, dependability, and management of operator workload. The intermediate agent's ability to run adaptive algorithms and real-time monitoring has the potential to reduce the cognitive workload of human operators.
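Competency identification and dynamic task allocation can be sketched with a grid that maps each agent to the competencies it can perform. The competency names, resource levels and the greedy rule below are hypothetical choices for illustration (loosely inspired by Know-How-to-Operate); the paper's grid is richer and does not prescribe this algorithm. Tasks are processed in priority order and each one goes to the free agent that has the required competency and the most remaining resource.

```python
from typing import Dict, Iterable, Optional, Set, Tuple

# Hypothetical competency grid: agent -> competencies it can perform.
GRID: Dict[str, Set[str]] = {
    "operator": {"assess_victim", "decide_route"},
    "uav1": {"aerial_survey", "map_area"},
    "ugv1": {"ground_search", "map_area", "deliver_kit"},
}


def allocate(tasks: Iterable[Tuple[str, str]],
             grid: Dict[str, Set[str]],
             resources: Dict[str, float],
             busy: Iterable[str] = ()) -> Dict[str, Optional[str]]:
    """Greedy allocation over (task, required_competency) pairs given in
    priority order. None means no competent agent is free, so the task
    should be escalated to the operator."""
    occupied = set(busy)
    plan: Dict[str, Optional[str]] = {}
    for task, competency in tasks:
        candidates = [agent for agent, skills in grid.items()
                      if competency in skills and agent not in occupied]
        if not candidates:
            plan[task] = None
            continue
        # Prefer the competent agent with the most remaining resource.
        chosen = max(candidates, key=lambda agent: resources.get(agent, 0.0))
        plan[task] = chosen
        occupied.add(chosen)
    return plan


# Usage: four prioritized tasks for one human and two robots.
plan = allocate(
    [("survey sector B", "aerial_survey"), ("map corridor", "map_area"),
     ("search room 3", "ground_search"), ("confirm victim", "assess_victim")],
    GRID,
    resources={"operator": 1.0, "uav1": 0.6, "ugv1": 0.8},
)
```

Here "search room 3" stays unallocated because the only ground robot already took the higher-priority mapping task. A greedy rule is used only for readability; an optimal assignment method could replace it, and such unallocated tasks are exactly where the human operator's judgment is needed.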

In conclusion, the grid-based analysis offers a structured approach to cooperative tasks in disaster


management and other domains requiring coordinated multi-agent systems. The clarity, organization, and adaptability of the grid structure promote efficient cooperation in diverse environments. Future research should focus on refining the intermediate agent and exploring new dimensions of human-robot interaction to meet emerging challenges.

ACKNOWLEDGEMENTS

This research work is supported by the CNRS and CROSSING: the French-Australian Laboratory for Humans/Autonomous Agents Teaming. The authors also express their sincere gratitude to Prof. Paulo Eduardo Santos for his participation in this project.

REFERENCES

Alirezazadeh, S. and Alexandre, L. A. (2022). Dynamic task scheduling for human-robot collaboration. IEEE Robotics and Automation Letters, 7(4):8699–8704.

Aronson, R. M., Santini, T., Kübler, T. C., Kasneci, E., Srinivasa, S., and Admoni, H. (2018). Eye-hand behavior in human-robot shared manipulation. In Proc. Int. Conf. on Human-Robot Interaction, pages 4–13.

Bravo-Arrabal, J., Toscano-Moreno, M., Fernandez-Lozano, J., Mandow, A., Gomez-Ruiz, J. A., and García-Cerezo, A. (2021). The internet of cooperative agents architecture (X-IoCA) for robots, hybrid sensor networks, and MEC centers in complex environments: A search and rescue case study. Sensors, 21(23):7843.

Chella, A., Lanza, F., Pipitone, A., and Seidita, V. (2018). Knowledge acquisition through introspection in human-robot cooperation. Biologically Inspired Cognitive Architectures, 25:1–7.

Darvish, K., Simetti, E., Mastrogiovanni, F., and Casalino, G. (2020). A hierarchical architecture for human–robot cooperation processes. IEEE Transactions on Robotics, 37(2):567–586.

Filip, F. G. (2022). Collaborative decision-making: concepts and supporting information and communication technology tools and systems. International Journal of Computers Communications & Control, 17(2).

Goodrich, M. A., Schultz, A. C., et al. (2008). Human–robot interaction: a survey. Foundations and Trends in Human–Computer Interaction, 1(3):203–275.

Grislin-Le Strugeon, E., de Oliveira, K. M., Thilliez, M., and Petit, D. (2022). A systematic mapping study on agent mining. J. of Exp. & Theor. AI, 34(2):189–214.

Hoc, J.-M. (2001). Towards a cognitive approach to human–machine cooperation in dynamic situations. Int. J. of Human-Computer Studies, 54(4):509–540.

Jiang, S. and Arkin, R. C. (2015). Mixed-initiative human-robot interaction: Definition, taxonomy, and survey. In 2015 IEEE Int. Conf. on SMC, pages 954–961.

Li, S., Zheng, P., Liu, S., Wang, Z., Wang, X. V., Zheng, L., and Wang, L. (2023). Proactive human–robot collaboration: Mutual-cognitive, predictable, and self-organising perspectives. Robotics and Computer-Integrated Manufacturing, 81:102510.

Mizrahi, D., Zuckerman, I., and Laufer, I. (2020). Using a stochastic agent model to optimize performance in divergent interest tacit coordination games. Sensors, 20(24):7026.

Mostaani, A., Vu, T. X., Sharma, S. K., Nguyen, V.-D., Liao, Q., and Chatzinotas, S. (2022). Task-oriented communication design in cyber-physical systems: A survey on theory and applications. IEEE Access, 10:133842–133868.

Murali, P. K., Darvish, K., and Mastrogiovanni, F. (2020). Deployment and evaluation of a flexible human–robot collaboration model based on AND/OR graphs in a manufacturing environment. Intelligent Service Robotics, 13(4):439–457.

Nourbakhsh, I. R., Sycara, K., Koes, M., Yong, M., Lewis, M., and Burion, S. (2005). Human-robot teaming for search and rescue. IEEE Pervasive Computing, 4(1):72–79.

O'Neill, T., McNeese, N., Barron, A., and Schelble, B. (2022). Human–autonomy teaming: A review and analysis of the empirical literature. Human Factors, 64(5):904–938.

Pacaux-Lemoine, M.-P. (2020). HUMAN-MACHINE COOPERATION: Adaptability of shared functions between Humans and Machines - Design and evaluation aspects. PhD thesis, Université Polytechnique Hauts-de-France.

Pacaux-Lemoine, M.-P., Habib, L., and Carlson, T. (2023). Levels of Cooperation in Human–Machine Systems: A Human–BCI–Robot Example. In Handbook of Human-Machine Systems. Wiley.

Pacaux-Lemoine, M.-P. and Vanderhaegen, F. (2013). Towards levels of cooperation. In 2013 IEEE Int. Conf. on SMC, pages 291–296.

Paliga, M. (2022). Human–cobot interaction fluency and cobot operators' job performance. The mediating role of work engagement: A survey. Robotics and Autonomous Systems, 155:104191.

Tula, S., Pacaux-Lemoine, M.-P., Grislin-Le Strugeon, E., Santos, P. E., Ma-Wyatt, A., and Diguet, J.-P. (2024). Agent's cooperation levels to enhance human-robot teaming. In Workshop ARMS (Autonomous Robots and Multirobot Systems), 23rd Inter. Conf. on Autonomous Agents and Multiagent Systems (AAMAS'24), page 9, Auckland, New Zealand.

Vera-Ortega, P., Vázquez-Martín, R., Fernández-Lozano, J. J., García-Cerezo, A., and Mandow, A. (2022). Enabling remote responder bio-signal monitoring in a cooperative human–robot architecture for search and rescue. Sensors, 23(1):49.

Wijayathunga, L., Rassau, A., and Chai, D. (2023). Challenges and solutions for autonomous ground robot scene understanding and navigation in unstructured outdoor environments: A review. Applied Sciences, 13(17):9877.
