Study of the Influence of a Force Bias on a Robotic Partner During Kinesthetic Communication

Ousmane Ndiaye (1, a), Ouriel Grynszpan (2, b), Bruno Berberian (3, c) and Ludovic Saint-Bauzel (1, d)

1 Institut des Systèmes Intelligents et de Robotique, Sorbonne University, Paris, France

2 Laboratoire Interdisciplinaire des Sciences du Numerique, Universite Paris-Saclay, Paris, France

3 Office National d’Etudes et Recherches Aerospatiales, Salon-de-Provence, France

Keywords:

Physical Human-Human Interaction, Physical Human-Robot Interaction, Sense of Agency.

Abstract:

Numerous physical tasks require collaboration among multiple individuals. While it is established that the exchange of forces between actors conveys information during comanipulation tasks, the precise mechanisms of transmission and interpretation remain poorly understood. Various studies have underscored that when a robot exhibits human-like motions, human understanding of its intentions is enhanced. Nevertheless, discernible disparities emerge when comparing Human-Human and Human-Robot interactions across diverse metrics. Among the usable metrics, this paper focuses on the sense of control over the physical exchange and the average values of interaction forces. We show that adding a subtle force bias to the robot’s motions reduces the observed disparities on these metrics, making human interactions with this robotic partner more akin to those with other humans.

1 INTRODUCTION

Since the early concept of cobots (Peshkin et al., 2001), significant progress in control, design, and safety has brought natural Human-Robot interaction closer to reality. Robotic devices have evolved from rigidly programmed entities to systems that can smoothly interact with their environment and react to a certain number of unknown parameters. Robots increasingly work alongside humans and cooperate with them on numerous tasks in a wide range of applications, from industry to health care (De Santis et al., 2007) (Goodrich and Schultz, 2007). This cooperation often leads to interaction either via direct contact or via indirect contact through a jointly held object. Therefore, understanding the interaction’s underlying processes is a crucial challenge, giving us new directions for improving robotic partners of all kinds.

Cooperation appears to be altered when the partner is a robot. Obhi and Hall (2011) showed that the sense of agency differs depending on whether we believe the partner is human. Then, Grynszpan et al. (2019) showed that the sense of agency is hindered during kinesthetic cooperation. They reported discrepancies on three metrics related to efforts and the sense of agency. This prompts the question: What features in Human-Robot Interaction impede cooperation and alter the sense of agency? Analysis of the dataset from (Grynszpan et al., 2019) revealed that humans tend to maintain a constant light force, regardless of whether an action is executed or not. Furthermore, it was observed that individuals exert more force when engaging in kinesthetic communication exclusively (Mielke et al., 2017) (Parker and Croft, 2011). This leads to the question: Does force directly influence the perception of kinesthetic dyadic cooperation? Assessing this aspect involves implementing a robotic partner, named Virtual Partner (VP). Our study explores how humans perceive interactions with robots. Integrating a force bias into robot models could offer a means for them to enhance their communication capabilities.

a https://orcid.org/0009-0000-5407-9103

b https://orcid.org/0000-0002-7141-5946

c https://orcid.org/0000-0002-3908-4358

d https://orcid.org/0000-0003-4372-4917


Ndiaye, O., Grynszpan, O., Berberian, B. and Saint-Bauzel, L.

Study of the Influence of a Force Bias on a Robotic Partner During Kinesthetic Communication.

DOI: 10.5220/0013013400003822

In Proceedings of the 21st International Conference on Informatics in Control, Automation and Robotics (ICINCO 2024) - Volume 1, pages 400-407

ISBN: 978-989-758-717-7; ISSN: 2184-2809

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

2 RELATED WORKS

Numerous studies showcase humans’ capability to exchange information and intentions through force exchanges (Ganesh et al., 2014) (Candidi et al., 2015) (van der Wel et al., 2011) (Pezzulo et al., 2021). Nonetheless, the specific demands and content of the messages conveyed in such exchanges remain unclear. Two approaches have been employed to tackle this issue.

The first approach involves directly observing exchanges of forces and trajectories and developing tools for analyzing interactions. Within this approach, studies such as (Al-Saadi et al., 2021), (Madan et al., 2015), (Mielke et al., 2017), (Parker and Croft, 2011) and (Börner et al., 2023) present methods for decomposing interaction forces (e.g., distinguishing harmonious and conflicting interactions, identifying efforts contributing to joint action or not) or analyzing properties of motion during Human-Human interaction.

In the second approach, virtual partners are de-

veloped and features leading to similar interactions

between Human-Human and Human-Robot pairs are

observed. The study presented here falls within this

category, alongside others such as (Takagi et al.,

2017) and (Li et al., 2019). These studies demonstrate

that during physical interaction between two humans,

one participant incorporates the intentions of the other

into their own command scheme. Their methodology

entails creating models of virtual partners capable of

adapting their behavior to that of their human coun-

terparts. While these models exhibit the adaptive na-

ture of humans in interaction, their limitations (Tak-

agi et al., 2018) underscore the necessity for further

advancements in this domain.

One apparent method for enhancing these models would involve considering the roles during interactions. Indeed, the dynamic exchange of roles and the specific roles each partner assumes significantly influence physical communication, as evidenced by (Jarrassé et al., 2013) (Mörtl et al., 2012) (Abbink et al., 2012) (Feth et al., 2011) (Reed and Peshkin, 2008).

To the best of our knowledge, real-time determina-

tion of a person’s role within an interaction is infea-

sible due to the multitude of potential roles and their

subtle distinctions. Since this study does not focus on

role identification, we have circumvented this issue by

concentrating on brief and elementary interactions. In

doing so, we assume: (1) the interaction begins with

a brief negotiation phase followed by a relatively har-

monious execution phase, (2) the roles of “Initiator”

and “Follower” serve as adequate descriptors, and (3)

each participant’s role remains consistent throughout

the physical exchange. Insights from a related study

(Grynszpan et al., 2019) suggest that these assump-

tions generally hold true in most instances. Another

supporting element for our hypotheses is the concept

of “1st-Crossing,” previously introduced in (Roche

and Saint-Bauzel, 2021). The “1st-Crossing” descrip-

tor predicts, with a 95% success rate, which actor will

dictate the direction based on their initial voluntary

movement in a dyadic physical exchange. This im-

plies that the outcome of short interactions tends to

be influenced by the first move. Building upon this

premise, we have developed a Virtual Partner (VP) ca-

pable of assuming both roles, the algorithm of which

is delineated in Section 3.

3 METHODS

3.1 Apparatus and Settings

Each participant controls a 1-DoF (degree of freedom) haptic interface (Figure 1), called 1D-SEMAPHORO. Each interface consists of a platform, on which the right hand rests, and a paddle that moves from right to left, on which the tip of a finger is placed. The interfaces were designed and built at ISIR (Roche et al., 2018) and are published under an open-source CC-BY-NC license. The motor controllers and the sensor acquisition run at 2 kHz.

Figure 1: Image of the 1D-SEMAPHORO.

The 1D-SEMAPHORO comes with 3 different

modes. When the 1st mode is selected, the paddles

are free and receive no mechanical effort from the mo-

tors. There is no communication between users.

Selecting the second mode puts the haptic devices in bilateral teleoperation thanks to a 4-channel controller. The 1D-SEMAPHORO’s mechanical structure makes it a good choice for a transparent and stable controller. The computation of the command $F_{h,i}(t)$ for handle $i$ can be summarized in (3.1):

$$F_{h,i}(t) = K \left( X_i(t - T_d) - \tilde{X}_i(t) \right) + \tilde{F}_i(t - T_d) \tag{3.1}$$

where $T_d$ represents the delay in the control loop (in our case, $T_d = 0.5$ ms). $X_i$ and $\tilde{X}_i$ are the states of the two handles, each state being composed of the position and velocity. $\tilde{F}_i$ is the force measured by the sensor on the other handle, and $K$ is a scheduled PD controller.
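As an illustration of (3.1), the command can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the 1D-SEMAPHORO firmware: the gain scheduling of $K$ is not described here, so fixed proportional and derivative gains (`kp`, `kd`) are assumed, and the names `State` and `handle_command` as well as the units are hypothetical. The caller is assumed to supply the delayed samples; with a 2 kHz loop, a delay of 0.5 ms corresponds to one sample period.

```python
from dataclasses import dataclass


@dataclass
class State:
    """State of a handle: position and velocity (units are an assumption)."""
    position: float
    velocity: float


def handle_command(x_delayed: State, x_tilde: State,
                   f_tilde_delayed: float, kp: float, kd: float) -> float:
    """Evaluate F_h,i(t) = K (X_i(t - Td) - X~_i(t)) + F~_i(t - Td).

    K is modeled as a PD law with fixed gains kp and kd (assumption:
    the paper's K is scheduled, which is not reproduced here).
    """
    pd_term = (kp * (x_delayed.position - x_tilde.position)
               + kd * (x_delayed.velocity - x_tilde.velocity))
    # The delayed force measured on the other handle is fed forward.
    return pd_term + f_tilde_delayed


if __name__ == "__main__":
    command = handle_command(State(0.01, 0.0), State(0.0, 0.0),
                             f_tilde_delayed=0.2, kp=100.0, kd=1.0)
    print(command)
```

Separating the PD term from the fed-forward force keeps the two contributions of (3.1) visible, which may help when comparing the teleoperation mode with the virtual partner mode.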

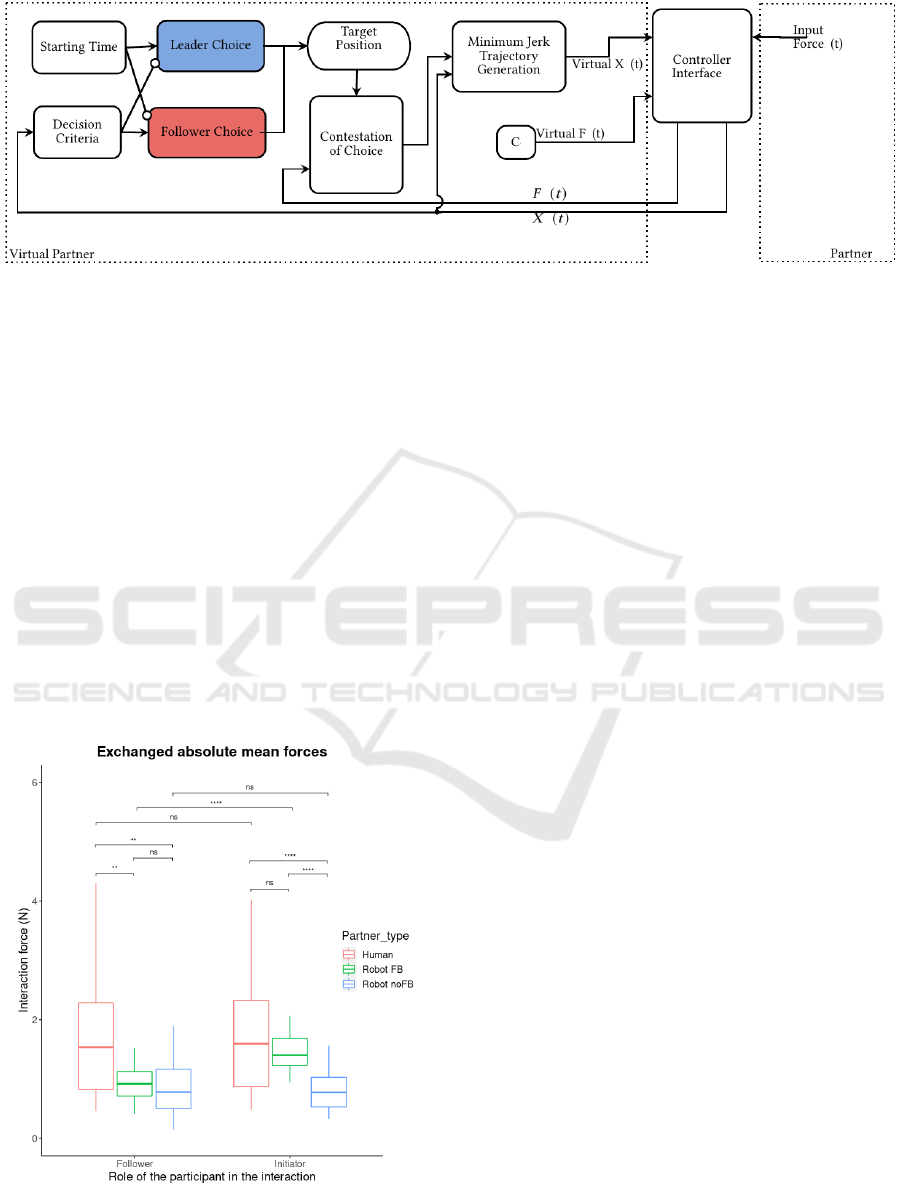

In the last mode, each haptic device is linked to its own virtual partner (VP) (algorithm shown in Figure 5 (Roche and Saint-Bauzel, 2021)). At all times, the VP monitors the human partner’s efforts. Depending on the amount of effort generated by the human, the VP takes on the role of Leader or Follower. The VP then chooses a position to reach, depending on its role and the targets on screen, and creates a minimum-jerk trajectory to it. A PD controller is then used to keep the VP on track with the reference trajectory.
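The trajectory generation and tracking steps can be sketched as follows. This is a minimal sketch assuming the standard fifth-order minimum-jerk polynomial between two rest positions; the function names, gains and durations are hypothetical and are not taken from the VP implementation of (Roche and Saint-Bauzel, 2021).

```python
def _normalized_time(t: float, duration: float) -> float:
    """Clamp t / duration to the interval [0, 1]."""
    return min(max(t / duration, 0.0), 1.0)


def minimum_jerk_position(x0: float, xf: float, duration: float, t: float) -> float:
    """Reference position on a rest-to-rest minimum-jerk trajectory."""
    tau = _normalized_time(t, duration)
    shape = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * shape


def minimum_jerk_velocity(x0: float, xf: float, duration: float, t: float) -> float:
    """Time derivative of the reference position (zero at both ends)."""
    tau = _normalized_time(t, duration)
    shape_rate = (30 * tau**2 - 60 * tau**3 + 30 * tau**4) / duration
    return (xf - x0) * shape_rate


def pd_tracking_force(x_ref: float, v_ref: float, x: float, v: float,
                      kp: float, kd: float) -> float:
    """PD law pulling the measured state (x, v) towards the reference."""
    return kp * (x_ref - x) + kd * (v_ref - v)


if __name__ == "__main__":
    # Hypothetical move from 0 to 0.05 (position units) in 1 s, sampled midway.
    x_ref = minimum_jerk_position(0.0, 0.05, 1.0, 0.5)
    v_ref = minimum_jerk_velocity(0.0, 0.05, 1.0, 0.5)
    print(pd_tracking_force(x_ref, v_ref, x=0.02, v=0.0, kp=50.0, kd=1.0))
```

The polynomial starts and ends with zero velocity, so the reference never asks the paddle for an abrupt start or stop.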

3.2 Types of Partner

Based on these modes, we defined several types of partner:

• Alone: Participants perform the task on their own, without any interaction between participants.

• Human: Participants physically communicate with each other using the 1D-SEMAPHORO in bilateral teleoperation.

• Robot noFB (no force bias): Each participant interacts with a VP (described in Section 3.1) without the added force bias.

• Robot FB: Each participant interacts with their own VP, which applies the additional force bias. The magnitude of this force bias is constant at 0.3 N and its direction is randomly determined at the beginning of each trial. The magnitude comes from previous data on 10 participants: it represents the average value of all the forces they generate when no specific action is performed.
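The per-trial choice of the force bias can be sketched as below. This is a minimal sketch: the 0.3 N magnitude and the random direction come from the description above, while the function name, the sign convention (positive meaning one side of the paddle) and the use of a seeded generator are assumptions made for illustration.

```python
import random

FORCE_BIAS_MAGNITUDE_N = 0.3  # constant magnitude stated for Robot FB


def draw_force_bias(rng: random.Random, force_bias_enabled: bool) -> float:
    """Return the bias C for one trial: 0 N for Robot noFB, +/-0.3 N for Robot FB.

    The direction is drawn once, at the beginning of the trial, and is
    assumed to stay fixed until the trial ends.
    """
    if not force_bias_enabled:
        return 0.0
    direction = rng.choice((-1.0, 1.0))
    return direction * FORCE_BIAS_MAGNITUDE_N


if __name__ == "__main__":
    rng = random.Random(0)  # seeded only to make the illustration repeatable
    print([draw_force_bias(rng, True) for _ in range(5)])
    print(draw_force_bias(rng, False))
```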

3.3 Metrics

The sense of agency, which can be defined as one abil-

ity to feel in control of observable modifications in the

environment through one’s actions (Haggard et al.,

2002) (Haggard, 2005), has been highlighted in hu-

mans during solo tasks or sequential group tasks. In

our study, this concept is used to analyze the feeling

of control during real-time collaboration tasks. This

metric allows us to observe how interactions are per-

ceived in relation to the behavior of the partner. It can

be measured at two levels :

• the implicit (unconscious) level: The perception of

the time interval between an action and its effect is

used as a measure of Intentional Binding (IB). IB

is an effect linked to the sense of agency, whereby

an individual perceives her/his action and its effect

as attracted towards one another (Haggard, 2005)

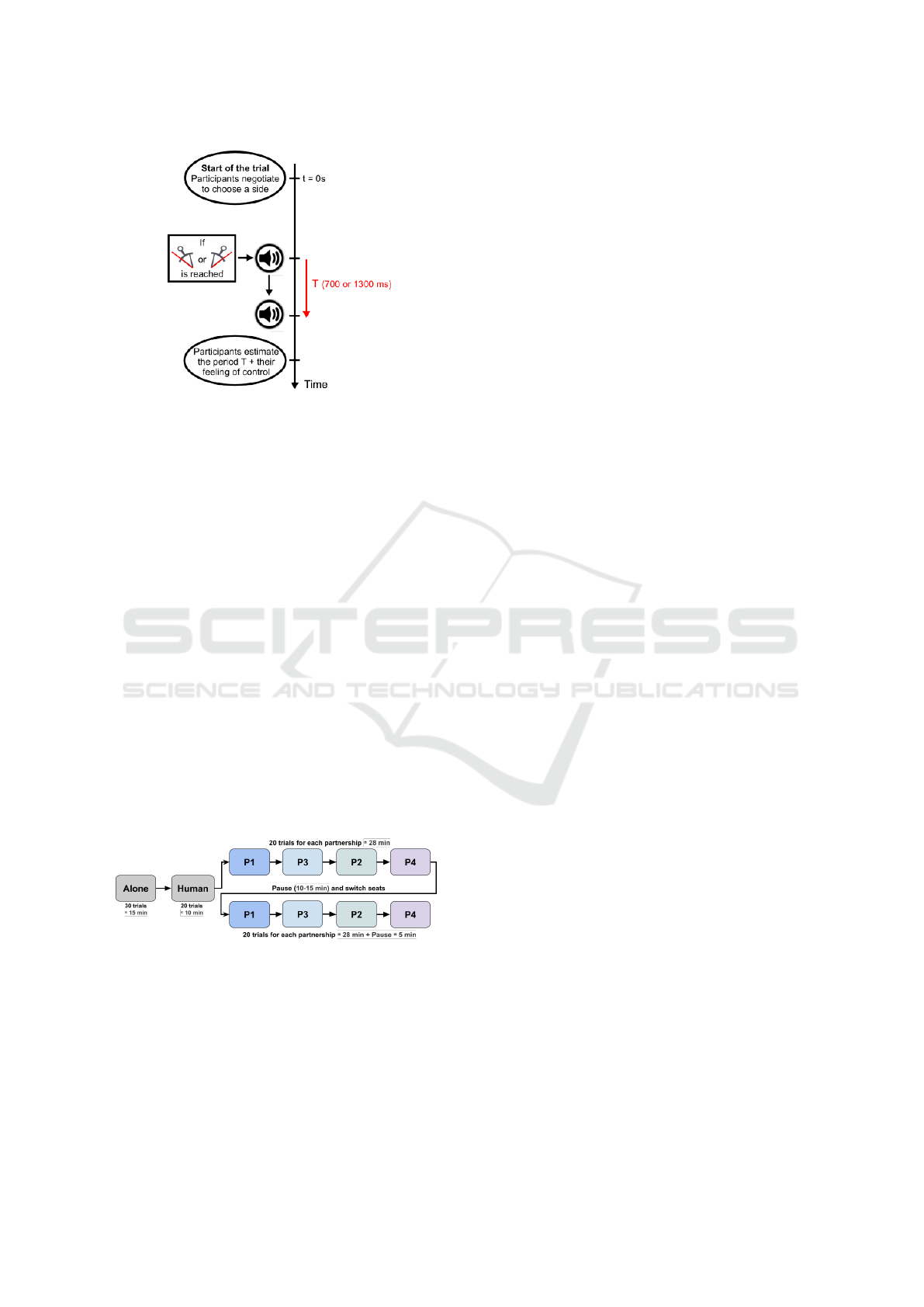

(Capozzi et al., 2016) (see Figure 2).

• the explicit (conscious) level: measurement based

on questionnaires where humans are asked to rate

their feeling of control over the physical communi-

cation (Ivanova et al., 2020) (Obhi and Hall, 2011).

Figure 2: Representation of the Intentional Binding effect.

The value of IB is the sum of Action and Outcome Bindings.

The feeling of control, represented by the study of agency, is refined by observing how it evolves in relation to each partner’s role. We chose the contribution to the first motion of the dyad as the criterion to identify the role of each member (Initiator or Follower). More precisely, the first member to generate an effort exceeding a threshold becomes the Initiator. If both members exceed this threshold, the one applying the greater force is recorded as the Initiator of the dyad’s motion.
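The role criterion above can be sketched as a scan over the two synchronized force recordings. This is a minimal sketch: the threshold value is not given in the text and is left as a parameter, the comparison uses absolute forces, and resolving an exact tie in favor of the first member is an arbitrary assumption made only so that the function always returns a decision.

```python
from typing import Optional, Sequence, Tuple

Roles = Tuple[str, str]


def assign_roles(forces_a: Sequence[float], forces_b: Sequence[float],
                 threshold: float) -> Optional[Roles]:
    """Return the roles of members A and B, or None if nobody crosses the threshold.

    The first member whose absolute force exceeds the threshold is the
    Initiator. If both exceed it in the same sample, the member applying
    the greater force is the Initiator (exact ties go to A, an assumption).
    """
    for force_a, force_b in zip(forces_a, forces_b):
        a_over = abs(force_a) > threshold
        b_over = abs(force_b) > threshold
        if a_over and b_over:
            if abs(force_a) >= abs(force_b):
                return ("Initiator", "Follower")
            return ("Follower", "Initiator")
        if a_over:
            return ("Initiator", "Follower")
        if b_over:
            return ("Follower", "Initiator")
    return None


if __name__ == "__main__":
    # Hypothetical samples in newtons with a hypothetical 0.5 N threshold.
    print(assign_roles([0.1, 0.2, 0.9], [0.1, 0.6, 1.2], threshold=0.5))
```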

3.4 Experimental Design

3.4.1 Task and Stimulus

Throughout the experiment, participants must execute

the same task with different partners. Regardless of

the partner, they must move their paddles to the right

or the left. When they reach an end position, they

hear a beep and after a period of time, a second one.

To implicitly measure their sense of agency, partici-

pants must quantify the time elapsed between the two

sounds in milliseconds. The explicit measurement is

done by asking them to rate their own level of involve-

ment in the final group decision.

3.4.2 Procedures and Phases

The experimenter explains the context of the experi-

ment and what kind of task they are asked to perform.

Dyad members take the test in the same room, sit-

ting side by side, and each has a computer screen and

headphones. An opaque curtain separates them, pre-

venting them from seeing each other.

During the training phase, participants focus on developing their ability to estimate time intervals ranging from 300 to 2000 milliseconds. After an answer is given, the correct value appears beside it. This phase consists of 30 trials in Alone mode and 20 trials in Human mode. Training in Alone mode enables participants to improve their ability to estimate time intervals consistently, while training in Human mode develops their ability to prioritize time estimation over the rest of the task.

Figure 3: Representation of a trial during the evaluation phase. In the training, the period T of time is chosen randomly between 300 ms and 2000 ms.

During the evaluation phase, the task remains the same but with three modifications. First, the interval duration is no longer picked at random; only two intervals are possible (700 ms and 1300 ms). Second, participants are linked to the 4 different partner types described previously (Alone, Human, Robot FB and Robot noFB). Third, the question about the explicit measure of agency is included. To avoid asymmetries in haptic devices as much as possible and to collect enough data, participants go through a track with the four types of partners (the order is chosen randomly). Each partner type is encountered twice (as illustrated in Figure 4) and participants swap seats halfway through.

Figure 4: Representation of a passage. The order of the

types of partners (P#) is chosen randomly for each dyad.

3.5 Participants

The participants were recruited through a process that respects standard experimental practice. Participants may be right- or left-handed and must be free of any known visual or auditory deficits. To prevent possible effects on task performance, participants are paired in dyads consisting of people who have never worked together in a collaborative task. The minimum number of 40 participants was established using a G*Power 3 (Franz et al., 2007) analysis whose parameters are based on (Grynszpan et al., 2019) and are set to: α = 0.05, Power ≥ 0.9 and an effect size ≃ 0.5.

In total, 44 participants were recruited during the campaign. Technical problems with the 1D-SEMAPHORO resulted in data loss for 6 of them. Since the ability of the remaining 38 participants to distinguish between short and long intervals is essential for data processing, we monitored it throughout the experiment. Their answers in Alone were sorted into two groups, depending on the correct time interval (700 ms or 1300 ms). If a t-test between the two groups of answers yielded no significant difference in the means, the corresponding participant was considered an outlier and his/her data were excluded from the study. This removed 3 participants, leaving a dataset of 35 participants.

3.6 Statistical Analysis

To summarize the statistical analysis carried out, we

have 3 independent variables: IV1: the type of part-

ner (4 conditions), IV2: the delay between the action

and the sound (2 conditions) and IV3: the role of each

participant during the interaction (2 conditions). The

chosen dependent variables are: DV1: the absolute

mean forces exchanged between partners, DV2: the

implicit measure of the sense of agency and DV3: the

explicit measure. It is expected that the association of

these variables is relevant to analyzing the way physi-

cal cooperation is felt by humans when working with

another human or with a robot.

On both implicit and explicit measures of the

sense of agency, we conducted a 3-way repeated mea-

sures ANOVA to assess any effects. For the analy-

sis of average interaction forces, as the Delay vari-

able exerts no influence on the physical exchanges up-

stream, it was omitted as an independent variable, and

a 2-way repeated measures ANOVA was conducted.

Prior to analysis, normality and sphericity of the sam-

ples were assessed. Results indicated normal or near-

normal distributions, as determined by Shapiro-Wilk

tests. Mauchly’s sphericity tests provided conclu-

sive results for one variable and inconclusive results

for others. When necessary, a Greenhouse-Geisser

correction was applied to the ANOVA. In instances

where significant effects were observed in our tests,

pairwise comparisons were conducted using a Holm-

Bonferroni correction.
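For reference, the Holm-Bonferroni step-down adjustment used for the pairwise comparisons can be sketched in plain Python. This is a generic sketch of the standard procedure, not the statistical software used in the study; the function name and the example p-values are hypothetical.

```python
from typing import List, Sequence


def holm_adjust(p_values: Sequence[float]) -> List[float]:
    """Return Holm-Bonferroni adjusted p-values, in the input order.

    The smallest p-value is multiplied by m, the next by m - 1, and so on;
    adjusted values are capped at 1 and forced to be non-decreasing along
    the sorted order.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda index: p_values[index])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, index in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[index])
        running_max = max(running_max, candidate)
        adjusted[index] = running_max
    return adjusted


if __name__ == "__main__":
    # Hypothetical raw p-values from three pairwise comparisons.
    print(holm_adjust([0.01, 0.04, 0.03]))
```

Because adjusted values are capped at 1, comparisons with large raw p-values are reported with an adjusted p-value of exactly 1.0 under this procedure.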


Figure 5: Virtual Partner (VP) algorithm interacting with a Human partner (Roche and Saint-Bauzel, 2021). F(t) and X(t) are the force and position of the human; Virtual F(t) and Virtual X(t) are those of the VP. The difference between Robot noFB and Robot FB lies in the value of Virtual F(t) (C = 0 N for Robot noFB and C = ±0.3 N for Robot FB).

4 RESULTS

4.1 Force Analysis

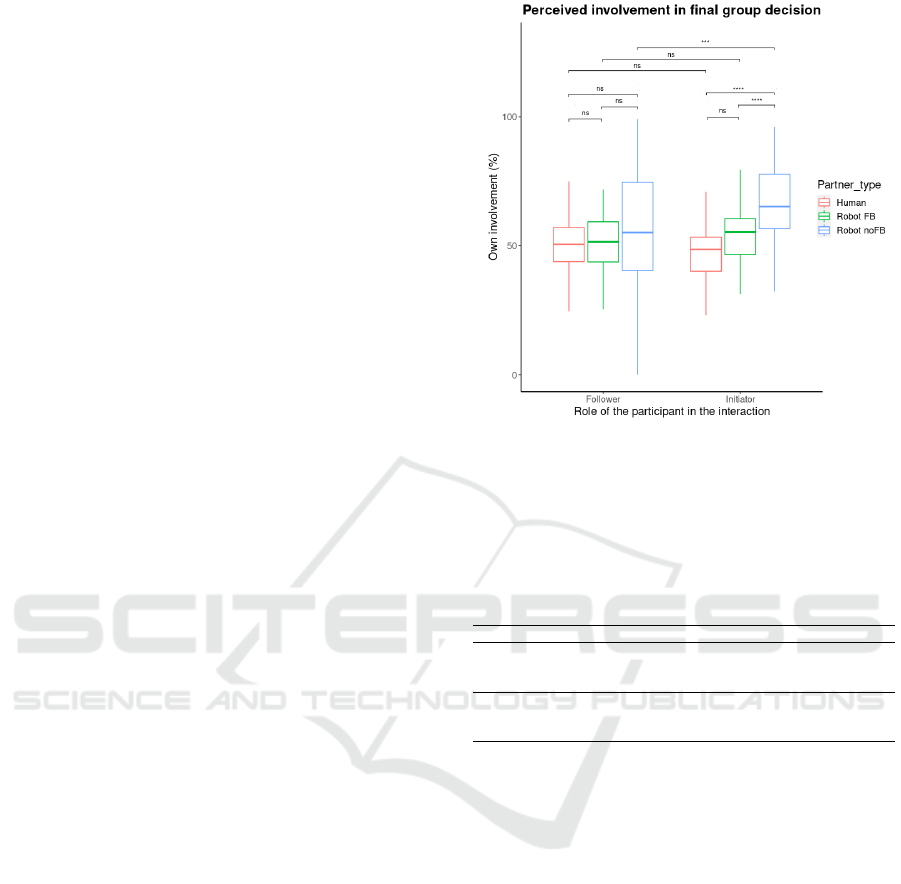

The analysis of the average exchange of effort focuses on physical cooperation in the strict sense. It yields several interesting results on the effects induced by the force bias and on human-robot cooperation. Figure 6 and the results of the statistical tests highlight two major elements. Firstly, the results in Robot FB are slightly different from those of the other two groups. Secondly, the average forces exchanged with the virtual partner are lower and more tightly concentrated around the mean than those between humans.

Figure 6: Boxplot showing the mean values of the absolute

forces exchanged between partners.

Whereas Human and Robot noFB are invariant

to the Role (respectively, t(1,34)=0.23, p=1.0 and

t(1,34)=-0.69, p=1.0), Robot FB is not (t(1,34)=8.60,

p<0.001). Moreover, while the mean exchange

of effort of Robot FB:Initiator is close to that of

Human:Initiator (t(1,34)=-2.27, p=0.18) and Hu-

man:Follower (t(1,34)=-2.16, p=0.19), the mean ex-

change of effort of Robot FB:Follower remains sim-

ilar to that of Robot FB:Initiator (t(1,34)=-1.36,

p=0.73) and Robot noFB:Initiator (t(1,34)=-0.33,

p=1.0). The Robot FB:Initiator group demonstrates

that the addition of a force bias significantly changed

the nature of interactions beyond its own effect. In-

deed, it is logical to observe an increase in interaction

forces when a force bias is introduced. However, the

force bias’s magnitude is only 0.3N, yet we observe a

significant increase in average forces (t(1,34)=-10.18,

p<0.001), around 0.7N. This suggests that the light

force bias has a direct effect on the overall interaction.

Although the incorporation of a force bias en-

hances interactions with the VP, its communicative

capabilities remain constrained. The first constraint

manifests in the significant variability of average ef-

fort within the Robot FB condition as a function of

the Role, whereas outcomes in the Human condition

indicate that Role should exert no discernible influ-

ence. Our observations during several passages re-

vealed that participants tended to assume a domi-

nant role in their interactions with the virtual part-

ner, while their interactions with each other were

more evenly distributed. This observation, in con-

junction with our findings, suggests that our virtual

partner elicits specific behavioral responses from par-

ticipants: either the human participant assumes lead-

ership, and the virtual partner resists or follows, or

the virtual partner assumes leadership and the human

adopts a passive-like demeanor, resulting in minimal


resistance from him/her. The second limitation is evi-

dent in the disparity in variances between the Human

condition and the other two groups. These two lim-

itations underscore not a constraint of the force bias

effect, but rather a limitation inherent in the virtual

partner model employed. While our model accounts

for the actions of the human partner in its decision-

making process, its capacity to generate a range of ef-

forts is overly restricted, necessitating modifications

to the model.

4.2 Sense of Agency - Implicit Level

The ANOVA highlights the effect of the Delay on participants’ time interval perceptions (F(1,34)=44.367, p<0.001). In contrast, neither the Role (F(1,34)=0.38, p=0.54) nor the type of partner (F(2,68)=0.98, p=0.38) had a significant effect. This result is surprising, as it differs from that obtained in a very similar previous experiment (Grynszpan et al., 2019). In the latter, we observed a significant difference between the Human-Human group and the Human-Robot noFB group, which is no longer the case here with the equivalent groups Human and Robot noFB. We believe that this difference is due to an overestimated effect size, resulting in a sample size too small to draw firm conclusions on this part of the results.

4.3 Sense of Agency - Explicit Level

The ANOVA on the feeling of control underlines the

effect of the interaction between the Type of partner

and the Role (F(1.69,57.53)=12.402, p<0.001).

Firstly, we observe that this feeling of control

varies very little in the Human group in relation to

the role assumed (t(2,69)=-1.72, p=0.63). A similar

observation can be made for Robot FB (t(2,69)=0.78,

p=1.0) but not for Robot noFB. The responses for

Robot noFB showed a significant difference in the

means with Human (t(2,69)=3.67, p=0.005), and different variances depending on the role assumed by

the participant. Moreover, the mean values for the

Human and Robot FB groups are around 50%, while

they are higher for Robot noFB. These initial obser-

vations highlight the effects of adding the light force

bias, namely (1) reducing the standard deviation of

responses, (2) making them independent of the role

played, and (3) bringing the feeling of control with

the VP closer to that with a human partner.

Refining the analysis according to each participant’s role reveals slight differences in results. Table 1 shows similar trends for both roles, but with greater differentiation when the human initiates the group’s first movement. Looking at our results, we believe that this difference is due to a large standard deviation in the Robot noFB:Follower group.

Figure 7: Boxplot showing the estimation of participants’ level of involvement in group decisions.

Table 1: Main results of the posthoc test on participants’

level of involvement in the group final decision.

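| Participant’s role | Group 1 | Group 2 | t(2, 69) | Adj. p-value |
|---|---|---|---|---|
| Initiator | Human | Robot noFB | 8.989 | < 0.001 |
| Initiator | Human | Robot FB | 1.994 | 0.45 |
| Initiator | Robot FB | Robot noFB | 6.663 | < 0.001 |
| Follower | Human | Robot noFB | 1.998 | 0.45 |
| Follower | Human | Robot FB | 0.25 | 1 |
| Follower | Robot FB | Robot noFB | 1.718 | 0.63 |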

Our results show that the addition of a light force bias alone had a significant impact on the human partner’s sense of agency. Indeed, the significant differences observed between the Human and Robot noFB groups were markedly reduced between the Human and Robot FB groups. Thus, continuously feeling a light constant force bias altered participants’ perception of control.

5 DISCUSSION

The findings presented in this paper show that inte-

grating a subtle constant force bias into physical inter-

actions between humans and virtual partners slightly

alters their exchanges, rendering them more akin to

those observed in human-human interaction. Specif-

ically, notable distinctions emerge when comparing

virtual partners with and without this force bias across

two dimensions: the explicit perception of agency and

the magnitude of interaction forces. In both aspects,

the virtual partner employing the force bias demon-


strates outcomes more reminiscent of human inter-

action. These disparities underscore that the force

bias not only influences the magnitude of forces ex-

changed between partners but also impacts the hu-

man’s perceptual experience of interaction with their

virtual counterpart.

Nevertheless, our observations underscore signif-

icant disparities between human-human and human-

robot pairings, despite the presence of the force bias.

Participants often exhibit dominance in interactions

with the robot, albeit to a lesser extent when the force

bias is active. Furthermore, instances where partic-

ipants opt to follow the virtual partner are accom-

panied by a passive-like demeanor not typically ob-

served in human-human interactions. Additionally,

exchanges with virtual partners entail considerably

smaller efforts compared to interactions between hu-

mans.

The effect of the force bias is highlighted through

mean force exchanges and the explicit measurement

of the sense of agency. However, no specific effect

was observed at the level of implicit measurement.

In a previous and similar work (Grynszpan et al.,

2019), significant differences in implicit agency mea-

surement were obtained between human-human and

human-robot dyads. We believe two reasons explain

this phenomenon. Firstly, the effect size of partner

type on implicit measurement may have been overes-

timated, indicating a number of participants too small

to draw any conclusion. Secondly, implicit agency

measurement can be disrupted by the experimental

framework. According to (Howard et al., 2016), this

measurement requires a certain amount of cognitive

resources, and when these resources are already en-

gaged in another task, the measurement is disrupted

and unreliable. Tasks capable of disrupting the mea-

surement include memory exercises and light physical

efforts (such as pulling on an elastic band). Our prior findings suggested that the efforts exchanged between the partners did not induce a cognitive load substantial enough to interfere with the implicit measurement of the sense of agency. However, our recent findings appear to support the assertion of (Howard et al., 2016) that such efforts can disrupt this measurement. This observation is also of interest as it underscores a potential angle of analysis of kinesthetic interaction that focuses on the cognitive load.

6 CONCLUSIONS

This study examines the impact of a consistent low-

intensity force bias applied throughout dyadic kines-

thetic cooperation. Findings indicate that the force

bias fosters an interaction more akin to human-human

engagement when the human initiates movement,

generating forces comparable to those exerted by a

human partner. These promising outcomes motivate

further investigation into this force bias.

We propose that the physical connection be-

tween two humans mirrors a traditional communi-

cation channel, allowing a kinesthetic discourse be-

tween them. Building upon this premise, we in-

tend to enhance the interaction capabilities of our

Virtual Partner (VP) models by integrating elements

of non-verbal communication from other languages.

Through preliminary investigations into sign lan-

guage, we have identified intriguing parallels be-

tween sign language and the kinesthetic communica-

tion channel. Certain components of sign language

can be feasibly implemented in VP models by using

a variable force bias. Subsequent works will delve

into these modalities in greater depth and employ a

methodology akin to the one utilized here to exam-

ine their impact on the physical interaction between

humans and robots.

ACKNOWLEDGMENTS

This work is funded by the French research agency

(ANR) as part of the ANR2020 PRC Lexikhum

project.

REFERENCES

Abbink, D. A., Mulder, M., and Boer, E. R. (2012). Haptic

shared control: Smoothly shifting control authority?

Cognition, Technology and Work, 14:19–28.

Al-Saadi, Z., Sirintuna, D., Kucukyilmaz, A., and Basdo-

gan, C. (2021). A novel haptic feature set for the clas-

sification of interactive motor behaviors in collabora-

tive object transfer.

Börner, H., Carboni, G., Cheng, X., Takagi, A., Hirche, S., Endo, S., and Burdet, E. (2023). Physically interacting humans regulate muscle coactivation to improve visuo-haptic perception.

Candidi, M., Curioni, A., Donnarumma, F., Sacheli, L. M.,

and Pezzulo, G. (2015). Interactional leader-follower

sensorimotor communication strategies during repeti-

tive joint actions. Journal of the Royal Society Inter-

face, 12.

Capozzi, F., Becchio, C., Garbarini, F., Savazzi, S., and Pia,

L. (2016). Temporal perception in joint action: This is

my action. Consciousness and Cognition, 40:26–33.

De Santis, A., Lippiello, V., Siciliano, B., and Luigi,

V. (2007). Human-Robot Interaction Control Using

Force and Vision, volume 353, pages 51–70.

Feth, D., Groten, R., Peer, A., and Buss, M. (2011). Hap-

tic human-robot collaboration: Comparison of robot


partner implementations in terms of human-likeness

and task performance. Presence, 20:173–189.

Franz, F., Edgar, E., Alebert-Georg, L., and Axel, B. (2007).

G*power 3: A flexible statistical power analysis pro-

gram for the social, behavioral, and biomedical sci-

ences. Behavior Research Methods, pages 175–191.

Ganesh, G., Takagi, A., Osu, R., Yoshioka, T., Kawato,

M., and Burdet, E. (2014). Two is better than one:

Physical interactions improve motor performance in

humans. Scientific Reports, 4.

Goodrich, M. and Schultz, A. (2007). Human-robot inter-

action: A survey. Foundations and Trends in Human-

Computer Interaction, 1:203–275.

Grynszpan, O., Sahaï, A., Hamidi, N., Pacherie, E., Berberian, B., Roche, L., and Saint-Bauzel, L. (2019). The sense of agency in human-human vs human-robot joint action. Consciousness and Cognition, 75.

Haggard, P. (2005). Conscious intention and motor cogni-

tion. Trends in Cognitive Sciences, 9:290–295.

Haggard, P., Clark, S., and Kalogeras, J. (2002). Volun-

tary action and conscious awareness. Nature Neuro-

science, 5:382–385.

Howard, E. E., Edwards, S. G., and Bayliss, A. P. (2016).

Physical and mental effort disrupts the implicit sense

of agency. Cognition, 157:114–125.

Ivanova, E., Carboni, G., Eden, J., Krüger, J., and Burdet, E. (2020). For motion assistance humans prefer to rely on a robot rather than on an unpredictable human. IEEE Open Journal of Engineering in Medicine and Biology, 1:133–139.

Jarrassé, N., Sanguineti, V., and Burdet, E. (2013). Slaves no longer: review on role assignment for human-robot joint motor action. Adaptive Behavior, 22:70–82.

Li, Y., Carboni, G., Gonzalez, F., Campolo, D., and Burdet,

E. (2019). Differential game theory for versatile phys-

ical human–robot interaction. Nature Machine Intelli-

gence, 1:36–43.

Madan, C. E., Kucukyilmaz, A., Sezgin, T. M., and Bas-

dogan, C. (2015). Recognition of haptic interaction

patterns in dyadic joint object manipulation. IEEE

Transactions on Haptics, 8:54–66.

Mielke, E. A., Townsend, E. C., and Killpack, M. D. (2017).

Analysis of rigid extended object co-manipulation by

human dyads: Lateral movement characterization.

Mörtl, A., Lawitzky, M., Kucukyilmaz, A., Sezgin, M., Basdogan, C., and Hirche, S. (2012). The role of roles: Physical cooperation between humans and robots. International Journal of Robotics Research, 31:1656–1674.

Obhi, S. S. and Hall, P. (2011). Sense of agency and in-

tentional binding in joint action. Experimental Brain

Research, 211:655–662.

Parker, C. A. C. and Croft, E. A. (2011). Experimen-

tal investigation of human-robot cooperative carrying.

pages 3361–3366. Institute of Electrical and Electron-

ics Engineers (IEEE).

Peshkin, M., Colgate, J., Wannasuphoprasit, W., Moore, C.,

Gillespie, R., and Akella, P. (2001). Cobot architec-

ture. IEEE Transactions on Robotics and Automation,

17(4):377–390.

Pezzulo, G., Roche, L., and Saint-Bauzel, L. (2021). Haptic

communication optimises joint decisions and affords

implicit confidence sharing. Scientific Reports, 11.

Reed, K. and Peshkin, M. (2008). Physical collaboration

of human-human and human-robot teams. Haptics,

IEEE Transactions on, 1:108–120.

Roche, L., Richer, F., and Saint-Bauzel, L. (2018). The

semaphoro haptic interface: a real-time low-cost

open-source implementation for dyadic teleoperation.

Roche, L. and Saint-Bauzel, L. (2021). Study of kines-

thetic negotiation ability in lightweight comanipula-

tive decision-making tasks: Design and study of a vir-

tual partner based on human-human interaction obser-

vation.

Takagi, A., Ganesh, G., Yoshioka, T., Kawato, M., and Bur-

det, E. (2017). Physically interacting individuals es-

timate the partner’s goal to enhance their movements.

Nature Human Behaviour, 1.

Takagi, A., Usai, F., Ganesh, G., Sanguineti, V., and Burdet,

E. (2018). Haptic communication between humans

is tuned by the hard or soft mechanics of interaction.

PLoS Computational Biology, 14.

van der Wel, R. P. R. D., Knoblich, G., and Sebanz, N. (2011). Let the force be with us: Dyads exploit haptic coupling for coordination. Journal of Experimental Psychology: Human Perception and Performance, 37(5):1420–1431.
