Construction of a Questionnaire to Measure the Learner Experience in Online Tutorials

Martin Schrepp (1, a) and Harry Budi Santoso (2, b)

(1) SAP SE, Hasso-Plattner-Ring 7, 69190 Walldorf, Germany

(2) Fakultas Ilmu Komputer, Universitas Indonesia, Depok, Indonesia

Keywords:

Online Tutorials, Learner Experience, Measurement, Questionnaire, User Experience, Surveys.

Abstract:

Online tutorials are efficient tools to support learning. They can be easily delivered over company web pages

or common video platforms. In commercial contexts, they have the potential to reduce service load, replace

product documentation, allow customers to explore more complex products over free trials, and ultimately

simplify the learning process for customers. This can lead to increased customer satisfaction and loyalty.

But if tutorials are not well-designed, then these goals cannot be achieved. Therefore, it is important to be

able to measure the satisfaction of learners with a tutorial. We describe the construction of a questionnaire

that measures the learner experience with tutorials. The questionnaire was developed by creating a set of

candidate items, which were then used by participants in a study to rate several tutorials. The results of a

principal component analysis suggest that two components are relevant. The items in the first component

(named Structural Clarity) describe that a tutorial is well-structured by a logical sequence of steps that are

easy to follow and understand. The second component (named Transparency) refers to the way the tutorial

communicates the underlying learning goals, prerequisites, and concepts and how they can be applied in

practice.

1 INTRODUCTION

Online tutorials are an efficient method for knowledge

transfer and are therefore frequently used in many

different domains (Van der Meij and van der Meij,

2013). Typical examples are tutorials that explain how to operate (e.g., how to use a TV remote control), maintain (e.g., how to change the SIM card of a smartphone), or repair (e.g., how to replace defective parts) technical devices, how to work efficiently with established software products (e.g., how to perform typical but not fully intuitive tasks with MS Excel or MS Word), or how to do special tasks in a programming environment (e.g., tutorials concerning web development on platforms like SelfHTML).

Previous work has investigated the use of online tutorials for knowledge and problem-solving transfer (Mayer, 2005; Mautone and Mayer, 2001). The design and use of tutorials in websites or applications are relevant for learnability (Nielsen, 2012) and for efforts to enhance the user experience by following a human-centered design approach (Husseniy et al., 2021).

a https://orcid.org/0000-0001-7855-2524

b https://orcid.org/0000-0003-0459-0493

The term tutorial is used in various contexts. In

this paper, we focus on instructional materials that

teach specific skills or the ability to perform specific

tasks with products in a sequence of steps without the

assistance of an instructor or the ability to ask ques-

tions in a community. Larger online courses covering

general topics or concepts, as well as pure documen-

tation, are not considered in this study, although dis-

tinguishing such approaches from tutorials can some-

times be challenging.

Steps of tutorials often include demonstrations,

detailed examples or exercises that help the learner

to understand the topic covered by the tutorial. They

can be pure video tutorials or textual step-by-step de-

scriptions in a web-page (which are often supported

by illustrations or even interactive elements to try out

certain aspects) or a combination of these techniques.

Tutorials have a variety of interesting use cases.

However, their effectiveness relies on their ability to

support users in their learning tasks. This means that

tutorials must be well-designed, engaging, and easy to

understand. If this is not the case, a tutorial may have a negative impact on the market success of a product.

Schrepp, M. and Santoso, H. B.

Construction of a Questionnaire to Measure the Learner Experience in Online Tutorials.

DOI: 10.5220/0013014700003825

In Proceedings of the 20th International Conference on Web Information Systems and Technologies (WEBIST 2024), pages 315-322

ISBN: 978-989-758-718-4; ISSN: 2184-3252

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Let us look at some examples to explain this in more detail. A tutorial is often the first contact of a user with a product. For example, software developers often use tutorials offered with free trial versions of cloud platforms to decide whether they want to use a platform for a project. If the tutorials are not helpful, they might switch to another platform. Customers often search for tutorials on the web if they have an issue with a product and do not want to call support. If a tutorial offered by the manufacturer does not help to solve the issue, this can cause anger and a loss of loyalty.

It is important to note that different persons may

have varying opinions regarding the quality of a tuto-

rial, i.e. the perception of learner experience is purely

subjective, which is similar to the concept of user

experience (Schrepp, 2021). To gain a better under-

standing of different opinions, it is thus necessary to

gather feedback from larger groups of learners. On-

line questionnaires are an efficient tool for this pur-

pose. They can be placed at the end of a tutorial or

triggered when the learner completes the tutorial or

reaches a certain point in the tutorial.

We describe in this paper the development of a

questionnaire to measure the perceived subjective ex-

perience of learners with a tutorial. Such a question-

naire can be used to continuously measure the learner

experience and therefore act as an element for quality

control.

2 TYPICAL APPLICATION

SCENARIOS FOR TUTORIALS

There are several typical usage scenarios for tutorials,

especially in the commercial context.

Online tutorials empower customers to find answers to their questions and troubleshoot issues on their own. This reduces the need to reach out to customer support (Hsieh, 2005). Thus, online tutorials can help to reduce support calls and save resources in customer support. Such tutorials are a cost-efficient way to train users (Van der Meij and van der Meij, 2013). However, a badly realized tutorial can cause quite negative emotions towards a product or vendor. Thus, a bad learner experience can negatively impact the learner's willingness to use or purchase a product (Beaudry and Pinsonneault, 2005; Venkatesh et al., 2003). For example, for mobile online games, an effect of tutorial quality on user adoption was found (Zhiyong et al., 2021).

Tutorials provide step-by-step instructions accom-

panied by visual aids such as videos, images, and

interactive elements. Good online tutorials foster a

sense of interactivity. Users can actively participate

in the learning process, following along with the in-

structions, trying out examples, and receiving imme-

diate feedback. Thus, online tutorials are often more

attractive than traditional documentation. But tutorials are often hard to adjust to changes (especially if they contain videos) and thus have a high risk of becoming outdated. Tutorials can also be perceived by users as

disruptive and are often skipped and forgotten (Laub-

heimer, 2023).

Tutorials are widely used to support customers with engaging learning materials. For example, the Microsoft support page contains a large number of short tutorials (in most cases a short video accompanied by a step-by-step textual description) that demonstrate features of Microsoft products.

Offering free cloud-based demo systems or time-

limited free downloads for commercial software can

be an effective strategy to support sales. However,

granting free access to a product may not be enough,

particularly for more complicated products that re-

quire some knowledge to fully explore their capabili-

ties. Users who lack prior knowledge may struggle or

overlook certain usage scenarios and features if they

explore the product on their own. To address this, tu-

torials play a crucial role in guiding users and show-

casing the product’s potential functionalities, making

them a valuable marketing tool. Prominent examples are cloud platforms such as Amazon Web Services, IBM Cloud Platform, Microsoft Azure, or SAP Business Technology Platform. They all offer developers free trial accounts and support learning with many tutorials that help to explore the product features in a guided and easy-to-understand sequence.

Thus, good online tutorials allow customers to fa-

miliarize themselves with specific topics comfortably

with low effort. They are available around the clock, allowing customers to access information at their convenience. This eliminates the need to wait for customer

support agents to be available or endure long hold

times. Customers can access tutorials whenever they

encounter an issue, empowering them to resolve prob-

lems quickly and efficiently. That means they can

help to increase customer satisfaction and loyalty.

3 REQUIREMENTS FOR THE

TUTORIAL QUESTIONNAIRE

There are several papers that evaluate tutorials in cer-

tain domains using surveys with some ad hoc formu-

lated questions, for example (Brill and Park, 2011).

Our goal is to create a standardized questionnaire that

can be used for many tutorials and is thus able to pro-

duce comparable results. Thus, the items in the ques-

tionnaire should not be too specific and should not

relate to aspects of a tutorial that are only relevant in

some special areas.

A tutorial questionnaire can be used to continuously evaluate running online tutorials, for example, through a feedback link placed at the end of the tutorial or a dialog that asks for feedback, launched at special events in the tutorial or after a specific delay from the starting point of the tutorial.

It is important that the questionnaire contains only a small number of items and can thus be completed quickly by the learners. There are two main reasons for this:

• Many tutorials are rather short. The time spent

on feedback must be somehow proportional to the

duration of the tutorial.

• Tutorials are typically only visited once. Thus,

users expect no benefit from their feedback. This

is different to products that are used frequently,

since in this case users assume that their feedback

contributes to product improvements in the near

future and is thus potentially beneficial for them-

selves. Therefore, the motivation to provide feed-

back will be lower than that for a usual product

experience questionnaire.

Thus, a questionnaire with a large number of items will often not be completed, resulting in a high dropout rate (Schrepp, 2024). This reduces the amount of feedback collected and may also introduce a bias, since mainly very satisfied or very dissatisfied learners may be motivated to complete the questionnaire.

4 CONSTRUCTION PROCESS

We follow a classical process of questionnaire development in UX (Schrepp, 2021). Several established UX questionnaires were constructed according to this method, for example, SUMI (Kirakowski and Corbett, 1993), AttrakDiff2 (Hassenzahl et al., 2003), UEQ (Laugwitz et al., 2008), or VISAWI (Moshagen and Thielsch, 2010). The process consists of the following steps:

1. Select a larger initial set of candidate items that

describe aspects of tutorial quality and have a

common format.

2. Collect a data set. A larger sample of participants

rates various online tutorials with all candidate

items.

3. Analyse the data by principal components analy-

sis (Pearson, 1901; Hotelling, 1933). Determine

the number of components required to explain the

variance in the data sufficiently.

4. Choose the most relevant components as scales

and per scale determine the items with the high-

est loadings on the underlying component as items

for the questionnaire.

4.1 Creation of Candidate Items

To create an initial list of items, several research papers describing the impact of different quality aspects on the perception of the overall learner experience were analyzed.

Tutorials typically use step-by-step instructions to demonstrate important features, task flows or concepts of a product. However, if this step-by-step explanation does not work as described, the learner is quickly lost and frustrated (Mirhosseini and Parnin, 2020).

There are multiple reasons that can cause such effects, for example, missing or poorly written instructions, or a tutorial that is simply outdated. In addition, it is important that the tutorial (especially a longer one) is encouraging and interesting to follow. This is related to the use of different presentation techniques, for example videos, interactive diagrams, or executable code snippets (Head et al., 2020). Other problems can result from ignoring learners’ prior knowledge or from not stating clearly what knowledge level is assumed for starting a tutorial (Kim and Ko, 2017). In addition, practice and sufficient feedback help learners to follow interactive tutorials (Kim and Ko, 2017). Another important point is the length of a tutorial (Lamontagne et al., 2021). If a video tutorial is long and covers a complex topic, this can result in a high cognitive load for the learner. In such cases it makes sense to split the tutorial into several less complex and shorter parts (Spanjers et al., 2011).

In addition to scientific papers, there is a wealth of information on the web on how to create a “good” tutorial. Several blog posts and even “tutorials on how to write a good software tutorial” exist. We searched the web extensively for guidelines and tips. Quality aspects of “good” tutorials were collected and then condensed into several statements (in English).

This initial list of statements was reviewed con-

cerning their formulations and potential duplicates

were removed. Then the remaining statements were

further consolidated concerning their formulations

until an agreed version was reached. This resulted in

the following list of candidate items:

• Q1: The content of the tutorial fits my needs.

• Q2: The tutorial contains only relevant informa-

tion.

• Q3: I am satisfied with the duration of the tutorial.

• Q4: In my opinion the tutorial is well-structured.

• Q5: The content of the tutorial is easy to under-

stand.

• Q6: The duration and preconditions of the tutorial

are as expected.

• Q7: It is interesting to work through the tutorial.

• Q8: The tutorial motivates me to learn more about

this topic.

• Q9: The language used in the tutorial is simple

and free of jargon.

• Q10: Technical terms are adequately explained.

• Q11: The tutorial is divided into manageable steps

that form a logical sequence.

• Q12: It is clearly stated at the beginning of the

tutorial what learners will achieve.

• Q13: The tutorial includes practical examples and

demonstrations.

• Q14: Visuals like images, diagrams, and videos

are used to explain complex concepts.

• Q15: The content of the tutorial is up to date.

• Q16: The tutorial explains not just how to do

something, but why it’s done that way.

• Q17: All steps in the tutorial worked exactly as

described.

• Q18: Required prerequisites for the tutorial are

explained at the start of the tutorial.

• Q19: The tutorial is easy to follow.

• Q20: The examples given in the tutorial are prac-

tically relevant.

• Q21: The tutorial covers all the features I am in-

terested in.

• Q22: The pace of the tutorial is appropriate.

Since the data for the construction process were collected in Indonesia, the items were carefully translated into Indonesian. The translations were reviewed independently by several native speakers and adjusted in an iterative process until an agreed translation was reached. The Indonesian translations are contained in the Appendix of this paper.

4.2 Data Collection

4.2.1 Participants

The participants were undergraduate students from various faculties of science and engineering, particularly computer science, at a number of universities spread across several provinces in Indonesia, including Jakarta, West Java, and Bengkulu. The students were contacted by email. Around 300 students were contacted, and 117 (61 male, 55 female, 1 unknown; average age 21.23 years, standard deviation 3.97 years) responded and filled out the questionnaire. Participation in the study was entirely voluntary.

4.2.2 Rated Tutorials

The participants could select one of seven different tu-

torials that describe how to use the following common

applications:

• SATUSEHAT: A mobile application designed to

provide comprehensive health services and infor-

mation, ensuring a continuum of care for Indone-

sia’s citizens. By standardizing and integrating

electronic medical records, this platform connects

various health information systems.

• DJP Online: A service managed by Indonesia’s

Directorate General of Taxation (Direktorat Jen-

deral Pajak Republik Indonesia), offering a range

of tax-related applications and services for tax fil-

ers.

• Shopee: A well-known e-commerce platform in

Indonesia, where shoppers can conveniently find

a wide range of products, including clothes, elec-

tronics and other products, via this application.

This platform can be accessed both as a website

and a mobile app.

• Gojek: An app providing a range of on-demand

services including transportation and food deliv-

ery, where tutorials on how to use Gojek are gen-

erally accessible. The products of Gojek consist

of Transport and Logistics, Payments, Food and

Shopping as well as Business.

• Bukalapak: Another widely used e-commerce

platform in Indonesia, allowing users to purchase

or sell both new and second-hand items via this

application. Anyone in Indonesia has the opportu-

nity to sell their products on Bukalapak by estab-

lishing an economical online storefront, which ac-

commodates both single item and wholesale shop-

ping systems.

• Tokopedia: A digital shopping application pro-

viding a wide range of products from various

categories, with extensive Tokopedia instruction

guides readily accessible. Tokopedia’s online

marketplace delivers products through various

channels, including Marketplace, Official Stores,

Instant Commerce, Interactive Commerce, and

Rural Commerce, catering to a wide range of con-

sumer needs.

• Traveloka: A platform designed for booking

flights, hotels, buses and travels, train tickets and

other services. Traveloka offers comprehensive

tutorials to assist users in planning their trips more

effectively.

These tutorials were selected because the correspond-

ing products are very popular among the target group

and we could therefore assume that the students we

contacted knew at least one of these products and had

watched a corresponding tutorial.

4.2.3 Survey

The participants received an email with a short motivation and a link to open the survey.

The survey started with a short instruction, followed by two questions concerning the age and gender of the participant. Below these fields, the participants could select the tutorial they wanted to rate from a selection field showing all seven alternatives.

Below this selection, the 22 candidate items were shown. Each item had a 7-point response scale with the endpoints Fully Disagree and Fully Agree. After the last item, a button to submit the response was shown.

The survey could be completed within five to ten minutes.

4.3 Analysis of the Data

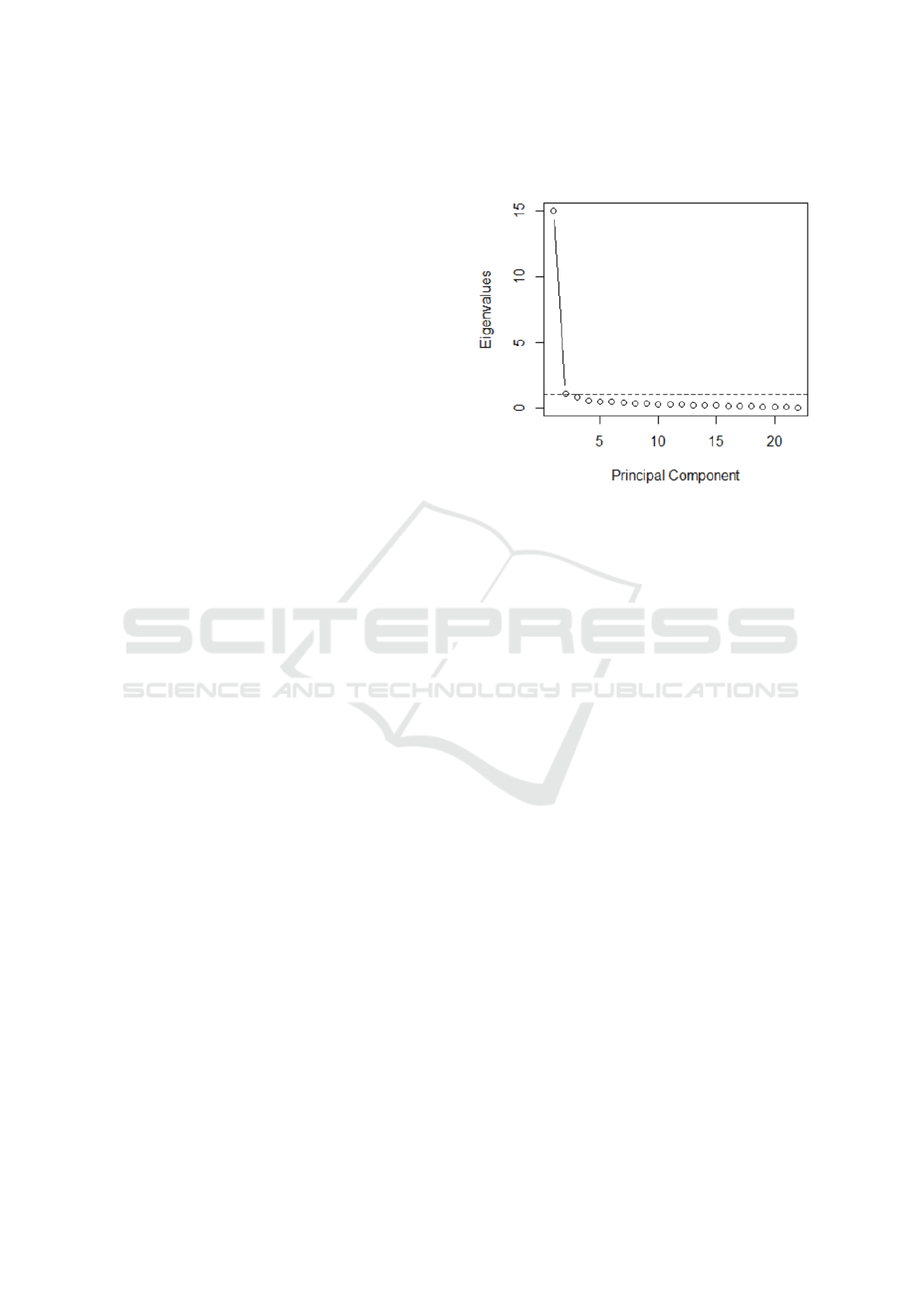

A principal component analysis with varimax rotation was performed. Figure 1 shows the eigenvalues of the potential components in order from largest to smallest. The higher the eigenvalue, the larger the share of the variance in the data that is explained by the component.

According to the scree test (Cattell, 1966) (determine the point after which eigenvalues differ only slightly) and the Kaiser-Guttman criterion (Guttman, 1954) (remove components with eigenvalues smaller than one), two components could be identified and were chosen as scales. These two components represent 73% of the variance in the data. A commonly used rule is to retain enough components to explain more than 70% of the variance in the original data (Jolliffe and Cadima, 2016). Thus, our two-component solution seems to be a reasonable choice.

Figure 1: Scree plot of the eigenvalues. The dashed line shows the cutoff value for the Kaiser-Guttman criterion.
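The two retention rules mentioned above (eigenvalue cutoff of one and share of explained variance) can be illustrated with a minimal sketch. The eigenvalues used here are made-up illustration values for a hypothetical five-item data set, not the eigenvalues shown in Figure 1, and the function names `kaiser_guttman` and `explained_variance` are assumptions introduced only for this example.

```python
def kaiser_guttman(eigenvalues, cutoff=1.0):
    """Return the number of components whose eigenvalue exceeds the cutoff."""
    return sum(1 for value in eigenvalues if value > cutoff)


def explained_variance(eigenvalues, n_components):
    """Share of the total variance explained by the n largest eigenvalues."""
    ordered = sorted(eigenvalues, reverse=True)
    return sum(ordered[:n_components]) / sum(ordered)


# Hypothetical eigenvalues of a correlation matrix for five items.
# For a correlation matrix the eigenvalues sum to the number of items.
example_eigenvalues = [2.9, 1.2, 0.5, 0.3, 0.1]

retained = kaiser_guttman(example_eigenvalues)
share = explained_variance(example_eigenvalues, retained)
print(f"retained components: {retained}, explained variance: {share:.0%}")
```

In practice the eigenvalues would come from the correlation matrix of the collected item ratings; the sketch only shows how the two criteria are applied once they are known.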

We decided to choose 4 items per component for the first version of the questionnaire. Therefore, the 4 items with the highest loadings on the corresponding component (see Table 1 in the Appendix) were selected. A commonly used heuristic considers component loadings greater than 0.4 as generally acceptable, see (Comrey and Lee, 2013). The loadings of our selected items are all above 0.7, so the items can safely be considered good representations of the components.
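The selection rule described above can be reproduced with a short sketch that ranks the items by their loadings. The loadings are copied from Table 1 in the Appendix; the names `LOADINGS` and `top_items` are assumptions made for this illustration and are not part of the original analysis.

```python
# Loadings copied from Table 1 in the Appendix: item -> (component 1, component 2).
LOADINGS = {
    "Q1": (0.771, 0.331), "Q2": (0.750, 0.319), "Q3": (0.762, 0.421),
    "Q4": (0.821, 0.345), "Q5": (0.872, 0.280), "Q6": (0.752, 0.428),
    "Q7": (0.636, 0.518), "Q8": (0.457, 0.589), "Q9": (0.560, 0.517),
    "Q10": (0.686, 0.606), "Q11": (0.816, 0.410), "Q12": (0.457, 0.702),
    "Q13": (0.301, 0.853), "Q14": (0.608, 0.529), "Q15": (0.586, 0.594),
    "Q16": (0.297, 0.801), "Q17": (0.594, 0.658), "Q18": (0.280, 0.796),
    "Q19": (0.793, 0.451), "Q20": (0.630, 0.633), "Q21": (0.622, 0.531),
    "Q22": (0.558, 0.636),
}


def top_items(loadings, component, k=4):
    """Return the k items with the highest loading on a component (index 0 or 1)."""
    ranked = sorted(loadings, key=lambda item: loadings[item][component], reverse=True)
    return ranked[:k]


structural_clarity = top_items(LOADINGS, component=0)
transparency = top_items(LOADINGS, component=1)
print(structural_clarity)  # items ordered by loading on component 1
print(transparency)        # items ordered by loading on component 2
```

The sketch ranks purely by loading size, as described in the text; it does not take cross-loadings on the other component into account.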

The items with the highest loadings on the first component are Q4, Q5, Q11, and Q19. These items are shown below:

• In my opinion the tutorial is well-structured.

• The content of the tutorial is easy to understand.

• The tutorial is divided into manageable steps that

form a logical sequence.

• The tutorial is easy to follow.

They describe that a tutorial is well-structured in a logical sequence that is easy to follow and understand. We therefore name this scale Structural Clarity.

The items with the highest loadings on the second

component are Q12, Q13, Q16, and Q18. These items

are shown below:

• It is clearly stated at the beginning of the tutorial

what learners will achieve.

• The tutorial includes practical examples and

demonstrations.

• The tutorial explains not just how to do some-

thing, but why it’s done that way.

• Required prerequisites for the tutorial are ex-

plained at the start of the tutorial.

These items refer to the way the tutorial communicates the underlying learning goals, prerequisites, and concepts and how they can be applied in practice. In other words, they describe how well the tutorial makes its purpose transparent to the learner. Therefore, we call this scale Transparency.

The Cronbach's alpha (Cronbach, 1951) values are 0.95 for the Structural Clarity scale and 0.89 for the Transparency scale, indicating a high internal consistency of both scales.
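For readers unfamiliar with the coefficient, the following minimal sketch computes Cronbach's alpha with the standard formula (number of items, sum of item variances, variance of the total score). The response data are hypothetical and serve only as an illustration; they are not the data collected in this study, and the function name `cronbach_alpha` is an assumption.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    n_items = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(n_items)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers of five respondents to a four-item scale (coded -3..+3).
example = [
    [3, 2, 3, 2],
    [1, 1, 2, 1],
    [-1, 0, -1, 0],
    [2, 2, 1, 2],
    [-2, -3, -2, -2],
]
print(round(cronbach_alpha(example), 2))
```

Values close to 1 indicate that the items of a scale are answered consistently by the respondents.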

In the resulting questionnaire (see Appendix), the first four items form the scale Structural Clarity, while the last four items form the scale Transparency. The answers are coded from -3 (Fully disagree) to +3 (Fully agree), thus a 0 represents a neutral evaluation. The scale score for Structural Clarity or Transparency is calculated as the mean over all items in the respective scale and all participants of a study. The overall tutorial quality score is the mean of the scale scores for Structural Clarity and Transparency. Thus, the questionnaire reports an overall score and two scores for the sub-scales.
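The scoring rule can be sketched as follows. The sketch assumes that raw answers are stored as integers from 1 (Fully disagree) to 7 (Fully agree) in questionnaire order; this storage format, the example data, and all names are assumptions made for illustration only.

```python
from statistics import mean

STRUCTURAL_CLARITY = range(0, 4)  # first four items of the questionnaire
TRANSPARENCY = range(4, 8)        # last four items of the questionnaire


def recode(raw):
    """Map a raw 1..7 answer to the -3..+3 coding (4 becomes the neutral 0)."""
    return raw - 4


def scale_score(responses, item_indices):
    """Mean over all items of a scale and all participants."""
    return mean(recode(row[i]) for row in responses for i in item_indices)


def tutorial_scores(responses):
    """Return (structural clarity, transparency, overall) for one tutorial."""
    clarity = scale_score(responses, STRUCTURAL_CLARITY)
    transparency = scale_score(responses, TRANSPARENCY)
    return clarity, transparency, mean([clarity, transparency])


# Hypothetical raw answers (1 = Fully disagree ... 7 = Fully agree) of two learners.
example = [
    [7, 7, 7, 7, 4, 4, 4, 4],
    [5, 5, 5, 5, 4, 4, 4, 4],
]
print(tutorial_scores(example))
```

Because both scales contain the same number of items, the overall score equals the mean over all eight recoded items.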

5 LIMITATION AND OUTLOOK

We described the development of a standard questionnaire to measure tutorial quality. Of course, the reliability and validity of the questionnaire, as well as its ability to differentiate between tutorials of different quality, must be evaluated in further studies.

Data collection for the construction was done in Indonesia. It must therefore be checked whether there were any culture-specific influences that had an impact on the selection of items. This is not very likely, since we know, for example, that the impact of cultural background on the importance of UX quality aspects is relatively low (Santoso and Schrepp, 2019). In addition, the investigated tutorials do not cover the full range of tutorials concerning length, complexity or topics. Thus, the scale structure must be confirmed by repeating the study in different countries and with different types of tutorials. The results presented in this paper are just the first step in the construction process.

Currently, the questionnaire is available in English and Indonesian. It is planned to develop further translations and to make the questionnaire as well as the translations available to researchers on a dedicated website where the material can be viewed and downloaded.

REFERENCES

Beaudry, A. and Pinsonneault, A. (2005). Understanding

user responses to information technology: A coping

model of user adaptation. MIS quarterly, pages 493–

524.

Brill, J. and Park, Y. (2011). Evaluating online tutorials for

university faculty, staff, and students: The contribu-

tion of just-in-time online resources to learning and

performance. International Journal on E-learning,

10(1):5–26.

Cattell, R. B. (1966). The scree test for the number of

factors. Multivariate Behavioral Research, 1(2):245–

276.

Comrey, A. L. and Lee, H. B. (2013). A first course in factor

analysis. Psychology press.

Cronbach, L. J. (1951). Coefficient alpha and the internal

structure of tests. Psychometrika, 16(3):297–334.

Guttman, L. (1954). Some necessary conditions for

common-factor analysis. Psychometrika, 19(2):149–

161.

Hassenzahl, M., Burmester, M., and Koller, F. (2003). AttrakDiff: Ein Fragebogen zur Messung wahrgenommener hedonischer und pragmatischer Qualität. Mensch & Computer 2003: Interaktion in Bewegung, pages 187–196.

Head, A., Jiang, J., Smith, J., Hearst, M. A., and Hartmann,

B. (2020). Composing flexibly-organized step-by-step

tutorials from linked source code, snippets, and out-

puts. In Proceedings of the 2020 CHI Conference on

Human Factors in Computing Systems, pages 1–12.

Hotelling, H. (1933). Analysis of a complex of statistical

variables into principal components. Journal of Edu-

cational Psychology, 24(6):417–441.

Hsieh, C. (2005). Implementing self-service technology to

gain competitive advantages. Communications of the

IIMA, 5(1):77–83.

Husseniy, N., Abdellatif, T., and Nakhil, R. (2021). Im-

proving the websites user experience (ux) through the

human-centered design approach (an analytical study

targeting universities websites in egypt). Journal of

Design Sciences and Applied Arts, 2(2):24–31.

Jolliffe, I. T. and Cadima, J. (2016). Principal compo-

nent analysis: a review and recent developments.

Philosophical transactions of the royal society A:

Mathematical, Physical and Engineering Sciences,

374(2065).

Kim, A. S. and Ko, A. J. (2017). A pedagogical analy-

sis of online coding tutorials. In Proceedings of the

2017 ACM SIGCSE Technical Symposium on Com-

puter Science Education, pages 321–326.

Kirakowski, J. and Corbett, M. (1993). The software us-

ability measurement inventory. British Journal of Ed-

ucational Technology, 24(3):210–212.

Lamontagne, C., Sénécal, S., Fredette, M., Labonté-LeMoyne, É., and Léger, P.-M. (2021). The effect of the segmentation of video tutorials on user’s training experience and performance. Computers in Human Behavior Reports, 3.

Laubheimer, P. (2023). Onboarding tutorials vs. contextual

help, https://www.nngroup.com/articles/onboarding-

tutorials/ (last accessed: April 9, 2024).

Laugwitz, B., Held, T., and Schrepp, M. (2008). Con-

struction and evaluation of a user experience question-

naire. In HCI and Usability for Education and Work:

4th Symposium of the Workgroup Human-Computer

Interaction and Usability Engineering of the Aus-

trian Computer Society, USAB 2008, Graz, Austria,

November 20-21, 2008. Proceedings 4, pages 63–76.

Springer.

Mautone, P. D. and Mayer, R. E. (2001). Signaling as a cog-

nitive guide in multimedia learning. Journal of edu-

cational Psychology, 93(2):377–389.

Mayer, R. E. (2005). The Cambridge handbook of multime-

dia learning. Cambridge university press.

Mirhosseini, S. and Parnin, C. (2020). Docable: Evaluating

the executability of software tutorials. In Proceedings

of the 28th ACM Joint Meeting on European Software

Engineering Conference and Symposium on the Foun-

dations of Software Engineering, pages 375–385.

Moshagen, M. and Thielsch, M. T. (2010). Facets of visual

aesthetics. International Journal of Human-Computer

Studies, 68(10):689–709.

Nielsen, J. (2012). Usability 101: Introduction to

usability, https://www.nngroup.com/articles/usability-

101-introduction-to-usability/ (last accessed: April 9,

2024).

Pearson, K. (1901). On lines and planes of closest fit to

systems of points in space. The London, Edinburgh,

and Dublin Philosophical Magazine and Journal of

Science, 2(11):559–572.

Santoso, H. B. and Schrepp, M. (2019). The impact of cul-

ture and product on the subjective importance of user

experience aspects. Heliyon, 5(9).

Schrepp, M. (2021). User Experience Questionnaires: How

to use questionnaires to measure the user experience

of your products? KDP, ISBN-13: 979-8736459766.

Schrepp, M. (2024). Designing and analyzing question-

naires and surveys. In User Experience Methods and

Tools in Human-Computer Interaction, pages 121–

169. CRC Press.

Spanjers, I. A., Wouters, P., Van Gog, T., and Van Mer-

rienboer, J. J. (2011). An expertise reversal effect of

segmentation in learning from animated worked-out

examples. Computers in Human Behavior, 27(1):46–

52.

Van der Meij, H. and van der Meij, J. (2013). Eight guide-

lines for the design of instructional videos for software

training. Technical communication, 60(3):205–228.

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D.

(2003). User acceptance of information technology:

Toward a unified view. MIS quarterly, pages 425–478.

Zhiyong, X., Jiani, W., and Lijun, J. (2021). How game tu-

torials in mobile online games affect user adoption. In

2021 10th International Conference on Educational

and Information Technology (ICEIT), pages 264–268.

IEEE.

APPENDIX

Indonesian Items

The Indonesian translations of the English items are shown below. These translations were used for data collection.

• Q1: Materi tutorial sesuai dengan kebutuhan saya.

• Q2: Tutorial hanya memuat informasi yang rele-

van.

• Q3: Saya puas dengan durasi tutorial.

• Q4: Tutorial telah disusun dengan baik.

• Q5: Materi tutorial mudah dipahami.

• Q6: Durasi dan prasyarat yang diperlukan sesuai

ekspektasi.

• Q7: Tutorial menarik untuk diikuti.

• Q8: Tutorial memotivasi saya untuk mempelajari

lebih banyak hal/materi mengenai topik ini.

• Q9: Bahasa yang digunakan dalam tutorial seder-

hana dan bebas dari jargon.

• Q10: Istilah teknis dijelaskan dengan cukup

memadai.

• Q11: Tutorial disusun dengan tahapan yang logis.

• Q12: Apa yang akan dicapai pengguna aplikasi

disampaikan secara jelas di awal tutorial.

• Q13: Tutorial memuat contoh-contoh penerapan

dan demonstrasi.

• Q14: Visualisasi seperti gambar, diagram, dan

video digunakan untuk menjelaskan konsep yang

kompleks.

• Q15: Tutorial memuat konten terkini.

• Q16: Tutorial menjelaskan ’bagaimana’ dan

’mengapa’ sesuatu perlu dilakukan.

• Q17: Semua tahapan dalam tutorial berjalan seba-

gaimana yang dijelaskan.

• Q18: Prasyarat yang diperlukan untuk tutorial di-

jelaskan di awal tutorial.

• Q19: Tutorial mudah diikuti.

• Q20: Contoh-contoh yang diberikan dalam tuto-

rial bersifat praktis dan relevan.

• Q21: Tutorial mencakup semua fitur yang

menarik bagi saya.

• Q22: Tutorial dijelaskan dengan tempo yang

sesuai.

Loadings from PCA

Table 1: Loadings of the items on the two extracted components.

Item   Component 1   Component 2
Q1     0.771         0.331
Q2     0.750         0.319
Q3     0.762         0.421
Q4     0.821         0.345
Q5     0.872         0.280
Q6     0.752         0.428
Q7     0.636         0.518
Q8     0.457         0.589
Q9     0.560         0.517
Q10    0.686         0.606
Q11    0.816         0.410
Q12    0.457         0.702
Q13    0.301         0.853
Q14    0.608         0.529
Q15    0.586         0.594
Q16    0.297         0.801
Q17    0.594         0.658
Q18    0.280         0.796
Q19    0.793         0.451
Q20    0.630         0.633
Q21    0.622         0.531
Q22    0.558         0.636

English Questionnaire

In my opinion the tutorial is well-structured.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

The content of the tutorial is easy to understand.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

The tutorial is divided into manageable steps that

form a logical sequence.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

The tutorial is easy to follow.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

It is clearly stated at the beginning of the tutorial what

learners will achieve.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

The tutorial includes practical examples and demon-

strations.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

The tutorial explains not just how to do something,

but why it’s done that way.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

Required prerequisites for the tutorial are explained at

the start of the tutorial.

Fully disagree ◦ ◦ ◦ ◦ ◦ ◦ ◦ Fully agree

Indonesian Questionnaire

Tutorial telah disusun dengan baik.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju

Materi tutorial mudah dipahami.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju

Tutorial disusun dengan tahapan yang logis.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju

Tutorial mudah diikuti.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju

Apa yang akan dicapai pengguna aplikasi disam-

paikan secara jelas di awal tutorial.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju

Tutorial memuat contoh-contoh penerapan dan

demonstrasi.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju

Tutorial menjelaskan ’bagaimana’ dan ’mengapa’

sesuatu perlu dilakukan.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju

Prasyarat yang diperlukan untuk tutorial dijelaskan di

awal tutorial.

Sangat tidak setuju ◦ ◦ ◦ ◦ ◦ ◦ ◦ Sangat setuju
