Towards Increasing Robot Autonomy in CHARM Facility: Network Performance, 3D Perception, and Human Robot Interface

David Forkel (1,2,a), Pejman Habibiroudkenar (1,3,b), Enric Cervera (2), Raúl Marín-Prades (2,c), Lucas Comte (1,d), Eloise Matheson (1,e), Christopher McGreavy (1,f), Luca Buonocore (1,g), Josep Marín-Garcés (1,h) and Mario Di Castro (1,i)

1 European Organization for Nuclear Research (CERN), Meyrin, Switzerland

2 Jaume I University, Castellón de la Plana, Spain

3 Aalto University, Espoo, Finland

Keywords: Mobile Robotics, Wireless Communication, 3D Fusion, Human-Robot Interaction.

Abstract: The CHARMBot robot performs remote inspections in CERN’s CHARM facility and is currently operated through teleoperation. This study investigates the challenges and potential solutions for enhancing CHARMBot’s autonomy, focusing on network performance, 3D perception, and the graphical user interface (GUI). The communication network can be constrained, as demonstrated in Experiment 1, which measures the latency of transmitting compressed images to the operator at the control station. Under certain conditions, TCP buffer congestion causes images to reach the user with a delay of up to 10 seconds, depending on the network congestion, the requested resolution, and the compression rate, which significantly impairs manual control. To improve user interaction and environmental perception, CHARMBot needs to be equipped with advanced sensors such as a 3D LiDAR and a stereo camera. Enhancing the robot’s autonomy is crucial for safe interventions, allowing the remote operator to interact with the robot via a supervised interface. The experiments characterize the network’s performance in transmitting compressed images and propose a "lightweight" visualization mode. Preliminary experiments on 3D perception using LiDAR and stereo camera, as well as on mesh creation of the environment, are discussed. Future work will focus on better integration of these components and on conducting a proof-of-concept experiment to demonstrate the system’s safety.

1 INTRODUCTION

This article first describes the operational environment of the experiments. Subsequently, the importance of robotics at CERN and the role of CHARMBot are emphasized. The Human Robot Interface is then presented, both for operational use and for 3D perception. Thereafter, the experiments on network performance as well as on point cloud map generation and mesh creation are described. The article ends with the conclusions from the experiments and further work.

a https://orcid.org/0000-0001-5678-6186

b https://orcid.org/0000-0001-5678-6186

c https://orcid.org/0000-0002-2340-4126

d https://orcid.org/0009-0006-6876-2783

e https://orcid.org/0000-0002-1294-2076

f https://orcid.org/0000-0001-8424-1941

g https://orcid.org/0000-0001-5396-2519

h https://orcid.org/0000-0002-5400-3480

i https://orcid.org/0000-0002-2513-967X

1.1 The CERN High-Energy Accelerator Mixed-Field (CHARM) Facility

The CHARM facility is a unique irradiation infrastructure at CERN, long used for testing electronic equipment for CERN accelerators and accessible to users from the aerospace industry (CERN, 2024b).

CHARM offers a novel, cost-effective approach to radiation qualification. It enables the parallel batch screening of multiple components or boards and the testing of large systems, ranging from full racks to medium-sized satellites, under operational conditions. A notable application was the testing of the CELESTA CubeSat’s radiation model before its spaceflight, marking the first system-level test of an entire satellite. The facility is highly adaptable, providing a high-penetration radiation environment with adjustable maximum dose rates and fluxes through various configurations of target, shielding, and location. Space applications particularly benefit from CHARM’s capability to simultaneously test the three main radiation effects of interest: single event effects, total ionizing dose, and displacement damage. The radiation environment generated at CHARM is also ideal for atmospheric neutron characterization of avionic systems.

Forkel, D., Habibiroudkenar, P., Cervera, E., Marín-Prades, R., Comte, L., Matheson, E., McGreavy, C., Buonocore, L., Marín-Garcés, J. and Di Castro, M.

Towards Increasing Robot Autonomy in CHARM Facility: Network Performance, 3D Perception, and Human Robot Interface.

DOI: 10.5220/0013020100003822

In Proceedings of the 21st International Conference on Informatics in Control, Automation and Robotics (ICINCO 2024) - Volume 1, pages 445-452

ISBN: 978-989-758-717-7; ISSN: 2184-2809

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

In 2018, CERN established a commercial agreement with Innovative Solutions In Space (ISIS) BV, a Dutch company specializing in nanosatellite solutions (CERN, 2024c). In September 2018, ISIS experts conducted tests at CERN on two CubeSat systems that had previously flown multiple times, allowing for direct comparisons between ground testing and flight data. Future plans include offering ISIS customers the opportunity to perform irradiation tests at CHARM. Additionally, a test campaign in November 2018 supported the development of a radiation-tolerant micro-camera for satellite applications, in collaboration with MicroCameras & Space Exploration SA.

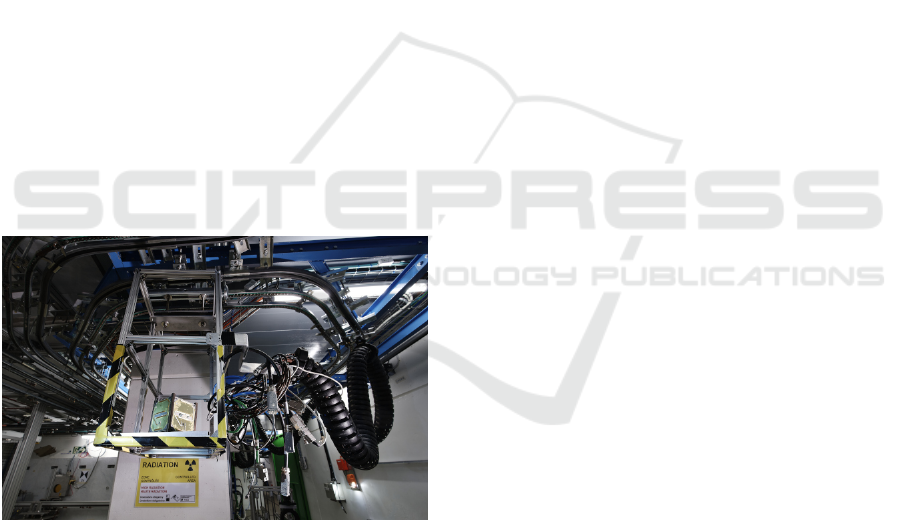

Figure 1: Radiation testing of the CELESTA satellite in the CHARM facility (CERN, 2024a).

1.2 The Importance of Robotics at CERN

CERN has a long-standing tradition of using robots for inspection, especially when dealing with highly radioactive components like ISOLDE targets (Catherall et al., 2017). Among these robots is the Train-Inspection-Monorail (TIM), specifically designed for the LHC. TIM integrates an electrical train with a monorail system originally built for the Large Electron-Positron (LEP) Collider. This versatile robot is employed for a variety of tasks, including visual inspections, functional tests of the 3,600 beam-loss monitors, and radiation surveys within the LHC accelerator tunnel (Castro et al., 2018). This not only helps to reduce accelerator downtime but also significantly decreases the need for human intervention in potentially hazardous environments. Additionally, the Measurement & Inspection Robot for Accelerators (MIRA) performs auto-piloted radiation surveys in the SPS, which improves the identification of beam losses and the efficiency of radiological risk planning in the accelerator complex (Forkel et al., 2023).

In the CHARM facility, a frame with the integrated test components is changed weekly. The installed frame is picked up by an automated guided vehicle (AGV) in the target zone and placed in the shielded stock area. The frame prepared with the new devices is then picked up by the AGV and placed in the target area. As this test device frame has to be placed very precisely, it was necessary to install cameras to monitor the exact positioning. In addition, further frames containing test devices are conveyed into the target area via monorails on the ceiling and near the wall. However, the gamma radiation dose in the target area is high enough for unprotected cameras to fail after just a few weeks, starting with individual dead pixels and ending with complete failure of the camera. It was therefore necessary to develop a robust robotic solution that could perform the visual inspection in the CHARM target area.

In recent years, 3D LiDAR sensing devices have become more compact and affordable, so a small robot platform can easily carry one such sensor together with a compact stereo vision system. The main advantage of combining 3D LiDAR with stereo cameras is robustness against different environmental conditions (Nickels et al., 2003). Moreover, dense stereo data fills the gaps in the sparse 3D LiDAR cloud (Maddern and Newman, 2016). The combination of both sensors can also improve object tracking (Dieterle et al., 2017) and long-range depth estimation (Choe et al., 2021).

1.3 CHARMBot - Mobile Robot Base for Remote Inspection of Target Exchange

CERN has developed a new robot base for remote inspection of the target exchange at the CHARM facility, the so-called CHARMBot. It follows the CERNBot modular robotic system, which consists of a mobile Mecanum wheel base together with the necessary sensors, such as a gamma radiation sensor and front and back cameras (Di Castro et al., 2018). Furthermore, the mechanical design features a robust and rigid structure made from aluminum profiles, which enhances durability and protects the internal components. Since the main task is remote inspection, CHARMBot is equipped with a 360° surveillance camera located at the center of the robot. For the experiments described in this article, a 3D LiDAR with integrated IMU and a stereo camera were mounted on the robot base. To process their data, both sensors were connected to an NVIDIA Jetson Orin Nano. One of the key features of CHARMBot is its compact size. The robot is specifically designed to fit beneath the target transporting racks, enabling it to access all areas of the CHARM facility, so that no part of the inspection zone is overlooked. Overall, these features make CHARMBot a valuable tool for visual inspection operations, combining precision, flexibility, and ease of use.

Figure 2: CHARMBot equipped with 3D LiDAR and stereo camera.

CHARMBot is primarily employed for visual inspection operations, with its main objective being to provide a comprehensive view of the CHARM target in areas where cameras are not installed. This capability allows for a thorough visual inspection of the entire area from a moving robot platform.

To achieve this, multiple features have been integrated into CHARMBot. The design philosophy was to create a plug-and-play solution that is easy for operators to use. Consequently, several systemd services were developed that automatically start all communication endpoints as soon as the robot is powered on.

Figure 3: 2D GUI in operation during CHARM target exchange.

Figure 4: 3D Perception and Autonomous Navigation GUI overlaying the 3D LiDAR scan, visual odometry localization, and current point cloud from stereo camera.

1.4 Human Robot Interface for Teleoperation and Visual Inspection

A dedicated solution for managing the video streams from all onboard cameras has been implemented. It allows the operator to control the volume of data requested from each camera, adapting the robot’s video feedback to the network conditions. Currently, two parameters can be adjusted: the video quality and the number of frames per second (FPS). By tuning these settings, operators can maintain control of the robot even when bandwidth is limited, reducing the video quality to the minimum necessary level. This adaptability improves the efficiency of the inspection process. In addition, as shown in Experiment 1, a lightweight camera visualization mode has been tested for highly congested network situations; it reduces the number of packets in transit while the cameras are monitored.

The control application for CHARMBot is developed using the Unity game engine and features a 2D user interface. This interface is designed to be safe, intuitive, and user-friendly, so that operators can manage the robot with ease. The GUI lets users choose their preferred control device, enhancing accessibility and convenience (Szczurek et al., 2023). In addition, the operational teleoperation GUI is being upgraded to a supervised one, which enables high-level navigation commands and the display of 3D Mixed Reality information (see Figure 4). Moreover, the GUI provides an augmented reality mode in which the operator inspects the remote intervention as a 3D hologram that can be moved and scaled according to the user’s needs, making mission monitoring more immersive (see Figures 5 and 6).

Figure 5: Augmented Reality GUI mode showing the exterior view of the CHARM facility.

Figure 6: Augmented Reality GUI mode showing the map scaled and focusing on the robot position.

2 EXPERIMENTS

2.1 Experiment 1: Network Performance

In this experiment, a connection to CHARMBot is established from the ground station, where the human operator is located. It was observed that the cameras on CHARMBot, which stream via the Motion JPEG (MJPEG) protocol, experienced fluctuating frame retrieval times depending on the network state. As the Transmission Control Protocol (TCP) buffers began to fill, the overall delay increased by several seconds, which made manual control uncomfortable for the operator. The cameras transmit images over MJPEG at a constant bit rate set by the GUI parameters.
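The growth of the delay can be illustrated with a deliberately simple fluid model, which is an assumption made here for illustration and not a measurement from the experiment: if the stream produces data faster than the link delivers it, the surplus accumulates in the buffers until they are full, and the newest frame has to wait behind that backlog. The function name, the parameters, and all numbers below are hypothetical.

```python
def backlog_delay_s(stream_bps: float, link_bps: float,
                    elapsed_s: float, buffer_bytes: int) -> float:
    """Waiting time of the newest frame behind the buffered backlog.

    Simplified fluid model (an assumption, not the paper's method):
    the backlog grows at (stream_bps - link_bps) until the buffer is full,
    and it drains at link_bps.
    """
    if link_bps <= 0:
        raise ValueError("link_bps must be positive")
    surplus_bps = max(stream_bps - link_bps, 0.0)
    backlog_bits = min(surplus_bps * elapsed_s, buffer_bytes * 8)
    return backlog_bits / link_bps


# Hypothetical numbers: a 4 Mbit/s stream over a link that delivers 2 Mbit/s.
print(backlog_delay_s(4e6, 2e6, elapsed_s=10.0, buffer_bytes=10**9))
print(backlog_delay_s(4e6, 2e6, elapsed_s=10.0, buffer_bytes=10**6))
```

In this model the delay is bounded by the buffer size divided by the link rate, which is why limiting the amount of data in transit limits the delay.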

To mitigate this issue, a network performance experiment was conducted, measuring the Frame Retrieval and Visualization Time (FRVT) under different configurations (i.e., compressions and resolutions). The results indicate that a lightweight mode for camera visualization, based on single JPEG REST requests, can significantly improve performance under congestion. In this mode, the camera MJPEG connection is closed, and image feedback is provided through successive web JPEG requests. The operator can select this mode if network performance degrades, ensuring that only one image is in transit at any given time and thereby preventing TCP buffer overload.
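The request pattern described above can be sketched as a blocking polling loop: the next image is requested only after the previous one has been received and displayed. This is a minimal sketch and not the GUI's actual implementation (which is written in Unity); the function names are hypothetical, and any snapshot URL passed to `fetch_jpeg` would be an assumption about the camera's REST interface.

```python
import time
import urllib.request
from typing import Callable, List


def fetch_jpeg(url: str, timeout_s: float = 5.0) -> bytes:
    """Fetch one JPEG snapshot over HTTP; blocks until the image is complete."""
    with urllib.request.urlopen(url, timeout=timeout_s) as response:
        return response.read()


def poll_frames(fetch: Callable[[], bytes],
                show: Callable[[bytes], None],
                n_frames: int) -> List[float]:
    """Request frames strictly one at a time and return each FRVT in seconds."""
    frvt_s = []
    for _ in range(n_frames):
        start = time.perf_counter()
        frame = fetch()   # only one request is outstanding at any time
        show(frame)       # visualize before asking for the next image
        frvt_s.append(time.perf_counter() - start)
    return frvt_s


# Offline demonstration with a stand-in fetcher instead of a real camera.
fake_frame = b"\xff\xd8" + b"\x00" * 1000 + b"\xff\xd9"
displayed: List[bytes] = []
times = poll_frames(lambda: fake_frame, displayed.append, n_frames=5)
print(len(displayed), "frames displayed")
```

With a real camera, `fetch` could be `lambda: fetch_jpeg(snapshot_url)`, where `snapshot_url` is whatever single-image endpoint the camera exposes. Because the loop never issues a second request before the first frame is shown, the frame rate adapts to the network instead of filling the buffers.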

Figure 7 shows the average JPEG frame size for each combination of compression and resolution, measured over CHARMBot’s WiFi connection with VPN encrypted tunneling. This helps to understand the data load each image imposes on the network. Figure 8 shows the Frame Retrieval and Visualization Time, which covers sending a request to the robot to obtain a new image and waiting for that image to be received and visualized before requesting the next one. It thus gives the duration of each retrieval and visualization cycle under various network conditions. Lastly, Figure 9 summarizes the Frames Per Second (FPS) that can be achieved in the lightweight mode at different resolutions when only a single image is in transit within the network, avoiding buffer overload. This mode yields between 2 and 10 FPS. These findings support the proposed lightweight mode for camera visualization in the GUI, which improves the operator experience by reducing delays and preventing TCP buffer overload under constrained network conditions.
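Since the lightweight mode keeps exactly one image in transit, its frame rate is the reciprocal of the FRVT, so the reported range of 2 to 10 FPS corresponds to an FRVT between 0.5 s and 0.1 s. The short sketch below makes this relation explicit and also estimates the resulting network load; the function names and the 25 kB frame size are illustrative assumptions, not values read from Figure 7.

```python
def fps_from_frvt(frvt_s: float) -> float:
    """Frame rate when each request waits for the previous frame to be shown."""
    if frvt_s <= 0:
        raise ValueError("frvt_s must be positive")
    return 1.0 / frvt_s


def throughput_kbit_s(frame_bytes: int, fps: float) -> float:
    """Average network load in kbit/s for a given frame size and frame rate."""
    return frame_bytes * 8 * fps / 1000.0


print(fps_from_frvt(0.5))               # slow end of the reported range
print(fps_from_frvt(0.1))               # fast end of the reported range
print(throughput_kbit_s(25_000, 2.0))   # hypothetical 25 kB frames at 2 FPS
```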

2.2 Experiment 2: 3D LiDAR and Stereo Camera Map and Mesh Creation

2.2.1 Cloud Map Creation

In this experiment, CHARMBot is teleoperated from the charging station located in the stocking area to the target area.

Figure 7: JPEG Compression Average Frame Size (100 images per combination) from CHARMBot using the WiFi connection and VPN encrypted tunneling, for every compression and resolution.

Figure 8: Frame Retrieval and Visualization Time (FRVT) to the ground station from CHARM facilities using the WiFi connection and VPN encrypted tunneling.

Figure 9: Summary of REST Lightweight mode Frames Per Second using WiFi connection and VPN encrypted tunneling.

During the operation, point cloud data was collected from both the Unitree LiDAR and the ZED stereo camera, along with the integrated IMU values from the Unitree LiDAR and the visual odometry provided by the ZED camera. The Point-LIO (He et al., 2023) and RTAB-Map (Labbé and Michaud, 2018) algorithms were employed to generate cloud maps from the LiDAR and ZED stereo camera data, respectively. As a minor modification, LiDAR scan matching and IMU fusion odometry were used as inputs for the RTAB-Map algorithm. The schematics of the CHARM facility and the LiDAR odometry are shown in Figure 10. After the point cloud was created, points above the ceiling and below the floor that had been falsely registered by RTAB-Map were removed. Furthermore, dynamic points present at the site introduced excessive noise into the RTAB-Map cloud. This problem was mitigated by first constructing an octree for both the LiDAR and camera point clouds, then finely aligning the point clouds using Iterative Closest Point (ICP), and finally eliminating points from the RTAB-Map output that exceeded the tolerance distance threshold. The result is a dense, well-aligned, and information-rich point cloud, depicted in Figure 11. The processed cloud map is used in the GUI to visualize the real-time position of the robot.

Figure 10: Schematics of the CHARM facility and CHARMBot’s LiDAR localization.

Figure 11: CHARM facility cloud map created using Unitree LiDAR and ZED stereo camera points.
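The two filtering steps described above (height cropping and distance-threshold filtering) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the clouds are assumed to be already aligned (the ICP step is not shown), the threshold is assumed to be measured against the LiDAR cloud, and a brute-force nearest-neighbour search replaces the octree used in the paper. All names and numbers are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def crop_height(points: Sequence[Point], floor_z: float,
                ceiling_z: float) -> List[Point]:
    """Keep only points whose z coordinate lies between floor and ceiling."""
    return [p for p in points if floor_z <= p[2] <= ceiling_z]


def filter_by_reference(points: Sequence[Point], reference: Sequence[Point],
                        max_dist: float) -> List[Point]:
    """Keep points lying within max_dist of their nearest reference point.

    Brute-force O(N*M) search; an octree or k-d tree would replace the
    inner loop for clouds of realistic size.
    """
    if not reference:
        return []
    return [p for p in points
            if min(math.dist(p, r) for r in reference) <= max_dist]


# Hypothetical toy clouds: three LiDAR points and four stereo points.
lidar = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
stereo = [(0.02, 0.0, 0.0), (1.0, 0.03, 0.0), (1.5, 1.0, 0.5), (2.0, 0.0, 5.0)]

cropped = crop_height(stereo, floor_z=-0.1, ceiling_z=3.0)   # drops z = 5.0
cleaned = filter_by_reference(cropped, lidar, max_dist=0.1)  # drops the stray point
print(cleaned)
```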

2.2.2 Meshing

Once the map is created, statistical outlier removal is used to eliminate sparse points, so that points adversely affecting the meshing accuracy are removed. The point cloud is then sub-sampled to reduce the computational power required for further processing. Next, the normal at each point is calculated using the triangulation method to indicate the direction the surface is facing at that point, which is essential for accurately reconstructing the surface of the object. With the normals available, the Poisson surface reconstruction function in CloudCompare (CloudCompare, 2023) is used to create the mesh. This method converts the point cloud data into a continuous surface, resulting in a detailed mesh that represents the scanned object. The Poisson method is particularly effective at handling complex shapes, which makes it well suited for creating high-quality meshes. However, the reconstruction can also produce incorrect surfaces in regions with little point support. To alleviate this, a density scalar field is used to remove inaccurate parts of the mesh by keeping the densest 99% and removing the rest, as shown in Figure 13. The meshing process is summarized in Figure 12.
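Three of the steps above (statistical outlier removal, sub-sampling, and the density-based filter) can be sketched in a few lines. This is an illustrative sketch under assumptions, not the CloudCompare implementation used in the paper: the neighbour count `k`, the `std_ratio`, the voxel size, and the brute-force neighbour search are choices made for this example, and normal estimation and Poisson reconstruction are left to the meshing tool.

```python
import math
import statistics
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def statistical_outlier_removal(points: Sequence[Point], k: int = 3,
                                std_ratio: float = 1.0) -> List[Point]:
    """Drop points whose mean distance to their k nearest neighbours is
    larger than the global mean plus std_ratio standard deviations."""
    if len(points) <= k:
        return list(points)
    mean_knn = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_knn.append(sum(dists[:k]) / k)
    limit = statistics.mean(mean_knn) + std_ratio * statistics.pstdev(mean_knn)
    return [p for p, d in zip(points, mean_knn) if d <= limit]


def voxel_subsample(points: Sequence[Point], voxel: float) -> List[Point]:
    """Replace all points inside each occupied voxel by their centroid."""
    cells: Dict[Tuple[int, int, int], List[Point]] = {}
    for p in points:
        key = (math.floor(p[0] / voxel), math.floor(p[1] / voxel),
               math.floor(p[2] / voxel))
        cells.setdefault(key, []).append(p)
    return [(sum(q[0] for q in c) / len(c), sum(q[1] for q in c) / len(c),
             sum(q[2] for q in c) / len(c)) for c in cells.values()]


def keep_densest(densities: Sequence[float],
                 drop_fraction: float = 0.01) -> List[int]:
    """Return indices of elements whose density is not in the lowest
    drop_fraction (0.01 keeps the densest 99%)."""
    if not densities:
        return []
    cutoff = sorted(densities)[int(len(densities) * drop_fraction)]
    return [i for i, d in enumerate(densities) if d >= cutoff]


# Hypothetical toy data: eight corners of a 10 cm cube plus one stray point.
cube = [(x, y, z) for x in (0.0, 0.1) for y in (0.0, 0.1) for z in (0.0, 0.1)]
cloud = cube + [(10.0, 10.0, 10.0)]
inliers = statistical_outlier_removal(cloud, k=3, std_ratio=1.0)
sparse = voxel_subsample(inliers, voxel=1.0)
print(len(inliers), len(sparse))
```

In `keep_densest`, the densities would be the per-vertex values of the density scalar field produced by the surface reconstruction; here they are simply a list of numbers.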

2.3 Video

A video of the GUI supervising the robot inspection is available at the link below. It demonstrates the 3D Mixed Reality view, the visual-odometry localization, and the pre-registered 3D LiDAR overlay:

https://cernbox.cern.ch/s/gcjF5wtMmgSZLVX

3 CONCLUSIONS AND FURTHER WORK

This paper addresses the current challenges and proposes solutions to enhance CHARMBot’s autonomy through the optimization of the communication network, 3D perception, and GUI, as well as the integration of AI. Enhancing the autonomy of CHARMBot within the CHARM facility is critical, particularly in constrained network environments. Autonomous capabilities allow CHARMBot to operate more effectively and safely, even when network performance is degraded. Equipping CHARMBot with advanced sensors such as a 3D LiDAR and stereo cameras supports robust environmental perception and efficient task execution. This reduces the reliance on constant manual teleoperation, which can be significantly hampered by network latency and bandwidth limitations. The experiments have shown that a lightweight visualization mode can mitigate TCP buffer congestion and improve the operator’s experience, maintaining control over the robot even in challenging network conditions. Additionally, designing a supervised user interface is paramount for the safe operation of CHARMBot in hazardous scenarios like those present in the CHARM facility. By integrating these autonomous behaviour and user interface enhancements, CHARMBot can perform critical inspections and tasks with higher reliability and reduced human risk, in line with CERN’s commitment to leveraging advanced robotics for safer and more efficient operations. Future work will focus on the further integration of these components and on conducting proof-of-concept experiments to demonstrate the proposed system’s safety.

Figure 12: Process of creating a mesh from a LiDAR point cloud. The map created using LiDAR [1] and the map created from a stereo camera [2] are merged [3] and processed through several steps: statistical outlier removal [4] for noise reduction, sub-sampling [5] for data simplification, normal calculation using the triangulation method [6], and ultimately mesh creation [7]. The final step involves using a density scalar field to remove inaccurate meshes, ensuring the precision of the model [8].

Figure 13: The scalar field highlights the top 99% dense areas to ensure the accuracy and quality of the mesh by filtering out less significant regions.

AUTHORS’ CONTRIBUTIONS

The authors confirm contribution to the article as follows. Implementation and experimentation: David Forkel, Pejman Habibiroudkenar, Enric Cervera, Raúl Marín-Prades, Lucas Comte and Josep Marín-Garcés. Concept and supervision: Eloise Matheson, Christopher McGreavy, Luca Buonocore and Mario Di Castro.

ACKNOWLEDGEMENTS

This work has been funded by CERN (European Organization for Nuclear Research). Special thanks to Mr. Jérôme Lendaro for his technical support in the experimental phases.

REFERENCES

Castro, M. D., Tambutti, M. L. B., Ferre, M., Losito, R., Lunghi, G., and Masi, A. (2018). i-TIM: A robotic system for safety, measurements, inspection and maintenance in harsh environments. In 2018 IEEE International Symposium on Safety, Security, and Rescue Robotics, SSRR 2018, Philadelphia, PA, USA, August 6-8, 2018, pages 1–6. IEEE.

Catherall, R., Andreazza, W., Breitenfeldt, M., Dorsival, A., Focker, G., Gharsa, T., Giles, T., Grenard, J.-L., Locci, F., Martins, P., Marzari, S., Schipper, J., Shornikov, A., and Stora, T. (2017). The ISOLDE facility. Journal of Physics G: Nuclear and Particle Physics, 44.

CERN (2024a). CERN aerospace facilities. URL: https://kt.cern/aerospace/facilities (accessed 20.07.2024).

CERN (2024b). CHARM - mixed fields. URL: https://kt.cern/technologies/charm-mixed-fields (accessed 20.07.2024).

CERN (2024c). First commercial customers in CHARM. URL: https://kt.cern/success-stories/first-commercial-customers-charm (accessed 20.07.2024).

Choe, J., Joo, K., Imtiaz, T., and Kweon, I. S. (2021). Volumetric propagation network: Stereo-lidar fusion for long-range depth estimation. IEEE Robotics and Automation Letters, 6(3):4672–4679.

CloudCompare (2023). CloudCompare 3D point cloud and mesh processing software. Version 2.11.3.

Di Castro, M., Ferre, M., and Masi, A. (2018). CERNTAURO: A modular architecture for robotic inspection and telemanipulation in harsh and semi-structured environments. IEEE Access, 6:37506–37522.

Dieterle, T., Particke, F., Patino-Studencki, L., and Thielecke, J. (2017). Sensor data fusion of lidar with stereo RGB-D camera for object tracking. In 2017 IEEE Sensors, pages 1–3. IEEE.

Forkel, D., Cervera, E., Marín, R., Matheson, E., and Di Castro, M. (2023). Mobile robots for teleoperated radiation protection tasks in the Super Proton Synchrotron. In Gini, G., Nijmeijer, H., Burgard, W., and Filev, D., editors, Informatics in Control, Automation and Robotics, pages 65–82, Cham. Springer International Publishing.

He, D., Xu, W., Chen, N., Kong, F., Yuan, C., and Zhang, F. (2023). Point-LIO: Robust high-bandwidth light detection and ranging inertial odometry. Advanced Intelligent Systems, 5.

Labbé, M. and Michaud, F. (2018). RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. Journal of Field Robotics, 36.

Maddern, W. and Newman, P. (2016). Real-time probabilistic fusion of sparse 3D lidar and dense stereo. In 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 2181–2188. IEEE.

Nickels, K., Castano, A., and Cianci, C. (2003). Fusion of lidar and stereo range for mobile robots. In Int. Conf. on Advanced Robotics.

Szczurek, K. A., Prades, R. M., Matheson, E., Rodriguez-Nogueira, J., and Castro, M. D. (2023). Multimodal multi-user mixed reality human–robot interface for remote operations in hazardous environments. IEEE Access, 11:17305–17333.
