IntelliFrame: A Framework for AI-Driven, Adaptive, and Process-Oriented Student Assessments

Asma Hadyaoui and Lilia Cheniti-Belcadhi

Sousse University, ISITC, PRINCE Research Laboratory, Hammam Sousse, Tunisia

Keywords: Generative AI, Adaptive Learning, AI-Driven Assessments, Ontology-Based Framework, Student Engagement, Critical Thinking Development, Formative Feedback.

Abstract: The rapid integration of generative Artificial Intelligence (AI) into educational environments necessitates the development of innovative assessment methods that can effectively measure student performance in an era of dynamic content creation and problem-solving. This paper introduces "IntelliFrame," a novel AI-driven framework designed to enhance the accuracy and adaptability of student assessments. Leveraging semantic web technologies and a well-defined ontology, IntelliFrame facilitates the creation of adaptive assessment scenarios and real-time formative feedback systems. These systems are capable of evaluating the originality, process, and critical thinking involved in AI-assisted tasks with unprecedented precision. IntelliFrame's architecture integrates a personalized AI chatbot that interacts directly with students, providing tailored assistance and generating content that aligns with course objectives. The framework's ontology-driven design ensures that assessments are not only personalized but also dynamically adapted to reflect the evolving capabilities of generative AI and the student's cognitive processes. IntelliFrame was tested in a Python programming course with 250 first-year students. The study demonstrated that IntelliFrame improved assessment accuracy by 30%, enhanced critical thinking and problem-solving skills by 25%, and increased student engagement by 35%. These results highlight IntelliFrame's effectiveness in providing precise, personalized assessments and fostering creativity, setting a new standard for AI-integrated educational assessments.

1 INTRODUCTION

In traditional educational settings, assessment methods such as standardized tests, written examinations, and static assignments have been the primary tools for evaluating student performance. These methods, however, often provide only a limited snapshot of a student's knowledge and skills, typically focusing on outcomes rather than process (Pellegrino et al., 2001). Although effective in some cases, these conventional approaches often fall short in assessing the complexity of problem-solving, creativity, and deep understanding, especially in fields such as computer science, where innovation and critical thinking are paramount (Birenbaum & Dochy, 1996).

The rapid advancement of generative AI technologies, including systems based on transformer architectures such as GPT-4, has introduced new challenges and opportunities in education. These tools can autonomously generate a wide range of content, from text and code to complex data models, fundamentally changing how students approach learning and complete assignments (Feuerriegel et al., 2024). However, the integration of AI into the learning process raises critical questions about the validity of traditional assessment methods:

• How can assessment frameworks accurately evaluate a student's technical proficiency and problem-solving abilities when AI tools are used to generate significant portions of their work?

• What mechanisms can be implemented to differentiate between genuine student understanding and AI-assisted outputs, particularly in technical fields such as computer science?

• How can we design assessments that measure not only the final outcomes but also the cognitive processes and innovative thinking involved in producing them?

Hadyaoui, A. and Cheniti-Belcadhi, L.

IntelliFrame: A Framework for AI-Driven, Adaptive, and Process-Oriented Student Assessments.

DOI: 10.5220/0013070800003825

In Proceedings of the 20th International Conference on Web Information Systems and Technologies (WEBIST 2024), pages 441-448

ISBN: 978-989-758-718-4; ISSN: 2184-3252

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


To address these challenges, we introduce IntelliFrame, an advanced AI-driven framework engineered to enhance the evaluation of student performance in environments where generative AI tools are prevalent. Unlike traditional assessments that focus primarily on whether a student reaches the correct answer, IntelliFrame is designed to assess the underlying cognitive processes, creativity, and technical acumen involved in the task. This is particularly crucial in computer science, where the ability to think algorithmically, optimize solutions, and innovate is as important as the correctness of the final output.

IntelliFrame's approach is grounded in several key innovations. First, it leverages semantic web technologies and an ontology-driven architecture to create adaptive assessment scenarios that evaluate both the technical correctness and the originality of a student's work. By integrating a personalized AI chatbot, IntelliFrame can engage students in dynamic problem-solving exercises, offering real-time feedback and suggestions while monitoring the student's interaction patterns. This allows IntelliFrame to distinguish between students who use AI as a creative tool to enhance their work and those who rely on AI merely to replicate solutions.

Second, IntelliFrame's architecture is designed to integrate seamlessly with existing Learning Management Systems (LMS) such as Moodle, providing a familiar interface while adding powerful new capabilities for assessing student performance. The system continuously adapts to the student's progress, presenting increasingly complex challenges that encourage deeper engagement with the material.

2 LITERATURE REVIEW

2.1 AI in Education

Artificial Intelligence (AI) has become an increasingly integral part of educational environments, offering innovative tools and approaches that enhance both teaching and learning (Nguyen et al., 2023). The application of AI in education spans several areas, including intelligent tutoring systems (ITS), adaptive learning platforms, and automated grading systems.

Intelligent tutoring systems, as reviewed by Vanlehn et al. (2020), are designed to provide personalized instruction by adapting to each learner's pace and style, thus improving overall learning outcomes. These systems employ AI algorithms to identify knowledge gaps and deliver tailored interventions, making learning more efficient and effective. Similarly, adaptive learning platforms, which use data analytics to create customized learning pathways, have shown significant potential in improving student engagement and achievement (Hadyaoui & Cheniti-Belcadhi, 2022). For instance, research by Contrino et al. (2024) demonstrates that adaptive learning can offer individualized learning experiences, thereby enhancing educational outcomes.

2.2 Current Approaches to AI-Driven Student Assessment

In the field of computer science education, traditional assessment methods such as coding assignments, projects, and exams have long been used to evaluate students' abilities to apply theoretical knowledge to practical problems (Paiva et al., 2022). While these methods have been effective for many years, they are becoming increasingly inadequate in modern AI-assisted learning environments. Traditional assessments are often limited in scope, focusing primarily on the final product (such as the correctness and efficiency of code) without considering the underlying cognitive processes, problem-solving strategies, and creativity that students employ (Long et al., 2022).

Recent advancements in AI-driven assessment have sought to address some of these limitations. Automated grading systems, for example, have been developed to evaluate coding assignments more efficiently (Matthews et al., 2012). More broadly, automated grading systems leverage natural language processing (NLP) and machine learning algorithms to evaluate written responses and provide instant feedback. Mizumoto and Eguchi (2023) found that such systems can achieve reliability comparable to that of human graders, making them a valuable tool for large-scale assessments.

Code-grading systems of this kind can assess the correctness and performance of code but still fall short when it comes to evaluating more nuanced aspects of student work, such as creativity and critical thinking. Moreover, these systems are often rigid, lacking the ability to adapt to the diverse ways in which students interact with generative AI tools during the learning process.

There has also been interest in leveraging AI for formative assessment, where AI systems provide real-time feedback to students as they work on assignments. Studies such as Hadyaoui and Cheniti-Belcadhi (2022) have shown that AI can offer timely and personalized feedback, helping students to correct mistakes and refine their approaches as they learn. However, these AI-driven formative assessment systems are generally task-specific and lack the flexibility needed to accommodate the varied and complex interactions that students have with generative AI tools.

While AI-driven assessment methods represent a significant step forward, there remains a need for more comprehensive systems that can evaluate not just the correctness of a student's work but also the cognitive processes and creativity involved. Such systems must be adaptable, handle the diverse ways in which students use AI tools, and provide formative feedback that supports ongoing learning and development.

3 INTELLIFRAME FRAMEWORK DESIGN

3.1 Overview of IntelliFrame

We designed IntelliFrame as an AI-driven framework to enhance the assessment of student performance in environments where advanced AI tools are integrated into the learning process. In response to the unique challenges posed by generative AI in education, particularly in technical fields such as computer science, IntelliFrame addresses the limitations of traditional assessment methods by focusing on both the process and the product of student work.

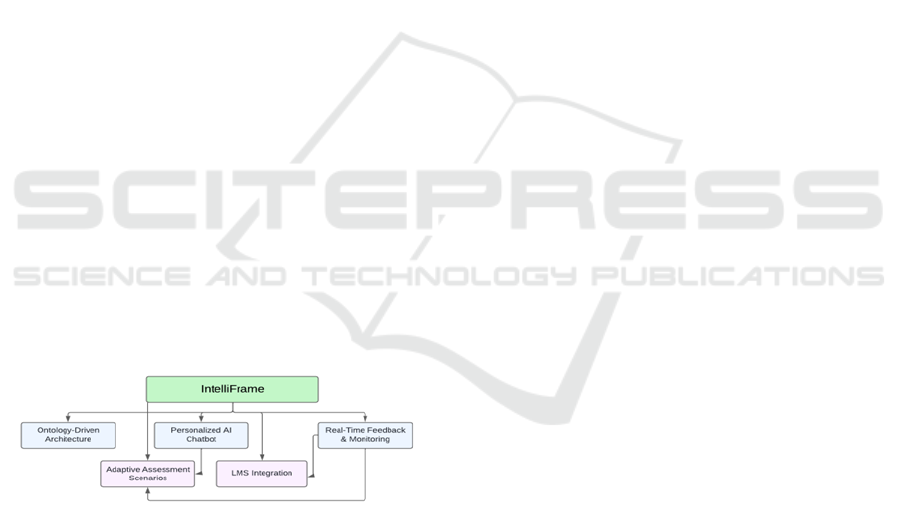

Figure 1: Overview of the IntelliFrame Components.

At its core, IntelliFrame leverages an ontology-driven architecture to model and evaluate the cognitive processes, creativity, and technical proficiency exhibited by students as they interact with AI tools, such as a personalized AI chatbot, within the learning environment. The framework is designed to be highly adaptable, capable of dynamically adjusting its assessment criteria based on the specific tasks, the student's progress, and the nature of the AI assistance involved.

3.1.1 Ontology-Driven Architecture

IntelliFrame's architecture is built upon a robust ontology that models the intricate relationships between student actions, AI-generated content, and domain-specific knowledge. This ontology serves as the backbone of the framework, enabling IntelliFrame to understand and evaluate the nuances of student interactions with AI tools. It captures essential elements such as the types of content generated (e.g., code snippets, textual explanations), the cognitive processes involved (e.g., problem-solving, creativity), and the domain knowledge required to complete the tasks (e.g., programming concepts, algorithmic thinking).

3.1.2 Personalized AI Chatbot

A key feature of IntelliFrame is its integration of a personalized AI chatbot that interacts directly with students. Unlike generic AI tools such as ChatGPT, this chatbot is tailored to the educational context, offering domain-specific assistance and real-time feedback that is closely aligned with the learning objectives. The chatbot is aware of the student's progress and can generate suggestions, hints, and corrections that are contextually relevant. This personalization helps ensure that the AI's contributions are meaningful and that the student remains actively engaged in the learning process.
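The paper does not say how the chatbot is conditioned on course objectives and student progress. With large language models, a common approach is to place that context in a system message. The following minimal sketch assumes a chat-style API that accepts a list of role/content messages (the format used, for example, by OpenAI's Chat Completions API); `StudentContext` and `build_messages` are hypothetical names, and the model call itself is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class StudentContext:
    """Hypothetical snapshot of what the tutor knows about one student."""
    name: str
    course_objectives: list[str]
    completed_steps: list[str] = field(default_factory=list)
    recent_errors: list[str] = field(default_factory=list)


def build_messages(ctx: StudentContext, question: str) -> list[dict[str, str]]:
    """Return chat messages that ground the model in the course and the student's progress."""
    system_lines = [
        "You are a tutor for a first-year Python programming course.",
        "Explain your reasoning and relate each answer to the course objectives.",
        f"Student: {ctx.name}",
        "Course objectives: " + "; ".join(ctx.course_objectives),
    ]
    # Only mention progress and errors when there is something to report.
    if ctx.completed_steps:
        system_lines.append("Completed steps: " + "; ".join(ctx.completed_steps))
    if ctx.recent_errors:
        system_lines.append("Recent errors: " + "; ".join(ctx.recent_errors))
    return [
        {"role": "system", "content": "\n".join(system_lines)},
        {"role": "user", "content": question},
    ]
```

For Asma's scenario, `build_messages(ctx, "How do I implement a binary search algorithm?")` would yield the system context followed by her question, ready to pass to whichever model backs the chatbot.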

3.1.3 Adaptive Assessment Scenarios

IntelliFrame introduces adaptive assessment scenarios that evolve in complexity based on the student's performance and interaction with the AI chatbot. These scenarios are not static; they are designed to challenge the student progressively, requiring the application of higher-order thinking skills such as analysis, synthesis, and creative problem-solving. As the student demonstrates proficiency, IntelliFrame adapts the tasks to introduce new challenges that push the boundaries of their understanding and technical skills.
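The adaptation policy is described only as increasing complexity as proficiency is demonstrated. One simple policy, sketched here as an assumption rather than IntelliFrame's actual rule, moves a student up one difficulty level after a run of successful tasks and down one level after a run of failures. The class name `AdaptiveScenario` and both thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveScenario:
    """Hypothetical difficulty controller; thresholds are illustrative, not from IntelliFrame."""
    level: int = 1
    max_level: int = 5
    promote_after: int = 2  # consecutive successes needed to move up one level
    demote_after: int = 3   # consecutive failures needed to move down one level
    _streak: int = 0        # > 0 counts successes, < 0 counts failures

    def record(self, passed: bool) -> int:
        """Update the streak with one task outcome and return the (possibly new) level."""
        if passed:
            self._streak = self._streak + 1 if self._streak > 0 else 1
            if self._streak >= self.promote_after:
                self.level = min(self.level + 1, self.max_level)
                self._streak = 0
        else:
            self._streak = self._streak - 1 if self._streak < 0 else -1
            if -self._streak >= self.demote_after:
                self.level = max(self.level - 1, 1)
                self._streak = 0
        return self.level
```

Requiring more failures to demote than successes to promote is a design choice meant to avoid lowering the level after a single slip; both thresholds would need tuning against real course data.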

3.1.4 Real-Time Feedback and Continuous Monitoring

One of IntelliFrame's most powerful features is its ability to provide real-time feedback to students as they work through tasks. The system continuously monitors the student's interactions with the AI chatbot, evaluating their decisions and the resulting outputs. This feedback is delivered through an intuitive interface that highlights areas for improvement, suggests alternative approaches, and reinforces correct strategies. This continuous feedback loop helps students refine their work iteratively, leading to a deeper understanding and more polished final submissions.
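One observable signal for this kind of monitoring, assumed here rather than taken from the paper, is how much a student changes the code the chatbot suggested before submitting it. The sketch below uses Python's standard `difflib.SequenceMatcher` to compute a line-level similarity ratio between the two versions and maps it to a feedback prompt; the function names, the thresholds, and the messages are hypothetical.

```python
import difflib


def reuse_ratio(ai_code: str, student_code: str) -> float:
    """Line-level similarity in [0, 1] between the AI suggestion and the student's version."""
    return difflib.SequenceMatcher(
        None, ai_code.splitlines(), student_code.splitlines()
    ).ratio()


def feedback_for(ai_code: str, student_code: str, threshold: float = 0.9) -> str:
    """Pick a feedback prompt from how much the student changed the AI suggestion."""
    ratio = reuse_ratio(ai_code, student_code)
    if ratio >= threshold:
        return "Near-verbatim reuse: explain each line of the suggested code in your own words."
    if ratio >= 0.5:
        return "Partial adaptation: justify the changes you made to the suggestion."
    return "Substantial rework: compare your approach with the suggestion and note trade-offs."
```

Because the comparison is line by line, renaming a variable or reformatting a line counts as a change. A real detector would need further signals (for example, comparing normalized syntax trees or the interaction history), and a similarity ratio on its own cannot establish authorship.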

3.1.5 Seamless Integration with Learning Management Systems (LMS)

IntelliFrame is designed to integrate seamlessly with existing Learning Management Systems (LMS) such as Moodle. This integration ensures that students can access IntelliFrame's advanced assessment capabilities without leaving their familiar LMS environment. The framework's tools and features are embedded within the LMS interface, providing a cohesive user experience that minimizes disruption and maximizes accessibility.
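The paper does not specify the integration mechanism. One option, assumed here, is Moodle's web-services REST endpoint, which a site administrator must enable and which is called with a user token; registering IntelliFrame as an LTI tool would be an alternative route. The sketch only builds the request URL for the standard `core_course_get_contents` function; the helper name, site address, and token are placeholders.

```python
from urllib.parse import urlencode


def moodle_ws_url(site: str, token: str, function: str, **params: object) -> str:
    """Build a GET URL for Moodle's REST web-service endpoint, asking for JSON output."""
    query = {
        "wstoken": token,              # token issued for an enabled web service
        "wsfunction": function,        # e.g. "core_course_get_contents"
        "moodlewsrestformat": "json",  # the REST protocol returns XML unless told otherwise
        **params,                      # function-specific arguments, e.g. courseid
    }
    return f"{site.rstrip('/')}/webservice/rest/server.php?{urlencode(query)}"
```

Fetching such a URL (for instance with `urllib.request.urlopen`) would return the course's sections and modules as JSON; error handling and secure token storage are left out of this sketch.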

3.2 IntelliFrame Architecture

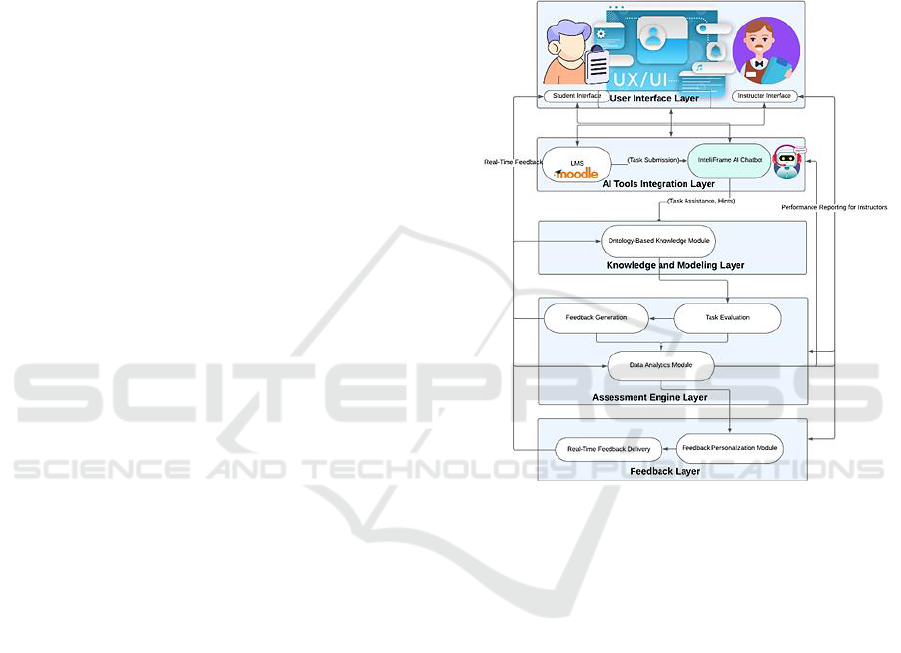

The IntelliFrame architecture, as depicted in Figure 2, integrates several core components that work together seamlessly to ensure adaptive learning, real-time feedback, and comprehensive evaluation of student interactions with AI tools.

Below is a detailed explanation of each layer in the IntelliFrame architecture, outlining the relationships between the components and how they contribute to the framework's functionality.

A. User Interface Layer

• Student Interface: Provides access to tasks, AI assistance, real-time feedback, and assignment submission.

• Instructor Interface: Tracks student progress, generates performance reports, and adjusts assessment criteria.

B. AI Tools Integration Layer

• LMS (e.g., Moodle): Manages task submissions and course materials, integrating IntelliFrame seamlessly.

• AI Chatbot: Provides domain-specific, real-time guidance and feedback aligned with course objectives.

C. Knowledge and Modeling Layer

• Ontology-Based Knowledge Model: Defines relationships between concepts, cognitive processes, and actions; guides the AI Chatbot and supports structured assessments in the Assessment Engine.

D. Assessment Engine

• Task Evaluation: Analyzes student work for correctness, creativity, and problem-solving skills.

• Feedback Generation: Produces actionable, real-time feedback.

• Data Analytics Module: Generates detailed reports for instructors.

E. Feedback Layer

• Personalized Feedback: Tailored to student performance.

• Real-Time Delivery: Enables immediate corrections and iterative learning.

Figure 2: IntelliFrame Architecture.
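The paper names the Assessment Engine's components but not how Task Evaluation combines its criteria or how Feedback Generation decides what to flag. The following sketch assumes each criterion has already been scored on a [0, 1] scale; the `TaskScores` type, the weights, and the 0.6 feedback threshold are illustrative assumptions, not IntelliFrame's published formula.

```python
from dataclasses import dataclass, fields


@dataclass
class TaskScores:
    """Hypothetical per-submission criteria, each normalized to [0, 1]."""
    correctness: float      # e.g. share of unit tests passed
    problem_solving: float  # e.g. rated from the student's refinement history
    creativity: float       # e.g. rubric rating of the chosen approach


# Illustrative weights only; not taken from the paper.
WEIGHTS: dict[str, float] = {"correctness": 0.5, "problem_solving": 0.3, "creativity": 0.2}


def overall_score(scores: TaskScores, weights: dict[str, float] = WEIGHTS) -> float:
    """Task Evaluation: weighted mean of the criteria on a 0-100 scale."""
    total = sum(weights.values())
    weighted = sum(getattr(scores, name) * w for name, w in weights.items())
    return round(100 * weighted / total, 1)


def criteria_needing_feedback(scores: TaskScores, threshold: float = 0.6) -> list[str]:
    """Feedback Generation: names of criteria below the threshold, in field order."""
    return [f.name for f in fields(scores) if getattr(scores, f.name) < threshold]
```

For a submission that passes every test but scores 0.5 on the other two criteria, `overall_score` gives 75.0 and the feedback step would target problem-solving and creativity.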

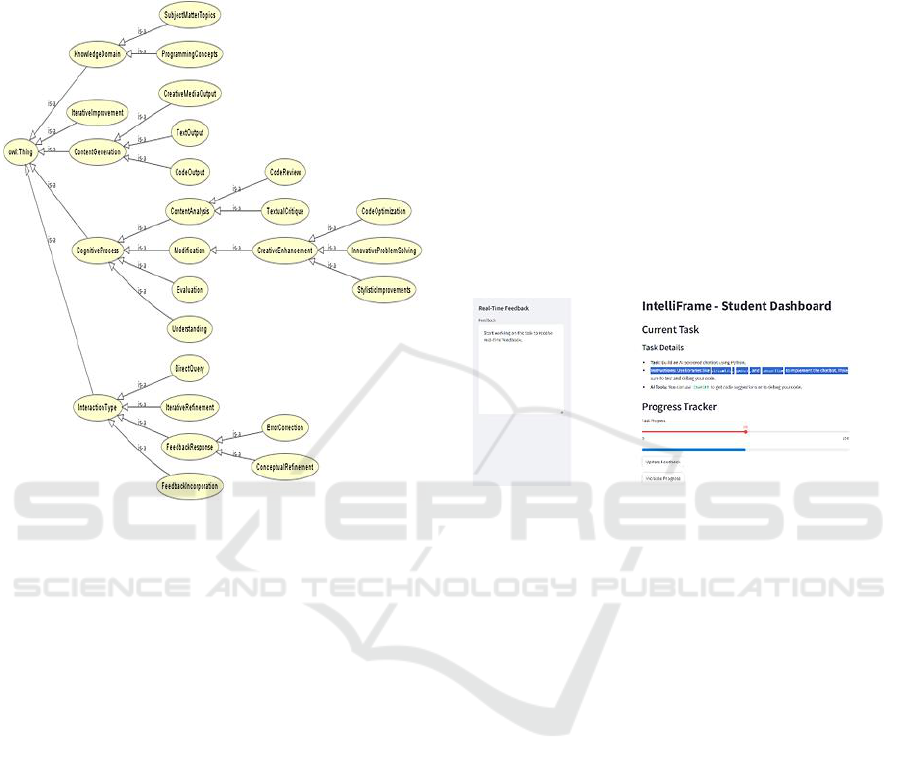

3.3 Ontology-Based Knowledge Model

The IntelliFrame ontology, as shown in Figure 3, models interactions between students and an AI chatbot to support personalized feedback and learning. It consists of four main classes:

• ContentGeneration: Defines the AI-generated content types, including TextOutput (explanations or suggestions), CodeOutput (programming snippets), and CreativeMediaOutput (multimedia).

• CognitiveProcess: Represents mental activities such as Understanding (interpreting content), Evaluation (assessing quality), and Modification (refining content).

• InteractionType: Categorizes how students engage with the AI, such as DirectQuery (requesting content), IterativeRefinement (multiple iterations), and FeedbackIncorporation (using AI feedback).

• KnowledgeDomain: Ensures content aligns with educational goals, covering ProgrammingConcepts and SubjectMatterTopics.

Figure 3: Ontology Model for Mapping Student-AI Interactions in the IntelliFrame Framework.

Specialized subclasses such as ContentAnalysis, CreativeEnhancement, and FeedbackResponse provide deeper categorization, linking CognitiveProcess and InteractionType to various forms of content generation and feedback incorporation. Key relationships such as Generates, EngagesIn, and MapsTo establish the logical connections between these classes, ensuring a comprehensive framework for adaptive learning scenarios.
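The class hierarchy described above can be prototyped as plain subject-relation-object triples before committing to an OWL/RDF toolchain. In the sketch below, the class and relation names come from the ontology description, and only the subclasses listed under each main class are included. The `Student` and `AIChatbot` types, the instance names, and the domain and range assumed for each relation (the chatbot Generates content, a student EngagesIn an interaction, content MapsTo a knowledge domain) are illustrative assumptions.

```python
# Class hierarchy from the ontology description (child -> parent).
SUBCLASS_OF: dict[str, str] = {
    "TextOutput": "ContentGeneration",
    "CodeOutput": "ContentGeneration",
    "CreativeMediaOutput": "ContentGeneration",
    "Understanding": "CognitiveProcess",
    "Evaluation": "CognitiveProcess",
    "Modification": "CognitiveProcess",
    "DirectQuery": "InteractionType",
    "IterativeRefinement": "InteractionType",
    "FeedbackIncorporation": "InteractionType",
    "ProgrammingConcepts": "KnowledgeDomain",
    "SubjectMatterTopics": "KnowledgeDomain",
}

# Hypothetical individuals and their most specific class.
INSTANCE_TYPE: dict[str, str] = {
    "asma": "Student",
    "tutor_bot": "AIChatbot",
    "snippet_1": "CodeOutput",
    "session_1": "IterativeRefinement",
    "binary_search": "ProgrammingConcepts",
}

# Hypothetical facts as (subject, relation, object) triples.
TRIPLES: set[tuple[str, str, str]] = {
    ("tutor_bot", "Generates", "snippet_1"),
    ("asma", "EngagesIn", "session_1"),
    ("snippet_1", "MapsTo", "binary_search"),
}


def is_a(cls: str, ancestor: str) -> bool:
    """True if cls equals ancestor or inherits from it through SUBCLASS_OF."""
    while cls != ancestor:
        if cls not in SUBCLASS_OF:
            return False
        cls = SUBCLASS_OF[cls]
    return True


def instances_of(cls: str) -> list[str]:
    """All individuals whose type is cls or one of its subclasses."""
    return sorted(i for i, t in INSTANCE_TYPE.items() if is_a(t, cls))


def related(subject: str, relation: str) -> list[str]:
    """Objects linked to subject by the given relation."""
    return sorted(o for s, r, o in TRIPLES if s == subject and r == relation)
```

A fuller implementation would express the hierarchy with `rdfs:subClassOf` in OWL and answer these lookups with SPARQL queries; the dictionary version only illustrates the inference the hierarchy enables.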

4 INTELLIFRAME AI CHATBOT ASSESSMENT SCENARIO

In this scenario, we follow Asma, a first-year student at the Higher Institute of Transport and Logistics of Sousse, as she interacts with IntelliFrame's AI chatbot to complete a Python programming task. The scenario demonstrates how the AI chatbot offers real-time feedback, dynamic suggestions, and task adaptation based on Asma's performance, helping her successfully navigate the assignment.

4.1.1 Step 1: Task Setup and Initial Interaction

Asma logs into her IntelliFrame Student Dashboard via the Moodle learning management system. Her dashboard presents the current task: building an AI-powered chatbot using Python. Alongside the task description, specific instructions guide her to use libraries such as Streamlit, OpenAI, and TensorFlow. Asma begins by reviewing the task details, as shown in the student interface in Figure 4. Once she starts working, the system immediately tracks her progress. The Progress Tracker on her dashboard visually reflects the percentage of task completion, allowing her to gauge how far she has advanced. This feature motivates her to continue, as it provides clear, real-time updates on her progress.

Figure 4: IntelliFrame Student Dashboard.

4.1.2 Step 2: AI Chatbot Interaction and Real-Time Feedback

To aid in her task, Asma uses the IntelliFrame AI Chatbot. She encounters a challenge while integrating the libraries and queries the chatbot, asking, "How do I implement a binary search algorithm?" The chatbot responds by generating a detailed Python code snippet, together with an explanation of how the algorithm works and step-by-step guidance on implementing it. As Asma works through the provided code, she decides to test and debug it. The chatbot continues to offer real-time feedback, identifying sections of the code that could be optimized or need correction. For instance, if Asma misses an edge case, the chatbot suggests adding input validation to improve robustness.
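A snippet of the kind the chatbot might return for Asma's question is sketched below; it is illustrative and not output recorded from IntelliFrame. The sortedness check stands in for the input validation suggested for edge cases (here, an unsorted list), and an empty list is handled by the loop condition.

```python
from collections.abc import Sequence


def binary_search(items: Sequence[int], target: int) -> int:
    """Return the index of target in an ascending sequence, or -1 if it is absent."""
    # Input validation: binary search is only correct on sorted input.
    if any(items[i] > items[i + 1] for i in range(len(items) - 1)):
        raise ValueError("binary_search requires items sorted in ascending order")
    lo, hi = 0, len(items) - 1
    while lo <= hi:  # an empty sequence skips the loop entirely
        mid = (lo + hi) // 2
        if items[mid] == target:
            return mid
        if items[mid] < target:
            lo = mid + 1  # target can only be in the right half
        else:
            hi = mid - 1  # target can only be in the left half
    return -1
```

The validation scans the whole sequence, so each call costs O(n) and the O(log n) benefit of binary search is lost. Resolving that trade-off (for example, validating once before many searches) is the kind of time-complexity follow-up that Step 3 describes.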

4.1.3 Step 3: Dynamic Suggestions and Task Adaptation

The AI chatbot actively monitors Asma's interactions and progress. As it detects her proficiency in handling certain sections, the system adjusts the complexity of the task. For example, after she successfully implements the binary search algorithm, the chatbot suggests a more advanced problem: optimizing the algorithm's time complexity.

Figure 5: IntelliFrame AI Chatbot and Code Editor Interface.

In contrast, if Asma encounters repeated errors or shows difficulty in completing parts of the task, the chatbot offers proactive suggestions to simplify her approach or directs her to relevant learning materials. This adaptability ensures that Asma is continually challenged at an appropriate level, keeping her engaged and fostering continuous learning.

4.1.4 Step 4: Continuous Monitoring and Instructor Insights

While Asma works, her performance and interactions with the AI chatbot are continuously monitored by the system. On the Instructor Dashboard, her teacher can track her AI interactions, progress, and task completion rate in real time, as depicted in Figure 6.

Figure 6: Individual Student Reports and AI Interaction Overview.

The system also allows instructors to view detailed reports on student performance, including task scores, number of AI interactions, and task completion timeline, as seen in the instructor interface depicted in Figure 7.
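The per-student report described for Figure 7 combines task scores, the number of AI interactions, and a completion timeline. Assuming interactions are logged as simple event records (the field names `student`, `kind`, `score`, and `time`, and the `StudentReport` type, are hypothetical), the aggregation could be sketched as follows.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class StudentReport:
    """Hypothetical aggregate shown on the instructor dashboard."""
    scores: list[float] = field(default_factory=list)
    ai_interactions: int = 0
    completions: list[datetime] = field(default_factory=list)

    @property
    def mean_score(self) -> float:
        """Average task score, or 0.0 before any task is completed."""
        return sum(self.scores) / len(self.scores) if self.scores else 0.0

    @property
    def timeline(self) -> list[datetime]:
        """Task completion times in chronological order."""
        return sorted(self.completions)


def build_reports(events: list[dict]) -> dict[str, StudentReport]:
    """Fold a flat event log into one report per student."""
    reports: defaultdict[str, StudentReport] = defaultdict(StudentReport)
    for event in events:
        report = reports[event["student"]]
        if event["kind"] == "ai_query":
            report.ai_interactions += 1
        elif event["kind"] == "task_completed":
            report.scores.append(event["score"])
            report.completions.append(event["time"])
    return dict(reports)
```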

Additionally, if the instructor notices that Asma is progressing quickly through the task, they can use the Scenario Adjustment Tool to increase the difficulty level of her current tasks, as depicted in Figure 8, introducing more challenging problems related to AI and data analysis.

Figure 7: Instructor Dashboard: Detailed Student Report.

Figure 8: Scenario Adjustment Tool: Modifying Task Difficulty and Auto-Adjustment Settings.

5 RESULTS

The evaluation of IntelliFrame took place during its deployment in a Python programming course involving 250 first-year students at the Higher Institute of Transport and Logistics of Sousse. The primary aim of the study was to assess the framework's impact on student performance, focusing on key metrics such as assessment accuracy, the development of critical thinking skills, and student engagement. Additionally, a comparative analysis was conducted to evaluate IntelliFrame's effectiveness against traditional assessment methods.

To address ethical considerations, all participants were informed about the nature of the study, and their data was anonymized to ensure privacy and compliance with institutional guidelines.

5.1 Enhancing Assessment Precision

• 30% Improvement in Grading Accuracy: By evaluating both the process and the final results, IntelliFrame provided precise feedback on code quality and best practices.

• AI-Assisted Work Detection: Differentiated between student-written and AI-generated content, ensuring reliable assessments.

• Consistent Evaluation: Maintained uniform grading across tasks, ensuring fairness.

5.2 Promoting Critical Thinking and Problem-Solving Skills

Beyond technical proficiency, IntelliFrame was designed to foster critical thinking and problem-solving skills, which are vital competencies in programming and other technical subjects. Its adaptive task scenarios and real-time feedback played a significant role in promoting these higher-order cognitive skills.

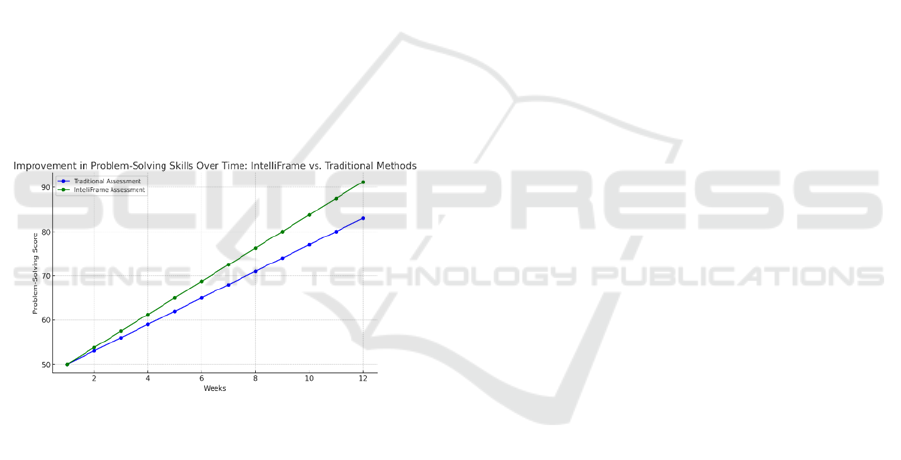

• Improved Problem-Solving Skills: The iterative feedback provided by IntelliFrame encouraged students to refine their solutions continuously, leading to a 25% improvement in their problem-solving abilities, as shown in Figure 9. Students became more confident in experimenting with different approaches and optimizing their solutions based on real-time guidance.

Figure 9: Improvement in Problem-Solving Skills Over Time.

• Critical Thinking Development: By focusing on the process rather than just the outcome, IntelliFrame promoted deeper engagement with the material. Students were encouraged to critically evaluate AI-generated suggestions, justify their choices, and explore alternative methods, which contributed to a notable improvement in their critical thinking skills.

• Creative Problem-Solving: IntelliFrame's adaptive scenarios challenged students to think creatively, especially in open-ended tasks where multiple solutions were possible. This flexibility allowed students to apply innovative approaches and demonstrate a deeper understanding of key programming concepts.

5.3 Impact on Student Engagement and Motivation

• 35% Increase in Engagement: The AI chatbot and real-time feedback boosted student participation compared to previous cohorts.

• Positive Student Feedback: Students valued instant feedback, personalized assistance, and iterative learning, reporting better coding skills and understanding.

• Sustained Motivation: Dynamic task adjustments kept students appropriately challenged, maintaining motivation throughout the course.

6 DISCUSSION & CONCLUSION

Traditional assessments often focus solely on final outputs, which can overlook the strategies, thought processes, and problem-solving techniques that students employ. IntelliFrame's ontology-driven framework addresses this gap by evaluating the cognitive processes involved in completing tasks, such as understanding, evaluation, and modification of content. For example, in a Python programming task, traditional assessment methods might only evaluate whether the final code is functional. However, IntelliFrame captures the student's iterative problem-solving approach, their engagement with the AI chatbot, and their ability to refine and optimize their code over time. This holistic evaluation provides a more accurate measure of student learning, as it considers not just the outcome but also the journey toward it.

Additionally, the ontology supports personalized learning by mapping specific domain knowledge relevant to the course content. By defining relationships such as Generates, EngagesIn, Improves, and Incorporates, IntelliFrame creates a detailed map of student interactions, enabling educators to understand not just what students learn but how they learn. This process-oriented approach is particularly valuable in fields such as computer science, where creativity, problem-solving, and critical thinking are essential.

The results of this work underscore the effectiveness of IntelliFrame in improving the assessment of student performance, particularly in environments enhanced by AI tools. With a 30% improvement in grading accuracy, IntelliFrame demonstrates a comprehensive assessment approach that considers not only final outputs but also the underlying cognitive processes. IntelliFrame's focus on developing critical thinking and problem-solving skills yielded a 25% increase in these areas. The adaptive feedback mechanisms and real-time task adjustments fostered deeper cognitive engagement, much like the adaptive feedback system explored by Awais et al. (2019). By emphasizing the learning process, IntelliFrame ensures that students reflect on their approaches, explore alternatives, and refine their work iteratively. Student engagement was another area of significant improvement, with a 35% increase compared to traditional methods. The adaptive learning pathways and personalized feedback helped sustain motivation, in line with the findings of Hadyaoui and Cheniti-Belcadhi (2023). IntelliFrame's real-time support kept students engaged throughout the course, preventing the disengagement that often occurs with static assessments.

The broader implications of IntelliFrame suggest a shift toward more personalized, process-oriented assessments in education. As highlighted by Xu (2024), AI's role in tailoring assessments to individual needs can close learning gaps and promote more inclusive practices. The system's continuous feedback model offers educators real-time insights into student progress. However, challenges remain. The complexity of developing domain-specific ontologies limits scalability. Additionally, concerns about over-reliance on AI and data privacy, raised by Smolansky et al. (2023), must be addressed to ensure the ethical use of AI in education. Future work should focus on improving IntelliFrame's scalability, applying it to other disciplines, enhancing its personalization algorithms, and examining its long-term impact on student success.

REFERENCES

Awais, M., Habiba, U., Khalid, H., Shoaib, M., & Arshad, S. (2019). An Adaptive Feedback System to Improve Student Performance Based on Collaborative Behavior. IEEE Access, PP, 1. https://doi.org/10.1109/ACCESS.2019.2931565

Birenbaum, M., & Dochy, F. (1996). Alternatives in Assessment of Achievements, Learning Processes and Prior Knowledge. Springer Netherlands. https://doi.org/10.1007/978-94-011-0657-3

Contrino, M. F., Reyes-Millán, M., Vázquez-Villegas, P., & Membrillo-Hernández, J. (2024). Using an adaptive learning tool to improve student performance and satisfaction in online and face-to-face education for a more personalized approach. Smart Learning Environments, 11(1), 6. https://doi.org/10.1186/s40561-024-00292-y

Feuerriegel, S., Hartmann, J., Janiesch, C., & Zschech, P. (2024). Generative AI. Business and Information Systems Engineering, 66(1), 111–126. https://doi.org/10.1007/s12599-023-00834-7

Hadyaoui, A., & Cheniti-Belcadhi, L. (2022). Towards an Adaptive Intelligent Assessment Framework for Collaborative Learning. In Proceedings of CSEDU (Vol. 1, pp. 601–608). https://doi.org/10.5220/0011124400003182

Hadyaoui, A., & Cheniti-Belcadhi, L. (2022). Towards an Ontology-based Recommender System for Assessment in a Collaborative Elearning Environment. In Proceedings of WEBIST (pp. 294–301). https://doi.org/10.5220/0011543500003318

Hadyaoui, A., & Cheniti-Belcadhi, L. (2023). An Ontology-Based Collaborative Assessment Analytics Framework to Predict Groups' Disengagement. In I. Czarnowski, R. J. Howlett, & L. C. Jain (Eds.), Intelligent Decision Technologies (pp. 74–84). Springer Nature Singapore.

Long, H., Kerr, B., Emler, T., & Birdnow, M. (2022). A Critical Review of Assessments of Creativity in Education. Review of Research in Education, 46, 288–323. https://doi.org/10.3102/0091732X221084326

Matthews, K., Janicki, T., He, L., & Patterson, L. (2012). Implementation of an Automated Grading System with an Adaptive Learning Component to Affect Student Feedback and Response Time.

Mizumoto, A., & Eguchi, M. (2023). Exploring the potential of using an AI language model for automated essay scoring. Research Methods in Applied Linguistics, 2(2), 100050. https://doi.org/10.1016/j.rmal.2023.100050

Nguyen, T., Tran, H., & Nguyen, M. (2023). Empowering Education: Exploring the Potential of Artificial Intelligence; Chapter 9: Artificial Intelligence (AI) in Teaching and Learning: A Comprehensive Review.

Paiva, J., Leal, J., & Figueira, Á. (2022). Automated Assessment in Computer Science Education: A State-of-the-Art Review. ACM Transactions on Computing Education, 22. https://doi.org/10.1145/3513140

Pellegrino, J., Chudowsky, N., & Glaser, R. (2001). Knowing What Students Know: The Science and Design of Educational Assessment.

Smolansky, A., Cram, A., Raduescu, C., Zeivots, S., Huber, E., & Kizilcec, R. F. (2023). Educator and Student Perspectives on the Impact of Generative AI on Assessments in Higher Education. In Proceedings of the Tenth ACM Conference on Learning @ Scale (pp. 378–382). https://doi.org/10.1145/3573051.3596191

Vanlehn, K., Banerjee, C., Milner, F., & Wetzel, J. (2020). Teaching algebraic model construction: A tutoring system, lessons learned and an evaluation. 1–22.

Xu, Z. (2024). AI in education: Enhancing learning experiences and student outcomes. Applied and Computational Engineering, 51, 104–111. https://doi.org/10.54254/2755-2721/51/20241187
