Advancing Urban Transportation Management: A Comprehensive

Review of Computer Vision-Based Vehicle Detection

and Counting Systems

Manish Mathur¹, Mrinal Kanti Sarkar² and G. Uma Devi¹

¹University of Engineering and Management Jaipur, Rajasthan, India

²Dept. of Computer Science, Sri Ramkrishna Sarada Vidya Mahapitha, West Bengal, India

Keywords: Urban Transportation Management, Computer Vision, Vehicle Detection, Vehicle Counting, Traffic Control,

Real-Time Monitoring, Deep Learning, Traffic Flow Optimization, Transportation Efficiency, Road Safety.

Abstract: In the landscape of urban transportation management, computer vision-based vehicle detection and counting

systems have emerged as transformative solutions. This review delves into the evolution and efficacy of such

systems in modern traffic control. Examining a spectrum of methodologies, from traditional to deep learning

approaches, the study highlights how computer vision accurately tracks and tallies vehicles on roads and

highways. These systems provide real-time insights, aiding authorities in identifying congestion points,

optimizing signal timings, and implementing dynamic lane management strategies. Moreover, they facilitate

diverse applications like toll collection and parking management, enhancing overall transportation efficiency

and safety. With their adaptability across environments and seamless integration into existing infrastructure,

these systems are indispensable for modern transportation authorities. This review emphasizes their role in

advancing urban transportation management, promising tangible enhancements in traffic flow efficiency,

safety, and urban mobility.

1 INTRODUCTION

In the landscape of urban transportation management,

the efficient flow of vehicles is critical for ensuring

smooth mobility, minimizing congestion, and

enhancing road safety. However, the increasing

complexity of modern road networks coupled with

the rise in vehicular traffic poses significant

challenges for conventional traffic control methods.

In this context, the integration of advanced

technologies such as computer vision has emerged as

a promising solution to address these challenges.

Computer vision-based vehicle detection and

counting systems leverage sophisticated image

processing techniques to analyze video feeds from

cameras or sensors, enabling the accurate

identification and tracking of vehicles on roads and

highways. These systems play a pivotal role in

providing real-time insights into traffic dynamics,

empowering transportation authorities to make data-

informed decisions for optimizing traffic flow and

alleviating congestion.

This comprehensive review aims to explore the

evolution, methodologies, and real-world

applications of computer vision-based vehicle

detection and counting systems in urban

transportation management. By analyzing a diverse

range of studies, methodologies, and applications,

this review seeks to provide insights into the

significance and effectiveness of these systems in

revolutionizing traffic control practices.

Through meticulous examination of the existing

literature, this review will elucidate the underlying

principles of computer vision-based vehicle detection

systems, ranging from traditional feature-based

approaches to state-of-the-art deep learning

techniques. Additionally, it will highlight the various

applications of these systems, including toll

collection, parking management, and traffic violation

detection, emphasizing their role in enhancing overall

transportation efficiency and safety. Furthermore,

this review will identify key research challenges and

opportunities for innovation in the field, aiming to

contribute to the advancement of urban transportation

management practices. By synthesizing findings from a wide range of sources, this review seeks to provide a comprehensive understanding of the current state-of-the-art and future directions of computer vision-based vehicle detection and counting systems in real-world traffic management.

Mathur, M., Sarkar, M. K. and Devi, G. U.

Advancing Urban Transportation Management: A Comprehensive Review of Computer Vision-Based Vehicle Detection and Counting Systems.

DOI: 10.5220/0013305600004646

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 1st International Conference on Cognitive & Cloud Computing (IC3Com 2024), pages 196-204

ISBN: 978-989-758-739-9

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2 LITERATURE REVIEW

The literature review covers recent advancements in vehicle detection technologies from 2015 to 2024. It discusses methodologies such as SINet for scale-insensitive detection, Faster R-CNN for improved performance, and various approaches addressing challenges like shadow detection, real-time detection, and object classification. The review is presented in tabular format to give a concise summary of each technology's advantages, limitations, datasets, and evaluation criteria, helping researchers understand and compare the different methodologies in the field of vehicle detection.

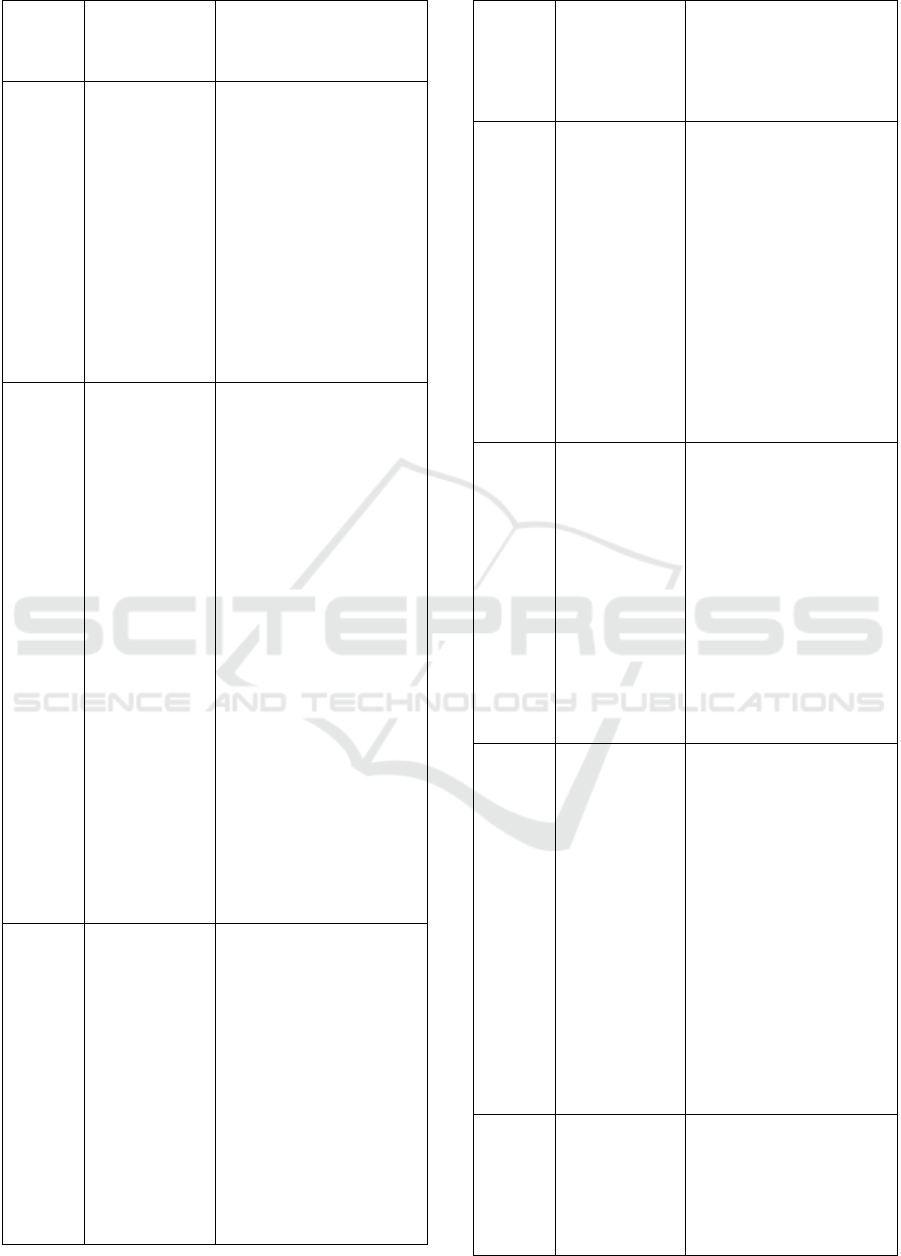

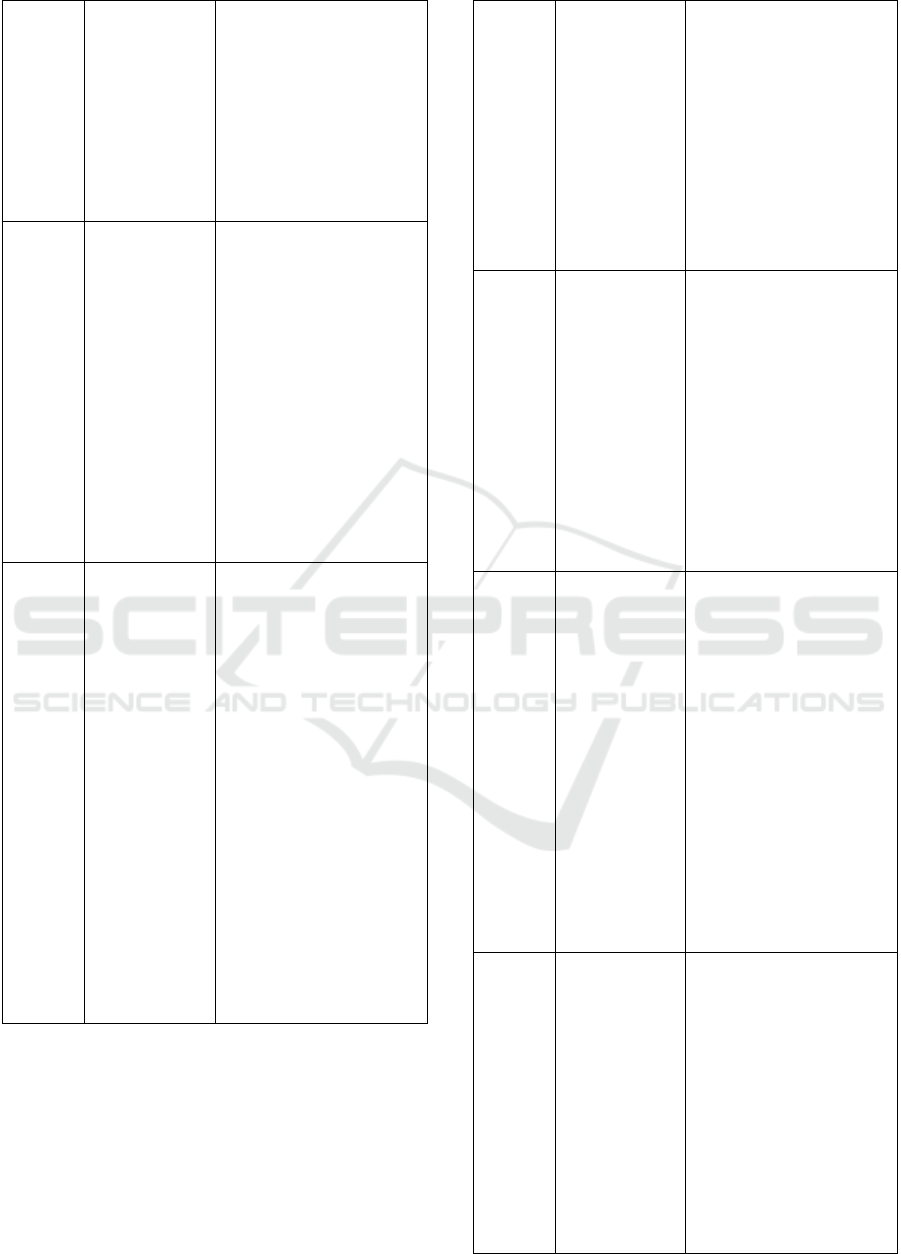

Table 1. Summary of Recent Advancements in Vehicle Detection Technologies (2015-2024).

| Ref. (Year) | Technology | Overall Concept |
| --- | --- | --- |
| [1] (2024) | The Artificial Hummingbird Optimization Algorithm (AHOA) with Hierarchical Deep Learning for Traffic Management (HDLTM) | Advantages: Improved traffic flow prediction, Enhanced traffic management in smart cities, Real-time traffic flow prediction. Limitations: Complexity in hyperparameter tuning. Datasets: Raw sensor data. Evaluation Criteria: Mean Absolute Percentage Error, Root Mean Square Error, Mean Absolute Error, Equal Coefficient, Runtime. |
| [2] (2024) | Faster R-CNN with Deformable Convolutional Network | Advantages: Enhanced detection accuracy for vehicles in low-light conditions, Improved precision in bounding box position prediction, Addressing sample imbalance for enhanced learning effectiveness, Reduction in missed detections through Soft-NMS. Limitations: Potential dependency on specific dataset characteristics, Sensitivity to parameter tuning. Datasets: UA-DETRAC, BDD100K. Evaluation Criteria: Nighttime Detection Accuracy, Model Complexity, Learning Effectiveness, Localization Precision. |
| [3] (2024) | YOLOv8 architecture with FasterNet, Decoupled Head, Deformable Attention Module (DAM), MPDIoU loss function | Advantages: Enhanced feature extraction from satellite images, Improved computational efficiency, Increased sensitivity to small targets, Enhanced feature correlation capture. Limitations: Minor reduction in Frames Per Second (FPS). Datasets: Satellite Remote Sensing Images. Evaluation Criteria: Precision, Recall, Mean Average Precision. |
| [4] (2024) | MV2_S_YE Object Detection Algorithm | Advantages: MobileNetV2 backbone reduces complexity, improving speed; Integrates channel attention and SENet for accuracy. Limitations: Sacrifices some accuracy, Increased complexity, Requires parameter tuning. Datasets: Pascal VOC, Udacity, KAIST. Evaluation Criteria: mAP at IoU 0.5, FPS detection speed. |
| [5] (2023) | R-YOLOv5 with Angle Prediction Branch, CSL Angle Classification, Cascaded STrB, FEAM, ASFF | Advantages: Effective detection of rotating vehicles in drone images, Enhanced feature fusion and semantic information, Improved utilization of detailed information through local feature self-supervision, Multi-scale feature fusion for better object detection. Limitations: Potential sensitivity to complex environmental conditions, Performance may vary depending on dataset characteristics. Datasets: Drone-Vehicle Dataset, UCAS-AOD Remote Sensing Dataset. Evaluation Criteria: Detection Accuracy, Parameter Count, Frame Rate. |
| [6] (2022) | YOLOv4 optimization with attention mechanism and enhanced FPN | Advantages: Suppression of interference features in images, Enhanced feature extraction, Improved object detection and classification performance. Limitations: May require substantial computational resources. Datasets: BIT-Vehicle dataset, UA-DETRAC dataset. Evaluation Criteria: Mean Average Precision (mAP), F1 score. |
| [7] (2022) | Improved Lightweight RetinaNet for SAR Ship Detection | Advantages: Utilizes ghost modules and reduced deep convolutional layers for efficiency, Embeds spatial and channel attention modules for enhanced detectability, Adjusts aspect ratios using K-means clustering algorithm. Limitations: Potential loss of representation power with shallower convolutional layers, Complexity of architecture may impact interpretability, K-means clustering may require careful parameter tuning. Datasets: SSDD dataset, Gaofen-3 mini dataset, Hisea-1 satellite SAR image. Evaluation Criteria: Detection accuracy, Recall ratio, Reduction in floating-point operations and parameters, Robustness to small datasets. |
| [8] (2021) | YOLOv4 with Secondary Transfer Learning and Hard Negative Example Mining | Advantages: Enhanced detection of severely occluded vehicles in weak infrared aerial images, Utilization of secondary transfer learning for improved model performance. Limitations: Potential sensitivity to variations and environmental conditions, Computational cost associated with successive transfer learning. Datasets: UCAS_AOD Visible Dataset, VIVID Visible Dataset, VIVID Infrared Dataset. Evaluation Criteria: Average Precision, F1 Score, False Detection Rate Reduction. |
| [9] (2021) | Computer Vision, Time-Spatial Image (TSI) | Advantages: Fast and accurate vehicle counting, Efficient traffic volume estimation, Utilization of attention mechanism for enhanced feature extraction. Limitations: Reliance on manual annotation for TSI creation, Potential challenges in handling complex traffic scenarios. Datasets: UA-DETRAC Dataset. Evaluation Criteria: Accuracy, Speed, Traffic Volume Estimation. |
| [10] (2021) | W-Net: Multi-Feature CNN | Advantages: Addresses segmentation challenges, Utilizes contracting/expanding networks, Incorporates inception layers and refinement modules. Limitations: Requires sufficient training data, Increased computational complexity. Datasets: Water body, Crack detection. Evaluation Criteria: Accuracy, IoU, Precision, Recall. |
| [11] (2020) | Enhanced tiny-YOLOv3 with Contextual Feature Integration, SPP Module, Grid Size Adjustment, K-means Clustering | Advantages: Improved recognition rates in complex road environments, Enhanced real-time performance, Increased feature extraction capability through contextual information and SPP module. Limitations: Sensitivity to variations in road and lighting conditions, Performance degradation in highly cluttered scenes. Datasets: KITTI Datasets. Evaluation Criteria: Average Accuracy, Detection Speed. |
| [12] (2020) | Multi-Modal Fusion, DNN | Advantages: Blends features from multiple ConvNets, enhancing DR recognition, Utilizes pooling for better representation, Dropout aids convergence. Limitations: Increased computational complexity, Dependency on labeled data, Interpretability challenges. Datasets: Kaggle APTOS 2019. Evaluation Criteria: Accuracy, Kappa Statistic for DR identification and severity prediction. |
| [13] (2020) | MobileNetV2-SVM | Advantages: Uses efficient MobileNetV2 architecture, Combines with SVM for improved performance, Data augmentation enhances model generalization. Limitations: May capture fewer complex features, Dependency on data quality, SVM integration requires tuning. Datasets: APTOS 2019. Evaluation Criteria: Quadratic Weighted Kappa, Accuracy, AUROC for each DR severity class. |
| [14] (2020) | Aggregation Channel Attention Network (ACAN) - Deep Learning for Glaucoma Diagnosis | Advantages: Utilizes context information effectively for semantic segmentation, Achieves high accuracy in optic disc segmentation tasks for glaucoma diagnosis. Limitations: May require substantial computational resources due to the integration of channel dependencies and multi-scale information. Datasets: Messidor dataset, RIM-ONE dataset. Evaluation Criteria: Overlapping Error, Segmentation accuracy, Computational Efficiency, Dice Coefficient, Cross Entropy Loss, Balanced contribution of loss functions. |
| [15] (2019) | Deep learning, object detection, object tracking, trajectory processing | Advantages: Accurate vehicle counting, Comprehensive traffic flow information, High overall accuracy (>90%). Limitations: Processing speed may vary depending on hardware and dataset size. Datasets: Dataset (VDD), Vehicle Counting Results Verification Dataset. Evaluation Criteria: Overall accuracy, Processing speed. |
| [16] (2019) | Convolutional Neural Networks (CNNs) | Advantages: Effective differentiation between interesting and uninteresting regions, High classification efficiency with maintained accuracy. Limitations: Performance may vary depending on environmental conditions and dataset characteristics. Datasets: CDNET 2014 dataset, Custom dataset. Evaluation Criteria: Classification Speed (fps), Detection Accuracy. |
| [17] (2019) | Computer Vision, UAV Imagery | Advantages: Automation of labor-intensive counting process, Utilization of multispectral UAV imagery for accurate detection, Potential for cost and time savings in forestry operations. Limitations: Dependence on quality and resolution of UAV imagery, Potential challenges in accurately delineating planting microsites. Datasets: Custom Dataset of Aerial Images. Evaluation Criteria: Efficiency, Validity under Challenging Conditions. |
| [18] (2019) | Feature Pyramid Siamese Network (FPSN) | Advantages: Extends Siamese architecture with FPN, Incorporates spatiotemporal motion feature for improved MOT performance. Limitations: Potential complexity increase, Dependency on data quality for effective learning, Computational overhead. Datasets: Public MOT challenge benchmark. Evaluation Criteria: MOTA, MOTP, IDF1 compared to state-of-the-art MOT methods. |
| [19] (2018) | Magnetic Sensor-based Detection | Advantages: Precise vehicle quantity and category data acquisition, Robustness enhanced with parking-sensitive module, 42-D feature extraction for classification. Limitations: Limited validation on specific traffic scenario, Potential dependence on sensor placement and environment. Datasets: Data collected at a Beijing freeway exit. Evaluation Criteria: Accuracy Rate, Effectiveness in Traffic Environment, Algorithm Robustness, Practicality. |
| [20] (2018) | Convolutional Neural Networks | Advantages: Efficient and effective vehicle detection, Higher precision and recall rates. Limitations: Performance may vary depending on dataset characteristics and environmental conditions. Datasets: Munich dataset, Overhead Imagery Research Dataset. Evaluation Criteria: Precision, Recall Rate. |
| [21] (2016) | Faster R-CNN | Advantages: State-of-the-art performance on generic object detection, Adaptable for various applications including vehicle detection. Limitations: Performs unimpressively on large vehicle datasets without suitable parameter tuning and algorithmic modification. Datasets: KITTI vehicle dataset. Evaluation Criteria: Detection accuracy, Precision, Recall, Computational efficiency. |
| [22] (2016) | YOLO | Advantages: Direct regression approach improves speed and efficiency. Limitations: More localization errors compared to some other methods. Datasets: COCO Dataset, PASCAL VOC Dataset. Evaluation Criteria: Speed, mAP, False Positive Rate, Localization Accuracy. |
| [23] (2015) | Virtual line-based sensors, gradient and range feature analysis | Advantages: Effective vehicle detection, Robust performance under diverse environmental conditions. Limitations: Potential challenges in complex road layouts. Datasets: Experimentally obtained data. Evaluation Criteria: Accuracy rate, Performance under various conditions. |
| [24] (2015) | Regression Analysis, Computer Vision | Advantages: Effective in scenarios with severe occlusions or low vehicle resolution, Utilization of warping method to detect foreground segments, Adoption of cascaded regression approach. Limitations: Complexity associated with feature extraction and regression modeling, Potential limitations in handling complex traffic scenarios. Datasets: Custom Dataset. Evaluation Criteria: Accuracy, Robustness, Reliability. |

To provide an in-depth comparison of various

object detection networks with a focus on their

applicability to road object detection, we will analyze

YOLOv1, YOLOv2, YOLOv3, YOLOv4, YOLOv5,

MobileNet, SENet, and RetinaNet. We will assess

their architecture, performance, and suitability for

road object detection tasks.

YOLOv1: YOLOv1 (You Only Look Once) [22] was

groundbreaking for its real-time object detection

capabilities. It divides the input image into a grid and

predicts bounding boxes and class probabilities

directly from the full image.

• Architecture: YOLOv1 consists of a single

convolutional neural network (CNN)[14] that

simultaneously predicts bounding boxes and class

probabilities.

• Performance: While fast, YOLOv1 struggles with

small object detection and localization accuracy

due to its coarse feature maps.


• Suitability for Road Object Detection: YOLOv1

may not be ideal for road object detection [4] due

to its limitations in handling small objects like

road signs and pedestrians.
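The grid-based prediction described above can be illustrated with a minimal sketch. The values S = 7, B = 2 and C = 20 are the PASCAL VOC settings reported in the original YOLO paper [22]; the function names below are hypothetical and serve only as an illustration, not as the authors' implementation.

```python
def yolo_v1_output_shape(grid_size=7, boxes_per_cell=2, num_classes=20):
    """Shape of the YOLOv1 prediction tensor: S x S x (B * 5 + C).

    Each box contributes 5 numbers (x, y, w, h, confidence); class
    probabilities are shared by all boxes of a cell.
    """
    return (grid_size, grid_size, boxes_per_cell * 5 + num_classes)


def responsible_cell(cx, cy, img_w, img_h, grid_size=7):
    """Return (row, col) of the grid cell that contains the box centre.

    cx, cy are pixel coordinates of the box centre. The clamp keeps a
    centre lying exactly on the right or bottom border inside the grid.
    """
    col = min(int(cx / img_w * grid_size), grid_size - 1)
    row = min(int(cy / img_h * grid_size), grid_size - 1)
    return row, col


if __name__ == "__main__":
    print(yolo_v1_output_shape())
    print(responsible_cell(224, 224, 448, 448))
```

Because each cell predicts only a few boxes and one set of class probabilities, several small objects whose centres fall into the same cell compete for the same predictions, which is one way to see the small-object limitation noted above.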

YOLOv2: YOLOv2 addressed the shortcomings of

YOLOv1 by introducing architectural improvements

such as anchor boxes, batch normalization, and multi-

scale feature extraction.

• Architecture: YOLOv2 features a more

sophisticated CNN architecture [2] with

additional layers for better feature representation.

• Performance: YOLOv2 improved accuracy and

expanded its application to smaller objects.

• Suitability for Road Object Detection: YOLOv2

performs better than YOLOv1 for road object

detection tasks, but may still struggle with small

objects and occlusions.
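To make the idea of anchor boxes concrete, the following sketch assigns a ground-truth box to the anchor whose shape overlaps it most. It compares widths and heights only, as if both boxes shared the same centre, which is the shape-based IoU commonly used when choosing anchor priors. The anchor sizes and function names are illustrative assumptions, not values from any cited paper.

```python
def shape_iou(wh_a, wh_b):
    """IoU of two boxes given as (width, height), assuming a shared centre."""
    inter = min(wh_a[0], wh_b[0]) * min(wh_a[1], wh_b[1])
    union = wh_a[0] * wh_a[1] + wh_b[0] * wh_b[1] - inter
    return inter / union


def best_anchor(box_wh, anchors):
    """Index of the anchor with the highest shape IoU for the given box."""
    return max(range(len(anchors)), key=lambda i: shape_iou(box_wh, anchors[i]))


if __name__ == "__main__":
    # Hypothetical anchors in pixels: small square, tall, and wide.
    anchors = [(10, 10), (30, 60), (100, 50)]
    print(best_anchor((28, 55), anchors))
```

With anchors, the network only has to predict an offset from a reasonable prior shape instead of regressing box dimensions from scratch.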

YOLOv3: YOLOv3 further improved accuracy by

introducing a new backbone architecture and

incorporating feature pyramid networks (FPN) [21]

for better object detection across different scales.

• Architecture: YOLOv3 includes a Darknet-53

backbone and utilizes FPN for multi-scale feature

extraction.

• Performance: YOLOv3 achieved notable

improvements in accuracy compared to its

predecessors.

• Suitability for Road Object Detection: YOLOv3

offers better performance for road object

detection, especially for small and occluded

objects.
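The multi-scale prediction mentioned above can be quantified with a short calculation. The sketch assumes the commonly used configuration of a 416 x 416 input, output strides of 8, 16 and 32, and three anchors per scale; these defaults and the function name are assumptions for illustration.

```python
def yolo_v3_grids(input_size=416, strides=(8, 16, 32), anchors_per_scale=3):
    """Return the grid size at each scale and the total number of predicted boxes."""
    grids = [input_size // s for s in strides]
    total_boxes = sum(g * g for g in grids) * anchors_per_scale
    return grids, total_boxes


if __name__ == "__main__":
    grids, total = yolo_v3_grids()
    print(grids, total)
```

The finest grid (stride 8) has cells that each cover only 8 x 8 input pixels, which is why predictions at that scale help with small and distant road objects.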

YOLOv4: YOLOv4 pushed the boundaries of object

detection with advancements in network architecture

[10], data augmentation, and optimization techniques.

• Architecture: YOLOv4 features a more complex

backbone network with additional optimization

techniques.

• Performance: YOLOv4 achieved state-of-the-art

performance in terms of accuracy and speed.

• Suitability for Road Object Detection: YOLOv4

offers excellent performance for road object

detection tasks, with improved accuracy and

efficiency.

YOLOv5: YOLOv5 introduced a streamlined

architecture with a focus on simplicity and efficiency,

leveraging advancements in neural architecture

search (NAS) [20].

• Architecture: YOLOv5 utilizes a smaller, more

efficient CNN architecture compared to previous

versions.

• Performance: YOLOv5 achieved competitive

performance while being faster and more

lightweight.

• Suitability for Road Object Detection: YOLOv5 is

well-suited for road object detection, offering a

good balance between performance and efficiency

[5].

MobileNet: MobileNet is designed for resource-

constrained environments such as mobile devices,

offering lightweight and efficient CNN architectures.

• Architecture: MobileNet utilizes depthwise

separable convolutions to reduce computational

complexity.

• Performance: While not as accurate as larger

networks, MobileNet offers excellent

performance considering its low computational

requirements [13].

• Suitability for Road Object Detection: MobileNet

is suitable for road object detection applications

where computational resources are limited.
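The saving from depthwise separable convolutions can be checked with a simple parameter count. The sketch below ignores biases, and the layer sizes in the example are arbitrary assumptions chosen for illustration.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depthwise k x k convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = kernel * kernel * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out             # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    k, c_in, c_out = 3, 32, 64
    std = standard_conv_params(k, c_in, c_out)
    sep = depthwise_separable_params(k, c_in, c_out)
    print(std, sep, sep / std)
```

The ratio of the two counts equals 1/c_out + 1/k², so for 3 x 3 kernels the separable form needs roughly eight to nine times fewer weights once the number of output channels is large.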

SENet: SENet (Squeeze-and-Excitation Network)

introduced channel-wise attention mechanisms to

enhance feature representation and improve model

performance.

• Architecture: SENet integrates attention modules

into CNN [21] architectures to adaptively

recalibrate feature maps.

• Performance: SENet improves model

performance by effectively capturing feature

dependencies.

• Suitability for Road Object Detection: SENet can

enhance the performance of object detection

models for road scenes by improving feature

representation and context awareness.
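The channel recalibration performed by a squeeze-and-excitation block can be sketched in plain Python as three steps: squeeze (global average pooling), excitation (two small fully connected layers), and scaling. The weight matrices are passed in as arguments and biases are omitted; the function name and the toy inputs are assumptions for illustration only.

```python
import math


def se_block(feature_maps, w1, w2):
    """Apply squeeze-and-excitation to a list of C channels (each an H x W list).

    w1 has shape (C // r) x C and w2 has shape C x (C // r), where r is the
    reduction ratio. Returns the rescaled feature maps and the channel scales.
    """
    # Squeeze: one average value per channel.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: fully connected -> ReLU -> fully connected -> sigmoid.
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    scales = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
              for row in w2]
    # Scale: multiply every value of a channel by that channel's weight.
    out = [[[v * s for v in row] for row in ch]
           for ch, s in zip(feature_maps, scales)]
    return out, scales


if __name__ == "__main__":
    maps = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
    w1 = [[0.5, 0.5]]       # reduce 2 channels to 1 hidden unit
    w2 = [[1.0], [-1.0]]    # expand back to 2 channel scales
    rescaled, scales = se_block(maps, w1, w2)
    print(scales)
```

In this toy example the first channel receives a scale above 0.5 and the second a scale below 0.5, showing how the block can emphasize some channels and suppress others based on global context.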

RetinaNet: RetinaNet introduced focal loss to

address the class imbalance problem in object

detection, focusing training on hard examples [7].

• Architecture: RetinaNet utilizes a feature pyramid

network (FPN) [18] backbone and a two-branch

detection head.

• Performance: RetinaNet achieved state-of-the-art

performance by effectively handling class

imbalance and small object detection.

• Suitability for Road Object Detection: RetinaNet

excels in road object detection tasks, particularly

in scenarios with small objects and class

imbalance, making it the best choice among the

discussed networks.
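The effect of focal loss on easy versus hard examples can be shown with a minimal sketch of the binary form, FL(p_t) = -α_t (1 - p_t)^γ log(p_t). The defaults α = 0.25 and γ = 2 follow the values commonly reported for RetinaNet; the function name and the clamping constant are assumptions for illustration.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """Binary focal loss for one prediction.

    p is the predicted probability of the positive class, y is the label (0 or 1).
    """
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    p_t = min(max(p_t, eps), 1.0 - eps)  # avoid log(0)
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


if __name__ == "__main__":
    easy = focal_loss(0.9, 1)   # confident, correct prediction
    hard = focal_loss(0.1, 1)   # confident, wrong prediction
    print(easy, hard)
```

Setting γ = 0 reduces the expression to α-weighted cross-entropy; larger γ shrinks the contribution of well-classified examples, so the many easy background regions in a road scene do not dominate training.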

RetinaNet stands out as the best choice for road object

detection due to its ability to handle small objects,

class imbalance, and occlusions effectively. Its

performance surpasses other networks like YOLOv3,

YOLOv4, and MobileNet, offering state-of-the-art


accuracy while maintaining efficiency. By addressing

key challenges in road object detection, RetinaNet

provides superior performance and reliability,

making it the preferred choice for various road safety

and autonomous driving applications.

Nasaruddin et al. [16] introduce a novel attention-based detection system designed to handle challenging outdoor scenarios characterized by swaying movement, camera jitter, and adverse weather conditions. Their approach employs bilateral texturing to construct a robust model capable of accurately identifying moving vehicle areas.

In their methodology, they generate an attention region that encompasses the entirety of the moving vehicle areas by leveraging bilateral texturing. This attention region is then fed into the classification module as a grid input. Subsequently, the classification module produces a class map of probabilities along with the final detections.

The classification task in their system involves four classes: car, truck, bus, and motorcycle. To train their model, they utilize a dataset comprising 49,652 annotated training samples.

Figure 1 provides an overview of their system workflow, illustrating the key components and their interactions. Their paper goes on to detail the attention-based detection and lightweight fine-grained classification techniques, with the aim of presenting a robust and efficient solution for vehicle detection in challenging outdoor environments.

Figure 1: System workflow of the approach in [16].

Building on this work, future research could focus on advancing neural network architectures for attention-based detection in outdoor scenes, addressing challenges like swaying movement and adverse weather. Optimizing algorithms for real-time performance on edge devices and incorporating multimodal sensor data could enhance detection reliability. Additionally, exploring domain adaptation techniques and transfer learning could improve model generalization across diverse conditions and datasets. These advancements aim to bolster the robustness and applicability of attention-based detection systems in practical scenarios.

3 RESEARCH GAP

We focus on the evolution and efficacy of computer

vision-based vehicle detection and counting systems

in urban transportation management. Through

meticulous examination of existing literature and

methodologies, we have identified several research

gaps that need to be addressed:

• Limited Generalizability: Many existing studies

focus on specific scenarios or datasets, which

may not accurately represent the diverse range of

environmental conditions and road networks

encountered in real-world traffic management

scenarios. There is a need for research that

explores the adaptability of vehicle detection

systems across various contexts to ensure their

effectiveness in different urban environments.

• Lack of Standardized Evaluation Metrics: The

absence of standardized evaluation metrics and

benchmarks hinders fair comparisons between

different methodologies. This makes it

challenging for researchers and practitioners to

assess the performance of vehicle detection

systems accurately. Addressing this gap requires

the development of standardized evaluation

protocols that encompass a wide range of

scenarios and conditions.

• Practical Deployment Challenges: While the

theoretical effectiveness of computer vision-

based systems is well-documented, there is

limited discussion on the practical challenges and

considerations involved in deploying these

systems in real-world traffic management

scenarios. This review aims to bridge this gap by

investigating the practical implications of

implementing vehicle detection systems,

including cost, scalability, and integration with

existing infrastructure.
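As an illustration of what a shared evaluation protocol might compute, the sketch below matches detections to ground-truth boxes by IoU and reports precision, recall, and F1. The 0.5 IoU threshold, the greedy one-to-one matching, and the function names are assumptions made for this example; benchmark suites differ in exactly these choices, which is part of the comparability problem described above.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(detections, ground_truth, iou_threshold=0.5):
    """Greedy one-to-one matching; detections are assumed sorted by confidence."""
    matched = set()
    tp = 0
    for det in detections:
        best_j, best_iou = None, iou_threshold
        for j, gt in enumerate(ground_truth):
            if j in matched:
                continue
            iou = box_iou(det, gt)
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            matched.add(best_j)
            tp += 1
    fp = len(detections) - tp
    fn = len(ground_truth) - tp
    precision = tp / (tp + fp) if detections else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
    dets = [(0, 0, 10, 10), (50, 50, 60, 60)]
    print(precision_recall_f1(dets, gt))
```

Reporting the threshold and matching rule alongside the scores would make results from different studies easier to compare.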

4 RESEARCH CHALLENGES

• Data Collection and Annotation: Gathering


large-scale datasets with diverse environmental

conditions and ground truth annotations is a

significant challenge. We need to collaborate

with transportation authorities and industry

partners to collect high-quality data that

accurately represents real-world scenarios.

• Algorithm Development and Optimization:

Developing and optimizing algorithms for

vehicle detection and counting requires expertise

in computer vision, machine learning, and

optimization techniques. Collaboration with

interdisciplinary teams is needed to develop

state-of-the-art algorithms that balance accuracy,

efficiency, and scalability.

• Integration with Existing Infrastructure:

Integrating computer vision-based systems with

existing traffic management infrastructure poses

technical and logistical challenges. Close

cooperation with stakeholders is needed to ensure

seamless integration and compatibility with

existing systems and protocols.

5 CONCLUSION

In the realm of urban transportation management, the

integration of computer vision-based vehicle

detection systems marks a significant stride towards

enhancing traffic control and optimization. Through

a comprehensive review spanning methodologies

from traditional to deep learning approaches, this

research has elucidated the evolution and efficacy of

such systems in modern traffic management.

The findings underscore the pivotal role of

computer vision technologies in providing real-time

insights into traffic dynamics. These systems offer

accurate tracking and counting of vehicles,

empowering transportation authorities to make data-

informed decisions for optimizing traffic flow,

identifying congestion points, and implementing

dynamic lane management strategies. Moreover, the

adaptability of these systems across diverse

environments and their seamless integration into

existing infrastructure make them indispensable tools

for modern transportation authorities.

While the review has highlighted the efficacy of

various methodologies, including deep learning

techniques like RetinaNet, it also identifies several

research challenges and opportunities for innovation.

Performance evaluation remains a crucial aspect,

necessitating standardized benchmarks and

evaluation metrics for fair comparisons. Additionally,

there is a need for further research into the

adaptability of vehicle detection systems across

different environmental conditions and road

networks.

In conclusion, computer vision-based vehicle

detection systems hold immense promise for

revolutionizing urban transportation management

practices. By addressing the identified challenges and

capitalizing on opportunities for innovation,

researchers and practitioners can unlock the full

potential of these systems, leading to tangible

enhancements in traffic flow efficiency, safety, and

urban mobility. Ultimately, the integration of

advanced technologies like computer vision lays the

foundation for a smarter, more efficient transportation

ecosystem, benefiting communities and societies

worldwide.

REFERENCES

1. IEEE Journals & Magazine, 2024. "Artificial Hummingbird Optimization Algorithm With Hierarchical Deep Learning for Traffic Management in Intelligent Transportation Systems." Accessed April 05, 2024. https://ieeexplore.ieee.org/document/10379096.

2. Xu, Y., Chu, K., Zhang, J., 2024. Nighttime Vehicle Detection Algorithm Based on Improved Faster-RCNN. IEEE Access, 12, 19299–19306. https://doi.org/10.1109/ACCESS.2023.3347791.

3. IEEE Journals & Magazine, 2024. "SatDetX-YOLO: A More Accurate Method for Vehicle Target Detection in Satellite Remote Sensing Imagery." Accessed April 05, 2024. https://ieeexplore.ieee.org/document/10480425.

4. Wang, P., Wang, X., Liu, Y., Song, J., 2024. Research on Road Object Detection Model Based on YOLOv4 of Autonomous Vehicle. IEEE Access, 12, 8198–8206. https://doi.org/10.1109/ACCESS.2024.3351771.

5. Li, Z., Pang, C., Dong, C., Zeng, X., 2023. R-YOLOv5: A Lightweight Rotational Object Detection Algorithm for Real-Time Detection of Vehicles in Dense Scenes. IEEE Access, 11, 61546–61559. https://doi.org/10.1109/ACCESS.2023.3262601.

6. IEEE Journals & Magazine, 2024. "Improved Vision-Based Vehicle Detection and Classification by Optimized YOLOv4." Accessed February 22, 2024. https://ieeexplore.ieee.org/document/9681804.

7. Miao, T., et al., 2022. An Improved Lightweight RetinaNet for Ship Detection in SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens., 15, 4667–4679. https://doi.org/10.1109/JSTARS.2022.3180159.

8. Du, S., Zhang, P., Zhang, B., Xu, H., 2021. Weak and Occluded Vehicle Detection in Complex Infrared Environment Based on Improved YOLOv4. IEEE Access, 9, 25671–25680. https://doi.org/10.1109/ACCESS.2021.3057723.

9. Yang, H., Zhang, Y., Zhang, Y., Meng, H., Li, S., Dai, X., 2021. A Fast Vehicle Counting and Traffic Volume Estimation Method Based on Convolutional Neural Network. IEEE Access, 9, 150522–150531. https://doi.org/10.1109/ACCESS.2021.3124675.

10. Tambe, R.G., Talbar, S.N., Chavan, S.S., 2021. Deep Multi-Feature Learning Architecture for Water Body Segmentation from Satellite Images. J. Vis. Commun. Image Represent., 77, 103141. https://doi.org/10.1016/j.jvcir.2021.103141.

11. Wang, X., Wang, S., Cao, J., Wang, Y., 2020. Data-Driven Based Tiny-YOLOv3 Method for Front Vehicle Detection Inducing SPP-Net. IEEE Access, 8, 110227–110236. https://doi.org/10.1109/ACCESS.2020.3001279.

12. Bodapati, J.D., et al., 2020. Blended Multi-Modal Deep ConvNet Features for Diabetic Retinopathy Severity Prediction. Electronics, 9(6), 6. https://doi.org/10.3390/electronics9060914.

13. Taufiqurrahman, S., Handayani, A., Hermanto, B.R., Mengko, T.L.E.R., 2020. Diabetic Retinopathy Classification Using A Hybrid and Efficient MobileNetV2-SVM Model. In: 2020 IEEE Region 10 Conference (TENCON). pp. 235–240. https://doi.org/10.1109/TENCON50793.2020.9293739.

14. Jin, B., Liu, P., Wang, P., Shi, L., Zhao, J., 2020. Optic Disc Segmentation Using Attention-Based U-Net and the Improved Cross-Entropy Convolutional Neural Network. Entropy, 22(8), 8. https://doi.org/10.3390/e22080844.

15. Dai, Z., et al., 2019. Video-Based Vehicle Counting Framework. IEEE Access, 7, 64460–64470. https://doi.org/10.1109/ACCESS.2019.2914254.

16. Nasaruddin, N., Muchtar, K., Afdhal, A., 2019. A Lightweight Moving Vehicle Classification System Through Attention-Based Method and Deep Learning. IEEE Access, 7, 157564–157573. https://doi.org/10.1109/ACCESS.2019.2950162.

17. Bouachir, W., Ihou, K.E., Gueziri, H.-E., Bouguila, N., Bélanger, N., 2019. Computer Vision System for Automatic Counting of Planting Microsites Using UAV Imagery. IEEE Access, 7, 82491–82500. https://doi.org/10.1109/ACCESS.2019.2923765.

18. IEEE Journals & Magazine, 2024. "Multiple Object Tracking via Feature Pyramid Siamese Networks." Accessed April 06, 2024. https://ieeexplore.ieee.org/document/8587153.

19. Dong, H., Wang, X., Zhang, C., He, R., Jia, L., Qin, Y., 2018. Improved Robust Vehicle Detection and Identification Based on Single Magnetic Sensor. IEEE Access, 6, 5247–5255. https://doi.org/10.1109/ACCESS.2018.2791446.

20. Tayara, H., Gil Soo, K., Chong, K.T., 2018. Vehicle Detection and Counting in High-Resolution Aerial Images Using Convolutional Regression Neural Network. IEEE Access, 6, 2220–2230. https://doi.org/10.1109/ACCESS.2017.2782260.

21. Fan, Q., Brown, L., Smith, J., 2016. A Closer Look at Faster R-CNN for Vehicle Detection. In: 2016 IEEE Intelligent Vehicles Symposium (IV). pp. 124–129. https://doi.org/10.1109/IVS.2016.7535375.

22. Redmon, J., Divvala, S., Girshick, R., Farhadi, A., 2016. You Only Look Once: Unified, Real-Time Object Detection. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 779–788. https://doi.org/10.1109/CVPR.2016.91.

23. Tian, Y., Wang, Y., Song, R., Song, H., 2015. Accurate Vehicle Detection and Counting Algorithm for Traffic Data Collection. In: 2015 International Conference on Connected Vehicles and Expo (ICCVE). pp. 285–290. https://doi.org/10.1109/ICCVE.2015.60.

24. Liang, M., Huang, X., Chen, C.-H., Chen, X., Tokuta, A., 2015. Counting and Classification of Highway Vehicles by Regression Analysis. IEEE Trans. Intell. Transp. Syst., 16(5), 2878–2888. https://doi.org/10.1109/TITS.2015.2424917.
