Immersive versus Non-Immersive Virtual Reality Environments:

Comparing Different Visualization Modalities in a Cognitive-Motor Dual-Task

Marianna Pizzoᵃ, Matteo Martiniᵇ, Fabio Solariᶜ and Manuela Chessaᵈ

Department of Informatics, Bioengineering, Robotics and Systems Engineering,

University of Genoa, Genoa, Italy

{marianna.pizzo, matteo.martini}@edu.unige.it, {fabio.solari, manuela.chessa}@unige.it

Keywords:

Perspective Visualization, Orthographic Visualization, Reaching Task, Counting Task, Presence in VR,

Usability in VR, Cognitive Load.

Abstract:

In fields like cognitive and physical rehabilitation, adopting immersive visualization devices can be unfeasible.

In these cases, the main challenge is to develop Virtual Reality (VR) scenarios that still provide a strong

sense of presence, usability, and user agency, even without full immersion. This paper explores a cognitive-motor dual-task in VR, consisting of counting and reaching, and compares three non-immersive visualization

methods on a 2D screen (tracked perspective camera, fixed perspective camera, fixed orthographic camera)

with the immersive experience provided by a head-mounted display. The comparison focused on factors like

sense of presence, usability, cognitive load, and task accuracy. Results show, as expected, that immersive

VR provides a higher sense of presence and better usability with respect to the non-immersive visualization

methods. Unexpectedly, the implemented 2D visualization based on a tracked perspective camera seems not

to be the best approximation of immersive VR. Finally, the two fixed camera conditions showed no significant

differences in performance based on the type of projection.

1 INTRODUCTION

Immersive Virtual Reality (VR) technology has ex-

panded beyond gaming and entertainment into fields

such as education, training, medical simulation, and

rehabilitation. However, transitioning VR into clini-

cal practice remains challenging due to financial con-

straints, resistance to change, privacy concerns, and

gaps in staff training.

Clinicians have recently shown growing interest in

serious games and exergames for rehabilitation (Lee

et al., 2024; Ehioghae et al., 2024; Ren et al., 2024;

Garzotto et al., 2024). Nevertheless, immersive VR

is not always suitable for every rehabilitation proto-

col due to the unique needs of patients. For instance,

it may be unusable in cases of severe cognitive im-

pairment, epilepsy, or simply when clinicians opt for

non-immersive VR based on the patient’s condition.

Another limitation is the lack of sufficient data on the use of head-mounted displays (HMDs) for rare conditions, though this gap is gradually narrowing (Malihi et al., 2020).

ᵃ https://orcid.org/0009-0004-8653-4018
ᵇ https://orcid.org/0009-0006-3929-5055
ᶜ https://orcid.org/0000-0002-8111-0409
ᵈ https://orcid.org/0000-0003-3098-5894

Exergames and serious games in clinical settings

are often delivered via non-immersive VR on 2D

screens, but the lack of depth cues reduces movement

precision, increases cognitive strain (Wenk et al.,

2022), and limits the immersive benefits of VR (Rao

et al., 2023). Moreover, the use of perspective cameras in 3D virtual environments can distort object proportions on 2D screens. To address this issue, clinicians often ask for exergames to be downgraded to 2D scenarios; although these are easier to manage, they lose fundamental advantages of 3D environments, such as depth perception, spatial representation, and improved eye-hand coordination. A potential solution to these issues is the adoption of orthographic camera visualization for non-immersive VR.

Pizzo, M., Martini, M., Solari, F. and Chessa, M.
Immersive versus Non-Immersive Virtual Reality Environments: Comparing Different Visualization Modalities in a Cognitive-Motor Dual-Task.
DOI: 10.5220/0013133600003912
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 1: GRAPP, HUCAPP and IVAPP, pages 561-568
ISBN: 978-989-758-728-3; ISSN: 2184-4321
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

In all these cases where immersive VR is not feasible, developers who collaborate with clinicians must know which non-immersive alternative is best. For this reason, in this paper, we aim to evaluate which non-immersive visualization modality can provide a user experience

that most closely resembles immersive VR. Specifi-

cally, we aim to understand if a non-immersive per-

spective visualization (onto a 2D screen), continu-

ously updated by considering the tracked user’s ac-

tual pose (in this paper referred to as point-of-view,

POV), could be a valid substitute for immersive VR.

Moreover, we aim to analyze the differences between

non-immersive fixed (i.e., without tracking the users’

head pose) perspective and orthographic visualization

(PERSP and ORTHO, respectively). The following

hypotheses are formulated:

• H1. The non-immersive tracked perspective visu-

alization (POV modality) is the best approxima-

tion of immersive VR, thus showing (a) a higher

sense of presence, (b) better usability, and (c) a

lower cognitive load compared to PERSP and OR-

THO modalities.

• H2. The non-immersive perspective (PERSP

modality) and orthographic visualization (OR-

THO modality) provide comparable (a) sense of

presence, (b) usability, and (c) cognitive load.

• H3. As a control hypothesis, the experimen-

tal setup confirms the finding of previous work

(Pallavicini et al., 2019; Boyd, 1997; Wenk et al.,

2022) that demonstrated the advantages of immer-

sive VR with respect to non-immersive visuali-

sation modalities, particularly in terms of (a) a

higher sense of presence, (b) better usability, and

(c) reduced cognitive load.

To compare the different visualization modalities,

we consider a cognitive-motor dual-task: subjects are

asked to count specific objects appearing in the virtual

scene (cognitive task) while reaching specific targets

(motor task). This approach is widely used in the lit-

erature to evaluate the cognitive load in different con-

ditions (Baumeister et al., 2017; Souchet et al., 2022).

2 RELATED WORK

Serious games and exergames are interactive

computer-based games designed for purposes beyond

entertainment, such as education, skill enhance-

ment, and behaviour change. Both are examples

of gamification, which applies game elements in

non-game contexts (Landers, 2014). Gamification is

widely used in fields like education, healthcare, and

wellness, often integrating VR to enhance immersion

and user experience through realistic, ecological 3D

environments (Carlier et al., 2020).

According to (Bassano et al., 2022), VR se-

tups can be classified into non-immersive, semi-

immersive, and immersive categories. Non-

immersive systems use screens that do not occlude the

user’s field of view (FOV), allowing a persistent sense

of the real world. Semi-immersive systems, such as driving simulators based on multi-monitor configurations or CAVEs, provide partial virtual environments and do not block external sensory stimuli, whereas immersive systems, e.g., the Meta Quest 3¹ or HTC Vive², fully immerse the user in the virtual environment.

Despite the benefits of immersive VR in enhanc-

ing presence, most exergames and serious games still

rely on non-immersive setups due to their accessi-

bility, affordability, and portability (Bassano et al.,

2022). Even with advancements in affordable HMDs

with high performance, non-immersive VR remains

the most widely used visualization technology, followed by immersive VR. Recent findings in (Sudár and Csapó, 2024) also show that cognitive load is comparable between 2D tasks using standard UIs and non-immersive 3D environments.

In their systematic review and meta-analysis, (Ren

et al., 2024) examined the impact of VR-based reha-

bilitation on patients with mild cognitive impairment

or dementia, highlighting the benefits of immersive

over non-immersive VR. Immersive VR showed sig-

nificant improvements in cognition and motor func-

tion compared to non-immersive setups, due to the

transfer of cognitive skills from the game to reality,

enhancing real-world performance.

In (Wenk et al., 2019), the impact of visualization technologies on movement quality and cognitive load was assessed by comparing (i) an immersive VR HMD, (ii) an Augmented Reality (AR) HMD, and (iii) a computer screen. Participants performed goal-oriented reaching motions (measured with an HTC Vive controller) while completing a concurrent counting task to assess cognitive load. Compared to screen displays, VR improved motor performance, likely because of the more direct mapping between virtual representation and physical movement. On the other hand, the display mode had no noticeable impact on cognitive load.

The same authors repeated the experiment with

twenty elderly participants and five subacute brain-

injured patients (Wenk et al., 2022) to evaluate the ef-

fects of different visualization technologies on move-

ment quality and cognitive load. Results for 3D reach-

ing movements mirrored the first study, but HMDs

appeared to reduce cognitive load. Participants also

rated HMDs as highly usable, supporting their use in

future VR-based rehabilitation.

¹ https://www.meta.com/it/quest/quest-3
² https://www.vive.com/eu/

HUCAPP 2025 - 9th International Conference on Human Computer Interaction Theory and Applications

These findings were further confirmed in a subsequent study (Wenk et al., 2023), where twenty

healthy participants performed the same task under

the same conditions and completed questionnaires to

assess cognitive load, motivation, usability, and em-

bodiment. While cognitive load remained unaffected

across technologies, VR was rated as more motivating

and usable than AR and 2D screens. Additionally, VR

and AR achieved higher levels of embodiment com-

pared to the 2D screen.

These studies align with our control hypothesis H3, suggesting that immersive HMDs are better suited than conventional 2D screens for training 3D movements in VR-based therapy, and that they also have a positive effect on the system’s usability and cognitive load. However, as highlighted in the introduction, HMDs are not always a feasible option. This motivates the need to further evaluate the effects on users of different non-immersive visualization modalities. Notably, to the best of our knowledge, no other studies have specifically addressed the impact of different non-immersive visualization modalities on user experience in virtual environments.

3 EXPERIMENT

3.1 Participants

Participants were recruited voluntarily from the Uni-

versity of Genoa for a within-subject study evaluat-

ing the effects of four visualization modalities on par-

ticipants’ cognitive load, sense of presence, and us-

ability. Twenty-four healthy participants (8 female,

16 male), aged 20 to 56 years (26.71 ± 8.10), with no known motor or cognitive disorders and no color blindness, took part. Most had little to no prior experience

with HMDs, and no compensation was provided. The

study complied with the Declaration of Helsinki.

3.2 Visualization Modalities

In this study, we evaluated four visualization modali-

ties (see Figure 1):

• VR: immersive VR using HMD;

• POV: non-immersive VR on 2D screen with

tracked perspective camera;

• PERSP: non-immersive VR on 2D screen with

fixed perspective camera;

• ORTHO: non-immersive VR on 2D screen with

fixed orthographic camera.

In the VR condition, participants used a fully immersive HMD: the Meta Quest 2³. Hand movements were tracked using the XR Hands⁴ Unity package, while the body animation was handled with the Final IK⁵ plugin.

³ https://www.meta.com/it/quest/products/quest-2

Figure 1: The four visualization modalities considered in the cognitive-motor dual-task.

In the POV condition, participants experienced the virtual environment (VE) on a 47” LG 47LM615S screen (1920x1080 resolution) placed 155 cm away, while seated on a fixed chair 112 cm from the screen center (consistent across all non-immersive conditions). Between the participant and the screen, a ZED Mini depth camera⁶ was positioned 35 cm in front of the monitor and tracked the user’s 3D pose, including 38 body joints. In this way, the avatar’s arms and the virtual rendering camera could move in sync with the user’s arm and head movements, as tracked by the ZED device. A virtual perspective camera with a vertical FOV of 75° was used in this condition, and its 6 degrees of freedom (6DOF) pose was updated according to the tracked 6DOF pose of the user’s head.
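As a side note on the camera parameters above, the horizontal FOV implied by a vertical FOV can be derived from the aspect ratio. The sketch below is only illustrative: it assumes the rendered viewport fills the 1920x1080 screen, which the text does not state explicitly, and the function name is hypothetical.

```python
import math


def horizontal_fov_deg(vertical_fov_deg: float, aspect_ratio: float) -> float:
    """Horizontal FOV of a symmetric perspective camera.

    Uses tan(h/2) = aspect_ratio * tan(v/2), where aspect_ratio = width / height.
    """
    half_vertical = math.radians(vertical_fov_deg) / 2.0
    half_horizontal = math.atan(math.tan(half_vertical) * aspect_ratio)
    return math.degrees(2.0 * half_horizontal)


# Assumption: full-screen rendering at 1920x1080 with the 75 degree vertical FOV.
hfov = horizontal_fov_deg(75.0, 1920 / 1080)
print(f"horizontal FOV: {hfov:.1f} degrees")
```

With a square viewport (aspect ratio 1) the function returns the vertical FOV unchanged, which is a quick sanity check of the relation.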

In the PERSP condition, the same screen and ZED setup were used, but the virtual perspective camera was fixed with a 7° downward tilt to ensure all shelves were in view. Only arm movements were tracked and replicated in the virtual scene.

In the ORTHO condition, participants used the same screen and setup, but with a fixed orthographic camera whose viewport size was set so that all the shelves were in the field of view. Again, only arm movements were tracked and replicated.

In all conditions, a gender-neutral, light-skinned full-body avatar downloaded from Adobe Mixamo⁷ was used to match participants’ demographics. The VE featured light grey walls and a wooden floor to minimize distractions.

⁴ https://docs.unity3d.com/Packages/com.unity.xr.hands@1.5
⁵ http://root-motion.com/#final-ik
⁶ https://store.stereolabs.com/en-it/products/zed-mini
⁷ https://www.mixamo.com


Figure 2: The shelving unit (left) and the types of cats

and flowers shown during the cognitive-motor task (right).

The white spheres (not shown during the experiment) on

the shelving highlight the position where objects were

spawned.

3.3 Experimental Setup

The experiment was conducted in a room with con-

trollable artificial lighting. The ZED Mini depth cam-

era was used across all visualization modalities to

record upper body movement data (head, trunk, and

arms) consistently.

The VE was developed using the Unity 3D game

engine (version 2022.3.23f1, Unity Technologies, USA). The ZED plugin version 4.1 for Unity 3D handled motion tracking. The avatar was animated using the IMMERSE framework (Viola et al., 2024), which requires a brief calibration phase at the start of the VR session to adapt the avatar to participants’ body proportions.

The workstation ran Windows 11 Home 64-bit (Microsoft, USA) and was equipped with an AMD Ryzen 9 5900X processor (12 cores/24 threads) and an NVIDIA RTX 3080 Ti graphics card.

3.4 The Dual Cognitive-Motor Task

Participants performed the same dual-task across dif-

ferent visualization modalities. A shelving unit with

nine fixed positions, arranged in a 3x3 square layout

at equal depth, was placed centrally in front of them

(see Figure 2). To exclude depth estimation cues,

all objects were positioned equidistant from the user.

Objects included three types of cats (white, black,

tabby) and three types of flowers (pink, blue, yellow),

all of the same size (see Figure 2).

For the motor task, participants were instructed to

reach for pink flowers using their bare hands, tracked

and displayed in the virtual environment. Reaching

could be executed with either hand without specific

arm positioning instructions, though most rested their

arms on their laps. Successfully reaching an item

made it disappear with a pop sound for auditory feed-

back. For the cognitive task, participants counted

aloud the cats appearing on the shelves.

The items were presented in four blocks of increasing difficulty: Block 1 had one item per trial, Block 2 had two, Block 3 had three, and Block 4 had four. Each block consisted of 7 trials (28 trials per modality), including a final randomized trial added to vary the cat count. Each trial lasted 5 seconds or ended earlier if all items were reached. Trials within each block were randomized, while block order remained sequential to ensure progressive difficulty.
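The block structure described above can be sketched as a small schedule generator. This is an illustrative reconstruction, not the authors' Unity code: the function name, the dictionary fields, and the seeding are assumptions, and the special handling of the final randomized trial is not modeled.

```python
import random


def build_schedule(n_blocks=4, trials_per_block=7, max_duration_s=5.0, seed=None):
    """Return trials in presentation order.

    Blocks stay sequential (1, 2, 3, 4) so that difficulty increases,
    while the trials inside each block are shuffled.
    """
    rng = random.Random(seed)
    schedule = []
    for block in range(1, n_blocks + 1):
        trials = [
            {
                "block": block,
                "trial_id": trial_id,
                "n_items": block,  # Block k shows k items per trial
                "max_duration_s": max_duration_s,
            }
            for trial_id in range(trials_per_block)
        ]
        rng.shuffle(trials)  # randomize within the block only
        schedule.extend(trials)
    return schedule


schedule = build_schedule(seed=0)
print(len(schedule), "trials per modality")
```

Shuffling inside each block while appending blocks in order keeps the progressive difficulty intact, which is the property the procedure relies on.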

3.5 Procedure

A demo video of the experiment is available online⁸.

A researcher was present throughout the experi-

ment. After a briefing on the task objectives, partic-

ipants completed a brief training session to confirm

their understanding. They then performed the dual

cognitive-motor task in VR, followed by the three

non-immersive modalities (POV, PERSP, ORTHO) in

a randomized order. The six possible modality orders

were evenly distributed among participants (four per

order).
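The counterbalancing described above (three non-immersive modalities, six possible orders, four participants per order, always after VR) can be illustrated as follows. The round-robin assignment is an assumption made for the sketch; the text only states that the orders were evenly distributed.

```python
from collections import Counter
from itertools import permutations

NON_IMMERSIVE = ("POV", "PERSP", "ORTHO")


def assign_orders(n_participants):
    """Assign each participant a condition order: VR first, then one of the
    3! = 6 permutations of the non-immersive modalities (round-robin)."""
    orders = list(permutations(NON_IMMERSIVE))
    return [("VR",) + orders[p % len(orders)] for p in range(n_participants)]


assignments = assign_orders(24)
counts = Counter(order[1:] for order in assignments)
for order, n in counts.items():
    print(" -> ".join(order), ":", n, "participants")
```

With 24 participants and six orders, each order is used exactly four times, matching the distribution reported in the procedure.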

At the start of each condition, participants were in-

formed of the current visualization modality. Before

the VR task, a calibration phase ensured the shelving

unit was positioned at shoulder height and equidistant

from the user by having participants hold their arms

up at shoulder level, palms down.

After VR, the system automatically calibrated the

avatar’s position to align with the shelving and cam-

era for the subsequent non-immersive modality. At

the end of each condition, participants completed self-

assessment questionnaires on sense of presence, us-

ability, and perceived cognitive load. Finally, partici-

pants ranked the visualization modalities in a tier list

based on their preference.

3.6 Instruments

To assess participants’ sense of presence, we used the

Igroup Presence Questionnaire (IPQ) (Schubert et al.,

2001), a 14-question tool on a 7-point Likert scale

(0–6) measuring three key aspects:

• Spatial Presence (SP), the sense of being physi-

cally present in the virtual environment;

• Involvement (INV), measuring attention and en-

gagement with the virtual environment;

• Experienced Realism (REAL), assessing the per-

ceived realism of the virtual environment.

Additionally, one item evaluates the general sense

of “being there” (PRES), which encompasses spatial

presence, emotional engagement, and cognitive in-

volvement, along with the illusion of ownership over

8

https://youtu.be/k1AMaxmxgAM

HUCAPP 2025 - 9th International Conference on Human Computer Interaction Theory and Applications

564

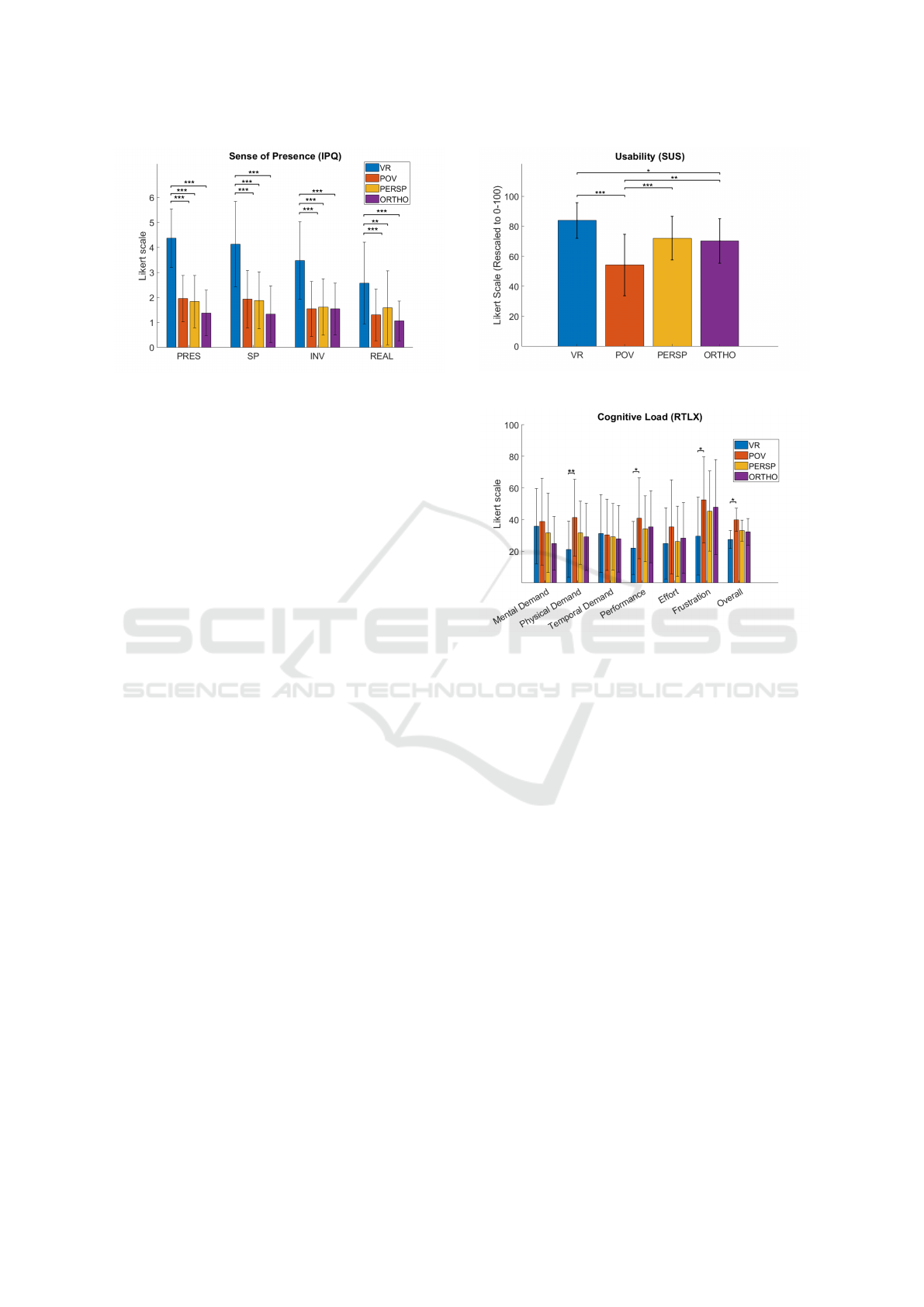

Figure 3: The IPQ results are reported for the three sub-

scales: spatial presence (SP), involvement (INV), and ex-

perienced realism (REAL). Lastly, PRES refers to the addi-

tional item for the general sense of “being there”.

a virtual body (Slater et al., 2022; Hartmann et al.,

2015).

To evaluate usability, we employed the System Usability Scale (SUS) (Brooke et al., 1996), a 10-item questionnaire rated on a 5-point Likert scale. Raw scores are rescaled to a 0–100 range, where 68 is the average score:

• Below 51 indicates serious usability issues;

• Scores around 68 suggest room for improvement;

• Above 80.3 signifies excellent usability.

These thresholds result from a statistical analysis of three different datasets of SUS questionnaires, encompassing nearly 450 studies.
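For reference, the standard SUS scoring rule can be sketched as below. The scoring follows the usual convention (odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5); the function names and the wording of the middle band are assumptions, and the text does not describe the authors' own scoring script.

```python
def sus_score(responses):
    """Compute a SUS score (0-100) from ten responses on a 1-5 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten responses, each between 1 and 5")
    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:  # items 1, 3, 5, 7, 9 (positively worded)
            total += response - 1
        else:  # items 2, 4, 6, 8, 10 (negatively worded)
            total += 5 - response
    return total * 2.5


def sus_band(score):
    """Map a score to the bands listed above (middle-band label is illustrative)."""
    if score < 51:
        return "serious usability issues"
    if score > 80.3:
        return "excellent usability"
    return "room for improvement"


print(sus_score([3] * 10))
print(sus_band(83.85), "/", sus_band(54.17))
```

A neutral questionnaire (all answers equal to 3) maps to the midpoint of the scale, which is a convenient check that the odd/even item handling is correct.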

For cognitive load, we combined task perfor-

mance scores with the Raw Task Load Index (RTLX)

(Hart, 2006), a shortened version of the NASA Task

Load Index (Hart and Staveland, 1988). The RTLX

uses six sub-scales to measure mental, physical, and

temporal demand, as well as performance, effort, and

frustration, with responses rated on a 100-point Likert

scale.
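The RTLX overall score is commonly computed as the unweighted mean of the six sub-scale ratings, which is what distinguishes it from the weighted NASA-TLX. A minimal sketch under that assumption follows; the key names and the example ratings are hypothetical.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def rtlx_score(ratings):
    """Unweighted mean of the six sub-scale ratings (each on a 0-100 scale)."""
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing sub-scales: {missing}")
    return sum(ratings[name] for name in SUBSCALES) / len(SUBSCALES)


# Made-up ratings for one participant in one condition.
example = {
    "mental": 60,
    "physical": 30,
    "temporal": 45,
    "performance": 15,
    "effort": 60,
    "frustration": 30,
}
print(rtlx_score(example))
```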

4 RESULTS

Figure 3 shows the results of the IPQ. A one-way repeated measures ANOVA was conducted to examine variations in IPQ subjective reports across the different modalities. The significance threshold was set at α = 0.05, with post-hoc analysis performed where necessary to identify differences between specific conditions. The VR condition demonstrated higher IPQ scores across all considered aspects. In the corresponding figure, asterisks indicate the presence of a statistically significant difference, and their number represents its level: one asterisk for p ≤ 0.05, two for p ≤ 10⁻², and three for p ≤ 10⁻³.

Figure 4: The SUS questionnaire results.

Figure 5: The RTLX questionnaire results.
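To make the statistical test concrete, the F statistic of a one-way repeated measures ANOVA can be computed by partitioning the total sum of squares into condition, subject, and residual terms. The sketch below uses made-up toy data, does not apply any sphericity correction, and leaves out the p-value (which would come from the F distribution with the returned degrees of freedom); it is not the authors' analysis pipeline.

```python
def rm_anova_f(scores):
    """One-way repeated measures ANOVA.

    scores: list of rows, one per subject, each with one value per condition.
    Returns (F, df_conditions, df_error).
    """
    n = len(scores)  # subjects
    k = len(scores[0])  # conditions
    grand_mean = sum(sum(row) for row in scores) / (n * k)
    condition_means = [sum(row[j] for row in scores) / n for j in range(k)]
    subject_means = [sum(row) / k for row in scores]

    ss_conditions = n * sum((m - grand_mean) ** 2 for m in condition_means)
    ss_subjects = k * sum((m - grand_mean) ** 2 for m in subject_means)
    ss_total = sum((x - grand_mean) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_conditions - ss_subjects  # residual variation

    df_conditions = k - 1
    df_error = (n - 1) * (k - 1)
    f_value = (ss_conditions / df_conditions) / (ss_error / df_error)
    return f_value, df_conditions, df_error


# Toy data: 3 subjects x 3 conditions (not taken from the study).
toy = [[1, 2, 3], [2, 4, 3], [3, 3, 6]]
f_value, df1, df2 = rm_anova_f(toy)
print(f"F({df1}, {df2}) = {f_value:.2f}")
```

Removing the between-subject sum of squares from the error term is what makes the within-subject design more sensitive than an independent-groups ANOVA on the same scores.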

ANOVA was also conducted to analyze differences among visualization modalities in the SUS subjective reports. As shown in Figure 4, the VR modality achieved an average usability score of 83.85, indicating excellent usability and ranking first among the four modalities. PERSP and ORTHO, with scores of 72.08 and 70.31 respectively, demonstrated usability slightly above the average score of 68. Lastly, POV fell below this threshold, with a SUS score of 54.17: according to the literature, it is not unusable but does have some usability issues. Asterisks indicate significant differences, following the same convention used for the IPQ scores.

As shown in Figure 5, for cognitive load, the ANOVA revealed statistically significant differences between the VR and POV modalities in the physical demand, performance, and frustration sub-scales individually, as well as in the overall score. Here too, asterisks indicate significant differences that emerged from the post-hoc analysis, following the same convention described previously.

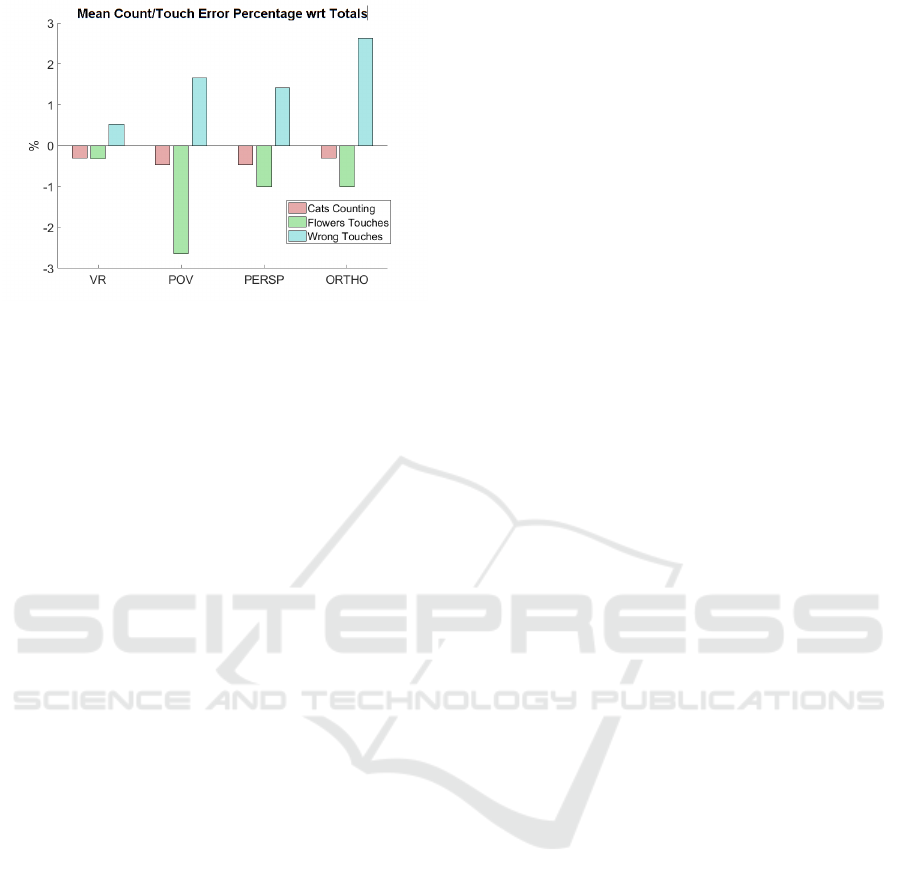

In Figure 6, we present the errors made by participants during the cognitive-motor task experiment. Specifically, we calculate the mean percentage error for the cognitive task (miscounted cats relative to the total shown), the reaching task (untouched target flowers relative to the total shown), and the percentage of wrongly touched objects (cats or non-target flowers).

Figure 6: Mean percentage errors in the cognitive-motor task. In particular, we have: the amount of miscounted cats with respect to the total amount of shown cats (in pink); the mean percentage error for the reaching task, i.e., the number of untouched target flowers with respect to the total amount of target shown flowers (in green); and the mean percentage of wrongly touched objects (objects that should not be touched but were, in blue).

The number of miscounted cats (pink) can theoret-

ically be positive or negative, as users might overesti-

mate or underestimate the count. However, the graph

shows negative values, reflecting a general tendency

to underestimate. The number of untouched target

flowers (green) is inherently negative, indicating fail-

ures to touch required flowers. Lastly, the percentage

of wrongly touched objects (blue) is always positive,

as it measures incorrect touches relative to the total

displayed.
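The sign conventions of the three error measures can be summarized in a short sketch. The function and argument names are hypothetical, the numbers in the example are made up, and the denominator for wrongly touched objects is assumed to be the total number of objects displayed.

```python
def signed_errors(cats_shown, cats_counted, targets_shown, targets_reached,
                  objects_shown, wrong_touches):
    """Percentage errors with the sign conventions described above."""
    return {
        # Positive if cats were over-counted, negative if under-counted.
        "count_error_pct": 100.0 * (cats_counted - cats_shown) / cats_shown,
        # Missed targets are reported as a negative percentage (or zero).
        "missed_targets_pct": -100.0 * (targets_shown - targets_reached) / targets_shown,
        # Wrong touches are reported as a positive percentage (or zero).
        "wrong_touch_pct": 100.0 * wrong_touches / objects_shown,
    }


# Made-up session: 40 cats shown but 38 counted, 19 of 20 targets reached,
# and 1 wrong touch out of 50 displayed objects.
errors = signed_errors(40, 38, 20, 19, 50, 1)
print(errors)
```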

5 DISCUSSION

Among the three non-immersive visualization modal-

ities, the POV condition is, in principle, the most simi-

lar to immersive VR. Indeed, in this modality, the vir-

tual camera pose is continuously updated according

to the tracked user position, as it happens in HMDs.

The PERSP and ORTHO conditions do not update the pose of the virtual camera, and they are often preferred in order to avoid discomfort. The PERSP condition maintains depth cues, such as perspective, but it can generate distortions because the projection plane and the virtual camera parameters differ from those of the observer. For this reason, developers sometimes prefer to avoid these distortions by using the ORTHO condition, in which perspective division is no longer present and depth cues are eliminated.
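The difference between the two fixed projections comes down to the perspective division. The sketch below uses a pinhole model with the camera at the origin looking along +Z; the function names and the unit focal length are illustrative assumptions, not the Unity camera implementation.

```python
def project_perspective(x, y, z, focal_length=1.0):
    """Pinhole projection: image coordinates shrink with depth z (z > 0)."""
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (focal_length * x / z, focal_length * y / z)


def project_orthographic(x, y, z):
    """Orthographic projection: depth is simply dropped."""
    return (x, y)


# Two points with the same lateral offset, one twice as far as the other.
near, far = (1.0, 0.0, 2.0), (1.0, 0.0, 4.0)
print(project_perspective(*near), project_perspective(*far))
print(project_orthographic(*near), project_orthographic(*far))
```

Under perspective the farther point lands closer to the image center, which is the depth cue; under the orthographic camera both points land at the same image position, so that cue disappears.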

Firstly, the results support our control hypothesis H3 about the superiority of the immersive condition over the other modalities in terms of both presence and usability, with significantly higher IPQ and SUS scores. While no significant differences in cognitive load (RTLX) were found between VR and ORTHO or PERSP, immersive VR showed lower cognitive load than POV and achieved the lowest error rates in the motor task (0.5% wrong targets, 0.3% missed flowers), confirming its advantage in motor tasks. These findings align with the previous studies discussed in Section 2. The absence of a significant difference in cognitive load between the immersive condition and the fixed non-immersive conditions might be due to the task itself.

Then, we aimed to understand whether POV visu-

alization is the best approximation of immersive VR.

Our results seem to reject the H1 hypothesis. Indeed,

POV visualization does not provide a better sense of

presence, better usability, or a lower cognitive load.

Regarding presence, the POV condition, like other

non-immersive conditions, shows significant differ-

ences compared to immersive VR, but no significant

differences were found between POV and the other

non-immersive conditions. For usability, the score

was 54.17, significantly lower than all other modal-

ities, which exceeded the usability threshold of 68

mentioned in 3.6. This is reflected in the RTLX re-

sults, which show significant differences from the im-

mersive condition in physical demand, performance,

frustration, and overall experience. Additionally, the

reaching task results indicate more errors in the POV

condition, with 1.6% of wrongly touched targets and

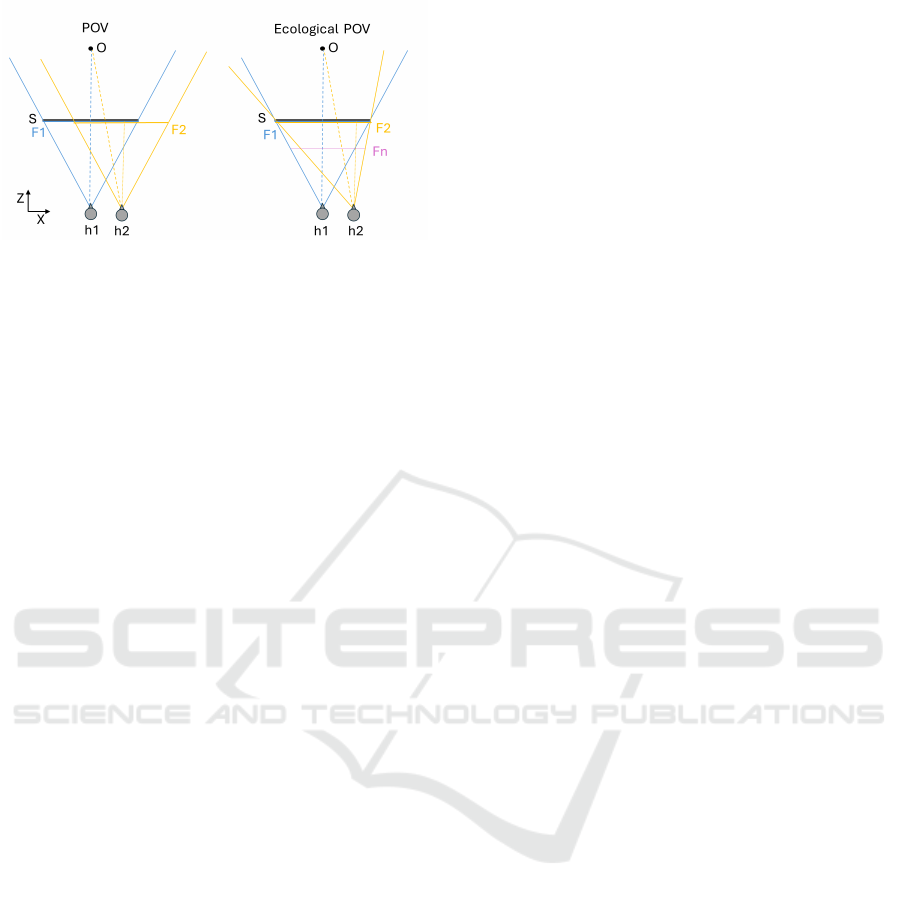

2.5% of missed pink flowers. This usability issue may

be due to the specific implementation of the POV vi-

sualization technique. As shown in Figure 7 (left),

the frustum follows the tracked head position, caus-

ing the projection of a virtual object O to shift left on

the screen during rightward head movement, unlike

real-world perception. An ecological implementation

(Figure 7, right) uses an asymmetric frustum with the

focal plane aligned to the screen, making the projec-

tion shift right, as in the real world. It is worth noting

that, given the setup and the task required of the par-

ticipants, the amount of head rotation observed during

the experiments was quite limited. As a result, their

perception was not significantly different from what

they would have experienced with the ecological im-

plementation. However, a focal plane at a different

position would still cause incorrect motion (see (So-

lari et al., 2013) for the stereoscopic case).


Figure 7: A sketch of the geometry for the POV visualization technique: a view from above (X-Z plane). Two head positions (h₁ and h₂) and a virtual object (O) are considered. The screen (S) and the frustum with the relative focal plane (F₁) are also drawn: specifically, F₁ and dark blue for h₁, and F₂ and orange for h₂ (dashed lines denote the projection rays). (left) The current implementation of the POV: the frustum follows the tracked head. (right) An ecological implementation of the POV (the purple Fₙ shows a focal plane in a different position).
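The two geometries in Figure 7 can be compared with a simplified top-view (X-Z) model. In this sketch the screen lies on the plane Z = 0, the head is at lateral position `head_x` and distance `head_dist` in front of the screen, and the object is at lateral position `obj_x` and depth `obj_depth` behind it. All names are hypothetical, and the head-following model assumes the rendered image is always shown centered on the screen; it is a geometric illustration, not the authors' implementation.

```python
def screen_x_head_following(head_x, obj_x, head_dist, obj_depth):
    """Symmetric frustum that translates with the head.

    The object is projected relative to the moving camera and the resulting
    image is displayed centered on the screen, so the on-screen position
    depends on (obj_x - head_x).
    """
    return head_dist * (obj_x - head_x) / (head_dist + obj_depth)


def screen_x_off_axis(head_x, obj_x, head_dist, obj_depth):
    """Asymmetric (off-axis) frustum with the projection plane on the screen.

    The on-screen position is where the ray from the head to the object
    crosses the physical screen plane.
    """
    t = head_dist / (head_dist + obj_depth)  # ray fraction at the screen
    return head_x + t * (obj_x - head_x)


# Object straight ahead, 1 m behind the screen; head 1 m in front of it.
for head_x in (0.0, 0.1):  # the head moves 10 cm to the right
    print(
        head_x,
        screen_x_head_following(head_x, 0.0, 1.0, 1.0),
        screen_x_off_axis(head_x, 0.0, 1.0, 1.0),
    )
```

In this model a rightward head movement shifts the projection left with the head-following frustum and right with the off-axis frustum, which is the contrast described for the left and right panels of Figure 7.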

Finally, our results confirm that the PERSP and ORTHO visualization modalities are characterized by a comparable sense of presence, usability, and cognitive load (H2 accepted). Looking at the results, it is worth noting that the PERSP modality shows higher (though not significantly so) values of the general presence (PRES) and spatial presence (SP) factors in the IPQ. Moreover, although the percentages of errors in the counting task and in the pink flower reaching task are comparable between the two modalities, the ORTHO visualization shows a slightly higher percentage of wrongly touched objects. These results may point to an added value of perspective cues in 2D visualization.

At the end of the experiments, we also asked the participants to rank the visualization modalities. Their choices yielded the following ranking: 1) immersive VR, 2) PERSP, 3) ORTHO, and finally, 4) POV. This ranking matches the ordering that emerges from the quantitative results.

6 CONCLUSION AND FUTURE WORK

In this paper, we have compared four visualization techniques considering a cognitive-motor dual task. This work is motivated by the fact that in some specific contexts, such as healthcare, immersive visualization is not possible; for this reason, we aimed to provide guidance on non-immersive visualization modalities to developers working in this field.

Results show that immersive VR (HMD) outperforms the non-immersive visualization modalities. Although there are few statistically significant differences between immersive VR and non-immersive methods, immersive VR excelled in cognitive (counting) and motor (reaching) tasks. Furthermore, immersive VR significantly surpassed the non-immersive conditions in terms of users’ sense of presence and usability. Contrary to our expectations, our implementation of a non-immersive visualization that accounts for users’ head movements does not outperform the fixed non-immersive modalities (PERSP and ORTHO). Indeed, the implemented POV technique shows the worst results in terms of usability and cognitive load. Lastly, fixed non-immersive visualization techniques do not show significant differences with respect to the kind of projection (perspective or orthographic). However, the perspective projection is preferred by participants and slightly better in terms of percentage errors.

Our analysis has several limitations. First, the

POV implementation shows discrepancies in projec-

tions compared to the real world. An ecological

POV with an asymmetric frustum, as shown in Fig-

ure 7, should be implemented to reassess H1. Ad-

ditionally, the low error rates in cognitive and mo-

tor tasks suggest that a more complex dual-task could

better highlight differences between modalities. We

also observed qualitative differences in arm trajecto-

ries during the reaching task, warranting further anal-

ysis of potential non-natural behavior. Future work

will incorporate an ecologically valid POV, investi-

gate screen distance effects, and explore how differ-

ent modalities impact movement naturalness. We also

plan to evaluate the impact of these modalities on

users’ embodiment, using the embodiment question-

naire from (Gonzalez-Franco and Peck, 2018).

ACKNOWLEDGEMENTS

This work was supported by the Italian Ministry of

Research, under the complementary actions to the

NRRP “Fit4MedRob - Fit for Medical Robotics”

Grant (# PNC0000007).

REFERENCES

Bassano, C., Chessa, M., and Solari, F. (2022). Visual-

ization and interaction technologies in serious and ex-

ergames for cognitive assessment and training: A sur-

vey on available solutions and their validation. IEEE

Access, 10:104295–104312.

Baumeister, J., Ssin, S. Y., ElSayed, N. A., Dorrian, J., Webb, D. P., Walsh, J. A., Simon, T. M., Irlitti, A., Smith, R. T., Kohler, M., et al. (2017). Cognitive cost of using augmented reality displays. IEEE Transactions on Visualization and Computer Graphics, 23(11):2378–2388.

Boyd, C. (1997). Does immersion make a virtual environ-

ment more usable? In CHI’97 Extended Abstracts

on Human Factors in Computing Systems, pages 325–

326.

Brooke, J. et al. (1996). SUS: A quick and dirty usability scale. Usability Evaluation in Industry, 189(194):4–7.

Carlier, S., Van der Paelt, S., Ongenae, F., De Backere, F.,

and De Turck, F. (2020). Empowering children with

ASD and their parents: design of a serious game for

anxiety and stress reduction. Sensors, 20(4):966.

Ehioghae, M., Montoya, A., Keshav, R., Vippa, T. K.,

Manuk-Hakobyan, H., Hasoon, J., Kaye, A. D., and

Urits, I. (2024). Effectiveness of virtual reality–based

rehabilitation interventions in improving postopera-

tive outcomes for orthopedic surgery patients. Current

Pain and Headache Reports, 28(1):37–45.

Garzotto, F., Gianotti, M., Patti, A., Pentimalli, F., and

Vona, F. (2024). Empowering persons with autism

through cross-reality and conversational agents. IEEE

Transactions on Visualization and Computer Graph-

ics.

Gonzalez-Franco, M. and Peck, T. C. (2018). Avatar

embodiment. towards a standardized questionnaire.

Frontiers in Robotics and AI, 5:74.

Hart, S. G. (2006). NASA-task load index (NASA-TLX); 20 years

later. In Proceedings of the Human Factors and Ergonomics

Society Annual Meeting, volume 50, pages

904–908. Sage Publications, Los Angeles, CA.

Hart, S. G. and Staveland, L. E. (1988). Development of

NASA-TLX (task load index): Results of empirical and

theoretical research. In Advances in Psychology, volume

52, pages 139–183. Elsevier.

Hartmann, T., Wirth, W., Schramm, H., Klimmt, C.,

Vorderer, P., Gysbers, A., Böcking, S., Ravaja, N.,

Laarni, J., Saari, T., et al. (2015). The spatial presence

experience scale (SPES). Journal of Media Psychology.

Landers, R. N. (2014). Developing a theory of gamified

learning: Linking serious games and gamification of

learning. Simulation & Gaming, 45(6):752–768.

Lee, J., Phu, S., Lord, S., and Okubo, Y. (2024). Effects

of immersive virtual reality training on balance, gait

and mobility in older adults: a systematic review and

meta-analysis. Gait & Posture.

Malihi, M., Nguyen, J., Cardy, R. E., Eldon, S., Petta, C.,

and Kushki, A. (2020). Evaluating the safety and

usability of head-mounted virtual reality compared

to monitor-displayed video for children with autism

spectrum disorder. Autism, 24(7):1924–1929.

Pallavicini, F., Pepe, A., and Minissi, M. E. (2019). Gaming

in virtual reality: What changes in terms of usability,

emotional response and sense of presence compared

to non-immersive video games? Simulation & Gam-

ing, 50(2):136–159.

Rao, A. K., Choudhary, G., Negi, R., and Dutt, V. (2023).

Is virtual reality better than desktop-based cognitive

training? A neurobehavioral evaluation of visual pro-

cessing and transfer performance. In Bruder, G.,

Olivier, A., Cunningham, A., Peng, Y. E., Grubert, J.,

and Williams, I., editors, IEEE International Sympo-

sium on Mixed and Augmented Reality Adjunct, IS-

MAR 2023, Sydney, Australia, October 16-20, 2023,

pages 308–314. IEEE.

Ren, Y., Wang, Q., Liu, H., Wang, G., and Lu, A.

(2024). Effects of immersive and non-immersive vir-

tual reality-based rehabilitation training on cognition,

motor function, and daily functioning in patients with

mild cognitive impairment or dementia: A system-

atic review and meta-analysis. Clinical Rehabilita-

tion, 38(3):305–321.

Schubert, T., Friedmann, F., and Regenbrecht, H. (2001).

The experience of presence: Factor analytic insights.

Presence: Teleoperators & Virtual Environments,

10(3):266–281.

Slater, M., Banakou, D., Beacco, A., Gallego, J., Macia-

Varela, F., and Oliva, R. (2022). A separate reality:

An update on place illusion and plausibility in virtual

reality. Frontiers in Virtual Reality, 3:914392.

Solari, F., Chessa, M., Garibotti, M., and Sabatini, S. P.

(2013). Natural perception in dynamic stereo-

scopic augmented reality environments. Displays,

34(2):142–152.

Souchet, A. D., Diallo, M. L., and Lourdeaux, D. (2022).

Cognitive load classification with a stroop task in vir-

tual reality based on physiological data. In Duh, H.

B. L., Williams, I., Grubert, J., Jones, J. A., and

Zheng, J., editors, IEEE International Symposium on

Mixed and Augmented Reality, ISMAR 2022, Singa-

pore, October 17-21, 2022, pages 656–666. IEEE.

Sudár, A. and Csapó, Á. B. (2024). Comparing desktop 3D

virtual reality with web 2.0 interfaces: Identifying key

factors behind enhanced user capabilities. Heliyon.

Viola, E., Martini, M., Solari, F., and Chessa, M. (2024).

Immerse: Immersive environment for representing

self-avatar easily. In 2024 IEEE Gaming, Enter-

tainment, and Media Conference (GEM), pages 1–6.

IEEE.

Wenk, N., Buetler, K. A., Penalver-Andres, J., Müri, R. M.,

and Marchal-Crespo, L. (2022). Naturalistic visual-

ization of reaching movements using head-mounted

displays improves movement quality compared to

conventional computer screens and proves high us-

ability. Journal of NeuroEngineering and Rehabili-

tation, 19(1):137.

Wenk, N., Penalver-Andres, J., Buetler, K. A., Nef, T.,

Müri, R. M., and Marchal-Crespo, L. (2023). Effect

of immersive visualization technologies on cognitive

load, motivation, usability, and embodiment. Virtual

Reality, 27(1):307–331.

Wenk, N., Penalver-Andres, J., Palma, R., Buetler, K. A.,

Müri, R., Nef, T., and Marchal-Crespo, L. (2019).

Reaching in several realities: motor and cognitive

benefits of different visualization technologies. In

2019 IEEE 16th International Conference on Rehabil-

itation Robotics (ICORR), pages 1037–1042. IEEE.

HUCAPP 2025 - 9th International Conference on Human Computer Interaction Theory and Applications
