Usability Evaluation of a Chatbot for Fitness and Health

Recommendations Among Seniors in Assisted Healthcare

William Philipp

1

, Ali G

¨

olge

2

, Andreas Hein

3

and Sebastian Fudickar

1

1

Institute of Medical Informatics, University of Luebeck, Luebeck, Germany

2

ATLAS Elektronik GmbH, Bremen, Germany

3

Carl von Ossietzky University Oldenburg, Oldenburg, Germany

{w.philipp, sebastian.fudickar}@uni-luebeck.de, andreas.hein@uol.de, a.goelge@gmail.com

Keywords:

Senior Healthcare, Assisted Healthcare, Human-Computer Interaction (HCI) in Healthcare, Elderly

Technology Use.

Abstract:

This study explores the acceptance of seniors for a chatbot designed to support in maintaining activity levels

and quality of life in an assisted healthcare setting. Building on findings from the TUMAL study, which de-

veloped a self-assessment tool for physical functioning, a proof-of-concept chatbot was created as an Android

app. The chatbot enables users to view their health data, inquire about activity levels, and receive recom-

mendations based on their results. A study involving 12 seniors (aged 75+) was conducted to evaluate the

chatbot’s usability and the participants’ attitudes toward its recommendations. The System Usability Scale

(SUS) revealed a suboptimal usability score of 66.3, with wide-ranging results indicating varying user ex-

periences. While fitness-related recommendations were positively received, health-related advice prompted

mostly negative feedback. Despite these challenges, the data querying functionality was considered useful,

demonstrating a degree of acceptance among the senior user group. The study suggests that the participants’

technical proficiency may have influenced their overall usability ratings.

1 INTRODUCTION

1.1 Motivation

The demographic shift poses one of the greatest chal-

lenges for industrialized nations. The World Health

Organization (WHO) projects that by 2025, the num-

ber of people over 60 will rise to 1.5 billion (R

¨

ocker,

2012). This aging population will lead to a relevant

increase in the demand for healthcare personnel in

countries like Germany, which may not be met (Wolf

et al., 2017). Currently, 15% of Europe’s population

reports difficulty performing daily tasks due to physi-

cal limitations, increasing the need for care. Chronic

diseases and declining physical abilities are the main

drivers of this demand (R

¨

ocker, 2012). To address

these challenges, the German Federal Ministry of Ed-

ucation and Research has focused on ”Ambient As-

sisted Living” (AAL) systems since 2002 (Wolf et al.,

2017). These systems aim to help seniors main-

tain autonomy in their homes and improve well-being

(Dohr et al., 2010). In the ”Technology-supported

motivation to maintain activity and quality of life”

(TUMAL) study, a self-assessment measurement box

was developed for seniors to track their physical abil-

ities. The tests included the Timed Up and Go (TUG)

test and the 5x Sit-to-Stand (SST) test, both assess-

ing participants’ mobility. The TUG involves stand-

ing, walking three meters, turning around, and sitting

back down, while the SST involves standing and sit-

ting five times consecutively (Fudickar et al., 2020).

Interviews showed that participants wanted immedi-

ate access to their test results (Fudickar et al., 2022).

However, providing real-time feedback requires sig-

nificant personnel resources, which hinders indepen-

dent and regular use. A solution is to deliver results

via mobile devices in the form of a chatbot. Chatbots

are now widely used in healthcare, marketing, and ed-

ucation (Adamopoulou and Moussiades, 2020), with

customer support being a key application to reduce

personnel costs (Adam et al., 2020). A recent re-

view on the use of chatbots among older adults in

healthcare concluded that there is a lack of options

designed specifically for older adults. They find that

adjacent studies are mainly focused on home moni-

toring and cognitive impairments. Furthermore, they

did not identify any studies in this field that were con-

ducted in Germany (Zhang et al., 2024). The chatbot

Philipp, W., Gölge, A., Hein, A. and Fudickar, S.

Usability Evaluation of a Chatbot for Fitness and Health Recommendations Among Seniors in Assisted Healthcare.

DOI: 10.5220/0013139800003911

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 18th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2025) - Volume 2: HEALTHINF, pages 483-490

ISBN: 978-989-758-731-3; ISSN: 2184-4305

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

483

developed in this work will address this need by vi-

sualizing and delivering the physical activity and test

results audiovisually.

1.2 Research Goals

The TUMAL study revealed a clear need for seniors

to receive immediate feedback on their test results and

the current technological advancements in the field

of chatbots make them a suitable alternative for per-

sonal discussion of the results, from a technical stand-

point. However, little is known about if seniors ac-

cept the presentation of assessments results via such

chatbots and if they are suitable for usage. The core

research question aims to clarify this research need:

Do seniors aged 75 and above accept a chatbot de-

signed to display health data and provide person-

alized recommendations? To answer this, two sub-

questions are formed:

1. How do seniors rate the usability and ease of use

of the chatbot?

2. What is their attitude toward the recommenda-

tions provided by the chatbot?

2 METHODS

In order to address the research question, a mobile

chatbot application specifically for seniors is imple-

mented and is evaluated regarding the user acceptance

and usability with representative participants of the

age group.

2.1 Chatbot Application

The chatbot’s main function is to visually display in-

formation about physical activity and measurement

results from the health monitoring system, while also

delivering audio-visual feedback based on the data.

Additionally, it offers personalized recommendations

aimed at improving both the user’s fitness and over-

all health. It serves as a proof-of-concept, exploring

whether seniors over 75 find this type of technology

useful and could see themselves using it. The require-

ments for the chatbot have so far been vaguely formu-

lated, or only the purpose has been derived from the

results of the TUMAL study. To specify the func-

tions, the requirements will be further defined to de-

rive technical objectives. Accordingly, there are two

specific requirements. The first is the verbal inquiry

of the measurement box results/activity level and the

audiovisual transmission of this information. The sec-

ond involves the provision of action recommendations

from the chatbot based on the measurement box re-

sults or the activity level. From these two require-

ments, application scenarios will be defined in the

next sections.

2.1.1 Scenario 1: Querying Information

The first application scenario involves querying infor-

mation regarding physical activity. The query can per-

tain to the measurement box results or the user’s ac-

tivity level. In response to the query, the chatbot dis-

plays a graphic that describes the user’s performance.

The chatbot verbally provides key information as an

assessment. For self-evaluation and motivation, the

user is also shown the average rating. Furthermore,

the current performance is compared with past val-

ues. Additionally, there should be an option to access

information about the TUG and SST tests along with

reference values in an overlay window. The standard

workflow of this use case starts with the user initiat-

ing a conversation with the chatbot. After launching

the chatbot, the user can verbally communicate their

desired query and will receive the described response.

2.1.2 Scenario 2: Making Recommendations

The second application scenario involves communi-

cating recommendations to the user. At the start of the

conversation, the chatbot first asks a question regard-

ing the user’s well-being. If the response is positive,

the chatbot subsequently provides fitness recommen-

dations intended to motivate the user to become more

physically active. However, if the response is nega-

tive, the user is advised to consult a doctor. There-

fore, these recommendations are referred to as health

recommendations. The action recommendations are

only communicated to the user in the event of a dete-

rioration in the measurement box results or the activ-

ity level, or if the user indicates feeling unwell. The

flow of this use case also begins with the user initiat-

ing a conversation with the chatbot. Before the user

can start a query, the chatbot immediately asks about

the user’s well-being right after the conversation be-

gins. Depending on the course of the conversation,

as described above, the action recommendations are

derived from the measurement values and communi-

cated to the user.

2.1.3 Design Guidelines for Seniors

The user group of seniors aged over 75 includes var-

ious factors that must be considered in the design of

the user interface. To create a user-friendly and in-

tuitive interface for this demographic, it is essential

to incorporate design guidelines tailored for seniors.

HEALTHINF 2025 - 18th International Conference on Health Informatics

484

Listing all aspects that were considered goes beyond

the scope of this work. A sample of decisions that

were made for the interface based on the user group

were:

• Minimize the number of elements on the interface

• Use familiar elements from other apps

• Large elements and scalability

• No gesture controls

Figure 1 provides an example of how the design

considerations were factored into the development of

the application.

Figure 1: Example of visual clarity implemented in the user

interface. Left: The audiowave icon changes animation and

colour when the chatbot is speaking. Right: The micro-

phone icon lights up and displays the text ”microphone is

active”.

2.2 Technology Stack

The chatbot was developed for Android due to its

accessibility for developers and wide range of inter-

faces, making it suitable for mobile devices. Once

integrated into a health app, the chatbot could ac-

cess user activity data monitored in real-world scenar-

ios. To meet technical requirements, offline data pro-

cessing was prioritized. AIML (Artificial Intelligence

Markup Language) was chosen to handle user inputs

and generate outputs, as it is commonly used for chat-

bot development in research. For speech recognition

(Speech-to-Text), the open-source API VOSK, based

on the Kaldi toolkit, was selected due to its low mem-

ory usage and easy integration with Android. For

speech synthesis (Text-to-Speech), Android’s built-in

interface is used for simplicity.

2.3 Study Setup

With the chatbot application, a study is conducted

with subjects from the target age group to evaluate

its usability and assess how well the users accept the

chatbot’s recommendations.

2.3.1 Study Group Selection

The recruitment process involved contacting a sub-

group of 36 participants of the TUMAL study who

agreed to be contacted for further studies by phone.

15 participants agreed to participate, of which 12

showed up for the study (see Table 1 for details on the

makeup of the cohort). The participants were sched-

uled for appointments and invited to the university.

Despite the smaller sample size, the methods used are

expected to yield sufficient feedback to evaluate the

chatbot’s usability.

2.3.2 Experimental Setup - Qualitative Phase

The study was conducted in individual sessions, with

40 minutes allocated per participant. The study re-

quired only a mobile device with the Android op-

erating system, on which the application was in-

stalled. This device was provided to participants

and positioned on a phone holder for convenient use.

Both quantitative and qualitative metrics were estab-

lished to measure during the study, with a focus on

answering the research question and achieving the

study’s objectives, particularly verifying the proof-

of-concept. Participants were asked to evaluate not

only the usability but also the general functionality

and characteristics of the chatbot, such as voice clarity

and speed, as well as satisfaction with the graphical

display of information. Additionally, the study aimed

to assess participants’ attitudes toward receiving rec-

ommendations from a chatbot. From a technical per-

spective, additional metrics were defined to evaluate

the chatbot’s quality. The metrics are summarized in

Table 2.

The first phase consisted of a practical user test,

where participants had the opportunity to try out the

chatbot. The usage involved having a conversation

with the chatbot. No specific tasks were given to the

participants, only the context was provided. The con-

versation consisted of two runs. In the first run, par-

ticipants were given the freedom to choose between

the two paths. In simple terms, this means that par-

ticipants could freely respond to the chatbot’s ques-

tion about how they were feeling with either ”Good”

or ”Bad.” In the second run, participants were asked

to choose the other option. The intention behind this

was to show the participants the respective recom-

Usability Evaluation of a Chatbot for Fitness and Health Recommendations Among Seniors in Assisted Healthcare

485

Table 1: Overview of the cohort that participated in the usability study.

Cohort No. Participants Avg. age Min. age Max. age SD (age)

Men 8 82 76 90 4.03

Women 4 80 76 84 2.92

Total 12 81 76 90 3.77

Table 2: Metrics measured in the study.

Metrics

Category Feature

Qualitative

- Statements and questions from the Thinking Aloud method

- Attitude towards recommendations

Quantitative

- Usability score according to the System Usability Scale

- Usability results based on the User Experience Questionnaire

- Satisfaction with the user interface

- Satisfaction with the chatbot

* Voice (speed, tone)

* Speech recognition

* Chatbot responsess

- Satisfaction with the graphical representation of information

- Number of matches between the user’s speech and the system’s understanding

- Number of successful intent matchings

- Error rate of intent matchings

- Error rate of speech recognition

mendations provided by the chatbot. During the us-

age, participants were asked to follow the Thinking-

Aloud method (JØRGENSEN, 1990). During this

phase, a screen recording, including audio, was made

for evaluation purposes. This allowed the tracking of

user interactions and the documentation of statements

according to the Thinking-Aloud method. Addition-

ally, the chatbot application generated a log file in the

background to record the conversation and store data

related to speech recognition.

2.3.3 Experimental Setup - Quantitative

Questionnaire Phase

Following the practical user test, the second phase

involved a questionnaire survey. The questionnaire

was provided to the participants in written form dur-

ing the study. It began with personal information,

such as name, age, gender, and a self-assessment of

the participant’s technical knowledge. The question-

naire is divided into three sections. The first section

contains the User Experience Questionnaire (UEQ).

The UEQ is a questionnaire designed to measure the

usability of applications. It consists of a total of 26

questions that relate to six different metrics (Laugwitz

et al., 2008). Each question presents two opposing at-

tributes, and participants indicate their preference on

seven item Likert scales. The next section covers the

System Usability Scale (SUS). Participants respond

to each statement using a five item Likert scale, rang-

ing from ”Strongly disagree” to ”Strongly agree.” The

statements consist of five positive and five negative

ones. The total score, calculated using a predefined

formula, ranges from 0 to 100 and reflects the user’s

perceived usability. A score of 68 or higher is consid-

ered indicative of good usability (Devy et al., 2017).

The final section consists of six questions regarding

the satisfaction with qualitative attributes, as listed in

Table 2. Participants again had the option to express

their satisfaction on a five-item Likert scale ranging

from ”Not satisfied at all” to ”Very satisfied.” Addi-

tionally, participants were asked about their prefer-

ence for a voice, with options including male, female,

or no preference. Finally, participants were asked to

assess how well they believed they managed the oper-

ation of the system.

2.3.4 Experimental Setup - Qualitative

Interview Phase

At the end of the study, a short interview was con-

ducted with the participants. The aim of this con-

versation was to understand the participants’ attitudes

towards the chatbot’s health and fitness recommenda-

tions. This referred not to the content of the recom-

mendations, but to the general acceptance of a techni-

cal recommendation system for health in the form of

a chatbot. Additionally, participants’ overall opinions

about the chatbot were gathered.

HEALTHINF 2025 - 18th International Conference on Health Informatics

486

3 RESULTS

The results of the conducted study are summarized

in this section. During data analysis, the audio

recordings from the practical user test were first tran-

scribed. Evaluations and questions expressed during

the Thinking-Aloud method were recorded in an Ex-

cel sheet for analysis. Based on this data, difficul-

ties encountered during the use of the system were

identified. The SUS score and UEQ result were cal-

culated to quantitatively assess usability. Key met-

rics included the mean and standard deviation of both

results. The data analysis focused on three key as-

pects. The first aspect was the measurement of us-

ability, which was derived according to the meth-

ods presented in the previous section. The quanti-

tative evaluations from the SUS score and UEQ re-

sult were supported by qualitative insights gathered

through the Thinking-Aloud method. The second as-

pect was the participants’ attitude towards the chat-

bot’s recommendation feature. Interview transcripts

were used for this evaluation. Finally, the third aspect

focused on analyzing the speech recognition technol-

ogy. The log files were compared with the transcrip-

tions to determine the reliability of the speech recog-

nition. It was also important to analyze how many

words the participants used per input to understand

their usage behavior.

3.1 Quantitative Evaluation

First, an overview of the qualitative results is pre-

sented in Table 3. The cohort rated their own technical

knowledge on a five-item Likert scale ranging from

”Very low” to ”Very high,” with an average score of

4 (=Good). The participants’ responses to the ques-

tion ”Did you manage to use the system?” also re-

sulted in an average score of 4.08 (=Good) on a five-

point scale. The cohort’s satisfaction averaged 4.18

(=Good). Of the twelve participants, four indicated

that they would prefer a female voice. The remaining

participants stated that they had no preference. Mea-

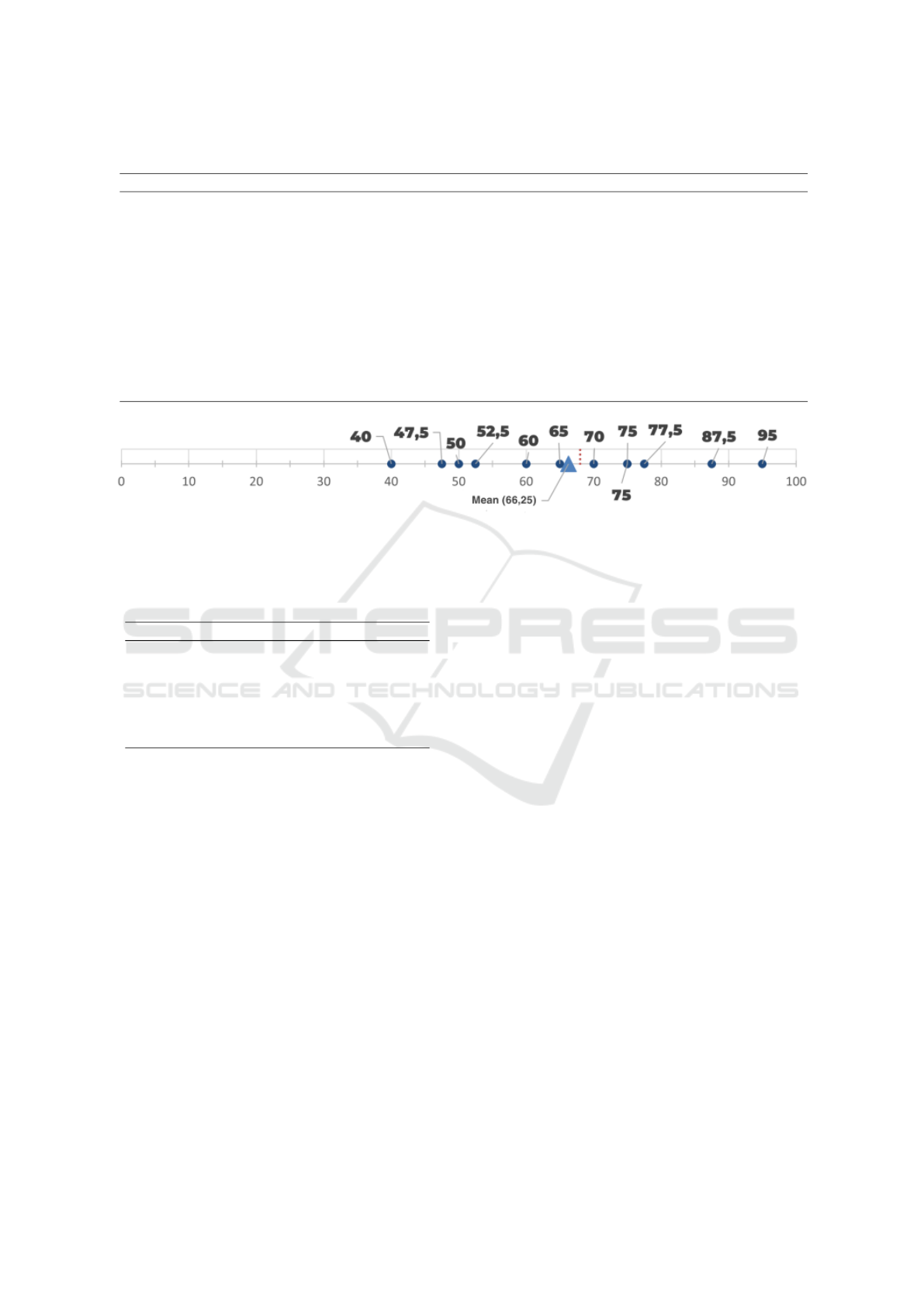

sured by the System Usability Scale (SUS), the us-

ability resulted in an average score of 66.3. Accord-

ing to SUS, a score of 68 or higher indicates good

usability. The score determined here is slightly below

this threshold, which suggests poor usability. Figure

2 illustrates the results in a box plot. Of the twelve

participants, six scored above the threshold of 68,

while the remaining six scored below. A wide dis-

persion is noticeable. The standard deviation is 16.8.

The results from the User Experience Questionnaire

(UEQ), summarised in Table 4, are as follows: The

outcome was slightly positive overall. The highest

score was achieved in the attractiveness of the app,

with a mean value of 1.36, while the lowest score

was for efficiency, with a mean value of 1.04. The

maximum standard deviation was observed for attrac-

tiveness and stimulation, both with a value of 1.48.

The minimum standard deviation was recorded for ef-

ficiency and dependability, both with a value of 0.67.

Except for efficiency (which was below average), all

other metrics scored above average compared to other

products within their benchmark distribution.

3.2 Qualitative Evaluation

3.2.1 Thinking Aloud Method

The following difficulties in operating the chatbot

were determined using the Thinking-Aloud method.

It should be noted that this methodology, when an-

alyzed both quantitatively and qualitatively, did not

yield significant results. The reason for this, accord-

ing to observations, was the overwhelm experienced

by many participants while using the chatbot. Conse-

quently, most of the insights gained from this method

consist of questions about the chatbot’s usage, which

were asked during the practical portion. The follow-

ing list contains an excerpt of common problems the

users experience and is ordered by frequency of oc-

currence. Each difficulty is accompanied by an exam-

ple from the transcription of the audio recordings:

• Microphone issues: Participants either forgot

or were unsure about pressing the microphone

icon before speaking to the chatbot to activate it

(”Should I have pressed it again first?”). This led

some participants to initially think that the chatbot

had not understood them (”It didn’t understand,

did it?”).

• Touchscreen operation issues: Some partici-

pants had trouble using the touchscreen (”Press-

ing here isn’t working so well.”). Several clicks

were not recognized, which led to further issues.

For instance, when trying to activate the micro-

phone, it wasn’t clear to participants that their

click hadn’t been recognized, and they spoke into

the microphone anyway. In this context, it’s worth

noting that visual indicators do appear when the

microphone is activated.

• Confusion between microphone and info but-

tons: Despite clicking on the info button and an

overlay window appearing, participants continued

speaking as if the microphone was active (”(Click

on Info button) - What is my activity level?”). Fur-

thermore, participants were unsure how to interact

with the information window (”Where do I need

to press here?”). In one case, the information

Usability Evaluation of a Chatbot for Fitness and Health Recommendations Among Seniors in Assisted Healthcare

487

Table 3: Overview of quantitative results. The participants are sorted by SUS score, descending.

# Participant Gender Age Tech. Knowledge SUS UX Rating Avg. Satisfaction Pref. Voice

1 P26 M 77 5 95.0 5 4 None

2 P38 M 83 4 87.5 5 4.5 Female

3 P36 F 84 1 77.5 4 4.5 Female

4 P4 M 90 5 75.0 4 4.5 None

5 P9 M 83 3 75.0 4 3.83 None

6 P8 F 76 4 70.0 5 4 None

7 P19 M 83 2 65.0 4 4 None

8 P31 M 80 1 60.0 3 3.83 None

9 P16 M 76 2 52.5 4 4 None

10 P20 F 79 2 50.0 4 4 Female

11 P40 M 82 4 47.5 3 3.5 Female

12 P34 M 80 2 40.0 3 3.83 None

Figure 2: SUS scores plotted along a line. The cutoff point for good usability is marked in red.

Table 4: UEQ Results (Tabular). AA and BA denote above

and below average results when compared to the bench-

mark, respectively.

Measure Mean SD Benchmark

Attractiveness 1.36 1.48 AA

Perspicuity 1.33 0.90 AA

Efficiency 1.04 0.67 BA

Dependability 1.15 0.67 AA

Stimulation 1.24 1.48 AA

Novelty 1.08 1.38 AA

provided about the SST and TUG tests was inter-

preted by a participant as an instruction to per-

form the tests (”What should I do now? Stand up

and do the exercise...”). The confusion was not

limited to buttons. One participant, for example,

mistook a speech bubble for a button or failed to

realize that they had already clicked a button, and

the subsequent speech bubble was displaying the

result of that input (”What should I press now (...)

what should I press now?”).

3.2.2 Interviews

The structured interview protocols provided insights

into how participants viewed the fact that a chatbot

gives health and fitness recommendations. The evalu-

ation of the protocols led to the following findings:

• Eight participants found the chatbot’s fitness rec-

ommendations to motivate more physical activity

as useful.

• Only five participants expressed a positive attitude

toward the chatbot’s health recommendations and

considered them unproblematic, noting that se-

niors regularly visit a doctor. However, these rec-

ommendations should be accepted with caution.

• Counterarguments included:

– Not carrying a smartphone regularly.

– A negative attitude toward technology in gen-

eral.

– Receiving recommendations based only on ac-

tivity data was not viewed positively. The chat-

bot should collect more personal health data.

– Regular visits to the doctor made the chatbot’s

health recommendations seem unnecessary.

– Health recommendations were seen as too ex-

treme by some participants.

• There was consensus that the chatbot is a good

idea for querying results after using the measure-

ment box.

• Suggestions for improvement:

– More dialog options.

– The chatbot should provide information on why

increased activity can positively impact partici-

pants and offer general health information.

– The user interface (UI) should be better adapted

for non-smartphone users using larger displays.

HEALTHINF 2025 - 18th International Conference on Health Informatics

488

3.3 Evaluation of Speech Recognition

The following results emerged from the evaluation of

the recordings and their comparison with the log files.

Over the course of the practical part of the study, the

twelve participants made a total of 127 inputs, com-

prising 694 recognized words. The average length of

these inputs was five words. The Speech-to-Text API,

VOSK, correctly recognized 75 out of the 127 inputs

without errors (equaling 59.06% accuracy). A single

incorrectly recognized word was counted as an error,

with 97 out of the total 694 words being incorrectly

recognized, resulting in a 13.98% error rate. A total

of 531 words were recognized with a confidence level

of 100%. The average confidence was 0.923, with a

minimum value of 0.215. Despite low confidence, 65

words were correctly recognized.

4 DISCUSSION

4.1 Acceptance of Recommendations

Regarding the participants’ attitudes toward the chat-

bot’s recommendations, there was no clear consen-

sus. Based on the findings, fitness recommendations

aimed at motivating more physical activity were gen-

erally viewed more positively than health-related rec-

ommendations. This attitude could be attributed to

a negative stance toward technology. Without a cer-

tain level of acceptance, it seems that trust in the

technology is lacking, which makes the health-related

recommendations from a chatbot seem irrelevant, es-

pecially for such a critical aspect of seniors’ lives.

Furthermore, many seniors mentioned that they are

regularly under medical supervision and thus per-

ceived these health recommendations as unnecessary

from the start. One participant explained their neg-

ative stance based on observations of their social

circle. They categorized seniors into two groups:

those who visit the doctor regularly and those who

avoid confronting their potentially poor health status,

which is why they don’t seek medical advice as often.

This hesitation was also reflected in the interviews,

where poor test results were sometimes taken person-

ally. Participants emphasized that recommendations

should be presented cautiously, as they could lead to

panic among users.

4.2 Usability

Despite the low SUS score, the participants’ individ-

ual evaluation reveals a differentiated picture, which

was further highlighted through the interpretative ap-

proach presented. This is reinforced by the findings

from the Thinking-Aloud method. It became evident

that using the chatbot was associated with a high un-

certainty for some participants, as difficulties during

usage surfaced through frequent questions. Another

example of uncertainty relates to the confusion be-

tween buttons. Despite the stark visual differences

between two buttons, they were still confused. The

results of the UEQ further underscore these usabil-

ity difficulties. No significant correlation between

the SUS score and other quantitative metric has been

found. This is particularly interesting for the subjec-

tive metric of ”Technical Knowledge”, where partici-

pants where asked to rate their own technical knowl-

edge. For instance, participant P40 rated the chatbot

with a score of only 48, even though they assessed

their technical knowledge as ”good”. Conversely, an-

other outlier can be seen in Figure 2, challenging

this assumption. Participant P36 rated their techni-

cal knowledge as ”low,” yet still awarded a SUS score

of 78.

4.3 Speech Recognition

The speech synthesis created using the VOSK API

proved to be a viable mobile solution that operates en-

tirely offline. Despite the use of a small language cor-

pus, an error rate of 14% was achieved. Considering

that the participants were less tech-savvy compared

to younger age groups, this error rate appears accept-

able. However, when using AIML, which is responsi-

ble for output generation, the issue arises that even

a single misrecognized word can cause the intent-

matching to fail. Given the relatively long average

length of inputs, the likelihood that a query results in

no input matching is quite high. To mitigate this is-

sue, the intents were cautiously designed based on the

keyword method, which is why this problem did not

appear in the results. The downside of this approach,

however, is that conversations cannot be made more

detailed. Therefore, a compromise must be found, us-

ing various matching methods to ensure a high likeli-

hood of successful matching while also offering more

diverse dialogue options.

5 CONCLUSIONS

It can be concluded that the general acceptance of

the chatbot is evident. The interviews revealed that

a majority of the participants considered the chatbot

a good idea for checking results after using the mea-

surement box. Based on the results, it was determined

Usability Evaluation of a Chatbot for Fitness and Health Recommendations Among Seniors in Assisted Healthcare

489

that the below-average usability rating may correlate

with the technical proficiency of the participants. To

avoid confusion with input methods, it appears nec-

essary to limit users to one form of input. Currently,

users can input commands either through voice or but-

tons, depending on the context. This switching be-

tween input methods caused confusion for some par-

ticipants, which should be avoided. Supplementary

features, such as displaying extra information regard-

ing the TUG and SST tests, should be fully integrated

into the chatbot. It was found that pop-up windows

caused users to lose track of the interaction flow. Fur-

ther usability improvements can be made according to

the suggested enhancements. These include increas-

ing the range of dialogue options, delving deeper into

personal data queries for formulating recommenda-

tions, and supplementing the recommendations with

explanations that justify them. Regarding the ac-

ceptance of recommendations, it would be better to

limit the chatbot’s advice to fitness-related sugges-

tions. This might be achieved by considering the ”mo-

bility and endurance”, ”strength” and ”balance”, as

main components to be considered in these assess-

ments (Hellmers et al., 2017). Concerning the tech-

nology used, there is a need for improvements due

to the demand for more dialogue options. The cur-

rent AIML (Artificial Intelligence Markup Language)

is error-prone due to its strict rules. As highlighted in

the results, even a single error in the input can cause

the intent-matching to fail. A potential solution would

be to insert an additional module between the speech

recognition and AIML systems. This module could

function to improve the linguistic quality of the in-

puts. By addressing grammatical and spelling errors,

this would reduce input errors and make the intent-

matching more reliable.

ACKNOWLEDGEMENTS

This work was supported by the German Federal Min-

istry of Education and Research (BMBF) under grant

agreement no. 16SV8958 and 1ZZ2007.

REFERENCES

Adam, M., Wessel, M., and Benlian, A. (2020). Ai-based

chatbots in customer service and their effects on user

compliance. Electronic Markets, 31(2):427–445.

Adamopoulou, E. and Moussiades, L. (2020). An Overview

of Chatbot Technology, pages 373–383. Springer In-

ternational Publishing.

Devy, N. P. I. R., Wibirama, S., and Santosa, P. I. (2017).

Evaluating user experience of english learning inter-

face using user experience questionnaire and system

usability scale. In 2017 1st International Conference

on Informatics and Computational Sciences (ICICoS),

pages 101–106.

Dohr, A., Modre-Opsrian, R., Drobics, M., Hayn, D., and

Schreier, G. (2010). The internet of things for ambient

assisted living. In 2010 Seventh International Confer-

ence on Information Technology: New Generations.

IEEE.

Fudickar, S., Hellmers, S., Lau, S., Diekmann, R., Bauer,

J. M., and Hein, A. (2020). Measurement system for

unsupervised standardized assessment of timed “up

& go” and five times sit to stand test in the commu-

nity—a validity study. Sensors, 20(10):2824.

Fudickar, S., Pauls, A., Lau, S., Hellmers, S., Gebel, K.,

Diekmann, R., Bauer, J. M., Hein, A., and Koppelin,

F. (2022). Measurement system for unsupervised stan-

dardized assessments of timed up and go test and 5

times chair rise test in community settings—a usabil-

ity study. Sensors, 22(3):731.

Hellmers, S., Steen, E.-E., Dasenbrock, L., Heinks, A.,

Bauer, J. M., Fudickar, S., and Hein, A. (2017). To-

wards a minimized unsupervised technical assessment

of physical performance in domestic environments. In

Proceedings of the 11th EAI PervasiveHealth Confer-

ence, PervasiveHealth ’17, page 207–216. ACM.

JØRGENSEN, A. H. (1990). Thinking-aloud in user in-

terface design: a method promoting cognitive er-

gonomics. Ergonomics, 33(4):501–507.

Laugwitz, B., Held, T., and Schrepp, M. (2008). Construc-

tion and Evaluation of a User Experience Question-

naire, page 63–76. Springer Berlin Heidelberg.

R

¨

ocker, C. (2012). Smart medical services: A discussion of

state-of-the-art approaches. International Journal of

Machine Learning and Computing, pages 226–230.

Wolf, B., Scholze, C., and Friedrich, P. (2017). Digital-

isierung in der Pflege – Assistenzsysteme f

¨

ur Gesund-

heit und Generationen, pages 113–135. Springer

Fachmedien Wiesbaden.

Zhang, Q., Wong, A. K. C., and Bayuo, J. (2024). The role

of chatbots in enhancing health care for older adults:

A scoping review. Journal of the American Medical

Directors Association, 25(9):105108.

HEALTHINF 2025 - 18th International Conference on Health Informatics

490