Onboarding Customers in Car Sharing Systems: Implementation of Know Your Customer Solutions

Magzhan Kairanbay

a

Array Innovation, Bahrain

Keywords: Know Your Customer, Object Detection, Optical Character Recognition.

Abstract: Car-sharing systems have become an essential part of modern life, with Know Your Customer (KYC) processes being crucial for onboarding users. This research presents a streamlined KYC solution designed to efficiently onboard customers by extracting key information from identity cards and driving licenses. We employ techniques from Computer Vision and Machine Learning, including object detection and Optical Character Recognition (OCR), to facilitate this process. The paper concludes by exploring additional features, such as gender recognition, age prediction, and liveness detection, which can further enhance the KYC system.

1 INTRODUCTION

The proliferation of vehicles has been linked to a wide array of environmental and economic challenges. Increased emissions from automobiles contribute significantly to air quality degradation, which poses serious health risks to the population (Cerovsky and Mindl, 2008). Furthermore, traffic congestion adversely impacts national economic performance (Kagawa et al., 2013). In response to these issues, governments are actively seeking strategies to reduce car usage.

One promising solution is the implementation of car sharing systems. Car sharing can take various forms; while some people share rides to a common destination, others may utilize a vehicle when its owner is not actively using it. This paper focuses on the latter model, in which individuals can access vehicles for personal use through a shared platform.

Car-sharing systems can be categorized into two primary approaches: traditional car-sharing, which operates a fleet of vehicles owned by the company, and peer-to-peer (P2P) car-sharing, where individuals offer their own vehicles for community use. The P2P model typically requires fewer resources from the company, making it an attractive option for both service providers and users.

A critical component of any car-sharing system is the onboarding process, which ensures that customers can legally operate vehicles within the system. Effective onboarding relies on robust customer identification processes that gather essential information, including full name, IC number, driving license number, and photographic verification. These data are vital to establish trust and enable the seamless operation of car-sharing services.

a https://orcid.org/0000-0002-8741-434X

In this paper, we present a comprehensive approach to implementing a Know Your Customer (KYC) solution tailored for car-sharing systems. Our proposed system integrates several machine learning (ML) models to facilitate the following functionalities:

• Face detection

• Face comparison

• Identity card (IC) detection

• Driving license detection

• Verification of the authenticity of ICs and driving licenses

• Extraction of Regions of Interest (ROI)

• Optical Character Recognition (OCR) for extracted ROIs

• User communication with the ML solution

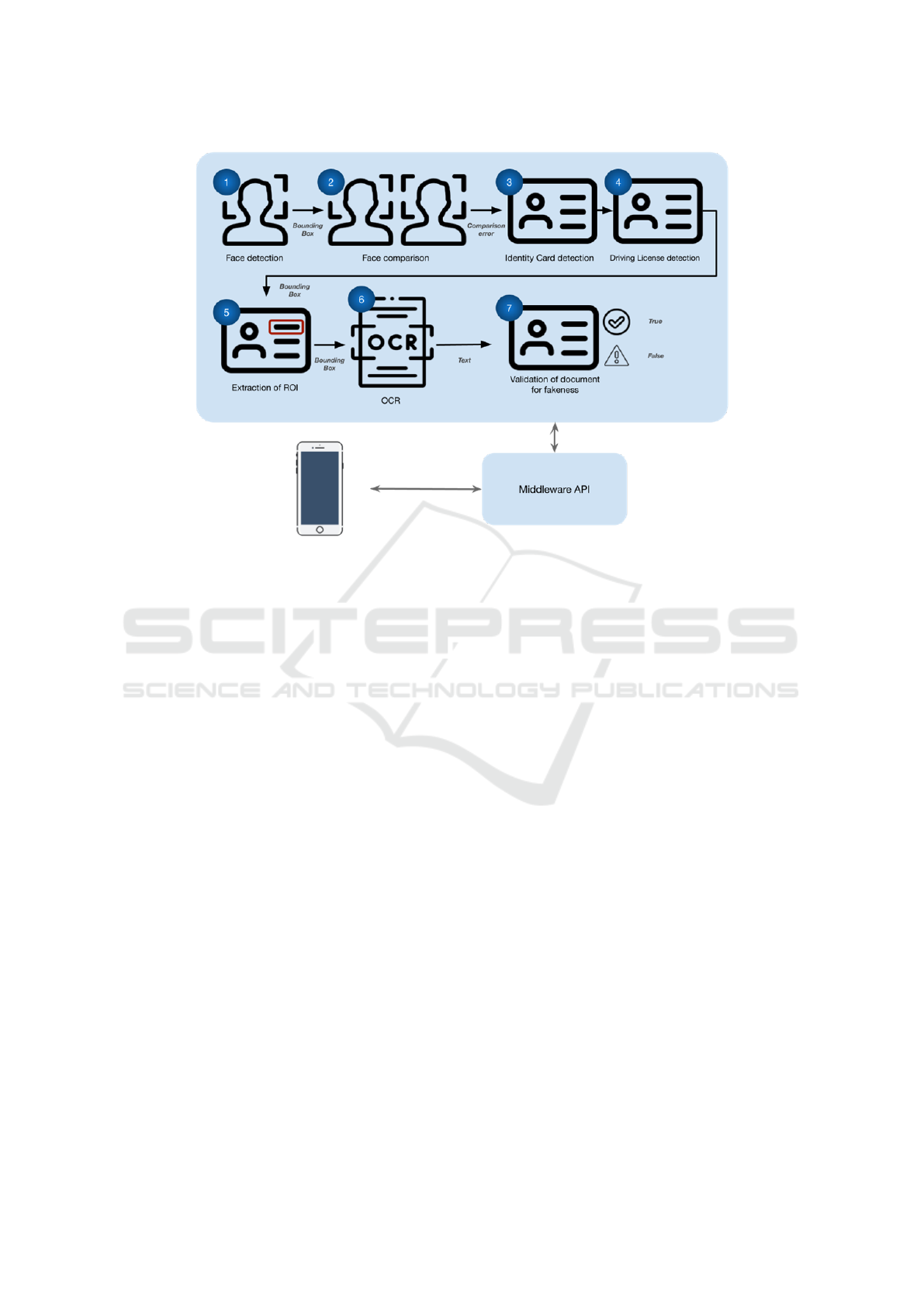

Fig. 1 illustrates the architecture of the proposed system, showcasing its key components and their interactions.

Kairanbay, M.

Onboarding Customers in Car Sharing Systems: Implementation of Know Your Customer Solutions.

DOI: 10.5220/0013145100003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 3: VISAPP, pages 381-391

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


Figure 1: ML-based KYC Solution Architecture for Car Sharing Systems.

2 RELATED WORK

KYC systems are essential for financial institutions, playing a key role in preventing illegal activities such as money laundering, fraud, and terrorist financing. These systems verify client identities, ensure compliance with regulatory requirements, and mitigate financial crimes. Traditionally, KYC processes involved manual steps; however, advancements in technology have led to the development of automated solutions aimed at increasing efficiency and accuracy. This shift has been driven by the need for quicker and more secure customer onboarding while ensuring compliance with rigorous regulatory standards.

Recent developments in KYC technologies include the adoption of biometric authentication (Miller and Smith, 2019), ML (Charoenwong, 2023), and blockchain (Liu and Zhang, 2019). Biometric methods, such as facial recognition (Jain and Gupta, 2017) and fingerprint scanning (Al and Kumar, 2020), have significantly enhanced the accuracy and security of identity verification procedures. ML techniques, including anomaly detection (Bhardwaj and Sharma, 2021) and pattern recognition (Yoon and Kim, 2020), help identify suspicious activities in real time. Additionally, blockchain technology (Zohdy and Thomas, 2018) provides decentralized solutions for secure and transparent sharing of customer data. However, despite these technological advancements, challenges such as regulatory compliance, data privacy concerns, and the financial costs associated with implementing sophisticated systems remain significant issues for financial institutions (Liu and Zhang, 2019).

The increasing importance of KYC systems is not limited to financial institutions alone but spans various industries. While the documents required for verification may vary based on the industry (e.g., finance, healthcare, or real estate), the underlying principles of document validation and data extraction remain the same. This section reviews current KYC solutions, examining their strengths and limitations, and outlines how proposed innovations aim to address these challenges.

Recent studies highlight the application of ML techniques in improving KYC processes. For instance, ACTICO (2023) reports that supervised learning methods, particularly Random Forests, are effective in enhancing the performance of KYC applications. Their findings suggest that adopting these techniques can reduce the number of clarification requests by up to 57%, significantly streamlining the compliance process and improving efficiency.

(Technologies, 2023) employed several advanced techniques to tackle the KYC challenge, including:

• Convolutional Neural Networks (CNNs) utilizing Python and TensorFlow

• OpenCV for computer vision tasks

• Optical Character Recognition (OCR) and Machine Readable Zone (MRZ) packages

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

Their approach involves scanning documents to extract personal information and passport expiration dates. Once extracted, this data is compared with database records to validate the submitted documents. Their model classifies documents as verified, expired, canceled, or mismatched based on validation results. Documents that cannot be confidently categorized are referred for manual review. Over time, the model benefits from manual classifications through automated retraining, integrating new and corrected data. The authors assert that ML-based solutions can significantly expedite the customer onboarding process, achieving document verification in one-tenth the time required for manual processing. Furthermore, their solution boasts high accuracy and efficiency, adhering to standard procedures while minimizing manual intervention. They report a 70% reduction in manual effort for KYC verification, along with a 70% improvement in resource management, allowing organizations to redirect human resources to more value-added tasks.

Conversely, Charoenwong (2023) argues that KYC solutions often do not yield significant benefits for banks. The author contends that the challenges associated with KYC are not merely issues of data science or ML, but rather stem from systemic incentives within the banking industry, and posits that the underlying problems are trivial, yet banks may profit from circumventing these processes.

In the following section, we present our solution for addressing the KYC challenge through deep learning methods combined with OCR techniques. The literature reviewed indicates that customer onboarding processes can be automated and accelerated. This research aims to validate that premise, demonstrating that ML approaches can effectively automate and expedite the onboarding process with minimal effort. We also aim to showcase the applicability of such solutions in real-world scenarios, including car-sharing systems. To substantiate our hypothesis, we conducted experiments using Kazakhstani documents, specifically ICs and driving licenses. These documents were processed using object detection and character recognition models, with the details of our proposed solution outlined below.

3 PROPOSED ML-BASED KYC SYSTEM

The proposed solution encompasses several key components, including:

• Face detection

• Face comparison

• IC detection

• Driving license detection

• Validation of ICs and driving licenses for authenticity

• Extraction of regions of interest (ROI)

• Optical character recognition (OCR) for the extracted regions

In the following subsections, we will explore each component in detail. Our solution is designed for real-world applications, streamlining the customer onboarding process, reducing manual workload, and enhancing customer satisfaction while accommodating a greater number of users.

3.1 Data Collection and Labeling

ML methodologies rely on data-driven approaches, necessitating the collection of data prior to initiating any training processes. Given that we are employing a supervised learning strategy, it is essential for the data to include corresponding ground truth labels. Our focus will primarily be on object detection tasks, such as identifying ICs, driving licenses, and identity numbers, where the goal is to locate specific objects within the provided images. Object detection involves drawing the smallest bounding box that encapsulates the object of interest. For this bounding box, we identify the coordinates of the top-left and bottom-right corners.

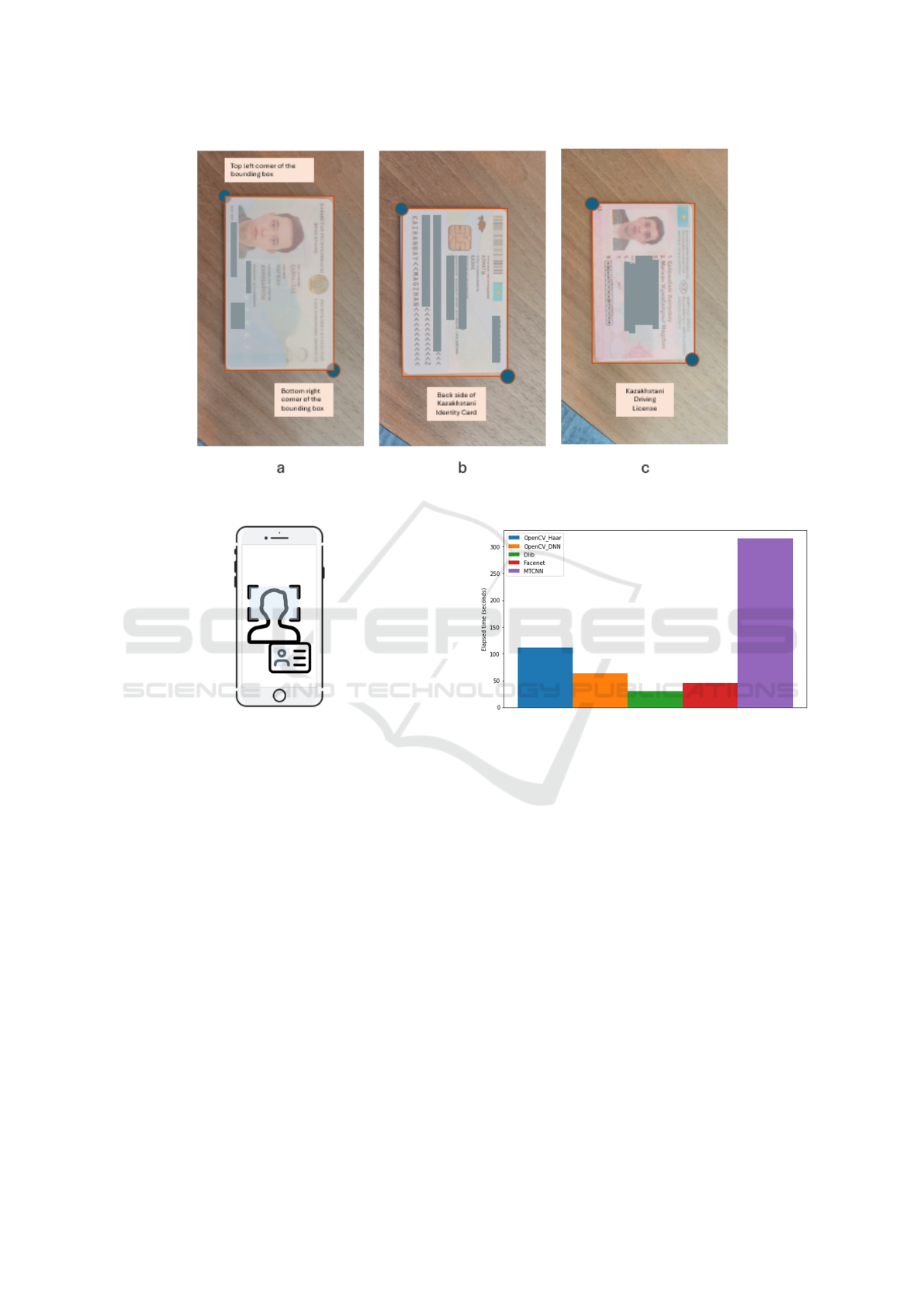

For each object detection task, we have gathered 2,000 images, and for each image, we have manually annotated the bounding boxes. Figure 2 illustrates the data alongside its corresponding bounding box. Each bounding box serves as a ground truth label, defined by the coordinates $(x_{\text{topleft}}, y_{\text{topleft}})$ and $(x_{\text{bottomright}}, y_{\text{bottomright}})$. There are no constraints on the input image sizes, allowing for the use of images with any dimensions.
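A ground-truth label of this kind can be held in a small record. The sketch below is illustrative only: the `BoundingBox` class, its field names, and the `smallest_enclosing_box` helper are assumptions introduced here, not the annotation format used in this work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box given by its top-left and bottom-right corners (pixels)."""
    x_topleft: int
    y_topleft: int
    x_bottomright: int
    y_bottomright: int

    def __post_init__(self):
        # Image coordinates grow rightwards and downwards, so the bottom-right
        # corner must lie strictly beyond the top-left corner.
        if self.x_bottomright <= self.x_topleft or self.y_bottomright <= self.y_topleft:
            raise ValueError("bottom-right corner must be below and right of top-left")

    @property
    def width(self) -> int:
        return self.x_bottomright - self.x_topleft

    @property
    def height(self) -> int:
        return self.y_bottomright - self.y_topleft

    def area(self) -> int:
        return self.width * self.height


def smallest_enclosing_box(points):
    """Smallest box containing every (x, y) point of an annotated object outline."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return BoundingBox(min(xs), min(ys), max(xs), max(ys))
```

Storing only the two corners keeps the label independent of the image size, which matches the absence of any constraint on input dimensions.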

3.2 Face Detection

Face detection is a fundamental component of most KYC systems (Pic et al., 2019; Darapaneni et al., 2020; Do et al., 2021). Since many official documents include a photo of the customer, it is crucial to verify that the individual in the photo matches the person completing the onboarding process. To achieve this, our proposed solution requires customers to upload a selfie while holding their IC. This allows us to detect faces from both the selfie and the IC, enabling a comparison to confirm that they belong to the same individual (see Fig. 3).

Figure 2: Sample data for a) front and b) back side of IC and c) driving license detection.

Figure 3: Face detection from the selfie and IC.

A variety of algorithms are available for face detection (Sun et al., 2018; Mita et al., 2005; Chang-Yeon, 2008; Dalal and Triggs, 2005). (Stefanovic, 2023) conducted a comparative analysis of five face detection algorithms: Haar cascade, OpenCV DNN, Dlib, MTCNN, and FaceNet. Their results indicated that while the detection accuracy of these models is comparable (Table 1), Dlib stands out for its processing speed, completing image processing in approximately 30 seconds, compared to FaceNet and OpenCV DNN, which take about 50 seconds, Haar cascade around 100 seconds, and MTCNN approximately 300 seconds (Stefanovic, 2023) (see Fig. 4). Dlib is integrated into a user-friendly “face recognition” API, making it straightforward to implement in our solution. Given our priority of expediting customer onboarding, we opted to utilize this “face recognition” API (fac, 2024), which offers two methodologies: Histogram of Oriented Gradients (HoG) (Dalal and Triggs, 2005) and Convolutional Neural Network (CNN) (cnn, 2024).

Figure 4: Comparison of face detection models based on processing time.

Table 2 outlines the differences between these approaches, highlighting that HoG processes images roughly 16 times faster than CNN (0.2 seconds versus 3.3 seconds per detection on a CPU). Since rapid onboarding is a primary requirement, we decided to use HoG, especially as the faces we detect will consistently be presented from a frontal angle, rendering the additional pose robustness of the CNN unnecessary. The HoG features are subsequently classified with a linear Support Vector Machine to decide whether a region contains a face.

Table 1: Comparison of Face Detection Methods: Accuracy and Performance.

| Method | Accuracy | Key Strengths | Performance |
|---|---|---|---|
| OpenCV Haar Cascades | 90% - 95% | Fast, real-time detection, low computational requirements | Fast, but less accurate on diverse faces (e.g., varied poses) |
| OpenCV DNN (e.g., ResNet) | 96% - 98% | Higher accuracy than Haar, robust to pose/occlusion/lighting | Slower than Haar, but real-time with proper hardware |
| Dlib (CNN-based) | 95% - 98% | Accurate, works well with various poses and occlusions | Slower than Haar, but more accurate, especially with CNN |
| FaceNet | 98% - 99% | Highly accurate, also provides embeddings for face recognition | Slower than other methods, requires high computational power |
| MTCNN | 95% - 98% | Good for detecting faces at various angles and scales | Moderate speed, good accuracy, can be slower than Haar and Dlib |

Table 2: Differences Between HoG and CNN-Based Solutions.

| HoG | CNN |
|---|---|
| Detects faces primarily from frontal angles, making it less effective for identifying faces at various angles. | Capable of detecting faces from a wide range of angles. |
| Detection time is 0.2 seconds when using a CPU. | Detection time is 3.3 seconds when using a CPU. |

During the face detection process, it is imperative to ensure that facial landmarks, such as the eyes, eyebrows, and lips, are visible. If any of these landmarks are obstructed, we cannot proceed to the next step. Common scenarios leading to landmark blockage include obscured lips due to mask-wearing, which became prevalent during the COVID-19 pandemic. Therefore, we instruct users to remove masks when taking their photos. Other obstructions, such as glasses or long hair, can also hinder visibility of the eyebrows and eyes. To address these issues, we will provide a user manual outlining proper practices for capturing the selfie photo. Any photos that do not meet the visibility criteria for processing through our ML solutions will be subject to manual review. It is crucial that all requirements (visibility of lips, eyebrows, and eyes) are confirmed during the manual KYC validation process. Each case must ensure the presence of two faces: one from the selfie and one from the document. Once both faces are available, we can begin the comparison to ascertain if they belong to the same person. The subsequent subsection will detail the face comparison methodology.

3.3 Face Comparison

For each detected face, we need to obtain its face encodings, which are numerical representations of the face stored as a one-dimensional array. Typically, these encodings are derived from the penultimate fully connected layers of CNNs in deep learning models. However, since we are utilizing a HoG-based approach, we will focus on extracting HoG features. The process for obtaining these features is illustrated step-by-step in Figure 5.

The first step of the algorithm involves calculating the centered horizontal and vertical gradients, which can be expressed mathematically as

$$\nabla f = \begin{bmatrix} g_x \\ g_y \end{bmatrix} = \begin{bmatrix} \partial f / \partial x \\ \partial f / \partial y \end{bmatrix}, \tag{1}$$

where $g_x = \partial f / \partial x$ and $g_y = \partial f / \partial y$ represent the derivatives in the x and y directions. These derivatives can be approximated using convolution with the filters $h = [-1, 0, 1]$ and $h^T$ in their respective directions. Once the image derivatives are calculated, they can be used to derive the gradient direction and magnitude, computed as

$$\theta = \tan^{-1}\left(\frac{g_y}{g_x}\right), \tag{2}$$

and

$$\left(g_x^2 + g_y^2\right)^{0.5}, \tag{3}$$

respectively.
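The gradient step can be sketched in a few lines of plain Python. This is a minimal illustration rather than the implementation used in the system: the function names are hypothetical, the filter is applied as a centered difference, border pixels are simply left at zero, and `atan2` replaces the plain inverse tangent so that a zero horizontal gradient does not cause a division by zero.

```python
import math


def centered_gradients(image):
    """Return (gx, gy) computed with the centered filter [-1, 0, 1].

    `image` is a list of rows of grey-level values. Where the filter would
    reach outside the image in a given direction, that gradient is left at 0.
    """
    rows, cols = len(image), len(image[0])
    gx = [[0.0] * cols for _ in range(rows)]
    gy = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if 0 < c < cols - 1:
                gx[r][c] = float(image[r][c + 1] - image[r][c - 1])
            if 0 < r < rows - 1:
                gy[r][c] = float(image[r + 1][c] - image[r - 1][c])
    return gx, gy


def magnitude_and_direction(gx_value, gy_value):
    """Gradient magnitude (Eq. 3) and direction in degrees (Eq. 2)."""
    magnitude = math.hypot(gx_value, gy_value)
    direction = math.degrees(math.atan2(gy_value, gx_value))
    return magnitude, direction
```

For a pixel with horizontal gradient 3 and vertical gradient 4, the magnitude is 5 and the direction is about 53.13 degrees.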

Next, the image is divided into overlapping blocks of 16 × 16 pixels with a 50% overlap. Each block comprises 2 × 2 cells, each sized 8 × 8 pixels. We then quantize the gradient orientations into N bins and concatenate these to form the final feature vector. The “face recognition” API provides a method for obtaining these face encodings, which are represented as a one-dimensional array of length 128. We denote the i-th face encoding as

$$f^{(i)} = \left[ f^{(i)}_0, f^{(i)}_1, f^{(i)}_2, \ldots, f^{(i)}_{127} \right].$$

Figure 6 illustrates the values of this feature vector, which range from -0.3 to 0.3.
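To make the block and cell layout concrete, the following sketch counts how many values a concatenated HoG vector contains for a given detection window. The function name, the 64 × 128 window, and the choice of N = 9 bins are illustrative assumptions; the text does not state the window size or the value of N.

```python
def hog_feature_length(width, height, n_bins=9, cell=8, cells_per_block=2):
    """Length of the concatenated HoG vector for a width x height window.

    Blocks are (cell * cells_per_block) pixels square and slide by half a
    block, which gives the 50% overlap described for 16 x 16 blocks.
    n_bins=9 is an assumed value for N, not one stated in the text.
    """
    block = cell * cells_per_block
    stride = block // 2  # 50% overlap between neighbouring blocks
    blocks_x = (width - block) // stride + 1
    blocks_y = (height - block) // stride + 1
    values_per_block = cells_per_block * cells_per_block * n_bins
    return blocks_x * blocks_y * values_per_block
```

Under these assumptions a 64 × 128 window contains 7 × 15 blocks of 36 values each, that is, 3,780 values in total.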

Figure 5: Feature extraction technique utilized by the HoG algorithm.

Figure 6: Visual representation of the feature vector for the “face recognition” API.

Once we have the face encodings for two faces (face i and face j), we need to determine their similarity. To do this, we calculate the Euclidean distance between the two vectors (Recognition, ). This distance measures the discrepancy between the vectors: if the distance is close to 0, the vectors are similar; if it is significantly greater than 0, the vectors are dissimilar, indicating a low probability that the faces belong to the same person. We establish a threshold of 0.6 for this validation process. Thus, if the distance is below 0.6, we conclude that the faces (face i and face j) belong to the same person; otherwise, they belong to different individuals, as expressed in the equations below:

$$\mathrm{dis}(i, j) = \left( \sum_{k=0}^{127} \left( f^{(i)}_k - f^{(j)}_k \right)^2 \right)^{0.5} < 0.6 \quad \text{(indicating the same person)},$$

$$\mathrm{dis}(i, j) = \left( \sum_{k=0}^{127} \left( f^{(i)}_k - f^{(j)}_k \right)^2 \right)^{0.5} \geq 0.6 \quad \text{(indicating different individuals)}.$$

Here, dis(i, j) denotes the Euclidean distance between feature vectors i and j. While we are using the Euclidean distance for this purpose, other distance metrics could also be applied. According to the authors (fac, 2024), optimal performance is achieved with a threshold of 0.6, which we adopt in our approach. If the threshold value is lowered, the criteria for matching become stricter; conversely, raising the threshold makes the criteria more lenient.
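The comparison rule can be written directly from the distance definition. The sketch below uses plain Python lists in place of real face encodings; the function names and the `MATCH_THRESHOLD` constant are hypothetical, and only the 0.6 value comes from the text.

```python
import math

MATCH_THRESHOLD = 0.6  # threshold quoted in the text


def euclidean_distance(encoding_i, encoding_j):
    """Euclidean distance between two encodings of equal length."""
    if len(encoding_i) != len(encoding_j):
        raise ValueError("encodings must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(encoding_i, encoding_j)))


def same_person(encoding_i, encoding_j, threshold=MATCH_THRESHOLD):
    """True when the distance falls strictly below the threshold."""
    return euclidean_distance(encoding_i, encoding_j) < threshold
```

Passing a smaller `threshold` makes the check stricter, and a larger one makes it more lenient, as described above.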

3.4 IC Detection

After confirming that the faces in the selfie and IC belong to the same individual, we proceed to the next step: extracting key information from the documents. The IC serves as the primary document for verifying a person’s identity. We will focus on retrieving the Identity Number from the card, as this is typically sufficient; additional details such as fines or penalties can be accessed using just the Identity Number.

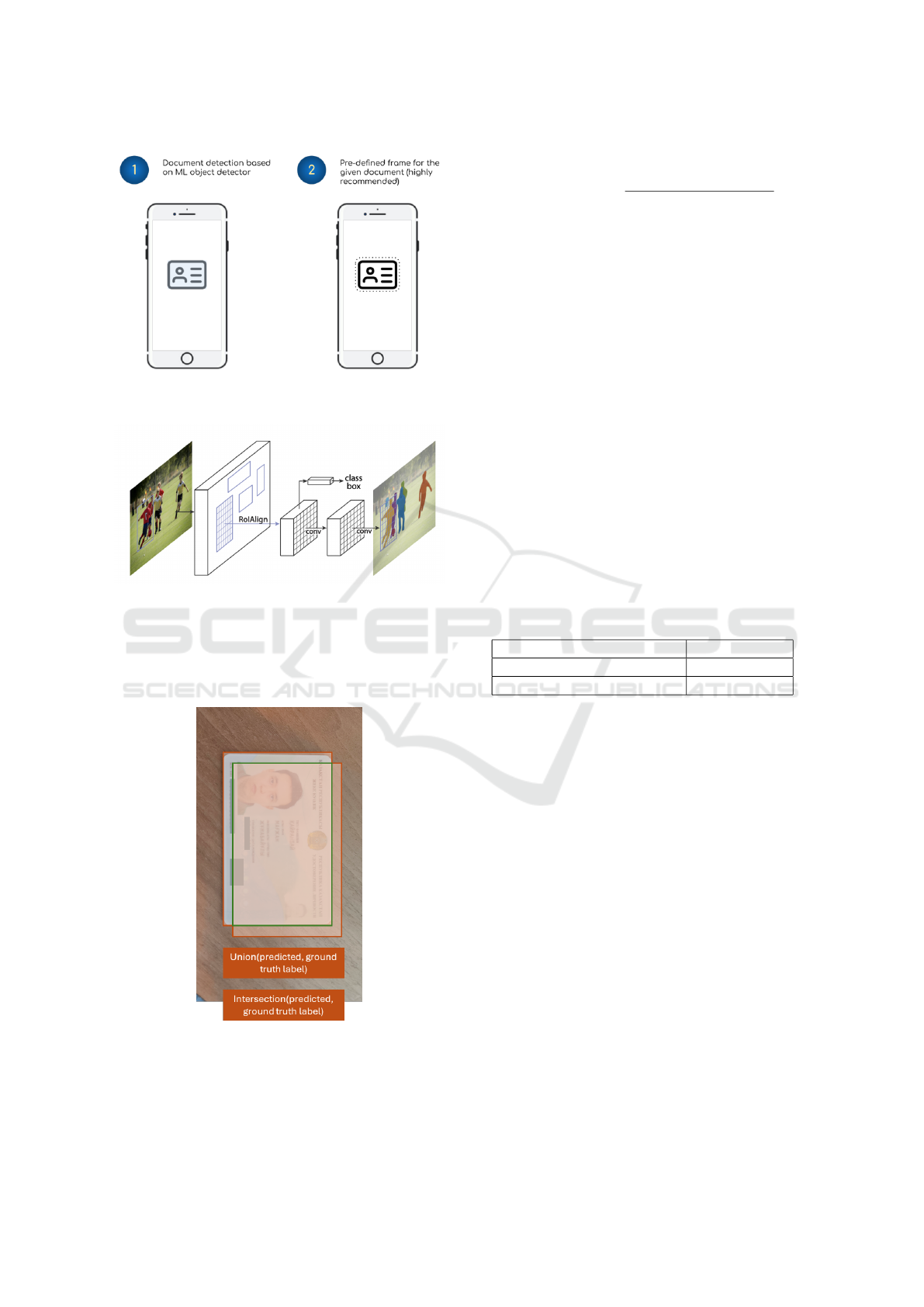

There are two approaches to IC detection. The first involves developing an ML model specifically for detecting ICs. The second, simpler approach improves detection accuracy by drawing a bounding box in the application: the user simply aligns the IC with this box, so the document can be located and cropped directly. For this reason, the second approach is strongly recommended (Fig. 7). For the first method, we utilized an object detection technique known as Mask R-CNN (He et al., 2017). The architecture of Mask R-CNN is illustrated in Figure 8 (He et al., 2017), demonstrating its capability to segment the target object. Once the object is identified, we can establish the bounding box by determining the top-left and bottom-right coordinates. Mask R-CNN achieved an accuracy of 91% for this task. We used the Jaccard index (Leydesdorff, 2008) as our evaluation metric, which is defined as the intersection over union of our predictions and the ground truth labels (see Figure 9).

$$\mathrm{Jaccard}(\text{predicted}, \text{ground truth}) = \frac{\text{predicted} \cap \text{ground truth}}{\text{predicted} \cup \text{ground truth}} \tag{4}$$

Figure 7: Two methods for document detection, with the second approach being strongly recommended.

Figure 8: Architecture of the Mask R-CNN Algorithm.

Figure 9: The intersection and union between the predicted bounding box and the ground truth bounding box.

In an ideal scenario where object detection is perfect, the Jaccard index equals 1, indicating complete overlap between the predicted and ground truth bounding boxes. Once the IC is detected, we will proceed to validate its authenticity. However, before that, we will discuss the detection of driving licenses.
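For axis-aligned bounding boxes, the Jaccard index reduces to a ratio of two areas. The following sketch assumes boxes are given as `(x1, y1, x2, y2)` tuples with the top-left corner first; the function name and this tuple layout are illustrative choices.

```python
def jaccard_index(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0
```

Two identical boxes give 1.0, disjoint boxes give 0.0, and two 10 × 10 boxes that overlap by half give 50 / 150, or one third.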

3.5 Driving License Detection

Detecting a driving license follows a process similar to that of IC detection. We have two approaches available, with the second approach being strongly preferred. For the first approach, we employ Mask R-CNN, which achieves an accuracy of 91% in detecting driving licenses (as shown in Table 3). We utilized nearly 2,000 data samples, allocating 80% for training and 20% for testing in both IC and driving license detection tasks. The accuracy for both tasks is comparable due to the consistent dataset and training/testing split used for each. The primary distinction lies in the specific document type being identified.

Table 3: Document Detection Accuracy.

| Task | Accuracy |
|---|---|
| IC Detection | 91% |
| Driving License Detection | 91% |
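The 80/20 division of the annotated samples described above can be reproduced with a seeded shuffle. This is a generic sketch, not the procedure actually used to produce the reported figures; the function name and the fixed seed are assumptions.

```python
import random


def split_train_test(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples reproducibly and split them into train and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]
```

With 2,000 samples this yields 1,600 training and 400 test items, and every sample appears in exactly one of the two sets.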

Once the necessary document is detected, we can proceed to extract key information from it. Typically, the expiration date and type of driving license are critical data points needed for customer onboarding. The extraction of regions of interest (ROIs) from these documents is addressed in Section 3.7. Before that, we discuss the validation process for both ICs and driving licenses to ensure their authenticity.

3.6 Validation of IC and Driving License for Authenticity

After the documents have been identified, the subsequent step is to verify their authenticity. Individuals sometimes attempt to use counterfeit documents for various illicit purposes, including fraud, theft, vandalism, and other criminal activities related to vehicles. Therefore, it is essential to ensure that customers are presenting legitimate and accurate documents. The methods of validation differ depending on the type and format of the documents. Typically, these documents adhere to specific standards, which include identifiable landmarks located at designated points. Consequently, the initial validation process involves detecting these landmarks (Fig. 10). Once we confirm the presence of all required landmarks, this validation step is deemed complete. This method represents a basic form of validation, as the primary aim of this paper is to demonstrate a comprehensive KYC solution for car-sharing systems. More advanced document validation algorithms are beyond the scope of this research; we plan to enhance this aspect in future work.

Figure 10: Verification of the document’s authenticity by identifying key landmarks from a) front, b) back side of IC and from c) driving license.
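The landmark check amounts to comparing the set of detected landmarks with the set required for the document type. In the sketch below the landmark names, the document type keys, and the `REQUIRED_LANDMARKS` table are all hypothetical placeholders; the actual landmarks depend on the document standard.

```python
# Hypothetical landmark names per document type, for illustration only.
REQUIRED_LANDMARKS = {
    "ic_front": {"photo"},
    "driving_license": {"photo", "chip"},
}


def has_all_landmarks(document_type, detected_labels, required=REQUIRED_LANDMARKS):
    """Return (is_valid, missing) for the landmarks detected on a document."""
    missing = required[document_type] - set(detected_labels)
    return not missing, sorted(missing)
```

Returning the missing landmarks alongside the verdict gives a manual reviewer a starting point when the automated check fails.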

3.7 Extraction of Regions of Interest

The “identity number” and “driving license expiration date” are crucial data points for the car-sharing system. To enable customers to begin driving, it is essential to obtain and verify these values. The extraction process for these key data points is based on similar principles for both the identity number and the expiration date detection tasks.

The primary objective is to identify a unique feature, often a specific landmark on the document. Once this unique feature is located, we can determine the positions of the required data relative to it. This methodology allows us to effectively extract the necessary information, which is then passed on to the OCR step (Fig. 11).

In the context of Kazakhstani documents, unique landmarks may include a chip on the driving license or the facial images present on both documents. For chip detection, we employed the Mask R-CNN model. Consistent with our previous methodology, we utilized 80% of the data for training and the remaining 20% for testing.
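Locating a field relative to a landmark can be sketched as follows. Offsets and sizes are expressed in units of the landmark's own width and height so that the same template applies when the document is photographed at a different scale. The function name and the example numbers are hypothetical and do not describe the layout of any real document.

```python
def roi_from_landmark(landmark_box, relative_offset, relative_size):
    """Place an ROI relative to a detected landmark.

    landmark_box: (x1, y1, x2, y2) of the landmark in pixels.
    relative_offset: (dx, dy) from the landmark's top-left corner, in units
        of the landmark's width and height.
    relative_size: (w, h) of the ROI, in the same units.
    """
    x1, y1, x2, y2 = landmark_box
    landmark_w, landmark_h = x2 - x1, y2 - y1
    dx, dy = relative_offset
    w, h = relative_size
    roi_x1 = x1 + dx * landmark_w
    roi_y1 = y1 + dy * landmark_h
    return (
        round(roi_x1),
        round(roi_y1),
        round(roi_x1 + w * landmark_w),
        round(roi_y1 + h * landmark_h),
    )
```

For a 100 × 100 landmark at (100, 200), an offset of (1.5, 0.0) and a size of (2.0, 0.25) select the region (250, 200, 450, 225), which can then be cropped and passed to OCR.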

Figure 11: Extraction of ROIs using the fixed locations of

document landmarks.

3.8 Optical Character Recognition (OCR) for Extracted ROIs

After the ROIs have been extracted and cropped, we can proceed with the OCR process. This process consists of three main components: 1) Text Detection, 2) Character Segmentation, and 3) Character Recognition. The initial step, text detection, has already been addressed in the previous section. We will now focus on character segmentation and recognition, utilizing open-source libraries like Tesseract, which automates these functions.

However, simply using Tesseract may not suffice, as the cropped text data can often include noise. Thus, it is essential to preprocess the data to eliminate any noise before applying Tesseract for OCR. The noise removal techniques typically include:

• Blurring the image (using methods such as average or Gaussian filtering)

• Histogram equalization

• A combination of morphological operations like erosion and dilation
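Two of the operations listed above, average blurring and the erosion/dilation pair, can be illustrated on small nested lists. This pure-Python sketch uses a fixed 3 × 3 window clipped at the image border and hypothetical function names; a production system would more likely rely on an image-processing library, and histogram equalization is omitted here for brevity.

```python
def _neighbourhood(image, r, c):
    """Values in the 3 x 3 window around (r, c), clipped at the image border."""
    rows, cols = len(image), len(image[0])
    return [
        image[rr][cc]
        for rr in range(max(0, r - 1), min(rows, r + 2))
        for cc in range(max(0, c - 1), min(cols, c + 2))
    ]


def mean_blur(image):
    """Replace each pixel by the average of its 3 x 3 neighbourhood."""
    blurred = []
    for r in range(len(image)):
        row = []
        for c in range(len(image[0])):
            window = _neighbourhood(image, r, c)
            row.append(sum(window) / len(window))
        blurred.append(row)
    return blurred


def erode(binary):
    """A pixel stays 1 only if every pixel in its neighbourhood is 1."""
    return [[min(_neighbourhood(binary, r, c)) for c in range(len(binary[0]))]
            for r in range(len(binary))]


def dilate(binary):
    """A pixel becomes 1 if any pixel in its neighbourhood is 1."""
    return [[max(_neighbourhood(binary, r, c)) for c in range(len(binary[0]))]
            for r in range(len(binary))]


def opening(binary):
    """Erosion followed by dilation: removes specks smaller than the window."""
    return dilate(erode(binary))
```

Applied to a binary image, `opening` removes an isolated pixel while leaving a solid 3 × 3 block unchanged, which is the behaviour wanted when cleaning speckle noise around characters.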

Once the cropped text data has been adequately cleaned, we can apply Tesseract OCR. It is crucial to select the appropriate language during the character recognition phase. In the following subsection, we will integrate all these features to demonstrate how to create a comprehensive system for automated customer onboarding.

3.9 Integration of ML with the End Application

All functionalities of the KYC system, provided as APIs, will be hosted on the ML server (see Fig. 12). Requests originating from the client side (mobile app) will be processed by the back-end server. This server will manage a queue of requests and forward them to the ML server for the appropriate API. Upon receiving a request from a specific customer, the ML server will process it and return the results to the back-end server. The back-end server will then relay these responses to the mobile app for display to the end users.

For future enhancements, we plan to implement a queue system to effectively manage customer load. This queue will be placed between the back-end server and the ML server.
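The request flow between the back-end server and the ML server can be sketched with an in-memory first-in, first-out queue. The class names, method names, and API names below are hypothetical stand-ins; a deployed system would use network calls and most likely a dedicated message queue rather than in-process objects.

```python
import queue


class MLServer:
    """Stand-in for the ML server: maps an API name to a handler function."""

    def __init__(self, handlers):
        self._handlers = handlers

    def handle(self, api_name, payload):
        return self._handlers[api_name](payload)


class BackendServer:
    """Queues client requests and forwards them to the ML server in order."""

    def __init__(self, ml_server):
        self._ml_server = ml_server
        self._pending = queue.Queue()  # first in, first out

    def submit(self, customer_id, api_name, payload):
        self._pending.put((customer_id, api_name, payload))

    def process_all(self):
        """Drain the queue and return one response per request, in arrival order."""
        responses = []
        while not self._pending.empty():
            customer_id, api_name, payload = self._pending.get()
            result = self._ml_server.handle(api_name, payload)
            responses.append((customer_id, api_name, result))
        return responses
```

Placing the queue in front of the ML server means a burst of onboarding requests is absorbed by the back end instead of overloading the models.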

4 ADDITIONAL TASKS

The KYC system has the potential to incorporate a variety of additional features. Among these are gender recognition and age estimation, which provide valuable insights into customer demographics. This information can later be leveraged for various purposes, such as marketing campaigns. The following subsection will detail the implementation of these additional features, focusing on age and gender prediction.

4.1 Demographic Attribute Prediction

Understanding the demographic attributes of customers is crucial for enhancing the KYC system. These attributes include location, gender, and age. While the customer’s location can often be easily determined using GPS, identifying age and gender presents more complex challenges. In this research, we aim to tackle these tasks through Computer Vision and ML techniques.

Age prediction is treated as a regression task, where the output is a floating-point number representing an age within a specified range (e.g., 18 to 100 years). In contrast, gender classification is framed as a classification problem, where each input image must be categorized into one of a limited number of classes.

For both tasks, we utilize the Histogram of Oriented Gradients (HoG) as the feature extractor, producing feature vectors for each face (denoted as $f^{(i)} = [f^{(i)}_0, f^{(i)}_1, \ldots, f^{(i)}_{127}]$). These feature vectors serve as input for training the gender classifier and age predictor, which can be implemented using various methods such as Neural Networks or Support Vector Machines.

Let g denote the predictor (the classifier or the regressor). For the classification task, the model output is represented as $g(f(x^{(i)}))$, where $x^{(i)}$ denotes the i-th input image. This classification will yield a one-hot encoded output, such as [0,0,...,1,...,0], where only one value is “1” and all others are “0”. The index of the array with the value of “1” indicates the predicted class. In the case of the regression task, $g(f(x^{(i)}))$ will produce a floating-point number, as previously described. These prediction functions, $g(f(x^{(i)}))$, can be integrated into the ML server as separate APIs. Whenever this demographic information is required, we can easily call these APIs from the back end.
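Interpreting the two kinds of output is straightforward. The sketch below decodes a one-hot vector into a class label and keeps a predicted age inside the example range of 18 to 100 years; the function names, the clamping step, and any class labels passed in are assumptions made for illustration.

```python
def decode_one_hot(one_hot, class_names):
    """Return the class name at the position of the single 1 in `one_hot`."""
    values = list(one_hot)
    if len(values) != len(class_names):
        raise ValueError("one class name is needed per output position")
    if values.count(1) != 1 or any(v not in (0, 1) for v in values):
        raise ValueError("expected exactly one 1 and zeros elsewhere")
    return class_names[values.index(1)]


def clamp_age(predicted_age, minimum=18.0, maximum=100.0):
    """Keep a regression output inside the assumed valid age range."""
    return max(minimum, min(maximum, float(predicted_age)))
```

Validating the one-hot vector before decoding makes a malformed model output fail loudly instead of silently returning the wrong class.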

5 FUTURE WORK

The current KYC solution presents several limitations. For instance, a customer could potentially take a photo of someone else and submit it as their selfie, thereby circumventing the ML system and gaining access to the platform with false information. This scenario poses significant risks, including vehicle theft, parts theft, and other criminal activities.

To address these vulnerabilities, we need to explore solutions that can effectively mitigate such risks. One promising approach is the implementation of “liveness detection.” This feature aims to verify that the customer is a live individual and not merely presenting a printed photo as their selfie. The following subsection will delve into the specifics of the “liveness detection” feature.

5.1 Liveness Detection

Various methods exist for “liveness detection,” which determine whether the individual on the client side is indeed a live person. We will utilize one of the most common techniques, which involves providing customers with a set of facial commands and monitoring their compliance. This method is referred to as “active liveness detection.”

If the customer successfully follows the commands, we will conclude that they are a live individual and proceed with their onboarding. Conversely, if they fail to comply, their request will be rejected, and the KYC process will be sent for manual verification.

The list of facial commands may include:

• Open the mouth

• Close the eyes

• Close the right/left eye

• Turn the head to the left/right

• Look up/down

• Etc.

Figure 12: Comprehensive architecture of the ML-based KYC system.

Figure 13: Illustration of the liveness detection process.

We can randomly select three commands from the

list above. Once a command is issued, we will clas-

sify the customer’s actions. If the predicted action

matches the intended command, we will proceed to

the next command. If there are three correct matches

out of three attempts, we will consider the person to

be live; otherwise, the customer will not pass our test

(Fig. 13).
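The challenge loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the system's actual implementation: the command labels, the function `run_liveness_check`, and the `classify_action` callback (which stands in for the action classifier applied to the customer's camera frames) are all hypothetical names introduced here.

```python
import random
from typing import Callable, Optional, Sequence

# Hypothetical labels mirroring the facial commands listed above.
COMMANDS = (
    "open_mouth",
    "close_eyes",
    "close_right_eye",
    "close_left_eye",
    "turn_head_left",
    "turn_head_right",
    "look_up",
    "look_down",
)


def run_liveness_check(
    classify_action: Callable[[str], str],
    commands: Sequence[str] = COMMANDS,
    num_challenges: int = 3,
    rng: Optional[random.Random] = None,
) -> bool:
    """Issue randomly chosen commands and require every one to be matched.

    `classify_action` is an assumed callback: it receives the issued
    command, observes the customer, and returns the predicted action label.
    """
    rng = rng or random.Random()
    # Sample distinct commands so a replayed recording is unlikely to match.
    challenges = rng.sample(list(commands), num_challenges)
    for command in challenges:
        predicted = classify_action(command)
        if predicted != command:
            # Any mismatch fails the test; the case goes to manual review.
            return False
    return True
```

A caller would treat a `False` result as the trigger for manual verification. Sampling without replacement is a design choice of this sketch; the text only specifies that three commands are selected at random.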

5.2 Improving Photo Quality

Image quality can vary significantly based on the

type of smartphone and its camera capabilities. Low-

quality cameras and poor lighting conditions often re-

sult in subpar images, which can hinder the perfor-

mance of the ML KYC system and create challenges

for customers during onboarding. To address this, we

can enhance image quality to achieve two main objec-

tives: 1) improve text readability and 2) reduce noise.

To enhance text clarity, we can apply deblurring algorithms, which make input photos clearer and more legible. One effective approach for deblurring is to use Generative Adversarial Networks (GANs) (Lu et al., 2019). For reducing noise, we can apply standard noise removal algorithms (Verma and Ali, 2013).
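As one concrete example of noise removal, the sketch below applies a median filter, a classic technique for suppressing salt-and-pepper noise. The paper does not state which noise removal algorithm would be used, so the choice of a median filter, the function name `median_filter`, and the representation of a grayscale image as a list of rows of integers are assumptions made purely for illustration.

```python
from statistics import median_low
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def median_filter(image: Image, radius: int = 1) -> Image:
    """Replace each pixel with the median of its (2*radius+1)^2 neighborhood.

    Windows are clipped at the image border, so edge pixels use fewer
    neighbors. `median_low` keeps the output restricted to values that
    actually occur in the window.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    result: Image = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            row.append(median_low(window))
        result.append(row)
    return result
```

In a production pipeline an optimized library routine would normally replace this pure-Python loop; the sketch only shows the principle that an isolated outlier pixel is replaced by a typical value from its neighborhood.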

6 CONCLUSION

In this research, we have presented an architecture for

developing a straightforward KYC solution suitable

for various car-sharing use cases. Our proposed meth-

ods leverage Computer Vision and Machine Learning

techniques, emphasizing the detection of regions of

interest (ROIs) from images. Additionally, the ML

server can be further enhanced by incorporating features such as a gender classifier and an age predictor. To bolster the security of the KYC solution, we recommend implementing a “liveness detection” feature, which verifies whether the individual on the screen is indeed a live person.

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

REFERENCES

(2024). CNN based face detector. Accessed: 2024-10-14.

(2024). Face recognition API. Accessed: 2024-10-14.

ACTICO (2023). Why machine learning brings up to 57%

savings in the KYC process. Accessed: 2024-10-14.

Al, S. and Kumar, J. (2020). Fingerprint scanning tech-

nology in modern identity verification systems. Inter-

national Journal of Computer Applications, 28(3):65–

78.

Bhardwaj, R. and Sharma, P. (2021). Anomaly detection

algorithms in anti-money laundering systems. Journal

of Artificial Intelligence Research, 34(4):45–60.

Cerovsky, Z. and Mindl, P. (2008). Hybrid electric cars,

combustion engine driven cars and their impact on

environment. In 2008 International Symposium on

Power Electronics, Electrical Drives, Automation and

Motion, pages 739–743. IEEE.

Chang-Yeon, J. (2008). Face detection using LBP features. Final Project Report, 77:1–4.

Charoenwong, B. (2023). The one reason why AI/ML for

AML/KYC has failed (so far). Accessed: 2024-10-14.

Dalal, N. and Triggs, B. (2005). Histograms of oriented gradients for human detection. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), volume 1, pages 886–893. IEEE.

Darapaneni, N., Evoori, A. K., Vemuri, V. B., Arichandra-

pandian, T., Karthikeyan, G., Paduri, A. R., Babu, D.,

and Madhavan, J. (2020). Automatic face detection

and recognition for attendance maintenance. In 2020

IEEE 15th International Conference on Industrial and

Information Systems (ICIIS), pages 236–241. IEEE.

Do, T.-L., Tran, M.-K., Nguyen, H. H., and Tran, M.-T.

(2021). Potential threat of face swapping to eKYC

with face registration and augmented solution with

deepfake detection. In Future Data and Security En-

gineering: 8th International Conference, FDSE 2021,

Virtual Event, November 24–26, 2021, Proceedings 8,

pages 293–307. Springer.

He, K., Gkioxari, G., Dollár, P., and Girshick, R. (2017). Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, pages 2961–2969.

Jain, A. K. and Gupta, S. B. (2017). Facial recognition: Ad-

vances and applications in identity verification. IEEE

Transactions on Biometrics, Behavior, and Identity

Science, 4(2):115–130.

Kagawa, S., Hubacek, K., Nansai, K., Kataoka, M., Managi, S., Suh, S., and Kudoh, Y. (2013). Better cars or older cars?: Assessing CO2 emission reduction potential of passenger vehicle replacement programs. Global Environmental Change, 23(6):1807–1818.

Leydesdorff, L. (2008). On the normalization and visualization of author co-citation data: Salton’s cosine versus the Jaccard index. Journal of the American Society for Information Science and Technology, 59(1):77–85.

Liu, X. and Zhang, Y. (2019). Blockchain technology for secure KYC in financial systems. Journal of Blockchain Research, 5(2):45–59.

Lu, B., Chen, J.-C., and Chellappa, R. (2019). UID-GAN: Unsupervised image deblurring via disentangled representations. IEEE Transactions on Biometrics, Behavior, and Identity Science, 2(1):26–39.

Miller, M. and Smith, J. (2019). Biometric authentication

systems in financial applications. Journal of Financial

Technology, 12(3):89–104.

Mita, T., Kaneko, T., and Hori, O. (2005). Joint Haar-like features for face detection. In Tenth IEEE International Conference on Computer Vision (ICCV’05) Volume 1, volume 2, pages 1619–1626. IEEE.

Pic, M., Mahfoudi, G., and Trabelsi, A. (2019). Remote

KYC: Attacks and counter-measures. In 2019 Eu-

ropean Intelligence and Security Informatics Confer-

ence (EISIC), pages 126–129. IEEE.

Recognition, F. Face comparison distance calculation. Ac-

cessed: 2024-10-14.

Stefanovic, S. (2023). Face detection algorithms compari-

son. Accessed: 2024-10-14.

Sun, X., Wu, P., and Hoi, S. C. (2018). Face detection us-

ing deep learning: An improved faster rcnn approach.

Neurocomputing, 299:42–50.

Technologies, S. (2023). How we built an intelligent au-

tomation solution for KYC validation. Accessed:

2024-10-14.

Verma, R. and Ali, J. (2013). A comparative study of various types of image noise and efficient noise removal techniques. International Journal of Advanced Research in Computer Science and Software Engineering, 3(10).

Yoon, J. and Kim, R. (2020). Pattern recognition in finan-

cial fraud detection systems. IEEE Transactions on

Computational Intelligence, 9(5):98–112.

Zohdy, M. and Thomas, L. (2018). Blockchain technology for secure customer data sharing in KYC systems. Blockchain Technology Review, 2(1):23–37.
