Brain-Driven Robotic Arm: Prototype Design and Initial Experiments

Fatma Abdelhedi¹,² ᵃ, Lama Aljedaani¹ ᵇ, Amal Abdallah Batheeb and Renad Abdullah Aldahasi² ᶜ

¹ Department of Electrical and Computer Engineering, King Abdulaziz University, Jeddah, Saudi Arabia

² Control and Energy Management Lab, National Engineering School of Sfax, Sfax University, Tunisia

Keywords: Assistive Device, Robotic Arms, Brain-Computer Interface Technology (BCI), Electroencephalogram (EEG).

Abstract: Advances in robotic control have revolutionized assistive technologies for individuals with upper limb

amputations. Daily tasks, which are often complex or time-consuming, can be challenging without assistance.

Traditional assistive devices often demand significant physical effort and lack versatility, limiting user

independence. In response, the Brain-Driven Robotic Arm project aims to develop an advanced assistive

device that allows individuals with disabilities to control a robotic arm using their brain signals. Utilizing

brain-computer interface (BCI) technology with electroencephalogram (EEG) signals, the system processes

brain activity to generate commands for the robotic arm, offering a more intuitive and efficient assistive

solution. The experimental setup integrates the 6-DOF Yahboom DOFBOT Robotic Arm Kit with the

14-Channel EPOC X EEG Headset, where the system control is managed via Python software, using the Latent

Dirichlet Allocation (LDA) algorithm for AI-driven tasks.

1 INTRODUCTION

In recent years, significant progress has been made in

developing methods to control robotic systems for

individuals with paralysis or limb amputations.

According to the World Health Organization (WHO)

and the World Bank, an estimated 35-40 million

people worldwide require prosthetic or orthotic

services, yet only one in ten has access to them

(Lemaire, 2018). By 2050, this figure is expected to

rise to over two billion. Additionally, diabetes leads

to a major limb amputation every 30 seconds

globally, resulting in over 2,500 limbs lost daily

(Bharara, 2009). To address these challenges,

researchers have focused on non-invasive approaches

like brain-computer interface (BCI) technology,

which uses EEG signals to create a communication

and control link between the brain and external

devices (Shedeed, 2013). The Brain-Driven Robotic

Arm is a BCI-based solution designed to assist

individuals with disabilities by interpreting their brain

signals to control robotic systems.

Advances in BCI and robotics have significantly enhanced the precision and control of robotic arms. However, many existing assistive devices remain limited, often demanding considerable physical effort and providing only basic functionality, which compromises user independence and versatility. There is a growing need for a more advanced and cost-effective solution that improves control and usability. A brain-driven robotic arm offers a promising alternative, empowering individuals with severe motor disabilities, such as limb loss, to regain mobility and independence. By integrating principles from neuroscience, computer science, and robotics, this system establishes a direct interface between the brain and the robotic arm, allowing users to control the arm’s movements through their brain signals (Mu, 2024; Yurova, 2022).

ᵃ https://orcid.org/0000-0003-1658-8067

1.1 Robotic Arm

Robotic systems have evolved from their early role in

industrial automation into versatile tools

across a multitude of industries. In particular, robotic

arms have undergone significant advancements,

becoming increasingly flexible and adept at executing

complex tasks with precision. The general

representation of a dynamic model of a robotic arm is

presented as follows:

$$M(q)\,\ddot{q} + C(q,\dot{q})\,\dot{q} + G(q) = \tau$$

Abdelhedi, F., Aljedaani, L., Batheeb, A. A. and Aldahasi, R. A.

Brain-Driven Robotic Arm: Prototype Design and Initial Experiments.

DOI: 10.5220/0013146700003890

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 3, pages 427-434

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)


where the vectors $q, \dot{q}, \ddot{q} \in \mathbb{R}^{n}$ denote the measured joint position, velocity, and acceleration vectors, respectively. In addition:

- $M(q) \in \mathbb{R}^{n \times n}$ is the uniformly bounded, symmetric, positive-definite inertia matrix,

- $C(q,\dot{q})\,\dot{q} \in \mathbb{R}^{n}$ is the vector of centrifugal and Coriolis forces,

- $G(q) \in \mathbb{R}^{n}$ is the vector of gravitational forces,

- $u = \tau \in \mathbb{R}^{n}$ is the vector of external control torques and forces applied to the robot's joints.

For the considered robot we have $n=3$, where the open-loop manipulator comprises three revolute joints as demonstrated in Figure 1.
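As a numerical illustration of the dynamic model, the following minimal sketch evaluates the joint torques for $n=3$ from given $M$, $C$, $G$, joint velocities, and joint accelerations. All matrix and vector values are placeholders chosen only for illustration; they are not identified parameters of the arm used in this work, and the function names are hypothetical.

```python
def mat_vec(matrix, vector):
    """Multiply an n x n matrix (list of rows) by a length-n vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def joint_torques(M, C, G, qd, qdd):
    """Evaluate tau = M*qdd + C*qd + G for one arm configuration."""
    inertial = mat_vec(M, qdd)
    coriolis = mat_vec(C, qd)
    return [i + c + g for i, c, g in zip(inertial, coriolis, G)]


# Placeholder values for a 3-joint arm (assumed, for illustration only).
M = [[2.0, 0.1, 0.0],
     [0.1, 1.0, 0.05],
     [0.0, 0.05, 0.5]]      # symmetric, positive-definite inertia matrix
C = [[0.0, -0.2, 0.0],
     [0.2, 0.0, 0.0],
     [0.0, 0.0, 0.0]]       # Coriolis/centrifugal matrix at this state
G = [0.0, 9.81, 2.45]       # gravity vector
qd = [0.5, 0.0, 0.0]        # joint velocities
qdd = [1.0, 0.0, 0.0]       # joint accelerations

tau = joint_torques(M, C, G, qd, qdd)
```

In a real controller, $M$, $C$, and $G$ would be recomputed from the current joint state at every control step rather than held constant.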

Equipped with sensors, robotic arms can perceive

and react to their surroundings, enhancing their

adaptability across diverse environments (Aljedaani,

2024). The integration of various types of end

effectors, such as grippers or specialized tools,

enables these arms to interact effectively with their

surroundings, extending applications across

manufacturing, healthcare, and various other sectors.

Ongoing progress in robotics, sensing technologies,

and artificial intelligence (AI), including collaborative

robotics, is enabling robots to learn

and adapt their actions, further amplifying their

capabilities and expanding their potential

applications.

1.2 Brain-Computer Interface (BCI)

Brain-Computer Interface (BCI) enables interaction

between the human brain and machines, employing

advanced algorithms to analyze brain signals and

recognize user commands. BCI is classified into three

types based on signal acquisition: invasive (inserting

an electrode into the brain), semi-invasive

(positioning electrodes on the brain's surface), and

non-invasive (using scalp sensors) (Ramadan, 2017).

This study focuses on EEG technology, a non-

invasive method, to record brain activity. The

combination of EEG, signal processing, and machine

learning enables direct and intuitive interaction with

a robotic arm, enhancing the independence of

individuals with disabilities in their environment.

1.3 Electroencephalogram (EEG)

The human brain is composed of billions of cells that

control various bodily functions. It consists of

different regions responsible for functions like

movement, vision, hearing, and intelligence.

Brainwaves, which are small electrical signals, are

generated by these brain cells. To record these

brainwaves, electrodes are connected to the scalp, and

this technique is called an electroencephalogram

(EEG) (Zhou, 2023). EEG has been extensively used

in clinical applications and research, including Brain-

Computer Interfaces (BCI) (Biasiucci, 2019). One

significant application of EEG is the brain-driven

robotic arm, which enables direct communication

between the brain and a machine, benefiting

paralyzed or amputated individuals. EEG sensors

capture numerous snapshots of brain activity, which

are then transmitted for analysis and storage in

various formats like computer files, mobile devices,

or cloud databases.

2 PRELIMINARIES AND

PREVIOUS WORKS

Significant progress has been achieved in BCI in

recent years, allowing a direct connection between

the human brain and external technology. This

literature review aims to offer a thorough overview of

the present status of research on brain-driven robotic

arms.

2.1 The Generation and Detection of

EEG Signals

Electroencephalography (EEG) is a method that

involves placing metal electrodes on the scalp to

measure and record the brain's electrical activity. This

activity is generated by the communication between

neurons and produces

continuous and persistent

electrical currents. Hans Berger, the scientist credited

with introducing the term "electroencephalogram"

(EEG), observed that these brain signals exhibit

regular patterns rather than random activity. This

discovery paved the way for various applications that

rely on EEG signals to infer different brain functions.

The detection of electric fields in the brain is made

possible by the coordinated activity of pyramidal

neurons located in the cortical regions (Khosla,

2020). These specialized neurons are critical in

generating and synchronizing the electrical signals

captured by EEG. The EEG technique records

changes in electrical potentials that result from

synaptic transmissions. When an action potential

reaches the axon terminal, neurotransmitters are

released, leading to the formation of excitatory

potentials and the flow of ionic currents in the

extracellular space. The cumulative effect of these

potentials from groups of neurons amplifies the

overall electric field, making EEG signals valuable


for measurement and analysis. Different regions of

the cerebral cortex are responsible for processing

distinct types of information (Yip, 2023). For

instance, the motor cortex, located in the frontal lobe,

is central to controlling body movement and consists

of three main areas: the primary motor cortex

(Brodmann Area 4), the premotor cortex, and the

supplementary motor area (Brodmann Area 6). The

primary motor cortex is responsible for transmitting

the majority of electrical impulses from the motor

cortex, while the premotor cortex is essential for

movement preparation, particularly for proximal

muscle groups. The supplementary motor area aids in

stabilizing body posture and coordinating

movements. Notably, research indicates that sensory

input to one hemisphere of the brain can evoke

electrical signals that result in movement on the

opposite side of the body, highlighting the cross-

wiring of motor functions between the two

hemispheres (Rolander, 2023).

2.2 EEG Rhythms

To provide a complete understanding of

Electroencephalography (EEG) and the different

mental states of the brain, previous literature reviews

were consulted. According to (Huang, 2021; Orban,

2022), EEG signals exhibit distinct frequency ranges

corresponding to different types of brain waves. Delta

(δ) waves, with a frequency range of 0.5-4 Hz, are

observed during deep sleep. Theta (𝜃) waves, ranging

from 4-8 Hz, are associated with emotions and mental

states. Alpha (𝛼) waves, in the frequency range of 8-

14 Hz, are typically detected in the frontal and

parietal regions of the scalp during awake or resting

states. Beta (𝛽) waves, ranging from 14-30 Hz, are

prominent during movements and can be observed in

the central and frontal scalp areas. Finally, gamma (γ)

waves have a frequency higher than 30 Hz and are

linked to processes such as idea formation, language

processing, and learning.
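The band limits listed above can be written as a small lookup table. The sketch below is illustrative only: the half-open interval convention (a boundary frequency such as 14 Hz is assigned to the higher band) and the function name `band_of` are assumptions, not part of the cited reviews.

```python
# Frequency bands as listed in the text (Hz). The gamma band has no upper
# limit here because the text only says "higher than 30 Hz".
EEG_BANDS = [
    ("delta", 0.5, 4.0),
    ("theta", 4.0, 8.0),
    ("alpha", 8.0, 14.0),
    ("beta", 14.0, 30.0),
    ("gamma", 30.0, float("inf")),
]


def band_of(frequency_hz):
    """Return the EEG band name for a frequency, or None below 0.5 Hz."""
    for name, low, high in EEG_BANDS:
        # Assumed convention: lower bound inclusive, upper bound exclusive.
        if low <= frequency_hz < high:
            return name
    return None


examples = {f: band_of(f) for f in (2, 6, 10, 20, 45)}
```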

2.3 Electrode Placement and EEG

Recording

The placement of metal electrodes over the scalp

is crucial for measuring and recording EEG signals.

To capture arm movement, the electrodes need to be

positioned strategically. Research indicates that the

primary region responsible for controlling body

movement is the motor cortex in the brain's frontal

lobe. Several electrode placement systems exist, and

one of the most widely used is the 10-20 system

(Orban, 2022). As seen in

Figure 2, this system uses a combination of letters and

numbers to denote specific brain regions and

electrode locations. The letters "F," "T," "P," and "O"

represent Frontal, Temporal, Parietal, and Occipital

regions, respectively. Odd numbers (1, 3, 5, 7) are

assigned to electrodes on the left hemisphere, while

even numbers (2, 4, 6, 8) represent the right

hemisphere. The letter "z" indicates an electrode

along the midline (Cao, 2021). This standardized

system ensures consistent and precise electrode

placement for EEG recordings.
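The naming rule described above (region letters, odd numbers on the left, even numbers on the right, "z" on the midline) can be expressed as a short parser. This is a minimal sketch: the function name is hypothetical, and prefixes not listed in the text (for example "AF" or "FC", which appear on the headset used later) are returned unchanged rather than expanded.

```python
import re

# Region letters exactly as given in the text.
REGIONS = {"F": "Frontal", "T": "Temporal", "P": "Parietal", "O": "Occipital"}

# A label is a letter prefix followed by either "z" or a number.
_LABEL = re.compile(r"^([A-Za-z]+?)(z|\d+)$")


def describe_electrode(label):
    """Return (region, side) for a 10-20 style electrode label."""
    match = _LABEL.match(label)
    if match is None:
        raise ValueError("not a recognised electrode label: " + repr(label))
    prefix, suffix = match.groups()
    region = REGIONS.get(prefix, prefix)  # unknown prefixes are kept as-is
    if suffix == "z":
        side = "midline"
    elif int(suffix) % 2 == 1:
        side = "left"
    else:
        side = "right"
    return region, side


f3 = describe_electrode("F3")
```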

Figure 1: The 10-20 System of Electrode Placement.

A study (Bousseta, 2018) used a 14-channel EEG sensor and identified that electrodes AF3, AF4, F3, and F4 were associated with moving a robot’s arm right, left, up, and down. The frequency band utilized was 8 Hz to 22 Hz.

Another study (Hayta, 2022) utilized a

64-channel EEG sensor to control a robot's arm

movement along multiple axes (x, y, and z). For this

purpose, twenty EEG channels were selected within the

frequency range of 8 Hz to 30 Hz. In another study

(Arshad, 2022), researchers developed an intelligent

robotic arm controller including a BCI integrated with

AI to aid individuals with physical disabilities. This

study employed EEG to capture brain activity and

proposed a method for controlling the robotic arm

using various AI-based classification algorithms.

Algorithms such as Random Forest, K-Nearest

Neighbors (KNN), Gradient Boosting, Logistic

Regression, Support Vector Machine (SVM), and

Decision Tree were tested, with Random Forest

achieving the highest accuracy of 76%. The paper also

highlighted the influence of individual variations in

dominant frequencies and activation bandwidths,

which can affect the EEG dataset. The research

provides insights into effective electrode placement for

detecting different arm movements and demonstrates

the feasibility of intelligently controlling a robotic arm

through BCI and AI methods. The proposed technique

offers a reliable and non-invasive approach to assist

individuals with physical disabilities, and the results

highlight the effectiveness of Random Forest

compared to other classification algorithms.


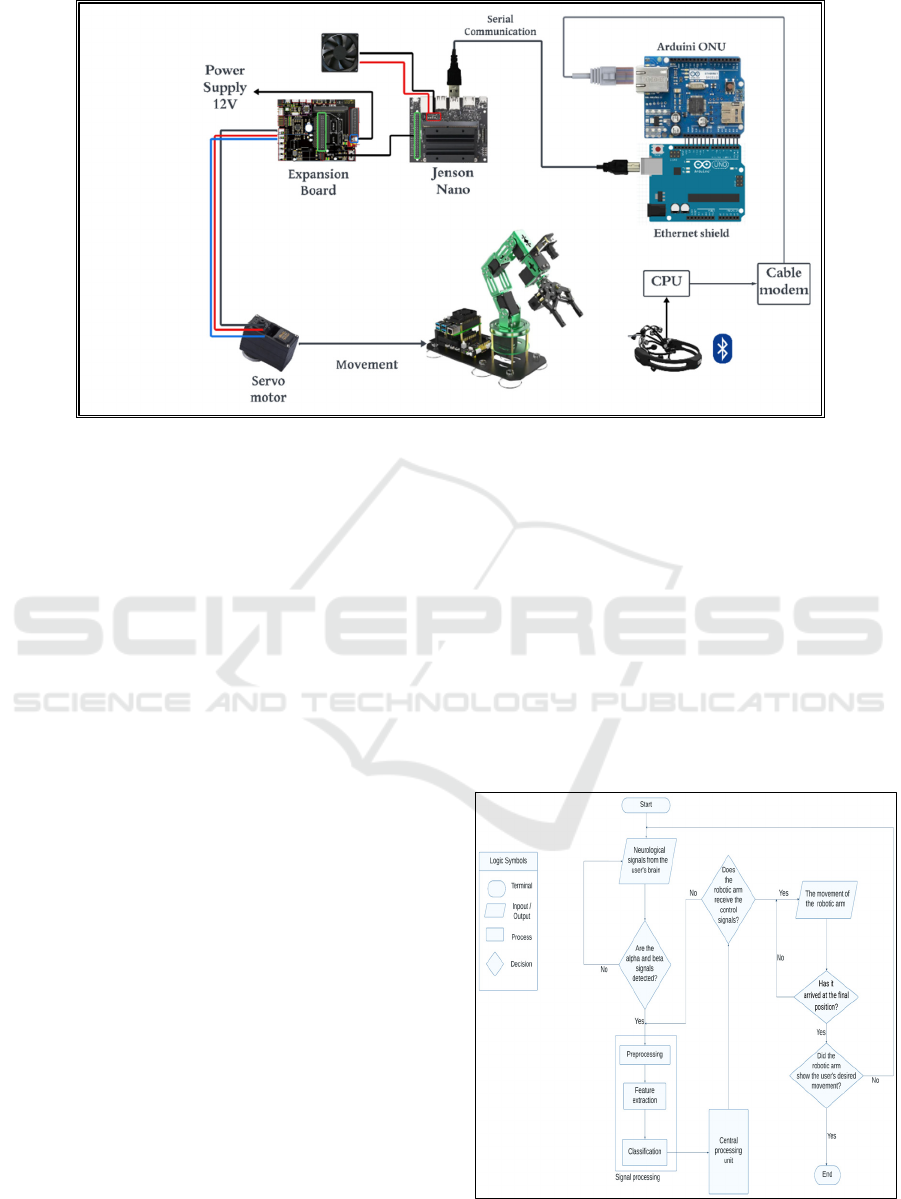

Figure 2: Brain Driven Robotic Arm Circuit Diagram.

3 PROPOSED BRAIN DRIVEN

ROBOTIC SYSTEM

This paper introduces the design of a robotic system

aimed at empowering individuals with severe motor

disabilities by providing functional robotic limb

movements and enhancing their independence. The

brain-driven robotic arm achieves this by precisely

interpreting the user’s brain signals and converting

them into commands that control and manipulate a

robotic arm’s movement.

3.1 Proposed Design Solution

The proposed system integrates cutting-edge

hardware components, a versatile programming

platform, and advanced machine learning techniques.

This combination creates a highly interactive and

sophisticated system that can efficiently interpret

brain signals to effectively control the robotic arm.

The proposed robotic system design involves the

following important steps:

1- EEG wave reading.

2- Transmission of the EEG waves to a

processing unit.

3- Analysis of the waves/signals.

4- Activation of the Robotic Arm for

movement.

The circuit diagram of the brain-controlled robotic arm, drawn with Lucidchart, is presented in Figure 3. A 12 V DC supply powers the system, which includes a Jetson Nano single-board computer and a Yahboom DOFBOT expansion board for controlling the robotic arm. The EEG sensor is connected to the CPU (laptop) via a Bluetooth module, enabling wireless communication. The machine learning phase runs on the CPU, and the resulting data is then sent to an Arduino UNO through an Ethernet cable. Serial communication between the Arduino and the Jetson Nano transmits real-time data. On the expansion board, each port can accommodate up to 6 cascaded motors; in this configuration, 6 motors are connected in series to one port. These connections provide power distribution, machine learning capabilities, robotic arm control, EEG data acquisition, and temperature regulation within the system.

Figure 3: Flow Chart of Process Flow of the Design.

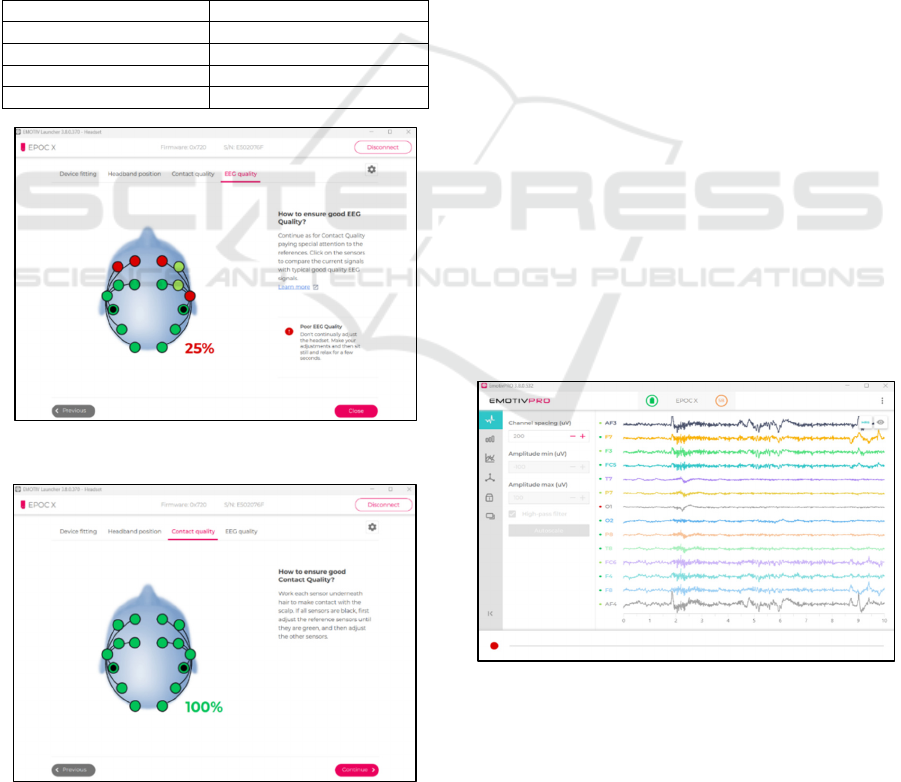

3.2 Flowchart

The flowchart in Figure 4 outlines the sequence of

actions and conditions necessary to achieve a specific

task or outcome in the robotic system. The process

starts with the acquisition of neurological signals

from the user’s brain using EEG. The system then

verifies whether these signals contain specific

frequency patterns, such as alpha and beta waves. If

these frequencies are detected, the signals proceed to

the signal processing block. Next, the system checks

if the robotic arm is successfully receiving the

categorized control signals generated from the

previous step. If the signals are received, they are

processed to control the movement of the robotic arm.

The system subsequently verifies whether the

robotic arm has reached its target position and

executed the intended movement as per the user’s

commands. Once the movement aligns with the

intended commands, the flowchart indicates the

successful completion of the process.
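The decision sequence of the flowchart can be sketched as a single control cycle. Every callable passed in below is a hypothetical placeholder for a real acquisition, classification, or actuation routine. The text does not describe what happens on the "no" branches, so this sketch assumes the cycle simply ends with a status message.

```python
def run_control_cycle(read_eeg, has_control_bands, classify,
                      send_to_arm, at_target):
    """Walk once through the flowchart decisions and report the outcome."""
    signal = read_eeg()                      # acquire EEG from the user
    if not has_control_bands(signal):        # alpha/beta patterns present?
        return "no alpha/beta activity detected"
    command = classify(signal)               # signal processing block
    if not send_to_arm(command):             # did the arm receive it?
        return "command not received by arm"
    if not at_target(command):               # movement completed as intended?
        return "target position not reached"
    return "completed: " + command


# Stub functions standing in for real hardware and model calls.
result = run_control_cycle(
    read_eeg=lambda: [0.0, 0.1, 0.0],
    has_control_bands=lambda signal: True,
    classify=lambda signal: "right",
    send_to_arm=lambda command: True,
    at_target=lambda command: True,
)
```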

Machine learning plays a significant role in this project by enabling users to control the arm using their brain signals. The machine learning model has several stages: preprocessing the data, feature extraction, and finally choosing an appropriate classification model. Because a large dataset is used, a feature selection technique was employed to reduce its dimensionality, and a filter between 8 and 30 Hz is used to keep the required frequencies. The Fast Fourier Transform (FFT) is used as the feature extraction method. Lastly, the Latent Dirichlet Allocation (LDA) method is used as the classification method.
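To make the classification stage concrete, the sketch below trains a small classifier on synthetic two-dimensional features. It rests on an important assumption: the text names Latent Dirichlet Allocation, but in EEG classification the abbreviation LDA usually refers to Linear Discriminant Analysis, and that is the technique implemented here. The class `SimpleLDA`, the synthetic data, and the command labels are illustrative only and are not the authors' implementation.

```python
import numpy as np


class SimpleLDA:
    """Linear Discriminant Analysis with a shared (pooled) covariance."""

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        n, d = X.shape
        self.means_ = np.array([X[y == c].mean(axis=0) for c in self.classes_])
        self.priors_ = np.array([(y == c).mean() for c in self.classes_])
        cov = np.zeros((d, d))
        for c, mu in zip(self.classes_, self.means_):
            diff = X[y == c] - mu
            cov += diff.T @ diff
        cov /= (n - len(self.classes_))
        cov += 1e-6 * np.eye(d)              # small ridge for stability
        self.cov_inv_ = np.linalg.inv(cov)
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        # Linear score per class: x' S^-1 mu - 0.5 mu' S^-1 mu + log(prior)
        proj = X @ self.cov_inv_ @ self.means_.T
        offset = 0.5 * np.sum(self.means_ @ self.cov_inv_ * self.means_, axis=1)
        scores = proj - offset + np.log(self.priors_)
        return self.classes_[np.argmax(scores, axis=1)]


# Synthetic, well-separated feature clusters (assumed, not recorded EEG).
rng = np.random.default_rng(0)
centers = {"left": (0.0, 0.0), "right": (5.0, 5.0), "drop": (0.0, 5.0)}
X_parts, y_train = [], []
for label, center in centers.items():
    X_parts.append(rng.normal(loc=center, scale=0.5, size=(20, 2)))
    y_train += [label] * 20
X_train = np.vstack(X_parts)

model = SimpleLDA().fit(X_train, y_train)
predicted = model.predict([[0.2, -0.1], [4.8, 5.3], [0.1, 4.7]])
```

In practice the feature vectors would be FFT-derived quantities from the filtered EEG channels rather than synthetic points.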

4 EXPERIMENTAL RESULTS

4.1 Test Bench Description

The proposed design involves utilizing Yahboom

DOFBOT Robotic Arm Kit, in combination with the

14-Channel EPOC X EEG Headset shown in Figures

5 and 6, respectively.

Figure 4: 6-DOF Yahboom DOFBOT Robotic Arm.

The system is controlled by Jetson Nano and

programmed using Python. Regarding machine

learning, the project employs a Latent Dirichlet

Allocation (LDA) method. Yahboom DOFBOT

Robotic Arm Kit provides a versatile and precise

robotic arm mechanism capable of performing

complex tasks.

Figure 5: The 14-Channel EEG Headset ‘EPOC X’.

In addition, EPOC X enables the system to

capture brain signals and interpret them as commands

or inputs for controlling the robotic arm. The Jetson

Nano and Arduino Uno act as the controller,

coordinating the communication and interaction

between the two main parts of our system: EEG brain

signal extraction and robotic arm movement control.

4.2 EEG Data Extraction Testing and

Results

The EmotivBCI application is designed to capture

and interpret brain signals. Detecting facial

expressions was the first testing task performed with the

EPOC X EEG headset; it has many practical

applications in accessibility technology,

neuromarketing, and psychological research. By

training the system to recognize specific facial

expressions, it becomes possible to map those

expressions to corresponding neural patterns. This

enables the creation of a responsive system that could,

for instance, help individuals with mobility


impairments communicate more effectively or

provide insights into a user's emotional response to

stimuli for biomedical research.

In this context, advanced brain-computer

interface (BCI) technology requires a high-quality

connection with the EEG sensor headset worn by the

user. The first brain signal extraction yields real-time

feedback on signal strength across multiple EEG

channels, ensuring the device is properly connected

and signals are accurately captured.

The EEG quality for each position is measured

and visually represented in the sensor map, as

illustrated in Figure 7. However, in order to enhance

the EEG quality, it is necessary to allow for a period

of relaxation. Table 1 lists the contact-quality

colors shown in the sensor map and their status.

Table 1: Colors and their Corresponding Status.

| Color | Status |
| --- | --- |
| Black | No contact detected |
| Red | Poor contact quality |
| Light Green | Average contact quality |
| Green | Good contact quality |

Figure 6: Contact quality 25%.

Figure 7: Contact quality 100%.

This calibration ensures that the BCI system can

distinguish between various states and respond

appropriately. The collected data underwent

processing, as depicted in Figure 9.

This figure shows a multichannel EEG signal readout

with varying amplitudes and frequencies across

different electrodes placed on the scalp. These

electrodes are labeled according to standard EEG

placement nomenclature such as AF3, F7, F3, etc.

The signals exhibit the brain's electrical activity, with

each line representing a different sensor position on

the EEG headset.

The application's interface allows the user to

adjust settings such as channel spacing and amplitude

to optimize the visualization of these brain waves.

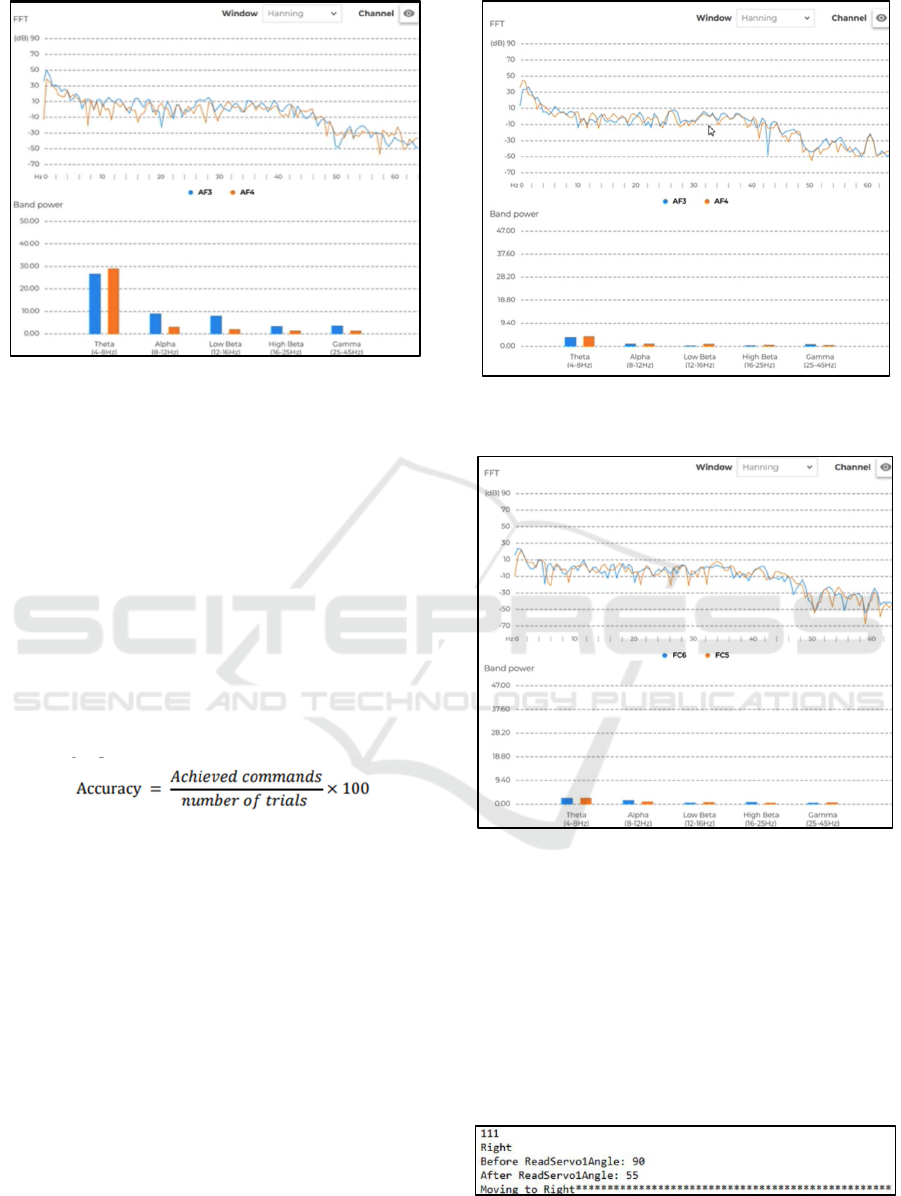

4.2.1 Extract Brain Signals (Alpha, Beta,

Theta)

To analyze the signals, the EmotivPRO software

was utilized. This software offers the capability to

visualize real-time signals while utilizing the EEG

headset sensor. Initially, an attempt was made to

detect the signal in a normal state using two

electrodes, which are AF3 and AF4. However, the

signals exhibited variability and did not demonstrate

a specific pattern, as illustrated in Figure 10.

To analyze the signals extracted from the "Right"

command, four electrodes were utilized: AF3, AF4,

FC5, and FC6. The signals displayed almost similar

patterns for alpha and beta waves, which are

associated with the mental state. However, the theta

waves vary since they are influenced by the emotional

state, as depicted in Figures 11 and 12.

Figure 8: Collected data.

To gather the data, each movement was tested ten

times, and the response time was recorded. The

response time varied across trials, due to various

factors, including user relaxation and other parameters.


Figure 9: Obtained AF3 and AF4 signals in Normal States.

4.2.2 Translate the Brain Signals to

Commands

The objective is to verify the accuracy of converting

the collected signal into commands using an Arduino.

The process involves a five-second duration to gather

the signal. The testing focuses on three movements:

Right, Drop, and Left. The signal is collected during

these movements, and the most common signal

pattern is identified. By repeating this process ten times for each movement, an average signal pattern for each movement is derived. The aim is to achieve an accuracy level of 70% in translating the signals into the corresponding commands. Using the following equation:

$$\text{Accuracy} = \frac{\text{number of correctly translated commands}}{\text{total number of trials}} \times 100\%$$

the accuracy of the overall device was calculated over all tested movements, giving an accuracy percentage of 76.67%.
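The reported percentage can be checked with a short calculation, assuming accuracy is the ratio of correctly translated commands to the total number of trials. Three movements tested ten times each give 30 trials. The count of 23 correct trials below is inferred because it reproduces 76.67%; it is not a figure stated in the text.

```python
def accuracy_percent(correct, total):
    """Accuracy as a percentage of correctly translated commands."""
    return 100.0 * correct / total


# 3 movements x 10 repetitions = 30 trials (from the text).
total_trials = 3 * 10
# Inferred count: 23 correct trials reproduces the reported value.
assumed_correct = 23

value = round(accuracy_percent(assumed_correct, total_trials), 2)
```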

It is important to note that the processing stage

involves filtering out noise, identifying characteristic

features of the EEG signals associated with each

expression, and using machine learning algorithms to

improve the recognition of these patterns over time.

Analyzing these waves requires filtering the raw EEG

data to focus on the frequencies of interest. The

software might apply band-pass filters to isolate the

frequency range associated with each type of brain

wave. For example, to analyze alpha waves, the

software would use a band-pass filter to isolate

frequencies between 8-12 Hz.
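The band-isolation step can be illustrated on a synthetic signal. The sketch below uses an idealized FFT mask rather than a designed filter, so it is not how the acquisition software works internally; practical pipelines typically use a filter such as a Butterworth design. The sampling rate, the test signal, and the function names are assumptions made for illustration.

```python
import numpy as np


def fft_band_pass(signal, fs, low_hz, high_hz):
    """Zero all FFT bins outside [low_hz, high_hz] and transform back."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0
    return np.fft.irfft(spectrum, n=len(signal))


def band_power(signal, fs, low_hz, high_hz):
    """Mean spectral power of the signal between low_hz and high_hz."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    mask = (freqs >= low_hz) & (freqs <= high_hz)
    return float(np.mean(np.abs(spectrum[mask]) ** 2))


fs = 128                                  # assumed sampling rate in Hz
t = np.arange(0, 5, 1.0 / fs)             # one five-second window
# Synthetic signal: a 10 Hz (alpha) component plus a weaker 40 Hz component.
raw = np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 40 * t)

alpha_only = fft_band_pass(raw, fs, 8, 12)
alpha_power = band_power(raw, fs, 8, 12)
gamma_power = band_power(raw, fs, 30, 45)
```

Band powers computed this way are one simple form of FFT-based feature that a classifier could consume.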

Figure 10: Obtained AF3 and AF4 signals for Right

movements.

Figure 11: Obtained FC5 and FC6 signals for Right

direction move.

4.2.3 Movement of the Robotic Arm

To ensure the reliability of the results, after the

training phase and obtaining brain commands, the

participant was instructed to continuously generate

the mental command of moving the arm to the right.

The data was collected every 5 seconds, and the

command with the highest occurrence was considered

the chosen command.
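Selecting the command with the highest occurrence in a window amounts to a majority vote. A minimal sketch follows, assuming the per-sample predictions for one 5-second window are already available as a list of labels. The function name and the example labels are illustrative.

```python
from collections import Counter


def chosen_command(window_predictions):
    """Return the most frequent command in one window, or None if empty."""
    if not window_predictions:
        return None
    # most_common(1) gives the label with the highest count; among equal
    # counts, the label seen first in the window is returned.
    command, _count = Counter(window_predictions).most_common(1)[0]
    return command


window = ["right", "neutral", "right", "left", "right"]
selected = chosen_command(window)
```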

Figure 12: Output of Serial Communication After Detecting

Right Movement.


As illustrated in Figure 13, a specific code such as

"111" is transmitted during serial communication,

which corresponds to the "right" mental command.

As a result, the arm will move to the right at a 35-degree

angle. The same procedure was applied for

the “drop” mental command.
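On the receiving side, the serial code can be mapped back to an action with a lookup table. In the sketch below, only the pairing of "111" with the "right" command and the 35-degree step come from the text. The table layout and function name are hypothetical, and codes for other commands are omitted because the text does not give them.

```python
# Code -> (command, rotation in degrees). Only this entry is from the text.
COMMAND_TABLE = {
    "111": ("right", 35),
}


def decode_serial_code(code):
    """Return (command, degrees) for a received code, or None if unknown."""
    # strip() removes the newline that usually ends a serial line.
    return COMMAND_TABLE.get(code.strip())


action = decode_serial_code("111\n")
```

Returning `None` for unknown codes lets the caller ignore corrupted or unexpected serial input instead of moving the arm.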

5 CONCLUSION

This paper highlights the development of a new design

for a Brain-driven Robotic Arm as an advanced

assistive device for individuals with upper limb

amputations. In fact, the convergence of advancements

in brain-computer interfaces (BCI) and robotics has

created a new era of enhanced precision and control for

robotic arms, addressing the pressing need for assistive

devices that offer greater independence and

functionality for individuals with disabilities. The

project aims to provide greater control and

functionality to enhance amputees' independence, by

utilizing brain-computer interface (BCI) technology

and electroencephalogram (EEG) signals. The

proposed experimental design uses the

6-DOF Yahboom DOFBOT Robotic Arm Kit in

combination with the 14-Channel EPOC X EEG

Headset. The system is controlled by a Jetson Nano and

programmed using Python, employing a Latent

Dirichlet Allocation (LDA) method for the artificial

intelligence task. Finally, as traditional devices, often

limited by their demand for substantial physical effort

and lack of versatility, fall short of meeting the daily

needs of these individuals, the development of the

proposed robotic arm emerges as a vital solution,

promising to revolutionize the support available to

individuals with severe motor disabilities, including

limb loss. Future work will focus on advancements in

feature extraction techniques for EEG signals to

enhance control accuracy. Specifically, exploring

advanced methods such as time-frequency analysis and

deep learning-based feature extraction holds

significant potential for improving the discrimination

of relevant brain activity patterns.

REFERENCES

Lemaire, E. D., Supan, T. J., & Ortiz, M. (2018). Global

standards for prosthetics and orthotics. In Canadian

Prosthetics & Orthotics Journal, 1(2).

Bharara, M., Mills, J. L., Suresh, K., Rilo, H. L., &

Armstrong, D. G. (2009). Diabetes and landmine‐

related amputations: a call to arms to save limbs. In

International Wound Journal, 6(1), 2.

Shedeed, H. A., Issa, M. F., & El-sayed, S. M. (2013). Brain

EEG signal processing for controlling a robotic arm. In

8th International Conference on Computer Engineering

& Systems (ICCES), pp. 152–157.

Aljedaani, L. T., Abdelhedi, F., Aldahasi, R. A., & Batheeb,

A. A. (2024, April). Design of a Brain Controlled

Robotic Arm: Initial Experimental Testing. In 2024

21st International Multi-Conference on Systems,

Signals & Devices (SSD) (pp. 209-215). IEEE.

Ramadan, R. A., & Vasilakos, A. V. (2017). Brain

computer interface: control signals review. In

Neurocomputing, vol. 223, pp. 26–44.

Zhou, Y., et al. (2023). Shared three-dimensional robotic

arm control based on asynchronous BCI and computer

vision. In IEEE Transactions on Neural Systems and

Rehabilitation Engineering.

Biasiucci, A., Franceschiello, B., & Murray, M. M. (2019).

Electroencephalography. In Curr. Biol., vol. 29, no. 3,

pp. R80–R85.

Khosla, A., Khandnor, P., & Chand, T. (2020). A

comparative analysis of signal processing and

classification methods for different applications based

on EEG signals. In Biocybern. Biomed. Eng., vol. 40,

no. 2, pp. 649–690.

Yip, D. W., & Lui, F. (2023). Physiology, Motor Cortical.

Rolander, A. (2023). Analyzing the Effects of Non-

Generative Augmentation on Automated Classification

of Brain Tumors.

Huang, Z., & Wang, M. (2021). A review of

electroencephalogram signal processing methods for

brain-controlled robots. In Cognitive Robotics, vol. 1,

pp. 111–124.

Orban, M., Elsamanty, M., Guo, K., Zhang, S., & Yang, H.

(2022). A review of brain activity and EEG-based

brain–computer interfaces for rehabilitation

application. In Bioengineering (Basel), vol. 9, no. 12, p.

768.

Cao, L., et al. (2021). A brain-actuated robotic arm system

using non-invasive hybrid brain–computer interface

and shared control strategy. In Journal of Neural

Engineering, 18(4), 046045.

Bousseta, R., El Ouakouak, I., Gharbi, M., & Regragui, F.

(2018). EEG based brain computer interface for

controlling a robot arm movement through thought. In

IRBM, vol. 39, no. 2, pp. 129–135.

Hayta, Ü., et al. (2022). Optimizing Motor Imagery

Parameters for Robotic Arm Control by Brain-

Computer Interface. In Brain Sciences, 12(7), 833.

Arshad, J., Qaisar, A., Rehman, A. U., Shakir, M., Nazir,

M. K., Rehman, A. U., & Hamam, H. (2022). Intelligent

Control of Robotic Arm Using Brain Computer

Interface and Artificial Intelligence. In Applied

Sciences, 12(21), 10813.

Mu, Y., Zhang, Q., Hu, M., Wang, W., Ding, M., Jin, J., &

Luo, P. (2024). Embodiedgpt: Vision-language pre-

training via embodied chain of thought. In Advances in

Neural Information Processing Systems, 36.

Yurova, V. A., Velikoborets, G., & Vladyko, A. (2022).

Design and implementation of an anthropomorphic

robotic arm prosthesis. In Technologies, 10(5), 103.
