GWNet: A Lightweight Model for Low-Light Image Enhancement Using Gamma Correction and Wavelet Transform

Ming-Yu Kuo and Sheng-De Wang

Department of Electrical Engineering, National Taiwan University, Taipei, Taiwan

Keywords:

Low-Light Image Enhancement, Light-Weighted Model, Gamma Correction, Wavelet Transform.

Abstract:

Low-light image enhancement is essential for improving visual quality in various applications. We introduce

GammaWaveletNet (GWNet), a novel approach composed of a gamma correction module and a wavelet network. The wavelet network is a sequential model consisting of an L subnetwork and an H subnetwork. Both subnetworks use a U-Net architecture with a Spatial Wavelet Interaction (SWI) component built from wavelet transforms and convolution layers. The L subnetwork handles low-frequency components, while the H subnetwork refines high-frequency details, effectively combining spatial and frequency domain information for

superior performance. Experimental results across datasets of different sizes demonstrate that GWNet achieves

performance on par with state-of-the-art methods in terms of Peak Signal-to-Noise Ratio and Structural Sim-

ilarity Index. Notably, the incorporation of wavelet transforms in GWNet leads to remarkable computational

efficiency, reducing GFLOPs by approximately 75% and parameters by 40%, highlighting its potential for

real-time applications on resource-constrained devices.

1 INTRODUCTION

Low-light image enhancement is a vital area of re-

search within computer vision and image processing.

Its importance is underscored by high-stakes applica-

tions such as surveillance, autonomous driving, med-

ical imaging (Ullah et al., 2020), and photography.

Images captured in such conditions often suffer from

noise, low contrast, and detail loss, which can impair

system performance and lead to significant risks in

critical applications.

The primary challenges in this field stem from the

computational complexity and the need for efficient

algorithms. With increasing demand for lightweight

models suitable for mobile and embedded devices, de-

veloping methods that optimize both performance and

resource consumption is essential.

Conventional approaches (Wang et al., 2020b)

such as gray-level transformation, histogram equal-

ization (Banik et al., 2018), Retinex theory (Guo

et al., 2016), and frequency domain methods aim to

improve low-light images through direct image pro-

cessing. However, these techniques often struggle

with high computational costs, manual parameter tun-

ing, and noise amplification.

Recent advancements in machine learning, espe-

cially Retinex-based models (Chen Wei, 2018; Zhang

et al., 2022), enhance low-light images by refin-

ing illumination maps using histogram techniques.

These methods address limitations of traditional ap-

proaches by leveraging the Retinex decomposition of

reflectance and illumination components to improve

visibility while maintaining color fidelity.

CNN-based models (Hussain et al., 2024; Wang

and Zhang, 2024), utilizing advanced feature ex-

traction, skip connections, and contextual aggrega-

tion, effectively mitigate noise and enhance con-

trast. Although they provide significant improve-

ments, CNN-based models require extensive labeled

datasets and high computational resources. Diffu-

sion models (Jiang et al., 2023), though powerful

in capturing global information and denoising, face

challenges related to computational costs and detail

preservation, limiting their practical deployment in

resource-constrained environments.

Methods based on Fourier (Wang et al., 2023a;

Huang et al., 2022) and wavelet transforms (Xu et al.,

2022) apply frequency domain techniques to adjust

image structure and brightness. While effective, these

approaches can be computationally intensive and less

adaptable to real-time applications.

We introduce GammaWaveletNet (GWNet),

which integrates gamma correction, wavelet trans-

forms, and CNN layers within a U-Net framework.


Kuo, M.-Y. and Wang, S.-D.

GWNet: A Lightweight Model for Low-Light Image Enhancement Using Gamma Correction and Wavelet Transform.

DOI: 10.5220/0013148800003890

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 2, pages 264-274

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

This method aims to enhance image quality while re-

ducing network complexity, outperforming traditional

methods in both efficiency and performance.

Our contributions to low-light image enhancement

are as follows:

• Wavelet and Spatial Fusion. Combining wavelet

transforms with spatial domain data, our model

preserves image details and minimizes artifacts.

• Efficiency. We reduce computational costs

(FLOPs) and parameters, making the model suit-

able for deployment on devices with limited re-

sources.

• Scalability. Our model demonstrates consistent

performance across datasets of varying sizes, en-

suring versatility in low-light image enhancement

tasks.

2 RELATED WORK

In the related work section, we review gamma correc-

tion and wavelet-based enhancement methods, along

with a Fourier-based model.

2.1 Gamma Correction

Gamma correction is a non-linear technique for ad-

justing image brightness. While it is widely used for

brightness enhancement, selecting fitting parameters

remains challenging, as improper gamma values can

intensify noise in low-light regions and degrade image

quality. To address this, several algorithms (Rahman

et al., 2016; Cao et al., 2018) have been proposed for

optimizing parameter selection.

The gamma correction formula (Huang et al., 2013) is given in Eq. 1, where $I_{out}$ is the output image, $I_{in}$ is the input image, and $c$ and $\gamma$ control the transformation curve:

$$I_{out} = c \cdot I_{in}^{\gamma} \tag{1}$$
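As a minimal illustration of Eq. 1, the sketch below applies gamma correction to an image whose intensities are assumed to be normalized to [0, 1]. The function name `gamma_correct` and the clipping step are assumptions made for this example, not part of the original method.

```python
import numpy as np

def gamma_correct(img, gamma, c=1.0):
    """Apply I_out = c * I_in ** gamma to an image scaled to [0, 1]."""
    img = np.clip(np.asarray(img, dtype=np.float64), 0.0, 1.0)
    # Clip the result so the output stays a valid normalized image.
    return np.clip(c * np.power(img, gamma), 0.0, 1.0)

# A tiny "dark" image; gamma < 1 lifts dark pixels more than bright ones.
dark = np.array([[0.04, 0.25], [0.5, 1.0]])
brighter = gamma_correct(dark, gamma=0.5)
```

With `gamma=0.5` the darkest pixel rises from 0.04 to 0.2 while a pixel at 1.0 is unchanged, which is the behavior low-light enhancement relies on.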

Traditionally, gamma correction is applied as a

preprocessing step (Senthilkumar and Kamarasan,

2020). In contrast, our method integrates gamma cor-

rection into the training process, as done in (Wang

et al., 2023b), allowing dynamic adjustment of lumi-

nance. This integration enables more precise and ef-

fective image enhancement during model training.

2.2 Wavelet Transform

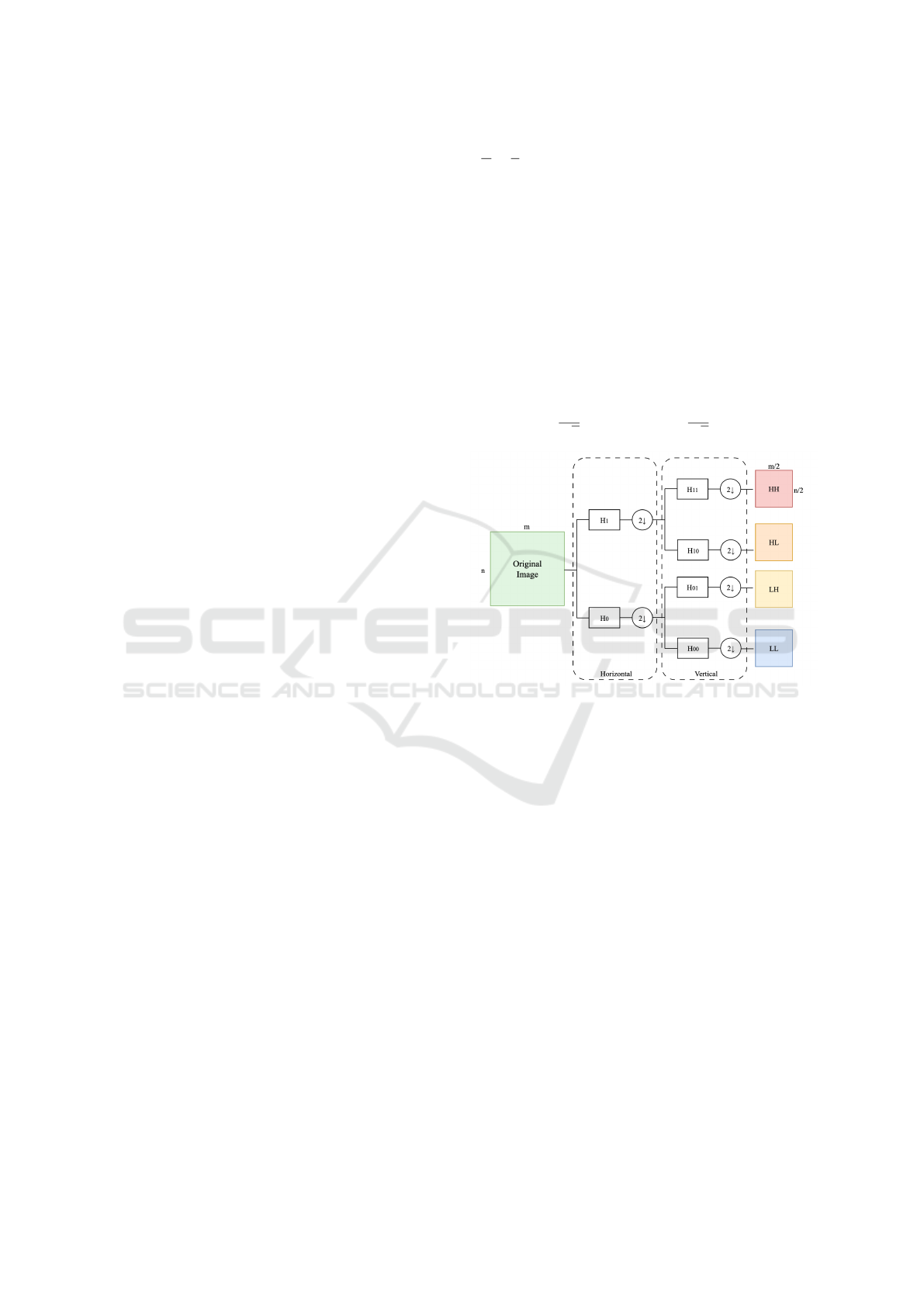

The 2D Discrete Wavelet Transform (2D-DWT), illustrated in Fig. 1, decomposes an image of dimensions $M \times N$ into four subbands, each with dimensions $\frac{M}{2} \times \frac{N}{2}$. The Low-Low (LL) subband captures the image's average information, while the Low-High (LH), High-Low (HL), and High-High (HH) subbands capture horizontal, vertical, and diagonal details, respectively (Othman and Zeebaree, 2020). We refer to the LH, HL, and HH subbands as the high-frequency subbands, collectively denoted as YH. The wavelet transform is applied first along the rows and then along the columns, creating a hierarchical decomposition. In our work, we employ 2D-DWT (Ding, 2009; Mallat, 1999) with the Haar wavelet (Guf and Jiang, 1996), where H in Fig. 1 represents the Haar matrix. Eq. 2 gives the Haar filters for a 1D signal of length 2, which are used for the filtering operations.

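$$H_0 = \frac{1}{\sqrt{2}} \begin{bmatrix} 1 & 1 \end{bmatrix}, \quad H_1 = \frac{1}{\sqrt{2}} \begin{bmatrix} 1 & -1 \end{bmatrix} \tag{2}$$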

Figure 1: 2D Discrete Wavelet Transform Decomposition

Tree (Weeks and Bayoumi, 2003): The wavelet transform

divides the image into smaller frequency subband images,

enabling more precise adjustments for different frequency

features.
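The row-then-column decomposition with the Haar filters of Eq. 2 can be sketched as follows. This is an illustrative NumPy version for a single-channel image with even height and width; the function names are hypothetical, and the assignment of the LH and HL labels follows one common convention (other libraries may swap them).

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(x):
    """Single-level 2D Haar DWT; returns LL and YH = (LH, HL, HH)."""
    x = np.asarray(x, dtype=np.float64)
    # Row step: low-pass (H0) and high-pass (H1) over adjacent column pairs.
    lo = (x[:, 0::2] + x[:, 1::2]) / SQRT2
    hi = (x[:, 0::2] - x[:, 1::2]) / SQRT2
    # Column step: the same filters over adjacent row pairs.
    ll = (lo[0::2, :] + lo[1::2, :]) / SQRT2
    lh = (lo[0::2, :] - lo[1::2, :]) / SQRT2
    hl = (hi[0::2, :] + hi[1::2, :]) / SQRT2
    hh = (hi[0::2, :] - hi[1::2, :]) / SQRT2
    return ll, (lh, hl, hh)

def haar_idwt2(ll, yh):
    """Inverse of haar_dwt2: undo the column step, then the row step."""
    lh, hl, hh = yh
    h, w = ll.shape
    lo = np.empty((2 * h, w))
    hi = np.empty((2 * h, w))
    lo[0::2, :], lo[1::2, :] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    hi[0::2, :], hi[1::2, :] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    out = np.empty((2 * h, 2 * w))
    out[:, 0::2], out[:, 1::2] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return out

img = np.arange(16, dtype=np.float64).reshape(4, 4)
ll, yh = haar_dwt2(img)          # each subband has shape (2, 2)
restored = haar_idwt2(ll, yh)    # matches img up to rounding
```

Because the Haar filters are orthonormal, the inverse transform reconstructs the input exactly, which is what allows a network to edit subbands and then return to the spatial domain.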

2.3 Fourier-Based Model

As noted in (Xu et al., 2021), the amplitude com-

ponent in the Fourier domain represents an image’s

brightness, crucial for exposure correction, while the

phase component captures structural details and is

less affected by brightness changes. The Fourier

transform-based low-light image recovery process

uses the Fast Fourier Transform (FFT) to convert low-

light and normal-light images into the frequency do-

main. By transferring the amplitude from the normal-

light image to the low-light image and combining it

with the phase of the low-light image, followed by an

inverse FFT, effective relighting is achieved. FECNet

(Huang et al., 2022) introduces a two-step process: an

amplitude subnetwork enhances the low-light image’s

amplitude, which is then merged with the original

low-light amplitude and processed through a phase

subnetwork to refine image quality. In this study,


we adapt the U-Net architecture of FECNet's amplitude subnetwork, incorporating a spatial-frequency interaction block that uses the wavelet transform to better integrate spatial and frequency domain representations.
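The amplitude transfer described in this section can be sketched in a few lines. This is an illustrative NumPy version for single-channel images, not the implementation of FECNet or GWNet; the function name `transfer_amplitude` is hypothetical.

```python
import numpy as np

def transfer_amplitude(low, normal):
    """Combine the Fourier amplitude of `normal` with the phase of `low`."""
    f_low = np.fft.fft2(low)
    f_normal = np.fft.fft2(normal)
    # Amplitude carries brightness; phase carries structure.
    mixed = np.abs(f_normal) * np.exp(1j * np.angle(f_low))
    # The imaginary part is discarded after the inverse transform.
    return np.real(np.fft.ifft2(mixed))

rng = np.random.default_rng(0)
low = rng.random((8, 8)) * 0.2
normal = 4.0 * low               # same scene, four times brighter
relit = transfer_amplitude(low, normal)
```

In this toy case the two images differ only by a positive scale factor, so their phases agree and the relit result equals the brighter image.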

3 PROPOSED METHOD

This study introduces GammaWaveletNet (GWNet),

a novel method combining gamma correction,

wavelet transforms, and spatial image processing to

enhance structural details and luminance in low-light

images.

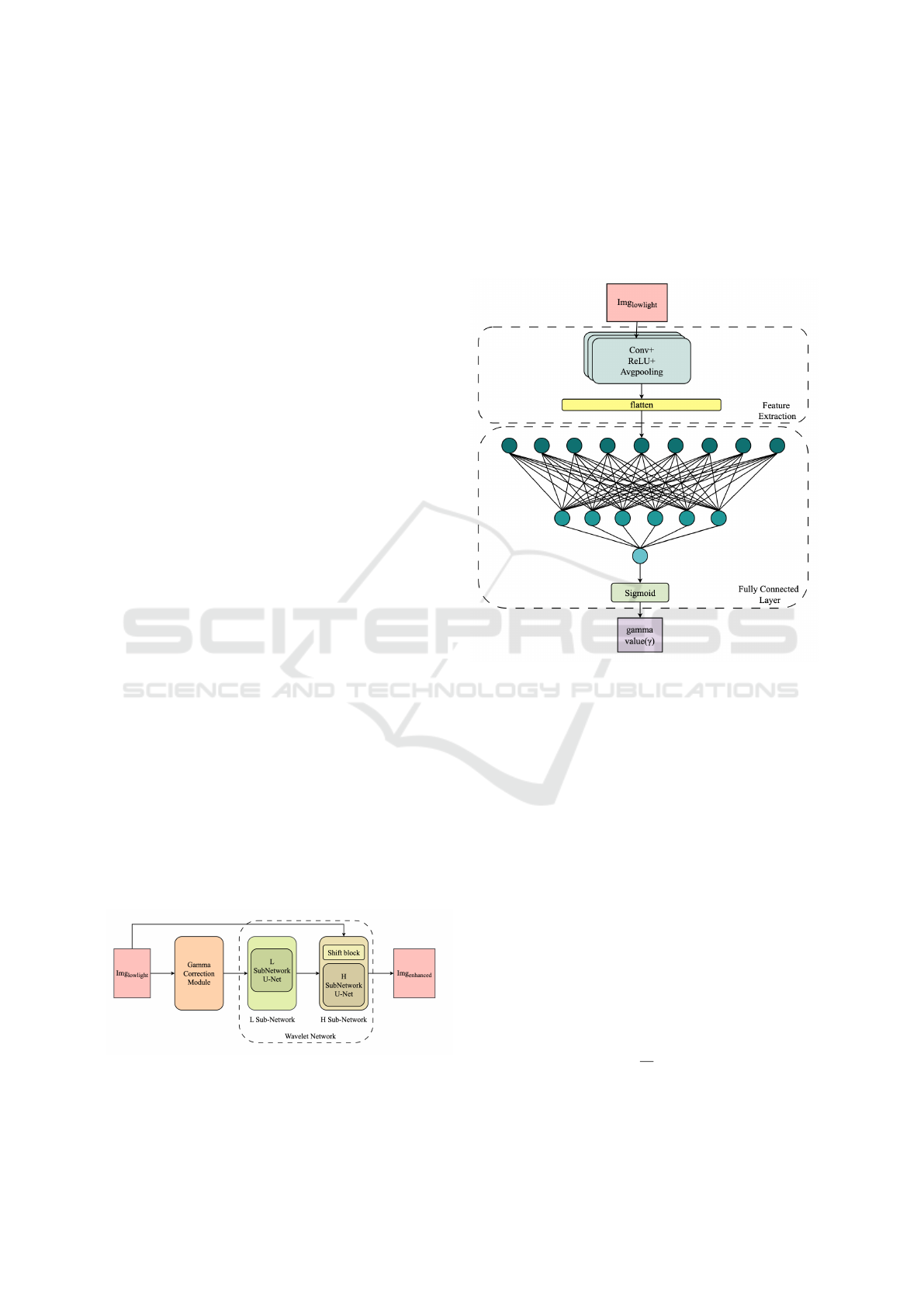

GWNet follows a sequential architecture for low-

light image enhancement, as outlined in Fig. 2. Ini-

tially, gamma correction improves visibility in dark

regions by adjusting luminance, with the gamma

value estimated through CNN layers in Section 3.1.

GWNet then applies two subnetworks, described in Section 3.2, to further improve the gamma-corrected image. The L subnetwork processes the image first, enhancing low-frequency components via denoising and brightness adjustment. This output is passed to the H

subnetwork, which refines high-frequency details by

merging high-frequency components from the origi-

nal low-light image with the enhanced low-frequency

output in the shift block, as shown in Fig. 6. Both

subnetworks employ a U-Net architecture, facilitating

efficient feature extraction, denoising, and enhance-

ment, resulting in clearer images across diverse light-

ing conditions.

Unlike traditional methods that only operate on

wavelet sub-images (Ji and Jung, 2021), GWNet in-

tegrates spatial image data with wavelet-based net-

works, leveraging spatial and frequency domain infor-

mation. This dual-domain approach effectively cap-

tures and enhances image features, leading to superior

image quality.

Figure 2: GWNet Model Structure: In GWNet, a sequential

approach is used to enhance low-light images, and it is di-

vided into gamma correction module and wavelet network.

3.1 Gamma Correction Module

Gamma correction is applied to adjust image bright-

ness, enhancing visibility in dark regions and improv-

ing visual quality under poor lighting conditions. It

modifies pixel intensity values to make dark areas

more visible without oversaturating brighter regions.

Figure 3: Gamma Correction Module: The gamma correc-

tion module utilizes feature extraction and fully connected

layers to optimize image brightness enhancement.

We use a CNN structure, as illustrated in Fig. 3, to estimate the gamma correction value ($\gamma$) for each image. This adjustment brings the image brightness to a suitable level, allowing the subsequent wavelet network to further enhance and refine the image details. In standard CNN architectures (Sakib et al., 2019; Bhatt et al., 2021), max pooling is often employed to select the maximum value within a pooling window, as defined in Eq. 3. Average pooling, defined in Eq. 4, computes the average value in a pooling window. In both equations, $x_{i,j}$ represents a 2D input, and pooling occurs over a window of size $k \times k$ (Yu et al., 2014). To improve stability and performance, we replace max pooling layers with average pooling.

$$\mathrm{MaxPool}(x)_{i,j} = \max_{0 \le m < k} \, \max_{0 \le n < k} \{ x_{i+m,\, j+n} \} \tag{3}$$

$$\mathrm{AvgPool}(x)_{i,j} = \frac{1}{k^2} \sum_{m=0}^{k-1} \sum_{n=0}^{k-1} x_{i+m,\, j+n} \tag{4}$$
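The two pooling operators in Eq. 3 and Eq. 4 can be compared directly on a small input. The sketch below assumes non-overlapping windows (stride equal to k), which the equations themselves do not specify; `pool2d` is a hypothetical helper name.

```python
import numpy as np

def pool2d(x, k, mode="avg"):
    """Non-overlapping k x k pooling (stride k) over a 2D array."""
    x = np.asarray(x, dtype=np.float64)
    h, w = x.shape
    x = x[: h - h % k, : w - w % k]             # drop any ragged border
    # windows[i, m, j, n] == x[i * k + m, j * k + n]
    windows = x.reshape(h // k, k, w // k, k)
    if mode == "max":
        return windows.max(axis=(1, 3))
    return windows.mean(axis=(1, 3))

x = np.arange(1, 17).reshape(4, 4)
max_out = pool2d(x, 2, mode="max")   # keeps only the largest value per window
avg_out = pool2d(x, 2, mode="avg")   # summarizes every value in the window
```

Average pooling uses all pixels in each window, so a single noisy outlier has less influence than under max pooling; this is one plausible reading of the stability benefit mentioned above.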

For low-light images, the CNN extracts features

via convolutional layers, followed by ReLU activation


(Agarap, 2018) (Eq. 5) and average pooling. The ex-

tracted features are then processed through fully con-

nected layers, with a sigmoid function constraining

the predicted gamma value to an appropriate range.

This gamma value is applied to the input image using Eq. 1, resulting in the output $Img_{gamma}$.

$$\mathrm{ReLU}(x) = \begin{cases} x & \text{if } x \ge 0 \\ 0 & \text{if } x < 0 \end{cases} \tag{5}$$

3.2 Wavelet Network

In GWNet, two specialized subnetworks process dif-

ferent frequency components following wavelet de-

composition: the Low-frequency (L) subnetwork in

Section 3.2.1 and the High-frequency (H) subnetwork

in Section 3.2.2.

The L subnetwork focuses on denoising and en-

hancing the brightness of the low-frequency compo-

nents, specifically targeting the LL sub-image gener-

ated after gamma correction. This network effectively

reduces noise and enhances clarity in low-frequency

regions. The H subnetwork processes high-frequency

components by combining the high-frequency details

from the original low-light image with the output

of the L subnetwork, preserving critical information

such as edges and textures.

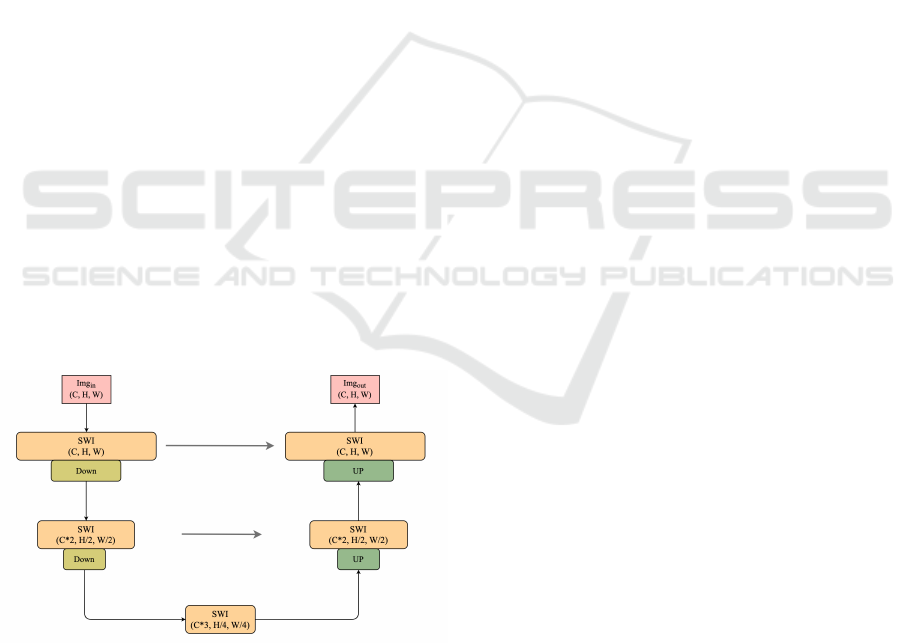

Both subnetworks employ a U-Net architecture, as

shown in Fig. 4, inspired by (Huang et al., 2022). The

U-Net’s encoder-decoder framework (Ronneberger

et al., 2015), along with the Spatial Wavelet Interac-

tion (SWI) block in Section 3.2.3, facilitates feature

extraction, denoising, and detail preservation, making

it ideal for our method.

Figure 4: Wavelet subnetwork Structure: Both subnetworks

use a U-Net architecture combined with the SWI block to

enhance image quality.

In Fig. 4, C, H, and W represent the number of

channels, height, and width of the feature maps. In the

encoder path, downsampling layers capture increas-

ingly abstract features. In the decoder path, upsam-

pling reconstructs the image to its original resolution,

with feature maps concatenated along the channel di-

mension with corresponding maps from the encoder.

3.2.1 L Subnetwork

The L subnetwork receives the gamma-corrected image $Img_{gamma}$ as input, with the primary goals of denoising and enhancing brightness, particularly focusing on the low-frequency components in the LL sub-image.

As shown in Fig. 4, the L subnetwork is effective for image-to-image translation tasks. It denoises $Img_{gamma}$ and continuously enhances brightness using a series of convolutional layers integrated with SWI blocks, as shown in Fig. 5. The SWI blocks

in the L subnetwork specifically target the wavelet-

transformed LL sub-image, which is essential for pre-

serving the image’s overall structure.

3.2.2 H Subnetwork

The H subnetwork processes the high-frequency sub-

bands, specifically enhancing details and textures cap-

tured in the LH, HL, and HH sub-images of the

wavelet transform. These components are essential

for preserving image sharpness and fine details.

While similar to the L subnetwork, the H subnetwork incorporates a unique shift block utilizing the wavelet transform, as shown in Fig. 6. Although the shift function is typically used for image relighting, applying it directly can hinder integration between low- and high-frequency sub-images. To address this, the output $Img_{shift}$ is processed through a U-Net block (Fig. 4), which reduces noise in the high-frequency subbands and ensures better integration of the wavelet transform sub-images.
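One way to read the shift block is as a subband swap: take the LL subband from the L subnetwork output, take the high-frequency subbands from the original low-light image, and reconstruct with the inverse transform. The sketch below follows that reading with a single-level Haar transform on single-channel images; it is an assumption-laden illustration, and all function names are hypothetical.

```python
import numpy as np

S = np.sqrt(2.0)

def haar_dwt2(x):
    """Single-level 2D Haar DWT; returns LL and YH = (LH, HL, HH)."""
    lo, hi = (x[:, 0::2] + x[:, 1::2]) / S, (x[:, 0::2] - x[:, 1::2]) / S
    ll, lh = (lo[0::2] + lo[1::2]) / S, (lo[0::2] - lo[1::2]) / S
    hl, hh = (hi[0::2] + hi[1::2]) / S, (hi[0::2] - hi[1::2]) / S
    return ll, (lh, hl, hh)

def haar_idwt2(ll, yh):
    """Inverse of haar_dwt2."""
    lh, hl, hh = yh
    h, w = ll.shape
    lo, hi = np.empty((2 * h, w)), np.empty((2 * h, w))
    lo[0::2], lo[1::2] = (ll + lh) / S, (ll - lh) / S
    hi[0::2], hi[1::2] = (hl + hh) / S, (hl - hh) / S
    out = np.empty((2 * h, 2 * w))
    out[:, 0::2], out[:, 1::2] = (lo + hi) / S, (lo - hi) / S
    return out

def shift_block(img_lout, img_low):
    """LL from the enhanced image, YH from the low-light image."""
    ll_enhanced, _ = haar_dwt2(img_lout)
    _, yh_low = haar_dwt2(img_low)
    return haar_idwt2(ll_enhanced, yh_low)

rng = np.random.default_rng(0)
img_low = rng.random((4, 6)) * 0.2          # stand-in low-light image
img_lout = np.sqrt(img_low)                 # stand-in for the L subnetwork output
img_shift = shift_block(img_lout, img_low)
```

The result inherits its brightness from the enhanced LL subband but its edges from the dark input, which illustrates why a further U-Net stage is useful for reconciling the two.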

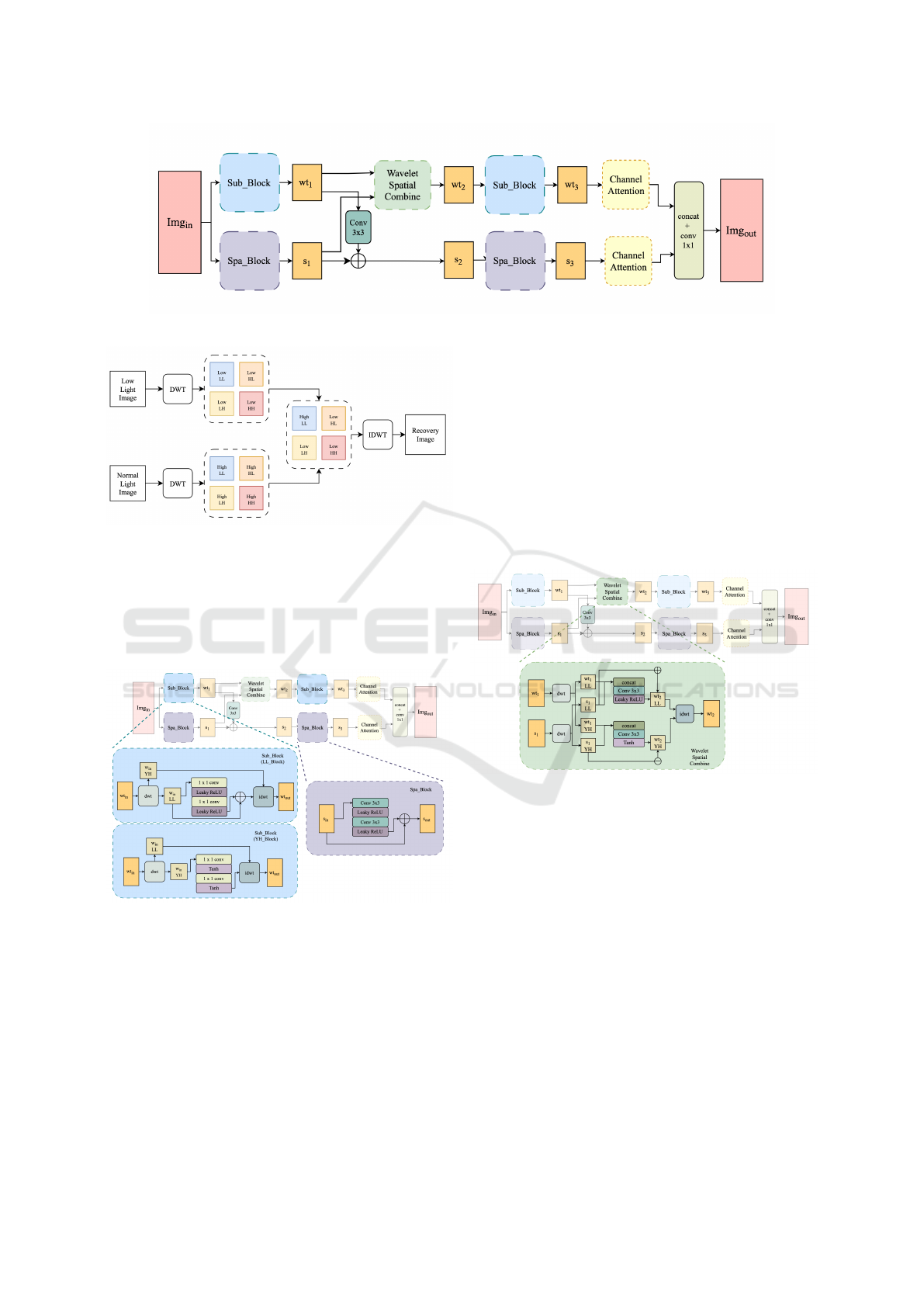

3.2.3 Spatial Wavelet Interaction (SWI)

The SWI block structure, shown in Fig. 5, is a cru-

cial component in both the L and H subnetworks.

It merges spatial and frequency domain information,

improving input image quality. Though both subnet-

works employ the SWI block, they have specific im-

plementations to suit their respective tasks, as shown

in Fig. 7 for frequency sub-images and spatial images.

The spatial processing is consistent across both sub-

networks, ensuring uniformity. The outputs from the

SWI blocks are further processed to enhance image

quality, focusing on brightness and denoising in the

L subnetwork and texture refinement in the H subnet-

work.

The SWI block processes and enhances the sub-

images obtained from the wavelet transform by com-

bining spatial features with frequency components.


Figure 5: Spatial Wavelet Interaction Structure (SWI): Both subnetworks utilize SWI blocks to process different frequency

subband images and the spatial image.

Figure 6: Wavelet Transform-Based Low-Light Image

Recovery Process: This figure illustrates the wavelet

transform-based recovery process, which serves as the shift

block in our model.

This ensures comprehensive enhancement, with tai-

lored handling of both low- and high-frequency de-

tails.

Figure 7: SWI Frequency Sub-Block for Different sub-

networks and Spa-Block: The Frequency Sub-Block dif-

fers between the two subnetworks, tailored to low- or high-

frequency subband images, while the Spa-Block processes

spatial images.

In the L subnetwork, the SWI block processes the LL sub-image, which captures smooth, continuous features. The Leaky ReLU activation (Xu et al.,

2015) is used to maintain structural information with-

out harsh transitions, particularly useful for the LL

subband.

In contrast, the SWI block in the H subnetwork

handles the high-frequency subbands (LH, HL, HH),

using the Tanh activation function (Mertens et al.,

2004) to suppress noise while preserving fine details.

The SWI block combines the wavelet sub-image

and spatial image, as illustrated in Fig. 8. After

applying the Discrete Wavelet Transform (DWT) to

both, the low-frequency subbands are enhanced using

Leaky ReLU, and high-frequency subbands are pro-

cessed with Tanh to reduce noise and enhance bright-

ness. The final image is reconstructed using the In-

verse DWT (IDWT).
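The choice of activation per subband can be illustrated on raw Haar coefficients. For an image normalized to [0, 1], each LL coefficient is half the sum of four pixels and therefore lies in [0, 2], while high-frequency coefficients are centered on zero. The sketch below applies both activations to such values; the negative slope of 0.01 is an assumed default, and in the actual network the activations act on learned features rather than raw coefficients.

```python
import numpy as np

def leaky_relu(x, slope=0.01):
    """Identity for x >= 0, small linear slope for x < 0."""
    return np.where(x >= 0, x, slope * x)

ll_values = np.array([0.2, 1.0, 2.0])      # possible LL coefficients
yh_values = np.array([-0.6, 0.0, 0.05])    # possible LH/HL/HH coefficients

ll_leaky = leaky_relu(ll_values)           # range preserved
ll_tanh = np.tanh(ll_values)               # squashed below 1
yh_tanh = np.tanh(yh_values)               # bounded, sign preserved
```

Tanh compresses the bright LL values toward 1, while Leaky ReLU leaves them unchanged; for the zero-centered high-frequency values, tanh keeps the sign and bounds large responses.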

Figure 8: SWI Structure for Combining Frequency and Spa-

tial Image: This block combines frequency and spatial im-

ages by utilizing wavelet subband images, tailored to differ-

ent frequency characteristics.

The SWI block also includes a channel attention mechanism, shown in Fig. 5, inspired by the Convolutional Block Attention Module (CBAM) (Woo et al., 2018) and Efficient Channel Attention (ECA) (Wang et al., 2020a). Both max and average pooling (Eq. 3 and Eq. 4) capture channel-wise statistics, followed by lightweight convolutional layers and sigmoid activation, improving feature selection for enhanced low-light image quality.
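A channel attention step of this kind can be sketched as follows. This is a simplified illustration that pools each channel globally, applies one shared 1D convolution across the channel axis, and gates the features with a sigmoid; the kernel values and the function name are hypothetical, and the exact layer layout in GWNet may differ.

```python
import numpy as np

def channel_attention(feat, kernel):
    """feat: (C, H, W) features; kernel: odd-length 1D filter, len <= C."""
    avg_stat = feat.mean(axis=(1, 2))        # average pooling per channel
    max_stat = feat.max(axis=(1, 2))         # max pooling per channel
    # Shared lightweight 1D convolution over neighboring channels.
    def conv(v):
        return np.convolve(v, kernel, mode="same")
    scores = conv(avg_stat) + conv(max_stat)
    weights = 1.0 / (1.0 + np.exp(-scores))  # sigmoid gate in (0, 1)
    return feat * weights[:, None, None], weights

rng = np.random.default_rng(0)
feat = rng.random((4, 8, 8))
kernel = np.array([0.25, 0.5, 0.25])         # hypothetical learned weights
attended, weights = channel_attention(feat, kernel)
```

Each channel is rescaled by a single weight, so the mechanism adds very few parameters, which fits the lightweight design goal.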

3.3 Loss Function

In our approach, we utilize multiple loss functions,

including L1 loss, MSE loss (Janocha and Czarnecki,


2017), and SSIM loss (Nilsson and Akenine-Möller,

2020), to ensure effective training. Additionally, we

incorporate the wavelet transform sub-images into the

loss function to enhance the training process.

L1 loss (Eq. 6) calculates the average absolute

differences between predicted and target values, while

MSE loss (Eq. 7) estimates the mean squared error.

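$$\text{L1 Loss} = \frac{1}{MN} \sum_{m=1}^{M} \sum_{n=1}^{N} \left| t_{m,n} - p_{m,n} \right| \tag{6}$$

$$\text{MSE Loss} = \frac{1}{MN} \sum_{m=1}^{M} \sum_{n=1}^{N} \left( t_{m,n} - p_{m,n} \right)^2 \tag{7}$$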

In these equations, t represents target images, and

p represents predicted images, with dimensions M ×

N.

SSIM loss measures the perceptual difference be-

tween the target and predicted images by consider-

ing structural information, luminance, and contrast.

SSIM (Eq. 8) ranges from -1 to 1, and the SSIM loss is computed using Eq. 9. In Eq. 8, $\mu_t$ and $\mu_p$ represent the mean intensities, $\sigma_t^2$ and $\sigma_p^2$ indicate the variances, and $\sigma_{tp}$ refers to the covariance. Constants $c_1$ and $c_2$ are included to stabilize the division process.

$$\mathrm{SSIM}(t, p) = \frac{(2\mu_t \mu_p + c_1)(2\sigma_{tp} + c_2)}{(\mu_t^2 + \mu_p^2 + c_1)(\sigma_t^2 + \sigma_p^2 + c_2)} \tag{8}$$

$$\text{SSIM Loss} = 1 - \mathrm{SSIM}(t, p) \tag{9}$$
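The three base losses in Eq. 6 through Eq. 9 can be written compactly. The sketch below computes SSIM from global image statistics for brevity, whereas practical SSIM implementations usually average over local windows; the constants follow the common choice $c_1 = 0.01^2$ and $c_2 = 0.03^2$ for images in [0, 1], which is an assumption here since the paper does not list them.

```python
import numpy as np

def l1_loss(t, p):
    """Eq. 6: mean absolute difference."""
    return np.mean(np.abs(t - p))

def mse_loss(t, p):
    """Eq. 7: mean squared difference."""
    return np.mean((t - p) ** 2)

def ssim_global(t, p, c1=0.01 ** 2, c2=0.03 ** 2):
    """Eq. 8 evaluated once over the whole image (no sliding window)."""
    mu_t, mu_p = t.mean(), p.mean()
    var_t, var_p = t.var(), p.var()
    cov_tp = np.mean((t - mu_t) * (p - mu_p))
    num = (2 * mu_t * mu_p + c1) * (2 * cov_tp + c2)
    den = (mu_t ** 2 + mu_p ** 2 + c1) * (var_t + var_p + c2)
    return num / den

def ssim_loss(t, p):
    """Eq. 9."""
    return 1.0 - ssim_global(t, p)

target = np.array([[0.0, 1.0], [1.0, 0.0]])
pred = np.zeros((2, 2))
```

For this pair, half of the pixels are off by exactly 1, so both the L1 and MSE losses equal 0.5, while identical images give an SSIM loss of 0.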

Inspired by (Kim and Cho, 2023), we propose a

loss function that leverages wavelet sub-images. Each

subnetwork output is paired with a dedicated loss:

the LL Loss in Algorithm 1 and the YH Loss in

Algorithm 2. The LL Loss employs a combination

of two loss functions on the low-frequency subband,

ensuring both accurate reconstruction and enhance-

ment of critical low-frequency features. In contrast,

the YH Loss targets the high-frequency subbands.

Since accurate reconstruction of small-scale features

is essential, we use only the MSE loss, thereby sim-

plifying the optimization process. Together, these

loss functions effectively refine both low- and high-

frequency subbands, preserving detailed information

across multiple scales.

The total loss function is defined as:

$$\text{Total Loss} = \alpha \cdot \text{LL Loss} + \beta \cdot \text{YH Loss} + \delta \cdot \text{SSIM Loss} \tag{10}$$

where α, β, and δ are weights that balance the contri-

bution of each loss term.

Input: L subnetwork output $Img_{Lout}$, target image $Img_{target}$
Output: LL Loss
Step 1: Apply 2D wavelet transform to obtain LL sub-images
  $LL_{Lout}$ = 2D-DWT($Img_{Lout}$)
  $LL_{target}$ = 2D-DWT($Img_{target}$)
Step 2: Calculate L1 and MSE Loss
  L1 Loss = L1 Loss($LL_{Lout}$, $LL_{target}$)
  MSE Loss = MSE Loss($LL_{Lout}$, $LL_{target}$)
Step 3: Combine Losses
  LL Loss = L1 Loss + MSE Loss

Algorithm 1: LL Loss Calculation: The loss function for low-frequency subband image.

Input: H subnetwork output $Img_{Hout}$, target image $Img_{target}$
Output: YH Loss
Step 1: Apply 2D wavelet transform to obtain LH, HL, HH sub-images
  $LH_{target}$, $HL_{target}$, $HH_{target}$ = 2D-DWT($Img_{target}$)
  $LH_{Hout}$, $HL_{Hout}$, $HH_{Hout}$ = 2D-DWT($Img_{Hout}$)
Step 2: Calculate MSE Loss for each sub-image
  MSE Loss$_{LH}$ = MSE Loss($LH_{Hout}$, $LH_{target}$)
  MSE Loss$_{HL}$ = MSE Loss($HL_{Hout}$, $HL_{target}$)
  MSE Loss$_{HH}$ = MSE Loss($HH_{Hout}$, $HH_{target}$)
Step 3: Combine MSE Losses
  YH Loss = MSE Loss$_{LH}$ + MSE Loss$_{HL}$ + MSE Loss$_{HH}$

Algorithm 2: YH Loss Calculation: The loss function for high-frequency subband image.
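Algorithms 1 and 2 and the weighted sum in Eq. 10 translate into a short sketch. It assumes a single-level Haar transform on single-channel images, takes the SSIM loss as a precomputed number, and uses placeholder weights of 1.0 for $\alpha$, $\beta$, and $\delta$, since the paper's values are not given in this section. All function names are hypothetical.

```python
import numpy as np

S = np.sqrt(2.0)

def haar_dwt2(x):
    """Single-level 2D Haar DWT; returns LL and YH = (LH, HL, HH)."""
    lo, hi = (x[:, 0::2] + x[:, 1::2]) / S, (x[:, 0::2] - x[:, 1::2]) / S
    ll, lh = (lo[0::2] + lo[1::2]) / S, (lo[0::2] - lo[1::2]) / S
    hl, hh = (hi[0::2] + hi[1::2]) / S, (hi[0::2] - hi[1::2]) / S
    return ll, (lh, hl, hh)

def l1(t, p):
    return np.mean(np.abs(t - p))

def mse(t, p):
    return np.mean((t - p) ** 2)

def ll_loss(img_lout, img_target):
    """Algorithm 1: L1 + MSE on the LL subbands."""
    ll_out, _ = haar_dwt2(img_lout)
    ll_tgt, _ = haar_dwt2(img_target)
    return l1(ll_out, ll_tgt) + mse(ll_out, ll_tgt)

def yh_loss(img_hout, img_target):
    """Algorithm 2: sum of MSE over the LH, HL, and HH subbands."""
    _, yh_out = haar_dwt2(img_hout)
    _, yh_tgt = haar_dwt2(img_target)
    return sum(mse(o, t) for o, t in zip(yh_out, yh_tgt))

def total_loss(ll, yh, ssim, alpha=1.0, beta=1.0, delta=1.0):
    """Eq. 10 with placeholder weights."""
    return alpha * ll + beta * yh + delta * ssim

rng = np.random.default_rng(0)
img_target = rng.random((4, 4))
img_out = img_target + 0.1                  # uniformly too bright by 0.1
ll_value = ll_loss(img_out, img_target)
yh_value = yh_loss(img_out, img_target)
```

A uniform brightness error shows up only in the LL loss, because a constant offset has no high-frequency content; this separation is what lets each subnetwork be supervised on the frequency band it is responsible for.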

4 EXPERIMENTS

4.1 Datasets

In this research, we evaluate the performance of our

method using two datasets of varying sizes, allowing

us to demonstrate its effectiveness on both small and

large datasets, as well as across diverse image resolu-

tions.

The first dataset, LOL V1, designed for low-light

image enhancement, contains 500 image pairs, 485

for training and 15 for testing, each with dimensions

of 400x600 pixels. The dataset includes advanced

post-processing to reduce artifacts caused by wind

and hand movement, making it a reliable benchmark

for algorithm evaluation, particularly in small-scale

datasets. Initially introduced in (Chen Wei, 2018),


Table 1: Comparison of different approaches using various applied methods.

| Method | RetinexNet (Chen Wei, 2018) | FourLLIE (Wang et al., 2023a) | FECNet (Huang et al., 2022) | WaveDiff (Jiang et al., 2023) | CDAN (Shakibania et al., 2023) | LYT-Net (Brateanu et al., 2024) | GWNet (Ours) |
|---|---|---|---|---|---|---|---|
| Retinex | ✓ | × | × | × | × | ✓ | ✓ |
| Frequency | × | ✓ | ✓ | ✓ | × | × | ✓ |
| Frequency-Spatial Interaction | × | × | ✓ | × | × | × | ✓ |

Table 2: Quantitative results (PSNR, SSIM) on each dataset: The best performance is marked in bold, while the second-best is underlined.

| Dataset | Metric | RetinexNet (Chen Wei, 2018) | FourLLIE (Wang et al., 2023a) | FECNet (Huang et al., 2022) | WaveDiff (Jiang et al., 2023) | CDAN (Shakibania et al., 2023) | LYT-Net (Brateanu et al., 2024) | GWNet (Ours) |
|---|---|---|---|---|---|---|---|---|
| LoL-v1 | PSNR | 16.77 | 21.53 | 22.29 | 21.59 | 18.96 | 22.10 | 22.33 |
| LoL-v1 | SSIM | 0.42 | 0.78 | 0.80 | 0.79 | 0.73 | 0.82 | 0.80 |
| LSRW-Huawei | PSNR | 16.82 | 21.09 | 21.40 | 20.10 | 19.73 | 20.62 | 21.36 |
| LSRW-Huawei | SSIM | 0.38 | 0.62 | 0.61 | 0.51 | 0.54 | 0.61 | 0.63 |
| LSRW-Nikon | PSNR | 13.49 | 17.86 | 17.88 | 16.92 | 16.53 | 16.67 | 17.90 |
| LSRW-Nikon | SSIM | 0.28 | 0.51 | 0.51 | 0.41 | 0.46 | 0.49 | 0.52 |

the LOL V1 Dataset serves as a critical benchmark

for low-light enhancement methods.

The second dataset, LSRW Dataset, developed

by (Hai et al., 2023), includes 5,650 image pairs:

3,170 captured with a Nikon D7500 and 2,480 with

a Huawei P40 Pro. The images, sized at 960x640

for Nikon and 960x720 for Huawei, cover diverse in-

door and outdoor scenes under low and normal-light

conditions. Despite slight misalignments in some

outdoor pairs, this dataset provides a comprehensive

resource for evaluating low-light enhancement tech-

niques, contributing to the robustness and generaliz-

ability of algorithms.

4.2 Results

To assess the effectiveness of various methods, we

employ the following metrics: Peak Signal-to-Noise

Ratio (PSNR) (Horé and Ziou, 2010), Structural Similarity Index (SSIM) (Wang et al., 2004), parameter count (Params), and Floating Point Operations

(FLOPs).
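For reference, PSNR follows directly from the mean squared error between the enhanced image and the ground truth. The sketch below assumes images normalized to [0, 1] (so the peak value is 1.0); the function name is hypothetical.

```python
import numpy as np

def psnr(target, pred, max_val=1.0):
    """Peak Signal-to-Noise Ratio in dB; higher means closer to the target."""
    mse = np.mean((np.asarray(target) - np.asarray(pred)) ** 2)
    if mse == 0:
        return float("inf")                  # identical images
    return 10.0 * np.log10(max_val ** 2 / mse)

target = np.zeros((4, 4))
pred = np.full((4, 4), 0.1)                  # every pixel off by 0.1
score = psnr(target, pred)                   # MSE = 0.01, so 20 dB
```

Because the scale is logarithmic, each additional 10 dB corresponds to a tenfold reduction in mean squared error.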

4.2.1 Comparative Analysis with Existing

Methods

Our experiments compare the proposed GWNet

method with several advanced techniques, including

the Retinex method (Chen Wei, 2018), Fourier-based

methods (Wang et al., 2023a; Huang et al., 2022), and

Diffusion models (Jiang et al., 2023). We also evalu-

ate the impact of post-processing (Shakibania et al.,

2023) and different data formats (Brateanu et al.,

2024) on performance. Table 1 presents a compar-

ison of these advanced approaches, utilizing various

methods for low-light image enhancement, includ-

ing Retinex, frequency-based techniques, and com-

bined frequency and spatial interactions. Our pro-

posed method incorporates all of these approaches.

4.2.2 Quantitative Comparison with Existing Methods

The comparisons are conducted using two datasets:

LOL V1 and LSRW. The LSRW dataset is further di-

vided into two subsets, Huawei and Nikon, based on

the cameras used for capturing the images.

GWNet performs on par with or better than the compared state-of-the-art methods across these datasets, as shown in Table 2, supporting its effectiveness and versatility in low-light image enhancement tasks. On the LOL V1 dataset,

GWNet achieves the highest PSNR of 22.33 dB and

a competitive SSIM of 0.80, indicating its ability to

enhance image quality while preserving structural de-

tails. In the LSRW Huawei subset, GWNet shows

strong performance with a PSNR of 21.36 dB and

the highest SSIM of 0.63, reflecting superior detail

preservation and perceptual quality. Similarly, in the

LSRW Nikon subset, GWNet achieves a PSNR of

17.90 dB and an SSIM of 0.52, demonstrating its ro-

bustness across various imaging conditions.

Fig. 9, 10, and 11 show example results from the

LOL V1, LSRW Huawei, and LSRW Nikon datasets,

respectively. Each figure presents a sample image

demonstrating the performance of different models.

These examples illustrate that GWNet consistently

delivers balanced results under various low-light con-

ditions. The red boxes highlight zoomed-in areas for

closer examination of image quality, showcasing the

enhancement of specific details. GWNet excels in im-

proving image quality, reducing noise, and preserving

structural details across all datasets, making it a robust

solution for low-light image enhancement.

4.2.3 Computational Efficiency Comparison with Existing Methods

One of the key strengths of GWNet is its exceptional

computational efficiency, as demonstrated in Table 3.

With only 0.027 M parameters and 0.89 GFLOPs,


Table 3: Model Complexity Comparison: The best performance is marked in bold, while the second-best is underlined.

| Method | RetinexNet (Chen Wei, 2018) | FourLLIE (Wang et al., 2023a) | FECNet (Huang et al., 2022) | WaveDiff (Jiang et al., 2023) | CDAN (Shakibania et al., 2023) | LYT-Net (Brateanu et al., 2024) | GWNet (Ours) |
|---|---|---|---|---|---|---|---|
| Params (M) | 0.84 | 0.12 | 0.15 | 22 | 3.5 | 0.045 | 0.027 |
| FLOPs (G) | 587.47 | 5.83 | 13.24 | 197.89 | 5.07 | 3.49 | 0.89 |

Figure 9: LOL V1 Result: A comparison of one example

produced by each model, alongside the low-light image and

the ground truth from the LOL V1 dataset.

Figure 10: LSRW Huawei Result: A comparison of one ex-

ample produced by each model, alongside the low-light im-

age and the ground truth from the LSRW Huawei dataset.

GWNet reduces GFLOPs by approximately 75% and parameters by 40% compared to LYT-Net, the next most lightweight model in Table 3. This makes GWNet the most lightweight model among those compared and well suited to real-time applications on resource-constrained devices such as mobile phones and embedded systems.
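The reported reductions can be checked against Table 3. The sketch below assumes the baseline is LYT-Net, the second-lightest model in the table, since that is the comparison that reproduces both percentages.

```python
def reduction_pct(baseline, ours):
    """Relative reduction of `ours` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - ours) / baseline

# Values taken from Table 3 (LYT-Net vs. GWNet).
flops_reduction = reduction_pct(3.49, 0.89)      # GFLOPs
params_reduction = reduction_pct(0.045, 0.027)   # millions of parameters
```

These evaluate to roughly 74.5% fewer GFLOPs and 40% fewer parameters, in line with the approximate figures quoted in the text.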

4.2.4 Ablation Study

In our ablation experiment, we compare different

structural configurations of our model using the LOL

V1 dataset. First, we assess the impact of apply-

Figure 11: LSRW Nikon Result: A comparison of one ex-

ample produced by each model, alongside the low-light im-

age and the ground truth from the LSRW Nikon dataset.

ing a single gamma correction value across the en-

tire dataset versus assigning a unique value to each

image. Second, we investigate the effect of using

leaky ReLU in the L subnetwork for processing low-

frequency sub-images, similar to the spatial image.

These configurations are evaluated using PSNR and

SSIM metrics, and the observed differences are dis-

cussed.

Table 4 presents the results of the LOL V1

dataset comparison, exploring different gamma cor-

rection methods and activation functions for low-

frequency sub-image processing. Since each im-

age in the dataset contains multiple light sources,

assigning a single gamma value across the dataset

(gamma dataset) fails to address the variations in

light intensity. Conversely, assigning a unique gamma

correction value for each image (gamma image) pro-

vides more accurate corrections, leading to improved

enhancement results. For the low-frequency (LL)

sub-image, which captures the overall structure of the

image, we find that using leaky ReLU (leakyReLU L)

helps maintain structural integrity. Unlike high-

frequency components that benefit from the tanh acti-

vation function (tanh L), the LL sub-image requires

leaky ReLU to avoid limiting the range, ensuring bet-

ter preservation of structural details.

The ablation study reveals that using unique

gamma correction values for each image

(gamma image) and leaky ReLU for LL sub-images

results in improved PSNR. However, the SSIM for


Table 4: Ablation study on LOL V1 dataset: The best performance is marked in red and bold, while the second-best is marked in blue and underlined.

| Configuration | PSNR | SSIM |
|---|---|---|
| gamma dataset + tanh L | 19.24 | 0.77 |
| gamma dataset + leakyReLU L | 21.79 | 0.82 |
| gamma image + leakyReLU L | 22.33 | 0.80 |

this configuration is slightly lower than when using

a single gamma value with leaky ReLU. This slight

decrease in SSIM may be attributed to the increased

complexity and variability introduced by the different

gamma values.

We performed an error map analysis to evaluate

the impact of each subnetwork on overall enhance-

ment quality. As illustrated in Fig. 12, two exam-

ple images from the LOL V1 dataset highlight the

differences between the ground truth and the outputs

from various stages of our model. These visualiza-

tions provide insight into the contributions of the L

and H subnetworks in reducing errors and enhancing

image quality.

The visualizations include a low-light image, a normal light image (ground truth), and the outputs $Img_{Lout}$ and $Img_{Hout}$, representing the results from the L subnetwork and H subnetwork, respectively. The error maps reveal how the L subnetwork significantly improves the low-light image, while further processing $Img_{Lout}$ through the H subnetwork leads to $Img_{Hout}$, showing even greater enhancement. This two-stage process effectively reduces noise and improves image quality.

Figure 12: Error Map Analysis for Enhanced Images: The

figure illustrates the model’s effectiveness in reducing er-

rors. The reduction in error demonstrates the effectiveness

of each subnetwork.
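An error map of the kind discussed above can be sketched as the per-pixel absolute difference between an image and the ground truth. The exact error measure used for Fig. 12 is not specified in this section, so absolute difference is an assumption, and the 2x2 grayscale values below are toy numbers rather than results from the paper.

```python
def error_map(output, target):
    """Per-pixel absolute difference between two equally sized grayscale images."""
    return [[abs(o - t) for o, t in zip(out_row, tgt_row)]
            for out_row, tgt_row in zip(output, target)]


def mean_error(err):
    """Average value of an error map."""
    values = [v for row in err for v in row]
    return sum(values) / len(values)


# Toy 2x2 images with intensities in [0, 1] (made-up values).
ground_truth = [[0.60, 0.80], [0.40, 0.70]]
low_light = [[0.05, 0.10], [0.05, 0.08]]
img_lout = [[0.50, 0.70], [0.45, 0.60]]  # stand-in for the L subnetwork output
img_hout = [[0.58, 0.78], [0.41, 0.68]]  # stand-in for the H subnetwork output

for name, img in (("low-light", low_light),
                  ("Img_Lout", img_lout),
                  ("Img_Hout", img_hout)):
    print(name, "mean abs error:", round(mean_error(error_map(img, ground_truth)), 4))
```

Comparing the mean of each map gives a single number per stage, which complements the visual comparison by showing how much each subnetwork reduces the remaining error.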

5 CONCLUSIONS

In this paper, we introduced GammaWaveletNet

(GWNet), a novel approach for low-light image en-

hancement. GWNet integrates gamma correction,

wavelet transforms, and CNN layers within a U-Net

architecture. Experimental results demonstrate that GWNet achieves performance comparable to state-of-the-art methods in terms of PSNR and SSIM, while outperforming them in computational efficiency, with GFLOPs reduced by approximately 75% and parameters by 40%. Both visual and quantitative results confirm that GWNet effectively enhances brightness, preserves structural details, and reduces noise, achieving high-quality enhancement.

Future work will focus on optimizing GWNet for

mobile devices. This involves reducing computa-

tional complexity and memory usage while maintain-

ing performance. By employing hardware accelera-

tion and model compression techniques, we aim to

make low-light image enhancement practical for ev-

eryday mobile applications.

REFERENCES

Agarap, A. F. (2018). Deep learning using rectified linear

units (relu). arXiv preprint arXiv:1803.08375.

Banik, P. P., Saha, R., and Kim, K.-D. (2018). Contrast enhancement of low-light image using histogram equalization and illumination adjustment. In 2018 International Conference on Electronics, Information, and Communication (ICEIC), pages 1–4. IEEE.

Bhatt, D., Patel, C., Talsania, H., Patel, J., Vaghela, R.,

Pandya, S., Modi, K., and Ghayvat, H. (2021). Cnn

variants for computer vision: History, architecture,

application, challenges and future scope. Electronics,

10(20):2470.

Brateanu, A., Balmez, R., Avram, A., and Orhei, C. (2024).

Lyt-net: Lightweight yuv transformer-based network

for low-light image enhancement. arXiv preprint

arXiv:2401.15204.

Cao, G., Huang, L., Tian, H., Huang, X., Wang, Y., and

Zhi, R. (2018). Contrast enhancement of brightness-

distorted images by improved adaptive gamma correc-

tion. Computers & Electrical Engineering, 66:569–

582.

Wei, C., Wang, W., Yang, W., and Liu, J. (2018). Deep retinex decomposition for low-light enhancement. In British Machine Vision Conference.

Ding, J.-J. (2009). Time frequency analysis and wavelet

transform class note. Class notes. Department of

Electrical Engineering, National Taiwan University

(NTU), Taipei, Taiwan.

Gu, J.-S. and Jiang, W.-S. (1996). The haar wavelets operational matrix of integration. International Journal of Systems Science, 27(7):623–628.

Guo, X., Li, Y., and Ling, H. (2016). Lime: Low-light

image enhancement via illumination map estimation.

IEEE Transactions on image processing, 26(2):982–

993.

Hai, J., Xuan, Z., Yang, R., Hao, Y., Zou, F., Lin, F., and Han, S. (2023). R2rnet: Low-light image enhancement via real-low to real-normal network. Journal of Visual Communication and Image Representation, 90:103712.

ICAART 2025 - 17th International Conference on Agents and Artificial Intelligence

Horé, A. and Ziou, D. (2010). Image quality metrics: Psnr

vs. ssim. In 2010 20th International Conference on

Pattern Recognition, pages 2366–2369.

Huang, J., Liu, Y., Zhao, F., Yan, K., Zhang, J., Huang, Y.,

Zhou, M., and Xiong, Z. (2022). Deep fourier-based

exposure correction network with spatial-frequency

interaction. In Avidan, S., Brostow, G., Cissé, M.,

Farinella, G. M., and Hassner, T., editors, Computer

Vision – ECCV 2022, pages 163–180, Cham. Springer

Nature Switzerland.

Huang, S.-C., Cheng, F.-C., and Chiu, Y.-S. (2013). Ef-

ficient contrast enhancement using adaptive gamma

correction with weighting distribution. IEEE Trans-

actions on Image Processing, 22(3):1032–1041.

Hussain, S. A., Chalicham, N., Garine, L., Chunduru, S.,

Nikitha, V., Prasad V, P., and Sanki, P. K. (2024). Low-

light image restoration using a convolutional neural

network. Journal of Electronic Materials, pages 1–

12.

Janocha, K. and Czarnecki, W. M. (2017). On loss func-

tions for deep neural networks in classification. arXiv

preprint arXiv:1702.05659.

Ji, Z. and Jung, C. (2021). Subband adaptive enhancement

of low light images using wavelet-based convolutional

neural networks. In 2021 IEEE International Confer-

ence on Image Processing (ICIP), pages 1669–1673.

Jiang, H., Luo, A., Fan, H., Han, S., and Liu, S. (2023).

Low-light image enhancement with wavelet-based

diffusion models. ACM Transactions on Graphics

(TOG), 42(6):1–14.

Kim, M. W. and Cho, N. I. (2023). Whfl: Wavelet-domain

high frequency loss for sketch-to-image translation. In

Proceedings of the IEEE/CVF Winter Conference on

applications of computer vision, pages 744–754.

Mallat, S. (1999). A Wavelet Tour of Signal Processing.

Electronics & Electrical. Elsevier Science.

Mertens, K. C., Verbeke, L. P., Westra, T., and De Wulf,

R. R. (2004). Sub-pixel mapping and sub-pixel sharp-

ening using neural network predicted wavelet coeffi-

cients. Remote Sensing of Environment, 91(2):225–

236.

Nilsson, J. and Akenine-Möller, T. (2020). Understanding

ssim. arXiv preprint arXiv:2006.13846.

Othman, G. and Zeebaree, D. Q. (2020). The applications

of discrete wavelet transform in image processing: A

review. Journal of Soft Computing and Data Mining,

1(2):31–43.

Rahman, S., Rahman, M. M., Abdullah-Al-Wadud, M., Al-

Quaderi, G. D., and Shoyaib, M. (2016). An adaptive

gamma correction for image enhancement. EURASIP

Journal on Image and Video Processing, 2016(1):35.

Ronneberger, O., Fischer, P., and Brox, T. (2015). U-

net: Convolutional networks for biomedical image

segmentation. In Medical image computing and

computer-assisted intervention–MICCAI 2015: 18th

international conference, Munich, Germany, October

5-9, 2015, proceedings, part III 18, pages 234–241.

Springer.

Sakib, S., Ahmed, N., Kabir, A. J., and Ahmed, H. (2019).

An overview of convolutional neural network: Its ar-

chitecture and applications.

Senthilkumar, C. and Kamarasan, M. (2020). An effective

classification of citrus fruits diseases using adaptive

gamma correction with deep learning model. Int. J.

Eng. Adv. Technol, 9(2):2249–8958.

Shakibania, H., Raoufi, S., and Khotanlou, H. (2023).

Cdan: Convolutional dense attention-guided network

for low-light image enhancement.

Ullah, Z., Farooq, M. U., Lee, S.-H., and An, D. (2020).

A hybrid image enhancement based brain mri im-

ages classification technique. Medical Hypotheses,

143:109922.

Wang, C., Wu, H., and Jin, Z. (2023a). Fourllie: Boosting

low-light image enhancement by fourier frequency in-

formation. In Proceedings of the 31st ACM Interna-

tional Conference on Multimedia, pages 7459–7469.

Wang, Q., Wu, B., Zhu, P., Li, P., Zuo, W., and Hu, Q.

(2020a). Eca-net: Efficient channel attention for deep

convolutional neural networks. In Proceedings of the

IEEE/CVF conference on computer vision and pattern

recognition, pages 11534–11542.

Wang, W., Wu, X., Yuan, X., and Gao, Z. (2020b). An

experiment-based review of low-light image enhance-

ment methods. IEEE Access, 8:87884–87917.

Wang, Y., Liu, Z., Liu, J., Xu, S., and Liu, S. (2023b). Low-

light image enhancement with illumination-aware

gamma correction and complete image modelling net-

work. In Proceedings of the IEEE/CVF Interna-

tional Conference on Computer Vision (ICCV), pages

13128–13137.

Wang, Z., Bovik, A., Sheikh, H., and Simoncelli, E. (2004).

Image quality assessment: from error visibility to

structural similarity. IEEE Transactions on Image

Processing, 13(4):600–612.

Wang, Z. and Zhang, X. (2024). Contextual recovery net-

work for low-light image enhancement with texture

recovery. Journal of Visual Communication and Im-

age Representation, 99:104050.

Weeks, M. and Bayoumi, M. (2003). Discrete wavelet trans-

form: architectures, design and performance issues.

Journal of VLSI signal processing systems for signal,

image and video technology, 35:155–178.

Woo, S., Park, J., Lee, J.-Y., and Kweon, I. S. (2018). Cbam:

Convolutional block attention module. In Proceed-

ings of the European conference on computer vision

(ECCV), pages 3–19.

Xu, B., Wang, N., Chen, T., and Li, M. (2015). Empiri-

cal evaluation of rectified activations in convolutional

network. arXiv preprint arXiv:1505.00853.

Xu, J., Yuan, M., Yan, D.-M., and Wu, T. (2022). Illumi-

nation guided attentive wavelet network for low-light

image enhancement. IEEE Transactions on Multime-

dia.

Xu, Q., Zhang, R., Zhang, Y., Wang, Y., and Tian, Q.

(2021). A fourier-based framework for domain gen-

eralization. In Proceedings of the IEEE/CVF con-

ference on computer vision and pattern recognition,

pages 14383–14392.


Yu, D., Wang, H., Chen, P., and Wei, Z. (2014). Mixed pool-

ing for convolutional neural networks. In Rough Sets

and Knowledge Technology: 9th International Con-

ference, RSKT 2014, Shanghai, China, October 24-

26, 2014, Proceedings 9, pages 364–375. Springer.

Zhang, Z., Zheng, H., Hong, R., Xu, M., Yan, S., and

Wang, M. (2022). Deep color consistent network for

low-light image enhancement. In Proceedings of the

IEEE/CVF conference on computer vision and pattern

recognition, pages 1899–1908.
