Implementation of Quantum Machine Learning on Educational Data

Sofía Ramos-Pulido¹ᵃ, Neil Hernández-Gress¹, Glen S. Uehara², Andreas Spanias² and Héctor G. Ceballos-Cancino¹

¹ Tecnologico de Monterrey, Av. Eugenio Garza Sada 2501 Sur, Tecnológico, 64849 Monterrey, N.L, Mexico

² SenSIP Center, School of ECEE, Arizona State University, Tempe, AZ 85287, U.S.A.

Keywords:

Educational Data, Quantum-Kernel Machine Learning Algorithms, Support Vector Classifier, Alumni,

Principal Component Analysis.

Abstract:

This study is the first to implement quantum machine learning (QML) on educational data to predict alumni

results. This study aims to show that we can design and implement QML algorithms for this application case

and compare their accuracy with those of classical ML algorithms. We consider three target variables in a

high-dimensional dataset with approximately 100 features and 25,000 instances or samples: whether an alum-

nus will secure a CEO position, alumni salary, and alumni satisfaction. These variables were selected because

they provide insights into the effect of education on alumni careers. Due to the computational limitations of

running QML on high-dimensional data, we propose to use principal component analysis for dimensionality

reduction, a barycentric correction procedure for instance reduction, and two quantum-kernel ML algorithms

for classification, namely quantum support vector classifier (QSVC) and Pegasos QSVC. We observe that

currently one can implement quantum-kernel ML algorithms and achieve results comparable to those of clas-

sical ML algorithms. For example, the accuracy of the classical and quantum algorithms is 85% in predicting

whether an alumnus will secure a CEO position. Although QML currently offers no time or accuracy advan-

tages, these findings are promising as quantum hardware evolves.

1 INTRODUCTION

Machine learning (ML) is promising for revolution-

izing many domains, including healthcare and edu-

cation. However, the increasing complexity of con-

temporary challenges has highlighted the limitations

of classical ML algorithms. Handling big data, long

model-training durations, and hardware constraints

are among the challenges associated with analyzing

current data (Nath et al., 2021). Quantum computing

seems to be a promising solution in this regard (Alam

and Ghosh, 2022), with new possibilities to address

some of these challenges.

Quantum machine learning (QML) is a new domain involving the use of quantum computers for information processing (Payares and Martínez, 2023).

QML combines quantum computing with ML tech-

niques (Zeguendry et al., 2023), promising improve-

ments in speedups and conventional ML tasks (Alam

and Ghosh, 2022). Through further research, quantum algorithms will have the potential to enhance artificial intelligence algorithms, leading to more accurate predictions, faster optimization, and improved ML capabilities (Singh, 2023).

a https://orcid.org/0000-0003-0101-4511

Recent advancements in quantum hardware have accelerated the development and application of QML. Improvements in qubit stability and coherence (Vepsäläinen et al., 2022; Bal et al., 2024) as well as

an increase in the number of available qubits in quan-

tum processors (IBM, 2023) are expected to enable

the execution of more complex and precise algorithms

compared to current ones (Boger, 2024). The tech-

nology used for generating qubits, an essential com-

ponent of quantum computers, is rapidly advancing

(Ullah and Garcia-Zapirain, 2024). In 2023, IBM sur-

passed the 1,000-qubit milestone with Condor, which

is a 1,121-qubit superconducting quantum processor

built using the cross-resonance gate technology (IBM,

2023).

Ramos-Pulido, S., Hernández-Gress, N., Uehara, G. S., Spanias, A. and Ceballos-Cancino, H. G.

Implementation of Quantum Machine Learning on Educational Data.

DOI: 10.5220/0013154500003890

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 3, pages 480-487

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Herein, we aimed to develop a calibrated, quantum educational-modeling framework to accurately predict the career outcomes of university alumni. To the best of our knowledge, there are no studies on the performance of QML algorithms on educational data. We used a large dataset of a private university to predict three target variables: CEO (i.e., whether

an alumnus will secure a CEO position), salary (i.e.,

whether alumni salary will exceed the median salary),

and alumni satisfaction (i.e., whether alumni would

prefer to study again at the university). QML algorithms cannot currently be run on classical hardware using Qiskit for high-dimensional datasets (at least without advanced data encoding into quantum states). Hence, this paper proposes a method to

reduce dimensions and instances prior to the imple-

mentation of QML algorithms. We aimed to examine

whether QML algorithms can achieve results compa-

rable to or better than those of classical ML algo-

rithms. Furthermore, we demonstrate that currently,

QML algorithms can be implemented in a space with

reduced dimensions without affecting prediction ac-

curacy in comparison with implementing classical

ML algorithms on original datasets.

Education is a key element of economic development (Hanushek and Woessmann, 2010), and Ceci and Williams (1997) stated that a professional gains notable benefits for each additional

month or year of schooling. Analysis of alumni re-

sults is important for students and the reputation of

educational institutions. Future advancements in this

analysis are expected to benefit students and educa-

tional institutions. This study contributes to educa-

tional data mining by exploring and benchmarking

QML algorithms on an educational dataset of a pri-

vate university and highlighting the potential and cur-

rent limitations of QML algorithms.

The remainder of this paper is organized as fol-

lows: a literature review is presented in Section 2.

The research methodology is introduced in Section 3.

Next, we present the findings of our study in Section

4. Finally, Section 5 concludes this study and outlines

future research directions.

2 LITERATURE REVIEW

This section presents the findings of a review of Sco-

pus articles performed to understand the state of the

art of publications pertaining to QML applications.

The query keywords used were “quantum,” “machine

learning,” and “applications” for article titles. This query was run on June 26, 2024, and it returned 76 articles. The United States and China have published the largest number of articles on QML, highlighting its applications in computer science, physics, engineering, and mathematics.

The number of QML publications has grown noticeably in recent years, with a spike observed in 2023. A total of 2 articles were published in 2018, 14 in 2020, and 27 in 2023. Because we aimed to elucidate

the state of the art of QML applications, we applied

exclusion criteria and a filtration process for articles

focused on QML applications using real data. This

process decreased the number of analyzed articles to

17.

QML has been applied in diverse fields. In en-

vironmental chemical studies, ML-based quantum

chemical methods are used to understand the behav-

ior and toxicology of chemical pollutants (Xia et al.,

2022). Interestingly, Lachure et al. (2023)

showed that the current progress in QML and quan-

tum computers may lead to technological advance-

ments in climate change research. In biochemical

thermodynamics, QML is used for metabolism mod-

eling and prediction (Jinich et al., 2019).

In the healthcare domain, QML is used for drug

discovery (Batra et al., 2021; Vijay et al., 2023), onco-

logical treatments (Rahimi and Asadi, 2023), and dis-

ease detection (Pomarico et al., 2021; Esposito et al.,

2022; Miller et al., 2023; Upama et al., 2023; Prabhu

et al., 2023). In physics, it is applied in high-energy

physics (Wu et al., 2021b; Wu et al., 2021a; Chan

et al., 2021; Wu et al., 2022; Delgado and Hamilton,

2022), spintronics (Ghosh and Ghosh, 2023), and par-

ticle physics (Fadol et al., 2022).

Several algorithms are pivotal in the current ap-

plication of QML, including quantum support vector

classifier (QSVC), Pegasos QSVC, variational quan-

tum classifier, and quantum neural networks. These

algorithms are implemented using various quantum

simulators and hardware platforms, such as the IBM

Quantum Platform (using IBM Qiskit), Google Quan-

tum AI (using Google Cirq), and quantum computers

and simulators (using Amazon Braket quantum com-

puting).

Currently, several studies have achieved compara-

ble results for classical and QML algorithms (Espos-

ito et al., 2022; Wu et al., 2021b; Wu et al., 2021a;

Chan et al., 2021; Wu et al., 2022; Ghosh and Ghosh,

2023; Fadol et al., 2022; Gujju et al., 2024). Prabhu et al. (2023) and Ghosh and Ghosh (2023)

highlighted that QSVC and Pegasos QSVC consider-

ably outperformed a classical support vector classi-

fier (SVC) when using the Aer simulator provided by

Qiskit.

Interestingly, classical and QML algorithms cur-

rently face difficulty in handling big datasets (Wu

et al., 2022; Turtletaub et al., 2020), although fu-

ture QML algorithms are expected to offer more

efficient solutions to handle large datasets (Singh,

2023). However, this will require a larger number of qubits and greater stability (Nath et al., 2021; Ullah and Garcia-Zapirain, 2024; Wu et al., 2022; Peral-García et al., 2024), and the challenges associated with noise reduction and error mitigation in quantum computing must also be addressed (Ullah and Garcia-Zapirain, 2024; Wu et al., 2022; Gujju et al., 2024; Peral-García et al., 2024). Our research seeks solutions to these challenges of QML algorithms.

The current results of the research and application of QML algorithms only hint at the beginning of QML applications. As quantum computers become more powerful and accessible, the differences in accuracy and model-training duration between classical and QML algorithms are expected to grow, opening new possibilities for QML applications across various industries and sciences.

3 RESEARCH METHODOLOGY

This section presents the data, the modeling framework, and the validation processes used in this study. We propose combining principal component analysis (PCA), the barycentric correction procedure (BCP), and QML algorithms. The proposal is intended to enable the practical application of QML algorithms by generating a reduced-dimensionality dataset that preserves data variability while still allowing accurate prediction of the target variables.

3.1 Proposal

Herein, we used a large dataset with approximately

100 features and 25,000 instances. Execution of

QML algorithms in simulators is not feasible for large

datasets because of memory constraints. For example,

one cannot run QSVC on a dataset with 750 instances

and 7 features using Google Colab.
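To give a rough sense of scale, the sketch below counts the pairwise entries of a kernel matrix for a training set of n instances. It assumes (this is not stated in the paper) that each distinct off-diagonal entry of a symmetric training kernel matrix costs one simulated circuit evaluation; the function name `pairwise_kernel_evaluations` is hypothetical, and the count is only one plausible contributor to the resource limits described above.

```python
def pairwise_kernel_evaluations(n_instances: int) -> int:
    """Distinct off-diagonal entries of a symmetric n x n kernel matrix."""
    if n_instances < 0:
        raise ValueError("n_instances must be non-negative")
    return n_instances * (n_instances - 1) // 2


# 750 is the instance count quoted in the text; 25,000 is the full dataset size.
for n in (750, 25_000):
    print(n, pairwise_kernel_evaluations(n))
```

The quadratic growth is the point of the example: under this assumption, moving from hundreds to tens of thousands of instances multiplies the number of kernel entries by roughly three orders of magnitude.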

Therefore, we propose a methodology to enable

the execution of QML algorithms on a reduced-

dimensionality educational dataset:

1. Dimensionality Reduction Using PCA. We use

PCA to reduce the number of features while pre-

serving data variability of at least 90% in the

reduced-dimensionality dataset.

2. Instance Reduction Using BCP. We employ

BCP to reduce the number of instances, which

previously did not affect the accuracy even on a

small dataset (Ramos-Pulido et al., 2024).

3. Implementation of Classical and QML Algorithms. We implement the classical (i.e., SVC) and QML (i.e., QSVC and Pegasos QSVC) algorithms.

4. Comparison Between the Results Obtained in

Step 3. We compare the results of the classical

and QML algorithms.
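Step 1 of the list above can be sketched as follows. This is a minimal NumPy illustration, not the authors' pipeline: it assumes mean-centered (not standardized) features, uses synthetic data in place of the alumni dataset, and the helper name `pca_reduce` is hypothetical. It keeps the fewest principal components whose cumulative explained variance reaches the 90% threshold mentioned in Step 1.

```python
import numpy as np


def pca_reduce(X, min_variance=0.90):
    """Project X onto the fewest principal components whose cumulative
    explained-variance ratio reaches min_variance."""
    X_centered = X - X.mean(axis=0)
    # SVD of the centered data: rows of Vt are the principal directions.
    _, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)
    explained = singular_values**2 / np.sum(singular_values**2)
    cumulative = np.cumsum(explained)
    # First index where the cumulative ratio reaches the threshold.
    k = int(np.searchsorted(cumulative, min_variance)) + 1
    k = min(k, len(cumulative))
    return X_centered @ Vt[:k].T, float(cumulative[k - 1])


rng = np.random.default_rng(0)
# Synthetic stand-in: 200 instances, 20 correlated features from 3 latent factors.
latent = rng.normal(size=(200, 3))
X = latent @ rng.normal(size=(3, 20)) + 0.05 * rng.normal(size=(200, 20))

Z, retained = pca_reduce(X)
print(Z.shape, round(retained, 3))
```

Each retained component corresponds to one input of the feature map in the quantum models, which is why the number of components is kept small.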

The Sklearn library was used to fit the SVC model

(Pedregosa et al., 2011). The Qiskit library was used

to implement the QML models, following the recom-

mendations provided in (Team, 2024; Javadi-Abhari

et al., 2024). Qiskit is an open-source quantum com-

puting framework, which enables the development

and execution of quantum algorithms on real quantum

processors and simulators. We used Sampler from

qiskit.primitives to execute quantum circuits and ob-

tain statistical results of measurements.

Further, we processed classical data using QML

algorithms by following three steps described in

(Learning, 2023): 1) encoding of quantum data or

preparation of states, 2) processing of quantum data,

and 3) reading and outputting of learning results. Pa-

rameterized quantum circuits (PQCs) can be used

to implement QML algorithms on near-term quan-

tum devices and are sufficiently versatile to depict a

broad spectrum of intricate quantum states (Learn-

ing, 2023). In QML, PQCs are typically used for two

primary purposes: 1) data encoding, wherein the pa-

rameters are determined by data being encoded, and

2) as quantum models, wherein an optimization process determines the parameters (Learning, 2023). We encoded our classical data into quantum states using the ZZFeatureMap circuit and employed the FidelityQuantumKernel class from Qiskit to generate the kernel. The number of qubits used was dependent on

the model and number of features (refer to Table 1 for

the number of qubits or components used). In addi-

tion, we employed QSVC and Pegasos QSVC from

the Qiskit library for classification tasks.
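To make the encoding and kernel steps concrete, the sketch below computes a fidelity-type kernel, k(x, x') = |⟨φ(x)|φ(x')⟩|², with an exact NumPy statevector calculation. It is an illustration under stated assumptions, not the Qiskit implementation used in the study: it assumes a single repetition of a ZZ-type feature map (Hadamards followed by a diagonal phase layer) with the data map φ_i(x) = x_i and φ_ij(x) = (π − x_i)(π − x_j), it does not use the Sampler primitive, and the function names are hypothetical.

```python
import itertools

import numpy as np


def zz_phases(x):
    """Phase of every computational basis state for one ZZ-type encoding layer."""
    n = len(x)
    # Z eigenvalues (+1 or -1) of each qubit in each of the 2**n basis states.
    signs = np.array(list(itertools.product([1.0, -1.0], repeat=n)))
    phase = signs @ x
    for i, j in itertools.combinations(range(n), 2):
        phase += (np.pi - x[i]) * (np.pi - x[j]) * signs[:, i] * signs[:, j]
    return phase


def feature_state(x):
    """State after Hadamards on |0...0> followed by the diagonal phase layer."""
    x = np.asarray(x, dtype=float)
    return np.exp(1j * zz_phases(x)) / np.sqrt(2 ** len(x))


def fidelity_kernel(XA, XB):
    """Squared overlap |<phi(a)|phi(b)>|^2 for every pair of rows."""
    SA = np.array([feature_state(a) for a in XA])
    SB = np.array([feature_state(b) for b in XB])
    return np.abs(SA.conj() @ SB.T) ** 2


rng = np.random.default_rng(0)
X = rng.uniform(0.0, np.pi, size=(4, 3))  # 4 instances, 3 features -> 3 qubits
K = fidelity_kernel(X, X)
print(K.shape)
```

The statevector has 2^n amplitudes for n features, and the kernel matrix has one entry per pair of instances, which illustrates why both the number of components and the number of instances were reduced before running the quantum-kernel models.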

3.2 Barycentric Correction Procedure

BCP followed in Step 2 of the proposal is described in (Poulard and Estève, 1995). BCP relies on the calculation of individual weights and a threshold parameter. The training process involves iteratively adjusting the weights of the barycenters to minimize the number of misclassified values. The algorithm defines a hyperplane $w^T x + \theta$, which separates the input space into two classes. First, we define $I_1 = \{1, \dots, N_1\}$ and $I_0 = \{1, \dots, N_0\}$, where $N_1$ represents the number of positive cases and $N_0$ represents the number of negative cases. The barycenters of the classes are defined using the following weighted averages:

$$b_1 = \frac{\sum_{i \in I_1} \alpha_i x_i}{\sum_{i \in I_1} \alpha_i}, \qquad b_0 = \frac{\sum_{i \in I_0} \mu_i x_i}{\sum_{i \in I_0} \mu_i}$$

The weight vector $w$ is defined as the vector difference $w = b_1 - b_0$. The range of $w$ is not fixed, as it


depends on the relationships between the classes, the

distribution of the data, and the scale of the features.

This vector is central to the classification process be-

cause it defines the orientation of the hyperplane in

the feature space. At each iteration, the barycenter

shifts towards the misclassified patterns. Increasing the value of a specific barycenter causes the hyperplane to move in that direction. The bias term, $\theta$, is computed as follows:

$$\theta = \frac{\max \gamma_1 + \min \gamma_0}{2}$$

where $\gamma(x) = -w \cdot x$. The range of $\theta$ will depend on the positions of the points in the feature space and how the classes are distributed. The bias term $\theta$ adjusts the position of the decision boundary. The barycentric correction is calculated by modifying the weighting coefficients. We have

$$\alpha_{\text{new}} = \alpha_{\text{old}} + \beta, \qquad \mu_{\text{new}} = \mu_{\text{old}} + \lambda$$

where $\beta = \min\{1, \max[30, N_1/N_0]\}$ and $\lambda = \min\{1, \max[30, N_0/N_1]\}$ (Poulard and Labreche, 1995). In some cases, BCP has considerably outperformed the perceptron in time (Poulard and Labreche, 1995).
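The procedure of this section can be sketched in NumPy as follows. This is one reading of the description, not the reference implementation: it assumes all weights start at 1, that γ₁ and γ₀ denote γ evaluated on the positive and negative cases, that only the weights of misclassified cases are incremented, and that a case is labeled positive when w·x + θ > 0. The function names `bcp_fit` and `bcp_predict` are hypothetical, and the sketch covers only the training of the linear separator, not the instance-reduction step of the proposal.

```python
import numpy as np


def bcp_fit(X, y, n_iter=100):
    """Barycentric correction sketch; y holds 1 (positive) or 0 (negative)."""
    pos, neg = X[y == 1], X[y == 0]
    n1, n0 = len(pos), len(neg)
    alpha, mu = np.ones(n1), np.ones(n0)  # assumed initial weights
    # Increments exactly as printed in the text; as written, both evaluate to 1.
    beta = min(1.0, max(30.0, n1 / n0))
    lam = min(1.0, max(30.0, n0 / n1))
    best = None
    for _ in range(n_iter):
        b1 = alpha @ pos / alpha.sum()  # weighted barycenter of positives
        b0 = mu @ neg / mu.sum()        # weighted barycenter of negatives
        w = b1 - b0
        gamma1, gamma0 = -(pos @ w), -(neg @ w)
        theta = (gamma1.max() + gamma0.min()) / 2.0
        miss_pos = pos @ w + theta <= 0
        miss_neg = neg @ w + theta > 0
        errors = int(miss_pos.sum() + miss_neg.sum())
        if best is None or errors < best[2]:
            best = (w, theta, errors)
        if errors == 0:
            break
        # Shift each barycenter towards its misclassified cases.
        alpha[miss_pos] += beta
        mu[miss_neg] += lam
    return best[0], best[1]


def bcp_predict(X, w, theta):
    return (X @ w + theta > 0).astype(int)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(3.0, 0.5, size=(50, 2)),
               rng.normal(-3.0, 0.5, size=(50, 2))])
y = np.concatenate([np.ones(50, dtype=int), np.zeros(50, dtype=int)])

w, theta = bcp_fit(X, y)
predictions = bcp_predict(X, w, theta)
print(float((predictions == y).mean()))
```

Keeping the best hyperplane seen so far is a design choice of this sketch, so that a non-separable dataset still returns the separator with the fewest training errors.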

3.3 Data

The university supplied an anonymized dataset com-

prising survey responses from alumni regarding their

social and economic conditions. In 2023, as a part

of its 80th anniversary celebration, the university

conducted a survey to assess the social and eco-

nomic condition of its alumni since its establish-

ment in 1943. The survey invitation was sent to

all alumni through email and social media. The

Quacquarelli Symonds Intelligence Unit Team and re-

searchers from the university conducted a descriptive

analysis of this survey, a report of which can be found

in (de Monterrey, 2023).

We did not focus on identifying input features as-

sociated with target variables. Instead, we aimed to

predict the following output features: “CEO” indi-

cates whether an alumnus has secured a CEO posi-

tion, “Salary” indicates whether an alumnus’ salary

is higher than the median salary, and “Satisfaction”

indicates whether an alumnus would choose to study

again at the university. The input features included

age, gender, school attended, campus, level of edu-

cation, current address, region of birth, parental edu-

cation and occupation, weekly working hours, years

spent working abroad, life satisfaction, and income

satisfaction along with evaluations of social intelli-

gence, self-knowledge management, and communica-

tion, among others. After transforming the categori-

cal variables into dummy features via one-hot encod-

ing, the total number of features was 104.

3.4 Modeling and Validation

Two experiments were performed to evaluate the dif-

ferences between the performances of the QML al-

gorithms and the classical SVC. In the first exper-

iment, we employed the proposed method to com-

pare the performances of QSVC and Pegasos QSVC

with that of SVC on the same reduced-dimensionality

dataset. In the second experiment, we again used

the proposed method to compare the performance

of the SVC on the complete dataset with those of

the QML algorithms on the reduced-dimensionality

dataset. Notably, the SVC was trained on the com-

plete dataset, while the QSVC and Pegasos QSVC

were fitted on the reduced-dimensionality dataset, i.e.,

a dataset whose instances and dimensions were re-

duced using the proposed method.

Effectiveness of the algorithms was assessed via

random cross validation (CV), which involved gener-

ating five random splits of the complete dataset. For

each split, the models were trained on the training set

(70%) and their prediction accuracy was assessed on

the testing set (30%). The average performance of

each model was then determined by averaging the re-

sults across the five splits.

The metric “accuracy” was used for each algo-

rithm. The tuned hyperparameters and grid were as

follows:

• SVC: C: 1,10,100,1000,10000

• Pegasos QSVC: C: 1,10,100,1000,10000; tau:

100,200,300,400; sample: 1000, 2000, 3000,

4000; components = 8,9,10,11,12

• QSVC: sample: 500, 600, 700, 800; components

= 4,5,6

During training, the optimal hyperparameter values were selected via random fivefold CV for each algorithm across each split. The following steps were involved: the training set (70% of the data) was divided into five almost equal folds. For each value in the hyperparameter grid (listed above), the algorithms were trained on four of the folds and evaluated on the remaining one. This process was repeated five times, leaving out a different fold each time so that every fold was used for validation once. The average accuracy of each hyperparameter value was then calculated across these five repetitions. The “optimal” hyperparameters were those with the highest average accuracy. Finally, the accuracy of the algorithms with the optimal hyperparameters was evaluated on the testing set.
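The validation scheme described above (five random 70/30 splits, with fivefold CV on each training set to choose C) can be sketched with scikit-learn for the classical SVC. The sketch runs on synthetic data and assumes scikit-learn's default RBF kernel, since the kernel of the classical SVC is not stated here; the random seeds and dataset size are arbitrary.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, KFold, ShuffleSplit
from sklearn.svm import SVC

# Synthetic stand-in for the (reduced) alumni dataset.
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

outer = ShuffleSplit(n_splits=5, test_size=0.30, random_state=0)
param_grid = {"C": [1, 10, 100, 1000, 10000]}  # grid listed for the SVC

test_accuracies = []
for train_idx, test_idx in outer.split(X):
    # Inner fivefold CV on the 70% training portion selects C.
    inner = KFold(n_splits=5, shuffle=True, random_state=0)
    search = GridSearchCV(SVC(), param_grid, cv=inner, scoring="accuracy")
    search.fit(X[train_idx], y[train_idx])
    # The refitted best model is scored on the held-out 30%.
    test_accuracies.append(search.score(X[test_idx], y[test_idx]))

mean_accuracy = float(np.mean(test_accuracies))
print(round(mean_accuracy, 3))
```

Selecting hyperparameters only on the training portion keeps the 30% testing set untouched until the final accuracy estimate.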

The hyperparameter C was tuned during the train-

ing of the SVC on the complete dataset. The sam-

ple size and dimensions of the dataset used during the

training of the QML algorithms were adjusted to the

maximum possible extent with the available comput-

ing resources via Google Colab (Research, 2024). An

optimal combination of number of samples and num-

ber of principal components was identified to allow

for effective model training and satisfactory perfor-

mance. In particular, for Pegasos QSVC, C (the reg-

ularization parameter) and tau (number of steps per-

formed during training) were tuned. QSVC could not

be trained with > 6 components and > 750 cases;

therefore, it was tuned with fewer components and

cases. Lastly, for the SVC trained on the reduced-dimensionality dataset, only C was tuned, and the same sample size and number of components as for Pegasos QSVC were retained to ensure a fair comparison.

4 RESULTS

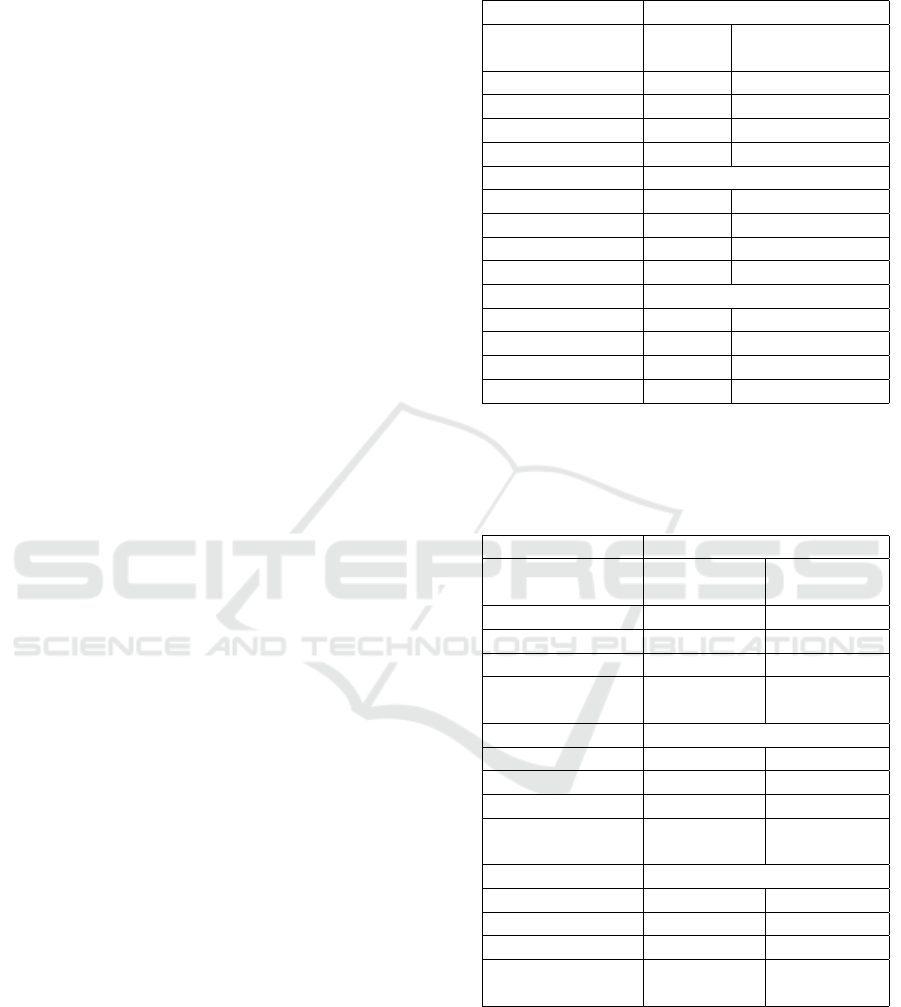

Tables 1 and 2 list the hyperparameters selected and the considerations for each target variable and algorithm. They provide the number of components extracted via PCA, the sample size used in each case, and the optimal hyperparameters selected during tuning. For the datasets created for each target variable, the retained variability was 92% when the number of components was five and 96% when the number of components was 10, implying that most of the variability was captured. For example, for the target variable “CEO” and the proposed method with Pegasos QSVC, the sample size was 1,500, with the optimal hyperparameters being C = 1000 and τ = 100. Tables 1 and 2 also list the remaining settings.

Table 3 presents the prediction results for the dif-

ferent algorithms and target variables. Overall, the

SVC trained using the complete dataset performed the

best across all target variables, achieving the highest

accuracy for “Salary” and “Alumni Satisfaction.” For

“CEO,” the SVC with the complete dataset and QSVC

with < 4% of the instances retained and five principal

components showed comparable results.

The performance of the QML algorithms was observed to improve with increasing number of components and instances. Conversely, decreasing the number of components and instances reduced the performance. This finding suggests that in the future, when more information can be used, the performance of the QML algorithms may substantially improve.

Table 1: Hyperparameters and Considerations for Classical Methods.

| Target | Setting | SVC | BCP + PCA + SVC |
|---|---|---|---|
| CEO | Components | All | 10 |
| CEO | Variability | 100% | 96% |
| CEO | Sample | All | 1,500 |
| CEO | Hyperparameters | C=1000 | C=10 |
| Salary | Components | All | 10 |
| Salary | Variability | 100% | 96% |
| Salary | Sample | All | 4,000 |
| Salary | Hyperparameters | C=100 | C=1000 |
| Alumni Satisfaction | Components | All | 10 |
| Alumni Satisfaction | Variability | 100% | 96% |
| Alumni Satisfaction | Sample | All | 2,000 |
| Alumni Satisfaction | Hyperparameters | C=1 | C=1 |

Abbreviations: BCP: barycentric correction procedure, PCA: principal component analysis, SVC: support vector classifier

Table 2: Hyperparameters and Considerations for Quantum Methods.

| Target | Setting | BCP + PCA + QSVC | BCP + PCA + Pegasos |
|---|---|---|---|
| CEO | Components | 5 | 10 |
| CEO | Variability | 92% | 96% |
| CEO | Sample | 1,000 | 1,500 |
| CEO | Hyperparameters | | C=1000, tau=100 |
| Salary | Components | 5 | 10 |
| Salary | Variability | 92% | 96% |
| Salary | Sample | 1,000 | 4,000 |
| Salary | Hyperparameters | | C=1000, tau=100 |
| Alumni Satisfaction | Components | 5 | 10 |
| Alumni Satisfaction | Variability | 92% | 96% |
| Alumni Satisfaction | Sample | 1,000 | 2,000 |
| Alumni Satisfaction | Hyperparameters | | C=100, tau=100 |

Abbreviations: BCP: barycentric correction procedure, PCA: principal component analysis, QSVC: quantum SVC, Pegasos: Pegasos QSVC.

For “Salary,” the BCP + PCA + QSVC model exhibited performance comparable to that of the BCP + PCA + SVC model, highlighting the effectiveness of QSVC even on limited data. The proposed method with SVC and that with Pegasos QSVC yielded the same accuracy for “CEO” and “Alumni Satisfaction.” In particular, BCP + PCA + Pegasos QSVC and BCP + PCA + SVC achieved 85% accuracy for predicting whether an alumnus would secure a high-level-management position and 81% accuracy for predicting whether an alumnus would choose to study at the university again.

Table 3: Accuracy (%) of the different models across different target variables.

| Target | SVC | BCP + PCA + SVC | BCP + PCA + QSVC | BCP + PCA + Pegasos |
|---|---|---|---|---|
| CEO | 86 | 85 | 86 | 85 |
| Salary | 73 | 60 | 59 | 56 |
| Alumni Satisfaction | 86 | 81 | 81 | 81 |

SVC: support vector classifier; BCP + PCA + SVC: Proposed method with SVC; BCP + PCA + QSVC: Proposed method with QSVC; BCP + PCA + Pegasos QSVC: Proposed method with Pegasos QSVC

5 CONCLUSIONS

This study demonstrated that quantum machine learn-

ing algorithms can achieve results comparable to

those of classical ML algorithms when applied to

reduced-dimensional educational data, addressing

three key target variables: whether an alumnus se-

cures a CEO position, alumni salary, and alumni satis-

faction. These results are promising, as they confirm

the feasibility of designing and implementing QML

algorithms for practical applications in educational

analytics despite current hardware limitations. The

findings highlight the potential for QML methods, es-

pecially as quantum computing technology evolves.

Notably, while QSVC reached 86% accuracy on the CEO prediction task, slightly above the 85% of its classical counterpart (SVC) under the proposed method, no significant advantages in terms of computational efficiency were observed. This aligns with expectations given the current state of quantum hardware.

Future research will focus on addressing computa-

tional constraints and exploring quantum-native tech-

niques such as quantum principal component analy-

sis (QPCA). Incorporating QPCA into the proposed

method has the potential to reduce dimensionality in

a quantum framework, which could enhance the scal-

ability of QML algorithms.

The findings of this research reinforce the rel-

evance of QML for educational applications, with

implications extending beyond this domain to other

fields, such as social sciences, where complex data is

prevalent.

ACKNOWLEDGEMENTS

The authors would like to thank Tecnológico de Monterrey for the opportunity to use the data for this research. The authors also thank Tecnológico de Monterrey and Conahcyt for providing S.R.-P. with Ph.D. scholarships.

REFERENCES

Alam, M. and Ghosh, S. (2022). Qnet: A scalable

and noise-resilient quantum neural network architec-

ture for noisy intermediate-scale quantum computers.

Frontiers in physics, 9.

Bal, M., Murthy, A. A., Zhu, S., Crisa, F., You, X., Huang,

Z., Roy, T., Lee, J., Zanten, D. V., Pilipenko, R., et al.

(2024). Systematic improvements in transmon qubit

coherence enabled by niobium surface encapsulation.

npj Quantum Information, 10(1):43.

Batra, K., Zorn, K. M., Foil, D. H., Minerali, E., Gawriljuk,

V. O., Lane, T. R., and Ekins, S. (2021). Quantum ma-

chine learning algorithms for drug discovery applica-

tions. Journal of Chemical Information and Modeling,

61(6):2641–2647.

Boger, Y. (2024). Crossing the quantum threshold: The path

to 10,000 qubits. Accessed: 2024-07-17.

Ceci, S. J. and Williams, W. M. (1997). Schooling,

intelligence, and income. American Psychologist,

52(10):1051–1058.

Chan, J., Guan, W., Sun, S., Wang, A., Wu, S., Zhou, C.,

Livny, M., Carminati, F., Meglio, A., et al. (2021). Ap-

plication of quantum machine learning to high energy

physics analysis at lhc using ibm quantum computer

simulators and ibm quantum computer hardware. vol-

ume 390.

de Monterrey, T. (2023). Impacto economico y social global de las y los egresados del exatec en 80 años de historia. Accessed: 2024-07-17.

Delgado, A. and Hamilton, K. E. (2022). Quantum machine

learning applications in high-energy physics. In Pro-

ceedings of the 41st IEEE/ACM International Con-

ference on Computer-Aided Design, pages 1–5, New

York, NY, USA. Association for Computing Machin-

ery.

Esposito, M., Uehara, G., and Spanias, A. (2022). Quan-

tum machine learning for audio classification with ap-

plications to healthcare. In 2022 13th International

Conference on Information, Intelligence, Systems &

Applications (IISA), pages 1–4. IEEE.

Fadol, A., Sha, Q., Fang, Y., Li, Z., Qian, S., Xiao, Y., Zhang, Y., and Zhou, C. (2022). Application of quantum machine learning in a higgs physics study at the cepc. arXiv preprint arXiv:2209.12788.

Ghosh, K. J. and Ghosh, S. (2023). Classical and quantum

machine learning applications in spintronics. Digital

Discovery, 2(2):512–519.

Gujju, Y., Matsuo, A., and Raymond, R. (2024). Quantum

machine learning on near-term quantum devices: Cur-

rent state of supervised and unsupervised techniques

for real-world applications. Physical Review Applied,

21(6):067001.

Hanushek, E. A. and Woessmann, L. (2010). Education and

economic growth. Economics of Education, 60(67):1.

IBM (2023). The hardware and software for the era of quan-

tum utility is here. Accessed: 2024-07-18.

Javadi-Abhari, A., Treinish, M., Krsulich, K., Wood, C. J.,

Lishman, J., Gacon, J., Martiel, S., Nation, P. D.,

Bishop, L. S., Cross, A. W., Johnson, B. R., and Gam-

betta, J. M. (2024). Quantum computing with Qiskit.

Jinich, A., Sanchez-Lengeling, B., Ren, H., Harman, R.,

and Aspuru-Guzik, A. (2019). A mixed quantum

chemistry/machine learning approach for the fast and

accurate prediction of biochemical redox potentials

and its large-scale application to 315 000 redox reac-

tions. ACS Central Science, 5(7):1199–1210.

Lachure, S., Lohidasan, A., Tiwari, A., Dhabu, M., and

Bokde, N. (2023). Quantum machine learning ap-

plications to address climate change: A short review,

pages 65–83. Advances in Systems Analysis, Soft-

ware Engineering, and High Performance Computing

(ASASEHPC). IGI global.

Learning, I. Q. (2023). Quantum machine learning course.

Accessed: 2024-07-23.

Miller, L., Uehara, G., Sharma, A., and Spanias, A. (2023).

Quantum machine learning for optical and sar clas-

sification. In 2023 24th International Conference on

Digital Signal Processing (DSP), pages 1–5. IEEE.

Nath, R. K., Thapliyal, H., and Humble, T. S. (2021). A re-

view of machine learning classification using quantum

annealing for real-world applications. SN Computer

Science, 2(5):365.

Payares, E. and Martínez, J. C. (2023). The enhancement of quantum machine learning models via quantum fourier transform in near-term applications. In AIP Conference Proceedings, volume 2872. AIP Publishing.

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V.,

Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P.,

Weiss, R., Dubourg, V., et al. (2011). Scikit-learn:

Machine learning in python. Journal of Machine Learning Research, 12:2825–2830.

Peral-García, D., Cruz-Benito, J., and García-Peñalvo, F. J. (2024). Systematic literature review: Quantum machine learning and its applications. Computer Science Review, 51:100619.

Pomarico, D., Fanizzi, A., Amoroso, N., Bellotti, R., Bi-

afora, A., Bove, S., Didonna, V., Forgia, D. L., Pas-

tena, M. I., Tamborra, P., et al. (2021). A proposal of

quantum-inspired machine learning for medical pur-

poses: An application case. Mathematics, 9(4):410.

Poulard, H. and Estève, D. (1995). A convergence theorem for barycentric correction procedure. Submitted to Neural Computation.

Poulard, H. and Labreche, S. (1995). A new unit learning

algorithm. ipi, 10:i2I1.

Prabhu, S., Gupta, S., Prabhu, G. M., Dhanuka, A. V., and

Bhat, K. V. (2023). Qucardio: Application of quan-

tum machine learning for detection of cardiovascular

diseases. IEEE Access, 11:136122–136135.

Rahimi, M. and Asadi, F. (2023). Oncological applications

of quantum machine learning. Technology in Cancer

Research and Treatment, 22:15330338231215214.

Ramos-Pulido, S., Hernández-Gress, N., and Ceballos-Cancino, H. G. (2024). Machine learning training optimization using the barycentric correction procedure. In 5th International Conference on Artificial Intelligence and Big Data (AIBD 2024), volume 14, pages 189–198.

Research, G. (2024). Google colaboratory. Accessed: 2024-

07-18.

Singh, S. (2023). The role of quantum computers in the

future of ai and data. Accessed: 2024-08-15.

Team, Q. M. L. D. (2024). Machine learning tutorials. Ac-

cessed: 2024-07-15.

Turtletaub, I., Li, G., Ibrahim, M., and Franzon, P. (2020).

Application of quantum machine learning to vlsi

placement. In Proceedings of the 2020 ACM/IEEE

Workshop on Machine Learning for CAD, pages 61–

66, New York, NY, USA. Association for Computing

Machinery.

Ullah, U. and Garcia-Zapirain, B. (2024). Quantum ma-

chine learning revolution in healthcare: a system-

atic review of emerging perspectives and applications.

IEEE Access, 12:11423–11450.

Upama, P. B., Kolli, A., Kolli, H., Alam, S., Syam, M.,

Shahriar, H., and Ahamed, S. I. (2023). Quantum ma-

chine learning in disease detection and prediction: A

survey of applications and future possibilities. In 2023

IEEE 47th Annual Computers, Software, and Appli-

cations Conference (COMPSAC), pages 1545–1551.

IEEE.

Vepsäläinen, A., Winik, R., Karamlou, A. H., Braumüller, J., Paolo, A. D., Sung, Y., Kannan, B., Kjaergaard, M., Kim, D. K., Melville, A. J., et al. (2022). Improving qubit coherence using closed-loop feedback. Nature Communications, 13(1):1932.

Vijay, A., Bhargava, H., Pareek, A., Suravajhala, P., and

Sharma, A. (2023). Quantum Machine Learning for

Biological Applications, pages 75–86. Chapman and

Hall/CRC.

Wu, S. L., Chan, J., Cheng, A., Guan, W., Sun, S., Zhang,

R., Zhou, C., Livny, M., Meglio, A., Li, A., et al.

(2022). Application of quantum machine learning to

hep analysis at lhc using quantum computer simula-

tors and quantum computer hardware. In European

Physical Society Conference on High Energy Physics,

page 842.

Wu, S. L., Chan, J., Guan, W., Sun, S., Wang, A., Zhou, C., Livny, M., Carminati, F., Di Meglio, A., Li, A. C., et al. (2021a). Application of quantum machine learning using the quantum variational classifier method to high energy physics analysis at the lhc on ibm quantum computer simulator and hardware with 10 qubits. Journal of Physics G: Nuclear and Particle Physics, 48(12):125003.

Wu, S. L., Sun, S., Guan, W., Zhou, C., Chan, J., Cheng,

C. L., Pham, T., Qian, Y., Wang, A. Z., Zhang, R.,

et al. (2021b). Application of quantum machine learn-

ing using the quantum kernel algorithm on high en-

ergy physics analysis at the lhc. Physical Review Re-

search, 3(3):033221.

Xia, D., Chen, J., Fu, Z., Xu, T., Wang, Z., Liu, W., Xie,

H.-B., and Peijnenburg, W. J. (2022). Potential appli-

cation of machine-learning-based quantum chemical

methods in environmental chemistry. Environmental

Science and Technology, 56(4):2115–2123.

Zeguendry, A., Jarir, Z., and Quafafou, M. (2023). Quantum

machine learning: A review and case studies. Entropy,

25(2):287.
