Leveraging Vision Language Models for Understanding and Detecting

Violence in Videos

Jose Alejandro Avellaneda Gonzalez

a

, Tetsu Matsukawa

b

and Einoshin Suzuki

c

ISEE, Kyushu University, Fukuoka, 819-0395, Japan

joseavellaneda88@gmail.com, {matsukawa, suzuki}@inf.kyushu-u.ac.jp

Keywords:

Video Violence Detection, Vision-Language Models, Large Language Models (LLMs), Video Violence

Analysis.

Abstract:

Detecting violent behaviors in video content is crucial for public safety and security. Ensuring accurate iden-

tification of such behaviors can prevent harm and enhance surveillance. Traditional methods rely on manual

feature extraction and classical machine learning algorithms, which lack robustness and adaptability in diverse

real-world scenarios. These methods struggle with environmental variability and often fail to generalize across

contexts. Due to the nature of violence content, ethical and legal challenges in dataset collection result in a

scarcity of data. This limitation impacts modern deep learning approaches, which, despite their effectiveness,

often produce models that struggle to generalize well across diverse contexts. To address these challenges,

we propose VIVID: Vision-Language Integration for Violence Identification and Detection. VIVID leverages

Vision Language Models (VLMs) and a database of violence definitions to mitigate biases in Large Language

Models (LLMs) and operates effectively with limited video data. VIVID functions through two steps: key-

frame selection based on optical flow to capture high-motion frames, and violence detection using VLMs to

translate visual representations into tokens, enabling LLMs to comprehend video content. By incorporating

an external database with definitions of violence, VIVID ensures accurate and contextually relevant under-

standing, addressing inherent biases in LLMs. Experimental results on five datasets—Movies, Surveillance

Fight, RWF-2000, Hockey, and XD-Violence— demonstrate that VIVID outperforms LLM-based methods

and achieves competitive performance compared with deep learning-based methods, with the added benefit of

providing explanations for its detections.

1 INTRODUCTION

Detecting violent behaviors in video content is essen-

tial for public safety and security research. Video

violence detection aims to identify actions involv-

ing physical force that harm individuals or damage

property, such as fighting and assault (Ullah et al.,

2023). It has various applications in video surveil-

lance, such as automated systems that can promptly

deliver timely alerts for hazardous scenarios, enabling

swift responses (Mumtaz et al., 2023).

Traditional methods primarily focus on manual

feature extraction from video data (De Souza et al.,

2010; Bermejo et al., 2011; Deniz et al., 2014; Senst

et al., 2017; Das et al., 2019; Febin et al., 2020).

These methods manually extract spatial, temporal, or

spatiotemporal features and use supervised or unsu-

a

https://orcid.org/0009-0008-3082-8921

b

https://orcid.org/0000-0002-8841-6304

c

https://orcid.org/0000-0001-7743-6177

pervised classification methods to determine whether

the content is violent. However, these methods ex-

hibit deficiencies in robustness and adaptability in

real-world scenarios due to limitations such as instal-

lation angles, diverse locations, varying backgrounds,

and video resolutions (Park et al., 2024).

Several works (Park et al., 2024; Abdali and Al-

Tuma, 2019; Sudhakaran and Lanz, 2017; Cheng

et al., 2021; Aktı et al., 2019) explore deep learning

models that autonomously identify features and pat-

terns to classify violent behavior. However, datasets

specifically related to video violence detection are

scarce (Mumtaz et al., 2023). Despite their favor-

able performance, the practical applicability of these

methods heavily relies on the training data. Training

deep models or learning motion patterns from an in-

sufficient set of violent videos results in non-generic

models which may not be practical for real-world sce-

narios. Therefore, current violent detection methods

are insufficient for generalizing and identifying vio-

Gonzalez, J. A. A., Matsukawa, T. and Suzuki, E.

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos.

DOI: 10.5220/0013160000003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 2: VISAPP, pages

99-113

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

99

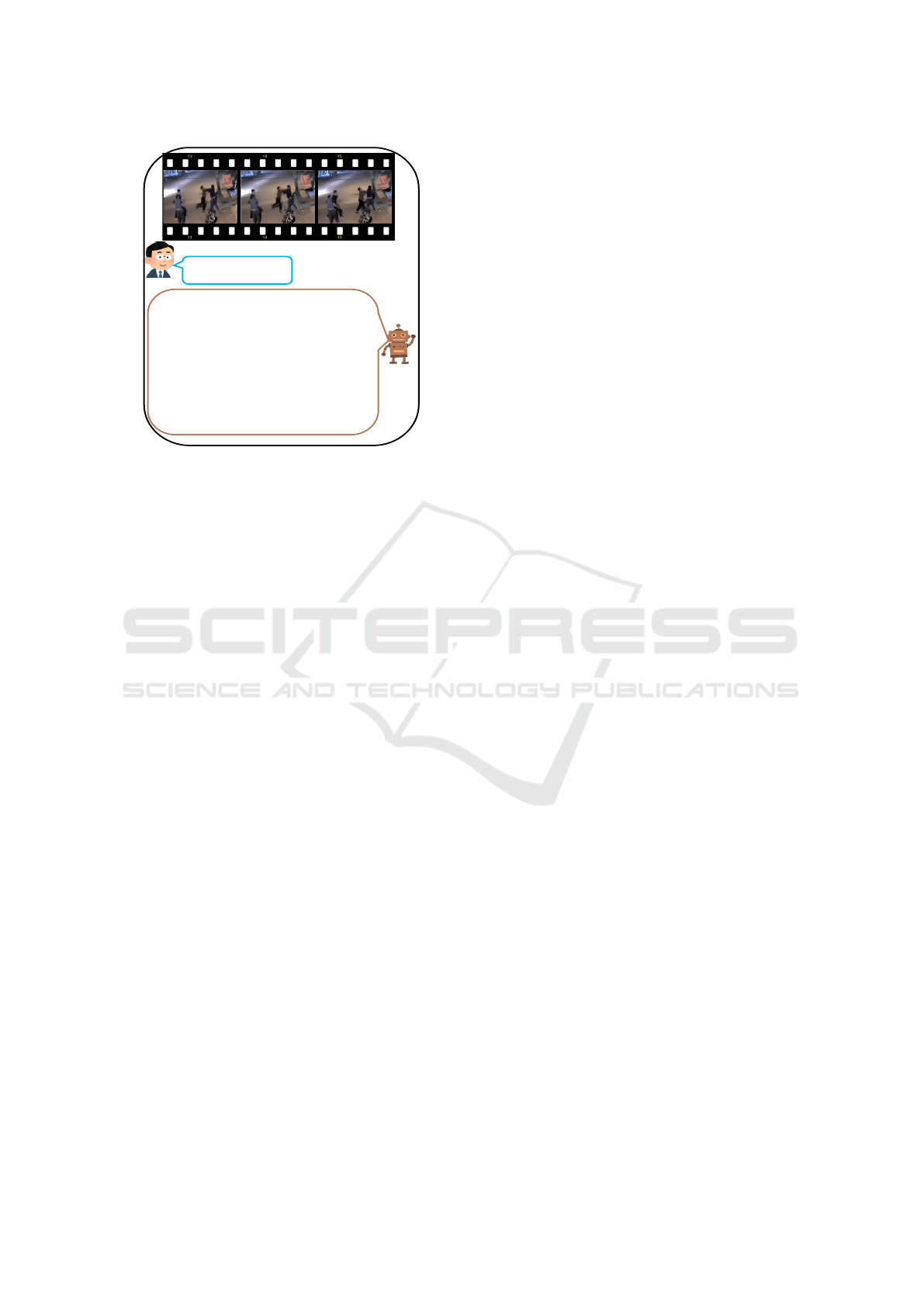

Is this image

violent?

User

No, there are

two girls

fighting but

there is no

violence

because they

are girls.

(a) BLIP-2.

Is this video

violent?

User

I would not classify it as

violent. The video appears to

show police officer subduing

and arresting another person in

a hallway. It does not appear

to be violent or aggressive.

(b) Video-LLaMA.

Is this video

violent?

User

No, the video does not

show any violent

behavior. The children

are seen playing and

having fun in a safe and

controlled environment

(c) Video-LLaVA.

Figure 1: LLM’s inherited bias examples: they contain gender, occupation, age, race, and other biases.

lence in real-world contexts.

Recently, several efforts have been made to con-

struct frameworks for visual understanding based on

Vision Language Models (VLMs) (Li et al., 2023a;

Dai et al., 2023; Panagopoulou et al., 2023; Lin et al.,

2023; Li et al., 2023b). These models take images

or videos as input and utilize Large Language Models

(LLMs) to provide descriptions or answer questions

based on the visual content.

However, LLMs inherit biases from their training

data, impacting their ability to generalize across vari-

ous problems, including violence detection. For in-

stance, as shown in Figure 1b, consider a scenario

where the input of the VLM is a video of a police of-

ficer subduing and arresting someone. This scenario

contains elements of violence, such as physical force

and restraint, which can cause harm or distress. The

VLM classified this scenario as non-violent due to an

inherent bias from the training data, reflecting societal

perceptions of law enforcement actions. This misclas-

sification arises from the LLM’s reliance on biased

data, skewing its interpretation of violent behavior.

Furthermore, guardrails in LLMs, aligned with

ethical standards, may lead to ambiguous answers

on sensitive topics. Fine-tuning video understanding

methods to align with video content (Lin et al., 2023;

Li et al., 2023b) can further alter their understanding.

Addressing bias and ensuring robustness across dif-

ferent scenarios remains an ongoing challenge.

To overcome these challenges, we propose a novel

method called VIVID: Vision-Language Integration

for Violence Identification and Detection. VIVID

is designed for scenarios where video or image data

is scarce or insufficient. Specifically, our approach

leverages a VLM in conjunction with an external

knowledge violence database. This combination

helps address inherent biases and improves the detec-

tion and identification of violent behaviors by provid-

ing accurate and contextually relevant information.

VIVID involves two main steps: key-frame selec-

tion and violence detection. In the first step, we an-

alyze the optical flow within the video to capture es-

sential moments that may potentially contain forceful

actions capable of causing harm or damage.

In the second step, we leverage a VLM and an

LLM to serve as our violence detector. VLM models

are pre-trained on large datasets containing paired im-

ages and texts, allowing them to understand and gen-

erate texts based on images. LLMs, on the other hand,

are designed to comprehend and generate human-like

language, meaning that if a concept such as violence

can be semantically defined, an LLM would have the

ability to identify the concept in a given context.

We propose to address the intrinsic biases of

LLMs by using an external knowledge base that

contains definitions of violence and violence-related

terms. We handle the bias in two main steps: (1) iden-

tifying the most relevant violence-related definition

associated with the video content, and (2) combin-

ing this definition with a video representation to en-

hance the LLM’s accuracy related to violence. These

steps ensure that the LLM’s responses are both ac-

curate and contextually relevant. VIVID requires

no additional training and small computational over-

head during inference, making it efficient and practi-

cal for real-world applications. Additionally, VIVID

not only classifies violent content but also provides an

explanation in text, allowing users to understand why

the content is deemed violent.

In summary, our contributions are as follows:

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

100

• We propose VIVID, a novel method that in-

tegrates Vision-Language Models (VLMs) with

Language Models (LLMs) for robust and inter-

pretable video violence detection.

• We offer a zero-shot classification alternative, al-

lowing the model to classify violent content with-

out additional training, making it particularly suit-

able for scenarios with limited labeled data.

• We propose a multimodal retrieval method to

compare visual and text features, addressing bi-

ases inherent in LLMs and leading to more accu-

rate and contextually relevant results.

• By combining VLMs with an external knowledge

base, our method effectively captures complex vi-

sual and contextual cues, improving its accuracy

in detecting violence.

• Our framework provides clear explanations for its

classifications, enhancing user trust and under-

standing its decisions.

• We demonstrate that the proposed method consis-

tently outperforms other methods based on LLMs

across multiple datasets, highlighting its effective-

ness in identifying violent content.

2 RELATED WORK

2.1 Violence Detection

Violence detection in videos is a critical area of re-

search with significant implications for public safety

and surveillance. Traditional approaches to vio-

lence detection have predominantly relied on manu-

ally crafted features and classical machine learning

algorithms. Early methods like those proposed by

De Souza et al. (De Souza et al., 2010) and Deniz et

al. (Deniz et al., 2014) focused on extracting spatio-

temporal features from video frames to identify vio-

lent actions. For instance, De Souza et al. (2010)

(De Souza et al., 2010) utilized local spatio-temporal

features with the Bag of Visual Words (BoVW) rep-

resentation, extracting features using descriptors such

as Scale-Invariant Feature Transform (SIFT). These

features were then classified using Support Vector

Machines (SVMs) to identify violent actions. Simi-

larly, Deniz et al. (2014) (Deniz et al., 2014) devel-

oped a method for fast violence detection by extract-

ing extreme acceleration patterns through the applica-

tion of the Radom transform to the power spectrum of

consecutive frames, using SVMs to rapidly identify

violent events.

Senst et al. (2017) (Senst et al., 2017) em-

ployed global motion-compensated Lagrangian fea-

tures and scale-sensitive video-level representation to

capture motion patterns and dynamics within video

footage. This method utilized histogram-intersection-

based clustering to detect instances of violence ef-

fectively. Das et al. (2019) (Das et al., 2019) uti-

lized Histogram of Oriented Gradient (HOG) features

to capture edge and gradient information from video

frames. These features were used with classifiers such

as SVM, Logistic Regression, Random Forest, Linear

Discriminant Analysis (LDA), Na

¨

ıve Bayes, and K-

Nearest Neighbors (KNN) are used for classification

purposes.

Hassner et al. (2012) (Hassner et al., 2012) intro-

duced the concept of violent flows, utilizing optical

flow and motion regions to detect violent activities in

real time. This approach involved analyzing motion

patterns within video frames to identify sudden and

aggressive movements, which were then classified us-

ing SVMs.

However, these traditional methods exhibit sev-

eral limitations when applied to real-world scenarios.

They often struggle with robustness and adaptabil-

ity to diverse environmental factors, such as differ-

ent installation angles, backgrounds, and video reso-

lutions. For instance, a video captured from a high

angle might obscure crucial details, while a cluttered

environment may introduce noise, complicating fea-

ture extraction and analysis. Consequently, there has

been a shift towards deep learning-based methods in

recent years to address these challenges and improve

the accuracy and reliability of violence detection sys-

tems.

Recent advancements in deep learning have led to

significant improvements in violence detection sys-

tems. These methods leverage the power of neu-

ral networks to automatically learn and extract fea-

tures from video data, often leading to superior per-

formance compared to traditional approaches.

One of the latest methods is by Park et al. (2024)

(Park et al., 2024), who proposed a Conv3D-based

network that combines optical flow and RGB data

to detect violent behaviors in videos. This method

utilizes three-dimensional convolutions to capture

spatio-temporal features from video frames, provid-

ing a comprehensive understanding of motion and ap-

pearance. Similarly, Abdali and Al-Tuma (2019) (Ab-

dali and Al-Tuma, 2019) introduced a robust real-time

violence detection system using Convolutional Neu-

ral Networks (CNNs) and Long Short-Term Memory

(LSTM) networks. By capturing both spatial and tem-

poral features, this method effectively enables the de-

tection of violence in video sequences.

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos

101

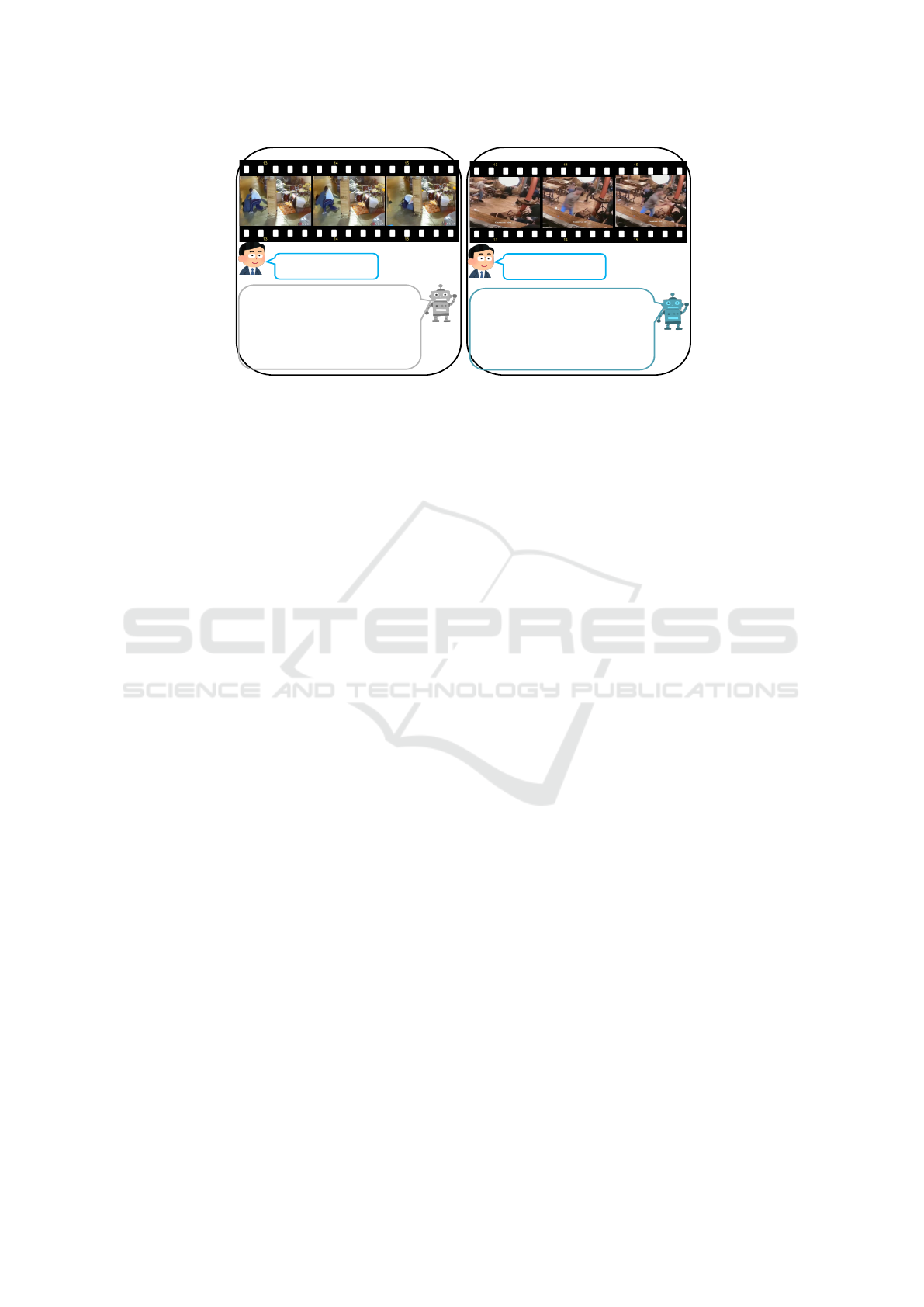

Is this video

violent?

User

It appears that the video depicts a

group of men in a confrontation.

While there is some physical

contact, it does not appear to be a

violent scene. However, I

emphasize that violence can take

many forms and can be

subjective, depending on the

individual's perspective.

Figure 2: LLM’s guardrail bias example. This example

shows an ambiguous answer from Video-LLaMA.

Sudhakaran and Lanz (2017) (Sudhakaran and

Lanz, 2017) developed a method leveraging con-

volutional LSTM networks to detect violent activi-

ties. The convolutional layers extract spatial features,

which are then processed by LSTM units to capture

temporal patterns. Furthermore, Wu et al. (2020)

(Wu et al., 2020) proposed a multimodal approach

that combines audio and visual information to detect

violence under weak supervision, particularly in sce-

narios where one modality might be less effective

Traor

´

e et al. (2020) (Traor

´

e and Akhloufi, 2020)

used a combination of Deep Recurrent Neural Net-

works (RNNs) and CNNs to detect violence in videos.

The CNNs are responsible for extracting spatial fea-

tures from video frames, while the RNNs, particularly

LSTM networks, capture the temporal dynamics.

Another approach by Ullah et al. (2019) (Ullah

et al., 2019) leverages 3D Convolutional Neural Net-

works (3D CNNs) to extract spatiotemporal features

from video sequences for violence detection. The 3D

CNN captures both spatial and temporal dimensions

of violent actions.

However, these methods are often limited by the

scarcity of violence-specific datasets, resulting in

non-generalizable models. Training these models on

a limited amount of data can affect their ability to

generalize effectively across various real-world sce-

narios. Additionally, the sensitive nature of violent

content poses challenges for dataset collection due to

ethical and legal considerations, further complicating

the development of robust and generalizable models.

2.2 Vision Language Models

Vision Language Models for image understanding (Li

et al., 2023a; Dai et al., 2023) and video understand-

ing (Zhang et al., 2023; Lin et al., 2023; Li et al.,

2023b; Panagopoulou et al., 2023) process images or

videos as input and leverage LLMs to generate de-

scriptions or respond to queries based on the visual

content. This integration of visual and textual data fa-

cilitates a more holistic comprehension of the content.

The Salesforce Research Division proposed the

“BLIP family models,” which include BLIP-2 (Li

et al., 2023a), InstructBLIP (Dai et al., 2023), and

X-InstructBLIP (Panagopoulou et al., 2023). These

models share a common goal of integrating vision

and language models and building upon each other’s

architectures and concepts. For instance, BLIP-2

(Li et al., 2023a) is a vision-language pre-training

method that bootstraps from frozen pre-trained uni-

modal models to bridge the modality gap by using

a Querying Transformer (Q-Former). The Q-Former

is a trainable module designed to connect a frozen

image encoder with a frozen LLM. Similarly, In-

structBLIP (Dai et al., 2023) extends BLIP-2 with

the aim of creating general-purpose vision-language

models through instruction tuning, thereby enhancing

the model’s ability to follow natural language instruc-

tions. Furthermore, X-InstructBLIP (Panagopoulou

et al., 2023) extends the capabilities of Instruct-

BLIP by enabling cross-modal reasoning across var-

ious modalities, including video, without extensive

modality-specific pre-training.

Similarly, Video-LLaMA (Zhang et al., 2023) en-

ables frozen LLMs to understand both visual and au-

ditory content in videos. It features two branches: the

Vision-Language Branch and the Audio-Language

Branch, which convert video frames and audio signals

into query representations compatible with LLMs en-

hancing its ability to comprehend complex video con-

tent.

However, these methods rely entirely on frozen

LLMs, which introduces potential biases inherited

from the training data. These biases can significantly

affect the model’s capacity to generalize across di-

verse contexts, including the detection of violent con-

tent. For example, suppose the training data encom-

passes biases related to specific demographics or sce-

narios. In that case, the model may encounter dif-

ficulties in accurately interpreting or responding to

novel, unseen data that diverges from these patterns

as shown in Figure 1. This issue is particularly criti-

cal in applications such as violence detection, where

an accurate and unbiased interpretation is essential.

Moreover, many LLMs are equipped with

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

102

Is this video

violent?

User

No, the video does not

show any violent actions.

The man is seen playing

the drums and the

camera pans around him.

(a) Video-LLaVA.

User

Is this video

violent?

No, this video is not

violent. It shows a group

of people dancing and

having fun in a

restaurant.

(b) LLaMA-VID.

Figure 3: Example of LLM’s alignment bias.

guardrails intended to align with ethical standards and

societal expectations. These guardrails are designed

to prevent generation of harmful or inappropriate con-

tent. However, these measures can inadvertently in-

troduce additional biases (Dong et al., 2024). For ex-

ample, when dealing with sensitive topics such as vi-

olence, LLMs may generate ambiguous responses to

avoid potential controversy. This ambiguity can hin-

der the model’s effectiveness in providing clear and

precise answers, particularly in critical applications

like violence detection or other safety-related tasks,

as illustrated in Figure 2.

Other methodologies, such as those proposed in

Video-LLaVA (Lin et al., 2023) and LLaMA-VID (Li

et al., 2023b), involve not only training a model to

bridge the gap between visual and language modali-

ties but also fine-tuning the LLMs to align with video

content for better understanding. For instance, Video-

LLaVA (Lin et al., 2023) is a model that handles both

images and videos. It aligns image and video repre-

sentations into a unified visual feature space, enabling

an LLM to learn from this unified visual representa-

tion. Additionally, LLaMA-VID (Li et al., 2023b)

is a method where each frame is transformed into

two distinct tokens: the context token, which captures

the overall high-level context, and the content token,

which focuses on specific visual details. During train-

ing, the LLM learns to associate these tokens with the

corresponding visual and textual data.

These adaptations entail modifying the model’s

parameters based on the specific attributes of the

video data. While this process can enhance the

model’s performance in comprehending and interpret-

ing video content, it can also alter the LLM’s under-

standing within specific contexts. Consequently, the

model’s responses may become excessively tailored

to the content observed in the videos used for fine-

tuning, potentially diminishing its ability to general-

ize to new, diverse scenarios as shown in Figure 3.

3 PROBLEM FORMULATION

Our objective is to detect violence within video clips

in the context of human monitoring. Given the

scarcity, diversity, and limited availability of labeled

data specifically containing violent content, we ap-

proach this problem as a zero-shot violence detec-

tion scenario, i.e., without prior training on video

clips from target datasets that include violence or non-

violence classes.

Following this paradigm, the model receives as in-

put a set of video clips {(C

i

)}

n

i=1

. For C

i

, the model

produces two outputs: an associated class prediction

ˆy

i

∈ {0, 1} and an explanatory text e

i

detailing the

classification decision. In this context, a class label

0 represents non-violent content, while 1 denotes vi-

olent content.

Our focus lies in the specific detection of physical

violence defined as an act attempting to cause, or re-

sulting in, pain and/or physical injury, or damage to

the state of something (American Psychological As-

sociation, 2024).

To assess the effectiveness of our method, we em-

ploy accuracy and F1-score. These measures collec-

tively provide insights into the model’s performance,

ensuring robustness and reliability in detecting violent

content within video clips.

4 PROPOSED METHOD

4.1 Overview

We propose VIVID: Vision-Language Integration for

Violence Identification and Detection. This method

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos

103

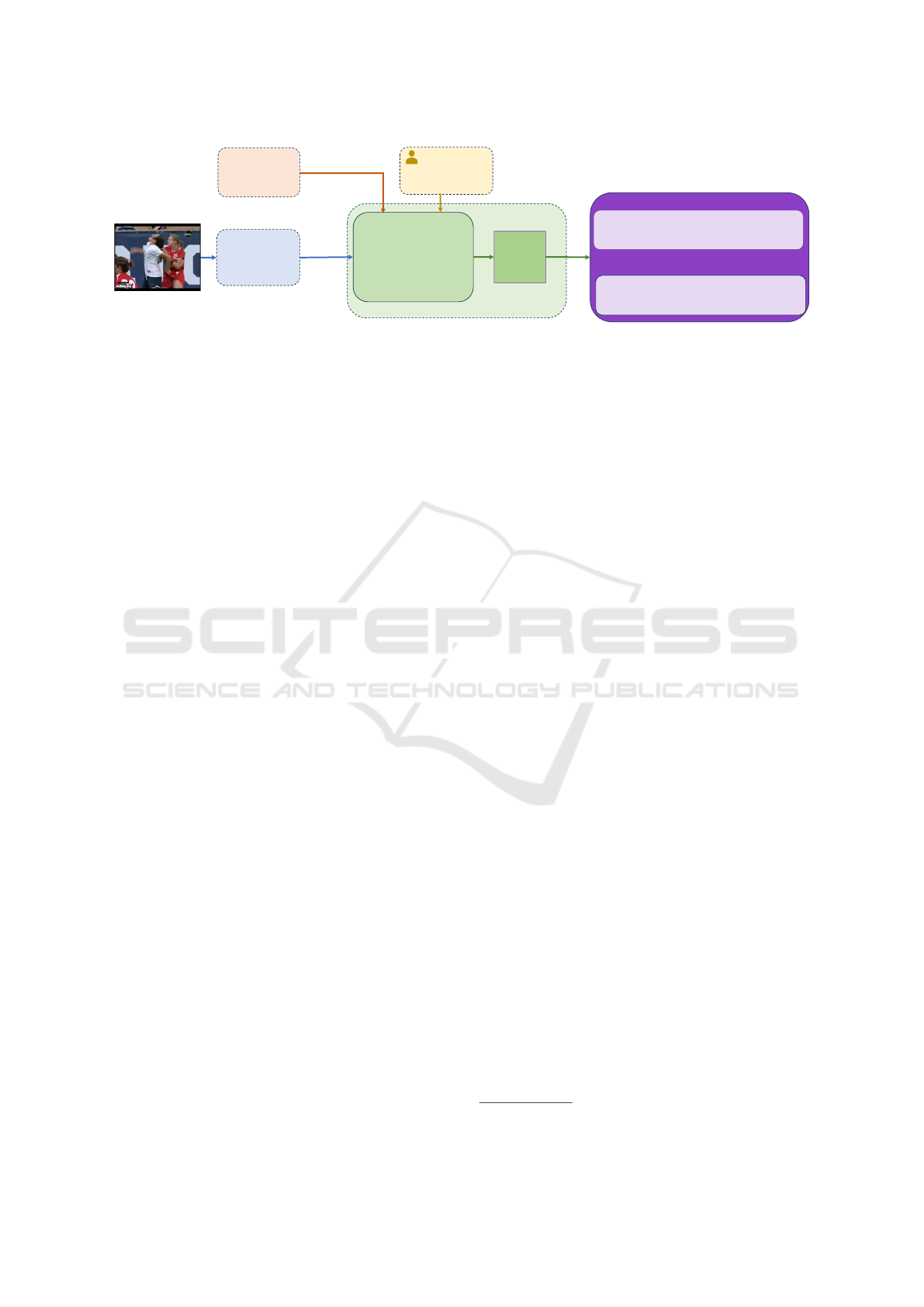

Video

Mo�on

detector

Key-frame selec�on

Transformer

Relevant term

retrieval

Defini�on Retriever

KB

Q

Former

FC

Layer

Modality translator

Visual

prompts

LLM

Final

prompt

Query: Is this

video violent?

User

Answer

Output Generator

Tokenizer

Reordering

Figure 4: Architecture of the proposed method.

Input: Video clip C, Knowledge base KB,

Number of frames k

Output: Prediction ˆy and Explanation e

// Key-frame Selection;

key frames ← Top k frames

with the greatest magnitude of motion;

// Rest: Violence Detector;

// Modality Translator;

queries ← [];

for each frame I

t

∈ key frames do

V

t

← Q Former(I

t

);

append (queries, V

t

);

end

// Definition Retriever;

definitions ← [];

for each query V

t

∈ queries do

D

t

← retrieve definition(V

t

, KB);

append (definitions, D

t

);

end

D

s

← select definition(definitions);

V

′

t

← reorder queries(queries, D

s

);

// Modality Translator;

T

v

← FC layer(V

′

t

);

// Output Generator;

Q

u

← ”Is this video violent?”;

T

u

← LLM tokenizer(Q

u

);

T

d

← LLM tokenizer(D

s

);

final prompt ← create prompt(T

u

, T

v

, T

d

);

( ˆy, e

t

) ← LLM process(final prompt);

return ( ˆy, e)

Algorithm 1: Violence Detection using VIVID for a sin-

gle video clip C.

leverages the strengths of Vision-Language Models

(VLMs) and Language Models (LLMs) to provide a

robust, interpretable solution for video violence de-

tection.

The detailed steps of the VIVID algorithm, out-

lined in Algorithm 1. It involve two main processes:

key-frame selection and violence detection.

In the first step, key-frame selection, the model

utilizes optical flow (Bobick and Davis, 2001) anal-

ysis to quantify motion within the video. For each

frame I

t

in the video C, the magnitude of motion is

computed and stored. The frames with the highest

motion activity are selected as keyframes, capturing

essential moments that potentially contain violent ac-

tions.

In the second step, violence detection, the model

performs several sub-tasks to analyze the keyframes.

It begins by translating each frame into visual queries

V

t

through the Q-Former, enabling direct comparison

with text embeddings. The definitions related to each

query D

t

are then retrieved from the knowledge base

KB. The most relevant definition for the video D

s

is

then selected from the list that contains all the defi-

nitions of each frame. The append(x, y) function is

used to add the element y to the list x. In this context,

it is utilized to add new items to lists queries and def-

initions during the processing steps.

D

s

is used to reorder V

t

to mitigate the ‘lost in

the middle’ effect, which will be explained in detail

in Section 4.3.2. The reordered queries V

′

t

are subse-

quently processed through a fully connected layer to

create visual tokens. A prompt is created using the vi-

sual tokens, definition tokens, and user query tokens.

The final prompt is then processed by the language

model to produce a label indicating whether the con-

tent is violent or not, along with an explanatory text

detailing the classification decision.

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

104

A summary of the architecture is shown in Figure 4.

4.2 Key-Frame Selection

As we argued in Section 1, violent content is scarce

and has limited availability. This scarcity makes it

challenging to train models that can generalize pat-

terns associated with violent actions. To address this

issue, we propose using optical flow to extract poten-

tial violent frames.

Optical flow (Bobick and Davis, 2001) effectively

captures dynamic movements in video sequences,

which are often indicative of violent behaviors. By

semantic definition, a violent action involves forceful

behaviors like hitting, kicking, or grappling, which in-

herently exhibit a greater degree of motion compared

with other non-violent activities (Herrenkohl, 2011).

Consequently, we extract potential violent frames by

calculating the norm of dense optical flow vectors,

summarizing the overall motion magnitude.

Several alternatives to optical flow could be con-

sidered for frame extraction, including traditional ap-

proaches like Frame Differencing and Background

Subtraction (Szeliski, 2022), as well as more ad-

vanced techniques such as Motion History Images

(MHI) (Bobick and Davis, 2001). For instance,

Frame Differencing calculates the difference between

consecutive frames to detect changes in the scene.

While simpler and computationally less expensive, it

may miss subtle movements and fail to capture com-

plex motion patterns associated with violent actions

(Szeliski, 2022). Similarly, Background Subtraction

isolates moving objects by subtracting a background

model from each frame. However, it is susceptible

to lighting changes and background clutter, leading to

false detections (Szeliski, 2022). MHIs create a mo-

tion that represents a single image over time by ac-

cumulating motion information. However, they may

blur detailed motion information and do not provide

specific frames in the video (Sun et al., 2022), mak-

ing it challenging to identify and extract frames cor-

responding to specific violent actions.

Unlike these alternatives, optical flow offers a

balanced approach that effectively captures dynamic

motion. It quantifies the velocity and direction of

motion at each pixel, providing detailed information

about movements within the scene (Szeliski, 2022).

This granularity is essential for identifying violent

actions, which typically involve rapid and forceful

movements. Additionally, optical flow is computa-

tionally efficient and can be applied to both low-

resolution and high-resolution video data.

The importance of using optical flow in violence

detection scenarios has been emphasized in several

works (Dalal et al., 2006; Hassner et al., 2012; Wang

et al., 2013; Park et al., 2024). These methods utilize

optical flow to extract trajectories, build descriptors,

or extract features from video data. However, they

primarily use optical flow for training models to learn

patterns from violent video content, whereas our ap-

proach focuses on selecting key-frames directly based

on motion magnitude.

Consider a video clip C that is decomposed into a

set of frames, where a frame at time t, represented as

I(x, y, t), with (x, y) being the pixel coordinates. The

dense optical flow relates the partial derivatives of im-

age intensity with respect to spatial coordinates and

time as follows:

I

x

· u

x

+ I

y

· u

y

+ I

t

= 0, (1)

where, I

x

and I

y

are the spatial gradients of image in-

tensity in the x and y directions, respectively, and I

t

is the temporal gradient. u

x

and u

y

represent the flow

velocities in the x and y directions, respectively. The

norm of optical flow for a single pixel is calculated as:

|u| =

q

(u

x

)

2

+ (u

y

)

2

. (2)

By summing the norms across all pixels in the

frame, we obtain the total motion magnitude for the

frame as:

U

t

=

∑

x

∑

y

|u(x, y, t)|. (3)

After calculating U

t

for different frames along the

video, we extract the top k frames with the highest

values, which we assume correspond to the frames

containing the highest activity. These frames serve

as input for the subsequent analysis.

We acknowledge that extracting keyframes with

the highest activity does not guarantee selecting all

violent frames. For instance, high motion, such as

a passing car, might deceive the optical flow selec-

tor, causing it to neglect frames with slow-motion vi-

olence, such as a person threatening another with a

knife. In such cases, VIVID may fail to detect the vi-

olent scenario. We leave this challenge to our future

work, which can be addressed by incorporating the

detection of potentially dangerous objects during the

frame selection process.

4.3 Violence Detection

In this step, we combine a Vision Language Model

(VLM) with a Language Model (LLM) to detect vio-

lence in videos. The detection process involves sev-

eral key modules working together, as illustrated in

Figure 4.

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos

105

The Modality Translator component translates vi-

sual data from the video frames into sequence of to-

kens, which can be processed by the LLM. This in-

volves using the VLM’s Q-Former to generate visual

queries V

t

. After reordering the queries V’

t

, they are

processed by a fully connected (FC) layer that con-

verts them into tokens T

v

compatible with the LLM.

This translation ensures that the visual information is

appropriately represented for subsequent analysis.

Following this, the Retrieval module plays a criti-

cal role. It retrieves relevant information by compar-

ing V

t

with the definitions in the knowledge base KB,

identifying the most pertinent violence-related defini-

tions D

t

. From these, we extract the most relevant

definition D

s

for the entire video. To address the ‘lost

in the middle’ effect often observed in LLMs, the vi-

sual queries are reordered to prioritize the most rele-

vant information, ensuring the LLM receives a coher-

ent and contextually appropriate set of inputs.

Finally, the Generator module combines T

v

, user

query tokens T

u

, and the most relevant definition to-

kens T

d

to create a comprehensive final prompt. This

prompt is then processed by the LLM, which gen-

erates a response to the user’s query, determining

whether the video contains violent content.

4.3.1 Modality Translator

As shown in Figure 4, we selected an architecture

based on BLIP-2 (Li et al., 2023a) for the VLM.

This architecture includes a Q-Former, which pro-

vides visual queries containing visual representations

V

t

corresponding to the text, making them directly

comparable with text embeddings in a common sub-

space. Additionally, the VLM has a fully-connected

(FC) layer that linearly projects the reordered visual

queries V

′

t

to match the input dimension of LLM. This

means that the FC layer acts as a visual tokenizer,

converting the reordered visual queries into a set of

tokens T

v

that the LLM can process. The reordering

of visual queries ensures that the most relevant visual

information is emphasized, which is crucial for accu-

rate analysis. This process will be explained in detail

in section 4.3.2.

4.3.2 Definition Retriever

In this section, we explain the processes of retrieving

the definitions to ensure their relevance and accuracy

for violence detection. We break down this section

into three sub-modules: Creating KB, where we de-

fine and build the knowledge base of violence-related

terms and definitions; Relevant Term Retrieval, which

covers how visual queries are compared with the

knowledge base to extract the most relevant defini-

tions; and Reordering Visual Queries, where we dis-

cuss the reordering of visual queries to prioritize the

most pertinent information, mitigating the ‘lost in the

middle’ effect in LLMs.

Creating KB. Retrieval Augmented Generation

(RAG) (Lewis et al., 2020) enhances LLMs by

integrating external knowledge, such as databases,

to improve performance in knowledge-intensive or

domain-specific applications that require continually

updating information (Gao et al., 2023). RAG re-

trieves relevant documents based on the user’s query

and combines them with the original prompt to gen-

erate a response.

Our proposed method addresses biases within

LLMs by drawing inspiration from the RAG frame-

work. However, instead of adding new information,

it focuses on mitigating existing biases related to sen-

sitive topics like violence. To achieve this goal, we

leverage the VLM output, which provides informative

visual representations V

t

corresponding to the text.

Instead of relying on user queries to retrieve docu-

ments, we compare these visual representations with

a predefined set of violence-related terms and defini-

tions, referred to as the knowledge base KB.

Since, to the best of our knowledge, there is no

existing comprehensive knowledge base of violence-

related terms, we propose one that is defined as:

KB = {(w

1

, d

1

), (w

2

, d

2

), . . . , (w

n

, d

n

)}, (4)

where w

i

represents the i-th violence-related term

(word) and d

i

represents the corresponding definition.

We created the knowledge base (KB) of violence-

related terms, comprising 48 entries, each explaining

various aspects of physical violence. These aspects

include direct physical contact, violent sports, col-

lective violence, and broader terms denoting physical

force.

First, direct physical contact encompasses terms

such as “physical abuse”, “physical disputes”, “rape”,

“battery”, and “fights”, describing incidents where

physical harm is directly inflicted upon individuals.

Regarding terms that frequently escalate into

physical confrontations, this includes “clash”, “as-

sault”, “aggression”, and “confrontation”, highlight-

ing situations that may start as verbal or non-physical

conflicts but can quickly escalate to physical violence.

In terms of violent sports, these are activities

where the objective is to subdue opponents through

physical force, such as “boxing”, “wrestling”, “muay

Thai”, and “karate”, where physical engagement is a

structured and accepted part of the sport.

For collective violence, the terms include “riot”,

“brawl”, “vandalism”, and “scuffle”, reflecting sce-

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

106

narios where multiple individuals are involved in vio-

lent acts, often leading to public disorder or damage.

Lastly, broader terms denoting physical force en-

compass words like “war”, “savagery”, “oppression”,

“bullying”, and “genocide”, representing extensive

and severe forms of violence, highlighting large-scale

or systematic acts of harm and intimidation.

This diverse compilation ensures a comprehen-

sive understanding of violence across different con-

texts. The definitions were obtained from well-known

sources, including the Cambridge and Oxford dictio-

naries, Wikipedia, and Wex, a legal dictionary spon-

sored by the Legal Information Institute at Cornell

Law School.

Relevant Term Retrieval. By comparing V

t

with

the violence-related terms in the knowledge base, we

identify the most relevant violence-related definition

D

t

associated with each content frame. Specifically,

we calculate the similarity between the visual repre-

sentations of each frame and the textual definitions.

Given a video frame I

t

∈ R

H×W ×3

, the pre-trained

Q-Former produces visual queries V

t

∈ R

N×d

q

. Here,

N is the number of visual queries obtained by the Q-

Former, and d

q

is the dimension of the visual embed-

ding of each query, respectively. Subsequently, we

use the pre-trained text transformer from BLIP2 to

obtain the definition embedding D

t

∈ R

M×d

q

. Here

M is the number of text tokens and the dimension of

the embedding of each token are set to d

q

. For each

frame, we retrieve the definition from KB that has the

smallest squared Euclidean (L2) distance to the visual

embedding.

In BLIP2 (Li et al., 2023a), to calculate the con-

trastive loss, they propose to compare the [CLS] token

from the text embedding and the visual query with

the highest pairwise similarity. However, since our

goal is not to align visual and text representations, but

rather to select the most relevant violent definition for

the video, we use mean pooling to compare the en-

tire visual sentence with the entire definition sentence.

Specifically, through the mean-pooling step, we ob-

tain and compare the visual sentence V

t

s

∈ R

d

q

and

definition sentence D

t

s

∈ R

d

q

. After processing the

top k frames, we select the definition with the high-

est pairwise similarity across all frames as the most

relevant for the video. We denote the definition sen-

tence vector of the selected definition as D

s

.

Reordering Visual Queries. The ‘lost in the mid-

dle’ effect is a phenomenon observed in LLMs where

the model’s performance degrades when critical infor-

mation is situated in the middle of a long input context

(Liu et al., 2024). This phenomenon occurs because

LLMs tend to focus more on the input’s beginning and

end, often neglecting the information in the middle. In

our method, this effect poses a significant challenge.

To mitigate the ‘lost in the middle’ effect, we pro-

pose reordering the visual queries to prioritize those

that are more relevant to the violence definition. By

aligning the visual queries (V

t

) with the violence def-

inition (D

s

), the LLM receives a coherent and contex-

tually appropriate set of inputs. This alignment en-

sures that the most critical information is positioned at

the beginning of each frame, where the LLM is more

likely to focus its attention.

By doing so, the LLM can better understand

whether the visual content is directly related to the

definition of violence, enabling it to disregard irrel-

evant definitions when necessary. Overall, this ap-

proach not only addresses the ‘lost in the middle’ ef-

fect but also enhances the system’s robustness across

diverse scenarios.

To reorder the visual queries, first, we compute

a similarity vector S by calculating the dot product

between V

t

and D

s

as follows:

S = (V

1

· D

s

, . . . , V

t

· D

s

). (5)

This similarity vector indicates how closely each vi-

sual query aligns with the definition. Next, we sort the

indices of the visual queries based on their similarity

scores in descending order. This sorting arranges the

visual queries from the most to the least relevant ac-

cording to their similarity to the definition.

Finally, the visual queries are reordered using

these sorted indices, resulting in the reordered visual

queries V

′

t

. This process is repeated for each of the top

k frames to ensure that the LLM receives a consistent

and contextually relevant input.

4.3.3 Output Generator

To create the final prompt for the LLM, we extract the

tokens from the user query T

u

and the most relevant

definition T

d

, obtained directly by the LLM tokenizer.

The text embeddings are used solely for comparison

purposes between visual and text data: first, to obtain

the most relevant violence-related definition for the

video, and second, to reorder visual queries according

to this definition.

Finally, we receive from the Modality Translator

module T

v

for the corresponding frame. We then

combine T

u

, T

v

, and T

d

to form the final prompt: “Is

this video: <frame 1>, . . . , <frame k> violent, con-

sidering that <definition> is also an expression of

violence?” This prompt enhances LLM’s understand-

ing of the concept of violence, helping to mitigate bi-

ases and ensure robustness across diverse scenarios.

Figure 5 illustrates an example using a single frame.

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos

107

Defini�on

Retriever

Modality

translator

LLM

Query: Is this

image violent?

User

Output Generator

Is this <image>

violent considering

that <defini�on> is

also a expression

of violence?

<image>

<defini�on>

Yes, there are two girls fighting and is

violence because one girl is trying to hurt

the other using her body.

No, there are two girls fighting but there is

no violence because they are girls.

Answer with bias handling

Answer without bias handling

Figure 5: Example of handling bias.

5 EXPERIMENTS

5.1 Datasets

Table 1 summarizes the five datasets we used in the

experiments.

Hockey Fight Detection Dataset (Bermejo et al.,

2011) was specifically created to introduce a new

video dataset for evaluating violence detection sys-

tems, where both normal and violent activities occur

in similar, dynamic settings. It contains 1 000 clips

of action from the National Hockey League (NHL)

games. Each clip comprises 50 frames of 720×576

pixels and is manually labeled as “fight” or “non-

fight”.

Movies Fight Detection Dataset (Bermejo et al.,

2011) was constructed to explore the generalization

capacity of learning fight patterns. It consists of 200

video clips obtained from action movies, of which

100 contain fight, and 100 videos with non-fight

scenes from football games, and other events. The

dataset contains videos depicting a wide variety of

scenes that were captured at different resolutions and

are manually labeled as “fight” or “non-fight”.

Surveillance Camera Fight Dataset (Aktı et al.,

2019) was collected mostly from YouTube. It encom-

passes various fight scenarios, including kicks, fists,

hitting with objects, and wrestling. The dataset fea-

tures footage from different locations such as cafes,

bars, streets, buses, and shops. In total, there are 300

videos in the dataset: 150 depict fighting sequences,

while the remaining 150 show non-fight sequences.

These videos vary in resolution, and frame numbers,

and have an approximate duration of two seconds

each.

Real-World Fighting-2000 Dataset (RWF-2000)

(Cheng et al., 2021) was collected from YouTube. It

consists of 2 000 video clips captured from around

1 000 raw surveillance videos with extended footage.

This dataset is split into two parts: the training set

(80%) and the test set (20%), in which half depicts vi-

olent behaviors, while the other half represents non-

violent activities. The videos have different resolu-

tions and are uniformly cut into 5-second clips with a

frame rate of 30 frames per second.

XD-Violence Dataset (Wu et al., 2020) is a large-

scale and multi-scene dataset that was collected from

movies and YouTube. The dataset includes a total

of 91 movies, which were used to collect both vio-

lent and non-violent events. Additionally, in-the-wild

videos were collected from YouTube. This dataset

has a total duration of 217 hours, containing 4 754

untrimmed videos with audio signals and weak labels,

from which 2 405 are classified as violent, while 2 349

are non-violent. The dataset is split into two parts: a

training set containing 3 954 videos and a test set with

800 videos. The test set consists of 500 violent videos

and 300 non-violent videos.

5.2 Implementation Details

In the Key-frame selection step, we utilized the Gun-

nar Farneback technique, which is implemented in the

Open Source Computer Vision Library (OpenCV)

1

to

calculate dense optical flow. We set the parameter

k = 5, meaning that five frames are selected to repre-

sent each video. This selection helps in reducing the

computational load while maintaining essential mo-

tion information.

We employed a divide-and-conquer strategy for

longer videos, which we define as those containing

more than 5 000 frames. This approach involves split-

ting the video into up to five parts, treating each part

independently to manage the data more efficiently. A

video is classified as violent if at least one of its parts

contains violent content.

For the VLM (modality translator), the architec-

ture includes a Q-Former with N = 32 visual queries,

each having a dimension of d

q

= 768 (Li et al.,

2023a). Additionally, the VLM has an FC layer that

linearly projects each output visual query embedding

1

https://opencv.org/

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

108

Table 1: Dataset description.

Dataset Data Scale

(# clips)

Length/Clip # Violent clips # Non-violent clips Source of scenarios

Movies 200 1.6-2 sec 100 100 Movies and sports

Surveillance

Fight

300 1.4-2 sec 150 150 CCTV and mobile cameras

RWF-2000

2

400 5 sec 200 200 CCTV and mobile cameras

Hockey 1000 1.6-1.96 sec 500 500 Ice hockey games

XD-Violence

2

800 0-10 min 500 300 Movies, sports, games,

hand-held cameras,

CCTV, car cameras, etc.

to match the text embedding dimension of the LLM,

resulting in a 2048-dimensional output layer.

For the LLM, we chose the instruction fine-tuned

version of Google’s T5 (Raffel et al., 2020), also

known as the Text-to-Text Transfer Transformer Lan-

guage Model (Flan-T5) (Chung et al., 2024). Since

we intend for VIVID to be used in detecting violence

in real-time scenarios, we selected the Flan-T5-XL

(Chung et al., 2024), which contains around 3 billion

parameters. This model size allows for a nuanced un-

derstanding and processing of complex language in-

puts on a single NVIDIA GeForce RTX 3090 GPU,

which has 24 GB of GDDR6X memory, and a boost

clock of 1 700 MHz .

5.3 Experimental Results

5.3.1 Comparison with LLM-Based Methods

We compare VIVID with other LLM-based methods

using the test split of video clips from each dataset as

input, along with the user prompt: ”Is this video vi-

olent?” This user query is consistently applied across

all baseline methods to ensure a fair comparison.

The results of our experiments, as summarized

in Table 2 , reveal significant insights into the per-

formance of various models on the five datasets.

VIVID consistently outperforms other LLM-based

models across most datasets, achieving improve-

ments of 0.01–0.324, 0.007–0.187, 0.014–0.294, and

0.066–0.334 in terms of accuracy, and 0.01–0.492,

0.009–0.446, 0.011–0.277, and 0.062–0.537 in terms

of F1-Score on the Movies, RWF-2000, Hockey, and

XD-Violence datasets, respectively.

In the Movies dataset, VIVID significantly outper-

forms other LLM-based models by effectively cap-

2

The Data Scale column lists video clips from the test

split of the dataset, used for model comparison. We use only

the test split to align our evaluation with other methods that

measure performance using the test split.

turing complex visual and contextual cues. It also

leads in the RWF-2000 dataset, demonstrating its ef-

fectiveness in real-world fight detection. The Hockey

dataset results show substantial improvements, high-

lighting VIVID’s capability to detect violent actions

in fast-moving and complex interactions. In the XD-

Violence dataset, VIVID’s robustness is validated by

its ability to handle diverse and complex violent sce-

narios.

The effectiveness of our method lies in its abil-

ity to identify potential violent frames and manage

various biases associated with LLMs: inherited bias,

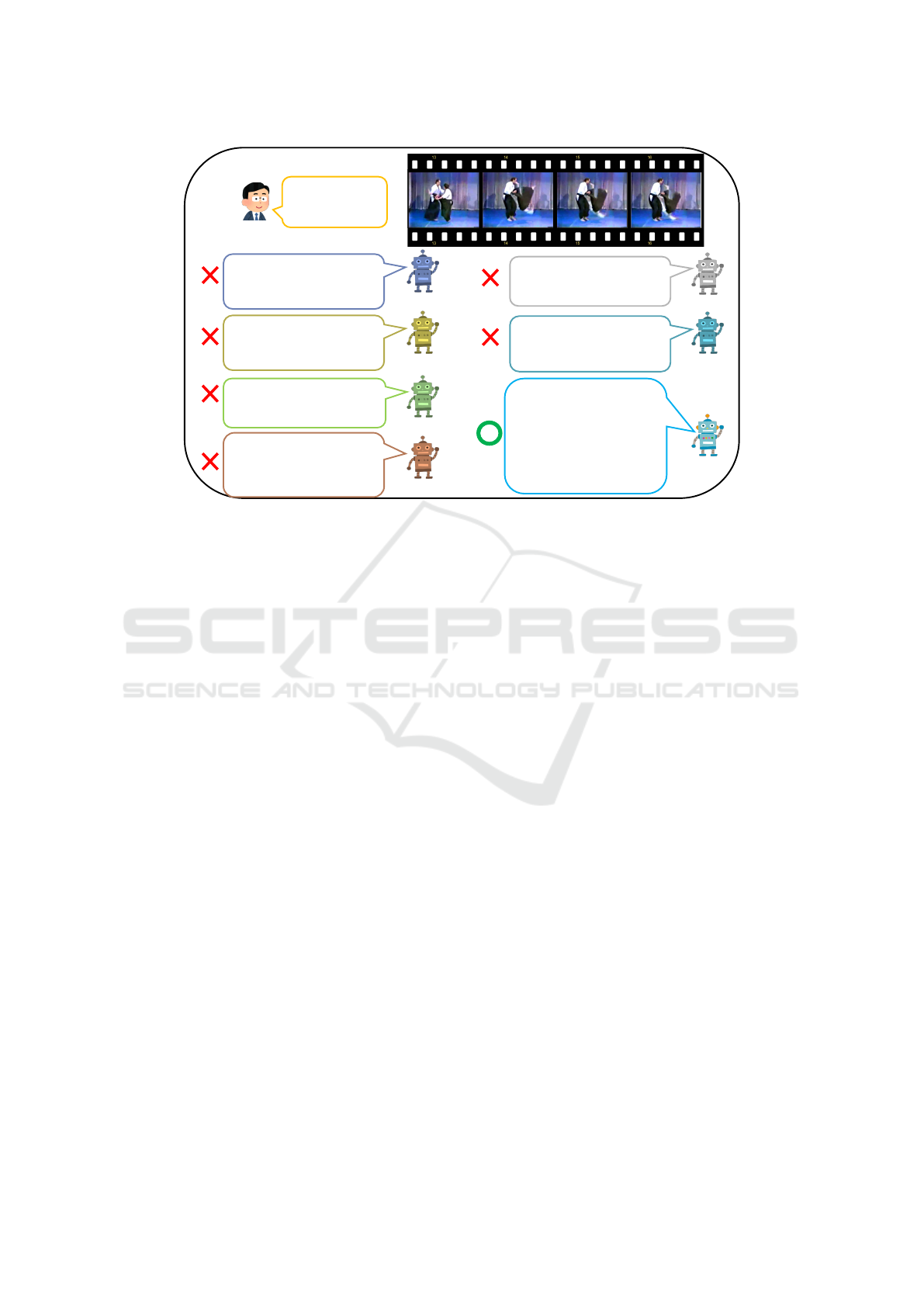

guardrails bias, and alignment bias. Figure 7 shows

the classification made by VIVID over the examples

shown in Figures 1c, 2, and 3b, where our method

successfully identifies the videos as violent. As il-

lustrated in Figure 8, incorporating a violence-related

term in the prompt enables users to address the bias

and influence the LLM’s judgment based on the defi-

nition of violence. The dataset (Bermejo et al., 2011)

categorizes all martial arts as violent sports. There-

fore, by including martial arts as violence-related

terms in the KB, the LLM’s response aligns with the

definition of Karate as a violent sport. This compre-

hensive approach ensures that our method remains ro-

bust and effective across various scenarios, providing

a reliable tool for bias management in LLMs.

In the Surveillance Fight dataset, the “Instruct-

BLIP” model shows the highest accuracy 0.796, while

the “LLaMA-VID” model achieves the highest F1-

Score 0.805. Our model performs well with an ac-

curacy of 0.736 and an F1-Score of 0.781 but does

not lead in this dataset. This fact suggests that while

our model is effective, it may struggle with the spe-

cific challenges posed by surveillance footage, such

as varying lighting conditions like insufficient illumi-

nation, and occlusions as shown in Figure 6. These

challenges could be addressed by jointly fine-tuning

the VLM and the LLM with a small amount of vi-

olent and non-violent videos under these conditions.

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos

109

Table 2: Comparison with LLM-based methods in terms of Accuracy and F1-Score.

Model

LLM

Backbone

Movies Surveillance

Fight

RWF-2000 Hockey XD-Violence

Acc F1 Acc F1 Acc F1 Acc F1 Acc F1

BLIP2

3

Flan-T5-XL (3B) 0.900 0.880 0.776 0.797 0.767 0.760 0.841 0.859 0.760 0.770

InstructBLIP

3

Vicuna-1.1 (7B) 0.810 0.765 0.796 0.784 0.742 0.685 0.932 0.932 0.535 0.436

X-InstructBLIP Vicuna-1.1 (7B) 0.880 0.863 0.793 0.800 0.785 0.766 0.856 0.831 0.648 0.616

Video-LLaMA LLaMA-2 (7B) 0.661 0.492 0.656 0.511 0.610 0.365 0.660 0.676 0.492 0.330

Video-LLaVA Vicuna-1.5 (7B) 0.975 0.974 0.726 0.777 0.782 0.802 0.662 0.743 0.726 0.802

LLaMA-VID Vicuna-1.5 (7B) 0.965 0.963 0.783 0.805 0.790 0.794 0.940 0.942 0.755 0.805

VIVID Flan-T5-XL (3B) 0.985 0.984 0.736 0.781 0.797 0.811 0.954 0.953 0.826 0.867

No, a group of people

standing around a pool table

Is this video

violent?

User

Figure 6: Example where occlusion caused by the pool table

lights partially blocking the violent scene, along with lack

of illumination, resulted in the LLM failing to detect the

violent scene correctly.

However, the primary objective of our research is to

evaluate the capabilities of an LLM to classify violent

content in a zero-shot manner. This approach allows

us to assess the inherent strengths and limitations of

the LLM without further modifications. Nonetheless,

we recognize the potential for improvement through

fine-tuning and consider it a promising direction for

our future work.

5.3.2 Comparison with Traditional

Hand-Crafted Features and Deep

Learning-Based Methods

When comparing VIVID with traditional hand-

crafted features and deep learning-based methods, as

shown in Table 3, we notice a clear difference in per-

formance. Traditional methods such as HOG + HIK

3

BLIP2 and InstructBLIP do not support video input.

We analyze violent content at the frame-level, extracting

frames as in X-InstructBLIP. If any frame contains violent

content, the entire video is classified as violent.

(Bermejo et al., 2011), HOF + HIK (Bermejo et al.,

2011), and MoSIFT + HIK (Bermejo et al., 2011)

show lower accuracy across the datasets. For in-

stance, MoSIFT + HIK achieves an accuracy of 0.895

in the Movies dataset and 0.909 in the Hockey dataset,

which are significantly lower than our model’s per-

formance. This fact indicates that traditional hand-

crafted features are less effective in capturing com-

plex patterns of violence in videos. Deep learning-

based methods, such as Xception + Bi-LSTM + at-

tention (Aktı et al., 2019), Flow Gated (Cheng et al.,

2021), and Conv3D (Park et al., 2024), demonstrate

high performance. For example, Xception + Bi-

LSTM + attention achieves an accuracy of 1.0 in

the Movies dataset and 0.98 in the Hockey dataset.

However, these methods often require a considerable

amount of training data to generalize. Due to the re-

stricted amount of available training data, the result-

ing models are often ad-hoc and fail to generalize vi-

olent behavior across different scenarios.

In contrast, VIVID uses zero-shot classification,

which leverages the pre-existing knowledge of the

LLM along with bias handling to enhance perfor-

mance. This approach not only effectively classifies

violent content but also provides interpretability. The

interpretability feature is particularly advantageous as

it helps users understand why a video instance was

classified as violent or not, making it a valuable tool

for real-world applications. Even though VIVID does

not always achieve the highest accuracy compared

to some deep learning methods, its performance is

competitive or comparable to these methods. Addi-

tionally, our method outperforms the Xception + Bi-

LSTM + attention method in the Surveillance Fight

dataset, achieving an accuracy of 0.736 compared to

0.720.

5.3.3 Ablation Study

To investigate the effects of reordering visual queries,

we conducted experiments using three distinct vari-

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

110

User

Is this video

violent?

Yes, a camera capture a

group of people fighting

at a bar.

VIVID

(a) BLIP-2.

Is this video

violent?

User

Yes, two children are

fighting in a kitchen.

VIVID

(b) Video-LLaMA.

Is this video

violent?

User

Yes, a group of people

are fighting on a street

at night.

VIVID

(c) Video-LLaVA.

Figure 7: Example in which VIVID successfully handles the biases of the LLMs.

Table 3: Comparison with hand-crafted features and deep

learning-based methods in terms of accuracy. A hyphen in-

dicates that the results are not provided in the corresponding

paper.

Type Model Movies S.

Fight

RWF

2000

Hockey

Hand

Crafted

Features

HOG + HIK 0.490 - - 0.917

HOF + HIK 0.590 - - 0.886

MoSIFT + HIK 0.895 - - 0.909

DL

Based

Xception

+ Bi-LSTM

+ attention

1.000 0.720 - 0.980

Flow Gated 1.000 - 0.872 0.980

Conv3D 1.000 - - 0.981

LLM

based

VIVID 0.985 0.736 0.797 0.954

ants of our method. These variants share the com-

mon approach of comparing a visual sentence with

text embedding to identify the most relevant violence-

related definition for an image. However, in each vari-

ation, the text and visual embeddings interact differ-

ently.

Variant 1: Inspired by BLIP2 (Li et al., 2023a), we

compare the [CLS] token from the text with the visual

queries. The most relevant violent definition is iden-

tified as the one with the highest pairwise similarity.

Variant 2: Instead of using the [CLS] token, we use

mean pooling. Specifically, we compare a visual sen-

tence obtained by mean pooling all the visual queries

with a definition sentence obtained by mean pooling

all sentence tokens, as explained in Section 4.3.2.

VIVID: In addition to using the mean pooling ap-

proach from Variant 2, we also reorder the visual

queries. By calculating the dot product between the

visual sentence and each text token, we can prioritize

visual queries that are more relevant to the violence

definition, as explained in Section 4.3.2.

Table 4 shows the results of each variant on the

five datasets. From these results, it is evident that

Table 4: Comparison of our method’s variants in terms of

Accuracy.

Model Movies S.

Fight

RWF

2000

Hockey XD

Violence

Variant 1 0.915 0.706 0.800 0.942 0.671

Variant 2 0.940 0.680 0.792 0.920 0.730

VIVID 0.985 0.736 0.797 0.954 0.826

VIVID demonstrates superior performance across

most datasets. It achieves the highest accuracy in

the Movies, Surveillance Fight, Hockey, and XD-

Violence datasets. Although VIVID was not the best

in the RWF-2000 dataset, the difference in accuracy

was small enough to be negligible. Therefore, we

consider VIVID to have achieved same performance

to Variant 1 in this dataset. Overall, the robust per-

formance of VIVID in four out of five datasets high-

lights its effectiveness in enhancing the model’s accu-

racy through the reordering of visual queries.

6 CONCLUSIONS

In this paper, we introduced VIVID, a novel method

for detecting violent content in videos by integrat-

ing Vision-Language Models (VLM) with Large Lan-

guage Models (LLMs). Our method demonstrates

superior performance across multiple datasets, effec-

tively capturing complex visual and contextual cues.

VIVID’s zero-shot classification capability allows

it to identify violent content without additional train-

ing, making it an alternative in scenarios with limited

labeled data. By incorporating an external knowledge

base, VIVID mitigates biases and provides reliable re-

sults. Its interpretability characteristics enhance user

trust by explaining the reasons behind its classifica-

tions.

There are a number of directions that can be ex-

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos

111

InstructBLIP

No, the video of two men

practicing karate is not

violent.

Is this video

violent?

User

No, the video shows two

people performing

traditional Japanese

martial art.

No, it only shows a man

and a woman performing

a karate routine on stage.

No, it shows two men

practicing martial arts in

a dojo.

No, a man and a woman

in a kimono performing a

karate move.

BLIP2

Video_LLaMA

Video_LLAVA

LLaMA_VID

X-InstructBLIP

No, two men are

practicing a martial art.

VIVID

Yes, a man doing a

karate move on a blue

stage. It is violent

because karate is a

striking art that uses

punches, kicks, and

knee strikes.

Figure 8: Example in which the inclusion of a violent-related definition improves performance compared to the baseline

methods.

plored for future research, including (1) fine-tuning

the VLM and LLM with a small amount of violent

and non-violent videos to address specific challenges

like varying lighting conditions and occlusions in

surveillance footage, (2) including prompt engineer-

ing to have a broader range of violence-related terms

and scenarios, and (3) enhancing the interpretability

features to provide more detailed explanations for the

model’s classifications. Overall, VIVID offers a bal-

anced approach combining accuracy, interpretability,

and generalizability, making it a robust solution for

violent content classification in real-world applica-

tions.

REFERENCES

Abdali, A.-M. R. and Al-Tuma, R. F. (2019). Robust Real-

Time Violence Detection in Video Using CNN and

LSTM. In Proc. IEEE SCCS, pages 104–108.

Aktı, S¸., Tataro

˘

glu, G. A., and Ekenel, H. K. (2019). Vision-

Based Fight Detection from Surveillance Cameras. In

Proc. IEEE IPTA, pages 1–6.

American Psychological Association (2024). Physical

abuse and violence. Accesed on 20/12/204 from:

https://www.apa.org/topics/physical-abuse-violence.

Bermejo, E. N., D

´

eniz, O. S., Bueno, G. G., and Sukthankar,

R. (2011). Violence Detection in Video Using Com-

puter Vision Techniques. In Proc. CAIP, Part II 14,

pages 332–339.

Bobick, A. F. and Davis, J. W. (2001). The Recognition of

Human Movement Using Temporal Templates. IEEE

Transactions on Pattern Analysis and Machine Intel-

ligence, 23(3):257–267.

Cheng, M., Cai, K., and Li, M. (2021). RWF-2000: An

Open Large Scale Video Database for Violence De-

tection. In Proc. ICPR, pages 4183–4190.

Chung, H. W., Hou, L., Longpre, S., Zoph, B., Tay, Y., Fe-

dus, W., Li, Y., Wang, X., Dehghani, M., Brahma,

S., et al. (2024). Scaling Instruction-Finetuned Lan-

guage Models. Journal of Machine Learning Re-

search, 25(70):1–53.

Dai, W., Li, J., Li, D., Tiong, A. M. H., Zhao, J., Wang,

W., Li, B., Fung, P., and Hoi, S. (2023). Instruct-

BLIP: Towards General-Purpose Vision-Language

Models with Instruction Tuning. arXiv preprint

arXiv:2305.06500.

Dalal, N., Triggs, B., and Schmid, C. (2006). Human De-

tection Using Oriented Histograms of Flow and Ap-

pearance. In Proc. ECCV, Part II 9, pages 428–441.

Das, S., Sarker, A., and Mahmud, T. (2019). Violence De-

tection from Videos Using HOG Features. In Proc.

IEEE EICT, pages 1–5.

De Souza, F. D., Chavez, G. C., do Valle Jr, E. A., and

Ara

´

ujo, A. d. A. (2010). Violence Detection in Video

Using Spatio-Temporal Features. In Proc. SIBGRAPI,

pages 224–230.

Deniz, O., Serrano, I., Bueno, G., and Kim, T.-K. (2014).

Fast Violence Detection in Video. In Proc. VISI-

GRAPP (VISAPP), volume 2, pages 478–485.

Dong, Y., Mu, R., Zhang, Y., Sun, S., Zhang, T., Wu, C., Jin,

G., Qi, Y., Hu, J., Meng, J., et al. (2024). Safeguarding

Large Language Models: A Survey. arXiv preprint

arXiv:2406.02622.

Febin, I., Jayasree, K., and Joy, P. T. (2020). Violence De-

tection in Videos for an Intelligent Surveillance Sys-

tem Using MoBSIFT and Movement Filtering Algo-

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

112

rithm. Pattern Analysis and Applications, 23(2):611–

623.

Gao, Y., Xiong, Y., Gao, X., Jia, K., Pan, J., Bi, Y., Dai, Y.,

Sun, J., and Wang, H. (2023). Retrieval-Augmented

Generation for Large Language Models: A Survey.

arXiv preprint arXiv:2312.10997.

Hassner, T., Itcher, Y., and Kliper-Gross, O. (2012). Violent

Flows: Real-Time Detection of Violent Crowd Behav-

ior. In Proc. IEEE CVPR Workshops, pages 1–6.

Herrenkohl, T. I. (2011). Violence in Context: Current Evi-

dence on Risk, Protection, and Prevention. OUP USA.

Lewis, P., Perez, E., Piktus, A., Petroni, F., Karpukhin,

V., Goyal, N., K

¨

uttler, H., Lewis, M., Yih, W.-t.,

Rockt

¨

aschel, T., et al. (2020). Retrieval-Augmented

Generation for Knowledge-Intensive NLP Tasks. Ad-

vances in Neural Information Processing Systems,

33:9459–9474.

Li, J., Li, D., Savarese, S., and Hoi, S. (2023a). BLIP-

2: Bootstrapping Language-Image Pre-Training with

Frozen Image Encoders and Large Language Models.

In Proc. ICML, pages 19730–19742.

Li, Y., Wang, C., and Jia, J. (2023b). LLaMA-VID: An

Image is Worth 2 Tokens in Large Language Models.

arXiv preprint arXiv:2311.17043.

Lin, B., Zhu, B., Ye, Y., Ning, M., Jin, P., and Yuan, L.

(2023). Video-LLaVA: Learning United Visual Rep-

resentation by Alignment Before Projection. arXiv

preprint arXiv:2311.10122.

Liu, N. F., Lin, K., Hewitt, J., Paranjape, A., Bevilac-

qua, M., Petroni, F., and Liang, P. (2024). Lost in

the Middle: How Language Models Use Long Con-

texts. Transactions of the Association for Computa-

tional Linguistics, 12:157–173.

Mumtaz, N., Ejaz, N., Habib, S., Mohsin, S. M., Tiwari, P.,

Band, S. S., and Kumar, N. (2023). An Overview of

Violence Detection Techniques: Current Challenges

and Future Directions. Artificial Intelligence Review,

56(5):4641–4666.

Panagopoulou, A., Xue, L., Yu, N., Li, J., Li, D., Joty, S.,

Xu, R., Savarese, S., Xiong, C., and Niebles, J. C.

(2023). X-InstructBLIP: A Framework for Aligning

X-Modal Instruction-Aware Representations to LLMs

and Emergent Cross-Modal Reasoning. arXiv preprint

arXiv:2311.18799.

Park, J.-H., Mahmoud, M., and Kang, H.-S. (2024).

Conv3D-Based Video Violence Detection Network

Using Optical Flow and RGB Data. Sensors,

24(2):317.

Raffel, C., Shazeer, N., Roberts, A., Lee, K., Narang, S.,

Matena, M., Zhou, Y., Li, W., and Liu, P. J. (2020).

Exploring the Limits of Transfer Learning with a Uni-

fied Text-to-Text Transformer. Journal of Machine

Learning Research, 21(140):1–67.

Senst, T., Eiselein, V., Kuhn, A., and Sikora, T.

(2017). Crowd Violence Detection Using Global

Motion-Compensated Lagrangian Features and Scale-

Sensitive Video-Level Representation. IEEE Trans-

actions on Information Forensics and Security,

12(12):2945–2956.

Sudhakaran, S. and Lanz, O. (2017). Learning to Detect

Violent Videos Using Convolutional Long Short-Term

Memory. In Proc. IEEE AVSS, pages 1–6.

Sun, Z., Ke, Q., Rahmani, H., Bennamoun, M., Wang, G.,

and Liu, J. (2022). Human Action Recognition from

Various Data Modalities: A Review. IEEE Transac-

tions on Pattern Analysis and Machine Intelligence,

45(3):3200–3225.

Szeliski, R. (2022). Computer Vision: Algorithms and Ap-

plications. Springer Nature.

Traor

´

e, A. and Akhloufi, M. A. (2020). Violence Detection

in Videos Using Deep Recurrent and Convolutional

Neural Networks. In Proc. IEEE SMC, pages 154–

159.

Ullah, F. U. M., Obaidat, M. S., Ullah, A., Muhammad,

K., Hijji, M., and Baik, S. W. (2023). A Comprehen-

sive Review on Vision-Based Violence Detection in

Surveillance Videos. ACM Comput. Surv., 55(10).

Ullah, F. U. M., Ullah, A., Muhammad, K., Haq, I. U., and

Baik, S. W. (2019). Violence Detection Using Spa-

tiotemporal Features with 3D Convolutional Neural

Network. Sensors, 19(11):2472.

Wang, H., Kl

¨

aser, A., Schmid, C., and Liu, C.-L. (2013).

Dense Trajectories and Motion Boundary Descrip-

tors for Action Recognition. International Journal of

Computer Vision, 103:60–79.

Wu, P., Liu, J., Shi, Y., Sun, Y., Shao, F., Wu, Z., and Yang,

Z. (2020). Not Only Look, But Also Listen: Learning

Multimodal Violence Detection Under Weak Supervi-

sion. In Proc. ECCV, pages 322–339. Springer.

Zhang, H., Li, X., and Bing, L. (2023). Video-

LLaMA: An Instruction-Tuned Audio-Visual Lan-

guage Model for Video Understanding. arXiv preprint

arXiv:2306.02858.

Leveraging Vision Language Models for Understanding and Detecting Violence in Videos

113