Active Physicalization of Temporal Bone

Fatemeh Yazdanbakhsh^a and Faramarz Samavati^b

Department of Computer Science, University of Calgary, Calgary, Alberta, Canada

Keywords:

Physicalization, Active Physicalization, Interactive, Touch Sensor, 3D Printing.

Abstract:

This paper explores the integration of conductivity, electricity, controllers, and 3D printing technologies to

develop an active physicalization model. The model is interactive and utilizes conductive 3D filaments as

sensors to trigger a feedback system when activated. The resulting interactive model can be applied to various

physicalized models where the internal structure is crucial. As a case study, we have 3D printed a temporal

bone model with its inner organs, using conductive material to sense the proximity of a drill around the

inner organs. When a surgical drill comes into contact with these conductive-material-printed inner organs,

it triggers the feedback system, producing feedback in the form of a buzzer or blinking LED. Our adaptable

feedback system extends beyond surgery rehearsal, with the case study serving as a representative example.

1 INTRODUCTION

Physicalization is defined as a "physical artifact, the geometry or material properties of which encode data" or, in other words, a physical representation of data (Jansen et al., 2015). Physicalization offers significant benefits by transforming abstract data into tangible forms, which improves both the understanding of data and engagement with it. One major advantage is the ability to interact physically with data, allowing users to touch, manipulate, and explore information in ways that digital screens cannot provide.

This tactile interaction stimulates multiple senses, en-

hancing both the understanding and retention of com-

plex information. Additionally, physical models serve

as intuitive tools for communicating intricate data to

non-experts, making abstract concepts more acces-

sible. Moreover, physicalization promotes collabo-

rative exploration and discussion, as physical arti-

facts can be easily shared and examined in group set-

tings. This collaborative aspect enhances the commu-

nication of ideas and supports team-based problem-

solving (Huron et al., 2017).

Physicalization is divided into three categories:

passive, augmented and active (Djavaherpour et al.,

2021). Passive physicalization uses digital fabrication devices to create a static object without any enhanced interaction. In contrast, augmented physicalization combines data physicalization, usually passive, with augmented reality (AR) techniques. Active physicalization usually involves other sources of energy, such as electricity, to create more interactive movements and actions, which are usually controlled by a microcontroller.

a https://orcid.org/0000-0002-1082-6522
b https://orcid.org/0000-0001-9440-7562

Unlike passive physicalizations, active physical-

izations offer several distinct advantages. They en-

able the visualization of evolving datasets (Pahr et al.,

2024), allowing users to observe changes and trends

over time (Barbosa et al., 2023; Sauvé et al., 2020).

This capability is particularly beneficial in applica-

tions where real-time monitoring is crucial, such as

in biological and medical diagnostics (Roo et al.,

2020; Karolus et al., 2021), environmental monitor-

ing (Houben et al., 2016), and interactive art installa-

tions (LOZANO-HEMMER, 2011). Active physical-

izations can also support enhanced interactivity, en-

abling users to manipulate data through physical in-

terfaces, thus fostering a more engaging data explo-

ration experience.

Overall, active physicalization bridges the gap be-

tween the digital and physical worlds, offering a pow-

erful medium for data interaction and interpretation.

3D printing is a common method for fabricating passive physicalizations, and it is sometimes crucial to replicate inner structures accurately, especially when reproducing medical organs with intricate inner structures and vessels (Bao et al., 2023; Sun et al., 2023; Tan et al., 2022). For example, for surgery rehearsal and education, there has been considerable interest in using physicalization for temporal bone (Ang et al., 2024; Iannella et al., 2024; Freiser et al., 2019; Rose et al., 2015; Suzuki et al., 2018; Cohen and Reyes, 2015), brain (Jeising et al., 2024; Huang et al., 2019; Guo et al., 2020) and dental (Domysche et al., 2024; Reich et al., 2022; Park et al., 2019; Hou et al., 2024) surgery. The internal structure in these works is commonly revealed through drilling or cutting, as exemplified in (Longfield et al., 2015; Cohen and Reyes, 2015), where organs are dissected to show and measure the inner structures. Another method to display inner organs uses transparent external structures, as in the work by (Suzuki et al., 2018), where the temporal bone is made transparent so that the inner organs can be seen in color and their proportions are visible. Additionally, some approaches print the organ as several disassembled parts, making it easy to measure and observe the inner organs in the cut areas, as in (Wanibuchi et al., 2016) for surgical training.

Yazdanbakhsh, F. and Samavati, F.
Active Physicalization of Temporal Bone.
DOI: 10.5220/0013160200003912
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 1: GRAPP, HUCAPP and IVAPP, pages 903-912
ISBN: 978-989-758-728-3; ISSN: 2184-4321
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

When employing cutting methods for surgical simulations and educational purposes, the precision of the internal structures and their spatial relationships with the external structures becomes critical. Moreover, it is crucial to have a means of guiding the cutting process when the tool comes into contact with the internal structures, which calls for supportive actions and the implementation of active physicalization.

In this work, we introduce an active physicalization of the temporal bone to facilitate interaction with the critical internal organs. Based on the Welling scale criteria outlined in (Wan et al., 2010), certain inner organs (i.e., sigmoid sinus, facial nerve, inner ear) should remain untouched during surgery. In our active physicalization, when the drill cuts into these internal structures or comes into proximity of them, a warning action, such as a buzzer sound or an LED light, is triggered. We use affordable 3D printers, a Raspberry Pi, and off-the-shelf conductive 3D printing material for our physicalizations. The sensors are designed using conductive materials and connected to the internal structures. A simple circuit controlled by the Raspberry Pi activates the warning actions when the drill tip touches the internal structures.

The complexity of using ready-to-use sensors encouraged us to design our own sensor. Using ready-to-use sensors in small structures presents significant challenges due to their standardized sizes, shapes, and power needs. Furthermore, integration complexity and insufficient sensitivity add to the difficulties. Our custom-designed sensors can be tailored precisely to the specific size, shape, and performance requirements of the application.

To create the 3D-printed model of the temporal bone, we need to address several challenges: selecting materials that can better simulate bone and inner organs; 3D printing with multiple colors and materials; recreating small and complex inner organs precisely; and removing extra material from the hollow spaces present in temporal bone datasets. The type of printer plays an important role in choosing the appropriate material. Using affordable 3D printers, we print the main bone parts with white PETG (which does not melt when drilled away) and the inner organs with conductive PLA to sense the proximity of the drill to the inner organs.

An additional challenge with this design is to en-

sure that the circuit initiates the warning action when

the drill tip is close to the internal structure, just be-

fore touching or cutting the critical internal organ. To

address this challenge, we offset the selected internal organs and attach our sensor circuit to this offset region. This approach makes it possible to warn the user before the actual inner structure is touched.

The main contributions of this work are:

• Active physicalization of temporal bone

• Warning system integration

• Custom sensor design for active physicalization of

objects with complex internal structures

Section 2 reviews related work, including temporal bone anatomy (Section 2.1), the different types of physicalization with examples (Section 2.2), and temporal bone physicalization (Section 2.3). Section 3 describes the temporal bone 3D model. Section 4 details the conductive PLA used in the experiment and the circuit design. Section 5 presents the results and experiments, and Section 6 concludes the paper.

2 BACKGROUND AND RELATED WORK

In this section, we review some of the state-of-the-art papers on physicalization in general and on physicalization of the temporal bone.

2.1 Temporal Bone Anatomy

The temporal bone is a highly complex structure

within the skull that houses several critical anatomi-

cal features, making it a focal point in surgical proce-

dures like mastoidectomy, which is often performed

to treat chronic infections. During this procedure, the

temporal bone is dissected, and sometimes a cochlear

implant is placed to restore hearing. This bone contains essential structures, including the facial nerve and the sigmoid sinus, among others, which must be preserved to avoid severe complications.

IVAPP 2025 - 16th International Conference on Information Visualization Theory and Applications

The facial nerve is responsible for controlling the

muscles of facial expression, and damage to this nerve

can result in facial paralysis. The sigmoid sinus, a

major venous channel, is crucial for draining blood

from the brain; any injury to it can lead to signifi-

cant bleeding and potentially fatal outcomes. Ensur-

ing the safety and integrity of these structures during

mastoidectomy is paramount to maintain the patient’s

health and functionality. To aid in understanding the

complex anatomy of the temporal bone, a compre-

hensive atlas of images detailing its structure is pro-

vided (Lane and Witte, 2009). Additionally, the Oto-

laryngology Department of Stanford University offers

a graphical atlas of ear and temporal bone anatomy,

available at (Jackler and Gralapp, 2024), which serves as an educational tool for both students and professionals. Moreover, embracing other visualization tools can enhance the understanding of the temporal bone's intricate structures.

2.2 Physicalization

Physicalization, encompassing passive, augmented,

and active forms, has become increasingly impor-

tant in the medical field for enhancing the under-

standing and interaction with complex data. Pas-

sive physicalization involves creating tangible mod-

els from data, such as using 4D flow MRI images to

represent blood flow (Ang et al., 2019) or 3D printing

resin-based artificial teeth for dentistry (Chung et al.,

2018). To address the challenge of large models ex-

ceeding 3D printer capacities, methods like segment-

ing large geospatial models into smaller parts have

been developed (Allahverdi et al., 2018), and multi-

scale representations of historical sites have been cre-

ated (Etemad et al., 2023).

Augmented physicalization, or mixed reality,

combines physical models with virtual information to

enhance utility. For example, the integration of AR

with 3D-printed anatomical models (McJunkin et al.,

2018; Barber et al., 2018; Mossman et al., 2023) uses

platforms such as Unity (Haas, 2014) and ITK-SNAP

for organ segmentation (Yushkevich et al., 2006).

Active physicalization, a form of interaction design

where physical objects change shape in response to

data or environmental stimuli, has been explored in

various fields. In art installations, for instance, actuated tape measures (LOZANO-HEMMER, 2011) are used to create immersive, responsive environments by

dynamically reacting to the presence and movement

of people. These installations exemplify how physi-

cal objects can actively respond to their surroundings.

Similarly, platforms developed for active physicaliza-

tion often incorporate features from web-based visu-

alization tools, such as search, filtering, and highlight-

ing, offering a comprehensive and versatile approach

to data representation (Djavaherpour et al., 2021). In

the biomedical field, shape-changing interfaces, such

as the one introduced in (Boem and Iwata, 2018), en-

able remote monitoring of vital signs, allowing indi-

viduals to perceive real-time health data of a hospital-

ized person through tactile shape changes.

These applications of physicalization and mixed

reality within the medical domain offer detailed repre-

sentations and interactive enhancements, proving in-

valuable for education, diagnosis, and treatment plan-

ning. The following section explores state-of-the-art

methodologies and innovations in this rapidly evolv-

ing area.

2.3 Temporal Bone Physicalization

The physicalization of the temporal bone holds signif-

icant potential in surgery training and planning, draw-

ing the attention of numerous researchers.

Several studies utilize FDM 3D printers. Haffner et al. (Haffner et al., 2018) experimented with various materials, including PLA, ABS, nylon, PETG, and PC, finding that PETG provided the best haptic feedback and appearance. Cohen and Reyes (Cohen and Reyes, 2015) printed models using ABS filament, noting that while ABS felt softer than bone, the resulting dust was similar to bone dust.

Resin-based printers have also been utilized.

Suzuki et al. (Suzuki et al., 2018) created a scale

model using transparent and white resin to recon-

struct the temporal bone and vestibulocochlear organ.

Freiser et al. (Freiser et al., 2019) used white acrylic

resin, finding moderate similarity in tactile sense to

human bone. Additionally, Rose et al. (Rose et al.,

2015) employed multi-material printing with varying

polymer ratios for surgery planning, measuring accu-

racy in terms of absolute and relative distances.

SLS 3D printers are another approach. Longfield

et al. (Longfield et al., 2015) developed affordable

pediatric temporal bone models using multiple-color

printing. Takahashi et al. (Takahashi et al., 2017)

used plaster powder to reproduce most structures, ex-

cept for the stapes, tympanic sinus, and mastoid air

cells. Hochman et al. (Hochman et al., 2014) tested

different infiltrants to achieve high internal anatomic

fidelity in their rapid-prototyped models.

In comparison, our active physicalization model offers several advantages. Unlike the passive models that focus on static representations, our model integrates interactive elements, providing dynamic feedback and enhancing the surgical planning and training experience. This approach not only improves the anatomical accuracy and tactile feel but also allows for the simulation of various surgical scenarios, and may thereby offer a more comprehensive training tool for medical professionals.

3 TEMPORAL BONE 3D MODEL

The temporal bone model in this work was created us-

ing micro CT scan data with a resolution of 0.154mm

and 570 × 506 × 583 dimensions. The datasets are

private and come from researchers at the University

of Calgary and Western University. The micro CT

scans were converted into a 3D model via the Visu-

alization toolkit (Schroeder et al., 2004). The model

was segmented using 3D-slicer’s semi-automatic fea-

ture segmentation (Fedorov et al., 2012), isolating the

main bone by adjusting the intensity thresholds based

on the Hounsfield unit (ScienceDirect, 2024). Post

segmentation review led to further manual segmen-

tation to reduce artifacts and include missing areas.

The final segmentation was converted to STL format

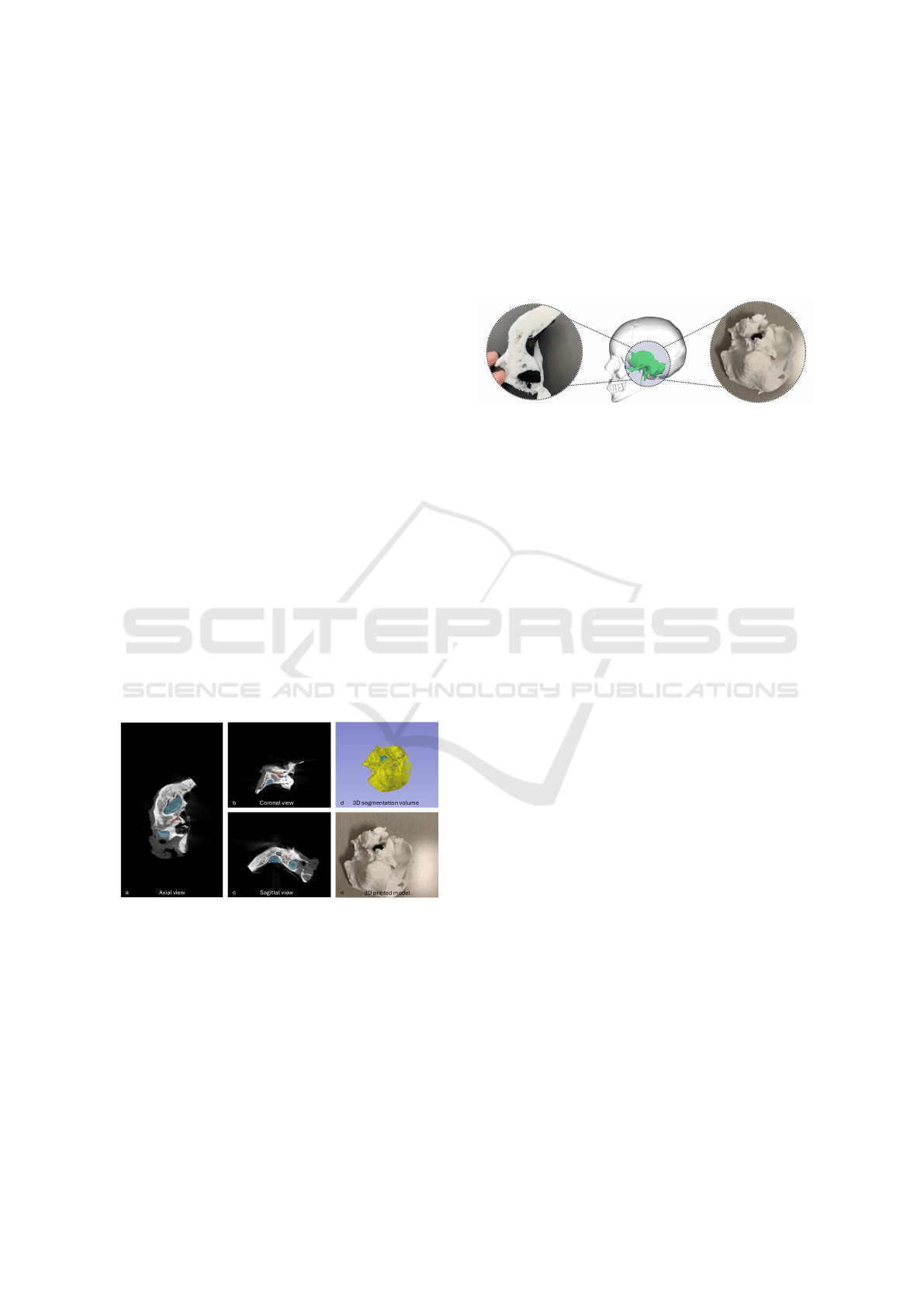

for 3D printing. Figure 1.(a-c) shows the axial, coro-

nal, and sagittal views of the temporal bone along

with the segmentation of inner organs. Figure 1.(d-e)

demonstrates the final segmentation of the temporal

bone and its 3D-printed version. We opted to use the

Figure 1: Axial, coronal, and sagittal views of the temporal

bone with organ segmentation (a-c), and final segmentation

with 3D-printed model (d-e).

MakerGear M3-ID, a dual extruder Fused Deposition

Modeling (FDM) printer chosen for its affordability

and practicality in our research. This choice ensures

that our methods are reproducible and accessible to

others in the field. The printer’s bed size adequately

accommodates our fabrication needs for the size of

objects we intend to create. The 3D-printed model is

shown in Figure 2, with the partially printed bone on

the left, fully printed on the right, and its mapping to

the human head in the center. White PETG was used

for the main bone structure due to its reported sim-

ilarity to bone texture when printed (Haffner et al.,

2018), while black conductive PLA was selected for

the inner organs for its conductivity and clean finish,

making it suitable for our feedback system and easier

to print compared to materials like ABS.

Figure 2: 3D-printed model: partially printed (left), fully

printed (right), and mapped to the human head (center).

White PETG is used for printing bone, and black conductive PLA for the inner organs, including the facial nerve and sigmoid sinus.
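As a rough sanity check on the scan dimensions reported in this section, the physical extent of the scanned volume can be estimated from the voxel count and the resolution. The sketch below assumes that the reported 0.154 mm resolution is an isotropic voxel spacing, which the text does not state explicitly; the function name is hypothetical.

```python
def physical_extent_mm(shape_voxels, spacing_mm):
    """Physical size of a voxel grid, assuming one isotropic spacing (assumption)."""
    return tuple(n * spacing_mm for n in shape_voxels)


# Voxel dimensions and resolution as reported for the micro-CT data.
extent = physical_extent_mm((570, 506, 583), 0.154)
print(" x ".join(f"{e:.2f} mm" for e in extent))
```

Under that assumption the volume spans roughly 88 × 78 × 90 mm, which is consistent with a printed main bone of about 72 mm along its longest measured axis.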

4 DESIGN AND IMPLEMENTATION OF WARNING SYSTEM

In cochlear implant surgery, it is crucial to avoid con-

tact with certain internal organs due to the severe and

irreversible risks associated with their disturbance.

Critical inner organs, such as the sigmoid sinus and

the facial nerve, must be handled with extreme care.

These organs present a unique challenge due to their

complex structures and their locations, often hidden

or covered by bones. To aid in navigating these com-

plexities, we have designed and implemented a warn-

ing system in our active physicalization specifically

for these two organs.

The main application of this feedback system is

to evaluate the intactness of inner organs, including

the sigmoid sinus and the facial nerve. The feed-

back system utilizes embedded sensors in the 3D-

printed model. These sensors provide feedback, such

as buzzer sounds or flashing lights, to notify the users

when they are cutting part of the anatomy that should

not be disturbed.

Instead of using off-the-shelf sensors, which can

be challenging to integrate into rigid 3D-printed ob-

jects, we opted for self-designed sensors. These cus-

tom sensors offer the flexibility to adjust their size ac-

cording to specific requirements, incorporating parts

of the 3D-printed object itself into the sensor design.

We designed a circuit to activate an LED/buzzer.

The circuit consists of hardware components such

as resistors, batteries, and wires. However, instead

of traditional metal wires covered with plastic insu-

lation, we used conductive PLA to function as the

wires. PLA-made wires are more convenient to em-

bed within a 3D-printed model due to their flexibility

in shape and size. Therefore, we employed conduc-

tive PLA to design the connections that allow cur-

rent to flow through the circuit. Current-conductive

PLA material is suitable for printing these connec-

tors (Kwok et al., 2017; Flowers et al., 2017). In

this work, we utilized Carbon Fiber Reinforced PLA

(Proto-Pasta) for printing the segmentation of the in-

ner organs.

The standard measurement used to characterize a

conductive material is volume resistivity, measured

in ohm-cm units. It is the resistance through a one-centimeter cube of conductive material. The volume resistivity of the Proto-Pasta material is reported as follows:

• Volume resistivity of 3D-printed parts perpendic-

ular to layers: 30 ohm-cm

• Volume resistivity of 3D-printed parts through

layers (along the Z-axis): 115 ohm-cm

This volume resistivity data guides us in selecting

appropriate resistors for our circuits, a topic further

elaborated in section 4.1, which details the circuit de-

sign process.
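To illustrate how volume resistivity can inform resistor selection, the following minimal sketch estimates the resistance of a printed conductive trace using R = ρL/A and the voltage that a series resistor would see. The trace geometry, the 3.3 V supply, and the 10 kΩ fixed resistor are hypothetical values chosen for illustration; they are not taken from our circuit.

```python
def trace_resistance_ohm(resistivity_ohm_cm, length_cm, area_cm2):
    """Resistance of a uniform conductor: R = rho * L / A."""
    if length_cm < 0 or area_cm2 <= 0:
        raise ValueError("length must be non-negative and area positive")
    return resistivity_ohm_cm * length_cm / area_cm2


def divider_voltage(v_supply, r_trace_ohm, r_fixed_ohm):
    """Voltage across a fixed resistor in series with the printed trace."""
    return v_supply * r_fixed_ohm / (r_trace_ohm + r_fixed_ohm)


# Volume resistivity values reported for the filament (ohm-cm).
RHO_PERPENDICULAR = 30.0
RHO_THROUGH_LAYERS = 115.0

# Hypothetical trace: 2 cm long with a 0.3 cm x 0.3 cm cross-section.
length_cm = 2.0
area_cm2 = 0.3 * 0.3

r_perp = trace_resistance_ohm(RHO_PERPENDICULAR, length_cm, area_cm2)
r_through = trace_resistance_ohm(RHO_THROUGH_LAYERS, length_cm, area_cm2)

# Hypothetical 3.3 V supply and 10 kilo-ohm fixed resistor.
v_pin = divider_voltage(3.3, r_through, 10_000.0)
print(f"{r_perp:.0f} ohm, {r_through:.0f} ohm, {v_pin:.2f} V at the fixed resistor")
```

For this geometry the trace resistance is in the range of hundreds to thousands of ohms, so the fixed resistor must be large relative to the trace for most of the supply voltage to appear across it.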

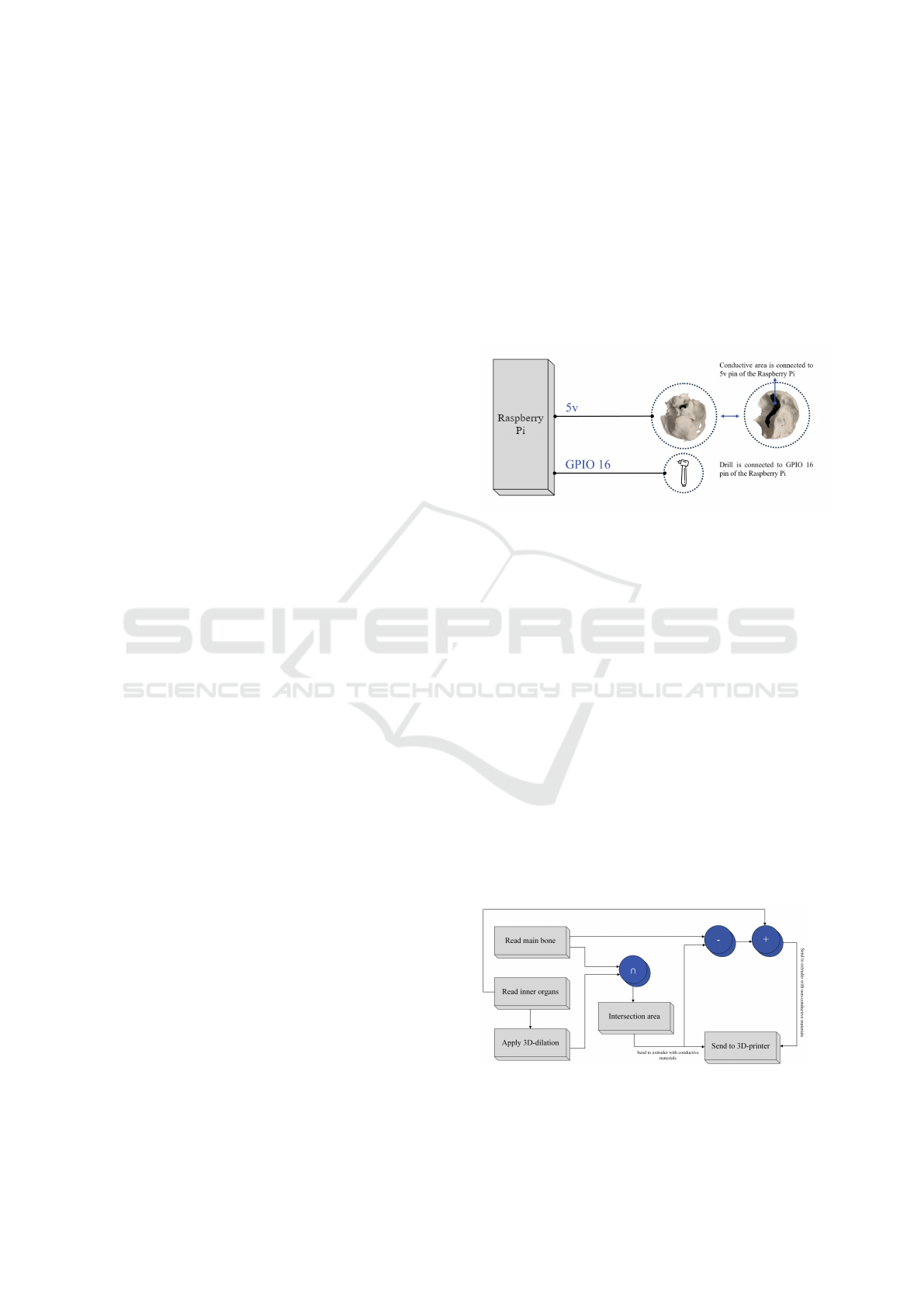

4.1 Designing the Circuit

In this subsection, we outline the design of the pro-

posed self-designed sensor utilizing conductive PLA.

As discussed, in the first step, we create a 3D-printed

temporal bone using two distinct materials. The non-

conductive material (PETG) is employed to fabricate

the temporal bone structure, while the conductive ma-

terial (PLA) is utilized for the inner organs. Protect-

ing these inner organs against drilling is significantly

important; therefore, the feedback system needs to be

designed with this consideration in mind. Printing the

inner organs with conductive material enables us to

use them as part of an electrical circuit, thereby creat-

ing feedback. Figure 3 illustrates how the conductive

elements function as wires in our circuit, facilitating

the flow of electrical current upon circuit closure.

To develop a flexible and general warning feed-

back system, we utilized a Raspberry Pi and a mod-

ified drill in the circuit. The modified drill has been

designed and connected to the circuit to serve as a

sensing tool for the internal material, providing real-

time feedback on material changes during the drilling.

This setup allows for various warning systems, such

as activating a sound player for verbal instructions,

activating a buzzer, or blinking LEDs. The circuit is

designed as an open circuit, which closes when the

drill touches the conductive inner organs. Upon contact, the circuit closes and current flows through the system, driving high the GPIO pin that triggers the feedback system and thereby activating the buzzer. The general-purpose input/output (GPIO) pins of a Raspberry Pi allow the board to interact with external hardware; each pin can be programmed either to read signals from a sensor (input) or to control devices such as LEDs and buzzers (output).
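The contact-detection logic described above can be sketched independently of the hardware. The example below is a minimal illustration, not our implementation: it takes a sequence of input-pin readings (True when the pin reads high, i.e., the drill closes the circuit) and returns the alarm state for each reading. The `debounce` parameter is a hypothetical noise guard that is not described in the text. On a Raspberry Pi the readings would come from an input pin and the alarm state would drive the buzzer or LED pin through a GPIO library; that wiring is omitted here.

```python
def monitor(samples, debounce=3):
    """Return the alarm state for each contact sample.

    samples:  iterable of booleans, True when the input pin reads high
              (drill touching conductive material).
    debounce: consecutive high readings required before the alarm turns
              on (hypothetical noise guard, an assumption).
    """
    states = []
    run = 0  # length of the current streak of high readings
    for high in samples:
        run = run + 1 if high else 0
        states.append(run >= debounce)
    return states


# Simulated readings: one spurious blip, then sustained contact, then release.
readings = [False, True, False, True, True, True, True, False]
alarm = monitor(readings)
print(alarm)
```

With `debounce=1` the alarm follows the pin state directly, which matches the simple open/closed circuit behavior.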

Figure 3: Simplified design of the sensor.

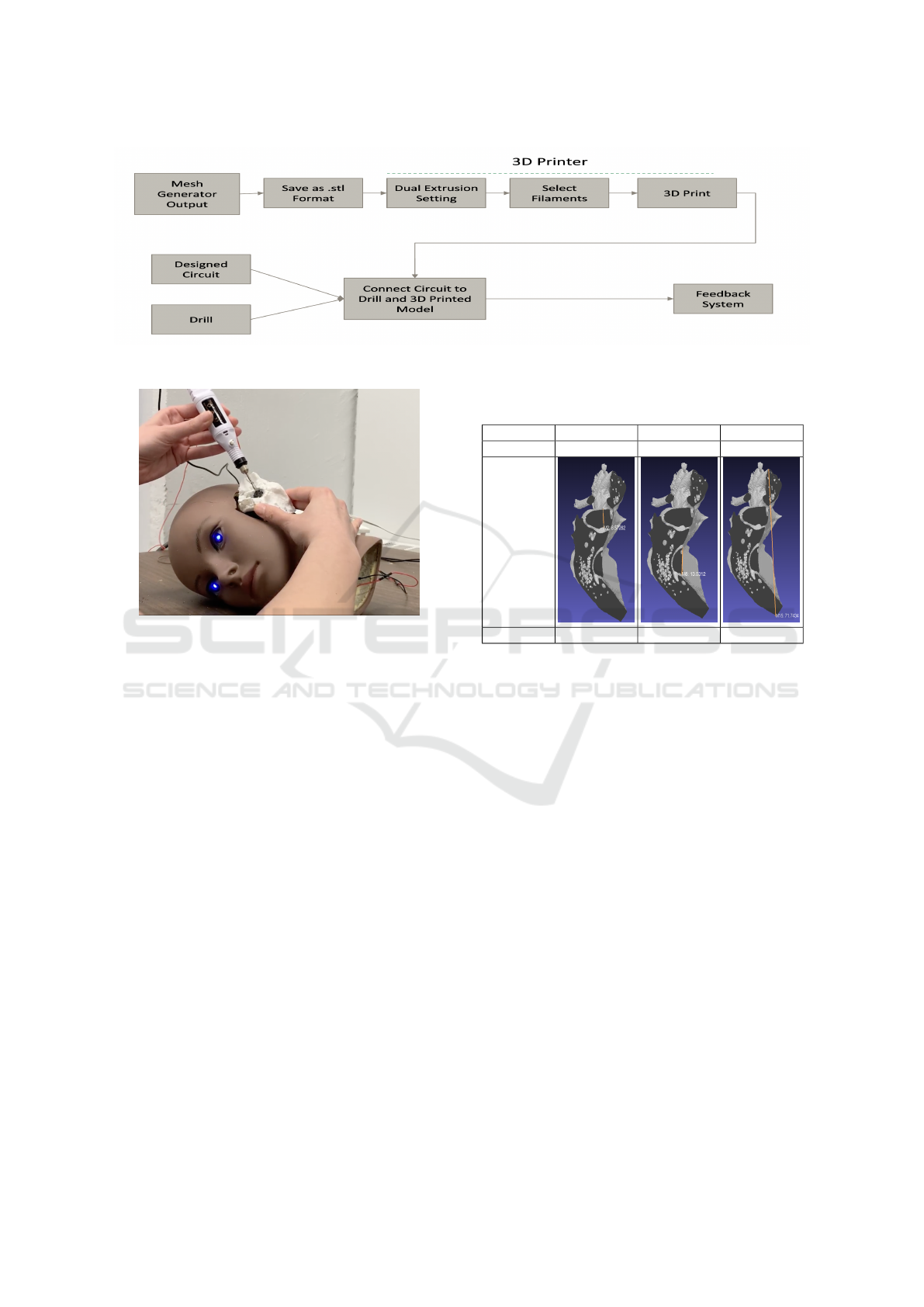

4.2 Offsetting Internal Organs

One of the challenges is ensuring that the circuit pro-

vides a warning when the drill tip is near critical or-

gans. We address this challenge by offsetting the or-

gans and connecting the sensor circuit to this offset re-

gion. To achieve this, we use a conductive material for

the offset region. First, we read the main bone mesh

and the inner organs mesh, followed by performing

a 3D dilation of the inner organs. This 3D dilation

expands the inner organs’ mesh in all directions. To

calculate the offset region, we compute the intersec-

tion of the main bone region with the 3D dilated inner

organs. In a 3D printer with dual extruders, the offset

region is printed using conductive material, while the

main bone (after subtracting the offset region) and the

inner organs are printed using non-conductive white

PLA. Figure 4 illustrates the process of preparing this

offsetting approach.

Figure 4: Process of creating the offset region and 3D print

model with offset.
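The offset computation can be illustrated on a voxel grid. The sketch below is a simplified analogue under stated assumptions: it represents the bone and an organ as sets of integer voxel coordinates rather than meshes, and it dilates with a cubic neighborhood of a given radius. The function names and the toy geometry are hypothetical.

```python
from itertools import product


def dilate(voxels, radius=1):
    """Expand a set of (x, y, z) voxels by `radius` in all directions."""
    offsets = list(product(range(-radius, radius + 1), repeat=3))
    return {(x + dx, y + dy, z + dz)
            for (x, y, z) in voxels
            for (dx, dy, dz) in offsets}


def offset_region(bone, organ, radius=1):
    """Bone voxels that fall inside the dilated organ (intersection)."""
    return dilate(organ, radius) & bone


# Toy example: a 5x5x5 block with a single organ voxel at its centre.
block = set(product(range(5), repeat=3))
organ = {(2, 2, 2)}
bone = block - organ

shell = offset_region(bone, organ, radius=1)  # conductive offset region
remaining_bone = bone - shell                 # bone after subtracting the offset
print(len(shell), len(remaining_bone))
```

The three resulting sets (organ, shell, remaining bone) are disjoint, which mirrors the requirement that each region is assigned to exactly one material when printing with dual extruders.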

The offset region is analogous to the skeletoniza-

tion concept in head and neck surgery. Skeletoniza-

tion, in surgical techniques, refers to the method in

which the surgeon carefully removes the bony struc-

ture covering vital organs such as the facial nerve, in-

ner ear, etc. The objective is to expose these organs

while maintaining their integrity, allowing the sur-

geon to perform the operation without risking dam-

age to these delicate areas. The protective layer pro-

vided by the offset region improves the feedback sys-

tem by notifying the user before the inner organs are

injured or cut. Specifically, when the drill reaches

the conductive area, the user will be alerted, indicat-

ing proximity to the inner organs. This warning al-

lows the user to decide whether to continue drilling

with a larger burr or switch to a smaller burr. More-

over, the user can choose to identify a specific organ

or leave it skeletonized by cleaning the bony parts

and retaining only the conductive layer. Figure 8.a

shows the combination of inner organs with a pro-

tective layer, integrated into the main bone, forming

the ready-to-print model. Figure 8.b depicts the 3D-

printed version of this model. Figure 8.c displays the

designed inner organs, while Figure 8.d adds the pro-

tective layer. Lastly, Figure 8.e separates the inner

organs, protective layer, and main bone for individual

visualization. Both the main bone and inner organs

were printed using non-conductive material, while the

protective layer was printed using conductive mate-

rial. The same circuit used for intactness detection

in the first design was utilized here, producing buzzer

sounds and activating blinking LEDs when the drill

tip touches the conductive layer.

5 RESULTS AND EXPERIMENTS

In this section, we describe the process of creating the

physicalization of the temporal bone and evaluate the

accuracy of the 3D-printed model by comparing its

size to the .stl file (ground truth). We focus on both

the segmentation of critical anatomical structures and

the precision of the final 3D-printed temporal bone.

5.1 Physicalization of Temporal Bone

For 3D printing purposes, the segmentation of crit-

ical anatomical structures within the temporal bone

is essential. These structures include the sigmoid si-

nus, facial nerve, stapes, malleus, incus, semicircular

canals, cochlea, etc. Segmentation enables the accu-

rate visualization and physicalization of these struc-

tures, which is necessary for precise 3D printing.

Two main steps are required for the physicaliza-

tion process: first, segmenting the inner structures of

the temporal bone using an appropriate method, and

second, developing a 3D printing technique that can

accurately recreate the bone’s complex anatomy. The

segmentation of the inner organs has already been

completed, leaving only the segmentation of the bone

itself. To accomplish this, we apply Hounsfield Unit

(HU) thresholding, using a range of 600 to 2390 HU

to isolate the bone structure from the surrounding tissues. The segmented parts are then converted to a 3D mesh using Marching Cubes.
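The thresholding step can be sketched as a simple mask over the volume. The example below is a minimal illustration on nested Python lists; treating both bounds as inclusive is an assumption, and the toy Hounsfield values are hypothetical. Mesh extraction with Marching Cubes is not shown.

```python
def bone_mask(volume_hu, low=600, high=2390):
    """Return nested lists of booleans, True where low <= HU <= high."""
    return [[[low <= value <= high for value in row] for row in slab]
            for slab in volume_hu]


# Toy 1x2x3 volume of Hounsfield values (hypothetical numbers).
volume = [[[-1000, 40, 600], [1500, 2390, 3000]]]
mask = bone_mask(volume)
print(mask)
```

Voxels outside the range, such as air, soft tissue, or very dense artifacts, are excluded from the bone segmentation.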

Figure 5 presents the complete flowchart outlin-

ing the physicalization steps, from segmentation to

mesh generation and 3D printing. The mesh created

through segmentation is exported in a 3D-printable

format, such as .stl. After adjusting the printer set-

tings, the model is ready to be printed. This physical

3D model will be integrated into the feedback system

described in Section 4. The feedback system, which

includes a Raspberry Pi and sensors, is attached to

the printed temporal bone. This system is designed

to provide real-time feedback during active interac-

tion scenarios such as surgery simulations, with the

sensors detecting interactions with the bone structure

during the procedure. The final setup, illustrated in Figure 6, comprises the 3D-printed temporal bone model, the Raspberry Pi, and the sensor attachments.

The 3D printing process, utilizing our MakerGear

M3-ID Dual Extruder printer, takes approximately

5 hours to complete a single temporal bone model.

After printing, the Raspberry Pi and sensors are

connected to the model using predefined attachment

points to ensure the proper integration of the feed-

back system into the printed structure. Additionally,

a video has been provided to demonstrate the func-

tionality of the our active Physicalization in action

(see the supplementary material). This video show-

cases the interactive features of the system, such as

the blinking LEDs and buzzer sounds, which respond

to sensor input during the simulation. Furthermore, as

an examplary action for applications such as surgery

simulation, audio tracks guide the users through the

steps of mastoidectomy surgery.

The feedback system in our active physicalization offers real-time feedback through sensory signals. One of the key advantages of this system is its ability to provide both visual and auditory cues, which can significantly assist the training process.

Figure 5: The steps for creating the active physicalization of the temporal bone.

Figure 6: Feedback system implemented in the real world.

5.2 Evaluating the Accuracy of 3D Printed Model

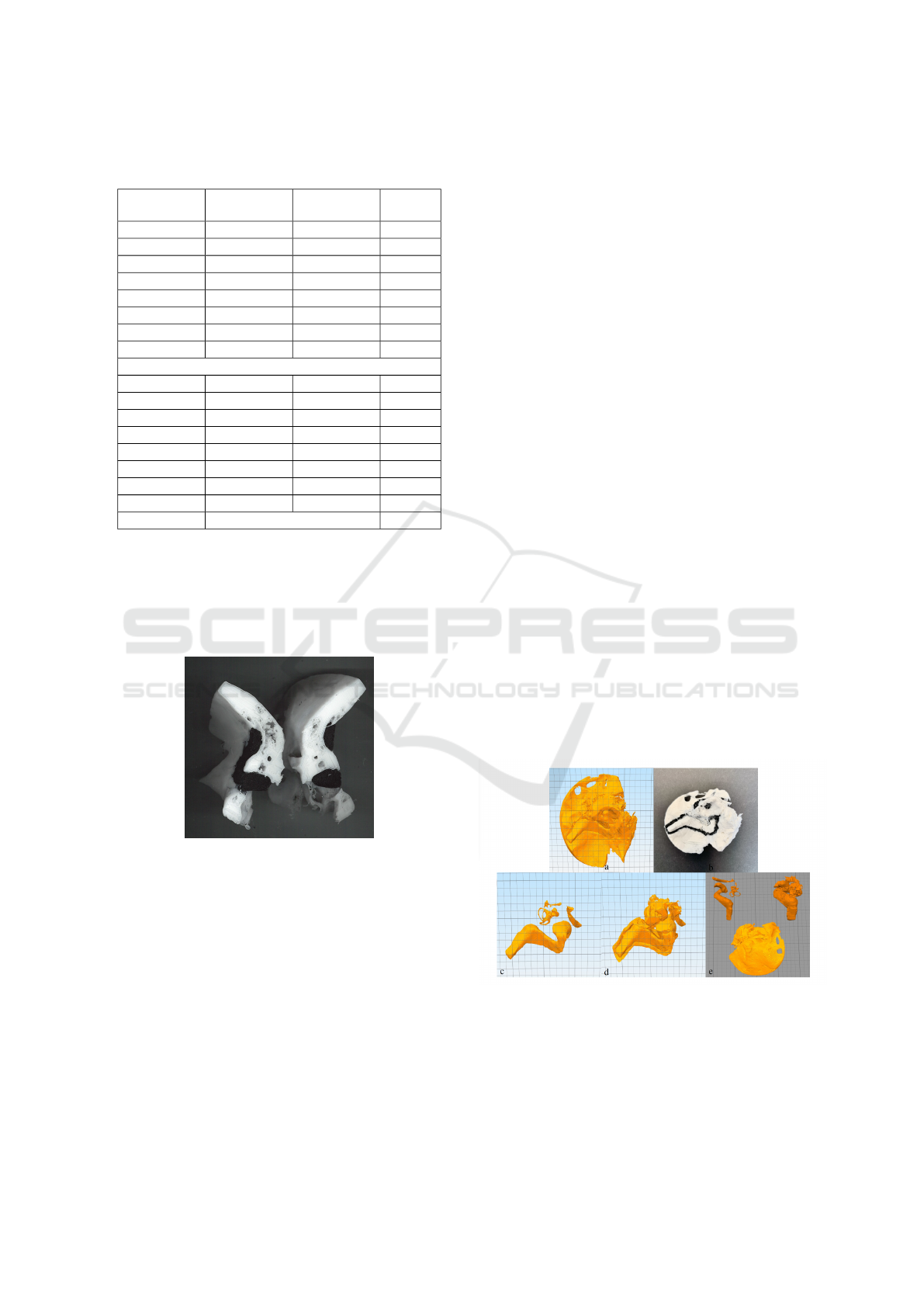

To assess the precision of the 3D-printed model, we

first analyze cross-sections of sample bones, as shown

in Figure 7. Two sample bones were printed in halves,

and their dimensions were measured using a caliper.

These measurements were then compared with the

original dimensions of the .STL file, which were measured using the MeshLab software. The differences between these measurements were recorded in millimeters.

The measurements include assessments of the

bone and the visible inner organs in the cross-section

from various directions and angles, such as left to

right and top to bottom. The goal is to demonstrate

the accuracy of the printed models and provide an

average difference to highlight the precision of the

printing process. These two samples were specifically

chosen because they represent the sigmoid sinus in

two different parts, which enhances the reliability of

the measurements. Another reason for selecting these

samples is that the sigmoid sinus is a larger structure,

making it easier to showcase through images. Examples of such measurements are displayed in Table 1, which compares the ground truth .stl file with the 3D-printed model.

Table 1: Comparison between ground truth measurements and 3D printed images for different parts.

Image Part      SS Part-1    SS Part-2    Main Bone
Ground truth
3D Printed      8.7 mm       13.4 mm      71.5 mm

As illustrated in Table 2, there are four key

columns. The first column indicates the name of the

anatomical landmark. The second column reports

the measurements obtained from the .STL file, which

serves as the ground truth. The third column provides

the measurements taken from the 3D-printed cross-

section. Finally, the fourth column presents the rela-

tive difference between the measurements in the sec-

ond and third columns. The accuracy evaluation re-

vealed that the 3D-printed models closely match the

dimensions of the original .STL files, with a relative

difference ranging from 0% to approximately 4% for most structures and an overall average of 1.48%. The

larger anatomical structures, such as the sigmoid si-

nus, exhibited a higher degree of accuracy with min-

imal differences, as they were easier to replicate and

measure.

Smaller structures, on the other hand, showed

slightly larger deviations, particularly when the di-

mensions were more intricate and complex to repli-

cate. The relative differences for these smaller fea-

tures ranged between 2% and 4%.
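The relative differences reported in Table 2 can be recomputed from the two measurement columns. The following is a minimal sketch, assuming the relative difference is the absolute difference divided by the ground truth value (the tabulated percentages are consistent with this, but the paper does not state the formula explicitly) and that all measurements are in millimetres. The names `MEASUREMENTS`, `relative_difference`, and `summarize` are illustrative and do not come from the paper.

```python
# Values copied from Table 2: (sample, landmark, ground truth, 3D printed).
# Units are assumed to be millimetres.
MEASUREMENTS = [
    ("first", "SS-1 (L->R)", 16.83, 16.9),
    ("first", "SS-1 (T->B)", 8.57, 8.7),
    ("first", "SS-2 (T->B)", 13.03, 13.4),
    ("first", "SS-2 (L->R)", 5.20, 5.3),
    ("first", "Main bone (Diag.)", 56.81, 55.9),
    ("first", "Main bone (T->B)", 71.74, 71.5),
    ("first", "Main bone (Peak)", 6.71, 7.0),
    ("first", "Main bone (L->R)", 22.94, 22.43),
    ("second", "SS-1 (L->R)", 16.3, 16.2),
    ("second", "SS-1 (T->B)", 8.64, 9.0),
    ("second", "SS-2 (T->B)", 16.51, 16.3),
    ("second", "SS-2 (L->R)", 5.83, 5.9),
    ("second", "Main bone (L->R)", 20.53, 20.4),
    ("second", "Main bone (T->B)", 72.09, 72.2),
    ("second", "Main bone (Diag.)", 57.27, 57.2),
    ("second", "Main bone (Peak)", 7.27, 7.3),
]


def relative_difference(ground_truth: float, printed: float) -> float:
    """Return |printed - ground_truth| / ground_truth as a percentage."""
    return abs(printed - ground_truth) / ground_truth * 100.0


def summarize(rows):
    """Return the per-row relative differences and their arithmetic mean."""
    diffs = [relative_difference(gt, pr) for _, _, gt, pr in rows]
    return diffs, sum(diffs) / len(diffs)


if __name__ == "__main__":
    diffs, mean = summarize(MEASUREMENTS)
    for (sample, name, gt, pr), diff in zip(MEASUREMENTS, diffs):
        print(f"{sample:>6}  {name:<18} {gt:6.2f} {pr:6.2f} {diff:5.2f}%")
    print(f"Overall average: {mean:.2f}%")
```

Averaging the unrounded per-row values in this way gives approximately 1.48%, in line with the overall average reported in Table 2.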

Overall, the accuracy results confirm that the 3D-printed temporal bone models are reliable representations of the anatomical structures they replicate. However, there is room for further improvement, particularly in refining the mesh smoothing process and optimizing the segmentation of smaller anatomical features. These enhancements would help reduce the occurrence of minor artifacts and further improve the fidelity of the printed models.

Table 2: Measurement results for two samples of printed temporal bones.

Measurement          Ground Truth (mm)   3D Printed (mm)   Rel. Diff. (%)
First Sample
SS-1 (L→R)           16.83               16.9              0.42
SS-1 (T→B)           8.57                8.7               1.52
SS-2 (T→B)           13.03               13.4              2.83
SS-2 (L→R)           5.20                5.3               1.92
Main bone (Diag.)    56.81               55.9              1.60
Main bone (T→B)      71.74               71.5              0.33
Main bone (Peak)     6.71                7.0               4.32
Main bone (L→R)      22.94               22.43             2.22
Second Sample
SS-1 (L→R)           16.3                16.2              0.61
SS-1 (T→B)           8.64                9.0               4.17
SS-2 (T→B)           16.51               16.3              1.27
SS-2 (L→R)           5.83                5.9               1.20
Main bone (L→R)      20.53               20.4              0.63
Main bone (T→B)      72.09               72.2              0.15
Main bone (Diag.)    57.27               57.2              0.12
Main bone (Peak)     7.27                7.3               0.41
Overall Avg.                                               1.48

Figure 7: Partially 3D printed bones used for verifying mea-

surements.

6 CONCLUSIONS

In this paper, we have developed an active physi-

calization model with interactive features enabled by

conductive materials and a controller. The model em-

ploys conductive 3D filaments to create sensors that

trigger a feedback system upon activation. This inter-

active model is applicable to a range of physicalized

models where the internal structure is critical.

As a case study, we considered utilizing the physicalization for feedback in drilling or cutting actions. When a modified surgical drill comes into contact with the inner organs printed in conductive material, the feedback system is triggered, producing responses such as a buzzer sound or blinking LEDs.

The 3D model was fabricated using an FDM 3D

printer, with white PETG for the main bone structure

and conductive PLA for the inner organs. In an alter-

native design, both the main bone structure and inner

organs were printed using white PETG, with a pro-

tective layer of conductive PLA added. In both de-

signs, the conductive areas acted as sensors to detect

the proximity of surgical tools to inner organs. The

feedback system, powered by a Raspberry Pi, was further enhanced with spoken instructions to provide supplementary guidance during surgical rehearsals.
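The contact-triggered feedback summarized above can be illustrated with a small, hardware-free sketch. It is not the implementation used in this work: the class `FeedbackController`, the function `run`, and the boolean contact readings are hypothetical names introduced for illustration. On a Raspberry Pi, the readings would presumably come from a GPIO input wired to the conductive print, and the callbacks would drive the buzzer or LED; that wiring is an assumption and is not shown here.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class FeedbackController:
    """Turns contact readings from a conductive sensor into alert on/off calls."""

    alert_on: Callable[[], None]   # hypothetical hook, e.g. start buzzer or LED
    alert_off: Callable[[], None]  # hypothetical hook, e.g. stop buzzer or LED
    active: bool = False

    def update(self, contact: bool) -> None:
        # React only to state changes, so a sustained contact does not
        # retrigger the alert on every poll.
        if contact and not self.active:
            self.alert_on()
        elif not contact and self.active:
            self.alert_off()
        self.active = contact


def run(readings: Iterable[bool], controller: FeedbackController) -> None:
    """Feed a sequence of contact readings (True = drill touches sensor)."""
    for contact in readings:
        controller.update(contact)


if __name__ == "__main__":
    log: List[str] = []
    controller = FeedbackController(
        alert_on=lambda: log.append("buzzer on"),
        alert_off=lambda: log.append("buzzer off"),
    )
    # Simulated readings: no contact, contact held for two polls, release, contact.
    run([False, True, True, False, True], controller)
    print(log)  # ['buzzer on', 'buzzer off', 'buzzer on']
```

Passing the alert actions in as callables keeps the logic independent of any particular GPIO library, so the same controller could be exercised with simulated readings before it is connected to a physical model.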

By integrating conductivity, electronics, con-

trollers, and 3D printing technologies, we have de-

veloped an interactive model capable of providing

real-time feedback. The case study presented demon-

strates the adaptability and versatility of our approach,

which can be extended to various disciplines beyond

temporal bone surgery.

In future work, researchers can explore the ap-

plication of active physicalization in clinical settings,

particularly for surgical simulation and training. This

interactive physicalization model holds potential for

any application requiring real-time feedback, such

as enhancing precision in delicate procedures or in-

dustrial tasks. Further development could focus on

improving the accuracy of 3D-printed models and

implementing more systematic methods to compare

them against ground truth data. This would provide a

robust framework for validating the efficacy of physi-

calized models across various disciplines.

Figure 8: a) Combined model of inner organs, protective

layer, and main bone. b) 3D-printed model. c) Inner or-

gans. d) Inner organs with protective layer. e) Separate

components: inner organs, protective layer, and main bone.

IVAPP 2025 - 16th International Conference on Information Visualization Theory and Applications


ACKNOWLEDGEMENTS

We would like to thank Dr. Joseph Dort and Dr.

Sonny Chan for providing the temporal bone dataset

essential to this research. We also appreciate Farima

Golchin’s assistance with figure styling and Katayoun

Etemad’s guidance in creating physical models. This

work was supported by the Natural Sciences and En-

gineering Research Council of Canada (NSERC).

REFERENCES

Allahverdi, K., Djavaherpour, H., Mahdavi-Amiri, A., and

Samavati, F. (2018). Landscaper: A modeling sys-

tem for 3d printing scale models of landscapes. In

Computer Graphics Forum, volume 37, pages 439–

451. Wiley Online Library.

Ang, A. J. Y., Chee, S. P., Tang, J. Z. E., Chan, C. Y., Tan, V.

Y. J., Lee, J. A., Schrepfer, T., Ahamed, N. M. N., and

Tan, M. B. (2024). Developing a production workflow

for 3d-printed temporal bone surgical simulators. 3D

Printing in Medicine, 10(1):1–15.

Ang, K. D., Samavati, F. F., Sabokrohiyeh, S., Garcia, J.,

and Elbaz, M. S. (2019). Physicalizing cardiac blood

flow data via 3d printing. Computers & Graphics,

85:42–54.

Bao, G., Yang, P., Yi, J., Peng, S., Liang, J., Li, Y., Guo, D.,

Li, H., Ma, K., and Yang, Z. (2023). Full-sized realis-

tic 3d printed models of liver and tumour anatomy: A

useful tool for the clinical medicine education of be-

ginning trainees. BMC Medical Education, 23(1):574.

Barber, S. R., Wong, K., Kanumuri, V., Kiringoda, R.,

Kempfle, J., Remenschneider, A. K., Kozin, E. D.,

and Lee, D. J. (2018). Augmented reality, surgi-

cal navigation, and 3d printing for transcanal endo-

scopic approach to the petrous apex. OTO open,

2(4):2473974X18804492.

Barbosa, C., Sousa, T., Monteiro, W., Araújo, T., and

Meiguins, B. (2023). Enhancing color scales for active

data physicalizations. Applied Sciences, 14(1):166.

Boem, A. and Iwata, H. (2018). “it’s like holding a human

heart”: the design of vital+ morph, a shape-changing

interface for remote monitoring. AI & SOCIETY,

33(4):599–619.

Chung, Y.-J., Park, J.-M., Kim, T.-H., Ahn, J.-S., Cha, H.-

S., and Lee, J.-H. (2018). 3d printing of resin material

for denture artificial teeth: Chipping and indirect ten-

sile fracture resistance. Materials, 11(10):1798.

Cohen, J. and Reyes, S. A. (2015). Creation of a 3d printed

temporal bone model from clinical ct data. American

Journal of Otolaryngology, 36(5):619–624.

Djavaherpour, H., Samavati, F., Mahdavi-Amiri, A., Yaz-

danbakhsh, F., Huron, S., Levy, R., Jansen, Y., and

Oehlberg, L. (2021). Data to physicalization: A sur-

vey of the physical rendering process. In Computer

Graphics Forum, volume 40, pages 569–598. Wiley

Online Library.

Domysche, M., Terekhov, S., Astapenko, O., Vefelev, S.,

and Tatarina, O. (2024). The use of 3d printers in den-

tal production practice: Possibilities for the manufac-

ture of individual dental prostheses and elements.

Etemad, K., Samavati, F., and Dawson, P. (2023). Multi-

scale physicalization of polar heritage at risk in

the western canadian arctic. The Visual Computer,

39(5):1717–1729.

Fedorov, A., Beichel, R., Kalpathy-Cramer, J., Finet, J.,

Fillion-Robin, J.-C., Pujol, S., Bauer, C., Jennings,

D., Fennessy, F., Sonka, M., et al. (2012). 3d slicer

as an image computing platform for the quantita-

tive imaging network. Magnetic resonance imaging,

30(9):1323–1341.

Flowers, P. F., Reyes, C., Ye, S., Kim, M. J., and Wiley,

B. J. (2017). 3d printing electronic components and

circuits with conductive thermoplastic filament. Addi-

tive Manufacturing, 18:156–163.

Freiser, M. E., Ghodadra, A., Hirsch, B. E., and McCall,

A. A. (2019). Evaluation of 3d printed temporal bone

models in preparation for middle cranial fossa surgery.

Otology & Neurotology, 40(2):246–253.

Guo, X.-Y., He, Z.-Q., Duan, H., Lin, F.-H., Zhang, G.-

H., Zhang, X.-H., Chen, Z.-H., Sai, K., Jiang, X.-

B., Wang, Z.-N., et al. (2020). The utility of 3-

dimensional-printed models for skull base menin-

gioma surgery. Annals of Translational Medicine,

8(6).

Haas, J. K. (2014). A history of the unity game engine.

Worcester Polytechnic Institute.

Haffner, M., Quinn, A., Hsieh, T.-y., Strong, E. B., and

Steele, T. (2018). Optimization of 3d print material for

the recreation of patient-specific temporal bone mod-

els. Annals of Otology, Rhinology & Laryngology,

127(5):338–343.

Hochman, J. B., Kraut, J., Kazmerik, K., and Unger, B. J.

(2014). Generation of a 3d printed temporal bone

model with internal fidelity and validation of the me-

chanical construct. Otolaryngology–Head and Neck

Surgery, 150(3):448–454.

Hou, R., Hui, X., Xu, G., Li, Y., Yang, X., Xu, J., Liu,

Y., Zhu, M., Zhu, Q., and Sun, Y. (2024). Use of

3d printing models for donor tooth extraction in au-

totransplantation cases. BMC Oral Health, 24(1):179.

Houben, S., Golsteijn, C., Gallacher, S., Johnson, R.,

Bakker, S., Marquardt, N., Capra, L., and Rogers, Y.

(2016). Physikit: Data engagement through physical

ambient visualizations in the home. In Proceedings of

the 2016 CHI conference on human factors in comput-

ing systems, pages 1608–1619.

Huang, X., Liu, Z., Wang, X., Li, X.-d., Cheng, K., Zhou,

Y., and Jiang, X.-b. (2019). A small 3d-printing model

of macroadenomas for endoscopic endonasal surgery.

Pituitary, 22:46–53.

Huron, S., Gourlet, P., Hinrichs, U., Hogan, T., and Jansen,

Y. (2017). Let’s get physical: Promoting data physi-

calization in workshop formats. In Proceedings of the

2017 Conference on Designing Interactive Systems,

pages 1409–1422.

Iannella, G., Pace, A., Mucchino, A., Greco, A., De Vir-

gilio, A., Lechien, J. R., Maniaci, A., Cocuzza,


S., Perrone, T., Messineo, D., et al. (2024). A

new 3d-printed temporal bone:‘the sapiens’—specific

anatomical printed-3d-model in education and new

surgical simulations. European Archives of Oto-

Rhino-Laryngology, pages 1–10.

Jackler, D. and Gralapp, C. (2024). Oto surgery atlas –

department of ohns. https://otosurgeryatlas.stanford.

edu/. Last accessed October 2024, Images belong to

the illustrators and the Stanford Otolaryngology —

Head & Neck Surgery Department, permission ob-

tained from illustrator (Christine Gralapp).

Jansen, Y., Dragicevic, P., Isenberg, P., Alexander, J.,

Karnik, A., Kildal, J., Subramanian, S., and Hornbæk,

K. (2015). Opportunities and challenges for data phys-

icalization. In Proceedings of the 33rd Annual ACM

Conference on Human Factors in Computing Systems,

pages 3227–3236.

Jeising, S., Liu, S., Blaszczyk, T., Rapp, M., Beez, T., Cor-

nelius, J. F., Schwerter, M., and Sabel, M. (2024).

Combined use of 3d printing and mixed reality tech-

nology for neurosurgical training: getting ready for

brain surgery. Neurosurgical Focus, 56(1):E12.

Karolus, J., Brass, E., Kosch, T., Schmidt, A., and Woz-

niak, P. (2021). Mirror, mirror on the wall: Exploring

ubiquitous artifacts for health tracking. In Proceed-

ings of the 20th International Conference on Mobile

and Ubiquitous Multimedia, pages 148–157.

Kwok, S. W., Goh, K. H. H., Tan, Z. D., Tan, S. T. M., Tjiu,

W. W., Soh, J. Y., Ng, Z. J. G., Chan, Y. Z., Hui, H. K.,

and Goh, K. E. J. (2017). Electrically conductive fila-

ment for 3d-printed circuits and sensors. Applied Ma-

terials Today, 9:167–175.

Lane, J. I. and Witte, R. J. (2009). The temporal bone. An

imaging atlas. Heidelberg: Springer.

Longfield, E. A., Brickman, T. M., and Jeyakumar, A.

(2015). 3d printed pediatric temporal bone: a novel

training model. Otology & Neurotology, 36(5):793–

795.

Lozano-Hemmer (2011). Tape recorders:

Time-measuring tapes. http://dataphys.org/list/

tape-recorders-measure-time-instead-of-distance/.

McJunkin, J. L., Jiramongkolchai, P., Chung, W., South-

worth, M., Durakovic, N., Buchman, C. A., and Silva,

J. R. (2018). Development of a mixed reality platform

for lateral skull base anatomy. Otology & neurotology:

official publication of the American Otological Soci-

ety, American Neurotology Society [and] European

Academy of Otology and Neurotology, 39(10):e1137.

Mossman, C., Samavati, F. F., Etemad, K., and Dawson,

P. (2023). Mobile augmented reality for adding de-

tailed multimedia content to historical physicaliza-

tions. IEEE Computer Graphics and Applications,

43(3):71–83.

Pahr, D., Ehlers, H., Wu, H.-Y., Waldner, M., and Raidou,

R. G. (2024). Investigating the effect of operation

mode and manifestation on physicalizations of dy-

namic processes. arXiv preprint arXiv:2405.09372.

Park, J. H., Lee, Y.-B., Kim, S. Y., Kim, H. J., Jung, Y.-

S., and Jung, H.-D. (2019). Accuracy of modified

cad/cam generated wafer for orthognathic surgery.

PloS one, 14(5):e0216945.

Reich, S., Berndt, S., Kühne, C., and Herstell, H. (2022).

Accuracy of 3d-printed occlusal devices of different

volumes using a digital light processing printer. Ap-

plied Sciences, 12(3):1576.

Roo, J. S., Gervais, R., Lainé, T., Cinquin, P.-A., Hachet,

M., and Frey, J. (2020). Physio-stacks: Supporting

communication with ourselves and others via tangi-

ble, modular physiological devices. In 22nd Inter-

national Conference on Human-Computer Interaction

with Mobile Devices and Services, pages 1–12.

Rose, A. S., Webster, C. E., Harrysson, O. L., Formeis-

ter, E. J., Rawal, R. B., and Iseli, C. E. (2015). Pre-

operative simulation of pediatric mastoid surgery with

3d-printed temporal bone models. International jour-

nal of pediatric otorhinolaryngology, 79(5):740–744.

Sauvé, K., Bakker, S., and Houben, S. (2020). Econundrum: Visualizing the climate impact of dietary choice

through a shared data sculpture. In Proceedings of the

2020 ACM Designing Interactive Systems Conference,

pages 1287–1300.

Schroeder, W. J., Lorensen, B., and Martin, K. (2004). The

visualization toolkit: an object-oriented approach to

3D graphics. Kitware.

ScienceDirect (2024). Hounsfield scale - an

overview. https://www.sciencedirect.com/topics/

medicine-and-dentistry/hounsfield-scale. Accessed:

October 21, 2024.

Sun, Z., Wong, Y. H., and Yeong, C. H. (2023). Patient-

specific 3d-printed low-cost models in medical educa-

tion and clinical practice. Micromachines, 14(2):464.

Suzuki, R., Taniguchi, N., Uchida, F., Ishizawa, A.,

Kanatsu, Y., Zhou, M., Funakoshi, K., Akashi, H.,

and Abe, H. (2018). Transparent model of temporal

bone and vestibulocochlear organ made by 3d print-

ing. Anatomical science international, 93(1):154–

159.

Takahashi, K., Morita, Y., Ohshima, S., Izumi, S., Kubota,

Y., Yamamoto, Y., Takahashi, S., and Horii, A. (2017).

Creating an optimal 3d printed model for temporal

bone dissection training. Annals of Otology, Rhinol-

ogy & Laryngology, 126(7):530–536.

Tan, L., Wang, Z., Jiang, H., Han, B., Tang, J., Kang, C.,

Zhang, N., and Xu, Y. (2022). Full color 3d printing

of anatomical models. Clinical Anatomy, 35(5):598–

608.

Wan, D., Wiet, G. J., Welling, D. B., Kerwin, T., and Stred-

ney, D. (2010). Creating a cross-institutional grad-

ing scale for temporal bone dissection. The Laryngo-

scope, 120(7):1422–1427.

Wanibuchi, M., Noshiro, S., Sugino, T., Akiyama, Y.,

Mikami, T., Iihoshi, S., Miyata, K., Komatsu, K., and

Mikuni, N. (2016). Training for skull base surgery

with a colored temporal bone model created by three-

dimensional printing technology. World neurosurgery,

91:66–72.

Yushkevich, P. A., Piven, J., Hazlett, H. C., Smith, R. G.,

Ho, S., Gee, J. C., and Gerig, G. (2006). User-guided

3d active contour segmentation of anatomical struc-

tures: significantly improved efficiency and reliability.

Neuroimage, 31(3):1116–1128.
