Urban Re-Identification: Fusing Local and Global Features with

Residual Masked Maps for Enhanced Vehicle Monitoring in Small

Datasets

William A. Ramirez

a

, Cesar A. Sierra Franco

b

, Thiago R. da Motta

c

and Alberto Raposo

d

Pontifical Catholic University of Rio de Janeiro, Brazil

Keywords:

Urban Re-Identification, Dilated Region Proposal, Local and Global Attribute Fusion, Residual Connection

Modules, Multi-Object Tracking.

Abstract:

This paper presents an optimized vehicle re-identification (Re-ID) approach focused on small datasets. While

most existing literature concentrates on deep learning techniques applied to large datasets, this work addresses

the specific challenges of working with smaller datasets, mainly when dealing with incomplete partitioning

information. Our approach explores automated regional proposal methods, examining residuality and uniform

sampling techniques for connected regions through statistical methods. Additionally, we integrate global and

local attributes based on mask extraction to improve the generalization of the learning process. This led to a

more effective balance between small and large datasets, achieving up to an 8.3% improvement in Cumulative

Matching Characteristics (CMC) at k=5 compared to attention-based methods for small datasets. We improved

generalization regarding context changes of up to 13% in CMC for large datasets. The code, model, and

DeepStream-based implementations are available at https://github.com/will9426/will9426-automatic-Region-

proposal-for-cars-in-Re-id-models.

1 INTRODUCTION

Re-identification in urban areas, and generally in un-

common classes, often falls into small dataset scenar-

ios. In these cases, video frames with a temporal rela-

tionship and tracking information from one or multi-

ple cameras are commonly considered. This field can

involve various types of approaches categorized into

Mask-Guided Models (Song et al., 2018; Kalayeh

et al., 2018; Zhang et al., 2020; Lv et al., 2024),

Stripe-Based Methods (Luo et al., 2019b; Wang et al.,

2018; Fan et al., 2019), Attention-Based Methods

(Si et al., 2018; Chen et al., 2021), and GAN-Based

Methods (Jiang et al., 2021), often supplemented by

re-ranking methods (Luo et al., 2019a). The previ-

ously mentioned techniques result from challenges

focused on data availability and the need to extract in-

creasingly deeper and more representative attributes.

Mask-guided models often entail additional costs in

annotation or inference, as it is necessary to have a

a

https://orcid.org/0000-0003-1060-1523

b

https://orcid.org/0000-0002-5825-8798

c

https://orcid.org/0000-0002-9579-5867

d

https://orcid.org/0000-0001-7279-1823

process for detecting parts of the instances. (Zhang

et al., 2020) shows how this can involve an addi-

tional annotation task in exchange for optimizing

the identification process due to the availability of

more contextual information. On the other hand,

Stripe-Based Methods highlight another possible ap-

proach to global and localized attribute extraction, us-

ing statistical methods to propose areas that may be

more representative, shifting the annotation cost to a

method embedded within the model. Regarding the

use of attention modules along with partition-based

techniques, (Zhang et al., 2020) points out the fusion

of local and global attributes using squeeze and exci-

tation layers (SElayers) and attention, achieving im-

provements of up to 1.2% in the Cumulative Match-

ing Characteristics (CMC), a metric specialized in re-

identification. Each of these methods presents trade-

offs: Mask-guided models offer fine-grained detail

but require extensive annotation, increasing complex-

ity; Stripe-Based Methods simplify this at the risk of

oversimplifying regions of interest; Attention-Based

Methods balance attribute fusion but may overfit or

add computational overhead; and GAN-Based Meth-

ods provide flexibility in augmenting data but can be

computationally intensive and challenging to stabi-

574

Ramirez, W. A., Franco, C. A. S., R. da Motta, T. and Raposo, A.

Urban Re-Identification: Fusing Local and Global Features with Residual Masked Maps for Enhanced Vehicle Monitoring in Small Datasets.

DOI: 10.5220/0013176300003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 2: VISAPP, pages

574-581

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

lize.

This paper addresses the challenges associated

with small datasets by studying algorithms for effi-

cient region estimation and improving feature map

diversity to enhance model generalization. We ex-

plore using statistical methods and attribute extrac-

tion techniques to create connected areas, proposing

more representative regions or stripes that lead to fea-

ture maps with greater representativeness. The pro-

posed model consists of three branches: localized fea-

ture maps (using RPN), globalized features (leverag-

ing the backbone and our custom attribute extraction

block), and base features from the backbone. Our

case study uses the VRIC and VeRi datasets, includ-

ing cross-validation experiments with mixed training

and testing configurations, specifically Vric− >VeRi

and VeRi− >Vric. Our approach builds on the base-

line established in (Zhang et al., 2020; Luo et al.,

2019a), which emphasizes the use of camera-guided

partitioned attention and attribute fusion while dis-

cussing the limitations associated with this module.

In summary, the main contributions of our work

are as follows:

• We propose an optimized approach for region ex-

traction, demonstrating how the use of statistical

methods like Monte Carlo can be helpful for stripe

extraction while simultaneously showing how us-

ing the same backbone to propose regions can be

a successful path to achieving a more balanced

model in terms of response to variance.

• We propose a validation method for the trained

model, introducing the concept of cross-inference

between two datasets with the same category

about the instance but with apparent differences

in context and resolution. We aim to demonstrate

that the trained model can be used in other con-

texts.

• Based on the challenges discussed around re-

identification, we proposed a computationally bal-

anced and reliable model to establish a real-time

baseline using DeepStream.

1.1 Data Augmentation

Deep learning approaches are often highlighted for

their reliance on large datasets. Data augmentation

has emerged as a valuable strategy in the context of

re-identification with small datasets. Techniques such

as grayscale conversion, random erasing, image ori-

entation flips, and zooming generate synthetic data

with some variability (Gong et al., 2021; Jiang et al.,

2021). Similarly, Generative Networks (Zheng et al.,

2019; He et al., 2023; Karras et al., 2020; Karras et al.,

2021) have proven effective in increasing variability

in small datasets with low variability, facilitating data

augmentation in labeled Re-identification datasets.

In the context of GANs, DG-Net (Zheng et al.,

2019) emphasizes its ability to generalize key features

such as pose and clothing. The model incorporates a

feature disentangling module and reconstruction loss,

enhancing cross-domain generation. DG-GAN (He

et al., 2023) aims to learn from defects or irregular

regions. DG-GAN architecture includes two genera-

tors and four discriminators across two domains, en-

hancing synthetic data generation for pattern recog-

nition in land surfaces, such as roads or open areas.

The StyleGAN architecture has gained attention in re-

cent years for its contributions to synthetic datasets

(Karras et al., 2020; Karras et al., 2021). StyleGAN3

(Karras et al., 2021), represents a significant advance-

ment in computer vision, albeit with high computa-

tional demands. StyleGAN3 employs components

like the Mapping Network, Synthesis Network, and

Weight Demodulation to provide fine control over im-

age style while effectively addressing aliasing, result-

ing in high-quality images without noise associated

with generative learning.

1.2 Baseline for ReID

In the past decade, ReID methods have evolved into

several categories: Mask-Guided Models, Stripe-

Based Methods, Pose-Guided Methods, Attention-

Based Methods, and GAN-Based Methods, often with

re-ranking techniques to address data limitations and

identification challenges. Mask-guided models use

instance-specific masks derived from segmentation or

detection (Song et al., 2018; Kalayeh et al., 2018;

Zhang et al., 2020; Lv et al., 2024), combining lo-

cal and global attributes. Despite the additional pro-

cessing costs, they enhance matching performance,

achieving up to 93% for CMC@5 (Zhang et al., 2020;

Lv et al., 2024). Stripe-based methods segment im-

ages to create local embeddings, as seen in (Luo et al.,

2019b; Wang et al., 2018; Fan et al., 2019; Fawad

et al., 2020). However, alignment issues challenge

these methods, leading to approaches like Aligne-

dReID++ (Luo et al., 2019b), which reported a 3%

improvement in Rank-1 accuracy compared to mod-

els without alignment. Attention-based methods ex-

tract discriminative features without explicit masks

(Si et al., 2018; Chen et al., 2021). While effec-

tive for large datasets, they face overfitting issues

in smaller ones. For instance, (Chen et al., 2021)

reported a 9% improvement in MAP@5 for larger

datasets. Gan-based methods address small dataset

limitations by generating synthetic data. For exam-

Urban Re-Identification: Fusing Local and Global Features with Residual Masked Maps for Enhanced Vehicle Monitoring in Small Datasets

575

ple, (Jiang et al., 2021) enhanced person category

diversity using GANs, achieving a 1% improvement

in CMC@1. Batch Normalization improves training

stability and generalization for large datasets. (Luo

et al., 2019a) demonstrated up to a 6% enhancement

for CMC@1.

2 METHOD

2.1 Dilated Region Proposal for Cars

(DRPC)

We proposed a module inspired by (Chen et al.,

2023; Lv et al., 2024), where we use the ResNet-

50 backbone to propose regions. Initially, we use

the first layer of our backbone, where we extract

low-level features. In this layer, with tensor dimen-

sions [B, C, H, W], a feature map is generated as

F = ResNet(input tensor). We then average across

the channel dimension to obtain a 2D representation

of the feature magnitudes, as shown in Eq. 1.

M

avg

(b, h, w) =

1

C

out

C

out

∑

c=1

F(b, c, h, w) (1)

Where M

avg

is the average tensor with dimensions

[B, 1, H

out

,W

out

].

To create a candidate region based on Eq. 2, we

use an adaptative threshold over the global average

value of M

avg

:

M

avg

(b, h, w) =

(

1 if M

avg

(b, h, w) > µ

avg

0 if M

avg

(b, h, w) ≤ µ

avg

(2)

Where µ

avg

is the global average value of M

avg

de-

scribed on Eq. 3.

µ

avg

=

1

B · H

out

·W

out

B

∑

b=1

H

out

∑

h=1

W

out

∑

w=1

M

avg

(b, h, w) (3)

Thus, we obtain a binary candidate mask that

highlights areas of interest based on the feature map

extracted. However, our goal is to create regions

around these initially highlighted characteristics in

stripes, aiming for the model to emphasize contours

and other low-level features of the pre-selected area.

To create the areas, we initialize a mask M

regions

with dimensions [B, N, H

out

,W

out

], where N is the

number of desired regions. Each vertical stripe is ex-

tracted and dilated. Consider a stripe R with dimen-

sions [H

out

, width], where ‘width‘ is the width of the

stripe. The dilation is performed using a structural

operation that expands the stripe. If R is the original

stripe and D is the result after dilating R, as shown in

Eq. 4:

D

dilated

= R ⊕ K (4)

Where ⊕ denotes the morphological dilation and

K is a dilation kernel (in this case, a matrix of ones).

Finally, the dilated stripe D

dilated

is assigned to the re-

gion mask M

regions

in the corresponding position, Eq.

[5,6] describe the proposed region and the result in

the extraction within the proposed area.

M

regions

(b, i, :, start

i

: end

i

) = D

dilated

(5)

F

prop

n

= F ⊙ M

regions

(6)

2.2 Quasi-Monte Carlo for Proposal

Regions (QMCPR)

This section introduces the use of Quasi-Monte

Carlo (QMC) to generate proposal regions without

relying on pre-annotated masks or outputs from seg-

menters or detectors. Strips x

n

are defined in a unit

space [0, 1]

d

using the Halton sequence, which dis-

tributes points uniformly. For the two-dimensional

case d = 2, each strip x

n

= (x

n

, y

n

) is derived using

bases b

1

and b

2

, as shown in Eq. 7.

x

n

= (φ

b

1

(n), φ

b

2

(n)) (7)

where φ

b

(n) is the inverse radical function in base

b, which converts an integer n into a fraction in base

b. Initially, the most statistically discriminative ar-

eas are selected through a histogram analysis of the

instance-level image to restrict the search areas and

highlight the discriminative areas. Based on this anal-

ysis, greater weight is given to the bases in the most

relevant regions.

Subsequently, the QMC method is applied to dis-

tribute the strips in these areas, ensuring that the strips

cover at least 15% of the total width or length of the

image, which is crucial to guarantee that the strips

contain sufficient visual context. This strategy is ben-

eficial for providing variability of context or informa-

tion to the model, allowing the model to relate the

instance from a smaller context. The generated strips

x

n

are originally fractions within the range [0, 1]. To

adapt them to the image space of size H ×W (where H

and W are the dimensions of the image), these points

are scaled to the appropriate range, as shown in Eq. 8.

p

n

= x

n

×

W − w

m

H − h

m

(8)

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

576

where w

m

and h

m

are the dimensions of the pro-

posed mask. The scaled values p

n

= (p

x

, p

y

) are

rounded to obtain the pixel indices (x

start

, y

start

) where

the mask will begin in the image. For each generated

strip p

n

, a binary strip mask M

n

of size w

m

× h

m

is

defined in an image of size H × W . Eq. 9 shows the

cases for generating the strip.

M

n

(i, j) =

1 if

x

start

≤ i < x

start

+ w

m

y

start

≤ j < y

start

+ h

m

0 otherwise

(9)

Where (i, j) are the pixel indices in the image, the

masks M

n

are used to propose specific regions of the

image that are then processed to extract a series of

features. F is an image feature obtained from an in-

termediate layer of the network, so by applying the

mask M

n

on F, a proposed region feature is generated.

Eq. 10 describes how we use the proposed region to

define our proposed feature map.

F

prop

n

= F ⊙ M

n

(10)

where ⊙ denotes the element-wise product. This

proposed feature F

prop

n

can be used to train the model

by combining it with the global feature of the image,

allowing the model to focus on both local and global

features.

2.3 Feature Maps

The proposed model will address three branches of

the extraction and fusion of attributes: a base feature

map, a global feature map, and a local feature map.

The base map will describe the most superficial at-

tributes found in the initial layers of the network, ob-

tained through convolutions at different levels. In this

stage, our model aims to capture patterns that may be

associated with edges, textures, and colors (low di-

mensionality). Eq. 11 shows what this branch repre-

sents.

f

base

= σ(W ∗ X + b) (11)

where

W is the convolution kernel, ∗ represents the con-

volution operation, X is the input (image or previous

features), b is the bias, and σ is an activation function

(such as ReLU).

Concerning the global attributes, these are ob-

tained by aggregating all the spatial information of the

image into a single representative vector using Global

Average Pooling (GAP), just as shown in Eq. 12.

f

global

=

1

H ×W

H

∑

i=1

W

∑

j=1

f

i j

(12)

where f

i j

is the feature vector at the spatial posi-

tion (i, j), and H ×W is the size of the spatial feature.

In our small dataset context, f

global

will contain

representations that help prevent overfitting by not re-

lying on specific details and providing a robust repre-

sentation that tolerates minor variations in the image.

f

proposal

will represent the local attributes ex-

tracted from the regions or stripes. In our case,

M

regions

will be the proposed stripes using the meth-

ods mentioned above, which will be used as delim-

iters to extract more localized attributes, f

proposal

in

Eq. 13.

f

proposal

=

M

∑

M=1

α

M

· f

(Input,M)

(13)

where α

M

are the selection weights, and f

Input,M

are the features in M region . f

proposal

generates repre-

sentative regions obtained through the outputs of the

DRPRC or QMCPR modules.

Subsequently, the reduced features are obtained

through a reduction of dimensionality for the feature

map of the proposed regions, performing an aggrega-

tion along with the activation, see Eq. 14.

f

reduce

= σ

1

F

F

∑

f =1

proposal

b, f

!

(14)

In this case, we calculate the mean of the values

across the feature dimension for each batch b.

2.4 Set up

Regarding our loss metric, we considered a fusion of

losses in triplet loss, starting with a cross-entropy loss

related to the class, followed by a loss for base at-

tributes, another for the proposed region, and another

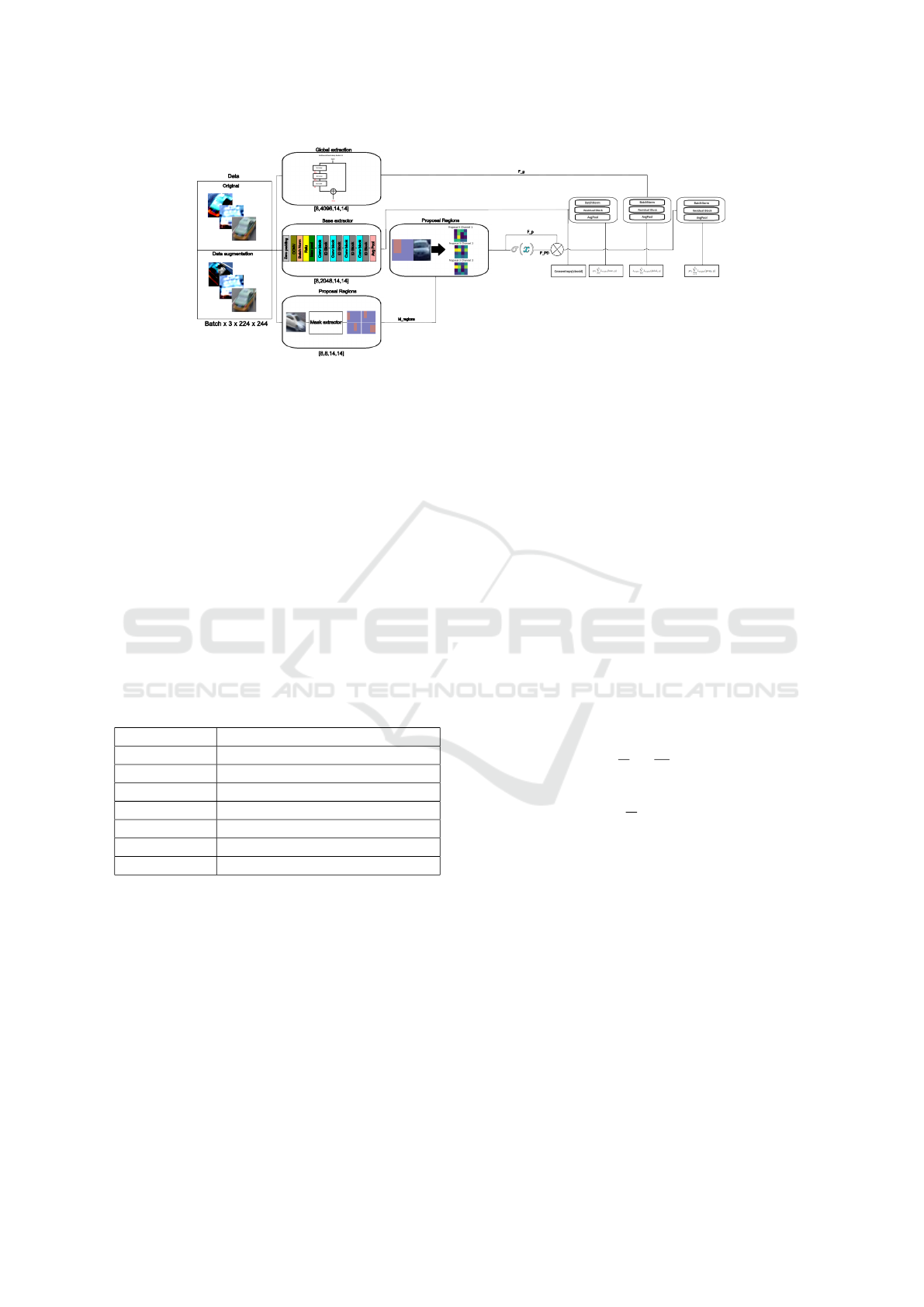

for global attributes. Figure 1 shows the meaning of

each of these losses.

The total loss function (L

total

) is given by the Eq.

15 where λ

triplet

is defined in 16.

L

total

=λ

id

· L

CE

(cls

i

, y)

+ λ

triplet

· L

triplet

(global

i

, y)

+ pr

1

· L

triplet

(prop

i

, y)

+ pr

2

· L

triplet

(base

i

, y)

(15)

L

triplet

= max(0, dist

ap

− dist

an

+ margin) (16)

Where:

λ

id

is the weight assigned to the identification loss.

λ

triplet

is the weight assigned to the triplet loss. pr

1

is

the weight assigned to the proposal loss. pr

2

is the

Urban Re-Identification: Fusing Local and Global Features with Residual Masked Maps for Enhanced Vehicle Monitoring in Small Datasets

577

Figure 1: Reid training Pipeline.

weight assigned to the base loss. L

CE

is the cross-

entropy loss function, which measures the discrep-

ancy between the class prediction and the target label.

L

triplet

is the triplet loss function, which measures the

relative distance between an anchor, a positive, and

a negative in the feature space. N is the number of

layers or extracted features to which the loss is ap-

plied. cls

i

refers to the classification outputs of each

layer. global

i

refers to the global features extracted

from each layer. prop

i

refers to the proposed features

extracted from each layer. base

i

refers to the base fea-

tures extracted from each layer. y is the target label

for classification. dist

ap

defines Anchor-Positive Dis-

tance and dist

an

defines Anchor-Negative Distance.

About our approach, we used the training config-

urations described in Table 1.

Table 1: Training Configuration.

Configuration Value

Input Size 3x224x224

Epochs 200

Early Stop CMC rank 1 tolerance (10 epochs)

Augmentation Flip, Rotation, Scaling, Grayscale

Learning Rate 0.001

Optimizer Adam

Reduce LR On Plateau

3 EXPERIMENTS AND RESULTS

3.1 Datasets and Metrics

We use the VRIC dataset (Kanaci et al., 2018) in

both small and large variants. The small version has

5,854 training samples (220 IDs, 10-30 samples/ID)

and 2,811 gallery samples (validation), maintaining

the original test set for consistent comparative anal-

ysis. The large version retains all 60,430 samples

(5,622 IDs). Additionally, we use the VeRi dataset

(Liu et al., 2016) to evaluate the model’s adaptabil-

ity to domain shifts. Our validation protocol consid-

ered cross-dataset evaluation of metrics to assess per-

formance in two different scenarios: one with richer

contextual information and the other with higher res-

olution.

The small dataset is designed to evaluate model

performance with fewer, more variable inputs, test-

ing generalization on unseen validation IDs. Data

augmentation includes flips, grayscale conversions,

area-specific and full rotations, and GAN-based view

changes.

Performance is measured using mean Average

Precision (mAP) and Cumulative Matching Char-

acteristic (CMC) metrics, commonly used in re-

identification tasks. mAP assesses retrieval effective-

ness (Eq. 17), while CMC evaluates ranking accuracy

(Eq. 18) for top k = 1, 5, 10.

mAP =

1

N

N

∑

i=1

1

m

i

m

i

∑

k=1

P(k) (17)

CMC(r) =

1

N

N

∑

i=1

δ(rank

i

≤ r) (18)

3.2 Analysis of Attribute Extraction

Method

The present work evaluated two methods for region

generation. The first, DRPC, is based on generating

dilated regions using contours from the first layer of

the ResNet50 backbone, which involves a computa-

tional cost of O(batch) × processing1 + O(regions).

In the first stage, we iterate over the entire batch and

return the first layer in the forward pass, performing a

pass through the model and returning the first convo-

lution with binarization. The second stage encloses

each binarized area based on a dilation. The sec-

ond method, QMCPR, has a sublinear computational

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

578

cost and generates masks with an approximate com-

putational cost of O(batch × regions), approximately

O(8n), where ”regions” is a parameter fixed at 8, and

”batch” is a variable input parameter. Both methods

did not drastically modify the computational cost dur-

ing training execution.

On the other hand, these two algorithms provide

a base approach using masks that can be generalized

to any ReID dataset, reducing the need for manual

annotations or training models for part segmentation.

The previously mentioned methods will enable the lo-

cal extraction of features. Since these do not rely on

pre-annotated masks, they will evaluate more repre-

sentative regions using the ResNet backbone’s upper

layers or by selecting regions based on distributions

within a unit field representing the area of interest.

Subsequently, using extraction blocks, the features

within these regions will be extracted and reduced

to more relevant values using activations suggested

in this work. The proposed use of regions enhances

feature diversity by leveraging incomplete partition-

ing, which fuses and refines attributes from local,

global, and base extractions. This approach enables

the model to learn more discriminative contextual and

instance-specific information in small datasets, mak-

ing it a more robust solution.

Table 2 provides an initial notion of what these

methods imply in small datasets. Here, we compare

the model with mask guidance, the GRFR, and the

DRPC model against the baseline PGAN model with-

out attention. We observe that the proposed methods

are quite close to the training done entirely with the

masks generated for the VRIC dataset, provided in

(Zhang et al., 2020), showing a correlation with the

extraction of local attributes, which is a positive in-

dicator that our method successfully proposes highly

discriminative regions.

Table 2: Results of Different Models on Small-dataset Re-

Identification Tasks.

Model mAP CMC Top 1 CMC Top 5

PGAN 45.8 33.7 60.1

model mask guided 53.9 43.2 66.2

model GRFR (ours) 53.5 43.1 67.3

model DRPC (ours) 56.0 44.5 68.3

model DRPC+AUG view (ours ) 63.3 53.3 74.9

In the experimental stage, we observed that pay-

ing attention to small datasets tends to degrade the

gradient, resulting in less model generalization. Com-

paring PGAN with and without attention (PGAN vs.

model mask guided), we see that in small datasets,

more globalized learning tends to bring better perfor-

mance in terms of the CMC Top K=5 metric (approx-

imately 6% improvement). In small datasets, the lim-

ited diversity and number of examples lead the atten-

tion mechanism to overfit specific, less generalizable

local features rather than capturing broader patterns.

This overfitting causes the model to become sensitive

to noise or small variations in the training data, which

in turn degrades the gradient during optimization and

hinders the model’s ability to generalize effectively

to unseen samples. Regarding other masking meth-

ods, our trend was quite in line with PGAN without

attention, in some cases having a better CMC K=5

(approximately 1.1% improvement).

3.3 Performance of Our Model

Our model is designed to handle small datasets with-

out region annotations, using a model masking ap-

proach based on the forward pass of the same model.

As depicted in Figure 1, we emphasize more repre-

sentative areas of the feature maps through a reduc-

tion facilitated by an activation function. This ap-

proach reduced training time by several seconds due

to its lower complexity, eliminating the need for at-

tention mechanisms and specialized refinement mod-

ules. Specifically, the training times were 66 seconds

per batch (size of 8 samples) for the base model, com-

pared to 62 seconds with the DRPC method and 60

seconds with the QMCPR method. These tests were

performed using an NVIDIA GeForce RTX 3060, a

13th Gen Intel® Core™ i5-13600KF (20 cores), and

32GB of RAM.

Table 3: Experimental results of Different Models on Large

Dataset Re-Identification Tasks, V RIC− > V RIC.

Model mAP CMC Top 1 CMC Top 5

PGAN 84.5 77.4 93.1

model DRPC (ours) 82.0 74.9 89.8

As shown in Table 3, our model lags behind

attention-based models on large datasets. This is be-

cause attention-based models typically involve deeper

feature extraction layers. In contrast, models based on

Squeeze-and-Excitation layers and attention mecha-

nisms achieved up to a 3.3% improvement.

However, as illustrated in Table 4, our model

demonstrated balanced performance across various

scenarios due to our generalized approach in extract-

ing local discriminative features and global attributes.

Our model had fewer parameters, reducing inference

times: 0.0302 seconds for the base model, 0.0095 sec-

onds for QMPRC, and 0.0216 seconds for DPRC.

Table 4 details the performance of our model in

mixed scenarios. The first scenario involves train-

ing and evaluating the VeRi model on the VeRi test

set. This setup shows that VeRi, a higher-quality

dataset, allows more straightforward methods that im-

prove variability by expanding the visual context to

Urban Re-Identification: Fusing Local and Global Features with Residual Masked Maps for Enhanced Vehicle Monitoring in Small Datasets

579

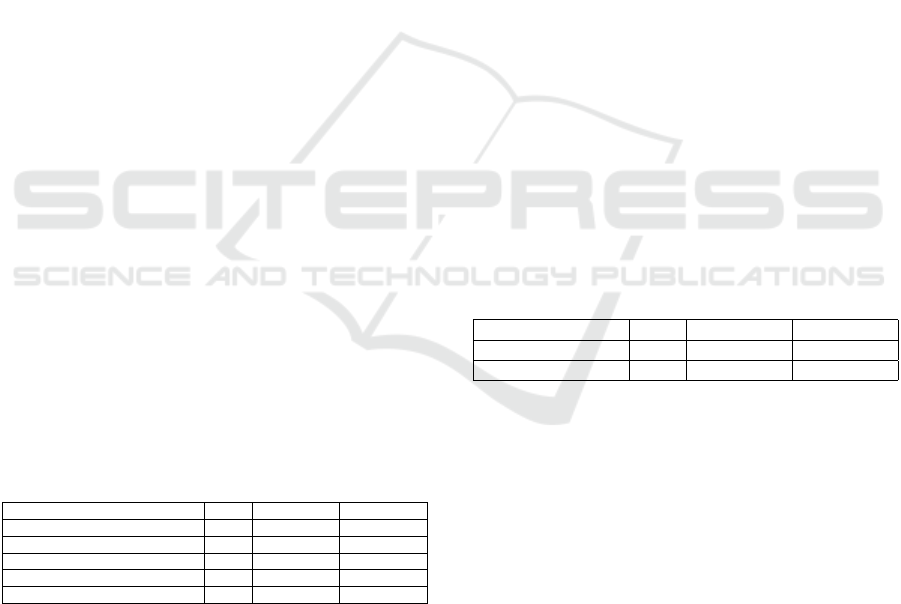

Figure 2: Qualitative inference of our model, VRIC to VeRi vs. VRIC to VRIC vs. large VRIC to VeRi.

Table 4: Experimental results of Different Models on Large

dataset Re-Identification Tasks, V RIC− > VeRi vs VeRi− >

V RIC.

Model CMC Top 1 CMC Top 5 CMC Top 10 Train Test

PGAN 95.2 97.5 98.7 VeRi VeRi

PGAN 14.3 26.1 33.0 VeRi VRIC

PGAN 44.9 56.9 66.2 VRIC large VeRi

PGAN 37.5 43.2 50.8 VRIC small VeRi

DRPC (ours) 94.8 97.2 98.4 VeRi VeRi

DRPC (ours) 39.6 53.3 60.9 VRIC small VeRi

DRPC (ours) 56.8 62.1 68.0 VRIC large VeRi

DRPC (ours) 21.8 38.2 46.4 VeRi VRIC

perform well in similar contexts. As observed, PGAN

and the proposed method are nearly identical regard-

ing the CMC curve for VeRi vs. VeRi, with only a 0.5

percentage point difference at K=5.

Regarding susceptibility to input variability, our

model’s ability to learn contextual regions allowed it

to achieve superior performance in the Cumulative

Matching Characteristic (CMC) metric with K = 5

when transitioning from the VeRi dataset to the VRIC

dataset (VeRi vs VRIC). Specifically, the model im-

proved up to 12.1 percentage points, indicating en-

hanced generalization capabilities. In contrast, the

model trained with a smaller dataset and data aug-

mentation fell 5.3 percentage points short compared

to the baseline model trained on the full dataset.

3.4 Discussion of Results

Our model focused on improving generalization and

performance on small datasets. This led to evaluating

the performance of our model in terms of changes in

the number of samples and context variations. Figure

2 shows how the matching works for the k=5 smallest

distances concerning our query, evaluated on a gallery

or query set.

Figure 2 shows some critical and common cases

regarding re-identification for VRIC and VeRi. Re-

garding the VRIC dataset, we found quite a few

matches for the top 5, considering the dimensionality

of the samples used in the training, which is quite pos-

itive. The shift in context to VeRi demonstrated that

part of the knowledge extracted from VRIC helped to

re-identify a wide variety of instances in this dataset.

The scores in the context shift was deficient compared

to the base model trained with attention and the entire

VRIC set due to the inherent limitations of our small

dataset’s dimensionality. Regarding our model’s ef-

fectiveness in generalizing learning in a large dataset,

we also observed that our model not only reduced

the necessary training time but also generalized the

learning optimally, achieving a balance between per-

formance and susceptibility to variability.

4 COPYRIGHT FORM

This work is licensed under the Creative Com-

mons Attribution-NonCommercial-NoDerivatives 4.0

International License. To view a copy of this

license, visit http://creativecommons.org/licenses/

by-nc-nd/4.0/. This license permits non-commercial

use, distribution, and reproduction in any medium,

provided the original work is properly cited and is not

modified or adapted in any way.

5 CONCLUSIONS

Our approach demonstrated how to tackle a mask-

based method by creating regions through the same

activations proposed by the backbone, generating

partitions with greater context, and establishing a

baseline for scenarios with high-resolution instances

where emphasis is placed on the fusion of global and

local attributes. Along the same lines, we empha-

sized creating more discriminative feature maps by

reducing feature maps of proposed regions, leading to

more representative representations per proposed re-

gion. This made our approach more robust in domain

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications

580

shift and small datasets. Providing greater context and

more localized information resulted in our model out-

performing our baseline by 8% in the small dataset

case, comparing our baseline PGAN result with our

DPRC-based model in the context of VRIC small. In

domain shift, our model (DPRC) exceeded our base-

line (PGAN) by up to 11% in CMC k=1, showing pos-

itive effects of proposing context-rich region parts in

using VRIC to train and VeRI for inference. Addi-

tionally, our approach aimed to verify the limitations

involved in small sample volumes and observed how

implementing classical techniques oriented towards

morphological transformations and using GANs for

simple changes like color and texture can help address

issues where our study instance is highly costly to an-

notate.

ACKNOWLEDGEMENTS

This study was financed in part by the Coordination of

Superior Level Staff Improvement - Brasil (CAPES)

- Finance Code 001.

REFERENCES

Chen, H., Zhao, Y., and Wang, S. (2023). Person re-

identification based on contour information embed-

ding. Sensors, 23(2):774.

Chen, X., Xu, H., Li, Y., and Bian, M. (2021). Person re-

identification by low-dimensional features and metric

learning. Future Internet, 13(11):289.

Fan, X., Luo, H., Zhang, X., He, L., Zhang, C., and

Jiang, W. (2019). Scpnet: Spatial-channel paral-

lelism network for joint holistic and partial person re-

identification. In Computer Vision–ACCV 2018: 14th

Asian Conference on Computer Vision, Perth, Aus-

tralia, December 2–6, 2018, Revised Selected Papers,

Part II 14, pages 19–34. Springer.

Fawad, Khan, M. J., and Rahman, M. (2020). Person re-

identification by discriminative local features of over-

lapping stripes. Symmetry, 12(4):647.

Gong, Y., Zeng, Z., Chen, L., Luo, Y., Weng, B., and Ye, F.

(2021). A person re-identification data augmentation

method with adversarial defense effect. arXiv preprint

arXiv:2101.08783.

He, X., Luo, Z., Li, Q., Chen, H., and Li, F. (2023). Dg-

gan: A high quality defect image generation method

for defect detection. Sensors, 23(13):5922.

Jiang, Y., Chen, W., Sun, X., Shi, X., Wang, F., and Li, H.

(2021). Exploring the quality of gan generated images

for person re-identification. In Proceedings of the 29th

ACM International Conference on Multimedia, pages

4146–4155.

Kalayeh, M. M., Basaran, E., G

¨

okmen, M., Kamasak,

M. E., and Shah, M. (2018). Human semantic pars-

ing for person re-identification. In Proceedings of

the IEEE conference on computer vision and pattern

recognition, pages 1062–1071.

Kanaci, A., Zhu, X., and Gong, S. (2018). Vehicle re-

identification in context. In Pattern Recognition - 40th

German Conference, GCPR 2018, Stuttgart, Ger-

many, September 10-12, 2018, Proceedings.

Karras, T., Aittala, M., Laine, S., H

¨

ark

¨

onen, E., Hellsten, J.,

Lehtinen, J., and Aila, T. (2021). Alias-free generative

adversarial networks. Advances in neural information

processing systems, 34:852–863.

Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J.,

and Aila, T. (2020). Analyzing and improving the im-

age quality of StyleGAN. In Proc. CVPR.

Liu, X., Liu, W., Mei, T., and Ma, H. (2016). A deep

learning-based approach to progressive vehicle re-

identification for urban surveillance. In Computer

Vision–ECCV 2016: 14th European Conference, Am-

sterdam, The Netherlands, October 11-14, 2016, Pro-

ceedings, Part II 14, pages 869–884. Springer.

Luo, H., Jiang, W., Gu, Y., Liu, F., Liao, X., Lai, S., and

Gu, J. (2019a). A strong baseline and batch normal-

ization neck for deep person re-identification. IEEE

Transactions on Multimedia, 22(10):2597–2609.

Luo, H., Jiang, W., Zhang, X., Fan, X., Qian, J., and Zhang,

C. (2019b). Alignedreid++: Dynamically matching

local information for person re-identification. Pattern

Recognition, 94:53–61.

Lv, G., Ding, Y., Chen, X., and Zheng, Y. (2024).

Mp2pmatch: A mask-guided part-to-part matching

network based on transformer for occluded person re-

identification. Journal of Visual Communication and

Image Representation, 100:104128.

Si, J., Zhang, H., Li, C.-G., Kuen, J., Kong, X., Kot, A. C.,

and Wang, G. (2018). Dual attention matching net-

work for context-aware feature sequence based per-

son re-identification. In Proceedings of the IEEE con-

ference on computer vision and pattern recognition,

pages 5363–5372.

Song, C., Huang, Y., Ouyang, W., and Wang, L. (2018).

Mask-guided contrastive attention model for person

re-identification. In Proceedings of the IEEE con-

ference on computer vision and pattern recognition,

pages 1179–1188.

Wang, G., Yuan, Y., Chen, X., Li, J., and Zhou, X. (2018).

Learning discriminative features with multiple granu-

larities for person re-identification. In Proceedings of

the 26th ACM international conference on Multime-

dia, pages 274–282.

Zhang, X., Zhang, R., Cao, J., Gong, D., You, M., and Shen,

C. (2020). Part-guided attention learning for vehicle

instance retrieval. IEEE Transactions on Intelligent

Transportation Systems, 23(4):3048–3060.

Zheng, Z., Yang, X., Yu, Z., Zheng, L., Yang, Y., and Kautz,

J. (2019). Joint discriminative and generative learn-

ing for person re-identification. In proceedings of the

IEEE/CVF conference on computer vision and pattern

recognition, pages 2138–2147.

Urban Re-Identification: Fusing Local and Global Features with Residual Masked Maps for Enhanced Vehicle Monitoring in Small Datasets

581