A Proposed Immersive Digital Twin Architecture for Automated Guided Vehicles Integrating Virtual Reality and Gesture Control

Mokhtar Ba Wahal¹, Maram Selsabila Mahmoudi¹, Ahmed Bahssain¹, Ikrame Rekabi¹, Abdelhalim Saeed¹, Mohamad Alzarif¹, Mohamed Ellethy², Neven Elsayed³, Mohamed Abdelsalam² and Tamer ElBatt¹,⁴

¹ Department of Computer Science and Engineering, The American University in Cairo, Cairo, Egypt

² Siemens EDA, Cairo, Egypt

³ Know-Center, Graz, Austria

⁴ Department of Electronics and Communications Engineering, Faculty of Engineering, Cairo University, Giza, Egypt

{mokhtarsalem, marammahmoudi, ahmed.bahusain, ikrame.rekabi, abdelhalim saeed, mohamadalzarif, tamer.elbatt}@aucegypt.edu, {mohamed.abd el salam ahmed, mohamed.ellethy}@siemens.com, nelsayed@know-center.at

Keywords:

Human-Robot Interaction, Virtual Reality, Modeling, Digital Twin, Immersive Systems, Cyber-Physical Systems.

Abstract:

Digital Twins (DTs) are virtual replicas of physical assets that facilitate a better understanding of complex Cyber-Physical Systems (CPSs) through bidirectional communication. As CPSs grow in complexity, enhanced visualization and interaction become essential. This paper presents a framework that integrates virtual reality (VR) with a Dockerized private cloud to minimize communication latency between digital and physical assets, improving real-time communication. The integration is based on the Robot Operating System (ROS) and leverages its modularity and extensive libraries to streamline robotic control and system scalability. Key innovations include a proximity heat map surrounding the digital asset for enhanced situational awareness and VR-based hand gesture control for intuitive interaction. The framework was tested using a TurtleBot3 and a 5-degree-of-freedom robotic arm, with a user study comparing these techniques to traditional web-based control methods. Our results demonstrate the efficacy of the proposed VR and private cloud integration, providing a promising approach to advancing Human-Robot Interaction (HRI).

1 INTRODUCTION AND RELATED WORK

Designing a digital representation of a physical asset has long been considered an effective way to study, analyze, and control that asset. Dr. Michael Grieves first introduced the concept of Digital Twins (DTs) in 2002 as real-time duplicates of physical assets with seamless data exchange for several purposes, including monitoring, comprehension, and optimization (Alexandru et al., 2022). However, the concept gained wider attention in 2010, when NASA recognized its potential uses. NASA’s interest helped shift the focus toward advancing the technology, even though NASA did not successfully implement Digital Twins at the time (Saddik, 2018). DTs are considered important strategic technologies because they save time and reduce cost, and because they precisely simulate operational settings by using the digital asset as a virtual representation for design, testing, and operations (Attaran and Celik, 2023; Crespi et al., 2023).

Implemented Digital Twin applications focus mostly on manufacturing, whereas far fewer target mission-critical applications such as robots operating in rescue missions (Pirker et al., 2022). The success of robot-operated critical missions depends on situational awareness of the robot and its environment (Riley and Endsley, 2004). Most rescue Digital Twins found in the literature do not include an Automated Guided Vehicle (AGV), a mobile robot designed for autonomous navigation, which limits their effectiveness in dynamic environments. Moreover, traditional methods for actuating robotic arms, such as web-based dashboards or keyboard buttons, have proven limited in flexibility and ease of use, particularly for users unfamiliar with robotic systems (Abdulla et al., 2020).

Wahal, M. B., Mahmoudi, M. S., Bahssain, A., Rekabi, I., Saeed, A., Alzarif, M., Ellethy, M., Elsayed, N., Abdelsalam, M. and ElBatt, T.

A Proposed Immersive Digital Twin Architecture for Automated Guided Vehicles Integrating Virtual Reality and Gesture Control.

DOI: 10.5220/0013176900003912

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 1: GRAPP, HUCAPP and IVAPP, pages 267-275

ISBN: 978-989-758-728-3; ISSN: 2184-4321

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

In recent years, there has been increasing interest in Human-Robot Interaction (HRI) techniques for enhancing Digital Twin applications, driven by the need for more intuitive and effective methods to improve operators’ situational awareness (Gallala et al., 2022). One effective technology for enhancing HRI is Virtual Reality (VR), which enables users to engage and interact within a synthesized three-dimensional environment (Gigante, 1993). VR enhances HRI directly by offering a more immersive user experience, interactive simulations, and intuitive actuation methods. Together, these features yield a more effective and efficient environment for monitoring and controlling DT-aided telerobotics, specifically an AGV with an integrated robotic arm. In their survey, Mazumder et al. (2023) highlighted the effectiveness of VR data processing for enhancing robot control and assisting human operators. They discussed the development of a telerobotic workspace with Digital Twin integration for gesture-based robot control. Additionally, they noted that VR/MR (Mixed Reality) interfaces significantly improved DT-aided telerobotics by providing more immersive controls, which are particularly useful for operations in hazardous environments. In such systems, the physical and digital assets must be synchronized to ensure correct bidirectional data flow. Ellethy et al. (2023) proposed a novel architecture that uses a Dockerized private cloud to minimize the latency between the two ends of the system. A Dockerized private cloud is a set of Docker containers hosting services such as storage, AI, and real-time monitoring (Ellethy et al., 2023). A comparative analysis of public and private cloud-based systems, using latency and computation as metrics, showed the advantage of using a Dockerized private cloud to host the services provided by the Digital Twin.

In this work, we propose a novel architecture that enhances HRI and the immersive experience of Digital Twin practitioners by integrating VR with a Dockerized private cloud, minimizing latency so that the user can handle critical missions. We used an open-source Software Development Kit (SDK) to construct the Digital Twin and extended it to integrate the virtual reality part of the system.

The remainder of this paper is organized as follows. Section 2 provides an overview of the system, highlighting the tools and components used to implement the project. Section 3 then presents a detailed description of the system architecture, with technical details on integrating a Dockerized private cloud and Virtual Reality. Section 4 introduces methods to improve HRI using VR technology, including a Heat Map that enhances the user’s situational awareness and gesture-based actuation methods for more immersive control of the AGV and the robotic arm; it also discusses a user study that evaluates the proposed actuation methods. Finally, Section 5 presents conclusions and suggests potential directions for future research.

Figure 1: A diagram showcasing the proposed Digital Twin architecture, making use of a Dockerized private cloud.

2 SYSTEM OUTLINE

This section gives background on the tools used in the project, since familiarity with the following concepts and tools is essential to fully understanding the project’s details.

The TurtleBot3 is a small, programmable mobile robot available in two models, “Waffle” and “Burger.” We opted for the Waffle model for its compatibility with the arm manipulator and its suitability for research and educational purposes. More generally, the TurtleBot3 was selected for its open-source architecture, modularity, and extensive community support, which make it ideal for developing complex robotic applications (Amsters and Slaets, 2020).

The TurtleBot3 is equipped with a Light Detection and Ranging (LIDAR) sensor, mounted on top of the robot, that accurately measures the distance to surrounding objects by emitting laser pulses and analyzing their reflections. The robot can navigate autonomously using Simultaneous Localization and Mapping (SLAM), a method that allows robots and other autonomous vehicles to build a map and localize themselves within it simultaneously. We mapped our environment with the gmapping package, which provides laser-based SLAM as a ROS node called slam_gmapping.

In addition to the LIDAR, other key components of the TurtleBot3 include the Raspberry Pi (RPi) and the OpenCR board. The Raspberry Pi, placed one level below the LIDAR, serves as the main computer: it provides the processing power to run the robot’s software, manage communication, and handle sensor data. The OpenCR board, placed below the RPi, is the robot’s microcontroller: it interfaces with hardware components such as motors and sensors and executes low-level control tasks for precise, real-time operation. Finally, the lowest level holds the battery and two Dynamixel XL430-W250 servo motors that help stabilize the robot’s speed and position (Amsters and Slaets, 2020).

GRAPP 2025 - 20th International Conference on Computer Graphics Theory and Applications

Beyond the TurtleBot3, we integrated a five-degree-of-freedom robotic arm, digitally represented by the OpenMANIPULATOR-X simulation, to provide a comprehensive Digital Twin for testing and control.

The TurtleBot3 runs a Linux-based operating system, specifically Ubuntu 20.04, installed on the RPi’s SD card. To program and control the TurtleBot3 and its robotic arm, we used ROS, a flexible and powerful framework for robotics software development. ROS provides features that support a microservices architecture, emphasizing decentralization, fault tolerance, and decoupling of system components through ROS nodes and topics. These nodes can run across multiple devices and communicate through mechanisms such as request/response services or publish/subscribe topics. We selected ROS as the primary framework because of its widespread adoption in the robotics community, its extensive library support, and its facilitation of modular development, which accelerates implementation and fosters collaboration (Estefo et al., 2019).
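To illustrate the publish/subscribe decoupling described above, here is a minimal in-process sketch. It does not use ROS: the `TopicBus` class is a hypothetical stand-in for a ROS Master’s topic routing, meant only to show that a publisher addresses a topic name rather than a specific receiver.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List


class TopicBus:
    """Hypothetical in-process stand-in for ROS topic routing (not the ROS API)."""

    def __init__(self) -> None:
        # Maps each topic name to the callbacks subscribed to it.
        self._subscribers: Dict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> int:
        # Deliver to every subscriber; the publisher never needs to know
        # who (if anyone) is listening. Returns the number of deliveries.
        callbacks = self._subscribers.get(topic, [])
        for callback in callbacks:
            callback(message)
        return len(callbacks)


bus = TopicBus()
received = []
bus.subscribe("/cmd_vel", received.append)
delivered = bus.publish("/cmd_vel", {"linear_x": 0.1, "angular_z": 0.0})
unheard = bus.publish("/joint_states", {"position": [0.0] * 5})  # no subscribers yet
```

In real ROS, the Master only brokers the connection and nodes then exchange messages directly, possibly across machines; the sketch collapses that into a single process.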

The Dockerized private cloud architecture is a layered architecture originally proposed by Ellethy et al. (2023). It offers significant advantages in latency reduction, scalability, and maintenance, and is composed of three main layers:

The Hardware-Specific Layer. It includes both the digital and the physical assets. Each asset is managed by its own ROS Master and an identical ROS subsystem. Separating the ROS Masters avoids single points of failure and prevents bottlenecks, while the identical subsystems ensure modularity and seamless replication. Individual ROS nodes handle different functionalities, such as collecting sensory data, processing actuation commands, and streaming video.

The Middleware Layer. It facilitates communication between the first and third layers by marshaling data between them, so that each ROS node in Layer 1 has a corresponding middleware node for data translation. Communication between the hardware-specific layer and the Dockerized private cloud layer is achieved through WebSockets, which makes this layer hardware-agnostic and scalable.
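A middleware node’s marshaling step might look like the following sketch: it wraps a joint-state reading in a JSON envelope suitable for a WebSocket frame and unwraps it on the receiving side. The envelope fields (`topic`, `type`, `stamp`, `data`) and the simplified `JointState` class are hypothetical; the text does not specify the wire format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List, Tuple


@dataclass
class JointState:
    # Simplified, hypothetical stand-in for sensor_msgs/JointState (names and positions only).
    name: List[str]
    position: List[float]


def marshal(topic: str, msg: JointState, stamp: float) -> str:
    """Wrap a ROS-like message in a JSON envelope for a WebSocket frame."""
    envelope = {
        "topic": topic,
        "type": "sensor_msgs/JointState",
        "stamp": stamp,
        "data": asdict(msg),
    }
    return json.dumps(envelope)


def unmarshal(frame: str) -> Tuple[str, JointState]:
    """Recover the topic and message from a JSON envelope."""
    envelope = json.loads(frame)
    return envelope["topic"], JointState(**envelope["data"])


frame = marshal("/joint_states", JointState(["joint1", "joint2"], [0.0, 1.57]), stamp=12.5)
topic, restored = unmarshal(frame)
```

Because the envelope is plain JSON, the cloud side needs no ROS installation, which is one way a middleware layer can stay hardware-agnostic.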

Dockerized Private Cloud Layer. As illustrated in the grey box of Figure 1, this layer provides a set of Docker containers that offer services such as AI-based processing, real-time monitoring, and data storage. Docker Compose orchestrates multiple containers to run services such as database management and server operations. This setup keeps the digital twin available with minimal latency and improved security compared to architectures relying on public or remote clouds.

As shown in Figure 1, each subsystem (Digital Twin, Physical Twin, and Virtual Reality) comes with its own SDK. These SDKs streamline reproducing the system or creating other Digital Twins for customized use. Instead of building everything from scratch, users can rely on the SDKs to generate the essential scripts for all subsystems and then edit and customize these scripts to suit their specific requirements.

The hand gesture control interface was implemented using an Oculus Quest 2 and its enabling SDKs. The Oculus Quest 2 provides an affordable entry point into Virtual Reality and offers users an immersive VR experience. To let the user control both the AGV and the robotic arm in a fully immersive environment, we used the XR Controller package in Unity. This package integrates the Input System and translates tracked input from controllers into XR interaction states. Specifically, we used Unity’s OpenXR XRSDK framework, which allows for robust interaction with VR hardware.

3 VIRTUAL REALITY INTEGRATION WITH A DOCKERIZED PRIVATE CLOUD

The system provides a comprehensive framework for integrating Virtual Reality with a Dockerized private cloud infrastructure, benefiting from minimized communication latency among the physical asset, the digital asset, and the VR headset. Our work builds upon previous work by Ellethy et al. (2023), which highlighted the benefits of using a private cloud over a public one. In that comparative study of the latency and accuracy of a Digital Twin using different cloud systems, a local cloud was 5.5 times faster than a public cloud when transmitting an average data size of 128 bytes. In a local network environment, such as a Wireless Local Area Network (WLAN), data does not need to travel long distances or pass through multiple servers, as it does in public cloud infrastructures like AWS. This significantly reduces network latency between the physical and digital assets, resulting in faster response times and more accurate real-time control, which are critical for AGV operations and for enhancing the immersion of VR environments through a synchronized DT.

The integration of VR with a Dockerized private cloud plays a significant role in enhancing user interaction with the digital asset. VR provides immersive interaction by simulating the user’s presence in the digital environment, which makes it an ideal tool for interfacing with DTs. We chose Unity rather than Gazebo to host the digital asset for several reasons. Primarily, Gazebo plugins for deploying the system to VR headsets have not kept pace with the rapid development the industry has seen in recent years. Additionally, Unity provides greater flexibility for creating custom environments and integrating advanced graphics features. Most notably, Unity excels in supporting innovative interactive input interfaces, such as hand gestures. More generally, the ROS-Unity3D architecture for simulating mobile ground robots has proved to be a viable alternative to ROS-Gazebo and the best option for integrating VR into the system (Platt and Ricks, 2022). For these reasons, common practice among robotics developers and researchers is to divide the system into two subsystems: Ubuntu for interacting with the physical asset and Windows for VR development in Unity.

The latest update of the system involves creating a Unity scene to host the digital simulation, as follows.

Map: The environment was first mapped on an Ubuntu machine using the slam_gmapping node. The mesh files were then transferred to the Unity project on a Windows machine, where surfaces and colliders were added to the walls to enhance realism.

URDF Importer: The Unified Robotics Description Format (URDF) Importer is a Unity robotics package that imports robot assets defined in the URDF format into Unity scenes. The imported asset retains its geometry, visual meshes, and kinematic and dynamic attributes. The Importer leverages PhysX 4.0 articulation bodies to represent the robot described in the URDF file. Using the URDF Importer, we imported the TurtleBot3 OpenMANIPULATOR-X and, as advised by the package’s development team, added objects to replace some of the default actuation scripts. Customized scripts were implemented to better represent the AGV and its robotic arm within Unity.
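For readers unfamiliar with URDF, the sketch below parses a small hand-written URDF fragment with Python’s standard `xml.etree.ElementTree` and lists each joint’s type, parent and child links, and limits. The two-joint `toy_arm` is hypothetical and much simpler than the actual OpenMANIPULATOR-X description; it only shows the structure the importer consumes.

```python
import xml.etree.ElementTree as ET

# Hypothetical two-joint URDF fragment (not the OpenMANIPULATOR-X description).
URDF = """
<robot name="toy_arm">
  <link name="base_link"/>
  <link name="link1"/>
  <link name="link2"/>
  <joint name="joint1" type="revolute">
    <parent link="base_link"/>
    <child link="link1"/>
    <axis xyz="0 0 1"/>
    <limit lower="-1.57" upper="1.57" effort="1.0" velocity="4.8"/>
  </joint>
  <joint name="joint2" type="revolute">
    <parent link="link1"/>
    <child link="link2"/>
    <axis xyz="0 1 0"/>
    <limit lower="-1.57" upper="1.57" effort="1.0" velocity="4.8"/>
  </joint>
</robot>
"""


def list_joints(urdf_text):
    """Return (name, type, parent, child, lower, upper) for each top-level joint."""
    root = ET.fromstring(urdf_text.strip())
    joints = []
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        lower = float(limit.get("lower")) if limit is not None else None
        upper = float(limit.get("upper")) if limit is not None else None
        joints.append((
            joint.get("name"),
            joint.get("type"),
            joint.find("parent").get("link"),
            joint.find("child").get("link"),
            lower,
            upper,
        ))
    return joints


joints = list_joints(URDF)
```

Each parent/child pair defines one edge of the kinematic tree; an importer that builds articulation bodies walks this same tree from the root link.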

3.1 Robotic Arm Integration

The TurtleBot3 was augmented with a five-degree-of-freedom robotic arm. The arm is powered by the TurtleBot3 OpenCR board, while its servo motors are connected to a servo motor controller board for easier setup. The controller board is connected to the RPi and receives commands for controlling the arm over I2C, a simple two-wire serial bus commonly used for communication between chips in an embedded system.
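The text does not name the servo controller board. As an illustration only, assume a PCA9685-style 12-bit PWM driver running at 50 Hz and servos that map 0 to 180 degrees onto 500 to 2500 µs pulses (both assumptions; the actual board and servo datasheets govern the real values). Converting a commanded joint angle into the count written over I2C then reduces to a linear mapping; the I2C write itself is omitted.

```python
PWM_FREQ_HZ = 50                      # assumed servo refresh rate
PERIOD_US = 1_000_000 / PWM_FREQ_HZ   # 20,000 µs per PWM period
RESOLUTION = 4096                     # 12-bit counter, as on a PCA9685 (assumed board)
MIN_PULSE_US = 500                    # assumed pulse width at 0 degrees
MAX_PULSE_US = 2500                   # assumed pulse width at 180 degrees


def angle_to_ticks(angle_deg: float) -> int:
    """Convert a servo angle (0-180 degrees) to an off-count for a 12-bit PWM driver."""
    angle = min(max(angle_deg, 0.0), 180.0)  # clamp to the servo's 180-degree range
    pulse_us = MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180.0
    return round(pulse_us / PERIOD_US * RESOLUTION)


ticks = {a: angle_to_ticks(a) for a in (0, 90, 180)}
```

For example, 90 degrees gives a 1500 µs pulse, which is 1500 / 20000 × 4096 ≈ 307 counts.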

To represent the robotic arm digitally, we used the OpenMANIPULATOR-X model, mounted on the TurtleBot3 and imported into Unity. The arm follows the same three-layer architecture as the TurtleBot3, with two separate ROS Masters: one for the Physical Twin and one for the Digital Twin. The hardware layer of the Digital Twin collects the arm’s sensory data, specifically its joint states. This data is organized and sent to the middleware layer, which propagates it to the cloud layer via WebSockets. Once the data reaches the cloud, it is processed and sent back through the same layers in reverse, eventually reaching the hardware layer of the physical asset to actuate the robotic arm.
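The round trip described above can be sketched as a chain of plain functions, one per hop. This is a simplified, synchronous illustration: in the actual system each hop is a separate process (ROS nodes, middleware nodes, and Docker containers) connected by ROS topics and WebSockets. The function names, the `/arm_command` topic, and the clamping step standing in for cloud-side processing are all hypothetical.

```python
import json
import math
from typing import Dict, List

JOINT_LIMIT_RAD = math.pi / 2  # hypothetical symmetric limit matching a 180-degree servo range


def digital_hardware_layer(joint_positions: List[float]) -> Dict:
    # Collect the joint states from the Digital Twin's arm.
    return {"topic": "/joint_states", "position": joint_positions}


def middleware_up(msg: Dict) -> str:
    # Marshal the ROS-side message into a WebSocket-ready JSON string.
    return json.dumps(msg)


def cloud_layer(frame: str) -> str:
    # Hypothetical processing step: clamp each joint to its allowed range
    # and re-address the message as a command for the physical arm.
    msg = json.loads(frame)
    msg["position"] = [max(-JOINT_LIMIT_RAD, min(JOINT_LIMIT_RAD, p)) for p in msg["position"]]
    msg["topic"] = "/arm_command"  # hypothetical topic name
    return json.dumps(msg)


def middleware_down(frame: str) -> Dict:
    # Unmarshal the frame back into a ROS-side message for the physical asset.
    return json.loads(frame)


def physical_hardware_layer(msg: Dict) -> List[float]:
    # On the real robot this would drive the servos; here it returns the targets.
    return msg["position"]


targets = physical_hardware_layer(
    middleware_down(cloud_layer(middleware_up(digital_hardware_layer([0.0, 0.5, 2.0, -3.0, 1.0]))))
)
```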

The robotic arm was assembled piece by piece following the manufacturer’s instructions, and each linkage between components had to be made correctly for the arm to function properly. Given the study’s specific demands, minor modifications were made to the arm. Although the arm has six servo motors, only five were used in the experiment, matching the Digital Twin’s degrees of freedom. Only a few of the included accessories were suitable for the task: the gripper was replaced because of doubts about its precision in object manipulation tasks. To integrate the robotic arm into the experimental setup, we mounted it on an additional surface placed on top of the TurtleBot3 platform. This position was chosen to avoid interfering with the LIDAR sensor while keeping the TurtleBot3 balanced.

The robotic arm consists of five servos, each offering a range of 180 degrees. Each servo motor was placed to enable precise control over the arm’s movements, with the following functions:

• Servo Motor 1 facilitates the rotation of the arm from side to side, serving as the base of its movement.


• Servo Motor 2 controls the forward and backward motion of the arm, allowing it to extend and retract as needed.

• Servo Motor 3 is responsible for the vertical movement of the upper half of the arm, enabling it to adjust its height.

• Servo Motor 4 controls the opening and closing of the gripper mechanism, enabling the arm to grasp and release objects.

• Servo Motor 5 provides control over the gripper itself, allowing precise manipulation during object handling tasks.

Overall, the robotic arm was integrated into the experimental setup with careful consideration of its design and functionality. By selecting appropriate components and making the modifications described above, we tailored the arm to the study’s needs while ensuring reliable performance and accurate data collection.

3.2 Unity-ROS Messages and Communications

Integrating VR with ROS required incorporating Unity into the project because of its robust support for 3D simulation and VR technologies (Allspaw et al., 2023). Unity-ROS communication was established using a ROS-TCP connector, which involved launching a TCP endpoint on the ROS Master of the Digital Twin. Within Unity, publishers and subscribers for various topics were implemented to communicate with the ROS network. Through the server endpoint, serialized ROS messages are exchanged between Unity and the ROS Master of the Digital Twin, including distance measurements from the distance-sensor topic, camera feed frames, Twist messages, and joint-state messages.
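One detail that any Unity-ROS bridge must handle is the change of coordinate convention. ROS body frames are right-handed with x forward, y left, and z up (FLU, per ROS REP 103), whereas Unity is left-handed with x right, y up, and z forward. To our understanding, the ROS-TCP Connector ships ROSGeometry utilities for this conversion; the standalone sketch below only reproduces the axis mapping for position vectors (and linear velocities, which transform the same way). Rotations and angular velocities need extra sign handling that is not shown.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def ros_to_unity(v: Vec3) -> Vec3:
    """ROS FLU (x forward, y left, z up) -> Unity (x right, y up, z forward)."""
    x, y, z = v
    return (-y, z, x)


def unity_to_ros(v: Vec3) -> Vec3:
    """Unity (x right, y up, z forward) -> ROS FLU (x forward, y left, z up)."""
    x, y, z = v
    return (z, -x, y)


forward_in_unity = ros_to_unity((1.0, 0.0, 0.0))  # ROS "forward" becomes Unity +z
```

Skipping this conversion typically shows up as a twin that drives sideways or mirrors the physical robot, which is why it is worth verifying with a known motion such as a pure forward Twist.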

As illustrated in Figure 2, the data flow between Unity and ROS comprises several critical stages. Unity serves as the simulation environment, generating messages that are published to topics created by the digital ROS Master. These messages are then transmitted to the physical ROS Master through the Dockerized private cloud using WebSockets. This setup ensures reliable, low-latency communication between the digital simulation and the physical robot. The figure shows how serialized ROS messages propagate from Unity to the physical asset, with each message type (such as sensor readings or actuation commands) handled through designated topics. This architecture is essential for keeping the virtual and physical systems synchronized and for ensuring accurate, real-time operation of the robotic assets.

Figure 2: Data flow between ROS and Unity.

4 METHODS TO IMPROVE HUMAN-ROBOT INTERACTION USING VR

4.1 Heat Map Implementation

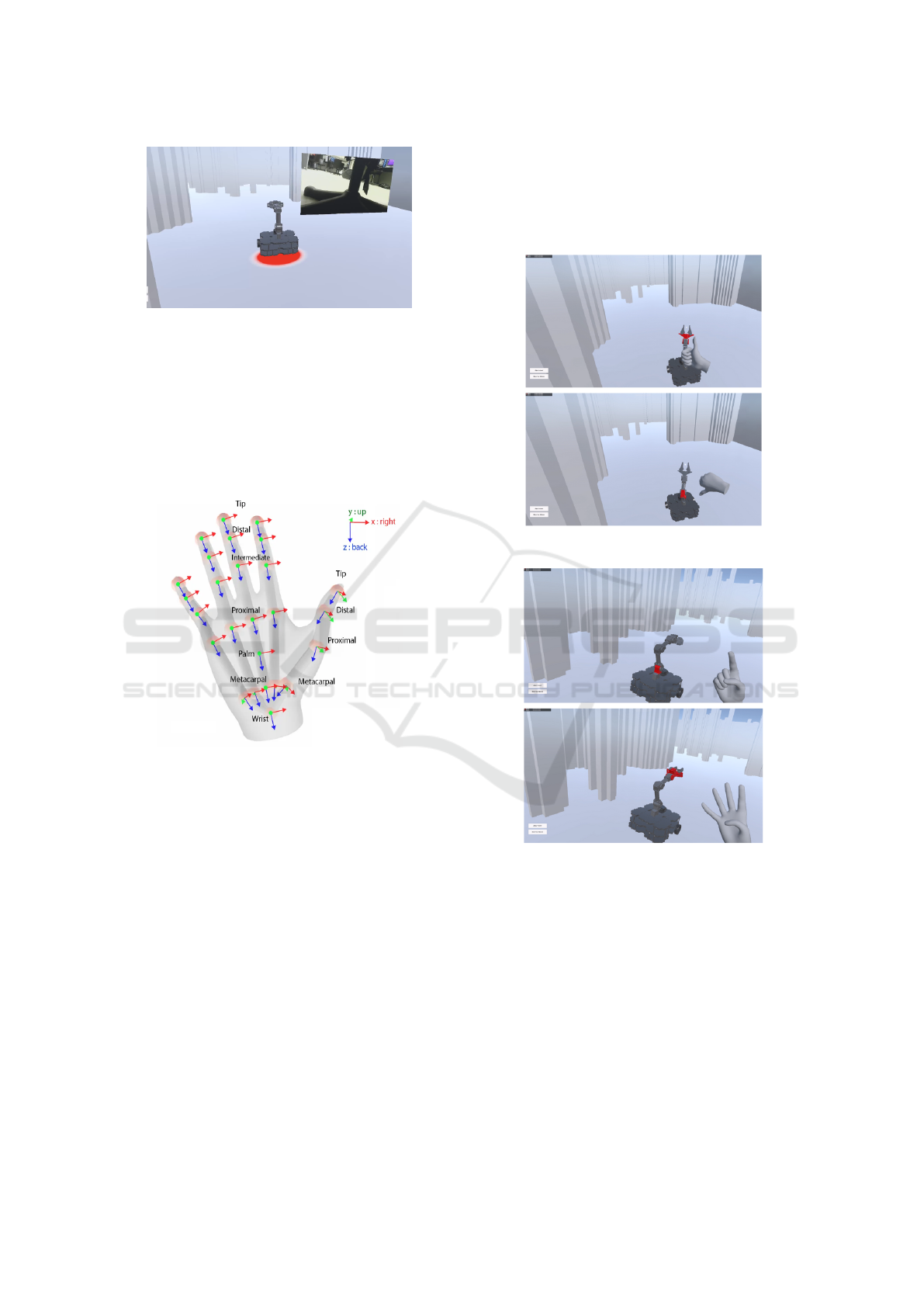

To enhance the user’s situational awareness, we augmented the AGV with several ultrasonic sensors to implement a Heat Map. The map is rendered on a quad using a shader and is applied only to the area surrounding the TurtleBot3, improving its ability to detect and react to nearby objects. The ultrasonic sensors are positioned around the TurtleBot3 to ensure full coverage, allowing obstacles to be detected from all directions. The shader colors the Heat Map red, with an intensity that depends solely on the distance from the TurtleBot3 to the surrounding object, as measured by the ultrasonic sensor: the closer the object, the more intense the red. Distance thresholds determine the changes in color intensity. For example, a distance between 1 and 20 cm indicates that the object is very close, making the red very intense; a distance between 20 and 30 cm makes it less intense, and so on. If the distance is above 50 cm, no Heat Map is shown, indicating that the TurtleBot3 is navigating in a safe environment.
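The distance-to-intensity mapping can be sketched as a lookup over distance bands. Only the 1 to 20 cm, 20 to 30 cm, and above-50 cm behaviors come from the text; the 30 to 40 cm and 40 to 50 cm bands and every intensity value are hypothetical placeholders for the unspecified “and so on.” In the actual system the equivalent logic lives in the shader or a Unity script.

```python
from typing import Optional

# (upper bound in cm, red intensity in [0, 1]). The bands beyond 30 cm and all
# intensity values are hypothetical placeholders.
BANDS = [
    (20.0, 1.0),   # 1-20 cm: very close, most intense red (from the text)
    (30.0, 0.75),  # 20-30 cm: less intense (from the text)
    (40.0, 0.5),   # hypothetical intermediate band
    (50.0, 0.25),  # hypothetical intermediate band
]


def heat_intensity(distance_cm: Optional[float]) -> float:
    """Map an ultrasonic distance reading to a red intensity (0 means no heat map)."""
    if distance_cm is None:
        return 0.0  # no echo received: treated as no nearby obstacle (assumption)
    for upper_cm, intensity in BANDS:
        if distance_cm <= upper_cm:
            return intensity
    return 0.0  # beyond 50 cm: safe, no heat map shown
```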

In addition to driving the visual representation, the ultrasonic sensors trigger real-time responses from the AGV based on its proximity to obstacles. If an object is detected within the 1-20 cm range, the AGV automatically adjusts its movement to avoid a collision by slowing down or stopping. Together with the Heat Map, which shows the presence and proximity of obstacles, this reactive behavior ensures that the AGV can operate safely in a dynamic environment by actively avoiding obstacles. Figure 3 shows the Heat Map when an obstacle is within 1-20 cm of the TurtleBot3.
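The reactive slow-down or stop behavior can be expressed as a speed gate applied to each commanded linear velocity before it reaches the motors. The text only states that the AGV slows down or stops for obstacles within 20 cm; the 10 cm stop threshold, the linear ramp, and the use of the single nearest reading (ignoring which side the obstacle is on) are hypothetical simplifications.

```python
from typing import Iterable

STOP_CM = 10.0  # hypothetical: stop entirely below this distance
SLOW_CM = 20.0  # from the text: react to obstacles within 20 cm


def gate_speed(commanded_mps: float, readings_cm: Iterable[float]) -> float:
    """Scale a commanded linear speed by the closest ultrasonic reading."""
    nearest = min(readings_cm, default=float("inf"))
    if nearest < STOP_CM:
        return 0.0
    if nearest < SLOW_CM:
        # Ramp linearly from 0 at STOP_CM up to full speed at SLOW_CM.
        return commanded_mps * (nearest - STOP_CM) / (SLOW_CM - STOP_CM)
    return commanded_mps
```

A production version would usually gate only motion toward the obstacle, so the operator can still back away from it.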


Figure 3: The heat map representation.

4.2 Arm Actuation Methods

To address the limitations of traditional robotic arm actuation methods, we introduce three actuation methods based on the user’s hand gestures and movements. These methods aim to give the user controlling the AGV and the robotic arm a more immersive experience.

Figure 4: XR-Hand Joints.

Hand tracking and gesture recognition on the Oculus Quest 2 are implemented using the XR Hands SDK in Unity, which tracks the user’s hand movements. The headset’s cameras stream live video to Unity, where the XR Hands package provides real-time tracking of the (x, y, z) coordinates of several hand joints, including the wrist, the palm, and individual finger joints, as shown in Figure 4. The XR Hands package also provides gesture recognition based on the hand’s shape and orientation. We used this hand data to design and implement three VR-based methods for actuating the robotic arm. Once the user’s hand gestures and movements are recognized, they are mapped to actuation commands. These commands are propagated from Unity to the digital ROS Master through a TCP endpoint, as shown in Figure 2. Once the messages are received, the Dockerized private cloud manages the communication between the digital and physical ROS Masters: it forwards the ROS messages to the physical ROS Master, which actuates the physical assets accordingly.

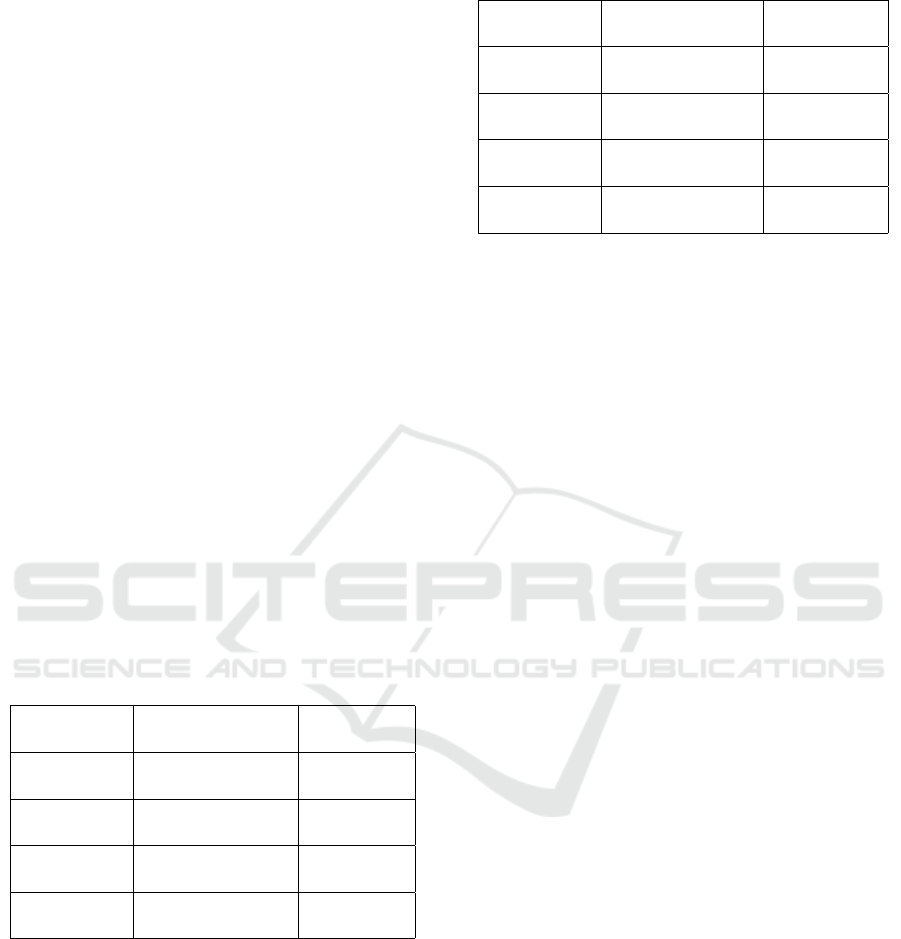

Figure 5: Method 1: Sequential Selection.

Figure 6: Method 2: Numerical Selection.

The three actuation methods are:

Method 1, Sequential Selection: This method uses recognition of the user’s hand gestures both to select the target joint among the five joints of the robotic arm (numbered 1 to 5) and to actuate the selected joint, as shown in Figure 5. The user switches between the five joints sequentially, using thumbs-up and thumbs-down gestures to move to the next upper or lower joint, respectively. The selected joint is highlighted in red in Figure 5; opening or closing the hand increases or decreases its angle, respectively. This method thus uses two separate, independent procedures for selecting and actuating the arm joints.
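Method 1’s two independent procedures can be sketched as a small state machine driven by recognized gesture labels. The gesture label strings, the 90-degree starting pose, the 5-degree step per gesture, and the choice to clamp (rather than wrap) selection at joints 1 and 5 are all assumptions; the text does not specify them.

```python
from dataclasses import dataclass, field
from typing import List

NUM_JOINTS = 5
STEP_DEG = 5.0  # hypothetical angle increment per recognized open/close gesture


@dataclass
class SequentialSelector:
    selected: int = 1  # joints are numbered 1 to 5
    angles: List[float] = field(default_factory=lambda: [90.0] * NUM_JOINTS)  # hypothetical start pose

    def on_gesture(self, gesture: str) -> None:
        if gesture == "thumbs_up":      # select the next upper joint
            self.selected = min(self.selected + 1, NUM_JOINTS)
        elif gesture == "thumbs_down":  # select the next lower joint
            self.selected = max(self.selected - 1, 1)
        elif gesture in ("open", "close"):
            # Opening increases and closing decreases the selected joint's angle,
            # clamped to the servo's 0-180 degree range.
            delta = STEP_DEG if gesture == "open" else -STEP_DEG
            i = self.selected - 1
            self.angles[i] = min(max(self.angles[i] + delta, 0.0), 180.0)


sel = SequentialSelector()
for g in ["thumbs_up", "thumbs_up", "open", "open", "thumbs_down", "close"]:
    sel.on_gesture(g)
```

After this sequence, joint 3 has been raised to 100 degrees, joint 2 is selected, and joint 2 has been lowered to 85 degrees.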

Method 2, Numerical Selection: Actuation of the selected joint works as in Method 1, but selection is numerical. The user shows the index of the target joint using their hand, and the corresponding joint is selected and highlighted in red, as shown in Figure 6. This method also separates the selection and actuation of the arm joints.
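For Method 2, one plausible way to read a joint index from tracked hand joints is to count extended fingers. The sketch below uses a crude heuristic: a finger counts as extended when its tip is more than 1.2 times as far from the wrist as its proximal joint. Both the heuristic and the ratio are assumptions, and the code works on plain (x, y, z) tuples rather than the XR Hands API, which in Unity is used from C#.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
FINGERS = ("thumb", "index", "middle", "ring", "little")
EXTENDED_RATIO = 1.2  # hypothetical threshold


def count_extended(wrist: Vec3, proximal: Dict[str, Vec3], tips: Dict[str, Vec3]) -> int:
    """Count fingers whose tip lies well beyond their proximal joint, as seen from the wrist."""
    count = 0
    for finger in FINGERS:
        if math.dist(wrist, tips[finger]) > EXTENDED_RATIO * math.dist(wrist, proximal[finger]):
            count += 1
    return count


def joint_from_hand(wrist: Vec3, proximal: Dict[str, Vec3], tips: Dict[str, Vec3]) -> Optional[int]:
    """Return the selected joint index (1-5), or None for a closed fist."""
    n = count_extended(wrist, proximal, tips)
    return n if 1 <= n <= 5 else None
```

In practice the selection would likely need to be held stable for a few frames before it is accepted, to avoid flicker while the hand changes shape.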

Figure 7: Method 3: Mixed Selection and Actuation.

Method 3, Intuitive Control: Unlike the first two methods, this method combines the selection and actuation of the joints. The user’s hand movements are tracked, and the robotic arm replicates them, as shown in Figure 7 (e.g., if the user moves their hand upward, the robotic arm moves upward). The hand’s position along the x-axis controls the first joint (Servo Motor 1), rotating the arm, while movement along the z-axis controls the second joint (Servo Motor 2) for forward and backward motion. Similarly, movement along the y-axis adjusts the third joint (Servo Motor 3) for upward and downward motion. The orientation of the user’s hand dictates the position of the fourth joint (Servo Motor 4); for example, when the hand points downward, the gripper points downward, and vice versa. Finally, hand gestures such as opening or closing the palm control the gripper (Servo Motor 5): the gripper closes when the user’s hand is closed and opens when the hand is open.
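Method 3’s position-to-joint mapping amounts to a clamped linear map from each hand-tracking axis to a servo angle. In the sketch below, the workspace bounds (in metres from a calibration origin), the hand-pitch range, and the open/closed gripper angles are hypothetical; the text specifies only which hand axis drives which servo. The direction of each map would also depend on how the servos are mounted.

```python
from typing import List, Tuple


def lin_map(value: float, lo: float, hi: float, out_lo: float = 0.0, out_hi: float = 180.0) -> float:
    """Linearly map value from [lo, hi] onto [out_lo, out_hi], clamping at the ends."""
    t = (value - lo) / (hi - lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


# Hypothetical hand workspace per axis (metres from a calibration origin).
X_RANGE = (-0.3, 0.3)        # left/right    -> Servo 1 (base rotation)
Z_RANGE = (0.0, 0.4)         # forward/back  -> Servo 2 (extend/retract)
Y_RANGE = (-0.2, 0.2)        # up/down       -> Servo 3 (height)
PITCH_RANGE = (-90.0, 90.0)  # hand pitch in degrees -> Servo 4 (gripper orientation)


def hand_to_servos(pos: Tuple[float, float, float], pitch_deg: float, hand_closed: bool) -> List[float]:
    x, y, z = pos
    return [
        lin_map(x, *X_RANGE),
        lin_map(z, *Z_RANGE),
        lin_map(y, *Y_RANGE),
        lin_map(pitch_deg, *PITCH_RANGE),
        0.0 if hand_closed else 180.0,  # hypothetical closed/open angles for Servo 5
    ]


angles = hand_to_servos((0.0, 0.2, 0.1), pitch_deg=-90.0, hand_closed=True)
```

Clamping keeps a hand that drifts outside the calibrated workspace from commanding the servos past their limits.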

4.3 User Study of the Arm Actuation Methods

To ensure an immersive user experience for the human controller, we conducted a user study with two main objectives. The first was to test the integration of VR features into the system and to evaluate the performance of the VR-based features and actuation methods against traditional methods (e.g., keyboard controls and dashboard buttons). The second was to compare the three VR-based actuation methods for the robotic arm, identify the advantages and shortcomings of each, and determine the most effective and immersive method based on the collected data.

(a) Task 1: move the arm close to the object.

(b) Task 2: pick up the object (e.g., pen).

(c) Task 3: move the pen to the target position.

(d) Task 4: drop the object (e.g., the pen).

Figure 8: The sequence of tasks in the user study.

The user study was conducted as follows. Fifteen volunteers were randomly selected from the university. Most volunteers were asked to test two of the actuation methods, as we assumed that asking every volunteer to test all of them could be overwhelming. To ensure fairness and reduce bias, the methods each user evaluated, as well as their evaluation order, were determined randomly. Before each volunteer began evaluating an actuation method, they were given a detailed explanation of the control instructions for that method. After completing the evaluation of the first selected method, they were asked to take a 15-minute break to reset and minimize confusion with the next method. After the break, the control instructions for the next method were explained before the volunteer began the subsequent evaluation. For each evaluation, volunteers wore the Quest 2 headset and performed a sequence of four tasks:

1. Move the arm close to the target object (e.g., a pen), as shown in Figure 8(a).


2. Use the gripper to pick up the target object, as shown in Figure 8(b).

3. Move the arm to the target position, as shown in Figure 8(c).

4. Open the gripper and drop the object at the target position, as shown in Figure 8(d).

To achieve the objectives of this study, both quantitative and qualitative metrics were specified. For the quantitative metrics shown in Table 1, two key measures were chosen: (1) completion time, defined as the average time a volunteer required to complete the four tasks listed above; and (2) offset error, defined as the distance between the target position of the object (e.g., a pen) and its final position after the volunteer's attempt to complete the task sequence. These quantitative metrics provide objective, measurable data for assessing the developed actuation methods: completion time reflects how efficient each method is, while offset error measures how accurately volunteers completed the tasks. In contrast, the qualitative metrics shown in Table 2 capture subjective user experience, focusing on the intuitiveness of each actuation method and the level of user satisfaction. These metrics are crucial for evaluating the actuation methods because they reveal how users perceive the system and help assess the user-friendliness of the developed features, which cannot easily be inferred from quantitative metrics alone.
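The two quantitative metrics can be expressed compactly in code. The sketch below assumes that object positions are recorded as planar (x, y) coordinates in centimeters and that offset error is the straight-line (Euclidean) distance; the study specifies only "the distance", so both are assumptions, and the helper names are hypothetical.

```python
import math
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) on the work surface, in cm (assumed)


def offset_error(target: Point, final: Point) -> float:
    """Euclidean distance between the target and final object positions."""
    return math.dist(target, final)


def mean_completion_time(durations_s: List[float]) -> float:
    """Average time, in seconds, to complete the four-task sequence."""
    return mean(durations_s)


def to_mm_ss(seconds: float) -> str:
    """Format a duration in seconds as mm:ss, the format used in Table 1."""
    total = round(seconds)
    return f"{total // 60:02d}:{total % 60:02d}"
```

For example, with illustrative (not study) inputs, offset_error((0, 0), (3, 4)) is 5.0 cm, and two hypothetical trials of 80 s and 96 s average to 88 s, which to_mm_ss renders as 01:28.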

Table 1: Quantitative results of the user study.

Method                   Mean completion time (mm:ss)   Mean offset error (cm)
Method 0 (Dashboard)     04:13                          7.3
Method 1 (Sequential)    03:36                          6.67
Method 2 (Numerical)     03:34                          7.72
Method 3 (Intuitive)     01:28                          2.2

As Table 1 shows, all three novel VR-based actuation methods yielded shorter completion times than the traditional dashboard button control method. The average completion time for the traditional method was over 4 minutes (4:13), whereas the VR-based methods were notably faster, particularly method 3 (1:28). These results indicate that VR integration substantially improved efficiency, with volunteers completing the tasks more quickly using the VR-based actuation methods. Among the VR actuation methods, method 3 was the most efficient (1:28), roughly two minutes faster than methods 1 and 2 (3:36 and 3:34, respectively).

Table 2: Qualitative results of the user study.

Method                   User satisfaction (out of 5)   Intuitiveness (out of 5)
Method 0 (Dashboard)     3.1                            2.4
Method 1 (Sequential)    3.83                           3.33
Method 2 (Numerical)     3.58                           3.56
Method 3 (Intuitive)     4.55                           4.90
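To make the completion-time comparison concrete, the following sketch converts the mean times in Table 1 to seconds and compares each method with the dashboard baseline. It works on the published means only, so it describes the size of the gap rather than testing whether it is statistically significant; the function names are illustrative.

```python
from typing import Dict, Tuple

# Mean completion times reported in Table 1 (mm:ss).
TABLE1_TIMES = {
    "Method 0 (Dashboard)": "04:13",
    "Method 1 (Sequential)": "03:36",
    "Method 2 (Numerical)": "03:34",
    "Method 3 (Intuitive)": "01:28",
}


def mm_ss_to_seconds(text: str) -> int:
    """Convert an 'mm:ss' string to a number of seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + int(seconds)


def compare_to_baseline(times: Dict[str, str],
                        baseline: str = "Method 0 (Dashboard)"
                        ) -> Dict[str, Tuple[int, float]]:
    """Map each method to (seconds saved, speedup factor) vs. the baseline."""
    base = mm_ss_to_seconds(times[baseline])
    result = {}
    for method, text in times.items():
        t = mm_ss_to_seconds(text)
        result[method] = (base - t, base / t)
    return result


for method, (saved, speedup) in compare_to_baseline(TABLE1_TIMES).items():
    print(f"{method}: {saved} s saved, {speedup:.2f}x the dashboard's speed")
```

On these values, method 3 saves 165 s over the dashboard (about 2.9 times faster), while methods 1 and 2 save 37 s and 39 s, respectively.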

As for the average offset error shown in Table 1, the traditional dashboard actuation method (7.3 cm) performed comparably to methods 1 and 2 (6.67 cm and 7.72 cm, respectively), with all three exhibiting an average offset error of around 7 centimeters. Only the third VR-based method differed markedly: method 3 achieved an average offset error of only 2.2 centimeters, making it both the most efficient and the most accurate of the proposed methods.
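The offset-error comparison can be checked the same way. This sketch computes each method's percentage reduction in mean offset error relative to the dashboard, using the values in Table 1; a negative result means a larger error than the dashboard. The function name is illustrative.

```python
from typing import Dict

# Mean offset errors reported in Table 1, in cm.
TABLE1_OFFSET_CM = {
    "Method 0 (Dashboard)": 7.3,
    "Method 1 (Sequential)": 6.67,
    "Method 2 (Numerical)": 7.72,
    "Method 3 (Intuitive)": 2.2,
}


def relative_reduction(values: Dict[str, float],
                       baseline: str = "Method 0 (Dashboard)"
                       ) -> Dict[str, float]:
    """Percent reduction in error relative to the baseline method.

    Positive = smaller error than the baseline; negative = larger.
    """
    base = values[baseline]
    return {m: 100.0 * (base - v) / base for m, v in values.items()}


for method, pct in relative_reduction(TABLE1_OFFSET_CM).items():
    print(f"{method}: {pct:+.1f}% vs. dashboard")
```

This yields roughly 8.6% for method 1, -5.8% for method 2 (slightly less accurate than the dashboard), and 69.9% for method 3.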

The qualitative results presented in Table 2 show that the traditional actuation method (method 0) received the lowest user ratings for both intuitiveness (2.4/5) and user satisfaction (3.1/5) compared to the VR-based actuation methods. In contrast, the third VR-based method received the highest ratings, scoring 4.9/5 for intuitiveness and 4.55/5 for user satisfaction, clearly outperforming methods 1 and 2. The high intuitiveness ratings, particularly for method 3, likely explain why volunteers completed the task sequence much faster (Table 1) than with the traditional dashboard method.
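The suggested link between intuitiveness and speed can be examined, at least descriptively, by correlating the per-method intuitiveness ratings in Table 2 with the mean completion times in Table 1. With only four method-level means this is a rough consistency check, not evidence of causation or statistical significance; the pearson_r helper is written out by hand to keep the sketch dependency-free.

```python
import math
from typing import Sequence

# Per-method values for methods 0-3, in order.
intuitiveness = [2.4, 3.33, 3.56, 4.90]                      # Table 2, out of 5
completion_s = [4 * 60 + 13, 3 * 60 + 36, 3 * 60 + 34, 88]  # Table 1, seconds


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


print(f"r = {pearson_r(intuitiveness, completion_s):.2f}")
```

For these values r is about -0.97: methods rated as more intuitive were completed faster, which is consistent with the interpretation above but, given four data points, does not establish it.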

5 CONCLUSION AND FUTURE WORK

Digital Twins are powerful tools that can be used throughout the entire manufacturing life cycle, from design and planning to the maintenance of existing facilities. They are also beneficial for telepresence, remote control, and situations where human presence is risky, such as rescue missions. This paper presents a novel and comprehensive framework for integrating Virtual Reality with a Digital Twin architecture, using a Dockerized private cloud to minimize communication latency. Our project offers practical insights into applying Digital Twins in robotics.

GRAPP 2025 - 20th International Conference on Computer Graphics Theory and Applications

Through this research, we have demonstrated the feasibility and effectiveness of integrating Digital Twins with robotic systems, particularly for robotic arm control and virtual reality interfaces. The user study, in particular, shows the advantages of integrating VR interfaces into robot and arm control: the VR-based methods generally outperformed the traditional dashboard control in almost all of the quantitative and qualitative metrics described above.

Moving forward, future work will focus on refining and expanding the capabilities of Digital Twins in robotics for critical missions. This includes exploring advanced AI algorithms for obstacle avoidance and autonomous path suggestion, as well as developing robust communication systems for remote operation. Efforts will also be made to secure data within the Dockerized private cloud to ensure the safety and integrity of sensitive information. We further plan to optimize the system for portable, low-power hardware to make it more adaptable to real-world scenarios, and to extend the range of hand gestures for actuation while allowing user personalization, improving the system's versatility and user experience. Finally, our approach will be tested and validated in simulated and controlled rescue scenarios, with the ultimate goal of deploying these technologies in emergency response situations to save lives and mitigate the impact of disasters.

REFERENCES

Abdulla, R., Gadalla, A., and Balakrishnan, A. (2020). Design and implementation of a wireless gesture controlled robotic arm with vision.

Alexandru, M., Dragos, C., and Zamfirescu, B.-C. (2022). Digital twin for automated guided vehicles fleet management. Procedia Computer Science.

Allspaw, J., LeMasurier, G., and Yanco, H. (2023). Comparing performance between different implementations of ros for... OpenReview.

Amsters, R. and Slaets, P. (2020). Turtlebot 3 as a robotics education platform. In Chapter in Robotics Education.

Attaran, M. and Celik, B. G. (2023). Digital twin: Benefits, use cases, challenges, & opportunities. Decision Analytics Journal.

Crespi, N., Drobot, A., and Minerva, R. (2023). The digital twin: What & why? In Springer eBooks.

Ellethy, M. et al. (2023). A digital twin architecture for automated guided vehicles using a dockerized private cloud.

Estefo, P., Simmonds, J., Robbes, R., and Fabry, J. (2019). The robot operating system: Package reuse and community dynamics. Journal of Systems and Software, 151:226–242.

Gallala, A., Kumar, A. A., Hichri, B., and Plapper, P. (2022). Digital twin for human–robot interactions by means of industry 4.0 enabling technologies. Sensors, 22(13):4950.

Gigante, M. (1993). Virtual reality: Definitions, history and applications.

Mazumder, A. et al. (2023). Towards next-generation digital twin in robotics: Trends, scopes, challenges, & future. Heliyon, 9(2).

Pirker, J., Loria, E., Safikhani, S., Künz, A., and Rosmann, S. (2022). Immersive virtual reality for virtual and digital twins: A literature review to identify state of the art and perspectives. In 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), pages 114–115, Christchurch, New Zealand.

Platt, J. and Ricks, K. (2022). Comparative analysis of ros-unity3d and ros-gazebo for mobile ground robot simulation. Journal of Intelligent & Robotic Systems.

Riley, J. M. and Endsley, M. R. (2004). The hunt for situation awareness: Human-robot interaction in search and rescue. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 48(3):693–697.

Saddik, A. E. (2018). Digital twins: The convergence of multimedia technologies. IEEE Journals & Magazine – IEEE Xplore.
