On the Quest for an NLP-Driven Framework for Value-Based Decision-Making in Automatic Agent Architecture

Alicia Pina-Zapata^a and Sara García-Rodríguez^b

CETINIA, Rey Juan Carlos University, Madrid, Spain

Keywords:

Automatic Agents, Value-Aware Engineering, Natural Language Processing.

Abstract:

As automatic agents begin to operate in high-stakes areas like finance and healthcare, aligning AI goals with human values, the so-called “alignment problem”, becomes increasingly critical. To tackle this challenge, this paper proposes the architecture of a Value-Based autonomous Agent capable of interpreting its environment through the lens of human values and guiding its decision-making processes in accordance with its own values. The agent uses a natural language processing (NLP) technique to detect and assess the values associated with various actions, selecting those most aligned with its moral guidelines. Integrating NLP into the agent’s architecture is crucial for enhancing its ability to make autonomous value-aligned decisions, offering a framework for incorporating ethical considerations into AI development.

1 INTRODUCTION

Artificial Intelligence (AI) systems are already well integrated into our society. The scope of this technology ranges from automated agents that manage home energy use and recommendation systems that help you make the perfect choice for your next movie night to autonomous vehicles, financial algorithms and medical diagnosis assistance.

This highly innovative field has grown rapidly in recent years, bringing numerous advances and benefits that amount to a major technological breakthrough. However, as autonomous agents begin to make decisions in high-risk domains such as finance or healthcare, it becomes crucial to exercise caution.

One of the primary risks of AI, according to Stuart Russell, is that autonomous agents can inadvertently cause harm by pursuing goals that conflict with human values. This issue is often referred to as the “alignment problem” (Russell, 2022). For instance, an intelligent agent tasked with reducing pollution might shut down entire industries without considering the social and economic consequences. Russell’s view focuses on ensuring that AI systems are beneficial, controllable and aligned with human well-being. This perspective is closely related to the concept of value-aware engineering, where the goal is to incorporate ethical and social considerations into the design and deployment of technology.

a https://orcid.org/0009-0005-0412-4128

b https://orcid.org/0009-0001-4880-605X

Within this framework arises the challenge of developing systems or intelligent agents capable of interpreting the environment in terms of human values, referred to as value-aware agents (Osman and d’Inverno, 2023). Once an agent can reason about values, it is crucial that it also acts according to its own moral or value guidelines, ensuring that it makes value-aligned decisions.

This paper presents the architecture of an autonomous agent designed to guide its behavior based on human values. The agent can infer which values are promoted or demoted by a set of possible actions and select the one most aligned with its own values. To detect the values promoted or demoted by a particular option, an NLP technique is employed to extract human values from the text description of the option. This value-detection model consists of a pre-trained text analyser and a neural network that determines which values, ranked by their importance, are implicit in the descriptions of the actions. Additionally, an aggregation function is used to integrate the agent’s values into the decision-making process, determining the degree of alignment between the agent and each option.

The article’s content is structured as follows: Section 2 presents related work concerning value concepts and their computational extensions, along with a brief review of existing value-detection techniques. Section 3 explains the proposed Value-Based Agent, with a particular focus on the integration of the NLP model into the agent’s architecture. Section 4 introduces a real-world domain in which simulations are conducted to observe the behavior of different agents. Finally, Section 5 presents conclusions and future work.

Pina-Zapata, A. and García-Rodríguez, S.

On the Quest for an NLP-Driven Framework for Value-Based Decision-Making in Automatic Agent Architecture.

DOI: 10.5220/0013183300003890

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 3, pages 797-804

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

2 STATE OF THE ART

A wide range of research across psychology, philosophy and the social sciences agrees that values guide human behaviour, playing a crucial role in human decision-making. Related work on integrating human values into the decision-making schemes of automatic agents includes value-based formal reasoning (VFR) frameworks (Wyner and Zurek, 2024), value-based argumentation frameworks (VAFR) (van der Weide et al., 2010) and the use of large language models (LLMs) to generate responses that align with human values (Abbo et al., 2024).

In state-of-the-art proposals, values are engineered into the decision-making architecture of autonomous agents as automatic behaviour, as learned behaviour or through value-based reasoning (Noriega and Plaza, 2024). When implementing a value-based computational framework, it is essential to formally establish an explicit representation of human values and their relations. This involves defining sets of values and creating taxonomies. Among the theoretical frameworks that structure value concepts, Moral Foundations Theory (MFT) (Haidt, 2013) proposes six fundamental moral values as universal across cultures: care, fairness, loyalty, authority, sanctity and liberty. Another well-established framework is the theory of Basic Human Values (BHV) (Schwartz, 1992), also known as Schwartz’s Value Theory. This widely recognized theory, which explores values and the relationships between them, has been integrated into agents’ architectures in various ways (Heidari, 2022; Karanik et al., 2024). In this article, Schwartz’s Value Theory serves as the foundation for the proposed value-based reasoning architecture, which facilitates the implementation of a value-driven agent.

According to Schwartz, values are beliefs that relate to desirable end states or modes of conduct, transcend specific situations, and guide the selection or evaluation of behaviour, people and events. In BHV, Schwartz proposes ten fundamental values, distinguished by the motivational goal they express: self-direction, stimulation, hedonism, achievement, power, security, conformity, tradition, benevolence and universalism. In addition to identifying ten basic values, the theory explicates the structure of dynamic relations among them. One basis of this value structure is the idea that pursuing the promotion of a specific value will typically be congruent with fostering some values but will conflict with others.

Following this idea, Schwartz defines two bipolar dimensions along which the 10 basic values are classified. The first reflects whether values emphasize personal interests or the well-being of others: social focus vs. personal focus. The second captures the conflict between values that emphasize personal growth and exploration and those that focus on maintaining stability and preventing potential risks: anxiety-free vs. anxiety-based. This classification leads to four main groups of values: openness to change, conservation, self-enhancement and self-transcendence.

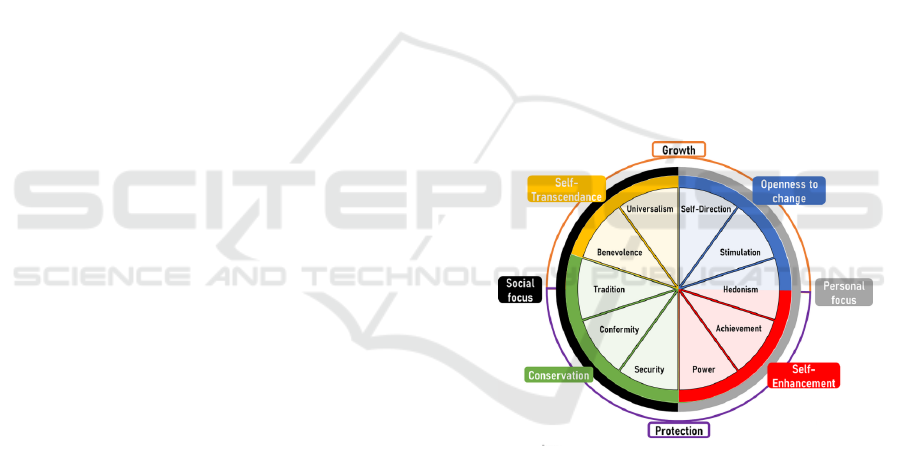

The circular arrangement of the 10 basic values according to these dimensions leads to a motivational continuum, as shown in Figure 1. Values located closer together on the circle are motivationally related, while those farther apart tend to be motivationally opposed.

Figure 1: Basic Continuum (Schwartz, 1992).

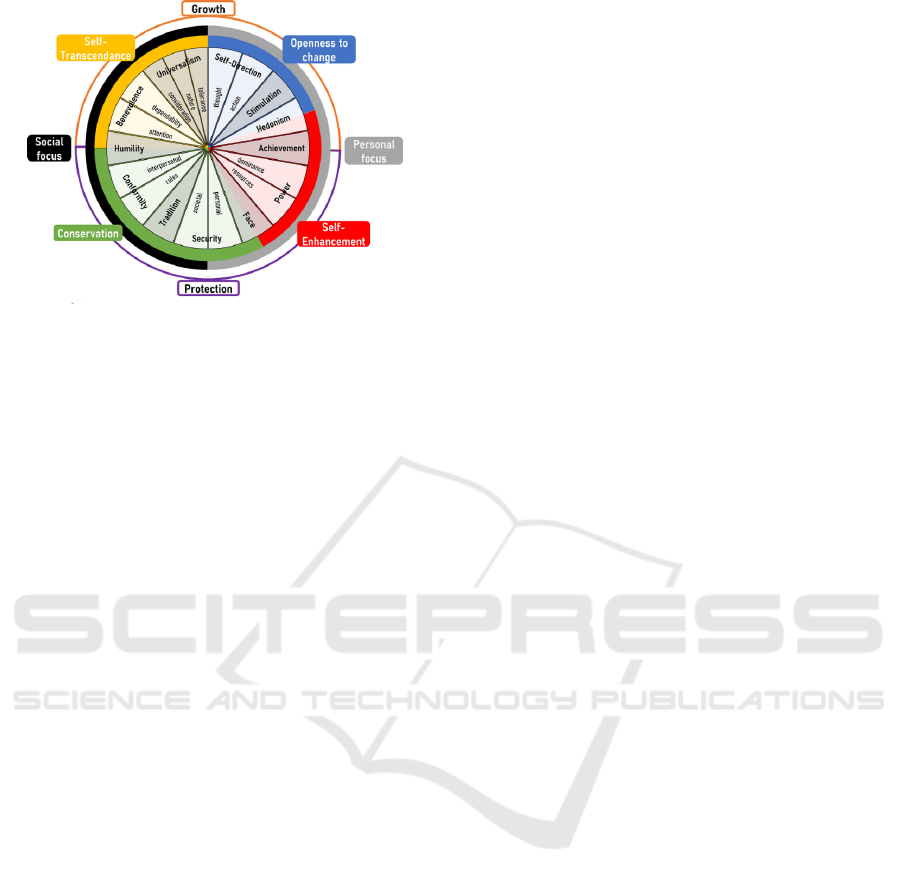

In an extension of his theory (Schwartz et al., 2012), Schwartz refines the original 10 values into 19 to create a more comprehensive framework for understanding human motivations. This expansion allows for greater specificity in capturing diverse human experiences and highlights the complexity of value interactions across different contexts. The resulting extended continuum can be seen in Figure 2.

Figure 2: Extended Continuum (Schwartz et al., 2012).

When making a value-aligned decision, individuals evaluate and select the behavior that maximizes harmony with their values. The first step is to identify the degree to which each available action promotes or demotes each of the values (e.g., the 19 values of Schwartz’s refined theory). The second step is to determine the degree of alignment of the individual with every possible choice. It is at this point that conflicts may arise, since not all values can be promoted at once. For example, one action might promote self-direction, while another might prioritize security. In such cases, the individual must decide which of the two values to promote.

According to van der Weide et al. (2010), each person can determine the importance they give to each of their values, creating a personal Value System that guides their decision-making. This can help resolve conflicts between values by establishing an order of preference among them.

Given these two ingredients, namely the promotion magnitudes that relate every possible action to the values and an individual’s own Value System, value alignment can be determined by combining both measures. Based on this idea of calculating alignment by combining, or aggregating, the two magnitudes, an automatic agent can be built that replicates the value-aligned decision-making process of a specific individual profile (Karanik et al., 2024).

In this state-of-the-art Value-Based architecture, the agent receives a set of actions along with a pre-computed list indicating the values that each action promotes or demotes. This implies that the agent needs someone to interpret the set of actions in terms of values on its behalf, which is a major drawback when the goal is to build an autonomous Value-Based Agent, as the agent depends on this value interpreter. Automating the value-elicitation process could enhance the agent’s autonomy while also eliminating the bias that a human annotator might introduce. If each action is characterized by a textual description that reflects the underlying motivations and objectives driving it, the value-extraction problem can be reframed as a problem of detecting values in text.

This problem is still an ongoing challenge, with approaches ranging from simple word-count-based methods (Fulgoni et al., 2016) to feature-based methods using word embeddings and sequences (Kennedy et al., 2021). These methods can be classified as unsupervised or supervised. Some unsupervised methods are based on the Frame Axis technique (Hopp et al., 2020), which projects words onto micro-dimensions defined by two opposing sets of words to analyze their semantic orientation without labeled data, while others use the extended Moral Foundations Dictionary (MFD), which includes words associated with virtues, vices and moral aspects related to the five dyads of Moral Foundations Theory (MFT) (Mokhberian et al., 2020). Supervised techniques include multi-label classification, in which each label corresponds to a specific human value. The degree of association of the text with a category (a human value) reflects the extent to which the text promotes that value. Notable in this group is the use of transformer-based NLP models such as XLNet or BERT (Bulla et al., 2024).

The following section explains an extension of the state-of-the-art Value-Based agent (Karanik et al., 2024), focusing on the integration of an NLP model into the agent’s architecture.

3 PROPOSED MODEL

The model proposed in this paper builds on the Value-Based architecture discussed above. Besides incorporating the value-detection model, this proposal contributes two further extensions: the use of the refined Schwartz’s Value Theory (SVT) with 19 values instead of the basic 10-value theory used earlier, and the concept of negative promotion (i.e., demotion) of values, which was absent from the base model, where only positive promotion was considered. The latter is an important upgrade, as it better reflects real-world scenarios in which situations can demote values as well as promote them. It also allows the agent to express disagreement with the possible actions, indicating a negative alignment with them. By considering both positive promotion and negative promotion (demotion) of values, the model more accurately captures the dynamic and sometimes conflicting nature of how values are influenced in real-life contexts. In sum, the result is a value-aware and value-aligned agent capable of perceiving its environment through the lens of values and acting accordingly.

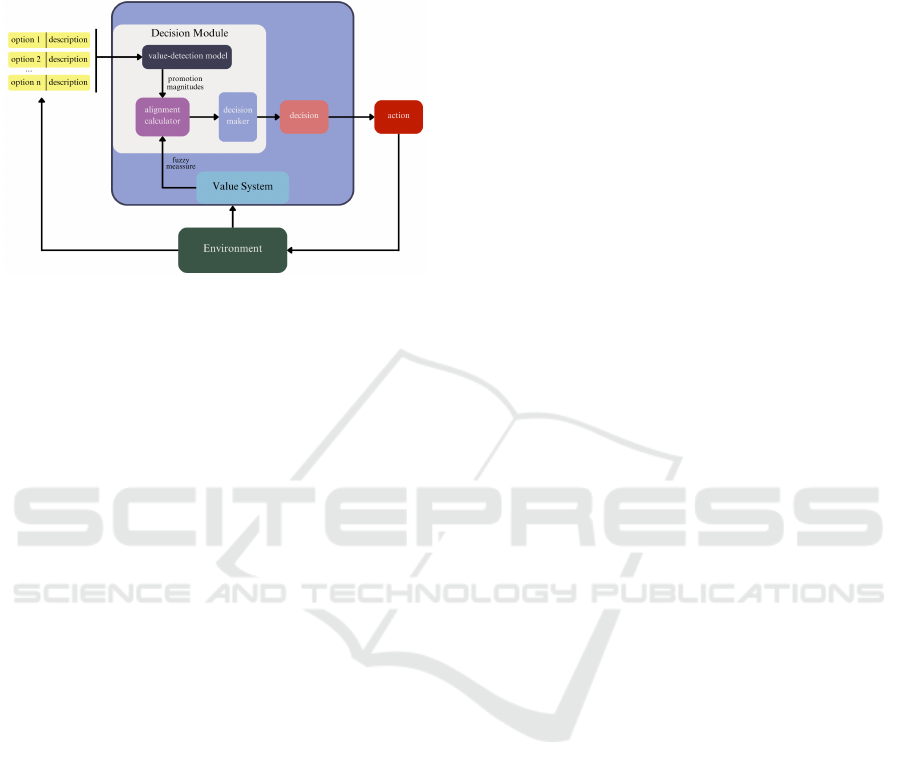

The proposed architecture for an autonomous agent capable of making value-aligned decisions (see Figure 3) consists of two main components: the agent’s Value System, which represents its value preferences, and a Decision Module, which simulates a value-based decision-making process. The central idea is that, given a set of options described in text, the agent’s Decision Module makes decisions guided by its Value System, and the agent acts accordingly.

Figure 3: Value-aligned Agent model.

The Decision Module includes an NLP-based value-detection model that extracts the magnitudes of value promotion and demotion from the text descriptions of the actions. Based on these promotion and demotion magnitudes, along with the agent’s Value System, an alignment calculator computes the alignment magnitude for each option.

Once these magnitudes are calculated, the decision maker evaluates them to make a final decision. This could involve selecting the action with the highest alignment if only one action can be taken, or deciding whether to proceed with each potential action depending on whether its alignment score is positive or negative. Finally, the agent acts according to the decision made.
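The two decision modes described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `decide`, the dictionary input format and the rule that a score must be strictly positive for an action to count as desirable are assumptions.

```python
from typing import Dict, Union


def decide(alignments: Dict[str, float],
           single_choice: bool = True) -> Union[str, Dict[str, bool]]:
    """Hypothetical decision maker over precomputed alignment scores.

    single_choice=True  -> return the single most aligned action.
    single_choice=False -> mark each action as desirable (True) when its
                           alignment is strictly positive (assumed threshold).
    """
    if not alignments:
        raise ValueError("no actions to decide between")
    if single_choice:
        # max() keeps the first action encountered in case of a tie.
        return max(alignments, key=alignments.get)
    return {action: score > 0 for action, score in alignments.items()}


# Alignment magnitudes of the left-wing voter reported in Table 1.
left_wing = {"Labour Party": 0.0727, "Conservative Party": 0.0265}
print(decide(left_wing))                       # Labour Party
print(decide(left_wing, single_choice=False))  # {'Labour Party': True, 'Conservative Party': True}
```

In the per-action mode both parties would be marked as desirable for this agent, since both of its alignment scores in Table 1 are positive; only the single-choice mode discriminates between them.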

The following subsections analyze each of these components in more depth.

3.1 Value System

As discussed in the previous section, the agent’s Value System is designed to represent its preferences concerning Schwartz’s 19 human values. These preferences can be captured by assigning importance magnitudes to each individual value. However, following the principles of Schwartz’s Value Theory (SVT), it is crucial to consider not only values in isolation but also the relationships and synergies between them. This approach leads to evaluating the importance of groups of values rather than focusing solely on individual ones.

In line with prior models (Karanik et al., 2024), a normalized fuzzy measure can be used to describe the importance weights assigned to different value groups. This allows for a more detailed representation, as it captures the interactions between values and how they collectively influence the agent’s decision-making process.

In this extension of the model, it is crucial to impose a restriction on those values of the refined 19-value set that result from the disaggregation of one of Schwartz’s 10 basic values. Specifically, the sum of these sub-values’ individual importances cannot exceed 1 (the maximum importance allowed for their corresponding higher-level Schwartz value). This restriction is essential to preserve the monotonicity of the constructed fuzzy measure, which could otherwise be violated.
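The restriction on sub-values can be checked mechanically before the fuzzy measure is built. The sketch below assumes a grouping of the refined values under their parent basic value following Schwartz et al. (2012); the value identifiers are our own hypothetical labels, and face and humility, which do not result from disaggregating a single basic value, are assumed to be unconstrained.

```python
from collections import defaultdict
from typing import Dict

# Assumed parent basic value for each refined value that results from
# disaggregating one of Schwartz's 10 basic values (labels are hypothetical).
PARENT = {
    "self-direction-thought": "self-direction",
    "self-direction-action": "self-direction",
    "power-dominance": "power",
    "power-resources": "power",
    "security-personal": "security",
    "security-societal": "security",
    "conformity-rules": "conformity",
    "conformity-interpersonal": "conformity",
    "benevolence-caring": "benevolence",
    "benevolence-dependability": "benevolence",
    "universalism-concern": "universalism",
    "universalism-nature": "universalism",
    "universalism-tolerance": "universalism",
}


def budget_violations(importances: Dict[str, float],
                      limit: float = 1.0) -> Dict[str, float]:
    """Return the basic values whose sub-value importances sum above `limit`."""
    totals: Dict[str, float] = defaultdict(float)
    for value, weight in importances.items():
        parent = PARENT.get(value)
        if parent is not None:  # values without a parent are not constrained
            totals[parent] += weight
    return {parent: total for parent, total in totals.items() if total > limit}


profile = {
    "universalism-concern": 0.5,
    "universalism-nature": 0.4,
    "universalism-tolerance": 0.3,
    "power-dominance": 0.2,
    "face": 0.9,
}
print(budget_violations(profile))  # only universalism exceeds the budget of 1
```

A profile that fails this check could be rejected or rescaled before Equation (1) is applied; which of the two the model should do is not specified in the text.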

The computation of the fuzzy measure (which is afterwards normalized) for a given set of values is as follows:

$$\iota\omega_i(\{v_s,\dots,v_t\}) = \iota\omega_i(\{v_s\}) + \dots + \iota\omega_i(\{v_t\}) + \sum_{k=1}^{dp} ic(\{v_1,v_2\}_k) \times \iota\omega_i(\{v_1\}) \times \iota\omega_i(\{v_2\}) \qquad (1)$$

where $dp$ is the number of distinct pairs within the set, $\{v_1,v_2\}_k$ denotes the $k$-th such pair, and $ic$ is the interaction coefficient used to model the dynamic interaction of values. Following Schwartz, three main kinds of interaction between values are considered: (a) negative interaction between values in the same wedge. An agent who prefers one value is likely to also prefer another of the same wedge, for example power and achievement, so the weight of the group formed by both should be less than the sum of their single weights (subadditive measure); (b) positive interaction between values in opposite wedges. Since an agent is unlikely to prefer both values of opposite wedges, such as power and universalism, the weight of this group should be greater than the sum of their single weights (superadditive measure); and (c) no interaction between values in adjacent wedges. Values belonging to multiple wedges, such as hedonism, face and humility, are considered to have a negative interaction with the values in the two wedges they are associated with and a positive interaction with the values in the other two wedges. The resulting expression of the interaction coefficient is

$$ic(\{v_1,v_2\}_k) = \begin{cases} +0.25 & v_1, v_2 \text{ in opposite wedges} \\ 0 & v_1, v_2 \text{ in adjacent wedges} \\ -0.25 & v_1, v_2 \text{ in the same wedge} \end{cases} \qquad (2)$$

In this way, the fuzzy measure constructed from the agent’s individual importance magnitudes over each value effectively captures and represents the agent’s Value System.
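Equations (1) and (2) can be sketched in Python as follows. The wedge assignment of the 19 refined values (with hedonism, face and humility on wedge boundaries, as described above), the value identifiers and the normalization by the measure of the full set of 19 values are assumptions; the text only states that the measure is normalized afterwards.

```python
from itertools import combinations
from typing import Dict, Iterable

# Four higher-order wedges of Schwartz's continuum.
O, SE, C, ST = "openness", "self-enhancement", "conservation", "self-transcendence"
OPPOSITE = {O: C, C: O, SE: ST, ST: SE}

# Assumed wedge membership of the 19 refined values (Schwartz et al., 2012).
# Hedonism, face and humility lie on the boundary between two wedges.
WEDGES = {
    "self-direction-thought": {O}, "self-direction-action": {O},
    "stimulation": {O}, "hedonism": {O, SE},
    "achievement": {SE}, "power-dominance": {SE}, "power-resources": {SE},
    "face": {SE, C},
    "security-personal": {C}, "security-societal": {C}, "tradition": {C},
    "conformity-rules": {C}, "conformity-interpersonal": {C},
    "humility": {C, ST},
    "benevolence-caring": {ST}, "benevolence-dependability": {ST},
    "universalism-concern": {ST}, "universalism-nature": {ST},
    "universalism-tolerance": {ST},
}


def interaction(v1: str, v2: str) -> float:
    """Interaction coefficient ic of Eq. (2)."""
    w1, w2 = WEDGES[v1], WEDGES[v2]
    if w1 & w2:
        return -0.25  # share a wedge: subadditive
    if len(w1) > 1 or len(w2) > 1:
        return 0.25   # boundary value vs. one of its "other two" wedges
    (a,), (b,) = w1, w2
    return 0.25 if OPPOSITE[a] == b else 0.0  # opposite vs. adjacent wedges


def raw_measure(subset: Iterable[str], iw: Dict[str, float]) -> float:
    """Eq. (1): single importances plus one interaction term per distinct pair."""
    values = sorted(set(subset))
    total = sum(iw[v] for v in values)
    for v1, v2 in combinations(values, 2):  # the dp distinct pairs
        total += interaction(v1, v2) * iw[v1] * iw[v2]
    return total


def fuzzy_measure(subset: Iterable[str], iw: Dict[str, float]) -> float:
    """Normalized so that the full set of 19 values has measure 1 (assumed)."""
    return raw_measure(subset, iw) / raw_measure(WEDGES, iw)


iw = {v: 0.5 for v in WEDGES}  # hypothetical flat importance profile
print(raw_measure({"stimulation", "self-direction-action"}, iw))    # 0.9375
print(raw_measure({"power-dominance", "universalism-concern"}, iw))  # 1.0625
```

With equal importances, the same-wedge pair ends up below the sum of its single weights (1.0) and the opposite-wedge pair above it, matching the subadditive and superadditive cases described in the text.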


3.2 Decision Module

The Decision Module enables the agent to make decisions aligned with its Value System. First, the agent detects the promotions and demotions of values for each possible action. Second, it computes its alignment with each option, using the promotion magnitudes and the fuzzy measure that represents the importance the agent assigns to each subset of values. Lastly, given the collection of all alignment magnitudes, the agent makes its final decision.

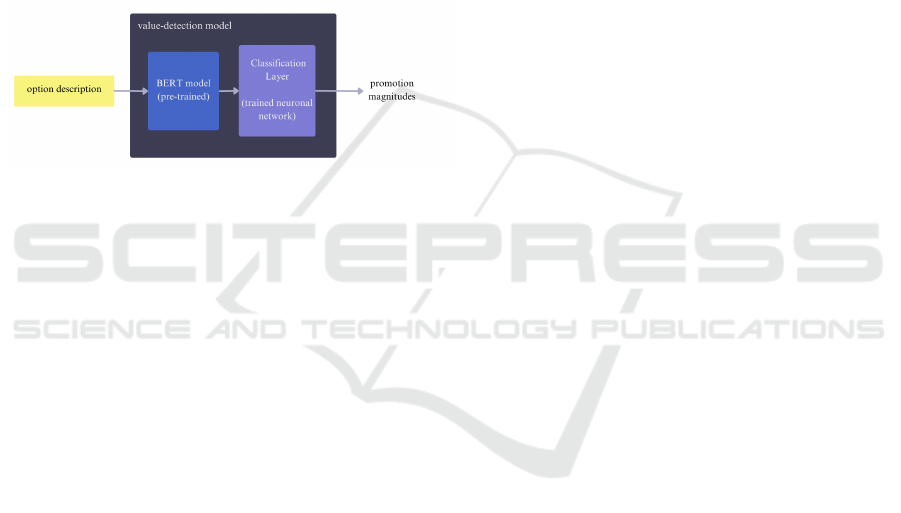

The value-detection model allows the agent to perceive how each action promotes or demotes distinct values. Given the text description of each available action, this model outputs the promotion magnitudes associated with each of them.

Figure 4: Value-detection model.

The proposed value-detection model, shown in Figure 4, is structured into two fundamental components: a pre-trained sub-model and a Classification Layer. The former is the well-known BERT (Bidirectional Encoder Representations from Transformers) model, while the latter is a neural network that serves as the Classification Layer. This layer allows the pre-trained model to be fine-tuned to our specific task and is trained on a labeled dataset (ValuesMLProject, 2024) consisting of text fragments annotated with the promotion or demotion of each of the 19 Schwartz values.

The output of the model is a vector of promotion magnitudes for the input action, representing the promotion or demotion of each of the considered values.
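To make the shape of this output concrete, the following stdlib-only sketch shows one plausible form of the Classification Layer: a single linear layer over a sentence embedding produced by BERT, followed by tanh so that each of the 19 outputs lies in (-1, 1), with positive values read as promotion and negative ones as demotion. The tanh output, the random initialization and the function names are assumptions. The paper does not specify the layer's internals, and in a real system the weights would be learned during fine-tuning.

```python
import math
import random
from typing import List, Sequence, Tuple

N_VALUES = 19       # Schwartz's refined values
BERT_HIDDEN = 768   # hidden size of BERT-base

Head = Tuple[List[List[float]], List[float]]


def make_head(hidden: int = BERT_HIDDEN, n_out: int = N_VALUES,
              seed: int = 0) -> Head:
    """Randomly initialized linear layer (weights would be learned in practice)."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 0.02) for _ in range(hidden)] for _ in range(n_out)]
    bias = [0.0] * n_out
    return weights, bias


def promotion_magnitudes(embedding: Sequence[float], head: Head) -> List[float]:
    """Map a sentence embedding to one signed magnitude per value."""
    weights, bias = head
    return [math.tanh(sum(w * x for w, x in zip(row, embedding)) + b)
            for row, b in zip(weights, bias)]


# Stand-in for the embedding BERT would produce for an action description.
fake_embedding = [0.1] * BERT_HIDDEN
magnitudes = promotion_magnitudes(fake_embedding, make_head())
print(len(magnitudes))  # 19
```

A single signed output per value keeps promotion and demotion of the same value mutually exclusive; an alternative design would use two outputs per value, one for promotion and one for demotion.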

Given the promotion magnitudes and the Value System, the alignment of the agent with each action is calculated using an aggregation function. The function selected in this paper is the signed Choquet integral (Choquet, 1954). The positive Choquet integral is computed over the promoted values, while the negative Choquet integral is calculated using only the demoted values. These two integrals represent the positive and negative alignment of the agent with the action, respectively. The alignment is then computed by subtracting the negative alignment from the positive alignment.
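The signed aggregation can be sketched with the standard discrete Choquet integral: sort the magnitudes in ascending order and weight each increment by the measure of the set of values whose magnitude is at least that large. Below, the same fuzzy measure `mu` is applied to the promoted part and to the demoted part (taken in absolute value), which is how we read the description above; this corresponds to the symmetric (Šipoš) form of the signed Choquet integral. Here `mu` is assumed to be any function from a frozenset of value names to [0, 1], and all names are hypothetical.

```python
from typing import Callable, Dict, FrozenSet

Measure = Callable[[FrozenSet[str]], float]


def choquet(f: Dict[str, float], mu: Measure) -> float:
    """Discrete Choquet integral of non-negative magnitudes f w.r.t. mu."""
    items = sorted((x, v) for v, x in f.items() if x > 0)  # ascending magnitude
    remaining = {v for _, v in items}  # values with magnitude >= current level
    total, previous = 0.0, 0.0
    for x, v in items:
        total += (x - previous) * mu(frozenset(remaining))
        previous = x
        remaining.discard(v)
    return total


def signed_alignment(promotion: Dict[str, float], mu: Measure) -> float:
    """Choquet integral over promoted values minus the one over demoted values."""
    promoted = {v: x for v, x in promotion.items() if x > 0}
    demoted = {v: -x for v, x in promotion.items() if x < 0}
    return choquet(promoted, mu) - choquet(demoted, mu)


# Toy additive measure (no interactions), so the integral reduces to a weighted sum.
weights = {"benevolence-caring": 0.6, "power-dominance": 0.4}


def additive_mu(subset: FrozenSet[str]) -> float:
    return sum(weights[v] for v in subset)


print(signed_alignment({"benevolence-caring": 0.5, "power-dominance": -0.25},
                       additive_mu))  # approximately 0.3 - 0.1 = 0.2
```

With a non-additive measure, such as one built from Equations (1) and (2), the weight given to each increment depends on which values are still in play. This is how interactions between values enter the alignment score.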

Once the alignment with each possible action is computed, the agent can make a decision, which may range from classifying each action as desirable or undesirable to selecting the most aligned action.

4 CASE STUDY

4.1 Domain

Values not only guide human behaviour on an individual level; they also shape it at a societal level. As several previous studies have indicated (Caprara et al., 2006; Barnea and Schwartz, 2008; Schwartz et al., 2010), political voting is strongly related to, and driven by, personal values. This strong correlation between Schwartz’s theory of values and political choices provides an ideal field of application for our current research.

It has been demonstrated that voters’ political choices in Western democracies depend more on personal preferences, especially values, than on other factors such as voters’ social characteristics (Caprara et al., 2006). This study not only established the primacy of values among the factors that drive voters’ choices but also highlighted their lasting influence over time.

It has already been established that human decisions are driven by values, but the direct relationship between values and politics appears to be even stronger. Certain values are specifically related, both positively and negatively, to center-left and center-right political parties (Caprara et al., 2006), or more specifically to ideologies such as Classical Liberalism and Economic Egalitarianism (Barnea and Schwartz, 2008). Basic values are reflected in core political values (Schwartz et al., 2010), such as law and order, equality, or the acceptance of immigrants.

Building on these foundational studies, which demonstrated a direct correlation between voters’ values and their political choices, we propose to extend this research by considering not only Schwartz’s 10 basic values but also his extended set of 19 values. One difficulty mentioned in previous studies on political psychology (Schwartz et al., 2010) was how to determine which values political parties promote or demote. The NLP model proposed in Section 3 is the key element for overcoming this problem.

4.2 Simulations

The simulations analyze the political voting process of several Value-Based Agents with different value preferences. To do so, different agent profiles are implemented following the proposed model, taking into account the relationship between Schwartz’s values and the corresponding ideologies discussed in the previous subsection.

The voting process simulates elections to the UK Parliament, in which the two major parties, the Labour Party and the Conservative and Unionist Party, hold clearly differentiated ideologies (Social Democracy and Conservatism/Economic Liberalism, respectively).

In this voting scenario, agents are given two alter-

natives: the Labour Party or the Conservative Party.

The agents will evaluate each party in terms of val-

ues, calculate their alignment with each of them and

decide on the one that best aligns with their own val-

ues. To facilitate this process, two texts summariz-

ing the ideology of each party (this is, the underlying

motivation of each voting alternative) are considered.

The promotion magnitudes extracted from these texts

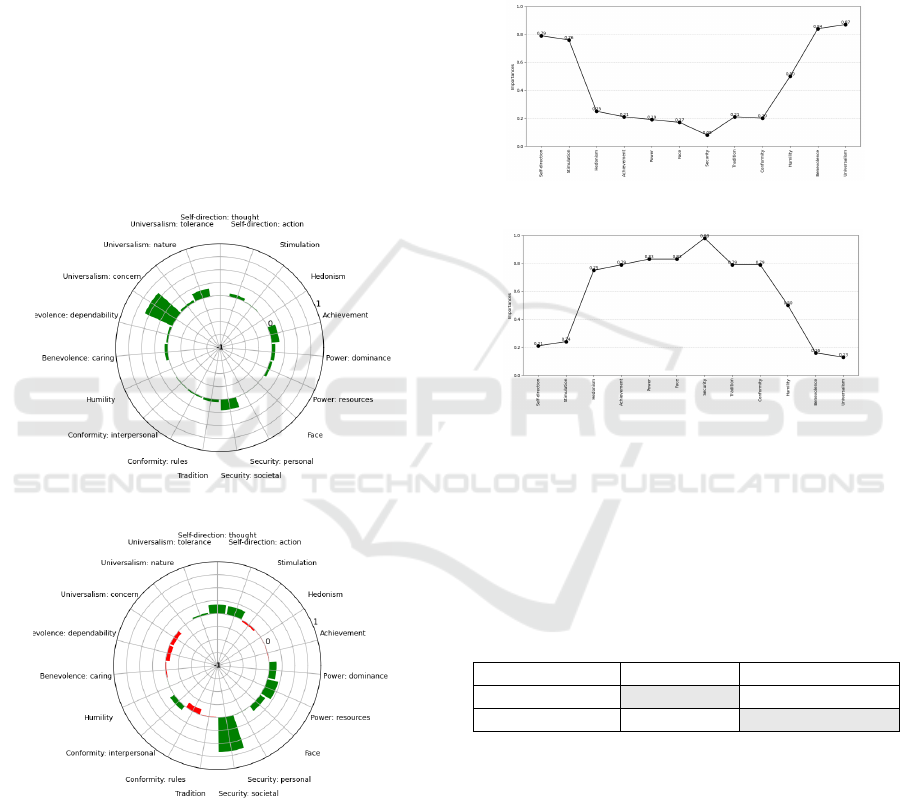

can be observed in Figure 5, and represent the values

promoted and demoted by the two considered parties.

(a) Labour Party promotion magnitudes.

(b) Conservative Party promotion magnitudes.

Figure 5: Promotion magnitudes detected.

As a starting point, the voting process is tested with two agents that have clearly defined ideological profiles: one representing a left-wing voter and the other a right-wing voter. The Value Systems of these agents are constructed from the correlations demonstrated by Schwartz between human values and the political preferences of center-left voters. Assuming the opposite correlations for center-right voters, the fixed importances of each value for both agents are illustrated in Figure 6. Note that these importances are given for each primary (basic) value, combining the sub-values that constitute it, and are later broken down into the importances of the individual sub-values. The Value System of each agent is built from these importances by computing the fuzzy measure described in Section 3.

(a) Left-wing voter importances.

(b) Right-wing voter importances.

Figure 6: Agents’ importances.

Each agent then computes its alignment with both parties by aggregating the promotion magnitudes of each party (Figure 5) with the fuzzy measure that represents its Value System (Figure 6). The resulting alignment magnitudes are shown in Table 1.

Table 1: Voter alignments.

| | Labour Party | Conservative Party |
|---|---|---|
| Left-wing voter | 0.0727 | 0.0265 |
| Right-wing voter | 0.0636 | 0.0924 |

Following this, two simulations are carried out, each considering a different number of agents and a distinct method for constructing their Value Systems.

For the first simulation, we generate a population of 200 agents, with 100 agents representing slight variations of the left-wing profile and 100 representing variations of the right-wing profile defined earlier. The Value Systems of these agents are constructed by making small modifications to the importance values of the ideological profiles. These modifications are introduced randomly, adjusting the importance $\iota\omega(\{v_i\})$ of each value $i$ by up to $0.1 + 0.1 \cdot \iota\omega(\{v_i\})$. The fuzzy measure is then constructed from these modified ideological profiles. Having generated the population, the voting process is simulated.
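The perturbation step can be sketched as follows, assuming each importance w is shifted by a value drawn uniformly from [-b, b] with b = 0.1 + 0.1 · w and then clipped to [0, 1]. The uniform distribution, the clipping, the function name and the two-value base profile are all assumptions; the text only states the maximum size of each adjustment.

```python
import random
from typing import Dict


def perturb_profile(importances: Dict[str, float],
                    rng: random.Random) -> Dict[str, float]:
    """Randomly shift each importance w by at most 0.1 + 0.1 * w, clipped to [0, 1]."""
    perturbed = {}
    for value, w in importances.items():
        bound = 0.1 + 0.1 * w
        perturbed[value] = min(1.0, max(0.0, w + rng.uniform(-bound, bound)))
    return perturbed


# Hypothetical base profile; the actual left/right profiles are those of Figure 6.
left_profile = {"universalism-concern": 0.9, "power-dominance": 0.1}
rng = random.Random(2025)
population = [perturb_profile(left_profile, rng) for _ in range(100)]
```

Because each sub-value is shifted independently, a perturbed profile may violate the sub-value restriction of Section 3.1, so the modified importances may need to be checked before the fuzzy measure is built.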

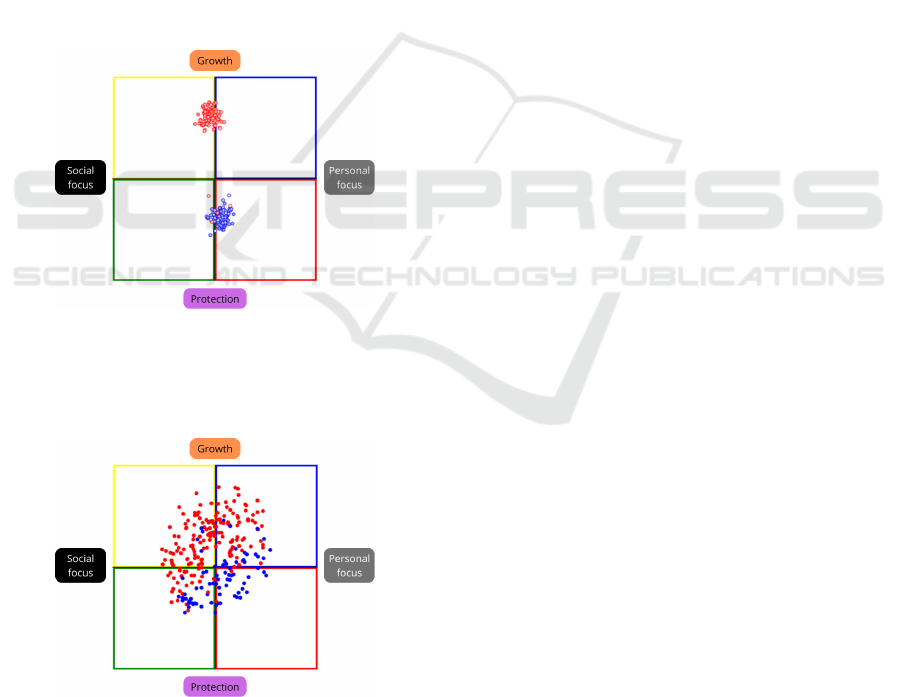

To visualize the voting results (see Figure 7), each agent is positioned within Schwartz’s two-dimensional value space according to its preference profile. As outlined in Section 2, this space is defined by two bipolar dimensions: the x-axis represents the continuum from social focus to personal focus, while the y-axis represents the continuum from anxiety-based (protection) to anxiety-free (growth) values. This two-dimensional division results in four quadrants, each corresponding to a wedge of Schwartz’s continuum (Figure 2).

The position of each agent reflects its orientation along both dimensions, and therefore across the wedges, providing a clear graphical representation of its Value System. Each agent is shown in red or blue, indicating its choice to vote for the Labour Party or the Conservative Party, respectively, based on its alignment with each party.

Figure 7: Random population voting results.

For the second simulation, the voting process is simulated for a population of 250 randomly generated agents. Instead of considering only agents with left-wing or right-wing profiles, each agent’s preferences across the 19 values are randomly assigned, while always adhering to the structure established by Schwartz. Specifically, the order of preferences follows the continuum of values (see Figure 2). The voting results are shown in Figure 8.

Figure 8: Random population voting results.

5 CONCLUSIONS AND FUTURE WORK

This paper proposes a model for a Value-Based Agent able to interpret a set of options in terms of values and make decisions based on its own values. By integrating an NLP model for value detection, the agent gains greater independence in its decision-making and can evaluate situations in real time based on the relevant values, allowing it to respond dynamically to changing conditions and make more informed and effective decisions. Moreover, the inclusion of NLP within the agents’ architecture paves the way for future research in which each agent could develop its own value-detection capabilities, creating different ways to perceive and interpret the environment.

The simulation results show the voting decisions made by agents with different preferences over values (i.e., different Value Systems). Table 1 shows that the two agents constructed with left-wing and right-wing profiles are more aligned with the Labour Party (center-left) and the Conservative and Unionist Party (right-wing), respectively. Moreover, the agents whose profiles are generated as small variations of these profiles vote in line with the profile from which they were generated in 98% of cases. This behavior is consistent with Schwartz’s value-based characterization of left-wing and right-wing voters. The graphical representation of the voting decisions of the population of agents with random value preferences (following Schwartz’s restrictions) shows that agents with a tendency towards anxiety-free values, and more notably those with values in the Self-Transcendence wedge, tend to vote for the Labour Party. In contrast, agents oriented towards protection or anxiety-based values, especially those with high preferences in the Self-Enhancement wedge, tend to vote for the Conservative Party. These voting tendencies are consistent with the values associated with each party’s ideology. Moreover, the voting patterns are consistent with the agents’ Value Systems, as agents with similar values tend to cast the same vote.

For future work, it is essential to explore improvements to the NLP model or even investigate alternative models, as the precision of the model is crucial for the agent’s value-based interpretation of the environment. For instance, in the simulations conducted, the demotion of some values was detected for the Conservative Party, but no negative promotion magnitudes were detected for the Labour Party. This results in a predisposition for agents whose values are not strongly associated with a specific ideology to vote for the Labour Party (see Figure 8). Refining the NLP model could lead to more reliable and accurate results, ultimately influencing the agents’ decisions in a meaningful way.

Additionally, a future line of research could involve integrating this Value-Based Agent architecture into an agent with practical applications, such as an intelligent traffic light or a chatbot.

ACKNOWLEDGEMENTS

This work has been supported by grant VAE: TED2021-131295B-C33 funded by MCIN/AEI/10.13039/501100011033 and by the “European Union NextGeneration EU/PRTR”, by grant COSASS: PID2021-123673OB-C32 funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way of making Europe”, and by the AGROBOTS Project of Universidad Rey Juan Carlos funded by the Community of Madrid, Spain.

REFERENCES

Abbo, G. A., Marchesi, S., Wykowska, A., and Belpaeme, T. (2024). Social value alignment in large language models. In Osman, N. and Steels, L., editors, Value Engineering in Artificial Intelligence, pages 83–97, Cham. Springer Nature Switzerland.

Barnea, M. and Schwartz, S. (2008). Values and voting. Political Psychology, 19:17–40.

Bulla, L., Gangemi, A., and Mongiovì, M. (2024). Do language models understand morality? Towards a robust detection of moral content.

Caprara, G., Schwartz, S., Capanna, C., Vecchione, M., and Barbaranelli, C. (2006). Personality and politics: Values, traits, and political choice. Political Psychology, 27:1–28.

Choquet, G. (1954). Theory of capacities. Annales de l’Institut Fourier, 5:131–295.

Fulgoni, D., Carpenter, J., Ungar, L., and Preoţiuc-Pietro, D. (2016). An empirical exploration of moral foundations theory in partisan news sources. In Calzolari, N., Choukri, K., Declerck, T., Goggi, S., Grobelnik, M., Maegaard, B., Mariani, J., Mazo, H., Moreno, A., Odijk, J., and Piperidis, S., editors, Proceedings of the Tenth International Conference on Language Resources and Evaluation (LREC’16), Portorož, Slovenia. European Language Resources Association.

Haidt, J. (2013). Moral psychology for the twenty-first century. Journal of Moral Education, 42.

Heidari, S. (2022). PhD Thesis: Agents with Social Norms and Values: A framework for agent based social simulations with social norms and personal values.

Hopp, F., Fisher, J., Cornell, D., Huskey, R., and Weber, R. (2020). The extended moral foundations dictionary (eMFD): Development and applications of a crowd-sourced approach to extracting moral intuitions from text.

Karanik, M., Billhardt, H., Fernández, A., and Ossowski, S. (2024). Exploiting Value System Structure for Value-Aligned Decision-Making, pages 180–196.

Kennedy, B., Atari, M., Mostafazadeh Davani, A., Hoover, J., Omrani, A., Graham, J., and Dehghani, M. (2021). Moral concerns are differentially observable in language. Cognition, 212:104696.

Mokhberian, N., Abeliuk, A., Cummings, P., and Lerman, K. (2020). Moral Framing and Ideological Bias of News, pages 206–219.

Noriega, P. and Plaza, E. (2024). On autonomy, governance, and values: An AGV approach to value engineering. In Osman, N. and Steels, L., editors, Value Engineering in Artificial Intelligence, pages 165–179, Cham. Springer Nature Switzerland.

Osman, N. and d’Inverno, M. (2023). A computational framework of human values for ethical AI.

Russell, S. (2022). Artificial Intelligence and the Problem of Control, pages 19–24.

Schwartz, S., Caprara, G., and Vecchione, M. (2010). Basic personal values, core political values, and voting: A longitudinal analysis. Political Psychology, 31:421–452.

Schwartz, S., Cieciuch, J., Vecchione, M., Davidov, E., Fischer, R., Beierlein, C., Ramos, A., Verkasalo, M., Lönnqvist, J.-E., Demirutku, K., Dirilen-Gumus, O., and Konty, M. (2012). Refining the theory of basic individual values. Journal of Personality and Social Psychology, 103:663–688.

Schwartz, S. H. (1992). Universals in the content and structure of values: Theoretical advances and empirical tests in 20 countries. Volume 25 of Advances in Experimental Social Psychology, pages 1–65. Academic Press.

ValuesMLProject (2024). ValuesML dataset. Data provided as tab-separated values files with one header line. In addition to the original files in nine languages, a machine-translated version in English is available.

van der Weide, T. L., Dignum, F., Meyer, J. J. C., Prakken, H., and Vreeswijk, G. A. W. (2010). Practical reasoning using values. In McBurney, P., Rahwan, I., Parsons, S., and Maudet, N., editors, Argumentation in Multi-Agent Systems, pages 79–93, Berlin, Heidelberg. Springer Berlin Heidelberg.

Wyner, A. and Zurek, T. (2024). Towards a formalisation of motivated reasoning and the roots of conflict. In Osman, N. and Steels, L., editors, Value Engineering in Artificial Intelligence, pages 28–45, Cham. Springer Nature Switzerland.
