Federated Learning Harnessed with Differential Privacy for Heart Disease Prediction: Enhancing Privacy and Accuracy

Wided Moulahi¹, Tarek Moulahi², Imen Jdey³ and Salah Zidi⁴

¹ IResCoMath Laboratory, National Engineering School of Gabes, Tunisia

² Department of Information Technology, College of Computer, Qassim University, Kingdom of Saudi Arabia

³ ReGIM-Lab. REsearch Groups in Intelligent Machines (LR11ES48), Tunisia

⁴ University of Haute Alsace, Colmar, 68000, France

Keywords:

Federated Learning, Differential Privacy, Health, Heart Disease, Privacy Preservation.

Abstract:

The increasing digitization of healthcare raises concerns about patient privacy. Integrating privacy-preserving technologies has therefore become imperative to curb the negative repercussions of deploying technology in the medical sector and to provide trustworthy artificial intelligence healthcare applications. Two rising approaches have come to the forefront of research and are gaining momentum in the realm of smart healthcare systems: Federated Learning and Differential Privacy. On the one hand, Federated Learning (FL) enables collaborative model training across multiple institutions without exchanging raw data. Differential Privacy (DP), on the other hand, provides a formal framework for safeguarding data against potential privacy breaches. The application of these approaches in healthcare settings ensures the protection of sensitive patient information. In this paper, we examine the challenges posed by medical data and how FL and DP can be tailored to these requirements. We aim to strike a balance between technology deployment in the medical field and privacy preservation. To this end, we developed a Multi-layer Perceptron (MLP) model to predict whether a person is at risk of heart disease. The model, trained on different heart disease datasets, reached an accuracy of 99.57%. Trained in an FL framework, the same model achieved an averaged FL accuracy of 99.15%. In a third scenario, to enhance clients' privacy, we deployed a DP framework. The differentially private MLP achieved an accuracy of up to 97.07% in centralized settings and an averaged accuracy of up to 89.94% in FL settings, outperforming existing methods in heart disease prediction.

1 INTRODUCTION

Machine Learning (ML) (Jordan and Mitchell, 2015) has the potential to transform the healthcare industry by enhancing diagnosis, treatment, and patient well-being. It is of paramount importance in the medical field in diverse ways, one crucial area being diagnosis and disease prediction. ML algorithms are also employed in drug discovery and development, where they help find potential avenues for new treatments and improve existing medicines (Vamathevan et al., 2019; Brahmi et al., 2024). They also help identify diseases early, especially those that are not easily detectable at an initial stage. Additionally, ML is used in medical imaging to help pathologists make more accurate diagnostic judgments. It streamlines routine tasks, enabling healthcare professionals to focus on essential aspects of patient care. It also assists in robotic surgery and identifies prescription errors (Kassahun et al., 2016).

However, these advancements come with hurdles, including the need for large, trustworthy datasets, the interpretability of ML models, and ethical and regulatory issues. These challenges must be addressed before ML can be widely integrated into clinical practice. One of the main obstacles is the need for large, high-quality datasets that truly represent the patient population. Large amounts of patient data are crucial for training ML systems and improving their accuracy. This data frequently includes sensitive personal information, such as genetic, biometric, and medical history data, which raises a number of issues concerning control, access, and data security (Hameed et al., 2021). Since individuals can potentially be identified through their data, there is a risk of re-identification and privacy breaches. According to a study published in Nature Communications, approximately 99.98% of Americans in an anonymized dataset could be re-identified (Rocher et al., 2019).

Moulahi, W., Moulahi, T., Jdey, I. and Zidi, S.

Federated Learning Harnessed with Differential Privacy for Heart Disease Prediction: Enhancing Privacy and Accuracy.

DOI: 10.5220/0013188900003890

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 3, pages 845-852

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Furthermore, collaboration between healthcare providers, researchers, and data scientists is needed to collect and organize data in a way that preserves data integrity and patient confidentiality. This is especially important in the medical field, where decisions can have life-or-death consequences. To make well-informed judgments about patient care, physicians and other healthcare professionals must be able to trust and understand the decisions produced by ML models; building trust in the technology depends on this transparency. Additionally, addressing regulatory and ethical considerations, such as algorithmic fairness and patient data protection, is crucial. Despite these challenges, the potential benefits of ML in healthcare are significant, and with meticulous planning and collaboration, these hurdles can be overcome to improve patient care.

As the medical community continues to leverage data-driven approaches for improved diagnostics and treatment, the implementation of FL and DP emerges as a pivotal strategy to uphold the confidentiality and trustworthiness of patient information. This work contributes to the ongoing discourse on privacy in healthcare by shedding light on the potential of cutting-edge technologies to revolutionize medical research and practice while steadfastly safeguarding individual privacy.

According to the World Health Organization, cardiovascular diseases are the leading cause of death globally: each year, 17.3 million people die of heart disease.¹

To address the aforementioned concerns, in this paper:

1. We developed an efficient Multilayer Perceptron (MLP) to predict whether a person is at risk of heart disease.

2. We enhanced the privacy of the approach by deploying it in an FL framework.

3. We further strengthened privacy preservation by integrating a DP approach.

The remainder of this paper is organized as follows: Section 2 summarizes the related work. Section 3 outlines the problem statement. Section 4 introduces the proposed contribution. The results of our approach are presented and discussed in Section 5, and we conclude in Section 6.

¹ https://www.who.int/health-topics/cardiovascular-diseases#tab=tab_1

2 RELATED WORK

Several privacy-preserving smart frameworks have been developed to tackle medical problems while maintaining a balance between ML efficiency and privacy preservation in smart healthcare systems. Table 1 summarizes some of these frameworks.

(Wang et al., 2024) proposed a Differentially Private Federated Transfer Learning Framework using an MLP for stress detection, combining DP with FL. Its main limitation is the relatively low reported accuracy of 53% in stress detection.

(Savić et al., 2023) applied ML techniques to predict quality of life (QoL) indicators for cancer patients, training models on the ORB and BcBase datasets in both centralized and FL scenarios. Different ML models were used for classification and regression tasks. Centralized and federated models showed comparable predictive power for QoL. For the regressors, appropriately chosen privacy values led to a steady decrease in the mean absolute error (MAE).

The work of (Babu Nampalle et al., 2023) integrated DP into FL for medical image classification. The developed model was based on noise calibration, an adaptive privacy budget strategy, and a privacy-utility trade-off analysis. It used the pre-trained MobileNetV2 model on the HAM10000 skin image dataset, four discrete datasets from the Cancer Imaging Archive, and the PH2 and Memorial Sloan Kettering skin image datasets. The proposed framework achieved accuracies of 90.68% (baseline), 88.21% (FL) and 84.64% (DP FL).

(Letafati and Otoum, 2023) proposed a distributed DP mechanism for metaverse healthcare using 'mix-up' noise. The model was evaluated on the Breast Cancer Wisconsin Dataset with respect to the privacy-utility trade-off and diagnosis accuracy. The authors experimented with different numbers of clients and different privacy levels, and compared the private scheme with a non-private centralized setup in terms of diagnosis accuracy.

(Liu et al., 2024) proposed an FL framework with record-level personalized DP (rPDP-FL). It was applied to two datasets, namely Fed-Heart-Disease and MNIST. For Fed-Heart-Disease, the reported accuracy varies between 77.17% and 81.89%; on MNIST, it varies between 84.11% and 94.77%.


Table 1: Privacy-preserving frameworks applied in the medical field.

| Ref | Method used | Results | Limitation |
|---|---|---|---|
| (Wang et al., 2024) | A differentially private federated transfer learning framework using an MLP for stress detection | Accuracy: 53%; ROC curve area: non-DP 0.59, ε = 1: 0.56, ε = 0.5: 0.54 | Relatively low accuracy of 53% achieved in stress detection. |
| (Savić et al., 2023) | Application of ML techniques to predict quality of life features for patients diagnosed with cancer | Accuracy varies between 51.6% and 71.4% across the datasets and ML models; MAE varies between 5.1055 and 6.7250 for different DP values and regressors | The performance of the models could be enhanced. |
| (Babu Nampalle et al., 2023) | FL framework with integrated DP for medical image classification using the MobileNetV2 architecture | Accuracy: baseline 90.68%, FL 88.21%, DP FL 84.64% | The system could be improved to enhance performance, privacy and security. |
| (Letafati and Otoum, 2023) | Distributed DP for metaverse healthcare systems for breast cancer diagnosis | ε = 20: 62% accuracy; ε = 60: 90% accuracy | Higher accuracy comes at the expense of privacy; a trade-off between accuracy and privacy budget must be found. |
| (Liu et al., 2024) | FL framework based on record-level personalized DP (rPDP-FL), applied to two datasets | Fed-Heart-Disease: accuracy from 77.17% to 81.89%; MNIST: accuracy from 84.11% to 94.77% | The FL performance could be enhanced. |

3 PROBLEM STATEMENT

Two key approaches are the pillars of our research:

FL and DP.

3.1 Federated Learning

FL is a game-changing concept in ML. Unlike traditional methods that rely on centralized data, it allows multiple devices to collaborate on training models. This approach is particularly valuable in scientific domains because it protects privacy by keeping data on local devices instead of sharing it (Moulahi et al., 2023). Depending on how clients interact with the process, FL can be conducted in three modalities: synchronous FL, asynchronous FL and semi-synchronous FL.

In FL, the participating devices might have heterogeneous computational capabilities. Synchronous FL does not take this heterogeneity into account: the slowest device determines the speed of the process, and the devices with the most computational resources remain idle until the other devices complete their local training (Feng et al., 2021; Stripelis et al., 2022).

In contrast, asynchronous FL does not synchronize communication between the participating devices. Once it completes its training, each device uploads its local updates and downloads the newly updated model without waiting for the other devices (Feng et al., 2021; Stripelis et al., 2022).

Semi-synchronous FL combines features of synchronous and asynchronous methods. It allows devices to synchronize periodically with a central server or with each other, thereby balancing speed and synchronization in FL (Feng et al., 2021; Stripelis et al., 2022).
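The three modalities can be contrasted with a toy timing model. The sketch below is illustrative only and is not taken from the cited works: it assumes fixed, hypothetical per-client training times, ignores communication costs, and models semi-synchronous FL as clients training for a fixed period before synchronizing, in the spirit of (Stripelis et al., 2022).

```python
"""Toy timing model contrasting synchronous, asynchronous and semi-synchronous FL.

Assumption: each client i needs a fixed time t_i (arbitrary units) per local
training pass; communication time is ignored. All numbers are hypothetical.
"""
from typing import Dict, List


def synchronous_rounds(times: List[float], rounds: int) -> Dict[str, object]:
    # Every round waits for the slowest client; faster clients sit idle.
    round_time = max(times)
    idle = [round_time - t for t in times]  # idle time per client per round
    return {"wall_clock": rounds * round_time, "idle_per_round": idle}


def asynchronous_updates(times: List[float], budget: float) -> List[int]:
    # Each client uploads as soon as it finishes, so within a fixed wall-clock
    # budget fast clients contribute more updates than slow ones.
    return [int(budget // t) for t in times]


def semi_synchronous_steps(times: List[float], period: float) -> List[int]:
    # Clients train for the same fixed period, then all synchronize; faster
    # clients complete more local passes within that period.
    return [int(period // t) for t in times]


if __name__ == "__main__":
    client_times = [1.0, 2.0, 5.0]  # hypothetical per-pass training times
    print(synchronous_rounds(client_times, rounds=10))
    print(asynchronous_updates(client_times, budget=10.0))
    print(semi_synchronous_steps(client_times, period=5.0))
```

In the synchronous case, the two faster clients idle for 4 and 3 time units per round, which is exactly the inefficiency the text attributes to synchronous FL.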

3.1.1 FL Security and Privacy Issues

FL faces threats that call its efficiency into question. Data poisoning attacks aim to degrade model performance by injecting carefully crafted samples into the dataset to mislead the model's behaviour; these samples can be injected into the training dataset or the testing dataset (Sun et al., 2022). Model poisoning attacks aim to modify the model parameters and the learning rule; they can be launched by a malicious client or a malicious server (Sun et al., 2022). Inference attacks are adversarial algorithms that trace back the samples in the training dataset, aiming to divulge participants' private information (Jdey, 2022). Byzantine attacks aim to degrade the convergence of the global model: malicious clients falsify their data or model updates so that the model converges slowly (Prakash and Avestimehr, 2020). Free-riding attacks seek to obtain the final global model without participating in the training process (Bouacida and Mohapatra, 2021).
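To illustrate why Byzantine updates are harmful, the following sketch shows a single malicious client dragging a plain coordinate-wise average away from the honest consensus. It is a toy example under stated assumptions: updates are short lists of floats, the server uses an unweighted mean, and the attacker sends a hypothetical sign-flipped update scaled by 50.

```python
"""Toy illustration of a Byzantine client skewing plain averaging.

Assumptions: updates are lists of floats, the server computes an unweighted
coordinate-wise mean, and the attacker sends a large sign-flipped update.
Real attacks (and defences) are considerably more elaborate.
"""
from typing import List


def mean_update(updates: List[List[float]]) -> List[float]:
    n = len(updates)
    return [sum(coords) / n for coords in zip(*updates)]


honest = [[0.10, -0.20], [0.12, -0.18], [0.08, -0.22]]
# Hypothetical malicious update: an honest direction flipped and scaled by 50.
byzantine = [-50 * x for x in honest[0]]

clean_avg = mean_update(honest)
attacked_avg = mean_update(honest + [byzantine])
print("clean:", clean_avg)        # close to [0.1, -0.2]
print("attacked:", attacked_avg)  # signs flipped by one malicious client
```

A single participant out of four is enough to reverse the direction of the aggregated update, which is why robust aggregation is studied for FL.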

3.2 Differential Privacy

DP is a way to keep data private in data analysis and ML. It consists of adding a calibrated amount of noise to the data or to the results of algorithms (Dwork, 2006). Technically, DP is based on the idea of "neighboring datasets": two datasets are considered neighbors if they differ in only one entity.
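For reference, the standard formal statement (Dwork et al., 2014) is given below. A randomized mechanism M is (ε, δ)-differentially private if, for every pair of neighboring datasets D and D′ and every set of outputs S, the following inequality holds, where ε is the privacy budget and δ is a small probability with which the guarantee may fail (δ = 0 gives pure ε-DP):

$$
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon}\,\Pr[\mathcal{M}(D') \in S] + \delta
$$

Intuitively, including or excluding any single patient's record changes the probability of any outcome by at most a factor of e^ε, plus δ.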

DP is a privacy-preserving method that quantifies how much privacy is lost. This loss is measured by a parameter called epsilon (ε), which represents the maximum privacy loss allowed (Lee and Clifton, 2011). A larger ε means weaker privacy but more useful data. DP thus helps strike a balance between using data to learn meaningful insights and protecting the privacy of individuals represented in the dataset (Dwork et al., 2014).
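The role of ε can be seen in the classic Laplace mechanism, sketched below for a counting query. This is a textbook illustration, not the mechanism used in this paper (which relies on Opacus): a count changes by at most 1 between neighboring datasets, so adding Laplace noise with scale 1/ε yields ε-DP. The cohort is hypothetical.

```python
"""Laplace mechanism for a counting query (illustrative sketch).

A count changes by at most 1 between neighboring datasets (sensitivity 1), so
releasing count + Laplace(0, sensitivity / epsilon) noise satisfies epsilon-DP.
"""
import random
from typing import List, Optional


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(has_condition: List[bool], epsilon: float,
                  rng: Optional[random.Random] = None) -> float:
    rng = rng or random.Random()
    sensitivity = 1.0  # adding or removing one patient changes the count by at most 1
    return sum(has_condition) + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical cohort: 42 of 100 patients have the condition.
cohort = [True] * 42 + [False] * 58
rng = random.Random(0)
for eps in (0.1, 1.0, 10.0):
    print(eps, round(private_count(cohort, eps, rng), 2))
```

Smaller ε means larger noise (variance 2/ε²), which is the privacy-utility trade-off described above.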

4 PROPOSED CONTRIBUTIONS

4.1 Contributions Description

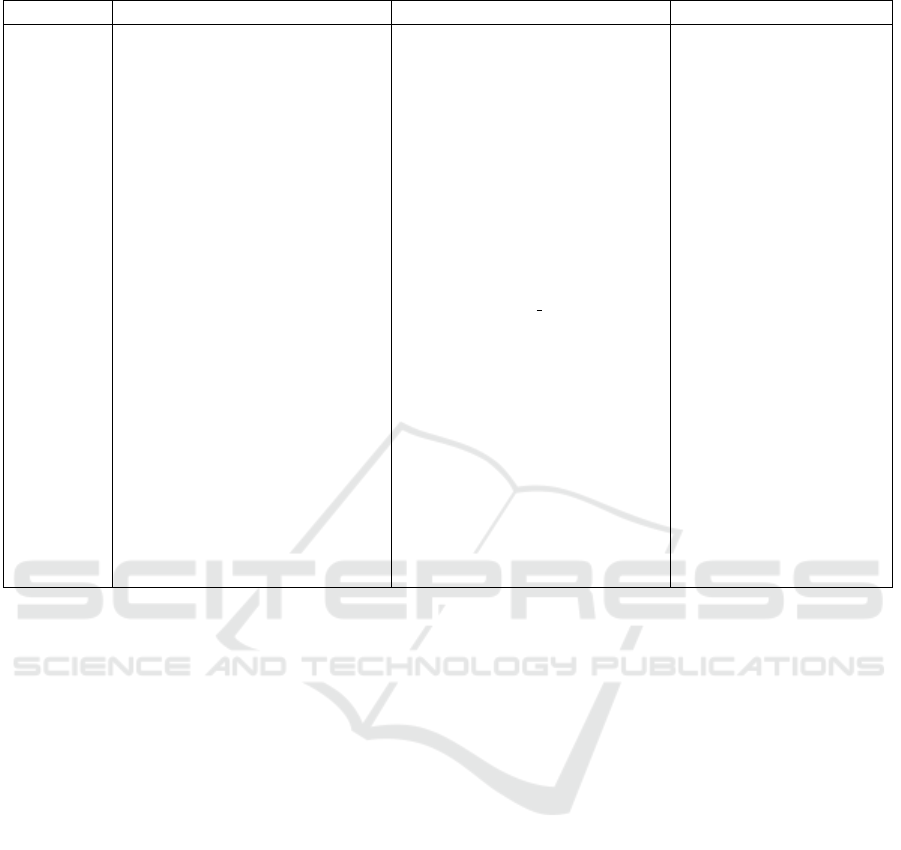

Our approach comprises three distinct processes aimed at predicting heart disease while preserving privacy.

The first process consists of developing an efficient MLP model on different heart disease datasets to generate predictive insights regarding the likelihood of heart disease occurrence in individuals. Two tasks are targeted: binary classification and multi-class classification.

Following the initial MLP modeling, we embark on the second process, which employs an FL approach. We aim to preserve clients' privacy through collaborative learning: each data source contributes knowledge to the model without exposing individual records. During this process, several communication rounds take place. A single round consists of clients carrying out local computations on their data and then transmitting their updates to the central server for aggregation.

In the final process, to further strengthen privacy preservation, we integrate a DP mechanism. We first apply DP to the MLP model in centralized settings. This involves adding calibrated noise to the gradients during training (Table 3), so that the resulting model parameters are protected against attacks and data privacy is preserved. The differentially private MLP model is then used for making predictions while still preserving data stakeholders' privacy.
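Since DP training here is performed with Opacus, the core step it implements is DP-SGD: clip each per-sample gradient to a norm bound C, add Gaussian noise with standard deviation σC to the sum, then average. The sketch below is a plain-Python rendering of that step, not Opacus code; the clipping norm, learning rate and gradients are hypothetical, since the paper does not report them.

```python
"""One DP-SGD step (per-sample clipping + Gaussian noise), in plain Python.

Illustrates the idea behind DP training libraries such as Opacus; it is not
Opacus code. clip_norm (C) and lr are hypothetical values.
"""
import math
import random
from typing import List, Optional

Vector = List[float]


def clip(grad: Vector, clip_norm: float) -> Vector:
    # Scale the per-sample gradient so its L2 norm is at most clip_norm.
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]


def dp_sgd_step(params: Vector, per_sample_grads: List[Vector],
                lr: float, clip_norm: float, noise_multiplier: float,
                rng: Optional[random.Random] = None) -> Vector:
    rng = rng or random.Random()
    clipped = [clip(g, clip_norm) for g in per_sample_grads]
    summed = [sum(coords) for coords in zip(*clipped)]
    # Gaussian noise with std = sigma * C is added to the summed gradient.
    noisy = [s + rng.gauss(0.0, noise_multiplier * clip_norm) for s in summed]
    batch = len(per_sample_grads)
    return [p - lr * n / batch for p, n in zip(params, noisy)]
```

Clipping bounds each patient's influence on the update, which is what lets the Gaussian noise (scaled by the noise multiplier σ of Table 3) translate into a formal (ε, δ) guarantee.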

The DP technique is then extended to FL, where the MLP model is trained across the different clients. We thereby protect the confidentiality of sensitive information while allowing effective model training and prediction. We apply local DP, where noise is added locally on each client before its model parameters are sent to the central server for aggregation.
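The paper does not detail how the client-side noise is calibrated. As one possible reading of "noise added locally before sending", the sketch below assumes each client clips its model update (local minus global parameters) to a norm bound C and adds Gaussian noise of standard deviation σC before upload, after which the server averages. The names and parameters are hypothetical.

```python
"""Client-side noising of model updates before aggregation (illustrative).

Assumptions (not from the paper): each client clips its update
(local params - global params) to L2 norm <= clip_norm and adds Gaussian
noise with std noise_multiplier * clip_norm; the server averages the results.
"""
import math
import random
from typing import List

Vector = List[float]


def privatize_update(local: Vector, global_params: Vector, clip_norm: float,
                     noise_multiplier: float, rng: random.Random) -> Vector:
    update = [l - g for l, g in zip(local, global_params)]
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    # Noise is added on the client, so the server only sees perturbed updates.
    return [u * factor + rng.gauss(0.0, noise_multiplier * clip_norm)
            for u in update]


def aggregate(global_params: Vector, noisy_updates: List[Vector]) -> Vector:
    n = len(noisy_updates)
    mean = [sum(coords) / n for coords in zip(*noisy_updates)]
    return [g + m for g, m in zip(global_params, mean)]
```

Because each client perturbs its own update, the server never observes an un-noised contribution, which is the defining property of local DP.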

The results of our approach are discussed comprehensively, considering both predictive performance and privacy preservation. We analyze the accuracy and robustness of the MLP model trained on different heart disease datasets, evaluating its efficacy in identifying individuals at risk of heart disease. Additionally, we assess the impact of FL and DP on model performance and privacy preservation, highlighting the trade-offs and benefits observed. Figure 1 presents the approach adopted in our research.

4.2 Suggested Scenarios

4.2.1 Process 1: Centralized Learning

Since a main goal of our research is to ensure privacy preservation in healthcare, we used medical datasets for heart disease:

Heart 1: www.kaggle.com/datasets/johnsmith88/heart-disease-dataset.

Heart 2: https://www.kaggle.com/datasets/sid321axn/heart-statlog-cleveland-hungary-final.

Heart 3: https://archive.ics.uci.edu/dataset/193/cardiotocography.

These datasets are used to train a supervised MLP model (Popescu et al., 2009), whose hyper-parameters are described in Table 2.
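The following sketch shows the forward pass and loss of an MLP shaped like the Heart 1 column of Table 2. It makes an assumption the table leaves open: "Hidden layers 9" is read as a single hidden layer of 9 ReLU units, and "Input layers 13" as 13 input features. Weights are random, so predictions are meaningless until the model is trained (for example with Adam, as in Table 2).

```python
"""Forward pass of an MLP shaped like the Heart 1 column of Table 2.

Assumption: one hidden layer of 9 ReLU units, 13 input features, and a single
sigmoid output for binary classification. Weights are random (untrained).
"""
import math
import random
from typing import List

Matrix = List[List[float]]


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Matrix:
    # One row of n_in weights plus a trailing bias per output unit.
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_out)]


def dense(x: List[float], layer: Matrix) -> List[float]:
    return [sum(w * v for w, v in zip(row[:-1], x)) + row[-1] for row in layer]


def relu(z: List[float]) -> List[float]:
    return [max(0.0, v) for v in z]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def predict_proba(x: List[float], hidden: Matrix, output: Matrix) -> float:
    # Probability of the positive class (heart disease) for one patient record.
    return sigmoid(dense(relu(dense(x, hidden)), output)[0])


def binary_cross_entropy(y: int, p: float, eps: float = 1e-12) -> float:
    p = min(max(p, eps), 1 - eps)  # avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


rng = random.Random(0)
hidden = init_layer(13, 9, rng)
output = init_layer(9, 1, rng)
n_params = sum(len(row) for row in hidden + output)  # (13*9 + 9) + (9*1 + 1) = 136
p = predict_proba([0.0] * 13, hidden, output)
```

For Heart 3, the same structure would end in 3 softmax outputs with a categorical cross-entropy loss, matching the multi-class column of Table 2.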

4.2.2 Process 2: Federated Learning

Our collaborative model involves a different number of clients for each dataset: five clients for the first dataset (Heart 1), two clients for the second (Heart 2), and three clients for the third (Heart 3). The training process goes through 10 rounds. To average the updates coming from the clients, we used FedAvg as the aggregation algorithm (Issa et al., 2023). All clients participate in each communication round simultaneously. This synchronous approach ensures that all clients are updated with the latest global model before proceeding to the next round.

Figure 1: The proposed approach, which comprises three processes: centralized learning, FL and DP integration.

Table 2: MLP hyper-parameter values.

| Hyper-parameter | Heart 1 | Heart 2 | Heart 3 |
|---|---|---|---|
| Input layers | 13 | 11 | 20 |
| Hidden layers | 9 | 9 | 9 |
| Optimizer | Adam | Adam | Adam |
| Loss function | Binary cross-entropy | Binary cross-entropy | Sparse categorical cross-entropy |
| Epochs | 20 | 20 | 20 |
| Activation function | ReLU, Sigmoid | ReLU, Sigmoid | ReLU, Softmax |
| Classes | 2 | 2 | 3 |
| Task | Binary classification | Binary classification | Multi-class classification |
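The FedAvg aggregation and synchronous rounds described above can be sketched as follows. This is a minimal illustration, not the authors' code: parameters are flat lists of floats, each client is weighted by its number of samples (the standard FedAvg weighting), and `local_train` is a hypothetical stand-in for a client's local epochs.

```python
"""FedAvg aggregation with synchronous rounds (illustrative sketch).

Assumptions: model parameters are flat lists of floats, and local_train is a
hypothetical stand-in for a client's local training on its private data.
"""
from typing import Callable, List, Tuple

Vector = List[float]


def fedavg(client_params: List[Vector], client_sizes: List[int]) -> Vector:
    # Weighted average: each client counts in proportion to its sample count.
    total = sum(client_sizes)
    return [sum(p[j] * n for p, n in zip(client_params, client_sizes)) / total
            for j in range(len(client_params[0]))]


def run_rounds(global_params: Vector, clients: List[Tuple[list, int]],
               local_train: Callable[[Vector, list], Vector],
               rounds: int = 10) -> Vector:
    for _ in range(rounds):
        # Synchronous round: every client starts from the same global model.
        updates = [local_train(list(global_params), data) for data, _ in clients]
        global_params = fedavg(updates, [n for _, n in clients])
    return global_params
```

With 10 rounds, as in our setup, the server only ever receives model parameters, never the clients' raw records.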

4.2.3 Process 3: Differential Privacy Integration

The DP integration process is carried out using different DP parameters (Table 3). We used the PyTorch Opacus library for DP deployment. The performance of the differentially private MLP model in both centralized and federated settings is then analysed to measure the trade-off between model accuracy and the level of privacy achieved. The results provide insights into the effectiveness of the proposed DP technique in preserving privacy while maintaining model performance. "Small" ε values (canonically, ε ≤ 1) tend to provide strong privacy guarantees but often severely impact performance. "Medium" and "large" ε values (canonically, ε ∈ [1, 10] and ε ≥ 10, respectively) provide more relaxed privacy guarantees but increase utility (Lee and Clifton, 2011).
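To show how σ, α, δ and ε relate, the sketch below performs Rényi DP (RDP) accounting for a Gaussian mechanism with sensitivity 1: each step costs α/(2σ²) in RDP of order α, costs add up over steps, and the total is converted to (ε, δ)-DP via ε = RDP + log(1/δ)/(α − 1), keeping the best α. This is a simplification: it ignores the subsampling amplification (sample rate q) that Opacus accounts for, so its numbers do not reproduce Table 5. The regime labels follow the canonical ranges above, with boundary values assigned by assumption.

```python
"""How sigma, alpha, delta and epsilon relate under Renyi DP (RDP) accounting.

Simplifying assumption: each step is a Gaussian mechanism with sensitivity 1
and noise multiplier sigma, WITHOUT subsampling amplification. Opacus also
accounts for the sampling rate q, so these numbers do not reproduce Table 5.
"""
import math
from typing import Iterable, Tuple


def gaussian_rdp_to_dp(sigma: float, steps: int, delta: float,
                       alphas: Iterable[float]) -> Tuple[float, float]:
    best_eps, best_alpha = math.inf, float("nan")
    for alpha in alphas:
        rdp = steps * alpha / (2 * sigma ** 2)  # RDP composes additively
        eps = rdp + math.log(1 / delta) / (alpha - 1)  # RDP -> (eps, delta)-DP
        if eps < best_eps:
            best_eps, best_alpha = eps, alpha
    return best_eps, best_alpha


def epsilon_regime(eps: float) -> str:
    # Canonical ranges (Lee and Clifton, 2011); boundaries resolved here as
    # eps <= 1 -> "small", eps >= 10 -> "large", otherwise "medium".
    if eps <= 1:
        return "small"
    if eps >= 10:
        return "large"
    return "medium"


eps, alpha = gaussian_rdp_to_dp(sigma=1.3, steps=1, delta=1e-5, alphas=range(2, 64))
print(f"epsilon = {eps:.2f} at alpha = {alpha} ({epsilon_regime(eps)})")
```

More training steps increase the spent budget, while a larger σ lowers it; the reported α is simply the Rényi order that gives the tightest ε.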

5 RESULTS AND DISCUSSION

5.1 Results

To evaluate the performance of our approach, we used the following metrics: accuracy, precision, recall, F1 score, F-beta score and the Receiver Operating Characteristic curve (ROC curve) (Awad and Hassanien, 2014).
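The metrics can be computed from the confusion matrix as in the sketch below. The paper does not state β for the F-beta score; β = 0.5 is an assumption here, chosen because it appears consistent with the centralized precision, recall and F-beta triplets in Table 4. The ROC area is computed with the rank-based formulation: the probability that a random positive example scores higher than a random negative one, counting ties as one half.

```python
"""Evaluation metrics used in Section 5.1, computed from scratch.

Assumptions: labels are 0/1 with positive class 1, and beta = 0.5 for the
F-beta score (the paper does not state beta).
"""
from typing import Dict, List


def classification_metrics(y_true: List[int], y_pred: List[int],
                           beta: float = 0.5) -> Dict[str, float]:
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0

    def f_score(b: float) -> float:
        # F_b = (1 + b^2) * P * R / (b^2 * P + R)
        denom = b * b * precision + recall
        return (1 + b * b) * precision * recall / denom if denom else 0.0

    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f_score(1.0),
        "f_beta": f_score(beta),
    }


def roc_auc(y_true: List[int], scores: List[float]) -> float:
    # Rank-based (Mann-Whitney) estimate of the area under the ROC curve.
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

With β < 1 the F-beta score weights precision more than recall, which is why it sits between F1 and precision when precision is the higher of the two.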

After implementing the previously proposed ap-

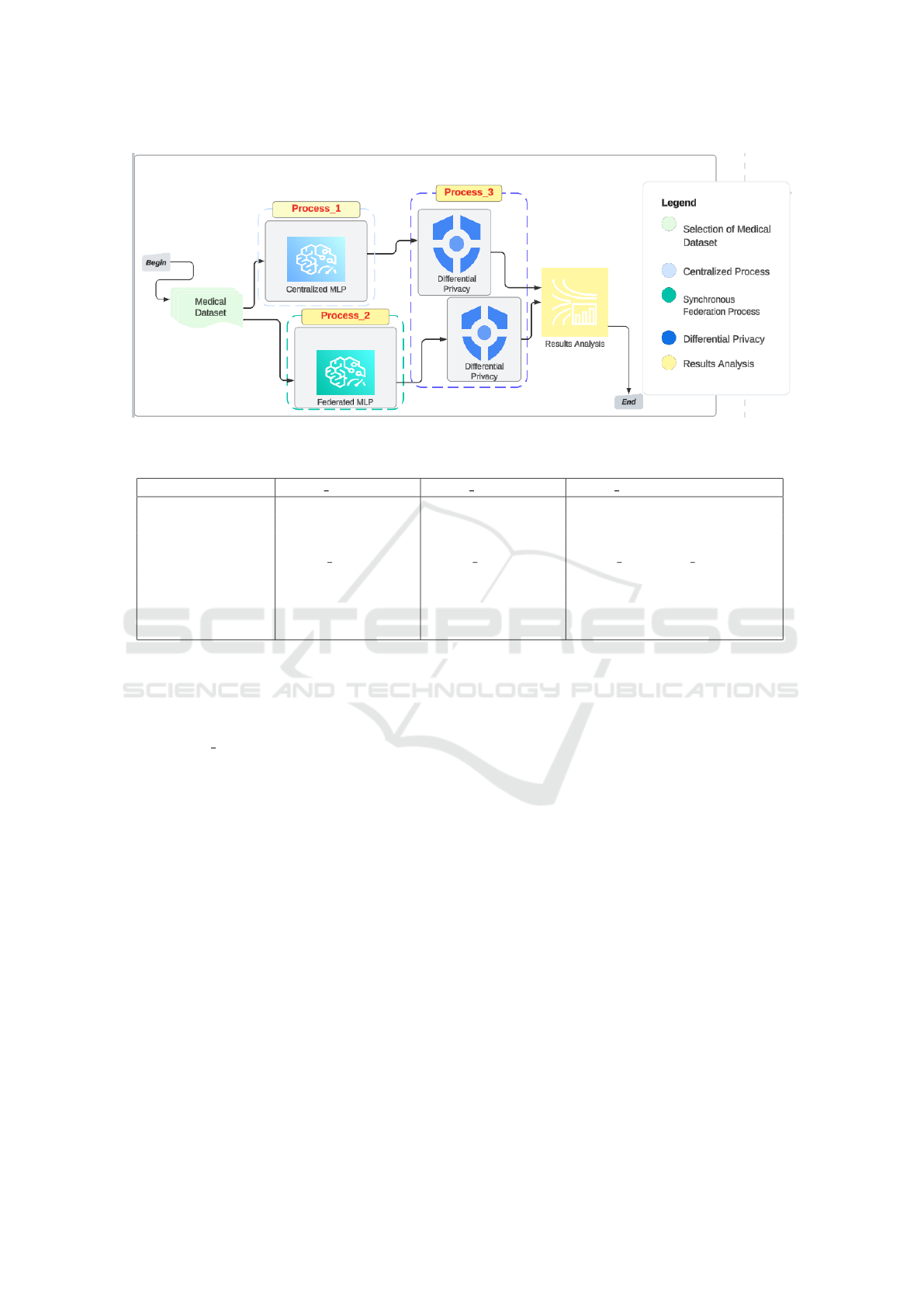

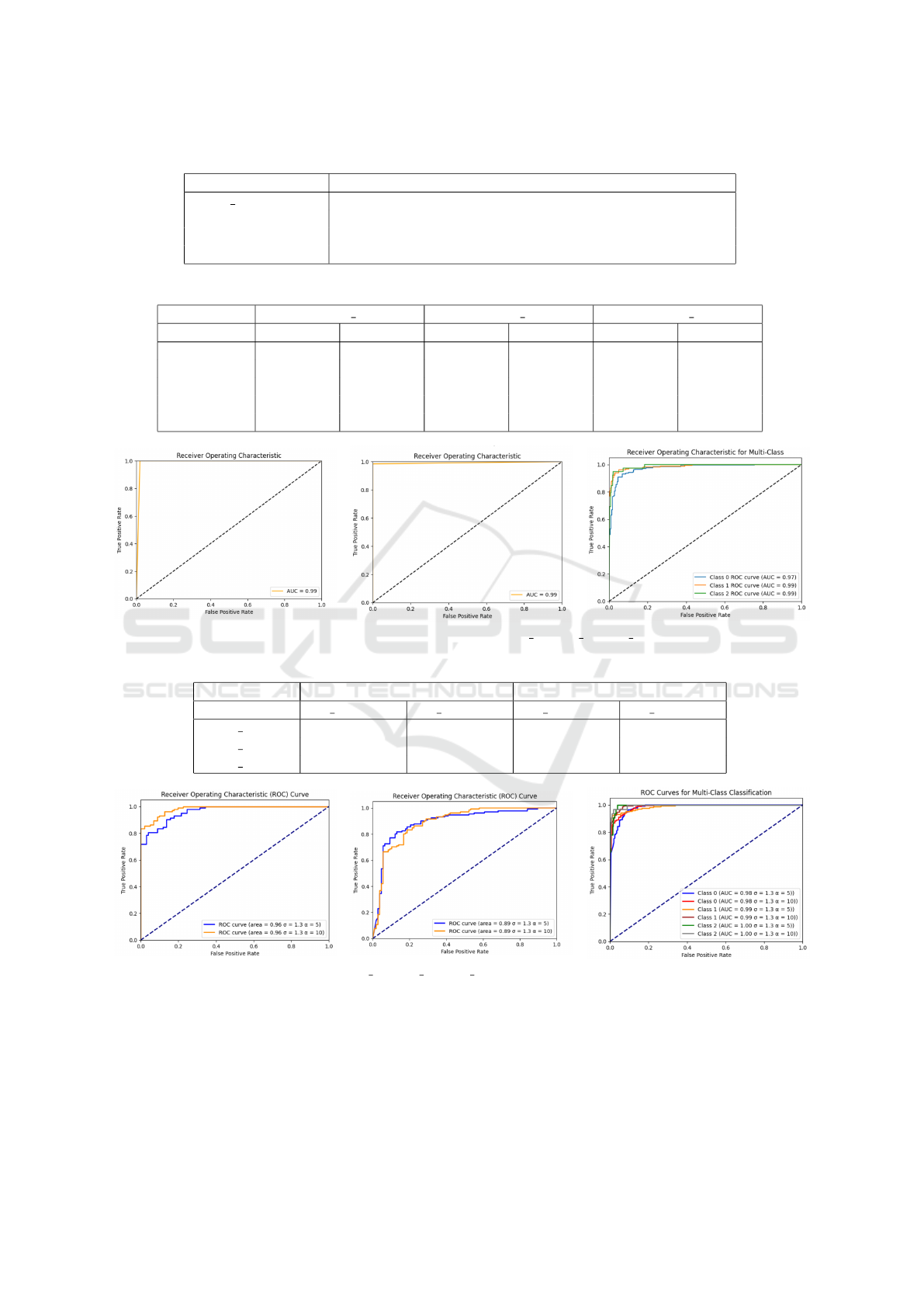

proach, it achieved the following results: Table 4

shows the performances of the MLP model trained

on the selected datasets in centralized settings as

well as in federated settings. Figure 2 depicts the

ROC Curves of the three datasets in centralized set-

tings. Figure 3 depicts the ROC Curves of the three

datasets in centralized settings after applying DP us-

ing different DP parameters values. Table 5 highlights

the model performance after applying DP in cen-

tralized and federated settings on the three datasets.

It presents the model accuracy and privacy budgets

spent for different parameters.


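Table 3: DP parameters.

| Parameter | Description |
|---|---|
| Noise multiplier (σ) | Amount of noise added to the gradients (standard deviation relative to the clipping norm) |
| Delta (δ) | Desired upper bound on the probability of information leakage |
| Alpha (α) | Order of the Rényi divergence used by the privacy accountant when computing ε |
| Epsilon (ε) | Privacy budget |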

Table 4: MLP performance in centralized and federated settings on the different datasets.

| Metric | Heart 1 Centralized | Heart 1 FL | Heart 2 Centralized | Heart 2 FL | Heart 3 Centralized | Heart 3 FL |
|---|---|---|---|---|---|---|
| Accuracy | 99.51% | 98.86% | 99.57% | 99.15% | 98.82% | 97.08% |
| Precision | 98.98% | 99.23% | 100% | 100% | 99.16% | 97.29% |
| Recall | 100% | 98.45% | 99.20% | 98.35% | 99.12% | 98.13% |
| F1 score | 99.49% | 98.81% | 99.59% | 99.17% | 99.13% | 98.01% |
| F-beta score | 99.19% | 99.05% | 99.83% | 99.66% | 99.15% | 98.75% |

Figure 2: ROC curves of Heart 1, Heart 2 and Heart 3, respectively.

Table 5: Model accuracy for different datasets and different privacy budgets, with δ = 10⁻⁵ and sample rate q = 0.01.

| Dataset | DP Centralized (σ = 1.3, α = 5, ε = 2.25) | DP FL (σ = 1.3, α = 5, ε = 2.25) | DP Centralized (σ = 1.3, α = 10, ε = 0.91) | DP FL (σ = 1.3, α = 10, ε = 0.91) |
|---|---|---|---|---|
| Heart 1 | 92.68% | 70.12% | 97.07% | 75.95% |
| Heart 2 | 86.97% | 89.04% | 86.97% | 88.94% |
| Heart 3 | 95.06% | 89.94% | 94.12% | 89.71% |

Figure 3: ROC curves of Heart 1, Heart 2 and Heart 3, respectively, in centralized settings after applying the DP mechanism with different DP parameter values.

5.2 Discussion

Building upon the results presented in the previous section, the developed MLP model shows strong results in terms of accuracy, precision, recall, F1 score, F-beta score and ROC curve. The high values of these performance metrics indicate that the MLP model predicts heart disease risk with a high degree of accuracy and reliability. In particular, the accuracy of 99.57% (Heart 2, centralized), which measures the proportion of correct predictions out of all predictions, signifies that our MLP has excellent discriminatory power.

Furthermore, deploying the MLP model in federated settings helps preserve clients' privacy without sacrificing model performance. Despite the distributed nature of the data, FL still achieves competitive accuracy, showcasing the effectiveness of collaborative model training and its ability to maintain high performance across the repeated interactions and updates of the FL process. Additionally, Figure 4 shows the closely matching performance of centralized learning and FL, with FL providing more privacy to data stakeholders.

Figure 4: MLP performance in centralized settings versus federated settings on the three datasets.

The gap between the performance of the centralized, DP-free MLP and that of the differentially private MLP is offset by the additional security and privacy provided for individuals' data. Carefully tuned DP parameters considerably help achieve the desired level of privacy while maintaining reliable accuracy. With σ = 1.3 and α = 10, the consumed privacy budget (ε = 0.91) remains in an acceptable range, with an accuracy reaching 97.07% on Heart 1.

Comparing the accuracy of the DP-free MLP with that of the differentially private MLP trained with different parameters in federated settings, the model remains accurate even though two privacy-preserving mechanisms are deployed. The decrease in model performance is offset by a strong privacy guarantee resulting from the use of DP combined with FL. With σ = 1.3 and α = 10, the consumed privacy budget (ε = 0.91) remains in an acceptable range for an accuracy of 89.71% on Heart 3.
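To make the privacy-utility trade-off concrete for each dataset and setting, the short script below computes the accuracy drop, in percentage points, at σ = 1.3, α = 10 (ε = 0.91). It uses only the values reported in Tables 4 and 5 and introduces no new results.

```python
"""Accuracy drop (percentage points) caused by DP at sigma = 1.3, alpha = 10,
epsilon = 0.91, computed from the values reported in Tables 4 and 5."""

# (DP-free accuracy, DP accuracy) in %, copied from Tables 4 and 5.
reported = {
    ("Heart 1", "Centralized"): (99.51, 97.07),
    ("Heart 1", "FL"): (98.86, 75.95),
    ("Heart 2", "Centralized"): (99.57, 86.97),
    ("Heart 2", "FL"): (99.15, 88.94),
    ("Heart 3", "Centralized"): (98.82, 94.12),
    ("Heart 3", "FL"): (97.08, 89.71),
}

drops = {key: round(free - dp, 2) for key, (free, dp) in reported.items()}
for (dataset, setting), drop in sorted(drops.items()):
    print(f"{dataset} {setting}: -{drop:.2f} pp")
```

The drop ranges from 2.44 points (Heart 1, centralized) to 22.91 points (Heart 1, FL), showing that the cost of privacy depends strongly on the dataset and on whether DP is combined with FL.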

Our approach, applied to different heart disease datasets, demonstrates its scalability and shows very satisfying results that outperform existing results. Extended to a multi-class classification task, it achieves reliable and accurate results.

Compared to the work of (Liu et al., 2024), our work provides a three-dimensional approach involving centralized, federated and differentially private models, which allows examining the impact of each technique on the learning process. Besides, the FL performance of our approach exceeds that of the aforementioned work: our model achieves an average FL accuracy of 99.15%, whereas the accuracy reported by (Liu et al., 2024) is lower. That paper does not provide concrete numerical values but rather plots its results, which makes the comparison somewhat difficult; for federated learning, the plotted results appear to be below 99%. Furthermore, our differentially private model outperforms their approach, achieving more accurate results for a smaller privacy budget: our DP MLP reaches an accuracy of 97.07% in the centralized setting for a privacy budget ε = 0.91, against an accuracy of 81.34% for ε ≥ 1.

6 CONCLUSION

Our research has demonstrated the successful deployment of an MLP model to predict the likelihood of individuals having heart disease. Through the integration of FL and the incorporation of DP techniques, individual data contributions remain indistinguishable. We have not only achieved promising predictive performance but also preserved the privacy of sensitive medical data. Moreover, by integrating DP into the training process, we ensured that the model's predictions did not compromise the confidentiality of sensitive information. The DP mechanism protects the model parameters against attacks that may lead to data re-identification.

Our experimental results indicate that the deployed MLP model achieved satisfying levels of accuracy across diverse datasets, supporting its scalability and generalization and underscoring its potential for real-world application in the healthcare field. Furthermore, the successful implementation of privacy-preserving techniques highlights the feasibility of leveraging advanced ML methods while upholding strict privacy standards.

Overall, our findings contribute to the growing body of research on privacy-preserving techniques in ML and underscore the potential of FL combined with DP in healthcare applications. Moving forward, further exploration and refinement of our proposed approach, for instance by applying other DP perturbation mechanisms in global or distributed DP architectures combined with other aggregation algorithms, hold promise for advancing predictive efficiency and privacy preservation in medical data analysis.


REFERENCES

Awad, A. I. and Hassanien, A. E. (2014). Impact of some biometric modalities on forensic science. Computational intelligence in digital forensics: Forensic investigation and applications, pages 47–62.

Babu Nampalle, K., Singh, P., Vivek Narayan, U., and Raman, B. (2023). Vision through the veil: Differential privacy in federated learning for medical image classification. arXiv e-prints, pages arXiv–2306.

Bouacida, N. and Mohapatra, P. (2021). Vulnerabilities in federated learning. IEEE Access, 9:63229–63249.

Brahmi, W., Jdey, I., and Drira, F. (2024). Exploring the role of convolutional neural networks (cnn) in dental radiography segmentation: A comprehensive systematic literature review. Engineering Applications of Artificial Intelligence, 133:108510.

Dwork, C. (2006). Differential privacy. In International colloquium on automata, languages, and programming, pages 1–12. Springer.

Dwork, C., Roth, A., et al. (2014). The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407.

Feng, L., Zhao, Y., Guo, S., Qiu, X., Li, W., and Yu, P. (2021). Blockchain-based asynchronous federated learning for internet of things. IEEE Transactions on Computers, 99(1):1–9.

Hameed, S. S., Hassan, W. H., Latiff, L. A., and Ghabban, F. (2021). A systematic review of security and privacy issues in the internet of medical things; the role of machine learning approaches. PeerJ Computer Science, 7:e414.

Issa, W., Moustafa, N., Turnbull, B., Sohrabi, N., and Tari, Z. (2023). Blockchain-based federated learning for securing internet of things: A comprehensive survey. ACM Computing Surveys, 55(9):1–43.

Jdey, I. (2022). Trusted smart irrigation system based on fuzzy iot and blockchain. In International Conference on Service-Oriented Computing, pages 154–165. Springer.

Jordan, M. I. and Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science, 349(6245):255–260.

Kassahun, Y., Yu, B., Tibebu, A. T., Stoyanov, D., Giannarou, S., Metzen, J. H., and Vander Poorten, E. (2016). Surgical robotics beyond enhanced dexterity instrumentation: a survey of machine learning techniques and their role in intelligent and autonomous surgical actions. International journal of computer assisted radiology and surgery, 11:553–568.

Lee, J. and Clifton, C. (2011). How much is enough? Choosing ε for differential privacy. In Information Security: 14th International Conference, ISC 2011, Xi'an, China, October 26-29, 2011. Proceedings 14, pages 325–340. Springer.

Letafati, M. and Otoum, S. (2023). Digital healthcare in the metaverse: Insights into privacy and security. IEEE Consumer Electronics Magazine.

Liu, J., Lou, J., Xiong, L., Liu, J., and Meng, X. (2024). Cross-silo federated learning with record-level personalized differential privacy. In Proceedings of the 2024 on ACM SIGSAC Conference on Computer and Communications Security, pages 303–317.

Moulahi, W., Jdey, I., Moulahi, T., Alawida, M., and Alabdulatif, A. (2023). A blockchain-based federated learning mechanism for privacy preservation of healthcare iot data. Computers in Biology and Medicine, 167:107630.

Popescu, M.-C., Balas, V. E., Perescu-Popescu, L., and Mastorakis, N. (2009). Multilayer perceptron and neural networks. WSEAS Transactions on Circuits and Systems, 8(7):579–588.

Prakash, S. and Avestimehr, A. S. (2020). Mitigating byzantine attacks in federated learning. arXiv preprint arXiv:2010.07541.

Rocher, L., Hendrickx, J. M., and De Montjoye, Y.-A. (2019). Estimating the success of re-identifications in incomplete datasets using generative models. Nature communications, 10(1):1–9.

Savić, M., Kurbalija, V., Ilić, M., Ivanović, M., Jakovetić, D., Valachis, A., Autexier, S., Rust, J., and Kosmidis, T. (2023). The application of machine learning techniques in prediction of quality of life features for cancer patients. Computer Science and Information Systems, 20(1):381–404.

Stripelis, D., Thompson, P. M., and Ambite, J. L. (2022). Semi-synchronous federated learning for energy-efficient training and accelerated convergence in cross-silo settings. ACM Transactions on Intelligent Systems and Technology (TIST), 13(5):1–29.

Sun, Y., Ochiai, H., and Sakuma, J. (2022). Semi-targeted model poisoning attack on federated learning via backward error analysis. In 2022 International Joint Conference on Neural Networks (IJCNN), pages 1–8. IEEE.

Vamathevan, J., Clark, D., Czodrowski, P., Dunham, I., Ferran, E., Lee, G., Li, B., Madabhushi, A., Shah, P., Spitzer, M., et al. (2019). Applications of machine learning in drug discovery and development. Nature reviews Drug discovery, 18(6):463–477.

Wang, Z., Yang, Z., Azimi, I., and Rahmani, A. M. (2024). Differential private federated transfer learning for mental health monitoring in everyday settings: A case study on stress detection. arXiv preprint arXiv:2402.10862.
