XARF: Explanatory Argumentation Rule-Based Framework

Hugo Eduardo Sanches

1 a

, Ayslan Trevizan Possebom

1 b

and Linnyer Beatrys Ruiz Aylon

2 c

1

Manna Research Team, State University of Maring

´

a, Brazil

2

Federal Institute of Parana, Paranava

´

ı, Brazil

Keywords:

Argumentation Framework, Explainable Artificial Intelligence, Machine Learning.

Abstract:

This paper introduces the Explanatory Argumentation Rule-based Framework (XARF), a new approach in Ex-

plainable Artificial Intelligence (XAI) designed to provide clear and understandable explanations for machine

learning predictions and classifications. By integrating a rule-based system with argumentation theory, XARF

elucidates the reasoning behind machine learning outcomes, offering a transparent view into the otherwise

opaque processes of these models. The core of XARF lies in its innovative utilization of the apriori algorithm

for mining rules from datasets and using them to form the foundation of arguments. XARF further innovates

by detailing a unique methodology for establishing attack relations between arguments, allowing for the con-

struction of a robust argumentation structure. To validate the effectiveness and versatility of XARF, this study

examines its application across seven distinct machine learning algorithms, utilizing two different datasets: a

basic Boolean dataset for demonstrating fundamental concepts and methodologies of the framework, and the

classic Iris dataset to illustrate its applicability to more complex scenarios. The results highlight the capability

of XARF to generate transparent, rule-based explanations for a variety of machine learning models.

1 INTRODUCTION

Explainable Artificial Intelligence (XAI) aims to

make AI systems more transparent, understandable,

and trustworthy. As AI becomes integrated into daily

life and critical decision-making, the need for inter-

pretable systems increases. XAI addresses the gap

between advanced AI capabilities and the human need

for clarity, accountability, and ethical assurance (Gi-

anini and Portier, 2024). An argumentation frame-

work (AF) is a fundamental tool in artificial intelli-

gence and computational logic, used to formalize and

analyze argumentation processes. AF models, evalu-

ates, and resolves conflicts between arguments, draw-

ing on principles of classical and informal logic (Vas-

siliades and Patkos, 2021). In the context of XAI, the

development of methods to interpret machine learn-

ing predictions is essential. This paper introduces the

Explanatory Argumentation Rule-Based Framework

(XARF), an innovative approach leveraging argumen-

tation frameworks to explain machine learning deci-

sions. XARF uses a rule-based mechanism rooted in

explanatory argumentation to provide clear and un-

a

https://orcid.org/0000-0002-4450-1865

b

https://orcid.org/0000-0002-1347-5852

c

https://orcid.org/0000-0002-4456-6829

derstandable explanations. At the core of XARF is

the use of rules derived from datasets via the apriori

algorithm. These rules, which consist of premises and

conclusions, form the backbone of the argumentation

framework. XARF constructs arguments and defines

attack relations among them, enabling structured rea-

soning that aligns with the extensions of the argumen-

tation framework such as grounded and preferred se-

mantics. The adaptability of XARF is demonstrated

through its application to seven machine learning al-

gorithms across two datasets. This highlights its ef-

fectiveness in generating transparent explanations for

diverse models. By combining rule-based insights

from the apriori algorithm with argumentation the-

ory, XARF enhances transparency and understand-

ing of machine learning models. This paper details

the architecture, operational mechanisms, and empir-

ical evidence of the ability of XARF to bridge the in-

terpretability gap in machine learning, contributing a

significant advancement to XAI. This work was con-

ducted in the context of the Manna Team. This arti-

cle begins with a literature review, covering AF, XAI,

and related work. It then details the methodology be-

hind XARF, explaining its application to datasets and

machine learning algorithms. The results section an-

alyzes the effectiveness of the framework, followed

Sanches, H. E., Possebom, A. T. and Aylon, L. B. R.

XARF: Explanatory Argumentation Rule-Based Framework.

DOI: 10.5220/0013193200003929

In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 1, pages 683-690

ISBN: 978-989-758-749-8; ISSN: 2184-4992

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

683

by a conclusion summarizing findings and potential

future work.

2 BACKGROUND REVIEW

2.1 Argumentation

Argumentation is an interdisciplinary field that has

attracted significant interest within artificial intelli-

gence, particularly in reasoning and multi-agent sys-

tems, where agents evaluate arguments and reach con-

clusions collectively. This has spurred both theoret-

ical and practical research within computer science

(Lu, 2018). Therefore, the knowledge area known

as computational argumentation was concisely devel-

oped and, furthermore, laid the groundwork for con-

sidering argumentation as a discipline within artificial

intelligence, especially with the model proposed by

Dung (Dung, 1995) defining what is known as Ab-

stract Argumentation. Since Dung’s model, various

semantics have been developed to adapt the frame-

work for different problems and applications. Argu-

mentation semantics formally defines protocols that

dictates all the rules for evaluating arguments (Jha and

Toni, 2020). The extension-based semantics approach

focuses on creating subsets (extensions) of arguments

that can coexist (Amgoud and Ben-Naim, 2018).

2.2 XAI

Explainable Artificial Intelligence (XAI) emphasizes

transparency and understandability in AI systems,

addressing the ”black box” nature of models to

foster trust and accountability (Samek and M

¨

uller,

2021). Reviews by (Arrieta and Herrera, 2020) and

(Linardatos and Kotsiantis, 2021) highlight the evolu-

tion of XAI, from simple methods to advanced tech-

niques for deep learning models. XAI applications

span sectors like healthcare, where it enhances diag-

nostic transparency, and finance, where it clarifies au-

tomated trading and risk assessment (Fan and Wang,

2021). For instance, XAI has improved trust in med-

ical AI systems by explaining predictions related to

patient outcomes (Tjoa and Guan, 2020).

2.3 Related Work

Argumentative systems have been applied to explain

machine learning algorithms. For example, the DAX

framework (Deep Argumentative Explanations) inte-

grates computational argumentation with neural net-

works to enhance interpretability and explainability

(Albini and Tintarev, 2020). Similarly, Quantitative

Argumentation Frameworks (QAF) provide benefits

for neural network explainability using a different

methodology (Potyka, 2021). For Random Forest al-

gorithms, Bipolar Argumentation Graphs (BAP) have

been used to justify and explain decisions (Potyka

and Toni, 2022). In another study (Achilleos and

Pattichis, 2020), ML algorithms like Random For-

est and Decision Trees were applied to brain MRI

images to distinguish between normal controls and

Alzheimer’s Disease cases. Data-Empowered Ar-

gumentation (DEAr) offers an approach to gener-

ate explainable ML predictions by structuring ar-

guments through data relationships (Cocarascu and

Toni, 2020). Similarly, the EVAX framework (Ev-

eryday Argumentative Explanations) generates user-

accessible explanations for AI decisions by mirroring

natural human reasoning, demonstrating its versatility

across four machine learning models (Van Lente and

Sarkadi, 2022).

3 METHODOLOGY

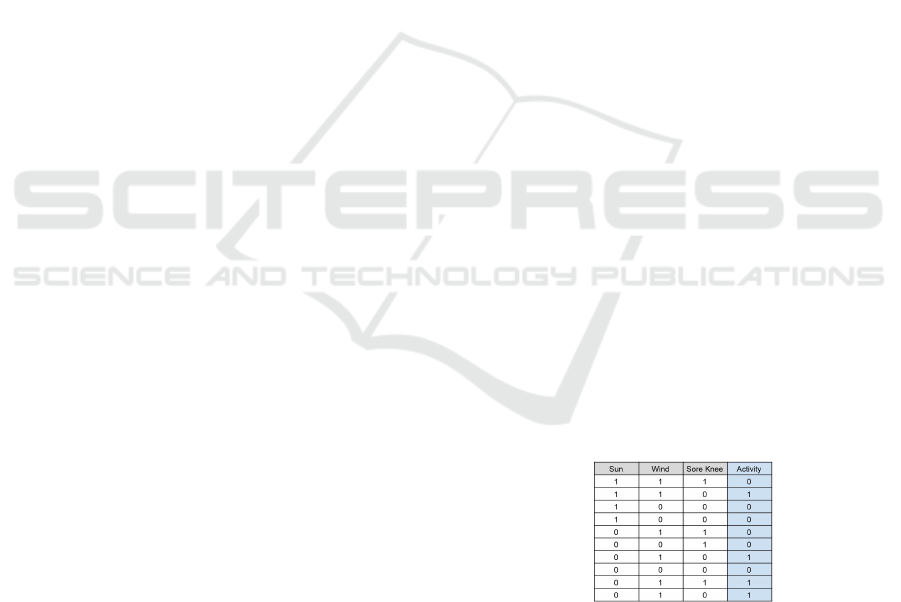

This research was conducted using two distinct

datasets, employing a consistent methodological ap-

proach with necessary adaptations tailored to each

dataset. The first dataset, a Boolean dataset, com-

prises three attributes (Sun, Wind and Sore Knee), a

binary class activity (which is 1 for surfing and 0 for

fishing), and ten instances, serving as a foundation

to elucidate the concepts and methodology integral

to the XARF framework and can be found in Table

I. The second dataset, the well known Iris dataset, is

characterized by four numerical attributes and encom-

passes three distinct class labels representing different

species of the Iris flower and 150 instances.

Table 1: Boolean dataset D. Source: (Dondio, 2021).

3.1 Definitions of Dataset, Attributes,

and Elements

Dataset (D)

Let D be a dataset consisting of tuples

(X

1

, X

2

, . . . , X

n

, CX ), where each X

i

represents

an attribute of the data with i denoting the specific

attribute index, and CX is the class attribute. D can

ICEIS 2025 - 27th International Conference on Enterprise Information Systems

684

also be defined as a set of elements (E

1

, E

2

, . . . , E

n

)

where each E

i

is an element.

Attribute (X)

Each X

i

represents an attribute of the data where i de-

notes the specific attribute index.

Class Attribute (CX)

The class attribute CX indicates the outcome or class

label for each tuple in D. It is a special type of at-

tribute used to distinguish the categories or classes to

which data instances belong. This is the target vari-

able in the classification task. Class attributes can

be represented as CX

j

where j represents different

classes. For example, in a dataset for iris plant classi-

fication, the class attribute could represent the type of

iris plant, such as Setosa, Versicolour, or Virginica.

Element (E)

An element refers to any attribute, including class at-

tributes. Thus, it can be represented as E

k

, where k

can refer to any attribute or class attribute index, and

E ∈ X ∪CX. Types of Attributes/Elements.

• Boolean Attributes/Elements. A Boolean at-

tribute X

i

can have a true or false value, repre-

sented as X

1

i

(true) and X

2

i

(false).

• Numerical/Categorical Attributes/Elements. A

numerical or categorical attribute X

i

is discretized

(divided into bins or encoded categories), for ex-

ample, X

1

i

, X

2

i

, X

3

i

, etc., representing different

ranges of values or categories.

Preprocessing and Application of the Apriori

Algorithm

Transformation for Boolean Attributes. Each at-

tribute X

i

is expanded into two columns representing

the attribute X

1

i

(e.g., Sun) and its negation X

2

i

(no

Sun), thus capturing both presence and absence. This

dichotomy is crucial for constructing comprehensive

association rules that account for both positive and

negative associations.

Discretizing and Encoding for Numerical At-

tributes. Continuous attributes are discretized into

bins. This step simplifies the data structure and en-

ables the application of the Apriori algorithm, which

typically operates on categorical data. For instance, a

numerical attribute like petal length in the Iris dataset

is divided into bins - such as X

1

1

= [0 − 2.5), X

2

1

=

[2.5 − 5.0), X

3

1

= [5.0 − 7.5), and so on - enhancing

the detail and interpretability of the association rules

derived.

Preprocessed Dataset (D

′

). This dataset, D

′

, is

formed by applying preprocessing steps necessary for

the Apriori algorithm, including binning, encoding,

and handling of Boolean attributes and elements as

described previously.

3.2 Application of the Apriori

Algorithm and Argumentation

Framework

Apriori Algorithm

Following the preprocessing phase, the Apriori algo-

rithm is applied to generate association rules from the

dataset, thereby forming the basis for argument cre-

ation within the argumentation framework. The Apri-

ori algorithm is a classic algorithm used to extract fre-

quent itemsets from a dataset and derive association

rules. It operates on a dataset D

′

, producing a set of

rules R, each of the form Ant ⇒ Con where Ant (an-

tecedent) and Con (consequent) are itemsets derived

from D

′

and both are formed by subsets of elements

Ant, Con ⊆ E.

Definition of Arguments

In the context of XARF, an argument arg is formed

by premises and conclusions which are both struc-

tured sets of elements E derived from association

rules where Premise (P) is the antecedent Ant of an

association rule and Conclusion (C) is the consequent

Con of an association rule. An argument arg is a pair

(P, C) formed by a premise leading to a conclusion

Arg = (P, C). Like Ant and Con, P, C ⊆ E.

Definition of Argumentation Framework (AF)

An argumentation framework can be formally defined

as a pair AF = (Ar, att), where:

• Ar is a set of arguments derived from the associa-

tion rules.

• att ⊆ Ar×Ar is the attack relation among these ar-

guments, defining which arguments attack others

based on defined rules (e.g., conflicting premises

and conclusions).

For the Boolean dataset, we provide a selection of

randomly chosen examples of the arguments gener-

ated: arg1, Premise: ’Fishing’, Conclusion: ’Sun’

arg9, Premise: ’Sore Knee’, Conclusion: ’Fish-

ing’ arg12, Premise: ’No Sun’, ’Wind’, Conclusion:

’Surf’ arg16, Premise: ’Wind’, ’Good Knee’, Conclu-

sion: ’Surf’ For instance, arg12 implies that if there is

no sun and there is wind, the activity is most likely to

XARF: Explanatory Argumentation Rule-Based Framework

685

be surfing. As arg = (P, C) argument 12 is mathemat-

ically described as:

arg

12

=

P{X

2

1

- No Sun, X

1

2

- Wind}, C{CX

1

- Surf}

.

Similarly, for the Iris dataset, we present a sub-

set of randomly selected examples of the arguments

produced: arg2, Premise: ’petal length bin (4.0,

5.0]’, Conclusion: ’species versicolor’ arg5, Premise:

’petal width bin (0.1, 0.5]’, ’petal length bin (1.0,

2.0]’, Conclusion: ’species setosa’

Support and Minimum Support Value. The sup-

port of a rule, and the minimum support of a rule,

which are critical in the Apriori algorithm, are defined

as:

Support(R) = Probability(Ant ∩Con)

Support(R) ≥ min support

where Probability denotes the probability of occur-

rence within the dataset, and min support is a thresh-

old value determining which rules are significant

enough to consider. For example, a minimum sup-

port of 0.75 means only the rules found in at least

75% of the data instances are considered. This intro-

duces a significant trade-off: a higher minimum sup-

port value yields fewer arguments, leading to more

generalized yet precise explanations, whereas a lower

value enhances detail at the cost of increased com-

putational complexity. Several minimum support val-

ues were tested, however, for the purposes of the re-

search documented in this paper, minimum support

values of 0.2 and 0.3 were selected for the Boolean

and Iris datasets, respectively. This selection was

to optimize the balance between the granularity of

the explanations generated and the computational effi-

ciency of the Explanatory Argumentation Rule-based

Framework (XARF). Other values can be used with-

out changing the concepts and the main results pre-

sented in the paper.

3.3 Establishing Attack Relations

Within the Argumentation

Framework

For the arguments derived from association rules to

integrate into an argumentation framework, it is im-

perative to define attack relations among them. As

previously mentioned, both premises and conclusions

can comprise multiple elements. However, for clar-

ity, the exemplification of attack rules will consider

premises and conclusions consisting of a singular el-

ement each.

1- Mutual Attack (Based on Opposite Conclu-

sions Within the Same Attribute). For all argi =

((Xi

a

), (X

b

k

)) and arg j = ((X i

a

), (X

c

k

)) ∈ Ar, where

a, b, c, i, j, k are indices representing values and

b ̸= c, i ̸= k, we have att(arg

i

, arg

j

) and att(arg

j

, arg

i

).

This relation highlights a mutual attack when two

arguments share the same premise attribute values

for X

i

but have conclusions with different values for

the attribute X

k

, such as X

b

k

and X

c

k

, where these

values are directly opposite (e.g., true and false for

Boolean attributes). For instance, if arg1 = (X

1

1

;X

1

2

)

and arg2 = (X

1

1

;X

2

2

) there is a mutual attack between

arg1 and arg2, but notice that, if arg1 = (X

1

1

;X

1

2

) and

arg3 = (X

1

1

;X

1

3

) there are no attacks between these ar-

guments.

2- Mutual Attack (Based on Opposite Premises

within the Same Attribute). For all argi =

((Xk

b

), (X

a

j

)) and arg j = ((Xk

c

), (X

a

j

)) ∈ Ar, where

b ̸= c, we have att(arg

i

, arg

j

) and att(arg

j

, arg

i

). This

specifies a mutual attack when two arguments share

the same conclusion but have premises that include

different values of the same attribute X

k

, such as

X

b

k

and X

c

k

. For instance, if arg1 = (X

1

1

;X

1

2

) and

arg2 = (X

2

1

;X

1

2

) there is a mutual attack between

arg1 and arg2, but notice that, if arg1 = (X

1

1

;X

1

2

) and

arg3 = (X

1

3

;X

1

2

) there are no attacks between these ar-

guments.

3- Single Direction Attack (Based on Conclusion-

Premise Attribute Disagreement). For all argi =

((Xk

a

), (X

b

j

)) and arg j = ((X j

c

), (X

a

k

)) ∈ Ar, where

b ̸= c, we have att(arg

i

, arg

j

). This details a directed

attack where the conclusion of arg

i

containing X

b

j

di-

rectly conflicts with the premise of arg

j

containing

X

c

j

, and both arguments share another attribute X

a

k

that

ties them together. For instance, if arg1 = (X

1

1

;X

1

2

)

and arg2 = (X

2

2

;X

1

1

) there is a single direction attack

between arg1 and arg2.

4- Mutual Attack (Based on Different Class

Attributes). For all argi = ((Xk

a

), (CX

m

)) and

arg j = ((Xk

a

), (CX

n

)) ∈ Ar, where m ̸= n, we have

att(arg

i

, arg

j

) and att(arg

j

, arg

i

). This rule accounts

for a mutual attack based on differing class attributes

CX

m

and CX

n

where m ̸= n, occurring despite sharing

the same attribute Xk

a

in their premises or conclu-

sions. For instance, if arg1 = (X

1

1

;CX

1

) and arg2 =

(X

2

2

;CX

2

) there is a mutual attack between arg1 and

arg2. In constructing the argumentation framework

(AF), four attack rules are defined as the foundation

of the methodology. Rules 1 and 3 correspond to clas-

sical rebuttal and undercut attack types. Attack rule 2

enhances explanation consistency, reduces argument

redundancy, and lowers computational complexity by

simplifying the system architecture through a higher

number of attacks. Attack rule 4, combined with the

mapping procedure, ensures model class consistency

by preventing the coexistence of class-conflicting ar-

ICEIS 2025 - 27th International Conference on Enterprise Information Systems

686

guments. In parallel with the development of associ-

ation rules and argumentation frameworks, machine

learning (ML) predictive models are utilized. Consis-

tent with XARF’s objective of employing a black-box

methodology, any ML model capable of class pre-

diction can generate explanations within XARF. This

study employs seven widely used ML algorithms:

decision trees, random forests, k-nearest neighbors

(KNN), neural networks, logistic regression, support

vector machines (SVM), and naive Bayes. Upon the

establishment of the AF, the next step is to apply ex-

tensions, the selection of which is contingent upon

the dataset context. Extensions can vary from be-

ing skeptical, which may yield fewer but more pre-

cise explanations, to naive, potentially resulting in a

greater quantity of arguments and explanations, al-

beit with potentially less precision. In this research,

the completed, preferred, and grounded extensions

were evaluated. The grounded extension yielded neg-

ligible valid outcomes and was consequently con-

sidered unsuitable for these datasets. On the other

hand, the completed extensions were rejected due to

their propensity for generating redundant extensions.

Thus, for the purposes of this paper, the preferred ex-

tension was selected.

3.4 Mapping

The mapping procedure constitutes a critical compo-

nent of our methodology, systematically associating

each argument of every extension with the current

query (the input data for the prediction model) and

the predicted class. This procedure assigns a score to

every argument and, by extension, to every extension,

thereby identifying the combination that provides the

most cogent explanation.

Definitions

• Query (Q): the current input data for which a pre-

diction is made.

• Predicted Class (C

p

): the class predicted by the

machine learning model for the query Q.

Scoring of Arguments

Each argument arg is evaluated based on its alignment

with the query Q and the predicted class C

p

. The score

of an argument arg can be denoted as score(arg).

• 1- Complete Match with Query

score(arg)+ = 1 if P ⊆ Q and C ⊆ Q

This rule awards a point if all elements of both

premises (P) and conclusions (C) of the argument

match elements in the query.

• 2- Extra Elements in Premises/Conclusions

score(arg)+ = |elements(arg)− 1| if rule 1 is 1

This rule awards additional points for each ele-

ment beyond the first in the premises or conclu-

sions, conditional on rule 1 being positive (1).

• 3- Alignment with Predicted Class

score(arg)+ = 1 if C

p

∈ C

This rule gives an extra point if the predicted class

is part of the argument’s conclusion.

• 4- Disagreement with Predicted Class

score(arg) = 0if exists a C

x

in P or C and C

x

̸= C

p

The score is set to zero if any class attribute in

the premises or conclusions conflicts with the pre-

dicted class.

Extension Scoring and Selection

The score of an extension ext, which is a set of argu-

ments, is the sum of the scores of its arguments:

score(ext) =

∑

arg∈ext

score(arg)

The goal is to identify the extension ext

∗

that max-

imizes score(ext) while providing the most relevant

and understandable explanation:

ext

∗

= argmax

ext

score(ext)

Following the application of the mapping procedure

to AF extensions, with thorough consideration of the

ML predicted class, the system provides the explana-

tion behind the ML’s class prediction relative to the

query. A comprehensive summary of the methodol-

ogy is depicted in the enclosed chart found in (Figure

1), offering a view of the procedural framework and

its implementation.

Figure 1: Methodology of XARF.

4 RESULTS AND ANALYSIS

This section presents the explanations generated

by the XARF framework based on various input

parameters. The focus is not on evaluating machine

learning (ML) models’ performance metrics such as

accuracy or precision but on assessing the quality

and relevance of explanations produced by XARF.

XARF: Explanatory Argumentation Rule-Based Framework

687

Examples are provided across different scenarios,

classes, and datasets. Consider the query with input

parameters [Sun: 1, Wind: 1, Sore Knee: 0], or, for

a more detailed representation [Sun: 1, No Sun: 0,

Wind: 1, No Wind: 0, Sore Knee: 0, Good Knee:

1]. For this query, all seven algorithms unanimously

predicted class 1, representing the Surf activity.

Given the size of the database, only two extensions

were identified as preferred extensions. Among

these, one extension had a uniform score of 1 for

its arguments, whereas the other achieved a score

of 10 for all its arguments. The standout argument

was Argument 16, which is arg16, Premise: ’Wind’,

’Good Knee’, Conclusion: ’Surf’, achieving a score

of 3. This score was attributed to its perfect alignment

with the query (+1), the presence of two elements

in the premise (+1), and its conclusion matching

the predicted class (Surf). This argument logically

correlates with the database, indicating that windy

conditions and the absence of knee soreness, rather

than sunny weather, influenced the ML algorithm’s

prediction favoring Surf as the activity. Had the ML

classification model predicted Fishing for the same

query, a completely different set of arguments and

extensions would have emerged, such as Argument

1, with a premise of ’Fishing’ leading to a conclusion

of ’Sun’, thereby attributing the sunny condition as

a decisive factor in predicting Fishing, according to

XARF’s explanation. Examining a query that elicited

split predictions from the ML classifiers [Sun: 0,

Wind: 1, Sore Knee: 1], or more succinctly: [Sun:

0, No Sun: 1, Wind: 1, No Wind: 0, Sore Knee:

1, Good Knee: 0]. The majority of classifiers (5

out of 7) favored class 0 (Fishing), while Random

Forest and Naive Bayes opted for class 1 (Surf).

For models predicting Fishing, the most robust

extension featured a score of 5, with the higher score

argument, arg9, Premise: ’Sore Knee’, Conclusion:

’Fishing’ with a score 2, indicating that a sore knee

is a deterrence from surfing. Additional arguments

in this extension included correlations between the

absence of sun and wind, and a sore knee, further

supporting the Fishing prediction. Conversely, for

models predicting Surfing, the leading extension

scored 13, highlighted by arg12, Premise: ’No Sun’,

’Wind’, Conclusion: ’Surf’. This implies that the

lack of sunshine combined with windy conditions

were considered significant by the classifiers for a

Surf prediction. These explanations, coherent with

both the database content and the predicted classes,

underscore the capability of XARF to generate

plausible explanations, even when classifiers diverge

in their predictions for the same query. Relating

to the experiments in the Iris dataset, upon the

implementation of the Apriori algorithm and the

formulation of attack relations, the argumentation

framework (AF) for the Iris dataset was meticulously

constructed. Analogously to the Boolean dataset, we

herein exhibit examples of explanations generated

by XARF across different scenarios. Consider a

query with the following characteristics: Sepal length

(cm): 5.4, Sepal width (cm): 3.7, Petal length (cm):

1.5, Petal width (cm): 0.2. For this dataset, all

seven ML predictors accurately classified the query

as class 0, corresponding to the Setosa species.

Among the extensions evaluated, one with a score

of 12 was selected, prominently featuring Argument

5 which is arg5, Premise: ’petal width bin (0.1,

0.5]’, ’petal length bin (1.0, 2.0]’, Conclusion:

’species setosa’. This argument, which aligns with

two premises and the conclusion being the predicted

class, received a score of 3. It underscores the

importance of both petal length and width in deter-

mining the Setosa classification. Another query is

examined: Sepal length (cm): 7.0, Sepal width (cm):

3.2, Petal length (cm): 4.7, Petal width (cm): 1.4.

Here, all algorithms concurred on class 1, Versicolor.

The chosen extension scored 4, highlighting two

arguments, Argument 2 and Argument 3, as equally

significant: arg2, Premise: ’petal length bin (4.0,

5.0]’, Conclusion: ’species versicolor’ arg3,

Premise: ’petal width bin (1.0, 1.5]’, Conclu-

sion: ’species versicolor’ Both arguments are

consistent with the query and show that petal sizes

were the most important attributes for the decision

of ML to assign class Versicolor. A contentious

example involves the query: Sepal length (cm): 5.9,

Sepal width (cm): 3.2, Petal length (cm): 4.8, Petal

width (cm): 1.8. Here, a split in predictions occurred:

Naive Bayes, Logistic Regression, KNN, and Neural

Networks opted for class 2, while Decision Tree,

Random Forest, and SVM selected class 1. For

predictions of class 1, XARF highlighted Argument

2, which is arg2, Premise: ’petal length bin (4.0,

5.0]’, Conclusion: ’species versicolor’, as the sole

explanatory factor with a score of 2. Conversely,

for class 2 predictions, the framework found no

supporting arguments, resulting in an extension score

of zero. Although no explanation was discovered in

this particular instance, the outcome aligns with the

dataset and the predicted class. This underscores the

integrity of the framework, as it avoids creating ex-

planations in the absence of sufficient evidence. The

challenge presented by the query, which divided the

”opinion” of the ML algorithms, highlights its com-

plexity and the difficulty in generating explanations

for such cases. Nevertheless, there is a requirement

for a broader spectrum of arguments and, conse-

ICEIS 2025 - 27th International Conference on Enterprise Information Systems

688

quently, a wider range of possible explanations for

complex queries. This can be addressed by adjusting

the minimum support threshold within the Apriori

algorithm. As mentioned earlier, opting for a lower

minimum support value facilitates the generation of

additional explanations, but this adjustment increases

computational complexity and the risk of overfitting

explanations to the data.

4.1 Quantitative Analysis and

Limitations

This section demonstrates the selected granularity and

provides a quantitative analysis of the results by cal-

culating the fidelity metric for both datasets, each

evaluated at two distinct minimum support thresholds.

Fidelity measures the accuracy with which the expla-

nation approximates the prediction of the underlying

black box model. A high fidelity explanation should

faithfully mimic the behavior of the black box model

(Lakkaraju and Leskovec, 2019). It is quantified as

the percentage of instances where both the Explain-

able AI Framework (XARF) and the black box model

assign the same output class. The formula for calcu-

lating fidelity is as follows:

Fidelity =

Number of Correct Predictions

Total Predictions

× 100

A notable feature of XARF, as delineated by its third

rule, is its assurance of producing explanations that

always align with the class predicted by the ma-

chine learning (ML) model. This characteristic distin-

guishes XARF from other explainable AI (XAI) mod-

els and impacts how fidelity is calculated, particularly

in cases of missing explanations. In practical terms,

the framework is designed to avoid incorrect expla-

nations entirely, potentially achieving a 100% fidelity

score across all ML models if the minimum support

is optimally configured and the instance complexity is

manageable. Conversely, when the minimum support

threshold is set sufficiently high, XARF may fail to

generate any explanations. This is particularly likely

in scenarios where ML models deliver conflicting pre-

dictions, thereby complicating the instance. For our

fidelity assessments, minimum support values of 0.3

and 0.4 were tested for the first dataset, and 0.19 and

0.2 for the second dataset. These values were chosen

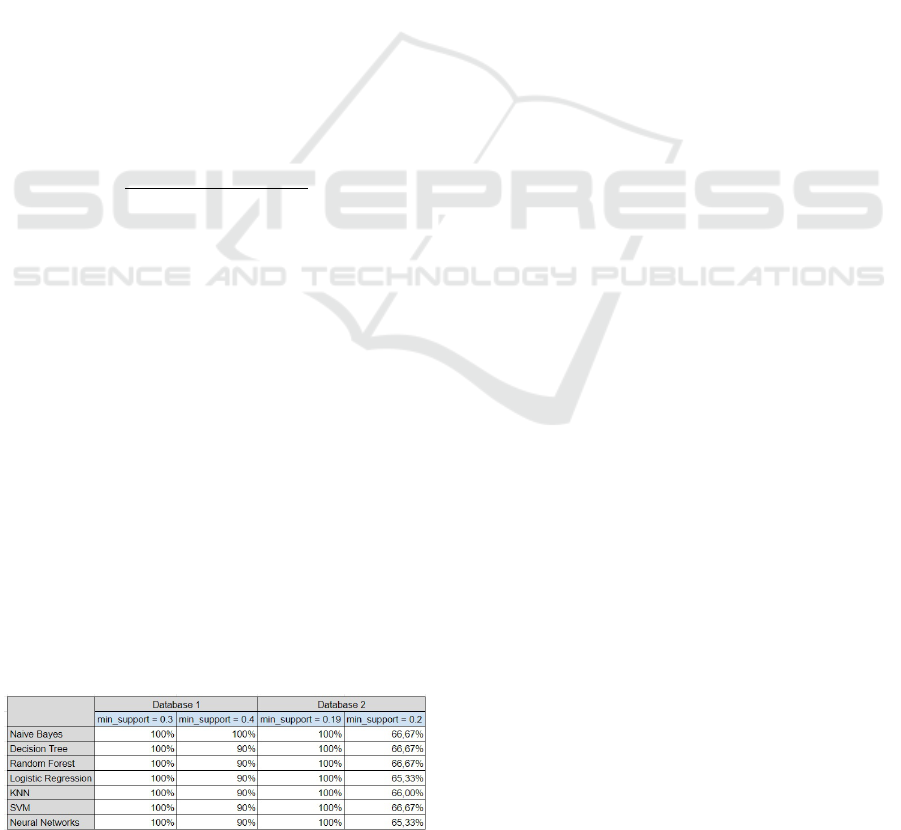

Table 2: Fidelity.

to delineate the boundary between achieving 100% fi-

delity and observing a decline. As observed in Table

2, a fidelity of 100% is achievable provided there are

sufficient arguments to support a specific explanation.

Nonetheless, the system’s fidelity may diminish when

the arguments are insufficient to substantiate the ML

prediction. Moreover, as different ML models find

different predictions, the fidelity could vary. In such

cases, while XARF may provide an explanation for

one predicted class, it may fail to do so for another.

5 CONCLUSION

This paper introduced the Explanatory Argumenta-

tion Rule-based Framework (XARF), a novel ap-

proach in Explainable Artificial Intelligence (XAI)

aimed at explaining the decision-making processes

of machine learning models. Combining argumenta-

tion theory and machine learning, XARF uses a rule-

based methodology to generate clear and understand-

able explanations for predictions made by various

ML algorithms. By applying the Apriori algorithm

and defining attack relations within an argumentation

framework, XARF demonstrated its ability to inter-

pret ML predictions across datasets, including the Iris

dataset. XARF extracts rules from datasets using the

Apriori algorithm, transforming these rules into ar-

guments with premises and conclusions. Its innova-

tive method for defining attack relations enhances the

framework, allowing the application of standard ar-

gumentation extensions like grounded and preferred

semantics. These features improve the interpretabil-

ity of ML models, offering stakeholders valuable in-

sights into AI decision-making. Empirical evalua-

tions using a basic Boolean dataset and the Iris dataset

demonstrated the adaptability of XARF to different

data complexities and machine learning paradigms.

XARF constructs logical arguments that align with

ML predictions, even in cases where traditional ex-

planatory models face challenges. These results high-

light XARF as a valuable tool in the XAI field, ad-

dressing the critical need for transparency and ac-

countability in AI systems. Future research could

refine the rule-mining process, expand compatibility

with additional ML algorithms, and explore applica-

tions in more diverse and complex datasets. A specific

enhancement could involve assigning varying weights

to rules based on application requirements, replac-

ing the current uniform +1 weighting. In conclusion,

XARF represents a significant advancement in XAI,

offering a robust tool for uncovering the rationale

behind machine learning decisions. As it evolves,

XARF: Explanatory Argumentation Rule-Based Framework

689

XARF holds potential for creating more transparent,

trustworthy, and user-focused AI systems.

ACKNOWLEDGEMENTS

Thanks to @manna team, the Arauc

´

aria Foundation

to Support Scientific and Technological Develop-

ment of the State of Paran

´

a (FA) and the National

Council for Scientific and Technological Develop-

ment (CNPq) - Brazil process 421548/2022-3 for the

support.

REFERENCES

Achilleos, K. and Pattichis, C. (2020). Extracting explain-

able assessments of alzheimer’s disease via machine

learning on brain mri imaging data. In Proceed-

ings of the 2020 IEEE 20th International Conference

on Bioinformatics and Bioengineering (BIBE), pages

1036–1041. IEEE.

Albini, E. and Tintarev, N. (2020). Deep argumentative ex-

planations. arXiv preprint arXiv:2012.05766.

Amgoud, L. and Ben-Naim, J. (2018). Weighted bipo-

lar argumentation graphs: Axioms and semantics. In

Proceedings of the Twenty-Seventh International Joint

Conference on Artificial Intelligence (IJCAI), pages

5194–5198.

Arrieta, A. and Herrera, F. (2020). Explainable artificial in-

telligence (xai): Concepts, taxonomies, opportunities

and challenges toward responsible ai. Information Fu-

sion, 58:82–115.

Cocarascu, Oana, S. and Toni, F. (2020). Data-empowered

argumentation for dialectically explainable predic-

tions. In Proceedings of the ECAI 2020, pages 2449–

2456. IOS Press.

Dondio, P. (2021). Towards argumentative decision graphs:

Learning argumentation graphs from data. In Pro-

ceedings of the AI³@AI IA.

Dung, P. M. (1995). On the acceptability of arguments and

its fundamental role in nonmonotonic reasoning, logic

programming and n-person games. Artificial Intelli-

gence, 77(2):321–357.

Fan, Chao, X. and Wang, F.-Y. (2021). On interpretability

of artificial neural networks: A survey. IEEE Trans-

actions on Neural Networks and Learning Systems,

32(11):4793–4813.

Gianini, G. and Portier, P.-E. (2024). Advances in Explain-

able Artificial Intelligence. Springer.

Jha, Rakesh, B. F. and Toni, F. (2020). Formal verification

of debates in argumentation theory. In Proceedings

of the 35th Annual ACM Symposium on Applied Com-

puting, pages 940–947.

Lakkaraju, Himabindu, K. and Leskovec, J. (2019). Faithful

and customizable explanations of black box models.

In Proceedings of the 2019 AAAI/ACM Conference on

AI, Ethics, and Society, pages 131–138.

Linardatos, Pantelis, P. V. and Kotsiantis, S. (2021). Ex-

plainable ai: A review of machine learning inter-

pretability methods. Entropy, 23(1):18.

Lu, Hong, e. a. (2018). Brain intelligence: Go beyond arti-

ficial intelligence. Mobile Networks and Applications,

23:368–375.

Potyka, N. (2021). Interpreting neural networks as quanti-

tative argumentation frameworks. In Proceedings of

the AAAI Conference on Artificial Intelligence, pages

6463–6470.

Potyka, Nils, Y. and Toni, F. (2022). Explaining random

forests using bipolar argumentation and markov net-

works. arXiv preprint arXiv:2211.11699.

Samek, Wojciech, M. and M

¨

uller, K.-R. (2021). Ex-

plainable AI: Interpreting, Explaining and Visualizing

Deep Learning. Springer Nature.

Tjoa, E. and Guan, C. (2020). A survey on explainable ar-

tificial intelligence (xai): Toward medical xai. IEEE

Transactions on Neural Networks and Learning Sys-

tems, 32(11):4793–4813.

Van Lente, Jacob, B.-A. and Sarkadi, S. (2022). Everyday

argumentative explanations for classification. Argu-

mentation & Machine Learning, 3208:14–26.

Vassiliades, Alexander, B. and Patkos, T. (2021). Argumen-

tation and explainable artificial intelligence: A survey.

The Knowledge Engineering Review, 36:e5.

ICEIS 2025 - 27th International Conference on Enterprise Information Systems

690