An Online Integrated Development Environment for Automated Programming Assessment Systems

Eduard Frankford¹, Daniel Crazzolara¹, Michael Vierhauser¹, Niklas Meißner², Stephan Krusche³ and Ruth Breu¹

¹ University of Innsbruck, Department of Computer Science, Austria

² University of Stuttgart, Institute of Software Engineering, Germany

³ Technical University of Munich, School of Computation, Information and Technology, Germany

{eduard.frankford, daniel.crazzolara, michael.vierhauser, ruth.breu}@uibk.ac.at,

Keywords:

Teaching Tools, Automated Programming Assessment Systems, Integrated Development Environments,

Artemis, Advanced Learning Technologies.

Abstract:

The increasing demand for programmers has led to a surge in participants in programming courses, making it

increasingly challenging for instructors to assess student code manually. As a result, automated programming

assessment systems (APASs) have been developed to streamline this process. These APASs support lecturers by managing and evaluating student programming exercises at scale. However, these tools often do not provide online editors that are as feature-rich as their traditional integrated development environment (IDE) counterparts. The absence of key features, such as syntax highlighting and autocompletion, can negatively

impact the learning experience, as these tools are crucial for effective coding practice. To address this gap,

this research contributes to the field of programming education by extracting and defining requirements for an

online IDE in an educational context and presenting a prototypical implementation of an open-source solution

for a scalable and secure online IDE. The usability of the new online IDE was assessed using the Technology

Acceptance Model (TAM), gathering feedback from 27 first-year students through a structured survey. In

addition to these qualitative insights, quantitative measures such as memory (RAM) usage were evaluated to

determine the efficiency and scalability of the tool under varying usage conditions.

1 INTRODUCTION

Students’ rapidly growing interest in learning pro-

gramming has caused an increased adoption of

APASs to manage and evaluate student exercises effi-

ciently (Tarek et al., 2022). An important aspect con-

cerning the effectiveness of these systems is the abil-

ity to write code without downloading and installing

an IDE on the students’ computer (Horváth, 2018) (España-Boquera et al., 2017). With the absence of

an online IDE, APASs, in such cases, often require

students to use a version control system (VCS), like

GIT, to submit their code. This is because APASs

commonly use update events triggered by the VCS

to execute a continuous integration (CI) pipeline and

then automatically run pre-defined test cases on the

students’ code. The results of these tests are then

used for assessment and are provided to the students

as feedback.

One example of an APAS is the interactive learn-

ing platform Artemis (Krusche and Seitz, 2018) from

the Technical University of Munich, which evalu-

ates and assesses students’ programming exercises

and provides feedback to the students. Artemis in-

cludes an online editor, but students can also submit

their programming assignments via a GIT repository.

Automated tests are then carried out on the students’

code in the repository, and the results are returned.

To avoid students also having to learn a VCS

while learning programming, some APASs have in-

troduced built-in online editors, allowing students to

complete exercises directly within the platform (Krusche and Seitz, 2018) (Mekterović et al., 2020).

However, these online editors typically lack the com-

prehensive set of features commonly found in a mod-

ern desktop IDE. For example, syntax highlighting,

auto-completion, and an integrated terminal are of-

ten missing. This gap in functionality can slow down

the learning process and increase the entry barriers

for students new to programming (Leisner and Brune,


Frankford, E., Crazzolara, D., Vierhauser, M., Meißner, N., Krusche, S. and Breu, R.

An Online Integrated Development Environment for Automated Programming Assessment Systems.

DOI: 10.5220/0013203000003932

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 1, pages 48-59

ISBN: 978-989-758-746-7; ISSN: 2184-5026

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2019). An online IDE embedded in an APAS can

support students in independently identifying issues

and correcting errors in beginner programming. Na-

tive IDEs also support students with those features,

but often student access to these native IDEs can-

not be guaranteed due to the students’ different back-

grounds, resources, and technical equipment. There-

fore, a feature-rich online IDE that can be accessed

within a browser is a good way to ensure that every

student has the same conditions and equal opportunities. The need for software engineering (SE) tools integrated into learning platforms, such as an online IDE for APASs, has also been identified in previous studies, which underlines the need for their development (Meißner et al., 2024).

To address these challenges, we found that an

online IDE should at least incorporate several key

features like syntax highlighting, auto-completion,

and syntax error highlighting. Additionally, students should be able to compile and run code directly in the APAS without submitting it, because every submission triggers the execution of the test cases and thus causes significant overhead.

While one might argue that this excessive tooling

could inhibit the memorization of basic syntax, we

believe this concern does not apply to programming

education. Programming syntax is highly language-

specific and does not necessarily translate to an un-

derstanding of the broader concepts essential for pro-

gramming. For instance, focusing on learning the

syntax of a single language by rote could lead to diffi-

culties when transitioning to other languages. Instead,

our position is that tools like syntax highlighting and

code completion enable students to focus on solving

problems and developing algorithms, which are fun-

damental skills in programming. These tools help stu-

dents identify errors and improve their code quality,

ultimately strengthening their conceptual understand-

ing rather than detracting from it.

The primary goal of this work is to further investi-

gate and address the mentioned challenges by explor-

ing the following research questions:

• RQ1: What are the primary challenges and neces-

sary architectural considerations for developing a

feature-rich online IDE for APASs?

• RQ2: What are students’ perceptions of the usabil-

ity and usefulness of the new online IDE?

We conducted a comprehensive study involving

several key steps to address these research questions.

First, we gathered and defined key requirements for

an online IDE in an educational context. Based on

these requirements, we designed and implemented

a prototypical open-source solution to be integrated

with an APAS. Finally, we evaluated the implementa-

tion by conducting user studies to assess its effective-

ness and gather student feedback.

Our evaluation shows that the implemented online

IDE effectively addresses the identified challenges

and is positively received by students, enhancing their

learning experience. Through this study, we aim to

contribute to the field of programming education by

providing insights into developing and integrating an

effective online IDE within an APAS and presenting

how the students perceive and utilize the tool.

The remainder of this paper is structured as fol-

lows: Section 2 provides an overview of related work.

Section 3 elaborates on the requirements an online

IDE should fulfill. Section 4 then presents details

about the prototypical implementation of the online

IDE, which we evaluate in Section 5. In Section 6,

we present the evaluation results and a respective dis-

cussion. Section 7 outlines potential threats to valid-

ity, and we conclude in Section 8, reflecting on the

broader implications of this work.

2 RELATED WORK

Web-based IDEs are gaining popularity with both

open-source and commercially hosted options becom-

ing more widely available. For instance, (Wu et al.,

2011) introduced CEclipse, an online IDE designed

for cloud-based programming. With CEclipse, the au-

thors have focused on implementing an online IDE

with functions similar to Eclipse’s. However, since

2011, Eclipse has been developed further with new

helpful functions, rendering CEclipse obsolete. In ad-

dition, the authors have mainly focused on program

analysis issues to ensure security and analyze pro-

gram behavior to improve programmer efficiency. In

contrast, the approach presented in this paper focuses

on a high-performance online IDE designed explicitly

for APASs.

More recently, (Trachsler, 2018) unveiled

WebTigerJython, a web-based programming IDE for

Python, featuring a clean interface and a textual code

editor. However, the author focused on computer

science courses at Swiss schools in his master’s

thesis. The requirements for this online IDE differed

from ours, as the author had to ensure programming

on a tablet and, therefore, only implemented basic

functionalities for developing and executing Python

programs in the browser. Compared to our work, the

thesis did not focus on integrating APASs.

(Pawelczak and Baumann, 2014) implemented

Virtual-C to teach C programming with visualization

tools to help students understand programming con-

cepts. Their work integrated live coding functions in

the lectures for programming assignments and self-


learning functions. However, the authors of Virtual-

C only focused on a programming environment for a

C-programming course. Therefore, the general appli-

cability in other programming languages is question-

able. Also, since the paper was published in 2014, the

enhancements to now common IDE features are not

integrated into Virtual-C, making it outdated.

(Bigman et al., 2021) presented PearProgram, a

learning tool for introductory computer science stu-

dents. Their work focuses on pair programming ac-

tivities in a remote learning setting. While PearPro-

gram provides a code editor, its features are limited

and focus on teaching the principles of pair program-

ming rather than on programming itself, as we focus

on in this paper.

Similarly to PearProgram, CodeHelper by (Liu

and Woo, 2021) is a lightweight IDE facilitating on-

line pair programming in C++ courses. While Code-

Helper also targets pair programming in education,

the authors focused more on implementing and in-

tegrating CodeHelper but did not evaluate their ap-

proach. Furthermore, since the tool was created fo-

cusing on C++ courses, the general applicability in

other programming languages remains uncertain.

(Tran et al., 2013) implemented an online IDE

called IDEOL that leverages cloud computing to fa-

cilitate real-time collaborative coding and code exe-

cution. By shifting the heavy IDE cores to the cloud,

IDEOL offers benefits such as work mobility, device

independence, and lower local resource consumption.

However, its features only focus on creating an in-

teractive and responsive environment where real-time

guidance, communication, and collaboration can be

delivered.

Another notable web-based solution is MOC-

SIDE, which offers a coding environment with auto-

grading capabilities (Barlow et al., 2021). However,

there is no documentation of the supported IDE fea-

tures in its editor, and the open-source GitHub project

seems not to exist anymore.

The inclusion of commercial options like Repl.it¹, GitHub Codespaces², and Gitpod³ reveals an even

broader spectrum of web-based IDEs with extensive

feature support. In a more detailed analysis of com-

mercial online IDEs, we found that most of them

use the open-source editor Eclipse Theia⁴. However,

there are few studies on the integration of Theia in

APASs. An analysis of its performance in Section 4

also revealed significant concerns about using Theia

in APASs.

¹ https://replit.com

² https://github.com/features/codespaces

³ https://www.gitpod.io

⁴ https://github.com/eclipse-theia/theia

Several APASs also introduced basic online edi-

tors to assist students with their submissions and gen-

eral coding experiences. Notable platforms include

Edgar (Mekterović et al., 2020), Fitchfork (Pieterse, 2013), CodeRunner (Lobb and Harlow, 2016), CodeBoard (Truniger, 2023), CodeOcean (Staubitz et al., 2016), PythonTutor (Guo, 2013), and Artemis (Krusche and Seitz, 2018). However, these platforms typically offer only the basic IDE features that come out of the box with embedded editors such as ACE⁵, Monaco Editor⁶, and CodeMirror⁷. They therefore lack many of the more advanced IDE features, such as advanced code completion, syntax error highlighting, an integrated terminal, or a debugger, which are normally available in native IDEs and commercial online IDEs.

Through the analysis of related work, we found

that, up until now, there has still been a noticeable gap

in the current capabilities provided by online IDEs

used in APASs. While existing commercial online

IDEs generally perform well in providing platforms

for coding, they largely do not focus on teaching pro-

gramming skills or evaluating coding exercises in an

educational context. On the other hand, APASs do

provide support for teaching coding and the assess-

ment of programming exercises. Still, they often lack

comprehensive IDE features such as advanced code

completion, syntax error highlighting, and execution

capabilities commonly found in commercial online

IDEs. Thus, this study aims to bridge this gap by

explicitly designing an online IDE for APASs and its

prototypical implementation to evaluate the approach.

3 REQUIREMENTS FOR ONLINE IDEs IN PROGRAMMING EDUCATION

To create an online IDE tailored to APASs, we must

first define the relevant requirements that must be ful-

filled. Thus, we used an anonymous online question-

naire to conduct a quantitative and qualitative analysis

of students’ requirements.

3.1 Requirements Engineering Process

To identify relevant requirements for online IDEs in

APASs, we conducted an in-depth analysis of state-

of-the-art desktop IDEs such as Visual Studio⁸, IntelliJ IDEA⁹, and Eclipse¹⁰. This analysis aimed to identify key features that contribute to their effectiveness and popularity among developers.

⁵ https://ace.c9.io

⁶ https://github.com/microsoft/monaco-editor

⁷ https://codemirror.net

⁸ https://code.visualstudio.com/

In a second step, to ensure that all requirements

for an IDE in the educational context are covered, we

asked 48 APAS users from three different Austrian

universities: (1) Paris Lodron University Salzburg, (2)

Johannes Kepler University Linz, and (3) University

of Innsbruck to evaluate and rate the importance of

the collected IDE features and, if needed, to provide

additional features they perceive as important for an

online IDE.

The survey respondents were predominantly first-

semester students (n=35), with three participants each

in their second and third semesters. One participant

was in their fifth semester, one in the sixth, and one in

the seventh semester. Four respondents did not spec-

ify their semester.

The link to the survey containing the following

questions was displayed on the login page of the

Artemis platform:

• P1. In which semester are you currently enrolled?

• P2. In how many university courses (including

current ones) have you used Artemis?

• P3. How well do you know GIT commands and

functionality? (Single choice regarding five op-

tions ranging from “not at all familiar” to “very

familiar”)

• P4. What tool did you primarily use to solve pro-

gramming exercises in Artemis? (Single choice

regarding five options ranging from “online editor

only” to “local IDE only”)

• Rate the importance of the following IDE features

(Single choice on a five-point scale ranging from

“Very Important” to “Very Unimportant”)

– F0. Syntax Highlighting - uses colors to differ-

entiate various components of the source code.

– F1. Debugger / Breakpoints - enables pausing

code execution at specific lines to inspect vari-

ables and program state.

– F2. Auto-completion / Code Suggestions - of-

fers possible code completions or suggestions

based on the context of the typed code.

– F3. Syntax Error Highlighting - marks parts of

the code that may lead to syntax errors, aiding

in debugging.

– F4. Brace Matching Highlighting - highlights

corresponding braces in the code, helping to vi-

sualize scope boundaries.

⁹ https://www.jetbrains.com/idea/

¹⁰ https://www.eclipse.org/downloads/

– F5. Key Shortcuts - includes shortcuts for com-

mon actions like copying, pasting, cutting, and

searching.

– F6. Compiler / Interpreter (Run Button) - al-

lows you to compile and run code without exe-

cuting tests.

– F7. Dedicated Shell Console - provides access

to a console where you can execute commands

and run code manually with custom options.

• O1. Do you have any additional suggestions for

improving the code editor?

• O2. Are there any other critical features a code

editor should have to better support your coding

needs?

Additionally, for each feature question, we dis-

played an image of the feature when used in a tra-

ditional IDE to make sure that all students knew what

the features were about.

3.2 Gathered Requirements

The preliminary questions P1-P4 revealed that the majority of respondents are not familiar with GIT commands (29% “Not at all familiar”, 27% “Slightly familiar”, 17% “Neutral”, 23% “Moderately familiar”, and only 4% “Very familiar”). Nevertheless, more than half of the students (58%) mostly use local IDEs and GIT to solve the exercises, indicating that the available online editor is not feature-rich enough to complete an exercise there adequately.

Based on the feature questions (F0-F7), and by mapping the answer option “Very Important” to 1 and “Very Unimportant” to 5, we were able to order the requirements by importance. The results are shown in Table 1.

Table 1: Mean Importance of IDE Features (Ordered).

| Feature | Mean Importance |
| --- | --- |
| Syntax Highlighting | 1.56 |
| Syntax Error Highlighting | 1.69 |
| Compiler / Interpreter (Run Button) | 2.03 |
| Auto-completion / Code Suggestions | 2.06 |
| Debugger / Breakpoints | 2.08 |
| Brace Matching Highlighting | 2.19 |
| Dedicated Shell Console | 2.44 |
| Key Shortcuts | 2.47 |
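The ranking procedure described above (map each answer to a number, average per feature, sort ascending) can be sketched as follows. This is only an illustration: the survey text names just the two endpoint labels, so the three intermediate labels are assumptions, and the responses are hypothetical toy values rather than the study data.

```python
from statistics import mean

# Only "Very Important" and "Very Unimportant" are named in the survey
# description; the three labels in between are assumed for illustration.
SCALE = {
    "Very Important": 1,
    "Important": 2,
    "Neutral": 3,
    "Unimportant": 4,
    "Very Unimportant": 5,
}


def rank_features(responses):
    """Return (feature, mean score) pairs, most important (lowest mean) first."""
    means = {
        feature: round(mean(SCALE[answer] for answer in answers), 2)
        for feature, answers in responses.items()
    }
    return sorted(means.items(), key=lambda item: item[1])


# Hypothetical toy answers, NOT the responses collected in the study.
toy_responses = {
    "Key Shortcuts": ["Neutral", "Important", "Unimportant"],
    "Syntax Highlighting": ["Very Important", "Important", "Very Important"],
}
ranking = rank_features(toy_responses)
```

A lower mean corresponds to a higher perceived importance, which is why the list is sorted in ascending order.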

Additionally, the open questions (O1 and O2) re-

vealed that students value the following features:

• Presentation Mode. The IDE should include a

presentation mode that rearranges the UI for better

display of solutions in a classroom setting.


• Integrated Console Output Pane. To efficiently

monitor the code output, the IDE should incorpo-

rate a console output pane within its interface.

• Resizable Editor’s Pane. The IDE should allow

users to resize the editor’s pane, offering flexibil-

ity in managing the workspace according to their

preferences.

• Mobile Device Compatibility. The IDE should be

responsive for mobile devices, ensuring accessibil-

ity and usability on various devices.

• Multiple File Handling. It should support opening

and working with multiple files simultaneously, al-

lowing for more complex project development.

Additionally, two requirements, based on the

setup at the University of Innsbruck, include:

• Compatibility with the APAS Artemis. The IDE

must be fully compatible with the Artemis learning

platform, ensuring seamless integration and inter-

action to provide a cohesive user experience.

• Low Resource Usage. Given observed peaks of

more than 500 weekly active users in the past three

years on the Artemis platform, it is essential that

the new IDE solution uses a limited amount of

memory and is capable of scaling resources on-

demand to handle exam situations.

In conclusion, the requirements for the online IDE encompass both usability features and scalability considerations, reflecting the needs of an educational environment with varying levels of user engagement.

4 REFERENCE IMPLEMENTATION

After gathering key requirements, the focus shifted to-

wards evaluating existing solutions for the potential

integration into Artemis. For this phase, we leveraged

results from a previous study, where we conducted

a systematic tool review of online IDEs (Frankford

et al., 2024). As part of this, we assessed and ex-

tracted the set of supported features and programming

languages and evaluated the availability of these tools

as open-source solutions to ensure the source code is

accessible and enables easy integration into APASs.

The main findings were that existing online editors in

APASs only support partial feature sets. The Artemis

code editor, for example, already fulfills the follow-

ing requirements: (1) Syntax Highlighting, (2) Syntax

Error Highlighting, (3) Multiple File Handling, and

(4) Low Resource Usage. However, commercial on-

line IDEs, like Gitpod and Codespaces, support all

IDE features as defined in the requirements specifica-

tion. Still, we cannot access the commercial options’

code bases, making integration impossible.

As discussed in Section 2, we found that most of

the online IDEs use the Eclipse Theia editor, which is

publicly available and open-source. Consequently, in-

tegrating Theia into the Artemis platform was consid-

ered a viable option. However, a closer examination

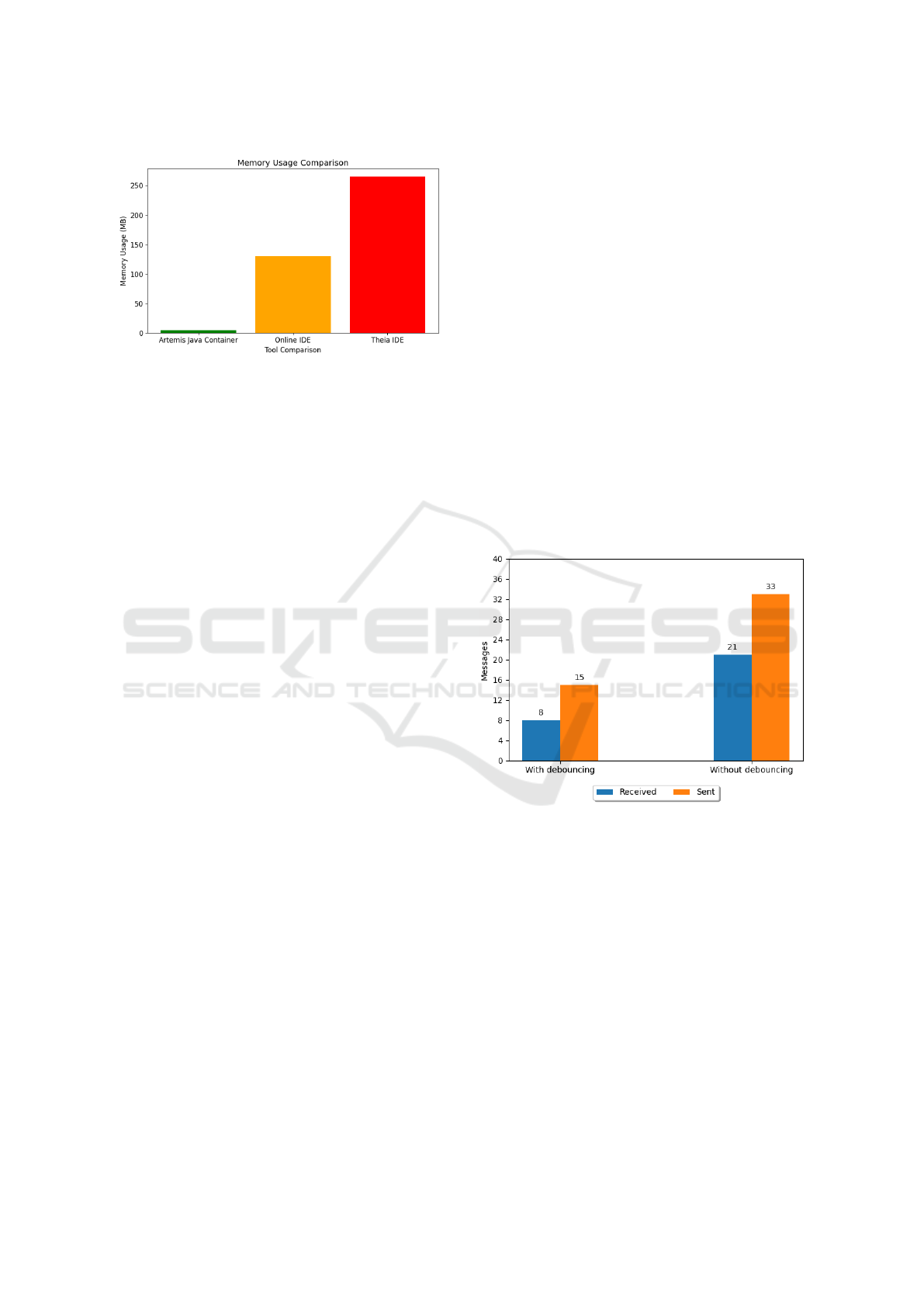

of its memory usage revealed that Theia consumes too

many resources, given that Artemis should be able to

serve hundreds of users simultaneously. This increase

in memory consumption, particularly during exam

periods, where peaks are expected, raised concerns

about Theia’s feasibility. Even without Java plugins,

the containerized Theia IDE exhibited a significantly

larger memory footprint (approximately 135 MB)

than Artemis’ base Java Docker container. Adding

Java plugins, essential for Java IntelliSense features,

almost doubled the memory requirement to about 265

MB.

This observation led to the conclusion that Theia,

in its entirety, is not a feasible solution for online IDEs

in the educational sector where resources are limited.

To mitigate this issue, the focus shifted to enhancing

Artemis’ native code editor.

One reason for Theia’s high memory requirements is that it runs a language server, which provides the IDE features via the language server protocol (LSP), directly within the IDE. We therefore extracted this component and use one language server for multiple user sessions, which significantly decreases memory usage. Because the language server exposes a set of key performance metrics, namely (1) Average Load, (2) CPU Usage, (3) Total Memory, (4) Free Memory, and (5) Number of Active Sessions, we were additionally able to implement a load-balancing mechanism that always selects the language server with the least workload. As a result, we were able to address the resource concerns effectively. Figure 1 compares the memory usage of the Artemis online editor without IDE features, the editor with IDE features (named online IDE), and the Theia online IDE as a reference.
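A least-workload selection over the five reported metrics could look like the following minimal sketch. The paper does not specify how the metrics are combined, so the `workload` formula, its weights, and all names here are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ServerMetrics:
    """The five metrics reported by a language server (field names assumed)."""
    url: str
    average_load: float
    cpu_usage: float       # fraction between 0 and 1
    total_memory: int      # bytes
    free_memory: int       # bytes
    active_sessions: int


def workload(m):
    """Hypothetical score; higher means busier. The weighting is an assumption."""
    memory_used = 1.0 - m.free_memory / m.total_memory
    return m.average_load + m.cpu_usage + memory_used + 0.01 * m.active_sessions


def pick_server(servers):
    """Select the language server with the lowest workload score."""
    return min(servers, key=workload)


servers = [
    ServerMetrics("ws://lsp-a", 0.5, 0.4, 8_000, 2_000, 20),
    ServerMetrics("ws://lsp-b", 0.2, 0.1, 8_000, 6_000, 5),
]
chosen = pick_server(servers)
```

In this toy example the second server is selected because it scores lower on every metric; a real deployment would need to tune or replace the scoring function.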

The VCS service in Artemis is also integral to our

solution, serving as the central element for storing and

distributing exercise data between Artemis and the

LSP servers. This centralized VCS ensures consistent

synchronization across instances. If a user session is

closed, it can be reopened on a different LSP server,

with the central VCS maintaining data coherence.

Figure 1: RAM comparison of the different IDEs.

Incorporating data cloning for each user session into the LSP server’s workflow inevitably leads to an escalation in disk space usage. This necessitates regular cleanups to eliminate data that is only temporarily utilized. We have integrated an automated cleanup system within each LSP server to streamline this process. This system checks every five minutes to identify inactive sessions, defined as those with no LSP messages or file-modifying activity in the past 60 minutes. Subsequently, it removes them along with their corresponding files.
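The cleanup rule described above can be sketched as follows. The two intervals come from the text; the data structures (a dictionary of last-activity timestamps and a dictionary of working directories) and all function names are assumptions, not the actual implementation.

```python
import shutil
import time

CHECK_INTERVAL_S = 5 * 60       # the system checks every five minutes
INACTIVITY_LIMIT_S = 60 * 60    # sessions idle for 60 minutes count as inactive


def remove_inactive_sessions(last_activity, workdirs, now):
    """Delete the files of idle sessions and return the removed session ids.

    last_activity maps a session id to the timestamp of its latest LSP
    message or file modification; workdirs maps a session id to its
    cloned exercise directory. Both structures are hypothetical.
    """
    expired = [
        sid for sid, ts in last_activity.items()
        if now - ts >= INACTIVITY_LIMIT_S
    ]
    for sid in expired:
        shutil.rmtree(workdirs.pop(sid), ignore_errors=True)
        del last_activity[sid]
    return expired


def run_cleanup_loop(last_activity, workdirs):
    """Periodic driver; runs until the process is stopped."""
    while True:
        remove_inactive_sessions(last_activity, workdirs, time.time())
        time.sleep(CHECK_INTERVAL_S)
```

Passing `now` explicitly keeps the expiry logic easy to test without waiting for real time to pass.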

Another key requirement, the integrated terminal, introduces various security challenges due to the potential execution of arbitrary code and the risk of users trying to access other files by traversing the directories. We mitigate this threat by starting a new Docker container for each integrated terminal, so that user code runs in a controlled environment that is isolated from the host system.

To conserve resources and prevent unnecessary server load, terminal sessions do not start automatically but are initiated only upon user request.
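One way to start such a per-terminal container on request is to assemble a `docker run` invocation with resource and capability restrictions. The sketch below only builds the argument list. The specific limits, the disabled network, the container naming scheme, and the mount point are assumptions for illustration, since the concrete restrictions used in the prototype are not listed here.

```python
def terminal_container_command(session_id, image, workdir):
    """Build a `docker run` argument list for one throwaway terminal container.

    All limits below are illustrative assumptions, not the values
    used by the prototype.
    """
    return [
        "docker", "run",
        "--rm",                      # remove the container when it exits
        "--interactive", "--tty",    # attach an interactive terminal
        "--name", f"terminal-{session_id}",
        "--network", "none",         # assumed: no network access
        "--memory", "256m",          # assumed memory cap
        "--cpus", "0.5",             # assumed CPU cap
        "--pids-limit", "128",       # assumed process limit
        "--cap-drop", "ALL",         # drop all Linux capabilities
        "--volume", f"{workdir}:/workspace",
        "--workdir", "/workspace",
        image,
        "/bin/bash",
    ]


command = terminal_container_command("abc123", "example/exercise-image", "/tmp/sessions/abc123")
```

Disabling the network is a strict choice that would, for example, prevent a build tool from downloading dependencies at run time, so it would have to be relaxed or combined with a pre-populated image.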

In addition to cleaning exercise data, we have also

implemented a process for managing Docker contain-

ers spawned during sessions. While idle contain-

ers generally have minimal impact on resource usage

and memory footprint, accumulating such containers

could lead to inefficient resource utilization. This

cleanup process is synchronized with the termination

of the related web socket connection to improve re-

source management efficiency. Consequently, when a

connection is closed or disrupted, and a container is

no longer in use, its resources are released.

Analyzing the interaction between the new online

IDE and the LSP server, we observed a substantial

exchange of WebSocket messages during user ses-

sions. This high communication volume is inherent

to the LSP and its language servers, which need real-

time updates with every user interaction, such as typ-

ing or hovering over code elements, to provide en-

hanced IDE features. Despite the relatively small size

of JSON-RPC messages, the sheer frequency and vol-

ume can impose a substantial load on the server when

multiplied by the concurrent user count.

To address this challenge, we have employed cus-

tom debouncing techniques within Artemis. De-

bouncing is a method that throttles the rate of oper-

ations, in this case, message transmissions. It works

by postponing message dispatch until a certain pe-

riod of inactivity has passed. For operations such as

code lenses, documentation on hover, or code com-

pletion, we introduced a delay of one second, while

file change actions were assigned a two-second delay.
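The debouncing behavior described above can be illustrated with a small, clock-driven sketch. The one-second and two-second delays come from the text; the class, the message kind names, and the use of explicit millisecond timestamps instead of real timers are assumptions chosen to keep the example deterministic.

```python
# Delays in milliseconds; the kind names are hypothetical labels.
DELAYS_MS = {"codeLens": 1000, "hover": 1000, "completion": 1000, "fileChange": 2000}


class Debouncer:
    """Holds back messages until no newer message of the same kind arrived."""

    def __init__(self, delays_ms):
        self.delays_ms = delays_ms
        self.pending = {}  # kind -> (deadline in ms, latest payload)

    def submit(self, kind, payload, now_ms):
        """Replace any pending message of this kind and restart its timer."""
        self.pending[kind] = (now_ms + self.delays_ms[kind], payload)

    def due(self, now_ms):
        """Return and remove the messages whose quiet period has elapsed."""
        ready = [
            (kind, payload)
            for kind, (deadline, payload) in self.pending.items()
            if deadline <= now_ms
        ]
        for kind, _ in ready:
            del self.pending[kind]
        return ready


# Five keystrokes, 200 ms apart, each producing a file change notification.
debouncer = Debouncer(DELAYS_MS)
for i, text in enumerate(["H", "He", "Hel", "Hell", "Hello"]):
    debouncer.submit("fileChange", text, now_ms=i * 200)

early = debouncer.due(now_ms=1000)   # quiet period not yet over
late = debouncer.due(now_ms=2800)    # two seconds after the last keystroke
```

Here five notifications collapse into a single message carrying the latest content, which is the effect that reduces the traffic towards the language server.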

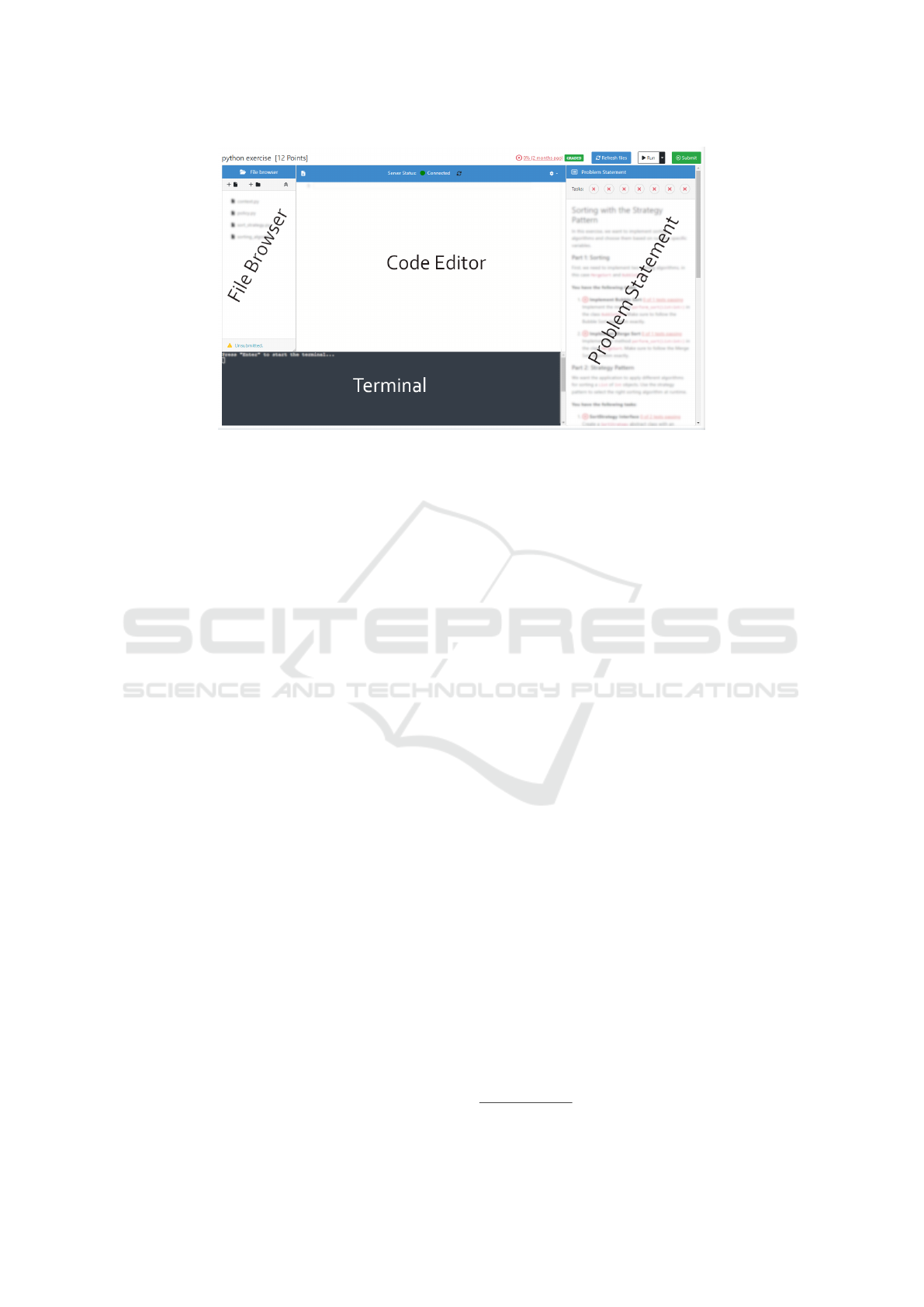

The impact of this debouncing approach was eval-

uated through an experimental comparison. The ex-

periment involved a user typing “Hello world!” in a

Java exercise file on Artemis and measuring the message traffic with and without debouncing. The results, illustrated in Figure 2, show that debouncing reduced the number of messages sent by approximately 43% without negatively impacting the user experience. This demonstrates the effectiveness of the technique in mitigating performance issues in an online IDE environment.

Figure 2: Impact of debouncing on the number of received

and sent messages.

An additional ’run’ button enhances the user inter-

face of the web IDE, offering an automated execution

of predefined command sequences with a single click.

This sequence is designed to terminate any ongoing

processes, clear the terminal, navigate to the relevant

directory, and execute language-specific commands,

such as mvn clean test for Java (Maven), or pytest

for Python. This functionality replaces the need for

a ’stop’ button by allowing users to terminate and

restart terminal sessions at will, thus addressing po-

tential session freezes or faults.
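The command sequence behind the run button can be sketched as a list of terminal inputs. The two language-specific commands are taken from the text; sending Ctrl-C to stop a running process, the `clear` and `cd` commands, and the function name are assumptions, and the command for C exercises is left out because it is not given.

```python
import shlex

# Language-specific commands named in the text; other languages are omitted.
RUN_COMMANDS = {"java": "mvn clean test", "python": "pytest"}

CTRL_C = "\x03"  # assumed way of terminating an ongoing process


def run_sequence(language, exercise_dir):
    """Return the terminal inputs sent when the run button is clicked."""
    return [
        CTRL_C,                               # terminate ongoing processes
        "clear",                              # clear the terminal
        f"cd {shlex.quote(exercise_dir)}",    # navigate to the exercise
        RUN_COMMANDS[language],               # run the language-specific command
    ]


sequence = run_sequence("java", "/home/user/exercise 1")
```

Quoting the directory with `shlex.quote` guards against paths that contain spaces or shell metacharacters.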

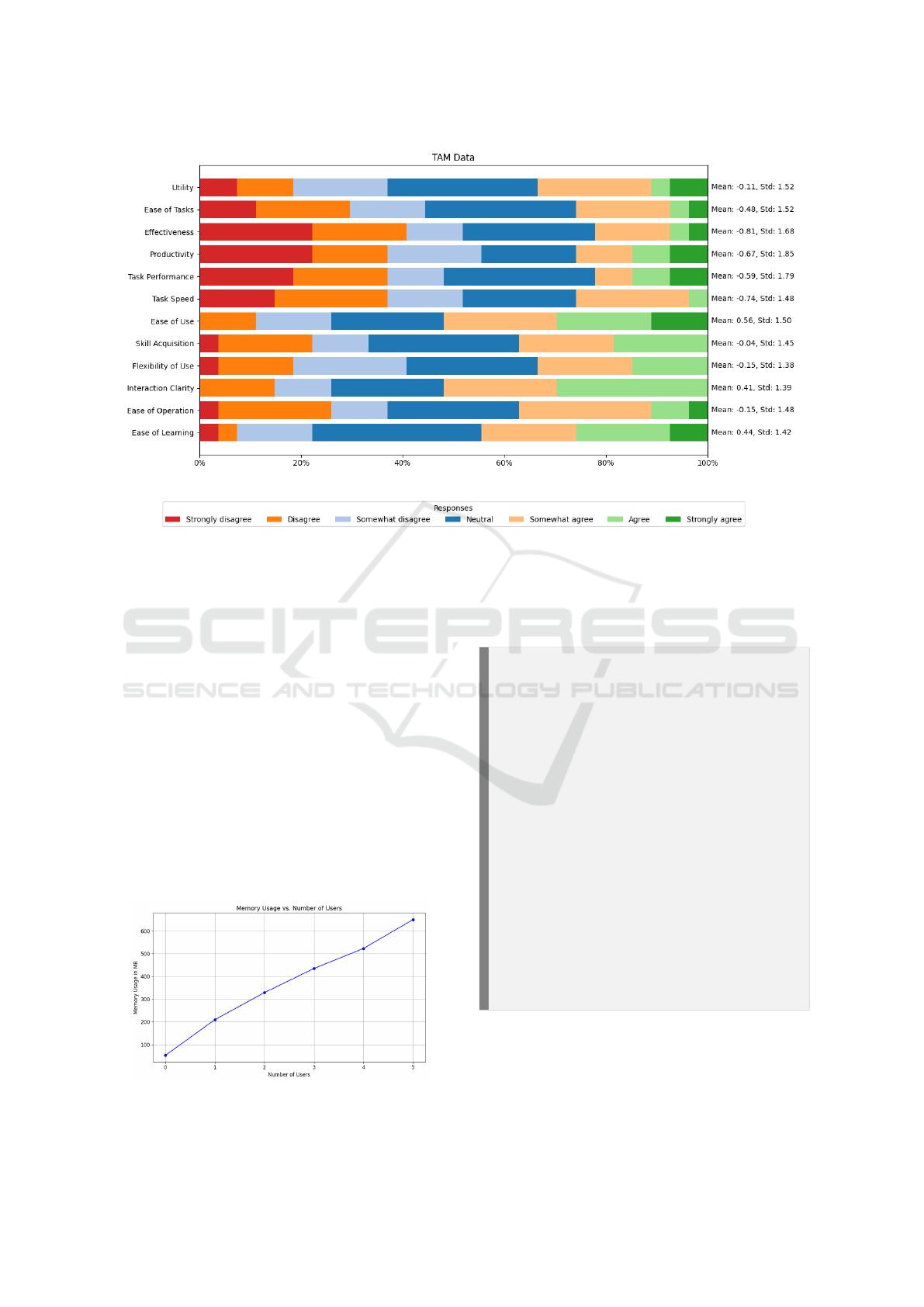

The online IDE now comprises four main compo-

nents: a (1) file browser, a (2) code editor, a (3) prob-

lem statement viewer, and a (4) terminal interface.


Each component is designed to offer specific func-

tionalities, as shown in the IDE overview in Fig-

ure 3. Currently, the LSP server solution supports

Java, Python, and C exercises, representing the three

main programming languages taught in courses at the

University of Innsbruck.

Main Findings for RQ1:

• Memory Efficiency: Connecting multiple

user sessions to a single LSP server signifi-

cantly reduces memory usage, making the

online IDE scalable for many concurrent

users.

• Load Balancing: Implementing load-

balancing mechanisms distributes work-

load evenly across servers, ensuring sys-

tem stability during peak usage times like

exams.

• Security Measures: Using restricted

Docker containers for the integrated termi-

nal addresses security concerns by isolat-

ing user code execution from the host sys-

tem.

• Optimized Communication: Custom de-

bouncing techniques reduce the commu-

nication load between clients and servers,

enhancing performance without degrading

the user experience.

5 EVALUATION

In the following, we present the evaluation results of

the new online IDE. We selected students from the

“Introduction to Programming” tutorial, a compul-

sory course of the Bachelor’s degree in Computer Sci-

ence curriculum at the University of Innsbruck. This

tutorial teaches first-year students the fundamentals of

the C programming language. We received a total of

n=27 valid responses.

Prior to the survey, we introduced the students to

the prototype of the online IDE that had been imple-

mented. Afterward, the students were tasked with

solving the Pascal’s Triangle exercise (Hinz, 1992)

using only the online IDE. They had one week to

complete the task. In the next course, we allotted ap-

proximately 15 minutes for students to complete the

online questionnaire.

5.1 Study Design

We used the Technology Acceptance Model (TAM)

to evaluate the prototype’s usability. TAM is a widely

used research model in empirical user studies to de-

termine the actual use of a technology based on the

perceived usefulness (PU) and perceived ease of use

(PEOU) (Chau, 1996). In addition to PU and PEOU, we evaluated four code editor properties (CEE questions). This approach allowed us to systematically explore the users’ perceptions of the performance of code submissions, the quality of compilation feedback, the intuitiveness of the user interface, and their overall impression of the features provided (Davis, 1989).

All questions used a 7-point Likert Scale from

‘Strongly Disagree’ (-3) to ‘Strongly Agree’ (3). Re-

garding the PEOU, the following questions were

asked:

• PEOU0. Learning to operate the code editor is

easy for me.

• PEOU1. I find it easy to get the code editor to do

what I want it to do.

• PEOU2. My interaction with the code editor is

clear and understandable.

• PEOU3. I find the code editor flexible to interact

with.

• PEOU4. It is easy for me to become skillful at

using the code editor.

• PEOU5. I find the code editor easy to use.

The following PU questions were asked:

• PU0. Using the code editor in my tasks enables me

to accomplish tasks more quickly.

• PU1. Using the code editor improves my task per-

formance.

• PU2. Using the code editor in my tasks increases

my productivity.

• PU3. Using the code editor enhances my effective-

ness in the tasks.

• PU4. Using the code editor makes it easier to do

my tasks.

• PU5. I find the code editor useful in my tasks.
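To make the scoring of the PEOU and PU items concrete, the following minimal sketch maps 7-point Likert answers to the numeric range from -3 to 3 and computes the mean and sample standard deviation for a single item. Only the two endpoint labels are taken from the questionnaire; the intermediate label wordings, the function name `summarize_item`, and the example answers are assumptions made for illustration and are not data from the study.

```python
from statistics import mean, stdev

# Numeric coding of the 7-point scale (-3 .. 3).
# Only the endpoint labels come from the paper; the labels in between are assumed.
LIKERT_SCORES = {
    "Strongly Disagree": -3,
    "Disagree": -2,
    "Somewhat Disagree": -1,
    "Neutral": 0,
    "Somewhat Agree": 1,
    "Agree": 2,
    "Strongly Agree": 3,
}


def summarize_item(responses):
    """Return (mean, sample standard deviation) for one questionnaire item."""
    scores = [LIKERT_SCORES[label] for label in responses]
    return mean(scores), stdev(scores)


# Hypothetical answers to a single item (NOT responses from the study).
example_answers = ["Agree", "Strongly Agree", "Neutral", "Somewhat Disagree", "Agree"]
item_mean, item_sd = summarize_item(example_answers)
print(f"mean={item_mean:.2f}, sd={item_sd:.2f}")
```

A high standard deviation for an item indicates that the answers are spread across the scale rather than clustered around the mean.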

The survey also included an explanation and screenshots for each of the additionally assessed editor properties (CEE). For these questions, a 5-point scale ranging from ‘Excellent’ (1) to ‘Very poor’ (5) was used:

• CEE0: Submission / Testing Performance. The

time it takes to receive feedback after submitting

the exercise.

• CEE1: Compilation / Testing Feedback. The

feedback provided from the submission of an ex-

ercise.

• CEE2: Editor’s User Interface (UI). The way the

editor is structured and displayed.

• CEE3: Editor’s Features/Functionalities. The

set of functionalities and features provided by the

editor.

CSEDU 2025 - 17th International Conference on Computer Supported Education


Figure 3: Overview of the new online IDE in Artemis.

In addition to the TAM-based evaluation, we

asked open questions again and measured the mem-

ory usage of the implemented online IDE. These mea-

surements were critical to assess the IDE’s perfor-

mance efficiency, especially regarding resource con-

sumption. This provided valuable insights into the

IDE’s scalability, an important requirement in the ed-

ucational sector as computing and financial resources

are limited.

5.2 Results

Figure 4 presents the findings from the TAM questionnaire, which measured user attitudes towards the prototype IDE. Each bar represents the percentage of responses across the 7-point Likert scale, ranging from “Strongly disagree” to “Strongly agree”, for each questionnaire item.

Study participants generally agreed that the technology was easy to use and clear in interaction, and they found it reasonably easy to learn. However, we observed general disagreement regarding the utility, effectiveness, productivity, task performance, and task speed. High standard deviations suggest that opinions were divided: some students appreciated the online IDE, while others held the opposite view.

As mentioned in Section 5.1, we evaluated student

perceptions of the online IDE based on four key as-

pects: (1) Submission/Testing performance, (2) Com-

pilation/Testing feedback, (3) Editor’s user interface

(UI), and (4) Editor’s features/functionalities. ‘Compilation/Testing feedback’ received the most favorable evaluation, with the highest mean score of 0.78 and a standard deviation of 0.83, indicating a generally positive user experience. The ‘Editor’s user interface (UI)’ scored well, with a mean of 0.67 and a standard deviation of 0.86, suggesting that users found the UI to be above average in terms of usability. Conversely, ‘Editor’s features/functionalities’ and ‘Submission/Testing performance’ both recorded a mean score of 0.30 but with different levels of variance (standard deviations of 0.94 and 0.71, respectively).

The open-ended feedback provided valuable in-

sights into the students’ perceptions and experiences

with the online IDE, revealing both positive aspects

and areas for improvement. Many participants ex-

pressed that the editor’s utility is limited, with sev-

eral preferring to use established IDEs like VSCode or

CLion¹¹ instead of the web-based environment. They

highlighted that while the editor may be useful for in-

troductory tasks, it is less suitable for advanced exer-

cises, as they preferred external tools with more ro-

bust features.

Several usability concerns were also identified.

Participants frequently mentioned the inability to re-

size or collapse UI panels, which disrupted their

workflow, and requested more customization options,

such as a dark mode and additional color schemes.

Another common request was enhanced code assistance, including more ‘intelligent’ auto-completion and more visible error highlighting. Some noted that the prototype’s current implementation of these features was either too slow or inconsistent.

Despite these criticisms, some students appreciated the web IDE, citing its simplicity as an advantage for beginners. Positive remarks included its utility for first-year students who may not yet be familiar with other development environments. Furthermore, feedback on compilation and testing functionality was generally positive, though there were isolated cases where users experienced discrepancies between local and web-based compilation results.

¹¹ https://www.jetbrains.com/clion

Figure 4: Distribution of user responses to the TAM questionnaire.

Users made specific feature requests, such as adding a ‘vim mode’, implementing automated bracket closure, and improving the reporting of test results, particularly for failed tests. These suggestions indicate a desire for more advanced, developer-friendly features that align with those available in traditional IDEs.

While the open feedback highlights the current

limitations of the web IDE, it also emphasizes the po-

tential for enhancing its functionalities and user inter-

face to better support novice and experienced users.

Figure 5 shows the memory usage of the prototype. The plot reveals a linear relationship between the number of users and the memory usage of the IDE. As the user count increased from 0 to 5, the memory consumption rose steadily from 53 MB to 650 MB. This pattern underscores the IDE’s predictable resource consumption and suggests that the IDE can scale, with memory usage increasing by approximately 130 MB for each additional user.

Figure 5: Memory usage of the online IDE as a function of the number of users.
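The reported linear trend can be turned into a rough capacity estimate. The sketch below uses only the figures stated in the text (53 MB at 0 users, 650 MB at 5 users, approximately 130 MB per additional user). The function names are hypothetical, and extrapolating a trend measured for 0 to 5 users to several hundred users is an assumption, not a measured result.

```python
BASELINE_MB = 53         # reported memory usage with 0 users
MEASURED_MB_AT_5 = 650   # reported memory usage with 5 users
PER_USER_MB = 130        # approximate per-user increase stated in the text


def endpoint_slope(mb_start, mb_end, users):
    """Average increase per user between two measurements."""
    return (mb_end - mb_start) / users


def projected_memory_mb(users, baseline_mb=BASELINE_MB, per_user_mb=PER_USER_MB):
    """Linear projection: baseline plus a fixed cost per concurrent user."""
    return baseline_mb + per_user_mb * users


# Slope implied by the two reported endpoints alone (about 119 MB per user,
# in the same range as the approximate figure of 130 MB).
slope = endpoint_slope(BASELINE_MB, MEASURED_MB_AT_5, 5)

# Assumed extrapolation to 500 parallel users.
projection_500 = projected_memory_mb(500)
print(f"slope={slope:.1f} MB/user, projection for 500 users={projection_500} MB")
```

Under these assumptions, 500 parallel users would require roughly 65 GB of RAM, which illustrates why the per-user memory cost matters for deployments in education.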

Main Findings for RQ2:

• Perceived Ease of Use (PEOU). Students

generally found the online IDE easy to

learn and use, with clear and understand-

able interactions.

• Mixed Perceived Usefulness (PU). While

the IDE was appreciated for introductory

tasks, many students felt it lacked utility

for advanced exercises, leading to mixed

opinions on its effectiveness and produc-

tivity enhancement.

• Suitability for Beginners. The simplicity

of the web IDE was appreciated by first-

year students who are not yet familiar with

other development environments.

• Areas for Improvement. Students requested enhanced code assistance features and advanced functionalities, especially debugging tools or a ‘vim mode’.

6 DISCUSSION

Developing and integrating an online IDE into an

APAS such as Artemis presents both challenges and


opportunities. This research aimed to address two pri-

mary research questions, leading to a series of signif-

icant findings that contribute to the field of program-

ming education.

With the first research question, we focused on

identifying the key challenges in implementing an on-

line IDE for APASs. One main challenge we un-

covered was the high memory consumption associ-

ated with comprehensive online IDE platforms like

Theia¹². One Theia container needs approximately 260 MB of RAM, which would be prohibitively expensive in the educational domain, where peak usage can exceed 500 parallel users. However, we were able to mitigate this issue by connecting multiple editors to a single LSP server and by introducing load balancing, resulting in a memory reduction of around 50%, which improves economic feasibility while still offering a good user experience during peak usage times such as exams. Moreover, we addressed security concerns by implementing a Docker environment for the integrated terminal.
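The idea of sharing LSP servers among several editors can be sketched as follows. This is a minimal illustration and not the Artemis implementation: the class names `LspServer` and `LspPool`, the server names, and the least-loaded assignment strategy are assumptions chosen to show one plausible way of balancing editor sessions across a small pool of shared servers.

```python
from dataclasses import dataclass, field


@dataclass
class LspServer:
    """One shared language server and the editor sessions attached to it."""
    name: str
    sessions: set = field(default_factory=set)


class LspPool:
    """Assigns each editor session to the currently least-loaded server."""

    def __init__(self, server_names):
        self.servers = [LspServer(name) for name in server_names]
        self._by_session = {}

    def connect(self, session_id):
        # Reuse the existing assignment if the editor reconnects.
        if session_id in self._by_session:
            return self._by_session[session_id].name
        # Pick the server with the fewest sessions (first one wins on a tie).
        server = min(self.servers, key=lambda s: len(s.sessions))
        server.sessions.add(session_id)
        self._by_session[session_id] = server
        return server.name

    def disconnect(self, session_id):
        server = self._by_session.pop(session_id, None)
        if server is not None:
            server.sessions.discard(session_id)


# Hypothetical pool with two shared servers and four editors.
pool = LspPool(["lsp-a", "lsp-b"])
assignments = [pool.connect(f"editor-{i}") for i in range(4)]
print(assignments)
```

Because many editors share one server process, the fixed memory cost of a language server is paid once per server rather than once per user, which is the effect the memory reduction described above relies on.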

Another key challenge was the high communica-

tion load between the online IDE and LSP servers,

which we addressed by implementing custom de-

bouncing techniques in our prototypical online IDE.

This optimization reduced communication intensity,

further enhancing the system’s efficiency. Addition-

ally, regular session cleanup emerged as crucial for

maintaining system performance, emphasizing the

need for efficient resource management strategies to

develop online IDEs for educational purposes.
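Debouncing, as mentioned above, delays a request until the user has paused typing, so that a burst of keystrokes results in a single message to the LSP server. The following sketch shows the general technique using a timer from the Python standard library. It is not the prototype’s code: the class name `Debouncer`, the 50 ms quiet period, and the use of a list as a stand-in for the LSP connection are assumptions for illustration.

```python
import threading
import time


class Debouncer:
    """Runs `callback` only after no new call arrived for `wait_seconds`."""

    def __init__(self, wait_seconds, callback):
        self.wait_seconds = wait_seconds
        self.callback = callback
        self._timer = None
        self._lock = threading.Lock()

    def call(self, *args):
        with self._lock:
            # A newer call supersedes the pending one.
            if self._timer is not None:
                self._timer.cancel()
            self._timer = threading.Timer(self.wait_seconds, self.callback, args)
            self._timer.daemon = True
            self._timer.start()


# Stand-in for "send document state to the LSP server" (hypothetical).
sent = []
debouncer = Debouncer(0.05, sent.append)

# Three rapid edits, as if the user typed "int" quickly.
for text in ["i", "in", "int"]:
    debouncer.call(text)

time.sleep(0.3)  # wait until the quiet period has elapsed
print(sent)      # only the latest document state was sent
```

The trade-off is a small delay before feedback such as diagnostics or completions appears, in exchange for fewer messages between the editor and the server.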

The investigation into user perceptions (RQ2) re-

vealed mixed responses regarding the new online

IDE’s utility, effectiveness, and productivity. While

most users found the technology generally easy to

use and learn, some disagreed on its impact on task

performance and speed. These findings suggest that

while the new IDE has improved certain aspects of

the user experience, there are still areas that require

further improvement.

The open feedback offers valuable guidance for

further enhancing the IDE. Several participants sug-

gested that while they appreciated the simplicity of

the web IDE for learning basic concepts, they would

like to see features that support more advanced cod-

ing tasks, similar to those offered by established tools

like VSCode or CLion. This indicates an opportu-

nity for the IDE to evolve beyond its current form by

incorporating advanced features such as a fully inte-

grated debugger, better code completion, and detailed

error highlighting. By expanding the feature set, the

online IDE could become a more attractive option for experienced users who prefer working within a single, consistent environment.

¹² https://github.com/eclipse-theia/theia

The feedback also included innovative suggestions, such as adding a ‘vim mode’ and a quick-save feature (e.g., mapped to Ctrl-S), illustrating how users

are eager to see features that enhance efficiency and

familiarity. These ideas present opportunities for fu-

ture development. By responding to these user-driven

insights, the IDE has the potential to bridge the gap

between a beginner-friendly platform and a powerful

development tool.

The positive reception of the compilation and test-

ing functionalities demonstrates that the core features

of the online IDE are functioning well, providing

users with reliable tools for checking their code.

In summary, the open feedback highlights the po-

tential of the online IDE as a flexible and scalable

solution that, with further development, can accom-

modate a wide range of user needs. The suggestions

from users provide a clear roadmap for future im-

provements, emphasizing the importance of striking

a balance between simplicity for beginners and ad-

vanced functionality for more experienced users. By

incorporating these insights, the IDE could become an

even more effective tool for programming education.

7 LIMITATIONS

In this paper, we mainly considered the following four

categories of validity, also used by Wohlin et al. (2012): (1) Construct validity, (2) Reliability, (3) Internal validity, and (4) External validity.

7.1 Construct Validity

Construct validity in this study refers to how accu-

rately the research design and methods capture the

key challenges of implementing an online IDE and

the subsequent user perceptions. The challenges iden-

tified, such as memory consumption, load balancing,

and security issues, were derived from the technical

implementation process and user feedback. While

these challenges represent common issues faced in

software development, especially in educational set-

tings, they may not encompass all possible hurdles

that could emerge in different contexts or with other

technologies.

The study’s construct validity may also be influ-

enced by the specific features and functionalities im-

plemented in the prototype. The chosen set of fea-

tures aimed to address the most critical needs of pro-

gramming students. However, this selection process

might not fully capture the entire spectrum of useful


IDE features, especially those that could be more rele-

vant in different programming languages, paradigms,

or more advanced levels of study.

7.2 Reliability

The reliability of this study is significantly improved

by the open-source nature of the prototypical implementation of the online IDE within the Artemis platform. By making the source code and the questionnaire results publicly available on Zenodo¹³, the study

allows verification and replication by the broader

community, thereby improving the transparency and

trustworthiness of the implementation process. This

open-source approach ensures that every aspect of the

IDE development, from the initial design decisions to

the specific coding practices, is accessible for review

and reuse.

7.3 External Validity

A potential limitation of this study comes from inte-

grating the online IDE exclusively within the Artemis

platform. While Artemis is an APAS with function-

alities common to many APASs, this exclusive in-

tegration may affect the generalizability of the find-

ings. However, the architectural and functional prin-

ciples applied in developing the online IDE, such

as using LSP servers for multiple user sessions

and Docker containers for sandboxing, are platform-

agnostic. These principles could be adapted for inte-

gration into other educational settings, suggesting that

our findings have relevance beyond the Artemis envi-

ronment.

The participant pool of students from a single “In-

troduction to Programming” course for the second

survey also limits external validity. With 27 respon-

dents, the sample size, while sufficient for a prelimi-

nary investigation, limits the ability to conduct com-

prehensive quantitative analyses and make broad gen-

eralizations.

7.4 Internal Validity

The internal validity of this study is based on the care-

ful design and execution aimed at minimizing external

influences on the variables investigated. Key strate-

gies to improve internal validity included a uniform

and well-tested questionnaire (TAM) and leveraging

objective metrics for system evaluation like memory

usage.

¹³ https://doi.org/10.5281/zenodo.13959741

8 CONCLUSION

This research presents an innovative concept for an

online IDE designed for programming education. We

developed and implemented a prototype to evaluate

the concept. By providing a feature-rich online IDE

directly within an educational platform, we can offer

a more engaging and effective learning experience for

students beginning their programming journey.

The prototypical implementation addresses criti-

cal technical challenges (RQ1), such as high memory

consumption and security issues, by integrating mul-

tiple editors with a single LSP server and leveraging

Docker containers for sandboxed execution environ-

ments, and the open-source nature of the presented

solution ensures transparency and encourages com-

munity involvement for further refinement. The eval-

uation (RQ2) suggests that while the new online IDE

is user-friendly and easy to learn, there is room for im-

provement in demonstrating its utility and impact on

task performance. The mixed opinions on utility and

productivity highlight the need for further enhance-

ments to increase the practical benefits of the IDE in

real-world tasks.

In future work, we plan to refine the IDE by incorporating user feedback to enhance features and

functionalities. This includes implementing advanced

tools such as an integrated debugger, improving code

completion, and optimizing performance to reduce

load times and improve reliability. We also aim to

conduct more extensive user studies across different

educational institutions and platforms to validate and

generalize our findings.

REFERENCES

Barlow, M., Cazalas, I., Robinson, C., and Cazalas, J.

(2021). Mocside: an open-source and scalable on-

line ide and auto-grader for introductory programming

courses. Journal of Computing Sciences in Colleges,

37(5):11–20.

Bigman, M., Roy, E., Garcia, J., Suzara, M., Wang, K., and

Piech, C. (2021). Pearprogram: A more fruitful ap-

proach to pair programming. In Proc. of the 52nd

ACM Technical Symposium on Computer Science Ed-

ucation, pages 900–906.

Chau, P. Y. (1996). An empirical assessment of a modi-

fied technology acceptance model. Journal of man-

agement information systems, 13(2):185–204.

Davis, F. D. (1989). Perceived usefulness, perceived ease of

use, and user acceptance of information technology.

MIS quarterly, pages 319–340.

España-Boquera, S., Guerrero-López, D., Hermida-Pérez, A., Silva, J., and Benlloch-Dualde, J. V. (2017). Analyzing the learning process (in programming) by using data collected from an online ide. In Proc. of the 16th International Conference on Information Technology Based Higher Education and Training, pages 1–4. IEEE.

Frankford, E., Crazzolara, D., Sauerwein, C., Vierhauser,

M., and Breu, R. (2024). Requirements for an online

integrated development environment for automated

programming assessment systems. Proc. of the 16th

International Conference on Computer Supported Ed-

ucation (CSEDU 2024) - Volume 1, pages 305-313.

Guo, P. J. (2013). Online python tutor: embeddable web-

based program visualization for cs education. In Proc.

of the 44th ACM technical symposium on Computer

science education, pages 579–584.

Hinz, A. M. (1992). Pascal’s triangle and the tower of hanoi.

The American mathematical monthly, 99(6):538–544.

Horváth, G. (2018). A web-based programming environment for introductory programming courses in higher education. In Annales Mathematicae et Informaticae, volume 48, pages 23–32.

Krusche, S. and Seitz, A. (2018). Artemis: An automatic

assessment management system for interactive learn-

ing. In Proc. of the 49th ACM technical symposium on

computer science education, pages 284–289.

Leisner, M. and Brune, P. (2019). Good-bye localhost: A

cloud-based web ide for teaching java ee web devel-

opment to non-computer science majors. In Proc. of

the IEEE/ACM 41st International Conference on Soft-

ware Engineering, pages 268–269. IEEE.

Liu, X. and Woo, G. (2021). Codehelper: A web-based

lightweight ide for e-mentoring in online program-

ming courses. In Proc. of the 3rd International Con-

ference on Computer Communication and the Inter-

net, pages 220–224. IEEE.

Lobb, R. and Harlow, J. (2016). Coderunner. ACM Inroads,

7(1):47–51.

Meißner, N., Koch, N. N., Speth, S., Breitenbücher, U., and Becker, S. (2024). Unveiling Hurdles in Software Engineering Education: The Role of Learning Management Systems. In Proceedings of the 46th International Conference on Software Engineering: Software Engineering Education and Training (ICSE-SEET ’24), pages 242–252.

Mekterović, I., Brkić, L., Milašinović, B., and Baranović, M. (2020). Building a comprehensive automated programming assessment system. IEEE Access, 8:81154–81172.

Pawelczak, D. and Baumann, A. (2014). Virtual-c-a pro-

gramming environment for teaching c in undergrad-

uate programming courses. In Proc. of the IEEE

Global Engineering Education Conference, pages

1142–1148. IEEE.

Pieterse, V. (2013). Automated assessment of programming

assignments. CSERC, 13:4–5.

Staubitz, T., Klement, H., Teusner, R., Renz, J., and Meinel,

C. (2016). Codeocean-a versatile platform for prac-

tical programming excercises in online environments.

In Proc. of the 2016 IEEE Global Engineering Educa-

tion Conference (EDUCON), pages 314–323. IEEE.

Tarek, M., Ashraf, A., Heidar, M., and Eliwa, E. (2022).

Review of programming assignments automated as-

sessment systems. In Proc. of the 2nd International

Mobile, Intelligent, and Ubiquitous Computing Con-

ference, pages 230–237. IEEE.

Trachsler, N. (2018). Webtigerjython-a browser-based pro-

gramming ide for education. Master’s thesis, ETH

Zurich.

Tran, H. T., Dang, H. H., Do, K. N., Tran, T. D., and

Nguyen, V. (2013). An interactive web-based ide to-

wards teaching and learning in programming courses.

In Proc. of International Conference on Teaching, As-

sessment and Learning for Engineering, pages 439–

444. IEEE.

Truniger, S. (2023). Codeboard: Verbesserung und er-

weiterung der automatisierten hilfestellungen.

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M. C., Regnell, B., and Wesslén, A. (2012). Experimentation in Software Engineering. Springer Science & Business Media.

Wu, L., Liang, G., Kui, S., and Wang, Q. (2011). CEclipse:

An online IDE for programing in the cloud. In 2011

IEEE World Congress on Services. IEEE.
