On the Detection of Retinal Image Synthesis Obtained Through Generative Adversarial Network

Marcello Di Giammarco¹,², Antonella Santone³, Mario Cesarelli⁴, Fabio Martinelli⁵ and Francesco Mercaldo¹,³

¹ Institute for Informatics and Telematics (IIT), National Research Council of Italy (CNR), Pisa, Italy
² Department of Information Engineering, University of Pisa, Pisa, Italy
³ Department of Medicine and Health Sciences “Vincenzo Tiberio”, University of Molise, Campobasso, Italy
⁴ Department of Engineering, University of Sannio, Benevento, Italy
⁵ Institute for High Performance Computing and Networking (ICAR), National Research Council of Italy (CNR), Rende (CS), Italy

{marcello.digiammarco, francesco.mercaldo}@iit.cnr.it, {antonella.santone, francesco.mercaldo}@unimol.it

Keywords:

Adversarial Machine Learning, Generative Adversarial Networks, Deep Learning, Retinal Imaging, Robustness, Security.

Abstract:

Adversarial machine learning on medical imaging is one of the many applications in which Generative Adversarial Networks (GANs) have attracted remarkable interest in the medical field. This paper proposes a method in which Convolutional Neural Networks (CNNs) are trained and tested on the binary classification of real images and fake images generated through GANs. The experiments consider RGB fundus retina images of the human eye. Results show that some networks achieve optimal performance and completely recognize real and fake images, whereas other networks misclassify the images, raising security and reliability concerns.

1 INTRODUCTION

Generative Adversarial Networks (GANs) are an exciting recent innovation in machine learning. GANs are generative models: they create new instances of data similar to their training data. The growing number of novel GAN concepts, methods, and applications has significantly increased the interest in these systems. This success is largely attributed to the high similarity of the generated images to real ones (Goodfellow et al., 2020). Medical imaging is crucial for the diagnosis and identification of diseases (Pan and Xin, 2024), (Huang et al., 2024), (Zhou et al., 2023), (Brunese et al., 2022b). Furthermore, artificial intelligence research on such images could have a beneficial effect on healthcare. One of the challenges of machine learning in biomedical imaging is the ability to discriminate between real and fake images, since fake images can alter model performance and invalidate results. To investigate this challenge, fake images can be generated with GANs. Moreover, GANs have proven useful in many healthcare and biomedical applications, from data augmentation to anomaly detection and medical image synthesis.

In this paper, we propose a method able to perform real/fake classification. Fake images are generated at different epochs of the GAN, considering an RGB fundus retina image dataset. The experiments reveal both robustness and misclassifications of the models in the real/fake discrimination. Moreover, the paper analyzes how the output of the models changes as the number of GAN epochs increases, showing interesting results.

The next section reports the related works, while a brief overview of GANs and their working principles is provided in Section 3. The proposed method, applied to RGB retinal images, is presented in Section 4, followed by the experimental analysis and results in Section 5. Finally, conclusions and future research directions are given in the last section.

Di Giammarco, M., Santone, A., Cesarelli, M., Martinelli, F. and Mercaldo, F.

On the Detection of Retinal Image Synthesis Obtained Through Generative Adversarial Network.

DOI: 10.5220/0013228300003911

Paper published under CC license (CC BY-NC-ND 4.0)

In Proceedings of the 18th International Joint Conference on Biomedical Engineering Systems and Technologies (BIOSTEC 2025) - Volume 1, pages 611-618

ISBN: 978-989-758-731-3; ISSN: 2184-4305

Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.


2 RELATED WORKS

This section reviews the literature on the use of GANs in the medical field.

The review by Singh and Raza (Singh and Raza, 2021) reports several GAN applications in the medical field, presenting the most recent developments in GAN-based clinical applications for cross-modality synthesis and medical image generation. Deep convolutional GAN (DCGAN), Laplacian GAN (LAPGAN), pix2pix, CycleGAN, and the unsupervised image-to-image translation model (UNIT) are among the GAN frameworks that have become popular for medical image interpretation, and their performance continues to improve through the adoption of hybrid architectures.

A recent work is that of Akhil et al. (Akhil et al., 2024), who investigated the synthesis of realistic, high-quality chest X-ray images using DCGAN. Their DCGAN model generated synthetic images with remarkable visual resemblance to real chest X-ray images, including anatomical characteristics, radiographic noise, and disease patterns. They also employed a range of evaluation metrics to statistically examine the diversity and realism of the generated images, demonstrating that their approach produced highly realistic synthetic data.

In the retinal imaging domain, Diaz-Pinto et al. (Diaz-Pinto et al., 2019) proposed a novel retinal image synthesizer and a semi-supervised learning technique for glaucoma assessment based on deep convolutional GANs. Furthermore, as far as we are aware, their system was trained on an unprecedented quantity of publicly available images (86,926 images). Moreover, their system can automatically provide labels in addition to producing synthetic images.

In terms of security, the research of Mirsky et al. (Mirsky et al., 2019) is a notable example of a GAN application. In their article, they demonstrated how an attacker can use deep learning to modify or delete evidence of medical conditions in volumetric (3D) medical scans. An attacker could do this to thwart a political candidate, sabotage research, commit insurance fraud, carry out terrorism, or even kill someone. The authors showed how to automate the framework (CT-GAN) and used a 3D conditional GAN to execute the attack. To assess the attack, they focused on injecting and removing lung cancer from CT scans. Additionally, they investigated the attack surface of a contemporary radiology network and illustrated one attack vector: they used a covert penetration test to intercept and alter CT scans on an operational hospital network.

In the work of Nagaraju and Stamp (Nagaraju and Stamp, 2022), the authors presented a method that employed GANs to create fake malware images and evaluated how well different classification methods performed on these images. Their results showed that, while the resulting multiclass classification problem is challenging, convincing results can be obtained when the problem is restricted to differentiating between authentic and fake samples. The paper’s main finding is that, from a deep learning standpoint, GAN-generated images do not qualify as deep fake malware images, even if they may closely resemble real malware images.

3 GAN BACKGROUND

Since the proposed method focuses on GANs, Figure 1 reports a block schematization of GAN operation.

The main working principle of a GAN is a competitive learning framework that combines two neural networks: the discriminator and the generator. Given random noise (latent vectors) as input, the generator learns to map the latent space to the data space, creating synthetic samples that resemble the real data distribution. It produces low-quality samples at first, but with training it improves its ability to produce more realistic ones. The discriminator works as a binary classifier that differentiates between real and GAN-generated samples: it learns to assign high probabilities to real samples and low probabilities to fake ones. The discriminator may behave randomly at first, but with practice it learns to distinguish real from fake samples. The discriminator and the generator are trained simultaneously and competitively. The generator’s main objective is to fool the discriminator by producing samples that are indistinguishable from real data, while the discriminator seeks to correctly separate real and fake samples. Both networks improve gradually during training: the discriminator gets better at distinguishing real samples from fake ones, and the generator gets better at producing realistic examples. In this adversarial interaction, the discriminator aims to maximize its ability to distinguish real from fake samples, while the generator tries to minimize that ability by producing increasingly authentic samples. When the generator produces samples that are statistically comparable to real data and the discriminator can no longer consistently distinguish original from GAN-generated samples, this adversarial process reaches a Nash equilibrium. Ideally, the GAN has converged when the discriminator cannot separate synthetic and genuine samples more accurately than chance and the generator produces samples that match the real data distribution. Convergence can be difficult to achieve and requires careful tuning of training procedures, network architectures, and hyperparameters. In essence, the adversarial training dynamics between discriminator and generator, which result in the creation of high-quality synthetic data, are the fundamental working principle of a GAN (You et al., 2022; Wang et al., 2023; Singh and Raza, 2020).

BIOINFORMATICS 2025 - 16th International Conference on Bioinformatics Models, Methods and Algorithms

Figure 1: Block schematization of GAN.
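The adversarial game described above is commonly formalized, following Goodfellow et al., as a two-player minimax problem over a value function V(D, G). The paper does not write the objective explicitly, so the expression below is the standard formulation rather than one taken from this work. Here D(x) is the discriminator's probability that x is real, G(z) is the sample generated from the latent vector z, p_data is the real-data distribution and p_z is the noise prior.

```latex
\min_{G}\max_{D} V(D,G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

At the ideal equilibrium of this game the generator's distribution equals p_data and the optimal discriminator outputs D(x) = 1/2 for every input, which is the "no better than chance" condition mentioned above.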

Generator loss measures how well the generator is producing realistic data: it reflects the difference between generated and real data, as judged by the discriminator. Discriminator loss, instead, quantifies the discriminator’s ability to distinguish between generated and genuine data, and is computed from the correctness of the discriminator’s predictions on real and fake samples (Mercaldo et al., 2024b).
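The generator and discriminator losses described above can be sketched with binary cross-entropy (BCE), the loss commonly used to train DCGANs. The paper does not name its loss functions, so BCE, the "non-saturating" form of the generator loss and the sum of the real and fake terms for the discriminator are assumptions; the inputs are discriminator output probabilities, not images.

```python
import math


def bce(p: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy of one probability p against a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Real samples should be scored 1, generated samples 0; the two mean terms are summed."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: the generator wants its samples scored as real (1)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# Early training: a confident, correct discriminator gives a low D loss and a high G loss.
print(round(discriminator_loss([0.9, 0.95], [0.1, 0.05]), 3))  # 0.157
print(round(generator_loss([0.1, 0.05]), 3))  # 2.649
# Ideal equilibrium: D outputs 0.5 everywhere.
print(round(discriminator_loss([0.5], [0.5]), 3))  # 1.386, i.e. 2 ln 2
print(round(generator_loss([0.5]), 3))  # 0.693, i.e. ln 2
```

As the generator improves, its loss falls and the discriminator loss rises toward the equilibrium values shown in the last two lines.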

Following this broad overview, in our work we consider a particular kind of GAN known as DCGAN (Deep Convolutional Generative Adversarial Network) (Fang et al., 2018; Liu et al., 2022). DCGAN extends the GAN concept by adopting deep convolutional neural networks, although the working principles are the same as described above. Compared to previous GAN architectures, DCGANs can generate high-quality images, which has led to their widespread application in tasks such as image generation, super-resolution, and style transfer. By using convolutional layers (Brunese et al., 2022a), (Martinelli et al., 2022), (Mercaldo et al., 2024a), (Di Giammarco et al., 2024a), (Mercaldo et al., 2022), hierarchical feature learning, and architectural improvements such as batch normalization and Leaky ReLU, the DCGAN architecture greatly improves the quality of the generated images.
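The paper does not report the layer configuration of its DCGAN. As an illustration only, the sketch below traces how a DCGAN-style generator could grow a 1x1 latent input into a 64x64 image (the input size later used for the CNNs) with transposed convolutions, using the output-size formula out = (in - 1) * stride - 2 * padding + kernel (dilation 1, no output padding). The kernel 4 / stride 2 / padding 1 setting is a configuration commonly used in DCGAN implementations and is an assumption here, as is the 0.2 Leaky ReLU slope; batch normalization does not change spatial size and is therefore omitted.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Spatial output size of a transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def leaky_relu(x: float, slope: float = 0.2) -> float:
    """Leaky ReLU: identity for positive inputs, small slope for negative ones (slope assumed)."""
    return x if x > 0 else slope * x


def generator_sizes(start: int = 1) -> list[int]:
    """Hypothetical DCGAN-style generator: 1x1 -> 4x4 projection, then four 2x upsamplings."""
    sizes = [start]
    size = conv_transpose_out(start, kernel=4, stride=1, padding=0)  # 1 -> 4
    sizes.append(size)
    for _ in range(4):  # each stride-2 block doubles height and width
        size = conv_transpose_out(size, kernel=4, stride=2, padding=1)
        sizes.append(size)
    return sizes


print(generator_sizes())  # [1, 4, 8, 16, 32, 64]
print(leaky_relu(-1.0), leaky_relu(3.0))  # -0.2 3.0
```

Replacing pooling with strided (transposed) convolutions is what lets a DCGAN learn its own up- and down-sampling, which is one of the architectural changes credited for its improved image quality.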

4 THE METHOD

In this section, our method is presented. The method is based on the discrimination between GAN-generated and real images in the biomedical domain and aims to obtain an output model able to distinguish between them.

RGB fundus retina images represent the case study, but the underlying concept applies to any biomedical imaging modality. The main steps are shown in Figure 2.

The approach starts from the dataset, which consists of RGB fundus retina images and is called the original dataset. This dataset is the “real dataset” cited in Figure 1, and several generations of fake images, obtained by varying the number of epochs, are considered for evaluating the CNNs. Figure 2 reports the epochs, from 250 to 500: as the epochs increase, the GAN-generated fake samples become more and more similar to the original ones. At the end of the adversarial image generation, 2,000 images are obtained, from the first epoch, where the distortion is fully superimposed on the input image, to the last epoch, where the distortion is undetectable to medical experts. In this sense, the main focus of our study is the classification performance of the DL networks, specifically how well they discriminate between real and fake images.

Figure 2: Main steps of the case study on RGB retinal images.

The selected epochs for the CNN evaluation are 250, 300, 350, 400, 450, and 500. Each of these sets of fake images, together with the original samples, is the input of the CNNs, which aim to distinguish real and fake retinal images. The networks under analysis are the following: Standard CNN (Di Giammarco et al., 2022a), (Di Giammarco et al., 2022b), InceptionV3 (Xia et al., 2017), ResNet50 (He et al., 2016), and MobileNet (Howard et al., 2017). The networks are evaluated on the main metrics: accuracy, precision, recall, and loss. The main attention is on the accuracy trend over the GAN epochs; in other words, the evaluation assesses whether the accuracy of each CNN decreases when classifying the sets of fake images produced at different GAN epochs.
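The evaluation metrics named above, together with the F-Measure and AUC that appear in the result tables, can be computed from the true labels and the classifier scores as sketched below. Treating "fake" as the positive class (label 1), the 0.5 decision threshold and the rank-based (Mann-Whitney) form of the AUC are assumptions, since the paper does not state them; the function name is hypothetical.

```python
def binary_metrics(y_true: list[int], y_score: list[float],
                   threshold: float = 0.5) -> dict[str, float]:
    """Accuracy, precision, recall, F-measure and AUC; label 1 = fake (assumed positive)."""
    y_pred = [1 if s >= threshold else 0 for s in y_score]
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_measure = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # AUC: probability that a random fake image is scored above a random real one (ties = 1/2).
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f_measure": f_measure,
        "auc": wins / (len(pos) * len(neg)),
    }


# Two real (0) and two fake (1) images with hypothetical scores.
print(binary_metrics([0, 0, 1, 1], [0.1, 0.6, 0.4, 0.9]))
```

In this toy example accuracy, precision, recall and F-measure are all 0.5 while the AUC is 0.75, because the AUC looks at the ranking of the scores rather than at a single threshold; a network that outputs the same score for every image gets an AUC of exactly 0.5.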

5 RESULTS AND DISCUSSION

This section reports the experiments described above and their results.

The dataset under analysis is RetinaMNIST, available at the following link¹. In detail, this dataset consists of 2,000 RGB fundus retina images.

In the experimental analysis, the networks are trained and evaluated on a binary classification task, differentiating real from fake retinal images for each of the DCGAN generation epochs considered.
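The protocol described above (one real/fake training and test run per DCGAN epoch, keeping the accuracy of each run to obtain the trend) can be sketched as a loop. The callables `load_fakes` and `train_and_evaluate` are hypothetical stand-ins for the data loading and CNN training steps, which the paper does not detail, and the 0 = real / 1 = fake labelling is an assumption.

```python
from typing import Callable, Sequence

GAN_EPOCHS = (250, 300, 350, 400, 450, 500)  # DCGAN epochs evaluated in the paper


def accuracy_trend(
    real_images: Sequence,
    load_fakes: Callable[[int], Sequence],  # hypothetical: fake images saved at one DCGAN epoch
    train_and_evaluate: Callable[[list, list], float],  # hypothetical: train a CNN, return test accuracy
    epochs: Sequence[int] = GAN_EPOCHS,
) -> dict[int, float]:
    """Train and test one binary real/fake classifier per DCGAN epoch; return accuracy per epoch."""
    trend = {}
    for epoch in epochs:
        fakes = list(load_fakes(epoch))
        images = list(real_images) + fakes
        labels = [0] * len(real_images) + [1] * len(fakes)  # 0 = real, 1 = fake (assumed)
        trend[epoch] = train_and_evaluate(images, labels)
    return trend


# Dummy stand-ins, only to show the call pattern.
demo = accuracy_trend(["real_0", "real_1"], lambda e: [f"fake_{e}"] * 2, lambda x, y: 1.0)
print(demo)
```

Passing the training step in as a function keeps the loop identical for the four networks; only `train_and_evaluate` changes between Standard CNN, InceptionV3, ResNet50 and MobileNet.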

After each GAN image generation, the two classes (real and fake) are split into training, validation, and test sets at an 80-10-10 ratio. The following hyper-parameter combination is used to train and test the four networks listed above: 50 epochs, batch size 32, learning rate 0.0001, and an input image size of 64x64x3. This combination works well on average for all networks and was selected through multiple tests. Tables 1, 2, 3 and 4 report the evaluation in terms of accuracy, precision, recall, F-Measure, Area Under the Curve (AUC) and loss for the four CNNs, respectively.

¹ https://medmnist.com/
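The 80-10-10 split and the hyper-parameters listed above can be sketched as follows. Applying the split separately to each class, the shuffling seed and the image identifiers are assumptions, since the paper gives only the ratios and the hyper-parameter values; with 2,000 images per class, the split yields 1,600 training, 200 validation and 200 test images per class.

```python
import random

HYPERPARAMS = {  # values reported in the paper
    "epochs": 50,
    "batch_size": 32,
    "learning_rate": 1e-4,
    "input_shape": (64, 64, 3),
}


def split_80_10_10(items: list, seed: int = 42) -> tuple[list, list, list]:
    """Shuffle one class and split it 80/10/10 into train/validation/test (seed is assumed)."""
    shuffled = items[:]
    random.Random(seed).shuffle(shuffled)
    n_train = len(shuffled) * 8 // 10  # integer arithmetic avoids float rounding surprises
    n_val = len(shuffled) // 10
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])


# Split each class separately so that every subset stays balanced between real and fake.
real = [f"real_{i}" for i in range(2000)]  # hypothetical image identifiers
fake = [f"fake_{i}" for i in range(2000)]
splits = {name: split_80_10_10(imgs) for name, imgs in (("real", real), ("fake", fake))}
print([len(part) for part in splits["real"]])  # [1600, 200, 200]
```

Splitting per class guarantees a balanced test set, on which an accuracy of 0.5 corresponds exactly to chance.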

The main observations from the results are listed below:

• Standard CNN, network developed by the authors

(Di Giammarco et al., 2022b) shows the best re-

sult. Metrics such as accuracy, precision and re-

call maintain the maximum values in all the DC-

GAN epochs, meaning that this network com-

pletely recognizes the real and the fake images.

Also in the RGB case, the Standard CNN obtains

the most reliable output model, such as the previ-

ous work with the grayscale retinal images (Di Gi-

ammarco et al., 2024b).

• Different behavior is for the MobileNet. The net-

work indeed distinguishes between the real and

the GAN-generated images, but it is possible to

observe that the loss gradually enhances accord-

ing to the number of DCGAN epochs. This be-

havior will produce misclassification when the

DCGAN epochs are enhanced. However, in our

study (until 500 DCGAN epochs), the MobileNet

model correctly recognized real and fake images.

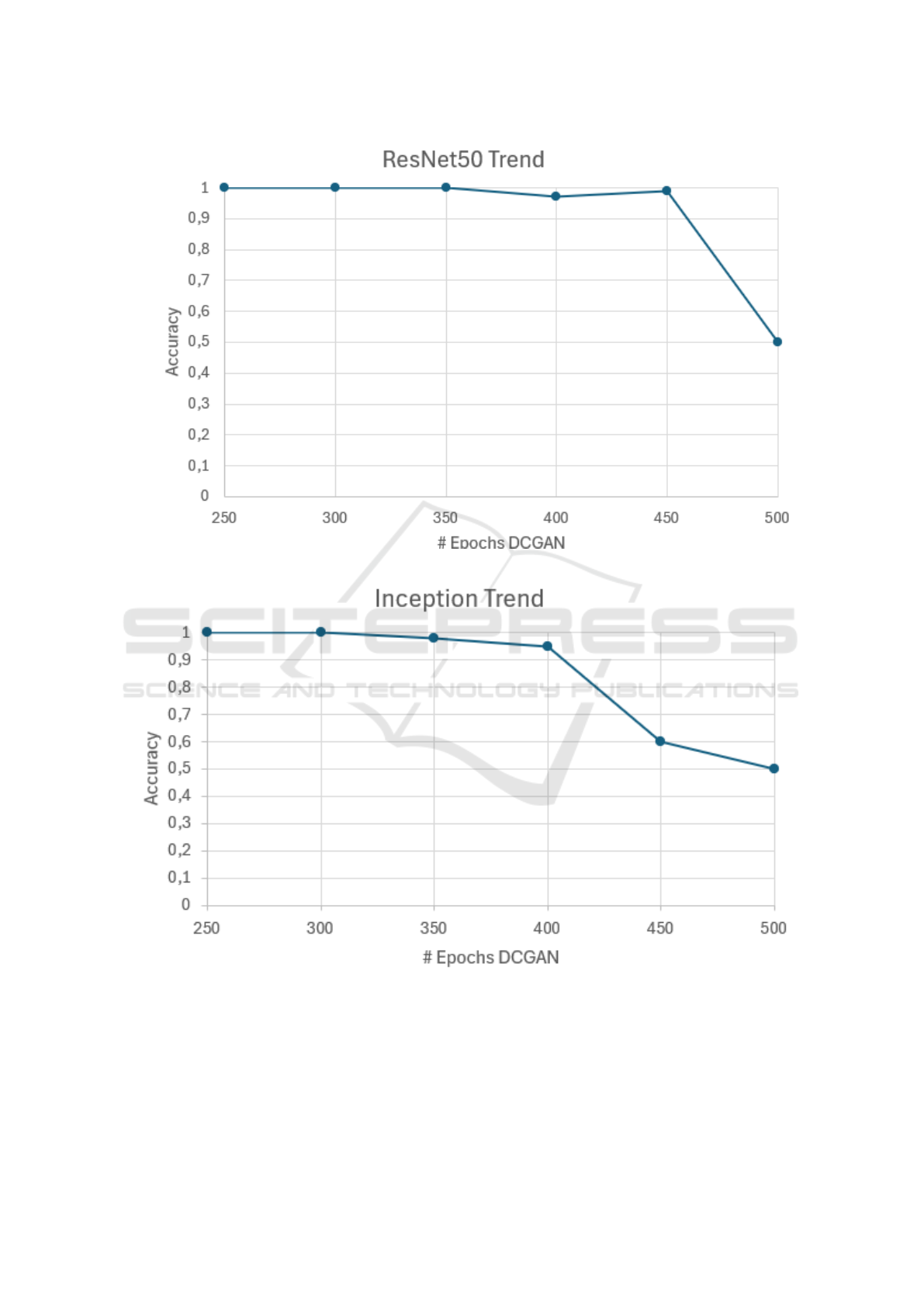

• Finally, the ResNet50 and the InceptionV3

strongly decrease their performance as the DC-

GAN epochs increase. In particular, these de-

creasing trends are evident from 400 to 500

epochs, within this latter situation the networks

fail the classification, and are not able to distin-

guish real and fake images.
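The loss increase observed for MobileNet can be quantified from Table 2. The sketch below computes, from the loss values reported in that table, the factor by which the test loss grows between consecutive DCGAN epochs and over the whole 250 to 500 range; the variable names are illustrative.

```python
# Test loss of MobileNet at each DCGAN epoch, copied from Table 2.
EPOCHS = [250, 300, 350, 400, 450, 500]
MOBILENET_LOSS = [1.24e-7, 4.91e-7, 1.18e-6, 5.43e-6, 9.85e-6, 2.23e-5]

# Ratio between consecutive loss values: a ratio above 1 means the loss grew.
step_ratios = {
    (e0, e1): l1 / l0
    for e0, e1, l0, l1 in zip(EPOCHS, EPOCHS[1:], MOBILENET_LOSS, MOBILENET_LOSS[1:])
}
overall_growth = MOBILENET_LOSS[-1] / MOBILENET_LOSS[0]

for (e0, e1), ratio in step_ratios.items():
    print(f"{e0}->{e1} DCGAN epochs: loss x{ratio:.2f}")
print(f"250->500 DCGAN epochs: loss x{overall_growth:.0f}")
```

Every ratio is above 1, so the loss grows at each step, by roughly 180 times overall, even though its absolute value (at most 2.23×10⁻⁵) remains very small and the accuracy stays at 1.0.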

A better view of these two trends for ResNet50 and InceptionV3 is given in Figures 3 and 4, which show the accuracy of the two networks over the DCGAN epochs. Figure 3 reveals that the ResNet50 accuracy drops sharply from 450 to 500 DCGAN epochs, while the InceptionV3 plot in Figure 4 shows that the main decrease occurs between 400 and 450 epochs, with accuracy reaching 0.5 at 500 DCGAN epochs.
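The location of the steepest decrease in each plot can be checked directly on the accuracy values of Tables 3 and 4. The sketch below finds, for each network, the pair of consecutive DCGAN epochs with the largest accuracy drop; the function name is illustrative.

```python
EPOCHS = [250, 300, 350, 400, 450, 500]
ACCURACY = {  # test accuracy copied from Tables 3 and 4
    "ResNet50": [1.0, 1.0, 1.0, 0.970, 0.997, 0.5],
    "InceptionV3": [1.0, 1.0, 0.995, 0.950, 0.600, 0.5],
}


def largest_drop(acc: list[float], epochs: list[int] = EPOCHS) -> tuple[int, int, float]:
    """Return (start_epoch, end_epoch, drop) for the largest decrease between consecutive epochs."""
    drops = [(e0, e1, a0 - a1) for e0, e1, a0, a1 in zip(epochs, epochs[1:], acc, acc[1:])]
    return max(drops, key=lambda d: d[2])


for name, acc in ACCURACY.items():
    start, end, drop = largest_drop(acc)
    print(f"{name}: largest drop {drop:.3f} between {start} and {end} DCGAN epochs")
```

This gives 0.497 between 450 and 500 epochs for ResNet50 and 0.350 between 400 and 450 epochs for InceptionV3, matching the description of Figures 3 and 4. Note that the ResNet50 accuracy rises slightly from 400 to 450 epochs (0.970 to 0.997) before collapsing.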

Overall, networks such as the Standard CNN and MobileNet correctly recognize images that are impossible to distinguish by visual inspection. Their output models can therefore be applied to medical imaging instruments that implement deep learning prediction. Among the networks tested, these models represent the best solution for retinal disease image classification, both to prevent possible attacks and to improve reliability and credibility. On the other hand, CNNs such as ResNet50 and InceptionV3 fail to recognize real and fake images at high DCGAN epochs, that is, when the fake images are very similar to the original ones. This behavior does not guarantee reliable predictions, with a high risk for the diagnostic procedure and, consequently, for the patient’s health.
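The loss values in Tables 3 and 4 help characterize these failures, assuming the reported loss is the mean binary cross-entropy (the paper does not name the loss). The InceptionV3 loss at 500 epochs, 0.693, equals ln 2, which is exactly the loss of a classifier that outputs 0.5 for every image, consistent with its 0.5 accuracy and AUC. ResNet50 reaches the same accuracy with a loss of 4.389: if correct predictions cost at most ln 2 each, the misclassified half of the test set must average at least 2 × 4.389 − ln 2 ≈ 8.08 nats, which suggests confidently wrong rather than uncertain predictions. The balanced 200 + 200 test set below is illustrative.

```python
import math


def mean_bce(y_true: list[int], p_fake: list[float]) -> float:
    """Mean binary cross-entropy; p_fake is the predicted probability of label 1 (fake)."""
    total = 0.0
    for t, p in zip(y_true, p_fake):
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)


# A classifier that outputs 0.5 for every image of a balanced real/fake test set.
labels = [0] * 200 + [1] * 200
chance_loss = mean_bce(labels, [0.5] * len(labels))
print(f"{chance_loss:.3f} vs ln 2 = {math.log(2):.3f}")  # 0.693 vs ln 2 = 0.693

# ResNet50 at 500 epochs (Table 3): accuracy 0.5, loss 4.389. Correct predictions put
# probability >= 0.5 on the true class, so each costs at most ln 2; the misclassified
# half must therefore average at least 2 * 4.389 - ln 2.
min_wrong_loss = 2 * 4.389 - math.log(2)
print(f"{min_wrong_loss:.2f}")  # 8.08
```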

The optimal response of the Standard CNN on retinal images is also confirmed by the diabetic retinopathy classification reported in (Mercaldo et al., 2023).

6 CONCLUSIONS AND FUTURE WORKS

In conclusion, this work presents a general method to discriminate between real images and fake images generated with DCGAN, in order to enhance data security and reliability. Since the method can be extended to any biomedical imaging technique, the authors chose to analyze the case of RGB retinal images. Of the four CNNs in the experiment, two (Standard CNN and MobileNet) solve the real/fake task and generate an output model suitable for automated AI-based imaging devices. The other two networks are a poor choice for the same imaging devices, since GAN-generated images included in the test folder could alter their results, drastically reducing reliability.

In future work, the authors will use additional GANs to test the CNNs. Another direction concerns the application of federated machine learning for data privacy reasons.

ACKNOWLEDGEMENTS

This work has been partially supported by EU DUCA, EU CyberSecPro, SYNAPSE, PTR 22-24 P2.01 (Cybersecurity) and SERICS (PE00000014) under the MUR National Recovery and Resilience Plan funded by the EU - NextGenerationEU projects, by MUR - REASONING: foRmal mEthods for computAtional analySis for diagnOsis and progNosis in imagING - PRIN, e-DAI (Digital ecosystem for integrated analysis of heterogeneous health data related to high-impact diseases: innovative model of care and research), Health Operational Plan, FSC 2014-2020, PRIN-MUR-Ministry of Health, the National Plan for NRRP Complementary Investments D³ 4 Health: Digital Driven Diagnostics, prognostics and therapeutics for sustainable Health care, Progetto MolisCTe, Ministero delle Imprese e del Made in Italy, Italy, CUP: D33B22000060001, FORESEEN: FORmal mEthodS for attack dEtEction in autonomous driviNg systems CUP N.P2022WYAEW and ALOHA: a framework for monitoring the physical and psychological health status of the Worker through Object detection and federated machine learning, Call for Collaborative Research BRiC-2024, INAIL.

Table 1: Metrics evaluation for the Standard CNN.

Metrics     250 Epochs  300 Epochs  350 Epochs  400 Epochs  450 Epochs  500 Epochs
Accuracy    1.0         1.0         1.0         1.0         1.0         1.0
Precision   1.0         1.0         1.0         1.0         1.0         1.0
Recall      1.0         1.0         1.0         1.0         1.0         1.0
F-Measure   1.0         1.0         1.0         1.0         1.0         1.0
AUC         1.0         1.0         1.0         1.0         1.0         1.0
Loss        0.0         0.0         0.0         0.0         0.0         0.0

Table 2: Metrics evaluation for the MobileNet.

Metrics     250 Epochs  300 Epochs  350 Epochs  400 Epochs  450 Epochs  500 Epochs
Accuracy    1.0         1.0         1.0         1.0         1.0         1.0
Precision   1.0         1.0         1.0         1.0         1.0         1.0
Recall      1.0         1.0         1.0         1.0         1.0         1.0
F-Measure   1.0         1.0         1.0         1.0         1.0         1.0
AUC         1.0         1.0         1.0         1.0         1.0         1.0
Loss        1.24×10⁻⁷   4.91×10⁻⁷   1.18×10⁻⁶   5.43×10⁻⁶   9.85×10⁻⁶   2.23×10⁻⁵

Table 3: Metrics evaluation for the ResNet50.

Metrics     250 Epochs  300 Epochs  350 Epochs  400 Epochs  450 Epochs  500 Epochs
Accuracy    1.0         1.0         1.0         0.970       0.997       0.5
Precision   1.0         1.0         1.0         0.970       0.997       0.5
Recall      1.0         1.0         1.0         0.970       0.997       0.5
F-Measure   1.0         1.0         1.0         0.970       0.997       0.5
AUC         1.0         1.0         1.0         0.982       0.999       0.497
Loss        1.24×10⁻⁷   1.35×10⁻⁵   6.0×10⁻⁴    3.74×10⁻³   2.358       4.389

Table 4: Metrics evaluation for the InceptionV3.

Metrics     250 Epochs  300 Epochs  350 Epochs  400 Epochs  450 Epochs  500 Epochs
Accuracy    1.0         1.0         0.995       0.950       0.600       0.5
Precision   1.0         1.0         0.995       0.950       0.600       0.5
Recall      1.0         1.0         0.995       0.950       0.600       0.5
F-Measure   1.0         1.0         0.995       0.950       0.600       0.5
AUC         1.0         1.0         0.999       0.965       0.624       0.5
Loss        7.28×10⁻³   1.02×10⁻²   4.22×10⁻²   7.81×10⁻²   0.673       0.693

Figure 3: Accuracy-epochs trends of the ResNet50.

Figure 4: Accuracy-epochs trends of the InceptionV3.

REFERENCES

Akhil, M. S., Sharma, B. S., Kodipalli, A., and Rao, T. (2024). Medical image synthesis using dcgan for chest x-ray images. In 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), volume 1, pages 1–8. IEEE.

Brunese, L., Brunese, M. C., Carbone, M., Ciccone, V., Mercaldo, F., and Santone, A. (2022a). Automatic pi-rads assignment by means of formal methods. La radiologia medica, pages 1–7.

Brunese, L., Mercaldo, F., Reginelli, A., and Santone, A. (2022b). A neural network-based method for respiratory sound analysis and lung disease detection. Applied Sciences, 12(8):3877.

Di Giammarco, M., Dukic, B., Martinelli, F., Cesarelli, M., Ravelli, F., Santone, A., and Mercaldo, F. (2024a). Reliable leukemia diagnosis and localization through explainable deep learning. In 2024 Fifth International Conference on Intelligent Data Science Technologies and Applications (IDSTA), pages 68–75. IEEE.

Di Giammarco, M., Iadarola, G., Martinelli, F., Mercaldo, F., Ravelli, F., and Santone, A. (2022a). Explainable deep learning for alzheimer disease classification and localisation. In International Conference on Applied Intelligence and Informatics, 1-3 September, Reggio Calabria, Italy. in press.

Di Giammarco, M., Iadarola, G., Martinelli, F., Mercaldo, F., and Santone, A. (2022b). Explainable retinopathy diagnosis and localisation by means of class activation mapping. In 2022 International Joint Conference on Neural Networks (IJCNN), pages 1–8. IEEE.

Di Giammarco, M., Santone, A., Cesarelli, M., Martinelli, F., and Mercaldo, F. (2024b). Evaluating deep learning resilience in retinal fundus classification with generative adversarial networks generated images. Electronics, 13(13):2631.

Diaz-Pinto, A., Colomer, A., Naranjo, V., Morales, S., Xu, Y., and Frangi, A. F. (2019). Retinal image synthesis and semi-supervised learning for glaucoma assessment. IEEE transactions on medical imaging, 38(9):2211–2218.

Fang, W., Zhang, F., Sheng, V. S., and Ding, Y. (2018). A method for improving cnn-based image recognition using dcgan. Computers, Materials & Continua, 57(1).

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. (2020). Generative adversarial networks. Communications of the ACM, 63(11):139–144.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778.

Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., Andreetto, M., and Adam, H. (2017). Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861.

Huang, P., Xiao, H., He, P., Li, C., Guo, X., Tian, S., Feng, P., Chen, H., Sun, Y., Mercaldo, F., et al. (2024). La-vit: A network with transformers constrained by learned-parameter-free attention for interpretable grading in a new laryngeal histopathology image dataset. IEEE Journal of Biomedical and Health Informatics.

Liu, B., Lv, J., Fan, X., Luo, J., and Zou, T. (2022). Application of an improved dcgan for image generation. Mobile information systems, 2022(1):9005552.

Martinelli, F., Mercaldo, F., and Santone, A. (2022). Water meter reading for smart grid monitoring. Sensors, 23(1):75.

Mercaldo, F., Ciaramella, G., Iadarola, G., Storto, M., Martinelli, F., and Santone, A. (2022). Towards explainable quantum machine learning for mobile malware detection and classification. Applied Sciences, 12(23):12025.

Mercaldo, F., Di Giammarco, M., Apicella, A., Di Iadarola, G., Cesarelli, M., Martinelli, F., and Santone, A. (2023). Diabetic retinopathy detection and diagnosis by means of robust and explainable convolutional neural networks. Neural Computing and Applications, 35(23):17429–17441.

Mercaldo, F., Di Giammarco, M., Ravelli, F., Martinelli, F., Santone, A., and Cesarelli, M. (2024a). Alzheimer’s disease evaluation through visual explainability by means of convolutional neural networks. International Journal of Neural Systems, 34(2):2450007–2450007.

Mercaldo, F., Martinelli, F., and Santone, A. (2024b). Deep convolutional generative adversarial networks in image-based android malware detection. Computers, 13(6).

Mirsky, Y., Mahler, T., Shelef, I., and Elovici, Y. (2019). CT-GAN: Malicious tampering of 3d medical imagery using deep learning. In 28th USENIX Security Symposium (USENIX Security 19), pages 461–478.

Nagaraju, R. and Stamp, M. (2022). Auxiliary-classifier gan for malware analysis. In Artificial Intelligence for Cybersecurity, pages 27–68. Springer.

Pan, H. and Xin, L. (2024). Fdts: A feature disentangled transformer for interpretable squamous cell carcinoma grading. IEEE/CAA Journal of Automatica Sinica, 12(JAS-2024-1027).

Singh, N. K. and Raza, K. (2020). Medical image generation using generative adversarial networks. arXiv preprint arXiv:2005.10687.

Singh, N. K. and Raza, K. (2021). Medical image generation using generative adversarial networks: A review. Health informatics: A computational perspective in healthcare, pages 77–96.

Wang, X., Guo, H., Hu, S., Chang, M.-C., and Lyu, S. (2023). Gan-generated faces detection: A survey and new perspectives. ECAI 2023, pages 2533–2542.

Xia, X., Xu, C., and Nan, B. (2017). Inception-v3 for flower classification. In 2017 2nd international conference on image, vision and computing (ICIVC), pages 783–787. IEEE.

You, A., Kim, J. K., Ryu, I. H., and Yoo, T. K. (2022). Application of generative adversarial networks (gan) for ophthalmology image domains: a survey. Eye and Vision, 9(1):6.

Zhou, X., Tang, C., Huang, P., Tian, S., Mercaldo, F., and Santone, A. (2023). Asi-dbnet: an adaptive sparse interactive resnet-vision transformer dual-branch network for the grading of brain cancer histopathological images. Interdisciplinary Sciences: Computational Life Sciences, 15(1):15–31.