GNN-MSOrchest: Graph Neural Networks Based Approach for Micro-Services Orchestration - A Simulation Based Design Use Case

Nader Belhadj¹, Mohamed Amine Mezghich¹, Jaouher Fattahi² and Lassaad Latrach²

¹ National School of Computer Sciences, Manouba, Tunisia
² Heterogeneous Advances Networking & Applications (HANALab), Tunisia

Keywords:

Micro-Services Orchestration, Graph Neural Networks (GNN), Load Balancing, Fault Tolerance, Resource Allocation.

Abstract:

In recent years, the micro-services architecture has emerged as a dominant paradigm in software engineering, praised for its modularity, scalability, and ease of maintenance. Nevertheless, orchestrating micro-services efficiently presents significant challenges, particularly in optimizing communication, load balancing, and fault tolerance. Graph Neural Networks (GNNs), with their ability to model and process graph-structured data, are particularly well suited to representing the complex interdependencies between micro-services. Despite their promising applications in micro-services architectures, GNNs remain underused for micro-services orchestration, which involves the automated management, coordination, and scaling of services. This paper proposes a novel GNN-based approach for micro-services orchestration and studies and analyses a simulation-based design use case.

1 INTRODUCTION

Microservices architectures have emerged as a transformative paradigm in modern software development, offering unparalleled modularity, scalability, and maintainability. These architectures break down applications into loosely coupled, independently deployable services, each encapsulating a specific business capability. Despite these advantages, efficiently orchestrating microservices poses significant challenges, particularly in managing complex interdependencies, ensuring load balancing, and maintaining fault tolerance in dynamic and large-scale environments.

Traditional approaches to microservices orchestration, such as rule-based methods or heuristic-driven strategies, often struggle to address the complexities of modern systems. These methods rely heavily on manual configuration and lack the flexibility to adapt dynamically to evolving workloads or dependencies. As a result, there is an increasing need for innovative solutions that automate and optimize the orchestration process while overcoming the limitations of traditional techniques.

Graph Neural Networks (GNNs) have recently gained attention for their exceptional ability to process graph-structured data and effectively model relationships between entities. By representing microservices and their interactions as a graph, GNNs are uniquely positioned to capture the intricate interdependencies inherent in microservices architectures. This capability enables advanced functionalities such as anomaly detection, predictive load balancing, and dynamic resource allocation, making GNNs a promising solution to the challenges of microservices orchestration.

This paper introduces GNN-MSOrchest, a novel approach leveraging GNNs to optimize microservices orchestration. By employing a graph-based representation of services, GNN-MSOrchest models the dependencies and interactions between microservices, enabling real-time workload predictions and system optimization. The approach is validated through a simulation-based design process focusing on an e-commerce use case, which demonstrates the potential of GNNs to enhance system performance, resource utilization, and fault tolerance.

Originality: Unlike existing methods, GNN-MSOrchest presents an adaptive and predictive framework for microservices orchestration, capitalizing on the unique capabilities of GNNs. This work not only underscores the theoretical advantages of GNNs for modeling microservices architectures but also offers practical insights into their implementation and performance compared to state-of-the-art techniques. By addressing critical gaps in current orchestration methodologies, this paper lays the groundwork for future research and practical applications in adaptive, large-scale microservices systems.

Belhadj, N., Mezghich, M. A., Fattahi, J. and Latrach, L.
GNN-MSOrchest: Graph Neural Networks Based Approach for Micro-Services Orchestration - A Simulation Based Design Use Case.
DOI: 10.5220/0013238200003890
In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 3, pages 933-939
ISBN: 978-989-758-737-5; ISSN: 2184-433X
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

2 OVERVIEW OF RELATED WORK

As microservices architectures continue to gain traction for building modular and scalable systems, effective orchestration strategies are critical to achieving robust performance, fault tolerance, and efficient resource allocation. While traditional approaches to microservices orchestration, such as rule-based and heuristic methods, can be effective in controlled environments, they often struggle to adapt to the complex interdependencies and dynamic nature of large-scale applications. These challenges have fueled growing interest in machine learning-driven approaches that can capture, analyze, and optimize the intricate relationships within microservices ecosystems.

2.1 Main Graph Neural Network Architectures

Graph Neural Networks (GNNs) represent a transformative class of neural networks designed to process and learn from graph-structured data, where relationships among entities are paramount. Different GNN architectures have been developed, each optimized for various graph characteristics and application needs.

Graph Convolutional Networks (GCNs) (Zhang et al., 2019; Bhatti et al., 2023) extend traditional convolutional operations to graph structures, allowing information to be aggregated from a node’s neighborhood. This approach enables GCNs to derive meaningful representations while maintaining computational efficiency, making them well suited to semi-supervised learning on structured data.
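For reference, this neighborhood aggregation is most commonly written as the symmetric-normalized propagation rule below, where A is the adjacency matrix, I the identity (adding self-loops), H^(l) the node features at layer l, W^(l) a learned weight matrix and σ a non-linearity. It is the standard textbook form, shown as an illustration rather than as the exact variant of each cited work.

```latex
H^{(l+1)} = \sigma\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right),
\qquad \tilde{A} = A + I, \qquad \tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}
```

The normalization by the degrees in D̃ keeps high-degree nodes from dominating the aggregated signal.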

Graph Attention Networks (GATs) (Brody et al., 2021) extend GCNs with attention mechanisms that weight each neighbor by its learned importance, thereby refining the learning process, particularly in heterogeneous graph environments.
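The attention mechanism can be made concrete with the original GAT scoring function: each neighbor j of node i receives a score e_ij, the scores are normalized with a softmax over the neighborhood N(i), and the resulting weights α_ij replace the fixed degree normalization of a GCN.

```latex
e_{ij} = \mathrm{LeakyReLU}\!\left(\mathbf{a}^{\top}\left[\,W h_i \,\Vert\, W h_j\,\right]\right), \qquad
\alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k \in \mathcal{N}(i)} \exp(e_{ik})}, \qquad
h_i' = \sigma\!\Big(\sum_{j \in \mathcal{N}(i)} \alpha_{ij}\, W h_j\Big)
```

Brody et al. (2021) show that with this scoring the ranking of neighbors does not depend on the query node ("static" attention) and propose GATv2, which instead scores e_ij = aᵀ LeakyReLU(W [h_i ‖ h_j]).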

GraphSAGE (Graph Sample and Aggregate) (Hamilton et al., 2017; Oh et al., 2019) addresses scalability issues by sampling a fixed-size set of neighbors for each node and combining them with aggregators such as mean or LSTM, which improves its applicability to large, complex graphs.
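The sample-and-aggregate step can be sketched in a few lines of dependency-free Python. This is a minimal illustration assuming the mean aggregator: it draws exactly k neighbors per node and concatenates the node's own features with their mean, which GraphSAGE would then multiply by a learned weight matrix (omitted here). The function names and the toy service graph are hypothetical.

```python
import random
from typing import Dict, List

Vector = List[float]
Graph = Dict[str, List[str]]


def sample_neighbors(adj: Graph, node: str, k: int, rng: random.Random) -> List[str]:
    """Draw a fixed-size sample of k neighbors.

    Without replacement when the node has at least k neighbors, with
    replacement otherwise, so every node always gets exactly k samples.
    """
    neighbors = adj.get(node, [])
    if not neighbors:
        return []
    if len(neighbors) >= k:
        return rng.sample(neighbors, k)
    return [rng.choice(neighbors) for _ in range(k)]


def mean_aggregate(features: Dict[str, Vector], nodes: List[str], dim: int) -> Vector:
    """Element-wise mean of the sampled neighbors' features (zero vector if none)."""
    if not nodes:
        return [0.0] * dim
    return [sum(features[n][i] for n in nodes) / len(nodes) for i in range(dim)]


def sage_input(adj: Graph, features: Dict[str, Vector], node: str, k: int,
               rng: random.Random) -> Vector:
    """Concatenate a node's own features with the mean of k sampled neighbors."""
    dim = len(features[node])
    sampled = sample_neighbors(adj, node, k, rng)
    return features[node] + mean_aggregate(features, sampled, dim)


if __name__ == "__main__":
    # Hypothetical services with [cpu_usage, response_time_ms] features.
    services = {"order": ["auth", "inventory", "payment"], "auth": ["order"],
                "inventory": ["order"], "payment": ["order"]}
    feats = {"order": [0.7, 120.0], "auth": [0.2, 15.0],
             "inventory": [0.5, 40.0], "payment": [0.6, 80.0]}
    print(sage_input(services, feats, "order", k=2, rng=random.Random(0)))
```

Because the sample size is fixed, the per-node cost no longer depends on the node's degree, which is what makes the method tractable on large graphs.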

Message Passing Neural Networks (MPNNs) (Gilmer et al., 2020) generalize message-passing processes for detailed relational learning. MPNNs are effective for applications requiring iterative updates across nodes, such as molecular property prediction.
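The message-passing framework is usually stated as three functions applied for T rounds: a message function M_t, a vertex update function U_t, and a readout R over the final node states, where e_vw denotes the features of edge (v, w). The notation below follows the common presentation of the framework; the cited chapter may use slightly different symbols.

```latex
m_v^{(t+1)} = \sum_{w \in \mathcal{N}(v)} M_t\!\left(h_v^{(t)},\, h_w^{(t)},\, e_{vw}\right), \qquad
h_v^{(t+1)} = U_t\!\left(h_v^{(t)},\, m_v^{(t+1)}\right), \qquad
\hat{y} = R\!\left(\{\, h_v^{(T)} \mid v \in G \,\}\right)
```

GCNs, GATs and GraphSAGE can all be read as particular choices of M_t and U_t.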

Graph Transformer Networks (GTNs) (Yun et al., 2022; Min et al., 2022) bring the transformer architecture to graphs, using self-attention to capture global dependencies, which makes them particularly powerful for tasks requiring comprehensive context.
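The global self-attention that graph transformers build on is the scaled dot-product attention below, applied to the matrix H whose rows are node embeddings, so every node attends to every other node regardless of adjacency. Concrete variants inject graph structure in different ways (for example positional encodings or attention biases, or, in GTNs, learned meta-path adjacency matrices); this generic form is an illustration, not the exact formulation of either cited work.

```latex
Q = H W_Q,\quad K = H W_K,\quad V = H W_V, \qquad
H' = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Since the score matrix QKᵀ is |V| × |V|, the cost of this operation grows quadratically with the number of nodes.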

These diverse architectures empower GNNs to excel across a variety of tasks, including node classification, link prediction, and graph classification, offering adaptability for wide-ranging applications.

2.2 Microservices Orchestration: From Traditional Approaches to GNNs

Historically, microservices orchestration has relied on non-machine-learning methods, such as rule-based systems and heuristic-driven techniques. While these approaches provide stability and control, they often falter when applied to the large, dynamic ecosystems typical of high-traffic applications, where dependencies and workloads shift unpredictably. For example, rule-based orchestration requires extensive manual tuning, limiting its adaptability. Likewise, heuristic methods, although effective for isolated tasks like load balancing, can struggle with managing complex dependencies across numerous services.

Machine learning, and particularly Graph Neural Networks (GNNs), has shown considerable promise in addressing these orchestration challenges. GNNs are particularly well suited to environments characterized by interdependent data structures, as they can model and learn from the relationships among microservices. In orchestrating microservices, GNNs enable adaptive insights that optimize load distribution, resource allocation, and response times. By representing microservices as nodes and their interactions as edges, GNNs can uncover patterns that traditional rule-based approaches often miss.

2.3 Graph Neural Networks for Microservices-Based Solutions

GNNs have already demonstrated significant potential in fields that require the analysis of interconnected data, such as recommendation systems and social network analysis (Tran et al., 2021; He et al., 2023; Sun et al., 2023). In these contexts, GNNs have been successfully deployed within microservices architectures, with each microservice handling distinct components of the GNN pipeline to enable real-time, scalable analysis.

ICAART 2025 - 17th International Conference on Agents and Artificial Intelligence


In recommendation systems, GNNs handle data preprocessing, model training, and inference across modular services, facilitating the provision of low-latency, personalized recommendations. Similarly, in social network analysis, GNNs analyze large user and interaction datasets by modularizing tasks like data preprocessing, graph construction, and model training.

Despite the potential for GNNs to transform microservices architectures, their application remains primarily in analytical tasks rather than direct orchestration, which involves real-time management and scaling. This paper seeks to bridge this gap by applying GNNs to the orchestration layer itself, moving beyond traditional rule-based approaches and integrating GNNs’ predictive capacities to dynamically adjust orchestration strategies in response to real-time changes.

3 GNN-MSOrchest:

METHODOLOGY AND

APPROACH FOR

MICROSERVICES

ORCHESTRATION USING

GRAPH NEURAL NETWORKS

To address the complexities inherent in orchestrating

microservices, we developed a novel approach GNN-

MSOrchest that leverages a Graph Neural Network

(GNN) model specifically tailored for this purpose.

By representing microservices as nodes in a graph,

GNN-MSOrchest models the dependencies and inter-

actions within a microservices ecosystem, enabling

dynamic workload predictions and optimization of

system performance.

3.1 Graph Representation and Data

Structure

In microservices architecture, services are often inter-

connected, requiring continuous interaction and data

sharing. This structure is well-suited for GNNs,

which excel at processing graph-structured data. In

our model, each microservice is represented as a

node, while the interactions between services are

modeled as edges.Each node’s feature vector includes

metrics such as resource consumption, response time,

fault occurrence, and latency, reflecting the real-time

state of each microservice. These features are es-

sential for the GNN to capture interdependencies, al-

lowing GNN-MSOrchest to optimize load distribu-

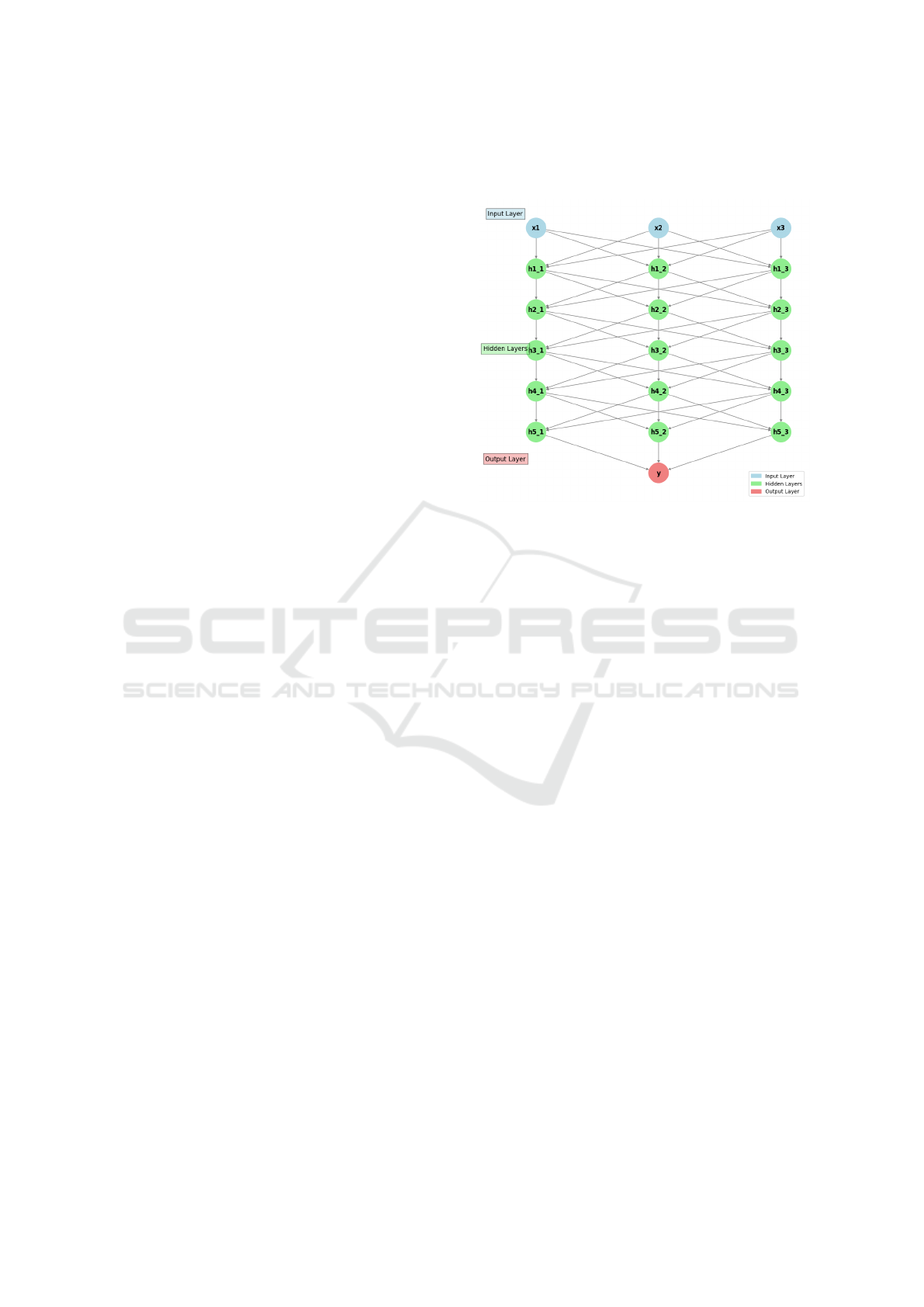

tion and improve response times. Figure 3 presents

the GCN architecture designed for this context.

Figure 1: The GNN architecture for microservices orches-

tration.

3.2 GNN Model Architecture

Our methodology relies on a Graph Convolutional

Network (GCN) architecture that includes:

• Convolutional Layers. Aggregates information

from neighboring nodes, capturing service rela-

tionships.

• ReLU Activation Functions. Enhances non-

linear learning capacity within the feature space.

• Dropout Regularization. Prevents overfitting

with a rate of 0.3, ensuring model robustness.

This layered structure enables GNN-MSOrchest to

aggregate and transform node features, thus making

accurate workload predictions for each microservice

node.

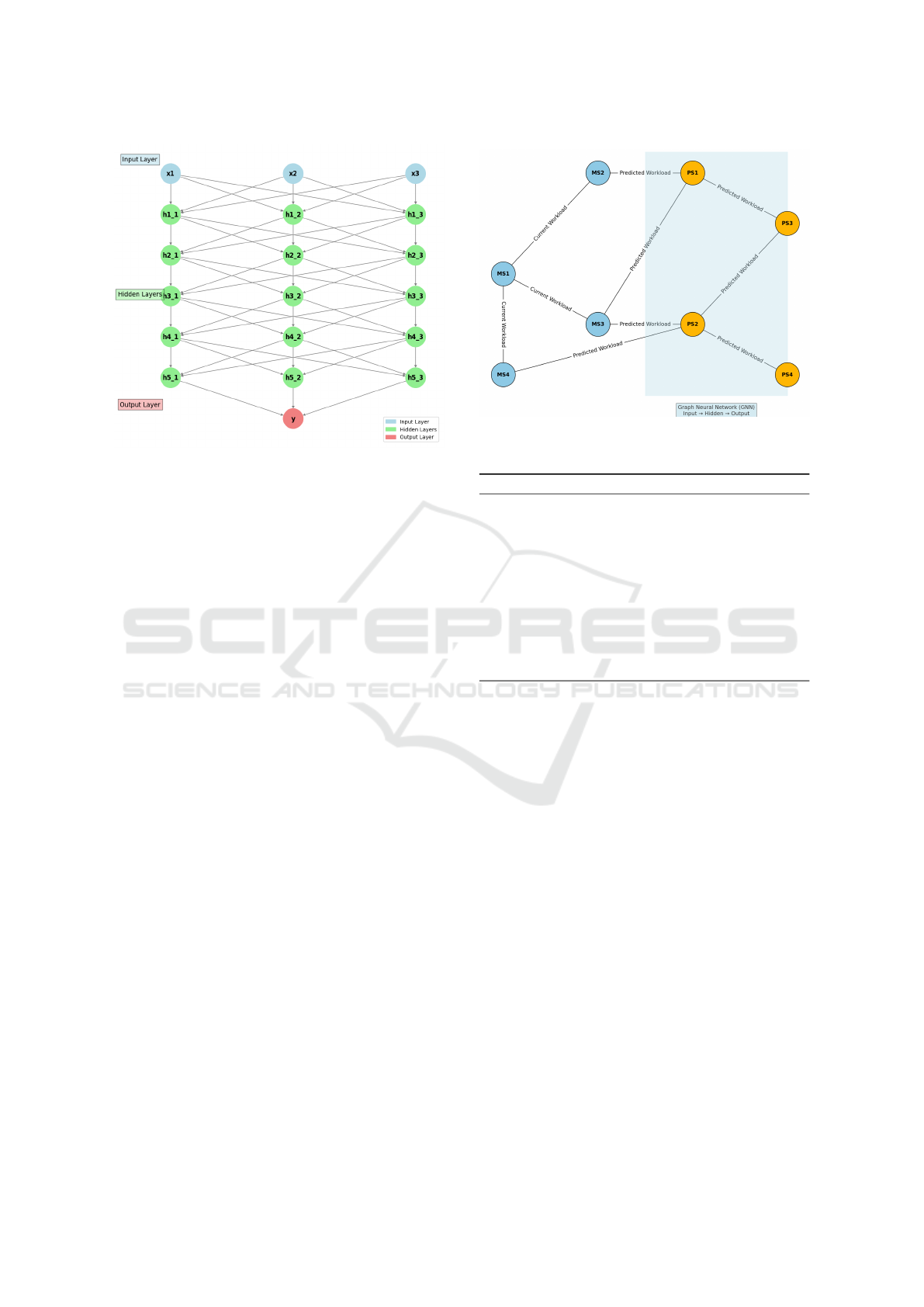

3.3 Workload Prediction Process

Efficient workload management is key to maintaining

system stability in dynamic microservices architec-

tures. In GNN-MSOrchest, each microservice is rep-

resented as a node within a graph, with edges reflect-

ing service dependencies. By processing this graph

structure, the GNN predicts future workloads, aid-

ing in proactive resource allocation and capacity plan-

ning. The workload prediction workflow is detailed in

Figure 4 and Algorithm 2.

GNN-MSOrchest: Graph Neural Networks Based Approach for Micro-Services Orchestration - A Simulation Based Design Use Case

935

Figure 2: Illustration of the GNN Workload Prediction Pro-

cess.

Algorithm 1: GNN Prediction Workflow.

1: Data Collection: Collect key metrics like work-

loads, interactions, and resource usage.

2: Feature Extraction: Extract relevant features for

each node.

3: Model Training: Train the GNN with extracted

features.

4: Real-Time Prediction: Predict future workload

based on the current system state.

5: Decision Making: Use predictions to adjust re-

source allocation and load balancing.

3.4 Synthetic Dataset and

Simulation-Based Training

To train the GNN model, we generated a synthetic

dataset that captures typical microservices interac-

tions, including latency variations, resource usage

patterns, and fault responses. This simulated environ-

ment helps reflect real-world operational conditions,

enabling the GNN to learn from a variety of scenar-

ios.

3.5 Evaluation Metrics and

Performance

We evaluated GNN-MSOrchest using the following

metrics to assess its effectiveness:

• Accuracy. Measures precision in load distribu-

tion predictions.

• Response Time Reduction. Evaluates improve-

ment in response times.

• Load Distribution. Assesses efficiency in dis-

tributing workload across services.

These metrics provide insights into the orchestration

efficiency of GNN-MSOrchest, supporting its scala-

bility and adaptability for different microservices ar-

chitectures.

This methodology highlights the strengths of

GNN-MSOrchest in optimizing workload distribu-

tion, resource management, and system resilience in

complex, large-scale microservices environments.

4 GNN-MSOrchest: METHODOLOGY AND APPROACH FOR MICROSERVICES ORCHESTRATION USING GRAPH NEURAL NETWORKS

To address the complexities inherent in orchestrating microservices, we developed GNN-MSOrchest, a novel approach that leverages a Graph Neural Network (GNN) model specifically tailored for this purpose. By representing microservices as nodes in a graph, GNN-MSOrchest models the dependencies and interactions within a microservices ecosystem, enabling dynamic workload predictions and optimization of system performance.

4.1 Graph Representation and Data Structure

In a microservices architecture, services are often interconnected, requiring continuous interaction and data sharing. This structure is well suited to GNNs, which excel at processing graph-structured data. In our model, each microservice is represented as a node, while the interactions between services are modeled as edges. Each node’s feature vector includes metrics such as resource consumption, response time, fault occurrence, and latency, reflecting the real-time state of each microservice. These features are essential for the GNN to capture interdependencies, allowing GNN-MSOrchest to optimize load distribution and improve response times. Figure 3 presents the GCN architecture designed for this context.
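The representation just described can be sketched as a small data structure that yields the two inputs a GCN consumes: a node feature matrix (one row per service, one column per metric) and an adjacency matrix built from the call edges. This is a minimal illustration, not the authors' code; the class names, the metric units, the call edges and every numeric value in the example snapshot are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ServiceNode:
    """One microservice and the per-node metrics named in Section 4.1."""
    name: str
    cpu_usage: float          # resource consumption (fraction of allocated CPU)
    response_time_ms: float   # mean response time in the current window
    fault_count: int          # faults observed in the current window
    latency_ms: float         # network latency observed by callers

    def features(self) -> List[float]:
        return [self.cpu_usage, self.response_time_ms,
                float(self.fault_count), self.latency_ms]


@dataclass
class ServiceGraph:
    nodes: List[ServiceNode] = field(default_factory=list)
    edges: List[Tuple[str, str]] = field(default_factory=list)  # (caller, callee)

    def index(self) -> Dict[str, int]:
        return {n.name: i for i, n in enumerate(self.nodes)}

    def feature_matrix(self) -> List[List[float]]:
        """Node feature matrix X: one row per service, one column per metric."""
        return [n.features() for n in self.nodes]

    def adjacency(self, undirected: bool = True) -> List[List[int]]:
        """0/1 adjacency matrix; symmetric when undirected=True (as a GCN expects)."""
        idx = self.index()
        size = len(self.nodes)
        adj = [[0] * size for _ in range(size)]
        for src, dst in self.edges:
            adj[idx[src]][idx[dst]] = 1
            if undirected:
                adj[idx[dst]][idx[src]] = 1
        return adj


# Hypothetical snapshot of the four e-commerce services from Section 5.
graph = ServiceGraph(
    nodes=[
        ServiceNode("auth", 0.20, 15.0, 0, 2.0),
        ServiceNode("inventory", 0.55, 40.0, 1, 3.5),
        ServiceNode("payment", 0.65, 85.0, 0, 6.0),
        ServiceNode("order", 0.70, 120.0, 2, 4.0),
    ],
    edges=[("order", "auth"), ("order", "inventory"),
           ("order", "payment"), ("payment", "auth")],
)
```

Keeping the directed call edges and symmetrizing only when building the matrix lets the same structure serve both undirected GCN layers and direction-aware models.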


Figure 3: The GNN architecture for microservices orchestration.

4.2 GNN Model Architecture

Our methodology relies on a Graph Convolutional Network (GCN) architecture that includes:

• Convolutional Layers. Aggregate information from neighboring nodes, capturing service relationships.
• ReLU Activation Functions. Introduce non-linearity, increasing the model's capacity to learn complex patterns in the feature space.
• Dropout Regularization. Reduces overfitting by randomly dropping activations during training at a rate of 0.3, improving model robustness.

This layered structure enables GNN-MSOrchest to aggregate and transform node features and thus to produce accurate workload predictions for each microservice node.
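The three components can be combined into a forward pass as sketched below, in plain Python so it runs without a deep-learning library (in practice a layer such as PyTorch Geometric's `GCNConv` provides the same normalized convolution). The number of layers and the hidden width are not stated in the paper; two convolutional layers are an assumption here, and the weights are supplied by the caller rather than trained.

```python
import math
import random
from typing import List, Optional

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b (a is n x m, b is m x p)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalize_adjacency(adj: List[List[int]]) -> Matrix:
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 with self-loops added."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # always >= 1 thanks to the self-loop
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def relu(x: Matrix) -> Matrix:
    return [[max(0.0, v) for v in row] for row in x]


def dropout(x: Matrix, rate: float, rng: random.Random, training: bool) -> Matrix:
    """Inverted dropout: zero each entry with probability `rate`, rescale the rest."""
    if not training or rate == 0.0:
        return x
    keep = 1.0 - rate
    return [[v / keep if rng.random() >= rate else 0.0 for v in row] for row in x]


def gcn_layer(a_norm: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """Graph convolution: aggregate neighbor features (A_norm @ H), then project (@ W)."""
    return matmul(matmul(a_norm, h), w)


def gcn_forward(adj: List[List[int]], x: Matrix, w1: Matrix, w2: Matrix,
                rate: float = 0.3, rng: Optional[random.Random] = None,
                training: bool = False) -> Matrix:
    """Two-layer GCN: conv -> ReLU -> dropout(0.3) -> conv; one output row per service."""
    if rng is None:
        rng = random.Random(0)
    a_norm = normalize_adjacency(adj)
    h = relu(gcn_layer(a_norm, x, w1))
    h = dropout(h, rate, rng, training)
    return gcn_layer(a_norm, h, w2)
```

Dropout is only active when `training=True`; at inference the layer is deterministic, which is what a real-time workload predictor needs.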

4.3 Workload Prediction Process

Efficient workload management is key to maintaining system stability in dynamic microservices architectures. In GNN-MSOrchest, each microservice is represented as a node within a graph, with edges reflecting service dependencies. By processing this graph structure, the GNN predicts future workloads, aiding proactive resource allocation and capacity planning. The workload prediction workflow is detailed in Figure 4 and Algorithm 2.
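The five steps of this workflow can be sketched end to end as follows. To keep the sketch small and dependency-free, the model is a deliberately tiny stand-in for the GNN: a single learned weight `alpha` that mixes a service's own workload with the mean workload of its neighbors (one step of neighborhood aggregation), fitted by closed-form least squares. The function names, the capacity threshold and the `"scale_out"` label are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

Adjacency = Dict[str, List[str]]
History = Dict[str, List[float]]  # step 1 output: workload samples per service, oldest first


def extract_features(history: History, adj: Adjacency, t: int) -> Dict[str, Tuple[float, float]]:
    """Step 2: per service, (own workload at t, mean neighbor workload at t)."""
    feats = {}
    for svc, nbrs in adj.items():
        own = history[svc][t]
        nbr = mean(history[n][t] for n in nbrs) if nbrs else own
        feats[svc] = (own, nbr)
    return feats


def train_alpha(history: History, adj: Adjacency) -> float:
    """Step 3: least-squares fit of alpha in y[t+1] ~ nbr + alpha * (own - nbr)."""
    steps = len(next(iter(history.values())))
    num = den = 0.0
    for t in range(steps - 1):
        for svc, (own, nbr) in extract_features(history, adj, t).items():
            diff = own - nbr
            num += diff * (history[svc][t + 1] - nbr)
            den += diff * diff
    return num / den if den else 1.0  # fall back to "persist own workload"


def predict(history: History, adj: Adjacency, alpha: float) -> Dict[str, float]:
    """Step 4: one-step-ahead forecast from the most recent snapshot."""
    t = len(next(iter(history.values()))) - 1
    feats = extract_features(history, adj, t)
    return {svc: nbr + alpha * (own - nbr) for svc, (own, nbr) in feats.items()}


def decide(forecast: Dict[str, float], capacity: Dict[str, float]) -> Dict[str, str]:
    """Step 5: flag services whose forecast exceeds their capacity."""
    return {svc: "scale_out" if load > capacity[svc] else "keep"
            for svc, load in forecast.items()}
```

Swapping `train_alpha`/`predict` for a trained GCN leaves steps 1, 2 and 5 unchanged, which is the point of separating the workflow into these stages.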

Figure 4: Illustration of the GNN Workload Prediction Process.

Algorithm 2: GNN Prediction Workflow.
1: Data Collection: Collect key metrics such as workloads, interactions, and resource usage.
2: Feature Extraction: Extract relevant features for each node.
3: Model Training: Train the GNN on the extracted features.
4: Real-Time Prediction: Predict future workloads based on the current system state.
5: Decision Making: Use the predictions to adjust resource allocation and load balancing.

4.4 Synthetic Dataset and Simulation-Based Training

To train the GNN model, we generated a synthetic dataset that captures typical microservices interactions, including latency variations, resource usage patterns, and fault responses. This simulated environment is designed to reflect real-world operational conditions, enabling the GNN to learn from a variety of scenarios.
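A generator for such a dataset might look like the sketch below. The paper does not describe its distributions, so every choice here is an assumption made for illustration: a periodic demand curve with Gaussian noise per service, CPU utilization proportional to load, latency that grows sharply as utilization approaches saturation, and a fault probability that rises under heavy load. A fixed seed makes the dataset reproducible.

```python
import math
import random
from typing import Dict, List

Sample = Dict[str, float]


def synthetic_metrics(services: List[str], steps: int, seed: int = 42) -> Dict[str, List[Sample]]:
    """Generate per-service time series of load, CPU, latency and fault flags.

    All distributions and constants below are illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded so the dataset is reproducible
    data: Dict[str, List[Sample]] = {}
    for offset, svc in enumerate(services):
        base = rng.uniform(50.0, 150.0)  # mean requests/s for this service
        series = []
        for t in range(steps):
            # Daily-like cycle (period 24 steps, phase-shifted per service) plus noise.
            cycle = 1.0 + 0.3 * math.sin(2 * math.pi * (t + offset) / 24)
            load = max(0.0, base * cycle + rng.gauss(0.0, 5.0))
            cpu = min(1.0, load / 200.0)  # utilization saturates at 100 %
            # Latency grows sharply as utilization approaches saturation (queueing-like).
            latency_ms = 10.0 / (1.0 - 0.9 * cpu) + rng.uniform(0.0, 2.0)
            # Fault probability: small baseline, rising steeply under heavy load.
            fault = 1.0 if rng.random() < 0.02 + 0.2 * cpu ** 4 else 0.0
            series.append({"load": load, "cpu": cpu, "latency_ms": latency_ms, "fault": fault})
        data[svc] = series
    return data
```

For example, `synthetic_metrics(["auth", "inventory", "payment", "order"], 48)` yields two simulated "days" of metrics for the four services of the use case.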

4.5 Evaluation Metrics and Performance

We evaluated GNN-MSOrchest using the following metrics to assess its effectiveness:

• Accuracy. Measures how closely the predicted load distribution matches the observed one.
• Response Time Reduction. Measures the improvement in service response times relative to a baseline.
• Load Distribution. Assesses how evenly the workload is spread across services.

These metrics provide insight into the orchestration efficiency of GNN-MSOrchest and help assess its scalability and adaptability to different microservices architectures.
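The paper does not give formulas for these metrics, so the functions below show one common, assumed choice for each: mean absolute error for prediction accuracy, the percentage drop in mean response time relative to a baseline, and Jain's fairness index for load distribution (1.0 for a perfectly even spread, 1/n when one service carries everything).

```python
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float], observed: Sequence[float]) -> float:
    """Accuracy proxy: average absolute gap between predicted and observed load."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)


def response_time_reduction(baseline_ms: Sequence[float], orchestrated_ms: Sequence[float]) -> float:
    """Percentage drop in mean response time relative to the baseline orchestrator."""
    base = sum(baseline_ms) / len(baseline_ms)
    new = sum(orchestrated_ms) / len(orchestrated_ms)
    return 100.0 * (base - new) / base


def jain_fairness(loads: Sequence[float]) -> float:
    """Load-distribution score in [1/n, 1]; 1.0 means perfectly even load."""
    total = sum(loads)
    squares = sum(x * x for x in loads)
    return (total * total) / (len(loads) * squares) if squares else 1.0
```

As a worked example, mean response times of 200 ms (baseline) and 170 ms (orchestrated) give 100 * (200 - 170) / 200 = 15%.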


This methodology highlights the strengths of GNN-MSOrchest in optimizing workload distribution, resource management, and system resilience in complex, large-scale microservices environments.

5 SIMULATION DESIGN AND SCALABILITY ANALYSIS

To evaluate GNN-MSOrchest’s performance, we built a simulation of an e-commerce microservices architecture consisting of four initial services: payment processing, user authentication, inventory management, and order fulfillment. This setup allows an initial assessment of the GNN’s capacity to manage interdependencies and resource distribution. Although limited to four services, this structure provides foundational insights into scalability; future work will extend it to larger and more complex systems.
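The paper builds its simulation with SimPy. To keep this sketch dependency-free, it instead uses the standard recursion for FIFO single-server queues in tandem (a request starts at a stage once it has arrived there and the worker is free), which models the same system as a SimPy model with one `simpy.Resource(env, capacity=1)` per service. The call order of the four services, the Poisson arrivals and the exponential service rates are assumptions; the paper does not specify them.

```python
import random
from typing import Dict, List

# Assumed call order for one checkout request (hypothetical).
PIPELINE: List[str] = ["auth", "inventory", "payment", "order_fulfillment"]


def simulate(n_requests: int, arrival_rate: float, service_rates: Dict[str, float],
             seed: int = 1) -> float:
    """Mean end-to-end response time of requests passing through PIPELINE.

    Each service is one FIFO worker with exponential service times and
    requests arrive as a Poisson process.
    """
    rng = random.Random(seed)
    arrivals: List[float] = []
    t = 0.0
    for _ in range(n_requests):
        t += rng.expovariate(arrival_rate)  # exponential inter-arrival gaps
        arrivals.append(t)
    ready = list(arrivals)  # time each request reaches the current stage
    for svc in PIPELINE:
        worker_free_at = 0.0
        for k, reached in enumerate(ready):
            start = max(reached, worker_free_at)  # wait for the single worker
            worker_free_at = start + rng.expovariate(service_rates[svc])
            ready[k] = worker_free_at  # departure = arrival at the next stage
    return sum(done - arr for done, arr in zip(ready, arrivals)) / n_requests
```

For example, `simulate(500, 1.0, {s: 2.0 for s in PIPELINE})` estimates the mean response time at 50% utilization per service; raising one service's rate shows how much added capacity at that stage shortens end-to-end latency.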

Scalability is further analyzed through computational complexity assessments, using Big O notation to evaluate the feasibility of the GNN model as the number of services increases. This analysis is instrumental in understanding GNN-MSOrchest’s behavior in high-load conditions.
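As a worked illustration of such an analysis (a standard estimate, not the authors' derivation, which the paper does not give), the cost of one forward pass of the GCN from Section 4.2 can be bounded as follows, with |V| services, |E| call edges, feature widths F and F', and the normalized adjacency stored as a sparse matrix. Computing HW first and then multiplying by the sparse adjacency is the cheaper order.

```latex
% One GCN layer  H' = \sigma(\hat{A} H W):
T_{\text{layer}} = \underbrace{O\!\left(|V|\,F\,F'\right)}_{\text{feature transform } HW}
                 + \underbrace{O\!\left(|E|\,F'\right)}_{\text{aggregation } \hat{A}(HW)}
% L layers of constant width F:
T_{\text{GCN}} = O\!\left(L\,\big(|V|\,F^{2} + |E|\,F\big)\right)
% Dense service mesh (every service calls every other):
|E| = |V|\,(|V|-1) = O\!\left(|V|^{2}\right), \quad \text{e.g. } 4 \cdot 3 = 12 \text{ directed edges for four services}
```

Under this estimate the cost grows linearly in the number of services and call edges for sparse dependency graphs, and becomes quadratic in |V| only when services are densely interconnected.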

6 SIMULATION-BASED EVALUATION AND RESULTS

The simulation-based evaluation is centered on three core metrics: load balancing efficiency, response time improvement, and fault detection effectiveness. A comparative analysis with traditional heuristic-based and non-ML approaches highlights GNN-MSOrchest’s ability to manage complex interdependencies effectively, even under heavy load conditions.

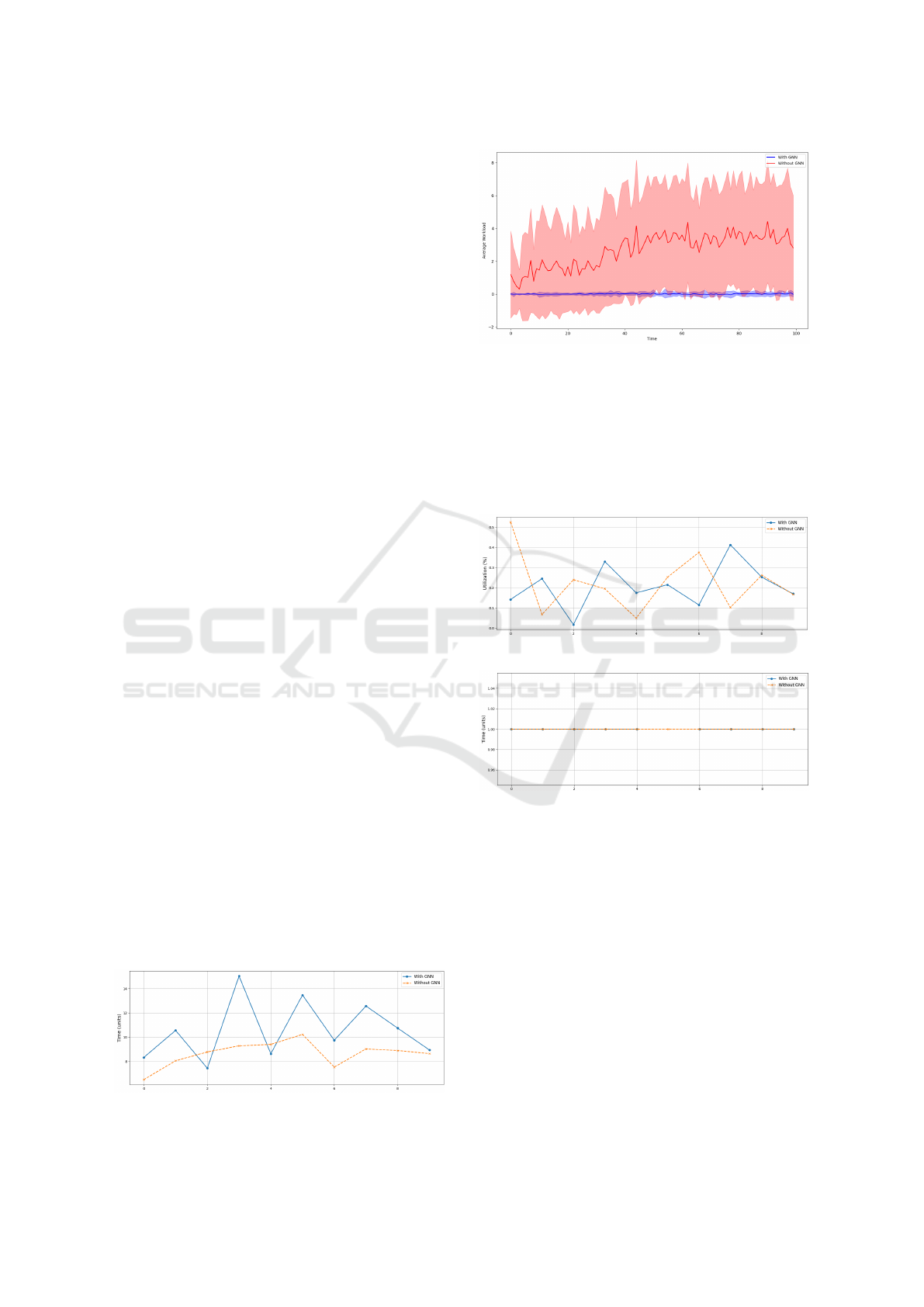

Preliminary results indicate a 15% reduction in

service response times and more balanced load distri-

bution across nodes, demonstrating the GNN’s poten-

tial advantages. Figure 5 displays workload manage-

ment over time, showing stabilized results with GNN

integration.

Figure 6: Response Time Comparison.

Figure 5: Average workload over time.

Figures 5 and 6 highlight that GNN-MSOrchest significantly stabilizes and optimizes workload distribution, effectively mitigating spikes and maintaining consistent response times across services. The integration of GNN-based orchestration also demonstrates improved resource utilization and fault recovery, as depicted in Figures 7 and 8.

Figure 7: Resource Utilization Comparison.
Figure 8: Failure Recovery Comparison.

7 FUTURE WORK AND REAL-WORLD APPLICATIONS

The potential of Graph Neural Networks (GNNs) in microservices orchestration paves the way for extensive future research. Key focus areas include adapting GNN-MSOrchest for real-world applications, improving computational efficiency, and enabling real-time decision-making. Future work could involve deploying GNN-MSOrchest in real-world scenarios, benchmarking its performance against current orchestration frameworks, and integrating reinforcement learning to enhance adaptability, allowing dynamic responses to real-time changes in workload and resource demands.


Using a GNN for microservices orchestration is a promising and viable approach, but it should be viewed as a complement to existing orchestration tools rather than a full replacement. By analyzing relationships between microservices and predicting their behavior, GNNs can support intelligent decision-making. However, their effective use requires integration with tools like Kubernetes, which execute the orchestration actions.
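One way to connect a workload forecast to Kubernetes is to feed it into the proportional rule documented for the Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), and then apply the result to the Deployment (for instance with `kubectl scale deployment <name> --replicas=<n>`). The function below is a hypothetical sketch of that glue: it uses the predicted rather than the observed load, and the per-replica target and min/max bounds are assumptions; the paper does not describe its integration.

```python
import math


def desired_replicas(current_replicas: int, predicted_load: float,
                     target_load_per_replica: float, tolerance: float = 0.1,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count for a forecast load, using the Kubernetes HPA scaling rule.

    HPA computes ceil(current * currentMetric / targetMetric) and skips scaling
    when the ratio is within the tolerance (0.1 by default); here the metric is
    the GNN's predicted load per replica instead of the observed one.
    """
    if current_replicas < 1 or target_load_per_replica <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = (predicted_load / current_replicas) / target_load_per_replica
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, with 2 replicas, a target of 100 requests/s per replica and a forecast of 300 requests/s, the function returns 3, so capacity can be added before the load actually arrives.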

Moving forward, our primary objective is to integrate GNNs with existing orchestration tools to enhance the management and orchestration of microservices.

8 CONCLUSION

In this paper, we have explored the innovative use of Graph Neural Networks (GNNs) for microservice orchestration, demonstrating significant advancements in performance, scalability, and fault tolerance. Unlike traditional approaches that focus solely on transitioning from monolithic architectures to microservices, our method uniquely incorporates GNNs specifically for the orchestration process, highlighting their role in real-time performance enhancement and resource optimization.

Central to our approach is the use of SimPy, a robust discrete event simulation framework in Python, which allows for precise modeling and analysis of complex interactions within microservice architectures. By simulating various operational scenarios, including peak loads and failure conditions, SimPy provides a risk-free environment to test and validate our GNN-based orchestration mechanisms. This simulation-based design process is crucial for understanding the dynamic behaviors and potential bottlenecks within the system, enabling targeted optimizations that improve overall system performance and resilience.

The results from our simulations underscore the transformative potential of integrating GNNs into microservice orchestration. The GNN’s workload predictions enable microservices to take adaptive actions, ensuring responsive and efficient operations even in dynamic environments. This adaptability significantly enhances the system’s ability to handle fluctuating workloads, improve user experience, and maintain service reliability. Our findings demonstrate that GNNs, when combined with detailed simulations using SimPy, lead to better resource utilization, reduced response times, and improved failure recovery. Moreover, this paper sets a new precedent for the orchestration of microservices, moving beyond traditional methodologies to incorporate cutting-edge machine learning techniques.

Future work will focus on integrating real-time data streams into the GNN model, exploring its application across various domains, and further enhancing system scalability to meet the growing complexity of modern applications.

REFERENCES

Bhatti, U. A., Tang, H., Wu, G., Marjan, S., and Hussain, A. (2023). Deep learning with graph convolutional networks: An overview and latest applications in computational intelligence. International Journal of Intelligent Systems, 2023(1):8342104.

Brody, S., Alon, U., and Yahav, E. (2021). How attentive are graph attention networks? arXiv preprint arXiv:2105.14491.

Gilmer, J., Schoenholz, S. S., Riley, P. F., Vinyals, O., and Dahl, G. E. (2020). Message passing neural networks. Machine learning meets quantum physics, pages 199–214.

Hamilton, W., Ying, Z., and Leskovec, J. (2017). Inductive representation learning on large graphs. Advances in neural information processing systems, 30.

He, X., Shao, Z., Wang, T., Shi, H., Chen, Y., and Wang, Z. (2023). Predicting effect and cost of microservice system evolution using graph neural network. In International Conference on Service-Oriented Computing, pages 103–118. Springer.

Min, E., Chen, R., Bian, Y., Xu, T., Zhao, K., Huang, W., Zhao, P., Huang, J., Ananiadou, S., and Rong, Y. (2022). Transformer for graphs: An overview from architecture perspective. arXiv preprint arXiv:2202.08455.

Oh, J., Cho, K., and Bruna, J. (2019). Advancing graphsage with a data-driven node sampling. arXiv preprint arXiv:1904.12935.

Sun, D., Tam, H., Liu, Y., Xu, H., Xie, S., and Lau, W. C. (2023). Pert-gnn: Latency prediction for microservice-based cloud-native applications via graph neural networks. Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 29:2155–2165.

Tran, D. H., Sheng, Q. Z., Zhang, W. E., Aljubairy, A., Zaib, M., Hamad, S. A., Tran, N. H., and Khoa, N. L. D. (2021). Hetegraph: graph learning in recommender systems via graph convolutional networks. Neural computing and applications, pages 1–17.

Yun, S., Jeong, M., Yoo, S., Lee, S., Sean, S. Y., Kim, R., Kang, J., and Kim, H. J. (2022). Graph transformer networks: Learning meta-path graphs to improve gnns. Neural Networks, 153:104–119.

Zhang, S., Tong, H., Xu, J., and Maciejewski, R. (2019). Graph convolutional networks: a comprehensive review. Computational Social Networks, 6(1):1–23.
