Exploit the Leak: Understanding Risks in Biometric Matchers

Dorine Chagnon¹, Axel Durbet¹ᵃ, Paul-Marie Grollemund²ᵇ and Kevin Thiry-Atighehchi¹,∗,ᶜ

¹ University Clermont Auvergne, LIMOS (UMR 6158 CNRS), Clermont-Ferrand, France

² University Clermont Auvergne, LMBP (UMR 6620 CNRS), Clermont-Ferrand, France

∗

Keywords:

Privacy-Preserving Distance, Hamming Distance, Information Leakage, Biometric Security.

Abstract:

In a biometric authentication or identification system, the matcher compares a stored and a fresh template to

determine whether there is a match. This assessment is based on both a similarity score and a predefined

threshold. For better compliance with privacy legislation, the matcher can be built upon a privacy-preserving

distance. Beyond the binary output (‘yes’ or ‘no’), most schemes may perform more precise computations, e.g.,

the value of the distance. Such precise information is prone to leakage even when not returned by the system.

This can occur due to a malware infection or the use of a weakly privacy-preserving distance, exemplified by

side channel attacks or partially obfuscated designs. This paper provides an analysis of information leakage

during distance evaluation. We provide a catalog of information leakage scenarios with their impacts on data

privacy. Each scenario gives rise to unique attacks with impacts quantified in terms of computational costs,

thereby providing a better understanding of the security level.

1 INTRODUCTION

Biometric authentication protocols involve the com-

parison of a fresh biometric template with the refer-

ence template. This comparison computes the dis-

tance between the newly acquired data and the stored

template. If this distance is below a given thresh-

old, access is granted; otherwise, it is denied. Ham-

ming distance is a widely used metric in biomet-

ric applications e.g., biohashing (Patel et al., 2015;

Bernal-Romero et al., 2023), iriscode (Daugman,

2009; Dehkordi and Abu-Bakar, 2015; Daugman,

2015), face recognition (Yang and Wang, 2007; He

et al., 2015), gait recognition (Tran et al., 2017),

keystroke (Rahman et al., 2021), ear authentica-

tion (Wang et al., 2021) and palm-vein recogni-

tion (Cho et al., 2021). Computing this distance may

inadvertently leak information that adversaries might

exploit to reconstruct the stored template. These

vulnerabilities may arise from implementation er-

rors, inherent flaws, and server-level attacks such

as malware (Sharma et al., 2023), which can compromise system-wide security. Furthermore, Aydin and Aysu (Aydin and Aysu, 2024) and Hashemi et al. (Hashemi et al., 2024) have highlighted an increasing prevalence of side-channel attacks. Side-channel techniques, including timing, differential power analysis, cache-based, electromagnetic, acoustic, and thermal attacks, exploit various operational artifacts to extract sensitive information (Sharma et al., 2023).

a https://orcid.org/0000-0002-4420-1934

b https://orcid.org/0000-0002-1273-1658

c https://orcid.org/0000-0003-0042-8771

One of the concerns is the partial or total leakage

of distance computation information. Such informa-

tion leakage poses significant security and privacy

risks, especially in sensitive applications like privacy-

preserving applications (e.g., biometric recognition

systems). In this paper, we focus on the following

attacks:

• Offline exhaustive search attacks refer to scenar-

ios for which a leaked yet obfuscated database is

available for an attacker. The attacker employs the

public transformation to verify a candidate vector.

This verification may give additional information

beyond the minimal information leakage (‘yes’ or

‘no’), for example via side-channel attacks.

• Online exhaustive search attacks correspond to at-

tacks for which an attacker must interact with the

biometric system to infer information about the

targeted vector. Then, the attacker needs to force the system to leak additional information beyond the minimal information leakage (‘yes’ or ‘no’), for example via a malware infection.

Chagnon, D., Durbet, A., Grollemund, P.-M. and Thiry-Atighehchi, K.

Exploit the Leak: Understanding Risks in Biometric Matchers.

DOI: 10.5220/0013250600003899

In Proceedings of the 11th International Conference on Information Systems Security and Privacy (ICISSP 2025) - Volume 2, pages 353-362

ISBN: 978-989-758-735-1; ISSN: 2184-4356

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Related Works. To the best of our knowledge, two papers investigate information leakage of biometric systems using privacy-preserving distance. Pagnin et al. (Pagnin et al., 2014) show that the output of a privacy-preserving distance can be exploited to infer the hidden input. This type of attack is considered the most devastating for such systems, as evidenced by Simoens et al. (Simoens et al., 2012). The work of Pagnin et al. takes place in the minimal leakage scenario, wherein only the binary output of the biometric system is given to the attacker. The authors present the Center Search Attack, designed to recover the hidden enrolled input from any ‘valid’ biometric template in $\mathbb{Z}_q^n$, where ‘valid’ refers to inputs within a ball centered at the enrolled template and with a radius equal to the decision threshold t. To efficiently locate a valid input, the authors also examine the exhaustive search attack, particularly its application on binary templates (q = 2). They suggest implementing a sampling without replacement strategy using their Tree algorithm to streamline the identification of a suitable input for the Center Search Attack. Identifying such an input requires a number of authentication attempts that is exponential in the space dimension n minus the threshold t. While their work focuses on the minimal leakage scenario, our analysis includes the consideration of multiple additional information leaks that may arise during the matching operation.

Contributions. We analyze the impact of poten-

tial information leakage in distance evaluations. Our

contributions detail various leakage scenarios, their

corresponding generic attacks, and the computational

costs involved:

• We revisit the exhaustive search attack in the sce-

nario of a minimal (one-bit) information leak-

age, correcting a previous result (see (Pagnin

et al., 2014)) about the costs of optimal and near-

optimal strategies and include additional informa-

tion on cases that are not well-detailed in the lit-

erature.

• We introduce new attack strategies by malicious

clients that exploit various levels of non-minimal

information leaks from the system. Our complex-

ity results, which detail the cost of these attacks,

apply to both offline exhaustive search attacks that

leverage a leaked (yet obfuscated) database and

online exhaustive search attacks involving direct

interactions with the server.

• We investigate a novel attack, named accumula-

tion attack, where an honest-but-curious server

accumulates knowledge during client authentica-

tion. This type of attack occurs when there is a

minor, yet non-negligible, amount of information

leakage.

The complexities of the attacks, relying on differ-

ent scenarios, are summarized in Table 1.

Outline. Section 2 introduces notations and termi-

nologies and classifies the different types of informa-

tion leakages. Section 3 begins by revisiting the ex-

haustive search attack in the minimal (one-bit) infor-

mation leakage scenario, including a correction of a

previously cited result concerning the costs of optimal

and near-optimal strategies. It then introduces new

strategies for attacks by malicious clients capturing

various other types of information leakages, cover-

ing both offline and online exhaustive search attacks,

with an emphasis on their computational costs. The

section concludes by examining accumulation attacks

performed by an “honest-but-curious” server during

client authentication, detailing the computational cost

involved. Section 4 provides a discussion of the pre-

sented results.

2 PRELIMINARIES

This section introduces the notations, the attacker model, and the list of the considered information leakage scenarios.

2.1 Notations and Attacker Models

Let $\mathbb{Z}_q^n = \{0, \ldots, q-1\}^n$ be a metric space equipped with the Hamming distance $d$, and let $\varepsilon \in \mathbb{N}$ be a threshold. The Hamming distance is defined by

$$d(x, y) = \left|\{\, i \in \{1, \ldots, n\} \mid x_i \neq y_i \,\}\right|$$

for two vectors $x = (x_1, \ldots, x_n)$ and $y = (y_1, \ldots, y_n)$ in $\mathbb{Z}_q^n$. Let $\mathrm{Match}_{x,\varepsilon}$ denote the oracle modeling the interaction between the biometric system using a privacy-preserving distance and the attacker. $\mathrm{Match}_{x,\varepsilon}$ receives the template selected by the attacker and compares it with the previously enrolled and stored template. If the distance does not exceed the threshold $\varepsilon$, the oracle returns 1, and 0 otherwise. More formally, $\mathrm{Match}_{x,\varepsilon}$ is a function defined as:

$$\mathrm{Match}_{x,\varepsilon} : \mathbb{Z}_q^n \longrightarrow \{0, 1\}, \qquad y \longmapsto \begin{cases} 1 & \text{if } d(x, y) \le \varepsilon, \\ 0 & \text{otherwise.} \end{cases}$$
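The two definitions above can be made concrete with a few lines of Python. This is only an illustrative sketch: the names `hamming` and `make_match` are ours, and templates are modeled as plain tuples of integers in {0, ..., q − 1}.

```python
from typing import Callable, Sequence


def hamming(x: Sequence[int], y: Sequence[int]) -> int:
    """Number of coordinates on which x and y differ."""
    if len(x) != len(y):
        raise ValueError("templates must have the same length")
    return sum(a != b for a, b in zip(x, y))


def make_match(x: Sequence[int], eps: int) -> Callable[[Sequence[int]], int]:
    """Build the minimal-leakage oracle for a hidden template x and threshold eps."""
    def match(y: Sequence[int]) -> int:
        # Only the binary decision is returned, never the distance itself.
        return 1 if hamming(x, y) <= eps else 0
    return match


enrolled = (0, 1, 3, 2, 2)           # hypothetical enrolled template in Z_4^5
match = make_match(enrolled, eps=2)
print(match((0, 1, 0, 2, 1)))        # two differing coordinates: accepted
print(match((1, 0, 0, 2, 1)))        # four differing coordinates: rejected
```

The leakage scenarios studied later correspond to oracles that return more than this single bit.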

A privacy-preserving distance may leak additional

information beyond its binary output. Under the spec-

ifications of each scenario, the oracle may display this

ICISSP 2025 - 11th International Conference on Information Systems Security and Privacy

354

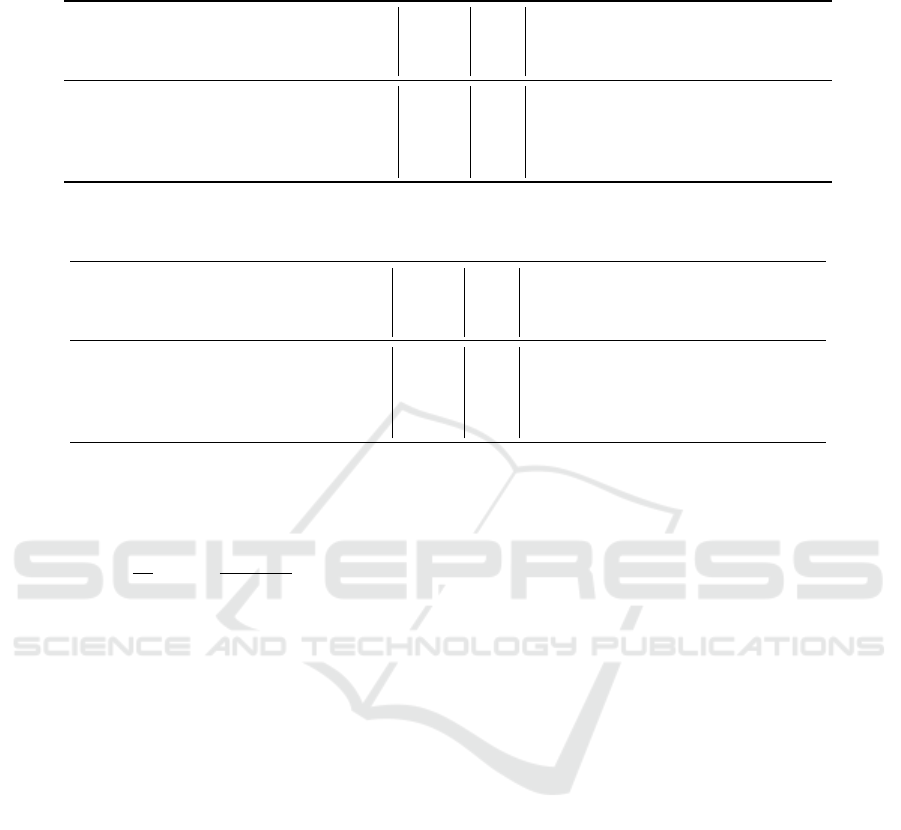

Table 1: Summary of all leakage exploits and their complexities with α such that the occurrence of the rarest error is n

−α

with

α ∈ R

≥1

. The Distance-to-Threshold comparison determines if the leak occurs when d(x,y) ≤ ε (below) or when there is no

distance requirement between x and y (both). For all the complexities, x and y are in Z

n

q

with q ≥ 2 except for the minimal

leakage where x and y are in Z

n

2

. The provided complexities represent worst-case scenarios, except for the accumulation attack

where the result is the expectation.

Distance-to-Threshold comparison Leakage Complexity type Complexity in Big-Oh Theorem

Below

Distance Exponential q

n−ε

+ qε 3.2

Positions Exponential q

n−ε

+ q 3.3

Positions and values Exponential q

n−ε

3.4

Positions and values (accumulation) Linearithmic/Polynomial n

α

logn 3.9

Both

Minimal

1

Exponential q

n−ε

+ n(q − 1) + 2ε 3.5

Distance Linear nq 3.6

Positions Constant q 3.7

Positions and values Constant 1 3.8

1

Note that the Big-Oh complexity of the optimal exhaustive search strategy, in the worst-case, is the same as the naive strategy

as the minimum of h(·) is 0.

additional information. The objective of the attacker

is to find the hidden template x exploiting the oracle

outputs. In the context of a biometric system, the ob-

jective of the attacker may be relaxed to simply find y

that is close to x with respect to d and ε.

2.2 Typology of Information Leakage

In the context of a biometric system, a critical vul-

nerability arises when information is intercepted be-

tween the matcher and the decision module, as illus-

trated in Figure 1 (point 8). This figure, inspired by

Ratha et al. (Ratha et al., 2001), provides an overview

of the attack points in biometric systems while intro-

ducing both the decision module and two additional

attack points. Except for the accumulation attack, the

attacker exploits points 4 and 8 in all discussed sce-

narios. Point 4 allows the submission of a chosen

template, while point 8 grants access to additional information beyond the binary output. The accumulation attack only requires control over point 8.

For detailed insights into the remaining attack points,

readers are referred to Ratha et al. (Ratha et al., 2001).

There are three main categories of information leak-

age: Below the threshold; Above the threshold; Both

below and above the threshold.

In each of these categories, several sub-settings can be identified. The first one corresponds to the absence of any leakage, resulting in $\mathrm{Match}_{x,\varepsilon}$ yielding only the binary output. Then, the following information leakages are examined:

• The distance.

• The positions of the errors.

• Both the error positions and values.

• Both the distance and the positions of the errors.

• Both the distance and the positions and their cor-

responding erroneous values.

It is not relevant to consider that additional informa-

tion is leaked only above the threshold, as no scheme

has such behavior. As a consequence, solely scenar-

ios ‘below the threshold’ and ‘below and above the

threshold’ are examined. The Hamming distance is a measure of the number of differing coordinates between two templates. Therefore, knowledge of the erroneous coordinates implies knowledge of the distance itself. Hence, the scenarios that combine the distance with the error positions bring nothing beyond the positions alone, and we do not consider all possible scenarios.

3 EXPLOITING THE LEAKAGE

This section provides a comprehensive analysis of the

attacks that can be performed in each leakage sce-

nario, along with an evaluation of their complexity.

3.1 Active Attacks

This section focuses on active attacks, i.e., attacks where the attacker submits templates to the oracle $\mathrm{Match}_{x,\varepsilon}$.

3.1.1 Attack Complexity for the Minimal

(One-Bit) Leakage

In this section, the attacker aims to find a template

that lies in the ball of center x (the target template)

and radius ε (the threshold). To identify such a point,

several methods are available, each with its own set of

advantages and disadvantages.

Brute Force. The objective of this attack is to exhaustively test all possible templates until the oracle $\mathrm{Match}_{x,\varepsilon}$ yields 1. In the worst case, every template is tested, which results in the examination of $q^n$ vectors. This count ignores the acceptance threshold $\varepsilon$. If, on the other hand, we consider that only $n - \varepsilon$ exact coordinates are needed to be accepted by the system, the complexity decreases to $q^{n-\varepsilon}$ tests: the attacker arbitrarily chooses $\varepsilon$ coordinates that do not change and aims for a perfect match on the $n - \varepsilon$ remaining coordinates.

[Figure 1: Attack points in a generic biometric recognition system. Components: Biometric Sensor, Feature Extractor, Matcher, System Database, Decision Module, Application Device. Attack points: 1. Override Sensor; 2. Replay Old Data; 3. Override Feature Extractor; 4. Channel Attack; 5. Override Matcher; 6. Modify Database; 7. Channel Attack; 8. Intercept or Falsify Matching Information; 9. Override Decision.]
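The reduced brute-force search can be sketched as follows for toy parameters. The function name `exhaustive_search`, the choice of freezing the last $\varepsilon$ coordinates to 0, and the example values are assumptions made for this illustration; any fixed choice of frozen coordinates works the same way.

```python
from itertools import product


def hamming(x, y):
    """Number of coordinates on which x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def exhaustive_search(match, n, eps, q):
    """Enumerate the n - eps free coordinates while eps coordinates stay frozen.

    Returns the first accepted candidate and the number of oracle queries.
    The candidate that agrees with the hidden template on all free coordinates
    is at distance at most eps, so at most q**(n - eps) queries are needed.
    """
    queries = 0
    for head in product(range(q), repeat=n - eps):
        candidate = list(head) + [0] * eps   # frozen coordinates, arbitrary value
        queries += 1
        if match(candidate):
            return candidate, queries
    return None, queries


hidden = [2, 0, 1, 1, 2]                     # hypothetical hidden template in Z_3^5
eps, q = 2, 3
match = lambda y: hamming(hidden, y) <= eps  # minimal-leakage oracle
found, used = exhaustive_search(match, len(hidden), eps, q)
print(found, used)
```

The search only guarantees acceptance; the accepted vector generally still differs from the hidden template on some coordinates.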

Random Sampling. The attacker randomly chooses a template in $\mathbb{Z}_q^n$ and tests it by querying the oracle $\mathrm{Match}_{x,\varepsilon}$. The precise complexity of this strategy has not been assessed in the literature. The worst case for the attacker occurs when the templates are uniformly distributed in $\mathbb{Z}_q^n$. The probability that a template submitted to $\mathrm{Match}_{x,\varepsilon}$ yields 1 is $\rho = \frac{|B_{q,\varepsilon}(x)|}{q^n}$, where $B_{q,\varepsilon}(x)$ denotes the ball of center $x$ and radius $\varepsilon$. According to this naive strategy, we can assume that the tests are independent and that each is modeled as a Bernoulli experiment with a success probability of $\rho$. The number of tries needed to obtain the first success follows a geometric distribution. Hence, the expected number of tries for an attacker to get accepted by the system is $\rho^{-1}$. First, recall that the cardinality of $B_{q,\varepsilon}(x)$ is

$$|B_{q,\varepsilon}(x)| = \sum_{i=0}^{\varepsilon} \binom{n}{i} (q-1)^i,$$

and that the q-ary entropy is $h_q(x) = x \log_q(q-1) - x \log_q x - (1-x) \log_q(1-x)$. Then, using the Stirling approximation (see (Timothée and Ramanna, 2016; Thomas and Joy, 2006)), the expected number of tries for an attacker is

$$\rho^{-1} = \frac{q^n}{|B_{q,\varepsilon}(x)|} = \frac{q^n}{\sum_{i=0}^{\varepsilon} \binom{n}{i} (q-1)^i} \le \frac{q^n}{q^{n h_q(\varepsilon/n) + o(n)}} = q^{n(1 - h_q(\varepsilon/n)) + o(n)}$$

if $\frac{\varepsilon}{n} \le 1 - \frac{1}{q}$ holds, and if $n$ is large enough.

Random Sampling Without Point Replacement. As in random sampling, the attacker randomly chooses a template in the set $S \subseteq \mathbb{Z}_q^n$. At each step, if $\mathrm{Match}_{x,\varepsilon}$ returns 0, the tested vector $b$ is removed from the set $S$. The probability of success does not remain constant throughout the experiment, unlike in the previous case. Consequently, the experiment follows a hypergeometric distribution. This game is equivalent to having an urn with $q^n$ objects, of which $|B_{q,\varepsilon}(x)|$ are considered ‘good’. Then, according to Ahlgren (Ahlgren, 2014), the expected number of queries to $\mathrm{Match}_{x,\varepsilon}$ before success is given by

$$\frac{q^n + 1}{|B_{q,\varepsilon}(x)| + 1} \approx \rho^{-1}.$$

This attack has a slightly better performance compared to the previous one, although it is accompanied by an exponential memory cost that reduces its efficiency, making this version less interesting than the previous one.

Remark 3.1.1. In the case of random sampling, if

the value of n is large, it is preferable to select a draw

with replacement to save memory while maintaining

a high degree of performance. Indeed, the probability

of drawing a vector that has already been selected is

relatively small if n is sufficiently large.
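The two expectations above can be evaluated exactly with arbitrary-precision integers. The sketch below is illustrative only; the function names are ours, and the formulas are the ball cardinality, $\rho^{-1}$, and the urn expectation as stated in the text.

```python
from fractions import Fraction
from math import comb, log2


def ball_size(n: int, eps: int, q: int) -> int:
    """Cardinality of a Hamming ball of radius eps in Z_q^n."""
    return sum(comb(n, i) * (q - 1) ** i for i in range(eps + 1))


def expected_with_replacement(n: int, eps: int, q: int) -> Fraction:
    """Expected number of tries rho^-1 = q^n / |B| (geometric distribution)."""
    return Fraction(q ** n, ball_size(n, eps, q))


def expected_without_replacement(n: int, eps: int, q: int) -> Fraction:
    """Expected number of draws (q^n + 1) / (|B| + 1) from the urn model."""
    return Fraction(q ** n + 1, ball_size(n, eps, q) + 1)


def log2_of(value: Fraction) -> float:
    """Base-2 logarithm of a possibly huge fraction, computed on exact integers."""
    return log2(value.numerator) - log2(value.denominator)


# Toy parameters: n = 4, eps = 1, q = 2, so |B| = 1 + 4 = 5.
print(expected_with_replacement(4, 1, 2))     # 16/5
print(expected_without_replacement(4, 1, 2))  # 17/6
print(log2_of(expected_with_replacement(64, 16, 2)))
```

The same functions accept larger parameters, such as those listed in Table 2, because all intermediate values stay exact integers until the final logarithm.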

Tree Search. This algorithm was proposed by Pagnin et al. (Pagnin et al., 2014). The underlying idea is to construct a tree of depth $n$ such that each point of the space is considered to be a leaf. The tree structure is utilized to establish relative relations among the points of $\mathbb{Z}_q^n$ and to guarantee that after each unsuccessful trial, non-overlapping portions of the space $\mathbb{Z}_q^n$ can be removed. Specifically, if a point $p \in \mathbb{Z}_q^n$ does not satisfy the authentication, the algorithm removes not only the tested point $p$ from the set of potential centers but also its sibling relatives generated by the common ancestor at height $\varepsilon$ (i.e., the subtree of height $\varepsilon$ covering these siblings is removed). At each attempt, the attacker can remove approximately $q^{\varepsilon}$ templates from the search space (for more details, please refer to (Pagnin et al., 2014)). The running time of the attack is the cost of exploring a q-ary tree of order $n - \varepsilon$.

Remark 3.1.2. It should be noted that, as intended,

the cost of all the presented attacks is exponential.

Optimal Solution. The optimal solution is to solve the set-covering problem (Korte et al., 2011) using balls of radius $\varepsilon$. The main idea is to cover the space with the smallest number of balls of radius $\varepsilon$, so that an entire ball of radius $\varepsilon$ is removed whenever a query fails. Pagnin et al. (Pagnin et al., 2014) claimed that the number of points that the adversary needs to query is only a factor of $O(\varepsilon \ln(n + 1))$ more than the optimal cover. However, this result is imprecise, as detailed below, mainly because the optimal cover is not given.

Theorem 3.1. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and the oracle $\mathrm{Match}_{x,\varepsilon}$, an attacker with the optimal strategy can retrieve $x$ in $q^{n(1 - h_q(\varepsilon/n)) + o(n)}$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. The strategies of a bounded and an unbounded adversary may differ, as detailed in the following:

• Unbounded Adversary: The adversary solves the NP-hard set covering problem (Korte et al., 2011) to find the optimal covering of $\mathbb{Z}_q^n$ using balls of radius $\varepsilon$. The adversary exhaustively searches $x$ using at most $q^{n(1 - h_q(\varepsilon/n)) + o(n)}$ queries to $\mathrm{Match}_{x,\varepsilon}$. The number of vectors involved in a given optimal cover is $\frac{q^n}{|B_{q,\varepsilon}(x)|}$, which can be asymptotically approximated as follows. Using bounds on the binomial coefficient (see (Thomas and Joy, 2006; Timothée and Ramanna, 2016)), the result follows if $\frac{\varepsilon}{n} \le 1 - \frac{1}{q}$ holds and if $n$ is large enough.

• Bounded Adversary: The adversary may use a greedy algorithm to find a non-optimal covering containing $\frac{q^n H(n)}{|B_{q,\varepsilon}|}$ vectors (Chvatal, 1979), with $H(n) = \sum_{i=1}^{n} i^{-1}$ the n-th harmonic number. The adversary then finds a solution with an exhaustive search in at most $\frac{q^n H(n)}{|B_{q,\varepsilon}|}$ queries. To provide a more intuitive value, notice that $\frac{q^n H(n)}{|B_{q,\varepsilon}|}$ can be upper bounded by $\frac{q^n (\ln(n) + 1)}{|B_{q,\varepsilon}|}$. As in the unbounded case, using the q-ary entropy and Stirling’s approximation, this non-optimal covering leads the attacker to make at most $q^{n(1 - h_q(\varepsilon/n)) + o(n)}$ queries, as $\log_q(\ln(n) + 1) = o(n)$.

Then, in both cases, the number of queries is $q^{n(1 - h_q(\varepsilon/n)) + o(n)}$ and the result follows. ■

Table 2: Expected number of calls to the oracle for the exhaustive search method Random Sampling with Replacement (RSR). Examples with real biometric systems with q = 2.

| System | n | ε | RSR ($\log_2$) |
|---|---|---|---|
| IrisCode (Daugman, 2009) | 2,048 | 738 | 121.37 |
| IrisCode (Daugman, 2009) | 2,048 | 656 | 199.94 |
| IrisCode (Daugman, 2009) | 2,048 | 574 | 300.24 |
| FingerCode (Harikrishnan et al., 2024) | 80 | 30 | 5.92 |
| BioHashing (Belguechi et al., 2013) | 180 | 60 | 17.74 |
| BioEncoding (Ouda et al., 2010) | 350 | 87 | 70.62 |
| BioEncoding (Ouda et al., 2010) | 350 | 105 | 45.18 |

Remark 3.1.3. The time required to configure the greedy algorithm is exponential, rendering the aforementioned attack impractical. Moreover, even if an attacker computes the optimal covering, the attacker still needs to query $\mathrm{Match}_{x,\varepsilon}$ an exponential number of times to find a point close to $x$.

It is also interesting to note that the expected time for an attacker to be accepted by the system using random sampling, with or without replacement, is of the same order as the worst case using the optimal method.

Example of Expectations for the Random Sam-

pling. To illustrate the influence of the threshold

and the choice of q on exhaustive search, we calcu-

late the precise expectation of the number of attempts

required for an attacker to successfully impersonate

the user in different settings using the random sam-

pling method. The results are presented in Table 2.

Experimental results show that, to increase the security against exhaustive search, it is more effective to increase q than to decrease ε.

3.1.2 Attack Complexities for Leakage Below

the Threshold

Leakage below the threshold is considered in this section. Given the hidden target $x$, querying the oracle $\mathrm{Match}_{x,\varepsilon}$ with a vector $y$ such that $d(x, y) \le \varepsilon$ provides information beyond the binary output.

Leakage of the Distance. The first case occurs

when the distance is given to the attacker as extra in-

formation.


Theorem 3.2. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and an oracle $\mathrm{Match}_{x,\varepsilon}$ that leaks the distance below the threshold, an attacker can retrieve $x$ in the worst case in $O(q^{n-\varepsilon} + q\varepsilon)$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. The system, using the Hamming distance, requires a minimum of $n - \varepsilon$ accurate coordinates to output 1. Since the attacker specifically targets $n - \varepsilon$ coordinates (the attacker arbitrarily chooses $\varepsilon$ coordinates that do not change), an exhaustive search attack is performed in at most $q^{n-\varepsilon}$ steps to get accepted by the system. Then, a hill-climbing attack runs on the remaining $\varepsilon$ coordinates to minimize the distance at each step. Coordinate by coordinate, the attacker obtains the right value when the distance decreases. Since there are $q$ different values to test on $\varepsilon$ coordinates, determining the correct ones requires a maximum of $(q - 1)\varepsilon$ steps. Then, the overall complexity is $O(q^{n-\varepsilon} + q\varepsilon)$. ■
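The hill-climbing phase of the proof can be sketched as follows. The oracle model (returning the distance when it is at most $\varepsilon$ and `None` otherwise) and all names are assumptions made for this illustration. For simplicity the sketch scans every coordinate of an already accepted vector, which costs at most $1 + n(q - 1)$ queries, rather than restricting itself to the $\varepsilon$ frozen coordinates as in the proof.

```python
def hamming(x, y):
    """Number of coordinates on which x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def make_leaky_oracle(x, eps):
    """Oracle leaking the distance only when the query is accepted."""
    def oracle(y):
        d = hamming(x, y)
        return d if d <= eps else None
    return oracle


def hill_climb(oracle, accepted, q):
    """Recover the hidden template from an accepted vector using the leaked distance."""
    current = list(accepted)
    best = oracle(current)
    queries = 1
    if best is None:
        raise ValueError("the starting vector must be accepted by the oracle")
    for i in range(len(current)):
        if best == 0:
            break                        # all coordinates are already correct
        original = current[i]
        for value in range(q):
            if value == original:
                continue
            current[i] = value
            leaked = oracle(current)
            queries += 1
            if leaked is not None and leaked < best:
                best = leaked            # the distance dropped: value is correct
                break
        else:
            current[i] = original        # no improvement: the original was correct
    return current, queries


hidden = [0, 1, 3, 2, 2]                 # hypothetical hidden template in Z_4^5
oracle = make_leaky_oracle(hidden, eps=2)
recovered, used = hill_climb(oracle, [0, 1, 0, 2, 1], q=4)
print(recovered, used)
```

A coordinate is kept only when the leaked distance strictly decreases, so a rejected query (no leak) and an unchanged distance are both treated as a wrong guess.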

Leakage of the Positions. The positions of the errors are the extra information given to the attacker, while their values remain secret.

Theorem 3.3. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and an oracle $\mathrm{Match}_{x,\varepsilon}$ that leaks the positions of the errors below the threshold, an attacker can retrieve $x$ in the worst case in $O(q^{n-\varepsilon} + q)$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. As the leakage occurs solely below the threshold, the first step is to find a vector $y \in \mathbb{Z}_q^n$ such that $d(x, y) \le \varepsilon$. To identify such a vector, the attacker performs an exhaustive search attack in $q^{n-\varepsilon}$ steps, as previously shown. Since $\varepsilon$ coordinates remain unknown, and each coordinate ranges from 0 to $q - 1$, every possibility must be examined. By testing all possibilities simultaneously (for instance, setting all unknown coordinates to 0, then to 1, and so forth up to $q - 2$, while retaining the correct values), the original vector can be identified in no more than $q - 1$ queries (refer to the example illustrated in Figure 2). Therefore, the complexity of the attack for recovering $x$ is $O(q^{n-\varepsilon} + q)$. ■

Figure 2 gives a representation of the attack described above in the case $\mathbb{Z}_4^5$, where the hidden vector (or its missing coordinates) is (0, 1, 3, 2, 2). Note that the actual complexity is $q - 1$ since the final exchange is unnecessary, as the coordinates at $q - 1$ become known after $q - 1$ queries by inference.

Leakage of the Positions and the Values. When a vector below the threshold is given to the oracle $\mathrm{Match}_{x,\varepsilon}$, the attacker gets information about both error positions and their values. This is similar to an error-correction mechanism designed to correct errors below a given threshold. Note that in the binary case, this scenario is the same as the previous one, hence the only considered case is $q > 2$.

Theorem 3.4. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and an oracle $\mathrm{Match}_{x,\varepsilon}$ that leaks the positions and the values of the errors below the threshold, an attacker can retrieve $x$ in $O(q^{n-\varepsilon})$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. First, an exhaustive search is performed to find a vector $y$ for which the distance is below the threshold, for a cost of $O(q^{n-\varepsilon})$. Then, given the error positions and the corresponding error values, $y$ immediately yields $x$. In the end, the complexity of the attack is $O(q^{n-\varepsilon})$. ■

3.1.3 Leakage Below and Above the Threshold

The second scenario, which involves a leakage independent of the threshold, is considered in this section. In other words, when a hidden vector $x$ is targeted, any vector $y$ queried to the oracle $\mathrm{Match}_{x,\varepsilon}$ results in the leak of additional information, whatever its distance to $x$.

Minimal Leakage (a Single Bit of Information Leakage). The basic usage of the system is characterized by the minimal leakage scenario, where the binary output itself is considered a necessary leakage. This minimal leakage is indispensable for the system to work and is consistent across these scenarios, as the system always responds. Note that if the server does not answer above the threshold, the non-answer gives the attacker the wanted information.

Theorem 3.5. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and an oracle $\mathrm{Match}_{x,\varepsilon}$ that does not leak any extra information, an attacker can retrieve $x$ in $O(q^{n-\varepsilon} + n(q - 1) + 2\varepsilon)$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. As in the previous cases, the attacker seeks a vector $y$ below the threshold. Such a vector is found by exhaustive search in $q^{n-\varepsilon}$ steps. Then, the attacker performs the center search attack (Pagnin et al., 2014) (generalized to $\mathbb{Z}_q^n$) to retrieve the original data in at most $n(q - 1) + 2\varepsilon$ queries. Indeed, the generalization does not change the cost of the edge detection but changes the cost of the center search from $n$ to $n(q - 1)$. The complexity of the attack to find $x$ is $O(q^{n-\varepsilon} + n(q - 1) + 2\varepsilon)$. ■
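The second phase of this proof can be illustrated in the binary case (q = 2). The sketch below is a simplified variant written for this illustration, in the spirit of the center search attack of Pagnin et al. (2014) rather than a transcription of their algorithm. The function names and the assumption $n \ge 2\varepsilon + 1$ are ours.

```python
def hamming(x, y):
    """Number of coordinates on which x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def make_counting_match(x, eps):
    """Binary-output oracle that also counts how many times it is queried."""
    counter = {"queries": 0}
    def match(y):
        counter["queries"] += 1
        return hamming(x, y) <= eps
    return match, counter


def center_search_binary(match, accepted):
    """Recover a hidden binary template from an accepted vector (assumes n >= 2*eps + 1)."""
    current = list(accepted)
    n = len(current)
    edge_bit = None
    # Edge detection: flip bits cumulatively until the oracle rejects.
    for i in range(n):
        current[i] ^= 1
        if not match(current):
            current[i] ^= 1    # step back: distance is now exactly eps
            edge_bit = i       # this bit is correct, since flipping it moved away
            break
    if edge_bit is None:
        raise ValueError("no rejection observed; is n >= 2*eps + 1?")
    recovered = list(current)
    # Center search: on the boundary, a flip is accepted only if the bit was wrong.
    for i in range(n):
        if i == edge_bit:
            continue
        current[i] ^= 1
        if match(current):
            recovered[i] = current[i]
        current[i] ^= 1        # restore the boundary point
    return recovered


hidden = [1, 0, 1, 1, 0, 0, 1, 0, 1]     # hypothetical hidden template, n = 9
match, counter = make_counting_match(hidden, eps=2)
start = [0, 0, 1, 1, 1, 0, 1, 0, 1]      # accepted vector at distance 2
result = center_search_binary(match, start)
print(result, counter["queries"])
```

In this sketch the edge detection uses at most $2\varepsilon + 1$ queries and the second loop uses $n - 1$, which is in line with the $n + 2\varepsilon$ term of the binary case.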

Leakage of the Distance. In this case, the distance $d(x, y)$ between the fresh template $y \in \mathbb{Z}_q^n$ and the old template $x \in \mathbb{Z}_q^n$ is leaked to the attacker regardless of the threshold.


Queries:
(0, 0×, 0×, 0×, 0×)
(1×, 1, 1×, 1×, 1×)
(2×, 2×, 2×, 2, 2)
(3×, 3×, 3, 3×, 3×)
Solution: (0, 1, 3, 2, 2)

Figure 2: Exploiting the error positions leaked in the case $\mathbb{Z}_4^5$, where the hidden vector (or its missing coordinates) is (0, 1, 3, 2, 2). A × marks a coordinate reported as erroneous.

Theorem 3.6. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and an oracle $\mathrm{Match}_{x,\varepsilon}$ that leaks the distance, an attacker can retrieve $x$ in $O(nq)$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. As the attacker has access to the distance, it is possible to perform a hill-climbing attack, trying to minimize the distance at each step. The strategy is to recover $x$ coordinate by coordinate. As each coordinate has $q$ possible values and there are $n$ coordinates, this is done in $O(nq)$ steps. ■

Leakage of the Positions. The extra information given to the attacker is the positions of the errors.

Theorem 3.7. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and an oracle $\mathrm{Match}_{x,\varepsilon}$ that leaks the positions of the errors, an attacker can retrieve $x$ in $O(q)$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. The attacker submits the vectors $(0, \ldots, 0)$, $(1, \ldots, 1)$, up to $(q - 1, \ldots, q - 1)$ and keeps, for each coordinate, the value that is not reported as an error (see Figure 2). Hence, the complexity of the attack to recover $x$ is $O(q)$. ■
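The constant-vector strategy of this proof, applied to the instance of Figure 2, can be sketched as follows. The oracle model (returning the set of erroneous positions for any query) and the function names are assumptions made for this illustration.

```python
def make_position_oracle(x):
    """Oracle leaking the set of error positions, regardless of the threshold."""
    def oracle(y):
        return {i for i, (a, b) in enumerate(zip(x, y)) if a != b}
    return oracle


def recover_from_positions(oracle, n, q):
    """Query the constant vectors (v, ..., v) for v = 0 .. q-2 and read off x."""
    recovered = [None] * n
    queries = 0
    for value in range(q - 1):
        errors = oracle([value] * n)
        queries += 1
        for i in range(n):
            if recovered[i] is None and i not in errors:
                recovered[i] = value     # no error reported here: x_i == value
    # Coordinates never matched must hold the last value q - 1 (no query needed).
    recovered = [q - 1 if r is None else r for r in recovered]
    return recovered, queries


hidden = [0, 1, 3, 2, 2]                 # hidden vector of Figure 2, in Z_4^5
oracle = make_position_oracle(hidden)
recovered, used = recover_from_positions(oracle, n=5, q=4)
print(recovered, used)                   # [0, 1, 3, 2, 2] 3
```

The last constant vector is skipped because the remaining coordinates are known by elimination, which matches the $q - 1$ count noted for Figure 2.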

Leakage of the Positions and the Values. In this last case, the positions of the errors and corresponding values are leaked. Unlike the scenario of leakage below the threshold, such a leak provides an error-correcting code mechanism that operates irrespective of any distance and threshold.

Theorem 3.8. Given a threshold $\varepsilon$, a vector $x \in \mathbb{Z}_q^n$, and an oracle $\mathrm{Match}_{x,\varepsilon}$ that leaks the positions of the errors and their values, an attacker can retrieve $x$ in $O(1)$ queries to $\mathrm{Match}_{x,\varepsilon}$.

Proof. The submission of any vector gives the position of each error, and how to correct them, yielding a complexity in $O(1)$. ■

Example of the Worst Case for Active Attacks De-

pending on the Leakage. To illustrate the influence

of the leakage type on the attack complexity, we com-

pute the number of attempts (in the worst case) re-

quired for an attacker to successfully impersonate the

user in different settings. The results are presented in

Table 3 and Table 4.

3.2 Accumulation Attack: A Passive

Attack

During the client authentications, the attacker passively gathers information by observing errors leaked by the server. More specifically, the server leaks a list of positions and errors computed over the integers (i.e., $x_i - y_i$) made by a genuine client during each authentication. Such information gathered during one successful authentication attempt is called an observation. The attacker aims to partially or fully reconstruct $x$ by exploiting these observations.

In the binary case (i.e., $q = 2$), the errors precisely yield the bits. If $x_i - y_i = 1$ then $x_i = 1$, and if $x_i - y_i = -1$ then $x_i = 0$. This attack is related to the Coupon Collector’s problem (Ferrante and Saltalamacchia, 2014), which involves determining the expected number of rounds required to collect a complete set of distinct coupons, with one coupon obtained at each round, and each coupon acquired with equal probability.

Example 3.2.1. Suppose a setting with a metric space $\mathbb{Z}_2^n$ equipped with the Hamming distance. A client seeks to authenticate to an honest-but-curious server that uses a scheme leaking $d(x, y)$ and the corresponding errors if $d(x, y) \le \varepsilon$. As the client is legitimate, i.e., $d(x, y) \le \varepsilon$ with high probability, the attacker recovers the values of at most $\varepsilon$ erroneous bits per session. The attacker needs to collect all the bits of the client, turning this problem into a Coupon Collector problem. For example, let us assume $x = (0, 0, 1, 1, 0, 1, 0)$ and $\varepsilon = 3$. The attacker sets $z = (?, ?, ?, ?, ?, ?, ?)$. Session 1: the client authenticates with $y = (1, 1, 0, 1, 0, 1, 0)$. In this case, $d(x, y) = 3 \le \varepsilon$. The values of the erroneous bits of the client are obtained, yielding $z = (0, 0, 1, ?, ?, ?, ?)$. Session 2: the client authenticates with $y = (0, 0, 0, 0, 1, 1, 0)$. In this case, $d(x, y) = 3 \le \varepsilon$, and the attacker obtains the values of the erroneous bits of the client and updates $z = (0, 0, 1, 1, 0, ?, ?)$. At this point, replacing the unknown values with random bits gives a vector that lies inside the acceptance ball, as the number of unknown coordinates (two) is smaller than the threshold $\varepsilon$.
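The two sessions of this example can be replayed with a short script. This is a minimal sketch under stated assumptions: `observe` is a hypothetical stand-in for the leaky matcher (it is not an interface defined in this paper), and unknown coordinates are represented by `None`.

```python
from typing import Dict, List, Optional


def observe(x: List[int], y: List[int], eps: int) -> Dict[int, int]:
    """Hypothetical leaky matcher: returns {position: x_i - y_i} for the
    erroneous coordinates when d(x, y) <= eps, and nothing otherwise."""
    errors = {i: xi - yi for i, (xi, yi) in enumerate(zip(x, y)) if xi != yi}
    return errors if len(errors) <= eps else {}


def accumulate(z: List[Optional[int]], leak: Dict[int, int]) -> None:
    """Update the attacker's partial view z in place (binary case only)."""
    for i, diff in leak.items():
        # x_i - y_i = 1 implies x_i = 1; x_i - y_i = -1 implies x_i = 0.
        z[i] = 1 if diff == 1 else 0


x = [0, 0, 1, 1, 0, 1, 0]          # hidden reference template
eps = 3                            # acceptance threshold
z: List[Optional[int]] = [None] * len(x)

sessions = [
    [1, 1, 0, 1, 0, 1, 0],         # Session 1
    [0, 0, 0, 0, 1, 1, 0],         # Session 2
]
for y in sessions:
    accumulate(z, observe(x, y, eps))

unknown = z.count(None)
print(z, unknown)
```

After both sessions, two coordinates remain unknown, which is below the threshold, so any completion of `z` falls inside the acceptance ball.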

In biometrics, some errors happen more frequently than others. In this setup, the Weighted Coupon Collector's Problem must be considered. Each coupon (i.e., each error) has a probability $p_i$ to occur. Suppose that $p_1 \le p_2 \le \dots \le p_n$ and $\sum_{i=1}^{n} p_i \le 1$; then, according to Berenbrink and Sauerwald (2009, Lemma 3.2), the expected number of rounds $E$ is such that:

\[
\frac{1}{p_1} \le E \le \frac{H(n)}{p_1} \tag{1}
\]

Table 3: Number of calls to the oracle depending on the leakage type (worst-case analysis). Examples with real biometric systems (for the leakage below the threshold) with $q = 2$. Complexities are given in $\log_2$.

| System | $n$ | $\varepsilon$ | Distance | Position | Distance and Position |
|---|---|---|---|---|---|
| IrisCode (Daugman, 2009) | 2,048 | 738 | 1,310 | 1,310 | 1,310 |
| FingerCode (Harikrishnan et al., 2024) | 80 | 30 | 50 | 50 | 50 |
| BioHashing (Belguechi et al., 2013) | 180 | 60 | 120 | 120 | 120 |
| BioEncoding (Ouda et al., 2010) | 350 | 87 | 263 | 263 | 263 |

Table 4: Number of calls to the oracle depending on the leakage type (worst-case analysis). Examples with real biometric systems (for the leakage both above and below the threshold) with $q = 2$. Complexities are given in $\log_2$.

| System | $n$ | $\varepsilon$ | Distance | Position | Distance and Position |
|---|---|---|---|---|---|
| IrisCode (Daugman, 2009) | 2,048 | 738 | 12.00 | 1 | 0 |
| FingerCode (Harikrishnan et al., 2024) | 80 | 30 | 7.32 | 1 | 0 |
| BioHashing (Belguechi et al., 2013) | 180 | 60 | 8.49 | 1 | 0 |
| BioEncoding (Ouda et al., 2010) | 350 | 87 | 9.45 | 1 | 0 |

with $H(n)$ the $n$-th harmonic number. Since $H(n) \le 1 + \ln(n)$, the expected number of rounds required to complete the collection satisfies:

\[
\frac{1}{p_1} \le E \le \frac{\ln(n) + 1}{p_1}. \tag{2}
\]

However, while in the original problem one coupon is obtained at each round, the number of errors made by a client during an authentication session is variable, i.e., between 1 and $\varepsilon$. In this case, the expected number of rounds required before all the errors have been observed is smaller than in the case where only one error occurs at each round. Consequently, the expected number of rounds required to collect all the errors is still in $O(\log n / p_1)$.
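The bounds above, and the effect of observing several errors per round, can be explored numerically. The sketch below is illustrative only. The sampling model (a number of errors drawn uniformly between 1 and `eps`, with positions drawn with replacement according to `weights`) is an assumption made for this example and is not taken from measured biometric data. The helper names are hypothetical.

```python
import math
import random
from typing import List, Tuple


def harmonic(n: int) -> float:
    """n-th harmonic number H(n)."""
    return sum(1.0 / k for k in range(1, n + 1))


def round_bounds(n: int, p1: float) -> Tuple[float, float]:
    """Bounds on the expected number of rounds: 1/p1 <= E <= H(n)/p1."""
    return 1.0 / p1, harmonic(n) / p1


def rounds_to_collect(weights: List[float], eps: int, rng: random.Random) -> int:
    """Rounds until every position has been observed at least once, when
    each round leaks between 1 and eps positions (assumed model)."""
    n = len(weights)
    seen = set()
    rounds = 0
    while len(seen) < n:
        k = rng.randint(1, eps)  # number of errors in this session
        seen.update(rng.choices(range(n), weights=weights, k=k))
        rounds += 1
    return rounds


n, eps = 20, 5
weights = [1.0 / n] * n          # uniform case, so p1 = 1/n
p1 = min(weights)
lower, upper = round_bounds(n, p1)
ln_upper = (math.log(n) + 1) / p1  # looser bound using H(n) <= ln(n) + 1

rng = random.Random(0)             # fixed seed for repeatability
trials = [rounds_to_collect(weights, eps, rng) for _ in range(200)]
mean_rounds = sum(trials) / len(trials)
print(lower, upper, ln_upper, mean_rounds)
```

The bounds refer to the single-coupon setting. The simulated mean is expected to be smaller because several positions can be leaked per session, but no run can finish in fewer than $\lceil n/\varepsilon \rceil$ rounds.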

Theorem 3.9. Let $\varepsilon$ be a threshold and $x \in \mathbb{Z}_2^n$ a vector. If $\mathrm{Match}_{x,\varepsilon}$ leaks the positions of the errors and their values below the threshold, and assuming that the rarest coupon is obtained with probability $p_1 = n^{-\alpha}$ with $\alpha \in \mathbb{R}_{\ge 1}$, then an attacker can retrieve $x$ in $O(n^{\alpha} \log n)$ observations.

Proof. According to the Weighted Coupon Collector's problem and assuming that the rarest coupon is obtained with probability $p_1 = n^{-\alpha}$ with $\alpha \in \mathbb{R}_{\ge 1}$, the vector $x$ is recovered in $O(n^{\alpha} \log n)$ observations. ■
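The substitution behind this bound can be written out explicitly. The following only restates Equation (2) under the assumption $p_1 = n^{-\alpha}$ of the theorem, and it concerns the expected number of observations:

\[
n^{\alpha} = \frac{1}{p_1} \le E \le \frac{\ln(n) + 1}{p_1} = n^{\alpha}\,(\ln(n) + 1) = O(n^{\alpha} \log n).
\]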

It is worth noting that in this scenario, the attacker

does not control the error. If the attacker controls

the error locations, then it is possible to obtain x in

⌈n/ε⌉ queries. This can happen during a fault attack,

akin to side-channel attacks. It should also be noted

that some coordinates of biometric data may be non-

variable and, as a consequence, an attacker cannot re-

cover them. This partial recovery attack is, therefore,

a privacy attack, and leads to an authentication attack

if the number of variable coordinates is sufficiently

large (at least n − ε in the binary case).
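The $\lceil n/\varepsilon \rceil$ figure for controlled errors can be illustrated as follows. This is a sketch under a simplified fault model that is assumed here rather than specified in the paper: the attacker chooses which coordinates of an otherwise exact fresh template get flipped, and the hypothetical `leaky_match` reports the erroneous positions with their signed errors.

```python
import math
from typing import Dict, List, Optional, Tuple


def leaky_match(x: List[int], y: List[int], eps: int) -> Dict[int, int]:
    """Hypothetical leaky matcher: {position: x_i - y_i} if d(x, y) <= eps."""
    errors = {i: xi - yi for i, (xi, yi) in enumerate(zip(x, y)) if xi != yi}
    return errors if len(errors) <= eps else {}


def recover_with_controlled_errors(
    x: List[int], eps: int
) -> Tuple[List[Optional[int]], int]:
    """Recover x block by block, forcing eps chosen errors per query."""
    n = len(x)
    z: List[Optional[int]] = [None] * n
    queries = 0
    for start in range(0, n, eps):
        # Assumed fault model: the injected fault flips exactly the chosen
        # coordinates of the client's sample; x is never read directly.
        y = list(x)
        for i in range(start, min(start + eps, n)):
            y[i] ^= 1
        for i, diff in leaky_match(x, y, eps).items():
            z[i] = 1 if diff == 1 else 0
        queries += 1
    return z, queries


x = [0, 0, 1, 1, 0, 1, 0]
eps = 3
z, queries = recover_with_controlled_errors(x, eps)
print(z, queries, math.ceil(len(x) / eps))
```

Each query reveals a fresh block of at most $\varepsilon$ coordinates, so the whole vector is covered after $\lceil n/\varepsilon \rceil$ queries.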

Remark 3.2.1. In the non-binary case, the value $x_i - y_i$ alone does not, in general, determine $x_i$. The exact value of $x_i$ can be determined in two cases. First, if $x_i - y_i = -q + 1$, then $x_i = 0$ (and $y_i = q - 1$). Second, if $x_i - y_i = q - 1$, then $x_i = q - 1$ (and $y_i = 0$). For all other cases, there is an ambiguity regarding the value of $x_i$ as $y_i$ is unknown. However, by knowing the distribution of $x_i$ and $y_i$, repeating observations yields a statistical attack.
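The ambiguity in the non-binary case can be made concrete by listing, for an observed error, the values of $x_i$ that remain possible. The sketch below assumes that coordinates take values in $\{0, \dots, q-1\}$ and that errors are computed over the integers. It only intersects candidate sets across observations, which is a deterministic simplification and not the statistical attack itself. The function names are hypothetical.

```python
from typing import Iterable, List


def candidates(diff: int, q: int) -> range:
    """Values of x_i in {0, ..., q-1} compatible with x_i - y_i = diff
    for some y_i in {0, ..., q-1}."""
    return range(max(0, diff), min(q - 1, q - 1 + diff) + 1)


def narrow(diffs: Iterable[int], q: int) -> List[int]:
    """Intersect the candidate sets obtained from several observations
    of the same coordinate."""
    lo, hi = 0, q - 1
    for d in diffs:
        c = candidates(d, q)
        lo, hi = max(lo, c.start), min(hi, c.stop - 1)
    return list(range(lo, hi + 1))


q = 5
only_zero = list(candidates(-q + 1, q))   # extreme negative error
only_max = list(candidates(q - 1, q))     # extreme positive error
remaining = narrow([2, -1], q)            # two observations of one coordinate
print(only_zero, only_max, remaining)
```

Only the two extreme errors pin down $x_i$ from a single observation. Intermediate errors leave an interval of candidates that shrinks as observations accumulate.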

Attacks for each type of leakage along with their

complexities are summarized in Figure 1.

4 CONCLUDING REMARKS

Our investigation into the information leakage of a

biometric system using privacy-preserving distance

has uncovered critical security vulnerabilities that

arise under various scenarios. By evaluating the im-

pact of different types of leakage, including distance,

error position, and error value, we have highlighted

the potential risks posed to data privacy and security.

Our analysis has encompassed ‘below the thresh-

old’ and ‘below and above the threshold’ setups, al-

lowing us to identify specific conditions under which

information leakage can significantly affect the over-

all security of the system.

It is important to highlight that the leakage ‘be-

low the threshold’ does not notably harm the security

of the system, while the leakage of ‘both below and

above the threshold’ markedly decreases the security.

Indeed, the attacks exploiting the leakage ‘below the

threshold’ are primarily exponential, while those ex-

ploiting information ‘below and above the threshold’

are mainly constant.

The accumulation attack we investigated assumes

errors uniformly distributed throughout each authen-

tication session. The result of the accumulation at-

tack could be further refined by considering a variable

number of coupons, randomly drawn between 0 and

ε in each round, while acknowledging the actual distribution of the errors. To the best of our knowledge, no previous study provides an analysis of the distribution of the errors for any system.

In practical scenarios, certain errors may occur more frequently than others, while some may never occur. A skewed distribution of errors will substantially increase the expected number of authentications required from the legitimate user for the server to recover the hidden template in its entirety. Future research should involve refining the accumulation attack as suggested above and exploring other distance metrics, such as $L_1$ (i.e., Manhattan distance) and $L_2$.

ACKNOWLEDGEMENTS

The authors acknowledge the support of the French

Agence Nationale de la Recherche (ANR), under

grant ANR-20-CE39-0005 (project PRIVABIO).

REFERENCES

Ahlgren, J. (2014). The probability distribution for draws

until first success without replacement.

Aydin, F. and Aysu, A. (2024). Leaking secrets in homo-

morphic encryption with side-channel attacks. Jour-

nal of Cryptographic Engineering, pages 1–11.

Belguechi, R., Cherrier, E., Rosenberger, C., and Ait-

Aoudia, S. (2013). Operational bio-hash to preserve

privacy of fingerprint minutiae templates. IET bio-

metrics, 2(2):76–84.

Berenbrink, P. and Sauerwald, T. (2009). The weighted

coupon collector’s problem and applications. In Ngo,

H. Q., editor, Computing and Combinatorics, pages

449–458, Berlin, Heidelberg. Springer Berlin Heidel-

berg.

Bernal-Romero, J. C., Ramirez-Cortes, J. M., Rangel-

Magdaleno, J. D. J., Gomez-Gil, P., Peregrina-

Barreto, H., and Cruz-Vega, I. (2023). A review on

protection and cancelable techniques in biometric sys-

tems. IEEE Access, 11:8531–8568.

Cho, S., Oh, B.-S., Kim, D., and Toh, K.-A. (2021). Palm-

vein verification using images from the visible spec-

trum. IEEE Access, 9:86914–86927.

Chvatal, V. (1979). A greedy heuristic for the set-

covering problem. Mathematics of operations re-

search, 4(3):233–235.

Daugman, J. (2009). How iris recognition works. In The

essential guide to image processing, pages 715–739.

Elsevier.

Daugman, J. (2015). Information theory and the iriscode.

IEEE transactions on information forensics and secu-

rity, 11(2):400–409.

Dehkordi, A. B. and Abu-Bakar, S. A. (2015). Iris code

matching using adaptive hamming distance. In 2015

IEEE International Conference on Signal and Im-

age Processing Applications (ICSIPA), pages 404–

408. IEEE.

Ferrante, M. and Saltalamacchia, M. (2014). The coupon collector's problem. MATerials MATemàtics, 2014:35.

Harikrishnan, D., Sunil Kumar, N., Joseph, S., and Nair,

K. K. (2024). Towards a fast and secure finger-

print authentication system based on a novel encoding

scheme. International Journal of Electrical Engineer-

ing & Education, 61(1):100–112.

Hashemi, M., Forte, D., and Ganji, F. (2024). Time is

money, friend! timing side-channel attack against gar-

bled circuit constructions. In International Confer-

ence on Applied Cryptography and Network Security,

pages 325–354. Springer.

He, R., Cai, Y., Tan, T., and Davis, L. (2015). Learning

predictable binary codes for face indexing. Pattern

recognition, 48(10):3160–3168.

Korte, B. H., Vygen, J., Korte, B., and Vygen, J. (2011).

Combinatorial optimization, volume 1. Springer.

Ouda, O., Tsumura, N., and Nakaguchi, T. (2010). Bioen-

coding: A reliable tokenless cancelable biometrics

scheme for protecting iriscodes. IEICE TRANS-

ACTIONS on Information and Systems, 93(7):1878–

1888.

Pagnin, E., Dimitrakakis, C., Abidin, A., and Mitrokotsa,

A. (2014). On the leakage of information in biometric

authentication. In International Conference on Cryp-

tology in India, pages 265–280. Springer.

Patel, V. M., Ratha, N. K., and Chellappa, R. (2015). Can-

celable biometrics: A review. IEEE signal processing

magazine, 32(5):54–65.

Rahman, A., Chowdhury, M. E., Khandakar, A., Kiranyaz, S., Zaman, K. S., Reaz, M. B. I., Islam, M. T., Ezeddin, M., and Kadir, M. A. (2021). Multimodal EEG and keystroke dynamics based biometric system using machine learning algorithms. IEEE Access, 9:94625–94643.

Ratha, N. K., Connell, J. H., and Bolle, R. M. (2001).

An analysis of minutiae matching strength. In Bi-

gun, J. and Smeraldi, F., editors, Audio- and Video-

Based Biometric Person Authentication, pages 223–

228, Berlin, Heidelberg. Springer Berlin Heidelberg.

Sharma, S., Saini, A., and Chaudhury, S. (2023). A sur-

vey on biometric cryptosystems and their applications.

Computers & Security, page 103458.

Simoens, K., Bringer, J., Chabanne, H., and Seys, S. (2012).

A framework for analyzing template security and pri-

vacy in biometric authentication systems. IEEE Trans-

actions on Information Forensics and Security, 7:833–

841.

Thomas, M. and Joy, A. T. (2006). Elements of information

theory. Wiley-Interscience.

Timothée, P. and Ramanna, S. C. (2016). Tutorial 10 for Information Theory.

Tran, L., Hoang, T., Nguyen, T., and Choi, D. (2017). Im-

proving gait cryptosystem security using gray code

quantization and linear discriminant analysis. In Inter-

national Conference on Information Security, pages

214–229. Springer.

Wang, Z., Yang, J., and Zhu, Y. (2021). Review of ear bio-

metrics. Archives of Computational Methods in Engi-

neering, 28(1):149–180.

Yang, H. and Wang, Y. (2007). A lbp-based face recog-

nition method with hamming distance constraint. In

Fourth international conference on image and graph-

ics (ICIG 2007), pages 645–649. IEEE.

ICISSP 2025 - 11th International Conference on Information Systems Security and Privacy
