Towards Secure Biometric Solutions: Enhancing Facial Recognition While Protecting User Data

Jose Silva (1,2,a), Aniana Cruz (1,b), Bruno Sousa (2,c) and Nuno Gonçalves (1,d)

(1) Institute of Systems and Robotics, University of Coimbra (ISR-UC), Coimbra, Portugal

(2) Centre for Informatics and Systems of the University of Coimbra (CISUC), Coimbra, Portugal

Keywords: Facial Recognition, Multi-Factor Authentication, Data Privacy, Biometric Templates, Cryptographic Algorithms.

Abstract:

This paper presents a novel approach to the storage of facial images in databases designed for biometric au-

thentication, with a primary focus on user privacy. Biometric template protection encompasses a variety of

techniques aimed at safeguarding users’ biometric information. Generally, these methods involve the appli-

cation of transformations and distortions to sensitive data. However, such alterations can frequently result in

diminished accuracy within recognition systems. We propose a deformation process to generate temporary

codes that facilitate the verification of registered biometric features. Subsequently, facial recognition is per-

formed on these registered features in conjunction with new samples. The primary advantage of this approach

is the elimination of the need to store facial images within application databases, thereby enhancing user pri-

vacy while maintaining high recognition accuracy. Evaluations conducted using several benchmark datasets -

including AgeDB-30, CALFW, CPLFW, LFW, RFW, XQLFW - demonstrate that our proposed approach pre-

serves the accuracy of the biometric system. Furthermore, it mitigates the necessity for applications to retain

any biometric data, images, or sensitive information that could jeopardize users’ identities in the event of a data

breach. The solution code, benchmark execution, and demo are available at: https://bc1607.github.io/FRS-

ProtectingData.

1 INTRODUCTION

The era of digitization has brought several opportuni-

ties, as well as challenges and concerns, especially

when it comes to the security and privacy of per-

sonal data. As the use of Multi-Factor Authentica-

tion (MFA) grows, the integration of technologies like

facial recognition is becoming more prevalent. This

trend gives rise to crucial inquiries regarding the safe-

guarding, utilization, and implementation of security

and privacy measures for biometric data. In the first quarter of 2024, critical vulnerabilities were identified in prominent software systems such as Fortinet's FortiOS (CVE-2024-21762) (Bahmanisangesari, 2024), Jenkins (CVE-2024-23897) (Gioacchini et al., 2024), and XZ Utils (CVE-2024-3094) (Wu et al., ). These vulnerabilities were officially listed in the Common Vulnerabilities and Exposures (CVE) database with a Common Vulnerability Scoring System (CVSS) score of 9.8 or higher, indicating the severity of these security risks. Although they affect different software programs, these cases pose threats to information privacy as they can be exploited to gain access to internal data or to execute unauthorized commands.

a https://orcid.org/0000-0002-7114-7777

b https://orcid.org/0000-0001-5420-6651

c https://orcid.org/0000-0002-5907-5790

d https://orcid.org/0000-0002-1854-049X

Facial recognition systems (FRS) exhibit vulnerabilities akin to those found in various software applications, as their design and implementation may encompass inherent flaws that are susceptible to exploitation (Abdullahi et al., 2024). Notably, these systems are particularly vulnerable to presentation attacks and spoofing techniques, which exploit their intrinsic limitations and may lead to erroneous decision-making processes (Marcel et al., 2023). Furthermore, the integrity of facial recognition systems, as well as other systems that depend on them, can be compromised by malicious alterations to the underlying databases. This underscores that vulnerabilities may arise not only from the algorithms and methodologies employed but also from the data management practices in place (Mousa et al., 2020). Consequently, decisions regarding the selection of biometric features for database storage, the required accuracy thresholds, and the established procedural frameworks are crucial for mitigating recognition errors and addressing the growing concerns related to data privacy (Lala et al., 2021). This paper proposes a comprehensive approach that emphasizes considerations of database privacy alongside the necessity for an appropriate level of accuracy.

Silva, J., Cruz, A., Sousa, B. and Gonçalves, N.

Towards Secure Biometric Solutions: Enhancing Facial Recognition While Protecting User Data.

DOI: 10.5220/0013252300003905

In Proceedings of the 14th International Conference on Pattern Recognition Applications and Methods (ICPRAM 2025), pages 507-518

ISBN: 978-989-758-730-6; ISSN: 2184-4313

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

The aim of this paper is to propose an innova-

tive approach to facial recognition technology for au-

thentication purposes in everyday applications, with a

primary focus on maximizing accuracy while implementing an MFA framework. One of the foundational

principles guiding this design is the elimination of the

need to store biometric data in raw or plaintext for-

mats, which could enable unauthorized recognition of

individuals beyond the confines of the application do-

main. To enhance security in the authentication pro-

cess, it is imperative that biometric authentication op-

erates at high levels of accuracy; consequently, the

methodology presented in this paper is designed to

maintain, if not improve, the accuracy of recognition

and authentication processes. Furthermore, the pro-

posed approach must be inherently scalable, facilitat-

ing the integration of various standardized data pro-

tection regulations pertaining to encryption.

Biometric characteristics refer to unique physical

or behavioral traits of an individual, such as facial

features, retina patterns, signatures, or typing patterns (Delac and Grgic, 2004). Biometric Templates (BTs) are digital representations created by extracting

and encoding biometric features (Sarkar and Singh,

2020). In the scientific literature, Biometric Template

Protection (BTP) algorithms can be categorized into

image-level and feature-level approaches.

In image-level BTP, techniques such as distortion

functions (Kirchgasser et al., 2020), morphing can-

cellation (morphed cancellable face images) (Ratha

et al., 2001), block scrambling (Dong et al., 2019),

XOR operations, permutations, and filters are used to

alter the original image (visual secret sharing) (Man-

isha and Kumar, 2020). These methods can also

incorporate revocability through user-defined pass-

words in MFA systems (Singh et al., 2021). Addi-

tionally, images can be protected by employing co-

occurrence matrices to obtain distinct features (Dab-

bah et al., 2007). In this case, it is not possible to cor-

relate the final image with the initial one (Dang and

Choi, 2020).

On the other hand, the majority of BTP methods

operate in the feature-level domain, utilizing cance-

lable functions or cryptosystems. For example, some

methods rely on cryptographic functions like Homo-

morphic Encryption (HE) or employ hash functions.

Approaches in (Ma et al., 2017; Boddeti, 2018; Droz-

dowski et al., 2019; Jindal et al., 2020; ?; Drozdowski

et al., 2021b; Engelsma et al., 2022; Osorio-Roig

et al., 2021) encrypt the features using HE, enabling

comparison in the encrypted domain. However, simi-

lar to other encryption algorithms, features can be de-

crypted with knowledge of the encryption key (Hahn

and Marcel, 2022a). Algorithms based on hash func-

tions are applied to stable objects, derived mathemat-

ically from features (e.g. fuzzy commitment scheme

(Juels and Wattenberg, 1999)).

In this paper, we propose a method that adheres

to the properties of BTP as delineated in the lit-

erature: irreversibility, revocability, and unlinkabil-

ity (Ramu and Arivoli, 2012). To leverage artifi-

cial intelligence and facial image discriminators, we

adopt a feature-level approach. For privacy consider-

ations, our methodology employs standard and secure

cryptographic techniques, including hash functions

(SHA-512), encryption algorithms (Advanced En-

cryption Standard (AES) (National Inst Of Standards

And Technology Gaithersburg Md, 2001)), Pseudo-

random Number Generator (PRNG), and Time-based

one-time password (TOTP). Our approach incorpo-

rates MFA that combines TOTP authenticators with

facial recognition algorithms, specifically Convolu-

tional Neural Network (CNN), along with distance

and similarity functions. Moreover, we implement

a process to protect biometric data through hashing

and encryption techniques. This method enables the

generation of secure objects that can subsequently be

stored in application databases. To enhance accuracy

levels, our solution makes facial recognition decisions

based on the domain of biometric features extracted

by the CNN.

In our approach, the irreversibility, revocabil-

ity, and unlinkability of biometric characteristics are

achieved by creating computationally secure crypto-

graphic objects designed to be difficult to reverse,

revocable, and capable of generating multiple in-

stances from the same facial image, while ensuring

unlinkability so that they do not inherently identify

any individual. An implementation of this proposal

was developed using Python libraries and evaluated

against established benchmarks available in the scien-

tific literature for research purposes, namely the La-

beled Faces in the Wild (LFW) (Huang et al., 2008),

Age Database 30 (AgeDB-30) (Moschoglou et al.,

2017), Cross-Age LFW (CALFW) (Zheng et al.,

2017), Crosspose LFW (CPLFW) (Zheng and Deng,

2018), Racial Faces in the Wild (RFW) (Wang et al.,

2019), and CrossQuality Labeled Faces in the Wild


(XQLFW) (Knoche et al., 2021).

The benchmarks presented herein comprise a se-

ries of tests along with their respective outcomes. The

CNN model employed in this study is known as the

MagFace model, as proposed by Meng et al. (Meng

et al., 2021). The MagFace model was trained utilizing the MS1M-V2 dataset. The facial recognition

model functions as a black box, utilized solely for the

extraction of feature vectors (embeddings). The pri-

mary Python libraries employed in the implementa-

tion include binascii, random, cryptography, secrets,

hashlib, and pyotp.

The primary contributions of this paper focus on

the design of an approach and methodology aimed at

fulfilling four essential quality requirements. Specifi-

cally, our approach has been crafted to satisfy the fol-

lowing criteria: (1) MFA integrated with facial recog-

nition; (2) user registration through cryptographic ob-

jects that ensure irreversibility, revocability, and un-

linkability; (3) the creation of a database that does not

contain biometric data while still enabling the con-

firmation and assurance of accurate facial authentica-

tion; and (4) the solution must not compromise the

accuracy of the FRS defined by the CNN. In this pa-

per, we implement the proposed approach and con-

duct benchmarks using available tests and metrics to

evaluate an FRS, as outlined in the scientific literature. This evaluation aims to analyze and ascertain

whether our proposed solution meets the established

objectives.

Section 2 addresses the relevant literature and

prior research essential for the formulation of the pro-

posed approach.

2 RELATED WORK

FRS must adhere to various quality attributes, includ-

ing accuracy, computational efficiency, security, pri-

vacy, and usability (Hahn and Marcel, 2022b; Ekka

et al., 2022). In our approach, the primary focus lies

on privacy, with data confidentiality being of utmost

importance. Thus, in this study, methods that incorpo-

rate BTP at the feature level using cryptographic sys-

tems or utilizing cancelable functions are considered

appropriate. Generally, BTP aims to achieve proper-

ties such as accuracy, irreversibility, renewability, and

unlinkability (Hahn and Marcel, 2022b). Rui and Yan introduced the Mission Success Rate property as a new addition to the biometric privacy criteria (Rui and Yan, 2019). This property focuses on the system's ability to withstand attacks while also maintaining the confidentiality of biometric data, in addition to the existing properties of irreversibility, renewability, and unlinkability.

In (Li and Kot, 2010), a fingerprint authentication

system is proposed which utilizes data hiding and em-

bedding techniques to securely conceal private user

information within a fingerprint template. In the reg-

istration process, a user’s identity is embedded within

their unique fingerprint template. This template, con-

taining the encrypted data, is subsequently stored in

a database for authentication purposes. This proce-

dure outlines a methodology for concealing user data

within images using steganography. In (Li and Kot,

2012), the template is generated by combining two

fingerprints in a way that extracting a single finger-

print would be computationally challenging. Random

and dynamic identifiers can be utilized for the purpose

of associating and disassociating users with random

objects. These random objects help to introduce en-

tropy when combined with biometric characteristics.

In (Dang and Choi, 2020; Smith and Xu, 2011),

the face-based key generation approach is described.

This approach is a deterministic procedure that en-

sures zero-uncertainty key generation by leveraging

auxiliary data storage. The key generation process in-

volves preprocessing, distorting, and extracting facial

features from the photograph, followed by utilizing

randomization to construct a stable template and ul-

timately generate the key. This process is similar to

generating a hash, with the distinction that it allows

for intra-class variances (variations within images of

the same face). The distortion step involves the appli-

cation of cancelable functions before feature extrac-

tion, while the randomization step involves introduc-

ing entropy to the generated data.

In (El-Shafai et al., 2021), a novel authentication

framework based on a genetic encryption algorithm

is proposed. This algorithm takes an image and generates a cancelable biometric image. It uses permutation matrices and random number generator functions, divides the image into parts, processes those parts, and repeatedly applies crossover and mutation operations. The final output hides the discriminative

features of the biometric templates. It also achieves a

high accuracy, with an average Area Under the Curve

(AUC) of 0.9998. For authentication purposes, the

database will store the cancelable biometric images.

This approach does not fully match our requirements, as it involves storing cancelable biometric images in a database. Additionally, our approach requires direct access to the face photograph in order to apply active liveness detection (which requires the user to perform a specific action, ensuring their active participation in the authentication process) and to validate the image against the International Civil Aviation Organization (ICAO) security list. The International Organization for Standardization (ISO) 19794-5 standard (see footnote 1) outlines rules for taking a passport-style face photograph.

There are several approaches in the literature that

utilize BTP algorithms following the extraction of

features. This preference can be attributed to the

availability of various pre-trained neural networks

that have demonstrated the ability to extract discriminative facial features from face images (Hahn

and Marcel, 2022a). Some examples of neural net-

works that act as feature discriminators and have

an implemented version available are the Inception

ResNet model (Schroff et al., 2015), the ResNet 50

model (ArcFace) (Deng et al., 2019), the QualFace

model (Tremoço et al., 2021), the Idiap model (Hahn

and Marcel, 2022b), and MagFace (Meng et al.,

2021). The MagFace model was selected for its high

levels of accuracy.

Data: 1999

Result: Recognition system decision

protected BT = registration BT − codeword;

codeword’ = validation BT − protected BT;

if hash(codeword) == hash(codeword’) then

The recognition is successful;

else

The recognition is not successful;

end

Algorithm 1: Fuzzy Commitment scheme.

1 https://www.iso.org/standard/50867.html

After feature extraction, various BTP algorithms can be applied, such as the Fuzzy Commitment scheme (Juels and Wattenberg, 1999). The Fuzzy Commitment scheme is a recognition procedure involving registration and validation phases. During the registration phase, a codeword is generated and linked with the user. A codeword is a value that is used to achieve a certain security goal, such as encryption, decryption, or authentication. The difference between the registration BT and the codeword produces the protected BT. The protected BT, along with the hash of the codeword, is stored in the database. In the validation phase, the aim is to recover the codeword, denoted codeword', by executing the inverse operation. If the hash of codeword' matches the stored hash, the recognition is successful (see Algorithm 1). Error correction functions can rectify a certain number of errors in codeword', but the hashes must match precisely. This algorithm was found to be insecure, as subsequent studies revealed the possibility of reversing the process and obtaining the template without knowledge of the codeword (Keller et al., 2020; Keller et al., 2021; Hahn and Marcel, 2022a). Nevertheless, the essence of this method is to present a challenge where the user must provide a validation object identical to the registration object.
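The registration and validation steps of the Fuzzy Commitment scheme can be sketched in a few lines of Python. This is a minimal illustration only: it assumes integer-valued templates, uses element-wise subtraction as in Algorithm 1, hashes with SHA-512, and omits the error-correction decoder, so it accepts only exact matches. The function names `commit` and `verify` are hypothetical.

```python
import hashlib


def _hash_vector(values):
    # Serialise the integers unambiguously before hashing.
    return hashlib.sha512(",".join(map(str, values)).encode("ascii")).hexdigest()


def commit(registration_bt, codeword):
    """Registration: bind the codeword to the template, keep only its hash."""
    protected_bt = [b - c for b, c in zip(registration_bt, codeword)]
    return protected_bt, _hash_vector(codeword)


def verify(validation_bt, protected_bt, codeword_hash):
    """Validation: recover a candidate codeword and compare the hashes."""
    candidate = [v - p for v, p in zip(validation_bt, protected_bt)]
    # A real scheme would run an error-correcting decoder on `candidate` here.
    return _hash_vector(candidate) == codeword_hash


protected, digest = commit([12, 7, 3, 9], [1, 0, 1, 1])
print(verify([12, 7, 3, 9], protected, digest))  # identical template
print(verify([12, 7, 4, 9], protected, digest))  # one feature differs
```

Without the decoder, any deviation in the validation template changes the recovered codeword and therefore the hash, which is why practical variants depend on error correction to tolerate intra-class variation.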

3 RESEARCH PROPOSAL

This section outlines a facial authentication approach

that employs MFA and ensures the irreversibility, re-

vocability, and unlinkability of registration objects.

It advocates for a database devoid of biometric data,

facilitating secure and effective authentication via

CNN-defined FRS. The approach enhances existing

systems by adding validation steps to improve the se-

curity and privacy of biometric data both in transit and

within stored databases.

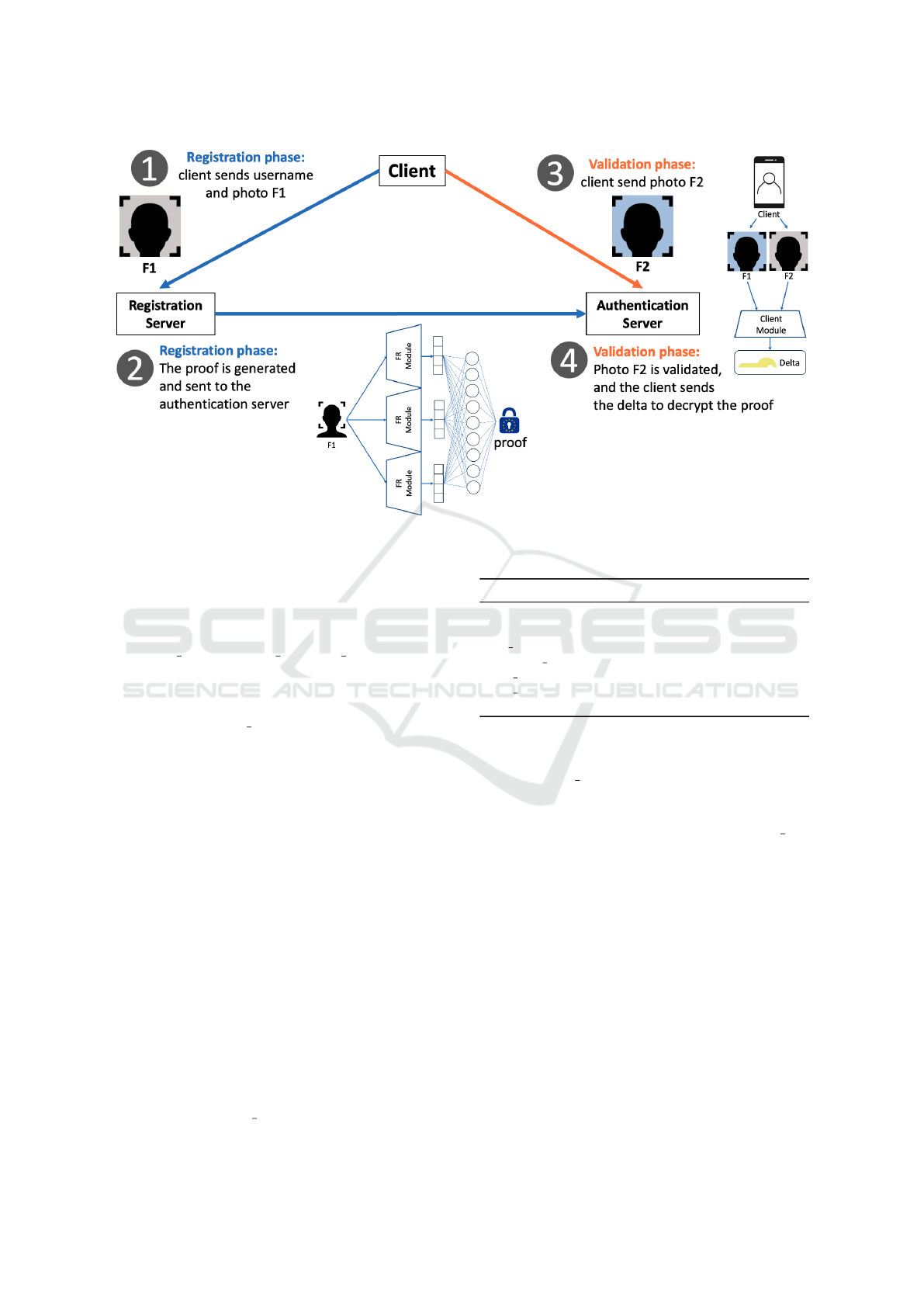

In the following Subsection 3.1, we will present

a detailed overview of the proposed system, which

encompasses two primary functional requirements:

the Registration Phase and the Validation Phase.

These phases involve interactions between the Client

and two designated servers responsible for biomet-

ric recognition and authentication: the Registration

Server and the Authentication Server. This interac-

tion is illustrated in Fig. 1.

3.1 Overview of the Approach

Our facial authentication system consists of three key

entities: the Client, the Registration server, and the

Authentication server. The system operates in two

distinct phases: the Registration Phase (RP) and the

Validation Phase (VP). Below, we present these two

phases.

The RP begins when a Client makes a request to the Registration server. The Client submits a facial image, referred to as Frame 1 ($F_1$). The Registration server validates $F_1$ against a security checklist, which may include requirements outlined by the International Civil Aviation Organization (ICAO). If $F_1$ is determined to represent a valid and coherent human face, the registration of the Client proceeds. This registration process entails the creation of several cryptographic objects, including the username, otp_key, biometric_key, user_hash, user_key, and authentication proof, which will be elaborated upon in Sections 3.2 and 3.3.

The Registration and Authentication servers communicate, allowing the Authentication server to store the relevant cryptographic objects. Subsequently, the Registration server shares the cryptographic objects with the Client, excluding the user_hash, the user_key, and the proof, which remain solely on the server side. The RP concludes when the Registration server deletes $F_1$ and all previously generated cryptographic objects, ensuring it retains no persistent memory of these items.

Figure 1: Architecture of the proposed approach: an illustration of the Registration (blue) and the Validation Phase (orange).

The Authentication server and the Client are not responsible for generating cryptographic keys (e.g., otp_key, biometric_key, user_key). This responsibility is delegated to the Registration server, which selects appropriate and secure cryptographic functions for generating these objects. These objects, including user_key and the authentication proof, are unique to each registration and exist solely on the server side. The authentication proof is crucial for authentication during the validation phase, which relies on hashing functions. This approach utilizes hashing to prevent the storage of biometric data on the server side, thereby mitigating the risk of exposing sensitive biometric information from the Client's facial image $F_1$. In the event of a database breach, it should not be possible to retrieve any biometric information from the authentication proof. Consequently, the photograph used for registration, $F_1$, may be reused to create new and unique cryptographic objects.

Table 1 presents the data recorded by each entity

upon the completion of the RP.

The VP occurs between the Client and the Authentication server. Over a secure channel, the Client submits their username and a new facial image, referred to as Frame 2 ($F_2$). Initially, authentication is conducted through a challenge presented by the Authentication server, wherein the Client proves their identity using the otp_key, resulting in Proof 1 ($P_1$).

Table 1: Attributes required in the registration phase.

| Parameters | Registration | Authentication | Client |
|---|---|---|---|
| $F_1$ | × | × | ✓ |
| username | × | ✓ | ✓ |
| otp_key | × | ✓ | ✓ |
| biometric_key | × | ✓ | ✓ |
| user_hash | × | ✓ | × |
| user_key | × | ✓ | × |
| proof | × | ✓ | × |

Subsequently, the Client generates Proof 2 ($P_2$) by extracting biometric features from $F_2$ and utilizing their biometric_key, which was obtained during the RP. The Authentication server utilizes $P_2$ to verify that the Client has accurately extracted the biometric features from $F_2$ and applied their biometric_key. Following this, the Client produces a parameter that we designate as delta, which enables the Authentication server to recalculate the registered biometric features (through mathematical functions detailed in Section 3.3). The Authentication server then processes these biometric features and decrypts the proof to validate its integrity. Upon successful validation, the Client is authenticated if the biometric templates from Frame 1 ($F_1$) and Frame 2 ($F_2$) are sufficiently similar, that is, if their similarity exceeds a predefined threshold.

In the literature, various proposals for biometric

recognition and authentication utilize biometric tem-

plates, applying distance or similarity functions. Our

approach also validates similarity; however, it strate-

gically incorporates cryptographic functions to ensure

that biometric templates are neither stored nor transmitted over the network. Table 2 presents the parameters required during the VP.

For authentication to be considered valid, four conditions must be met: (1) the biometric registration features, $BT_1$, and the biometric validation features, $BT_2$, are similar, meaning that the similarity function's result exceeds the threshold; (2) the Client presents $P_2$ to demonstrate that they have created a valid protected version of the biometric validation template, $BTP_2$, using their unique biometric_key; (3) the Authentication server utilizes the delta to obtain the biometric registration features $BT_1$ and subsequently recalculates the protected version of the biometric registration template, $BTP_1$, since neither $BT_1$ nor $BTP_1$ is stored in the server database; (4) the Authentication server utilizes $BTP_1$ to decrypt and validate the contents of the authentication proof.
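Condition (1) amounts to a thresholded similarity test between two embeddings. The sketch below assumes cosine similarity and a threshold of 0.5; both are illustrative choices, since the similarity function and the threshold value are not fixed at this point in the text. The function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def templates_match(bt_1, bt_2, threshold=0.5):
    """Condition (1): accept only if the similarity exceeds the threshold."""
    return cosine_similarity(bt_1, bt_2) > threshold


print(templates_match([1.0, 0.0], [1.0, 0.0]))  # same direction
print(templates_match([1.0, 0.0], [0.0, 1.0]))  # orthogonal vectors
```

In the proposed protocol this check is only one of four; passing it without conditions (2) to (4) would not authenticate the Client.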

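Table 2: Attributes required in the validation phase.

| Parameters | Authentication | Client |
|---|---|---|
| username | ✓ | ✓ |
| otp_key | ✓ | ✓ |
| $P_1$ | ✓ | ✓ |
| biometric_key | ✓ | ✓ |
| $F_1$ | × | ✓ |
| $BT_1$ | × | ✓ |
| $BTP_1$ | × | ✓ |
| $F_2$ | ✓ | ✓ |
| $BT_2$ | × | ✓ |
| $BTP_2$ | × | ✓ |
| delta | ✓ | ✓ |
| $P_2$ | ✓ | ✓ |
| $BT'_2$ | ✓ | × |
| $BTP'_2$ | ✓ | × |
| $BTP'_1$ | ✓ | × |
| proof | ✓ | × |
| user_hash | ✓ | × |
| user_key | ✓ | × |
| $BT'_1$ | ✓ | × |
| threshold | ✓ | × |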

Subsections 3.2 and 3.3 detail this approach,

including the algorithm and the messages exchanged

between entities during the registration and validation

phases, respectively.

3.2 Registration Phase

Clients and Authentication servers rely on Registra-

tion servers for critical functions in recognition and

authentication processes. The Registration servers

evaluate whether the submitted images meet the nec-

essary security requirements and generate reliable

proofs for authentication. Clients trust Registration

servers to protect their biometric data, while Authen-

ticators depend on these servers to verify that a sub-

mitted image is a true representation of the client. As

a result, both Clients and Authenticators have a vested

interest in ensuring transparency in the operational

procedures of Registration servers and supporting the

adoption of open-source code.

In the RP, the Registration server needs a set of random parameters to decompose biometric data and transform it into a fixed code, referred to as the authentication proof. To achieve this, the Registration server utilizes a PRNG to generate the parameters biometric_key, otp_key, and user_key in a pseudo-random manner.
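Generating the three per-registration parameters could look like the following sketch, which relies on Python's `secrets` module. The key sizes (a 20-byte Base32-encoded otp_key, a 128-bit integer biometric_key, and a 32-byte user_key) and the function name `generate_registration_keys` are assumptions made for illustration; the text does not specify them.

```python
import base64
import secrets


def generate_registration_keys():
    """Return fresh secrets for one registration (sizes are assumptions)."""
    return {
        # TOTP shared secrets are conventionally exchanged as Base32 text.
        "otp_key": base64.b32encode(secrets.token_bytes(20)).decode("ascii"),
        # An integer is convenient for seeding a PRNG in the protection step.
        "biometric_key": secrets.randbits(128),
        # Raw bytes, kept on the server side only.
        "user_key": secrets.token_bytes(32),
    }


keys = generate_registration_keys()
print(sorted(keys))
```

Because every call draws new randomness, registering the same face image twice yields unrelated keys, which is the basis for the revocability and unlinkability properties discussed earlier.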

The RP begins when the Client submits an image, denoted as $F_1$. This image undergoes processing to recognize the face and extract biometric features. An algorithm is then applied to evaluate whether the image meets several required criteria (ICAO), including sufficient lighting, absence of shadows, full visibility of the face, and acceptable facial expressions. This assessment is conducted using neural networks that validate the Client's face and ultimately generate a vector containing the extracted facial characteristics. Subsequently, from $F_1$, a feature vector $BT_1$ comprising 1024 floats is produced using a CNN. This embedding encapsulates the sensitive biometric information that we aim to protect in this study.

To safeguard biometric characteristics, we employ a decomposition and transformation process to obfuscate the biometric embeddings. The resulting values are decomposed, shuffled, and then combined with a biometric_key, yielding a fixed-size code. This process functions similarly to a hash function; any modification to either the biometric template $BT_1$ or the biometric_key results in a distinctly different output. Nevertheless, the data remains transformed, allowing for subsequent facial recognition within this modified domain, which contrasts with traditional hash functions. In this context, we utilize the Biometric Template Protection function known as PolyProtect, as proposed in (Hahn and Marcel, 2022b), to generate cryptographic keys and initialization vectors for the AES-256 symmetric encryption algorithm used in this study. This deterministic implementation ensures that identical inputs consistently produce the same output. This design choice is intentional, as it aims to maintain the accuracy of the recognition system, which will be evaluated in Section 4.

In our implementation, the function accepts a feature vector $BT_1$ and a biometric_key as inputs to generate a cryptographic key and an initialization vector (IV) for the encryption process. This protection function, similar to PolyProtect (Hahn and Marcel, 2022b), irreversibly distorts biometric information, thereby increasing its entropy and transforming it into a fixed code that deviates from the distribution of the original biometric features. We have implemented a modified version of the PolyProtect algorithm. Initially, the embedding $V$, which contains 1024 values, is divided into 32 non-overlapping sets ($m = 32$), analogous to the case in which there is zero overlap in the PolyProtect algorithm (Hahn and Marcel, 2022b). The values of the embedding are then mapped using random coefficients $c$ and exponents $e$, which are derived from the biometric_key generated by the PRNG. A total of 32 terms are generated from a vector of 1024 values. Equation 1 corresponds to the generation of the first two terms, where $V$ represents the initial embedding. For each subsequent term, the most significant digit of the float value is retained, while the remaining digits are discarded. For instance, if a float value is 0.00005678, the digit '5' is retained, and all other digits are disregarded. In cases where the selected digit is zero, a random digit is generated using the PRNG, with the biometric_key serving as the seed. These selected digits are then concatenated to form a key and IV for the AES-256 encryption algorithm.

$$T_1 = \sum_{i=1}^{m} c_i \cdot V_i^{e_i}, \qquad T_2 = \sum_{i=m+1}^{2m} c_i \cdot V_i^{e_i} \tag{1}$$
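The mapping of Equation 1 followed by the digit-selection step can be sketched as below. This is not the authors' implementation: the coefficient range (non-zero integers in [-50, 50]), the choice of distinct exponents from 1 to m within each block, seeding Python's `random.Random` with the biometric_key, and applying the digit rule to every term are all assumptions made for illustration. The names `leading_digit` and `protect` are hypothetical.

```python
import random


def leading_digit(value):
    """Most significant decimal digit of a float (0 only for value == 0)."""
    # Scientific notation puts the leading digit first, e.g. '5.678...e-05'.
    return int(f"{abs(value):.15e}"[0])


def protect(embedding, biometric_key, m=32):
    """Map an embedding to one digit per block of m values (Eq. 1 style)."""
    rng = random.Random(biometric_key)  # deterministic for a given key
    nonzero = [c for c in range(-50, 51) if c != 0]
    digits = []
    for start in range(0, len(embedding), m):
        block = embedding[start:start + m]
        coeffs = [rng.choice(nonzero) for _ in block]
        exps = rng.sample(range(1, m + 1), len(block))
        term = sum(c * v ** e for c, v, e in zip(coeffs, block, exps))
        digit = leading_digit(term)
        if digit == 0:
            digit = rng.randrange(10)  # fallback drawn from the seeded PRNG
        digits.append(digit)
    return "".join(map(str, digits))


toy_embedding = [((i * 37) % 101 - 50) / 100 for i in range(1024)]
print(protect(toy_embedding, biometric_key=1234))
```

With 1024 values and m = 32 the output has 32 digits. The sketch is deterministic for a fixed embedding and key, matching the design choice described above; how the digits are packed into an AES-256 key and IV is left open here because the text does not specify it.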

The Registration server extracts the biometric characteristics, generating a biometric template $BT_1$ (eq. 2), which is then transformed and canceled to create the protected biometric template $BTP_1$ (eq. 3). This transformation is conducted through our Biometric Template Protection method, BTP, initialized with the biometric_key (based on the PolyProtect strategy).

$$\mathrm{CNN}(\mathit{valid\_frame}) = BT_1 \tag{2}$$

$$\mathrm{BTP}(\mathit{biometric\_key}, BT_1) = BTP_1 \tag{3}$$

A hash function is applied to $BTP_1$ to generate the user_hash parameter (eq. 4). Finally, the proof is the encryption (E) of the user_key combined with the user_hash, using $BTP_1$ as the key (eq. 5).

$$\mathit{hash\_function}(BTP_1) = \mathit{user\_hash} \tag{4}$$

$$\mathit{proof} = E(BTP_1, \mathit{user\_key} + \mathit{user\_hash}) \tag{5}$$
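Equations 4 and 5 can be illustrated with the standard library alone. Two assumptions are made loudly here: the `+` in Equation 5 is read as byte concatenation, and the cipher is a stand-in (XOR with a SHA-512 counter keystream), not the AES-256 used in the paper, chosen only so the sketch runs without third-party packages. The names `keystream_xor`, `make_proof`, and `check_proof` are hypothetical.

```python
import hashlib
import hmac


def keystream_xor(key, data):
    """Stand-in cipher (NOT AES): XOR data with a SHA-512 counter keystream."""
    out = bytearray()
    for offset in range(0, len(data), 64):
        pad = hashlib.sha512(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ p for b, p in zip(data[offset:offset + 64], pad))
    return bytes(out)


def make_proof(btp_1, user_key):
    """Registration: derive user_hash (Eq. 4) and the proof (Eq. 5)."""
    key = btp_1.encode("ascii")
    user_hash = hashlib.sha512(key).digest()
    proof = keystream_xor(key, user_key + user_hash)
    return user_hash, proof


def check_proof(btp_1_candidate, proof, user_key, user_hash):
    """Validation: the proof opens only under the same protected template."""
    plain = keystream_xor(btp_1_candidate.encode("ascii"), proof)
    return hmac.compare_digest(plain, user_key + user_hash)


user_key = b"k" * 32
user_hash, proof = make_proof("31415926535897932384626433832795", user_key)
print(check_proof("31415926535897932384626433832795", proof, user_key, user_hash))
```

The stand-in is symmetric, so the same function encrypts and decrypts. A production system would replace it with an authenticated AES mode from a vetted library.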

The Authentication server stores username, otp_key, biometric_key, user_hash, user_key, and proof, and is unaware of the biometric characteristics, which prevents it from decrypting the proof. After a successful registration, the Client stores the username, otp_key, and biometric_key. Finally, all parameters are discarded on the Registration server.

3.3 Validation Phase

The validation phase aims to authenticate a legitimate Client who has been previously registered. Over a secure communication channel, the Client sends their username and a new facial image $F_2$, which differs from the previously submitted image, $F_1$. Subsequently, the Authentication server retrieves the corresponding user_key and otp_key from the database using the provided username. The server then generates a Hash-based Message Authentication Code (HMAC) by applying an HMAC function that uses the user_key along with a randomly generated string produced by a PRNG function: MAC = HMAC(user_key, PRNG()).
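The server-side challenge MAC = HMAC(user_key, PRNG()) maps directly onto Python's `hmac` and `secrets` modules. The digest (SHA-512) and the 32-byte nonce length are assumptions, as the text does not state them, and `make_challenge_mac` is a hypothetical name.

```python
import hashlib
import hmac
import secrets


def make_challenge_mac(user_key):
    """Return a fresh per-session MAC keyed with the stored user_key."""
    nonce = secrets.token_bytes(32)  # plays the role of PRNG()
    return hmac.new(user_key, nonce, hashlib.sha512).digest()


mac = make_challenge_mac(b"k" * 32)
print(len(mac))
```

Because the nonce is regenerated for every session, a MAC captured in one validation attempt cannot be replayed in another.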

The MAC code is encrypted (E) using disposable codes generated by the TOTP function, which is initialized with the Client's otp_key (eq. 6), producing the cryptographic object $P_1$ (eq. 7). The TOTP function creates an authentication mechanism using temporary unique codes that are valid for a short period, specifically 30 seconds.

$$\mathrm{TOTP}(\mathit{otp\_key}) = \mathit{unique\_code}_i \tag{6}$$

$$E(\mathit{unique\_code}_i, MAC) = P_1 \tag{7}$$
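The disposable code of eq. (6) can be illustrated with a time-based one-time password in the style of RFC 6238. The sketch assumes HMAC-SHA-1 with dynamic truncation and a 30-second step; the text only fixes the 30-second validity, so the remaining parameters are assumptions. How the code is expanded into an encryption key for eq. (7) is not shown.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(otp_key: bytes, at: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238 style, HMAC-SHA-1)."""
    now = time.time() if at is None else at
    counter = int(now // step)  # index of the current 30-second window
    digest = hmac.new(otp_key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# Client and server derive the same code as long as they share otp_key
# and their clocks fall in the same 30-second window.
print(totp(b"12345678901234567890", at=59, digits=8))
```

Both sides compute the code independently, so the code itself never has to be transmitted.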

The Client begins by decrypting (D) the object $P_1$ with the disposable code (eq. 8) obtained from the TOTP function initialized with the otp_key (eq. 9). Next, the CNN model is used to process two photographs, the registration photo $F_1$ and the validation photo $F_2$, to obtain their respective embeddings $BT_1$ (eq. 10) and $BT_2$ (eq. 11). The delta is then calculated as the distance between these two embeddings (eq. 12). Our transformation function BTP, initialized with the biometric_key, is applied to $BT_2$, canceling out the biometric characteristics and producing $BTP_2$ (eq. 13). Subsequently, the Client encrypts (E) the MAC with $BTP_2$, producing the encrypted object $P_2$ (eq. 14). $P_2$ and delta are then transmitted to the Authentication server.

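$$D(\mathit{unique\_code}_i, P_1) = MAC \tag{8}$$

$$\mathrm{TOTP}(\mathit{otp\_key}) = \mathit{unique\_code}_i \tag{9}$$

$$\mathrm{CNN}(F_1) = BT_1 \tag{10}$$

$$\mathrm{CNN}(F_2) = BT_2 \tag{11}$$

$$\mathrm{dist}(BT_1, BT_2) = \mathit{delta} \tag{12}$$

$$\mathrm{BTP}(\mathit{biometric\_key}, BT_2) = BTP_2 \tag{13}$$

$$E(BTP_2, MAC) = P_2 \tag{14}$$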
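The text does not define the dist function of eq. (12). One reading that lets the server later recover the registration embedding from the validation embedding and delta is an element-wise difference, sketched below. This interpretation, and the function names, are assumptions rather than the authors' stated method.

```python
from typing import List, Sequence


def compute_delta(bt1: Sequence[float], bt2: Sequence[float]) -> List[float]:
    """Client side, eq. (12): delta as the element-wise difference BT1 - BT2 (assumed)."""
    if len(bt1) != len(bt2):
        raise ValueError("embeddings must have the same dimension")
    return [a - b for a, b in zip(bt1, bt2)]


def recover_bt1(bt2: Sequence[float], delta: Sequence[float]) -> List[float]:
    """Server side: rebuild the registration embedding as BT2 + delta."""
    if len(bt2) != len(delta):
        raise ValueError("embedding and delta must have the same dimension")
    return [b + d for b, d in zip(bt2, delta)]


bt1 = [0.5, -0.25, 1.0]   # toy embeddings, not real model output
bt2 = [0.25, 0.25, 0.5]
delta = compute_delta(bt1, bt2)
print(recover_bt1(bt2, delta))
```

Under this reading, delta alone reveals only how the two embeddings differ; the registration embedding becomes available only to a party that also holds $BT_2$.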

The Authentication server uses the CNN model to generate $BT'_2$ from the previously received $F_2$ (eq. 15). The biometric features $BT'_2$ are transformed using our BTP function initialized with the biometric_key, producing $BTP'_2$ (eq. 16). The server decrypts (D) $P_2$ with $BTP'_2$ (eq. 17) and checks whether the result matches the MAC constructed previously (eq. 18). If the exact same MAC cannot be obtained, the procedure is terminated as unauthorized. Next, the server obtains the biometric characteristics $BT'_1$ by applying


the delta to the vector $BT'_2$ (eq. 19). To produce $BTP'_1$, the biometric characteristics $BT'_1$ are canceled using the BTP function initialized with the biometric_key (eq. 20). The server decrypts (D) the proof with $BTP'_1$ (eq. 21) and tests whether the result is equal to the user_key combined with the user_hash. Finally, the procedure is concluded as authorized if the similarity between $BT'_1$ and $BT'_2$ is above a certain threshold (eq. 22).

Upon successful validation, the Authentication server acquires the biometric characteristics $BT_1$ and $BT_2$ for facial recognition purposes; these are not stored in the server database. By utilizing distinct keys, it is feasible to generate new and different proofs for the same image $F_1$.

This approach was designed to support various hashing and encryption methods; the selected cryptographic functions and their implementation are discussed in Subsection 3.4.

$$\mathrm{CNN}(F_2) = BT'_2 \tag{15}$$

$$\mathrm{BTP}(\mathit{biometric\_key}, BT'_2) = BTP'_2 \tag{16}$$

$$D(BTP'_2, P_2) = MAC' \tag{17}$$

$$MAC \iff MAC' \tag{18}$$

$$\mathrm{dist}(BT'_2, \mathit{delta}) = BT'_1 \tag{19}$$

$$\mathrm{BTP}(\mathit{biometric\_key}, BT'_1) = BTP'_1 \tag{20}$$

$$D(BTP'_1, \mathit{proof}) == \mathit{user\_key}' + \mathit{user\_hash}' \tag{21}$$

$$\mathrm{similarity}(BT'_1, BT'_2) > \mathit{threshold} \tag{22}$$
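Two of the server-side checks, the MAC comparison of eq. (18) and the similarity test of eq. (22), can be sketched as follows. The threshold value 0.5 and the function names are hypothetical, cosine similarity is assumed as the similarity function, and the proof check of eq. (21) is omitted because it depends on the chosen cipher.

```python
import hmac
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embeddings."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def authorize(mac: bytes, mac_received: bytes,
              bt1: Sequence[float], bt2: Sequence[float],
              threshold: float = 0.5) -> bool:
    """Reject on MAC mismatch (eq. 18), then apply the similarity test (eq. 22)."""
    # compare_digest runs in constant time to avoid leaking where the bytes differ.
    if not hmac.compare_digest(mac, mac_received):
        return False
    return cosine_similarity(bt1, bt2) > threshold
```

Checking the MAC first means that a request encrypted under the wrong protected template is rejected before any similarity score is computed.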

3.4 Implementation Details

The approach presented was implemented in Python and evaluated on a server running Ubuntu 20.04, equipped with an AMD Ryzen 7 5700G processor and 58 GB of RAM. As the PRNG, we used the SystemRandom function to produce the necessary random parameters. For symmetric encryption, we chose AES-256, which is available through the cryptography library. Additionally, we used SHA-512 as the hashing function, imported from the hashlib library. The BTP function, as previously detailed in Subsection 3.2, was developed by our team, following the strategy of Hahn and Marcel (2022b).

Biometric features are typically evaluated through

a similarity function, as demonstrated in prior studies.

In our proposed approach, however, we introduce de-

formations to these data. In Section 4, we present the

impact of this approach on the effectiveness of facial

authentication.

4 CRITICAL ANALYSIS

This section outlines the evaluation plan for the pro-

posed approach, detailing the benchmarks and se-

lected datasets, as well as presenting the results of the

experiments conducted in subsection 4.1. In subsec-

tion 4.2, a critical assessment of the method is pro-

vided, justifying the design choices made and dis-

cussing the advantages and disadvantages that these

choices impose on FRS.

4.1 Experiments and Results

This subsection is organized into three parts: datasets, CNN model, and results.

Datasets. The datasets were selected for their quality (minimal noise) and their availability. Therefore, this work utilizes models trained on the MS1M-V2 dataset (Deng et al., 2019), known for its lower noise levels compared to datasets such as MS-Celeb-1M (Guo et al., 2016). The datasets used for validation include LFW (Huang et al., 2008), AgeDB-30 (Moschoglou et al., 2017), CALFW (Zheng et al., 2017), CPLFW (Zheng and Deng, 2018), RFW (Wang et al., 2019), and XQLFW (Knoche et al., 2021). These validation datasets are challenging because they consist of in-the-wild data, where many frames may not meet quality standards (for example, because of blur, pixelation, or closed eyes). The images are 112×112 pixels in size and are aligned following the guidelines set forth in ArcFace (Deng et al., 2019). Each dataset comprises 6000 test cases.

CNN Model. The MagFace model was selected because, to the best of our knowledge, it consistently produces state-of-the-art results. The neural network was instantiated using the checkpoint files provided by the authors of MagFace, obtained by training on the MS1M-V2 dataset with stochastic gradient descent as the optimization algorithm (Meng et al., 2021). The cosine distance was used as the similarity metric for comparing the feature embeddings.

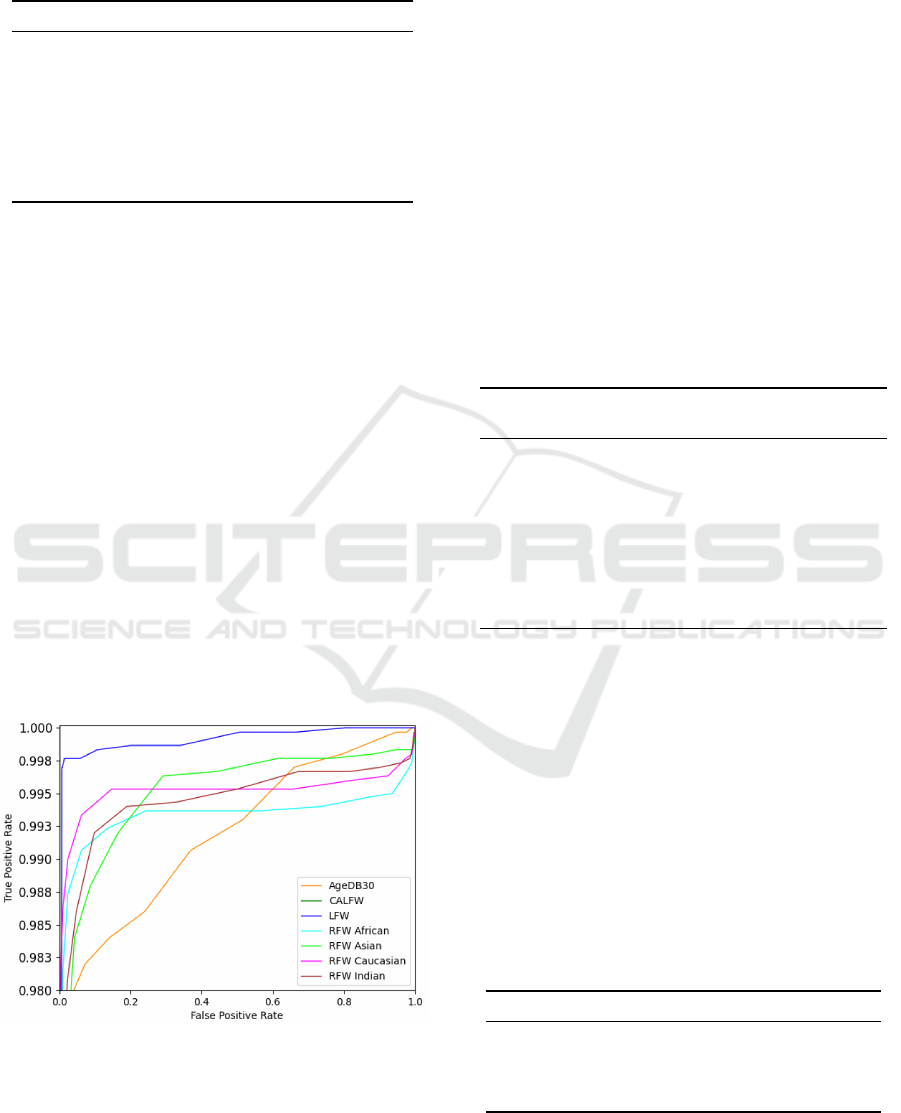

Results. The results obtained for the AgeDB-30, CALFW, LFW, RFW (African, Asian, Caucasian, Indian), and XQLFW benchmarks are presented in Table 3. Our approach performed well on most benchmarks in terms of accuracy, with the highest accuracy achieved on LFW at 99.43%, followed closely by AgeDB-30 and RFW African/Caucasian/Indian at between 98.1% and 98.9%. The Equal Error Rate (EER) values were relatively low for most datasets, indicating good performance in terms of false match rate and false non-match rate. The LFW dataset had the lowest EER at 0.62%, while

ICPRAM 2025 - 14th International Conference on Pattern Recognition Applications and Methods


Table 3: Our approach evaluated on different benchmarks using the following metrics: accuracy, standard deviation (std), EER, and AUC.

| Dataset | Accuracy ± std | EER | AUC |
|---|---|---|---|
| AGEDB30 | 0.981 ± 0.009 | 0.02320 | 0.99176 |
| CALFW | 0.958 ± 0.036 | 0.52459 | 0.42706 |
| LFW | 0.994 ± 0.004 | 0.00624 | 0.99647 |
| African | 0.986 ± 0.004 | 0.01587 | 0.99323 |
| Asian | 0.976 ± 0.006 | 0.02358 | 0.99474 |
| Caucasian | 0.989 ± 0.003 | 0.01289 | 0.99515 |
| Indian | 0.982 ± 0.007 | 0.02032 | 0.99436 |
| XQLFW | 0.837 ± 0.019 | 0.17128 | 0.90987 |

CALFW and XQLFW had the highest, with rates of 52% and 17%, respectively. The AUC values were also high on most datasets, with LFW again leading with an AUC of 99.65%. AgeDB-30 and the four RFW subsets (African, Asian, Caucasian, and Indian) also showed AUC values above 99%. Overall, the findings suggest that our approach performed well on the benchmark datasets, particularly on LFW, demonstrating high accuracy and discriminative performance across various demographic groups. The ROC curve in Fig. 2 further illustrates the system’s performance. Although the proposed technique maintains a high level of accuracy, future research should focus on improving performance on datasets with lower accuracy and higher EER values, such as XQLFW. However, tests on low-quality datasets should be interpreted with caution. Further investigation is necessary to improve performance on the other benchmarks.
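For reference, the EER reported above is the operating point at which the false acceptance rate equals the false rejection rate. The sketch below shows one simple way to estimate it from lists of genuine and impostor similarity scores; the threshold scan and the function names are assumptions and do not reproduce the evaluation code used for Table 3.

```python
from typing import Optional, Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """FAR: impostor scores accepted; FRR: genuine scores rejected."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    frr = sum(s < threshold for s in genuine) / len(genuine)
    return far, frr


def equal_error_rate(genuine: Sequence[float], impostor: Sequence[float]) -> float:
    """Scan observed scores as thresholds; return the error rate where FAR and FRR are closest."""
    best_gap: Optional[float] = None
    eer = 1.0
    for t in sorted(set(genuine) | set(impostor)):
        far, frr = far_frr(genuine, impostor, t)
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer
```

With only a few scores the estimate is coarse, since FAR and FRR can change only in steps of one over the number of pairs.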

Figure 2: ROC curve evaluating our approach across differ-

ent benchmarks.

Table 4 presents the True Acceptance Rate (TAR) at False Acceptance Rates (FAR) ranging from 0.0001 to 0.1 for the validation datasets. At a FAR of 0.0001, AgeDB-30 reaches a TAR of 98.94% and CALFW a TAR of 94.17%. CPLFW starts at a much lower TAR of 28.95% at a FAR of 0.0001, rising to 97.78% at a FAR of 0.001 and 98.25% at a FAR of 0.01. Similarly, LFW starts with a modest TAR of 28.3% at a FAR of 0.0001 but improves to 99.91% at a FAR of 0.01. The RFW subsets display high TAR values at a FAR of 0.0001, ranging from 97.47% to 99.75%. In comparison, XQLFW shows a TAR of 52.10% at a FAR of 0.0001, which increases to 86.18% at a FAR of 0.1. These results highlight how performance varies across datasets: some achieve a high TAR even at low FAR values, while others improve substantially as the FAR increases.

Table 4: Accuracy of our approach (%), presented as TAR at FARs ranging from 0.0001 to 0.1 across different benchmarks.

| Validation dataset | TAR@FAR=0.0001 | TAR@FAR=0.001 | TAR@FAR=0.01 | TAR@FAR=0.1 |
|---|---|---|---|---|
| AGEDB30 | 98.94 | 99.49 | 99.49 | 99.53 |
| CALFW | 94.17 | 98.99 | 98.99 | 98.99 |
| CPLFW | 28.95 | 97.78 | 98.25 | 98.25 |
| LFW | 28.3 | 53.19 | 99.91 | 99.91 |
| RFW African | 99.31 | 99.79 | 99.88 | 99.91 |
| RFW Asian | 97.47 | 99.16 | 99.88 | 99.93 |
| RFW Caucasian | 99.75 | 99.89 | 99.94 | 99.94 |
| RFW Indian | 99.22 | 99.73 | 99.84 | 99.84 |
| XQLFW | 52.10 | 74.42 | 75.19 | 86.18 |
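A TAR@FAR value of the kind listed in Table 4 can be obtained by choosing the strictest threshold whose FAR does not exceed the target and then measuring the fraction of genuine pairs accepted. The sketch below is one such procedure under stated assumptions (higher score means more similar; scores strictly above the threshold are accepted); the function name is hypothetical and this is not the authors' evaluation script.

```python
import math
from typing import Sequence


def tar_at_far(genuine: Sequence[float], impostor: Sequence[float],
               target_far: float) -> float:
    """True acceptance rate at the threshold that keeps FAR <= target_far."""
    if not genuine or not impostor:
        raise ValueError("both score lists must be non-empty")
    ranked = sorted(impostor, reverse=True)
    # Number of impostor pairs that may be accepted without exceeding the target.
    allowed = math.floor(target_far * len(ranked) + 1e-9)
    # Accept only scores strictly above the first impostor score we must reject.
    threshold = ranked[allowed] if allowed < len(ranked) else float("-inf")
    accepted = sum(score > threshold for score in genuine)
    return accepted / len(genuine)
```

With 6000 test cases per dataset, a FAR of 0.0001 permits at most a fraction of one false acceptance among the impostor pairs, which may explain why the first column of Table 4 is the most volatile.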

The results demonstrate that our approach achieves performance levels comparable to the current state of the art. Compared with MagFace (Meng et al., 2021) as a baseline, our method shows differences of 0.08, 1.36, 2.84, and 5.38 percentage points on the LFW, AgeDB-30, CALFW, and CPLFW datasets, respectively (see Table 5). Our design integrates various protective strategies for facial recognition while facilitating accurate decision-making through a systematic comparison process.

Table 5: Verification accuracy (%) on the LFW, AgeDB-30, CALFW, and CPLFW benchmarks for ArcFace (Deng et al., 2019), MagFace (Meng et al., 2021), and our proposed method.

| Datasets | ArcFace | MagFace | Our | Gain |
|---|---|---|---|---|
| LFW | 99.81 | 99.83 | 99.91 | 0.08 |
| AgeDB30 | 98.05 | 98.17 | 99.53 | 1.36 |
| CALFW | 95.96 | 96.15 | 98.99 | 2.84 |
| CPLFW | 92.72 | 92.87 | 98.25 | 5.38 |
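The Gain column of Table 5 is the difference between our accuracy and the MagFace baseline, in percentage points. The short check below recomputes it from the tabulated values; the dictionary layout is only an illustration.

```python
# (MagFace, ours) verification accuracy in %, copied from Table 5.
rows = {
    "LFW": (99.83, 99.91),
    "AgeDB30": (98.17, 99.53),
    "CALFW": (96.15, 98.99),
    "CPLFW": (92.87, 98.25),
}

# Gain = ours - MagFace, rounded to two decimals to absorb floating-point noise.
gains = {name: round(ours - magface, 2) for name, (magface, ours) in rows.items()}
print(gains)
```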


4.2 Critical Evaluation

The registration server operates as a Trusted Third-

Party (TTP), managing temporary and revocable bio-

metric records. To ensure its trustworthiness, it is

essential that our approach is both open-source and

transparent. This transparency facilitates analysis, en-

hancement, and the detection of vulnerabilities.

The advantage of this approach lies in the fact that the database does not store biometric data. As a result, it is not possible to recognize users unless they can be linked to the parameters username, user_key, or biometric_key. To mitigate this risk, it is essential to adopt best practices by generating random parameters for these attributes. This way, identifying clients through the database becomes challenging or even impossible without additional sources of information, such as network traffic captures or system logs.
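As a minimal sketch of this practice, random and unlinkable values for username and user_key can be drawn from the operating system's entropy source. The lengths and the function name below are assumptions; the biometric_key is left out because its format depends on the BTP method.

```python
import secrets
from typing import Dict


def new_account_parameters() -> Dict[str, str]:
    """Generate identifiers that carry no information about the person."""
    return {
        "username": secrets.token_urlsafe(16),  # 16 random bytes, URL-safe text
        "user_key": secrets.token_hex(32),      # 32 random bytes as 64 hex characters
    }


params = new_account_parameters()
```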

Brute-force attacks on servers can be mitigated through the use of a Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA), which is designed to differentiate between human users and automated algorithms. We recommend hCaptcha² due to its strong emphasis on user privacy, which can be particularly advantageous for companies that prioritize compliance with data protection regulations such as the General Data Protection Regulation (GDPR). Additionally, protection against Distributed Denial-of-Service (DDoS) attacks is enhanced by leveraging an external service, thereby preventing the extensive exploitation of computational resources associated with FRS.

In FRS, it is crucial to achieve a balance between the accuracy of authentication and the protection of biometric data privacy. A drawback of the current approach is that the authentication server is required to compute the biometric features of the client, referred to as $BT_1$ and $BT_2$. This necessity creates a compromise between the client’s privacy and the application’s functionality, facilitating client authentication through facial recognition. The complexities of this authentication process revolve around the server’s ability to determine whether delta and $BT_2$ are derived from the same client data represented by $BT_1$. This verification relies on the assumption that $BT_1$ and $BT_2$ exhibit similar statistical distributions and that they diverge from one another by a defined parameter, delta. As a result, the system aims to achieve reliable authentication while simultaneously safeguarding the privacy of the biometric data stored in its persistent database.

To successfully impersonate a user, an attacker would need to obtain the user’s registration photograph $F_1$, a second validation photograph $F_2$, the username, the otp_key, the biometric_key, and the corresponding CNN model to generate $BT_1$, $BT_2$, delta, and $BTP_2$. This could be achievable if the attacker gains unauthorized access to the client’s device or the authentication server. A Man-in-the-Middle (MITM) position would only be feasible if the communications were not conducted securely, for instance, without the use of Transport Layer Security.

² https://www.hcaptcha.com

The proposed solution has several drawbacks that suggest areas for future research. First, the registration photo is stored on the client side, meaning that losing it would necessitate a new photo submission for biometric registration. Second, compliance with guidelines such as the ICAO standard is crucial to ensure the photo meets biometric requirements. Third, both $F_1$ and $F_2$ are static objects; if an attacker accesses the client device, these could be exploited for spoofing.

As a future direction, the solution could incor-

porate Active Liveness Detection, utilizing dynamic

video and audio inputs to enhance security against

spoofing. Lastly, refining the registration process to

better align with ICAO standards could improve reli-

ability.

5 CONCLUSIONS

In conclusion, our approach to MFA for facial recognition technology prioritizes the privacy of biometric data by not storing it. Instead, it employs cryptographic algorithms to generate signatures (proofs) confirming the user’s identity. The results indicate excellent performance in face recognition tasks across various benchmark datasets, demonstrating high accuracy and AUC values, particularly on the LFW dataset. The TAR at different FAR levels further underscores the reliability of the method, placing it on par with state-of-the-art solutions, albeit with slight variances in performance metrics.

Overall, our approach excels by integrating mul-

tiple techniques to enhance precision and efficacy

of FRS, paving the way for advanced applications

across numerous domains. Notably, it avoids the stor-

age of biometric data and its representations within

a database; the generated proofs do not contain any

biometric information and can be revoked or recre-

ated by simply changing the key. Additionally, the

use of standard algorithms and hash functions facili-

tates the creation of robust proofs, leveraging flexibil-

ity in combination with other CNN and cryptographic

algorithms.


Nevertheless, certain limitations persist, as security depends on the careful validation of data submitted by the client. Future work could focus on incorporating image quality assessment and liveness detection techniques to further enhance the security of our approach.

ACKNOWLEDGEMENTS

This work has been supported by the Fundação para a Ciência e a Tecnologia (FCT) and the Fundo Social Europeu (FSE) through research grant 2023.02790.BD and by project UIDB/00048/20202. The authors would like to thank the INCM, ISR-UC, and CISUC. The computational part of this study was supported by VISTeam at ISR-Coimbra.

REFERENCES

Abdullahi, S. M., Sun, S., Wang, B., Wei, N., and Wang,

H. (2024). Biometric template attacks and recent pro-

tection mechanisms: A survey. Information Fusion,

103:102144.

Bahmanisangesari, S. (2024). A novel transformer-based

multi-step approach for predicting common vulnera-

bility severity score.

Boddeti, V. N. (2018). Secure face matching using fully

homomorphic encryption. In 2018 IEEE 9th interna-

tional conference on biometrics theory, applications

and systems (BTAS), pages 1–10. IEEE.

Dabbah, M., Woo, W., and Dlay, S. (2007). Secure authen-

tication for face recognition. In 2007 IEEE sympo-

sium on computational intelligence in image and sig-

nal processing, pages 121–126. IEEE.

Dang, T. and Choi, D. (2020). A survey on face-based

cryptographic key generation. Smart Media Journal,

9(2):39–50.

Delac, K. and Grgic, M. (2004). A survey of biometric

recognition methods. In Proceedings. Elmar-2004.

46th International Symposium on Electronics in Ma-

rine, pages 184–193. IEEE.

Deng, J., Guo, J., Xue, N., and Zafeiriou, S. (2019). Ar-

cface: Additive angular margin loss for deep face

recognition. In Proceedings of the IEEE/CVF con-

ference on computer vision and pattern recognition,

pages 4690–4699.

Dong, X., Wong, K., Jin, Z., and Dugelay, J.-l. (2019).

A cancellable face template scheme based on nonlin-

ear multi-dimension spectral hashing. In 2019 7th

International Workshop on Biometrics and Forensics

(IWBF), pages 1–6. IEEE.

Drozdowski, P., Buchmann, N., Rathgeb, C., Margraf, M.,

and Busch, C. (2019). On the application of homo-

morphic encryption to face identification. In 2019

International Conference of the Biometrics Special In-

terest Group (BIOSIG), pages 1–5.

Drozdowski, P., Stockhardt, F., Rathgeb, C., Osorio-Roig,

D., and Busch, C. (2021a). Feature fusion methods

for indexing and retrieval of biometric data: Applica-

tion to face recognition with privacy protection. IEEE

Access, 9:139361–139378.

Drozdowski, P., Stockhardt, F., Rathgeb, C., Osorio-Roig,

D., and Busch, C. (2021b). Feature fusion methods

for indexing and retrieval of biometric data: Applica-

tion to face recognition with privacy protection. IEEE

Access, 9:139361–139378.

Ekka, M. U., Mze, O. A., Singh, T., and Raghava, N.

(2022). Attendance management system using mod-

ern face recognition and gesture recognition using

deep learning. In Advances in Manufacturing Tech-

nology and Management: Proceedings of 6th Inter-

national Conference on Advanced Production and In-

dustrial Engineering (ICAPIE)—2021, pages 527–

535. Springer.

El-Shafai, W., Mohamed, F. A. H. E., Elkamchouchi, H. M.,

Abd-Elnaby, M., and Elshafee, A. (2021). Efficient

and secure cancelable biometric authentication frame-

work based on genetic encryption algorithm. IEEE

Access, 9:77675–77692.

Engelsma, J. J., Jain, A. K., and Boddeti, V. N. (2022).

Hers: Homomorphically encrypted representation

search. IEEE Transactions on Biometrics, Behavior,

and Identity Science, 4(3):349–360.

Gioacchini, L., Mellia, M., Drago, I., Delsanto, A., Sira-

cusano, G., and Bifulco, R. (2024). Autopenbench:

Benchmarking generative agents for penetration test-

ing. arXiv preprint arXiv:2410.03225.

Guo, Y., Zhang, L., Hu, Y., He, X., and Gao, J. (2016). Ms-

celeb-1m: A dataset and benchmark for large-scale

face recognition. In Computer Vision–ECCV 2016:

14th European Conference, Amsterdam, The Nether-

lands, October 11-14, 2016, Proceedings, Part III 14,

pages 87–102. Springer.

Hahn, V. K. and Marcel, S. (2022a). Biometric template

protection for neural-network-based face recognition

systems: A survey of methods and evaluation tech-

niques. IEEE Transactions on Information Forensics

and Security, 18:639–666.

Hahn, V. K. and Marcel, S. (2022b). Towards protecting

face embeddings in mobile face verification scenar-

ios. IEEE Transactions on Biometrics, Behavior, and

Identity Science, 4(1):117–134.

Huang, G. B., Mattar, M., Berg, T., and Learned-Miller, E. (2008). Labeled faces in the wild: A database for studying face recognition in unconstrained environments. In Workshop on faces in ’Real-Life’ Images: detection, alignment, and recognition.

Jindal, A. K., Shaik, I., Vasudha, V., Chalamala, S. R., Ma,

R., and Lodha, S. (2020). Secure and privacy preserv-

ing method for biometric template protection using

fully homomorphic encryption. In 2020 IEEE 19th

international conference on trust, security and privacy

in computing and communications (TrustCom), pages

1127–1134. IEEE.

Juels, A. and Wattenberg, M. (1999). A fuzzy commitment

scheme. In Proceedings of the 6th ACM conference


on Computer and communications security, pages 28–

36.

Keller, D., Osadchy, M., and Dunkelman, O. (2020). Fuzzy

commitments offer insufficient protection to biometric

templates produced by deep learning. arXiv preprint

arXiv:2012.13293.

Keller, D., Osadchy, M., and Dunkelman, O. (2021). Invert-

ing binarizations of facial templates produced by deep

learning (and its implications). IEEE Transactions on

Information Forensics and Security, 16:4184–4196.

Kirchgasser, S., Uhl, A., Martinez-Diaz, Y., and Mendez-

Vazquez, H. (2020). Is warping-based cancellable

biometrics (still) sensible for face recognition? In

2020 IEEE International joint conference on biomet-

rics (IJCB), pages 1–9. IEEE.

Knoche, M., Hormann, S., and Rigoll, G. (2021). Cross-

quality lfw: A database for analyzing cross-resolution

image face recognition in unconstrained environ-

ments. In 2021 16th IEEE International Confer-

ence on Automatic Face and Gesture Recognition (FG

2021), pages 1–5. IEEE.

Lala, S. K., Kumar, A., and Subbulakshmi, T. (2021).

Secure web development using owasp guidelines.

In 2021 5th International Conference on Intelli-

gent Computing and Control Systems (ICICCS), pages

323–332. IEEE.

Li, S. and Kot, A. C. (2010). Privacy protection of fin-

gerprint database. IEEE Signal Processing Letters,

18(2):115–118.

Li, S. and Kot, A. C. (2012). Fingerprint combination for

privacy protection. IEEE transactions on information

forensics and security, 8(2):350–360.

Ma, Y., Wu, L., Gu, X., He, J., and Yang, Z. (2017). A se-

cure face-verification scheme based on homomorphic

encryption and deep neural networks. IEEE Access,

5:16532–16538.

Manisha and Kumar, N. (2020). On generating cancelable

biometric templates using visual secret sharing. In

Arai, K., Kapoor, S., and Bhatia, R., editors, Intelli-

gent Computing, pages 532–544, Cham. Springer In-

ternational Publishing.

Marcel, S., Fierrez, J., and Evans, N. (2023). Hand-

book of Biometric Anti-Spoofing: Presentation Attack

Detection and Vulnerability Assessment, volume 1.

Springer.

Meng, Q., Zhao, S., Huang, Z., and Zhou, F. (2021).

Magface: A universal representation for face recog-

nition and quality assessment. In Proceedings of the

IEEE/CVF conference on computer vision and pattern

recognition, pages 14225–14234.

Moschoglou, S., Papaioannou, A., Sagonas, C., Deng, J.,

Kotsia, I., and Zafeiriou, S. (2017). Agedb: the first

manually collected, in-the-wild age database. In pro-

ceedings of the IEEE conference on computer vision

and pattern recognition workshops, pages 51–59.

Mousa, A., Karabatak, M., and Mustafa, T. (2020).

Database security threats and challenges. In 2020 8th

International Symposium on Digital Forensics and Se-

curity (ISDFS), pages 1–5. IEEE.

National Inst Of Standards And Technology Gaithersburg

Md (2001). Advanced Encryption Standard (AES).

https://apps.dtic.mil/sti/citations/ADA403903. (ac-

cessed: April, 2024).

Osorio-Roig, D., Rathgeb, C., Drozdowski, P., and Busch,

C. (2021). Stable hash generation for efficient privacy-

preserving face identification. IEEE Transactions on

Biometrics, Behavior, and Identity Science, 4(3):333–

348.

Ramu, T. and Arivoli, T. (2012). Biometric template se-

curity: an overview. In Proceedings of International

Conference on Electronics, volume 65.

Ratha, N. K., Connell, J. H., and Bolle, R. M. (2001).

Enhancing security and privacy in biometrics-based

authentication systems. IBM systems Journal,

40(3):614–634.

Rui, Z. and Yan, Z. (2019). A survey on biometric authen-

tication: Toward secure and privacy-preserving iden-

tification. IEEE Access, 7:5994–6009.

Sarkar, A. and Singh, B. K. (2020). A review on perfor-

mance, security and various biometric template pro-

tection schemes for biometric authentication systems.

Multimedia Tools and Applications, 79(37):27721–

27776.

Schroff, F., Kalenichenko, D., and Philbin, J. (2015).

Facenet: A unified embedding for face recognition

and clustering. In Proceedings of the IEEE con-

ference on computer vision and pattern recognition,

pages 815–823.

Singh, A., Srivastva, R., and Singh, Y. N. (2021). Plexnet:

An ensemble of deep neural networks for biometric

template protection. International Journal of Ad-

vanced Computer Science and Applications, 12(4).

Smith, R. and Xu, J. (2011). A survey of personal privacy

protection in public service mashups. In Proceedings

of 2011 IEEE 6th International Symposium on Service

Oriented System (SOSE), pages 214–224. IEEE.

Tremoço, J., Medvedev, I., and Gonçalves, N. (2021). Qualface: Adapting deep learning face recognition for id and travel documents with quality assessment. In 2021 International Conference of the Biometrics Special Interest Group (BIOSIG), pages 1–6.

Wang, M., Deng, W., Hu, J., Tao, X., and Huang, Y. (2019).

Racial faces in the wild: Reducing racial bias by in-

formation maximization adaptation network. In Pro-

ceedings of the ieee/cvf international conference on

computer vision, pages 692–702.

Wu, H., Wu, J., Wu, R., Sharma, A., Machiry, A., and

Bianchi, A. Veribin: Adaptive verification of patches

at the binary level.

Zheng, T. and Deng, W. (2018). Cross-pose lfw: A database

for studying cross-pose face recognition in uncon-

strained environments. Beijing University of Posts

and Telecommunications, Tech. Rep, 5(7):5.

Zheng, T., Deng, W., and Hu, J. (2017). Cross-age

lfw: A database for studying cross-age face recogni-

tion in unconstrained environments. arXiv preprint

arXiv:1708.08197.
