A Preliminary Study on the Use of Generative Artificial Intelligence to Enrich Dashboards

Mikel Villamañe (a), Aitor Renobales-Irusta (b) and Ainhoa Álvarez (c)

Department of Computer Languages and Systems, University of the Basque Country UPV/EHU, Spain

Keywords: GenAI, Learning Analytics Dashboards.

Abstract: Dashboards are a widespread technique for showing information to teachers in educational environments. However, teachers often have difficulty understanding the charts and, therefore, making decisions based on the information shown. That is, dashboards often just show information without helping teachers identify which problems students have with the course; consequently, they do not help teachers provide adequate interventions. In this context, the aim of this work is to analyse whether Generative Artificial Intelligence (GenAI) can help teachers understand dashboards and, in turn, decide when to provide interventions and which kinds of interventions could be most beneficial for students.

1 INTRODUCTION

The field of learning analytics (LA) is oriented to collecting, analysing and reporting data so that learning and the environment in which it occurs can be understood and optimized. Clow's learning analytics cycle (Clow, 2012) includes four phases: learners, data, metrics or analytics, and interventions. In this work we focus on the analytics step of the cycle (Clow, 2012). The objective of this step is to process the acquired data and provide insights into the learning process. The results obtained from analysing data should be adequately reported and presented so that they genuinely support the learning and teaching process (Yan et al., 2024). One of the main ways to provide those insights is through dashboards and visualizations.

There is growing interest in learning analytics dashboards that support teachers in their teaching (Matcha et al., 2020). However, understanding dashboards and making decisions based on the information they present has been found to be challenging for teachers (Pozdniakov et al., 2023). Therefore, teachers need tools that help them understand these dashboards. In this respect, according to Pozdniakov et al. (2023), there are two main strategies to overcome the problems teachers face when using dashboards: improving teachers' data and visual literacy, and improving the explanatory capabilities of dashboards.

(a) https://orcid.org/0000-0002-4450-1056
(b) https://orcid.org/0000-0002-2148-9269
(c) https://orcid.org/0000-0003-0735-5958

Generative artificial intelligence (GenAI) has recently emerged, and several authors have suggested that it could help overcome the problems detected when teachers use dashboards. For example, Yan et al. (2024) indicate that GenAI can be used in all the steps of Clow's learning analytics cycle (Clow, 2012) and propose that GenAI could provide rich explanations of dashboards and facilitate their comprehension.

The work presented in this paper investigates whether GenAI can help enrich current dashboards in order to improve teachers' comprehension and analysis of the data shown. This, in turn, should help teachers provide adequate and informed interventions.

The main objective of this work was to carry out a first exploration of whether GenAI can address the visual literacy and explainability problems teachers face when using dashboards.

Villamañe, M., Renobales-Irusta, A. and Álvarez, A.
A Preliminary Study on the Use of Generative Artificial Intelligence to Enrich Dashboards.
DOI: 10.5220/0013265600003932
In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 2, pages 265-272
ISBN: 978-989-758-746-7; ISSN: 2184-5026
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

With that objective in mind, we analysed the enrichment of dashboards with GenAI-generated output. The idea was to include a button next to each chart of the dashboard that shows a GenAI-generated explanation, in order to improve understanding of the dashboard and help teachers interpret the visualization. In this first attempt, the objective was to answer the following two research questions:

RQ1: Can the use of GenAI help teachers understand the charts used in dashboards? That is, can GenAI help improve teachers' visual literacy?

RQ2: Can GenAI provide explanations that help teachers interpret the data shown and make decisions?

This paper presents the prompt engineering process carried out as a first approach to assess how effectively GenAI can address the research questions and support the analytics step of the LA cycle. The paper is organized as follows. First, related work is presented. Next, the prompt engineering process is described, followed by a discussion section. Finally, conclusions are drawn and future lines of work are outlined.

2 RELATED WORK

The use of technology-enhanced learning environments has increased in recent years (Matcha et al., 2020). This, in turn, has made it possible to collect student-related data that can be used to improve learning experiences. In this context, Learning Analytics Dashboards are widely used because they help target users visually comprehend student performance (Pinargote et al., 2024). Many learning analytics dashboards are oriented to supporting teachers. Dashboards use different charts to represent student data, with bar charts and radar charts being the most common. The information displayed on these charts is typically limited to a single individual or to the comparison of one individual with their group (Matcha et al., 2020).

For the prompt engineering process presented in

this paper, we have taken a set of real charts from the

dashboard of the AdESMuS system (Villamane et al.,

2020). This system was selected because the teachers

participating in the study had already worked with

this system and it provides the most habitual

perspectives of data: information regarding a single

student and information of a student contextualized in

the group he or she belongs to. For individual students

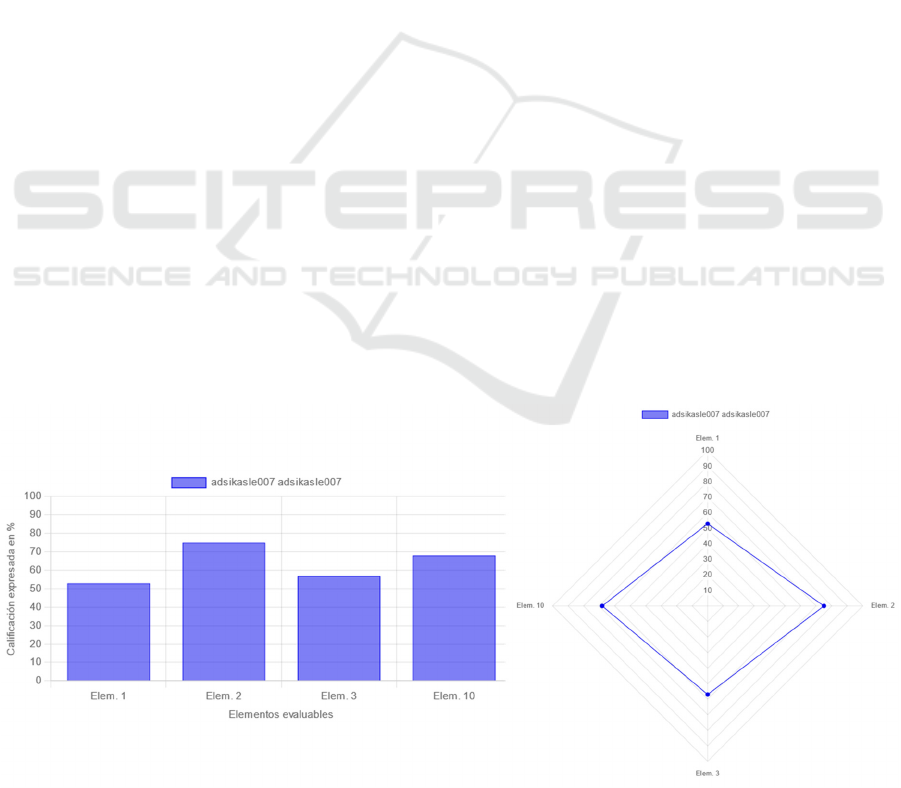

(see Figure 1), the system includes the visualization

of data using both bar and radar plots. For the student

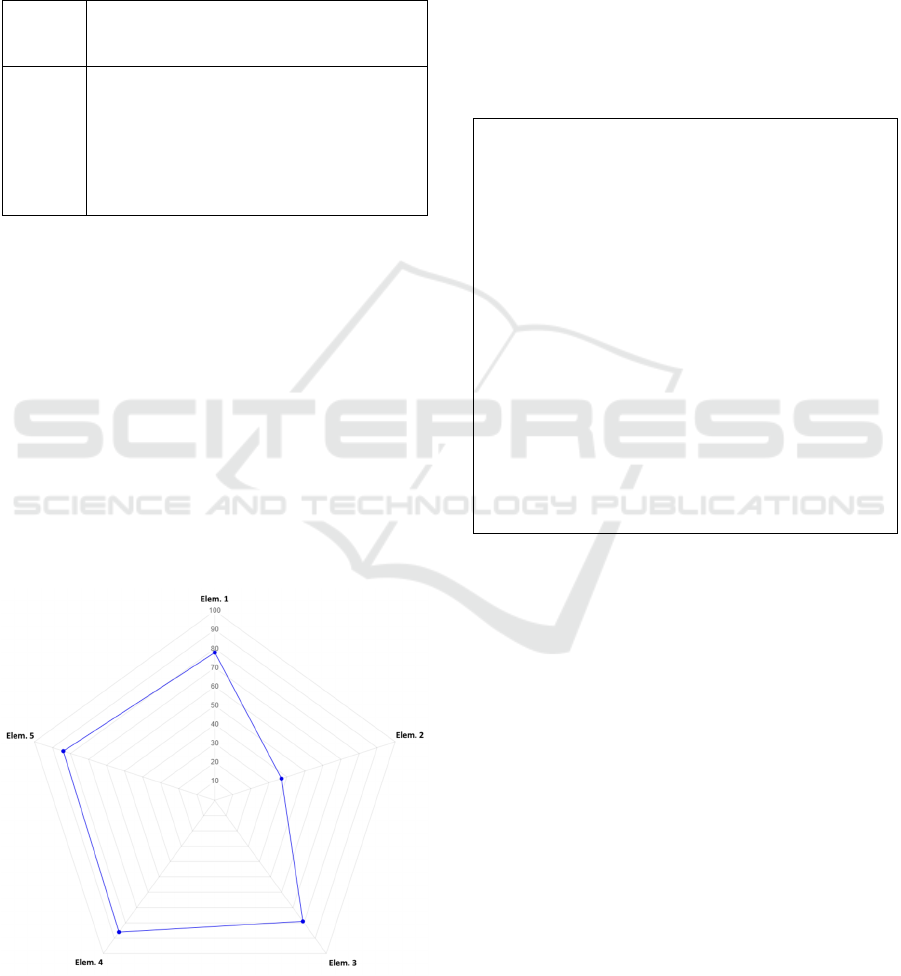

data against her or his group (see

Figure 2

), AdESMuS

includes visualizations using bar, radar and violin

plots.

These types of charts are widely used, but teachers still find it "challenging to make sense of LA dashboards" (Pozdniakov et al., 2023). In that paper, the authors propose two strategies to address this problem: improving teachers' data literacy and improving the explanatory features of current dashboards.

Data literacy is defined by Gašević and Merceron (2022) as "educators' ability to use data effectively and responsibly". However, teachers usually face dashboards rather than raw data, so what is required is visual literacy, defined as "the skills required by teachers to interact with LA dashboards" (Pozdniakov et al., 2023). Even though progress has been made in this area, teachers still have problems understanding the information provided in dashboards (Pozdniakov et al., 2023). Therefore, tools that compensate for teachers' lack of visual literacy are required.

Figure 1: Visualizations for individual students.

Figure 2: Visualizations of individual students against the group.

Moreover, dashboards usually only show information in charts, and teachers have to search for insights themselves. Some authors have introduced the idea of explanatory dashboards, whose main goal is to present and communicate insights (Echeverria et al., 2018). Improving these explanatory features has become a challenge to address in order to provide more contextualized feedback.

In this area, Pinargote et al. (2024) indicate that there is currently a research gap related to the generation of feedback mechanisms and narrative elements in LA dashboards. Our research focuses on addressing some aspects of this gap.

Next, the prompt engineering process followed to answer the research questions is described.

3 PROMPT ENGINEERING PROCESS

We carried out an iterative prompt engineering process to determine how best to formulate the prompts in order to obtain outputs that could help reduce the problems teachers have when using dashboards. As the GenAI tool for our work, we used ChatGPT with its GPT-4o model. All the prompts included the corresponding chart generated by the AdESMuS dashboard.
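To automate this from a dashboard, the chart and the prompt text would have to be sent together in a single request. As a minimal sketch, assuming the message format of the OpenAI Chat Completions API (the function name `build_chart_message` is hypothetical), the request body could be assembled as follows; the sketch only builds the payload and does not call any service.

```python
import base64


def build_chart_message(prompt_text: str, image_bytes: bytes, mime: str = "image/png") -> list:
    """Build a one-turn message list that pairs a text prompt with a chart image.

    Assumption: the content-part layout of the OpenAI Chat Completions API,
    i.e. a "text" part followed by an "image_url" part holding a base64 data URL.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt_text},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:{mime};base64,{encoded}"},
                },
            ],
        }
    ]
```

With the official `openai` Python package, the returned list could then be passed as `client.chat.completions.create(model="gpt-4o", messages=...)`, where the image bytes come from a hypothetical export of the dashboard chart.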

The prompt engineering process was guided by the CLEAR framework (Lo, 2023) and by the strategies and tactics for prompt engineering provided by the OpenAI platform (OpenAI, 2024). According to the CLEAR framework, prompts should be concise, logical (structured and coherent), explicit, adaptive (experimenting with various prompt formulations) and reflective. The OpenAI guide, among other aspects, highlights the relevance of:

- Identifying errors or hallucinations in the output
- Output customization: tailoring the output generated by the Large Language Model (LLM)
- Prompt improvement: question refinement and alternative approaches

Two teachers were involved in generating and improving the prompts.

The prompt engineering process, in which the prompts were iteratively improved, included several rounds of the cycle outlined in Figure 3. These rounds are described next.

Figure 3: Prompt engineering process (a cycle between Prompt Generation / Refinement and Output Analysis).

3.1 Round 1 - General Prompts

In the first round, a set of general prompts (see Table 1) was generated in order to analyse what GenAI could provide when given different types of charts.

Table 1: Initial General Prompts.

Prompt1: Give me an interpretation of the following chart

Prompt2: I am a teacher and the following bar chart shows the grades (expressed as a percentage (%)) on the assessable items (on the horizontal axis) for student adikasle007 in one of my courses. Could you make an interpretation of the data?

The analysis of the output obtained in this first round focused on whether the output contained errors or hallucinations.

These prompts were tested with different chart types obtained from the AdESMuS system, and two circumstances that generated hallucinations in the output were detected. When radar plots were provided, GenAI was not able to identify all the data in the chart and read some of the values incorrectly. For example, for Figure 4 the system identified Elem3 as having a value of 20 when it is actually 80. Similarly, for bar charts including many assessable items, and therefore many bars (for example, 10), GenAI was not able to identify the correct values for the last elements.

Figure 4: Radar chart.
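In this round, hallucinations were identified by checking the values the model reported against the real chart data. A minimal sketch of that comparison is shown below, assuming the model's readings have already been extracted into a dictionary; the extraction step, the function name `find_misreadings` and the 5-point tolerance are assumptions rather than part of the study, and only the Elem3 misreading (20 instead of 80) comes from the text.

```python
from typing import Dict, Optional, Tuple


def find_misreadings(
    reported: Dict[str, float],
    actual: Dict[str, float],
    tolerance: float = 5.0,
) -> Dict[str, Tuple[Optional[float], float]]:
    """Return the elements whose reported value is missing or deviates from the true value.

    `tolerance` is in percentage points; it is an assumed threshold that
    accepts small visual-estimation differences.
    """
    problems = {}
    for element, true_value in actual.items():
        value = reported.get(element)
        if value is None or abs(value - true_value) > tolerance:
            problems[element] = (value, true_value)
    return problems


# Hypothetical values, except Elem3 (read as 20, actually 80), taken from the text.
actual = {"Elem1": 60.0, "Elem2": 45.0, "Elem3": 80.0}
reported = {"Elem1": 60.0, "Elem2": 47.0, "Elem3": 20.0}
print(find_misreadings(reported, actual))  # {'Elem3': (20.0, 80.0)}
```

A missing element (for instance, one of the last bars of a long bar chart that the model skipped) is reported with `None` as the read value.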

3.2 Round 2 - Eliminating Hallucinations

Taking the previous results into account, new prompts were generated. To solve the problem of GenAI not correctly reading the data from the image, the prompts included, in addition to the charts, text data about the assessment of the different elements (see Table 2). Providing this information in the prompt eliminated the previously observed hallucinations.

Table 2: Prompt including text data.

I am a teacher and the following bar chart shows the grades (expressed as a percentage (%)) on the assessable items (on the horizontal axis) for student adsikasle007 in the course ADSI. Could you make an interpretation of the data?
Customize the explanations with the name of the student and replacing the names on the x-axis of the graph with the names in the following list:
Elem.1 = Partial Exam 1, Elem.2 = Partial Exam 2, Elem.3 = Partial Exam 3, Elem.10 = Practical Part.
Please note in your explanations that the percentage grades for each element are:
Elem1 = 53
Elem2 = 75
Elem3 = 57
Elem10 = 68
In addition, the minimum grades for each of the elements are: Elem.1=0 Elem.2=0 Elem.3=0 elem.10=0
And the maximum ratings for each element are: Elem.1 = 10 elem.2 = 10 Elem.3 = 10 Elem.10 = 2.5

Since giving the data as text in the prompt solved the problem of hallucinations in the output, we also tested the output produced when only the text data was given and the chart was removed from the prompt. The analysis showed that providing both the text data and the image generated richer results.
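Because every plotted value is stated as text, the data-bearing part of the Round 2 prompt could be generated from the dashboard's own records instead of being typed by hand. The sketch below assembles text with the same structure as Table 2, using the values from that table; the function `build_grade_prompt` and its parameters are hypothetical, and the wording only approximates the prompt used in the study.

```python
def build_grade_prompt(
    student: str,
    course: str,
    labels: dict,
    grades_pct: dict,
    min_scores: dict,
    max_scores: dict,
) -> str:
    """Assemble a Table 2 style prompt that states every plotted value as text."""
    lines = [
        "I am a teacher and the following bar chart shows the grades (expressed as a "
        "percentage (%)) on the assessable items (on the horizontal axis) for student "
        f"{student} in the course {course}. Could you make an interpretation of the data?",
        "Customize the explanations with the name of the student and replacing the names "
        "on the x-axis of the graph with the names in the following list:",
        ", ".join(f"{key} = {name}" for key, name in labels.items()) + ".",
        "Please note in your explanations that the percentage grades for each element are:",
    ]
    # One line per element, e.g. "Elem.1 = 53".
    lines += [f"{key} = {value:g}" for key, value in grades_pct.items()]
    lines.append("In addition, the minimum grades for each of the elements are: "
                 + " ".join(f"{key}={value:g}" for key, value in min_scores.items()))
    lines.append("And the maximum ratings for each element are: "
                 + " ".join(f"{key} = {value:g}" for key, value in max_scores.items()))
    return "\n".join(lines)


elements = ["Elem.1", "Elem.2", "Elem.3", "Elem.10"]
prompt = build_grade_prompt(
    "adsikasle007",
    "ADSI",
    labels=dict(zip(elements, ["Partial Exam 1", "Partial Exam 2", "Partial Exam 3", "Practical Part"])),
    grades_pct=dict(zip(elements, [53, 75, 57, 68])),
    min_scores=dict.fromkeys(elements, 0),
    max_scores=dict(zip(elements, [10, 10, 10, 2.5])),
)
print(prompt)
```

Generating the text this way keeps the numbers in the prompt consistent with the chart image sent alongside it.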

3.3 Round 3 - Analysing and Improving Output

In this round, we used Prompt1 and Prompt2 from Table 1, enriched with the text data, and tested them with all the charts in Figure 1 and Figure 2.

When analysing the output, we found that it contained an element-by-element interpretation of the chart followed by a general interpretation. For the second prompt, the system also provided a set of suggested actions at the end. In addition, the system provided a general explanation of the chart type using the data in the image (see Table 3).


Table 3: General explanation for the bar chart generated by ChatGPT.

The chart represents the performance or evaluation results of a student (named adsikasle007) across four categories or "elements," labelled Elem. 1, Elem. 2, Elem. 3, and Elem. 10.
Here's a breakdown of the chart:
1. X-axis (Horizontal):
   - Represents "Elementos evaluables" (Evaluable elements), which are the different categories or components that the student was assessed on.
   - The four elements shown are Elem. 1, Elem. 2, Elem. 3, and Elem. 10.
2. Y-axis (Vertical):
   - Represents "Calificación expresada en %" (Scores expressed as percentages).
   - The percentage scale ranges from 0% to 100%, indicating the score achieved for each evaluable element.
3. Bars:
   - Each bar is coloured blue and represents the percentage score the student obtained in each evaluable element.
     - Elem. 1: Score of around 50%.
     - Elem. 2: Score of around 75%.
     - Elem. 3: Score of around 55%.
     - Elem. 10: Score of around 60%.
4. Legend:
   - The legend at the top shows the student's name, adsikasle007, which is associated with the blue colour of the bars.

The output shown in Table 3 can help with the problem of visual literacy. However, to avoid showing users information they already know, this explanation should not always be shown, but only until the teacher is able to understand the chart.
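Showing the general explanation only until the teacher understands the chart implies keeping some per-teacher state. One possible policy is sketched below, assuming the dashboard offers an explicit "I understand this chart type" action and that understanding is tracked per chart type rather than per chart; the class `ExplanationPolicy` and its methods are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ExplanationPolicy:
    """Track, per teacher, which chart types no longer need a general explanation."""

    # teacher id -> set of chart types (e.g. "bar", "radar", "violin") already understood
    understood: dict = field(default_factory=dict)

    def mark_understood(self, teacher: str, chart_type: str) -> None:
        # Assumed to be called when the teacher dismisses the explanation permanently.
        self.understood.setdefault(teacher, set()).add(chart_type)

    def show_general_explanation(self, teacher: str, chart_type: str) -> bool:
        # Offer the explanation until the teacher has marked this chart type as understood.
        return chart_type not in self.understood.get(teacher, set())


policy = ExplanationPolicy()
print(policy.show_general_explanation("teacher1", "violin"))  # True
policy.mark_understood("teacher1", "violin")
print(policy.show_general_explanation("teacher1", "violin"))  # False
print(policy.show_general_explanation("teacher1", "radar"))   # True
```

Keying the state by chart type reflects that visual literacy concerns how to read a kind of chart, so once a violin plot is understood, the general explanation would be suppressed for every violin plot in the dashboard.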

Moreover, we found that the output was very long and included information about different aspects, each of which could address a different problem teachers have when facing dashboards. Besides, some of the information provided is not always needed by teachers.

Therefore, during refinement, the prompts were divided to provide output for three aspects, each addressing a different problem:

- General explanation of the chart
- Interpretation of the chart: element by element and in general
- Conclusions and recommendations

The first will be used to facilitate reading the chart, the second to interpret the data, and the last to help determine when to provide interventions and of which type.

Considering this, the prompt engineering process continued with the divided prompts. With three different prompts, each one can be used only when required, according to the teacher's needs.
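Splitting the prompt into three aspects means that each dashboard button can request only the part the teacher needs. A minimal sketch is shown below, assuming a shared data block (such as the grade text of Table 2) followed by one instruction per aspect; the enum, the function `build_aspect_prompt` and the instruction wording are hypothetical and are not the exact prompts used in the study.

```python
from enum import Enum


class Aspect(Enum):
    EXPLANATION = "explanation"        # how to read this chart type
    INTERPRETATION = "interpretation"  # element by element, then in general
    CONCLUSIONS = "conclusions"        # conclusions and recommendations


# Hypothetical per-aspect instructions appended after the shared data block.
INSTRUCTIONS = {
    Aspect.EXPLANATION: "Explain how to read this type of chart, using the data in the image as an example.",
    Aspect.INTERPRETATION: "Give a detailed interpretation for each evaluable element and then a general "
                           "interpretation. Do not include improvement strategies.",
    Aspect.CONCLUSIONS: "Draw conclusions from the data and recommend possible interventions.",
}


def build_aspect_prompt(aspect: Aspect, data_block: str) -> str:
    """Combine the teacher context, the chart data and a single aspect instruction."""
    return "\n\n".join(["I am a teacher.", data_block, INSTRUCTIONS[aspect]])


print(build_aspect_prompt(Aspect.CONCLUSIONS, "Elem1 = 53, Elem2 = 75, Elem3 = 57, Elem10 = 68"))
```

Because only one instruction is included per request, the model is not asked for sections the teacher did not request, which addresses the output length problem observed in this round.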

3.4 Round 4 - Divided Prompt Analysis

In this round, separate prompts were created for the three aspects identified in the analysis of Round 3. The prompts were refined, their output was customized in order to obtain adequate answers from the GenAI, and context (indicating that the recipient of the output would be a teacher) was also provided.

Table 4 shows the prompt used for the interpretation aspect in the case of a violin plot that compares the results of a student with the results of the group the student belongs to.

Table 4: Prompt for the interpretation aspect of the comparison violin chart.

I am a teacher and this image refers to the grades obtained by a student, whose name is adsikasle007, and the averages obtained in his group cadsiaprobamos, in different evaluable elements of a subject in different evaluable elements of the subject ADSI. Make me an interpretation of the graph by personalizing the explanations with the name of the person and replacing the names that appear on the x-axis of the graph with those in the following list: Elem.1 = Partial Exam 1, Elem.2 = Partial Exam 2, Elem.3 = Partial Exam 3, Elem.10 = Practical Part. Note in your explanations that the student's percentage grades for each element are: Elem1 = 53, Elem2 = 75, Elem3 = 57, Elem10 = 68. Group % grades are: Elem.1 -> mean 39.50 and median 51.50, Elem.2 -> mean 37 and median 36.50, Elem.3 -> mean 32.67 and median 41, Elem.10 -> mean 68 and median 68. In addition, The minimum scores for each of the items are: Elem.1=0 Elem.2=0 Elem.3=0 elem.10=0 And the maximum ratings for each item are: Elem.1 = 10 elem.2 = 10 Elem.3 = 10 Elem.10 = 2.5.
I want the output with these two sections:
*Detailed interpretation-> in this section I want a personalized and detailed interpretation for each evaluable element. Put for each element an interpretation section, don't put a qualification section and in the appropriate cases make a comparison with the other elements.
*General interpretation-> in this section I want an interpretation in general, without going element by element. In this section I do not want strategies for improvement to appear.

As the results obtained were satisfactory according to the participating teachers, the prompt generation process stopped at this round, and the final results are discussed in the next section.
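The Table 4 prompt passes the group mean and median for each element rather than the raw distribution shown in the violin plot. A sketch of how those figures could be computed with Python's standard `statistics` module and formatted in the same style follows; the function `group_summary` and the grade lists are hypothetical and do not reproduce the group statistics reported in Table 4.

```python
from statistics import mean, median


def group_summary(group_grades: dict) -> str:
    """Format per-element mean and median in the style used by the Table 4 prompt."""
    parts = [
        f"{element} -> mean {mean(values):.2f} and median {median(values):.2f}"
        for element, values in group_grades.items()
    ]
    return "Group % grades are: " + ", ".join(parts) + "."


# Hypothetical group grades (percentages) for two elements.
grades = {"Elem.1": [0, 30, 50, 60, 70], "Elem.2": [20, 40, 40, 60]}
print(group_summary(grades))
# Group % grades are: Elem.1 -> mean 42.00 and median 50.00, Elem.2 -> mean 40.00 and median 40.00.
```

Supplying both statistics gives the model some information about the shape of the distribution: a mean well below the median, as for Elem.1 in Table 4 (39.50 versus 51.50), suggests that a few very low grades pull the average down.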


4 DISCUSSION

The explanations, interpretations and conclusions provided by ChatGPT in response to the prompts generated in the last round were analysed. The results and discussion of this analysis are presented in this section.

Regarding the explanation aspect, the output shown in Table 5 shows that the system explains what a violin chart is and also gives an example based on the data in the provided chart. The example description is not predefined or hard-coded and can therefore adapt to the context. This contextualizes the explanation and helps the teacher not only to understand the chart but also to improve part of their visual literacy, so that in the near future they may no longer need this kind of explanation. That is, this output provides a satisfactory answer to RQ1.

Table 5: Output related to explanation of the chart.

A violin plot is used to visualise the distribution of a data set. In this case, the graph shows the grades obtained in different assessable items, both by a group of students (blue bars) and by an individual student (red diamonds).
The width of the blue bars in the graph indicates the density of the grades, i.e. how many students obtained certain grades. The wider the bar at a specific point, the more students obtained that grade. The circle in the centre of each blue bar represents the group mean.
How to interpret this graph is explained below with a concrete example.
Let us imagine ‘Elem. 1’ (which corresponds to “Midterm 1”):
- The blue bar shows that most of the students in the group scored around 39.50 points.
- The red diamond indicates the grade obtained by the particular student, which is above the group average.
This means that the student scored higher than most of his or her peers in ‘Elem. 1’.
Similarly, you can look at the other assessable elements:
- ‘Elem. 2’ (Partial Exam 2) shows that the student scored well above the majority of his/her peers.
- ‘Elem. 3’ (Midterm 3) also shows a mark above the majority.
- ‘Elem. 10’ (Practical Part) indicates that the student's grade is in line with the majority of his or her peers.
In summary, this graph allows you to visually compare the performance of a particular student with the distribution of grades of the group in different assessments.

Regarding data interpretation, the GenAI was asked to produce output with two differentiated parts, as shown in Table 6: a detailed element-by-element interpretation and an overall interpretation of the chart.

Table 6: Output related to the interpretation aspect of the chart.

## Detailed interpretation

1. **Midterm 1 (Elem. 1)**
**Interpretation**: adsikasle007 scored 53%, equivalent to **5.3 points out of 10**. This result reflects a moderate performance in the first partial exam, being the lowest in comparison to the other assessable items, suggesting difficulties in this first assessment.

2. **Midterm Exam 2 (Element 2)**
**Interpretation**: In Partial Exam 2, adsikasle007 achieved 75%, i.e. **7.5 points out of 10**. This is adsikasle007's best performance among the midterm exams, showing significant progress from Midterm 1, with a stronger understanding of the content.

3. **Midterm Exam 3 (Elem. 3)**
**Interpretation**: In Midterm 3, adsikasle007 scored 57%, which corresponds to **5.7 points out of 10**. Although slightly better than in the first midterm, this result is lower than in the second midterm, showing some inconsistency in theoretical performance.

4. **Practical Part (Elem. 10)**
**Interpretation**: In the Practical Part, adsikasle007 scored 68%, equivalent to **1.7 points out of 2.5**. This result shows a good practical ability, with a performance that is close to the result of Partial Exam 2. However, there is a small difference between theoretical and practical performance.

### Overall interpretation

Overall, adsikasle007 has shown variable performance in the different assessable elements of the ADSI subject. The **Midterm 2** stands out as his best result with 75%, while **Midterm 1** shows the lowest performance with 53%. Performance in the **Practical Part** is positive at 68%, suggesting that adsikasle007 can effectively apply theoretical knowledge. Although there are fluctuations in the midterm grades, overall, adsikasle007 has shown satisfactory performance.

This two-part structure allows the system to provide both a detailed element-by-element interpretation and an overall interpretation, creating richer explanations that are adapted to each type of chart and to the data displayed in it.
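The point values in Table 6 follow from scaling each percentage by the element's maximum score given in the prompt; for example, 68% of 2.5 gives 1.7 points for the Practical Part. Since such derived figures are exactly where a model could slip, a teacher-side check could recompute them. The sketch below does this for the values stated in Table 6; the function name `to_points` and the 0.05 tolerance (allowing for one-decimal rounding) are assumptions.

```python
def to_points(percentage: float, max_score: float) -> float:
    """Convert a percentage grade into points on the element's own scale."""
    return round(percentage / 100 * max_score, 2)


max_scores = {"Elem.1": 10, "Elem.2": 10, "Elem.3": 10, "Elem.10": 2.5}
grades_pct = {"Elem.1": 53, "Elem.2": 75, "Elem.3": 57, "Elem.10": 68}

# Point values stated by the model in Table 6.
claimed = {"Elem.1": 5.3, "Elem.2": 7.5, "Elem.3": 5.7, "Elem.10": 1.7}

mismatches = [
    element
    for element, points in claimed.items()
    if abs(to_points(grades_pct[element], max_scores[element]) - points) > 0.05
]
print(mismatches)  # []
```

An empty list means that every point value in the interpretation agrees with the data supplied in the prompt.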

Regarding the conclusions, the output obtained is shown in Table 7. The output proposes some recommendations that teachers can take into account. That is, this output can help teachers understand what is happening and also recommend decisions to improve the course based on the collected data.


Table 7: Output related to the conclusions of the chart.

The conclusions that can be drawn from the graphical data reflect adsikasle007's generally above-average performance in the “Cadsi Aprobamos” group, especially in three of the four assessable items. This indicates a good overall performance of the student in comparison to his group peers, with one particular exception.

1. **Use Case Test (Elem. 1)**: The student adsikasle007 scores 53%, outperforming the group, which scores an average of 39%. This suggests a relatively solid knowledge in this topic, although he could benefit from reinforcement in certain aspects to achieve an even higher score.

2. **Domain Model Test (Element 2)**: This is one of the strengths of adsikasle007, who scores 75%, significantly above the 37% of the group. Here the student shows a deep understanding of the topic, clearly standing out above the average.

3. **Analysis and Design Test (Element 3)**: With 57%, adsikasle007 also outperforms the group (32%), but this score still reflects an opportunity for improvement. In this assessable element, both the student and the group could use more focus on the concepts of analysis and design.

4. **Final practical documentation (Elem. 10)**: In this case, adsikasle007 is exactly in line with the group average (68%). Despite being in line with his peers, there is room for improvement to reach the maximum score.

**Recommendation**: Although adsikasle007 performs quite well, particularly in the Domain Model Exam, it would be useful for him to strengthen his knowledge in analysis and design. In addition, perfecting the final Documentation could guarantee him an even higher score.

The output provided for both the interpretation and conclusion aspects facilitates interpreting the charts and deciding whether or not to provide interventions. Moreover, the output proposes some interventions, so it facilitates both interpretation and decision-making, which answers RQ2.

5 CONCLUSIONS

LA dashboards are widely used, but teachers need tools that facilitate their understanding and interpretation of the data in order to provide adequate, contextualized interventions to students. The work presented in this paper analyses whether enriching LA dashboards with GenAI can address the current problems of LA dashboards.

We carried out a prompt engineering process to determine which type of output could be generated to facilitate understanding the charts (RQ1), and to facilitate their interpretation and the decision-making process based on that interpretation (RQ2).

According to the participating teachers' opinion, we obtained quite good results for both research questions. In the near future, we plan to carry out a study showing teachers the enriched dashboards, in order to evaluate in a real context whether the interpretations and conclusions regarding the charts shown in the enriched dashboard really make it easier for them to make decisions about their courses.

We also plan to include in the dashboard an option for teachers to create their own prompts and ask GenAI questions directly about the charts in the dashboard.

ACKNOWLEDGEMENTS

This work was partially funded by the Department of Education, Universities and Research of the Basque Government (ADIAN, IT-1437-22) and grant RED2022-134284-T.

REFERENCES

Clow, D. (2012). The learning analytics cycle: Closing the loop effectively. Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, 134–138. https://doi.org/10.1145/2330601.2330636

Echeverria, V., Martinez-Maldonado, R., Shum, S. B., Chiluiza, K., Granda, R., & Conati, C. (2018). Exploratory versus Explanatory Visual Learning Analytics: Driving Teachers’ Attention through Educational Data Storytelling. Journal of Learning Analytics, 5(3), Article 3. https://doi.org/10.18608/jla.2018.53.6

Gašević, D., & Merceron, A. (2022). The Handbook of Learning Analytics (C. Lang, G. Siemens, & A. F. Wise, Eds.; 2nd ed.). SOLAR. https://doi.org/10.18608/hla22

Lo, L. S. (2023). The CLEAR path: A framework for enhancing information literacy through prompt engineering. The Journal of Academic Librarianship, 49(4), 102720. https://doi.org/10.1016/j.acalib.2023.102720

Matcha, W., Uzir, N. A., Gašević, D., & Pardo, A. (2020). A Systematic Review of Empirical Studies on Learning Analytics Dashboards: A Self-Regulated Learning Perspective. IEEE Transactions on Learning Technologies, 13(2), 226–245. https://doi.org/10.1109/TLT.2019.2916802


OpenAI. (2024). Six strategies for getting better results. https://platform.openai.com/docs/guides/prompt-engineering

Pinargote, A., Calderón, E., Cevallos, K., Carrillo, G., Chiluiza, K., & Echeverria, V. (2024). Automating data narratives in Learning Analytics Dashboards using GenAI. 2024 Joint of International Conference on Learning Analytics and Knowledge Workshops, 150–161. https://research.monash.edu/en/publications/automating-data-narratives-in-learning-analytics-dashboards-using

Pozdniakov, S., Martinez-Maldonado, R., Tsai, Y.-S., Echeverria, V., Srivastava, N., & Gasevic, D. (2023). How Do Teachers Use Dashboards Enhanced with Data Storytelling Elements According to their Data Visualisation Literacy Skills? LAK23: 13th International Learning Analytics and Knowledge Conference, 89–99. https://doi.org/10.1145/3576050.3576063

Villamane, M., Alvarez, A., & Larranaga, M. (2020). CASA: An Architecture to Support Complex Assessment Scenarios. IEEE Access, 8, 14195–14206. https://doi.org/10.1109/access.2020.2966595

Yan, L., Martinez-Maldonado, R., & Gasevic, D. (2024). Generative Artificial Intelligence in Learning Analytics: Contextualising Opportunities and Challenges through the Learning Analytics Cycle. Proceedings of the 14th Learning Analytics and Knowledge Conference, 101–111. https://doi.org/10.1145/3636555.3636856
