SWeeTComp: A Framework for Software Testing Competency Assessment

Nayane Maia [1,2,a], Ana Carolina Oran [1,b] and Bruno Gadelha [1,c]

[1] Institute of Computing, Federal University of Amazonas (UFAM), CEP 69080-900, Manaus, AM, Brazil

[2] Eldorado Research Institute, CEP 69057-000, Manaus, AM, Brazil

Keywords:

Software Testing, Soft Skill, Hard Skill, Software Engineering Competencies, Competency Assessment.

Abstract:

The quality of the process and product is critical for competitiveness in the software industry. Software testing,

which spans all development phases, is essential to assess product quality. This requires testing professionals

to master various technical and general skills. To address the competency gap in testing teams, a competency

assessment of all team members is necessary. In response, SWeeTComp (A Framework for Software Testing

Competency Assessment) was developed as a self-assessment tool to identify competency gaps. A study

with 22 participants from a Software Engineering course at the Federal University of Amazonas evaluated

SWeeTComp’s effectiveness in identifying competencies and gaps. Participants also provided feedback on its

usability and effectiveness. Results show that SWeeTComp helped participants identify their strengths and

weaknesses. Feedback was positive, though areas for improvement, such as clearer instructions and more

detailed feedback, were noted.

1 INTRODUCTION

The software industry continually evolves to meet

market demands, emphasizing the need for high-

quality products. Developing such products requires

specific skills and best practices across development

and operation phases (Casale et al., 2016). Software

testing is crucial for assessing product quality and

spans all stages of the development lifecycle (Press-

man and Maxim, 2021). With recent technologi-

cal advancements and the demand for rapid, reliable

delivery, the complexity of software testing has in-

creased (Valle et al., 2023), highlighting the impor-

tance of assessing the competencies of testing pro-

fessionals to ensure effective processes and product

quality (Maia et al., 2023).

Competency in software engineering encompasses the knowledge, skills, and attitudes required for development tasks. The Software Engineering Competency Model (SWECOM) (IEEE, 2014) and the Software Engineering Body of Knowledge (SWEBOK) (IEEE, 2024) categorize competencies into cognitive skills, behavioral attributes, and technical skills. In software testing, technical competencies include areas such as test planning, infrastructure, techniques, and defect tracking. This study focuses on a defined set of technical competencies, organized into specific categories and activities, detailed further in the supplementary material (Maia et al., 2024).

[a] https://orcid.org/0000-0003-3642-4772

[b] https://orcid.org/0000-0002-6446-7510

[c] https://orcid.org/0000-0001-7007-5209

Effective software testing depends on the skills,

intuition, and experience of testers (Kaner et al.,

2011). Competency management must address both

technical and non-technical skills (Pereira et al.,

2010). Assigning tasks to unqualified teams risks

compromising results and causing delays due to re-

work (Marques et al., 2013). Proper skill allocation

is critical for project success (Ahmed et al., 2015),

and a well-managed testing process directly influences product quality (Pressman and Maxim, 2021; Juristo et al., 2004).

Challenges in understanding role responsibilities

in the Competency Mapping Model, as discussed by Maia et al. (2023), highlighted the increasing flexibility in organizational structures, where rigid role classifications are less common. This identified the need

for a new framework to address these gaps, leading to

the development of SWeeTComp.

This study introduces SWeeTComp (Software Testing Competency Assessment Framework), created to support self-assessment and identify gaps in software testing competencies. Building on prior research comparing expected and actual competency levels (Maia et al., 2023), the framework adapts the set of competencies described in SWECOM (IEEE, 2014) to accommodate larger and more diverse testing teams. It addresses challenges such as aligning competencies with team roles and defining appropriate competency levels within organizational contexts. The framework categorizes technical competencies into specific domains and activities.

Maia, N., Oran, A. C. and Gadelha, B.

SWeeTComp: A Framework for Software Testing Competency Assessment.

DOI: 10.5220/0013276600003929

In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 2, pages 185-192

ISBN: 978-989-758-749-8; ISSN: 2184-4992

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

This article analyzes user perceptions of SWeeT-

Comp, focusing on its usefulness, ease of use, work-

place relevance, and the quality of results. It also

identifies challenges faced by users and suggests op-

portunities for improvement. The study contributes to

enhancing software testing practices by aligning indi-

vidual and organizational competencies with industry

needs.

2 METHODOLOGY

This research employed a combination of qualitative

and quantitative analyses to obtain a comprehensive

understanding of the user experience with SWeeT-

Comp. The study identified successful aspects, areas

requiring improvement, and challenges perceived by

participants. This integrated approach aims to inform

continuous enhancements and optimizations, ensur-

ing the tool’s perceived utility aligns with users’ ex-

pectations and needs.

To assess user acceptance of SWeeTComp, this

research adopted the Technology Acceptance Model

(TAM) (Davis et al., 1989), which is widely recog-

nized as one of the most frequently employed frame-

works for analyzing technology acceptance among

users.

2.1 Questionnaire Specification

The questionnaire was structured into three sections:

(i) Sociodemographic characterization, designed to

profile the participants; (ii) Self-assessment of tech-

nical competency levels corresponding to each phase

of the testing process; and (iii) Evaluation of the

proposed framework’s acceptance using the Technol-

ogy Acceptance Model (TAM). Additional details are

provided in the supplementary material (Maia et al.,

2024).
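The third section of the questionnaire can be pictured as a mapping from acceptance constructs to item identifiers. The sketch below is only an illustration: the grouping follows the subsection titles and question labels (Q1 to Q15) used in Section 4, while the dictionary name `TAM_ITEMS` and the helper `construct_of` are hypothetical and not part of SWeeTComp.

```python
# Constructs and item identifiers as grouped in the results section.
# The names TAM_ITEMS and construct_of are illustrative assumptions.
TAM_ITEMS = {
    "Perceived Usefulness": ["Q1"],
    "Perceived Ease of Use": ["Q2", "Q3", "Q4", "Q5"],
    "Usage Intent": ["Q6"],
    "Relevance at Work": ["Q7", "Q8"],
    "Quality of Results": ["Q9", "Q10", "Q11"],
    "Demonstrability of Results": ["Q12", "Q13", "Q14", "Q15"],
}


def construct_of(item_id: str) -> str:
    """Return the construct that a questionnaire item belongs to."""
    for construct, items in TAM_ITEMS.items():
        if item_id in items:
            return construct
    raise KeyError(item_id)


total_items = sum(len(items) for items in TAM_ITEMS.values())
print(total_items)
print(construct_of("Q7"))
```

Keeping the mapping in one place makes it straightforward to report results per construct rather than per question.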

2.2 Data Collection

This study involved undergraduate students from a

Software Engineering program at the Federal University of Amazonas, focusing on their perceptions of

SWeeTComp as a framework for software testing

competency assessment. Data was collected in the

classroom during the Verification, Validation, and

Testing course, taught to fifth-semester students.

All participants voluntarily agreed to participate

in the study by signing an Informed Consent Form

(ICF), ensuring the confidentiality of the data pro-

vided. Participant names were included in the forms

to facilitate the analysis of their competency levels at

the beginning and end of the course. The competency assessment questionnaire was re-administered

at the conclusion of the course to track and evaluate

the progression of competency levels over time.

The study involved 22 participants, the majority of

whom were between 18 and 24 years old (77.27%). In

terms of gender distribution, the sample was predominantly male (68.18%). Regarding prior experience, 40.91% of participants reported no experience in software development, while 22.73% indicated having industry experience specifically related to software testing.

When evaluating prior knowledge of software

testing, 50% of participants stated they had no knowl-

edge beyond what was acquired during the course.

However, 31.82% reported some level of industry experience, and 27.27% mentioned exposure to the topic

through academic activities outside this course.
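Because the sample has 22 participants, each reported percentage corresponds to a whole number of respondents. The sketch below recovers those counts from three of the reported figures. The counts are inferred from the percentages and are not reported in the study, and the helper names `count_from_percentage` and `percentage` are hypothetical.

```python
N_PARTICIPANTS = 22  # sample size reported in the study


def count_from_percentage(pct: float, n: int = N_PARTICIPANTS) -> int:
    """Return the whole number of respondents closest to pct percent of n."""
    return round(pct * n / 100)


def percentage(count: int, n: int = N_PARTICIPANTS) -> float:
    """Return count as a percentage of n, rounded to two decimals."""
    return round(100 * count / n, 2)


# Percentages as reported in the text; the counts are derived, not reported.
reported = {
    "aged 18 to 24": 77.27,
    "male": 68.18,
    "no software development experience": 40.91,
}

for label, pct in reported.items():
    k = count_from_percentage(pct)
    print(f"{label}: {pct}% is about {k} of {N_PARTICIPANTS} ({percentage(k)}%)")
```

With a sample this small, one respondent accounts for roughly 4.55 percentage points, which is worth keeping in mind when reading the distributions in the results.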

3 EVALUATED ARTIFACT: THE SWeeTComp FRAMEWORK

SWeeTComp (A Framework for Software Testing Competency Assessment) incorporates technical competencies relevant to the software tester role, aligned with the core activities of the software testing process: Software Testing Planning, Software Testing Infrastructure, Software Testing Techniques, Software Testing Measurement, and Defect Tracking. SWeeTComp allows users to assign knowledge levels to each competency presented in a questionnaire.

In this research, we adopted the definition of

framework according to the Cambridge Dictionary

(Cambridge University, 2025), which describes it as

a supporting structure around which something can

be built and a system of rules, ideas, or beliefs used to

plan or decide something. Frameworks are often used

as tools to address specific issues within a domain.

They provide support in decision-making by present-

ing organized processes, procedures, techniques, and

tools, offering structured options as potential solu-

tions (Shehabuddeen et al., 2000).

The main objective of this framework is to systematize the assessment of competencies within a software testing team, considering the most required technical skills for the software tester role available in SWECOM (IEEE, 2014). By evaluating competency levels in the product quality assurance process, the aim is to efficiently identify expertise that may not have been previously recognized, as well as gaps that can be developed according to project needs. This approach is intended to enhance team productivity, improve the quality of both the process and the product, and improve skills management. The full version of the SWeeTComp framework is available in the supplementary material (Maia et al., 2024).

To develop SWeeTComp, we conducted a gap analysis between the technical competencies of the framework by Saldaña-Ramos et al. (2012) and the technical testing competencies of SWECOM. The goal was to identify potential gaps between the competencies outlined in both artifacts. We observed that the competencies listed in SWECOM encompassed all the technical competencies from Saldaña-Ramos et al. (2012). Therefore, SWeeTComp is based solely on the technical competencies extracted from SWECOM, organized into four sections: Test Planning (17 questions), Test Infrastructure (12 questions), Test Techniques (12 questions), and Measurement and Defect Tracking (15 questions). Each section contains competencies aligned with software tester roles, and professionals assign a competency level to each one.

Table 1: Competency Levels Assessment.

Competency Level       Meaning
Follow                 Performs the activity following instructions
Watch/Assist           Performs the activity under supervision
Participate/Execute    Performs the activity independently
Lead/Conduct           Supervises and/or leads the activities
Create                 Responsible for creating new approaches and solutions

The main differences between the two artifacts, in addition to the total number of competencies, are in the format of the questions and the response options. SWECOM presents competencies through statements already linked to their levels, with responses limited to ‘Has’, ‘Needs’, and ‘Lacks’, whereas SWeeTComp presents competencies in the form of questions, with options related to the level of mastery that the professional must attribute to each one. In short, SWECOM seeks to identify the presence or absence of competencies, while SWeeTComp aims to assess the level of mastery of each competency by the professional, as shown in Table 1.
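To make the level-based assessment concrete, the following sketch models the five levels of Table 1 and the four questionnaire sections, and compares self-assessed levels against expected ones. It rests on several assumptions that are not stated in the paper: the levels are treated as an ordinal scale in the order of Table 1, a gap is computed as the difference between expected and self-assessed level, and the competency names and the function `competency_gaps` are hypothetical.

```python
from enum import IntEnum


class Level(IntEnum):
    # Order follows Table 1; treating it as an ordinal scale is an assumption.
    FOLLOW = 1
    WATCH_ASSIST = 2
    PARTICIPATE_EXECUTE = 3
    LEAD_CONDUCT = 4
    CREATE = 5


# Number of questions per section, as reported for SWeeTComp.
SECTIONS = {
    "Test Planning": 17,
    "Test Infrastructure": 12,
    "Test Techniques": 12,
    "Measurement and Defect Tracking": 15,
}


def competency_gaps(expected: dict, actual: dict) -> dict:
    """Return competencies assessed below the expected level, with the gap size."""
    return {
        name: int(expected[name] - actual[name])
        for name in expected
        if actual[name] < expected[name]
    }


# Hypothetical competencies and levels, for illustration only.
expected = {
    "Design test cases": Level.PARTICIPATE_EXECUTE,
    "Report defects": Level.PARTICIPATE_EXECUTE,
}
actual = {
    "Design test cases": Level.WATCH_ASSIST,
    "Report defects": Level.LEAD_CONDUCT,
}

print(sum(SECTIONS.values()))
print(competency_gaps(expected, actual))
```

In this illustration, a competency assessed above the expected level is not reported as a gap; it would instead correspond to expertise that had not been previously recognized.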

4 RESULTS AND DISCUSSIONS

This section presents the results and discussion of the data collected during the empirical

study using all artifacts described in Section 3. We

present the findings from the responses provided by

the participants (students in the role of testers) to the

post-study questionnaire.

4.1 Analysis of SWeeTComp’s Utility Perception

Figure 1 illustrates the participants’ perceptions, ad-

dressing the following question:

Q1. I find SWeeTComp useful in identifying my skill level in activities related to software testing: The largest share of respondents (45.45%) strongly agree that SWeeTComp is useful for assessing competencies in software testing activities, which indicates that many users consider it a valuable tool for this purpose. In addition, 22.73% partially agree and another 22.73% fully agree with its usefulness. However, neutral responses (4.55%) and partial disagreement (4.55%) suggest that some users are uncertain or unconvinced about its effectiveness. The qualitative responses reflect diverse perceptions, from positive feedback to concerns about clarity and applicability, and integrating these insights into tool development can enhance user satisfaction. Some participants find that SWeeTComp aligns with their expectations, facilitating competency assessment and matching their skills. Areas for improvement include the clarity of terms and questions, the connection with specific work tasks, and the communication of the tool’s benefits. Participants’ experience and knowledge significantly influence their perception of SWeeTComp’s utility: some find it beneficial based on their existing knowledge, while others express disinterest due to limited involvement in testing.

Figure 1: SWeeTComp - Perceived Usefulness.


4.2 Analysis of SWeeTComp’s Ease of Use Perception

Figure 2 illustrates the participants’ perceptions, aim-

ing to address the following questions:

Q2. My interaction with SWeeTComp is clear and understandable: The largest share of participants (31.82%) strongly agree that the interaction with SWeeTComp is clear and understandable, indicating a positive perception. A further 18.18% partially agree, suggesting overall positivity with room for improvement. Neutral responses (13.64%) indicate ambivalence or indecision about the clarity of the interaction. Disagreement (9.09% strongly disagree and 13.64% partially disagree) shows that a minority perceives the interaction as unclear. Qualitative responses offer diverse perspectives on clarity, pointing to both positive aspects and areas for improvement in usability and communication. These insights guide future adjustments: positive feedback indicates satisfaction, while neutral and dissenting responses identify opportunities for improvement. The overall suggestion is to enhance clarity in communication and interaction, possibly through improvements in documentation, instructions, or interfaces, for a more intuitive user experience.

Figure 2: SWeeTComp - Perceived Ease of Use.

Q3. Interacting with SWeeTComp does not require much mental effort: The largest share of participants (36.36%) only partially agree that interacting with SWeeTComp does not require much mental effort, which suggests that some effort is still perceived. A further 18.18% partially disagree, suggesting that these participants find the interaction mentally demanding. Neutral responses (4.55%) and stronger disagreement (9.09% Completely Disagree and 9.09% Strongly Disagree) show a division among participants regarding the ease of interaction. With partial agreement being the most frequent response, many participants acknowledge some level of mental effort. This diversity underscores the importance of considering different user experiences and needs when designing and enhancing SWeeTComp’s usability. Responses indicating mental effort suggest opportunities to improve SWeeTComp’s interface or design to reduce cognitive load, and feedback on mental effort can identify specific areas for simplification. The varied responses also suggest that ease of interaction may depend on users’ individual experience and knowledge. Qualitative insights highlight potential improvements, including language simplification, questionnaire length reduction, and instruction clarification.

Q4. I find SWeeTComp easy to use: The largest share of participants (36.36%) strongly agree that SWeeTComp is easy to use, and 27.27% partially agree, indicating an overall positive view with room for improvement. The remaining reported responses (4.55% neutral, 4.55% strongly disagree, and 18.18% partially disagree) suggest that some participants do not find SWeeTComp easy to use. Most participants therefore recognize SWeeTComp’s usability, while the neutral and disagreeing responses highlight areas for improvement. Perceptions of ease of use may vary based on individual factors. Qualitative insights reveal strengths such as language clarity and simplicity, along with areas for improvement such as formatting and the intuitiveness of the “not applicable” option. These insights will guide adjustments to the SWeeTComp interface.

Q5. The questions in SWeeTComp are easy to understand: The largest share of participants (40.91%) partially disagree that SWeeTComp questions are easy to understand, indicating a significant perception of complexity. A considerable proportion (31.82%) partially agrees, suggesting that these participants find the questions understandable to some extent but face challenges in other aspects. The low share of neutral responses (4.55%) indicates that most participants hold a clear opinion on question comprehension. The remaining responses are split between 9.09% who strongly disagree and 13.64% who strongly agree. Taken together, partial and strong disagreement account for half of the responses, indicating a need to revise the questions. Specific areas for improvement include clarity, conciseness, and language in question formulation. The varied responses suggest that perceptions may depend on users’ experience, knowledge, and contextual factors. Qualitative insights point to the main obstacles to understanding the questions: lack of experience, limited technical knowledge, and unclear wording.


4.3 Analysis of SWeeTComp’s Usage Intent Perception

Figure 3 illustrates the participants’ perceptions, addressing the following question:

Figure 3: SWeeTComp - Usage Intent.

Q6. Assuming I had access to SWeeTComp, I intend to use it in the future: A substantial share of participants (27.27%) are neutral about using SWeeTComp in the future. Positive intent (Partially Agree and Completely Agree) accounts for 50% of responses, meaning that half of the participants show some interest in using the tool in the future. Strong disagreement is minimal (4.55%), indicating that only a small fraction of participants clearly lack interest. Overall, the analysis reveals diverse levels of interest in future use of SWeeTComp. Exploring the reasons behind the neutral responses and communicating the tool’s benefits more clearly could increase the intention to use it. The qualitative responses present various perspectives on this intention, with recurring themes such as the absence of a personal use case, context dependence, and professional relevance.

4.4 Analysis of SWeeTComp’s Relevance at Work Perception

Figure 4 illustrates the participants’ perceptions, aim-

ing to address the following questions:

Q7. In identifying competency levels in activities related to software testing, using SWeeTComp is important: The majority of participants (86.36%) agree to varying degrees that using SWeeTComp is important for identifying competencies in software testing. Specifically, 22.73% partially agree, 22.73% completely agree, and 40.91% strongly agree. Conversely, 4.55% of participants partially disagree with the tool’s importance, and 9.09% maintain a neutral stance.

Figure 4: SWeeTComp - Relevance at Work.

These qualitative justifications offer diverse perspec-

tives on SWeeTComp’s perceived importance in com-

petency identification, emphasizing its utility, scope,

and effectiveness. Additionally, they acknowledge the

existence of alternative methods for measuring com-

petencies.
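The aggregate agreement figure for Q7 can be reproduced by grouping the response options into disagreement, neutral, and agreement. The sketch below is a minimal illustration: the per-option counts are inferred from the reported percentages and the sample size of 22 (they are not reported directly), and the function name `summarize` is hypothetical.

```python
# Q7 responses as counts out of 22 participants. The counts are inferred from
# the reported percentages (4.55%, 9.09%, 22.73%, 40.91%, 22.73%).
q7_counts = {
    "Partially Disagree": 1,
    "Neutral": 2,
    "Partially Agree": 5,
    "Strongly Agree": 9,
    "Completely Agree": 5,
}

AGREE = {"Partially Agree", "Strongly Agree", "Completely Agree"}
DISAGREE = {"Partially Disagree", "Strongly Disagree", "Completely Disagree"}


def summarize(counts: dict) -> dict:
    """Collapse response options into disagree / neutral / agree percentages."""
    total = sum(counts.values())
    groups = {"disagree": 0, "neutral": 0, "agree": 0}
    for option, count in counts.items():
        if option in AGREE:
            groups["agree"] += count
        elif option in DISAGREE:
            groups["disagree"] += count
        else:
            groups["neutral"] += count
    return {group: round(100 * c / total, 2) for group, c in groups.items()}


summary = summarize(q7_counts)
print(summary)
```

Working from counts rather than from the rounded percentages avoids small rounding drift when the shares are added together.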

Q8. In identifying the level of competencies in software testing-related activities, using SWeeTComp is relevant: The majority of participants find SWeeTComp highly relevant for assessing competencies in software testing. “Strongly Agree” responses are the most frequent, at 36.36%, followed by “Completely Agree”, at 31.82%. Together, these responses exceed 68%, demonstrating a high perception of the tool’s relevance. Qualitative justifications highlight its utility, organization, scope, and effectiveness while recognizing the importance of considering different contexts and technologies.

4.5 Analysis of SWeeTComp’s Quality of Results Perception

Figure 5 illustrates participants’ perceptions, mainly

showing positive views, with only one negative per-

ception regarding the quality of SWeeTComp.

Figure 5: SWeeTComp - Quality of Results.

Q9. The quality of the results I obtain from SWeeTComp is high: The neutral option received the highest percentage of responses (50%), indicating that many participants lack a clear opinion on the quality of SWeeTComp results. A significant portion (36.36%) partially agrees, suggesting recognition of some value but with reservations. The Completely Disagree, Strongly Agree, and Completely Agree options received low percentages (4.55% each), indicating that few participants expressed strong opinions. The neutral perception may reflect a lack of clarity about the evaluation criteria or varied experience with SWeeTComp results. Participants emphasized the need for more detailed feedback to improve perceived result quality, and both positive and negative responses point to strengths and areas for improvement. Qualitative responses reflect diverse perceptions and challenges, suggesting improvements in communication, result delivery, and guidance on interpreting and using the results effectively.

Q10. I have no issues with the quality of SWeeTComp results: The neutral option received the highest percentage of responses (50%), indicating that many participants did not express a firm opinion about result quality. Partially Agree and Strongly Agree together (31.82%) show that a significant portion accepts the results, recognizing some value, although with possible reservations. Completely Disagree and Completely Agree (9.09% each) received relatively low percentages, suggesting that only a minority expressed strong opinions, possibly influenced by factors such as understanding of the evaluation criteria and previous experience with similar tools. The high percentage of neutral responses suggests potential ambiguity in the evaluation criteria or varied experience with SWeeTComp results. Participants highlighted a need for improved communication and transparency in delivering and interpreting the results. Lack of familiarity with the evaluation criteria can affect how accurately participants judge result quality, so additional support and guidance for users are crucial for maximizing the benefits of SWeeTComp results.

Q11. I rate the results of SWeeTComp as excellent for identifying the level of competencies in activities related to software testing: Half of the participants (50%) chose the neutral option, indicating uncertainty or a lack of clarity about the results. On the negative side, 4.55% strongly disagreed, possibly indicating skepticism, and another 4.55% partially disagreed, suggesting reservations. On the positive side, 13.64% partially agreed, acknowledging merit with reservations; 18.18% strongly agreed, indicating belief in SWeeTComp’s effectiveness; and 9.09% completely agreed, signifying trust in its capability. The spread across the agreement categories suggests varying levels of conviction among users. The low level of disagreement implies overall acceptance. Concerns about access to results highlight the need for clear result delivery, and the prevailing uncertainty underscores the importance of an intuitive interface and clear instructions. Despite these concerns, the positive responses suggest that the tool offers value and would benefit from enhancements.

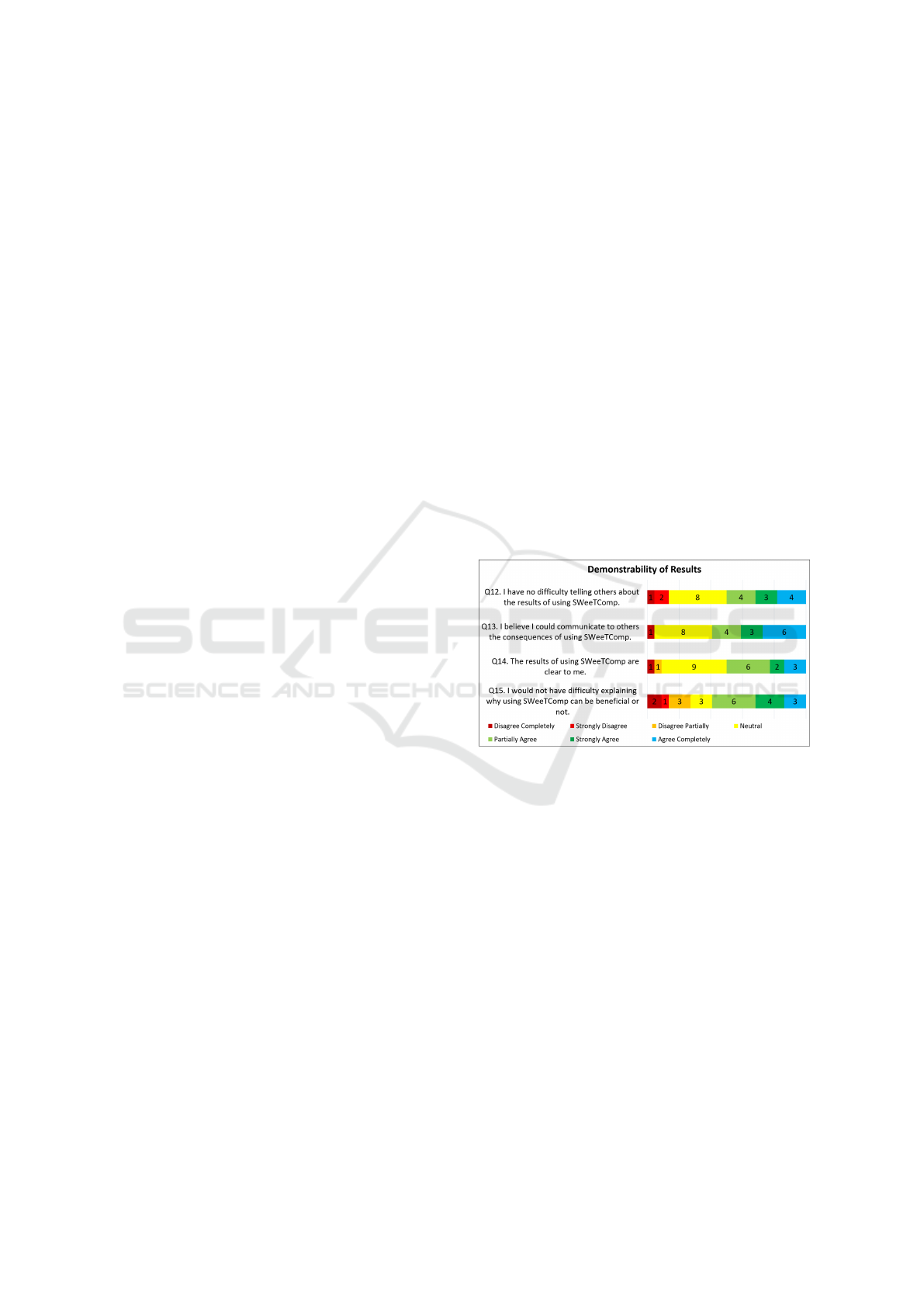

4.6 Analysis of SWeeTComp’s Demonstrability of Results Perception

Figure 6 illustrates the participants’ perceptions, pre-

dominantly reflecting positive views, with only one

negative perception regarding the demonstrability of

SWeeTComp.

Figure 6: SWeeTComp - Demonstrability of Results.

Q12. I have no difficulty telling others about the results of using SWeeTComp: The largest group (36.36%) took a neutral stance, indicating an ambiguous view or no definitive opinion on SWeeTComp’s demonstrability. A substantial portion (18.18%) partially agrees, acknowledging positive aspects and areas for improvement. Another 18.18% completely agrees, suggesting that some users find it easy to demonstrate SWeeTComp results, and 13.64% strongly agrees, indicating a positive perception among these participants. A few participants (4.55%) completely disagree, possibly reflecting a lack of clarity or experience in presenting results, and a slightly larger group (9.09%) strongly disagrees, pointing to improvements needed in the presentation or accessibility of results. Notably, no participant expressed partial disagreement. The prevalence of neutral responses suggests an opportunity to improve communication about SWeeTComp’s ability to present results. The disagreement responses highlight specific areas needing adjustment to better align with user expectations, particularly regarding feedback to the user.

Q13. I believe I could communicate to others the consequences of using SWeeTComp: The most frequent single response was neutral (36.36%), suggesting that many participants do not feel entirely confident in explaining the tool’s effects. Even so, a majority agrees to varying degrees (18.18% Partially + 13.64% Strongly + 27.27% Completely) that they could communicate the consequences of using SWeeTComp. These participants express confidence in their ability to explain the tool’s implications, a positive indication of understanding and effective communication about SWeeTComp results. Complete disagreement is relatively low (4.55%), indicating that only a small percentage of participants reject the statement outright.

Q14. The results of using SWeeTComp are evident to me: The largest share of participants (40.91%) were neutral about how evident SWeeTComp results are, showing uncertainty. A substantial portion agreed to various extents (27.27% Partially + 13.64% Completely), suggesting perceived effectiveness. Complete and partial disagreement was relatively low (4.55% Completely + 4.55% Partially). This suggests that most participants, even if neutral, do not disagree. The insights provided point to areas needing clarification or improvement in the communication and presentation of SWeeTComp results, highlighting the importance of clarity and understandability for user adoption. Participant justifications underscore the significance of experience, context, and clarity in presenting results, guiding improvements for enhanced demonstrability.

Q15. I would not have difficulty explaining why using SWeeTComp can be beneficial or not: A majority agrees, to varying degrees (27.27% Partially + 18.18% Strongly + 13.64% Completely), that they would not have difficulty explaining the potential benefits of using SWeeTComp. These participants expressed confidence in their ability to articulate the advantages of the tool. Disagreement is lower (9.09% Completely + 4.55% Strongly + 13.64% Partially), indicating that a minority would find the benefits hard to explain. A further 13.64% adopted a neutral stance, possibly due to a lack of clarity or knowledge about the perceived advantages. Overall, responses range from a positive understanding of the benefits to specific concerns. This analysis highlights the need to improve the communication and accessibility of results to enhance understanding and perceived utility.

5 LIMITATIONS AND THREATS TO VALIDITY

This section outlines the limitations of the methodology and potential threats to the validity of the research on users’ perception of SWeeTComp, along with the corresponding mitigations. First, the research sample was limited to students of software testing, potentially restricting the generalization of competencies to roles beyond testing. However, studies by Höst et al. (2000) and Salman et al. (2015) have demonstrated that students can adequately represent industry professionals, so these students were deemed a suitable sample for evaluating the framework. Second, data collection relied on participants’ self-perception, introducing subjectivity that may affect the accuracy of conclusions. Third, there is potential researcher bias in the creation of the questionnaire and the analysis of the results. To mitigate this, all artifacts and results were reviewed by two renowned researchers in software engineering and a professional with 10 years of experience. This peer review process aimed to ensure the objectivity and reliability of the study. These mitigations are intended to enhance the validity of the findings and the reliability of the conclusions drawn from the study.

6 CONCLUSION

The study aimed to evaluate the effectiveness of SWeeTComp, a framework developed to assess software testing competencies. Data was collected from participants enrolled in a Software Engineering program at a federal university, who were asked to complete self-assessments of their competencies in software testing. Responses to the questionnaire based on the Technology Acceptance Model (TAM) were analyzed to gauge user acceptance and inform potential improvements to the framework. These include enhancing the user interface for better usability, refining instructions for ease of use, providing real-time feedback during questionnaire completion, and generating detailed reports post-assessment. Additionally, expanding the range of relevant competencies, incorporating practical evaluation elements, allowing customization for different testing contexts, and ensuring regular updates to keep the tool aligned with emerging trends are important steps for future development. Future work should focus on expanding the sample to include industry professionals, incorporating real-world contexts into evaluations, and adding more customization options. By adopting an iterative approach and incorporating continuous user feedback, SWeeTComp can evolve into a more robust tool for assessing software testing competencies, effectively bridging the gap between academia and industry. The software industry can benefit from SWeeTComp by assessing software testing team skills, identifying gaps, and aligning individual competencies with organizational goals, improving team performance and product quality. For academia, it identifies areas for enhancing educational programs, ensuring that graduates possess the skills required by industry. By aligning curricula with real-world competencies, SWeeTComp bridges the gap between theoretical education and practical skills, thus increasing employability.

ACKNOWLEDGEMENTS

This research was supported by Eldorado Research Institute and was carried out with the support of the Coordination for the Improvement of Higher Education Personnel - Brazil (CAPES-PROEX) – Funding Code 001. Additionally, this work was partially funded by the Foundation for Research Support of the State of Amazonas – FAPEAM – through the PDPG project. We also thank the USES research group for their support and practitioners for their voluntary participation in the study.

REFERENCES

Ahmed, F., Capretz, L. F., Bouktif, S., and Campbell, P. (2015). Soft skills and software development: A reflection from the software industry. arXiv preprint arXiv:1507.06873.

Cambridge University, P. (2025). Cambridge dictionary online. https://x.gd/mbrBZ. Accessed on: January 20, 2025.

Casale, G., Chesta, C., Deussen, P., Di Nitto, E., Gouvas, P., Koussouris, S., Stankovski, V., Symeonidis, A., Vlassiou, V., Zafeiropoulos, A., et al. (2016). Current and future challenges of software engineering for services and applications. Procedia computer science, 97:34–42.

Davis, F. D., Bagozzi, R. P., and Warshaw, P. R. (1989). User acceptance of computer technology: A comparison of two theoretical models. Management science, 35(8):982–1003.

Höst, M., Regnell, B., and Wohlin, C. (2000). Using students as subjects - a comparative study of students and professionals in lead-time impact assessment. Empirical Software Engineering, 5:201–214.

IEEE, C. S. (2014). The Software Engineering Competency Model (SWECOM). IEEE Computer Society.

IEEE, C. S. (2024). Guide to the Software Engineering Body of Knowledge (SWEBOK Guide), Version 4.0. IEEE Computer Society.

Juristo, N., Moreno, A. M., and Vegas, S. (2004). Reviewing 25 years of testing technique experiments. Empirical Software Engineering, 9(1):7–44.

Kaner, C., Bach, J., and Pettichord, B. (2011). Lessons Learned in Software Testing: A Context-Driven Approach. John Wiley & Sons.

Maia, N., Oran, A. C., and Gadelha, B. (2023). Expectation vs reality: Analyzing the competencies of software testing teams. In ICEIS (2), pages 152–159.

Maia, N., Oran, A. C., and Gadelha, B. (2024). Supplementary Material (SWeeTComp: A Framework for Software Testing Competency Assessment). https://doi.org/10.6084/m9.figshare.25943854.

Marques, A. B., Carvalho, J. R., Rodrigues, R., Conte, T., Prikladnicki, R., and Marczak, S. (2013). An ontology for task allocation to teams in distributed software development. In 2013 IEEE 8th International Conference on Global Software Engineering, pages 21–30. IEEE.

Pereira, T. A. B., dos Santos, V. S., Ribeiro, B. L., and Elias, G. (2010). A recommendation framework for allocating global software teams in software product line projects. In Proceedings of the 2nd International Workshop on Recommendation Systems for Software Engineering, pages 36–40.

Pressman, R. S. and Maxim, B. R. (2021). Engenharia de software-9. McGraw Hill Brasil.

Saldaña-Ramos, J., Sanz-Esteban, A., García-Guzmán, J., and Amescua, A. (2012). Design of a competence model for testing teams. IET Software, 6(5):405–415.

Salman, I., Misirli, A. T., and Juristo, N. (2015). Are students representatives of professionals in software engineering experiments? In 2015 IEEE/ACM 37th IEEE international conference on software engineering, volume 1, pages 666–676. IEEE.

Shehabuddeen, N., Probert, D., Phaal, R., and Platts, K. (2000). Management representations and approaches: exploring issues surrounding frameworks. Bam, pages 1–29.

Valle, P. H. D., Vilela, R. F., Guerino, G., and Silva, W. (2023). Soft and hard skills of software testing professionals: A comprehensive survey. In Proceedings of the XXII Brazilian Symposium on Software Quality, pages 90–99.

ICEIS 2025 - 27th International Conference on Enterprise Information Systems
