Remote Emotional Interactions via AI-Enhanced Brain-to-Body Neurophysiological Interface

Geovanna Evelyn Espinoza Taype [a], Maria Cecília Calani Baranauskas [b] and Julio Cesar Dos Reis [c]

University of Campinas, Campinas, Brazil

Keywords:

Socio-Emotional Interactions, Enactive, Socioenactive, Emotion, Emotional Contagion, AI, ML, Machine

Learning, EEG, ECG, BCI, Brain-Computer Interface, SVM, Support Vector Machine, Heart Rate, Brain.

Abstract:

The rapid growth of Artificial Intelligence (AI) has led to the emergence of Human-AI Interaction. This

area explores how humans and AI systems can effectively collaborate and communicate. Recent studies have

shown that using traditional approaches might not be adequate to capture issues arising from the combination

of methods of these disciplines. A recent approach emerging in human-computer interaction (HCI), the socioenactive approach, represents a new possibility for capturing aspects in the confluence of AI and HCI due to its focus on the social-physical-digital coupling. Socioenactivity studies consider the brain, body, senses, perception, cognition, sensorimotor processes, and emotions in interactions with people, physical objects, and computational systems. This study investigates and develops a socioenactive system empowered with AI that is designed

to foster and enhance socio-emotional interactions between participants who are connected remotely. Our

solution has the potential to significantly impact the field of Human-AI Interaction, by providing a deeper

understanding of the interaction and coupling between humans and AI through the socioenactive system. The socioenactive scenario involves a socioenactive system based on a BCI (Brain-Computer Interface) composed of several components: a mind wave device, a smartwatch, a parrot robot, and the Aquarela Virtual system (which involves physical QR toys). These components are connected to share data remotely. The mind wave device and

smartwatch collect neurophysiological information, and AI algorithms process this data to recognize emotions

evoked by a parrot robot and the Aquarela Virtual. The AI component uses a machine learning technique to

recognize emotions in brain waves (EEG) data. Our solution explores tree algorithms to recognize emotions in

heart rate (ECG) data. Our evaluation, conducted in a workshop with participants from different nationalities

and ages, demonstrates that the socioenactive system with embedded AI is a key driver of socio-emotional

interactions. The system’s ability to interpret and utilize neurophysiological information to facilitate dynamic

coupling between humans and technological processes might significantly advance Human-AI Interaction.

1 INTRODUCTION

Socioenactive systems are an emerging approach

characterized by interfaces driven by bodily involve-

ment in scenarios of social-physical-digital coupling

(Baranauskas, 2015) (Baranauskas et al., 2024).

These systems can consider the human body’s uncon-

scious neurophysiological signals (Kaipainen et al.,

2011). These signals reflect emotional states trans-

ported from the brain to all parts of the human body

through the autonomic and cardiac nervous systems

(Ivonin et al., 2013) (Smith and Lane, 2015). Although socioenactive systems are a promising field for investigations considering neurophysiological information, this field has not yet been explored using AI (Rodrigues Filho and Nogueira, 2022).

[a] https://orcid.org/0000-0002-9038-6351
[b] https://orcid.org/0000-0002-4830-5298
[c] https://orcid.org/0000-0002-9545-2098

Moreover, the proliferation of AI has made it possible to process these neurophysiological signals. However, questions have arisen about how people interact with systems that contain AI (Jiang et al., 2024). To fill this gap, this research studies human-computer-AI interaction by adopting the socioenactive approach, specifically considering emotional neurophysiological information in human-computer interaction through AI. Our main objective was therefore to build a socioenactive system that embeds AI and allows remote socio-emotional interactions among people. To achieve this objective, we reviewed technologies that involve internal aspects of the human body. We found that Brain-Computer Interfaces (BCI) are systems that study human neurological (EEG) information to identify patterns in brain waves. Once these patterns are identified, the BCI sends commands to perform a desired action, such as controlling a cursor or a prosthetic limb.

Espinoza Taype, G. E., Calani Baranauskas, M. C. and Cesar Dos Reis, J.
Remote Emotional Interactions via AI-Enhanced Brain-to-Body Neurophysiological Interface.
DOI: 10.5220/0013280500003929
In Proceedings of the 27th International Conference on Enterprise Information Systems (ICEIS 2025) - Volume 2, pages 533-543
ISBN: 978-989-758-749-8; ISSN: 2184-4992
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

In this research, we used BCI to recognize emotions in brain waves; in addition, we used heart rate information to identify emotions in physiological information. Once an emotion is identified in brain waves and/or heart rate, action commands are sent to the system to evoke socio-emotional interaction between two people who are remotely connected through the internet. The system, which includes a parrot robot and the Aquarela Virtual system (Duarte et al., 2022), is intended to promote emotional interactions between the people who use the socioenactive system. The research questions that we answer with this study are: (RQ1) Does the socioenactive system embedded with AI involve an interface driven by neurophysiological and bodily expression? (RQ2) Does the socioenactive system embedded with AI constitute a dynamic coupling between human and technological processes? (RQ3) Is it possible to promote remote socio-emotional interactions through unconscious body reactions in socioenactive systems embedded with AI?

To evaluate our proposal, we conducted experiments

that considered 9 participants (from Brazil, Peru, and

Africa). The participants were organized in groups

of 2 people to allow remote interactions among them.

The system, through a smartwatch (ECG) and mind

wave (EEG) devices, collected neurophysiological in-

formation, and the AI analyzed the neurophysiologi-

cal information and sent commands to the parrot robot

and Aquarela Virtual system when emotions were

identified in brain waves and heart rate. We eval-

uated the neurophysiological information to identify

the number of times that the system recognized emo-

tions and the number of emotional interactions that

the system promoted among the participants.

The results showed that the socioenactive sys-

tem, through the AI, recognized emotions in partic-

ipants’ neurophysiological information and sent com-

mands to the parrot robot and Aquarela Virtual to ex-

press voice emotions and animations. These interac-

tions through the system allowed socio-emotional in-

teraction to appear among the participants. In con-

clusion, the socioenactive system enriched with AI

drove socio-emotional interactions, expressed by neu-

rophysiological and bodily involvement. In this pro-

cess, a dynamic coupling between human and techno-

logical processes that involved AI was developed.

This article is organized as follows. Section 2

presents a literature review. Section 3 describes the

socioenactive system built to promote remote socio-

emotional interactions. Section 4 describes how AI

is embedded in the socioenactive system. Section 5

presents a case study, the participants, the methods

used, and the evaluation of results. Finally, Sections 6 and 7 present the discussion and the conclusions.

2 RELATED WORK

We explored the literature for studies related to AI and enactive/socioenactive systems, and found few works (Rodrigues Filho and Nogueira, 2022) regarding these fields. Then, we looked for studies that involved AI, emotions, enactive and socioenactive systems, and BCI. We found two studies, (Kaipainen et al., 2011) and (Gonçalves et al., 2021), that involved the enactive/socioenactive field, AI, and emotions. We also found the studies of (Wang et al., 2020) and (Jiang et al., 2019), which are related to BCI and AI. The study of Kaipainen et al. (Kaipainen et al., 2011) presents an enactive system based

on human psychophysiological reactions. The system

mounts a film based on a person’s psychophysiolog-

ical expressions. The system is built with minimal-

ist aspects and uses facial electromyography (EMG),

heart rate (HR), and electrodermal activity (EDA) to

measure emotional expressions. The film is mounted

by the system based on psychophysiological expres-

sions and a spatial ontology. The ontology contains a

repertoire with notations and some automated analysis. This study aimed to show how unconscious interaction can occur in human-computer interaction. Although this work is an excellent reference for our research, it does not tackle the social aspects. The research proposed by Gonçalves et al. (Gonçalves et al., 2021) presented an architecture of a socioenactive system with AI. The AI in the system is used to recognize emotional states in participants’ faces. Although this study involves AI and socioenactive systems, it was still exploratory. Wang et al. (Wang et al., 2020) studied the human-AI interaction promoted by a BCI and a neurohaptic interface.

The system was built to connect two remote people

through the internet. The two people wear a BCI

(based on EEG) device and a haptic armband. When

the system recognizes an emotion from one person,

“feelings of missing someone,” the system transmits

commands to the haptic armband of the remote per-

son. The system shows the image of the person who

transmitted the “missing you” signals to the remote

person on a screen. As we can see, the system allows human-AI emotional interactions; however, this study does not explore the socioenactive aspects of interaction. Jiang et al. (Jiang et al., 2019) also involve BCI in social interactions (multi-person brain-to-brain). They show the power of brain waves in social interactions. In this study, three people interacted

by playing the Tetris game remotely; the participants,

through their brain waves, sent commands to the system to turn the block in the Tetris game. By analogy with a conventional social network, this work shows a social network of connected brains that interact with each other through thinking. The results point to future brain-to-brain interfaces that enable cooperative problem-solving by humans using a “social network” of connected brains. Although this study involves BCI and AI, it does not delve deeply into the socioenactive field of socio-emotional interactions. Existing studies focus either on the socioenactive/enactive field or on BCI, but there are no studies that combine these two paradigms. On the one hand, the BCI studies show that neural data are relevant in social interactions; thus, technological devices could give us internal neurophysiological information from the brain and body. On the other hand, socioenactive perspectives study and use neurophysiological information in social interactions, but there are not yet in-depth studies in this field. This gap therefore represents an opportunity for this research project.

3 BUILDING A SOCIOENACTIVE SYSTEM BASED ON BCI

We define a scenario by considering socioenactive di-

mensions (social, physical, and digital) to build a so-

cioenactive system.

3.1 A Scenario for Socio-Emotional Interactions with AI

When we come into contact with animals, we often become uninhibited in expressing our emotions because they unleash an immediate and natural emotional response in us (Gee et al., 2017) (Fellous, 2004). This is why animals are often used in therapies. Our proposed scenario involves a toy parrot as an object embedded with technology. Figure 1 shows the technological components of our experimentation scenario.

In the scenario, at least two participants are remotely connected via the internet, facilitating communication and interaction. One of them uses a wristband and a headband as wearable devices. Objects with embedded ubiquitous technologies, like the toy parrots, are used for experimentation. A system is set up on the laptop to control the technological components. The technological devices (wristband and headband) and objects embedded with technology are linked to the computer. This scenario intends to join two remotely connected people and promote socio-emotional interactions through the proposed socioenactive system. Through this scenario, we can study the interactions and coupling between humans and the socioenactive system with AI.

Figure 1: A scenario for socio-emotional interactions with AI.

3.1.1 Building the Socioenactive System

Considering the previous scenario, building the socioenactive system involves focusing on the digital dimension while taking into account the physical and social dimensions. The artifacts defined and designed for the socioenactive system are a mind wave device, a smartwatch, a parrot robot, and the Aquarela Virtual system (Duarte et al., 2022). These artifacts involve devices and computational systems with different programming languages and operating systems, which adds complexity to the system’s development. Therefore, we used a component-based architecture to build the socioenactive system, which involves the following technological components:

• Brain Wave Component. This is the headband (wearable device) that captures brain wave data, specifically EEG information. The information collected by this device is stored on a computer.
• Smartwatch Component. This is the wristband, a wearable device that collects heart rate data, specifically ECG information. The information gathered by this device is stored in the smartwatch’s memory.
• Controller Component. This component manages and directs the input and output data flow between the components and issues commands to them.
• EEG Component. This component is in charge of processing the data collected by the brain wave component, sending this data to the AI component, and receiving commands from it. This application connects the brain wave component and the AI component.


• ECG Component. This component allows the

collection of preprocessed heart rate data in real-

time from the smartwatch. Besides, it includes an

algorithm to identify emotional states in heart rate

data. The algorithm result is sent to the controller

component.

• AI Component. This component contains an AI

algorithm. It allows for identifying emotional

states in brain waves. The result of this compo-

nent is sent to the controller component.

• Aquarela Component. Aquarela Virtual is a system created and developed by Duarte et al. (Duarte et al., 2022). It is a web application that allows remote socio-emotional interactions between people. This application was built to allow physical activities among geographically distributed children. Although Aquarela is a web application, it involves body expression and interaction through playful physical objects. This paradigm differs from the use of mouse, keyboard, and touch screen in conventional web applications. It uses objects with QR codes, which are perceived computationally by the application to evoke socio-emotional interactions among the participants. We adapted and connected the Aquarela system to build the parrot scenario for our purposes. As a result, we obtained the Aquarela component, which was connected to the controller component. The emotional state identified by the AI component is sent to the controller component, which forwards it to the Aquarela component to initiate a sound and an animation of that emotional state.

• Parrot Robot Component. This component is an object embedded with ubiquitous technology (Raspberry Pi). The object has the color and shape of a blue parrot and emits sounds like a real parrot, so we call it a parrot robot. Its function is to trigger a sound related to an emotional state: when this component receives an emotional state from the controller component, it triggers the sound associated with that state.

The smartwatch and mind wave components are in charge of gathering a person’s neurophysiological information. Two parrot robots and the Aquarela Virtual are in charge of promoting emotional interactions between participants. These components are linked to the laptop/computer through the other components in the system. The BCI involved in the system processes the neurophysiological information collected by the Mind Wave device (EEG) and transforms it into commands. Figure 2 shows the relationships and links between the components.

Figure 2: Components built for the socioenactive system in

a scenario of socio-emotional interaction with AI.
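The data flow between the components described above can be sketched as a small dispatcher. This is only a minimal illustration under stated assumptions, not the implementation used in the system: the `Controller` class, its `register` and `on_emotion` methods, and the two handler functions are hypothetical names, and the real components communicate across devices rather than through in-process calls.

```python
from typing import Callable, List


# Hypothetical output handlers standing in for the parrot robot and
# Aquarela components; each returns a description of the action it would take.
def parrot_robot(emotion: str) -> str:
    return f"parrot robot: play {emotion} sound"


def aquarela(emotion: str) -> str:
    return f"aquarela: play {emotion} sound and animation"


class Controller:
    """Forwards each recognized emotional state to every registered output."""

    def __init__(self) -> None:
        self._outputs: List[Callable[[str], str]] = []

    def register(self, handler: Callable[[str], str]) -> None:
        self._outputs.append(handler)

    def on_emotion(self, emotion: str) -> List[str]:
        # Called when the AI or ECG component reports an emotional state.
        return [handler(emotion) for handler in self._outputs]


controller = Controller()
controller.register(parrot_robot)
controller.register(aquarela)
actions = controller.on_emotion("happiness")
```

Keeping the outputs behind a single dispatch point mirrors the role the text assigns to the controller component: recognizers only report an emotional state and do not need to know which devices react to it.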

4 EMBEDDING AI IN THE SOCIOENACTIVE SYSTEM

We present how AI was embedded in the previously

created socioenactive system scenario. We describe

the AI component in the following.

4.1 An AI Model to Recognize Emotions in Brain Signals

We present the steps followed to embed AI in the so-

cioenactive system. The objective was to use AI to

recognize emotional states in brain waves in real time

in the socioenactive system.

A) Choosing an AI Algorithm

To include AI in our socioenactive system, we reviewed machine learning and deep learning techniques applied to brain waves. We found more articles using machine learning than deep learning for emotion classification/recognition in brain waves (EEG). The literature review showed that the Support Vector Machine (SVM) is a kernel-based machine learning method widely used for classification. In recent years, the SVM algorithm has been frequently used in EEG classification to study emotions, as described in (Blanco-Ríos et al., 2024), (Huang et al., 2023), (Jianbiao et al., 2023), (Saccá et al., 2018), and (Sai et al., 2017).

B) Choosing a Dataset

In previous work, we developed a dataset of emotional brain waves (Espinoza Taype et al., 2023). This EEG dataset was collected from 21 people and involves four basic emotional states: happiness, sadness, fear, and anger, with 1201, 1311, 1311, and 1486 records, respectively. The dataset contains data for the frequency bands delta, theta, alpha, beta, and gamma. These data were analyzed using the Fourier Transform method;


the result showed features in amplitude and frequency

to differentiate emotional states in brain waves. Con-

sidering this dataset, we continue with the following

step.
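As an illustration of how a Fourier Transform yields per-band features such as those named above, the following sketch computes the spectral power in each frequency band of a signal. It is a hedged example rather than the analysis used for the dataset: the band limits, the 128 Hz sampling rate, the `band_powers` helper, and the synthetic 10 Hz test signal are all assumptions made for illustration.

```python
import numpy as np

# Assumed band limits in Hz; conventions vary between studies.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def band_powers(signal, sampling_rate):
    """Sum the squared FFT magnitudes that fall inside each band."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sampling_rate)
    return {
        name: float(spectrum[(freqs >= low) & (freqs < high)].sum())
        for name, (low, high) in BANDS.items()
    }


# Synthetic stand-in for an EEG trace: two seconds of a pure 10 Hz sine.
sampling_rate = 128  # Hz, assumed
t = np.arange(0, 2.0, 1.0 / sampling_rate)
signal = np.sin(2 * np.pi * 10.0 * t)

powers = band_powers(signal, sampling_rate)
dominant = max(powers, key=powers.get)  # band holding the most power
```

For this synthetic input the power concentrates in the alpha band, since 10 Hz lies between the assumed 8 Hz and 13 Hz limits.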

C) Pre-processing the Dataset

We prepared and cleaned the dataset to make it more suitable for our machine learning algorithm (presented in the following steps). This step aims to reduce complexity, prevent overfitting, and improve the model’s overall performance. The dataset contains brain wave data for four emotional states: happiness, sadness, fear, and anger. Pre-processing involved the following steps: removing duplicates, removing irrelevant data, converting data types, cleaning up formatting, fixing errors, and handling missing values.
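The cleaning steps listed above can be sketched in a few lines. This is an illustrative example only, assuming each record is a dictionary with one value per frequency band and an `emotion` label; the `preprocess` function, the `device_id` column, and the sample rows are hypothetical, and dropping incomplete rows is just one possible way of handling missing values.

```python
BANDS = ("delta", "theta", "alpha", "beta", "gamma")


def preprocess(rows):
    """Return cleaned, de-duplicated records with numeric band values."""
    cleaned, seen = [], set()
    for row in rows:
        # Clean up formatting of the label (whitespace, letter case).
        label = str(row.get("emotion", "")).strip().lower()
        try:
            # Convert data types; columns outside BANDS are dropped as irrelevant.
            values = tuple(float(row[band]) for band in BANDS)
        except (KeyError, TypeError, ValueError):
            continue  # missing or malformed value: drop the record
        if not label:
            continue
        key = (values, label)
        if key in seen:
            continue  # duplicate record
        seen.add(key)
        cleaned.append({**dict(zip(BANDS, values)), "emotion": label})
    return cleaned


raw = [
    {"delta": "1.0", "theta": "2", "alpha": "3", "beta": "4", "gamma": "5",
     "emotion": " Happiness ", "device_id": "x"},
    {"delta": 1.0, "theta": 2.0, "alpha": 3.0, "beta": 4.0, "gamma": 5.0,
     "emotion": "happiness"},  # duplicate once normalized
    {"delta": "0.5", "theta": None, "alpha": "1", "beta": "1", "gamma": "1",
     "emotion": "sadness"},  # missing theta value
    {"delta": "0.5", "theta": "0.1", "alpha": "1", "beta": "1", "gamma": "1",
     "emotion": "sadness"},
]
cleaned = preprocess(raw)
```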

D) Dataset Organization

In this step, the brain waves data was organized to

train and test the machine learning algorithm. We

split our dataset into two subsets, training and testing, with 70% and 30% of the data, respectively (Uçar et al., 2020). The training data was used to train the machine

learning algorithm. The testing data was used to eval-

uate the accuracy of the trained algorithm. Both data

groups contain four data classes: happiness, sadness,

fear, and anger, each one with 1201, 1311, 1311, and

1486 brain wave records, respectively.

E) Model Development

For the data pre-processing and the development of the machine learning model, we used Python with the following libraries: Pandas, NumPy, Matplotlib, Scikit-learn (Sklearn), and Seaborn.

In the beginning, the model was developed to clas-

sify two signal classes (happiness and sadness). Af-

terward, it was adapted to classify four signal classes

(happiness, sadness, fear, and anger). The objective in

developing these two models was to identify the lev-

els of accuracy that the model could achieve in clas-

sifying two and four emotion types. The results are

shown in the next step.
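A 70/30 split followed by SVM training for the two-class case can be sketched with Scikit-learn as follows. This is a minimal sketch under stated assumptions and not the model produced in this work: the data are synthetic, well-separated Gaussian clusters standing in for the five band features, and the RBF kernel, the stratified split, and the random seeds are illustrative choices.

```python
import numpy as np
from sklearn.metrics import confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

BANDS = ["delta", "theta", "alpha", "beta", "gamma"]
LABELS = ["happiness", "sadness"]

# Synthetic stand-in data: 200 records per class, one feature per band.
rng = np.random.default_rng(0)
X = np.vstack([
    rng.normal(loc=1.0, scale=0.5, size=(200, len(BANDS))),
    rng.normal(loc=-1.0, scale=0.5, size=(200, len(BANDS))),
])
y = np.array(["happiness"] * 200 + ["sadness"] * 200)

# 70% of the records for training, 30% for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.30, random_state=0, stratify=y
)

model = SVC(kernel="rbf")  # kernel choice is an assumption
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

# Rows are true classes, columns are predicted classes, in LABELS order.
cm = confusion_matrix(y_test, y_pred, labels=LABELS)
accuracy = float((y_pred == y_test).mean())
```

Extending the sketch to four classes only requires adding the fear and anger records and labels, since `SVC` handles the multiclass case internally.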

F) Model Results

After developing the machine learning model, we executed it to obtain classification results. We used the confusion matrix, a table used to evaluate the performance of a machine learning algorithm, to assess the accuracy of the signal classification. The confusion matrices in Figures 3 and 4 show how many samples were correctly and incorrectly classified by the algorithm in each class. The SVM model produced can be accessed at the following link: “SVM to classify emotions in brain waves”.

Classification of Two Emotions

Figure 3 shows the confusion matrix obtained on the test set for a binary-class dataset consisting of 1201 samples in the positive emotional state class (happiness) and 1663 in the negative emotional state class (sadness).
In Figure 3, the SVM algorithm correctly predicted 357 brain waves as positive emotion (happiness) and 196 as negative emotion (sadness). However, 146 brain waves were misclassified as negative emotions when they were positive, and 161 were incorrectly classified as positive emotions when they were negative. Therefore, the precision was 69% for happiness (positive emotion) and 57% for sadness (negative emotion).

Figure 3: The SVM algorithm correctly predicted 553 (357 happiness, 196 sadness) brain waves and misclassified 307 (161 as happiness, 146 as sadness).
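The precision values can be reproduced from the four counts reported for Figure 3. The short calculation below is a worked check; the variable names are illustrative, and the overall accuracy on the last line is derived here from the same counts rather than reported in the text.

```python
# Counts reported for Figure 3.
happy_as_happy = 357  # happiness correctly predicted
sad_as_sad = 196      # sadness correctly predicted
happy_as_sad = 146    # happiness misclassified as sadness
sad_as_happy = 161    # sadness misclassified as happiness

# Precision = correct predictions of a class / all predictions of that class.
precision_happiness = happy_as_happy / (happy_as_happy + sad_as_happy)
precision_sadness = sad_as_sad / (sad_as_sad + happy_as_sad)

# Derived value: share of all test samples that were classified correctly.
total = happy_as_happy + sad_as_sad + happy_as_sad + sad_as_happy
accuracy = (happy_as_happy + sad_as_sad) / total

print(f"precision (happiness): {precision_happiness:.0%}")
print(f"precision (sadness):   {precision_sadness:.0%}")
print(f"accuracy:              {accuracy:.0%}")
```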

Classification of Four Emotions

We ran the SVM algorithm again for multiclass classification, this time with four emotions: happiness, sadness, fear, and anger. The dataset was composed of 1201, 1663, 1486, and 1311 brain waves for happiness, sadness, fear, and anger, respectively. The results show prediction precisions of 28%, 38%, 27%, and 25% for happiness, sadness, fear, and anger, respectively. Figure 4 shows 38, 237, 206, and 40 brain waves correctly classified, while the remaining brain waves were misclassified.

Figure 4: The SVM algorithm prediction of happiness, sad-

ness, fear, and anger emotions.

We observe that the accuracy in classifying two emotional states is better than in classifying four emotional states. According to (Mathur and Foody, 2008), a multiclass classification may require more support vectors than a binary classification, which means that it requires a series of optimizations and classification parameters for the multiclass case. In an evaluation of multiclass classification, (Foody and Mathur, 2004) state that SVMs were originally defined as binary classifiers and that their use for multiclass classification is more problematic. It is therefore necessary to use strategies that reduce the multiclass problem to a set of binary problems. Multiclass problems are commonly encountered, and researchers are currently addressing them (for instance, (Ke et al., 2024), (Nie et al., 2024), (Lai et al., 2024), among others).

G) Embedding the AI Model in the Socioenactive System

After developing, training, and obtaining the model’s results, the next step was to embed the model in the socioenactive system. For this purpose, we followed these steps:
• G.1 Zipping the Model
To use the model in applications, we compressed and serialized it using the joblib and pickle libraries in Python. The zipped model is then ready to be used like a box with inputs and outputs: it is possible to send brain wave values to this box, and it responds with the emotional state identified.
• G.2 AI Application
After compressing the model, we developed an application that contains the zipped model and is connected to the whole socioenactive system. We used the Flask framework to develop the application. As a result, the AI application can receive brain wave data and respond with an emotional state. The application is available at the following link: AI application to classify emotions in brain waves.
• G.3 Model Implementation
After creating the ML model to classify emotions in brain wave signals, the next step was to deploy the model on a server.
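The "box with inputs and outputs" idea from steps G.1 to G.3 can be sketched with the standard `pickle` module. This is a simplified illustration under stated assumptions, not the deployed application: the toy linear rule stands in for the trained SVM, and `toy_model`, `predict`, and `handle_request` are hypothetical names. In a web application, a function like `handle_request` would be wrapped by a route that parses the incoming request and returns the result.

```python
import pickle

BANDS = ("delta", "theta", "alpha", "beta", "gamma")

# Hypothetical stand-in for the trained classifier: a linear rule stored as
# plain data (alpha minus beta decides the label).
toy_model = {
    "weights": [0.0, 0.0, 1.0, -1.0, 0.0],
    "bias": 0.0,
    "labels": ("sadness", "happiness"),
}


def predict(model, features):
    score = sum(w * x for w, x in zip(model["weights"], features)) + model["bias"]
    return model["labels"][1] if score > 0 else model["labels"][0]


# Serialize the model once after training.
model_bytes = pickle.dumps(toy_model)


def handle_request(payload, blob=model_bytes):
    """Deserialize the model and answer one request with an emotional state."""
    model = pickle.loads(blob)
    features = [float(payload[band]) for band in BANDS]
    return {"emotion": predict(model, features)}


response = handle_request(
    {"delta": 0.2, "theta": 0.1, "alpha": 0.9, "beta": 0.3, "gamma": 0.1}
)
```

A real service would typically load the serialized model once at start-up instead of on every request; the per-call load here only keeps the sketch short.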

4.2 Emotion in Heart Rate

After implementing the AI based on brain waves, we

added heart rate physiological measurements to im-

prove the AI results. So, we worked with the ECG

component (Smartwatch).

We examined heart rate peaks to extract knowledge from the heart rate signals collected by the smartwatch application. According to (Pollreisz and TaheriNejad, 2017), the peaks always have similar heights. Hence, the peaks were categorized into two groups with different heights. A peak whose height is lower than 100 is classified as a slight peak; if it is higher than 100, it is considered a significant peak. The rationale behind the value 100 involves estimations using the maximum value in the heart rate sequence. Thus, we analyzed all signals, and whenever a slight or significant peak was detected, its respective counter was increased. These statistical analyses were then used to categorize the signals into two groups: (i) signals with a height over 100 were classified as happiness, and (ii) signals with a height lower than 100 were classified as sadness. The classification is done via a decision tree based on statistical information. First, it analyzes the peaks. For example, if there are sequences of significant peaks in the measured signal, a counter is increased for the happiness emotion; if there are slight peaks in the signal, the counter for the sadness emotion is increased. At the end, the probability of occurrence of each emotion is calculated as a percentage based on the value of the counters for each emotion.
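The peak-counting rule described above can be sketched as follows. This is a minimal illustration with assumptions stated in the comments, not the exact algorithm used in the ECG component: a peak is taken to be a strict local maximum, a peak of exactly 100 is counted as slight (the text does not specify this case), and `find_peaks`, `classify_heart_rate`, and the sample values are hypothetical.

```python
def find_peaks(signal):
    """Return the values that are strict local maxima (assumed peak definition)."""
    return [
        signal[i]
        for i in range(1, len(signal) - 1)
        if signal[i - 1] < signal[i] > signal[i + 1]
    ]


def classify_heart_rate(signal, threshold=100):
    """Count significant and slight peaks and return emotion percentages."""
    counters = {"happiness": 0, "sadness": 0}
    for peak in find_peaks(signal):
        if peak > threshold:
            counters["happiness"] += 1  # significant peak
        else:
            counters["sadness"] += 1    # slight peak (includes exactly 100)
    total = sum(counters.values())
    if total == 0:
        return {emotion: 0.0 for emotion in counters}
    return {emotion: 100.0 * count / total for emotion, count in counters.items()}


# Hypothetical heart rate sequence with peaks at 95, 110, 120, and 105.
heart_rate = [80, 95, 85, 110, 90, 120, 100, 105, 98]
result = classify_heart_rate(heart_rate)
print(result)  # {'happiness': 75.0, 'sadness': 25.0}
```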

This algorithm was combined with the AI developed previously to give the socioenactive system autonomy in its decisions on emotion recognition in neurophysiological information.

5 CASE STUDY: SOCIO-EMOTIONAL INTERACTIONS PROMOTED BY A SOCIOENACTIVE SYSTEM WITH AI

To experiment with the socioenactive system embedded with AI, we carried out a workshop at the University of Campinas (UNICAMP). The workshop lasted one week, with one main day (Saturday). During the workshop week, we invited students and other people to volunteer and experience the system. On one day, two students from UNICAMP (aged 20-23) participated, and on the main day, seven children between 5 and 10 years old participated, accompanied by their parents/relatives.

5.1 Methods

In the experiment, two participants were remotely connected via the internet through the socioenactive system, each of them in a different environment. One participant wore the smartwatch and mind wave devices on the wrist and head, respectively. In each environment, a parrot robot, physical toys with QR codes, and a screen to display the Aquarela Virtual were placed around the participant. To capture facial, vocal, and postural expressions, cameras were positioned in front of the participants to record their movements and body language. Additionally, Google Meet was used to record the participants during the interaction.

A participant began by interacting with the physical QR toys and the parrot robot, holding the QR toys up to the laptop’s camera. Through this action, the participant shared their emotions and engaged with the other participant on the opposite side, as well as with the parrot robot and Aquarela Virtual. Based on the emotion shared by the participant, the parrot robot triggered vocal emotional expressions, and the Aquarela Virtual played sounds and showed animations with digital emotional emoticons and toys. The physical toys with QR codes themselves conveyed emotional features through their designs and colors.

The socioenactive system, through the smartwatch and mind wave devices, collected the participants’ heart rate and brain wave data while they were interacting. In real time, the system (with the AI) processed the collected data to recognize an emotional state; when an emotion was identified, the system triggered socio-emotional interactions through the parrot robot and Aquarela Virtual. That is, the AI worked during the experimentation, identifying participants’ emotional states from the neurophysiological information and transforming these signals into parrot robot actions and reactions in Aquarela Virtual.

5.2 Evaluation Results

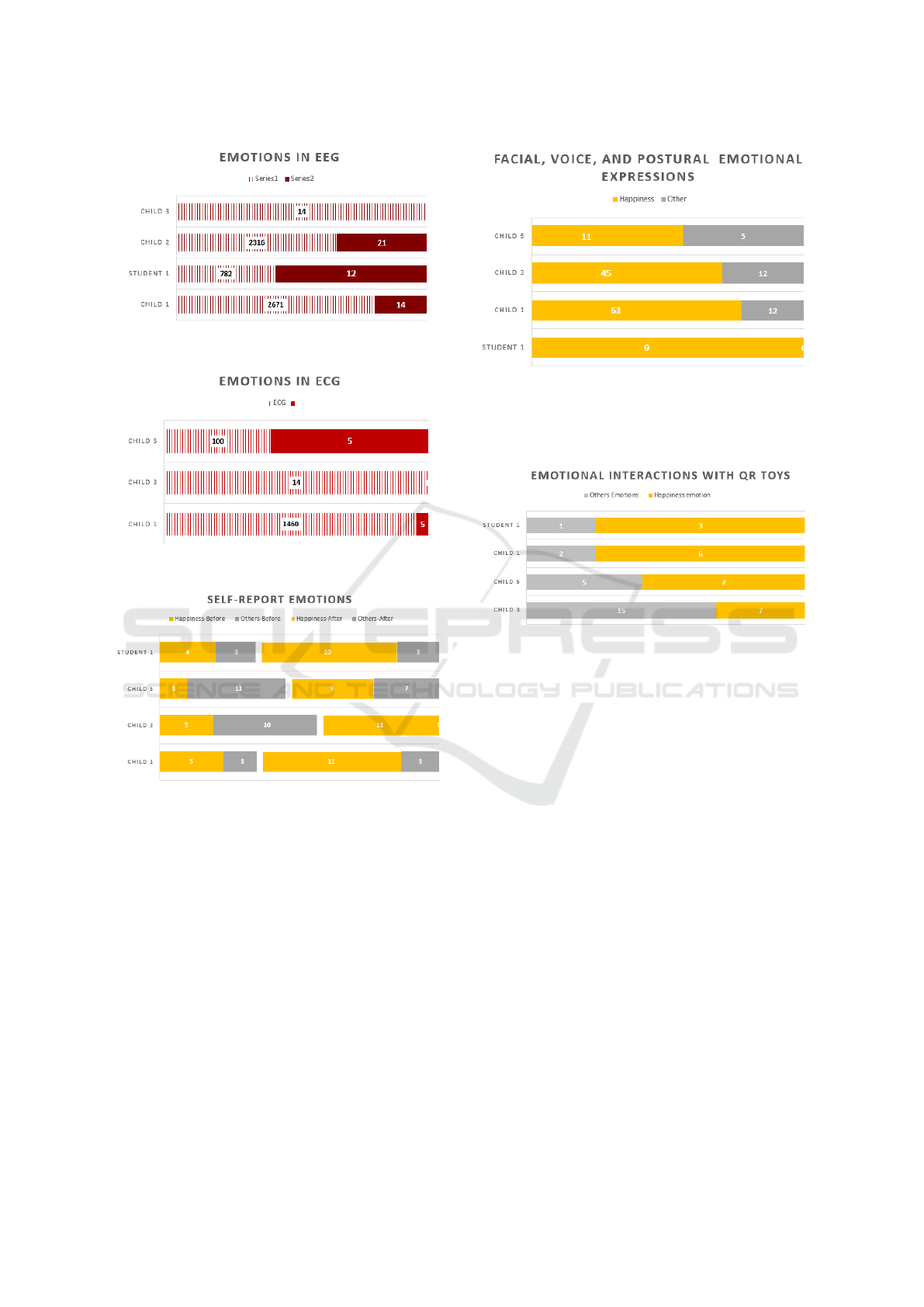

Figures 5 and 6 show the results of the analysis of the neurophysiological information collected by the Mind Wave (EEG) and the smartwatch (ECG), respectively. For instance, considering the EEG analysis, the AI identified the happiness emotion 14 times in the 2671 brain waves of child number one, 12 times in the 782 brain signals of student number one, and 21 times in the 2316 brain waves of child number two. Child number three had 14 brain waves collected, but unfortunately, she had several buns in her hair and did not want to wear the mind wave device, so it was impossible for the AI to identify emotions in her brain waves. Regarding the heart rate (ECG) analysis (Figure 6), 1460 heart rate values were recorded for child number one, and the happiness emotion was identified 5 times. For child number five, 100 heart rate values were collected, and the happiness emotion was identified 5 times. For child number three, 14 heart rate signals were collected; unfortunately, the child pushed some buttons on the smartwatch and stopped the data collection.
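To put these counts on a common scale, the share of samples in which happiness was identified can be computed for each participant. The percentages below are derived here from the counts given in the text and are not reported results; the dictionary keys are illustrative labels.

```python
# (times happiness was identified, number of samples collected)
detections = {
    "child 1 (EEG)": (14, 2671),
    "student 1 (EEG)": (12, 782),
    "child 2 (EEG)": (21, 2316),
    "child 1 (heart rate)": (5, 1460),
    "child 5 (heart rate)": (5, 100),
}

# Percentage of samples in which happiness was identified, rounded to 2 decimals.
rates = {
    name: round(100.0 * hits / total, 2)
    for name, (hits, total) in detections.items()
}

for name, rate in rates.items():
    print(f"{name}: {rate}%")
```

The recordings differ widely in length, so these shares are only a rough way of comparing participants.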

As we can see, the emotional expression of happiness was identified in brain waves and heart rate signals, while other emotions (sadness) were not identified. We could corroborate these results through the analysis of the self-report questionnaires filled out by the children (with the help of a relative/monitor) before and after the experimentation in the workshop (Figure 7). For child number one, the self-report questionnaire shows an increase in happiness from 5 to 11 after the socioenactive experience, while the other emotions (sadness, fear, and anger) remained at the same value. For child number three, happiness went from 5 to 10, while negative emotions disappeared. For child number five, happiness increased by 6, and negative emotions decreased by 4. For student number one, happiness increased from 4 to 10, and negative emotions remained at the same value (3).

The analysis of facial, voice, and postural expressions also showed an increase in emotional expressions among participants (Figure 8). For instance, child one showed facial, voice, or postural expressions of happiness 63 times, compared with 12 times for other emotional expressions. Children three and five showed expressions of happiness 45 and 11 times, respectively, and student one showed them 9 times.

These results show that happiness (a positive emotion) was expressed through both neurophysiological and bodily (facial, voice, and postural) expressions. When we analyzed the socio-emotional interactions with the system, we found that the participants used the physical objects (toys with QR codes) to share emotions with each other through the system. Figure 9 shows how many times the participants interacted intentionally (consciously), sharing emotions through the system using the QR toys. For instance, child three interacted emotionally through the toys 22 times; of these interactions, 7 expressed happiness and 15 expressed negative emotions (sadness, fear, or anger). Child one shared happiness 6 times and other emotions 2 times. Child five shared happiness 7 times and negative emotions 5 times. Student one shared happiness 3 times and another emotion once.

5.2.1 Regarding the Socioenactive Coupling

Although the emotional expressions of our brain and body are a complex phenomenon, we can summarize them as follows. The brain expresses emotions through neuron excitation: neurotransmitters carry the excitation signals from neuron to neuron, generating electrical impulses in the brain, which we collected through the Mind Wave (the EEG device component). The body expresses emotions through heart rate intensity, as blood is pumped with varying intensity to the body and brain; the smartwatch (the ECG device component) collected this heart rate data. These brain and body signals thus become emotional neurophysiological information that is transmitted from person to person through the socioenactive system and the internet. The emotional neurophysiological expressions of one person become emotional expressions (voice, face, and body) in the other person. As a consequence, the AI promoted neurophysiological emotional contagion.

Figure 5: Emotional expressions identified by the AI in brain waves.

Figure 6: Emotional expressions identified by the AI in heart rate.

Figure 7: Self-report questionnaire filled out by the participants, stating their emotional states before and after the socio-emotional experience in the workshop.

Figure 8: Body (facial, voice, and postural) emotional expressions identified in the participants during the experience with the socioenactive system in the workshop. A camera recorded the participants' facial, voice, and postural expressions.

Figure 9: Emotional interactions identified by the system when the participants interacted with the QR toys (through the reading of the QR codes attached to the toys).

The case study showed us that socioenactive participation involves the continuous coupling of different points of interconnection between humans and computers in socioenactive environments. Our body interacts with other humans and physical objects both externally and internally, at conscious and unconscious levels. Externally, our body expresses emotions through facial, postural, and voice expressions. Internally, our body and brain, through the cardiac system, the neural system, and other systems and organs, are involved in a dynamic process in which we express emotions through neurophysiological reactions. In other words, our body and brain involve internal and external dimensions of interaction with humans and physical things, some conscious and some unconscious. The socioenactive coupling allows us to cross these dimensions, from internal to external and from external to internal, in dynamic, continuous, complex, and infinite feedback loops through perception, cognition, sensorimotor activity, and emotions. Dynamic loops between the human brain, body, hands, eyes, and so on, and the surrounding space thus shape an interwoven and fluid process that is constantly forming and re-forming over short periods of time. This complex dynamic system reshapes, re-wires, and re-models the human brain and body, a process called embodiment.

ICEIS 2025 - 27th International Conference on Enterprise Information Systems

The socioenactive system supports both explicit (intentional, conscious) and implicit (unconscious) control, which allows participants to interact through embodied action. The interaction is partly conscious and intentional, controlled by embodied action, and partly unconscious, controlled by the body's neurophysiological reactions. The conscious side was handled through body action, when a participant interacted with physical objects (showing the QR toys to the camera) to share emotions through the system. The unconscious side was handled by the system through the smartwatch, the Mind Wave device, and the AI algorithm. The challenge lay on the unconscious side: recognizing emotions in brain waves and heart rate in real time. The AI embedded in the socioenactive system interprets emotions from neurophysiological reactions, which allows the system to adopt dynamic and autonomous behavior. The socioenactive system thus acted as a mediator of the dynamic emotional coupling between the remotely connected participants. By interpreting emotions in brain waves and heart rate, the AI gives the system emotional behaviors: the system acted and reacted to the conscious and unconscious emotions of the participants in coordination with its components (smartwatch, Mind Wave, parrot robot, and Aquarela Virtual).

For example (Figure 10), when the AI recognizes an emotion in a participant's brain waves or heart rate, the system coordinates with the parrot robot and Aquarela Virtual to play emotional sounds and display emotional animations with digital emoticons. In this way, participants express unconscious personal emotions in the system through neurophysiological reactions, and the system transmits them to other participants who are connected remotely. The other participants respond with conscious emotions using the QR toys. The system therefore allows a recursive interaction between the participants at conscious and unconscious levels.
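The coordination described above can be pictured as a small event dispatcher. The sketch below is a hypothetical illustration only: the `EmotionEvent` type, the `ParrotRobot` and `AquarelaVirtual` stand-in classes, the `Mediator` class, and all method names are assumptions made for this example and do not reflect the actual interfaces of the system's components.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EmotionEvent:
    """An emotion recognized for a participant (hypothetical structure)."""
    participant: str
    emotion: str   # e.g. "happiness"
    source: str    # "EEG" or "ECG" (unconscious), or "QR" (conscious)


@dataclass
class ParrotRobot:
    """Stand-in for the parrot robot; it only records what it would play."""
    log: List[str] = field(default_factory=list)

    def play_sound(self, emotion: str) -> None:
        self.log.append(f"sound:{emotion}")


@dataclass
class AquarelaVirtual:
    """Stand-in for Aquarela Virtual; it only records what it would display."""
    log: List[str] = field(default_factory=list)

    def show_animation(self, emotion: str) -> None:
        self.log.append(f"animation:{emotion}")


class Mediator:
    """Forwards each recognized emotion to the remote side's components."""

    def __init__(self, robot: ParrotRobot, display: AquarelaVirtual) -> None:
        self.robot = robot
        self.display = display

    def dispatch(self, event: EmotionEvent) -> str:
        # EEG/ECG events come from unconscious reactions; QR events are intentional.
        level = "unconscious" if event.source in ("EEG", "ECG") else "conscious"
        self.robot.play_sound(event.emotion)
        self.display.show_animation(event.emotion)
        return level


if __name__ == "__main__":
    mediator = Mediator(ParrotRobot(), AquarelaVirtual())
    print(mediator.dispatch(EmotionEvent("child 1", "happiness", "EEG")))
    print(mediator.dispatch(EmotionEvent("child 2", "happiness", "QR")))
```

In this sketch both kinds of event trigger the same sound and animation, and only the returned label distinguishes the conscious from the unconscious channel; a real system could route the two differently.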

Figure 10: Emotional interactions between participants at

conscious and unconscious levels.

The dynamic montage of the system (Figure 10) is mapped from the neurophysiological emotional expressions and the body action expressions (when the participants interact through toys with QR codes). Sequential instructions and decision algorithms in the system are in charge of this dynamic montage. Figure 11 shows two participants interacting emotionally through the system remotely: on the left side, a participant using the smartwatch, the Mind Wave, and the QR toys transmits emotions to the participants on the right side, who answer by sending emotions through the emotional toys. For instance, child one expressed emotions 14 times through EEG, 5 times through ECG, and 8 times through the toys; the system transmitted these emotions to child two, who answered child one with 13 emotional interactions through the QR toys. The same occurred with the other participants. Child three sent 21 (EEG), 5 (ECG), and 22 (toys) emotional expressions to child four, who answered with 11 emotional expressions through the toys. Child five expressed her emotions through the toys 12 times and received 10 emotional reactions (through the toys) from child six. Student one sent 12 (EEG) and 4 (QR) emotional expressions and received 4 responses (QR) from student two. Therefore, the answer to RQ2, "Does the socioenactive system embedded with AI constitute a dynamic coupling between human and technological process?", is yes, because the system allowed a dynamic coupling of coordinated actions between two participants. The emotional expressions (neurophysiological and bodily involvement) of one participant become impressions for the other participant, which in turn become that participant's emotional expressions.

The answer to RQ1, "Does the socioenactive system embedded with AI involve an interface driven by neurophysiological and bodily expression?", is also yes, because the socioenactive system, through the AI (EEG and ECG measures) and the QR toys, recognized emotions in participants' neurophysiological signals and bodily involvement, driving socio-emotional interactions with another participant in a remote environment. For child one, the interface was driven 19 times by neurophysiological signals (EEG and ECG) and 8 times by bodily involvement (QR toys). Child three had 26 (EEG and ECG) and 22 (QR toys) such interactions, respectively. Child five had 12 interactions driven by bodily involvement (QR toys). Student one had 12 interactions driven by EEG and 4 by QR toys (Figure 11).
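The per-participant totals quoted above are sums of the per-channel counts reported earlier. As a quick arithmetic check, the following sketch recomputes them from the figures in the text; the dictionary layout and the `split_totals` helper are assumptions made for illustration.

```python
# Emotional expressions sent per channel, as reported in the text.
sent = {
    "child 1": {"EEG": 14, "ECG": 5, "QR": 8},
    "child 3": {"EEG": 21, "ECG": 5, "QR": 22},
    "child 5": {"QR": 12},
    "student 1": {"EEG": 12, "QR": 4},
}

NEURO_CHANNELS = ("EEG", "ECG")


def split_totals(channels: dict) -> tuple:
    """Return (neurophysiological total, bodily total) for one participant."""
    neuro = sum(channels.get(name, 0) for name in NEURO_CHANNELS)
    bodily = channels.get("QR", 0)
    return neuro, bodily


if __name__ == "__main__":
    for who, channels in sent.items():
        neuro, bodily = split_totals(channels)
        print(f"{who}: {neuro} neurophysiological, {bodily} bodily (QR toys)")
```

For child one this gives 14 + 5 = 19 neurophysiological and 8 bodily interactions, and for child three 21 + 5 = 26 and 22, matching the totals in the text.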

Figure 11: Emotional expressions of happiness in brain

waves, heart rate, body (facial, voice, postural) expressions,

and self-report questionnaire.

Finally, the answer to RQ3, "Is it possible to promote remote socio-emotional interactions by unconscious body reactions in socioenactive systems embedded with AI?", is yes: through a person's neurophysiological body responses, the socioenactive system facilitates emotional interaction with another individual and with physical objects (such as the parrot robot) located in a remote environment.

6 DISCUSSION

The EEG and ECG (neurophysiological) emotional measures varied across participants. In some participants, the EEG and/or ECG measures were more intense than in others. According to Stenberg (1992), such variations are affected by personality and can differ in each participant, affecting the EEG and ECG measurements made by the AI.

The analysis of self-report questionnaires, neurophysiological information, bodily actions, and facial, voice, and postural expressions showed that emotional expressions were related across these sources.

The socioenactive system allowed participants in remote environments to communicate through conscious and unconscious body reactions, the latter captured as neurophysiological signals.

The data analysis and results showed that the socioenactive system achieved human-computer-AI coupling at unconscious (neurophysiological) and conscious (bodily involvement) levels. The coupling originated in the brain and body of one participant and reached the brain and body of the remote participant through various dynamic processes and components of the socioenactive system (described in Section 5.2.1).

7 CONCLUSION

This research demonstrated how to embed AI in a socioenactive system and the steps this challenge involves. Our solution used the SVM algorithm to recognize emotional states in neural information collected by a Mind Wave headset, and added a decision tree algorithm to identify emotions in heart rate (ECG) data collected by a smartwatch. Emotion recognition thus involves neural information as well as other physiological information. The experimental results showed that the socioenactive system embedded with AI allowed socio-emotional interactions driven by participants' neurophysiological information and bodily involvement.
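As a rough illustration of the two-classifier setup summarized above, the sketch below trains an SVM on EEG-like features and a decision tree on heart-rate-like features using scikit-learn. Everything about the data is an assumption: the features are synthetic random numbers, and the feature dimensions, label names, train/test split, and hyperparameters are placeholders rather than the study's actual configuration.

```python
import numpy as np
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(0)
LABELS = np.array(["happiness", "sadness"])


def make_synthetic(n_samples: int, n_features: int, shift: float):
    """Create two synthetic classes whose feature means differ by `shift`."""
    y = rng.integers(0, 2, size=n_samples)
    X = rng.normal(size=(n_samples, n_features)) + shift * y[:, None]
    return X, LABELS[y]


# Synthetic stand-ins: 8 "EEG band" features and 1 "heart rate" feature.
X_eeg, y_eeg = make_synthetic(200, 8, shift=2.0)
X_ecg, y_ecg = make_synthetic(200, 1, shift=3.0)

# SVM for the EEG-like features, decision tree for the heart-rate-like feature.
# The first 150 samples are used for training and the last 50 are held out.
eeg_model = SVC(kernel="rbf", C=1.0).fit(X_eeg[:150], y_eeg[:150])
ecg_model = DecisionTreeClassifier(max_depth=3, random_state=0).fit(
    X_ecg[:150], y_ecg[:150]
)

eeg_pred = eeg_model.predict(X_eeg[150:])
ecg_pred = ecg_model.predict(X_ecg[150:])

if __name__ == "__main__":
    print("EEG (SVM) held-out accuracy:", (eeg_pred == y_eeg[150:]).mean())
    print("ECG (tree) held-out accuracy:", (ecg_pred == y_ecg[150:]).mean())
```

Keeping the two models separate mirrors the description in the text, where each signal has its own classifier; how the two predictions are combined in the real system is not specified here.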

Future work involves exploring different feature extraction and learning methods to improve the accuracy of the induced classifiers. Furthermore, the dataset could be improved by applying data augmentation techniques and adding data.

We are currently working on a framework to guide the construction of such socioenactive systems. The dataset and code produced in this study are available at https://evelynespinozataype.github.io/romotica/.

ACKNOWLEDGEMENTS

This work is sponsored by the São Paulo Research Foundation (FAPESP) (grant 2021/12984-1), and CNPq #309442/2023-0. The authors thank Fabricio Matheus Gonçalves, Marleny Luque Carbajal, and Leia Loyolla de Carvalho for their support in conducting the workshop. The opinions expressed in this work do not necessarily reflect those of the funding agencies.

REFERENCES

Baranauskas, M. C. C. (2015). Sistemas sócio-enativos: investigando novas dimensões no design da interação mediada por tecnologias de informação e comunicação. FAPESP Thematic Project (2015/165280).

Baranauskas, M. C. C., Duarte, E. F., and Valente, J. A. (2024). Socioenactive interaction: Addressing intersubjectivity in ubiquitous design scenarios. International Journal of Human–Computer Interaction, 40(13):3365–3380.

Blanco-Ríos, M. A., Candela-Leal, M. O., Orozco-Romo, C., Remis-Serna, P., Vélez-Saboyá, C. S., Lozoya-Santos, J. d. J., Cebral-Loureda, M., and Ramírez-Moreno, M. A. (2024). Real-time EEG-based emotion recognition for neurohumanities: perspectives from principal component analysis and tree-based algorithms. Frontiers in Human Neuroscience, 18:1319574.

Duarte, E. F., Mendoza, Y. L. M., de Queiroz, M. J. N., de Oliveira Gaia Pimenta, J., da Silva, J. V., and Baranauskas, M. C. C. (2022). Aquarela virtual: Design e desenvolvimento de um sistema socioenativo em contexto de isolamento social. Relatório técnico.

Espinoza Taype, G. E., Dos Reis, J. C., and Calani Baranauskas, M. C. (2023). A socioenactive perspective on emotion contagion and senses: Analyzing the phenomenon via EEG signals. In Proceedings of the XXII Brazilian Symposium on Human Factors in Computing Systems, pages 1–11.

Fellous, J.-M. (2004). From human emotions to robot emotions. Architectures for Modeling Emotion: Cross-Disciplinary Foundations, American Association for Artificial Intelligence, pages 39–46.

Foody, G. M. and Mathur, A. (2004). A relative evaluation of multiclass image classification by support vector machines. IEEE Transactions on Geoscience and Remote Sensing, 42(6):1335–1343.

Gee, N. R., Fine, A. H., and McCardle, P. (2017). How animals help students learn: Research and practice for educators and mental-health professionals. Taylor & Francis.

Gonçalves, D. A., Caceffo, R. E., Valente, J. A., and Baranauskas, M. C. C. (2021). Design of socioenactive systems based on physiological sensors and robot behavior in educational environments. Revista Brasileira de Informática na Educação, 29:1356–1376.

Huang, Q., Wang, C., Ye, Y., Wang, L., and Xie, N. (2023). Recognition of EEG based on improved black widow algorithm optimized SVM. Biomedical Signal Processing and Control, 81:104454.

Ivonin, L., Chang, H.-M., Chen, W., and Rauterberg, M. (2013). Automatic recognition of the unconscious reactions from physiological signals. In Human Factors in Computing and Informatics: First International Conference, SouthCHI 2013, Maribor, Slovenia, July 1-3, 2013. Proceedings, pages 16–35. Springer.

Jianbiao, M., Xinzui, W., Zhaobo, L., Juan, L., Zhongwei, Z., and Hui, F. (2023). EEG signal classification of tinnitus based on SVM and sample entropy. Computer Methods in Biomechanics and Biomedical Engineering, 26(5):580–594.

Jiang, L., Stocco, A., Losey, D. M., Abernethy, J. A., Prat, C. S., and Rao, R. P. (2019). BrainNet: a multi-person brain-to-brain interface for direct collaboration between brains. Scientific Reports, 9(1):6115.

Jiang, T., Sun, Z., Fu, S., and Lv, Y. (2024). Human-AI interaction research agenda: A user-centered perspective. Data and Information Management, page 100078.

Kaipainen, M., Ravaja, N., Tikka, P., Vuori, R., Pugliese, R., Rapino, M., and Takala, T. (2011). Enactive systems and enactive media: embodied human-machine coupling beyond interfaces. Leonardo, 44(5):433–438.

Ke, T., Ge, X., Yin, F., Zhang, L., Zheng, Y., Zhang, C., Li, J., Wang, B., and Wang, W. (2024). A general maximal margin hyper-sphere SVM for multi-class classification. Expert Systems with Applications, 237:121647.

Lai, Z., Liang, G., Zhou, J., Kong, H., and Lu, Y. (2024). A joint learning framework for optimal feature extraction and multi-class SVM. Information Sciences, 671:120656.

Mathur, A. and Foody, G. M. (2008). Multiclass and binary SVM classification: Implications for training and classification users. IEEE Geoscience and Remote Sensing Letters, 5(2):241–245.

Nie, F., Hao, Z., and Wang, R. (2024). Multi-class support vector machine with maximizing minimum margin. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 38, pages 14466–14473.

Pollreisz, D. and TaheriNejad, N. (2017). A simple algorithm for emotion recognition, using physiological signals of a smart watch. In 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), pages 2353–2356. IEEE.

Rodrigues Filho, C. R. and Nogueira, Y. L. B. (2022). Analyzing enactive AI implementations on enactive AI terms.

Saccá, V., Campolo, M., Mirarchi, D., Gambardella, A., Veltri, P., and Morabito, F. C. (2018). On the classification of EEG signal by using an SVM based algorithm. Multidisciplinary Approaches to Neural Computing, pages 271–278.

Sai, C. Y., Mokhtar, N., Arof, H., Cumming, P., and Iwahashi, M. (2017). Automated classification and removal of EEG artifacts with SVM and wavelet-ICA. IEEE Journal of Biomedical and Health Informatics, 22(3):664–670.

Smith, R. and Lane, R. D. (2015). The neural basis of one's own conscious and unconscious emotional states. Neuroscience & Biobehavioral Reviews, 57:1–29.

Stenberg, G. (1992). Personality and the EEG: Arousal and emotional arousability. Personality and Individual Differences, 13(10):1097–1113.

Uçar, M. K., Nour, M., Sindi, H., and Polat, K. (2020). The effect of training and testing process on machine learning in biomedical datasets. Mathematical Problems in Engineering, 2020(1):2836236.

Wang, K.-J., Zheng, C. Y., Shidujaman, M., Wairagkar, M., and von Mohr, M. (2020). Jean Joseph v2.0 (remotion): make remote emotion touchable, seeable and thinkable by direct brain-to-brain telepathy neurohaptic interface empowered by generative adversarial network. In 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), pages 3488–3493. IEEE.
