Optimizing Automotive Inventory Management: Harnessing Drones and AI for Precision Solutions

Qian Zhang (1, a), Dan Johnson (2), Mark Jensen (2), Connor Fitzgerald (2), Daisy Clavijo Ramirez (2) and Mia Y. Wang (2, b)

1 Department of Engineering, College of Charleston, SC, U.S.A.

2 Department of Computer Science, College of Charleston, SC, U.S.A.

Keywords: Inventory Management, Deep Learning, UAV Drones, Computer Vision, Artificial Intelligence.

Abstract: Inventory errors within the automotive manufacturing industry pose significant challenges, incurring substantial financial costs and requiring extensive human labor resources. The inherent inaccuracies associated with traditional inventory management practices further exacerbate the issue. To tackle this complex problem, this paper explores the integration of cutting-edge technologies, including UAV (Unmanned Aerial Vehicle) drones, computer vision, and deep learning models, for monitoring inventory in parking lots adjacent to manufacturing plants and harbors before vehicle shipment. These technologies enable real-time, automated inventory tracking and management, offering a more accurate and efficient solution to the problem. Leveraging drones equipped with high-resolution cameras, the system captures real-time imagery of parked vehicles and their components, while deep learning models facilitate precise inventory analysis. This forward-looking approach not only mitigates the costs associated with inventory errors but also equips manufacturers with the agility to optimize their production processes, ensuring competitiveness within the automotive industry.

1 INTRODUCTION

Inventory errors in vehicle manufacturing pose a significant challenge for the industry, with far-reaching implications. Such errors can be highly costly, affecting financial resources, operational efficiency, and human labor. Mismanagement of critical parts and components often leads to production delays, material wastage, and increased operational costs. Because vehicle assembly lines rely on just-in-time (JIT) production systems, inaccuracies in inventory tracking can disrupt entire supply chains, resulting in costly production stoppages and backlogs (Sharma and Gupta, 2020; Kros et al., 2019). Moreover, manual inventory management, which depends heavily on human labor, is prone to errors such as miscounts and inaccuracies, exacerbating these challenges (Su et al., 2021). These inefficiencies often lead to resource misallocation, such as over-investment in unnecessary parts and delays caused by critical shortages (Khan and Yu, 2022).

a ORCID: https://orcid.org/0000-0003-3166-4291

b ORCID: https://orcid.org/0000-0003-2954-0855

Addressing inventory errors in vehicle manufacturing is not merely a financial concern but a strategic imperative. To mitigate these challenges, manufacturers are increasingly adopting modern technologies such as automation, RFID (Radio-Frequency Identification) systems, and advanced inventory management software (Ota et al., 2019). These solutions aim to enhance precision, streamline operations, and reduce costs associated with downtime, excess inventory, and labor inefficiencies. Accurate inventory management is critical for ensuring seamless vehicle production, sustaining profitability, and meeting customer expectations in a highly competitive automotive market.

To tackle the persistent challenges of inventory errors and enhance inventory management efficiency in the automotive industry, this paper proposes a real-time vehicle inventory system leveraging drones, computer vision, and deep learning. The proposed system focuses on monitoring inventory in parking lots outside manufacturing plants or harbors before vehicles are shipped. Drones equipped with high-resolution cameras are deployed to capture real-time aerial imagery of parked vehicles and their associated components. These images are analyzed using computer vision algorithms, enabling precise recognition and tracking of vehicles and parts within the inventory (Ota et al., 2019). Incorporating deep learning further enhances the system’s capabilities, enabling real-time data analysis and actionable insights. This approach not only improves inventory accuracy but also supports better decision-making and resource allocation, paving the way for a more efficient and cost-effective inventory management process.

Zhang, Q., Johnson, D., Jensen, M., Fitzgerald, C., Ramirez, D. C. and Wang, M. Y.

Optimizing Automotive Inventory Management: Harnessing Drones and AI for Precision Solutions.

DOI: 10.5220/0013284900003890

In Proceedings of the 17th International Conference on Agents and Artificial Intelligence (ICAART 2025) - Volume 3, pages 1140-1145

ISBN: 978-989-758-737-5; ISSN: 2184-433X

Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

The remaining sections of the paper are organized as follows: Section 2 presents an overview of current solutions for vehicle detection employing drone and AI technologies. Section 3 provides a comprehensive description of the proposed system. Section 4 details the experiment’s design, execution, and outcomes. Finally, Section 5 concludes the paper, discussing its limitations and setting the stage for future work.

2 LITERATURE REVIEW

Efficient inventory management is crucial in modern manufacturing, particularly in the automotive industry, where just-in-time (JIT) production systems demand precise control of parts and components. Inventory errors can result in significant disruptions, such as production delays, resource misallocation, and increased operational costs (Sharma and Gupta, 2020; Kros et al., 2019). Sharma and Gupta (2020) emphasized the impact of manual tracking inefficiencies in JIT systems, advocating for the adoption of automated systems. Similarly, Kros et al. (2019) conducted an empirical investigation into inventory accuracy in automotive manufacturing, concluding that technological innovations, including automated tools, are necessary to address persistent inaccuracies.

Modern technologies, such as Radio-Frequency Identification (RFID) and AI-based solutions, have emerged as effective strategies for overcoming inventory management challenges. Su et al. (2021) demonstrated the ability of RFID systems to reduce human error rates and provide real-time tracking of inventory, although these systems often require substantial initial investment and infrastructure adaptation. More recently, drones equipped with AI capabilities have gained attention for their potential to transform inventory monitoring. Ota et al. (2019) highlighted the advantages of using drones for automated vehicle detection and monitoring, emphasizing their ability to conduct real-time aerial surveillance and reduce reliance on manual labor.

Figure 1: System Overview. Pipeline stages: Data Acquisition (drone capturing aerial footage) → Preprocessing (video resolution adjustment and image formatting) → Detection (object detection using YOLO-v8-OBB) → Filtering (confidence score-based result refinement) → Output (annotated images/videos and vehicle counts displayed).

In the realm of vehicle detection and tracking, drones have been successfully employed in various applications. Bisio et al. (2021) conducted a comprehensive performance evaluation of leading deep learning (DL)-based object detection techniques, focusing on the RetinaNet framework within the context of the VisDrone benchmark dataset. Their study provided critical insights into parameter optimization and model selection, setting a foundation for intelligent vehicle detection systems in smart cities. Wang et al. (2016) introduced a UAV-based vehicle detection and tracking system designed for traffic data collection. Their system leveraged image registration, feature extraction, and tracking across consecutive UAV frames to dynamically detect and track vehicles with high accuracy. Similarly, Xiang et al. (2018) proposed a novel framework for vehicle counting using UAVs, integrating techniques such as pixel-level foreground detection, image registration, and online-learning tracking to handle both static and dynamic backgrounds. Their results demonstrated over 90% accuracy in vehicle counting for fixed-background videos and 85% accuracy for dynamic ones, showcasing the efficacy of UAV-based solutions in traffic monitoring.

These advancements underscore the potential of drones combined with AI technologies for inventory management and vehicle monitoring. Deep learning models, such as those evaluated by Bisio et al. (2021), have proven highly effective in object detection, while UAV-based systems like those described by Wang et al. (2016) and Xiang et al. (2018) demonstrate versatility in diverse scenarios. The proposed system builds on these developments by integrating drones, computer vision, and deep learning to improve inventory accuracy, resource allocation, and operational efficiency in the automotive industry.


3 METHODOLOGY

3.1 System Overview

The proposed system integrates unmanned aerial vehicles (UAVs), computer vision, and deep learning to provide an automated solution for vehicle inventory management in parking lots. Designed for environments such as manufacturing plants and harbors, the system offers real-time, accurate vehicle counting, minimizing errors and enhancing operational efficiency. An overview of the system is shown in Figure 1.

A DJI Mavic Pro drone, equipped with a high-resolution camera, captures aerial footage of parking lots. Its extended flight range and stability make it well suited to covering large areas efficiently. The captured footage is preprocessed to a standardized 720p resolution to ensure consistent input quality for subsequent analysis.
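The 720p standardization step amounts to a small resize calculation. The sketch below assumes that "720p" means scaling each frame to 720 pixels tall while preserving its aspect ratio and rounding the width to an even number, as many video encoders require; the paper does not specify these details, and the function name `target_720p_size` is hypothetical.

```python
def target_720p_size(width: int, height: int) -> tuple[int, int]:
    """Return (width, height) for a frame scaled to 720 pixels tall.

    Assumptions: the aspect ratio is preserved and the width is rounded
    to the nearest even integer.
    """
    if width <= 0 or height <= 0:
        raise ValueError("frame dimensions must be positive")
    scale = 720 / height
    new_width = round(width * scale / 2) * 2  # nearest even width
    return new_width, 720


# 4K UHD footage (3840x2160) maps to 1280x720.
print(target_720p_size(3840, 2160))  # (1280, 720)
```

The resulting size could then be passed to an OpenCV resize call such as `cv2.resize(frame, (new_width, 720))`, where the size argument is given as (width, height).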

Vehicle detection is performed using the YOLO-v8-OBB (You Only Look Once Version 8 with Oriented Bounding Boxes) deep learning model. This object detection approach is particularly effective for densely packed parking lots, as its oriented bounding boxes align with vehicle orientations, improving detection precision and reducing overlaps and false positives. To further enhance detection reliability, the system applies a confidence threshold of 0.8 (80%), retaining only detections whose confidence exceeds this value.
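The confidence filter can be illustrated with a minimal plain-Python sketch that runs without the trained model. The `Detection` record and the helper names are hypothetical stand-ins for the detector output; a detection is kept only when its confidence is strictly greater than 0.8, matching the "greater than 80%" wording used for the deployed system. With the Supervision library, an equivalent filter is typically written as boolean-mask indexing on a `Detections` object, for example `detections[detections.confidence > 0.8]`.

```python
from dataclasses import dataclass

CONF_THRESHOLD = 0.8  # threshold reported for the deployed system


@dataclass
class Detection:
    """Hypothetical oriented detection: centre, size, angle (radians), score."""
    cx: float
    cy: float
    width: float
    height: float
    angle: float
    confidence: float


def filter_confident(detections, threshold=CONF_THRESHOLD):
    """Return only the detections whose confidence exceeds the threshold."""
    return [d for d in detections if d.confidence > threshold]


def count_vehicles(detections, threshold=CONF_THRESHOLD):
    """Vehicle count for one image or video frame after filtering."""
    return len(filter_confident(detections, threshold))


frame = [
    Detection(120.0, 80.0, 45.0, 18.0, 0.10, 0.95),
    Detection(300.0, 82.0, 44.0, 19.0, 0.12, 0.80),  # not strictly above 0.8
    Detection(520.0, 85.0, 30.0, 12.0, 0.05, 0.41),
]
print(count_vehicles(frame))  # 1
```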

The analysis pipeline is implemented using Python libraries, including OpenCV for image processing, NumPy for efficient numerical computations, and the Supervision library for managing detection results. Users interact with the system through a simple HTML interface built with Flask, where video or image files can be uploaded. The system processes the input and outputs annotated frames with bounding boxes, confidence scores, and vehicle counts in real time.

3.2 Dataset

The dataset used in this study consists of 381 images captured with a DJI Mavic Pro drone, which the researchers flew over various parking lots, primarily at grocery stores and apartment complexes. The drone was flown at altitudes ranging from 25 to 35 meters to provide good coverage and image quality. Each image was manually annotated with oriented bounding boxes around vehicle instances, resulting in a total of 2,843 annotations. The annotation process followed specific visibility criteria: at least 60% of a vehicle had to be visible, with both windshields clearly discernible. Vehicles occluded by trees were excluded from the annotations to prevent the model from misidentifying trees as cars. This ensures that only clearly visible vehicles are labeled, contributing to the model’s accuracy during training.
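The visibility criteria above were applied by hand, but writing them as a single predicate makes the combined rule explicit. This is a hypothetical formalization: the `CandidateVehicle` fields are illustrative and not part of the authors’ tooling, and treating any tree occlusion as disqualifying is one reading of the exclusion rule.

```python
from dataclasses import dataclass


@dataclass
class CandidateVehicle:
    """Hypothetical record describing one vehicle seen in an image."""
    visible_fraction: float    # share of the vehicle that is visible, 0.0-1.0
    windshields_visible: bool  # both windshields clearly discernible
    occluded_by_tree: bool     # any part hidden by tree canopy


def should_annotate(vehicle: CandidateVehicle) -> bool:
    """Return True only if the vehicle meets all three visibility criteria."""
    return (
        vehicle.visible_fraction >= 0.6
        and vehicle.windshields_visible
        and not vehicle.occluded_by_tree
    )
```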

The 381 images are divided into three subsets: 247 for training, 73 for validation, and 61 for testing (roughly a 65/19/16 split). This partitioning allows the model’s performance to be evaluated on unseen data and helps detect overfitting. Before training, the images undergo several preprocessing steps to standardize and enhance their quality. The images are auto-oriented to maintain consistent orientation, then resized to fit within a 640×640 pixel frame, with white borders added as necessary. To further enhance the dataset’s diversity and improve the model’s robustness, data augmentation techniques are applied. These include horizontal flipping, 90° clockwise and counter-clockwise rotations, cropping with a zoom variation between 0% and 10%, saturation adjustments within a range of -21% to +21%, and the introduction of noise in up to 0.14% of the pixels. Each training image is augmented to generate three variations, expanding the training set to 741 images (247 × 3) and increasing the diversity of vehicle appearances and orientations the model is exposed to.
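The "fit within 640×640 with white borders" step is a letterbox resize. The sketch below computes the scale factor and border widths; it assumes the resized image is centered with any odd leftover pixel placed on the right or bottom, which may differ from the behavior of the tool the authors used. The function name `letterbox_geometry` is hypothetical.

```python
def letterbox_geometry(width: int, height: int, target: int = 640) -> dict:
    """Scale and border sizes to fit an image inside a target x target square.

    Assumptions: the aspect ratio is preserved, the resized image is centered,
    and any odd leftover pixel goes to the right or bottom border.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_x, pad_y = target - new_w, target - new_h
    return {
        "scale": scale,
        "resized": (new_w, new_h),
        "left": pad_x // 2,
        "right": pad_x - pad_x // 2,
        "top": pad_y // 2,
        "bottom": pad_y - pad_y // 2,
    }


# A 1280x720 frame becomes 640x360 with 140-pixel white bands above and below.
print(letterbox_geometry(1280, 720))
```

With OpenCV, these values could be applied with `cv2.resize` followed by `cv2.copyMakeBorder(..., cv2.BORDER_CONSTANT, value=(255, 255, 255))`. The oriented-box annotations would need the same scale and offsets applied so that they stay aligned with the image.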

The main challenges during the creation of the dataset were determining an appropriate threshold for vehicle visibility and handling occlusions caused by trees. These issues were addressed through iterative refinement of the annotation guidelines, ensuring that only clear, visible vehicles were included in the dataset. The diversity of parking lots, varying lighting conditions, and different vehicle orientations create a challenging and realistic environment for vehicle detection. These characteristics, along with the preprocessing steps, are intended to help the model generalize to new and unseen parking lot scenarios.

3.3 Neural Network Model

The system uses the YOLO-v8-OBB (You Only Look Once Version 8 with Oriented Bounding Boxes) model, which is well suited to this task because it can detect vehicles with high precision. Unlike detectors that use axis-aligned bounding boxes, YOLO-v8-OBB employs oriented bounding boxes that align with the orientation of each vehicle. This alignment allows the bounding boxes to enclose the vehicles more tightly, reducing the number of overlapping boxes and minimizing false detections. Furthermore, oriented bounding boxes help reduce noise during training by excluding background elements and irrelevant objects, which leads to more precise training and improved accuracy during inference. Tight, oriented boxes are especially valuable in densely packed parking lots, where vehicles are often parked close together: because neighboring boxes overlap less, the model can more reliably distinguish between adjacent vehicles, increasing detection accuracy in these challenging environments.

Figure 2: The Final Precision-Recall Curve for the YOLO-v8 OBB Model.
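The tightness argument in this subsection can be made concrete with a little geometry. The sketch below assumes a box parameterized by center, width, height, and rotation angle, and compares the area of an oriented box around a car parked at 45° with the smallest axis-aligned box covering the same car; the car dimensions are illustrative, not measured values. In this case the axis-aligned box is about 2.45 times larger, and the extra area is filled with background or neighboring vehicles.

```python
import math


def obb_corners(cx, cy, width, height, angle):
    """Corners of an oriented box; `angle` is in radians."""
    c, s = math.cos(angle), math.sin(angle)
    half_w, half_h = width / 2, height / 2
    offsets = [(-half_w, -half_h), (half_w, -half_h), (half_w, half_h), (-half_w, half_h)]
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]


def axis_aligned_area(corners):
    """Area of the smallest axis-aligned box containing all corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Illustrative 4.5 m x 1.8 m car parked at 45 degrees.
corners = obb_corners(0.0, 0.0, 4.5, 1.8, math.radians(45))
obb_area = 4.5 * 1.8
aabb_area = axis_aligned_area(corners)
print(f"oriented: {obb_area:.2f} m^2, axis-aligned: {aabb_area:.2f} m^2, "
      f"ratio: {aabb_area / obb_area:.2f}")
```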

In addition to the benefits provided by oriented bounding boxes, the YOLO-v8 architecture was selected for its efficiency and accuracy in vehicle detection. The YOLO-v8-OBB model processes video frames in real time, detecting vehicles frame by frame. The Supervision library is used to output bounding boxes around detected vehicles with a confidence level greater than 80%. For each frame, the system displays a current count of detected vehicles with a confidence level above 80%. For an image input, the model outputs a total count of detected vehicles. The system is deployed as a local web application that allows users to upload either video or image files for analysis. By combining these technologies and libraries, the proposed system provides an efficient solution for real-time inventory management, addressing the challenges of inventory errors in the automotive industry.

4 RESULTS AND DISCUSSION

4.1 Results

To evaluate the performance of the YOLO-v8-OBB model, a dataset of aerial drone footage was collected (see Section 3), and various annotation policies were tested. The model was trained on the annotated data, and training metrics were recorded to monitor its performance. After training, the model was evaluated on previously unseen footage to provide a qualitative, functional assessment.

Figure 3: The Confusion Matrix for the final YOLO-v8 OBB model.

The performance of the model was assessed using Average Precision (AP), a metric that combines precision and recall. AP is computed during the evaluation phase and reflects the accuracy of the model in detecting a single class: passenger vehicles. This metric is closely related to Mean Average Precision (mAP), which averages AP over all classes when a model is trained on multiple classes; because only a single class is considered here, mAP and AP coincide. AP is derived from the precision and recall values and corresponds to the Area Under the Precision-Recall Curve (AUC), shown in Figure 2. During the validation phase, the model achieved an AP of 0.988 (98.8%), indicating high detection accuracy.
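To make the link between AP and the precision-recall curve concrete, the sketch below computes all-point interpolated AP for a single class from ranked detections: precision is made non-increasing from right to left, then summed over each step in recall. This is one standard AP definition; the training framework may use a different interpolation (for example a fixed set of recall points), so the function, its name, and the toy inputs are illustrative assumptions rather than the authors’ evaluation code. With one class, the same number is the mAP.

```python
def average_precision(scores, is_true_positive, num_ground_truth):
    """All-point interpolated AP for a single class.

    scores: confidence of each detection.
    is_true_positive: whether each detection was matched to a ground-truth
        box (IoU above the chosen threshold, each box matched at most once).
    num_ground_truth: number of annotated vehicles.
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for i in order:  # walk detections from most to least confident
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Precision envelope: each point takes the best precision at equal or higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Area under the stepwise curve: add precision times each recall increment.
    ap, previous_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - previous_recall) * p
        previous_recall = r
    return ap


# Toy example: four detections, three correct, four annotated vehicles.
print(average_precision([0.9, 0.8, 0.7, 0.6], [True, True, False, True], 4))  # 0.6875
```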

Intersection over Union (IoU) measures the overlap between predicted and ground-truth bounding boxes as the area of their intersection divided by the area of their union; a prediction is considered a true positive if its IoU with a ground-truth box exceeds a chosen threshold. Precision and recall are then calculated: precision is the ratio of true positives to the total number of predictions, and recall is the ratio of true positives to the total number of actual vehicles in the dataset. The confusion matrix, shown in Figure 3, provides a more detailed breakdown of the model’s classification performance, showing the true positive, false positive, and false negative counts.
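For oriented boxes, IoU requires the overlap area of two rotated rectangles. A minimal sketch, assuming boxes given as (center x, center y, width, height, angle in radians): it clips one rectangle against the other with the Sutherland-Hodgman algorithm (valid here because both rectangles are convex), measures areas with the shoelace formula, and computes precision and recall from true positive, false positive, and false negative counts. The helper names are hypothetical, and this is not the evaluation code used by the training framework.

```python
import math


def obb_corners(box):
    """Corners of an oriented box (cx, cy, w, h, angle in radians)."""
    cx, cy, w, h, angle = box
    c, s = math.cos(angle), math.sin(angle)
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]


def signed_area(poly):
    """Shoelace formula; positive for counter-clockwise vertex order."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0


def clip(subject, clipper):
    """Sutherland-Hodgman: the part of `subject` inside convex, CCW `clipper`."""
    def inside(p, a, b):
        return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

    def intersect(p, q, a, b):
        # Point where segment p-q crosses the line through a and b.
        den = (p[0] - q[0]) * (a[1] - b[1]) - (p[1] - q[1]) * (a[0] - b[0])
        t = ((p[0] - a[0]) * (a[1] - b[1]) - (p[1] - a[1]) * (a[0] - b[0])) / den
        return (p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1]))

    output = list(subject)
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        if not output:
            break
        current, output = output, []
        prev = current[-1]
        for point in current:
            if inside(point, a, b):
                if not inside(prev, a, b):
                    output.append(intersect(prev, point, a, b))
                output.append(point)
            elif inside(prev, a, b):
                output.append(intersect(prev, point, a, b))
            prev = point
    return output


def rotated_iou(box_a, box_b):
    """IoU of two oriented boxes: intersection area / union area."""
    poly_a, poly_b = obb_corners(box_a), obb_corners(box_b)
    # Ensure counter-clockwise order, which `clip` relies on.
    if signed_area(poly_a) < 0:
        poly_a.reverse()
    if signed_area(poly_b) < 0:
        poly_b.reverse()
    overlap = clip(poly_a, poly_b)
    inter = abs(signed_area(overlap)) if len(overlap) >= 3 else 0.0
    union = abs(signed_area(poly_a)) + abs(signed_area(poly_b)) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

For example, two 2×2 boxes offset by one unit overlap in a 1×2 strip, giving an IoU of 2 / (4 + 4 - 2) = 1/3.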

4.2 Discussion

During the deployment of the YOLO-v8-OBB model,

several challenges were encountered that impacted its

performance. One significant issue was occlusion,

where vehicles were partially obstructed by objects

such as trees or equipment. While the model demon-

strated the ability to detect some occluded vehicles,

Optimizing Automotive Inventory Management: Harnessing Drones and AI for Precision Solutions

1143

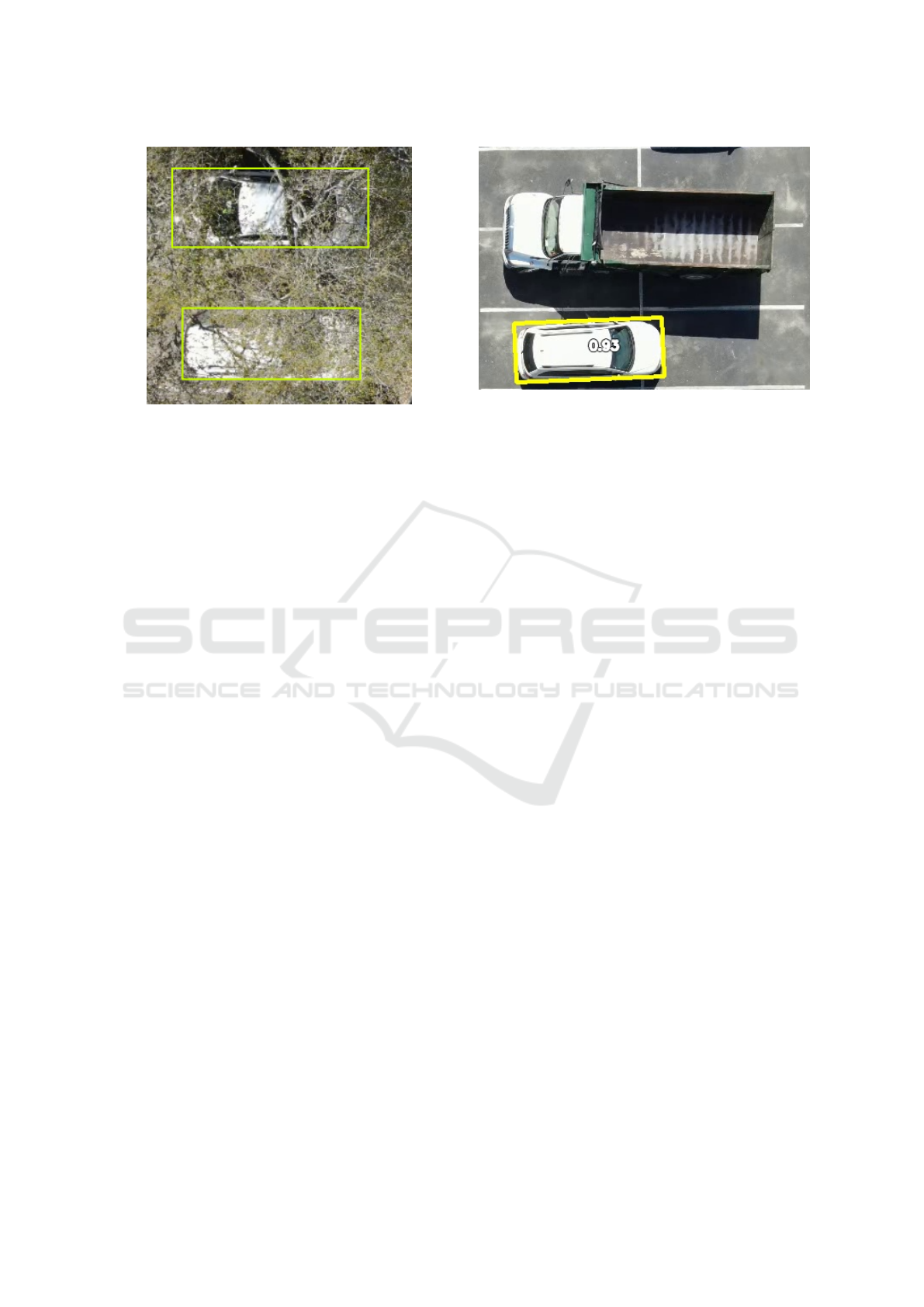

Figure 4: Occluded vehicles captured by YOLO v8 OBB

model.

occasionally outperforming human observers (Figure

4), it also produced misclassifications in certain sce-

narios. These results indicate that further refinement

of the annotation policies, particularly for partially

occluded vehicles, could improve the model’s robust-

ness and accuracy in such cases.

Another limitation observed was the edge-of-view problem. Vehicles entering the frame from the periphery were sometimes misclassified as smaller objects, revealing a weakness in the model when handling peripheral areas of the image. This issue could potentially be addressed by incorporating a more diverse set of footage from multiple angles, although this would require additional data collection, which was outside the scope of the current study.

Moreover, the model’s performance was found to be sensitive to the altitude of the drone. Variations in drone height led to decreased confidence in predictions, suggesting that the model could benefit from either maintaining a more consistent altitude during data collection or using multi-perspective data to mitigate the effects of height variation. Future research could explore strategies such as incorporating footage from different vantage points or adjusting for varying altitudes to enhance detection accuracy under diverse conditions.
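One plausible contributor to this altitude sensitivity is simple pinhole-camera geometry: for a camera pointing straight down, an object’s length in pixels is inversely proportional to altitude. The sketch below assumes a nadir view and a hypothetical focal length of 1,000 pixels (not the drone’s calibrated value); across the 25 to 35 meter range used for data collection, an illustrative 4.5 m car shrinks from 180 px to about 129 px, a scale change of 1.4 that the detector must tolerate.

```python
def apparent_length_px(length_m: float, altitude_m: float, focal_px: float) -> float:
    """Pinhole estimate of an object's image length for a straight-down camera."""
    if altitude_m <= 0:
        raise ValueError("altitude must be positive")
    return length_m * focal_px / altitude_m


FOCAL_PX = 1000.0   # hypothetical focal length in pixels, for illustration only
CAR_LENGTH_M = 4.5  # illustrative passenger-car length

for altitude in (25.0, 30.0, 35.0):
    pixels = apparent_length_px(CAR_LENGTH_M, altitude, FOCAL_PX)
    print(f"{altitude:.0f} m: {pixels:.1f} px")
```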

Despite these challenges, the system demonstrated the capability to process video streams and make accurate real-time predictions, supporting the feasibility of using the YOLO-v8-OBB model for vehicle detection in practical applications. The model effectively distinguished between passenger vehicles and other vehicle types, as illustrated in Figure 5, further reinforcing its potential for real-world deployment in automotive inventory management.

Figure 5: A classification of a passenger vehicle next to a commercial truck.

5 CONCLUSION

This paper presented an innovative approach to automotive inventory management using UAV drones, computer vision, and deep learning models, specifically the YOLO-v8-OBB model. The integration of these technologies is designed to improve on traditional inventory methods, addressing issues of inaccuracy while enhancing operational efficiency and reducing costs. The YOLO-v8-OBB model demonstrated high accuracy in vehicle detection, achieving an average precision (AP) of 98.8% during validation. Its real-time detection capability and effectiveness in complex parking environments make it a promising solution for automating inventory management in the automotive industry.

However, several challenges were identified during the implementation of the system. Issues such as occlusions, where vehicles were partially obstructed by other objects, and the edge-of-view problem, where vehicles entering from the periphery were difficult to classify, posed limitations. These challenges impacted the model’s accuracy and highlighted areas for further improvement. Additionally, maintaining a consistent drone altitude was critical for optimal prediction confidence. Future work will focus on addressing these challenges by refining annotation policies, improving data processing methods, and exploring the use of alternative data sources or perspectives. Further research into edge computing solutions and the integration of more sophisticated models could also enhance the system’s real-time performance and scalability.


REFERENCES

Bisio, I., Haleem, H., Garibotto, C., Lavagetto, F., and Sciarrone, A. (2021). Performance evaluation and analysis of drone-based vehicle detection techniques from deep learning perspective. IEEE Internet of Things Journal, 9(13):10920–10935.

Khan, M. and Yu, S. (2022). Inventory management challenges in the automotive sector. Journal of Supply Chain Management, 58(1):90–102.

Kros, J. F. et al. (2019). Inventory accuracy: An empirical investigation in automotive manufacturing. Production and Operations Management, 28(7):1736–1752.

Ota, J. et al. (2019). Drones and AI for automated vehicle detection and monitoring. Robotics and Autonomous Systems, 118:89–100.

Sharma, S. and Gupta, R. (2020). Impact of inventory errors in just-in-time manufacturing. International Journal of Production Research, 58(10):3115–3132.

Su, Q. et al. (2021). Reducing manual inventory errors using RFID technology. IEEE Transactions on Industrial Informatics, 17(4):2468–2477.

Wang, L., Chen, F., and Yin, H. (2016). Detecting and tracking vehicles in traffic by unmanned aerial vehicles. Automation in Construction, 72:294–308.

Xiang, X., Zhai, M., Lv, N., and El Saddik, A. (2018). Vehicle counting based on vehicle detection and tracking from aerial videos. Sensors, 18(8):2560.
