QR Code Detection with Perspective Correction and Decoding in Real-World Conditions Using Deep Learning and Enhanced Image Processing

David Joshua Corpuz¹, Lance Victor Del Rosario¹, Jonathan Paul Cempron¹, Paulo Luis Lozano² and Joel Ilao¹

¹ College of Computer Studies, De La Salle University, Manila, Philippines

² DLSU Innovation and Technology Office, De La Salle University, Manila, Philippines

{david joshua corpuz, lv delrosario, jonathan.cempron, paulo.lozano, joel.ilao}@dlsu.edu.ph

Keywords:

Decoding, Edge Detection, Perspective Correction, QR Code, YOLO.

Abstract:

QR codes have become a vital tool across various industries, facilitating data storage and accessibility in

compact, scannable formats. However, real-world environmental challenges, including lighting variability,

perspective distortions, and physical obstructions, often impair traditional QR code readers such as ZBar and

the one included in OpenCV, which require precise alignment and full code visibility. This study presents

an adaptable QR code detection and decoding system, leveraging the YOLO deep learning model combined

with advanced image processing techniques, to overcome these limitations. By incorporating edge detection,

perspective transformation, and adaptive decoding, the proposed method achieves robust QR code detection

and decoding across a range of challenging scenarios, including tilted angles, partial obstructions, and low

lighting. Evaluation results demonstrate significant improvements over traditional readers, with enhanced ac-

curacy and reliability in identifying and decoding QR codes under complex conditions. These findings support

the system’s application potential in sectors with high demands for dependable QR code decoding, such as lo-

gistics and automated inventory tracking. Future work will focus on optimizing processing speed, extending

multi-code detection capabilities, and refining the method’s performance across diverse environmental con-

texts.

1 INTRODUCTION

QR codes, or Quick Response codes, have become

an indispensable tool in modern data storage and ac-

cessibility, offering a convenient way to encode in-

formation in a compact, scannable format. Indus-

tries ranging from retail and logistics to healthcare

and event management have adopted QR codes due

to their speed and reliability. However, QR code

usage in uncontrolled environments often encoun-

ters real-world challenges such as variations in light-

ing (Li et al., 2022), perspective distortions (Kar-

rach et al., 2020), and physical obstructions (Liu

and Xu, 2020). Traditional QR code readers like

OpenCV (bin Mahmod et al., 2023) and ZBar (Fer-

ano et al., 2022), which rely on pattern matching and

orientation-specific scanning, are often ineffective in

these scenarios, as they require precise alignment and

full pattern visibility. Therefore, enhancing the ac-

curacy and robustness of QR code detection and de-

coding has become essential to expanding its practical

applications.

This study seeks to develop an adaptable and ac-

curate QR code detection and decoding system capa-

ble of overcoming the limitations of traditional meth-

ods. By leveraging deep learning, specifically the

YOLO object detection model, combined with ad-

vanced image processing techniques, the study aims

to create a solution that excels in challenging condi-

tions. The objective is to demonstrate the effective-

ness of this system in achieving reliable detection and

decoding in complex environments, including scenarios

where QR codes are tilted, far from the camera, or

subjected to poor lighting.

The study contributes to the field of computer vi-

sion by presenting a novel, multistep pipeline that

combines object detection with image processing to

enhance QR code decoding accuracy. The primary

contributions are: (1) the integration of YOLO for robust QR code localization across various orientations and distortions; (2) the application of edge detection and perspective transformation for distortion correction; and (3) a comparative evaluation with existing QR code readers to highlight performance gains in accuracy and reliability. These contributions demonstrate the potential for improved QR code reading capabilities in fields where QR codes are widely used, such as supply chain management and automated inventory tracking.

Corpuz, D. J., Rosario, L. V., Cempron, J. P., Lozano, P. L. and Ilao, J. QR Code Detection with Perspective Correction and Decoding in Real-World Conditions Using Deep Learning and Enhanced Image Processing. In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 3: VISAPP, pages 685-690. DOI: 10.5220/0013287200003912. ISBN: 978-989-758-728-3; ISSN: 2184-4321. Paper published under CC license (CC BY-NC-ND 4.0). Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

2 LITERATURE REVIEW

2.1 QR Code Detection Techniques

Traditional QR code readers, such as OpenCV (bin

Mahmod et al., 2023) and ZBar (Ferano et al., 2022),

often exhibit limitations when faced with real-world

complexities, including varying lighting conditions,

perspective distortions and occlusions. These meth-

ods typically assume a good perspective on the code

being scanned and rely on pattern recognition, which

can result in decoding failures under suboptimal con-

ditions (Barzazzi, 2023). Recent studies have in-

vestigated alternative approaches, such as histogram

equalization (Su et al., 2023) and Generative Adver-

sarial Network (GAN)-based image refinement (Dong

et al., 2024; Zheng et al., 2023; Uehira and Unno,

2023), to enhance image quality and improve read-

ability. While these methods address specific chal-

lenges, they often do not sufficiently accommodate

situations involving significant angular distortions or

partial obstructions.

2.2 Object Detection in Computer

Vision

The You Only Look Once (YOLO) family of mod-

els has emerged as a widely adopted framework for

object detection due to its ability to balance speed

and accuracy (Kaur and Singh, 2023; Redmon et al.,

2016). YOLO’s application in object detection tasks

has demonstrated effective handling of various visual

conditions (Wang et al., 2023), making it a suitable

candidate for detecting QR codes in complex settings.

By integrating YOLO into the QR code detection pro-

cess, this study aims to enhance detection reliabil-

ity even in scenarios where traditional detection al-

gorithms struggle due to partial occlusions or non-

standard viewing angles.

2.3 Image Processing for Enhanced

Decoding

Advanced image processing techniques, such as edge

detection (Su et al., 2021; Orujov et al., 2020) and

perspective transformation (Hou et al., 2020; Muthalagu

et al., 2020), are increasingly applied to address

image distortions and improve decoding accuracy.

Edge detection facilitates the identification of key cor-

ner points (Su et al., 2021), essential for aligning

and correcting the QR code image through perspec-

tive transformation (Muthalagu et al., 2020). Stud-

ies indicate that combining multiple image process-

ing steps, including grayscale conversion (Selva Mary

and Manoj Kumar, 2020) and adaptive thresholding

(Liao et al., 2020; Xing et al., 2021; Guo et al.,

2022), can significantly improve the decoding success

rate, especially under challenging lighting conditions.

The present study leverages these methods to create a

multi-stage pipeline, ensuring robustness in QR code

decoding.

3 METHODOLOGY

3.1 Data Collection and Preprocessing

A comprehensive dataset was constructed to repre-

sent real-world challenges in QR code detection. It

included 1,338 images with QR codes presented un-

der various orientations, distances, and lighting con-

ditions. The dataset was downloaded (N.D, 2022)

and manually annotated with bounding boxes around

each QR code, facilitating accurate model training

and evaluation. To ensure consistency, the dataset was

divided into training (87%), validation (8%), and test-

ing (5%) sets and was managed within the Roboflow

platform. This setup enabled controlled annotation

and versioning, supporting reproducible results across

model iterations.
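As a rough illustration of the split described above, the following sketch divides 1,338 items into training, validation, and testing subsets using the stated 87/8/5 proportions. The file names, the fixed seed, and the `split_dataset` helper are hypothetical; the paper's actual split was produced within Roboflow, and its exact per-set counts are not reported.

```python
import random


def split_dataset(items, train_frac=0.87, val_frac=0.08, seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists.

    The test set receives whatever remains after the train and validation
    shares are taken (about 5% with the default fractions).
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the 1,338 annotated images.
image_names = [f"qr_{i:04d}.jpg" for i in range(1338)]
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))
```

Fixing the seed keeps the partition stable across runs, which mirrors the reproducibility goal mentioned in the text.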

3.2 YOLO Detection Model

YOLOv5-640 was chosen for its advanced object

detection capabilities, which are well-suited for de-

tecting objects in diverse environments. Training

was conducted on the annotated dataset through

Roboflow’s platform, resulting in high-performance

metrics: mean Average Precision (mAP) of 99.1%,

precision of 99.3%, and recall of 99.1%. Post-

training, the YOLO model was employed to identify

and crop QR code regions from images, as shown in

Fig. 1, serving as the foundation for further image

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications


processing steps. The cloud-based inference provided

by Roboflow facilitated scalable and efficient testing,

especially in varied lighting and distortion scenarios.

In addition, the system is executed locally to compare

the results with the cloud-based system.

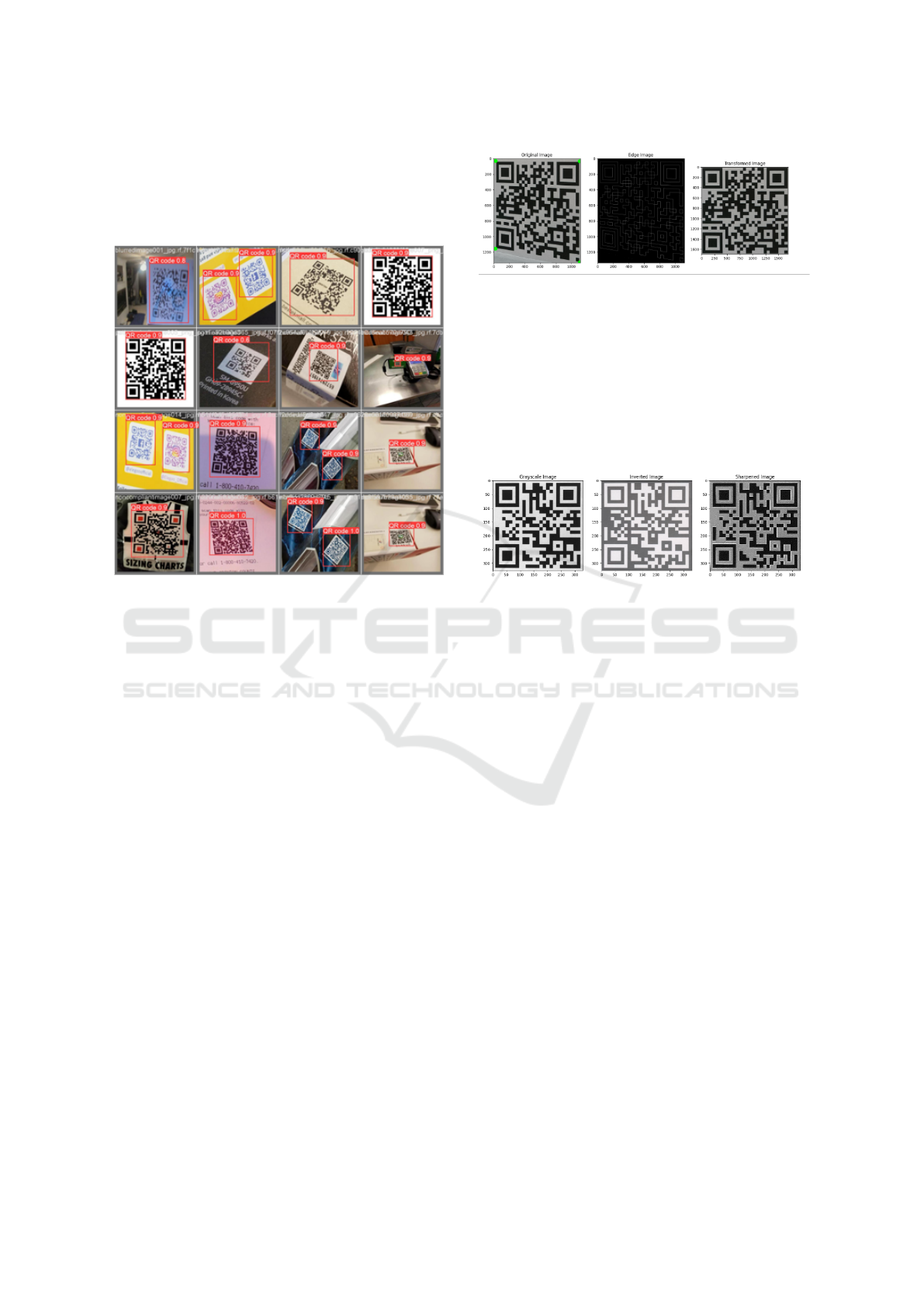

Figure 1: QR code detection with the YOLO model.
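The cropping step can be sketched as follows. This is a minimal example that assumes the detector reports each box as a center point plus width and height in pixels, which is a common YOLO-style convention but is not confirmed by the paper. The `crop_detection` helper and its `pad` parameter are hypothetical names introduced here for illustration.

```python
import numpy as np


def crop_detection(image, cx, cy, w, h, pad=0):
    """Crop a center-format box (cx, cy, w, h) from an image array.

    `pad` widens the box by that many pixels on every side, and the
    result is clamped to the image bounds.
    """
    img_h, img_w = image.shape[:2]
    x1 = max(int(round(cx - w / 2)) - pad, 0)
    y1 = max(int(round(cy - h / 2)) - pad, 0)
    x2 = min(int(round(cx + w / 2)) + pad, img_w)
    y2 = min(int(round(cy + h / 2)) + pad, img_h)
    return image[y1:y2, x1:x2]


# Dummy 640x480 frame and an assumed detection in its center.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
crop = crop_detection(frame, cx=320, cy=240, w=100, h=80, pad=10)
print(crop.shape)
```

Clamping matters for codes near the image border, where a padded box would otherwise produce negative indices.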

3.3 Edge Detection and Perspective

Correction

After detecting the QR code region, edge detection

was applied using the Canny edge detector with optimized threshold values to maximize accuracy in corner identification. The threshold values were chosen through hyperparameter tuning: different thresholds were tested on a chosen subset of the dataset, and the values that yielded the most detected QR codes were selected. Sample images are shown in Fig. 2. Detecting the four corner points allowed for perspective

transformation, a technique that corrects image distor-

tions and aligns the QR code for accurate decoding.

OpenCV’s perspective transformation tool was used

to achieve alignment, ensuring the QR code’s orienta-

tion was corrected for optimal readability. To account

for minor inaccuracies in corner detection, an addi-

tional padding variable was introduced, which pro-

vided robustness by accommodating slight deviations

that could impact decoding accuracy.

3.4 Decoding

Following perspective correction, the decoding phase

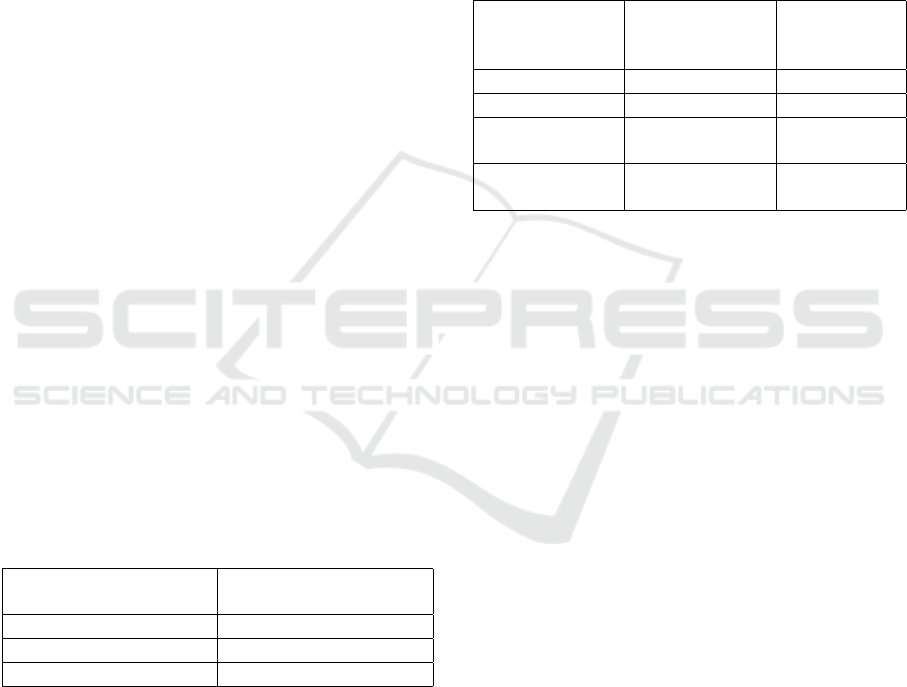

applied various scaling factors and image process-

ing techniques, including grayscale conversion, color

inversion, and sharpening filters. These processing

Figure 2: Perspective transformation of QR code after Edge

Detection.

techniques are shown in Fig. 3. Then each image

version processed was analyzed using OpenCV’s QR

decoding library, maximizing the probability of suc-

cessful decoding under a variety of conditions. This

multiscale approach allowed the solution to handle

different levels of image quality and complexity, en-

hancing robustness against challenges like low con-

trast and partial obstructions.

Figure 3: Applied image processing techniques on a QR

code.

3.5 Evaluation

The performance of the proposed approach was as-

sessed through several key evaluation metrics.

• Detection Accuracy - Assessed by comparing

the YOLO-detected QR code regions with ground

truth annotations, measuring the system’s ability

to accurately identify QR codes under varied con-

ditions.

• Decoding Success Rate - The proportion of QR

codes successfully decoded after detection, re-

flecting the solution’s robustness across different

environmental conditions.

• Distance Tolerance - Determined by testing the

maximum distance at which accurate QR code de-

tection and decoding could be achieved, providing

insight into the effective range of the solution.

• Angle Tolerance - Evaluated by positioning QR

codes at increasing angles until decoding failure,

measuring the maximum angular distortion the

system could accommodate.

• Mean Read Time - Average processing time from

detection to decoding, providing insight into the

trade-off between processing speed and accuracy.

Although the proposed method prioritizes accu-

racy, the mean read time metric offers a compar-


ison against traditional readers like OpenCV and

ZBar.
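The metrics listed above can be computed along the following lines. The paper does not state how detected regions were compared with ground truth, so the intersection-over-union (IoU) measure used here is an assumption, as are the function names and the sample values.

```python
from statistics import mean


def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union else 0.0


def decoding_success_rate(decoded, expected):
    """Fraction of codes whose decoded payload matches the expected payload."""
    hits = sum(1 for d, e in zip(decoded, expected) if d == e)
    return hits / len(expected)


def mean_read_time_ms(times_s):
    """Average of per-image read times given in seconds, returned in milliseconds."""
    return 1000 * mean(times_s)


# Illustrative values only; these are not measurements from the paper.
overlap = iou((0, 0, 10, 10), (5, 0, 15, 10))
rate = decoding_success_rate(["a", None, "c"], ["a", "b", "c"])
avg_ms = mean_read_time_ms([0.030, 0.050])
```

Distance and angle tolerance are measured experimentally rather than computed, so they are not covered by this sketch.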

4 RESULTS AND DISCUSSION

4.1 Accuracy and Robustness

The proposed solution was evaluated against other

QR code readers, specifically ZBar and the one included in

OpenCV, on a dataset encompassing various

conditions. Results indicate that the solution

demonstrates significantly higher decoding accuracy

and robustness, consistently outperforming the base-

line methods in identifying and decoding QR codes

under non-ideal conditions. This robustness is evident

across a range of angles and distances, showcasing

the adaptability of the proposed approach.

4.2 Distance and Angle Tolerance

Testing

Extensive tests were conducted to determine the max-

imum readable distance and angle tolerance as shown

in Fig. 5. The proposed solution maintains decoding

accuracy at distances significantly greater than those

achieved by OpenCV and ZBar as shown in Fig. 4.

Additionally, it maintains decoding functionality at an

angular distortion tolerance of up to 80 degrees, sur-

passing the limits of ZBar (70 degrees) and OpenCV

(52 degrees) as noted in Table 1. These findings con-

firm the method’s effectiveness for applications where

QR codes are often viewed from challenging angles or

distances.

Table 1: QR Code Decoding Maximum Angle of Distortion Comparison.

| QR Reader | Maximum Angle of Distortion |
|---|---|
| OpenCV | 52 degrees |
| ZBar | 70 degrees |
| Ours | 80 degrees |
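To give a sense of scale for these angles, the sketch below estimates how much a tilted code is foreshortened. It assumes the angle is measured between the camera axis and the code's surface normal and uses a simple weak-perspective approximation, in which the apparent width along the tilt direction shrinks by the cosine of the angle. Neither assumption is stated in the paper, and `apparent_width_fraction` is a hypothetical helper.

```python
import math


def apparent_width_fraction(angle_deg):
    """Fraction of the true width that remains visible at a given tilt angle."""
    return math.cos(math.radians(angle_deg))


max_angles = {"OpenCV": 52, "ZBar": 70, "Ours": 80}
fractions = {name: round(apparent_width_fraction(a), 3)
             for name, a in max_angles.items()}
print(fractions)
```

Under this approximation, a code at 80 degrees appears at well under a fifth of its true width along the tilt direction, which suggests why rectification before decoding matters at steep angles.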

4.3 Decoding Efficiency and Processing

Speed

While the proposed method demonstrated superior

decoding accuracy, it exhibited a higher mean read

time, as shown in Table 2, averaging 439 millisec-

onds for the cloud-based system and 92 milliseconds

for the local-based system, compared to 36 millisec-

onds for OpenCV and 46 milliseconds for ZBar. This

increased processing time reflects a trade-off designed

to prioritize accuracy, making the method particularly

suited for applications where reliability is more crit-

ical than speed, such as inventory management in

warehouses. When comparing the cloud-based and

local-based implementations of the proposed system,

the local implementation performs better, reducing

the processing time by more than 300 milliseconds.

This behavior is expected for both systems. Although

the proposed approach, when run locally, is slower

than conventional readers, the processing time re-

mains within an acceptable range.

Table 2: QR Code Reader Performance Comparison.

| QR Reader | Total successfully decoded QR codes | Mean Read Time |
|---|---|---|
| OpenCV | 214 | 36 ms |
| ZBar | 352 | 46 ms |
| Ours (cloud-based) | 459 | 439 ms |
| Ours (local-based) | 459 | 92 ms |

4.4 Comparative Performance Analysis

In comparative testing, also shown in Table 2, the so-

lution successfully decoded 459 QR codes, compared

to 352 by ZBar and 214 by OpenCV. This improvement of roughly 30% over ZBar, the stronger baseline, and of more than 100% over OpenCV underscores the

utility of the proposed approach for applications with

complex environmental variables, validating its effec-

tiveness for real-world scenarios where traditional QR

code readers may falter due to environmental incon-

sistencies.
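The relative figures can be checked directly from the values reported in Table 2. The short calculation below uses only those reported numbers; the helper name `relative_gain` is introduced here for illustration.

```python
decoded = {"OpenCV": 214, "ZBar": 352, "Ours": 459}
read_ms = {"OpenCV": 36, "ZBar": 46, "Ours (cloud)": 439, "Ours (local)": 92}


def relative_gain(new, old):
    """Percentage increase of `new` over `old`."""
    return (new - old) / old * 100


gain_vs_zbar = round(relative_gain(decoded["Ours"], decoded["ZBar"]), 1)
gain_vs_opencv = round(relative_gain(decoded["Ours"], decoded["OpenCV"]), 1)
cloud_minus_local_ms = read_ms["Ours (cloud)"] - read_ms["Ours (local)"]
local_slowdown_vs_zbar = read_ms["Ours (local)"] / read_ms["ZBar"]

print(gain_vs_zbar, gain_vs_opencv, cloud_minus_local_ms, local_slowdown_vs_zbar)
```

The gain over ZBar is about 30%, the gain over OpenCV is about 114%, and the local implementation takes twice as long per read as ZBar.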

5 CONCLUSIONS

The study demonstrates that integrating YOLO, edge

detection, and perspective transformation signifi-

cantly enhances QR code detection and decoding in

challenging conditions. The solution’s superior per-

formance over traditional readers confirms its poten-

tial for reliable QR code reading in complex applica-

tions.

The robustness of the proposed approach makes

it particularly suited for industries such as logistics,

warehousing, and automated systems that require dependable QR code decoding. Its ability to handle angular distortions and varying lighting conditions renders it versatile in diverse real-world settings.

Figure 4: Code Decoding Maximum Distance Comparison.

Figure 5: Testing samples: (a) live video, (b) sourced dataset, (c) synthetic.

To enhance the effectiveness and versatility of QR code detection, it is recommended to implement support for detecting multiple QR codes within a single image. This capability would enable the solution to operate efficiently in environments where numerous QR codes are present simultaneously, thereby broadening its range of applications.

Transitioning from the Roboflow API to a locally

trained object detection model is advisable, as this ap-

proach would allow for greater control over the train-

ing process and minimize dependency on external ser-

vices. Such a transition could lead to enhanced effi-

ciency and customization, ensuring that the model is

specifically tailored to particular needs and use cases.

It is also essential to develop a model that ensures

the bounding box precisely encloses each QR code, or

alternatively, to implement a method that determines

optimal padding for each detection. This improve-

ment would increase the accuracy of QR code isola-

tion, thereby minimizing decoding errors and enhanc-

ing reliability.

Optimizing the process of identifying corner co-

ordinates, particularly around edge detection, is also

crucial. Implementing code that adjusts to vari-

ous thresholds for hysteresis in edge detection would

likely improve the accuracy of QR code boundary

identification, especially in complex image contexts.

Further, the integration of advanced image pro-

cessing techniques could substantially improve QR

code decoding. By exploring methods such as adap-

tive thresholding, noise reduction, and contrast en-

hancement, the readability of QR codes under chal-

lenging conditions may be significantly enhanced,

thus increasing the robustness of the solution.

In addition, optimizing the read time of the so-

lution could enhance its practical utility. Techniques


such as parallel processing, algorithmic enhance-

ments, or hardware acceleration may reduce read

times without compromising accuracy. Conducting

tests across a broader variety of QR code types and

environmental conditions would provide valuable in-

sights into performance, ensuring the solution re-

mains reliable and effective across diverse scenarios.
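As one possible form of parallel processing, independent crops could be decoded concurrently, as in the sketch below. The `decode_all` helper and the stand-in decoder are hypothetical, and any speed-up depends on the real decoder releasing Python's global interpreter lock during its native work, which is assumed here rather than measured.

```python
from concurrent.futures import ThreadPoolExecutor


def decode_all(crops, decode_fn, max_workers=4):
    """Apply decode_fn to every crop in parallel, preserving input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(decode_fn, crops))


def fake_decode(crop):
    """Stand-in decoder used only to make this example runnable."""
    return crop.upper()


results = decode_all(["code-a", "code-b", "code-c"], fake_decode)
print(results)
```

The same pattern would apply to trying several image variants of one crop at once, at the cost of doing work that an early success would otherwise skip.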

REFERENCES

Barzazzi, D. (2023). A quantitative evaluation of the qr

code detection and decoding performance in the zxing

library.

bin Mahmod, M. N., binti Ramli, M., and Yasin, S. N. T. M.

(2023). Qr code detection using opencv python with

tello drone.

Dong, H., Liu, H., Li, M., Ren, F., and Xie, F. (2024). An al-

gorithm for the recognition of motion-blurred qr codes

based on generative adversarial networks and atten-

tion mechanisms. International Journal of Computa-

tional Intelligence Systems, 17(1):83.

Ferano, F. C. A., Olajuwon, J., and Kusma, G. (2022).

Qr code detection and rectification using pyzbar and

perspective transformation. 15th November 2022,

100(21):120–127.

Guo, S., Wang, G., Han, L., Song, X., and Yang, W.

(2022). Covid-19 ct image denoising algorithm based

on adaptive threshold and optimized weighted me-

dian filter. Biomedical Signal Processing and Control,

75:103552.

Hou, Y., Zheng, L., and Gould, S. (2020). Multiview de-

tection with feature perspective transformation. In

Computer Vision–ECCV 2020: 16th European Con-

ference, Glasgow, UK, August 23–28, 2020, Proceed-

ings, Part VII 16, pages 1–18. Springer.

Karrach, L., Pivarčiová, E., and Božek, P. (2020). Identification of qr code perspective distortion based on edge directions and edge projections analysis. Journal of imaging, 6(7):67.

Kaur, R. and Singh, S. (2023). A comprehensive review

of object detection with deep learning. Digital Signal

Processing, 132:103812.

Li, J., Zhang, D., Zhou, M., and Cao, Z. (2022). A motion

blur qr code identification algorithm based on feature

extracting and improved adaptive thresholding. Neu-

rocomputing, 493:351–361.

Liao, J., Wang, Y., Zhu, D., Zou, Y., Zhang, S., and Zhou, H.

(2020). Automatic segmentation of crop/background

based on luminance partition correction and adaptive

threshold. IEEE Access, 8:202611–202622.

Liu, W. and Xu, Z. (2020). Some practical constraints

and solutions for optical camera communication.

Philosophical Transactions of the Royal Society A,

378(2169):20190191.

Muthalagu, R., Bolimera, A., and Kalaichelvi, V. (2020).

Lane detection technique based on perspective trans-

formation and histogram analysis for self-driving cars.

Computers & Electrical Engineering, 85:106653.

N.D, D. (2022). Qr-code-detection object detection dataset

and pre-trained model by deep.

Orujov, F., Maskeliūnas, R., Damaševičius, R., and Wei, W. (2020). Fuzzy based image edge detection algorithm for blood vessel detection in retinal images. Applied Soft Computing, 94:106452.

Redmon, J., Divvala, S., Girshick, R., and Farhadi, A.

(2016). You only look once: Unified, real-time ob-

ject detection.

Selva Mary, G. and Manoj Kumar, S. (2020). Secure

grayscale image communication using significant vi-

sual cryptography scheme in real time applications.

Multimedia Tools and Applications, 79(15):10363–

10382.

Su, Q., Qin, Z., Mu, J., and Wu, H. (2023). Rapid detection

of qr code based on histogram equalization-yolov5.

In 2023 7th International Conference on Electrical,

Mechanical and Computer Engineering (ICEMCE),

pages 843–848. IEEE.

Su, Z., Liu, W., Yu, Z., Hu, D., Liao, Q., Tian, Q., Pietikäinen, M., and Liu, L. (2021). Pixel difference networks for efficient edge detection. In Proceedings of the IEEE/CVF international conference on computer vision, pages 5117–5127.

Uehira, K. and Unno, H. (2023). Study on recognition tech-

nology of qr code superimposed on images using gan.

In 2023 7th International Conference on Imaging, Sig-

nal Processing and Communications (ICISPC), pages

6–10. IEEE.

Wang, J., Yang, P., Liu, Y., Shang, D., Hui, X., Song, J., and

Chen, X. (2023). Research on improved yolov5 for

low-light environment object detection. Electronics,

12(14):3089.

Xing, H., Zhu, L., Feng, Y., Wang, W., Hou, D., Meng, F.,

and Ni, Y. (2021). An adaptive change threshold selec-

tion method based on land cover posterior probability

and spatial neighborhood information. IEEE Journal

of Selected Topics in Applied Earth Observations and

Remote Sensing, 14:11608–11621.

Zheng, J., Zhao, R., Lin, Z., Liu, S., Zhu, R., Zhang, Z.,

Fu, Y., and Lu, J. (2023). Ehfp-gan: Edge-enhanced

hierarchical feature pyramid network for damaged qr

code reconstruction. Mathematics, 11(20):4349.
