A Long-Term Study of the Pandemic Impact on Education: A Software Engineering Case

Kattiana Constantino¹ᵃ, Pedro Garcia²ᵇ and Eduardo Figueiredo³ᶜ

¹ Federal University of Jequitinhonha and Mucuri Valleys (UFVJM), Brazil
² Federal University of Ouro Preto (UFOP), Brazil
³ Federal University of Minas Gerais (UFMG), Brazil

Keywords: COVID-19, Education, Software Engineering Course, Undergraduate.

Abstract: The COVID-19 pandemic has had an unprecedented and widespread impact on education, significantly affecting students worldwide. Although many studies have investigated this impact in the last 5 years, we still lack empirical knowledge about the long-lasting impact of this most recent pandemic on the performance of the 2020s generation of students. This quantitative study aims to evaluate the long-term impact of the pandemic on student performance, especially in the Software Engineering course. To achieve this goal, we collected historical data from Software Engineering students over three years, prior to (2019) and after (2022 and 2023) COVID-19, such as their grades on questions repeated throughout the semesters. We employed statistical methods to analyze these quantitative data. As one of our results, we identified that the pandemic negatively influenced student performance in 2022, especially in the first post-pandemic semester. However, we also observed that the impact was mitigated in the following semesters of 2022 and 2023. This result is a breath of hope since it suggests that the current generation of students has overcome the challenges imposed by the pandemic period.

1 INTRODUCTION

Several pandemics have occurred in human history and affected many aspects of human life, such as education and the economy (Piret and Boivin, 2021). However, the impact of the COVID-19 pandemic on education is both unprecedented and widespread in education history, affecting over 1.5 billion students in 195 countries through school and university closures. In fact, Willies (2023) reports that 87% of the world’s student population was somehow affected by COVID-19 school closures. As a result, almost overnight, many schools and education systems began to offer education remotely (Barr et al., 2020) (Ravi et al., 2021) (de Souza et al., 2021). Examples of remote learning solutions include learning platforms, educational applications, and resources to help students and educators.

Previous work (Hebebci et al., 2020) (Mooney and Becker, 2021) has investigated the impact of the COVID-19 pandemic on education. Such studies have examined, for instance, the effects of remote learning on student performance and engagement in several education fields, including Computer Science (CS) or Software Engineering (SE) courses. For instance, de Deus et al. (2020) investigated how Emergency Remote Education (ERE) has been conducted by lecturers in the field of Computer Science in Brazil, in response to the COVID-19 pandemic. In addition, Barr et al. (2020) investigated the impact of the COVID-19 pandemic on their eight-week undergraduate Software Engineering program, particularly during the lockdown period, focusing on the rapid shift to online learning across three distinct modules.

ᵃ https://orcid.org/0000-0003-4511-7504
ᵇ https://orcid.org/0009-0005-7744-697X
ᶜ https://orcid.org/0000-0002-6004-2718

From another perspective, Lin and Hou (2023) explored how students’ educational background and family income influence their experiences with online learning compared to traditional in-person courses. However, to the best of our knowledge, no previous work has quantitatively investigated the long-term impact of this pandemic on student performance.

To fill this gap, this paper presents an empirical study based on historical data to assess the impact of the COVID-19 pandemic on the performance of students, focusing on a Software Engineering course. We analyzed student grades over a five-year span, from 2019 to 2023, i.e., before and after COVID-19. We established a standardized evaluation protocol, ensuring continuity by having the same professor assess all participant responses consistently throughout the entire study duration. As our focus is not on remote teaching, we excluded 2020 and 2021 from our analysis because these two years did not involve in-person teaching. Therefore, this study involved 297 students in the three years (2019, 2022, and 2023) of a Software Engineering course where all students had in-person classes and exams. The course covers many Software Engineering topics, from requirements analysis to software design, implementation, and testing. Student performance was assessed based on the grades obtained in the course’s in-person exams. To make grades comparable across years, we selected 36 exam questions that appeared in more than one semester. With them, we could compare student grades on the same questions in two (or more) semesters.

Constantino, K., Garcia, P. and Figueiredo, E.
A Long-Term Study of the Pandemic Impact on Education: A Software Engineering Case.
DOI: 10.5220/0013295200003932
In Proceedings of the 17th International Conference on Computer Supported Education (CSEDU 2025) - Volume 2, pages 971-982
ISBN: 978-989-758-746-7; ISSN: 2184-5026
Copyright © 2025 by Paper published under CC license (CC BY-NC-ND 4.0)

Based on exam questions that repeated over semesters before (2019-1 and 2019-2) and immediately after (2022-1) the COVID-19 pandemic, we found that the average grades of students were lower in about 61% of the questions after the pandemic than before it. This result suggests that the COVID-19 pandemic may have had a negative impact on the performance of students. Fortunately, we also found a significant improvement in student performance from the first year (2022) to the second year (2023) after the pandemic. Comparing these two years, the grades of students increased in about 86% of the analyzed questions. This result suggests that the pandemic may not have a prolonged impact on students’ performance.

Our primary contributions can be summarized as

follows:

(i) We describe a quantitative study that investigates the impact of the pandemic on student performance. We designed a robust method, with well-defined hypotheses, research questions, and statistical analysis methods;

(ii) This research fills an important gap by analyzing the longitudinal impact of COVID-19 on education, moving beyond immediate effects to explore recovery trends;

(iii) The findings contribute evidence to ongoing debates about the long-term implications of remote learning during the pandemic;

(iv) This study provides valuable insights to educa-

tors, administrators, and policymakers in higher

education.

Our comprehensive replication package is readily accessible online to facilitate future replications and extensions¹.

¹ https://github.com/PedroClair/prePosCovid

The structure of this paper unfolds as follows.

Section 2 presents the context of our case study which

is a Software Engineering course. Section 3 outlines

the setup of our study, including its goal, two research

questions and steps. Furthermore, we analyze and re-

port the results of this study focusing on the two re-

search questions (Section 4). We also revisit the pos-

sible threats to the study validity in Section 5 and re-

lated work in Section 6. Finally, Section 7 concludes

this paper with directions for future work.

2 THE SOFTWARE ENGINEERING COURSE

The Software Engineering Course (SE Course) is

a 60-hour course offered each semester for Bache-

lor’s degrees in Computer Science and Information

Systems. Its primary aim is to provide students

with the essential concepts and techniques for creat-

ing complex software systems (Sommerville, 2015)

(de Almeida Souza et al., 2017). The syllabus encom-

passes many subjects, including software develop-

ment processes, agile methods, software requirements

analysis and specification, software design, software

architecture, implementation, testing, and software

quality. We introduce each topic weekly and sequen-

tially throughout the semester. Each lecture uses con-

textualized problems to help the students understand a

given topic. Students practice their knowledge of the

topics by solving assessment exam questions through-

out the semester (Santos et al., 2015).

We considered six semesters for analysis and discussion: two semesters of the SE Course before the COVID-19 pandemic and four semesters after it. The numbers of enrolled students were 44, 40, 59, 54, 55, and 45 for 2019-1, 2019-2, 2022-1, 2022-2, 2023-1, and 2023-2, respectively. To allow a fair comparison across semesters, the same lecturer taught the same course syllabus using the same textbook (Sommerville, 2015) in all six semesters under study. However, the social isolation imposed due to the COVID-19 pandemic required drastic changes in how we carry out our daily activities, including teaching activities in 2020 and 2021. That is, the wide spread of COVID-19 led educational institutions to invest in online learning in 2020 and 2021. For this reason, in this work, we did not analyze data, such as student grades, from these two years.

CSEDU 2025 - 17th International Conference on Computer Supported Education


3 STUDY SETTINGS

In this section, we delve into our study settings, high-

lighting two main pillars. First, we outline the goal

and research questions. Following them, we provide

a comprehensive guide for data acquisition and anal-

ysis, establishing a robust framework for conducting

this research study.

3.1 Study Goal and Research Questions

The goal of this work is to assess the impact of the

COVID-19 pandemic on the performance of students

in a Software Engineering course. To achieve this

goal, we formulated two Research Questions (RQs)

presented below.

• RQ1. What was the impact of the COVID-19 pandemic on student performance?

• RQ2. How long does the impact of the COVID-19 pandemic on student performance last?

Therefore, for RQ1 and RQ2, our interest is (i) to understand the relationship between the periods before and after the pandemic and (ii) to investigate the impact of COVID-19 on the performance of the students in the two years after the pandemic.

3.2 Hypotheses Formulation

We defined hypotheses for RQ1, which concerns the impact of the COVID-19 pandemic on student performance. To answer RQ1, we compared the performance of students across semesters on the 36 selected exam questions that appeared in more than one semester. Thus, RQ1 was turned into the null and alternative hypotheses as follows.

$H_0$: There is no significant difference in student performance between the semesters before (2019) and after (2022-1) the COVID-19 pandemic.

$H_a$: There is a significant difference in student performance between the semesters before (2019) and after (2022-1) the COVID-19 pandemic.

We also defined hypotheses for RQ2, which concerns the long-term impact of the COVID-19 pandemic on students’ performance in Software Engineering activities. As mentioned, to answer RQ2, we compared the grades of the students for each question across semesters. Thus, the null and alternative hypotheses are as follows.

$H_0$: There is no significant difference in student performance from the first year after COVID-19 (2022) to the second year after COVID-19 (2023).

$H_a$: There is a significant difference in student performance from the first year after COVID-19 (2022) to the second year after COVID-19 (2023).

Let $\mu$ be the average grade (RQ1 and RQ2). Thus, $\mu_1$ and $\mu_2$ denote the average grades of the students in the two groups of semesters being compared. Then, the aforementioned sets of hypotheses can be formally stated as:

$$H_0: \mu_1 = \mu_2$$

$$H_a: \mu_1 \neq \mu_2$$

To test all these hypotheses, we considered a 95% confidence level (significance level $\alpha = 0.05$).

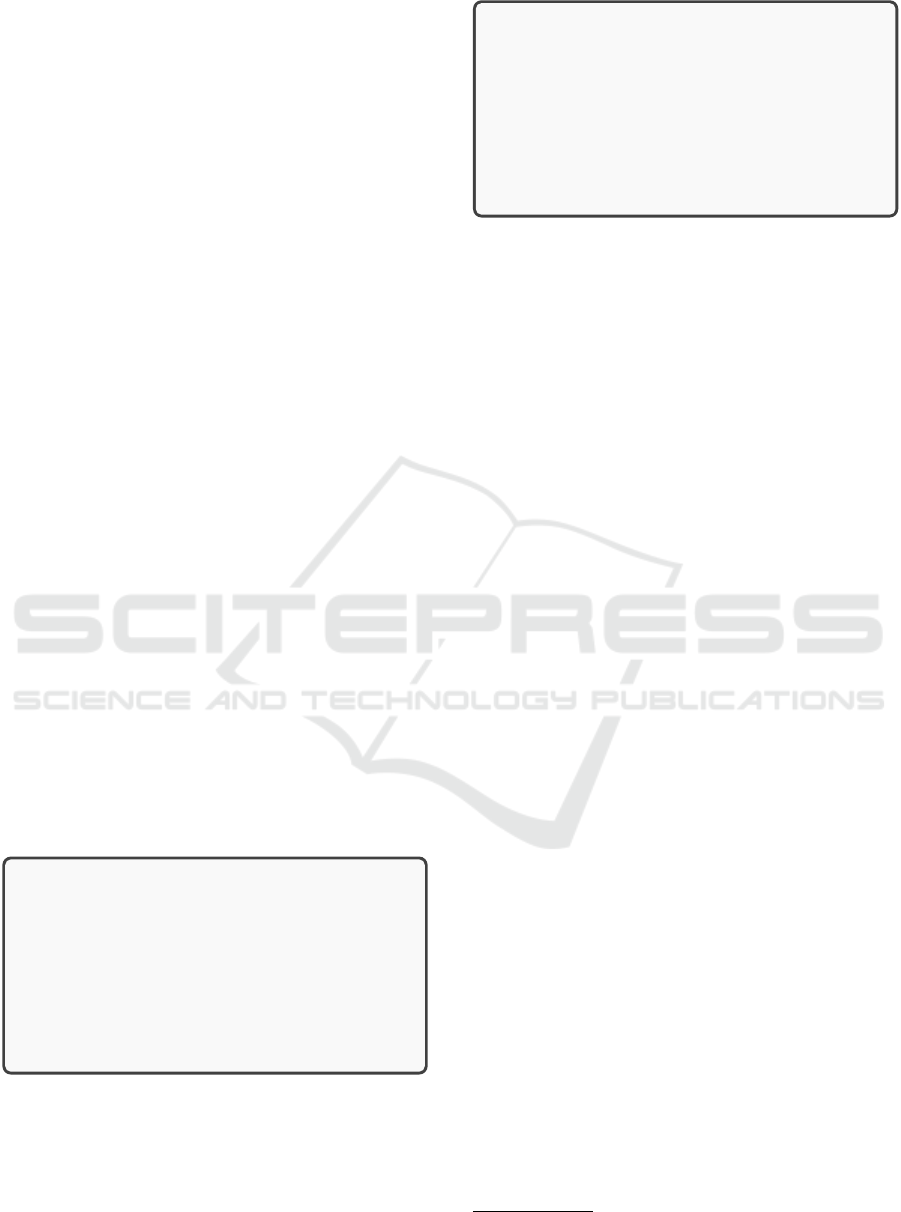

3.3 Study Steps

Planning Case Study. To answer the research ques-

tions, we planned and performed a case study to as-

sess the influence of the COVID-19 pandemic on stu-

dent performance, focusing on the Software Engi-

neering course, as shown in Figure 1.

Selecting Questions. We selected 36 exam questions that appeared in more than one semester during the pre- and post-pandemic periods, throughout the semesters of 2019, 2022, and 2023. This process involved an analysis of the curriculum content. Following this stage, the grades assigned to each question were collected and standardized to the same scale, ensuring a cohesive dataset for analysis. Furthermore, the questions can be closed-ended, which limits responses to the given options, or open-ended², in which the students have full control over what they want to respond. In open-ended questions, students are not restricted to a limited number of options. That is, they can write their answers in more than one word, as a sentence, or as something longer, like a paragraph.
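The standardization step can be illustrated with a minimal Python sketch. It assumes that each question has a known maximum score and that grades are mapped onto a 0 to 1 scale, as the averages reported in Table 2 suggest. The function name `standardize` and the sample scores are hypothetical and are not taken from the replication package.

```python
def standardize(score: float, max_score: float) -> float:
    """Map a raw question score onto a common 0-1 scale (assumed scale)."""
    if max_score <= 0:
        raise ValueError("max_score must be positive")
    if not 0 <= score <= max_score:
        raise ValueError("score must lie between 0 and max_score")
    return score / max_score


# Hypothetical (raw score, maximum score) pairs for three answers.
raw_scores = [(2.0, 4.0), (1.5, 2.0), (0.0, 3.0)]
standardized = [standardize(score, max_score) for score, max_score in raw_scores]
print(standardized)
```

Once every grade is on the same scale, questions worth different numbers of points can be compared directly across semesters.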

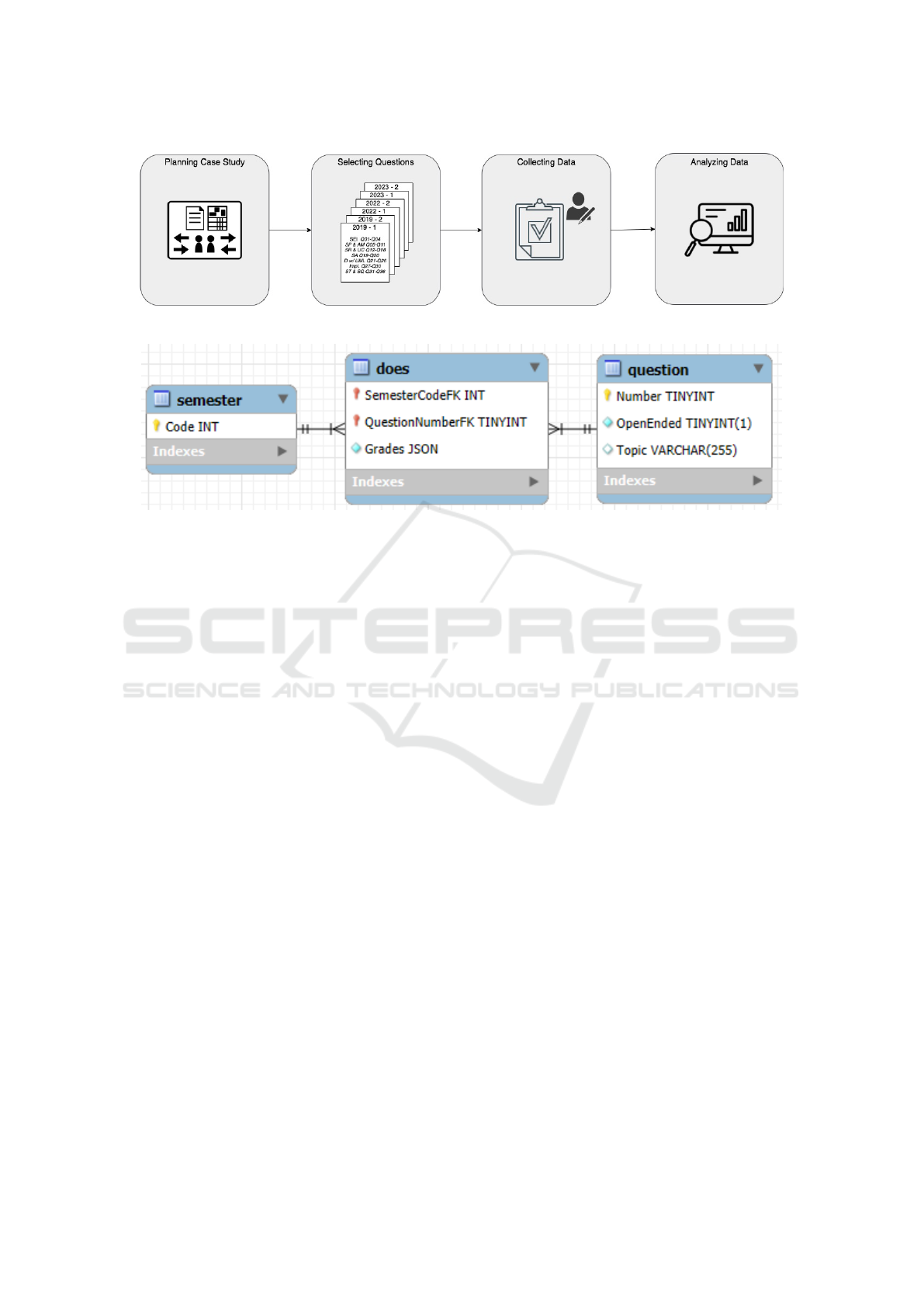

Collecting Data. We collected data from the exams

for the students enrolled in the SE Course. Figure 2

presents the proposed database model, which includes

three entities: Semester, Does, and Question.

The Semester entity represents the academic pe-

riod and is uniquely identified by the attribute Code,

which serves as the primary key. This attribute

ensures that each semester entry is distinct in the

database, supporting tracking and management of

specific academic terms.

² We used “*” in figures and tables to mean that the exam question was an open-ended question.


Figure 1: The phases of the study.

Figure 2: Entity-Relationship data model, generated by reverse engineering in MySQL Workbench.

The Question entity represents individual ques-

tions used within semesters. It includes the attributes

Number, Open-Ended, and Topic. The Number is

the primary key, uniquely identifying each question.

Open-ended is a Boolean attribute indicating whether

the question requires an open-ended response. The

Topic attribute specifies the subject matter or theme

of the question, allowing for categorization and re-

trieval based on topic areas. Together, these attributes

support diverse question types and flexible question

categorization.

The Does entity captures the relationship between semesters and questions and incorporates the attribute Grades, which records the score or grade associated with each semester-question combination. This setup enables tracking of specific grades for each question as it appears in different semesters.
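The three entities described above can be sketched as a small relational schema. The following Python example uses the standard `sqlite3` module rather than MySQL, and the column names and types (`OpenEnded`, `SemesterCode`, `QuestionNumber`) are assumptions made for illustration; the actual schema is the one shown in Figure 2. The two stored values for Q01 are the averages listed in Table 2 and stand in for the underlying grades.

```python
import sqlite3


def build_database() -> sqlite3.Connection:
    """Create an in-memory database with an assumed version of the model."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(
        """
        CREATE TABLE Semester (Code TEXT PRIMARY KEY);
        CREATE TABLE Question (
            Number    INTEGER PRIMARY KEY,
            OpenEnded INTEGER NOT NULL CHECK (OpenEnded IN (0, 1)),
            Topic     TEXT NOT NULL
        );
        -- No primary key here: several grades may exist per semester-question pair.
        CREATE TABLE Does (
            SemesterCode   TEXT    NOT NULL REFERENCES Semester(Code),
            QuestionNumber INTEGER NOT NULL REFERENCES Question(Number),
            Grades         REAL    NOT NULL
        );
        """
    )
    return conn


conn = build_database()
conn.executemany("INSERT INTO Semester VALUES (?)", [("2019-2",), ("2022-1",)])
conn.execute("INSERT INTO Question VALUES (?, ?, ?)", (1, 0, "SE Introduction"))
conn.executemany(
    "INSERT INTO Does VALUES (?, ?, ?)",
    [("2019-2", 1, 0.72), ("2022-1", 1, 0.68)],
)

# Average grade of question 1 in each semester where it appeared.
rows = conn.execute(
    "SELECT SemesterCode, AVG(Grades) FROM Does "
    "WHERE QuestionNumber = 1 GROUP BY SemesterCode ORDER BY SemesterCode"
).fetchall()
print(rows)
```

Keeping grades in the relationship table, rather than in Question or Semester, is what allows the same question to carry different grades in different semesters.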

Table 1 presents the questions for the seven top-

ics presented in the SE Course. To gather quantitative

data on student performance, specific questions about

the topics covered in the SE Course were carefully

selected and incorporated into assessment activities

throughout the semester. These questions were as-

signed predetermined scores, which were made public

in advance to the students (Figure 1).

Statistical Test. We gathered comprehensive quantitative data on the grades of exam questions across different semesters. It is crucial to note that all observations from both groups are independent. We first conducted the Shapiro-Wilk normality test (Shapiro and Wilk, 1965) to verify whether the data follow a normal distribution. When the data are normally distributed, we employ the unpaired Student’s t-test (Student, 1908) to compare the two groups. However, if the data deviate from normality, indicating a non-normal or skewed distribution, we opt for the Mann-Whitney U test (Mann and Whitney, 1947) instead.
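To make the non-parametric branch of this procedure concrete, the following sketch implements a two-sided Mann-Whitney U test in plain Python. It is an illustration under stated assumptions, not the analysis script of the study: it uses the normal approximation with a tie correction and no continuity correction, so its p-values can differ slightly from those of a statistics package, and the two grade samples are hypothetical. The normality check itself is assumed to be done separately with a statistics package.

```python
import math
from collections import Counter
from statistics import NormalDist


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U statistic of x, two-sided p-value via normal approximation)."""
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = average_ranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    tie_term = sum(t**3 - t for t in Counter(combined).values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:  # all observations identical: no evidence of a difference
        return u1, 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return u1, p_value


# Hypothetical standardized grades for two independent groups of answers.
group_before = [0.2, 0.4, 0.5]
group_after = [0.6, 0.7, 0.9]
u_stat, p_value = mann_whitney_u(group_before, group_after)
print(u_stat, p_value)
```

The test works on ranks rather than raw grades, which is why it remains valid when the grade distribution is skewed.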

Analyzing Data. Finally, the grades assigned to

each of these questions were standardized to the

same scale, ensuring a cohesive dataset for analy-

sis. All data were analyzed, interpreted and reported

in the results. In addition, all questions and procedures we followed are available online for future replications/extensions at https://github.com/PedroClair/prePosCovid.

4 RESULTS

This section presents the key findings of our investiga-

tion into the impact of COVID-19 on student perfor-

mance. We address four fundamental aspects. First,

we examine the overall impact of COVID-19 on stu-

dent performance. Second, we analyze how this im-

pact has evolved throughout the pandemic. Third, we

conduct a year-by-year comparison to highlight trends

and identify any variations in performance. Finally,

we investigate the changes in average grades through-

out the study period. These analyses provide a com-

prehensive understanding of the multifaceted impact

of the pandemic on educational outcomes.


Table 1: Topics and their questions.

| Topics | Question IDs |
|---|---|
| SE Introduction | Q01, Q02, Q03*, and Q04 |
| Software Processes and Agile Methods | Q05, Q06*, Q07, Q08, Q09, Q10, and Q11 |
| Software Requirements and Use Cases | Q12, Q13*, Q14*, Q15, Q16*, Q17, and Q18* |
| Software Architecture | Q19 and Q20 |
| Design with UML | Q21, Q22, Q23, Q24*, Q25*, and Q26* |
| Implementation | Q27*, Q28*, Q29*, and Q30* |
| Software Testing and Software Quality | Q31, Q32*, Q33*, Q34*, Q35*, and Q36* |

Note: We used “*” to mean that the exam question was an open-ended question.

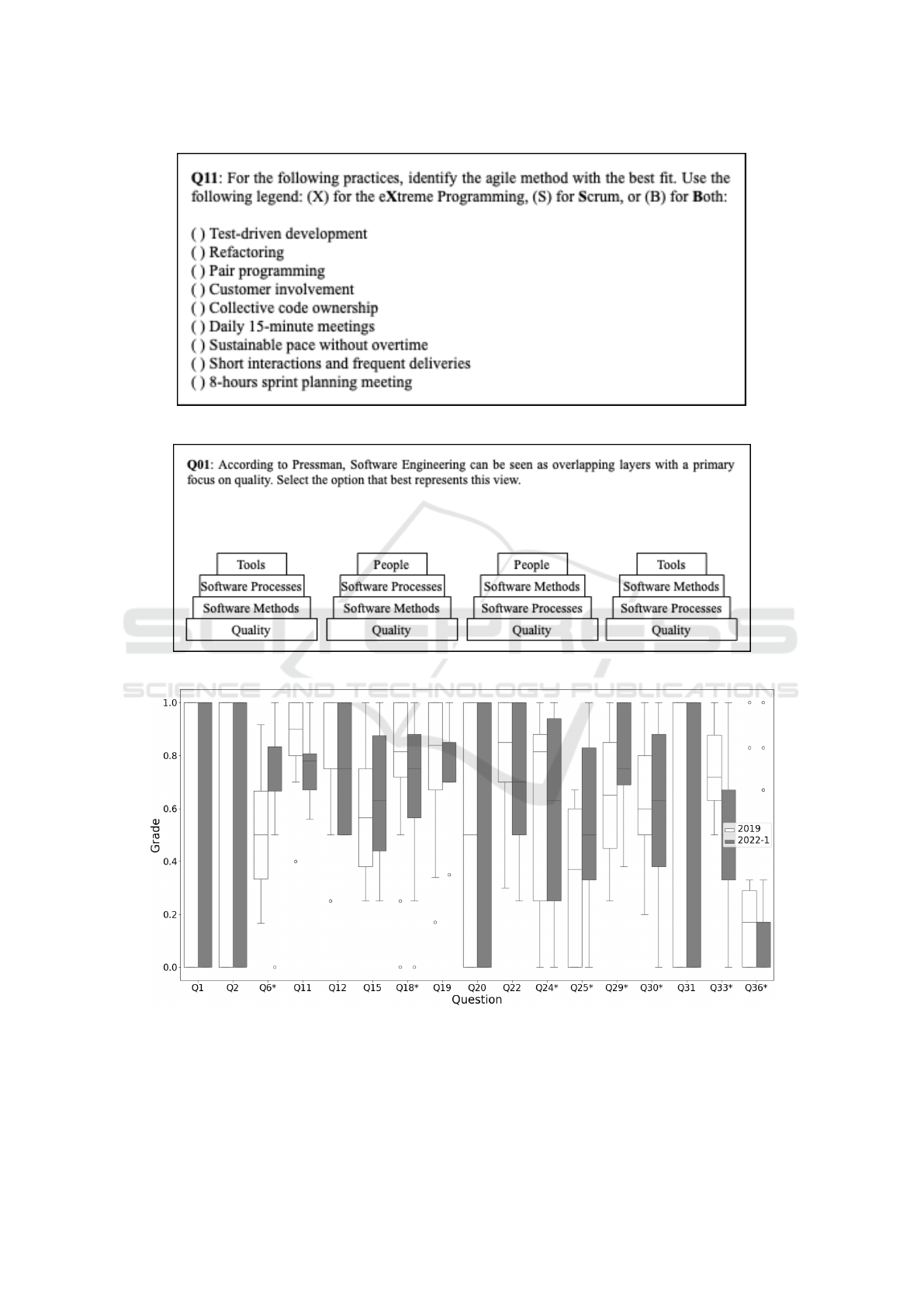

4.1 Assessing the Impact of COVID-19 Pandemic (RQ1)

The goal of RQ1 was to assess the impact of the COVID-19 pandemic on the performance of the students in the SE Course before (2019) and immediately after (2022-1) COVID-19. Figure 5 compares the general average grades obtained by students in the class for each question. Only questions common to both periods (2019 versus 2022-1) were analyzed. We considered the 2022-1 semester because it immediately follows the pandemic. That is, in 2022-1, the students returned to in-person classes and exams. We observed a decline in the average scores for 11 questions, including Q01, Q11, Q12, and others.

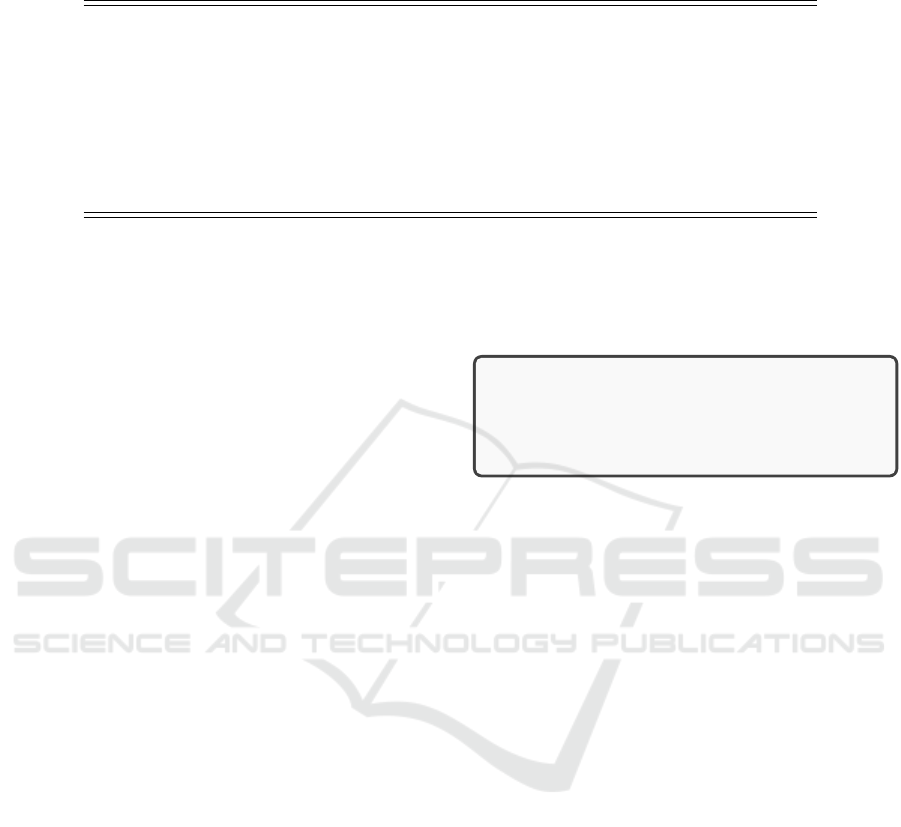

To illustrate a decrease in student performance over time, consider Q11 (Figure 3), which was used in assessments across multiple semesters. This question asks students to identify the agile method that aligns best with each practice. The answer choices are based on materials provided by the course instructor. Students have the opportunity to check their answers and request a review if they find discrepancies.

On the other hand, there was an increase in the average scores for 7 questions. It is interesting to observe that most of the increases in grades after the pandemic occur in open-ended questions, such as Q06 and Q29, indicated by * in Figure 5. The reason might be related to more flexible grading criteria applied to open-ended questions after the COVID-19 pandemic. Our overall findings suggest that the pandemic may have had a detrimental influence on the performance of the students.
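The counts above can be related to the share of about 61% reported in the Introduction with a short calculation. The sketch below assumes that the 11 questions with lower averages and the 7 questions with higher averages together make up all common questions, i.e., that no question kept exactly the same average; the function name is hypothetical.

```python
def share_of_questions(count: int, total: int) -> float:
    """Percentage of questions that fall into a given category."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100 * count / total


declined, improved = 11, 7  # counts reported for 2019 versus 2022-1
common_questions = declined + improved  # assumption: no unchanged averages
declined_share = share_of_questions(declined, common_questions)
print(f"{declined_share:.1f}% of the common questions had a lower average")
```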

To test the hypotheses formulated in Section 3.2, we applied an unpaired Mann-Whitney U test. First, we checked normality using the Shapiro-Wilk test ($W = 0.874$, $p < 0.001$). Note that the low $p$-value suggests a violation of the assumption of normality. As we hypothesized, according to the results of this non-parametric test ($U = 378020$, $p = 0.007$), there is a significant difference in student performance between the semesters before and immediately after the COVID-19 pandemic ($\mu_{2019} \neq \mu_{2022-1}$).

RQ1 Summary: The findings indicate that the COVID-19 pandemic may have had a negative impact on the performance of students across semesters.

4.2 The Long-Term Impact of the COVID-19 Pandemic (RQ2)

The aim of RQ

2

was to assess the long-lasting impact

of COVID-19 on students’ performance in Software

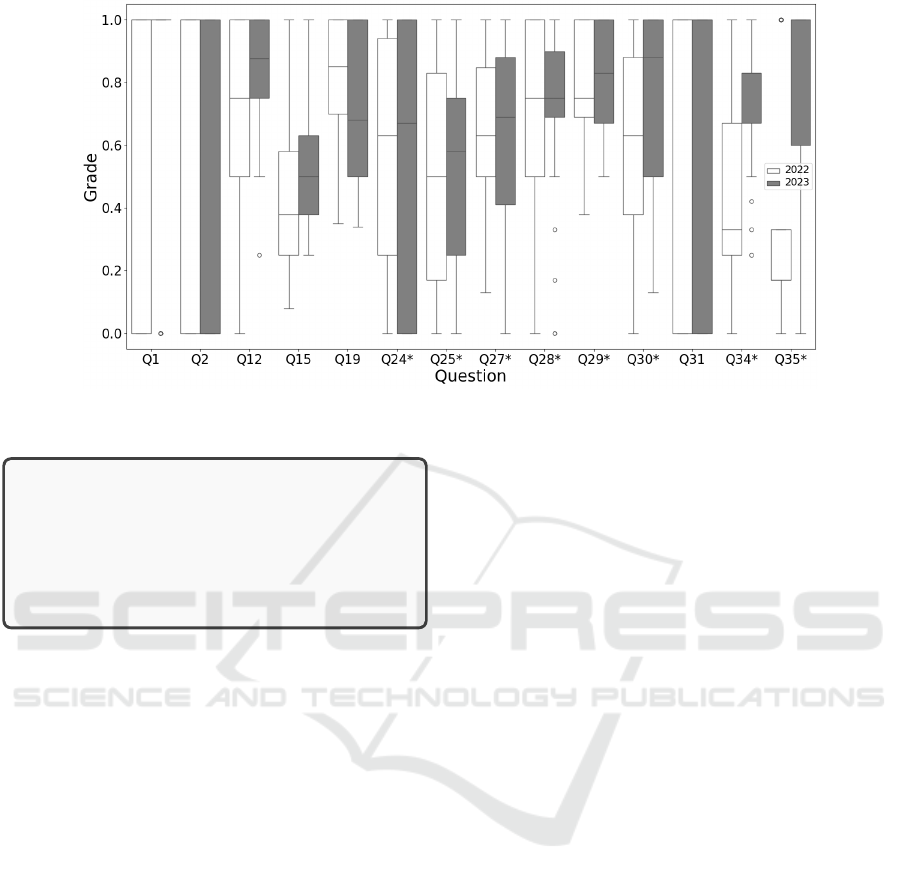

Engineering activities. Figure 6 compares the aver-

age grades students achieved for each question from

2022 to 2023. Based on these data, we observe a de-

crease in the average grade for only two questions

(Q02 and Q19) related to SE Introduction and Soft-

ware Architecture topics, respectively (they are both

closed-ended). Otherwise, there is an increase in the

average for 12 questions, such as Q01, Q12, Q34, and

Q35. As an example, Figure 4 shows the Q1 of our

database. Q01 allows for the possibility that none of

the options are correct. Q01 and Q12 questions are

closed-ended questions with answers limited and nar-

rowed to the given options. However, Q34 and Q35

are open-ended questions; as mentioned before, the

students had 100% control over the questions’ an-

swers. Besides, we can consider the flexible grad-

ing criteria applied to open-ended questions. Thus, it

is evident that student performance significantly im-

proved from the first (2022) year to the second year

(2023) post-pandemic.

To test the hypotheses outlined in Section 3.2, we conducted an unpaired Mann-Whitney U test. Beforehand, we assessed normality using the Shapiro-Wilk test ($W = 0.875$, $p < 0.001$), which indicates a departure from normality. As hypothesized, the results of this non-parametric test ($U = 666506$, $p < 0.001$) reveal a significant difference in students’ performance in Software Engineering activities between the first and second years after COVID-19 ($\mu_{2022} \neq \mu_{2023}$).

Figure 3: Statement of the Question 11 (Q11).

Figure 4: Statement of the Question 01 (Q01).

Figure 5: Common questions analyzed during the semesters in 2019 (pre-pandemic) and 2022-1 (post-pandemic). The exam questions Q6, Q18, Q24-Q25, Q29, Q30, Q33, and Q36 are open-ended questions.


Figure 6: Two years after COVID-19 pandemic. The exam questions Q24, Q25, Q27-Q30, Q34, and Q35 are open-ended

questions.

RQ2 Summary: Our findings reveal a significant improvement in student performance from the first year to the second year following the pandemic. Therefore, we argue that the pandemic impact on student performance does not last long.

4.3 Year Comparison

In 2019, before the COVID-19 pandemic, the median

student grade was around 70%, as depicted in Fig-

ure 7. However, in 2020 and 2021, during the pan-

demic, we implemented emergency remote teaching

with an online model, prompting us to exclude data

from these years. By 2022, a real negative impact of

the pandemic on student performance emerged, with

grades decreasing to around 60%, as illustrated in Fig-

ure 7. Nonetheless, in the second year post-COVID-

19 (2023), there was an improvement in student per-

formance, with results recovering to pre-pandemic

levels (i.e., around 70%). We also noticed a larger

spread of grades in 2022, as indicated by the inter-

quartile range (Q3-Q1).
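The two summary statistics used in this comparison, the median and the interquartile range, can be computed as in the following sketch. The grade list is hypothetical and only illustrates the calculation; the choice of the "inclusive" quartile method is also an assumption, since the paper does not state which quartile definition underlies Figure 7.

```python
from statistics import median, quantiles


def summarize(grades):
    """Return the median and the interquartile range (Q3 - Q1) of the grades."""
    q1, _, q3 = quantiles(grades, n=4, method="inclusive")
    return {"median": median(grades), "iqr": q3 - q1}


# Hypothetical standardized grades for one year.
sample_grades = [0.5, 0.6, 0.7, 0.8, 0.9]
summary = summarize(sample_grades)
print(summary)
```

A larger interquartile range for one year, as observed for 2022, means that the middle half of the grades is spread over a wider interval.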

4.4 Average Grade

Table 2 presents the historical data on the general average grades obtained per question in the Software Engineering course over the three analyzed years (2019, 2022, and 2023). As mentioned, we discarded data obtained during the pandemic (2020 and 2021).

The first column corresponds to the set of questions

from the SE topics as presented in Table 1. The sec-

ond column (“Open?”) refers to whether the question

is an open-ended question (1) or closed-ended ques-

tion (0). The other columns correspond to the year

and semester in which they occurred. The general av-

erage grade that the students obtained was calculated

for the semesters in which it occurred. The “-” means

the question did not occur in the semester. Questions

Q01, Q11, Q12, Q18, Q30, and Q33 are examples

of average student grades showing a decline in the

first or second semester of 2022, the first year after

the pandemic. We can also see that students have

improved their grades in the following year (2023).

Therefore, the pandemic may not have a prolonged

impact on student performance.
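The pattern described above can be checked directly against the Table 2 values. The sketch below copies the 2019-2 and 2022-1 averages of the six example questions, plus the 2023 averages of the three that reappeared in 2023, and computes the change for each; the variable names are hypothetical.

```python
# (2019-2, 2022-1) average grades copied from Table 2.
before_after = {
    "Q01": (0.72, 0.68),
    "Q11": (0.85, 0.77),
    "Q12": (0.83, 0.75),
    "Q18": (0.78, 0.68),
    "Q30": (0.63, 0.60),
    "Q33": (0.75, 0.49),
}

# 2023 averages from Table 2 for the example questions used again in 2023.
in_2023 = {"Q01": 0.80, "Q12": 0.84, "Q30": 0.74}

# Change from the last pre-pandemic value to the first post-pandemic value.
changes = {
    question: round(after - before, 2)
    for question, (before, after) in before_after.items()
}

# Questions whose 2023 average exceeds their pre-pandemic (2019-2) average.
recovered = [
    question
    for question, grade in in_2023.items()
    if grade > before_after[question][0]
]

print(changes)
print(recovered)
```

For these examples, every change from 2019-2 to 2022-1 is negative, and each question with a 2023 value ends above its 2019-2 average.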

5 THREATS TO VALIDITY

In this section, we examine potential threats to the study’s validity and discuss biases that could have influenced the results. Drawing on the categories proposed by Wohlin et al. (2012), we discuss these threats and our respective actions to mitigate them below.

Construct Validity. Construct validity concerns the

alignment between theory and observation (Wohlin

et al., 2012). This type of threat may arise when for-

mulating the set of questions for each test. As part of

this case study, we selected questions for each class

over six semesters. To mitigate this threat, we thoroughly reviewed and discussed all experimental procedures. Another threat may arise when selecting the questions for each test, which may not reflect student performance. To mitigate this threat, we ensured that the selected questions align closely with the learning objectives of the Software Engineering course.

Figure 7: Year comparison.

Internal Validity. The internal validity is related to

uncontrolled aspects that may affect the study results

(Wohlin et al., 2012). Several studies (Dominik et al.,

2021) (Kanij and Grundy, 2020) have delved into the

ramifications of the COVID-19 pandemic on the lives

of professors. These investigations have highlighted

specific challenges, such as a significant increase in

workload due to the transition to online teaching, the

need for rapid adaptation of teaching materials to suit

the online format, and the daily stressors of managing

personal and professional responsibilities in a pan-

demic. The heightened stress levels may significantly

influence the assessment process, particularly in the

context of open-ended questions. Consequently, our

results may not reflect the reliability of open-ended

question evaluations. To address this limitation, we

implemented a consistent evaluation approach, where

a single professor assessed all responses of the par-

ticipants throughout the study period. However, it is

important to note that if different professors had been

involved in evaluating the questions, the outcomes

might have varied, potentially compromising the re-

liability of the study. This underscores the need for a

standardized approach in our assessment process.

External Validity. The external validity concerns

the ability to generalize the results to other environ-

ments (Wohlin et al., 2012). Since the study partic-

ipants were exclusively from the Software Engineer-

ing course at a single university in Brazil, the findings

are applicable only to similar contexts. This limitation

is shared with all case studies (Wohlin et al., 2012).

To enhance generalization, further studies based on

our findings should be conducted across other courses

and universities. Despite these limitations, the find-

ings provide valuable insights into the advantages,

limitations, and recommendations for improvements

to the Software Engineering courses.

Conclusion Validity. The conclusion validity con-

cerns issues that affect the ability to draw the cor-

rect conclusions from the study (Wohlin et al., 2012).

The findings outlined in this study primarily consist

of observations, recommendations, and insights in-

tended to guide future research endeavors. While we

have provided our own interpretation of student per-

formance analysis, it is important to acknowledge that

there may be additional significant insights within the

collected data that have not yet been explored or re-

ported.

6 RELATED WORK

Several studies investigated academic performance in Computer Science (CS) or Software Engineering (SE) courses (Cruz et al., 2015) (Falessi et al., 2018) (Akbulut et al., 2018) (Berkling and Neubehler, 2019) (Gürer et al., 2019) (Ouhbi and Pombo, 2020) before the COVID-19 pandemic. For instance, Gürer et al. (2019) examined the effects of various factors, such as demographic characteristics, achievement in computer programming courses, perceived learning, and computer programming self-efficacy, on pre-service computer science teachers’ attitudes towards computer programming (ATCP). They identified and analyzed the impact of various factors (performance, self-efficacy, perceived learning) on these attitudes, providing valuable insights into how to motivate and support future computer science educators.

Table 2: Average Grade.

ID Open? 2019-1 2019-2 2022-1 2022-2 2023-1 2023-2
Q01 0 - 0.72 0.68 0.65 - 0.80
Q02 0 - 0.53 0.60 0.48 - 0.40
Q03 1 0.58 - - - 0.64 -
Q04 0 0.82 - - - 0.77 -
Q05 0 0.95 - - - - 0.95
Q06 1 - 0.52 0.76 0.68 - -
Q07 0 - - 0.75 0.76 - -
Q08 0 0.67 - - - - 0.61
Q09 0 0.73 - - - 0.89 -
Q10 0 - - 0.69 0.73 - -
Q11 0 - 0.85 0.77 0.82 - -
Q12 0 - 0.83 0.75 0.73 - 0.84
Q13 1 - 0.66 - - - 0.80
Q14 1 0.51 - - 0.41 - -
Q15 0 - 0.58 0.65 0.35 - 0.52
Q16 1 0.71 0.71
Q17 0 0.88 - - - 0.91 -
Q18 1 - 0.78 0.68 0.83 - -
Q19 0 - 0.78 0.79 0.84 - 0.73
Q20 0 - 0.51 0.47 0.74 - -
Q21 0 0.72 - - - - 0.63
Q22 0 - 0.81 0.74 0.82 - -
Q23 0 0.70 0.84
Q24 1 - 0.59 0.56 - 0.58
Q25 1 - 0.32 0.54 0.48 0.51 -
Q26 1 0.47 - - 0.37 - -
Q27 1 0.72 - - 0.63 - 0.64
Q28 1 - - - 0.67 - 0.73
Q29 1 0.63 - 0.79 - 0.80 -
Q30 1 - 0.63 0.60 - 0.74 -
Q31 0 - 0.54 0.49 0.55 - 0.58
Q32 1 0.48 - - - 0.28 -
Q33 1 - 0.75 0.49 - - -
Q34 1 - - 0.44 - 0.72 -
Q35 1 - - 0.35 - 0.83 -
Q36 1 - 0.22 0.19 0.32 - -

Other studies have extensively researched the impact of the COVID-19 pandemic on education (Adnan and Anwar, 2020) (de Deus et al., 2020) (Barr et al., 2020) (Crick et al., 2020) (Akhasbi et al., 2022) (Lin and Hou, 2023) (Singh and Meena, 2023). These studies have examined the effects of remote learning on student performance, engagement, and well-being across various Computer Science (CS) or Software Engineering (SE) courses. For example, Adnan and Anwar (2020) investigated the attitudes of Pakistani higher education students towards compulsory digital and distance learning university courses during the COVID-19 pandemic. De Deus et al. (2020) investigated how professors conducted Emergency Remote Education (ERE) in the field of Computer Science in Brazil in response to the COVID-19 pandemic.

Barr et al. (2020) discussed their experience delivering an eight-week undergraduate Software Engineering program during the pandemic, particularly during the lockdown period. Reflecting on the rapid shift to online learning across three distinct modules, they emphasized the importance of prioritizing the well-being of the students. From this, they concluded that there is no “one-size-fits-all” approach to online delivery in Software Engineering education. Nevertheless, they believe it is still possible to offer a

pedagogically sound learning experience, even under

lockdown conditions, by adhering to established best

practices. These include breaking online lectures into

smaller, more accessible units, carefully structuring

group work and team composition, and actively using

student feedback to make real-time adjustments.

In contrast, other studies found no significant differences between remote and in-person instruction (Crick et al., 2020). These studies also highlighted the challenges of remote learning, such as lack of access to technology, limited opportunities for collaboration, and increased distractions at home. They analyzed various aspects, such as attitudes towards online

education, challenges faced by educators, strategies

adopted during emergency remote teaching, and the

overall impact on teaching practices and institutions.

Therefore, the distinctive feature of these papers lies

in their exploration of the unprecedented challenges

and adaptations in education caused by the pandemic.

More recently, studies have begun to examine the

long-term effects of the pandemic on CS education.

Some studies suggest that the pandemic may have ex-

acerbated existing inequalities in CS education, with

students from disadvantaged backgrounds dispropor-

tionately affected (Lin and Hou, 2023). Additionally,

the authors explored how students’ educational background

and family income influence their experiences with

online learning compared with traditional in-person

instruction, from the perspective of Taiwanese students.

Other studies have explored the potential benefits of

remote learning, such as increased flexibility and ac-

cessibility (Singh and Meena, 2023). Their study

highlighted the discrepancy between expected and ac-

tual benefits of virtual classrooms, shedding light on

challenges faced by both faculty members and stu-

dents, and examining the moderation effects of these

challenges on perceived benefits. Our study comple-

ments these previous works by examining the com-

parative student performance in computer science,

specifically within the context of an SE course, before

and after the onset of the pandemic.

These topics have been studied in various countries

worldwide, including but not limited to Australia

(Kanij and Grundy, 2020), Brazil (de Deus et al.,

2020), India (Singh and Meena, 2023), Israel (Fitoussi

and Chassidim, 2021), Morocco (Akhasbi

et al., 2022), Pakistan (Adnan and Anwar, 2020), Tai-

wan (Lin and Hou, 2023), the UK (Crick et al., 2020),

and potentially others given the global nature of the

COVID-19 pandemic and its impact on higher education

systems. These studies have highlighted

the potential benefits and drawbacks of online edu-

cation in the field of Computer Science. During the

pandemic, studies provided crucial insights into how

faculty members and students have adapted to virtual

classrooms and the challenges they have faced. After

the pandemic, they offered valuable lessons for improving

online learning experiences and ensuring resilience

in higher education systems. Using a Software Engineering

course as a case study and employing quantitative

methods, we aim to provide a more focused analysis of

how the pandemic has impacted student learning outcomes

and overall educational experiences in this particular

field of Computer Science.

This provides valuable insights into the long-term ef-

fects of the pandemic on educational outcomes in this

field.

7 CONCLUSION AND FUTURE WORK

The COVID-19 pandemic is a unique and far-reaching event

that has profoundly impacted various aspects of hu-

man life, particularly education. This study aimed

to investigate the impact of the pandemic on stu-

dent performance, focusing on the Software Engi-

neering Course. To this end, we collected historical

data for 2019, 2022, and 2023 from the exams for

the students enrolled in the SE Course, specifically

the average grades of recurring assessments across

semesters. Our findings reveal a negative impact on student

performance, particularly during the first semester

of 2022. However, we also observed that the impact

decreased in the following semesters.

Future Work. We plan to conduct surveys among the

students (Souza et al., 2019) (Braun et al., 2023) to

triangulate our results and better understand the per-

spective of the students related to the impact of the

COVID-19 pandemic on their performance. Following

this, we can investigate the impact of the pandemic

on the performance of the students in specific SE course

topics, such as requirements, development processes,

or software quality. By exploring these topics in more

detail, we aim to uncover new insights to improve the

course teaching. Another research opportunity is to

investigate student attendance in the course to understand

their engagement and participation in the educational

process (Figueiredo et al., 2014). We can also

explore the impact of the pandemic on different

groups, such as by gender (male versus female).

Finally, we can conduct further studies based on our

findings in other courses and universities, including

qualitative insights such as student surveys.

ACKNOWLEDGEMENTS

This research was partially supported by Brazilian

funding agencies: CNPq (Grants 312920/2021-0,

PROFIX-JD 155774/2023-9 and 157416/2024-0),

CAPES, and FAPEMIG (Grants BPD-00460-22 and

APQ-01488-24).

CSEDU 2025 - 17th International Conference on Computer Supported Education

REFERENCES

Adnan, M. and Anwar, K. (2020). Online learning amid

the covid-19 pandemic: Students’ perspectives. Jour-

nal of Pedagogical Sociology and Psychology (JPSP),

2(1):45–51.

Akbulut, A., Catal, C., and Yıldız, B. (2018). On the effec-

tiveness of virtual reality in the education of software

engineering. Computer Applications in Engineering

Education (CAEE), 26(4):918–927.

Akhasbi, H., Belghini, N., Riyami, B., Cherrak, O., and

Bouassam, H. (2022). Moroccan higher education

at confinement and post confinement period: Review

on the experience. In 14th International Conference

on Computer Supported Education (CSEDU), pages

130–164.

Barr, M., Nabir, S. W., and Somerville, D. (2020). Online

delivery of intensive software engineering education

during the covid-19 pandemic. In IEEE 32nd Confer-

ence on Software Engineering Education and Training

(CSEE&T), pages 1–6.

Berkling, K. and Neubehler, K. (2019). Boosting student

performance with peer reviews: Integration and analy-

sis of peer reviews in a gamified software engineering

classroom. In IEEE Global Engineering Education

Conference (EDUCON), pages 253–262.

Braun, D., Rogetzer, P., Stoica, E., and Kurzhals, H.

(2023). Students’ perspective on ai-supported assess-

ment of open-ended questions in higher education.

In 15th International Conference on Computer Sup-

ported Education (CSEDU), volume 2, pages 73–79.

Crick, T., Knight, C., Watermeyer, R., and Goodall, J.

(2020). The impact of covid-19 and “emergency re-

mote teaching” on the uk computer science educa-

tion community. In Conference on United Kingdom

& Ireland Computing Education Research (UKICER),

pages 31–37.

Cruz, S., da Silva, F. Q., and Capretz, L. F. (2015). Forty

years of research on personality in software engineer-

ing: A mapping study. Computers in Human Behav-

ior, 46:94–113.

de Almeida Souza, M. R., Constantino, K. F., Veado, L. F.,

and Figueiredo, E. M. L. (2017). Gamification in soft-

ware engineering education: An empirical study. In

IEEE 30th Conference on Software Engineering Edu-

cation and Training (CSEE&T), pages 276–284.

de Deus, W. S., Fioravanti, M. L., de Oliveira, C. D., and

Barbosa, E. F. (2020). Emergency remote computer

science education in brazil during the covid-19 pan-

demic: Impacts and strategies. Brazilian Journal on

Informatics in Education (RBIE), 28:1032–1059.

de Souza, L., Felix, I., Ferreira, B., Brandão, A., and

Brandão, L. (2021). I know what you coded last summer.

In Brazilian Symposium on Informatics in Education

(SBIE), pages 909–920.

Dominik, Fend, A., Scheffknecht, D., Kappel, G., and Wim-

mer, M. (2021). From in-person to distance learn-

ing: Teaching model-driven software engineering in

remote settings. In ACM/IEEE International Confer-

ence on Model Driven Engineering Languages and

Systems Companion (MODELS-C), pages 702–711.

Falessi, D., Juristo, N., Wohlin, C., Turhan, B., Münch, J.,

Jedlitschka, A., and Oivo, M. (2018). Empirical soft-

ware engineering experts on the use of students and

professionals in experiments. Empirical Software En-

gineering, 23:452–489.

Figueiredo, E., Pereira, J. A., Garcia, L., and Lourdes, L.

(2014). On the evaluation of an open software engi-

neering course. In 2014 IEEE Frontiers in Education

Conference (FIE) Proceedings, pages 1–8.

Fitoussi, R. and Chassidim, H. (2021). Teaching software

engineering during covid-19 constraint or opportu-

nity? In IEEE Global Engineering Education Con-

ference (EDUCON), pages 1727–1731.

Gürer, M. D., Cetin, I., and Top, E. (2019). Factors affecting

students’ attitudes toward computer programming.

Informatics in Education (InfEdu), 18(2):281–296.

Hebebci, M. T., Bertiz, Y., and Alan, S. (2020). Investi-

gation of views of students and teachers on distance

education practices during the coronavirus (covid-19)

pandemic. International Journal of Technology in Ed-

ucation and Science (IJTES), 4(4):267–282.

Kanij, T. and Grundy, J. (2020). Adapting teaching of a soft-

ware engineering service course due to covid-19. In

2020 IEEE 32nd Conference on Software Engineering

Education and Training (CSEE&T), pages 1–6.

Lin, A. F. Y. and Hou, A. Y. C. (2023). Quality and inequal-

ity: Students’ online learning experiences amidst the

covid-19 pandemic in taiwan. In Crafting the Future

of International Higher Education in Asia via Systems

Change and Innovation: Reimagining New Modes of

Cooperation in the Post Pandemic, pages 171–190.

Mann, H. B. and Whitney, D. R. (1947). On a Test of

Whether one of Two Random Variables is Stochastically

Larger than the Other. The Annals of Mathematical

Statistics, 18(1):50–60.

Mooney, C. and Becker, B. A. (2021). Investigating the im-

pact of the covid-19 pandemic on computing students’

sense of belonging. ACM Inroads, 12(2):38–45.

Ouhbi, S. and Pombo, N. (2020). Software engineer-

ing education: Challenges and perspectives. In

IEEE Global Engineering Education Conference

(EDUCON), pages 202–209.

Piret, J. and Boivin, G. (2021). Pandemics throughout his-

tory. Frontiers in microbiology (Front. Microbiol.),

11:631736.

Ravi, P., Ismail, A., and Kumar, N. (2021). The pandemic

shift to remote learning under resource constraints.

Proceedings of the ACM on Human-Computer Inter-

action (PACMHCI), 5(CSCW2):1–28.

Santos, A., Vale, G., and Figueiredo, E. (2015). Does

online content support uml learning? an empirical

study. Brazilian Symposium on Software Engineering

(SBES), pages 36–47.

Shapiro, S. S. and Wilk, M. B. (1965). An analysis

of variance test for normality (complete samples).

Biometrika, 52(3-4):591–611.

Singh, A. K. and Meena, M. K. (2023). Online teaching

in indian higher education institutions during the pan-

demic time. Education and Information Technologies

(EAIT), pages 1–51.

Sommerville, I. (2015). Software Engineering. 10th Edi-

tion, volume 10. Addison-Wesley.

Souza, M., Moreira, R., and Figueiredo, E. (2019). Students

perception on the use of project-based learning in soft-

ware engineering education. In XXXIII Brazilian Sym-

posium on Software Engineering (SBES), pages 537–

546.

Student (1908). The probable error of a mean. Biometrika,

pages 1–25.

Willies, D. (2023). The impact of covid-19 pandemic on

the education system in developing countries. African

Journal of Education and Practice (AJEP), 9(1):15–

27.

Wohlin, C., Runeson, P., Höst, M., Ohlsson, M. C., Regnell,

B., and Wesslén, A. (2012). Experimentation in

software engineering. Springer.
