Towards JPEG-Compression Invariance for Adversarial Optimization

Amon Soares de Souza (1, a), Andreas Meißner (2, b) and Michaela Geierhos (1, c)

(1) University of the Bundeswehr Munich, Research Institute CODE, Werner-Heisenberg-Weg 39, 85577 Neubiberg, Germany

(2) ZITiS, Big Data, Zamdorfer Str. 88, 81677 Munich, Germany

Keywords:

Adversarial Optimization, Adversarial Attacks, Image Classification.

Abstract:

Adversarial image processing attacks aim to strike a fine balance between pattern visibility and target model

error. This balance ideally results in a sample that maintains high visual fidelity to the original image, but

forces the model to output the target of the attack, and is therefore particularly susceptible to transformations

by post-processing such as compression. JPEG compression, which is inherently non-differentiable and an

integral part of almost every web application, therefore severely limits the set of possible use cases for at-

tacks. Although differentiable JPEG approximations have been proposed, they (1) have not been extended to

the stronger and less perceptible optimization-based attacks, and (2) have been insufficiently evaluated. Con-

strained adversarial optimization allows for a strong combination of success rate and high visual fidelity to

the original sample. We present a novel robust attack based on constrained optimization and an adaptive com-

pression search. We show that our attack outperforms current robust methods for gradient projection attacks

for the same amount of applied perturbation, suggesting a more effective trade-off between perturbation and

attack success rate. The code is available here: https://github.com/amonsoes/frcw.

1 INTRODUCTION

Adversarial attacks provide a straightforward way to

improve and evaluate the robustness of deep learn-

ing models. Methods that project the input based

on the sign of the gradient of a surrogate model are

commonly used to improve model robustness because

they are less computationally intensive and can be

used in the inner loop of adversarial training (Madry

et al., 2018). In contrast, attacks based on adversar-

ial optimization assess model robustness by solving

a computationally expensive constrained optimiza-

tion problem that generates adversarial samples that

closely resemble the original image while fooling the

target model (Szegedy et al., 2014).

Optimally, the adversarial sample is the sample

closest to the original image (according to some dis-

tortion measure) that forces the model to output the

target (Szegedy et al., 2014). This fine balance is eas-

ily disrupted by transformations that change pixels or

groups of pixels, such as compression. JPEG compression is an integral part of almost every application that processes and stores images or other data, severely limiting the use cases for attacks. Since this type of compression is inherently non-differentiable, it cannot easily be used in an optimization scheme (Shin and Song, 2017). While there have been successful attempts to incorporate a differentiable approximation into gradient projection-based attacks, these works have not attempted to do the same for optimization-based attacks, which are often less noticeable and harder to defend against.

a https://orcid.org/0009-0000-7978-1281

b https://orcid.org/0000-0002-6200-7553

c https://orcid.org/0000-0002-8180-5606

(a) Original (b) RCW

Figure 1: Comparison of the adversarial samples generated

by RCW with the original sample. Zooming in, you can see

that high frequency details have been removed.

Soares de Souza, A., Meißner, A. and Geierhos, M.
Towards JPEG-Compression Invariance for Adversarial Optimization.
DOI: 10.5220/0013300200003912
Paper published under CC license (CC BY-NC-ND 4.0)
In Proceedings of the 20th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISIGRAPP 2025) - Volume 3: VISAPP, pages 166-177
ISBN: 978-989-758-728-3; ISSN: 2184-4321
Proceedings Copyright © 2025 by SCITEPRESS – Science and Technology Publications, Lda.

Our RCW attack builds on Carlini and Wagner (2017). Current approaches mainly rely on a gradient ensemble over a set of quality settings. However, gradient ensembles would introduce an additional inner loop into the adversarial optimization, resulting in undesirably long computation times. Instead of using gradient ensemble methods, this attack performs a search for the JPEG quality factor by querying the target system once. This produces a pair $(\mathbf{x}, \mathbf{x}')$, where $\mathbf{x}'$ is the compressed output of the target system. We use this pair to search for the quality setting used by the target system by minimizing the $L_2$ distance from $\mathbf{x}'$ to $\mathrm{JPEG}(\mathbf{x}, q)$, where JPEG is our JPEG algorithm and $q$ is the quality setting. This search eliminates the need to compress with every possible quality setting, and finds the optimal quality setting in a fraction of the steps compared to a brute-force approach. By incorporating the differentiable JPEG approximation into constrained adversarial optimization, we show that adversarial attacks do not require a high order of perturbation magnitude to overcome compression. Adversarial samples generated by RCW retain high visual fidelity and are still effective (see Figure 1). For further comparisons between RCW-generated adversarial samples and their respective original images, see Figure 3. To summarize our contributions in this paper:

1. We introduce a differentiable JPEG approximation for optimization-based attacks. Such approximations have so far only been applied to gradient projection-based attacks (Shi et al., 2021; Reich et al., 2024).

2. We propose an alternative to the gradient en-

semble methods found in the current approaches

(Shin and Song, 2017; Reich et al., 2024) in order

to successfully induce robustness against JPEG

compression with varying compression settings

for adversarial optimization.

3. In addition to white-box and black-box evalua-

tions and benchmarks on target models hardened

by adversarial training, we compare the perceived

distortion of our samples with those of the related

work. These experiments have not yet been ad-

dressed by the related work.

4. We show that our adversarial samples can over-

come compression while maintaining high image

fidelity, and report the differences in success rate

and average distortion compared to the current

state of the art. Our experiments indicate that our

attack results in a better balance between attack

success rate and applied distortion.

5. We extensively analyze our compression adap-

tation search procedure and perform an ablation

study that highlights the benefits of extending

optimization-based attacks to include the JPEG

approximation in the loss function as well as the

compression setting search for varying compres-

sion rates.

2 RELATED WORK

There is a rich body of work on adversarial attacks,

covering a variety of approaches and use cases.

Szegedy et al. (2014) introduced adversarial sam-

ples by performing constrained optimization on the

input using an adversarial loss. Optimization-based

attacks require a computationally expensive process,

but are usually effective because (1) it is impractical

to use optimization-based attacks in adversarial train-

ing, and (2) they usually result in an optimum where

the attack fools the model with a minimum required

distortion (Carlini and Wagner, 2017).

Gradient projection methods work very differ-

ently. As their name implies, these methods project

the input in the direction of the sign of the gradient to

increase the loss of the model. They are often used to

perform adversarial training (Goodfellow et al., 2015;

Wang and He, 2021). As far as distortion is concerned, these latter methods are usually $L_\infty$-bounded, which means that these attacks often result in perturbations where most pixels are changed to their maximum extent. Optimization-based attacks often use the $L_2$ norm as a constraint, resulting in a distortion that is not maximized for every pixel (Goodfellow et al., 2015; Carlini and Wagner, 2017; Wang and He, 2021). In terms of use cases, both approaches can be

used as the basis for targeted and untargeted attacks,

in both white box and black box environments.

While there have been considerations that address

undesirable characteristics of these attacks, such as

attack visibility, the lack of smoothness (Luo et al.,

2022), and the challenges of deploying attacks in the

physical world (Kurakin et al., 2017), most attacks

only consider settings in the uncompressed domain.

This is surprising, given that JPEG compression can

easily suppress the adversarial noise of most attacks,

and is even considered to function as an adversarial

defense by various defense methods (Liu et al., 2019).

Shi et al. (2021) successfully produce adversarial

images resistant to JPEG compression by introduc-

ing a procedure called adversarial rounding. Instead

of distorting pixel values, this method makes adjust-

ments in the patched discrete cosine transform (DCT)

projection of an initial adversarial sample produced

by FGSM (Goodfellow et al., 2015) and BIM (Ku-

rakin et al., 2017). They distinguish between fast ad-

versarial rounding and iterative adversarial round-

ing. The first method produces an adversarial DCT

projection by quantizing the DCT patches in the di-

rection of the gradient to increase the model loss. This

approach also prioritizes DCT components that have

a greater impact on the model decision (Shi et al.,

2021).


Shin and Song (2017) propose a method to include

a differentiable JPEG approximation in projection-

based attacks, specifically to target models that use

JPEG as a defense. They argue that JPEG, being a

lossy compression method, results in an image that

preserves semantic details but discards the adversarial

perturbations, making the attack less effective. Quan-

tization in JPEG involves rounding the coefficients

obtained by the DCT transform to the nearest integer. This produces gradients that are zero almost everywhere, which makes the function unusable for gradient-based optimization. They design

an approximation that adds the cubed difference be-

tween the original coefficient and the rounded coeffi-

cient during quantization. They extend FGSM (Good-

fellow et al., 2015) and BIM (Kurakin et al., 2017)

with their JPEG approximation, allowing them to in-

corporate compression into the gradient computation.

However, they only extend attacks based on gradient

projection and omit optimization-based attacks (Shin

and Song, 2017). Improving on the work of Shin and

Song (2017), Reich et al. (2024) also include a dif-

ferentiable JPEG approximation in projection-based

attacks, but they extend the surrogate approach by re-

modeling the computations to obtain the quantization

table.

Other work suggests that the reliability of attacks

can be inherently improved by considering additional

characteristics of adversarial attacks. Zhao et al.

(2020) propose to create adversarial examples by per-

turbing images with respect to the perceptual color

distance (PerC). They argue that color distances are

less perceptible because color perceived by the hu-

man visual system (HVS) does not change uniformly

with distance in the RGB space. Instead of using a

traditional $L_p$ norm as a constraint during optimization, they use the CIEDE2000 color metric. They

also introduce an alternating optimization procedure

called PerC-AL, which computes the adversarial loss

for backpropagation when the sample is not adversar-

ial, and the image quality loss with CIEDE2000 when

the sample is adversarial (Zhao et al., 2020).

3 METHOD

In the following section, we define the threat model in

which we conduct our attack to bypass the target sys-

tem’s JPEG compression. After outlining the proce-

dure, we will examine the characteristics of the RCW

attack. We use the standard definition of adversarial samples, where $\boldsymbol{\delta}$ is the perturbation, $\mathbf{x}$ is the original input, $y \in \mathbf{Y}$ is the ground truth, $\varepsilon$ is the maximum perturbation threshold, $f$ is the target model and $\theta_f$ are its parameters. A sample is adversarial if the following holds (Szegedy et al., 2014; Goodfellow et al., 2015; Kurakin et al., 2017; Shin and Song, 2017; Zhao et al., 2020; Wang and He, 2021; Luo et al., 2022):

1. $\mathbf{x} + \boldsymbol{\delta} \in [0, 1]$

2. $f(\mathbf{x} + \boldsymbol{\delta}; \theta_f) = \hat{y}$; $\hat{y} \in \mathbf{Y} \setminus y$

3. $\forall \delta \in \boldsymbol{\delta}: |\delta| \leq \varepsilon$

In the following, $\mathbf{x} + \boldsymbol{\delta}$ equals $\mathbf{x}_{adv}$. We define a threat model, outline the attack procedure, and propose the robust Carlini and Wagner attack method (RCW).

3.1 Threat Model

Akhtar et al. (2021) define a threat model as the adver-

sarial conditions against which a defense mechanism

is tested to verify its effectiveness. We adapt this con-

cept and define threat model as an interaction between

an adversary and a target system. In this interaction,

the adversary tries to force the target model, which

is part of the target system’s environment, to produce

false output. In all of our diagrams (see Figure 2 and

Figure 4), the red elements are features of the adver-

sary, while the blue elements are features of the target

system. Both terms are defined below.

3.1.1 Target System

Our approach requires that a target system includes at least a target JPEG compression algorithm $J_{\mathrm{target}}$ that compresses the input $\mathbf{x}$, and a target model $\phi$ that processes the compressed input to produce the desired output. As a minimal working example, our target system can be defined as

$$T(J_{\mathrm{target}}, q_{\mathrm{target}}, \phi, \mathbf{x}) = \phi(J_{\mathrm{target}}(\mathbf{x}, q_{\mathrm{target}})) \tag{1}$$

In real-world applications, such a target system is of-

ten found in social media, where user-uploaded im-

ages are compressed and then processed by a model

that performs some desired task.

3.1.2 Adversary

Akhtar et al. (2021) define an adversary as the agent

(i.e., the attacker) who creates an adversarial example.

Based on this definition, we define our adversary as

follows. Let Attack be an adversarial attack and $\mathbf{x}$ be the input. The output of Attack is an adversarial sample $\mathbf{x}_{adv}$ computed using a surrogate model $\hat{\phi}$.

$$A(\mathrm{Attack}, \mathbf{x}, \hat{\phi}) = \mathrm{Attack}(\mathbf{x}; \hat{\phi}) \tag{2}$$

In our scenario, the adversary can also query the target's JPEG algorithm $J_{\mathrm{target}}$ to compress the input $\mathbf{x}$.

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications


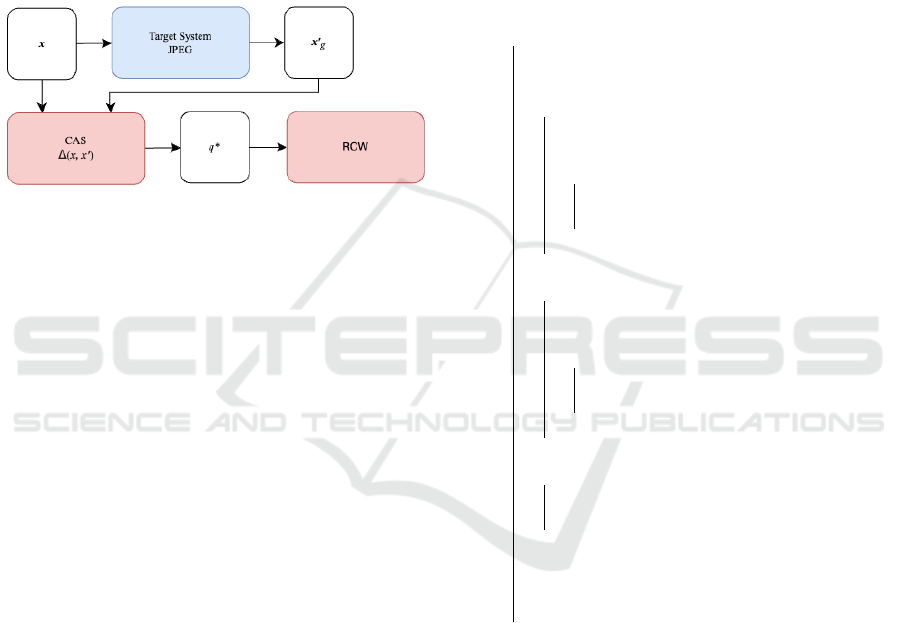

3.2 Attack Procedure

For attacks performed with our method, we provide a complete outline of the workflow in Figure 2. First, we query the target system's JPEG compression $J_{\mathrm{target}}(\mathbf{x}, q_{\mathrm{target}})$ with $\mathbf{x}$ to obtain the compressed counterpart $\mathbf{x}'_g$. Both are passed as a tuple $(\mathbf{x}, \mathbf{x}'_g)$ to a procedure called compression adaptation search, which returns the quality factor $q^*$ that best mimics the compression setting $q_{\mathrm{target}}$. This quality factor is then passed to RCW, our Attack, to compute the robust adversarial sample $\mathbf{x}_{adv}$.

Figure 2: Graphical representation of the RCW attack flow. The attack requires a query to the target system's JPEG algorithm. It then performs CAS to find the best compression setting $q^*$.

3.2.1 Compression Adaptation Search (CAS)

The output of the query is used to perform a line search that minimizes the $L_2$ distance from $\mathbf{x}'_g$ to $J(\mathbf{x}, q)$, where $J$ is our JPEG algorithm and $q$ is the quality setting. The goal of this search, which we call compression adaptation search (CAS), is to find the quality setting $q^*$ that best mimics the quality setting $q_{\mathrm{target}}$ of the target system's JPEG algorithm $J_{\mathrm{target}}$. Let $\Delta$ be the $L_2$ distance. Let $\mathbf{x}$ be the uncompressed image and $\mathbf{x}'_g$ be the compressed target image, which is the output of the JPEG compression algorithm of the target system $J_{\mathrm{target}}$. CAS has several parameters to control the search. Let $p$ be the direction of the line search (i.e., whether the value of $q$ is ascending or descending). $s_t$ is a schedule factor that decays by the temperature $\tau$ in every iteration. It scales the step size, which is given by the distance $d_t = \Delta(J(\mathbf{x}, q_t), \mathbf{x}'_g)$. Let $q_t$ be the current quality setting, where $q_0$ is randomly initialized with an integer in the range $[1, 99]$. In a few cases, an intermediate $q_t$ resulted in a higher distance $d_{t+1}$, even though the search was approaching $q^*$ in the right direction. Therefore, we allow for 2 exploration steps (counted by $\beta$) before changing the search direction in case $d_{t+1}$ does not improve on $d_t$. Finally, let $\gamma$ be an early termination counter that stops the search if $d_t$ does not improve for ten steps. The whole procedure is given in Algorithm 1.

Input: x                      // uncompressed image
Input: x′_g                   // compressed target image
Input: d_g                    // target distance
Result: q*                    // best quality setting
p ← −1                        // search direction
τ ← 0.99                      // temperature
s_0 ← 1.0                     // schedule
d_0 ← 1e10                    // init best distance
d* ← d_0                      // best distance
q_0 ← r(1, 99)                // random init of q
q* ← q_0                      // best q
γ ← 0                         // early termination criterion
β ← 0                         // exploration criterion
while d* > d_g do
    x′ ← J(x, q_t)
    d_{t+1} ← Δ(x′, x′_g)
    if d_{t+1} ≥ d_t then
        γ ← γ + 1
        β ← β + 1
        if β ≥ 2 then
            p ← p · −1
            β ← 0
        end
    else if d_{t+1} < d_t then
        γ ← 0
        β ← 0
        if d_{t+1} < d* then
            d* ← d_{t+1}
            q* ← q_t
        end
    end
    if γ > 10 then
        return q*             // quit search early
    end
    s_{t+1} ← s_t · τ
    q_{t+1} ← min(max(q_t + p · (s_{t+1} · d_{t+1}), 1), 99)
end
return q*

Algorithm 1: Compression Adaptation Search (CAS).
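The search in Algorithm 1 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the JPEG algorithm is passed in as a hypothetical `compress(x, q)` callable, images are flat lists of floats, the step is rounded so that the quality stays an integer, and `max_iters` and `q_init` are safety and testing parameters that do not appear in the paper.

```python
import math
import random


def l2_distance(a, b):
    """L2 distance between two equally long sequences of floats."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))


def compression_adaptation_search(x, x_target, compress, d_goal=0.0,
                                  tau=0.99, max_iters=1000,
                                  q_init=None, seed=None):
    """Estimate the quality setting whose output is closest to x_target.

    compress(x, q) is an assumed stand-in for the local JPEG algorithm J.
    max_iters and q_init are additions for safety and reproducibility.
    """
    rng = random.Random(seed)
    p = -1                     # search direction
    s = 1.0                    # schedule factor, decays by tau
    d_prev = 1e10              # distance of the previous step
    d_best = d_prev            # best distance seen so far
    q = q_init if q_init is not None else rng.randint(1, 99)
    q_best = q
    gamma = 0                  # steps without improvement (early stop)
    beta = 0                   # exploration steps before flipping p

    for _ in range(max_iters):
        if d_best <= d_goal:
            break
        d = l2_distance(compress(x, q), x_target)
        if d >= d_prev:
            gamma += 1
            beta += 1
            if beta >= 2:      # two failed steps: reverse the direction
                p = -p
                beta = 0
        else:
            gamma = 0
            beta = 0
            if d < d_best:
                d_best, q_best = d, q
        if gamma > 10:         # quit the search early
            break
        s *= tau
        # Step size is the scaled distance; rounding is an assumption
        # made here to keep q an integer quality setting.
        q = min(max(q + p * round(s * d), 1), 99)
        d_prev = d
    return q_best
```

With a real codec, `compress` would wrap an actual JPEG encode and decode round trip. The distance then depends on the image scale, so the raw step `s * d` may need rescaling in practice.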

3.2.2 RCW

Based on adversarial optimization, our attack uses a

differentiable approximation of JPEG along with the

output $q^*$ of CAS to compute the robust adversarial sample $\mathbf{x}_{adv}$.

Figure 3: Comparison of the adversarial samples produced by RCW to the original sample. Panels (a) to (l) alternate between an original image and its RCW counterpart.

Differentiable JPEG. JPEG compression is inherently difficult to use with gradient descent. This is due to some internal computations that are not differentiable. There are four steps in JPEG encoding: (1) color conversion, where the RGB input is mapped to the YCbCr color space, (2) chroma subsampling, where the two chroma channels, Cb and Cr, are downsampled by a factor, (3) patched DCT, which usually first divides the input into 8x8 patches and then calculates the DCT for each patch, and (4) quantization, which maps the output of the DCT to integers using a quantization table that is determined by the chosen JPEG quality (Shin and Song, 2017; Reich et al., 2024). The fourth step, quantization, relies on rounding and floor functions, resulting in gradients that are zero almost everywhere. Shin and Song (2017) proposed a polynomial approximation of the rounding function, $\lfloor x \rceil_{approx} = \lfloor x \rceil + (x - \lfloor x \rceil)^3$, and they additionally reformulate the scaling of the quantization table by the JPEG quality. Other methods approximate the non-differentiable function of the compression by using a straight-through estimator. This method uses the true, non-differentiable function for the forward pass and a constant gradient of one in the backward pass (Reich et al., 2024). For our purposes, we use the surrogate model approach outlined in Reich et al. (2024), which extends the existing surrogate approach of Shin and Song (2017) by remodeling the computations to obtain the quantization table.
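To make the polynomial rounding approximation attributed to Shin and Song (2017) concrete, the following sketch evaluates it and its derivative for scalar inputs. The function names are hypothetical, and the derivative assumes that the hard rounding term is treated as a constant, which holds everywhere except at the rounding boundaries.

```python
import math


def round_half_up(x: float) -> int:
    """Round to the nearest integer, with ties going upward."""
    return math.floor(x + 0.5)


def round_approx(x: float) -> float:
    """Polynomial rounding: round(x) + (x - round(x)) ** 3."""
    r = round_half_up(x)
    return r + (x - r) ** 3


def round_approx_grad(x: float) -> float:
    """Derivative of round_approx, treating round(x) as a constant."""
    r = round_half_up(x)
    return 3.0 * (x - r) ** 2


def straight_through_grad(x: float) -> float:
    """Straight-through estimator: the backward pass uses a gradient of one."""
    return 1.0


print(round_approx(2.25))       # 2.015625
print(round_approx_grad(2.25))  # 0.1875
```

The approximation stays close to the rounded value, but its gradient is non-zero whenever the input is not an integer, which is what allows the attack to backpropagate through quantization.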

Figure 4: Graphical representation of our experiment pipeline.

Adversarial Optimization. Adding a compression approximation term to the adversarial optimization can yield stronger, more reliable targeted adversarial samples that maintain high visual fidelity to the original sample. We adapt the method of Carlini and Wagner (2017) by extending the computation of the adversarial loss to include compression in the backward pass. The adversarial loss function $f$ measures the effectiveness of the adversarial sample. Let $t$ be the index of the target label, $q$ the compression quality, $\mathbf{x}_{adv}$ the adversarial sample, $\kappa$ the confidence factor, and $J_d$ the differentiable approximation of the JPEG compression in Equation 1. Furthermore, let $Z$ be a mapping of an input to a set of logits, where each logit represents a class. The underlying parameters of $Z$ are provided by the surrogate model $\hat{\phi}$. The confidence factor $\kappa$ controls the desired effectiveness of the adversarial sample: higher values of $\kappa$ require a more effective adversarial perturbation, which increases the probability of success at the cost of additional distortion.

$$f(\mathbf{x}_{adv}, y_t; q) = \max\{\max[Z(J_d(\mathbf{x}_{adv}; q))_i : i \neq t] - Z(J_d(\mathbf{x}_{adv}; q))_t, -\kappa\} \tag{3}$$

The constraint is imposed by a full-reference image quality metric. Let $\chi$ be an appropriate full-reference image quality metric that measures the visual fidelity of the adversarial sample $\mathbf{x}_{adv}$ to the original sample $\mathbf{x}$. Let $c$ be a trade-off constant that balances the adversarial loss $f$ with the image quality loss. Our complete loss function can be defined as:

$$\psi(\mathbf{x}, \mathbf{x}_{adv}, y_t, q) = \chi(\mathbf{x}, \mathbf{x}_{adv}) + c \cdot f(\mathbf{x}_{adv}, y_t; q) \tag{4}$$
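The two loss terms can be illustrated with a small sketch. It assumes that the logits $Z(J_d(\mathbf{x}_{adv}; q))$ have already been computed by the surrogate model and are passed in as a plain list. The squared $L_2$ distance used for $\chi$ is only a placeholder assumption, since the text leaves the choice of quality metric open, and all function names are hypothetical.

```python
def adversarial_margin(logits, target, kappa=0.0):
    """Adversarial loss: max(max_{i != t} Z_i - Z_t, -kappa)."""
    best_other = max(z for i, z in enumerate(logits) if i != target)
    return max(best_other - logits[target], -kappa)


def squared_l2(x, x_adv):
    """Placeholder quality metric chi (an assumption, not the paper's choice)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_adv))


def rcw_loss(x, x_adv, logits, target, c=0.5, kappa=0.0,
             quality_metric=squared_l2):
    """Complete loss: chi(x, x_adv) + c * f(x_adv, y_t; q)."""
    return quality_metric(x, x_adv) + c * adversarial_margin(logits, target, kappa)


# The target class 0 is not yet the top logit, so the margin is positive.
print(adversarial_margin([2.0, 5.0, 1.0], target=0))            # 3.0
# Once the target leads by more than kappa, the loss saturates at -kappa.
print(adversarial_margin([2.0, 5.0, 1.0], target=1, kappa=2.0))  # -2.0
```

The saturation at $-\kappa$ means that, once the target logit leads by the requested margin, only the quality term continues to drive the optimization.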

Accounting for Varying Compression Magnitudes. This sets up the constrained optimization problem for finding an appropriate adversarial sample using RCW (see Figure 2). However, in the current design, we would have to guess the correct quality setting $q$ to use in Equation 4.

Current attacks account for different JPEG compression rates by using a gradient ensemble over a set of compression values (Shin and Song, 2017; Reich et al., 2024). Using this approach in adversarial optimization would require an additional inner loop for the gradient ensemble computation and would drastically increase the computation time, as the attack would require $n \times m$ successive forward and backward calls (instead of $n$) to the surrogate model $\hat{\phi}$ to compute the adversarial sample, where $n$ is the number of steps and $m$ is the number of compression settings for the gradient ensemble method.
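As a rough illustration of this cost, consider the RCW step count reported in Section 4.2 ($n = 10{,}000$) together with the ensemble size used there for the JpegIFGSM baselines ($m = 6$). Combining these two numbers is an assumption made only for this example and is not a measurement from the paper:

```latex
\begin{align*}
\text{gradient ensemble:} \quad & n \times m = 10{,}000 \times 6 = 60{,}000
  \text{ forward and backward calls to } \hat{\phi} \\
\text{single quality setting:} \quad & n = 10{,}000
  \text{ forward and backward calls to } \hat{\phi}
\end{align*}
```

Under these assumptions, an ensemble would multiply the number of surrogate calls by six. The search in Algorithm 1 only uses the JPEG algorithm and the distance $\Delta$, so it adds no surrogate model calls.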

Therefore, the quality setting $q^*$ is first estimated by CAS (see Section 3.2.1). RCW then minimizes the loss in Equation 4, using the estimate $q^*$ as the compression setting. The adversarial optimization problem can be defined as follows. Let $\boldsymbol{\delta}$ be the perturbation that is added to $\mathbf{x}$ to obtain $\mathbf{x}_{adv}$.

$$\min_{\boldsymbol{\delta}} \psi(\mathbf{x}, \mathbf{x}_{adv}, y_t, q^*) \tag{5}$$

4 EXPERIMENTS

Reliable attacks need to perform well across compression settings. Therefore, we perform all tests on every $q \in \{70, 80, 90\}$. This range is commonly considered in current work using compression (Cozzolino et al., 2023). For a fair comparison with the state of the art, we report the success rate for each $q$ using the same amount of distortion (expressed by $\bar{D}$). If the distortion varies between compression settings, we report the average distortion over all compression settings. Due to different underlying mechanisms, not all attacks share the same set of hyperparameters. We only perform targeted attacks, where the target is the most likely label next to the ground truth. This makes the setting similar to an untargeted attack. Our surrogate model $\hat{\phi}$ is a ResNet (He et al., 2016) pre-trained on the respective test dataset. We will consider three scenarios: (1) white box ($\hat{\phi} = \phi$), (2) black box ($\hat{\phi} \neq \phi$), and (3) white box models where the model has been hardened by adversarial training. Our results can be found in the corresponding Table 1, Table 2, and Table 3.

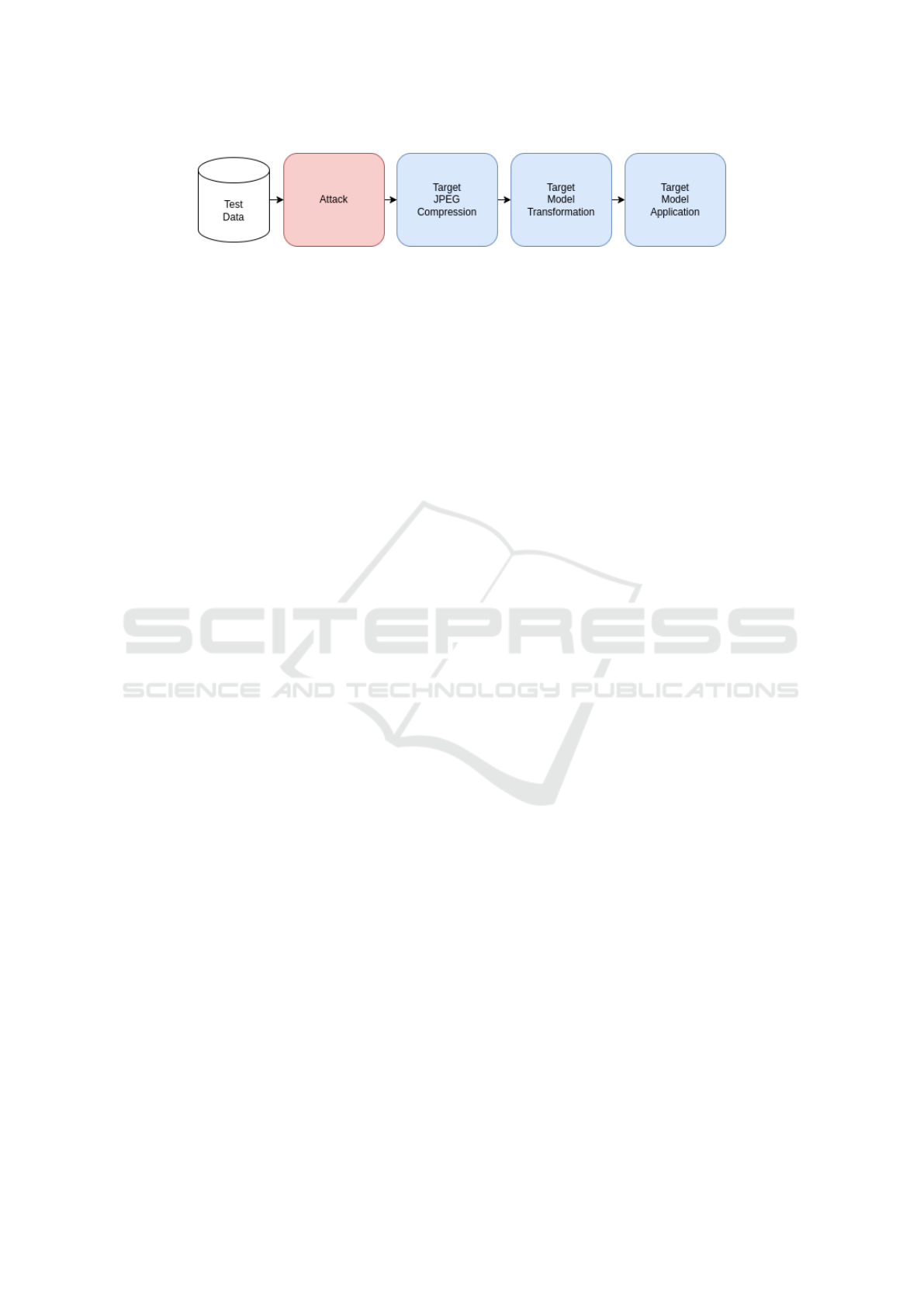

4.1 Pipeline

For a realistic scenario, we design our experiment

pipeline as follows. (1) Test Data: We load the

data and apply basic transformations such as center-

cropping and resizing. (2) Attack: We apply the at-

tack to the image and project the result to the orig-

inal [0, 1] range. (3) Target JPEG Compression:

To simulate typical behavior in web applications, we

now apply the JPEG compression. (4) Target Model

Transformation: We apply the transformations re-

quired by the target model. (5) Target Model Appli-

cation: The compressed and transformed adversarial

sample is applied to the target model. Figure 4 illus-

trates the process.
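The order of the pipeline stages can be sketched as a simple composition of functions. All callables here (`attack`, `target_jpeg`, `preprocess`, `target_model`) are hypothetical placeholders for the components described above, and images are represented as flat lists of floats purely for illustration.

```python
def clamp01(x):
    """Project every value back into the original [0, 1] range."""
    return [min(max(v, 0.0), 1.0) for v in x]


def run_pipeline(x, attack, target_jpeg, q_target, preprocess, target_model):
    """Stages (2) to (5): attack, JPEG compression, transformation, model."""
    x_adv = clamp01(attack(x))              # (2) attack and projection
    x_comp = target_jpeg(x_adv, q_target)   # (3) target JPEG compression
    x_in = preprocess(x_comp)               # (4) target model transformation
    return target_model(x_in)               # (5) target model application


# Toy stand-ins that only demonstrate the order of operations.
output = run_pipeline(
    [0.75, 0.25],
    attack=lambda x: [v + 0.5 for v in x],
    target_jpeg=lambda x, q: list(x),
    q_target=80,
    preprocess=lambda x: [2.0 * v for v in x],
    target_model=sum,
)
print(output)  # 3.5
```

The point of the ordering is that compression happens after the attack and before the model, so the attack never sees the exact input that the target model receives.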

4.2 Settings

We compare our RCW method with three state-of-

the-art approaches: Two iterative attacks with differ-

ent JPEG approximations (Reich et al., 2024; Shin

and Song, 2017), called JpegIFGSM, and Fast Adver-

Towards JPEG-Compression Invariance for Adversarial Optimization

171

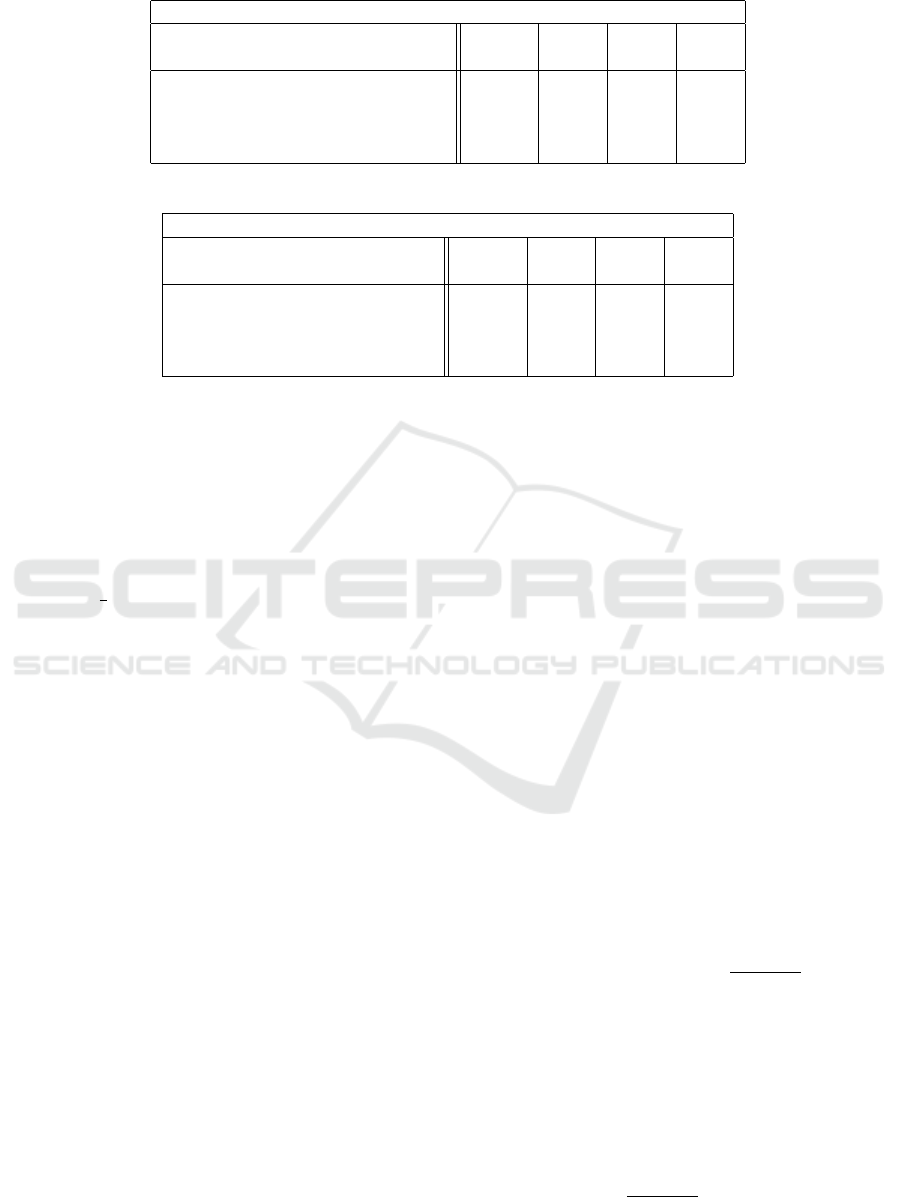

Table 1: Conditional average distortion

¯

D and attack success rate (ASR) per compression setting q in a white box scenario.

White Box

CAD ASR ASR ASR

Attack

¯

D q=70 q=80 q=90

JpegIFGSM (Reich et al., 2024) 0.1340 0.193 0.328 0.343

JpegIFGSM (Shin and Song, 2017) 0.1330 0.178 0.308 0.338

FAR (Shi et al., 2021) 0.1218 0.019 0.018 0.023

RCW (ours) 0.1210 0.642 0.662 0.663

Table 2: Conditional average distortion

¯

D and attack success rate (ASR) per compression setting q in a black box scenario.

Black Box

CAD ASR ASR ASR

Attack

¯

D q=70 q=80 q=90

JpegBIM (Reich et al., 2024) 0.1331 0.067 0.063 0.049

JpegBIM (Shin and Song, 2017) 0.1320 0.061 0.060 0.045

FAR (Shi et al., 2021) 0.2306 0.081 0.081 0.078

RCW (ours) 0.0873 0.066 0.061 0.044

sarial Rounding (FAR) (Shi et al., 2021). Our settings

are chosen so that the amount of distortion caused

by the attacks is roughly equal. For RCW, we set

c to 0.5, the learning rate α to 1e-05, and the num-

ber of optimization steps n to 10,000. When run-

ning CAS for RCW, we set the temperature τ to 0.99.

For JpegIFGSM, we set the L

∞

perturbation bound to

ε = 0.0004, the number of steps to n = 7, and the step

size to α =

ε

n

. For FAR, we use ε=9e-05 for the base

adversarial sample and set η = 0.3 to compute the

percentile of the DCT components that are adjusted.

JpegIFGSM (Shin and Song, 2017; Reich et al., 2024)

accounts for different compression strengths by com-

puting and ensembling the gradient over a set of N

compression values. In our experiments, we set N to

6, which means that compression settings from 99 to

70, in decrements of 5, are used to compute the gradi-

ent. FAR (Shi et al., 2021) does not use any procedure

to account for different compression rates, so we set

q = 80 for all of its runs.
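The JpegIFGSM settings from Section 4.2 can be spelled out in a few lines. The exact list of ensemble qualities is an interpretation of "from 99 to 70, in decrements of 5" with $N = 6$, and the variable names are hypothetical.

```python
# JpegIFGSM hyperparameters as reported in the settings.
epsilon = 0.0004             # L-infinity perturbation bound
n_steps = 7                  # number of iterations
alpha = epsilon / n_steps    # step size per iteration

# Assumed reading of the ensemble: start at 99 and step down by 5
# while staying at or above 70, which yields N = 6 quality settings.
ensemble_qualities = list(range(99, 69, -5))

print(ensemble_qualities)    # [99, 94, 89, 84, 79, 74]
```

With this step size, the accumulated perturbation after all seven steps can reach the bound $\varepsilon$ but not exceed it.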

4.3 Test Data

All of our experiments use the NIPS 2017 adversar-

ial competition dataset (Kurakin et al., 2018). This

dataset consists of 1,000 images from the ImageNet-

1K challenge, which contains a wide variety of image

classes and presents a challenging and realistic prob-

lem. In addition to benchmarking against standard at-

tacks, this dataset allows us to compare our method

with related approaches. We do not evaluate on the

CIFAR datasets, as some work (Tramèr et al., 2018)

suggests that the methods tested on these datasets

show poor generalization to more complicated tasks.

4.4 Evaluation Metrics

Our experimental results using the following metrics

ASR and CAD can be found in Table 1, Table 2, and

Table 3, while the results using the metrics MAD and

DISTS are shown in Table 4.

4.4.1 Attack Success Rate (ASR)

The attack success rate quantifies how often an attack causes the target network to misclassify its inputs. To accurately measure the performance of the attack, we define a subset $\mathbf{X}_t$ of the original test dataset $\mathbf{X}$ that contains data points that were initially correctly classified by the target network. Within this subset, the proportion of data points for which the attack caused a misclassification is called the attack success rate (ASR). Let $t$ be the ground truth of a data point $\mathbf{x}$, $\phi$ the target network, $N$ the number of data points in $\mathbf{X}_t$, and $\alpha$ the attack (Wang and He, 2021).

$$\mathbf{X}_t = \{\mathbf{x} \in \mathbf{X} \mid \phi(\mathbf{x}, \theta) = t\} \tag{6}$$

$$\mathbf{X}_t^{success} = \{\mathbf{x} \in \mathbf{X}_t \mid \phi(\alpha(\mathbf{x}), \theta) \neq t\} \tag{7}$$

$$ASR(\phi(\mathbf{X}_t, \theta), T) = \frac{|\mathbf{X}_t^{success}|}{N} \tag{8}$$

4.4.2 Conditional Average Distortion (CAD)

In addition to ASR, the conditional average distortion $\bar{D}$ measures the average distance of an adversarial example $\hat{\mathbf{x}} = f(\mathbf{x})$ from the original data point $\mathbf{x}$, where $\mathbf{x} \in \mathbf{X}_t^{success}$. This distance is measured using the $L_2$ norm, which was selected as the distortion metric.

$$\bar{D}(f, \mathbf{X}_t^{success}) = \frac{1}{|\mathbf{X}_t^{success}|} \sum_{\mathbf{x} \in \mathbf{X}_t^{success}} \| f(\mathbf{x}) - \mathbf{x} \|_2 \tag{9}$$


Since FAR (Shi et al., 2021) produces JPEG im-

ages, we compare the adversarial sample produced by

FAR with the compressed version of the respective

original image, compressed with the same quality set-

ting as FAR uses internally. This way, only the distor-

tion caused by the attack is measured, as intended.
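Both metrics can be computed from model predictions and image pairs as sketched below. The function names and the list-based data layout are assumptions for illustration. The sketch follows Equations 6 to 9 and does not include the special handling of FAR described above.

```python
import math


def attack_success_rate(clean_preds, adv_preds, labels):
    """Return the ASR and the indices of the successful attacks.

    Only samples that the target model classified correctly before the
    attack are counted (the subset X_t).
    """
    correct = [i for i, (p, t) in enumerate(zip(clean_preds, labels)) if p == t]
    success = [i for i in correct if adv_preds[i] != labels[i]]
    asr = len(success) / len(correct) if correct else 0.0
    return asr, success


def conditional_average_distortion(originals, adversarials, success):
    """Mean L2 distance over the successful adversarial samples only."""
    if not success:
        return 0.0
    total = 0.0
    for i in success:
        total += math.sqrt(sum((a - o) ** 2
                               for a, o in zip(adversarials[i], originals[i])))
    return total / len(success)


labels = [0, 1, 2, 3]
clean_preds = [0, 1, 2, 0]   # the last sample is misclassified from the start
adv_preds = [1, 1, 0, 3]     # the attack flips samples 0 and 2
asr, success = attack_success_rate(clean_preds, adv_preds, labels)
print(success)               # [0, 2]
```

Because the distortion is averaged only over successful samples, an attack cannot lower its reported distortion through failed attempts that changed very little.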

4.4.3 Most Apparent Distortion (MAD)

Fezza et al. (2019) compared several full-reference

image quality metrics and found that most apparent

distortion (MAD) was most consistent with human

perception. Based on this finding, we will use $L_p$ norms, such as $\bar{D}$, exclusively as distortion measures, while using MAD and DISTS to estimate the perceived distortion of adversarial samples. MAD is a weighted linear combination of two components: the near-threshold distortion $D_{near}$, which captures early human vision, and the suprathreshold distortion $D_{supra}$, which captures more obvious distortions. Let $\alpha, \beta$ be the balancing scalars.

$$MAD(\mathbf{x}_{adv}, \mathbf{x}) = \alpha \cdot D_{near}(\mathbf{x}_{adv}, \mathbf{x}) + \beta \cdot D_{supra}(\mathbf{x}_{adv}, \mathbf{x}) \tag{10}$$

4.4.4 Deep Image Structure and Texture Similarity (DISTS)

In addition to MAD, we include a newer full-reference image quality evaluation method. Deep Image Structure and Texture Similarity (DISTS) (Ding et al., 2022) is a model-based quality score that correlates well with human perceptual scores on traditional image quality evaluation databases. Unlike existing image quality scoring methods, DISTS agrees well with human quality judgments for both textures and natural photographs (Ding et al., 2022). It scores an image based on the weighted linear combination of a structural similarity model $S$ and a texture similarity model $T$. Let $\alpha, \beta$ be balancing scalars, $l$ the number of layers in the networks, and $w_i$ their corresponding weights.

$$DISTS(\mathbf{x}_{adv}, \mathbf{x}) = \sum_{i}^{l} w_i \left(\alpha \cdot S(\mathbf{x}_{adv}, \mathbf{x}) + \beta \cdot T(\mathbf{x}_{adv}, \mathbf{x})\right) \tag{11}$$

4.5 White Box Results

Here we measure the performance of our attacks in

terms of ASR and CAD against their respective base-

lines over a range of compression rates.

Table 1 shows the success rates of the attacks with approximately equal distortion ($\bar{D}$). Although FAR (Shi et al., 2021) produces compressed adversarial images, it fails to maintain attack effectiveness after the additional JPEG compression present in our pipeline. RCW achieves the highest success rate at every compression setting (0.642 to 0.663) while applying the lowest distortion ($\bar{D} = 0.1210$). The attacks based on JPEG approximation and gradient projection perform well for stronger $\varepsilon$ and thus higher distortion rates, but they fail to be effective for smaller distortion rates.

4.6 Black Box Results

Similar to the white box evaluations, we measure the

performance of our attacks in terms of success rate

and average distortion compared to their respective

baselines over a range of compression rates. How-

ever, in these experiments, the target network is un-

known. To simulate this scenario, we define the target

model with a different architecture and weights than

the surrogate model. For our experiments, the tar-

get model is InceptionV3 (Szegedy et al., 2016) pre-

trained on ImageNet.

Table 2 shows the results of the attacks in a black box scenario. For smaller distortion rates, as required in this work, all attacks fail to fool the target model with different weights than the surrogate model $\hat{\phi}$. This is because black box attacks are a much more challenging problem than white box attacks, especially in combination with JPEG compression. FAR gives slightly better results than RCW, but with more than twice the distortion.

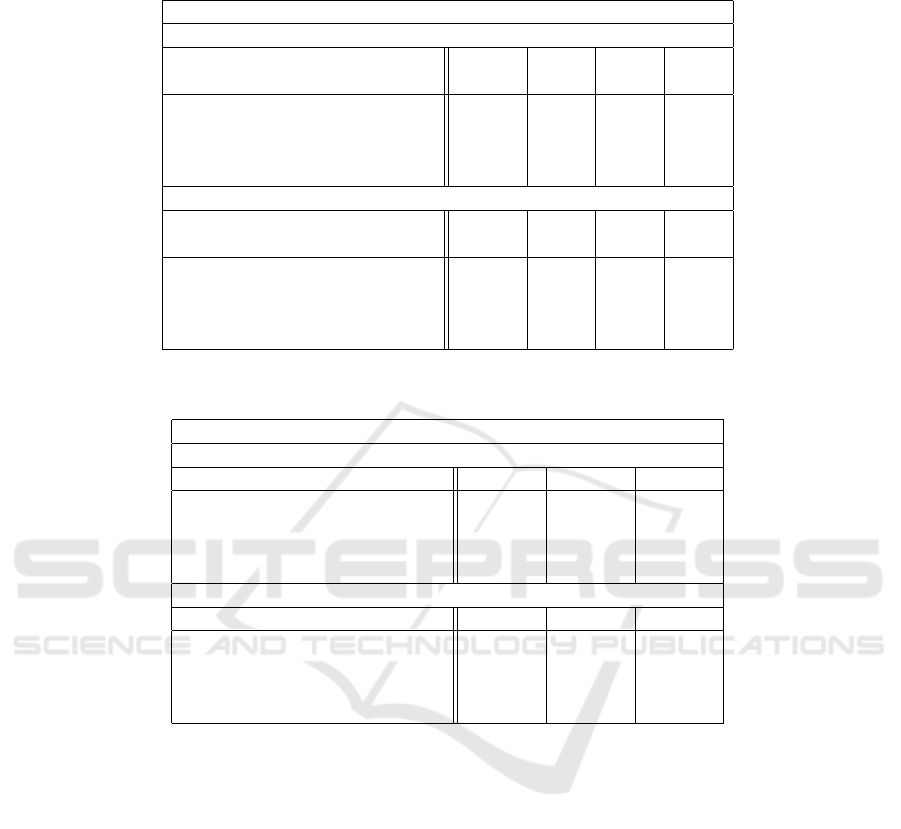

4.7 Hardened White Box Results

In the following, we present the results of our attack

on models hardened by adversarial training. Adver-

sarial training is currently the preferred way to make

models more robust against adversarial attacks. We

will compare two ResNets that were trained with the

most prominent adversarial training protocols: PGD

adversarial training (Madry et al., 2018) and FBF ad-

versarial training (Wong et al., 2020).

Table 3 shows the results of the experiments performed on the hardened models. For the model that was trained with the FBF protocol, we see that all gradient projection attacks (Shi et al., 2021; Reich et al., 2024) struggle to maintain the success rate. RCW, which is based on adversarial optimization, manages to bypass the defenses and achieves high success rates at low distortion rates. Similarly, RCW achieves the best success rates for models hardened by the PGD adversarial training protocol. Although the samples were slightly more distorted than in the FBF protocol experiments, they were still less distorted than those of any other related work we benchmarked against, with higher success rates. To account for the fact that RCW has higher distortion rates in the case of the PGD ResNet, we adjust the settings of other methods to allow for higher distortion rates and thus higher success rates as well. For FAR (Shi et al., 2021) we use ε = 9e-05. Similarly, we increase ε to 0.0008 for the ensemble methods (Shin and Song, 2017; Reich et al., 2024).

Table 3: Conditional average distortion $\bar{D}$ and attack success rate (ASR) per compression setting q in a scenario where the target model was trained using either PGD or FBF adversarial training.

Defense model: FBF

| Attack | CAD ($\bar{D}$) | ASR (q=70) | ASR (q=80) | ASR (q=90) |
|---|---|---|---|---|
| JpegBIM (Reich et al., 2024) | 0.1450 | 0.078 | 0.088 | 0.089 |
| JpegBIM (Shin and Song, 2017) | 0.1450 | 0.078 | 0.088 | 0.089 |
| FAR (Shi et al., 2021) | 0.1435 | 0.013 | 0.013 | 0.026 |
| RCW (ours) | 0.1042 | 0.755 | 0.726 | 0.576 |

Defense model: PGD

| Attack | CAD ($\bar{D}$) | ASR (q=70) | ASR (q=80) | ASR (q=90) |
|---|---|---|---|---|
| JpegBIM (Reich et al., 2024) | 0.2901 | 0.053 | 0.065 | 0.055 |
| JpegBIM (Shin and Song, 2017) | 0.2853 | 0.050 | 0.057 | 0.058 |
| FAR (Shi et al., 2021) | 0.4786 | 0.007 | 0.008 | 0.015 |
| RCW (ours) | 0.2087 | 0.798 | 0.808 | 0.641 |

Table 4: Perceived distortion of the adversarial samples per compression setting q. The success rates obtained were lower than or equal to those obtained by RCW. Lower values are better for both MAD and DISTS.

Perceived distortion: MAD

| Attack | q=70 | q=80 | q=90 |
|---|---|---|---|
| JpegBIM (Reich et al., 2024) | 0.6980 | 0.1890 | 0.1889 |
| JpegBIM (Shin and Song, 2017) | 0.6636 | 0.1752 | 0.1757 |
| FAR (Shi et al., 2021) | 66.4244 | 66.6663 | 67.6681 |
| RCW (ours) | 0.0015 | 0.0011 | 0.0006 |

Perceived distortion: DISTS

| Attack | q=70 | q=80 | q=90 |
|---|---|---|---|
| JpegBIM (Reich et al., 2024) | 0.0180 | 0.0117 | 0.0118 |
| JpegBIM (Shin and Song, 2017) | 0.0177 | 0.0115 | 0.0115 |
| FAR (Shi et al., 2021) | 0.1267 | 0.1070 | 0.1074 |
| RCW (ours) | 0.0015 | 0.0012 | 0.0009 |

4.8 Comparison of Perceived Distortion

Although $L_p$ norms are still widely used to quantify the distortion in adversarial samples, many studies have found that they correlate poorly with human perception (Fezza et al., 2019). Therefore, an important quality to consider in adversarial samples is the amount of perceived distortion. This is the overall quality or fidelity of a sample as estimated by the human visual system. In this work, we use only $L_p$ ($\bar{D}$) norms as a measure of actual distortion and MAD/DISTS as a measure of perceived distortion. Note that we are testing for small distortion values, so all perceived distortion measures will be correspondingly small. Since adversarial samples are variable in distortion, we set the ASR as the baseline for comparison, with hyperparameters chosen so that the success rate is approximately equal to or less than the success rate of RCW in an appropriately small parameter grid in the white box scenario.

Table 4 shows the perceived distortion values ob-

tained by the image quality evaluation methods. Al-

though RCW always achieves a higher or equal suc-

cess rate compared to the related work, its samples are

much less distorted according to the perceived distor-

tion metrics.

VISAPP 2025 - 20th International Conference on Computer Vision Theory and Applications


5 LIMITATIONS AND ETHICS

5.1 Analysis of the Compression Adaptation Search

Here, we analyze how well CAS approximates the true quality setting of the target system. We also motivate the search-based approach described in Section 3.2.1 by comparing it to a brute-force method that iterates over the entire set of possible compression intensities $\boldsymbol{Q} = \{1, ..., 99\}$ to find the $q$ with the smallest distance. Finally, we will perform an ablation study to isolate the effectiveness of both the JPEG approximation loss function extension and CAS in RCW.

5.1.1 Compression Estimation Analysis

For a target quality of 70, we run RCW on the test dataset and report the quality settings found by the search. We initialize the search with a budget of 150 steps and the temperature scalar τ, which progressively reduces the step size, set to 0.99. CAS returned the correct quality setting of 70 in every case. This indicates that using CAS instead of the aforementioned brute-force approach will not have a negative impact on RCW's attack success rate by inadvertently using an incorrect quality setting.

5.1.2 Benchmark Against Brute Force

A thorough comparison of CAS with the brute-force method outlined above requires an analysis of the performance differences in terms of the number of steps needed to reach an optimal q. As shown in Figure 5, CAS takes an average of 23 steps to reach $q^*$, compared to a brute-force approach, which requires the processing of each quality setting and therefore takes 100 steps to reach the optimal q.

Figure 5: This chart shows the average number of steps required by CAS to reach $q^*$ over a set of compression values in increments of 10.
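To make the cost difference concrete, the following sketch contrasts an exhaustive scan over all quality settings with a search that needs far fewer distance evaluations. It is not the CAS procedure from Section 3.2.1: the second function is a plain integer ternary search used as a stand-in, it is only valid when the distance has a single minimum over q, and the distance callable and both function names are hypothetical.

```python
from typing import Callable, Tuple


def brute_force_quality(
    distance: Callable[[int], float], lo: int = 1, hi: int = 99
) -> Tuple[int, int]:
    """Evaluate every quality setting in [lo, hi].

    Returns (best q, number of distance evaluations).
    """
    best_q = min(range(lo, hi + 1), key=distance)
    return best_q, hi - lo + 1


def ternary_search_quality(
    distance: Callable[[int], float], lo: int = 1, hi: int = 99
) -> Tuple[int, int]:
    """Stand-in for a smarter search (NOT the paper's CAS).

    Assumption: `distance` strictly decreases up to its minimum and strictly
    increases afterwards, so a third of the range can be discarded per round.
    """
    evals = 0
    while hi - lo > 2:
        third = (hi - lo) // 3
        m1, m2 = lo + third, hi - third
        d1, d2 = distance(m1), distance(m2)
        evals += 2
        if d1 < d2:
            hi = m2 - 1  # the minimum lies strictly left of m2
        else:
            lo = m1 + 1  # the minimum lies strictly right of m1
    # At most three candidates remain; check them directly.
    candidates = range(lo, hi + 1)
    best_q = min(candidates, key=distance)
    return best_q, evals + len(candidates)
```

With a toy distance such as `lambda q: abs(q - 70)`, both functions return the same quality setting, while the exhaustive scan pays for one evaluation per element of Q. In a real pipeline each evaluation would involve compressing the sample at quality q and comparing it with the output of the target system, which is what makes the number of evaluations matter.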

5.1.3 Ablation Study

To evaluate the benefit of the compression adaptation

feature in RCW, we perform an ablation study by set-

ting the compression value used for the gradient com-

putation to a fixed value of q = 80 (as was done for

other non-adaptive or non-ensemble methods such as

FAR (Shi et al., 2021), see Section 4.2.). In our ex-

periments, we will refer to this version of the attack as

approximate JPEG. This attack optimizes similarly to

RCW (see Equation 5), with the exception of q = 80.

$$\min_{\boldsymbol{\delta}} \psi(\boldsymbol{x}, \boldsymbol{x}_{adv}, y_t, q = 80) \tag{12}$$

Finally, we include the original C&W attack by Carlini and Wagner (2017), which is the basis for RCW. This attack does not take compression into account. Table 5 shows the results of our ablation study. As shown, C&W (Carlini and Wagner, 2017) does not achieve acceptable success rates. Not surprisingly, the less compression is used, the more effective C&W becomes. As expected, including a JPEG approximation in the loss function with a fixed q results in high success rates for that particular q, but the attack does not generalize to other quality settings. Finally, adding CAS yields RCW, an attack that can successfully adapt to different compression rates.

5.2 Ethical Concerns

The study of adversarial attacks in machine learn-

ing presents both opportunities and ethical challenges.

On the one hand, these attacks are invaluable for iden-

tifying weaknesses in models, allowing researchers

to design systems that are more robust and secure.

By understanding the ways in which models can be

manipulated, researchers can develop defenses that

prevent such exploits, ultimately making the use of

machine learning more reliable, especially when it

comes to high-security applications. However, the

same research also raises significant ethical concerns,

as the knowledge gained can be used for malicious

purposes. Adversarial attacks can be used to deceive

AI systems, bypass security measures, or even ma-

nipulate information. This can have harmful conse-

quences. While adversarial research is essential for

progress, it must be conducted with careful consider-

ation of its potential for abuse. It must balance inno-

vation with the responsibility to protect against mali-

cious exploitation.


Table 5: Conditional average distortion $\bar{D}$ and attack success rate (ASR) per compression setting q in a white box scenario. This ablation study compares C&W (Carlini and Wagner, 2017), a robust iterative attack with a fixed q for compression approximation, and RCW, which uses the JPEG approximation and CAS.

| Attack | CAD ($\bar{D}$) | ASR (q=70) | ASR (q=80) | ASR (q=90) |
|---|---|---|---|---|
| C&W (Carlini and Wagner, 2017) | 0.0665 | 0.061 | 0.109 | 0.221 |
| + Appr. JPEG | 0.0684 | 0.131 | 0.664 | 0.115 |
| + CAS | 0.1210 | 0.642 | 0.662 | 0.663 |

6 CONCLUSION & FUTURE WORK

Constrained adversarial optimization formulations

provide an optimal basis for integrating differentiable

JPEG approximations. However, using ensemble

methods to account for different compression qual-

ity settings (Shin and Song, 2017) in target applica-

tions leads to long runtimes for attack methods that

optimize to find a good balance between effectiveness

and visual fidelity. We present a method that interro-

gates the target system once per sample and performs

a compression adaptation search to find an optimal

quality setting for the attack. Our approach allows us

to compute adversarial samples that successfully de-

feat JPEG compression while maintaining high visual

fidelity to the original sample. For nearly impercepti-

ble amounts of distortion, our model outperforms the

current state of the art in terms of success per pertur-

bation in all experiments conducted, even overcoming

a combination of compression and defensive strate-

gies.

We now discuss possible future work. Replacing

the gradient ensemble approach of existing methods (Shin and Song, 2017; Reich et al., 2024) with our compression adaptation search (CAS) suggests an advantage in terms of computational complexity, since

we avoid the need for an additional inner loop in the

optimization procedure (see Section 3.2.2). However,

for future work, these advantages need to be investi-

gated by conducting a performance benchmark that

compares RCW to an adversarial optimization pro-

cedure that incorporates the established gradient en-

semble method found in Shin and Song (2017) and

Reich et al. (2024). Furthermore, although our attack

can successfully bypass JPEG at different compres-

sion rates, there are other compression schemes that

work differently internally. For example, JPEG2000

replaces the DCT with a wavelet transform to com-

pute high frequency components (Taubman and Mar-

cellin, 2002). Future work is needed to address these

types of compression and have attacks successfully

bypass them.

REFERENCES

Akhtar, N., Mian, A., Kardan, N., and Shah, M. (2021). Ad-

vances in adversarial attacks and defenses in computer

vision: A survey. IEEE Access, 9:155161–155196.

Carlini, N. and Wagner, D. A. (2017). Towards evaluating

the robustness of neural networks. In 2017 IEEE Sym-

posium on Security and Privacy, SP 2017, San Jose,

CA, USA, May 22-26, 2017, pages 39–57. IEEE Com-

puter Society.

Cozzolino, D., Poggi, G., Corvi, R., Nießner, M., and Ver-

doliva, L. (2023). Raising the bar of ai-generated im-

age detection with CLIP. CoRR, abs/2312.00195.

Ding, K., Ma, K., Wang, S., and Simoncelli, E. P. (2022).

Image quality assessment: Unifying structure and tex-

ture similarity. IEEE Trans. Pattern Anal. Mach. In-

tell., 44(5):2567–2581.

Fezza, S. A., Bakhti, Y., Hamidouche, W., and Déforges, O. (2019). Perceptual evaluation of adversarial attacks for CNN-based image classification. In 11th International Conference on Quality of Multimedia Experience QoMEX 2019, Berlin, Germany, June 5-7, 2019, pages 1–6. IEEE.

Goodfellow, I. J., Shlens, J., and Szegedy, C. (2015). Ex-

plaining and harnessing adversarial examples. In Ben-

gio, Y. and LeCun, Y., editors, 3rd International Con-

ference on Learning Representations, ICLR 2015, San

Diego, CA, USA, May 7-9, 2015, Conference Track

Proceedings.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep resid-

ual learning for image recognition. In 2016 IEEE Con-

ference on Computer Vision and Pattern Recognition,

CVPR 2016, Las Vegas, NV, USA, June 27-30, 2016,

pages 770–778. IEEE Computer Society.

Kurakin, A., Goodfellow, I. J., and Bengio, S. (2017). Ad-

versarial examples in the physical world. In 5th In-

ternational Conference on Learning Representations,

ICLR 2017, Toulon, France, April 24-26, 2017, Work-

shop Track Proceedings. OpenReview.net.

Kurakin, A., Goodfellow, I. J., Bengio, S., Dong, Y., Liao,

F., Liang, M., Pang, T., Zhu, J., Hu, X., Xie, C., Wang,

J., Zhang, Z., Ren, Z., Yuille, A. L., Huang, S., Zhao,

Y., Zhao, Y., Han, Z., Long, J., Berdibekov, Y., Akiba,

T., Tokui, S., and Abe, M. (2018). Adversarial attacks

and defences competition. CoRR, abs/1804.00097.

Liu, Z., Liu, Q., Liu, T., Xu, N., Lin, X., Wang, Y., and Wen, W. (2019). Feature distillation: DNN-oriented JPEG compression against adversarial examples. In IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, June 16-20, 2019, pages 860–868. Computer Vision Foundation / IEEE.

Luo, C., Lin, Q., Xie, W., Wu, B., Xie, J., and Shen, L.

(2022). Frequency-driven imperceptible adversarial

attack on semantic similarity. In IEEE/CVF Confer-

ence on Computer Vision and Pattern Recognition,

CVPR 2022, New Orleans, LA, USA, June 18-24,

2022, pages 15294–15303. IEEE.

Madry, A., Makelov, A., Schmidt, L., Tsipras, D., and

Vladu, A. (2018). Towards deep learning models

resistant to adversarial attacks. In 6th International

Conference on Learning Representations, ICLR 2018,

Vancouver, BC, Canada, April 30 - May 3, 2018, Con-

ference Track Proceedings. OpenReview.net.

Reich, C., Debnath, B., Patel, D., and Chakradhar, S.

(2024). Differentiable JPEG: the devil is in the de-

tails. In IEEE/CVF Winter Conference on Applica-

tions of Computer Vision, WACV 2024, Waikoloa, HI,

USA, January 3-8, 2024, pages 4114–4123. IEEE.

Shi, M., Li, S., Yin, Z., Zhang, X., and Qian, Z. (2021). On

generating JPEG adversarial images. In 2021 IEEE

International Conference on Multimedia and Expo,

ICME 2021, Shenzhen, China, July 5-9, 2021, pages

1–6. IEEE.

Shin, R. and Song, D. (2017). JPEG-resistant adversarial

images. In NIPS 2017 workshop on machine learning

and computer security, volume 1.

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., and Wojna,

Z. (2016). Rethinking the inception architecture for

computer vision. In 2016 IEEE Conference on Com-

puter Vision and Pattern Recognition, CVPR 2016,

Las Vegas, NV, USA, June 27-30, 2016, pages 2818–

2826. IEEE Computer Society.

Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., Erhan,

D., Goodfellow, I. J., and Fergus, R. (2014). In-

triguing properties of neural networks. In Bengio, Y.

and LeCun, Y., editors, 2nd International Conference

on Learning Representations, ICLR 2014, Banff, AB,

Canada, April 14-16, 2014, Conference Track Pro-

ceedings.

Taubman, D. and Marcellin, M. (2002). Jpeg2000: stan-

dard for interactive imaging. Proceedings of the IEEE,

90(8):1336–1357.

Tramèr, F., Kurakin, A., Papernot, N., Goodfellow, I. J., Boneh, D., and McDaniel, P. D. (2018). Ensemble adversarial training: Attacks and defenses. In 6th International Conference on Learning Representations, ICLR 2018, Vancouver, BC, Canada, April 30 - May 3, 2018, Conference Track Proceedings. OpenReview.net.

Wang, X. and He, K. (2021). Enhancing the transfer-

ability of adversarial attacks through variance tuning.

In IEEE Conference on Computer Vision and Pat-

tern Recognition, CVPR 2021, virtual, June 19-25,

2021, pages 1924–1933. Computer Vision Foundation

/ IEEE.

Wong, E., Rice, L., and Kolter, J. Z. (2020). Fast is

better than free: Revisiting adversarial training. In

8th International Conference on Learning Represen-

tations, ICLR 2020, Addis Ababa, Ethiopia, April 26-

30, 2020. OpenReview.net.

Zhao, Z., Liu, Z., and Larson, M. A. (2020). To-

wards large yet imperceptible adversarial image per-

turbations with perceptual color distance. In 2020

IEEE/CVF Conference on Computer Vision and Pat-

tern Recognition, CVPR 2020, Seattle, WA, USA,

June 13-19, 2020, pages 1036–1045. Computer Vision

Foundation / IEEE.
